MaizeStar-YOLO: Precise Detection and Localization of Seedling-Stage Maize

Abstract

1. Introduction

2. Materials and Methods

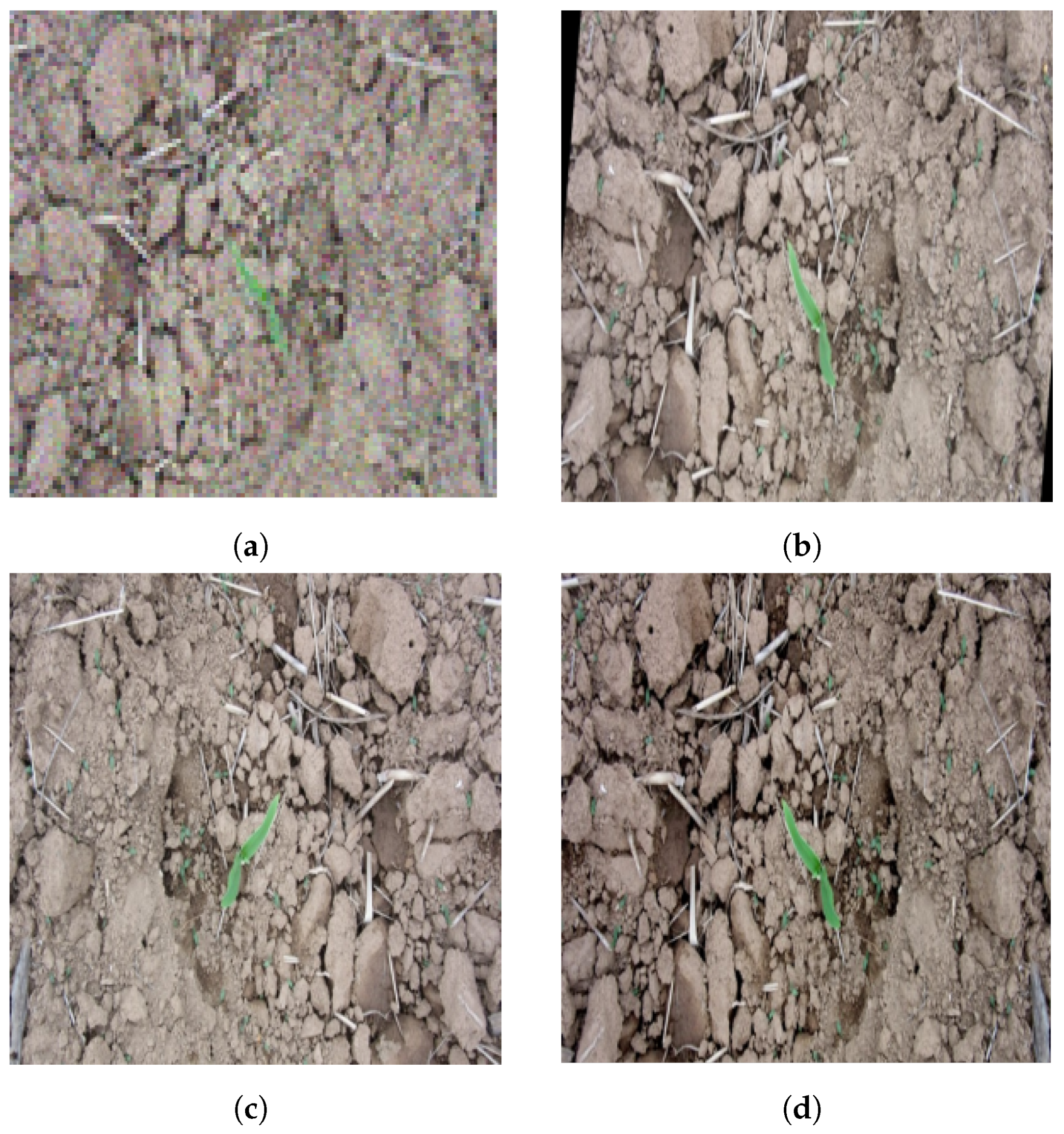

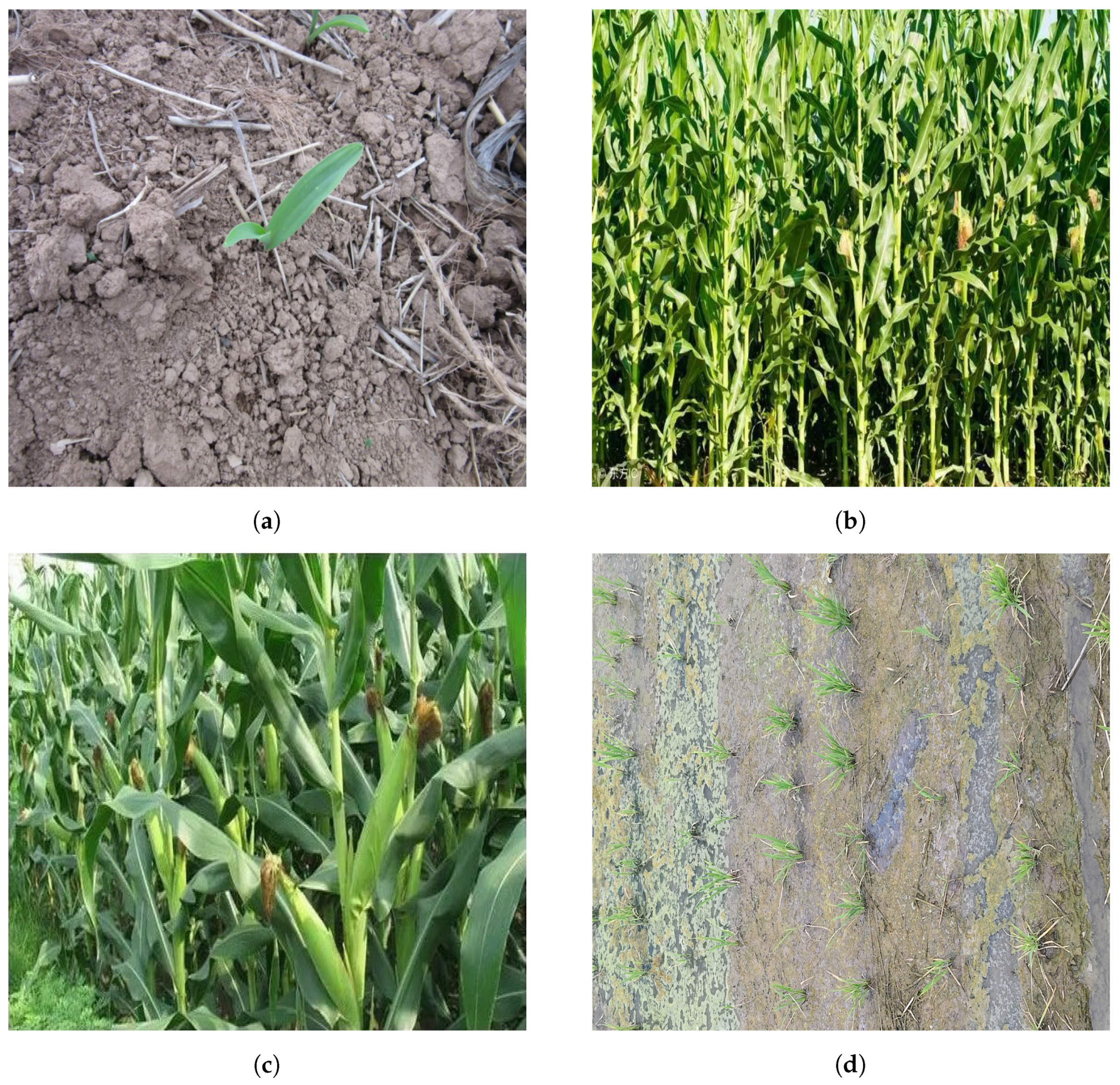

2.1. Image Acquisition and Data Construction

2.2. Image Preprocessing

2.2.1. Data Annotation and Standardization

2.2.2. Data Augmentation

2.2.3. Dataset Partitioning

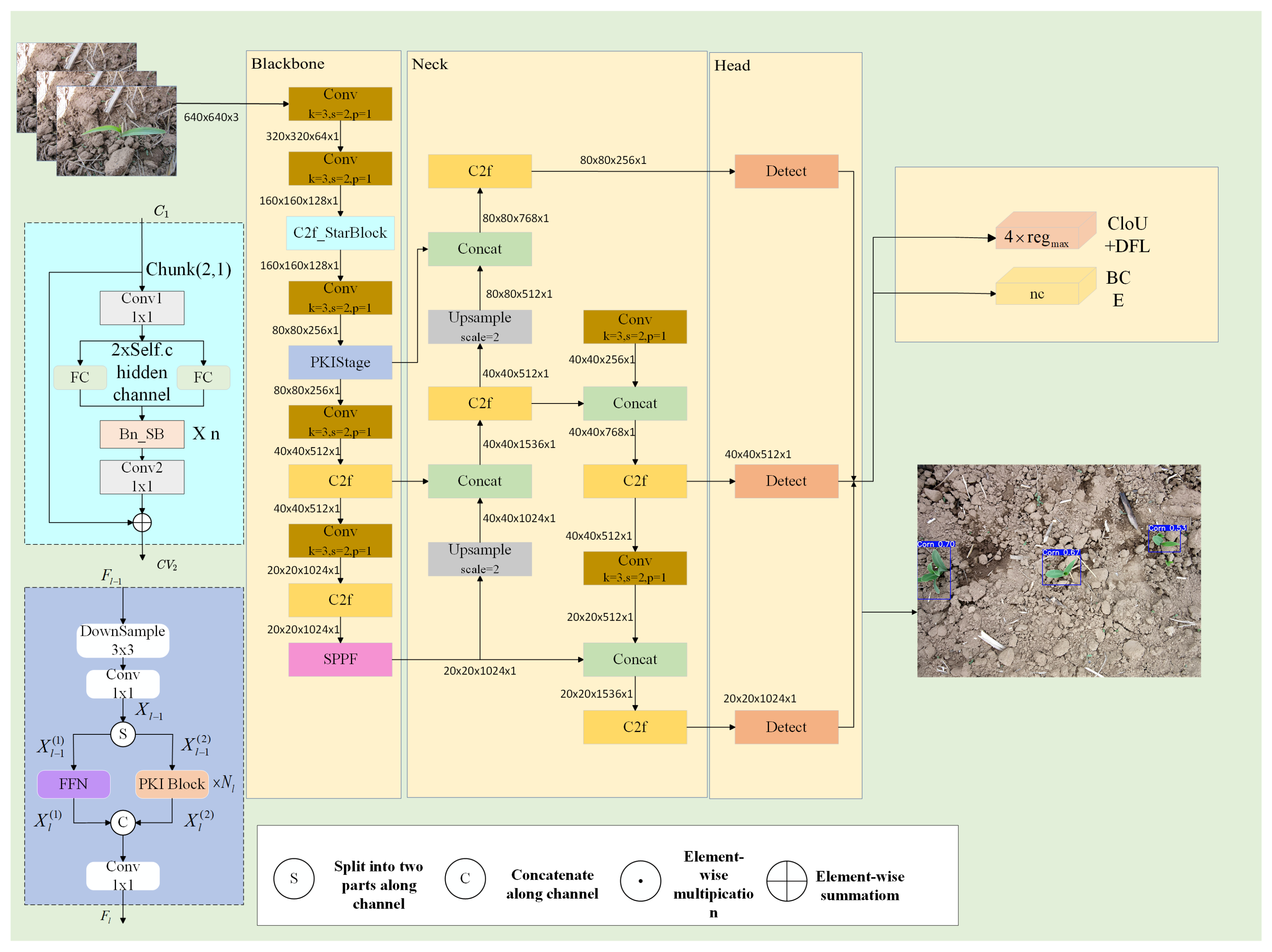

2.3. The Improved YOLOv8 Model

2.3.1. C2F_StarsBlock Module—Coarse-to-Fine Feature Fusion Module

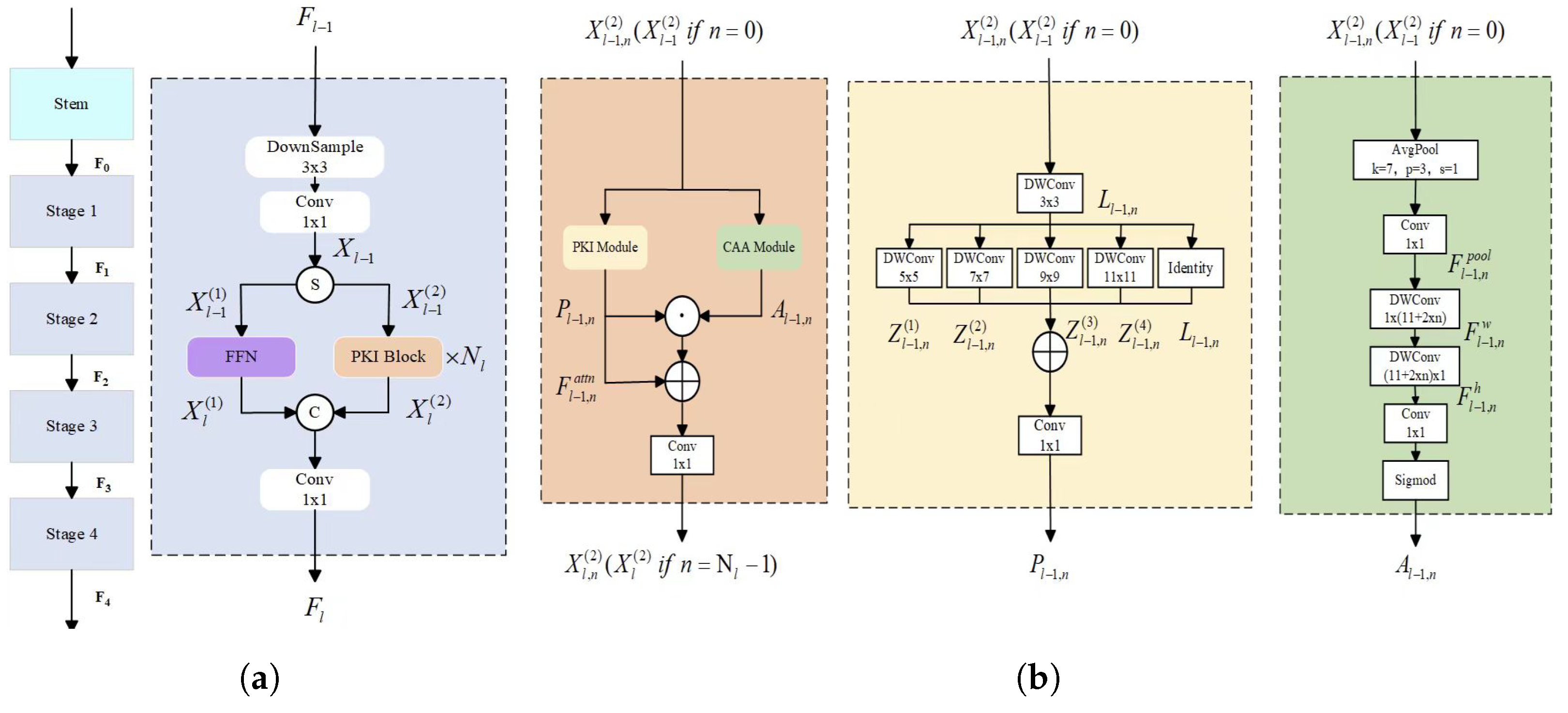

2.3.2. PKIStage Module—Pyramid Kernel Interaction Module

3. Results

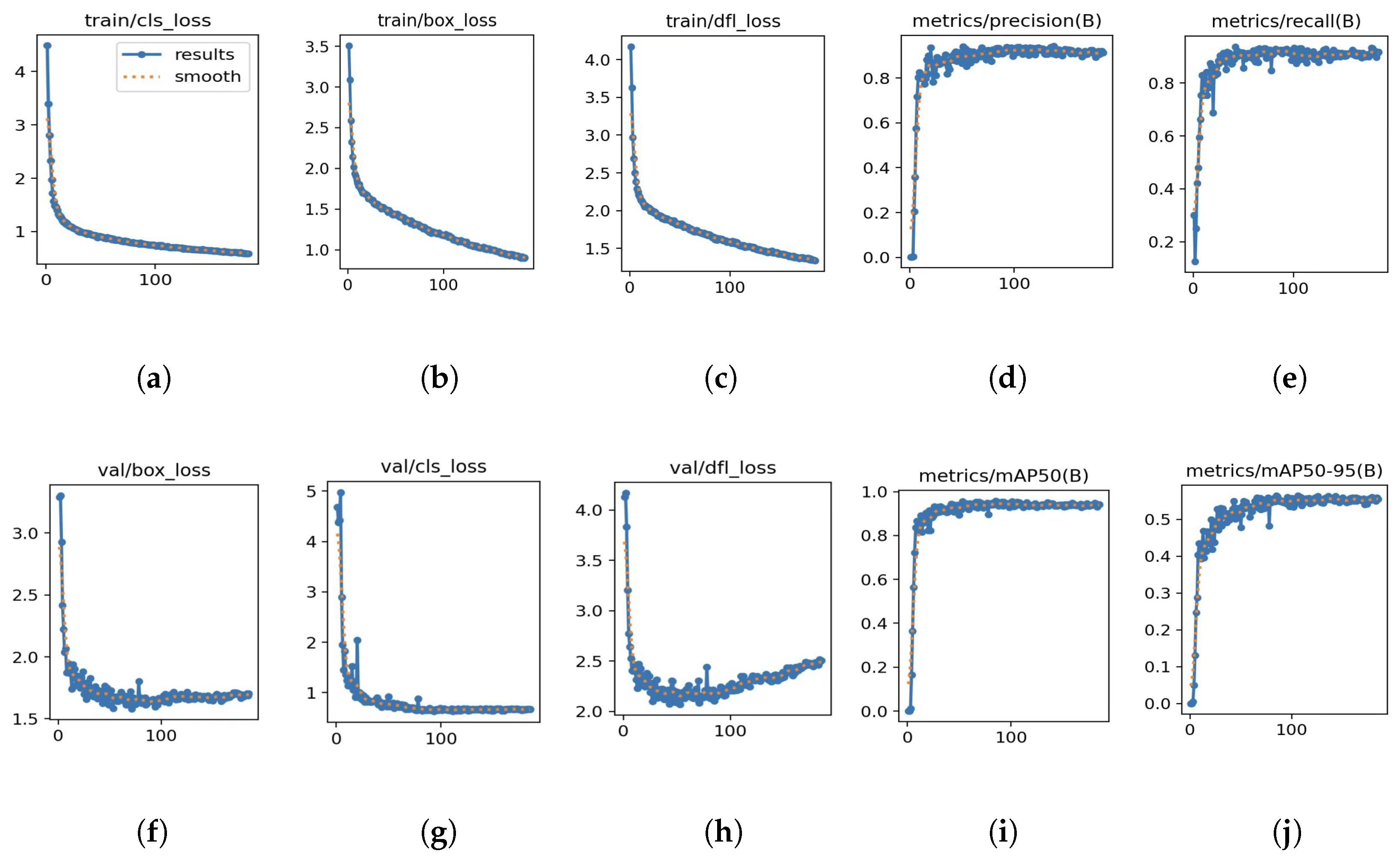

3.1. Training Environment and Evaluation System

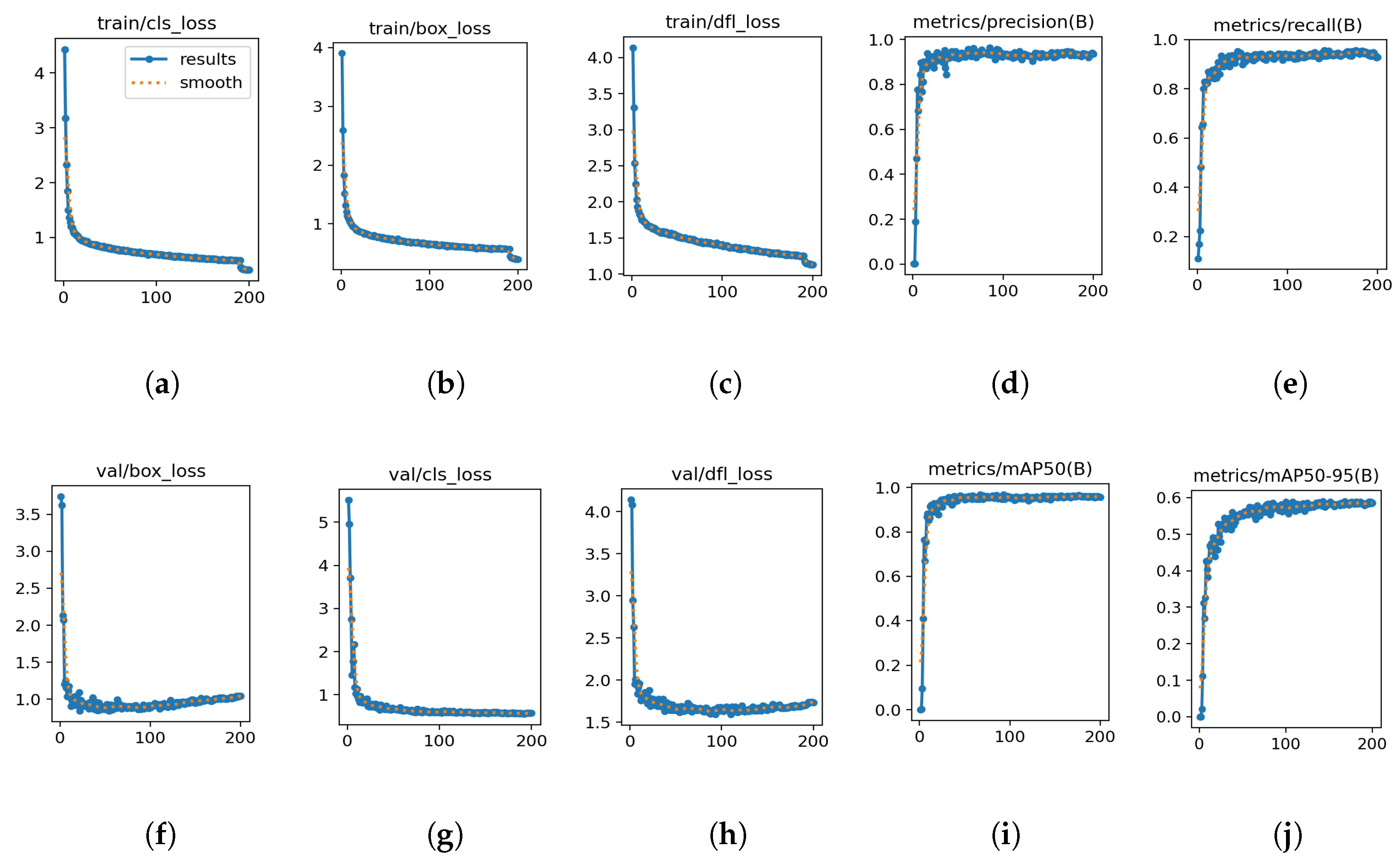

3.2. Performance Analysis of the Original YOLOv8 Model

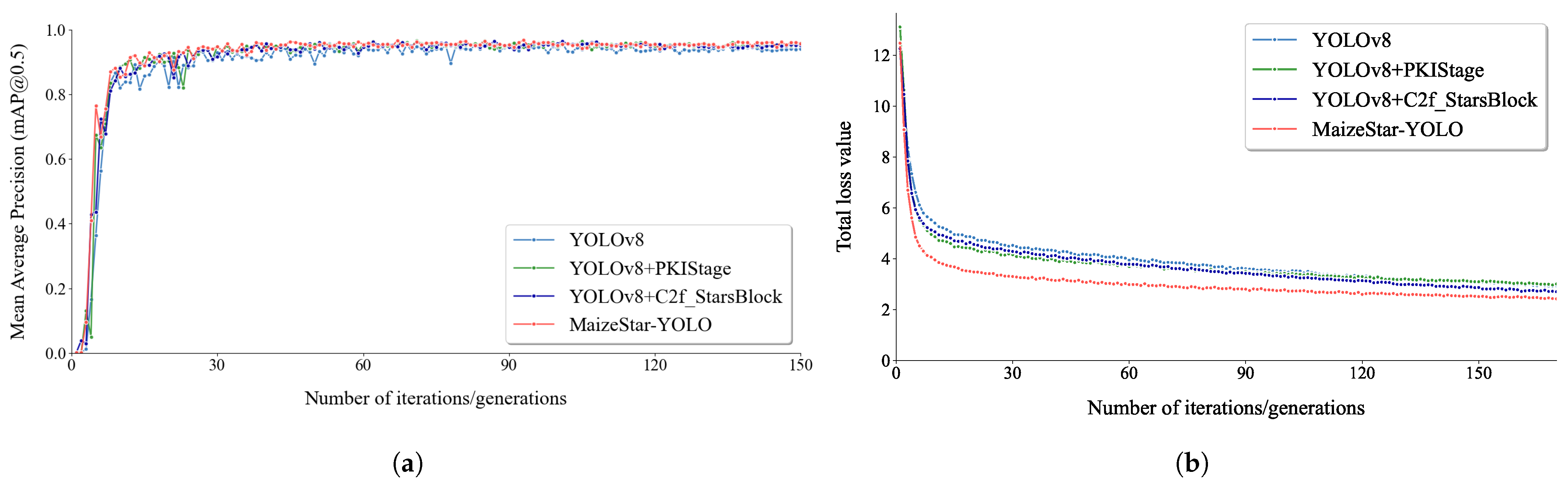

3.3. Contrast Experiment

3.4. Ablation Experiment

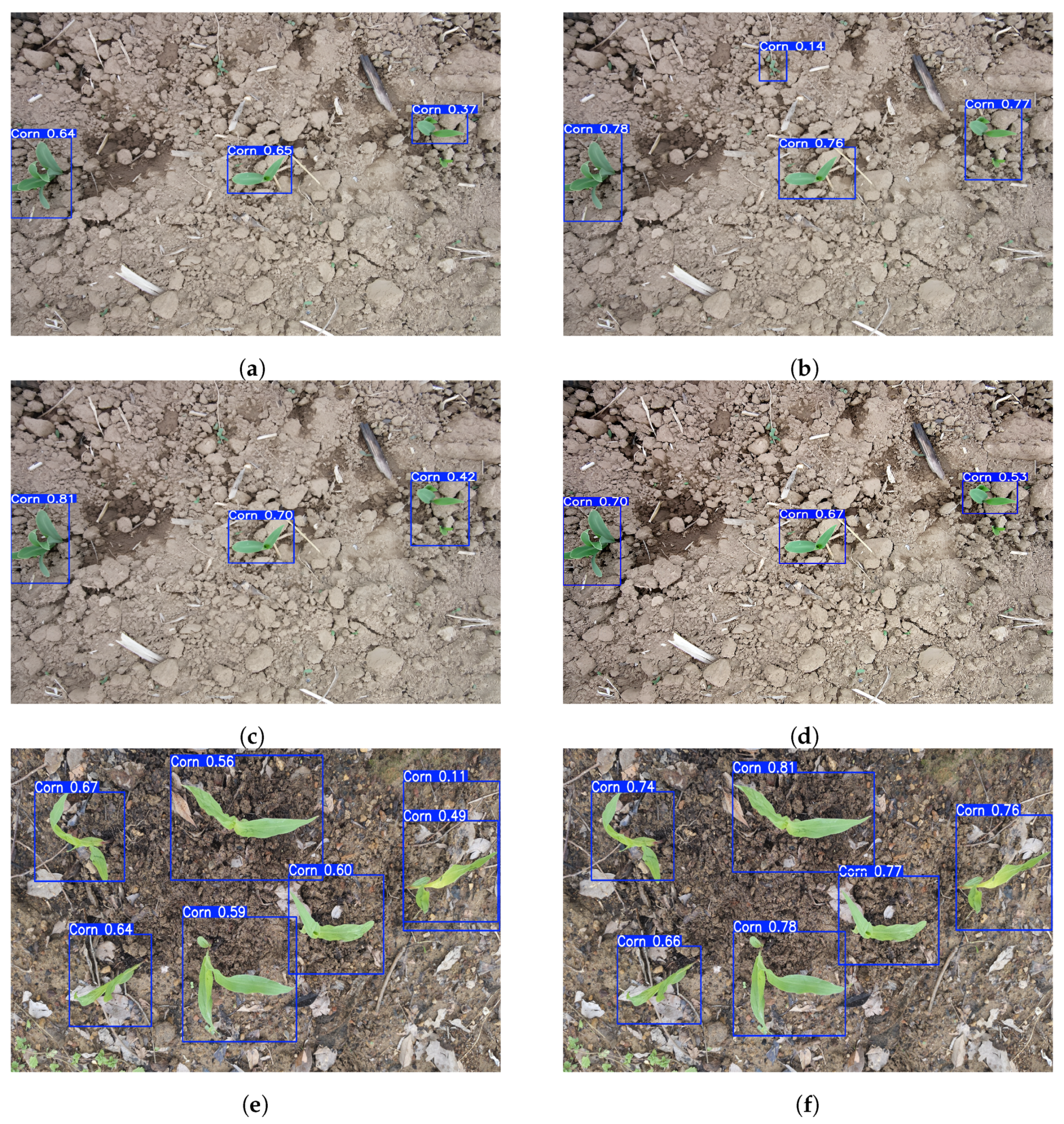

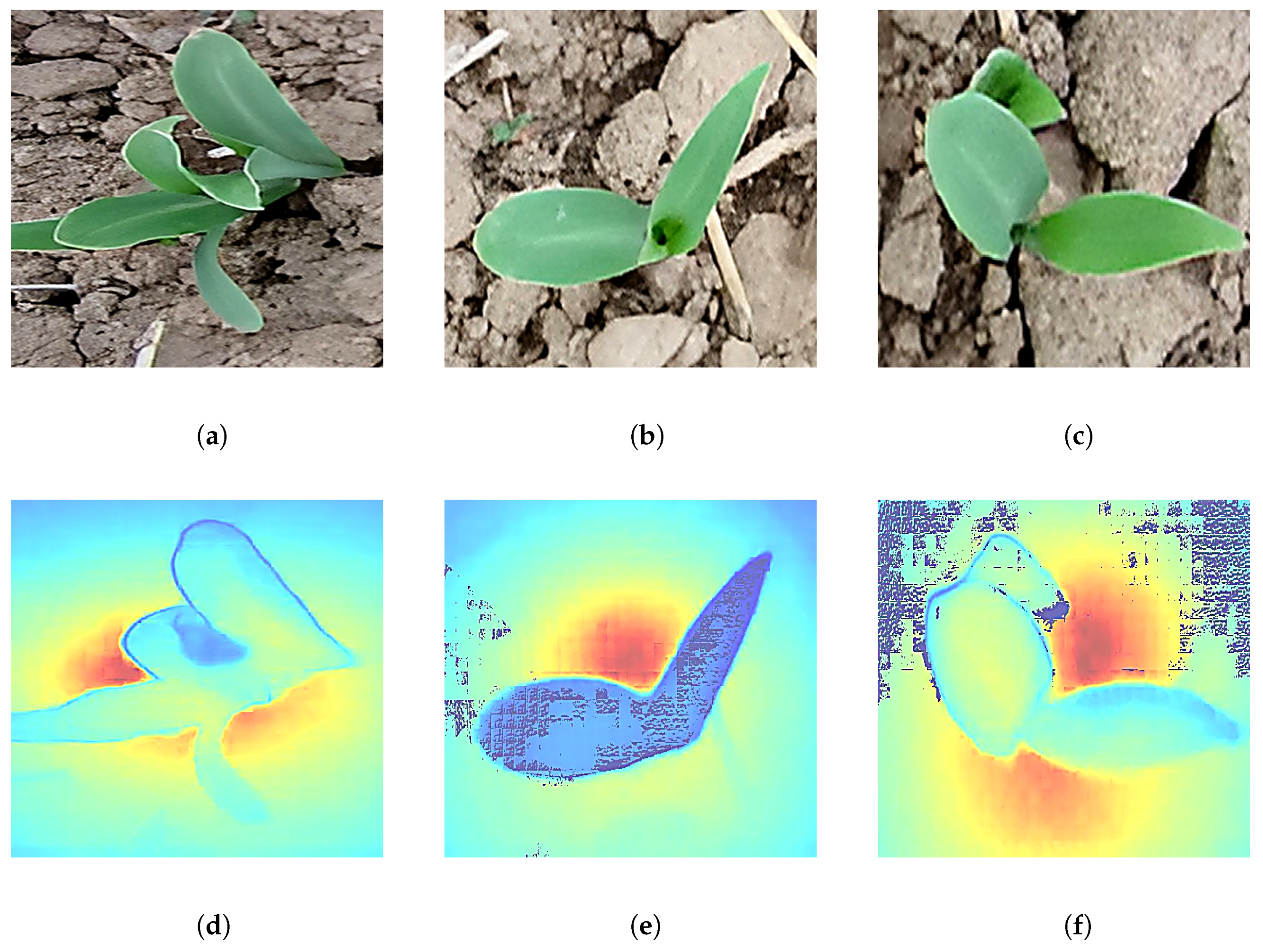

3.5. Model Checking and Application Visualization

4. Discussion

- (1) Inadequate Coverage of Later Maize Growth Stages in Model Validation

- (2) Lack of Deployment and Evaluation on Edge Devices

- (3) Broader Scientific and Practical Implications

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Epochs | 200 |
| Optimizer | SGD |
| Initial Learning Rate | 0.01 |
| Momentum | 0.9 |
| Weight Decay | |
| Image Size | 640 |
| Number of Workers | 8 |
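The training settings in the table above can be gathered into a single configuration object. The following is a minimal sketch rather than the authors' training script: the key names (`epochs`, `optimizer`, `lr0`, `momentum`, `weight_decay`, `imgsz`, `workers`) are an assumption modelled on Ultralytics-style training arguments, the helper `reported_settings` is hypothetical, and the weight decay is left unset because the table does not give its value.

```python
# Hyperparameters copied from the training-parameter table.
# Key names are an assumption: they mirror Ultralytics-style training arguments.
TRAIN_CONFIG = {
    "epochs": 200,
    "optimizer": "SGD",
    "lr0": 0.01,           # initial learning rate
    "momentum": 0.9,
    "weight_decay": None,  # value not given in the table, so left unset
    "imgsz": 640,          # input image size in pixels
    "workers": 8,          # number of data-loading workers
}


def reported_settings(config):
    """Return only the settings whose values the table actually reports."""
    return {key: value for key, value in config.items() if value is not None}


if __name__ == "__main__":
    for name, value in reported_settings(TRAIN_CONFIG).items():
        print(f"{name}={value}")
```

Keeping the unreported weight decay as `None` makes the gap explicit instead of silently substituting a framework default.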

| Model | mAP@0.5 (%) | Model Size (MB) | GFLOPs |
|---|---|---|---|
| YOLOv8n | 89.2 | 6.3 | 8.1 |
| YOLOv8s | 92.0 | 22.5 | 28.4 |
| YOLOv8m | 93.7 | 52.0 | 78.7 |
| YOLOv8l | 93.5 | 87.7 | 164.8 |
| YOLOv8x | 92.0 | 136.7 | 257.4 |
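One way to read the accuracy and cost figures for the five YOLOv8 variants is to ask which of them are not dominated, that is, for which no other variant has at least the same mAP@0.5 at no greater computational cost. The sketch below applies this Pareto check to the table values. It is an illustrative analysis under that stated criterion, not the selection procedure used in the study, and the function name `pareto_front` is hypothetical.

```python
# (mAP@0.5 in %, GFLOPs) for each variant, copied from the table above.
VARIANTS = {
    "YOLOv8n": (89.2, 8.1),
    "YOLOv8s": (92.0, 28.4),
    "YOLOv8m": (93.7, 78.7),
    "YOLOv8l": (93.5, 164.8),
    "YOLOv8x": (92.0, 257.4),
}


def pareto_front(variants):
    """Return the variants that no other variant dominates.

    A variant is dominated when another one has mAP at least as high and
    GFLOPs at least as low, with a strict improvement in one of the two.
    """
    front = []
    for name, (m_ap, gflops) in variants.items():
        dominated = any(
            other_map >= m_ap
            and other_gflops <= gflops
            and (other_map > m_ap or other_gflops < gflops)
            for other, (other_map, other_gflops) in variants.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


if __name__ == "__main__":
    print(pareto_front(VARIANTS))
```

Under this criterion the two largest variants drop out: YOLOv8l is dominated by YOLOv8m, and YOLOv8x by YOLOv8s.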

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@[0.5:0.95] (%) | GFLOPs |
|---|---|---|---|---|---|
| Faster R-CNN | 71.72 | 87.83 | 87.60 | – | 470.46 |
| SSD | 67.6 | 70.6 | 68.4 | – | 30.53 |
| NanoDet | 67.76 | 44 | 67.7 | 40.27 | 1.35 |
| YOLOv5s | 99.3 | 80.6 | 90.1 | 61 | 7.1 |
| YOLOv6n | 97.2 | 78.3 | 88 | 59.9 | 11.8 |
| YOLOv7-tiny | 95.1 | 86.7 | 86.1 | 50.1 | 6.52 |
| YOLOv8n | 98.6 | 78.9 | 89.2 | 60 | 8.1 |
| YOLOv11n | 98.7 | 81.7 | 90.5 | 61.7 | 6.3 |
| MaizeStar-YOLO | 98.1 | 86.1 | 92.8 | 62.3 | 3.0 |
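Precision and recall in the comparison table pull in different directions for several detectors, so a single balanced figure can make the rows easier to compare. The sketch below derives the F1 score, F1 = 2PR / (P + R), from the tabulated P and R values. F1 is not reported in the table; it is computed here purely as an illustration, and the names `PR_VALUES` and `f1_score` are hypothetical.

```python
# (precision %, recall %) for each detector, copied from the comparison table.
PR_VALUES = {
    "Faster R-CNN": (71.72, 87.83),
    "SSD": (67.6, 70.6),
    "NanoDet": (67.76, 44.0),
    "YOLOv5s": (99.3, 80.6),
    "YOLOv6n": (97.2, 78.3),
    "YOLOv7-tiny": (95.1, 86.7),
    "YOLOv8n": (98.6, 78.9),
    "YOLOv11n": (98.7, 81.7),
    "MaizeStar-YOLO": (98.1, 86.1),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Print the detectors from highest to lowest derived F1.
    ranked = sorted(PR_VALUES, key=lambda m: f1_score(*PR_VALUES[m]), reverse=True)
    for model in ranked:
        print(f"{model}: F1 = {f1_score(*PR_VALUES[model]):.2f}")
```

Because the harmonic mean penalizes an imbalance between the two inputs, a detector with very high precision but modest recall scores lower than its precision alone would suggest.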

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@[0.5:0.95] (%) | GFLOPs |
|---|---|---|---|---|---|
| YOLOv8 | 98.6 | 78.9 | 89.2 | 60 | 8.1 |
| +C2F_StarsBlock | 98.1 | 83.9 | 91.6 | 61.4 | 8.2 |
| +PKIStage | 97.5 | 85 | 91.8 | 61.5 | 2.9 |
| MaizeStar-YOLO | 98.1 | 86.1 | 92.8 | 62.3 | 3.0 |
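The contribution of each module in the ablation table is easiest to see as a change relative to the YOLOv8 baseline row. The sketch below computes those differences in percentage points, together with the relative reduction in GFLOPs. It only re-expresses the tabulated values; the helper names `delta_vs_baseline` and `gflops_reduction_pct` are hypothetical.

```python
# Rows copied from the ablation table.
ABLATION = {
    "YOLOv8": {"P": 98.6, "R": 78.9, "mAP50": 89.2, "mAP50_95": 60.0, "GFLOPs": 8.1},
    "+C2F_StarsBlock": {"P": 98.1, "R": 83.9, "mAP50": 91.6, "mAP50_95": 61.4, "GFLOPs": 8.2},
    "+PKIStage": {"P": 97.5, "R": 85.0, "mAP50": 91.8, "mAP50_95": 61.5, "GFLOPs": 2.9},
    "MaizeStar-YOLO": {"P": 98.1, "R": 86.1, "mAP50": 92.8, "mAP50_95": 62.3, "GFLOPs": 3.0},
}


def delta_vs_baseline(rows, baseline="YOLOv8"):
    """Difference of every metric from the baseline row, rounded to 0.1."""
    base = rows[baseline]
    return {
        name: {metric: round(value - base[metric], 1) for metric, value in row.items()}
        for name, row in rows.items()
        if name != baseline
    }


def gflops_reduction_pct(rows, name, baseline="YOLOv8"):
    """Relative GFLOPs reduction of a configuration versus the baseline, in %."""
    base = rows[baseline]["GFLOPs"]
    return round(100 * (base - rows[name]["GFLOPs"]) / base, 1)


if __name__ == "__main__":
    for name, deltas in delta_vs_baseline(ABLATION).items():
        print(name, deltas, f"GFLOPs reduction: {gflops_reduction_pct(ABLATION, name)}%")
```

For the full model this gives a gain of 3.6 points in mAP@0.5 and 7.2 points in recall over the baseline, at roughly 63% fewer GFLOPs.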

| Crop/Stage | P (%) | R (%) | mAP@0.5 (%) | mAP@[0.5:0.95] (%) | GFLOPs |
|---|---|---|---|---|---|
| Maize | 78.3 | 52.9 | 70 | 37.6 | 3.0 |
| Rice (Seedling Stage) | 90.7 | 59.6 | 75.8 | 39.4 | 3.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chu, T.; Zha, H.; Wang, Y.; Yao, Z.; Wang, X.; Wu, C.; Liao, J. MaizeStar-YOLO: Precise Detection and Localization of Seedling-Stage Maize. Agronomy 2025, 15, 1788. https://doi.org/10.3390/agronomy15081788
