Automatic Potato Crop Beetle Recognition Method Based on Multiscale Asymmetric Convolution Blocks

Abstract

1. Introduction

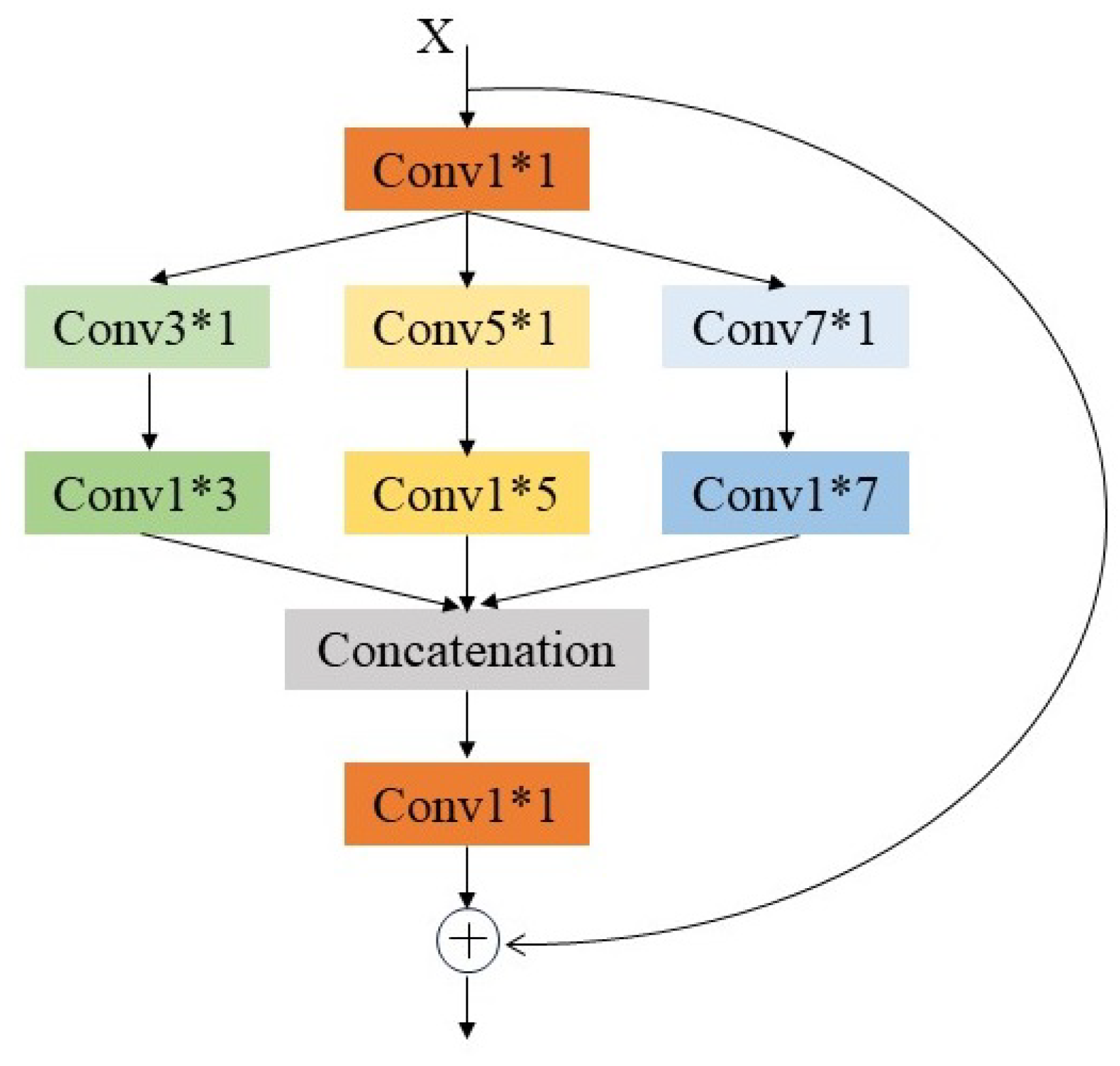

- A multiscale asymmetric convolution block was designed and developed to extract features at multiple scales by integrating asymmetric convolution kernels of different sizes in parallel.

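- A novel CNN named ‘MSAC-ResNet’, built on this multiscale asymmetric convolution block, was proposed to distinguish between five beetle species: the Colorado potato beetle, Henosepilachna vigintioctopunctata, Propylea japonica, Harmonia axyridis, and Coccinella septempunctata. The proposed network outperforms five other SOTA networks (AlexNet, MobileNet-v3, EfficientNet-b0, DenseNet, and ResNet101), reaching 99.11% accuracy compared with 98.06% for the strongest baseline, ResNet101.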

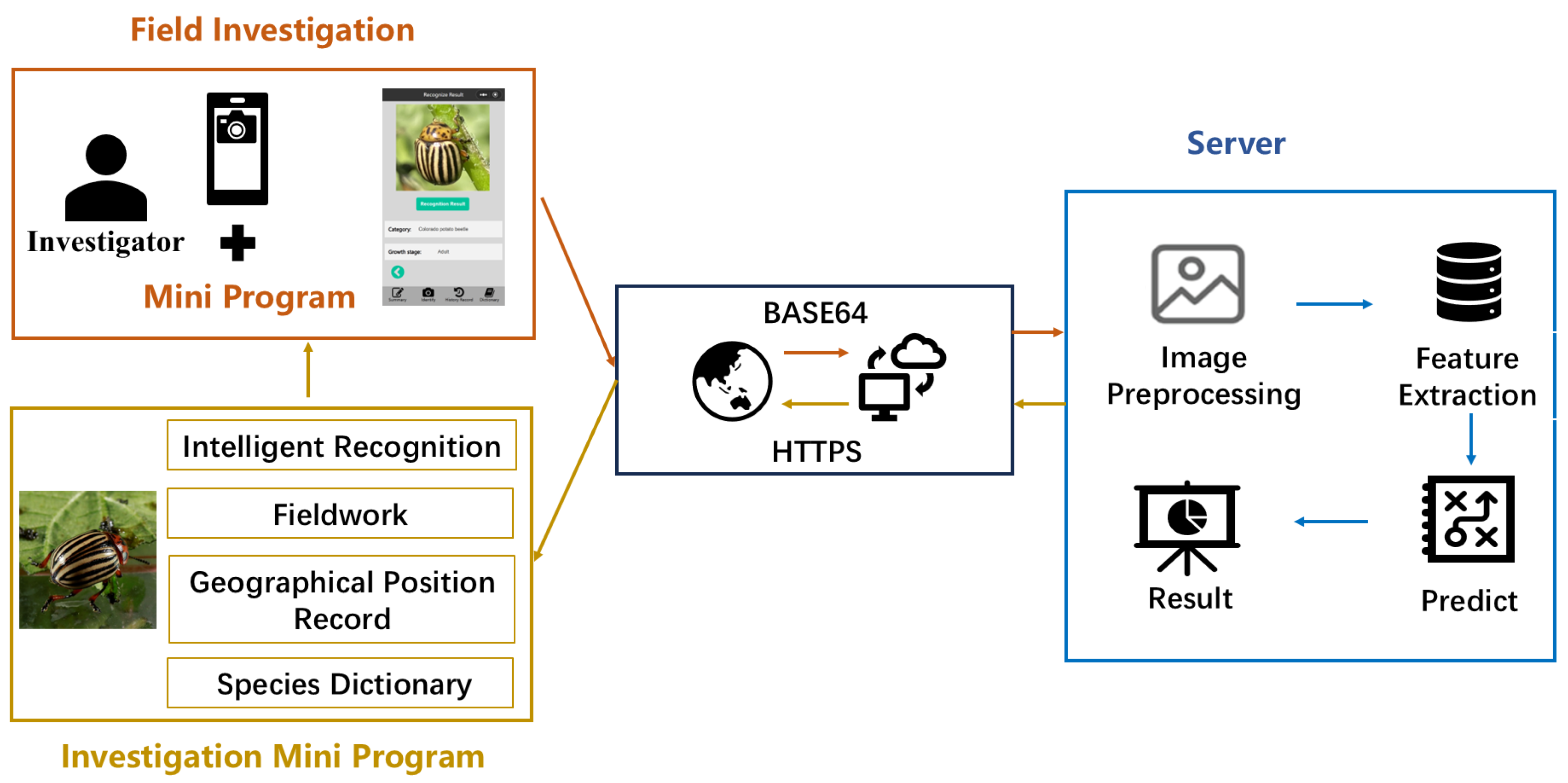

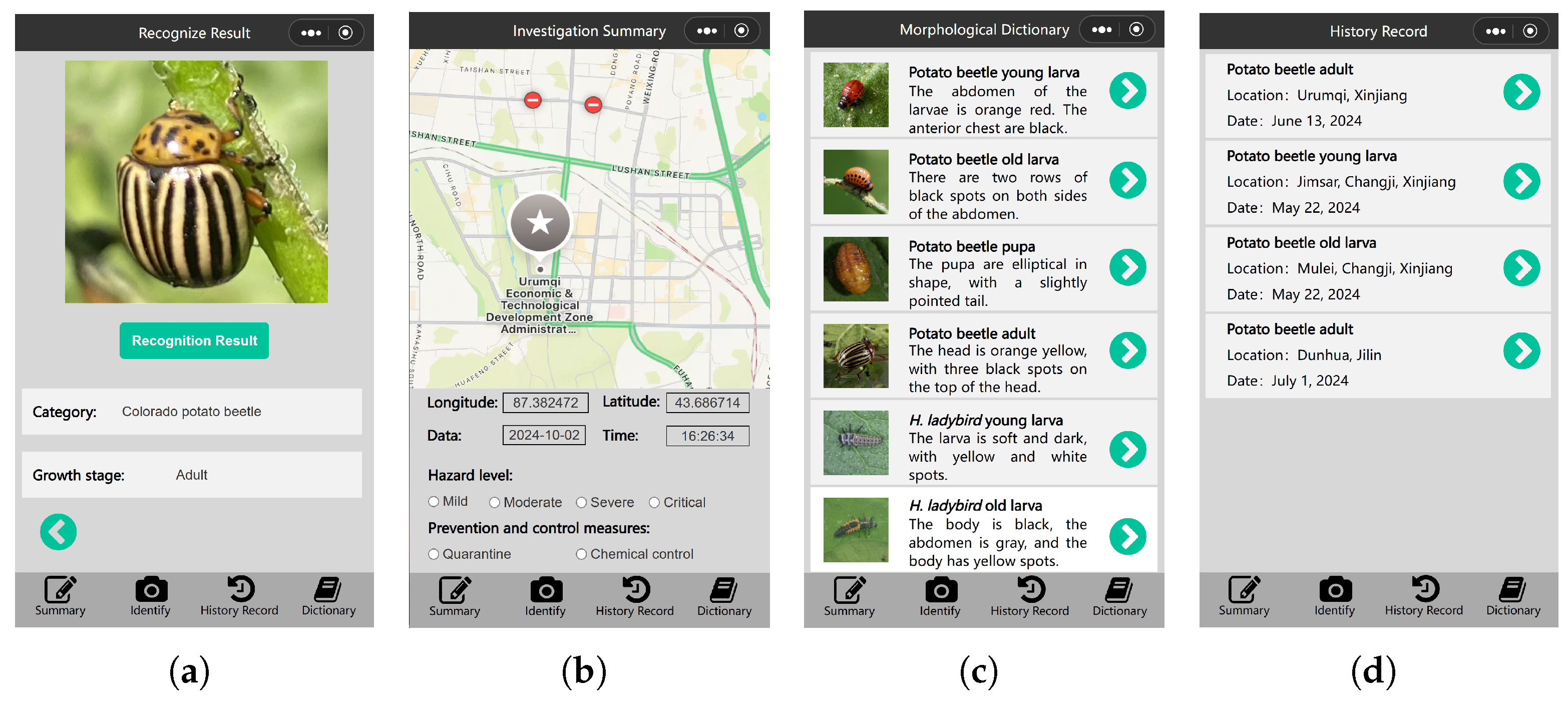

- The field investigation mini-program developed in this work identifies every developmental stage of the five beetle species (young larva, older larva, pupa, and adult) and promptly provides management (or protection) suggestions.
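The first contribution describes a block that runs asymmetric kernels of different sizes in parallel. The following is a minimal single-channel sketch of that idea, not the authors' implementation. It assumes that each branch is a 1×k convolution followed by a k×1 convolution (the factorization popularized by Inception-v3), that the kernel sizes are k = 3, 5 and 7, and that branch outputs are stacked as separate channels; the helper names, kernel sizes and fixed weights are all hypothetical. A real block would operate on multi-channel tensors with learned weights and would typically add batch normalization and a nonlinearity.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def conv2d_same(x: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 2D cross-correlation with zero padding.

    The output has the same shape as `x`; kernel height and width are
    assumed to be odd so that every kernel has a centre element.
    """
    rows, cols = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    ii, jj = i + a - ph, j + b - pw
                    if 0 <= ii < rows and 0 <= jj < cols:  # outside = zero padding
                        acc += kernel[a][b] * x[ii][jj]
            out[i][j] = acc
    return out


def asymmetric_branch(x: Matrix, row_w: Sequence[float], col_w: Sequence[float]) -> Matrix:
    """A 1×k convolution followed by a k×1 convolution (hypothetical branch design)."""
    horizontal = conv2d_same(x, [list(row_w)])            # 1×k kernel
    return conv2d_same(horizontal, [[w] for w in col_w])  # k×1 kernel


def multiscale_asymmetric_block(
    x: Matrix, branches: Sequence[Tuple[Sequence[float], Sequence[float]]]
) -> List[Matrix]:
    """Apply every branch to the same input in parallel and keep each result
    as a separate output channel (a channel-wise concatenation)."""
    return [asymmetric_branch(x, row_w, col_w) for row_w, col_w in branches]


# Hypothetical 8×8 input and fixed weights; a trained network would learn these.
image = [[float((3 * i + j) % 5) for j in range(8)] for i in range(8)]
branches = [([float(b + 1) for b in range(k)], [1.0] * k) for k in (3, 5, 7)]
features = multiscale_asymmetric_block(image, branches)
print(len(features), len(features[0]), len(features[0][0]))  # 3 8 8

# Each 1×k + k×1 pair matches one k×k kernel with entries col_w[a] * row_w[b],
# while storing only 2k weights instead of k*k.
for (row_w, col_w), fmap in zip(branches, features):
    k = len(row_w)
    full = conv2d_same(image, [[col_w[a] * row_w[b] for b in range(k)] for a in range(k)])
    assert all(abs(p - q) < 1e-9 for r1, r2 in zip(fmap, full) for p, q in zip(r1, r2))
    print(f"k={k}: {2 * k} weights vs {k * k}")
```

The equivalence check illustrates why asymmetric kernels suit a multiscale design: the larger scales add receptive field at linear rather than quadratic parameter cost (14 instead of 49 weights at k = 7). How MSAC-ResNet wires such blocks into residual units is not specified in this section.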

2. Materials and Methods

2.1. Overall Framework of the Colorado Potato Beetle Investigation System

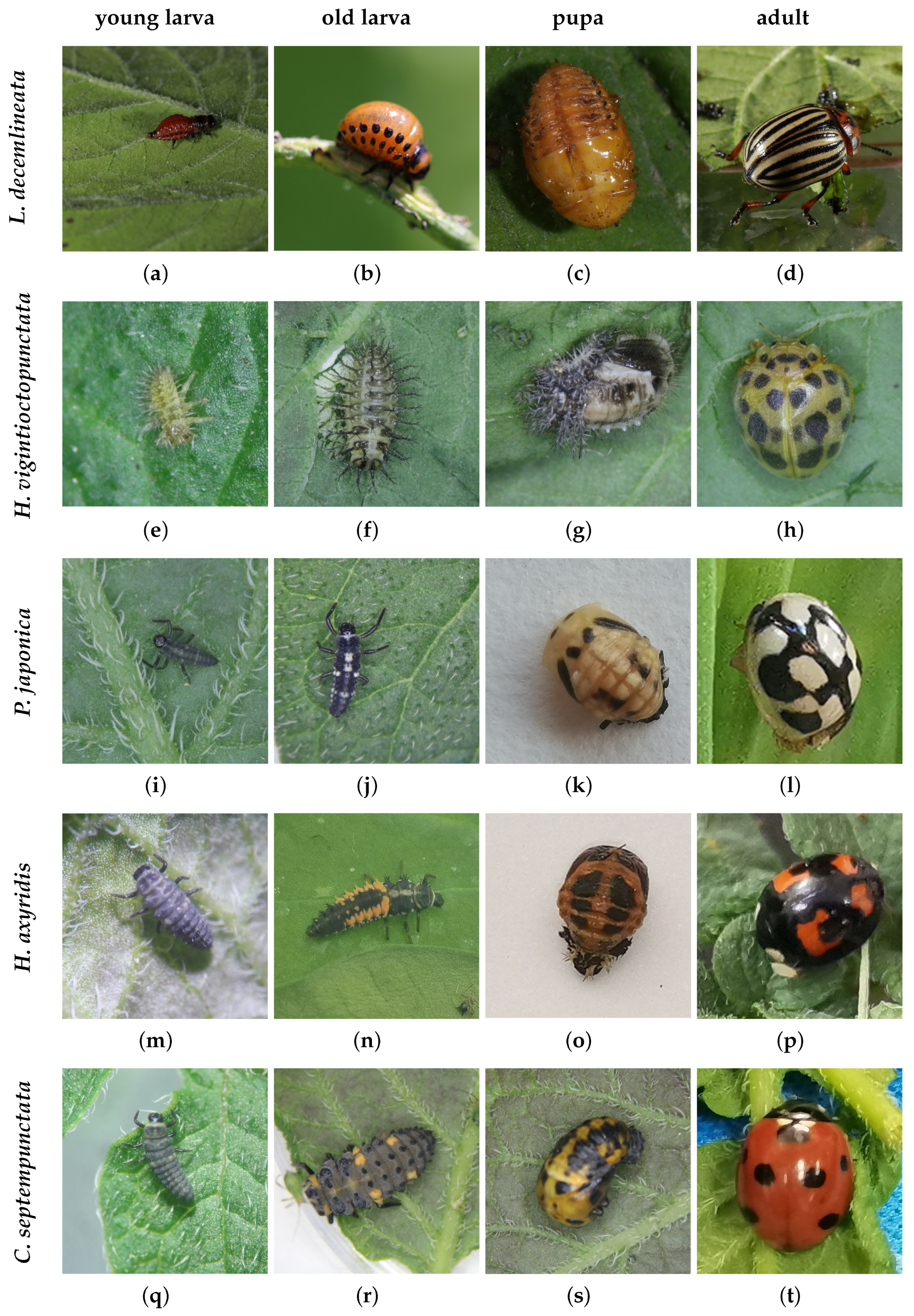

2.2. Beetle Image Dataset Organization

2.2.1. Image Collection

2.2.2. Data Augmentation

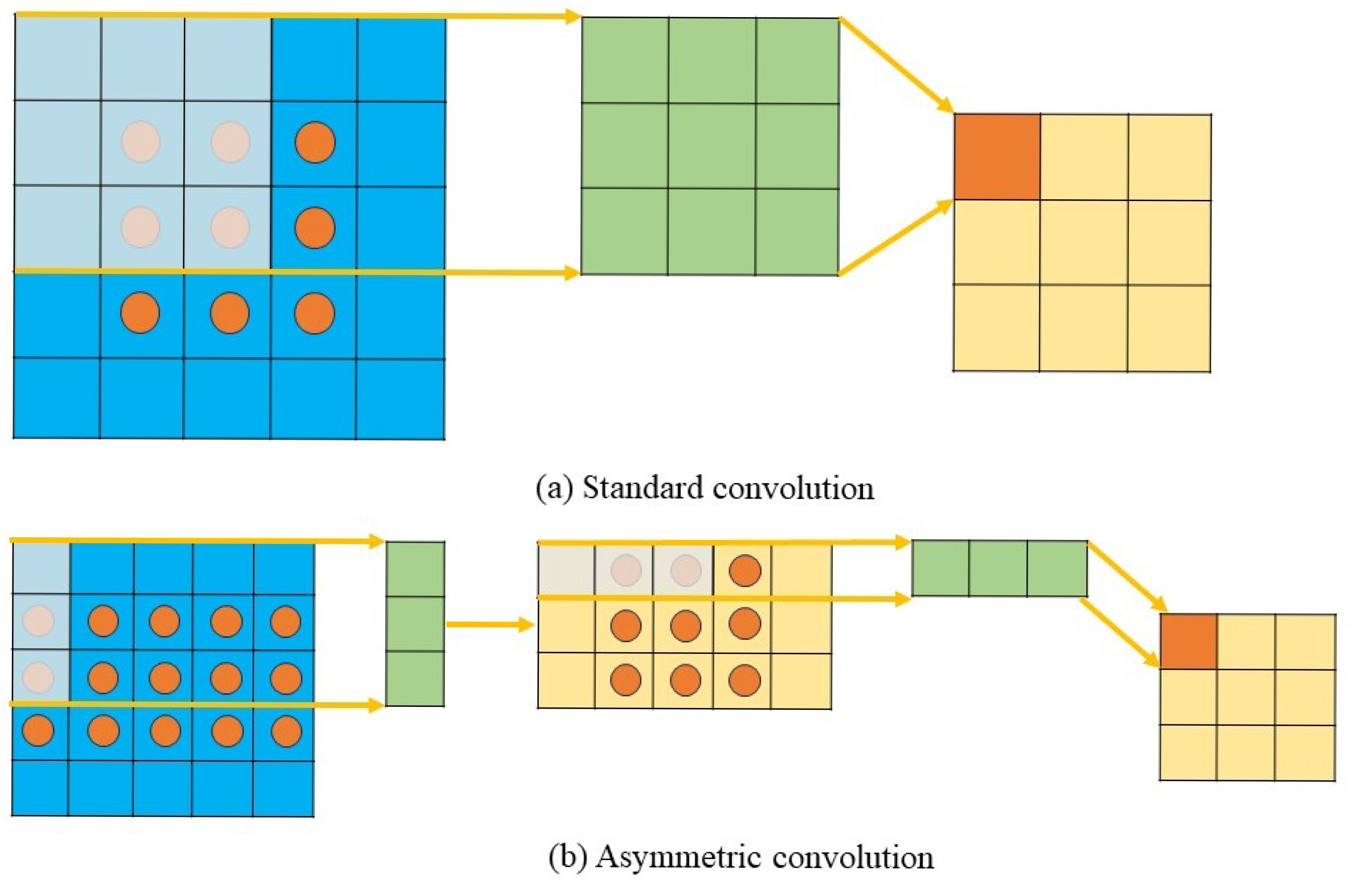

2.3. Asymmetric Convolution

2.4. Multiscale Asymmetric Convolution Block

2.5. MSAC-ResNet Architecture

2.6. Transfer Learning

3. Results

3.1. Performance Evaluation

3.2. Comparison with SOTA Networks

3.3. The Design of the Field Investigation Mini-Program

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Species | Young Larva | Older Larva | Pupa | Adult Insect |
|---|---|---|---|---|
| Colorado potato beetle | 718 | 622 | 594 | 507 |
| Henosepilachna vigintioctopunctata | 661 | 613 | 574 | 693 |
| Propylea japonica | 507 | 663 | 634 | 457 |
| Harmonia axyridis | 519 | 544 | 510 | 590 |
| Coccinella septempunctata | 554 | 520 | 393 | 452 |
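The counts above can be totalled per species and per stage to gauge class balance. The sketch below does only that arithmetic on the transcribed values. The table does not say whether the counts are taken before or after data augmentation, or how they are split into training and test sets, and treating each species-stage cell as one class is an assumption suggested by the per-stage results reported later.

```python
# Counts copied from the table above, per species:
# (young larva, older larva, pupa, adult insect)
COUNTS = {
    "Colorado potato beetle": (718, 622, 594, 507),
    "Henosepilachna vigintioctopunctata": (661, 613, 574, 693),
    "Propylea japonica": (507, 663, 634, 457),
    "Harmonia axyridis": (519, 544, 510, 590),
    "Coccinella septempunctata": (554, 520, 393, 452),
}
STAGES = ("Young larva", "Older larva", "Pupa", "Adult insect")

species_totals = {name: sum(row) for name, row in COUNTS.items()}
stage_totals = {
    stage: sum(row[i] for row in COUNTS.values()) for i, stage in enumerate(STAGES)
}
grand_total = sum(species_totals.values())

# Treat every species-stage cell as one class and compare the largest and smallest.
cells = {
    (name, stage): row[i] for name, row in COUNTS.items() for i, stage in enumerate(STAGES)
}
largest = max(cells, key=cells.get)
smallest = min(cells, key=cells.get)
ratio = cells[largest] / cells[smallest]

print(species_totals)
print(stage_totals)
print(grand_total)  # 11325
print(largest, smallest, round(ratio, 2))
```

The counts sum to 11,325, and the largest cell (Colorado potato beetle young larvae, 718) is about 1.8 times the smallest (Coccinella septempunctata pupae, 393), a moderate imbalance.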

| Parameter | Value |
|---|---|
| Optimization algorithm | SGD |
| Initial learning rate | 0.001 |
| Epoch | 150 |
| Batch size | 64 |
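These training settings translate into a plain minibatch loop. The sketch below is a toy illustration only: it trains a hypothetical one-dimensional linear model rather than MSAC-ResNet, and it uses vanilla SGD without momentum, weight decay or a learning-rate schedule, none of which are given in the table. Its purpose is to show how the epoch count and batch size determine the number of parameter updates.

```python
import math
import random

# Values from the table above; momentum, weight decay and schedule are not specified there.
CONFIG = {"lr": 0.001, "epochs": 150, "batch_size": 64}


def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of minibatches per epoch when the last partial batch is kept."""
    return math.ceil(num_samples / batch_size)


def sgd_step(params, grads, lr):
    """Vanilla SGD update: w <- w - lr * dL/dw."""
    return [w - lr * g for w, g in zip(params, grads)]


def train_toy_model(config, num_samples=640, seed=0):
    """Fit y = w*x + b to hypothetical data drawn from y = 3x + 1 with minibatch SGD."""
    rng = random.Random(seed)
    data = [(x, 3.0 * x + 1.0) for x in (rng.uniform(-1.0, 1.0) for _ in range(num_samples))]
    w, b = 0.0, 0.0
    steps, epoch_losses = 0, []
    for _ in range(config["epochs"]):
        rng.shuffle(data)
        for start in range(0, len(data), config["batch_size"]):
            batch = data[start:start + config["batch_size"]]
            # Gradients of the mean squared error over the minibatch.
            grad_w = sum(2.0 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2.0 * (w * x + b - y) for x, y in batch) / len(batch)
            w, b = sgd_step([w, b], [grad_w, grad_b], config["lr"])
            steps += 1
        epoch_losses.append(sum((w * x + b - y) ** 2 for x, y in data) / len(data))
    return w, b, steps, epoch_losses


w, b, steps, losses = train_toy_model(CONFIG)
print(iterations_per_epoch(640, CONFIG["batch_size"]), steps)  # 10 1500
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

For the real model, the update count would be 150 × ceil(N / 64), where N is the training-set size, which this section does not state.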

| Network | Precision | Recall | F1-score | Accuracy | Inference Time (s) |
|---|---|---|---|---|---|
| AlexNet | 97.21% | 96.79% | 96.85% | 96.79% | 4.335 |
| MobileNet-v3 | 96.80% | 95.97% | 96.00% | 95.97% | 4.751 |
| EfficientNet-b0 | 96.77% | 96.98% | 96.78% | 96.98% | 4.691 |
| DenseNet | 97.96% | 97.61% | 97.58% | 97.61% | 4.557 |
| ResNet101 | 98.20% | 98.06% | 98.08% | 98.06% | 4.650 |
| MSAC-ResNet | 99.18% | 99.11% | 99.11% | 99.11% | 4.505 |
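In the comparison table, recall equals accuracy for every network. That pattern is exactly what support-weighted averaging produces: weighting each class's recall TP_c / n_c by its share n_c / N gives Σ TP_c / N, which is the accuracy. The averaging scheme is not stated in this section, so the hypothetical helper below (not the authors' evaluation code) computes both weighted and macro averages for comparison.

```python
from collections import Counter


def classification_metrics(y_true, y_pred, average="weighted"):
    """Precision, recall, F1 and accuracy for a multiclass problem.

    average="weighted" weights each class by its support (number of true samples);
    average="macro" weights all classes equally.
    """
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # class p was predicted wrongly
            fn[t] += 1  # true class t was missed
    support = Counter(y_true)
    n = len(y_true)

    def ratio(a, b):
        return a / b if b else 0.0

    precision = {c: ratio(tp[c], tp[c] + fp[c]) for c in labels}
    recall = {c: ratio(tp[c], tp[c] + fn[c]) for c in labels}
    f1 = {c: ratio(2 * precision[c] * recall[c], precision[c] + recall[c]) for c in labels}
    if average == "weighted":
        weight = {c: support[c] / n for c in labels}
    elif average == "macro":
        weight = {c: 1 / len(labels) for c in labels}
    else:
        raise ValueError(f"unknown average: {average}")
    return {
        "precision": sum(weight[c] * precision[c] for c in labels),
        "recall": sum(weight[c] * recall[c] for c in labels),
        "f1": sum(weight[c] * f1[c] for c in labels),
        "accuracy": sum(tp.values()) / n,
    }


# Hypothetical predictions over three classes.
y_true = ["a"] * 5 + ["b"] * 3 + ["c"] * 2
y_pred = ["a", "a", "a", "a", "b", "b", "b", "a", "c", "c"]
weighted = classification_metrics(y_true, y_pred, "weighted")
macro = classification_metrics(y_true, y_pred, "macro")
print(round(weighted["recall"], 4), round(weighted["accuracy"], 4))  # 0.8 0.8
print(round(macro["recall"], 4))  # 0.8222
```

The identity holds only for recall; weighted precision and F1 can still differ from accuracy, as they do in the table.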

| Species | Stage | AlexNet | MobileNet-v3 | EfficientNet-b0 | DenseNet | ResNet101 | MSAC-ResNet |
|---|---|---|---|---|---|---|---|
| Colorado potato beetle | Young larva | 99% | 97% | 91% | 100% | 95% | 100% |
| Colorado potato beetle | Older larva | 99% | 97% | 98% | 100% | 97% | 100% |
| Colorado potato beetle | Pupa | 98% | 99% | 100% | 99% | 100% | 100% |
| Colorado potato beetle | Adult insect | 70% | 57% | 92% | 100% | 93% | 100% |
| H. vigintioctopunctata | Young larva | 93% | 93% | 93% | 94% | 94% | 93% |
| H. vigintioctopunctata | Older larva | 100% | 100% | 100% | 100% | 100% | 100% |
| H. vigintioctopunctata | Pupa | 100% | 100% | 100% | 100% | 100% | 100% |
| H. vigintioctopunctata | Adult insect | 100% | 100% | 100% | 100% | 100% | 100% |
| P. japonica | Young larva | 98% | 99% | 93% | 100% | 100% | 100% |
| P. japonica | Older larva | 100% | 100% | 100% | 100% | 100% | 100% |
| P. japonica | Pupa | 90% | 91% | 85% | 99% | 96% | 97% |
| P. japonica | Adult insect | 100% | 100% | 100% | 100% | 100% | 100% |
| H. axyridis | Young larva | 100% | 100% | 100% | 100% | 100% | 100% |
| H. axyridis | Older larva | 94% | 98% | 99% | 99% | 97% | 100% |
| H. axyridis | Pupa | 100% | 99% | 100% | 100% | 100% | 100% |
| H. axyridis | Adult insect | 98% | 98% | 98% | 100% | 98% | 100% |
| C. septempunctata | Young larva | 100% | 100% | 100% | 72% | 100% | 100% |
| C. septempunctata | Older larva | 92% | 98% | 87% | 91% | 88% | 91% |
| C. septempunctata | Pupa | 100% | 99% | 100% | 100% | 100% | 100% |
| C. septempunctata | Adult insect | 100% | 98% | 100% | 100% | 98% | 100% |
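The per-class table is easier to compare when each network is reduced to an average and its weakest class. The sketch below does that from the transcribed values. The mean is an unweighted average over the 20 species-stage rows and need not equal the overall accuracy in the network comparison table, because per-class test-set sizes are not given here; the metric behind the per-class percentages is also not named in this section.

```python
NETWORKS = ("AlexNet", "MobileNet-v3", "EfficientNet-b0", "DenseNet", "ResNet101", "MSAC-ResNet")

# Per-class percentages copied from the table above, keyed by (species, stage).
ROWS = {
    ("Colorado potato beetle", "Young larva"): (99, 97, 91, 100, 95, 100),
    ("Colorado potato beetle", "Older larva"): (99, 97, 98, 100, 97, 100),
    ("Colorado potato beetle", "Pupa"): (98, 99, 100, 99, 100, 100),
    ("Colorado potato beetle", "Adult insect"): (70, 57, 92, 100, 93, 100),
    ("H. vigintioctopunctata", "Young larva"): (93, 93, 93, 94, 94, 93),
    ("H. vigintioctopunctata", "Older larva"): (100, 100, 100, 100, 100, 100),
    ("H. vigintioctopunctata", "Pupa"): (100, 100, 100, 100, 100, 100),
    ("H. vigintioctopunctata", "Adult insect"): (100, 100, 100, 100, 100, 100),
    ("P. japonica", "Young larva"): (98, 99, 93, 100, 100, 100),
    ("P. japonica", "Older larva"): (100, 100, 100, 100, 100, 100),
    ("P. japonica", "Pupa"): (90, 91, 85, 99, 96, 97),
    ("P. japonica", "Adult insect"): (100, 100, 100, 100, 100, 100),
    ("H. axyridis", "Young larva"): (100, 100, 100, 100, 100, 100),
    ("H. axyridis", "Older larva"): (94, 98, 99, 99, 97, 100),
    ("H. axyridis", "Pupa"): (100, 99, 100, 100, 100, 100),
    ("H. axyridis", "Adult insect"): (98, 98, 98, 100, 98, 100),
    ("C. septempunctata", "Young larva"): (100, 100, 100, 72, 100, 100),
    ("C. septempunctata", "Older larva"): (92, 98, 87, 91, 88, 91),
    ("C. septempunctata", "Pupa"): (100, 99, 100, 100, 100, 100),
    ("C. septempunctata", "Adult insect"): (100, 98, 100, 100, 98, 100),
}


def summarize(rows, networks):
    """Unweighted mean over all classes and the weakest class for each network."""
    summary = {}
    for i, net in enumerate(networks):
        scores = {cls: values[i] for cls, values in rows.items()}
        worst = min(scores, key=scores.get)
        summary[net] = (sum(scores.values()) / len(scores), worst, scores[worst])
    return summary


for net, (mean, worst, value) in summarize(ROWS, NETWORKS).items():
    print(f"{net:16s} mean={mean:.2f}%  weakest={worst[0]} {worst[1].lower()} ({value}%)")
```

On these values, MSAC-ResNet's unweighted mean is 99.05% and its weakest class is C. septempunctata older larvae (91%), whereas the weakest class for both AlexNet and MobileNet-v3 is adult Colorado potato beetles (70% and 57%).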

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, J.; Xian, X.; Qiu, M.; Li, X.; Wei, Y.; Liu, W.; Zhang, G.; Jiang, L. Automatic Potato Crop Beetle Recognition Method Based on Multiscale Asymmetric Convolution Blocks. Agronomy 2025, 15, 1557. https://doi.org/10.3390/agronomy15071557

Cao J, Xian X, Qiu M, Li X, Wei Y, Liu W, Zhang G, Jiang L. Automatic Potato Crop Beetle Recognition Method Based on Multiscale Asymmetric Convolution Blocks. Agronomy. 2025; 15(7):1557. https://doi.org/10.3390/agronomy15071557

Chicago/Turabian Style: Cao, Jingjun, Xiaoqing Xian, Minghui Qiu, Xin Li, Yajie Wei, Wanxue Liu, Guifen Zhang, and Lihua Jiang. 2025. "Automatic Potato Crop Beetle Recognition Method Based on Multiscale Asymmetric Convolution Blocks" Agronomy 15, no. 7: 1557. https://doi.org/10.3390/agronomy15071557

APA Style: Cao, J., Xian, X., Qiu, M., Li, X., Wei, Y., Liu, W., Zhang, G., & Jiang, L. (2025). Automatic Potato Crop Beetle Recognition Method Based on Multiscale Asymmetric Convolution Blocks. Agronomy, 15(7), 1557. https://doi.org/10.3390/agronomy15071557