Large-Scale Apple Orchard Identification from Multi-Temporal Sentinel-2 Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Data Source

2.2.1. Remote Sensing Data

Sentinel-2 Level-2A Imagery

Google Earth Ultra High-Resolution Imagery

2.2.2. Other Data

Field Measurements

Official Statistics

2.3. Methods

2.3.1. Data Preprocessing

2.3.2. Dataset Construction

Random Forest Apple Orchard Classification

K-Means Apple Orchard Classification

Multi-Source Classification Result Fusion

Data Augmentation

2.3.3. Feature Optimization

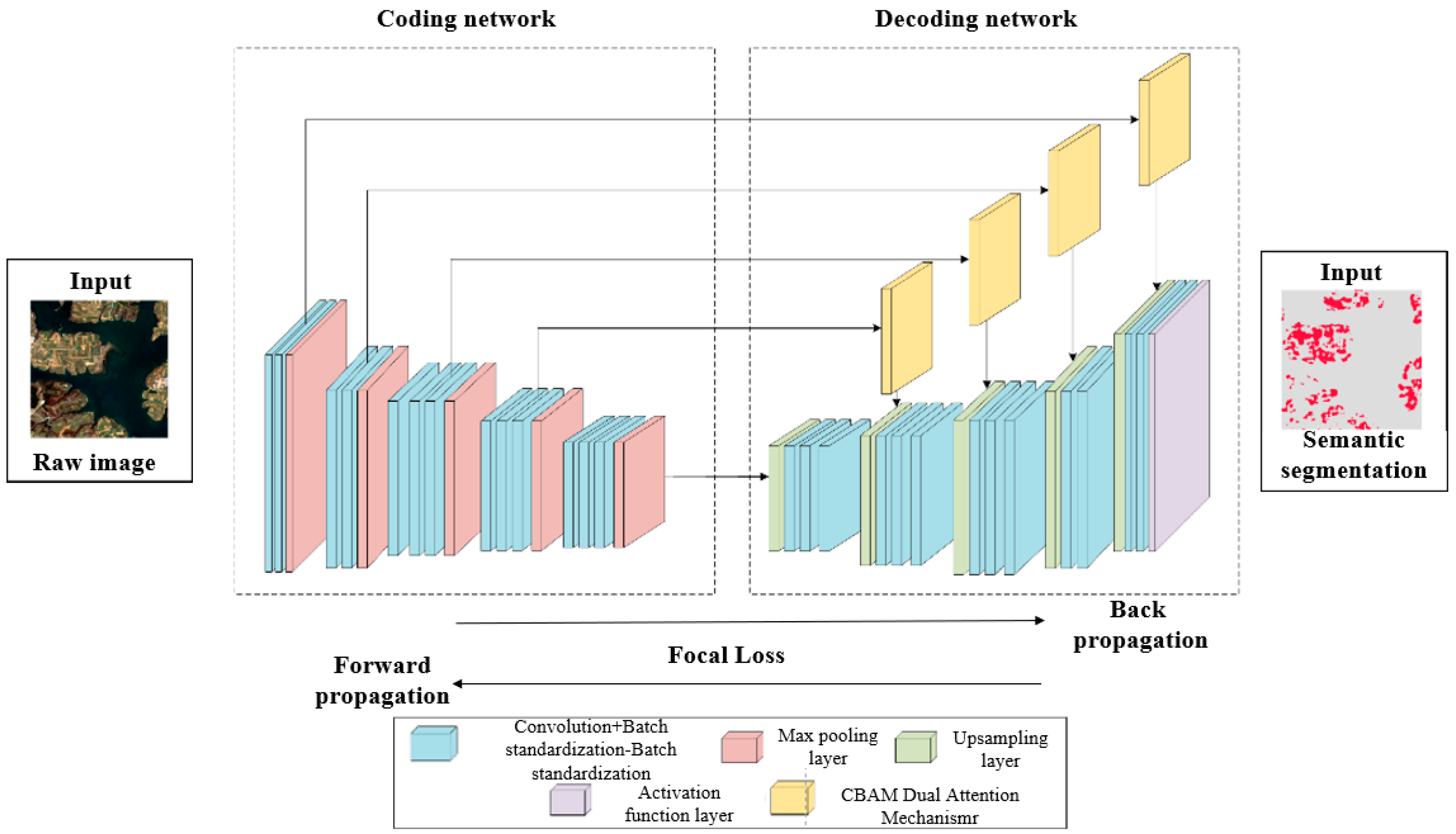

2.3.4. AOCF-SegNet Model Construction

Attention Mechanism

Loss Function

Hyperparameter Optimization

2.3.5. Accuracy Assessment

3. Results

3.1. Multi-Temporal Feature Variable Selection

3.2. Models and Phases for Apple Mapping

3.3. Apple Orchard Mapping with AOCF-SegNet Model

3.4. Apple Orchard Extraction Maps in Yantai

4. Discussion

4.1. AOCF-SegNet Semantic Segmentation Network

4.2. Automatic Construction of Apple Orchard Dataset

4.3. Potential Limitations

5. Conclusions

- Classifying apple orchards with two machine learning methods (random forest and K-means) and fusing the results lets the two classifications validate each other, which improves the reliability of the sample set. This approach partly addresses the difficulty of constructing sample sets for apple orchard extraction from satellite imagery.

- The SegNet model is better suited to extracting apple orchard information than FCN-8s and U-Net. In the multi-temporal classification results, SegNet achieves the highest accuracy. It also delineates more regular boundaries between apple and non-apple areas and, to a certain extent, suppresses internal fragmentation and misclassification.

- The AOCF-SegNet model extracts apple orchard information more reliably and reduces both omissions and misclassifications. Compared with the original SegNet model, OA, F1-Score, MIoU, and FWIoU improved by 3.00, 4.74, 3.86, and 3.15 percentage points, respectively. In addition, the extracted apple orchard area was consistent with the statistical data, achieving an accuracy of 71.97%.
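The mutual validation described in the first conclusion can be illustrated with a minimal sketch. The exact fusion rule is not stated here, so the rule below (keep a pixel label only where the random forest and K-means maps agree, and mark all other pixels as "ignore") is an assumption, as are the function name `fuse_labels` and the ignore value 255.

```python
import numpy as np

def fuse_labels(rf_mask, km_mask, ignore_value=255):
    """Keep labels where both classification maps agree (assumed rule).

    rf_mask, km_mask: integer label arrays of identical shape
    (e.g. 1 = apple orchard, 0 = other). Disagreeing pixels are set to
    ignore_value so they can be excluded from the training samples.
    """
    rf_mask = np.asarray(rf_mask)
    km_mask = np.asarray(km_mask)
    if rf_mask.shape != km_mask.shape:
        raise ValueError("masks must have the same shape")
    fused = np.full(rf_mask.shape, ignore_value, dtype=np.uint8)
    agree = rf_mask == km_mask          # pixels confirmed by both methods
    fused[agree] = rf_mask[agree]
    return fused

# Toy 2 x 3 example: the two maps disagree on two pixels.
rf = np.array([[1, 1, 0], [0, 1, 0]])
km = np.array([[1, 0, 0], [0, 1, 1]])
fused = fuse_labels(rf, km)
```

Under this assumed rule, only pixels confirmed by both classifiers contribute labels, which trades sample quantity for label reliability.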

6. Patents

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhu, Y.; Yang, G.; Yang, H.; Wu, J.; Lei, L.; Zhao, F.; Fan, L.; Zhao, C. Identification of Apple Orchard Planting Year Based on Spatiotemporally Fused Satellite Images and Clustering Analysis of Foliage Phenophase. Remote Sens. 2020, 12, 1199.

- Wang, J.; Liu, T. Spatiotemporal evolution and suitability of apple production in China from climate change and land use transfer perspectives. Food Energy Secur. 2022, 11, e386.

- Li, J.; Yang, G.; Yang, H.; Xu, W.; Feng, H.; Xu, B.; Chen, R.; Zhang, C.; Wang, H. Orchard classification based on super-pixels and deep learning with sparse optical images. Comput. Electron. Agric. 2023, 215, 108379.

- Ou, C.; Yang, J.; Du, Z.; Zhang, T.; Niu, B.; Feng, Q.; Liu, Y.; Zhu, D. Landsat-Derived Annual Maps of Agricultural Greenhouse in Shandong Province, China from 1989 to 2018. Remote Sens. 2021, 13, 4830.

- Peña, M.A.; Brenning, A. Assessing fruit-tree crop classification from Landsat-8 time series for the Maipo Valley, Chile. Remote Sens. Environ. 2015, 171, 234–244.

- Morell-Monzó, S.; Sebastiá-Frasquet, M.-T.; Estornell, J.; Moltó, E. Detecting abandoned citrus crops using Sentinel-2 time series. A case study in the Comunitat Valenciana region (Spain). ISPRS J. Photogramm. Remote Sens. 2023, 201, 54–66.

- Xu, W.; Li, Z.; Lin, H.; Shao, G.; Zhao, F.; Wang, H.; Cheng, J.; Lei, L.; Chen, R.; Han, S.; et al. Mapping Fruit-Tree Plantation Using Sentinel-1/2 Time Series Images with Multi-Index Entropy Weighting Dynamic Time Warping Method. Remote Sens. 2024, 16, 3390.

- Peng, Y.; Qiu, B.; Tang, Z.; Xu, W.; Yang, P.; Wu, W.; Chen, X.; Zhu, X.; Zhu, P.; Zhang, X.; et al. Where is tea grown in the world: A robust mapping framework for agroforestry crop with knowledge graph and sentinels images. Remote Sens. Environ. 2024, 303, 114016.

- Chen, H.; Li, H.; Liu, Z.; Zhang, C.; Zhang, S.; Atkinson, P.M. A novel Greenness and Water Content Composite Index (GWCCI) for soybean mapping from single remotely sensed multispectral images. Remote Sens. Environ. 2023, 295, 113679.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Xu, Y.; Zhou, J.; Zhang, Z. A new Bayesian semi-supervised active learning framework for large-scale crop mapping using Sentinel-2 imagery. ISPRS J. Photogramm. Remote Sens. 2024, 209, 17–34.

- Amorós-López, J.; Gómez-Chova, L.; Alonso, L.; Guanter, L.; Zurita-Milla, R.; Moreno, J.; Camps-Valls, G. Multitemporal fusion of Landsat/TM and ENVISAT/MERIS for crop monitoring. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 132–141.

- Zhang, T.; Hu, D.; Wu, C.; Liu, Y.; Yang, J.; Tang, K. Large-scale apple orchard mapping from multi-source data using the semantic segmentation model with image-to-image translation and transfer learning. Comput. Electron. Agric. 2023, 213, 108204.

- Yan, C.; Li, Z.; Zhang, Z.; Sun, Y.; Wang, Y.; Xin, Q. High-resolution mapping of paddy rice fields from unmanned airborne vehicle images using enhanced-TransUnet. Comput. Electron. Agric. 2023, 210, 107867.

- Liu, J.; Feng, Q.; Gong, J.; Zhou, J.; Liang, J.; Li, Y. Winter wheat mapping using a random forest classifier combined with multitemporal and multi-sensor data. Int. J. Digit. Earth 2018, 11, 783–802.

- Zhou, X.-X.; Li, Y.-Y.; Luo, Y.-K.; Sun, Y.-W.; Su, Y.-J.; Tan, C.-W.; Liu, Y.-J. Research on remote sensing classification of fruit trees based on Sentinel-2 multi-temporal imageries. Sci. Rep. 2022, 12, 11549.

- Chen, R.; Li, X.; Zhang, Y.; Zhou, P.; Wang, Y.; Shi, L.; Jiang, L.; Ling, F.; Du, Y. Spatiotemporal Continuous Impervious Surface Mapping by Fusion of Landsat Time Series Data and Google Earth Imagery. Remote Sens. 2021, 13, 2409.

- Phalke, A.R.; Özdoğan, M.; Thenkabail, P.S.; Erickson, T.; Gorelick, N.; Yadav, K.; Congalton, R.G. Mapping croplands of Europe, Middle East, Russia, and Central Asia using Landsat, Random Forest, and Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2020, 167, 104–122.

- Cai, Y.; Xu, X.; Zhu, P.; Nie, S.; Wang, C.; Xiong, Y.; Liu, X. Unveiling spatiotemporal tree cover patterns in China: The first 30 m annual tree cover mapping from 1985 to 2023. ISPRS J. Photogramm. Remote Sens. 2024, 216, 240–258.

- Tian, J.; Wang, L.; Diao, C.; Zhang, Y.; Jia, M.; Zhu, L.; Xu, M.; Li, X.; Gong, H. National scale sub-meter mangrove mapping using an augmented border training sample method. ISPRS J. Photogramm. Remote Sens. 2025, 220, 156–171.

- Chen, R.; Xiong, S.; Zhang, N.; Fan, Z.; Qi, N.; Fan, Y.; Feng, H.; Ma, X.; Yang, H.; Yang, G.; et al. Fine-scale classification of horticultural crops using Sentinel-2 time-series images in Linyi country, China. Comput. Electron. Agric. 2025, 236, 110425.

- Turkoglu, M.O.; D’Aronco, S.; Perich, G.; Liebisch, F.; Streit, C.; Schindler, K.; Wegner, J.D. Crop mapping from image time series: Deep learning with multi-scale label hierarchies. Remote Sens. Environ. 2021, 264, 112603.

- Wang, B.; Zhao, H.; Wang, X.; Lyu, G.; Chen, K.; Xu, J.; Cui, G.; Zhong, L.; Yu, L.; Huang, H.; et al. Bamboo classification based on GEDI, time-series Sentinel-2 images and whale-optimized, dual-channel DenseNet: A case study in Zhejiang province, China. ISPRS J. Photogramm. Remote Sens. 2024, 209, 312–323.

- Zhang, C.; Valente, J.; Wang, W.; Guo, L.; Tubau Comas, A.; Van Dalfsen, P.; Rijk, B.; Kooistra, L. Feasibility assessment of tree-level flower intensity quantification from UAV RGB imagery: A triennial study in an apple orchard. ISPRS J. Photogramm. Remote Sens. 2023, 197, 256–273.

- Li, W.; Dong, R.; Fu, H.; Wang, J.; Yu, L.; Gong, P. Integrating Google Earth imagery with Landsat data to improve 30-m resolution land cover mapping. Remote Sens. Environ. 2020, 237, 111563.

- Fu, Y.; Li, J.; Weng, Q.; Zheng, Q.; Li, L.; Dai, S.; Guo, B. Characterizing the spatial pattern of annual urban growth by using time series Landsat imagery. Sci. Total Environ. 2019, 666, 274–284.

- Li, Z.; Xuan, F.; Dong, Y.; Huang, X.; Liu, H.; Zeng, Y.; Su, W.; Huang, J.; Li, X. Performance of GEDI data combined with Sentinel-2 images for automatic labelling of wall-to-wall corn mapping. Int. J. Appl. Earth Obs. Geoinf. 2024, 127, 103643.

- Sun, Y.; Qin, Q.; Ren, H.; Zhang, Y. Decameter Cropland LAI/FPAR Estimation From Sentinel-2 Imagery Using Google Earth Engine. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Zhao, D.; Huang, J.; Li, Z.; Yu, G.; Shen, H. Dynamic monitoring and analysis of chlorophyll-a concentrations in global lakes using Sentinel-2 images in Google Earth Engine. Sci. Total Environ. 2024, 912, 169152.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

- Kumar, M.; Bhattacharya, B.K.; Pandya, M.R.; Handique, B.K. Machine learning based plot level rice lodging assessment using multi-spectral UAV remote sensing. Comput. Electron. Agric. 2024, 219, 108754.

- Li, Z.; Deng, X.; Lan, Y.; Liu, C.; Qing, J. Fruit tree canopy segmentation from UAV orthophoto maps based on a lightweight improved U-Net. Comput. Electron. Agric. 2024, 217, 108538.

- Duan, M.; Song, X.; Liu, X.; Cui, D.; Zhang, X. Mapping the soil types combining multi-temporal remote sensing data with texture features. Comput. Electron. Agric. 2022, 200, 107230.

- Cai, X.; Wu, L.; Li, Y.; Lei, S.; Xu, J.; Lyu, H.; Li, J.; Wang, H.; Dong, X.; Zhu, Y.; et al. Remote sensing identification of urban water pollution source types using hyperspectral data. J. Hazard. Mater. 2023, 459, 132080.

- Dash, J.; Curran, P.J. Evaluation of the MERIS terrestrial chlorophyll index (MTCI). Adv. Space Res. 2007, 39, 100–104.

- Perry, C.R.; Lautenschlager, L.F. Functional equivalence of spectral vegetation indices. Remote Sens. Environ. 1984, 14, 169–182.

- Gitelson, A.A.; Merzlyak, M.N. Remote sensing of chlorophyll concentration in higher plant leaves. Adv. Space Res. 1998, 22, 689–692.

- Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

- Daughtry, C. Estimating Corn Leaf Chlorophyll Concentration from Leaf and Canopy Reflectance. Remote Sens. Environ. 2000, 74, 229–239.

- Badgley, G.; Field, C.B.; Berry, J.A. Canopy near-infrared reflectance and terrestrial photosynthesis. Sci. Adv. 2017, 3, e1602244.

- Evangelides, C.; Nobajas, A. Red-Edge Normalised Difference Vegetation Index (NDVI705) from Sentinel-2 imagery to assess post-fire regeneration. Remote Sens. Appl. Soc. Environ. 2020, 17, 100283.

- Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354.

- Wu, C.; Jia, W.; Yang, J.; Zhang, T.; Dai, A.; Zhou, H. Economic Fruit Forest Classification Based on Improved U-Net Model in UAV Multispectral Imagery. Remote Sens. 2023, 15, 2500.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. arXiv 2016.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, L.; Polosukhin, I. Attention is all you need. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Ghaffarian, S.; Valente, J.; Van Der Voort, M.; Tekinerdogan, B. Effect of Attention Mechanism in Deep Learning-Based Remote Sensing Image Processing: A Systematic Literature Review. Remote Sens. 2021, 13, 2965.

- Du, L.; Lu, Z.; Li, D. Broodstock breeding behaviour recognition based on Resnet50-LSTM with CBAM attention mechanism. Comput. Electron. Agric. 2022, 202, 107404.

- Zhang, C.; Zhang, H.; Tian, S. Phenology-assisted supervised paddy rice mapping with the Landsat imagery on Google Earth Engine: Experiments in Heilongjiang Province of China from 1990 to 2020. Comput. Electron. Agric. 2023, 212, 108105.

- Li, Z.; Shen, H.; Weng, Q.; Zhang, Y.; Dou, P.; Zhang, L. Cloud and cloud shadow detection for optical satellite imagery: Features, algorithms, validation, and prospects. ISPRS J. Photogramm. Remote Sens. 2022, 188, 89–108.

| Feature Type | Apple | Woodland | Other Orchard | Arable Land | Grass |
|---|---|---|---|---|---|
| Quantity | 81 | 29 | 75 | 159 | 35 |

| Characteristic Index | Calculation Formula |
|---|---|
| MERIS Terrestrial Chlorophyll Index (MTCI) [36] | |
| Triangular Vegetation Index (TVI) [37] | |
| Enhanced Vegetation Index (EVI) [38] | |
| Ratio Vegetation Index (RVI) [39] | |
| Modified Chlorophyll Absorption Ratio Index (MCARI) [40] | |
| Near-Infrared Reflectance of vegetation (NIRv) [41] | |
| Normalized Difference Red Edge Index (NDre3) [42] | |
| Modified Red Edge Simple Ratio Index (MRESR) [43] | |
| Red Edge Normalized Difference Vegetation Index (NDVIre32) [42] | |

| Model | OA | F1-Score | MIoU | FWIoU |
|---|---|---|---|---|
| FCN-8s | 71.58% | 47.34% | 38.60% | 65.69% |
| U-Net | 76.88% | 52.54% | 43.24% | 70.80% |
| SegNet | 73.28% | 52.06% | 41.78% | 67.30% |

| Model | OA | F1-Score | MIoU | FWIoU |
|---|---|---|---|---|
| FCN-8s | 79.74% | 54.92% | 45.60% | 73.62% |
| U-Net | 82.73% | 52.77% | 45.33% | 76.33% |
| SegNet | 86.34% | 56.70% | 49.05% | 79.98% |
| SegNet + CBAM | 88.28% | 56.84% | 49.60% | 81.72% |
| SegNet + Focal Loss | 88.56% | 56.91% | 49.75% | 82.02% |
| AOCF-SegNet | 89.34% | 61.44% | 52.91% | 83.13% |
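The four metrics reported in these tables can all be derived from a pixel-level confusion matrix. The sketch below uses the standard definitions; it assumes rows are reference labels and columns are predictions, and that F1 is reported for the apple class (index 1). Neither convention is stated in this copy, and the confusion matrix values are made up for illustration.

```python
import numpy as np

def segmentation_metrics(cm, positive=1):
    """OA, F1 (for one class), MIoU and FWIoU from a confusion matrix.

    cm[i, j] = number of pixels with reference class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)                 # correctly classified pixels per class
    fp = cm.sum(axis=0) - tp         # predicted as class k, reference differs
    fn = cm.sum(axis=1) - tp         # reference class k, predicted otherwise
    iou = tp / (tp + fp + fn)        # per-class intersection over union
    freq = cm.sum(axis=1) / total    # reference-class frequency
    f1 = 2 * tp[positive] / (2 * tp[positive] + fp[positive] + fn[positive])
    return {
        "OA": tp.sum() / total,
        "F1": f1,
        "MIoU": iou.mean(),
        "FWIoU": (freq * iou).sum(),
    }

# Hypothetical two-class example: class 0 = non-apple, class 1 = apple.
metrics = segmentation_metrics([[80, 5],
                                [10, 5]])
```

Because FWIoU weights each class by its pixel frequency, it stays close to the IoU of the dominant non-apple class, which is consistent with FWIoU sitting well above MIoU in the tables.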

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, C.; Liu, Y.; Yang, J.; Dai, A.; Zhou, H.; Tang, K.; Zhang, Y.; Wang, R.; Wei, B.; Wang, Y. Large-Scale Apple Orchard Identification from Multi-Temporal Sentinel-2 Imagery. Agronomy 2025, 15, 1487. https://doi.org/10.3390/agronomy15061487