FCA-YOLO: An Efficient Deep Learning Framework for Real-Time Monitoring of Stored-Grain Pests in Smart Warehouses

Abstract

1. Introduction

- Construction of the Grain Pest Image Dataset MPest3: This dataset focuses on stored wheat and includes morphological features of typical pests such as Tribolium castaneum, Sitophilus oryzae, and Cryptolestes ferrugineus, providing a foundation for feature extraction and analysis in complex scenarios.

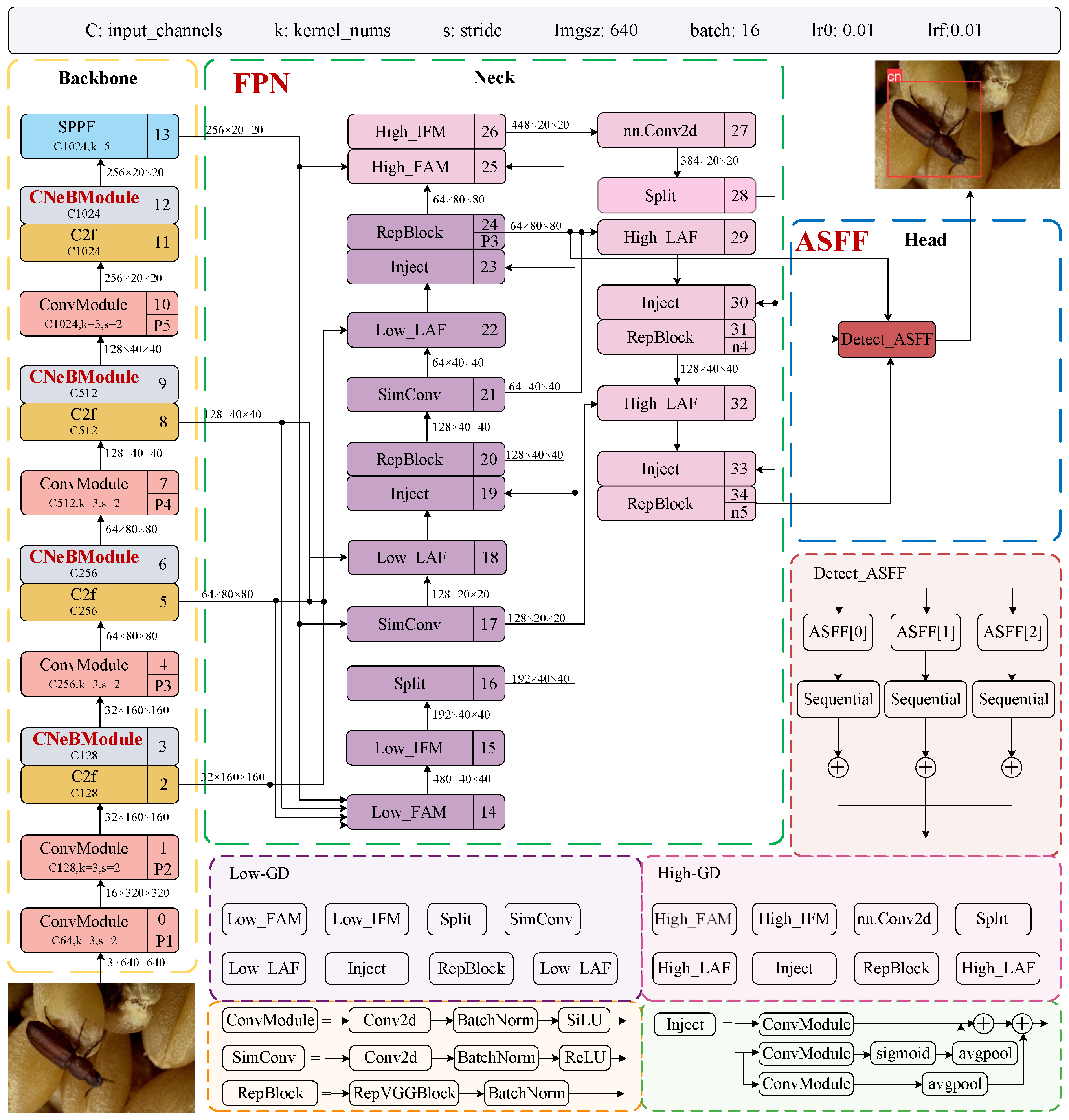

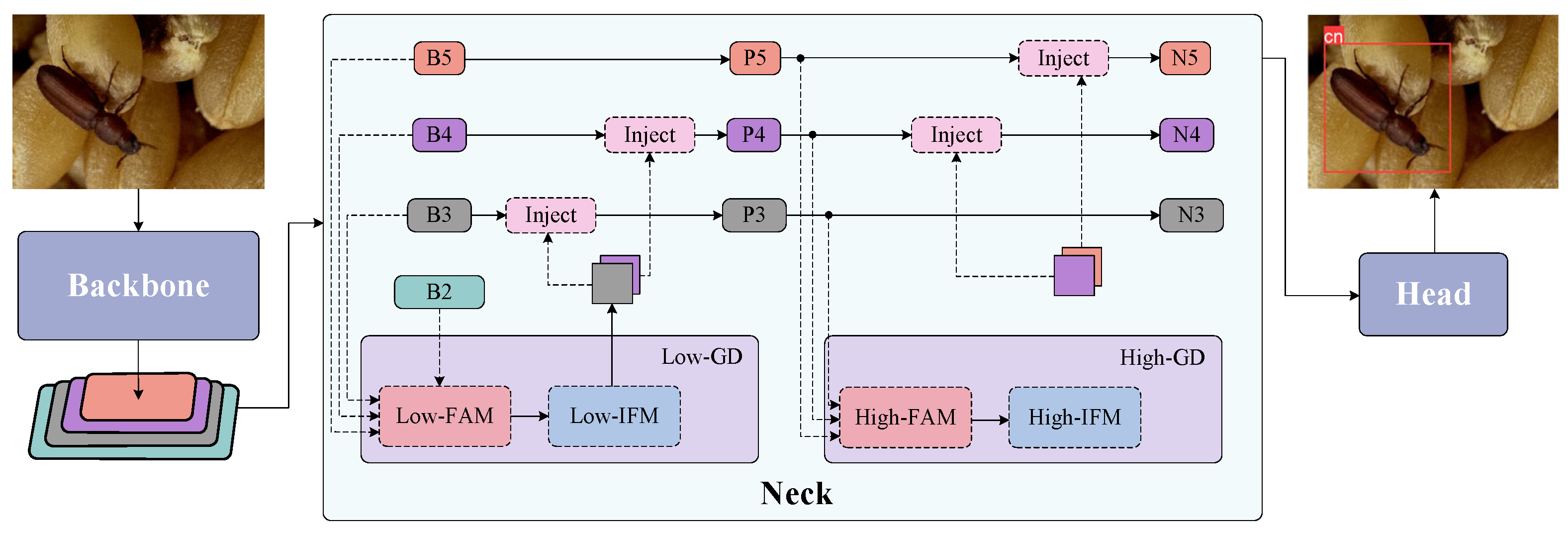

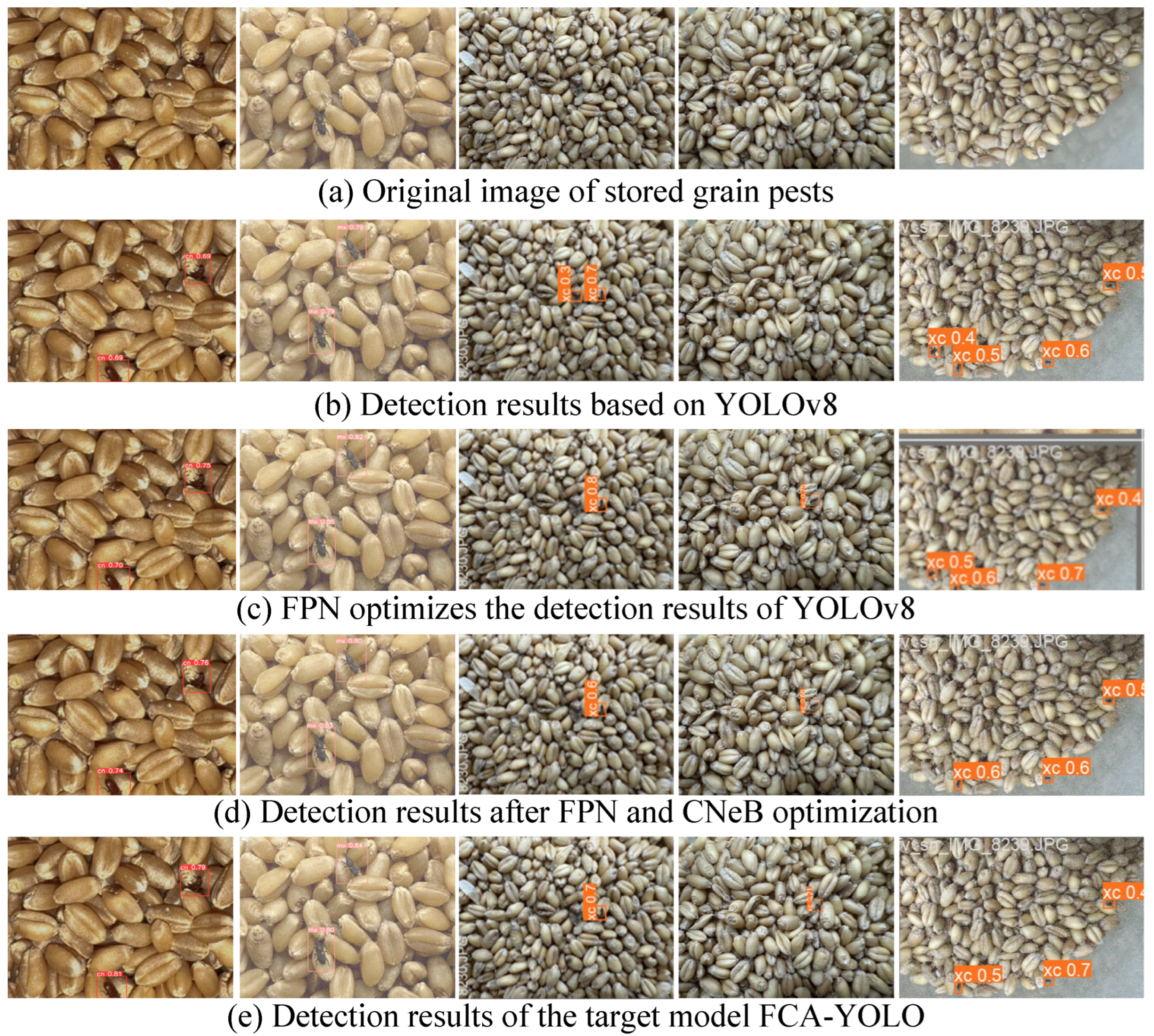

- Design of a Multi-Scale Feature Fusion Mechanism: An FPN structure is integrated into YOLOv8 to combine shallow and deep features, which improves the detection of small targets across multiple scales.

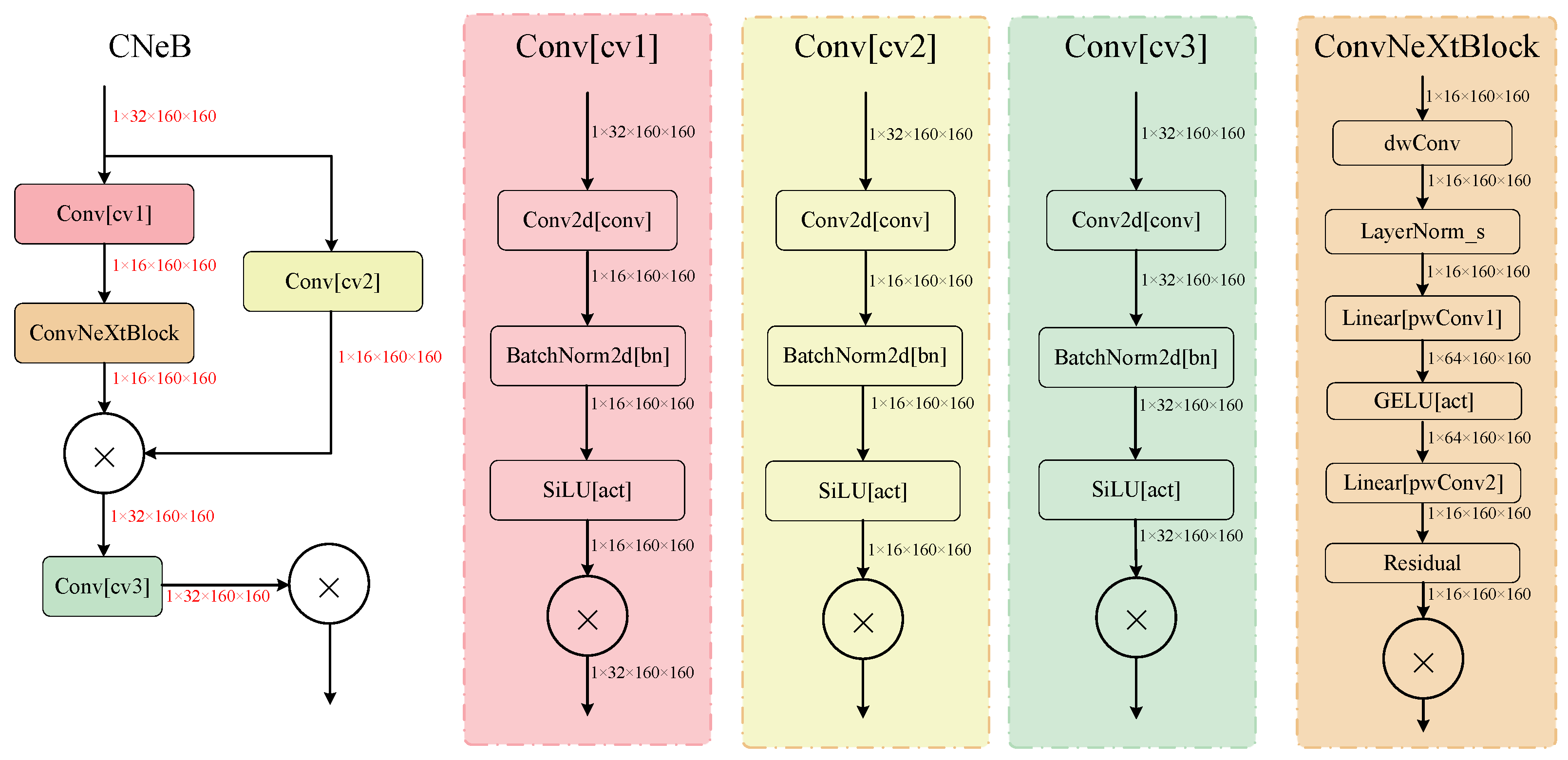

- Introduction of the Lightweight Residual Module CNeB: This module combines depthwise separable convolutions and feature alignment strategies to reduce computational cost while improving feature representation and model stability.

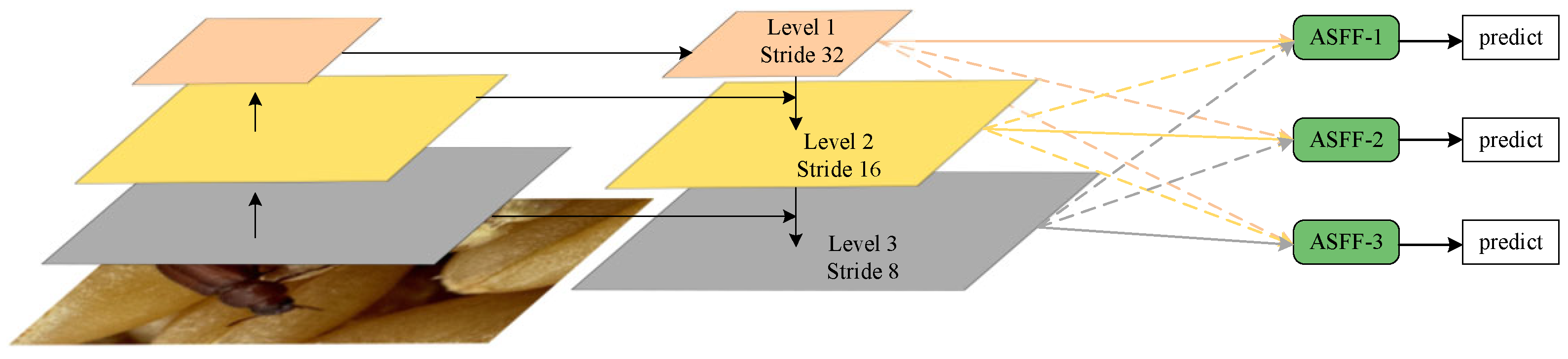

- Proposal of the ASFF Detection Head Structure: This structure uses an adaptive weighting mechanism in the spatial dimension to effectively mitigate the interference of complex backgrounds and target occlusion on detection performance.
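The adaptive spatial weighting described in the last item can be illustrated with a minimal Python sketch. It assumes that the feature maps from the different levels have already been resized to a common resolution, and it takes the per-pixel weight logits as given. The names `softmax` and `asff_fuse`, the toy 2 × 2 maps, and the hand-set logits are hypothetical. In a trained detection head the logits would come from learned layers, so this is an illustration of the weighting idea rather than the authors' implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a short list of floats."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def asff_fuse(levels, logit_maps):
    """Fuse K same-sized 2-D feature maps with per-pixel softmax weights.

    levels:     list of K feature maps, each H x W (nested lists of floats)
    logit_maps: list of K weight-logit maps, each H x W (assumed given here)
    """
    height, width = len(levels[0]), len(levels[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            # The weights at each location are non-negative and sum to 1.
            weights = softmax([logits[i][j] for logits in logit_maps])
            fused[i][j] = sum(w * lvl[i][j] for w, lvl in zip(weights, levels))
    return fused


# Toy inputs (hypothetical): three constant 2 x 2 maps standing in for
# shallow, middle, and deep features after resizing.
shallow = [[1.0, 1.0], [1.0, 1.0]]
middle = [[2.0, 2.0], [2.0, 2.0]]
deep = [[3.0, 3.0], [3.0, 3.0]]
equal_logits = [[0.0, 0.0], [0.0, 0.0]]

# Equal logits give each level a weight of 1/3 at every pixel.
fused = asff_fuse([shallow, middle, deep], [equal_logits] * 3)
```

Because the weights are computed separately at every location, a level that carries background clutter at one pixel can be suppressed there while still contributing elsewhere.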

2. Materials and Methods

2.1. Experimental Datasets

2.2. Experimental Environment

2.3. Modeling Assessment

2.4. FCA-YOLO Model Architecture

Algorithm 1. FCA-YOLO Detection Framework (Part 1). Require: Input. Ensure: Result.

Algorithm 2. FCA-YOLO Detection Framework (Part 2).

2.4.1. Baseline Model YOLOv8

2.4.2. Gold-YOLO: A Model Based on Feature Pyramid Networks

2.4.3. Attention Mechanism CNeB

2.4.4. Attention Mechanism ASFF

3. Results

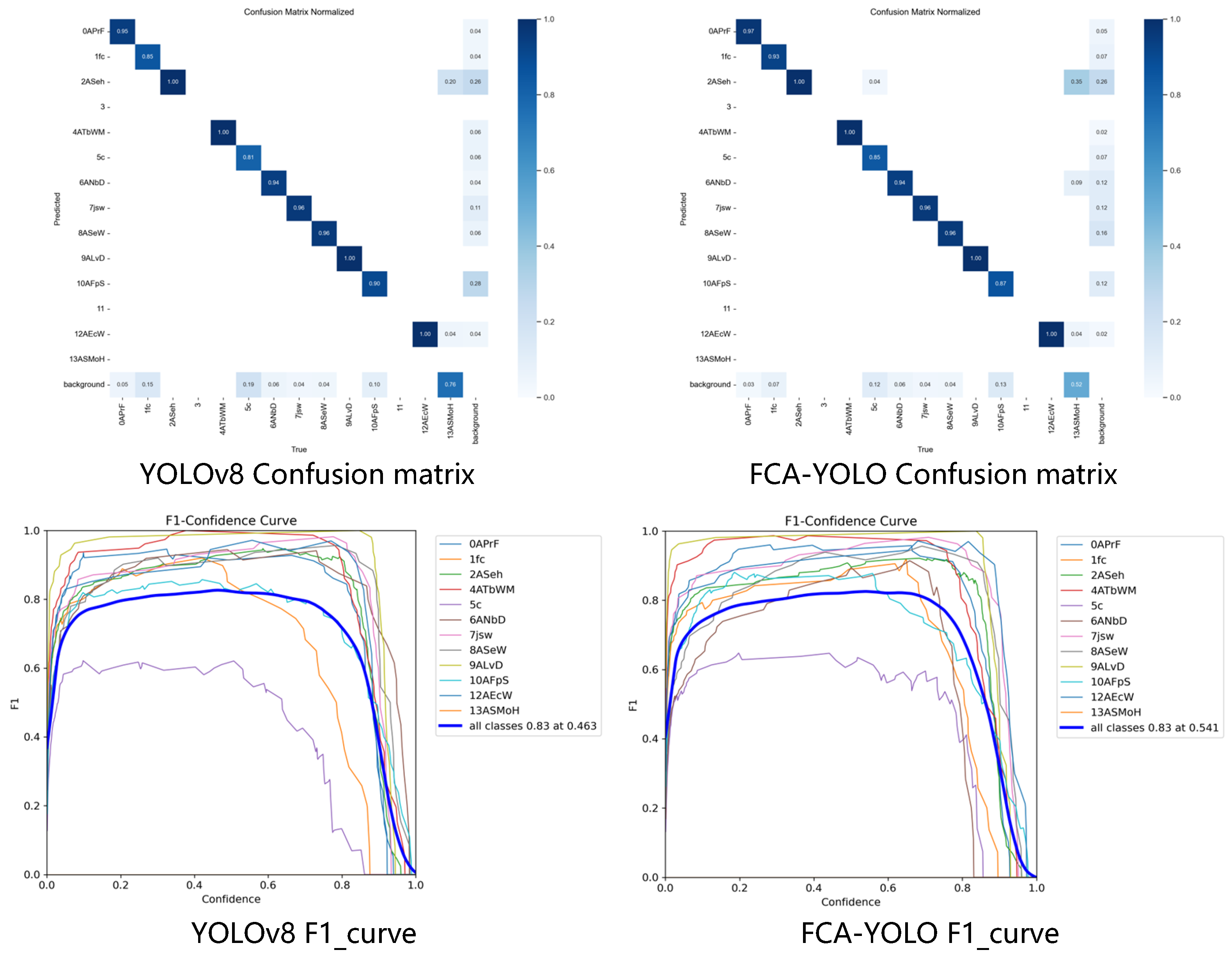

3.1. Comparison Experiment

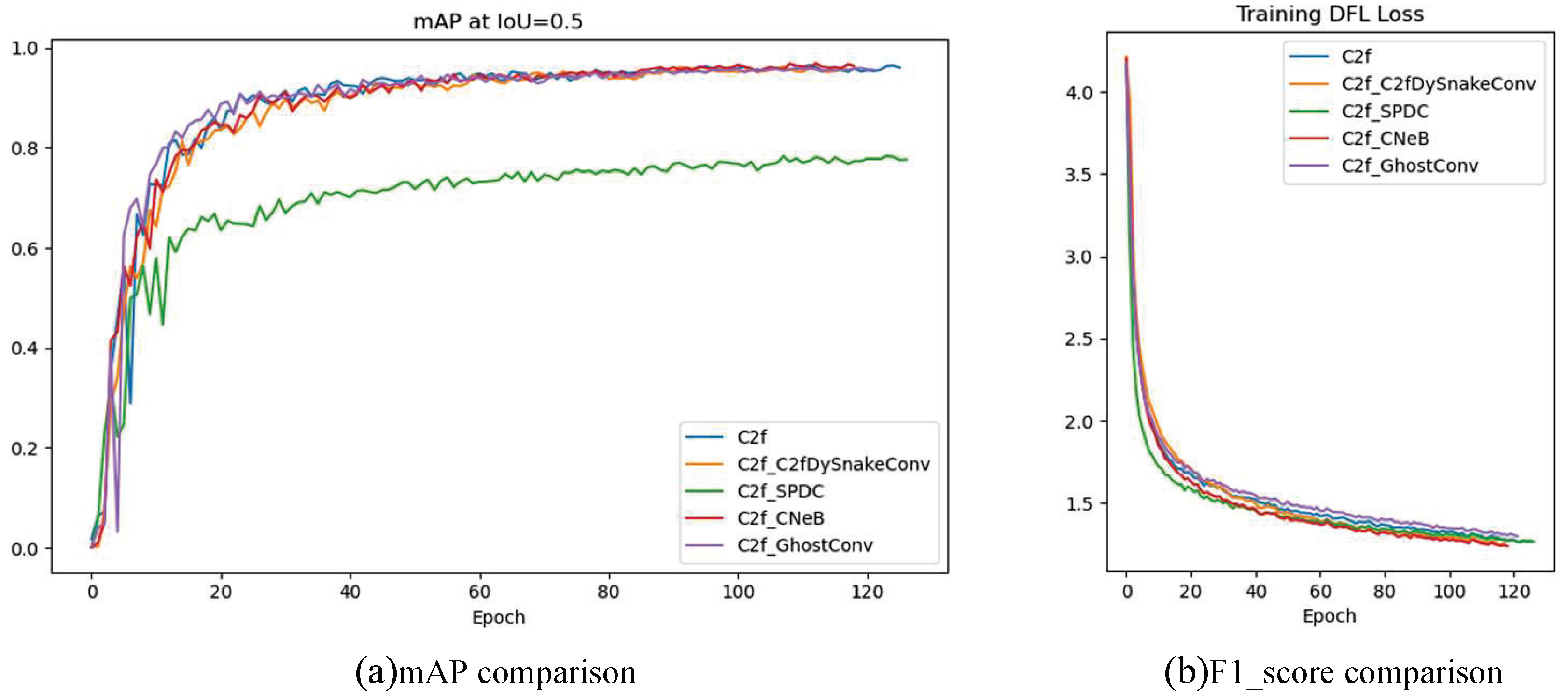

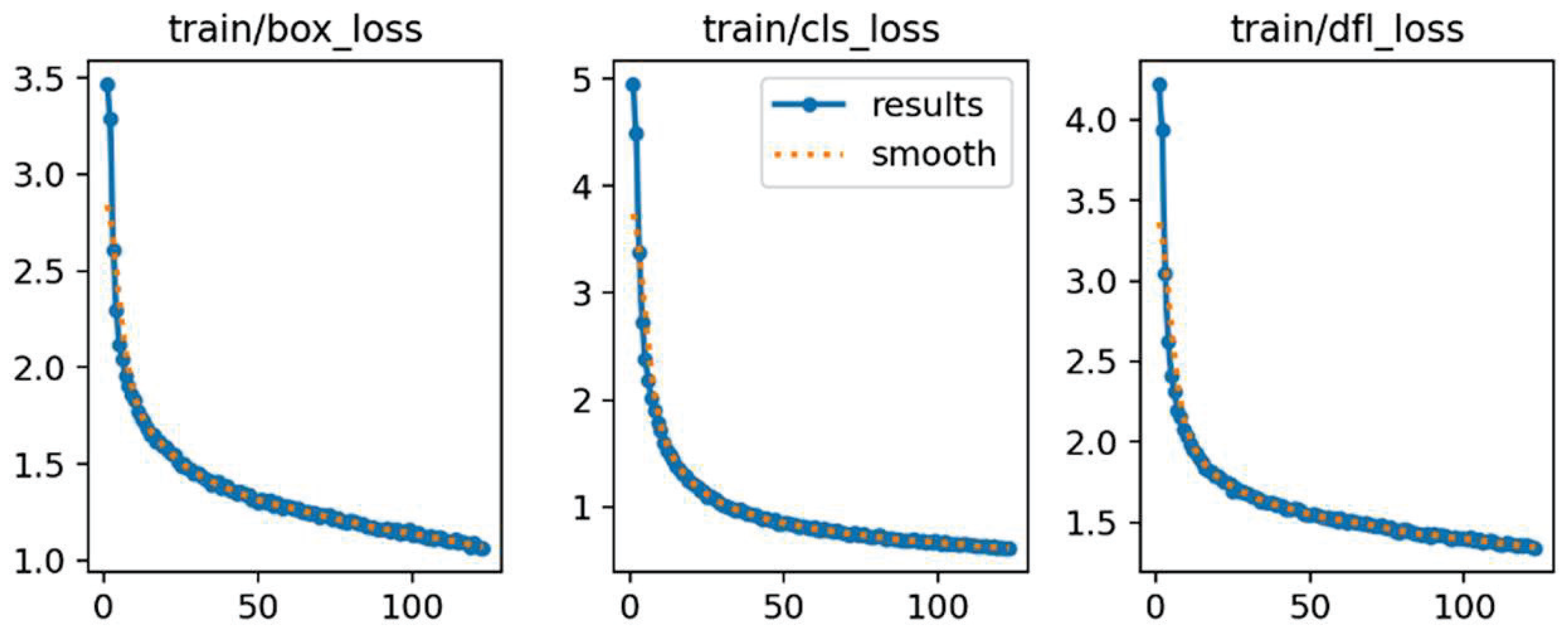

3.2. Ablation Experiment

4. Discussion

4.1. Hybrid Technology Comparison

4.2. Cross-Domain Validation

4.3. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Training (images) | Test (images) | Validation (images) |
|---|---|---|---|
| Tribolium castaneum | 878 + 272 | 120 + 34 | 109 + 34 |
| Sitophilus oryzae | 980 + 307 | 113 + 39 | 123 + 38 |
| Cryptolestes ferrugineus | 649 + 278 | 106 + 35 | 105 + 35 |
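The overall split proportions implied by the dataset table can be checked with a short Python sketch. The table does not state what the two terms of each "a + b" entry denote, so the sketch makes no assumption about them and simply sums both. The names `SPLITS`, `split_totals`, and `split_ratios` are hypothetical.

```python
# Image counts copied from the dataset table, stored as (first term, second term).
SPLITS = {
    "Tribolium castaneum": {"train": (878, 272), "test": (120, 34), "val": (109, 34)},
    "Sitophilus oryzae": {"train": (980, 307), "test": (113, 39), "val": (123, 38)},
    "Cryptolestes ferrugineus": {"train": (649, 278), "test": (106, 35), "val": (105, 35)},
}


def split_totals(splits):
    """Sum both terms of every entry, per split, across all species."""
    totals = {"train": 0, "test": 0, "val": 0}
    for per_species in splits.values():
        for split_name, parts in per_species.items():
            totals[split_name] += sum(parts)
    return totals


def split_ratios(totals):
    """Fraction of all images that falls into each split."""
    grand_total = sum(totals.values())
    return {name: count / grand_total for name, count in totals.items()}


totals = split_totals(SPLITS)   # train 3364, test 447, val 444
ratios = split_ratios(totals)

for name in ("train", "test", "val"):
    print(f"{name}: {totals[name]} images ({ratios[name]:.1%})")
```

Under this reading the three splits hold roughly 79%, 10.5%, and 10.4% of the 4255 images, which is close to an 8:1:1 partition.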

| Software/Hardware | Version Information | Experimental Parameter | Value |
|---|---|---|---|
| Windows | 11 Professional Edition | Input Image Size | |
| GPU | NVIDIA GeForce GTX 1660 SUPER (NVIDIA, Santa Clara, CA, USA) | Epochs | 200 |
| Python | 3.9.19 | Optimizer | SGD |
| Torch | 2.0.0 | SGD Momentum | 0.937 |
| CUDA | 11.8 | Batch Size | 16 |
| C++ Version | 199711 | Patience | 10 |

| Num | Model Name | Precision cn (%) | Precision mx (%) | Precision xc (%) | Recall cn (%) | Recall mx (%) | Recall xc (%) | mAP (%) | FLOPs (G) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Faster-rcnn_r50_fpn | 98.7 | 96.5 | 96.1 | 92.6 | 98.1 | 83.9 | 96.5 | 74.16 | 41.36 |
| 2 | SSDlite_mobilenetv2 | 96.0 | 96.7 | 87.3 | 95.1 | 97.5 | 23.1 | 79.8 | 0.69 | 3.06 |
| 3 | YOLOv6 | 67.4 | 73.6 | 46.8 | 72.9 | 77.7 | 57.1 | 95.2 | 11.39 | 4.64 |
| 4 | YOLOv8 | 96.6 | 95.3 | 90.2 | 91.8 | 96.8 | 82.7 | 95.2 | 8.1 | 3.01 |
| 5 | YOLOv10 | 94.2 | 94.6 | 88.8 | 92.7 | 94.8 | 79.5 | 94.2 | 8.2 | 2.70 |
| 6 | YOLOv12 | 95.7 | 99.1 | 90.6 | 94.9 | 94.9 | 78.4 | 94.2 | 5.8 | 2.51 |
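The per-class precision and recall values in the comparison table can be combined into a single score with the standard F1 definition, F1 = 2PR / (P + R). The Python sketch below derives a per-class F1 and its unweighted mean for each model. These derived numbers are not reported in the table itself, and the names `ROWS`, `f1_score`, and `macro_f1` are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(precisions, recalls):
    """Unweighted mean of the per-class F1 scores."""
    scores = [f1_score(p, r) for p, r in zip(precisions, recalls)]
    return sum(scores) / len(scores)


# (precision cn/mx/xc, recall cn/mx/xc), copied from the comparison table.
ROWS = {
    "Faster-rcnn_r50_fpn": ((98.7, 96.5, 96.1), (92.6, 98.1, 83.9)),
    "SSDlite_mobilenetv2": ((96.0, 96.7, 87.3), (95.1, 97.5, 23.1)),
    "YOLOv6": ((67.4, 73.6, 46.8), (72.9, 77.7, 57.1)),
    "YOLOv8": ((96.6, 95.3, 90.2), (91.8, 96.8, 82.7)),
    "YOLOv10": ((94.2, 94.6, 88.8), (92.7, 94.8, 79.5)),
    "YOLOv12": ((95.7, 99.1, 90.6), (94.9, 94.9, 78.4)),
}

for model, (precisions, recalls) in ROWS.items():
    print(f"{model}: macro F1 = {macro_f1(precisions, recalls):.2f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a model with high precision but very low recall on one class (for example, the xc recall of SSDlite_mobilenetv2) receives a low F1 for that class.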

| Num | Attention Mechanism | mAP (%) | Params (M) | Preprocess (ms) | FLOPs (G) |
|---|---|---|---|---|---|
| 1 | C2f | 96.23 | 8.04 | 0.5 | 17.5 |
| 2 | C2f_DySnakeConv | 96.28 | 8.97 | 0.4 | 19.6 |
| 3 | C2f_SPDC | 77.45 | 8.04 | 0.6 | 4.6 |
| 4 | C2f_CNeB | 96.86 | 8.45 | 0.4 | 18.6 |
| 5 | C2f_GhostConv | 95.97 | 7.85 | 0.6 | 17 |

| Num | Detection Head | mAP (%) | Layers | Preprocess (ms) | FLOPs (G) |
|---|---|---|---|---|---|
| 1 | Detect | 96.86 | 533 | 0.4 | 18.6 |
| 2 | PC_Detect | 96.58 | 561 | 0.5 | 16.1 |
| 3 | SC_Detect | 94.81 | 546 | 0.4 | 16.2 |
| 4 | SA_Detect | 95.92 | 613 | 0.5 | 17.5 |
| 5 | Rep_Detect | 96.41 | 562 | 0.5 | 18.9 |
| 6 | ASFF_Detect | 97.29 | 612 | 0.4 | 20.8 |
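One way to read the accuracy versus cost trade-off in the table above is to list the heads that are not dominated, that is, heads for which no other head has both an mAP at least as high and a FLOPs count at least as low. The Python sketch below applies this Pareto filter to the reported values. It is an illustrative reading of the table, not an analysis taken from the paper, and the names `HEADS`, `dominates`, and `pareto_front` are hypothetical.

```python
# (mAP in %, FLOPs in G), copied from the table above.
HEADS = {
    "Detect": (96.86, 18.6),
    "PC_Detect": (96.58, 16.1),
    "SC_Detect": (94.81, 16.2),
    "SA_Detect": (95.92, 17.5),
    "Rep_Detect": (96.41, 18.9),
    "ASFF_Detect": (97.29, 20.8),
}


def dominates(a, b):
    """True if a is no worse than b on both axes and strictly better on one."""
    (map_a, flops_a), (map_b, flops_b) = a, b
    no_worse = map_a >= map_b and flops_a <= flops_b
    strictly_better = map_a > map_b or flops_a < flops_b
    return no_worse and strictly_better


def pareto_front(candidates):
    """Names of candidates that no other candidate dominates, sorted by name."""
    return sorted(
        name
        for name, point in candidates.items()
        if not any(dominates(other, point) for other in candidates.values())
    )


front = pareto_front(HEADS)
print(front)  # ['ASFF_Detect', 'Detect', 'PC_Detect']
```

On these two axes, ASFF_Detect is the most accurate option and PC_Detect the cheapest, with the default Detect head in between. The remaining heads are each matched or beaten on both axes by one of these three.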

| Num | G | C | A | Precision cn | Precision mx | Precision xc | mAP (%) | Accuracy (%) | F1-Score | Postprocess |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | 0.97 | 0.95 | 0.90 | 95.23 | 85.74 | 0.92 | 1.1 ms |
| 2 | ✔ | | | 0.97 | 0.99 | 0.93 | 96.23 | 90.25 | 0.95 | 0.8 ms |
| 3 | ✔ | ✔ | | 0.97 | 0.98 | 0.90 | 96.86 | 90.36 | 0.94 | 0.8 ms |
| 4 | ✔ | ✔ | ✔ | 0.96 | 0.98 | 0.95 | 97.29 | 90.25 | 0.95 | 0.7 ms |
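The contribution of each component in the ablation table can be read off as the change in mAP from one row to the next. The Python sketch below performs that arithmetic. The row labels assume that the columns G, C, and A stand for the Gold-YOLO, CNeB, and ASFF modules of Sections 2.4.2 to 2.4.4, which the table itself does not spell out. The names `ABLATION_MAP` and `stepwise_gains` are hypothetical.

```python
# (configuration label, mAP in %), copied from the ablation table.
# Assumption: G, C, A correspond to the modules of Sections 2.4.2 to 2.4.4.
ABLATION_MAP = [
    ("baseline", 95.23),
    ("+G", 96.23),
    ("+G+C", 96.86),
    ("+G+C+A", 97.29),
]


def stepwise_gains(rows):
    """mAP change (percentage points) of each row relative to the previous one."""
    return [
        (label, round(current - previous, 2))
        for (_, previous), (label, current) in zip(rows, rows[1:])
    ]


gains = stepwise_gains(ABLATION_MAP)
total_gain = round(ABLATION_MAP[-1][1] - ABLATION_MAP[0][1], 2)

for label, gain in gains:
    print(f"{label}: {gain:+.2f} points")
print(f"total: {total_gain:+.2f} points")
```

The three steps add 1.00, 0.63, and 0.43 percentage points of mAP in turn, for a total of 2.06 points over the baseline.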

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ge, H.; Wang, J.; Zhen, T.; Li, Z.; Zhu, Y.; Pan, Q. FCA-YOLO: An Efficient Deep Learning Framework for Real-Time Monitoring of Stored-Grain Pests in Smart Warehouses. Agronomy 2025, 15, 1313. https://doi.org/10.3390/agronomy15061313
