1. Introduction

Rice is a cornerstone of global food supply and plays an increasingly important role in the food security of many nations [1]. However, rice is susceptible to disease during its growth, which can substantially reduce both grain quality and yield; effective rice disease control is therefore essential for ensuring food security [2]. At present, rice diseases are diagnosed primarily through visual inspection by experts [3]. This approach is time-consuming and labor-intensive, and its limited recognition accuracy and efficiency make it inadequate for the practical demands of efficient disease and pest management.

With the advancement of computer vision technology, researchers have begun to apply image processing and machine learning methods to the detection of crop diseases and pests. For rice disease detection, artificial intelligence (AI) technologies such as computer vision and deep learning offer a promising solution [4]. Sahu et al. [5] employed a Support Vector Machine (SVM) and a novel hybrid Random Forest (RF) model to detect plant leaf diseases. Regiana et al. [6] improved a K-means-based leaf image clustering model for the identification of rice leaf diseases. Guan et al. [7] proposed a method for identifying peanut leaf spot disease from hyperspectral UAV imagery, combining hyperspectral imaging with superpixel unmixing techniques. An et al. [8] combined hyperspectral data with an SVM to detect and classify wheat powdery mildew (WPM), distinguishing healthy from infected wheat and further classifying WPM infection levels. Rajput et al. [9] constructed a dataset of healthy and diseased pigeon pea leaf images and used K-Nearest Neighbors (KNN) and SVM to classify pigeon pea leaf diseases. Terensan et al. [10] applied K-means clustering to distinguish between rice blast and brown spot. Harjanti et al. [11] used Euclidean distance and K-means clustering to classify mint leaf types. Banerjee et al. [12] used a convolutional neural network (CNN) and SVM to identify and classify banana leaf diseases and distinguish them from healthy leaves. Saputra et al. [13] employed an SVM for rice leaf disease detection. In summary, traditional crop disease detection methods often rely on manual feature extraction, which is time-consuming and limits both detection accuracy and efficiency, in turn restricting the real-time performance and practical application of disease identification.

Recently, with the rapid progress of image processing technology, deep learning has evolved significantly and has been increasingly applied to object detection. Deep learning methods can extract disease-related features automatically, and the integration of AI into agriculture has opened new avenues for crop management, one notable application being rice disease detection. Machine learning algorithms in particular have proven effective at analyzing large-scale image datasets to identify patterns associated with various plant diseases, and research on plant disease detection is under way worldwide. Object detection algorithms fall into two categories. The first comprises two-stage algorithms based on region proposals, such as Fast R-CNN [14] and Faster R-CNN [15]. Junare et al. [16] proposed an improved Mask R-CNN model, built on Faster R-CNN, for plant disease detection in complex scenes; their experiments showed that it outperformed existing methods. Guan et al. [17] introduced a more efficient GC-Faster R-CNN model for agricultural pest detection; compared with the original Faster R-CNN, it improved mAP by 4.5% and recall by 16.6%. Hou et al. [18] proposed the FPN-ISResNet-Faster RCNN model, which improved the precision of leaf disease detection and showed high accuracy and good generalization in detecting apple leaf diseases. Admass et al. [19] proposed an automated mango disease detection and classification system that combines a CNN with Histogram of Oriented Gradients (HOG) features. The hybrid CNN-HOG model outperformed either CNN or HOG alone in both detection and classification, highlighting the complementary strengths of the two methods; it achieved accuracies of 98.80% on the training set and 99.5% on the test set and performed well on accuracy, precision, and recall. Wang et al. [20] developed a technique for in situ sweet potato leaf detection based on a refined Faster R-CNN framework with a visual attention mechanism, reaching a mean average precision of 95.7%, which is 2.9% higher than the original Faster R-CNN and 7.0% higher than YOLOv5. The method has potential applications in smart agriculture and ecological monitoring, such as growth monitoring and plant phenotyping, especially where leaves are dense or occluded. Although two-stage algorithms deliver good detection accuracy, their inference speed is comparatively slow.

The second category comprises single-stage algorithms that treat detection as a regression problem, such as SSD [21] and YOLO [22]. Although these algorithms may be slightly less accurate than their two-stage counterparts, they compensate with higher speed and real-time performance. Yin et al. [23] proposed JujubeSSD, a high-precision detection model for jujube leaf spot diseases that achieved an mAP of 97.1%, approximately 6.35 percentage points above the baseline algorithm, indicating strong practical applicability for jujube disease identification. Dahua et al. [24] presented an SSD-based architecture for detecting citrus leaf diseases that uses the lightweight MobileNetV2 network [25] as the SSD backbone and integrates the Coordinate Attention (CA) mechanism [26] and the Receptive Field Block (RFB) module [27]. These changes reduced the model size by 52.3 MB, increased inference speed by 3.15 FPS, and improved mAP by 4.4 percentage points, providing an effective solution for the rapid and accurate diagnosis of citrus leaf diseases that supports early identification, timely intervention, and effective disease management by farmers. Wang et al. [28] introduced a lightweight design into the YOLO architecture by replacing conventional convolutional layers with ghost modules to reduce the number of parameters, integrated the Convolutional Block Attention Module (CBAM) [29] to enhance feature representation, and added an extra prediction head to improve detection. The resulting MGA-YOLO model achieved an mAP of 89.3% and an inference speed of 84.1 FPS on a GPU server for apple leaf disease detection, combining good accuracy with real-time applicability. Sangaiah et al. [30] developed a rice leaf disease identification model based on Tiny YOLOv4 and deployed it on unmanned aerial vehicles (UAVs) for large-scale aerial monitoring and recognition of agricultural diseases. By adding Spatial Pyramid Pooling (SPP), CBAM, ghost modules, and extra convolutional layers to the network, they obtained the UAV Tiny YOLO Rice (UAV T-YOLO-RICE) model, which was trained on a custom rice leaf disease dataset and achieved a test mAP of 86%, outperforming the models reported in prior studies. He et al. [31] proposed an improved YOLOv11-based method for corn leaf pest and disease detection. The approach first integrates the RepLKNet module to strengthen the representation of disease and pest features, then incorporates CBAM into the neck network to improve feature extraction, and finally enhances the detection head with DynamicHead and the Weighted IoU (WIoU) loss function to improve detection precision and localization. The proposed model improved accuracy by 4.9% and recall by 9.0% over the baseline model.

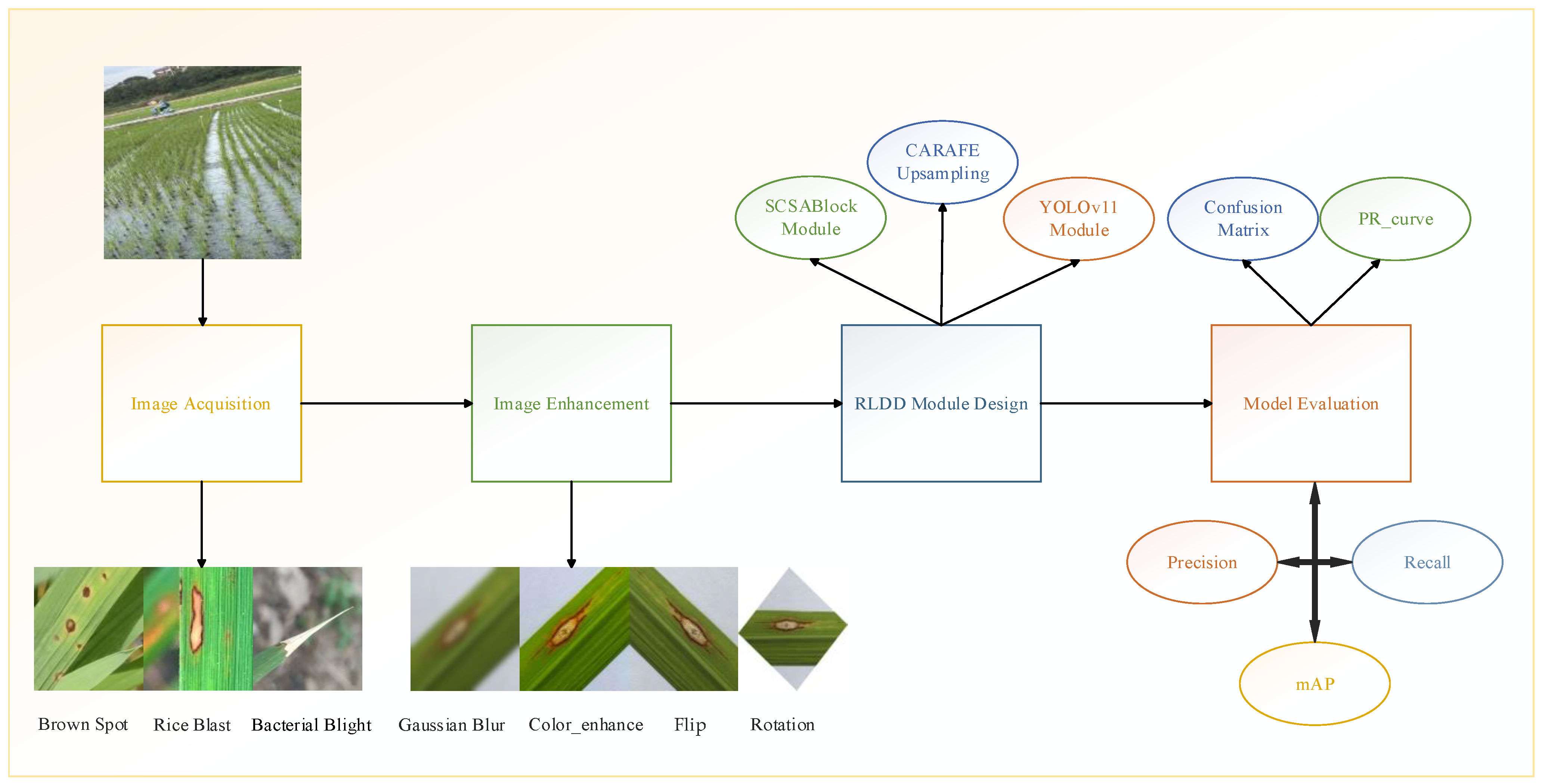

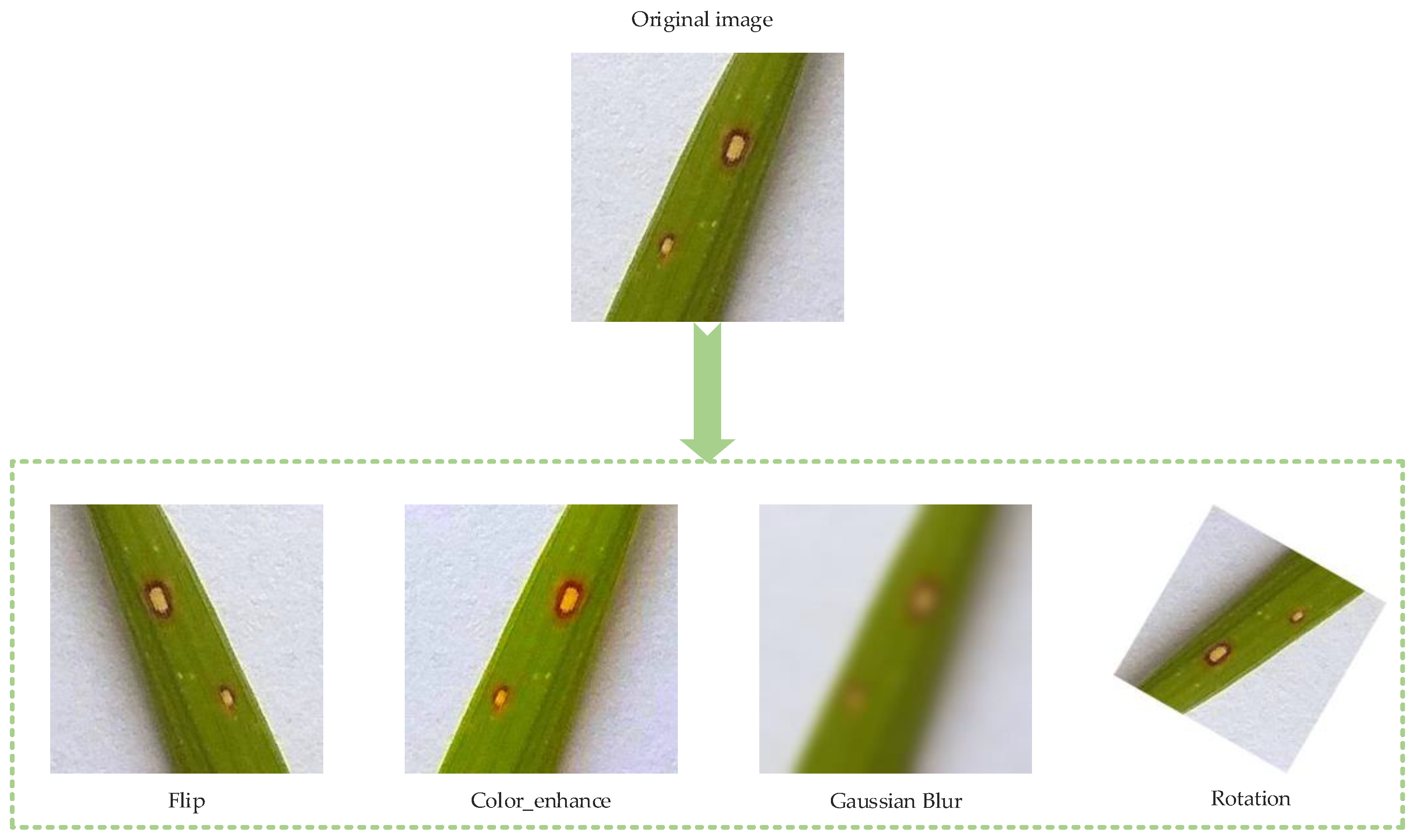

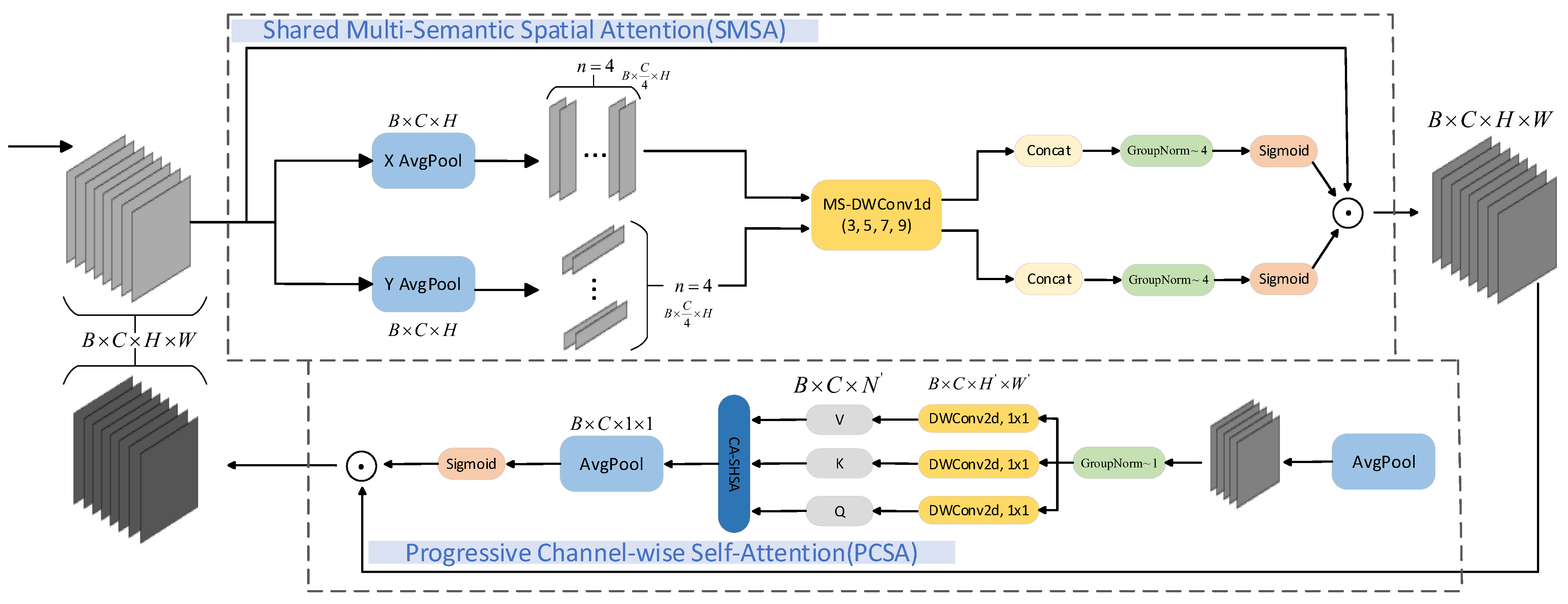

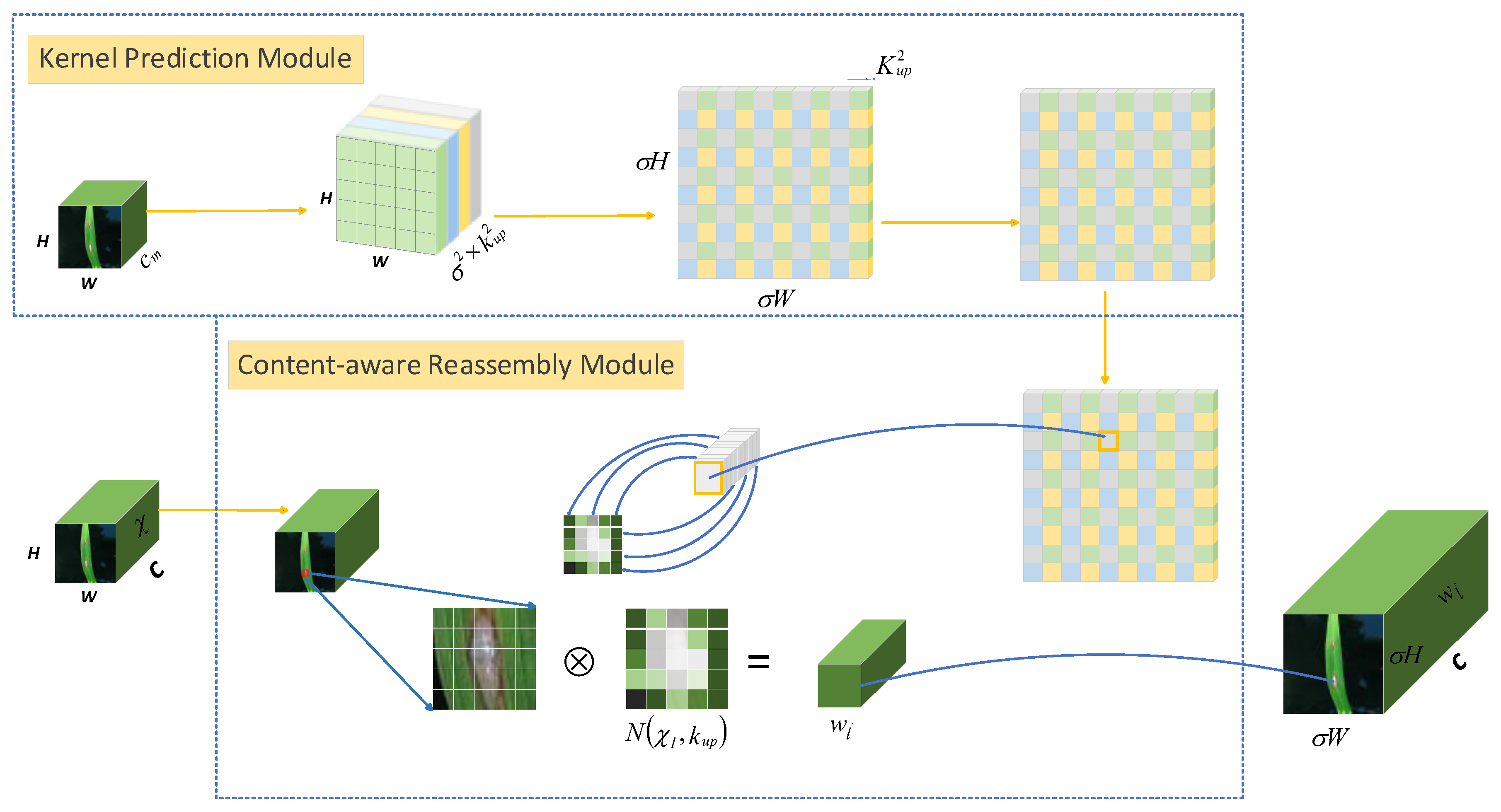

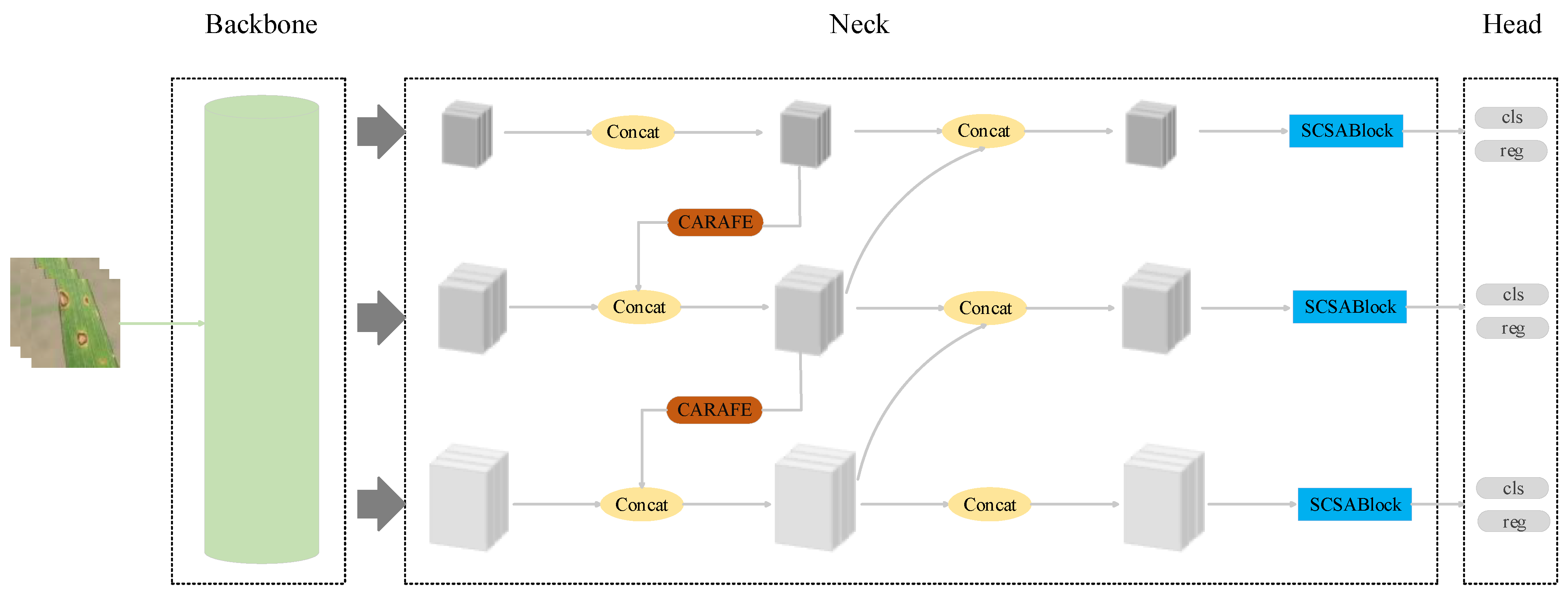

Traditional manual inspection is inefficient and prone to subjectivity, making it inadequate for the demands of modern agricultural production. Deep-learning-based object detection has made significant progress in agricultural disease identification in recent years, but challenges remain, including insufficient detection accuracy, high model complexity, and difficulty of practical deployment. To address these issues, this study proposes RLDD-YOLOv11n, a rice leaf disease detection model based on the YOLOv11n framework. It aims to improve the accuracy and real-time performance of rice leaf disease identification, overcome the shortcomings of existing methods in practice, and provide technical support for precise agricultural disease management. The results show that the proposed model performs well on rice leaf disease recognition tasks. The main contributions of this study are as follows: (1) A residual attention module, SCSABlock, is integrated at each detection output location; it combines the SCSA attention mechanism with a residual structure to fuse multi-semantic information and thereby improve recognition accuracy. (2) The conventional upsampling module is replaced with the CARAFE upsampling module, which better restores fine image details. (3) A rice leaf disease dataset was constructed to validate the effectiveness of the model.
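CARAFE upsamples by predicting, for every output location, a normalized reassembly kernel from the feature content and using it to take a weighted sum over a k_up × k_up neighborhood of the corresponding source location. The NumPy sketch below illustrates only this reassembly step under stated assumptions: in CARAFE the kernels come from a small kernel-prediction branch (channel compression, a convolutional content encoder, and pixel shuffle), which is replaced here by externally supplied logits, and the defaults `scale=2` and `k_up=5` are assumed for illustration rather than taken from RLDD-YOLOv11n. The function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def carafe_reassemble(feat, kernel_logits, scale=2, k_up=5):
    """Content-aware reassembly step of CARAFE (kernel prediction omitted).

    feat:          (C, H, W) input feature map
    kernel_logits: (scale*H, scale*W, k_up*k_up) kernel logits, one per output
                   location (supplied directly instead of being predicted)
    returns:       (C, scale*H, scale*W) upsampled feature map
    """
    C, H, W = feat.shape
    r = k_up // 2
    # Normalize each kernel so its weights sum to 1, then view it as k_up x k_up.
    kernels = softmax(kernel_logits, axis=-1).reshape(scale * H, scale * W, k_up, k_up)
    padded = np.pad(feat, ((0, 0), (r, r), (r, r)))  # zero padding at the borders
    out = np.zeros((C, scale * H, scale * W), dtype=feat.dtype)
    for oy in range(scale * H):
        for ox in range(scale * W):
            sy, sx = oy // scale, ox // scale  # source location in the input map
            # k_up x k_up neighborhood centered on (sy, sx) in the unpadded map
            patch = padded[:, sy:sy + k_up, sx:sx + k_up]
            out[:, oy, ox] = (patch * kernels[oy, ox]).sum(axis=(1, 2))
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 6))
# Logits peaked on the center tap (flat index 12 of a 5x5 kernel):
# the reassembly collapses to nearest-neighbor upsampling.
peak = np.zeros((12, 12, 25))
peak[..., 12] = 50.0
nn_up = carafe_reassemble(x, peak)
print(np.allclose(nn_up, x.repeat(2, axis=1).repeat(2, axis=2)))
```

The check at the end is a sanity property rather than a result: with content-dependent kernels the weights vary per location, which is what lets CARAFE restore detail that fixed nearest-neighbor or bilinear upsampling cannot.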

3. Results

3.1. Experimental Platform and Parameter Settings

To verify the effectiveness of the proposed improvements, ablation studies were conducted, with the YOLOv11n model as the baseline for each experiment. An overall performance evaluation of the proposed model follows.

The proposed rice leaf disease detection model is implemented in PyTorch 1.8 and trained and tested on an NVIDIA GPU. Training uses the SGD (Stochastic Gradient Descent) optimizer with an initial learning rate of 0.1, a momentum factor of 0.937, which accelerates and smooths gradient updates, and a weight decay coefficient of 0.0005, which suppresses overfitting. The input image size is fixed at 320 × 320 pixels, which preserves detection accuracy while substantially reducing computational cost. The batch size is set to 16, which keeps gradient updates stable and training efficient within the memory limits of the GPU. Training runs for 200 epochs, giving the network sufficient iterations to learn key features and avoid underfitting. The hardware and software platform consists of an Intel Xeon E5-2680v3 CPU and an NVIDIA RTX 2080 Ti GPU, with CUDA 11.2 and PyTorch 1.8. The specific training parameters and configuration are listed in Table 4.
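The optimizer settings can be made concrete with the update rule that `torch.optim.SGD` applies when momentum is used without dampening or Nesterov: the weight-decay term is added to the gradient, the momentum buffer accumulates the result, and the parameter steps along the buffer. The pure-Python sketch below applies the reported hyperparameters (learning rate 0.1, momentum 0.937, weight decay 0.0005) to a hypothetical scalar parameter; the function name `sgd_step` is illustrative, and the learning-rate warmup and scheduling that YOLO training pipelines typically add are omitted.

```python
def sgd_step(params, grads, bufs, lr=0.1, momentum=0.937, weight_decay=0.0005):
    """One SGD-with-momentum step; updates params in place, returns new buffers.

    Mirrors the torch.optim.SGD rule with dampening=0 and nesterov=False.
    Pass bufs=None on the first step.
    """
    new_bufs = []
    for i in range(len(params)):
        d = grads[i] + weight_decay * params[i]  # L2 weight decay added to gradient
        # First step: buffer starts as the gradient; afterwards it accumulates.
        buf = d if bufs is None else momentum * bufs[i] + d
        params[i] = params[i] - lr * buf         # step along the momentum buffer
        new_bufs.append(buf)
    return new_bufs

# Hypothetical scalar parameter with a constant gradient of 0.5.
params = [1.0]
bufs = sgd_step(params, [0.5], None)
print(params[0])  # 1.0 - 0.1 * (0.5 + 0.0005 * 1.0)
bufs = sgd_step(params, [0.5], bufs)
print(params[0])
```

The second step moves the parameter further than the first because the buffer carries 0.937 of the previous update forward, which is why a momentum close to 1 speeds up progress along consistent gradient directions.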

3.2. Ablation Experiment

To assess the contribution of each module to the model’s performance, ablation experiments were conducted to verify the effectiveness of the SCSABlock attention module and the CARAFE upsampling module within the YOLOv11n architecture. As shown in Table 5, the baseline YOLOv11n model achieved AP@0.5 scores of 0.906 for Brown Spot, 0.676 for Rice Blast, and 0.984 for Bacterial Blight, with an overall mAP@0.5 of 0.855 and mAP@0.5:0.95 of 0.547. Adding the SCSABlock module improved detection accuracy for all disease categories and raised mAP@0.5 to 0.865, confirming that the attention mechanism strengthens feature representation and feature focus. Replacing the conventional upsampling operation of the baseline model with the CARAFE module raised mAP@0.5 to 0.876, indicating that better restoration of spatial information contributes to more accurate localization of diseased regions.

The final RLDD-YOLOv11n model, which integrates both the SCSABlock and CARAFE modules, achieved the best overall performance, with an mAP@0.5 of 0.883, 2.8 percentage points above the baseline, and an mAP@0.5:0.95 of 0.607. These results indicate that the two modules act synergistically to improve the model’s perception of fine-grained disease features. Per class, RLDD-YOLOv11n achieved AP@0.5 values of 0.969 for Brown Spot, 0.699 for Rice Blast, and 0.982 for Bacterial Blight. The Rice Blast AP is noticeably lower than that of the other categories. This is mainly attributed to the short infection cycle and scattered distribution of Rice Blast, which limited the number of field-collected samples; the dataset was supplemented with images sourced from the internet, but these often have low resolution and blurred features. In addition, the visual similarity between Rice Blast and Brown Spot makes the two harder for the model to discriminate, which further affects detection performance. Overall, the ablation results show the benefit of the introduced modules for information interaction and feature reconstruction, supporting the practical value of RLDD-YOLOv11n for rice leaf disease detection.
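Assuming the overall mAP@0.5 in Table 5 is the unweighted mean of the per-class AP@0.5 values (the usual convention, although the text does not state it), the reported overall figures can be reproduced from the per-class numbers quoted above:

```python
# Per-class AP@0.5 values as reported for Table 5.
baseline = {"Brown Spot": 0.906, "Rice Blast": 0.676, "Bacterial Blight": 0.984}
rldd = {"Brown Spot": 0.969, "Rice Blast": 0.699, "Bacterial Blight": 0.982}

def mean_ap(per_class_ap):
    """mAP as the unweighted (macro) mean of per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)

base_map = mean_ap(baseline)            # (0.906 + 0.676 + 0.984) / 3
rldd_map = mean_ap(rldd)                # (0.969 + 0.699 + 0.982) / 3
gain_pp = (rldd_map - base_map) * 100   # absolute gain in percentage points
print(f"{base_map:.3f} {rldd_map:.3f} {gain_pp:.1f}")  # 0.855 0.883 2.8
```

Because the mean is unweighted, the weak Rice Blast class pulls the overall mAP down as much as a strong class pushes it up, regardless of how many instances each class contains.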

3.3. Comparison of the Results of Different Models

To validate the overall advantages of the proposed RLDD-YOLOv11n model for rice leaf disease detection, comparative experiments were conducted against several mainstream object detection models: the two-stage detector Faster R-CNN and the one-stage detectors YOLOv5s, YOLOv7, YOLOv8n, YOLOv10n, and YOLOv11n.

As shown in Table 6, RLDD-YOLOv11n achieves the best overall results among the compared models. With a model size of only 2.58 MB and a computational cost of 6.3 GFLOPs, it reaches a precision of 91.6%, a recall of 83.8%, and an mAP@0.5 of 88.3%. Compared with the baseline YOLOv11n, it improves precision by 4.9 percentage points and mAP@0.5 by 2.8 percentage points, indicating a favorable balance between detection accuracy and computational efficiency.

Faster R-CNN, a classical two-stage detection algorithm, performs reasonably well in recall (84.9%) and mAP@0.5 (79.9%), but its precision is only 68.8%. Moreover, its large model size (82.1 MB) and high computational cost (120.7 GFLOPs) make it unsuitable for deployment in resource-constrained environments. YOLOv5s and YOLOv7 achieve high precision and mAP@0.5 (precisions of 88.9% and 86.0%, and mAP@0.5 values of 86.9% and 86.6%, respectively), but they incur considerably larger model sizes and computational costs (7.01 MB/15.8 GFLOPs and 36.8 MB/103.5 GFLOPs, respectively). Lightweight models such as YOLOv8n and YOLOv10n are small (3.0 MB and 2.69 MB, respectively), but their precision and recall are notably lower, limiting their applicability to high-precision agricultural disease detection tasks.

In summary, RLDD-YOLOv11n improves detection performance while maintaining a lightweight architecture, demonstrating its application potential in intelligent agricultural disease monitoring and suitability for deployment in resource-constrained edge computing scenarios.

To validate the effectiveness of the proposed model in real-world scenarios, Figure 8 compares the detection results of YOLOv11n and RLDD-YOLOv11n on actual rice leaf images. In the first column, RLDD-YOLOv11n assigns a confidence score of 0.89 to typical Brown Spot symptoms, compared with 0.79 for YOLOv11n, indicating stronger feature extraction and object recognition. In the second column, where the lesion appearance closely resembles the background, YOLOv11n produces false detections, whereas RLDD-YOLOv11n correctly separates disease from background, reflecting higher robustness. In the third column, with densely distributed lesions, RLDD-YOLOv11n identifies all targets, while YOLOv11n misses some. Finally, when multiple disease types appear in the same image, RLDD-YOLOv11n still produces higher-confidence predictions, indicating better multi-class recognition. Overall, RLDD-YOLOv11n performs better at small-object identification, background interference suppression, and recognition of co-occurring diseases.

Taken together, the quantitative metrics and qualitative visualizations show that RLDD-YOLOv11n achieves a favorable balance between detection accuracy and lightweight design, with greater robustness and deployment efficiency, making it well suited to large-scale agricultural disease identification in resource-constrained environments.

3.4. RLDD-YOLOv11n Detection Results Analysis

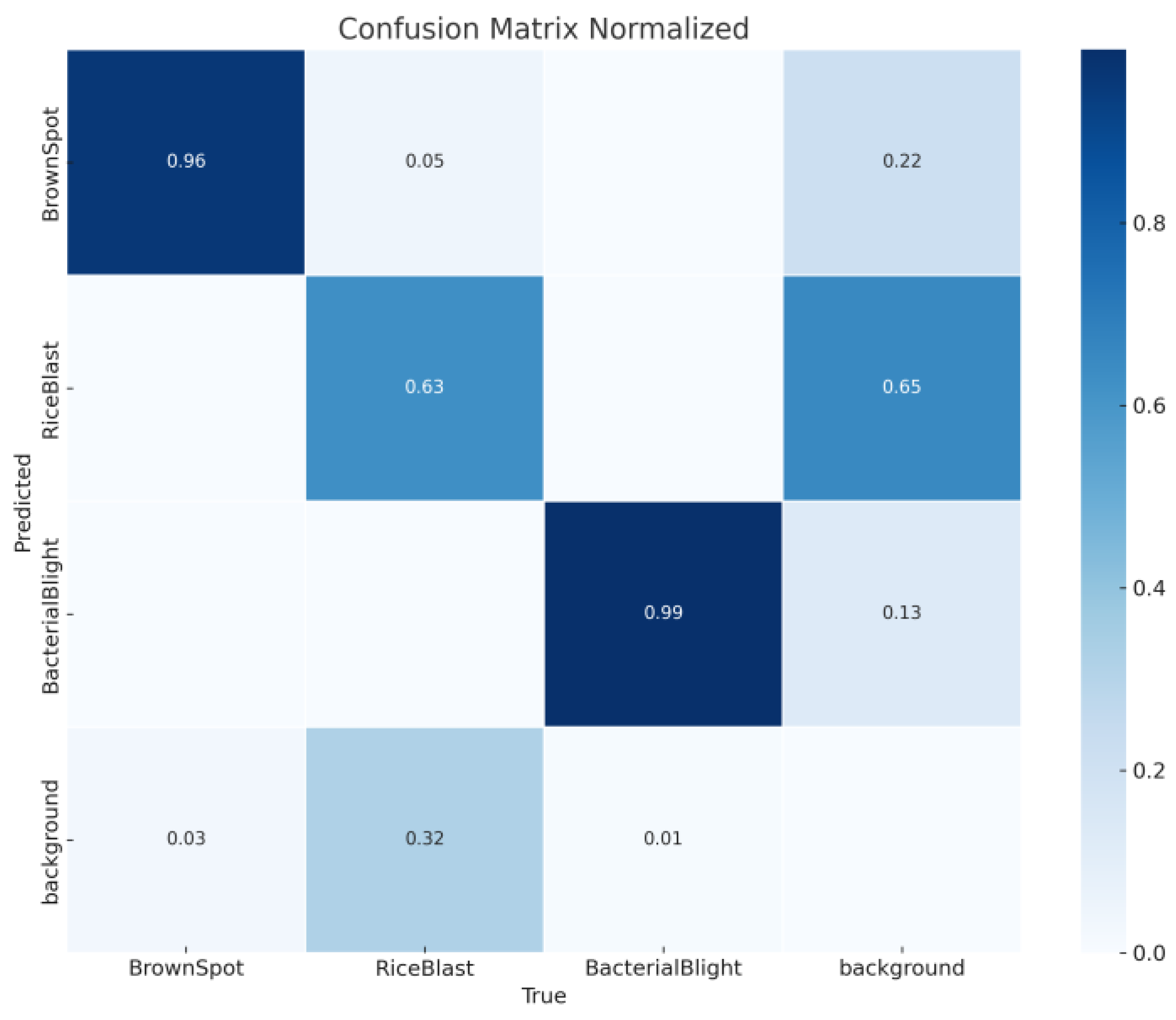

The confusion matrix summarizes classification performance by comparing predicted results with the ground truth; in this study it is used to evaluate RLDD-YOLOv11n in detail. As shown in Figure 9, the Brown Spot class reaches 96% on the main diagonal. The main errors involve the background: 3% of Bacterial Blight instances are missed (misclassified as background), and 22% of background entries are misclassified as Bacterial Blight. These errors may indicate limited sensitivity to early-stage symptoms and insufficient generalization to diverse background conditions. The classification accuracies for Rice Blast and Bacterial Blight both exceed 90%, confirming the model’s ability to capture complex textured lesions. Nevertheless, the cross-category errors between background and disease classes (e.g., 0.22 and 0.03) point to a bottleneck in fine-grained feature differentiation, which may be influenced by factors such as lighting variation and image resolution. In summary, the confusion matrix shows RLDD-YOLOv11n’s strengths and weaknesses across lesion types and highlights the areas that require further improvement.
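A normalized confusion matrix of the kind shown in Figure 9 can be obtained by dividing each column of raw counts by its total, so that each entry is the fraction of a true class assigned to each predicted class. The sketch below assumes rows are predicted classes and columns are true classes, with an extra background row (missed objects) and background column (false alarms); the counts are hypothetical, and whether Figure 9 follows exactly this convention is an assumption.

```python
CLASSES = ["Brown Spot", "Rice Blast", "Bacterial Blight", "background"]

def column_normalize(counts):
    """Divide each column (true class) by its total; empty columns stay zero."""
    n = len(counts)
    col_sums = [sum(counts[r][c] for r in range(n)) for c in range(n)]
    return [[counts[r][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n)]
            for r in range(n)]

# Hypothetical counts: rows = predicted class, columns = true class.
# Last row: ground-truth lesions the detector missed.
# Last column: detections on background with no matching lesion.
counts = [
    [48, 2, 0, 3],
    [1, 45, 0, 2],
    [0, 1, 38, 5],
    [1, 2, 2, 0],
]
norm = column_normalize(counts)
for name, row in zip(CLASSES, norm):
    print(f"{name:>16}: " + " ".join(f"{v:.2f}" for v in row))
```

Under this convention the diagonal entries are per-class recall, the last row gives each class's miss rate, and the last column shows how false alarms on background are distributed across the predicted disease classes.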

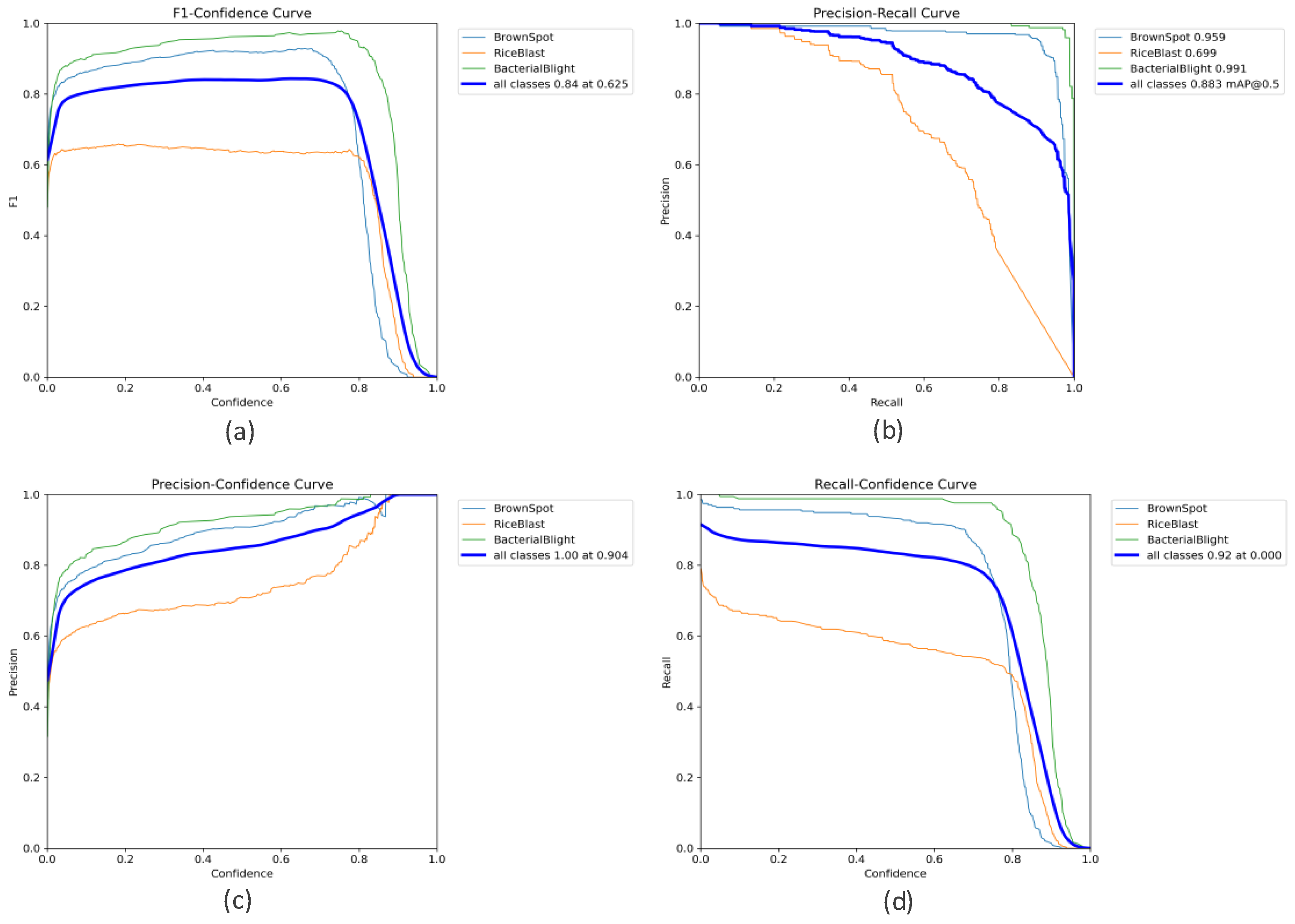

Figure 10 illustrates the performance of RLDD-YOLOv11n across multiple evaluation metrics. The F1-confidence curve shows that the model maintains a high F1 score even at high confidence thresholds, with a peak value of 0.84, higher than that of the baseline YOLOv11n, indicating a better balance between precision and recall. The precision-recall curve shows per-class AP@0.5 values of 0.991 for Bacterial Blight and 0.959 for Brown Spot, suggesting that RLDD-YOLOv11n distinguishes these disease categories well. In addition, the precision-confidence and recall-confidence curves indicate that detection performance remains stable even at low confidence thresholds, which may reflect stronger generalization.
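An F1-confidence curve is obtained by sweeping the confidence threshold and computing F1 = 2PR/(P + R) from the detections that survive each threshold. The sketch below does this for a single class, assuming the detections have already been matched to ground truth (for example at IoU ≥ 0.5); the detections, the ground-truth count, and the function name are hypothetical, and this is not the authors’ evaluation code.

```python
def f1_confidence_curve(detections, n_gt, thresholds):
    """Return [(threshold, f1)] for one class.

    detections: list of (confidence, is_true_positive) pairs, already matched
                to ground truth
    n_gt:       number of ground-truth objects of this class
    """
    curve = []
    for t in thresholds:
        kept = [is_tp for conf, is_tp in detections if conf >= t]
        tp = sum(kept)                      # True counts as 1
        precision = tp / len(kept) if kept else 0.0
        recall = tp / n_gt if n_gt else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        curve.append((t, f1))
    return curve

# Hypothetical detections for one disease class with 6 ground-truth lesions.
dets = [(0.95, True), (0.91, True), (0.83, False), (0.72, True),
        (0.64, True), (0.45, False), (0.33, True), (0.22, False)]
curve = f1_confidence_curve(dets, n_gt=6, thresholds=[i / 10 for i in range(1, 10)])
best_t, best_f1 = max(curve, key=lambda p: p[1])
print(f"peak F1 = {best_f1:.3f} at confidence {best_t}")  # peak F1 = 0.769 at confidence 0.3
```

Raising the threshold removes false positives (raising precision) but also drops true positives (lowering recall); the peak of the curve is the operating point where the two are best balanced for this data.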

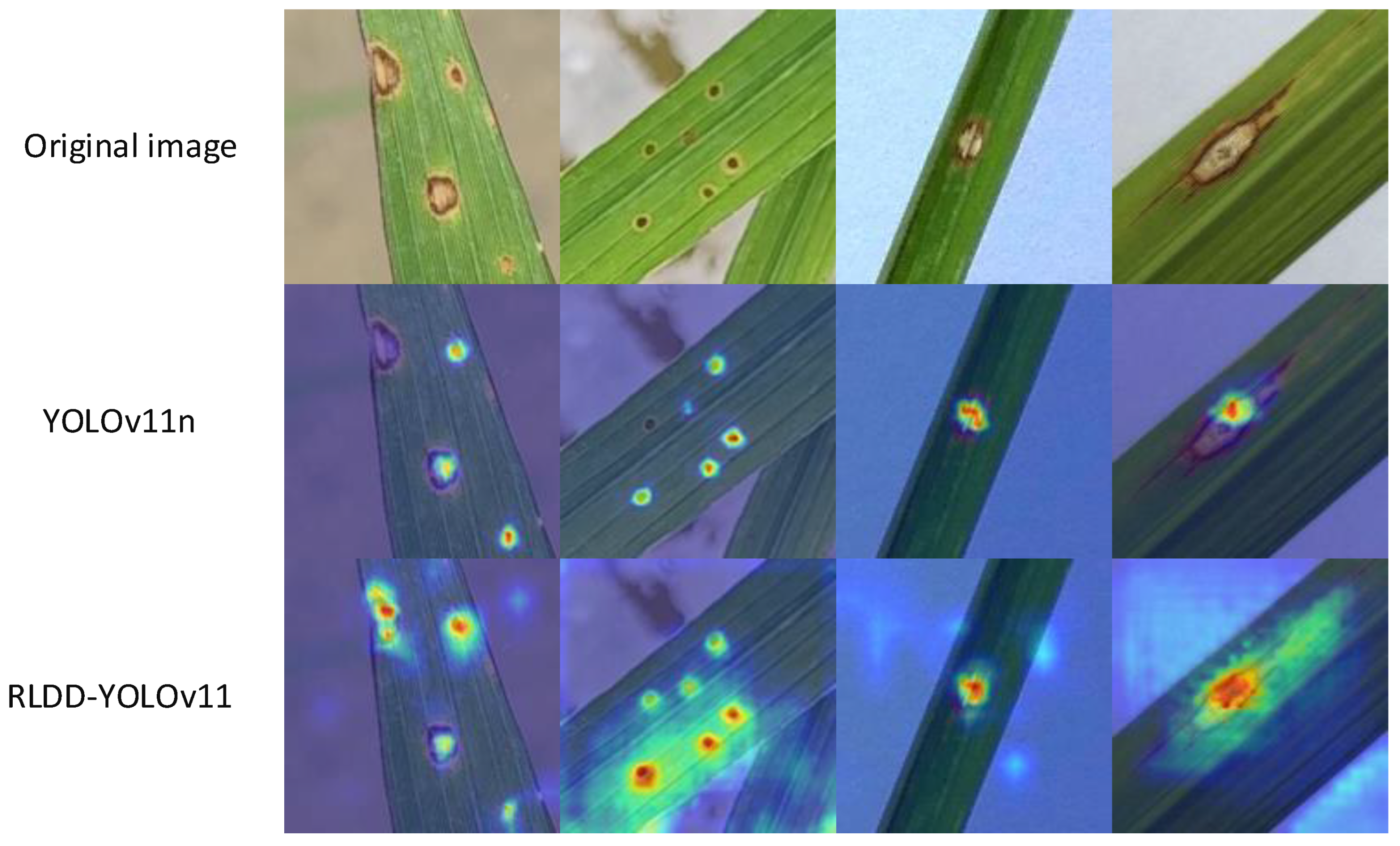

To further examine which image regions the model relies on, Figure 11 visualizes attention distributions on representative samples using Grad-CAM [39]. YOLOv11n’s heatmaps show imprecise localization and attention drift, especially for dense lesions or blurred edges, leading to unstable performance. In contrast, RLDD-YOLOv11n concentrates on key features within diseased areas: its heatmaps show compact, well-defined regions that closely align with the actual lesions, indicating better spatial focus and fine-grained feature extraction. In the bottom-right image, the RLDD-YOLOv11n heatmap covers the entire lesion and extends to its boundaries, indicating more refined modeling of lesion morphology and edges.
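For reference, the standard Grad-CAM formulation [39] weights each feature map $A^k$ of a chosen layer by the spatially averaged gradient of a target score $y^c$, then keeps only the positive evidence. Which layer and which detection score served as the target for Figure 11 is not stated here; for a detector, $y^c$ is assumed to be the class confidence of a selected prediction, and $Z$ is the number of spatial positions $(i, j)$ in the feature map.

```latex
\alpha_k^{c} = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^{c}}{\partial A^{k}_{ij}},
\qquad
L^{c}_{\text{Grad-CAM}} = \operatorname{ReLU}\!\Big(\sum_{k}\alpha_k^{c}\,A^{k}\Big)
```

The resulting map has the spatial resolution of the chosen layer and is upsampled to the input size before being overlaid on the image as a heatmap.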

In summary, Figures 10 and 11 together show that RLDD-YOLOv11n outperforms YOLOv11n in quantitative metrics, visual interpretability, and fine-grained target modeling. It is particularly well suited to complex scenes involving dense, multi-class, multi-target detection of rice leaf diseases, with improved robustness and practical applicability.

4. Discussion

4.1. Key Contributions

This study addresses several challenges in rice leaf disease detection: manual inspection is time-consuming and labor-intensive, disease categories differ markedly from one another, and model accuracy must be traded off against complexity. First, residual bottleneck blocks are combined with the SCSA attention mechanism to enhance multi-semantic information fusion, improving detection of small disease targets. Second, the lightweight CARAFE upsampling module reconstructs feature maps through content-aware feature reassembly, improving the quality of the upsampled features and thus detection accuracy. Finally, a rice leaf disease dataset was constructed from the training data used in this study.

4.2. Comparison with Existing Methods

Compared with mainstream models in the YOLO series, RLDD-YOLOv11n improves detection performance while maintaining a lightweight structure. Compared with YOLOv7, it improves mAP@0.5 by 1.7 percentage points, with a 92% reduction in parameters and a 94% decrease in GFLOPs. Compared with YOLOv5s, it reduces parameters by 63.2%, lowers GFLOPs by 60.1%, and improves mAP@0.5 by 1.4 percentage points. Compared with YOLOv8n, it shows a 5% increase in mAP@0.5 and better mAP@0.5:0.95. Compared with YOLOv10n, mAP@0.5 and mAP@0.5:0.95 are improved by 12.9% and 18.4%, respectively. Furthermore, the RLDD-YOLOv11n model size is only 2.58 MB, smaller than that of the UAV T-YOLO-RICE model [30] (3.12 MB), and it achieves a recall of 83.8% under complex backgrounds, surpassing the approximately 79.5% recall of UAV T-YOLO-RICE, reflecting a more lightweight design and better robustness in complex environments.
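The percentage reductions quoted above follow from the Table 6 figures as 1 − ours/theirs. The quick check below reproduces the GFLOPs reductions against YOLOv7 and YOLOv5s and the 63.2% reduction against YOLOv5s. That last figure matches the ratio of model sizes in MB (2.58 vs. 7.01), so treating "parameters" as model size here is an inference from the numbers, not something the text states; the function name is illustrative.

```python
def relative_reduction(ours, theirs):
    """Percentage reduction of `ours` relative to `theirs`."""
    return (1 - ours / theirs) * 100

# Values as reported in Table 6 (Section 3.3).
print(round(relative_reduction(6.3, 103.5), 1))  # GFLOPs vs. YOLOv7   -> 93.9
print(round(relative_reduction(6.3, 15.8), 1))   # GFLOPs vs. YOLOv5s  -> 60.1
print(round(relative_reduction(2.58, 7.01), 1))  # size (MB) vs. YOLOv5s -> 63.2
```

Expressing savings as relative reductions while expressing accuracy gains in absolute percentage points is common practice, but the two should not be compared directly.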

To address the difficulty of detecting small lesion areas in rice leaf diseases, RLDD-YOLOv11n introduces CARAFE upsampling and the SCSABlock module to enhance multi-scale feature representation. Under the stricter mAP@0.5:0.95 metric, RLDD-YOLOv11n achieves 0.607, higher than YOLOv5-Lite (approximately 0.49) [32] and the improved SSD (approximately 0.55) [25], indicating better localization accuracy and robustness.
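mAP@0.5:0.95 is stricter because it averages AP over ten IoU thresholds (0.50, 0.55, ..., 0.95, the COCO convention), so a prediction counts as correct only at the thresholds its overlap actually clears. The sketch below computes IoU for boxes in (x1, y1, x2, y2) form and averages over the thresholds for a hypothetical single-lesion, single-prediction case, where AP at a threshold is 1 if the prediction matches and 0 otherwise; the box coordinates and helper names are assumptions for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

def map_50_95(ap_at):
    """Average AP over the ten IoU thresholds; ap_at maps threshold -> AP."""
    return sum(ap_at(t) for t in THRESHOLDS) / len(THRESHOLDS)

gt = (0, 0, 10, 10)
pred = (2, 0, 12, 10)          # hypothetical prediction shifted 2 px to the right
overlap = iou(pred, gt)        # 80 / 120, about 0.667
passed = [t for t in THRESHOLDS if overlap >= t]
score = map_50_95(lambda t: 1.0 if overlap >= t else 0.0)
print(round(overlap, 3), passed, score)  # 0.667 [0.5, 0.55, 0.6, 0.65] 0.4
```

A 2-pixel offset on a 10-pixel lesion still counts fully at IoU 0.5 but earns only 0.4 under the 0.5:0.95 average, which is why this metric is more sensitive to the localization of small lesions.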

In summary, RLDD-YOLOv11n offers advantages in detection accuracy, lightweight model design, and localization capability, giving it practical application value, particularly for edge computing and resource-constrained environments.

4.3. Limitations and Future Work

This study has several limitations that leave room for further work. First, the model still relies on GPU computing power, which limits its use in resource-constrained environments. Future work will explore lightweight optimization strategies such as model quantization and structured pruning to improve real-time performance and energy efficiency for deployment on edge devices. Second, although RLDD-YOLOv11n performs well on the existing dataset, its generalization in complex field environments, including variations in leaf morphology across growth stages, overlapping occlusions, and lighting interference, still has room for improvement. An extended dataset covering multiple growth stages, varied lighting conditions, and background interference will therefore be constructed to improve generalization. Third, although RLDD-YOLOv11n is designed specifically for rice leaf disease detection, its architecture and learned features have strong transfer potential. Given the visual similarities among crop diseases, the model could be fine-tuned for crops such as wheat and maize through transfer learning, reducing annotation requirements. Because the SCSABlock and CARAFE modules enhance the recognition of complex textures and small targets, the design could also be extended to other agricultural applications, including fruit and vegetable disease identification, weed detection, and farmland pest and disease monitoring. Finally, future work will examine the performance limits of current mainstream detection models and incorporate techniques such as knowledge distillation and dynamic network structures to further improve the representation of small-scale disease features and classification accuracy.