Real-Time Semantic Reconstruction and Semantically Constrained Path Planning for Agricultural Robots in Greenhouses

Abstract

1. Introduction

- (1) To address class imbalance in soil-based greenhouse environments, we propose a semantic segmentation method that trains the SegFormer network with a mixed loss function, significantly improving segmentation accuracy for narrow furrow terrain.

- (2) To address the difficulty of acquiring global semantic maps in multi-span greenhouses, we propose a visual-semantic fusion SLAM point cloud reconstruction algorithm, together with a semantic point cloud rasterization method based on semantic feature vectors that enables rapid global map construction.

- (3) To address the difficulty of planning valid operational paths on the global semantic map of a greenhouse, we design a semantically constrained A* (SC-A*) path planning algorithm. It explicitly models how the weeding robot interacts with the terrain, so that the generated operational paths meet agricultural requirements.
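As an illustration of contribution (1), the following is a minimal NumPy sketch of a mixed loss that adds a Dice term to a class-weighted cross-entropy term. The class weights, the mixing coefficient `lam`, and all function names are assumptions made for this example; the loss configuration actually used for training may differ.

```python
import numpy as np

def weighted_cross_entropy(probs, labels, class_weights, eps=1e-7):
    """probs: (N, C) softmax outputs; labels: (N,) integer class ids."""
    picked = np.clip(probs[np.arange(labels.size), labels], eps, 1.0)
    w = class_weights[labels]                      # per-pixel weight from its true class
    return float(np.sum(-w * np.log(picked)) / np.sum(w))

def dice_loss(probs, labels, eps=1e-7):
    """Soft Dice loss averaged over classes."""
    one_hot = np.eye(probs.shape[1])[labels]       # (N, C)
    intersection = np.sum(probs * one_hot, axis=0)
    denom = np.sum(probs, axis=0) + np.sum(one_hot, axis=0)
    dice = (2.0 * intersection + eps) / (denom + eps)
    return float(1.0 - dice.mean())

def mixed_loss(probs, labels, class_weights, lam=0.5):
    """Weighted cross-entropy plus lam * Dice (lam is a hypothetical coefficient)."""
    return (weighted_cross_entropy(probs, labels, class_weights)
            + lam * dice_loss(probs, labels))

# Hypothetical weights: the rare furrow class (index 1) is up-weighted.
weights = np.array([1.0, 3.0, 1.0, 1.0])
labels = np.array([0, 1, 2, 3, 1])
uniform = np.full((5, 4), 0.25)
print(mixed_loss(uniform, labels, weights))
```

The cross-entropy term penalizes each pixel individually, while the Dice term scores region overlap per class, which is why the combination is commonly used when one class covers few pixels.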

2. Materials and Methods

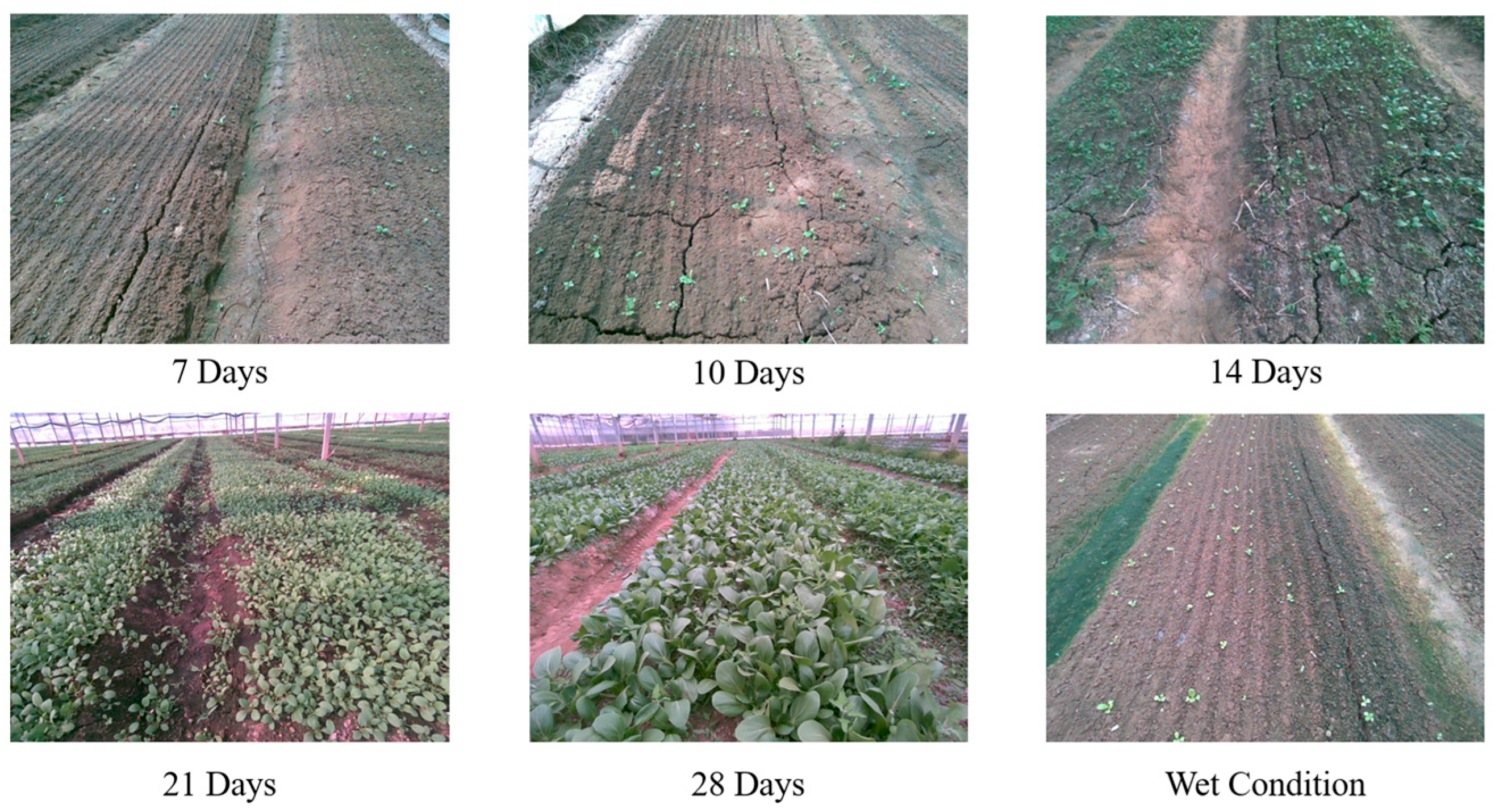

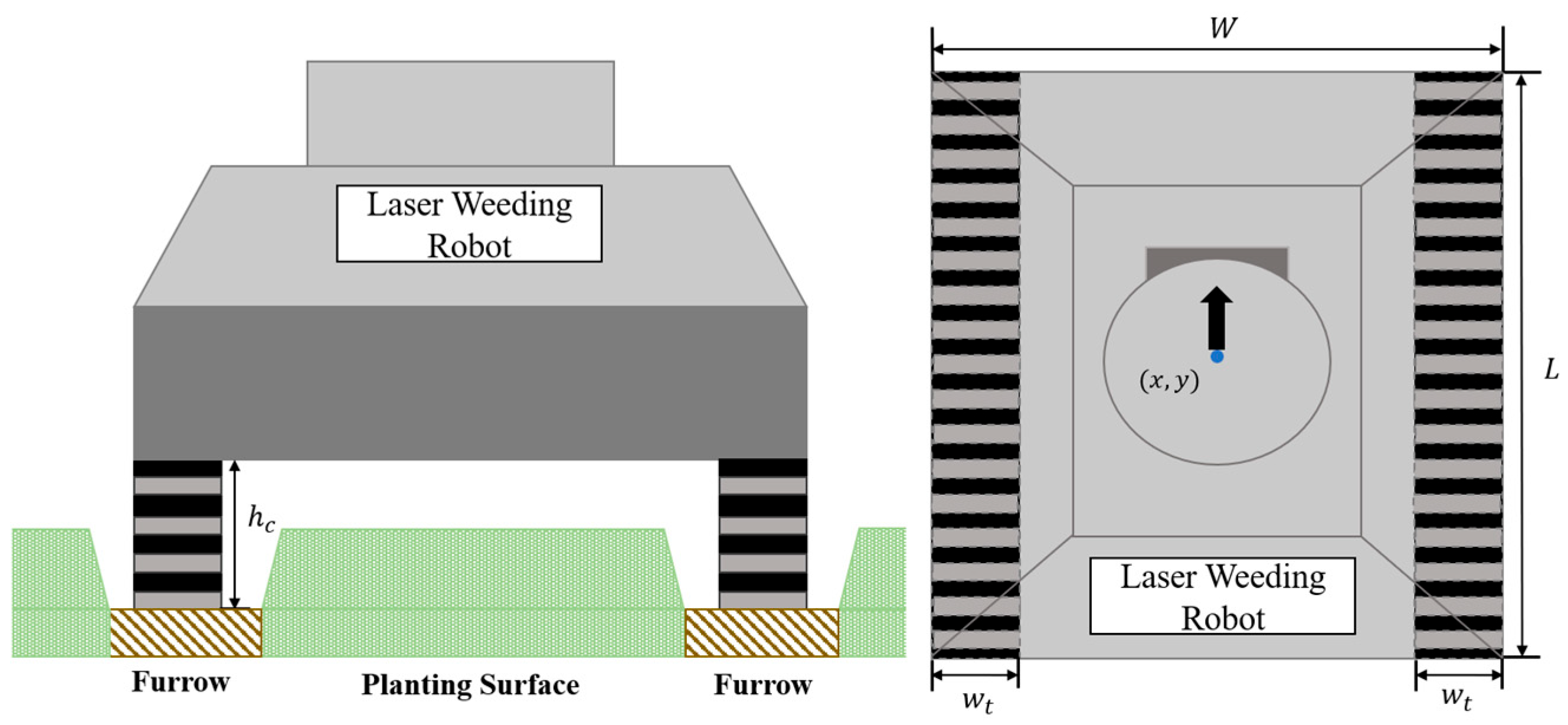

2.1. Experimental Facilities and Data Preparation

2.1.1. Experimental Site

2.1.2. Experimental Platform

2.1.3. Dataset Preparation

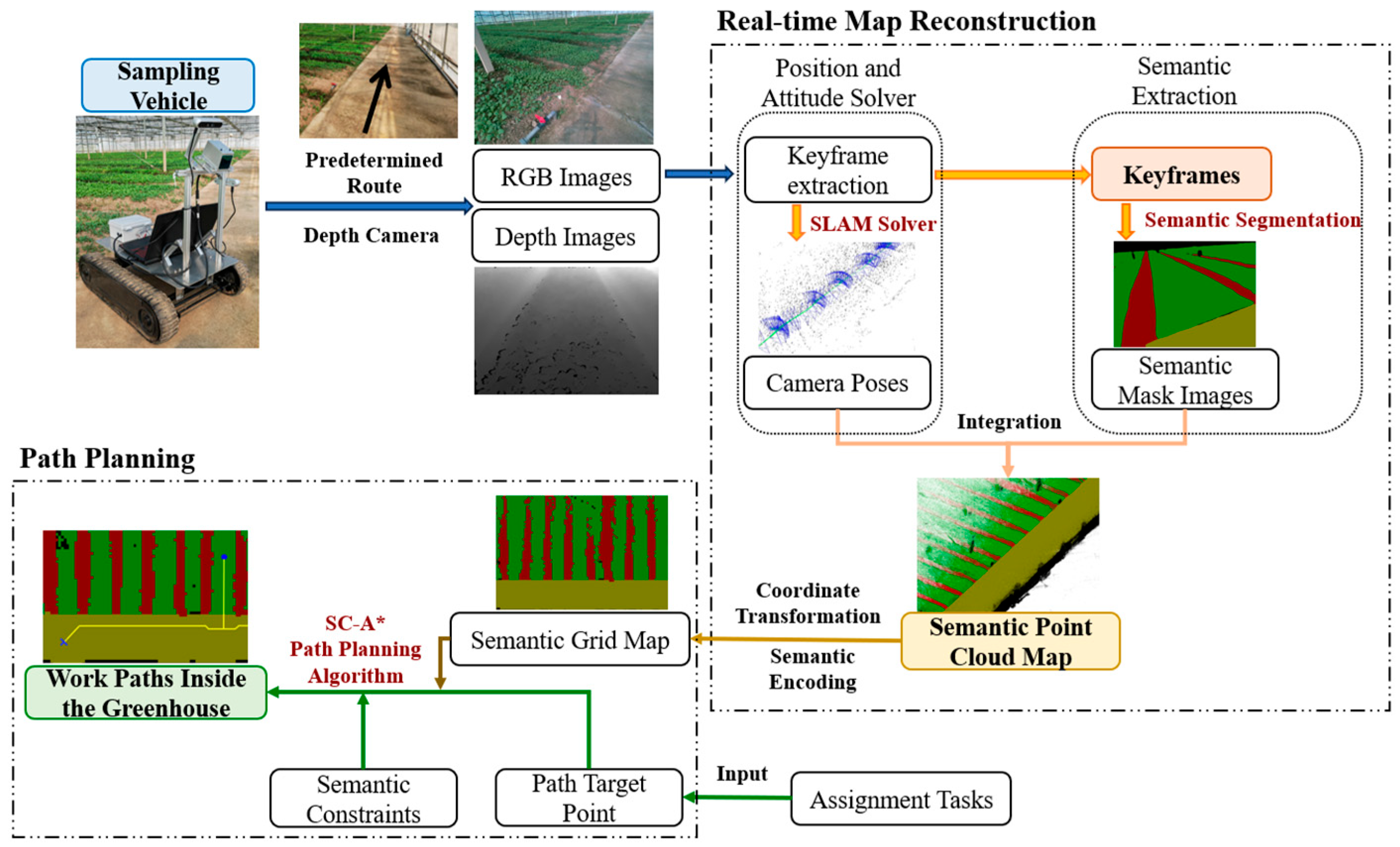

2.2. Algorithm for Real-Time Semantic Reconstruction and Task Path Generation in Facility Greenhouses

2.2.1. Algorithm Framework

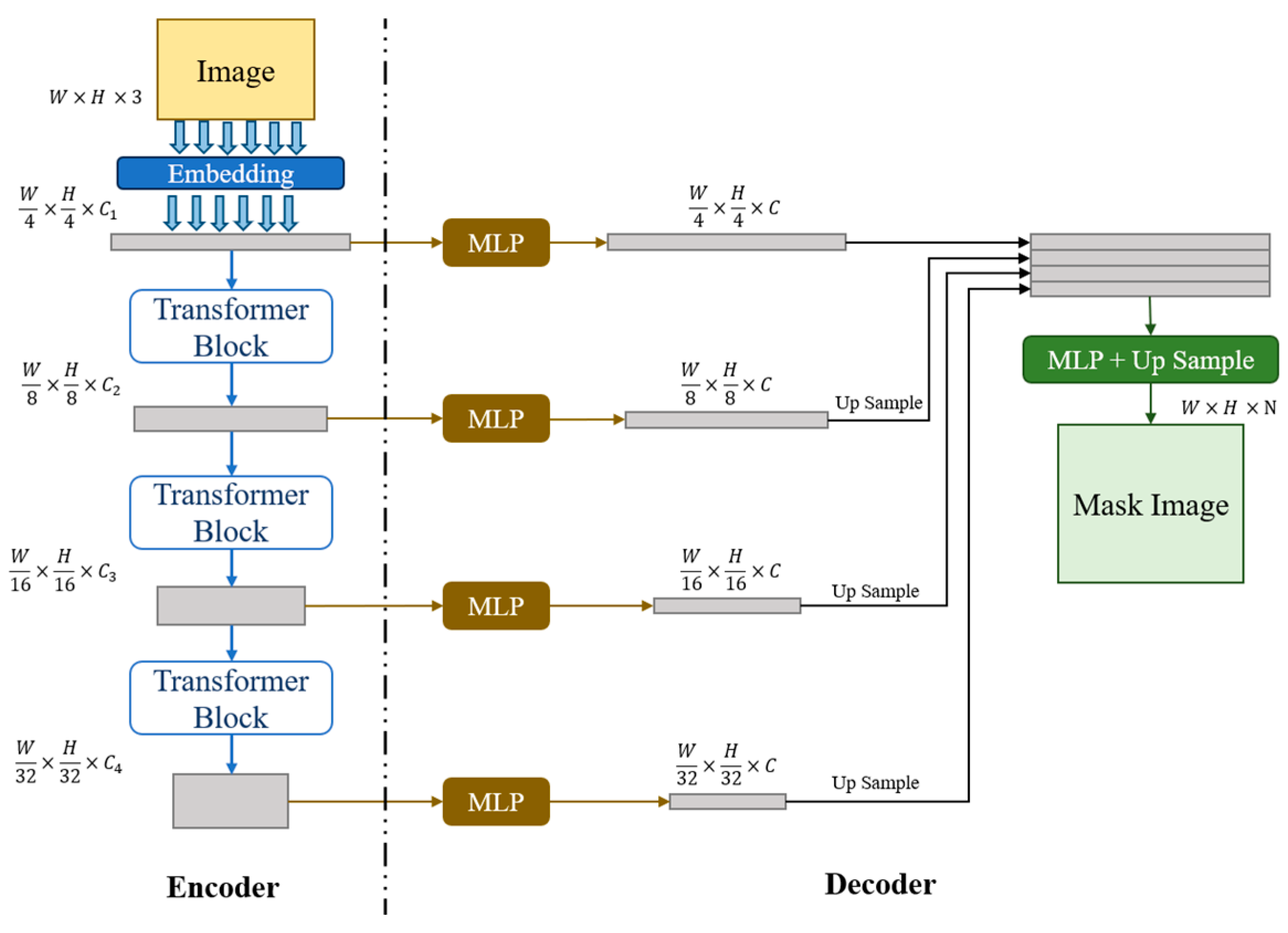

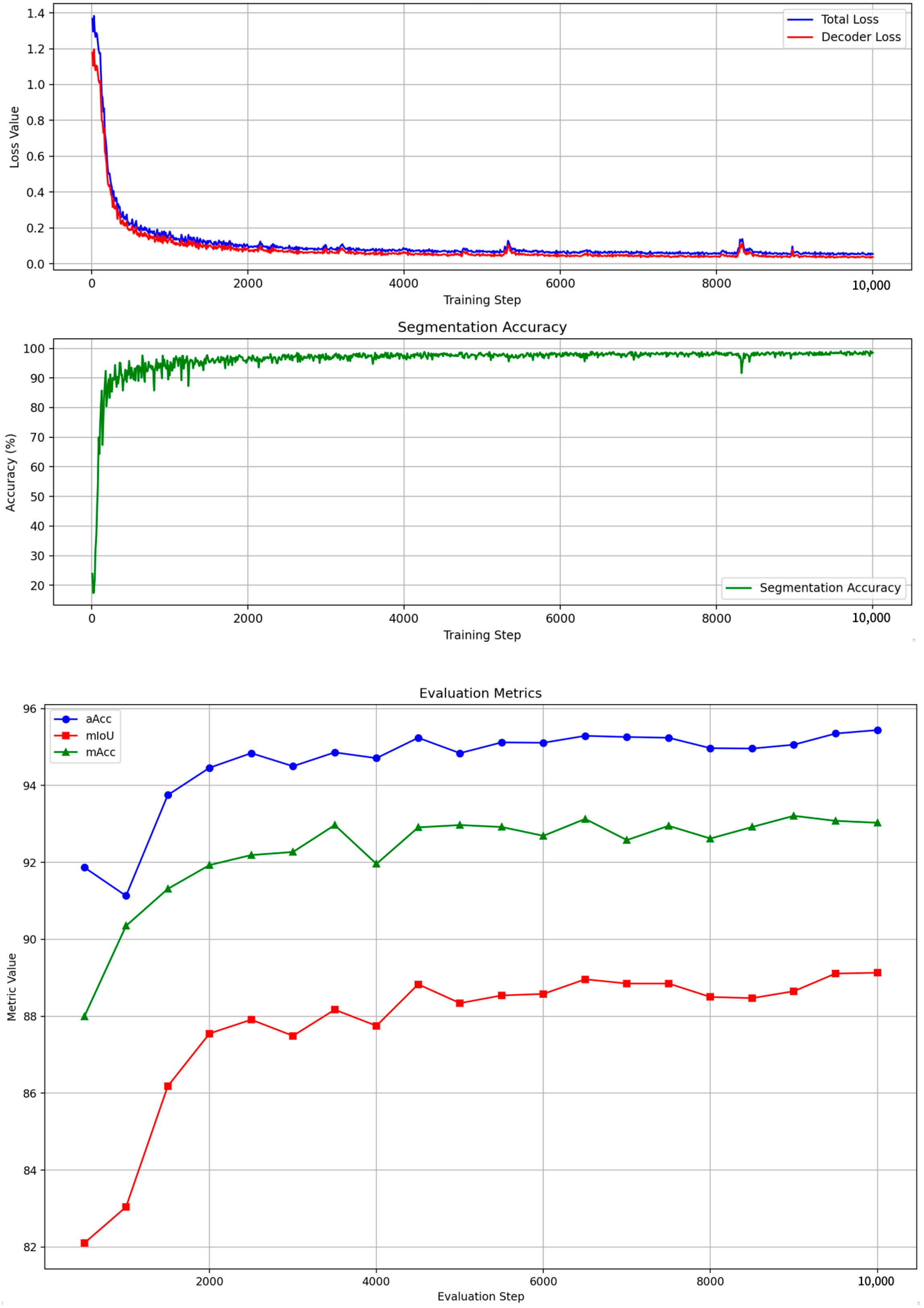

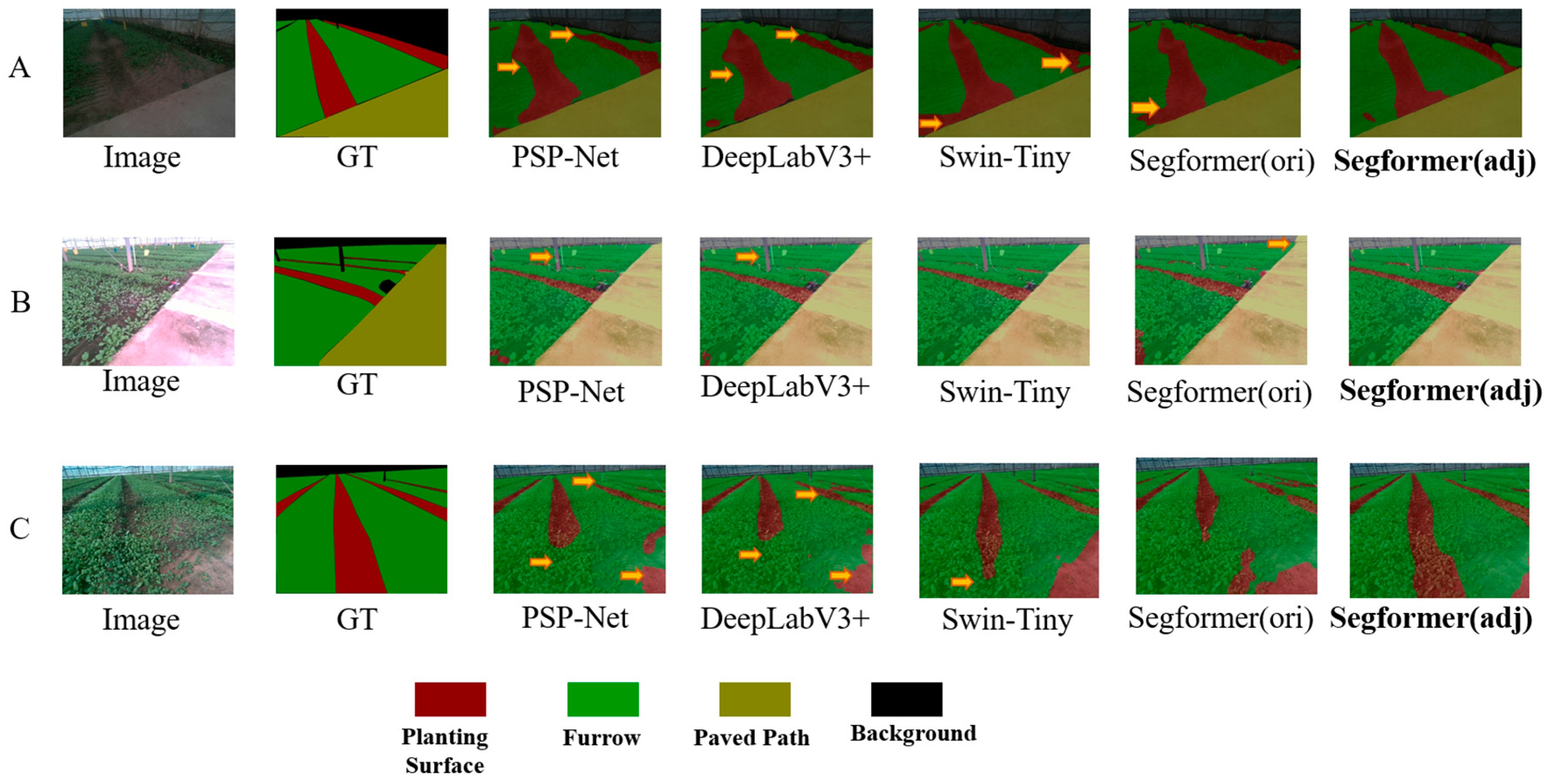

2.2.2. Lightweight Semantic Segmentation for Greenhouse Scenes Based on SegFormer

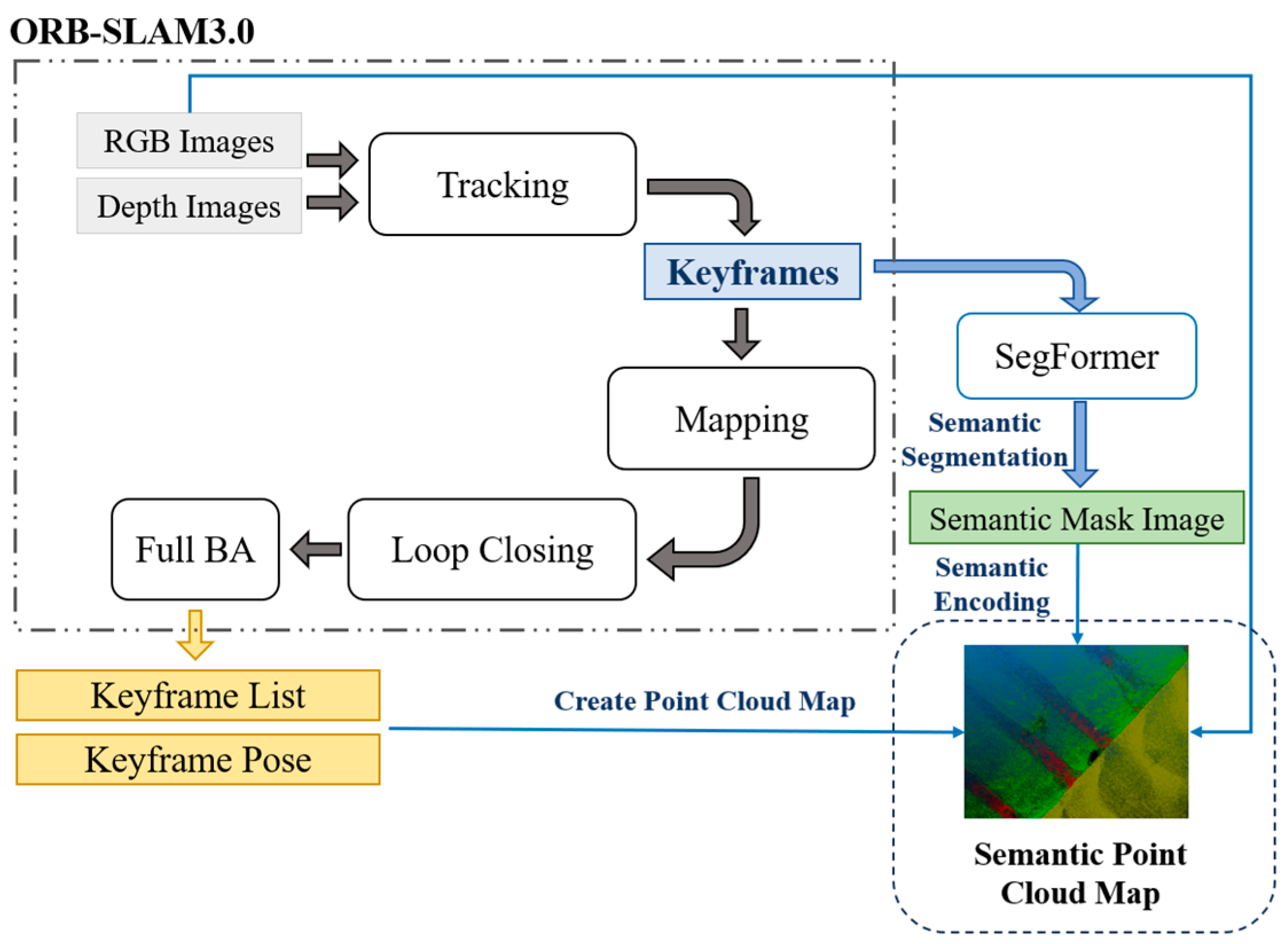

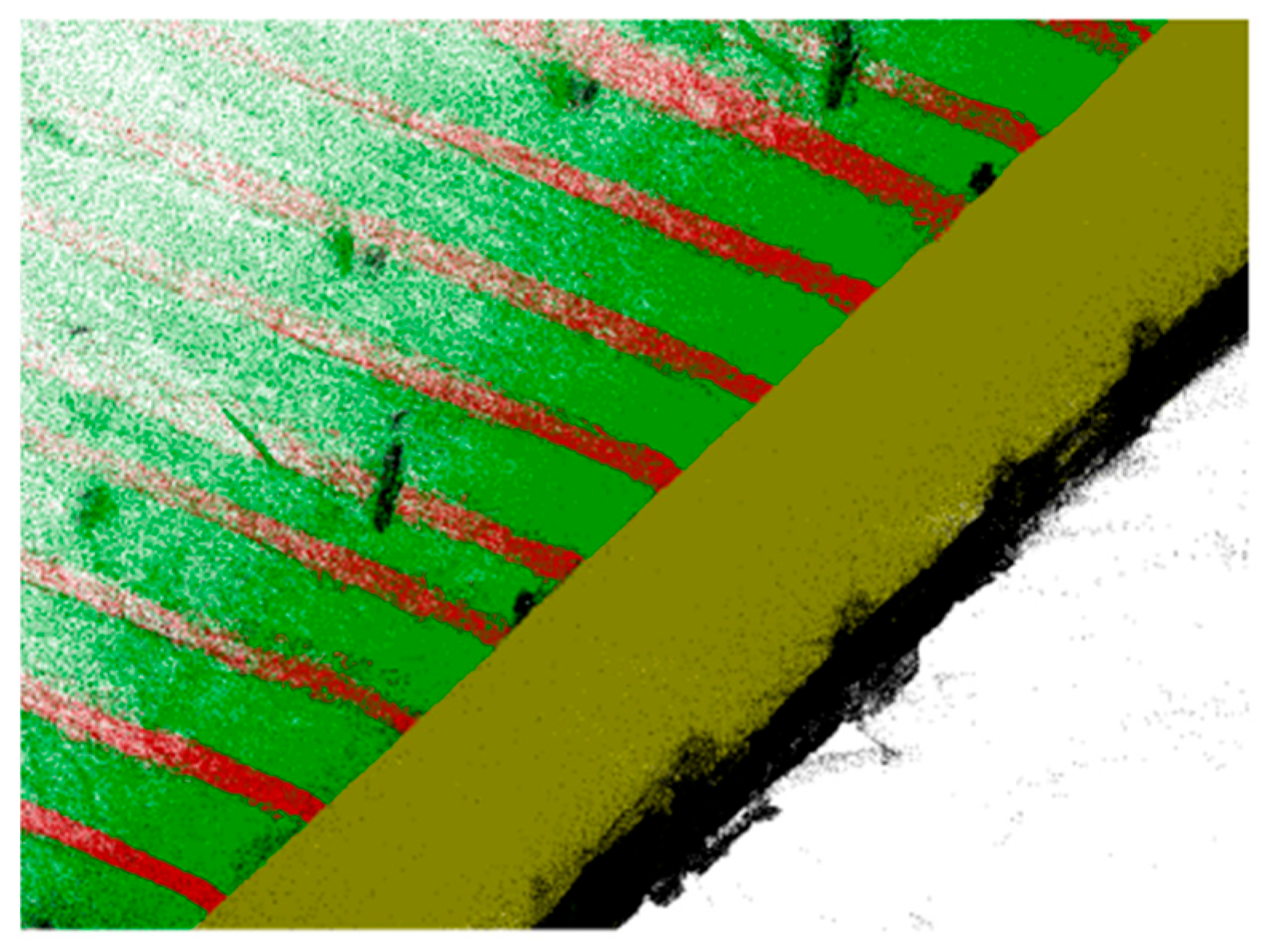

2.2.3. SLAM Semantic Map Reconstruction and Raster Map Conversion

| Algorithm 1: Visual SLAM Point Cloud Reconstruction Algorithm Incorporating SegFormer |
|---|
| Input: |
| Images captured from the camera with timestamps: (, , timeStamp) |
| SegformerNet: Trained semantic segmentation network |
| Intrinsic Parameters: , Extrinsic Parameters: , Noise Filtering Threshold: |
| Output: Global Semantic Point Cloud: MP |
| Global variables: |
| KFs <- {} //Keyframe Set |
| MP <- {} //Global Semantic Point Cloud |
| DataBuffer <- ThreadSafeQueue () //Thread-safe Queue for Synchronizing Data |
| 1: System::Run () //Main Program |
| 2: ORB_SLAM3.Initialize () |
| 3: Segformer.LoadModel () |
| 4: launch_thread (ORB_SLAM3_Track) |
| 5: launch_thread (SemanticSegmentation_Thread) |
| 6: launch_thread (PointCloudReconstruction_Thread) |
| 7: wait_for_all_threads_to_finish () |
| 8: |
| 9: Thread1 ORB_SLAM3 () //Visual SLAM Thread |
| 10: while not terminated do |
| 11: (, , timeStamp) <- GrabFrame() |
| 12: <- ORB_SLAM3.Track (, ) |
| 13: if IsNewKeyFrame () then |
| 14: KFs.add () |
| 15: SegmentQueue.add () |
| 16: end if |
| 17: DataBuffer.push ((, , , timeStamp)) |
| 18: ORB_SLAM3.Mapping () |
| 19: <- ORB_SLAM3.LoopClosing () |
| 20: KFs. . <- |
| 21: end while |
| 22: |
| 23: Thread2 SemanticSegmentation () //Semantic Segmentation Thread |
| 24: while not terminated do |
| 25: (, timeStamp) <- SegmentQueue.pop () |
| 26: <- SegformerNet.predict () |
| 27: DataBuffer.update ((, timeStamp)) |
| 28: end while |
| 29: |
| 30: Thread3 SemanticReconstructionThread (): //Semantic Reconstruction Thread |
| 31: while not terminated do |
| 32: if NewKeyFrameAvailable () then |
| 33: (, , , ) <- KeyframeQueue.pop () |
| 34: LocalPointCloud <- {} |
| 35: for each pixel in do |
| 36: if > 0 then |
| 37: <- * * |
| 38: <- * [ * ; 1] |
| 39: LocalPointCloud.add (.x, .y, .z, , ) |
| 40: end if |
| 41: end for |
| 42: MP.add (LocalPointCloud) |
| 43: MP <- RemoveOutliers (MP, ) |
| 44: end if |
| 45: end while |
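The per-pixel loop in steps 35 to 41 of Algorithm 1 has the shape of a standard pinhole back-projection followed by a camera-to-world transform. The sketch below shows that computation in vectorized NumPy under stated assumptions: `K` is a 3 × 3 intrinsic matrix, `T_wc` is a 4 × 4 camera-to-world pose, depth is already in metres, and only the semantic label (not colour) is attached to each point. These names are illustrative and are not taken from the authors' implementation.

```python
import numpy as np

def backproject_keyframe(depth, labels, K, T_wc):
    """Return an (M, 4) array of [x, y, z, label] world points.

    depth:  (H, W) depth image, 0 where invalid (assumed to be in metres)
    labels: (H, W) integer semantic label image
    K:      (3, 3) camera intrinsic matrix (assumed pinhole model)
    T_wc:   (4, 4) camera-to-world pose of the keyframe
    """
    v, u = np.nonzero(depth > 0)                   # rows (v) and columns (u) with valid depth
    d = depth[v, u].astype(float)
    pixels = np.stack([u, v, np.ones_like(u)]).astype(float)  # homogeneous pixels, 3 x M
    p_cam = (np.linalg.inv(K) @ pixels) * d        # camera-frame points, 3 x M
    p_cam_h = np.vstack([p_cam, np.ones((1, d.size))])
    p_world = (T_wc @ p_cam_h)[:3].T               # world-frame points, M x 3
    return np.hstack([p_world, labels[v, u].reshape(-1, 1).astype(float)])

# Toy example: one valid pixel at the principal point, camera shifted 1 m along x.
K = np.array([[100.0, 0.0, 2.0], [0.0, 100.0, 1.0], [0.0, 0.0, 1.0]])
T_wc = np.eye(4)
T_wc[0, 3] = 1.0
depth = np.zeros((3, 4))
depth[1, 2] = 2.0
labels = np.zeros((3, 4), dtype=int)
labels[1, 2] = 1
print(backproject_keyframe(depth, labels, K, T_wc))
```

Pixels with zero depth are skipped, mirroring the depth check in step 36; outlier removal (step 43) is not shown.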

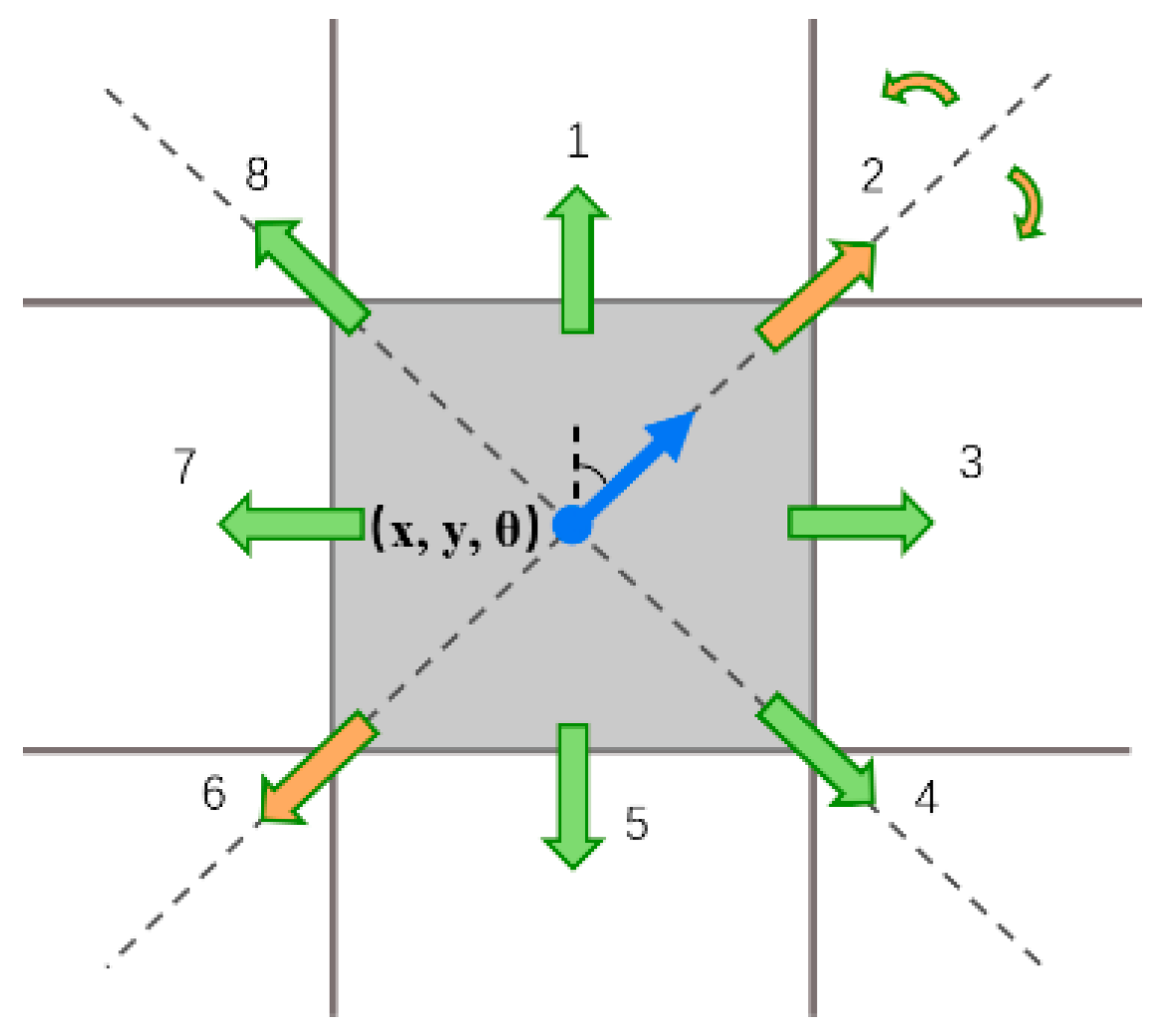

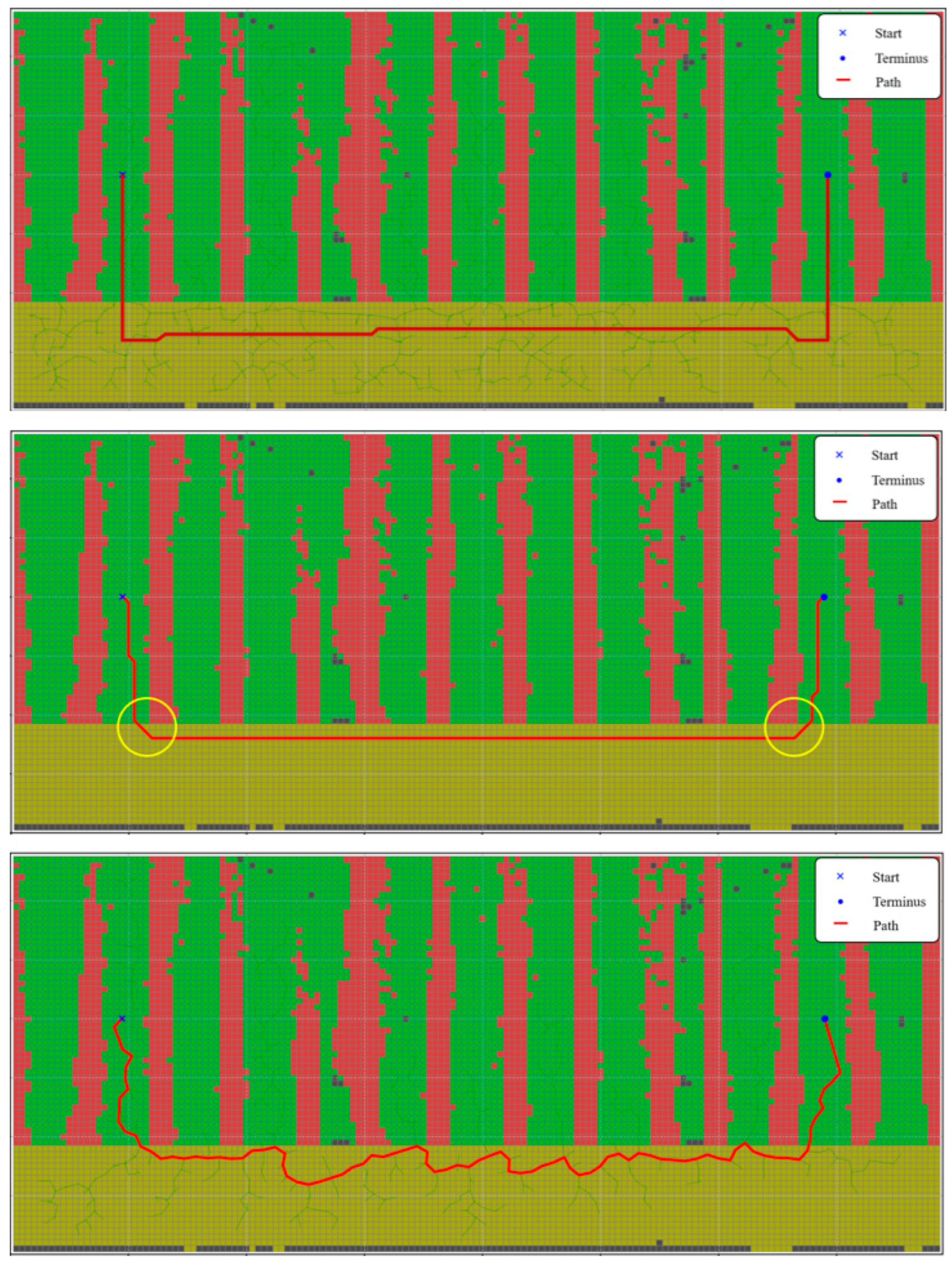
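The conversion from a semantic point cloud to a raster map can be pictured as follows. This is only one plausible reading of rasterization "based on semantic feature vectors": each grid cell accumulates a vector of per-class point counts and takes the most frequent class as its label. The choice of the x-y plane as the ground plane, the cell size, and the majority-vote rule are all assumptions for illustration.

```python
import numpy as np

def rasterize(points, labels, cell_size, num_classes, unknown=-1):
    """Project labelled 3D points onto a 2D grid by per-cell majority vote.

    points: (N, 3) world coordinates; the x-y plane is assumed to be the ground
    labels: (N,) integer class ids in [0, num_classes)
    Returns (grid, origin), where grid[i, j] is a class id or `unknown`.
    """
    xy = points[:, :2]
    origin = xy.min(axis=0)
    idx = np.floor((xy - origin) / cell_size).astype(int)
    shape = idx.max(axis=0) + 1
    # votes[i, j] is the per-cell vector of class counts
    votes = np.zeros((shape[0], shape[1], num_classes), dtype=int)
    np.add.at(votes, (idx[:, 0], idx[:, 1], labels), 1)
    grid = votes.argmax(axis=2)
    grid[votes.sum(axis=2) == 0] = unknown         # cells that received no points
    return grid, origin

points = np.array([[0.0, 0.0, 0.0], [0.1, 0.1, 0.0], [0.2, 0.0, 0.0], [1.0, 0.0, 0.0]])
labels = np.array([1, 1, 2, 3])
grid, origin = rasterize(points, labels, cell_size=0.5, num_classes=4)
print(grid)
```

`np.add.at` is used instead of fancy-indexed `+=` so that several points falling into the same cell and class are all counted.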

2.2.4. SC-A* Path Planning Algorithm Based on Scene Semantic Constraints

| Algorithm 2: SC-A* Algorithm |
|---|
| Input: |
| Grid map: G |
| Initial state: |
| Target state: |
| Robot Length: L, Robot Width: W, Track Width: |
| Output: Path: State sequence from the starting point to the target point |
| Global variables: |
| PendingState: Priority queue, storing the current states to be searched, sorted in ascending order by fScore |
| VisitedState: Set, storing evaluated states |
| gScore: Dictionary, storing the minimum known cost from the starting point to each state |
| lastState: Dictionary, storing the predecessor state for each state, used for path reconstruction |
| 1: Function SC_A_Star (G, , , L, W, ): |
| 2: PendingState.add () |
| 3: gScore() <- 0 |
| 4: fScore() <- EuclideanDistance (, ) //heuristic estimate of the remaining cost (Euclidean distance) |
| 5: |
| 6: while PendingState is not empty do |
| 7: <- PendingState.pop_min_fScore () //expand the state with the lowest fScore first |
| 8: if is then |
| 9: return ReconstructPath (lastState(), ) |
| 10: |
| 11: VisitedState.add () |
| 12: for each in PossibleAction () do |
| 13: nextState <- ApplyAction (, ) |
| 14: if nextState in VisitedState then |
| 15: continue |
| 16: end if |
| 17: if not ValidSemanticConstraints (nextState, G) then //check whether semantic constraints are satisfied |
| 18: continue |
| 19: end if |
| 20: tentative_gScore <- gScore() + Cost (, nextState) |
| 21: if nextState not in PendingState or tentative_gScore < gScore (nextState) then |
| 22: lastState (nextState) <- |
| 23: gScore (nextState) <- tentative_gScore |
| 24: fScore (nextState) <- gScore (nextState) + EuclideanDistance (nextState, ) |
| 25: if nextState not in PendingState then |
| 26: PendingState.add (nextState) |
| 27: end if |
| 28: end if |
| 29: end for |
| 30: end while |
| 31: |
| 32: return NULL //No valid path found |
| 33: |
| 34: Function ValidSemanticConstraints (State, G): |
| 35: , <- CalculateOccupiedGrids (State, G, W, L, ) |
| 36: for each grid in do //verify track constraint |
| 37: <- G.getSemantic (grid) |
| 38: if is not and is not then |
| 39: return false |
| 40: end if |
| 41: end for |
| 42: for each grid in do //verify vehicle constraint |
| 43: <- G.getSemantic (grid) |
| 44: if is then |
| 45: return false |
| 46: end if |
| 47: end for |
| 48: |
| 49: return true |
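The structure of Algorithm 2 can be made concrete with a small grid example. The sketch below is a simplified illustration, not the authors' implementation: states are `(row, col, heading)` with four headings, the robot is an axis-aligned rectangle whose two outermost lateral cells are the tracks, tracks may only occupy furrow or paved-road cells, and the body may not cover background cells. The class ids, the footprint model, the action set, and the turn cost are all assumptions.

```python
import heapq
import math

BACKGROUND, FURROW, PLANTING, ROAD = 0, 1, 2, 3   # hypothetical class ids
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]     # N, E, S, W as (d_row, d_col)

def footprint(state, half_len, half_wid):
    """Return (track_cells, body_cells) for an axis-aligned rectangular robot."""
    r, c, h = state
    tracks, body = [], []
    for a in range(-half_len, half_len + 1):       # offset along the heading
        for b in range(-half_wid, half_wid + 1):   # offset across the heading
            cell = (r + a, c + b) if h % 2 == 0 else (r + b, c + a)
            (tracks if abs(b) == half_wid else body).append(cell)
    return tracks, body

def valid(state, grid, half_len, half_wid):
    """Semantic constraint check: tracks on furrow/road, body off background."""
    tracks, body = footprint(state, half_len, half_wid)
    rows, cols = len(grid), len(grid[0])
    if any(not (0 <= r < rows and 0 <= c < cols) for r, c in tracks + body):
        return False
    if any(grid[r][c] not in (FURROW, ROAD) for r, c in tracks):
        return False
    return all(grid[r][c] != BACKGROUND for r, c in body)

def sc_a_star(grid, start, goal, half_len=0, half_wid=1, turn_cost=2.0):
    """A* over (row, col, heading) states that rejects constraint-violating states."""
    def heuristic(s):
        return math.hypot(s[0] - goal[0], s[1] - goal[1])
    open_heap = [(heuristic(start), 0.0, start)]
    g_score, parent, closed = {start: 0.0}, {}, set()
    while open_heap:
        _, g, s = heapq.heappop(open_heap)
        if s in closed:
            continue                               # stale heap entry
        if s == goal:
            path = [s]
            while s in parent:
                s = parent[s]
                path.append(s)
            return path[::-1]
        closed.add(s)
        r, c, h = s
        dr, dc = HEADINGS[h]
        successors = [((r + dr, c + dc, h), 1.0),          # drive forward one cell
                      ((r, c, (h + 1) % 4), turn_cost),    # turn right in place
                      ((r, c, (h - 1) % 4), turn_cost)]    # turn left in place
        for nxt, cost in successors:
            if nxt in closed or not valid(nxt, grid, half_len, half_wid):
                continue
            tentative = g + cost
            if tentative < g_score.get(nxt, math.inf):
                g_score[nxt] = tentative
                parent[nxt] = s
                heapq.heappush(open_heap, (tentative + heuristic(nxt), tentative, nxt))
    return None                                    # no valid path found

R, F, P = ROAD, FURROW, PLANTING
grid = [[R, R, R, R, R],
        [R, R, R, R, R],
        [R, R, R, R, R],
        [F, P, F, P, F],
        [F, P, F, P, F]]
path = sc_a_star(grid, start=(4, 1, 0), goal=(4, 3, 2))
print(path)
```

In this toy layout the robot straddles a planting bed with its tracks in the adjacent furrows, so the only way to reach the neighbouring bed is to drive out onto the paved road, turn there, and re-enter; a sideways shortcut would put a track on the planting surface and is rejected by `valid`.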

3. Results

3.1. Semantic Segmentation Performance Evaluation

3.2. Evaluation of Semantic Map Reconstruction Results

3.3. Path Planning Experiment Evaluation

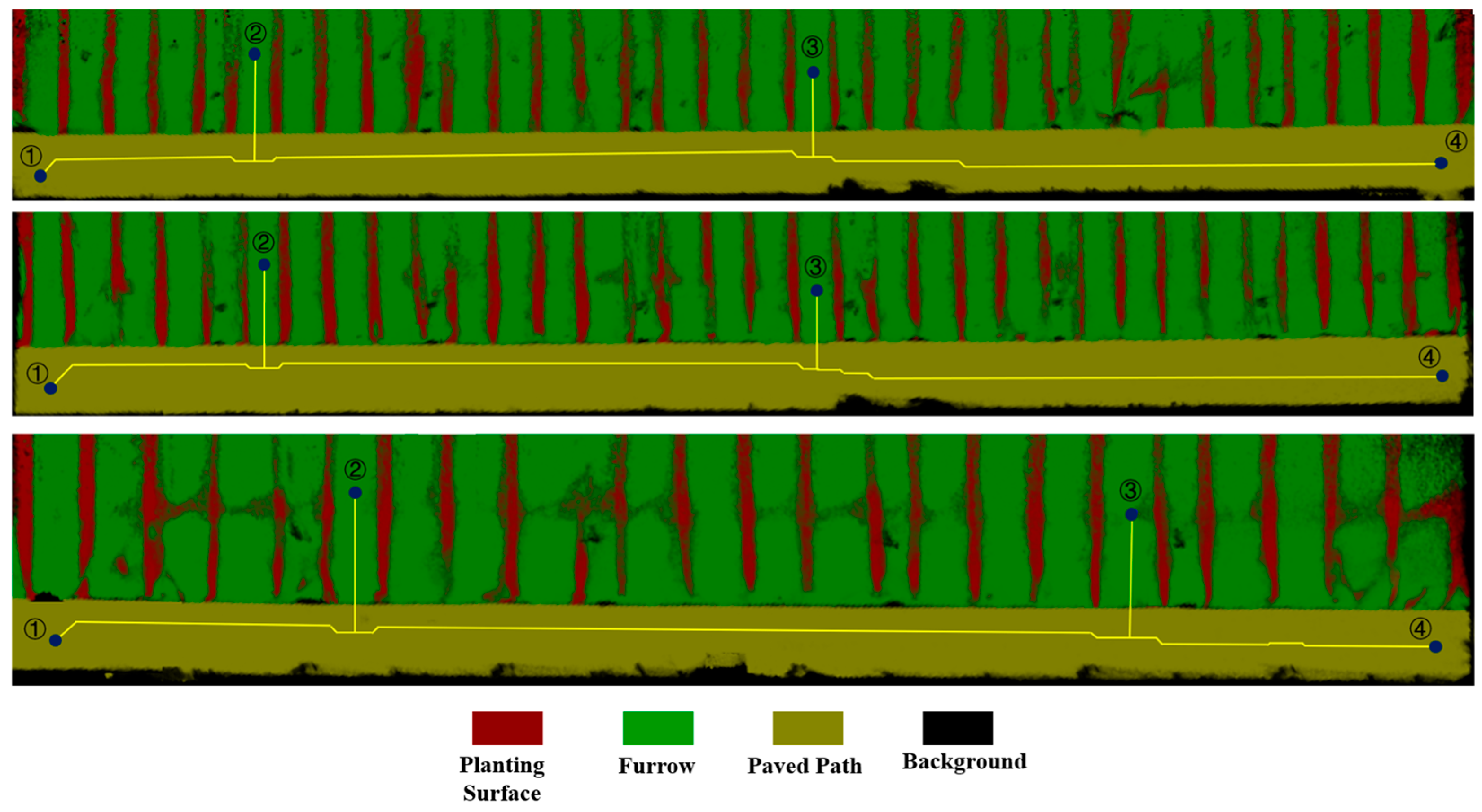

3.4. Algorithm Experiment Evaluation in Real-World Environments

4. Discussion

- (1)

- The lightweight semantic segmentation algorithm Segformer achieved a global accuracy of 95.44% and a mIoU of 89.13% on the facility greenhouse dataset. It attained a recognition accuracy of 92.90% for furrows, with the joint loss function yielding a 3.9% improvement over a single loss function. Compared to other algorithms, it features fewer parameters and faster inference speeds.

- (2)

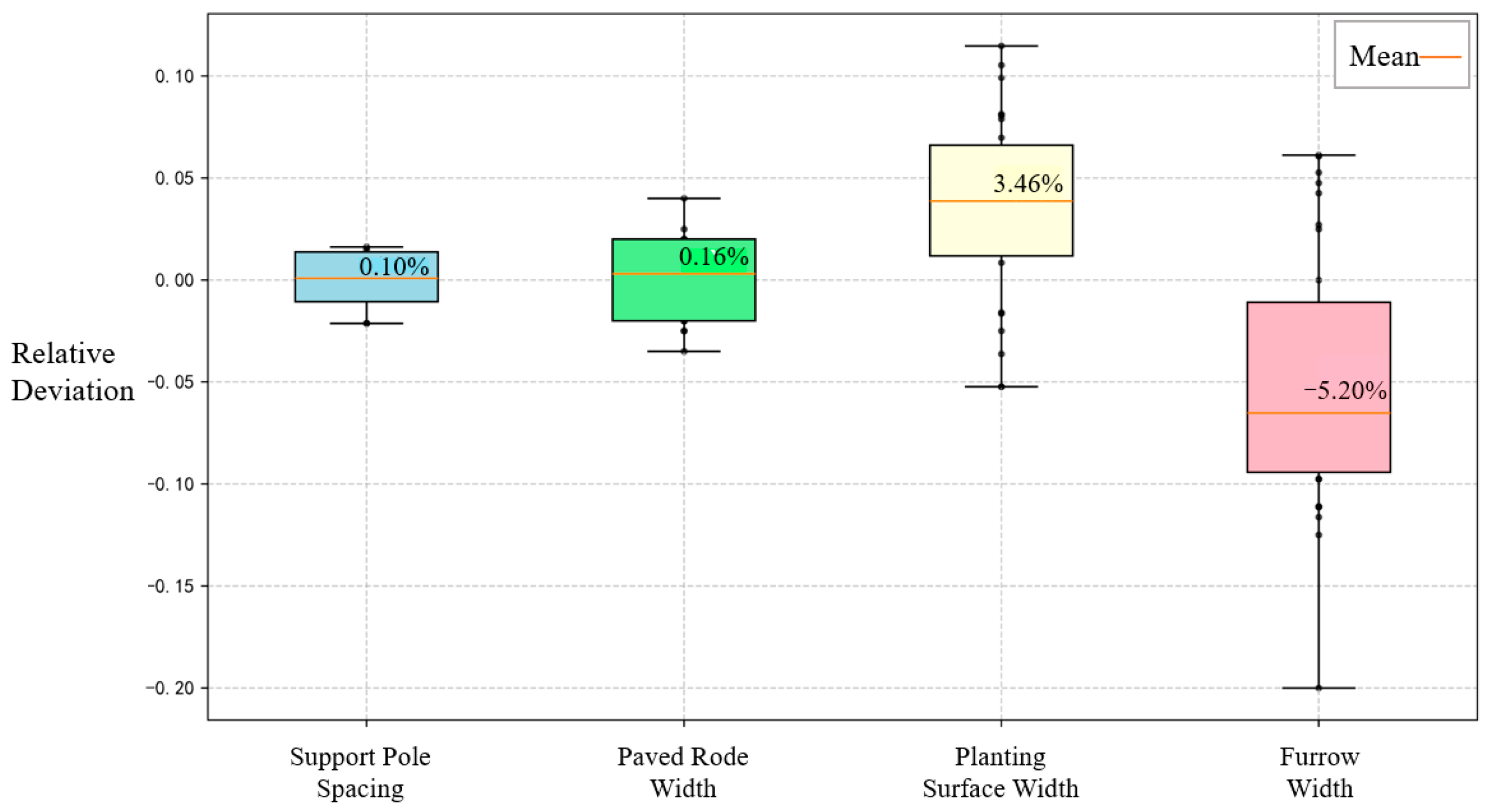

- The semantic map reconstruction algorithm based on visual SLAM achieves an average relative error of <2% for reconstructing support pole spacing and paved road width within greenhouses, demonstrating high accuracy in scene reconstruction. For the two types of boundaries defined by semantic information—planting surfaces and furrows—the average relative errors in reconstruction were 5.82% and 7.37%, respectively. The average deviation values were 3.46% and −5.20%, indicating high semantic reconstruction accuracy.

- (3)

- The SC-A* path planning algorithm completed its computation in 0.124 s on the workstation within the experimental simulation scenario. It successfully planned the robot’s operational path, demonstrating higher computational efficiency than the traditional A* algorithm. Additionally, it successfully avoided the planting surface at the junction between the planting zone and paved roadway.

- (4)

- The system’s feasibility and real-time performance were validated across three greenhouse facilities with distinct environmental conditions. The system successfully reconstructed semantic maps in all three greenhouses and achieved path planning without violating constraints. On the PC in the vehicle platform, semantic segmentation took approximately 0.13 s per frame, point cloud fusion took about 0.35 s per frame, map conversion took roughly 30 s, and path planning completed in under 1 s, meeting real-time requirements.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Class | IoU/% | Acc/% | F1-Score | mIoU/% | OA/% |
|---|---|---|---|---|---|
| Background | 81.43 | 83.88 | 89.8 | 89.13 | 95.44 |
| Furrow | 83.21 | 92.90 | 90.84 | | |
| Planting Surface | 93.43 | 96.78 | 96.6 | | |
| Paved road | 98.41 | 99.31 | 99.2 | | |
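For reference, the per-class metrics in this table can all be derived from a pixel-level confusion matrix. The sketch below uses the standard definitions and assumes that the per-class "Acc" column is per-class pixel accuracy (recall); that interpretation, the function name, and the toy confusion matrix are assumptions.

```python
import numpy as np

def per_class_metrics(conf):
    """conf[i, j] = number of pixels of true class i predicted as class j."""
    tp = np.diag(conf).astype(float)
    fp = conf.sum(axis=0) - tp
    fn = conf.sum(axis=1) - tp
    iou = tp / (tp + fp + fn)
    acc = tp / (tp + fn)                 # per-class pixel accuracy (recall)
    f1 = 2 * tp / (2 * tp + fp + fn)     # identical to 2 * iou / (1 + iou)
    overall_acc = tp.sum() / conf.sum()
    return iou, acc, f1, iou.mean(), overall_acc

# Toy two-class confusion matrix (hypothetical numbers).
iou, acc, f1, miou, oa = per_class_metrics(np.array([[8, 2], [1, 9]]))
print(iou, acc, f1, miou, oa)

# Consistency check against the reported per-class IoU values.
reported_iou = np.array([81.43, 83.21, 93.43, 98.41])
print(reported_iou.mean())
print(200 * reported_iou / (100 + reported_iou))
```

The mean of the four reported IoU values is 89.12, which agrees with the reported mIoU of 89.13% up to rounding, and applying F1 = 2·IoU/(1 + IoU) to the reported IoU values gives approximately 89.8, 90.84, 96.6 and 99.2, matching the F1-Score column.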

| Model | mIoU/% | OA/% | mF1-Score | Furrow IoU/% |
|---|---|---|---|---|
| WCE loss only | 88.56 | 94.31 | 92.84 | 79.37 |
| Dice loss only | 86.31 | 93.13 | 91.03 | 80.07 |
| WCE loss + Dice loss | 89.13 | 95.44 | 93.40 | 83.21 |

| Model | Backbone | Optimizer | Learning Rate | Weight Decay |
|---|---|---|---|---|
| PSP-Net | ResNet-50 | AdamW | 1 × 10⁻⁴ | 0.01 |
| DeepLabV3+ | ResNet-50 | SGD | 1 × 10⁻⁴ | 0.01 |
| Swin + UPerNet | Swin-Tiny | AdamW | 5 × 10⁻⁵ | 0.01 |
| SegFormer-B0 | MiT-B0 | AdamW | 6 × 10⁻⁵ | 0.01 |

| Model | mIoU/% | OA/% | mF1-Score | Furrow IoU/% | FPS | Parameters |
|---|---|---|---|---|---|---|
| PSP-Net | 87.11 | 94.34 | 92.9 | 79.73 | 101.83 | 46.603 M |
| DeepLabV3+ | 87.31 | 94.48 | 93.03 | 80.07 | 63.76 | 41.217 M |
| Swin-Tiny | 88.8 | 95.17 | 93.92 | 81.94 | 44.12 | 120 M |
| SegFormer (ori) | 86.58 | 94.12 | 92.87 | 78.15 | 120.33 | 3.716 M |
| SegFormer (adj) | 87.93 | 94.7 | 93.4 | 83.21 | 121.56 | 3.716 M |

| Class | Reconstructed Mean/m | Measurement Mean/m | MAE/cm | MRE/% |
|---|---|---|---|---|
| Support pole spacing | 7.99 | 8.00 | 11 | 1.43 |
| Paved road width | 2.07 | 2.09 | 4 | 1.88 |
| Planting surface width | 1.31 | 1.27 | 7 | 5.28 |
| Furrow width | 0.40 | 0.42 | 3 | 7.37 |
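The error columns can be read with the usual definitions of mean absolute error (MAE) and mean relative error (MRE) over paired measurements. The sketch below assumes those standard definitions; the sample values are hypothetical and are not taken from the experiments.

```python
import numpy as np

def mae_and_mre(reconstructed, measured):
    """Return (MAE in the input unit, MRE in percent) over paired samples."""
    reconstructed = np.asarray(reconstructed, dtype=float)
    measured = np.asarray(measured, dtype=float)
    abs_err = np.abs(reconstructed - measured)
    return abs_err.mean(), (abs_err / measured).mean() * 100.0

# Hypothetical furrow-width samples in metres: one too narrow, one too wide.
mae, mre = mae_and_mre([0.40, 0.44], [0.42, 0.42])
print(mae, mre)
```

In this toy case the reconstructed and measured means are identical (0.42 m) even though the MAE is 2 cm, because errors of opposite sign cancel in the mean but not in the MAE. The same effect would explain how support pole spacing can show means of 7.99 m and 8.00 m alongside an MAE of 11 cm.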

| Algorithm | Path Length | Computation Time/s | Violations | Turns |
|---|---|---|---|---|
| Traditional A* | 161.142 | 0.134 | 4 | 9 |
| RRT | 184.978 | 0.378 | 11 | 62 |
| SC-A* | 172.433 | 0.124 | 0 | 8 |
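Path length and number of turns can be computed from a planned path in a few lines. The sketch below assumes the path is a sequence of 2D grid coordinates visited in unit steps and counts a turn whenever the step direction changes; how these quantities were actually measured in the experiments is not specified here, so this definition is an assumption.

```python
import math

def path_length_and_turns(path):
    """path: list of (x, y) grid coordinates visited in order, one cell per step."""
    length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    turns = 0
    prev_dir = None
    for a, b in zip(path, path[1:]):
        step = (b[0] - a[0], b[1] - a[1])
        if step == (0, 0):
            continue                      # ignore repeated waypoints
        if prev_dir is not None and step != prev_dir:
            turns += 1
        prev_dir = step
    return length, turns

length, turns = path_length_and_turns([(0, 0), (0, 1), (0, 2), (1, 3), (2, 3)])
print(length, turns)
```

Comparing raw step vectors only works when all steps are single-cell moves; for paths with variable step lengths the directions would need to be normalized first.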

| Greenhouse | MRE (Planting Surface)/% | MRE (Furrow)/% |
|---|---|---|
| Greenhouse 1 | 5.28 | 7.37 |
| Greenhouse 2 | 7.91 | 10.13 |
| Greenhouse 3 | 6.39 | 7.91 |

| Greenhouse | Keyframe Number | Semantic Segmentation (s/frame) | Point Cloud Fusion (s/frame) | Map Conversion (s) | Path Planning (s) |
|---|---|---|---|---|---|
| Greenhouse 1 | 1363 | 0.134 | 0.339 | 32.64 | 0.815 |
| Greenhouse 2 | 1390 | 0.136 | 0.355 | 31.21 | 0.897 |
| Greenhouse 3 | 898 | 0.127 | 0.217 | 16.45 | 0.732 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Quan, T.; Luo, J.; Xie, S.; Ren, X.; Miao, Y. Real-Time Semantic Reconstruction and Semantically Constrained Path Planning for Agricultural Robots in Greenhouses. Agronomy 2025, 15, 2696. https://doi.org/10.3390/agronomy15122696
