1. Introduction

With the tightening of the global agricultural labor market, the traditional labor-intensive agricultural operations can no longer meet the high-efficiency demands of modern agricultural production. The research and application of intelligent agricultural robot technology have become the key to agricultural modernization [1]. Among various agricultural picking environments, apple picking stands out as a challenging area for automation technology due to its strong seasonality, high labor demand, and complex working environment.

Zhao et al. [2] have developed a robotic device for apple picking, which consists of a manipulator, an end effector, and an image-based visual servo control system [3]. They have developed an apple recognition algorithm to achieve automatic detection and positioning during picking.

The motion control of dual-arm picking robots is more complex than that of a single mechanical arm [4]. In the field of robot path planning [5,6], numerous path planning algorithms have been introduced one after another [7,8]. For example, Shi et al. [9] proposed an obstacle avoidance path planning method for dual-arm robots, which introduces target probability bias and cost functions based on the Rapidly-exploring Random Tree (RRT) algorithm [10] to reduce the randomness and blindness in the expansion process of the RRT algorithm. Long et al. [11] proposed a new motion planner named RRT*Smart-AD, which ensures that dual-arm robots can adapt to obstacle avoidance constraints and dynamic characteristics in dynamic environments. Zhang et al. [12] proposed a novel dual-arm robot obstacle avoidance strategy based on the Artificial Potential Field (APF) algorithm, which introduces an attractive function and a repulsive function to guide the movement of the mechanical arm towards the target object while avoiding obstacles [13]. However, these algorithms impose a significant computational burden in the complex picking environment of orchards, leading to insufficient real-time performance of arm planning and difficulty in ensuring globally optimal paths. Additionally, their limited ability to process dynamic environmental information reduces the effectiveness and reliability of the arm’s obstacle avoidance strategies.

Reinforcement learning is increasingly applied in the field of motion planning for dual-arm robots [14,15], enabling the learning of optimal behaviors to adapt to complex environments. James et al. [16] employed the Deep Q-Network [17] to control the joint rotation of robot arms for grasping tasks. Prianto et al. [18] utilized the Soft Actor-Critic (SAC) [19] method to plan the shortest paths for multiple robot arms. Ha et al. [20] proposed the use of SAC for closed-loop decentralized planning of multiple robot arms. Zhao et al. [21] applied Proximal Policy Optimization (PPO) [22] for cooperative planning of robot arms. The algorithm developed by Zhang et al. [23] integrates experience replay with shortest path constraints. Although these methods enhance adaptability and cooperative effects, their high computational cost limits their widespread application. Therefore, it is necessary to explore more efficient computational strategies to reduce resource consumption.

In unstructured environments of dense orchards, single-arm robots are often constrained by their inherent limitations in physical degrees of freedom and operational modes. When dealing with fruit clusters obscured by foliage or scenarios requiring simultaneous handling of multiple dispersed targets, they typically necessitate frequent repositioning and sequential operations to accomplish harvesting tasks. In contrast, dual-arm robots leverage their parallel processing capabilities in complex spaces to effectively overcome the limitations of single-arm systems in sequential harvesting, thereby significantly reducing the time required for individual fruit picking [24].

In this study, the underlying multi-agent coordination control employs the Multi-Agent Proximal Policy Optimization (MAPPO) [25] algorithm, whose “centralized training with decentralized execution” framework is particularly suitable for dual robotic arm collaboration scenarios. Compared to fully distributed approaches, this framework leverages a centralized Critic network during the training phase to fully utilize global state information, effectively addressing non-stationarity issues in multi-agent environments and significantly enhancing the coordination and stability of policy learning. Meanwhile, in contrast to fully centralized methods, its decentralized execution mode ensures independent decision-making capability and scalability during real-world deployment, achieving an effective balance between sample efficiency and control performance.

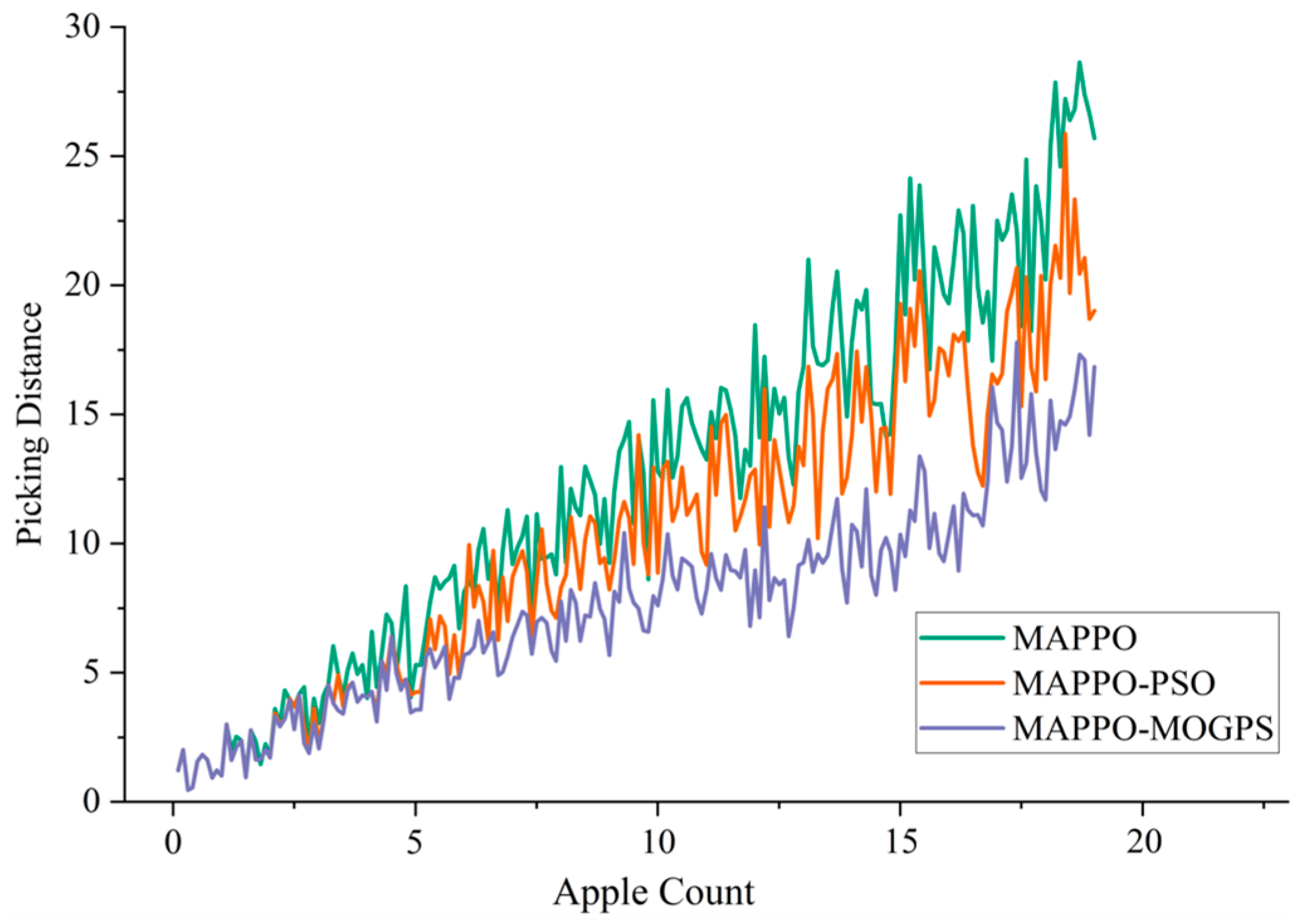

At the top-level task allocation and path planning layer, we adopt an improved method based on Multi-Objective Greedy Policy Search (MOGPS) [26]. This strategy conducts weighted evaluations of multiple objectives such as distance, obstacle distribution, and fruit density, enabling rapid generation of near-optimal task allocation schemes in dynamic environments [27]. Compared to swarm intelligence optimization methods like ant colony optimization [28] and genetic algorithms [29], the enhanced MOGPS algorithm leverages its single-step greedy selection mechanism and heuristic evaluation function to significantly reduce computational complexity while maintaining solution quality. This approach better aligns with the stringent real-time and computational efficiency requirements of fruit and vegetable harvesting tasks, effectively supporting the system’s online decision-making needs.

By hierarchically integrating MOGPS at the top level for macroscopic multi-objective optimization and path planning, the system’s global optimality is ensured. Concurrently, MAPPO operates at the bottom level, dedicated to executing local motion coordination and dynamic obstacle avoidance, thus establishing a closed-loop “global planning–local execution” mechanism. This collaborative paradigm effectively capitalizes on the strengths of both algorithms while mitigating their individual limitations, ultimately enhancing the intelligence and operational efficiency of the dual robotic arm system in complex agricultural settings.

This paper proposes a collaborative picking strategy for dual-arm robots in complex orchard environments, integrating the MAPPO [25] and MOGPS algorithms. The strategy enables the dual arms to autonomously perform precise picking through centralized training of the Critic network and distributed execution of the Actor network. To address dynamic obstacle avoidance during picking, a strategy for real-time monitoring and evaluation of collision risks is proposed [30], dynamically optimizing the picking path. The MOGPS algorithm considers the uneven distribution of fruit and the complexity of the working environment, dynamically allocating tasks and optimizing paths for the dual arms. Simulation experiments have verified the adaptability and path optimization capabilities of the MAPPO-MOGPS algorithm in a simulated apple picking environment. Additionally, experiments were conducted on the MAPPO-MOGPS algorithm in a real-world picking scenario, combined with a visual perception system [31], to validate its effectiveness.

2. Materials and Methods

To achieve efficient and safe dual-arm collaborative apple harvesting, this study designs an innovative strategy that integrates Multi-Agent Proximal Policy Optimization, a multi-objective greedy target selection mechanism, and a dynamic collision risk term. This section aims to outline the overall framework and the underlying rationale of the proposed methodology. Specifically, MAPPO serves as the underlying learning architecture, facilitating coordinated policy learning through parameter sharing between the agents. The multi-objective greedy selection mechanism is responsible for real-time task allocation and fruit target decision-making in complex environments. Meanwhile, the dynamic collision risk term is incorporated into the motion planning to explicitly avoid collisions between the arms and with environmental obstacles. Together, these components form a comprehensive decision-making system. By enabling real-time decision-making, the proposed approach enhances the coordination between the dual arms and their adaptability to dynamic environments.

2.1. MAPPO Algorithm

The PPO algorithm [

32] is an on-policy reinforcement learning algorithm that stabilizes the learning process through trust region methods. Its policy update mechanism restricts the difference between the new and old policies, improving convergence and sample efficiency. MAPPO [

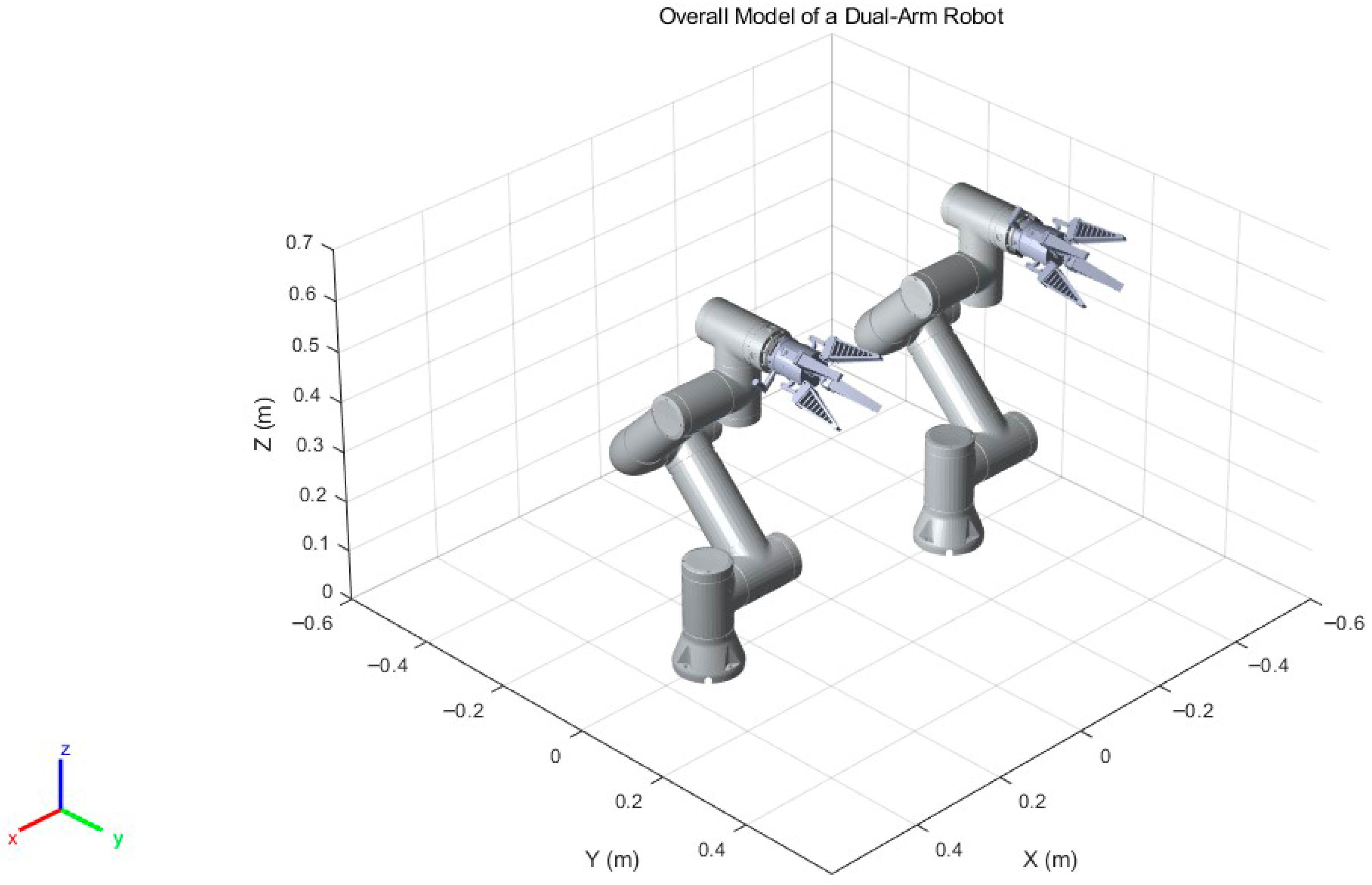

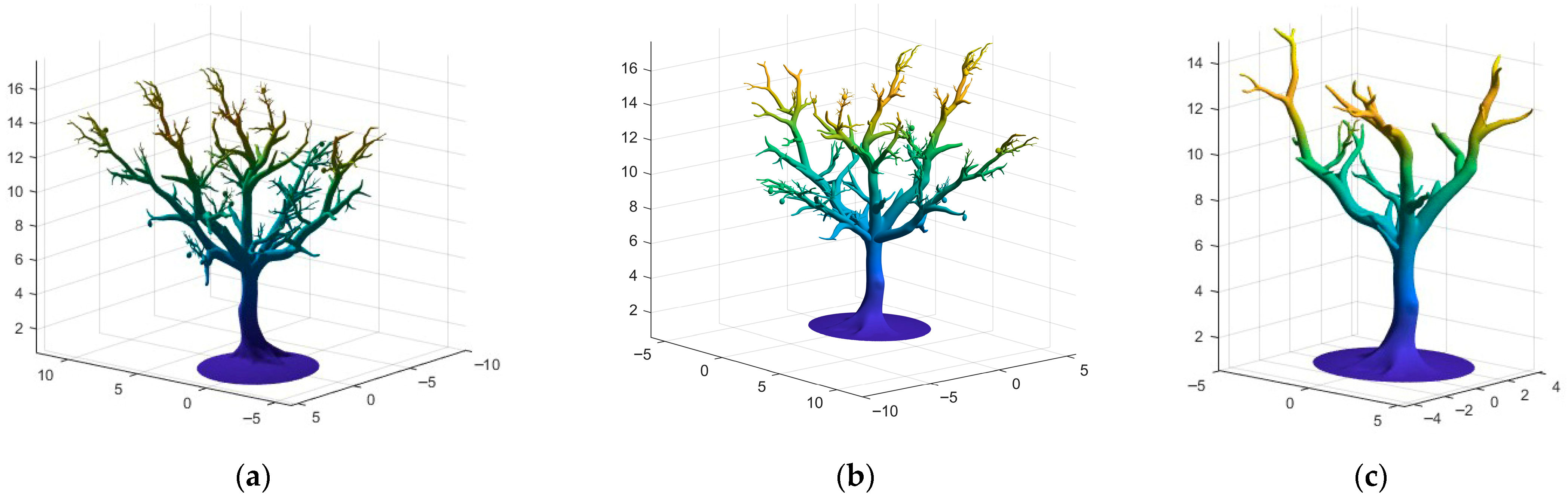

22] is an extension of the PPO algorithm for multi-agent environments, adopting a centralized training with decentralized execution framework. Agents share information during training and make independent decisions during execution to achieve collaborative work. This study employs the Multi-Agent Proximal Policy Optimization (MAPPO) framework within a deep reinforcement learning context for a dual-arm apple harvesting robot. By jointly training the policy and value networks, we optimize the cooperative control strategy between the two arms, enabling enhanced collaborative performance. A physical simulation model of the robot developed for this research is presented in

Figure 1.

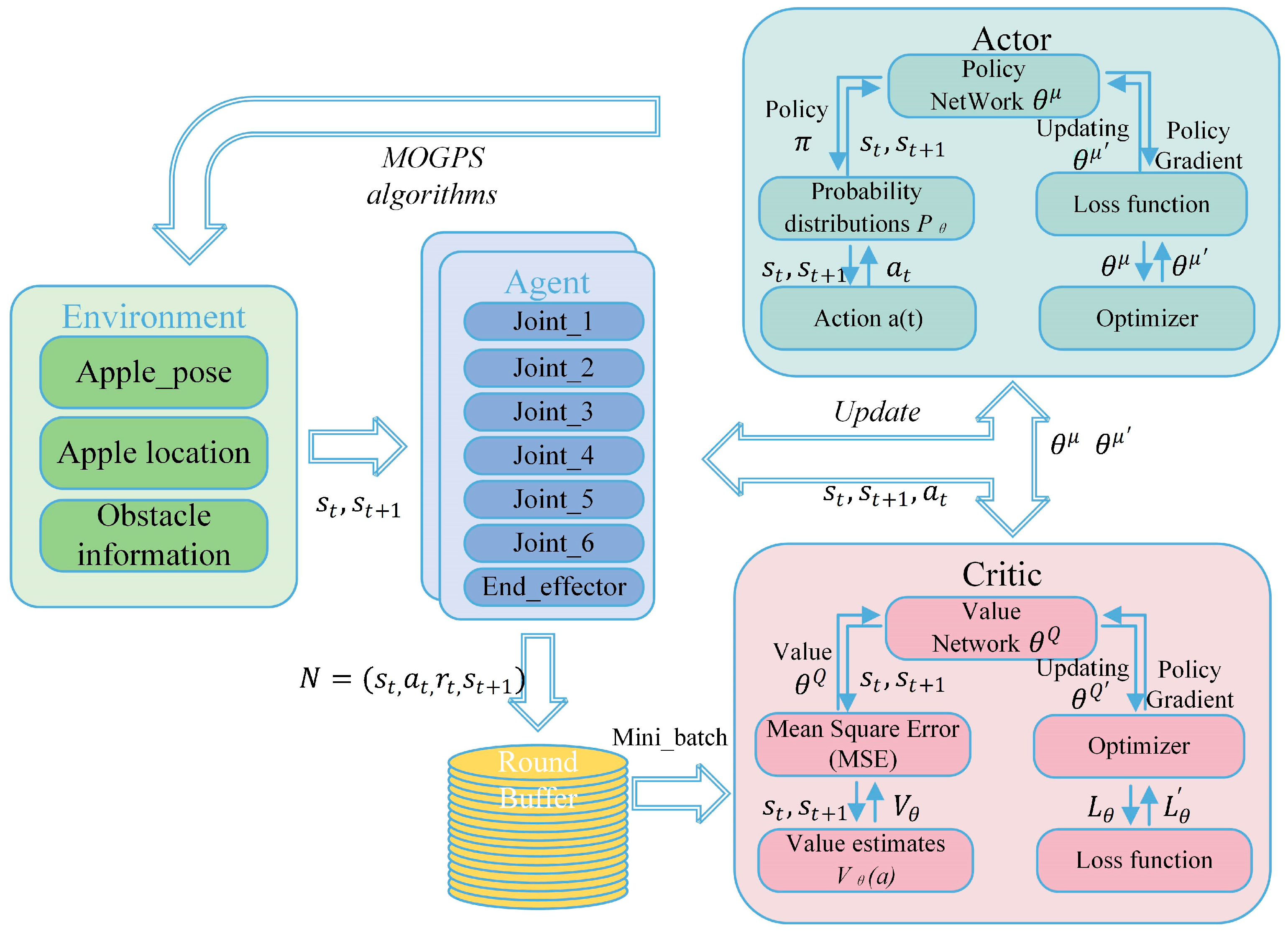

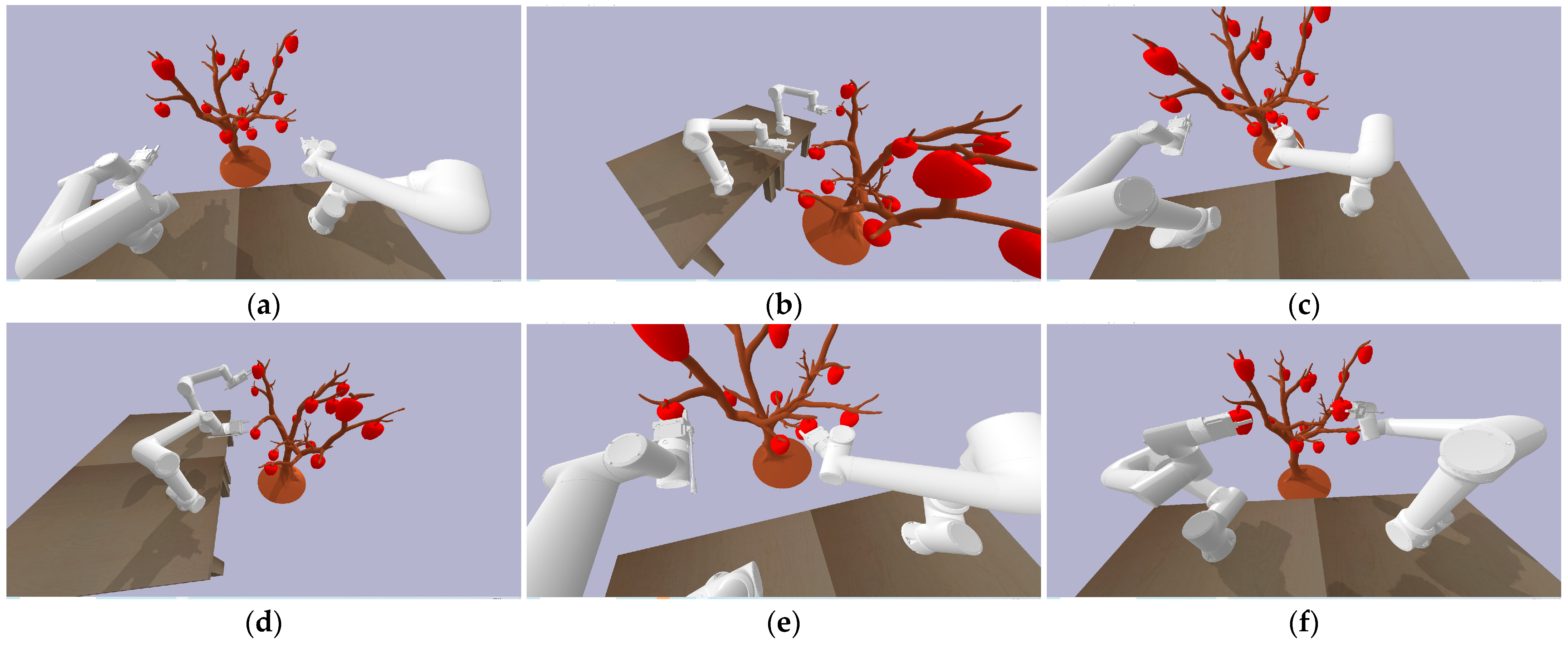

Within the MAPPO-MOGPS framework, the dual-arm picking robot system continuously monitors the operational environment to obtain the state of the target objects (apples, obstacles, etc.) at time $t$, as shown in Figure 2. This state consists of the position and orientation of the apples, as well as information about the obstacles. In the network architecture, two independent agents represent the left arm and the right arm, respectively. At time $t$, these agents read the joint state information of the dual-arm robot, which includes joint coordinates, joint angles, and the coordinates and rotation pose of the end effector. The joint state information is then integrated into the environmental state. Based on the current state information, the value of the actions produced is evaluated.

The dual-arm agent receives action commands from the Actor network and produces the next state through interaction with the environment. The resulting experience tuple is stored in the episode buffer. Meanwhile, the cumulative reward is used to calculate the return for each time step in the trajectory, and the Critic network is used to estimate the value function for each state. The generalized advantage estimation (GAE) function is shown in Equation (1).

Here, $\delta$ is the temporal difference error for each agent at each time step; $\gamma$ is the discount factor used to balance immediate rewards and future rewards; and $\lambda$ is the GAE hyperparameter used to control the bias-variance trade-off in advantage estimation. The generalized advantage estimation function calculates the action advantage values and, by balancing the bias and variance in the estimation, provides stable and efficient directional guidance for the optimization of the policy network.
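The advantage computation described above can be sketched as follows. This is a minimal illustration of the standard GAE recursion for a single agent, not the authors' implementation; the function name `compute_gae` and the default values of `gamma` and `lam` are assumptions made for the example.

```python
import numpy as np

def compute_gae(rewards, values, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation for one agent over one episode.

    rewards: r_0 .. r_{T-1}
    values:  V(s_0) .. V(s_T), i.e. one extra bootstrap value at the end.
    gamma and lam are illustrative defaults, not values from the paper.
    """
    rewards = np.asarray(rewards, dtype=float)
    values = np.asarray(values, dtype=float)
    T = len(rewards)
    advantages = np.zeros(T)
    gae = 0.0
    for t in reversed(range(T)):
        # Temporal difference error: delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Recursive form of A_t = sum_l (gamma * lam)^l * delta_{t+l}
        gae = delta + gamma * lam * gae
        advantages[t] = gae
    returns = advantages + values[:-1]  # regression targets for the Critic
    return advantages, returns
```

Setting `lam` close to 0 reduces the estimate to the one-step TD error (low variance, higher bias), while `lam` close to 1 approaches the Monte Carlo return (low bias, higher variance).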

The advantage function of each agent is used to calculate the objective function of the Actor network. By computing the gradient of the policy loss, the Actor network is guided to update its parameters in the direction of gradient ascent. At the same time, the objective function of the Critic network is calculated, and through the computation of the loss gradient, its parameters are optimized in the direction of gradient descent.

The optimization objective of the Actor network is:

where the policy update ratio of each agent is the probability ratio between the new and old policies, and a clipping operation limits the magnitude of this ratio. The entropy of the policy under each agent’s observation enters the objective as a regularization term, weighted by a parameter that controls the entropy coefficient, and the objective is averaged over the number of batches.
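A minimal numerical sketch of a clipped Actor objective of this kind is given below. It follows the standard PPO clipped surrogate with an entropy bonus; the function name `actor_objective` and the defaults `clip_eps=0.2` and `ent_coef=0.01` are illustrative assumptions, not values reported in this paper.

```python
import numpy as np

def actor_objective(logp_new, logp_old, advantages, entropy,
                    clip_eps=0.2, ent_coef=0.01):
    """Clipped surrogate objective plus entropy bonus, averaged over the batch.

    The returned value is to be maximized (gradient ascent).
    clip_eps and ent_coef are assumed hyperparameters for illustration.
    """
    # Policy update ratio pi_new(a|o) / pi_old(a|o), computed from log-probabilities
    ratio = np.exp(np.asarray(logp_new, dtype=float) - np.asarray(logp_old, dtype=float))
    adv = np.asarray(advantages, dtype=float)
    unclipped = ratio * adv
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * adv
    # Taking the minimum removes the incentive to move the ratio outside the clip range
    surrogate = np.minimum(unclipped, clipped)
    return float(np.mean(surrogate) + ent_coef * np.mean(entropy))
```

With shared parameters, the samples of both arms can simply be concatenated into the same batch before calling the function.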

The optimization objective of the Critic network is:

where the discounted reward serves as the regression target, the loss is averaged over the batch size, and the state-value function estimates the expected return of each state.
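For orientation, a Critic objective consistent with the quantities named above (discounted reward, batch size, state-value function) can be written as a mean squared error. The notation here is assumed for illustration ($\phi$ for the Critic parameters, $\hat{R}_k$ for the discounted reward of sample $k$, $B$ for the batch size) and may differ from the paper's own equation:

```latex
L(\phi) = \frac{1}{B} \sum_{k=1}^{B} \left( V_{\phi}(s_k) - \hat{R}_k \right)^{2}
```

Minimizing this loss by gradient descent drives the value estimate $V_{\phi}(s_k)$ toward the observed discounted return.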

The two agents that make up the dual-arm picking robot system are isomorphic; they share a set of network parameters, forming a compact agent model. Based on this, the dual-arm agent undergoes coordinated training through the policy network and the value function network to achieve synchronized decision-making and action execution. The parameter sharing mechanism promotes policy consistency between the dual-arm agents, and through the iteration of picking strategies between the arms, it enhances the collective learning efficacy and action coordination of the dual-arm robot picking system.

2.2. Dual-Arm Dynamic Collision Assessment Strategy

In the complex orchard picking environment, the dual-arm picking robot system has extremely stringent requirements for obstacle avoidance precision during picking operations. To address the dynamic obstacle avoidance between the two arms during the operation of the dual-arm robot, as well as the static obstacle avoidance with branch obstacles, a dual-arm dynamic collision assessment strategy (DDC) based on the MAPPO reinforcement learning framework is proposed. By kinematically modeling the joints of the dual arms and detecting and evaluating the risk of collision between the arms in real time, the obstacle avoidance picking path of the dual-arm robot is optimized.

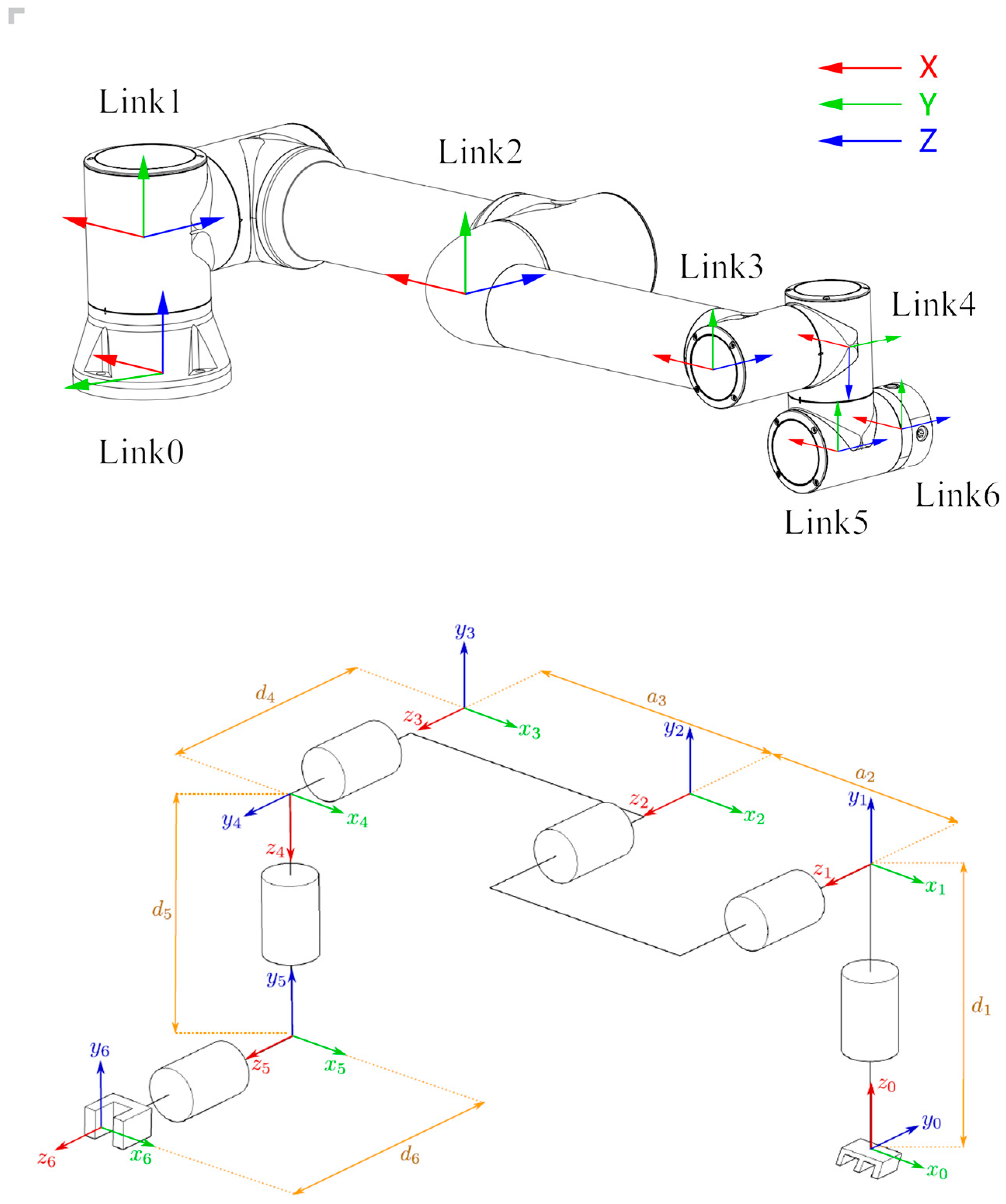

Using the DH modeling parameters shown in Table 1, the transformation matrix for joint coordinates is constructed as shown in Figure 3, and the positions and orientations between the joints of the dual-arm robot are calculated as shown in Equation (4). Through the kinematic analysis of these parameters, a series of homogeneous transformation matrices is obtained to describe the entire kinematic chain of the robot from the base to the end effector.

In the DH parameters, $\theta_i$, $a_i$, $d_i$, and $\alpha_i$ denote the joint angle, link length, joint distance, and link twist angle, respectively.
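The chaining of homogeneous transformation matrices can be sketched as follows. The example assumes the standard (distal) DH convention; the paper does not state which convention Table 1 uses, so the matrix layout, the function names, and the parameter order `(theta, d, a, alpha)` are assumptions for illustration.

```python
import numpy as np

def dh_transform(theta, d, a, alpha):
    """Homogeneous transform between consecutive link frames (standard DH convention)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ])

def forward_kinematics(dh_rows):
    """Chain the per-joint transforms; returns the base-to-frame transform of every joint."""
    T = np.eye(4)
    frames = []
    for theta, d, a, alpha in dh_rows:
        T = T @ dh_transform(theta, d, a, alpha)
        frames.append(T.copy())
    return frames
```

The translation column `frames[k][:3, 3]` gives the position of joint frame `k` in the base frame, which is the quantity needed for the distance checks between the two arms.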

Based on the kinematic analysis of the aforementioned dual-arm picking robot, a dual-arm dynamic obstacle avoidance assessment strategy is introduced. By analyzing the Euclidean distance between the robot’s end effector and the apples, as well as between the joints of the dual arms, the collision risk value is calculated to evaluate the robot’s action decisions in real-time as shown in Equation (10), and accordingly, the reward distribution is dynamically adjusted.

Based on the dynamic model of the dual-arm robot and the picking environment model, the collision risk for apple picking is calculated by predicting future states. Obtain the current state

of the dual-arm robot (joint angles, angular velocities, angular accelerations) and the state of the environment (the positions of apples and branches), and predict the future state

after the dual-arm performs the action

by using the state transition model

. After obtaining the future joint states of the dual-arm robot, the potential for dynamic collisions between the arms and obstacles, as well as between the joints of the dual arms, is assessed based on the distances between them. In the MAPPO network, the expected collision risk

is calculated based on real-time predictions of future states and collision detection results. As shown in

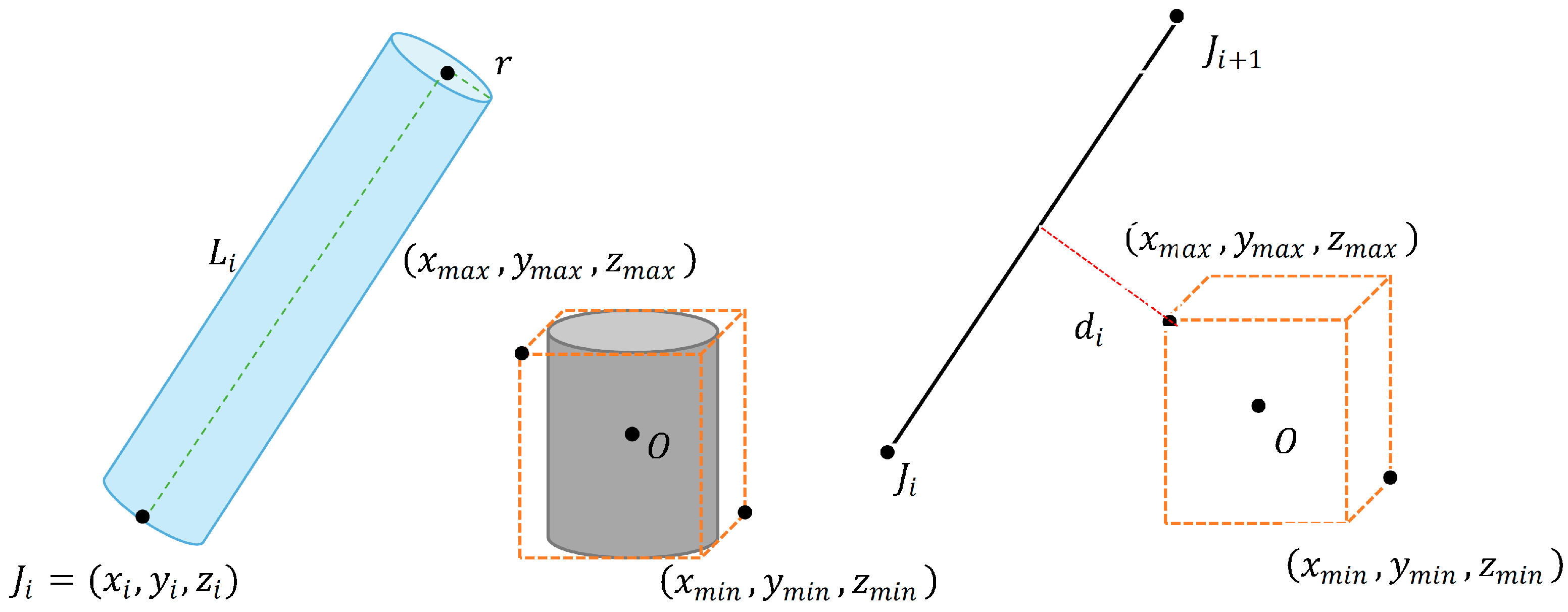

Figure 4, for the sake of description, obstacles are defined as:

In collision detection algorithms, an axis-aligned bounding box model is employed to approximate obstacles for improved computational efficiency. This cuboid is uniquely defined by its minimum and maximum corner coordinates, which simplifies subsequent calculations

At time step $t$, consider the coordinate of a given robot arm joint and the point on the obstacle that is closest to the robot arm. Two vectors are then defined: the direction vector of the arm link at that joint, and the vector from the nearest point of the tree-branch obstacle to the joint. The shortest distance from the obstacle to the joint is the magnitude of the component of the second vector that is perpendicular to the link direction, that is, what remains after subtracting its projection onto the direction vector.
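A minimal geometric sketch of this distance computation is shown below. It assumes that the nearest obstacle point is obtained by clamping against the axis-aligned bounding box and that the link is treated as an infinite line through two consecutive joints; both function names are hypothetical.

```python
import numpy as np

def closest_point_on_aabb(p, box_min, box_max):
    """Closest point of an axis-aligned box to point p (coordinate-wise clamp)."""
    return np.minimum(np.maximum(p, box_min), box_max)

def perpendicular_distance(joint, next_joint, obstacle_point):
    """Length of the component of (joint - obstacle_point) perpendicular to the link.

    Note: the link is treated as an infinite line here; a segment-based
    distance would additionally clamp the projection to the link length.
    """
    joint = np.asarray(joint, dtype=float)
    next_joint = np.asarray(next_joint, dtype=float)
    obstacle_point = np.asarray(obstacle_point, dtype=float)
    link_dir = next_joint - joint
    link_dir = link_dir / np.linalg.norm(link_dir)   # unit direction vector of the link
    v = joint - obstacle_point                       # obstacle point -> joint
    parallel = np.dot(v, link_dir) * link_dir        # projection onto the link direction
    return float(np.linalg.norm(v - parallel))       # perpendicular component
```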

The Euclidean distance between the coordinates of each pair of joints of the dual arms is then calculated, that is, between the $i$-th joint of the left arm and the $j$-th joint of the right arm, and the potential collision risk between the joints of the dual arms is computed from these distances.

The Expected Collision Risk at time step $t$ has an exponential relationship with the distance between the robot arm and the obstacle, and a coefficient is used to limit the collision risk threshold. A further coefficient adjusts the impact of the collision risk assessment on the total reward, so that the reward function depends not only on the task completion status but also on the collision risk assessment based on the robot’s current state. The reward function is adjusted dynamically according to this assessment, so that the robot can optimize the obstacle-avoiding picking path during the harvesting process.
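The relationship between clearance, risk, and reward can be illustrated with a small sketch. The exact functional form is not recoverable from the text, so the exponential decay `exp(-k * d)`, the additive penalty, and the coefficients `k` and `beta` are all assumptions chosen only to show the mechanism.

```python
import math

def expected_collision_risk(distances, k=5.0):
    """Assumed form: risk decays exponentially with the smallest clearance (metres).

    distances: arm-to-obstacle and joint-to-joint clearances at the predicted state.
    k: hypothetical coefficient controlling how quickly the risk falls off.
    """
    d_min = max(min(distances), 0.0)
    return math.exp(-k * d_min)

def shaped_reward(task_reward, distances, k=5.0, beta=1.0):
    """Task reward minus a risk penalty; beta scales the influence of the risk term."""
    return task_reward - beta * expected_collision_risk(distances, k)
```

Under this assumed form the penalty is largest at contact (risk of 1) and becomes negligible once the smallest clearance is several multiples of `1 / k`.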

2.3. Multi-Target Dynamic Allocation Strategy

In the process of task allocation for dual-arm robots performing apple picking tasks in multi-target operational areas, it is necessary to consider the non-uniformity of fruit distribution within the dual-arm picking robot’s operational unit and the heterogeneity of the complexity of the working environment. Based on the greedy algorithm, a Multi-Objective Greedy Picking Strategy is proposed. This strategy divides the picking domain of the dual-arm robot into separate working areas and collaborative working areas. Within the independent working space, the objective function of the algorithm includes multiple dimensions. When determining the order of apple picking, various factors such as picking distance, obstacle influence, and the spatial distribution of the fruit are comprehensively considered.

Let the position of the robotic arm’s end effector and the position of the $i$-th apple both be expressed in the world coordinate system. The picking distance is the Euclidean distance between these two positions.

Using vector directional projection analysis, the proportion of the projection plane occupied by obstacles along the direction of the projection vector is quantified to assess how obstacle-free the path from the end effector of the dual-arm manipulator to the target fruit is. When formulating the obstacle avoidance strategy, priority should be given to maximizing the efficiency of obstacle avoidance to ensure the optimization of the picking path and the safety of the operation, as shown in Equation (13). The occupation ratio relates the projected area of an obstacle on the projection plane in a specific projection direction to the total area of the projection plane.

The distribution pattern of apples within the workspace is analyzed, the apple density in the space is calculated, and the picking priority of different areas is determined from the results. This allows the dual-arm picking robot to prioritize visiting areas with high fruit density, reducing the empty travel distance of picking and enhancing the efficiency of path planning and resource utilization in the overall picking operation. The fruit density of the $k$-th region is determined by the number of fruits within that region and the volume of the region.

To integrate three metrics of different dimensions (distance, obstacle avoidance strategy, and density) into a unified score for target ranking, a weighted summation method is employed to calculate the comprehensive score for each potential target, as shown in Equation (15). The distance and density enter the score as Min–Max normalized values, and each of the three terms has its own weight coefficient. The determination of the weight coefficients is based on task priority: in this work, priority is given to ensuring operational safety and efficiency. The final weight values were therefore obtained through grid search.
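The weighted scoring can be sketched as below. The sign conventions (shorter distance and lower obstacle occupancy score higher, higher density scores higher), the requirement that the weights sum to 1, and the example weights `(0.3, 0.4, 0.3)` are assumptions for illustration; they are not the values obtained by the authors' grid search.

```python
import numpy as np

def min_max(x):
    """Min-Max normalization to [0, 1]; returns zeros if all values are equal."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    return np.zeros_like(x) if span == 0 else (x - x.min()) / span

def comprehensive_scores(distances, occupancy, densities, weights=(0.3, 0.4, 0.3)):
    """Weighted sum of distance, obstacle, and density terms for each candidate apple.

    occupancy is assumed to be an obstacle occupation ratio already in [0, 1].
    The weights are hypothetical placeholders that sum to 1.
    """
    w_d, w_o, w_rho = weights
    assert abs(w_d + w_o + w_rho - 1.0) < 1e-9
    d_hat = min_max(distances)
    rho_hat = min_max(densities)
    occ = np.asarray(occupancy, dtype=float)
    # Closer, less occluded targets in denser regions receive higher scores
    return w_d * (1.0 - d_hat) + w_o * (1.0 - occ) + w_rho * rho_hat
```

A greedy picker would then repeatedly select `np.argmax` of the scores, remove that target, and recompute the scores from the new end-effector position.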

Under the multi-objective greedy grasping strategy, the dual-arm robot determines the grasping priority for spatially non-uniformly distributed apples based on their comprehensive scores. As illustrated in Figure 5, targets located within the shared workspace of both arms are dynamically assigned to either the left or right arm for execution. The specific allocation rule is as follows: for the same target, the comprehensive scores derived from its interaction models with the left and right arms are compared, and the arm with the higher score is granted the grasping privilege for that target.
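The allocation rule for the shared workspace amounts to a per-target comparison, sketched here. The function name and the dictionary-based interface are hypothetical, and the tie-break in favour of the left arm is an arbitrary choice because the text does not specify one.

```python
def allocate_shared_targets(left_scores, right_scores):
    """Assign each shared-workspace target to the arm with the higher score.

    left_scores / right_scores: dicts mapping target id -> comprehensive score
    computed with respect to the left and right arm, respectively.
    """
    assignment = {}
    for target_id in left_scores:
        left, right = left_scores[target_id], right_scores[target_id]
        # Tie-break in favour of the left arm (arbitrary; the source gives no rule)
        assignment[target_id] = "left" if left >= right else "right"
    return assignment
```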

In summary, this study establishes a hierarchical decision-making system for dual-arm apple harvesting. The system comprises three core components: First, at the perception and decision-making layer, the Multi-Objective Greedy Picking Strategy Selection Mechanism comprehensively evaluates fruit spatial distribution, path length, and occlusion status to generate an optimal target assignment sequence. Subsequently, this command is transmitted to the control and execution layer, where the MAPPO algorithm, leveraging parameter sharing, decodes the abstract goals into specific joint control strategies for the dual-arm agents, accomplishing dynamic grasping tasks. Finally, the Dynamic Collision Risk Term is embedded into the motion planning process as an independent safety layer, enabling real-time trajectory adjustment through continuous risk assessment to achieve proactive obstacle avoidance. Collectively, these components ensure the high efficiency and robustness of the dual-arm apple harvesting operation.

4. Experiment

To validate the transferability and effectiveness of the MAPPO-MOGPS from simulation to real-world scenarios after training, this study constructed a dual-arm robotic picking experimental platform. The core hardware configuration of the platform consists of two 6-DOF collaborative robotic arms (Fr3) and an Intel RealSense D455 depth camera. The specific parameters of these components are provided in Table 6. In this setup, the robotic arms serve as the actuators, while the depth camera is responsible for environmental perception and target localization. The software system is built on the Robot Operating System framework, integrating modules for visual perception, pose estimation, motion planning, and control, which achieves a complete closed loop for the algorithm from simulation to physical hardware. Furthermore, to minimize damage to the fruit during the picking process, the end-effector employs an adaptive gripper featuring compliant gripping characteristics.

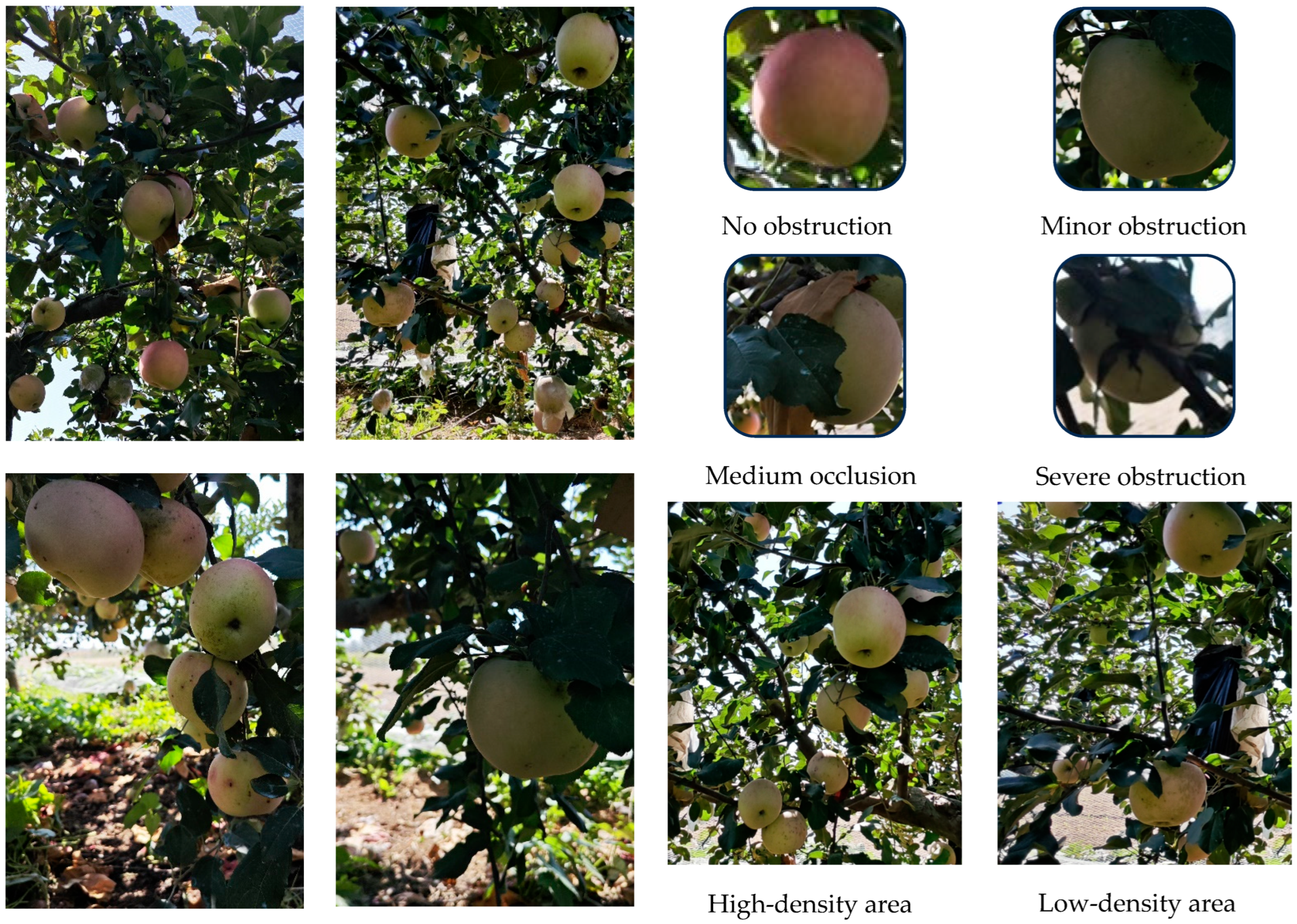

To accurately identify apple targets and obtain their spatial pose information, this study employs a depth camera to capture RGB images of apples and trains a model on the image dataset based on an improved YOLO11s neural network architecture. Examples of the training data are shown in Figure 10. This system integrates the SCH-YOLO11s [31] instance segmentation network to achieve simultaneous segmentation of the apple body and the calyx region. The network incorporates several structural improvements: embedding the C3k2_SimAM module to enhance feature extraction capabilities, using the CMUNeXt module to replace standard convolution operations in the backbone network to improve the model’s expressive efficiency, and fusing a Histogram Transformer (HTB) into the C2PSA module, thereby enhancing its adaptability to complex textures and lighting variations.

After obtaining the segmentation results, the 3D center point of the apple’s main body is fitted using the least squares method based on its point cloud data. Simultaneously, the geometric center of the calyx region is calculated by averaging its point cloud data. The spatial vector connecting the fruit center and the calyx center is then used to define the apple’s growth axis, which in turn determines its orientation and pose. The final, complete pose data output provides a precise input for subsequent robotic arm grasping path planning.
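The pose estimation step can be sketched as follows. The text states only that the fruit center is fitted by least squares; modeling the apple body as a sphere is an assumption made here, as are the function names and the choice of pointing the axis from the fruit center toward the calyx.

```python
import numpy as np

def fit_sphere_center(points):
    """Linear least-squares sphere fit (assumed apple model); returns the center.

    Uses |p|^2 = 2 c . p + (r^2 - |c|^2), which is linear in the center c
    and in the constant term.
    """
    pts = np.asarray(points, dtype=float)
    A = np.hstack([2.0 * pts, np.ones((len(pts), 1))])
    b = np.sum(pts ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    return sol[:3]

def growth_axis(body_points, calyx_points):
    """Fruit center plus unit vector from the fruit center toward the calyx center."""
    center = fit_sphere_center(body_points)
    calyx_center = np.mean(np.asarray(calyx_points, dtype=float), axis=0)
    axis = calyx_center - center
    return center, axis / np.linalg.norm(axis)
```

Because a depth camera sees only the visible side of the fruit, a fit of this kind is sensitive to noise on partial point clouds, which is one plausible contributor to angular error in the estimated axis.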

The evaluation of the SCH-YOLO11s model on an apple dataset demonstrates excellent performance. For the segmentation tasks, it achieved mean Average Precision of 97.1% for sound apples and 94.7% for their calyxes. Furthermore, in the vision-based pose estimation task, the model also exhibited high accuracy, with a mean angular error of 12.3° calculated over 100 independent tests.

To determine the relative pose of the apple in the dual-arm robot base frame, this study employs a hand-eye calibration method to establish the transformation relationship between the depth camera coordinate system and the robot base coordinate system. During the calibration process, a checkerboard calibration plate is attached to the robot end-effector, and the robot is controlled to assume multiple distinct poses. At each pose, stereo images are captured synchronously while the corresponding end-effector pose data in the base frame is recorded. Under the Eye-to-Hand configuration, the pose of the calibration plate in the camera coordinate system at each position is obtained through image processing. Based on multiple pose correspondences, a transformation matrix comprising rotation and translation is solved, which defines the fixed spatial transformation from the camera coordinate system to the robot base coordinate system. After calibration, this matrix enables accurate conversion of the apple’s initial pose measured in the camera coordinate system to the robot base coordinate system, thereby obtaining its three-dimensional position and orientation in the robot world coordinate system and providing a precise target localization basis for subsequent grasping tasks.
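Once the calibration matrix is available, converting a measurement from the camera frame to the robot base frame is a single homogeneous multiplication. The sketch below assumes a 4×4 matrix `T_base_cam` that maps camera coordinates to base coordinates; the function names are hypothetical.

```python
import numpy as np

def camera_to_base(T_base_cam, p_cam):
    """Transform a 3D point from the camera frame to the robot base frame."""
    p = np.append(np.asarray(p_cam, dtype=float), 1.0)  # homogeneous coordinates
    return (T_base_cam @ p)[:3]

def rotate_to_base(T_base_cam, v_cam):
    """Transform a direction (e.g. the growth axis): rotation only, no translation."""
    return T_base_cam[:3, :3] @ np.asarray(v_cam, dtype=float)
```

Positions use the full transform, whereas direction vectors such as the apple's growth axis must use only the rotation block, otherwise the translation would corrupt the orientation.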

During the harvesting process, collaborative optimization of multi-target apple picking paths is achieved using the MAPPO-MOGPS algorithm. In this study, based on the acquired 3D pose information of the apples, a multi-objective optimization function is constructed, which integrates Euclidean distance, spatial distribution density, and the degree of occlusion by obstacles.

For quantitative evaluation, a spatial grid modeling method is employed to process the 3D poses of the identified apples. By extracting the coordinate extrema of each apple along the X, Y, and Z axes, a cubic bounding box that covers all targets is established and then discretized into uniform unit grids. The spatial distribution density is quantified based on the number of apples within each grid cell. Simultaneously, an occlusion weight coefficient is calculated for each apple to comprehensively assess its field-of-view completeness and picking accessibility. Based on these multi-dimensional evaluation results, a prioritized sequence of picking targets is generated.
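The grid-based density measure can be sketched as follows. The cell size is a free parameter that the text does not specify, so `cell_size` and the function name are assumptions; the sketch returns, for each apple, the number of apples that share its grid cell.

```python
import numpy as np

def grid_density(positions, cell_size):
    """Count of apples in the grid cell that contains each apple.

    positions: (N, 3) apple centers; the grid origin is the minimum corner
    of the bounding box that covers all targets.
    """
    pts = np.asarray(positions, dtype=float)
    origin = pts.min(axis=0)
    idx = np.floor((pts - origin) / cell_size).astype(int)  # integer cell index per apple
    _, inverse, counts = np.unique(idx, axis=0, return_inverse=True, return_counts=True)
    return counts[inverse.ravel()]
```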

The hardware system acquires joint angle information through the real-time collection of robotic arm joint encoder data, which is then fed into the pre-trained MAPPO-MOGPS algorithm. Based on historical picking experience and the current environmental state, the algorithm generates collaborative motion trajectories for the dual arms. These control commands are transmitted to the low-level driver modules of the robotic arms via the ROS (Robot Operating System) communication architecture to achieve precise motion control. Upon completion of the picking task, the harvested fruits are gathered by an automated collection system. The experimental workflow is illustrated in Figure 11.

To systematically evaluate the algorithm’s performance, experiments were conducted in an indoor environment with a simulated fruit tree, where simulated apples were randomly placed to mimic their natural growth distribution. For 10 target apples, four sets of 50 repetitive picking trials were performed in both the simulation environment and the physical system. The key performance metrics from these experiments were recorded and subsequently analyzed.

As shown in Table 7, this study observed a performance gap between the simulation environment and the physical system: while the success rate in the simulation reached 92.3%, the success rate in the physical experiments under moderate occlusion conditions was 81.4%, accompanied by numerous collisions with branches. This discrepancy primarily stems from two factors: the perception-reality gap and model mismatch.

At the perception level, the simulation provides ideal state information, whereas in the physical system, localization errors from the visual perception module are propagated to the motion planning layer. This causes pose deviations in the robotic arm as it approaches the target, leading to contact with surrounding branches, which, in turn, reduces the picking success rate and increases the risk of collisions. At the system modeling level, the dynamic models used in the simulation struggle to fully replicate complex physical properties of the real world, such as the flexibility of branches and the connection strength of fruit stems. This thereby limits the generalization performance of the purely simulation-trained strategy on the physical platform.

Nevertheless, despite the aforementioned errors and uncertainties, the system still achieved a picking success rate of over 81% and maintained zero collisions between the dual arms throughout; the collisions that did occur were mainly between the robotic arm and branch obstacles. This demonstrates the effectiveness and robustness of the MAPPO-MOGPS algorithm in maintaining the safety of dual-arm collaborative operations in complex scenarios.

In summary, the physical experiments indicate that the primary bottlenecks of the current system are concentrated in visual perception accuracy and the capability for modeling and avoiding fine-grained obstacles. Future work will focus on enhancing perceptual robustness through multi-sensor fusion and performing closed-loop optimization of the simulation model using real physical interaction data, to further advance the cross-domain transfer performance from simulation to reality.

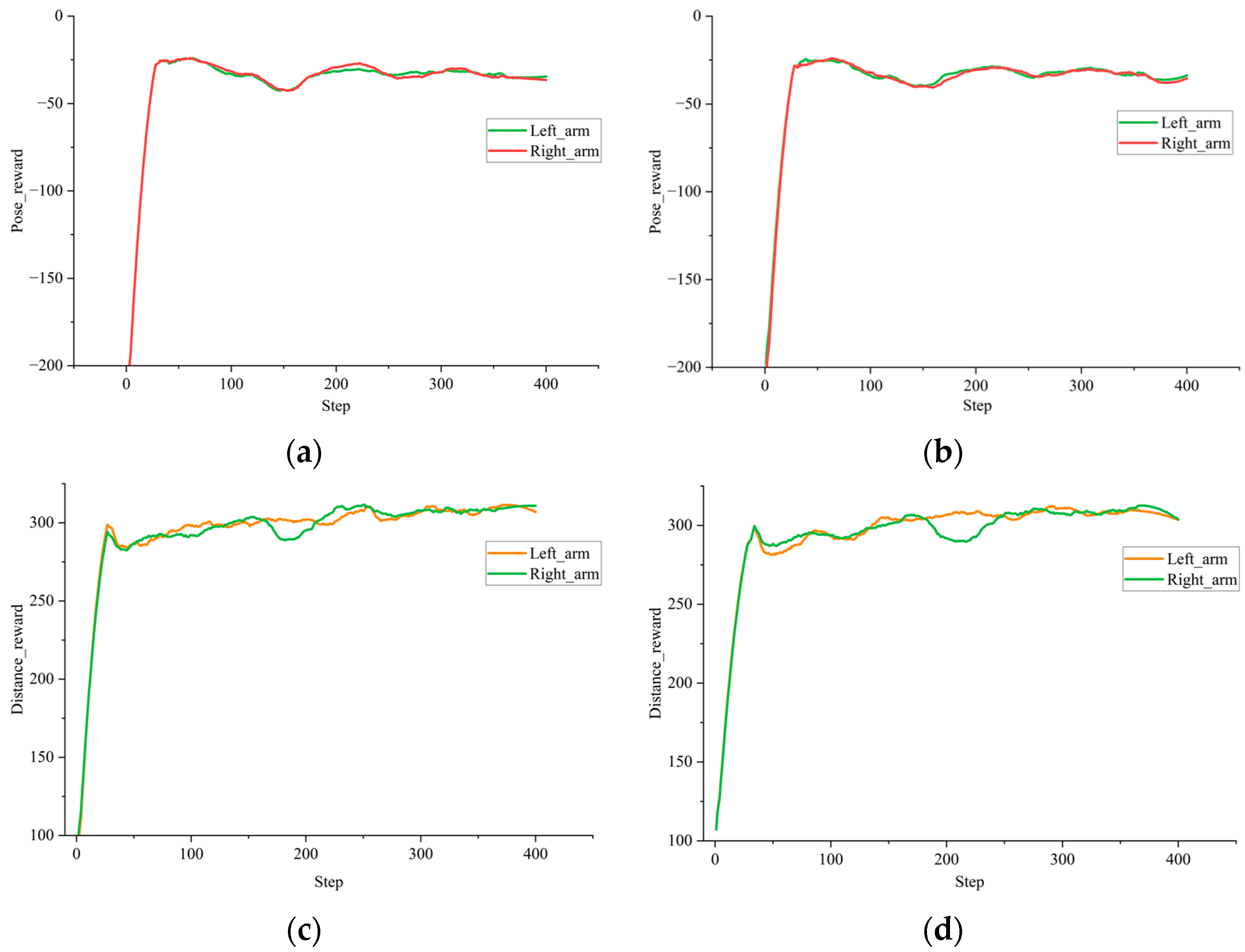

5. Results and Discussion

Simulation results demonstrate that the MAPPO-MOGPS proposed in this paper exhibits strong overall performance in the dual-arm apple harvesting task. According to statistical t-test results, the obstacle avoidance success rate reaches 92.3%, the mean positioning error of the end-effector is controlled within 0.014 m, and path planning efficiency is improved by 15.11% compared to baseline methods, fully validating the algorithm’s superiority in simulation environments. In practical dynamic harvesting validation, the success rate for apples in the outer canopy reaches 87.62%, with an average single-fruit harvesting time of 5.62 s, further proving the strategy’s good generalization capability in unobstructed scenarios.

Although the strategy performs well in simulation and simple scenarios, its performance in highly occluded complex environments still requires improvement, primarily due to challenges in simulation modeling and system implementation. At the simulation modeling level, to balance computational efficiency and realism, necessary simplifications were made to certain key physical properties: for instance, the flexible dynamics of fruit stems and branches were simplified as rigid bodies, ignoring elastic deformations in real environments; the visual perception module was built on ideal rendering without fully simulating disturbances such as sensor noise and variable lighting. Additionally, the contact model in the physics engine, as an approximation of real-world interactions, has inherent deviations in force transmission and friction characteristics. While these simplifications ensure training efficiency, they inevitably introduce systematic errors in the simulation-to-reality transfer. At the system implementation level, minor positioning errors in the visual perception stage are transmitted and amplified through the control chain, ultimately leading to pose deviations between the end-effector and the ideal harvesting point.

Furthermore, the current method still requires improvement in model uncertainty and risk assessment: for example, the one-step prediction model does not explicitly consider sensor noise and model mismatch; risk assessment also does not incorporate key factors such as relative velocity and time to collision. In addition, the biomechanical interactions during fruit harvesting and their potential damage have not been directly monitored or evaluated.

Based on the above analysis, future research will focus on the following directions: First, by integrating multi-sensor information and robust image algorithms, the perception accuracy of the system under complex conditions will be enhanced. Second, the introduction of flexible body dynamics and random disturbances into the simulation will help build a higher-fidelity virtual training environment. Finally, cross-crop adaptability studies will be expanded by establishing a physical parameter database for different fruits and optimizing grasping strategies, promoting the application of harvesting technology in diversified orchard scenarios. The advancement of these efforts will significantly enhance the operational robustness of the harvesting system in real-world complex environments and accelerate the transition of agricultural robotics from theoretical research to industrial application.