Abstract

Agricultural development is one of the most essential needs worldwide. In Norway, grain production depends primarily on geological and biological features. Existing research is limited to regional-scale yield predictions using artificial intelligence (AI) models, which provide a holistic overview of crop growth. In this paper, the authors propose detecting several crop types at field scale and using this analysis to predict yield early in the growing season. The study utilises a data corpus comprising multi-temporal satellite images together with meteorological, geographical, and grain production data. The authors extract relevant vegetation indices from the satellite images and use field-area-specific features to build a field-based crop type classification model. The proposed model, consisting of a time-distributed network and a gated recurrent unit, classifies crop types with an accuracy of 70%. In addition, the authors show that attention-based multiple-instance learning models can learn from semi-labelled agricultural data and thus allow realistic early in-season predictions for farmers.

1. Introduction

In Norwegian agriculture, crop yields are significantly influenced by agro-climatic conditions, precipitation consistency, soil quality, and greenhouse gas emissions [1]. At the same time, the evolving dietary preferences of the global population challenge farmers to cultivate diverse, high-quality cereals that meet individual nutritional demands [2,3]. This study introduces AI-based methods for cereal classification, yield prediction, and quality validation, aiming to support sustainable agricultural practices in Norway by equipping farmers with data-driven insights. The investigation identifies the cereal types grown in Norway in order to build a practical framework for forecasting cereal yields on Norwegian farms, thereby giving farmers early or concurrent in-season insights.

Recent studies have employed remote sensing imagery and convolutional neural networks (CNNs) for regional crop yield forecasting. Sharma et al. [4], You et al. [5], and Russello and Shan [6] combined temporal satellite imagery with CNNs to produce precise crop yield forecasts, and further improved prediction accuracy by combining agricultural textual data with long short-term memory (LSTM) networks. Engen et al. (2021) [7,8] pioneered early-season crop yield forecasting in Norway, reporting significant accuracy improvements with low error rates during specific weeks of the growing season.

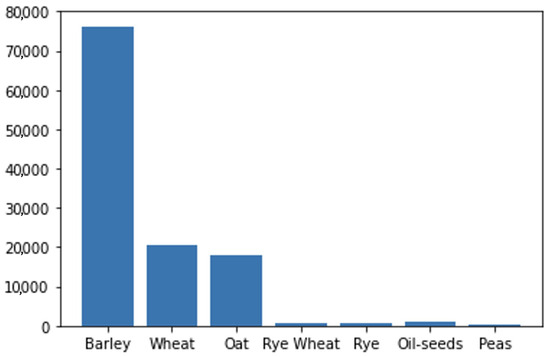

Furthermore, this research investigates crop type classification models. Given Norway's challenging agricultural climate, the diversity and extent of crops cultivated for consumption are limited. A substantial portion of arable land is dedicated to cereal production, mainly barley, wheat, oats, and various rye species [9], whereas earlier studies treated crop types in Norway more generally [10,11]. Kussul et al. [12] and Ji et al. [13] sought to overcome such limitations by feeding temporal satellite imagery into multi-dimensional CNNs. In contrast, Foerster et al. [14] validated the effectiveness of NDVI vegetation indices in differentiating twelve crop varieties, offering valuable insights for the authors' inquiry. By leveraging deep learning, the authors aim to advance precision agriculture in Norway and enhance its future sustainability [10].

1.1. Problem Statement and Hypotheses

Based on these observations, this manuscript underscores the importance of early yield forecasting in Norwegian agriculture and advocates AI models capable of precise pre-harvest predictions. Drawing on the work of Engen et al. (2021) [7], it stresses the value of early forecasts tailored to specific agricultural zones while acknowledging the limitations caused by delayed data acquisition. Accurate yield estimation requires knowing which crop is grown in each field, which in turn calls for an exploration of the range of cereals cultivated in Norwegian farming landscapes. To address this, a semi-labelled dataset was compiled from farmer-reported cereal information, although more detailed field-level data are evidently needed. This underscores the need for a robust cereal classification framework, presenting a novel, knowledge-driven methodology that benefits farmers and the broader agricultural AI research community. The work of Engen et al. therefore serves as the state-of-the-art approach on which the present research builds and against which it is compared. To further delineate the research problem, the authors formulate five hypotheses that are empirically tested in this study.

Hypothesis 1.

Features associated with sunlight exposure, growth temperature, ground state, and soil quality hold potential for enhancing prediction models aimed at improving grain yield forecasts.

Recent investigations using the state-of-the-art approach have demonstrated successful grain yield predictions for Norwegian farms. However, these predictions relied on a limited set of environmental features, and integrating additional relevant features promises to refine the state-of-the-art yield prediction models for Norway. In this study, the authors explore and pre-process new features, aligning them with the daily temporal resolution of the meteorological variables used in the aforementioned studies. Subsequently, the authors reconstruct and train deep learning models comparable to those employed in the state-of-the-art approach, incorporating these newly identified features. The outcomes of this extended investigation are detailed later in this manuscript, together with a comparison against the original grain yield predictions reported for the state-of-the-art approach. This paves the way for the second hypothesis, in which the same model architecture is evaluated on data spanning an additional growing season.
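
A minimal sketch of this alignment step follows, assuming that static features (such as a soil-quality score) are repeated for every day and that sparsely observed features (such as sunlight hours) are linearly interpolated between observation dates. All function and feature names are hypothetical and are not taken from the state-of-the-art implementation.

```python
from datetime import date, timedelta


def daily_range(start, end):
    """Return every calendar day from start to end, inclusive."""
    return [start + timedelta(days=i) for i in range((end - start).days + 1)]


def interpolate_daily(observations, days):
    """Linearly interpolate sparse {date: value} observations onto a daily grid.

    Days outside the observed period take the nearest observed value.
    """
    known = sorted(observations)
    values = []
    for day in days:
        if day <= known[0]:
            values.append(observations[known[0]])
        elif day >= known[-1]:
            values.append(observations[known[-1]])
        else:
            left = max(d for d in known if d <= day)
            right = min(d for d in known if d >= day)
            if left == right:  # an observation exists for this exact day
                values.append(observations[left])
            else:
                frac = (day - left).days / (right - left).days
                values.append(observations[left] + frac * (observations[right] - observations[left]))
    return values


def build_daily_features(days, daily_weather, sparse_features, static_features):
    """Return one feature dict per day.

    daily_weather:   {name: [value per day]}, already at daily resolution
    sparse_features: {name: {date: value}}, observed on some days only
    static_features: {name: value}, constant over the season (e.g. soil quality)
    """
    columns = {name: list(values) for name, values in daily_weather.items()}
    for name, obs in sparse_features.items():
        columns[name] = interpolate_daily(obs, days)
    for name, value in static_features.items():
        columns[name] = [value] * len(days)
    for name, col in columns.items():
        if len(col) != len(days):
            raise ValueError(f"feature {name!r} has {len(col)} values for {len(days)} days")
    return [{name: col[i] for name, col in columns.items()} for i in range(len(days))]


# Hypothetical example: five days in May 2020.
days = daily_range(date(2020, 5, 1), date(2020, 5, 5))
rows = build_daily_features(
    days,
    daily_weather={"precip_mm": [0.0, 2.5, 1.0, 0.0, 4.2]},
    sparse_features={"sunlight_h": {date(2020, 5, 1): 10.0, date(2020, 5, 5): 14.0}},
    static_features={"soil_quality": 3.0},
)
print(rows[2])  # {'precip_mm': 1.0, 'sunlight_h': 12.0, 'soil_quality': 3.0}
```

Linear interpolation is only one possible choice; features that change abruptly (for example after a farming activity) might instead be forward-filled.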

Hypothesis 2.

Extending the agricultural dataset to predict grain yields by incorporating an additional year of data samples is anticipated to result in improved predictive accuracy, highlighting the significance of longitudinal data collection.

Engen et al. identified the limited coverage of data across seasons as a notable constraint on the performance of their predictive models. Motivated by the findings of the first hypothesis, an alternative approach is proposed that adds new data samples rather than new features. This change is designed to strengthen the predictive capabilities of the models and to address the challenges inherent in crop monitoring in Norwegian agriculture. Accordingly, agricultural and meteorological data for the 2020 season will be acquired and processed in subsequent sections of this manuscript, strictly following the formatting conventions used for the features extracted from earlier seasons in the state-of-the-art investigations. After acquisition and pre-processing, the new dataset will be merged with the existing dataset and used to retrain the models. The subsequent evaluation will analyse performance metrics such as loss and accuracy. These findings are discussed in Section 3.1 and compared with the original findings of the state-of-the-art approach.
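
Merging the 2020 samples with the earlier seasons mainly requires that every sample carries exactly the same features in the same order. The sketch below, assuming samples are stored as Python dicts keyed by feature name, illustrates such a schema-checked merge; the function and feature names are hypothetical.

```python
def merge_seasons(existing, new_season, feature_order):
    """Append a new season's samples to earlier seasons after a schema check.

    existing, new_season: lists of {feature_name: value} dicts.
    feature_order: feature names used for earlier seasons, in model input order.
    Returns a list of rows whose values follow feature_order.
    """
    expected = set(feature_order)
    for i, sample in enumerate(existing + new_season):
        keys = set(sample)
        if keys != expected:
            raise ValueError(
                f"sample {i}: missing {sorted(expected - keys)}, unexpected {sorted(keys - expected)}"
            )
    # Emit every sample in the same column order so older and newer rows line up.
    return [[sample[name] for name in feature_order] for sample in existing + new_season]


# Hypothetical example: one sample from an earlier season and one from 2020.
earlier = [{"year": 2019, "precip_mm": 310.0, "yield_kg_per_1000m2": 480.0}]
season_2020 = [{"yield_kg_per_1000m2": 455.0, "year": 2020, "precip_mm": 295.0}]
rows = merge_seasons(earlier, season_2020, ["year", "precip_mm", "yield_kg_per_1000m2"])
print(rows)  # [[2019, 310.0, 480.0], [2020, 295.0, 455.0]]
```

Failing loudly on a mismatch is a deliberate choice: a silently reordered or missing feature would corrupt the retrained model without an obvious error.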

Hypothesis 3.

Integrating satellite imagery and vegetation indices into convolutional neural networks (CNNs) offers a promising avenue for achieving precise grain classification within agricultural fields.

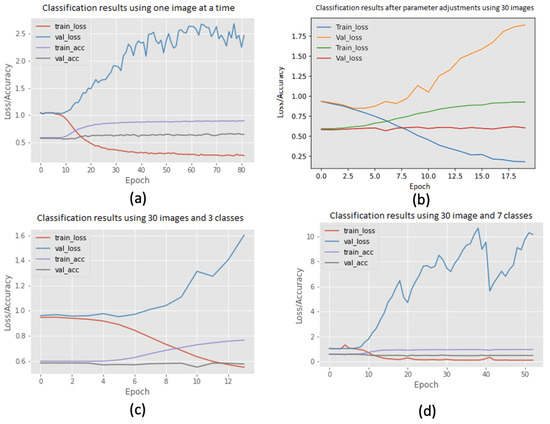

This research is motivated by several factors that call for crop classification and mapping techniques tailored to Norwegian agriculture, including the need to delineate field usage accurately and to enable early-season yield predictions. Following a review of the relevant literature on classification methods, feature engineering approaches, and performance benchmarks, a time-distributed CNN architecture is instantiated in the following sections. The proposed model takes raw satellite imagery together with derived vegetation indices as input and exploits the temporal dimension of the data. In parallel, ground truth class labels are generated for fields wherever feasible to enable supervised learning. The models are then trained on the combined dataset. The Results and Discussion, Section 2.2.1, presents the outcomes of the training process, reporting performance metrics and comparing the various methodological approaches.
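
To illustrate what "time-distributed" means here, the following NumPy sketch applies one shared per-frame encoder to every acquisition date and feeds the resulting sequence into a gated recurrent unit (GRU), the combination named in the abstract. The encoder is a deliberately simple stand-in (average pooling plus a linear layer) for a real CNN, the weights are random rather than trained, and all sizes are illustrative assumptions.

```python
import numpy as np


def frame_encoder(frame, w_proj):
    """Stand-in for a per-frame CNN: global average pooling over the spatial
    axes, then a linear projection with ReLU. (height, width, channels) -> (enc_dim,)"""
    pooled = frame.mean(axis=(0, 1))
    return np.maximum(pooled @ w_proj, 0.0)


def time_distributed(fn, sequence, *params):
    """Apply the same function, with shared weights, to every time step."""
    return np.stack([fn(frame, *params) for frame in sequence])


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_last_state(inputs, params):
    """Run a single-layer GRU (biases omitted) over (time, features) inputs
    and return the final hidden state."""
    w_z, u_z, w_r, u_r, w_h, u_h = params
    h = np.zeros(u_z.shape[0])
    for x in inputs:
        z = sigmoid(x @ w_z + h @ u_z)              # update gate
        r = sigmoid(x @ w_r + h @ u_r)              # reset gate
        h_cand = np.tanh(x @ w_h + (r * h) @ u_h)   # candidate state
        h = (1.0 - z) * h + z * h_cand
    return h


rng = np.random.default_rng(0)
# Hypothetical sizes: 6 acquisition dates, 16x16 pixel field patches,
# 12 spectral bands + 10 vegetation indices, 4 cereal classes.
T, H, W, C = 6, 16, 16, 22
enc_dim, gru_dim, n_classes = 32, 24, 4

images = rng.random((T, H, W, C))
w_proj = rng.normal(scale=0.1, size=(C, enc_dim))
gru_params = [rng.normal(scale=0.1, size=shape)
              for shape in [(enc_dim, gru_dim), (gru_dim, gru_dim)] * 3]
w_out = rng.normal(scale=0.1, size=(gru_dim, n_classes))

features = time_distributed(frame_encoder, images, w_proj)  # (T, enc_dim)
h_last = gru_last_state(features, gru_params)               # (gru_dim,)
logits = h_last @ w_out
probs = np.exp(logits - logits.max())
probs /= probs.sum()
print(probs.round(3))
```

Sharing the encoder weights across dates keeps the parameter count independent of the number of acquisitions, while the GRU carries information from earlier dates forward to the class prediction.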

Hypothesis 4.

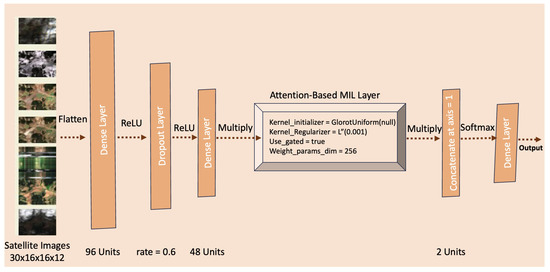

Multi-class attention-based deep multiple-instance learning (MAD-MIL) can potentially leverage entire field datasets, augmenting crop classification accuracy.

A further reason for crop classification is the need for comprehensive records of the crops planted and cultivated in individual fields. The information currently available yields, at best, a semi-labelled dataset. Such datasets, with incomplete label information, require specialised semi-supervised learning methods, particularly for field classification in Norwegian agriculture. To leverage the entire dataset, a semi-supervised learning framework, namely multi-class attention-based deep multiple-instance learning (MAD-MIL), is implemented in this study. This methodology aims to outperform CNN-based approaches by exploiting the inherent structure of the dataset. Section 3 presents a comparative analysis of the performance differences between the MAD-MIL approach and its CNN-based counterpart.
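
The core of attention-based multiple-instance learning is a pooling step that weights the instances in a bag by learned attention scores, so that only a bag-level label is needed during training. The sketch below implements the attention pooling of Ilse et al. (2018) followed by a multi-class softmax classifier; it is not the authors' MAD-MIL implementation, the weights are random, and what constitutes a bag and an instance in this study is left open.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()


def attention_mil_pool(instances, V, w):
    """Attention-based MIL pooling (Ilse et al., 2018).

    instances: (n_instances, d) embeddings belonging to one bag
    V: (d, l) projection matrix, w: (l,) attention vector
    Returns the bag embedding (d,) and the attention weights (n_instances,).
    """
    scores = np.tanh(instances @ V) @ w   # one relevance score per instance
    attn = softmax(scores)                # non-negative, sums to 1 over the bag
    return attn @ instances, attn


def classify_bag(instances, V, w, W_cls):
    """Pool a bag with attention, then apply a linear softmax classifier."""
    bag_embedding, attn = attention_mil_pool(instances, V, w)
    return softmax(bag_embedding @ W_cls), attn


rng = np.random.default_rng(1)
instances = rng.normal(size=(5, 8))   # hypothetical: 5 instances, 8-dim embeddings
V = rng.normal(scale=0.5, size=(8, 4))
w = rng.normal(size=4)
W_cls = rng.normal(size=(8, 3))       # hypothetical: 3 cereal classes
class_probs, attention = classify_bag(instances, V, w, W_cls)
print(class_probs.round(3), attention.round(3))
```

After training, the attention weights can be inspected as a rough indication of which instances drive a bag's prediction, which is what makes this family of models attractive for incompletely labelled data.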

Hypothesis 5.

In-season early yield predictions maintain their efficacy when utilising predicted crop types based solely on the available data up to the prediction time.

As discussed earlier in this section, Norwegian agriculture needs to anticipate future yields within the season. For such predictions to be realistic, experiments must use only the information available at the time of prediction. This research aims to consolidate the insights gained from the preceding hypotheses into an early yield prediction framework, as outlined in Section 3. The essence of this approach is to predict each field's crop type solely from the earliest accessible satellite imagery and then to generate farm-specific masks for each predicted crop type. This enables the yield model to provide accurate predictions early in the growth cycle. Section 3 evaluates the performance of this early yield prediction system against prior iterations, enabling a comparative assessment of its effectiveness.
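
A minimal sketch of the two steps described above, under stated assumptions: acquisitions after the prediction week are discarded, and per-field crop predictions are turned into one boolean pixel mask per crop type for a farm. The field raster, week numbers, and classifier output are hypothetical; in practice the labels would come from a crop classifier trained only on the truncated imagery.

```python
import numpy as np


def truncate_to_week(stack, weeks, prediction_week):
    """Keep only acquisitions available at prediction time.

    stack: (time, ...) array of images; weeks: acquisition week per time step.
    """
    keep = np.asarray(weeks) <= prediction_week
    return stack[keep]


def crop_masks(field_raster, predicted_crop):
    """Turn per-field crop predictions into one boolean mask per crop type.

    field_raster: (H, W) integer array of field ids, 0 = not a field.
    predicted_crop: {field_id: crop name} from the crop classifier.
    """
    masks = {}
    for field_id, crop in predicted_crop.items():
        mask = masks.setdefault(crop, np.zeros(field_raster.shape, dtype=bool))
        mask |= field_raster == field_id   # in-place update of the stored mask
    return masks


# Hypothetical farm with three fields and five weekly acquisitions.
raster = np.array([[1, 1, 2],
                   [1, 3, 2],
                   [0, 3, 3]])
weeks = [18, 20, 22, 24, 26]
images = np.arange(5 * 3 * 3, dtype=float).reshape(5, 3, 3)
early_images = truncate_to_week(images, weeks, prediction_week=22)  # 3 acquisitions
# Assume a crop classifier that only saw `early_images` returned these labels:
masks = crop_masks(raster, {1: "barley", 2: "oats", 3: "barley"})
barley_pixels = early_images[:, masks["barley"]]  # (3, 6): barley pixels per date
print(barley_pixels.shape)
```

Applying the truncation before classification, rather than after, is what prevents information from later in the season leaking into an early prediction.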

1.2. Research Challenges and Contributions

The hypotheses above describe scenarios that have not yet been explored under the prevailing environmental and data constraints. The authors contend that this research will provide valuable insights and methods for Norwegian agriculture, food production, and AI research. Nevertheless, several challenges were encountered during the experiments: procuring 12-band satellite imagery for every Norwegian farm; the limited availability of data on plant growth, field specifics, and farmer activities; cloud interference in satellite imagery, which affects sensor accuracy; and the increased computational demands of an enlarged agricultural data corpus. In response, the research contributions comprise broadening the agricultural data corpus, improving cereal yield forecasts, substantiating the relevance of soil quality data, developing a crop classification model for early in-season yield forecasting, and appraising the current status of precision agriculture in Norway. Reflecting on these challenges and contributions, the authors introduce new methodologies for Norwegian cereal type classification and yield forecasting.

- Early and seasonal grain yield prediction: This research identified a pressing need for advanced in-season yield forecasting methods, prompting a comparative study of early prediction techniques. Using a CNN-LSTM architecture, Sharma et al. [4] achieved low training and validation losses. Engen et al. introduced a hybrid deep neural network that integrated various agricultural data sources, achieving a mean absolute error of 76 kg per 1000 m² and reporting increasing error rates from weeks 10 to 26 and from weeks 10 to 21, respectively. Building on this work, this study seeks to construct an accurate early-season yield prediction framework for diverse cereal types.

- Crop type delineation and mapping: Given the scarcity of agriculture-specific data in Norway, related work on field classification is informative. Kussul et al. [12] developed a field classification framework that achieved high accuracy with both 1D and 2D models, particularly the latter, which interprets spatial context in satellite imagery or vegetation indices. Their progress in winter wheat classification underscores the value of phenological information in the remote sensing of wheat. Additionally, DigiFarm's technology (https://digifarm.io/, accessed on 13 April 2024), which achieves a 92% accuracy rate in delineating field boundaries and identifying crop types from satellite imagery in specific locales, is highly relevant to this research.

- Employing vegetation indices for crop categorisation: Remote sensing techniques analyse the spectral composition of the Earth's surface, revealing features of land, water, and vegetation. Vegetation indices, derived from multiple spectral bands, convert reflectance data into metrics that describe vegetation characteristics and terrestrial changes. These indices are essential in remote sensing analyses but must be selected carefully to suit specific objectives and environments, especially for grain type differentiation, where the differences between classes are subtle [15]. This study utilises a suite of ten vegetation indices chosen to optimise accuracy in cereal type classification.

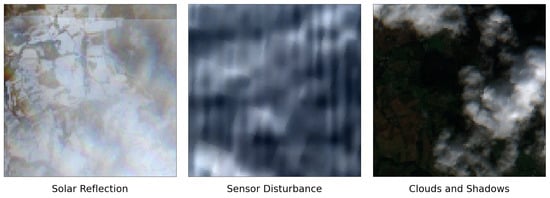

- Mitigation of cloud-induced noise in satellite imagery: The investigation acknowledges the potential of the two-step robust principal component analysis (RPCA) method outlined by Wen et al. [16] for detecting and mitigating clouds in satellite images. Figure 1 illustrates four types of noise in satellite images, namely solar reflection, sensor disturbance, cloud coverage, and shadows, all identified at the same farm in the same year. This gives some insight into how frequently noise occurs; such noise complicates the extraction of information and features from satellite images and generally results in a noisy dataset that lacks essential information. Building such a framework to improve analysis accuracy through noise reduction is left for future work.

Figure 1. Examples of different types of noise in satellite images. Reprinted/adapted with permission from the authors Mikkel Andreas Kvande and Sigurd Løite Jacobsen, Hybrid Neural Networks with Attention-Based Multiple Instance Learning for Improved Grain Identification and Grain Yield Predictions. Master's Thesis; published by University of Agder, Grimstad, Norway, 2022 [17].
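
The two-step RPCA method of Wen et al. [16] is not reproduced here. As a hedged illustration of the underlying idea only, standard RPCA (principal component pursuit, solved with the inexact augmented Lagrange multiplier method) splits a matrix whose columns are co-registered, vectorised images of the same farm into a low-rank part (the stable scene) and a sparse part (transient artefacts such as clouds or solar reflections). The function name, the default λ, and the synthetic data are assumptions; real crop-growth signals are not strictly low-rank, so this is a sketch rather than a ready-to-use cloud filter.

```python
import numpy as np


def shrink(x, tau):
    """Elementwise soft-thresholding (proximal operator of the L1 norm)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def svt(x, tau):
    """Singular value thresholding (proximal operator of the nuclear norm)."""
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    return (u * shrink(s, tau)) @ vt


def robust_pca(M, lam=None, tol=1e-7, max_iter=500):
    """Split M into low-rank L and sparse S with M = L + S (inexact ALM)."""
    M = np.asarray(M, dtype=float)
    m, n = M.shape
    lam = 1.0 / np.sqrt(max(m, n)) if lam is None else lam
    norm_two = np.linalg.norm(M, 2)                 # largest singular value
    Y = M / max(norm_two, np.abs(M).max() / lam)    # dual variable initialisation
    mu, rho = 1.25 / norm_two, 1.5
    mu_max = mu * 1e7
    S = np.zeros_like(M)
    norm_m = np.linalg.norm(M)
    for _ in range(max_iter):
        L = svt(M - S + Y / mu, 1.0 / mu)
        S = shrink(M - L + Y / mu, lam / mu)
        residual = M - L - S
        Y = Y + mu * residual
        mu = min(mu * rho, mu_max)
        if np.linalg.norm(residual) / norm_m < tol:
            break
    return L, S


rng = np.random.default_rng(0)
pixels, dates = 60, 20
reflectance = rng.uniform(0.2, 0.6, size=pixels)   # hypothetical per-pixel signal
illumination = rng.uniform(0.8, 1.2, size=dates)   # hypothetical per-date scaling
clean = np.outer(reflectance, illumination)        # rank-1 "cloud-free" stack
observed = clean.copy()
observed[10:25, 7] += 0.8                          # a bright cloud on date 7
low_rank, sparse = robust_pca(observed)
# The cloud pixels on date 7 should end up in `sparse`, the scene in `low_rank`.
```

The sparse component can then serve as a noise mask, and the low-rank component as a cleaned estimate for the affected dates.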

2. Material and Methods

This section provides background on the theories and techniques relevant to the proposed experiments, together with an overview of previous experiments and research on AI in agriculture. Section 2.1.1 first covers agricultural theory and research, including growth factors and farming activities. Remote sensing is a key technique in this research, as it underpins all five hypotheses; Section 2.1.2 therefore compiles previous research and general findings on remote sensing, together with methods for cloud mitigation. Finally, Section 2.1.3 introduces the principal AI techniques deployed in this research, surveys related work on yield prediction and crop classification, and outlines comparable state-of-the-art techniques and solutions for early yield prediction and crop classification. The research reviewed in this section informs the techniques and methods employed and serves as a benchmark for evaluating the findings where relevant. Throughout, the applicability of state-of-the-art methods to the approach in this manuscript is critically assessed to guide the execution of experiments and the evaluation of the hypotheses.

2.1. Norwegian Agriculture and AI Advancements

2.1.1. Growth Factors and Farming Activities in Norwegian Agriculture

Precision agriculture is a farming paradigm designed to increase efficiency, productivity, and profitability, mirroring the objectives of this study. Conceptually, it entails using information technology to enhance and optimise production processes while mitigating adverse impacts on the environment and biodiversity. Often described as a farm-centric management strategy, precision agriculture integrates all available farm data to optimise farm activities and production. It applies to multiple agricultural domains, including grain production, farming, forestry, and fisheries; the focus here, as in the broader research, is on grain production [18]. The variability intrinsic to grain yields falls into two main categories: (i) spatial variability, the divergence in soil composition, crop attributes, landscape features, and environmental conditions observed across a delineated geographical area [18] (p. 121); and (ii) temporal variability, the fluctuation in soil properties, crop characteristics, and environmental factors observed within a specified area at different times of measurement [18] (p. 121).
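
One simple way to quantify the two kinds of variability is the coefficient of variation (standard deviation divided by the mean), computed across fields within one season for spatial variability and across seasons for one field for temporal variability. This is an illustrative choice rather than a measure prescribed in [18], and the yield values below are hypothetical.

```python
from statistics import mean, pstdev


def coefficient_of_variation(values):
    """Population standard deviation divided by the mean."""
    return pstdev(values) / mean(values)


def spatial_cv(yields, year):
    """Variation across fields within one season."""
    return coefficient_of_variation([by_year[year] for by_year in yields.values()])


def temporal_cv(yields, field):
    """Variation across seasons for one field."""
    return coefficient_of_variation(list(yields[field].values()))


# Hypothetical yields in kg per 1000 m², keyed by field and then season.
yields = {
    "field_A": {2019: 500.0, 2020: 600.0},
    "field_B": {2019: 300.0, 2020: 400.0},
}
print(round(spatial_cv(yields, 2019), 3))        # 0.25
print(round(temporal_cv(yields, "field_A"), 3))  # 0.091
```

Because the coefficient of variation is dimensionless, spatial and temporal variability can be compared directly even when mean yields differ between regions.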

- Status of Norwegian agriculture: Norwegian agriculture depends heavily on the geographic location of farms. Contiguous areas suitable for field cultivation are found mainly along the coastline and in the Innlandet region, where the terrain is relatively flat compared to other areas. Grain production is concentrated predominantly in the low-lying areas of southern Norway, such as Innlandet. Nonetheless, a considerable portion of agriculturally viable land is designated for farming and grass production, benefiting from more favourable geographic and climatic conditions. Barley constitutes approximately half of the total grain output, with wheat and oats contributing roughly a quarter each [9]. Field preparation and sowing typically take place between April and May, depending on the duration and severity of the preceding winter and spring. Spring-sown crops usually reach maturity by September, which marks the start of the harvest period, although harvest timing also depends on the sowing date and other factors affecting the growth cycle. Autumn-sown grains are typically planted between August and September in Norway; however, these grains are beyond the scope of the present research [19].

- Meteorological factors: Optimal spring farming conditions require minimal precipitation and temperatures warm enough to thaw the ground, so that heavy equipment can be used for field operations such as ploughing and sowing. Dry soil makes these activities easier to manage. For seed germination under artificial lighting, fluorescent cool white lights are preferable, as incandescent lights may generate excessive heat that harms crops. Grain seeds and plants require stable temperatures and regular precipitation throughout the growing season to develop into harvestable grains. At maturity, grain plants must dry to reduce their moisture content for safe harvesting and storage [19,20]. Temperature and precipitation profoundly influence farming operations and plant growth. Johnson et al. (2014) [21] highlight a significant correlation between weather variables and crop yield, with a favourable combination of warmth and rainfall associated with higher yields. Sunlight, crucial for photosynthesis and photomorphogenesis, benefits grain crops, with prolonged exposure often moderating temperature extremes. However, temperatures above 30 °C can stress most plant species, and temperatures above 45 °C can kill them [22]. Kukal and Irmak (2018) [23] emphasise the substantial impact of climate variability on crop yields, identifying precipitation as a critical predictor for maize, sorghum, and soybean. These insights underscore the importance of temperature and precipitation for crop growth and yield, and they inform effective yield prediction models.

- Agriculture field and soil factors: Soil texture and quality influence plant growth by determining nutrient and water retention, water flow, and the soil's stability against disruptive forces. Animal activity, mechanical fieldwork, organic matter depletion, and cultivation practices can degrade soil properties, reducing water and oxygen capacity and promoting evaporation, which affects seed and plant viability. Yields are often correlated with the soil's susceptibility to erosion by water and wind. Organic matter stabilises soil structure, enhances nutrient retention, and fosters microbial activity. Soil moisture, which accounts for up to 50% of yield variability, affects soil temperature and nutrient uptake. Addressing soil factors such as water availability and organic matter content through fertilisation and irrigation can enhance plant productivity [18]. Field elevation and slope can also affect crop yields indirectly. Higher-altitude fields tend to have shallower soils with coarser textures, which can impede plant growth, whereas lower-altitude fields may experience colder air, hindering water accumulation and movement through the soil [18]. Foerster et al. [14] conducted crop classification and mapping and observed high temporal variability in the seasonal Normalised Difference Vegetation Index (NDVI), which they attributed to the crops' dependence on specific soil properties and water availability. These findings underscore the influence of soil characteristics on crop growth and suggest that incorporating such information can refine crop classification and yield prediction models and improve agricultural decision-making.

- Farming activities: Various farming practices, such as fertilisation, irrigation, and field preparation techniques, significantly influence crop growth and yield outcomes. Farmers often utilise fertilisers and irrigation to enhance soil nutrient content and moisture levels, creating favourable conditions for plant growth. Irrigation, in particular, is crucial in mitigating the adverse effects of high temperatures and low precipitation, contributing to more stable yields amid climate variability. Additionally, organic farming practices prioritise sustainability over yield, employing natural fertilisers and crop rotation strategies to maintain soil fertility and ecosystem health. Field preparation activities, including ploughing, cultivation, and sowing, also impact crop performance by facilitating optimal seed placement and germination conditions. Studies such as that by Shah et al. [24] underscore the significance of factors like sowing date in determining yield outcomes, albeit within specific regional contexts. In summary, accounting for a comprehensive range of farming activities in yield prediction datasets is essential for accurately assessing crop growth and productivity.
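
The meteorological thresholds discussed above can be condensed into simple per-field features for a yield model. The following sketch assumes daily maximum temperature and precipitation series for one field and period; the feature names are hypothetical and the feature set is an illustration, not the one used in this study.

```python
def weather_features(daily_max_temp_c, daily_precip_mm,
                     stress_threshold_c=30.0, lethal_threshold_c=45.0):
    """Summarise daily weather for one field and period into model features.

    The default thresholds follow the text: above 30 °C most plants are
    stressed, and above 45 °C they can be killed.
    """
    if not daily_max_temp_c or len(daily_max_temp_c) != len(daily_precip_mm):
        raise ValueError("need equally long, non-empty temperature and precipitation series")
    return {
        "heat_stress_days": sum(t > stress_threshold_c for t in daily_max_temp_c),
        "lethal_heat_days": sum(t > lethal_threshold_c for t in daily_max_temp_c),
        "total_precip_mm": sum(daily_precip_mm),
        "dry_days": sum(p == 0.0 for p in daily_precip_mm),
        "mean_max_temp_c": sum(daily_max_temp_c) / len(daily_max_temp_c),
    }


# Hypothetical five days of observations.
features = weather_features([18.0, 24.5, 31.0, 29.0, 33.5], [0.0, 5.0, 0.0, 2.5, 1.0])
print(features)
# {'heat_stress_days': 2, 'lethal_heat_days': 0, 'total_precip_mm': 8.5, 'dry_days': 2, 'mean_max_temp_c': 27.2}
```

Counting threshold exceedances, rather than only averaging temperature, preserves short extreme episodes that a seasonal mean would smooth away.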

2.1.2. Remote Sensing in Norwegian Agriculture

Before remote sensing technology became readily accessible, researchers relied on surveys of meteorological phenomena, agricultural land conditions, and crop types to predict and monitor crop yields. However, such data were difficult and often impossible to obtain, particularly in developing regions. Precision agriculture, which requires a large volume of samples to be implemented effectively, compounded these data procurement obstacles. Documenting field attributes, surface characteristics, and soil properties, together with their changes over time, required substantial time and financial investment, which inherently constrained the process. These challenges underscore the pivotal role of remote sensing as a promising and productive tool in agricultural research [5]. Remote sensing allows researchers to overcome the limitations of traditional data collection and to monitor and analyse agricultural land comprehensively.

Remote sensing involves detecting and monitoring physical attributes within a defined area by analysing reflected radiation, typically through sensors deployed on satellites or aircraft [2]. Satellite sensor systems provide spectral, spatial, and temporal data about target objects such as fields and farms. Spectral data comprise the measurements from distinct sensor bands and wavelengths, while spatial data relate to geographical dimensions. Temporal data track changing conditions, enabling diverse agricultural applications such as improved weed and water management, crop mapping, drought detection, and growth monitoring [25]. Remote sensing also allows numerous vegetation indices to be derived, capturing essential parameters such as chlorophyll content and leaf area index [26]. Nonetheless, the effectiveness of remote sensing systems is limited by their susceptibility to atmospheric disturbances and cloud cover, which necessitates noise mitigation strategies in data processing [27,28].
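
Deriving vegetation indices amounts to per-pixel arithmetic on band reflectances. The sketch below assumes the standard published forms of four indices discussed in this section (NDVI, LSWI, NDSVI, and EVI) and, for EVI, the widely used MODIS coefficients G = 2.5, C1 = 6, C2 = 7.5, and L = 1. The band values are hypothetical, and the coefficients or band choices used in this study may differ (for Sentinel-2, blue, red, near-infrared, and shortwave infrared would typically correspond to bands B2, B4, B8, and B11).

```python
import numpy as np


def normalised_difference(a, b):
    """(a - b) / (a + b) per pixel; NaN where a + b == 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom != 0, (a - b) / denom, np.nan)


def ndvi(nir, red):
    return normalised_difference(nir, red)


def lswi(nir, swir):
    return normalised_difference(nir, swir)


def ndsvi(swir, red):
    # Assumed form: (SWIR - red) / (SWIR + red).
    return normalised_difference(swir, red)


def evi(nir, red, blue, G=2.5, C1=6.0, C2=7.5, L=1.0):
    """Enhanced Vegetation Index; defaults are the common MODIS coefficients."""
    nir, red, blue = (np.asarray(x, dtype=float) for x in (nir, red, blue))
    return G * (nir - red) / (nir + C1 * red - C2 * blue + L)


# Hypothetical surface reflectances for a single vegetated pixel.
nir, red, blue, swir = 0.5, 0.1, 0.05, 0.2
print(ndvi(nir, red), lswi(nir, swir), ndsvi(swir, red), evi(nir, red, blue))
```

The same functions accept whole NumPy arrays of band reflectances, so an index image for every acquisition date can be computed in one call per index.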

- Mitigation of cloud-induced noise in satellite imagery: Data acquired by remote sensing systems are vulnerable to various sources of noise, including sensor orientation, atmospheric fluctuations, and cloud cover. Consequently, satellite imagery often contains contaminants such as noise, clouds, and dead pixels, which compromise data integrity [12,29]. Atmospheric influences can further distort the reflected signal captured by sensors, for example water droplets forming clouds or optical obstructions casting shadows [30]. These artefacts significantly reduce the usability of remote sensing data, necessitating pre-processing techniques to improve their quality [31]. Kussul et al. [12] addressed noise and reflection issues in satellite images for land classification by masking out noisy regions and using self-organising Kohonen maps to restore missing pixel values in specific spectral bands [32]. Rooted in machine learning, this approach shows potential for improving yield predictions and grain classification in agricultural research. Moreover, various information reconstruction methods have been devised for remote sensing images [29]. Zhang et al. [33] proposed a deep learning framework that uses spatial–temporal patching to address thin and thick clouds and shadows in Sentinel and Landsat images. Lin et al. [34] adopted an alternative strategy, information cloning, which replaces cloudy regions with temporally correlated cloud-free areas from other remote sensing images, assuming minimal land cover change over short periods. Additionally, Zhang et al. [35] developed an approach for reconstructing missing information in remote sensing data using a deep CNN combined with spatial–temporal–spectral information.
This framework showed promising results in mitigating cloud and noise issues, offering a feasible way to enhance the quality of satellite images for agricultural applications. However, applying such methods to satellite imagery of Norwegian farms requires suitable datasets containing both cloudy and cloud-free spatial–temporal data, and preparing such datasets demands substantial time.

- Derived vegetation indices: Remote sensing systems capture and interpret the spectral composition of radiation from the Earth's surface, providing insights into land, water bodies, and vegetation. Vegetation indices, derived from multiple spectral bands, are simple transformations that condense reflectance information into more interpretable metrics, improving the understanding of vegetation properties and terrestrial dynamics [36,37]. Vegetation indices date back to 1972, when Pearson and Miller created the first two indices to distinguish vegetation from the ground surface [38]. Since then, over 519 unique vegetation indices have been developed for diverse purposes, applications, and environmental contexts [26]. However, not all indices are universally applicable: studies have shown limitations of widely used indices such as the Normalised Difference Vegetation Index (NDVI) and the Enhanced Vegetation Index (EVI) in specific scenarios [39,40]. Selecting suitable vegetation indices for particular research objectives and environmental conditions is therefore imperative, particularly for crop type classification, where the differences between grain types are subtle [15]. Foundational studies such as Massey et al. [41] have demonstrated the utility of vegetation indices, notably NDVI, for crop mapping and classification. Using decision tree algorithms and NDVI time series extracted from satellite images, Massey et al. achieved notable accuracy in crop type classification, showing the efficacy of NDVI despite similarities among the target crop types. In this research, ten vegetation indices were selected based on theoretical foundations, practical applications, and the existing literature.
These indices are described below together with their theory, applications, and formulae, sourced from Kobayashi et al. [42], Henrich et al. [26], and other relevant references. The wavelength parameters in the formulae represent ranges rather than precise values, because band wavelengths vary across remote sensing systems; the nearest available band wavelengths may therefore be used for specific indices.

- Enhanced Vegetation Index (EVI) helps distinguish the canopy structure and vegetation growth status of crops and is calculated from the visible and near-infrared bands. Equation (1) shows how the EVI is calculated. L is a canopy background adjustment value, C1 and C2 are aerosol resistance coefficients that correct for aerosol influences in the band between 640 and 760 nm by using a band between 420 and 480 nm, and G is the gain factor. The values of these factors differ between remote sensing systems to account for their specific reflectance and atmospheric characteristics [37].

- Land Surface Water Index (LSWI) indicates the water content of crops using the near-infrared and shortwave infrared bands. Because these bands are sensitive to soil moisture and leaf water, the LSWI is sensitive to water content. Equation (2) shows how the LSWI is calculated [39].

- Normalised Difference Senescent Vegetation Index (NDSVI), designed by Qi et al. [43], captures both water content and growth status. It is based on the visible and shortwave infrared bands, combining elements of the EVI and the LSWI. Equation (3) shows how the NDSVI is calculated. These wavelengths lie in the water absorption regions of the spectrum and can, therefore, be combined to extract information related to senescent vegetation.

- Normalised Difference Vegetation Index (NDVI), designed by Rouse et al. [44], measures the amount and density of green vegetation based on the near-infrared and red bands. This is useful for agriculture, as unhealthy crops reflect less near-infrared than healthy crops [45]. Equation (4) shows how the NDVI is calculated. The normalisation in the NDVI enables it to consistently measure the greenness with fewer deviations than a more straightforward ratio of the two bands [44].

- Shortwave Infrared Water Stress Index (SIWSI) estimates the water content of crop leaves. Its shortwave infrared band lies in a wavelength region influenced by leaf water content, enabling the SIWSI to extract information about the water content of crops and thus to identify the level of water stress. Equation (5) shows how the SIWSI is calculated. An index value below zero indicates that the crops have sufficient water content, whereas values above zero indicate that the crops are experiencing some level of water stress due to insufficient water [46].

- Green–Red Normalised Difference Vegetation Index (GRNDVI), created by Wang et al. [47], is one of several vegetation indices that combine the red, green, and blue bands in an NDVI-like formulation. The GRNDVI is among the better vegetation indices for estimating leaf area index (LAI), an important feature related to crop health, growth stage, and type. Equation (6) shows the calculation of the GRNDVI.

- Normalised Difference Red-Edge (NDRE) is a vegetation index similar to the NDVI that uses the near-infrared and red-edge bands instead of the near-infrared and red bands. It extracts information about crop health and status, including canopy greenness, from remote sensing data. Equation (7) shows the calculation of the NDRE [48,49].

- Structure Insensitive Pigment Index 3 (SIPI3) is sensitive to the ratio of carotenoids to chlorophyll a. Carotenoids provide information about the physiological status of the canopy, while chlorophyll provides information about plant photosynthesis. The original SIPI was designed by Penuelas et al. [50] to maximise the correlation between carotenoids and chlorophyll for acquiring plant physiology and phenology information. The SIPI3 is a variation of this index, shown in Equation (8).

- Photosynthetic Vigour Ratio (PVR) is a simple combination of the red and green bands, reflecting chlorophyll absorption, canopy greenness, and photosynthetic activity. Equation (9) shows the formula for the PVR [18,49].

- Green Atmospherically Resistant Vegetation Index (GARI), created by Gitelson et al. [51], is a vegetation index resistant to atmospheric effects while also being sensitive to a range of chlorophyll-a concentrations, plant stress, and rate of photosynthesis. The GARI is as much as four times less sensitive to atmospheric effects than the NDVI while providing similar information. Equation (10) shows how the GARI is calculated.

2.1.3. AI for Norwegian Agriculture: Crop Yield Prediction

Crop yield prediction has been explored across various environments using diverse data types and machine learning models. Raun et al. [52] studied the feasibility of predicting winter wheat grain yields using multi-spectral seasonal features. By incorporating two NDVI measurements into an estimated yield feature, they achieved a high correlation with yield, explaining 83% of yield variability. However, their approach could not capture growth changes caused by environmental factors such as rain, lodging, and shattering. Nevavuori et al. [53] and You et al. [5] employed deep learning techniques for crop yield prediction. Nevavuori et al. used RGB bands and the NDVI from multi-spectral images in a convolutional neural network (CNN) model, achieving a mean absolute percentage error (MAPE) as low as 8.8%. You et al. used temporal satellite images and textual features to predict soybean yields, reducing the root mean square error (RMSE) by 30%.

Khaki and Wang [54] integrated genotype, soil, and weather data into a deep neural network (DNN) to predict corn yield and yield difference. Their model, trained on soil and weather data, achieved an RMSE of 11%, outperforming regression tree and least absolute shrinkage and selection operator (LASSO) methods. Their findings underscored the importance of soil and weather data in yield prediction and identified weather prediction as an essential component. Russello and Shan [6] used satellite images in a CNN to predict soybean yields and identified features from February to September as the most important for prediction. Basnyat et al. [55] investigated the optimal timing for using remote sensing to predict crop yield by analysing the correlation between the NDVI and yield across different periods. They concluded that the NDVI derived between 10 and 30 July was most suitable for predicting the yields of spring-seeded grains, aligning with the maturity period of these crops. These findings are pertinent for timing early yield predictions on Norwegian farms, given that spring-seeded grains in Norway typically mature in September.
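The studies above report accuracy with different error metrics (MAPE, RMSE, and MAE). A minimal sketch of these metrics follows, together with an RMSE expressed as a percentage of the mean observed yield, which is one common way to state a relative RMSE such as the 11% reported by Khaki and Wang [54]; whether [54] normalised it exactly this way is an assumption. The yield values in the example are hypothetical.

```python
import numpy as np


def _as_arrays(y_true, y_pred):
    return np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)


def mae(y_true, y_pred) -> float:
    """Mean absolute error, in yield units (e.g. kg/daa)."""
    t, p = _as_arrays(y_true, y_pred)
    return float(np.mean(np.abs(t - p)))


def rmse(y_true, y_pred) -> float:
    """Root mean square error, in yield units."""
    t, p = _as_arrays(y_true, y_pred)
    return float(np.sqrt(np.mean((t - p) ** 2)))


def relative_rmse(y_true, y_pred) -> float:
    """RMSE as a percentage of the mean observed yield (one common normalisation)."""
    t, _ = _as_arrays(y_true, y_pred)
    return 100.0 * rmse(y_true, y_pred) / float(np.mean(t))


def mape(y_true, y_pred) -> float:
    """Mean absolute percentage error; observed yields must be non-zero."""
    t, p = _as_arrays(y_true, y_pred)
    return float(100.0 * np.mean(np.abs((t - p) / t)))


observed = [400.0, 500.0]    # hypothetical farm yields, kg/daa
predicted = [380.0, 540.0]
scores = {
    "MAE": mae(observed, predicted),
    "RMSE": rmse(observed, predicted),
    "rRMSE (%)": relative_rmse(observed, predicted),
    "MAPE (%)": mape(observed, predicted),
}
```

MAE and RMSE stay in yield units (such as the kg/daa used later in this paper), whereas MAPE and relative RMSE are unitless percentages, so results reported in different forms are not directly comparable.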

- Crop classification and mapping: Satellite imagery has proven effective for classifying crop types and capturing their spectral dynamics, which enables the detection of phenological differences among them. However, using satellite images for classification presents inherent challenges [15]. For instance, Hu et al. [56] and Qui et al. [57] observed that stacking satellite images throughout the growing season often yielded redundant information. It is therefore important to determine which types of features to use and which AI architectures and techniques to apply. Castillejo-González et al. [28] investigated the impact of moving from pixel-based classification (1D) to object-based classification (aggregating pixels). Their findings consistently favoured the object-based approach, indicating that 2D image regions are more informative than single-pixel values for grain classification. Ji et al. [13] employed multi-spectral temporal satellite images in 3D convolutional neural networks (CNNs) for crop classification. Their 3D CNN captured a temporal representation of the entire growing season, outperforming analogous 2D methods and proving highly effective at identifying growth patterns and crop types. More generally, 3D CNN architectures are widely reported to provide superior feature extraction and representation of multi-spectral temporal data. Because pixel-based classification methods tend to be less accurate, Peña-Barragán et al. [58] devised an Object-Based Crop Identification and Mapping model, attaining an accuracy of 79%. Their analysis showed that remote sensing images from late summer contributed most to the classification model, followed by mid-spring and early-summer images. This disparity underscores the significance of late-growing-season images and highlights the challenge of early-season classification.

- Attention-based multiple-instance learning: In image classification research, datasets typically have consistent labels corresponding to their respective classes. In real-world applications, however, this consistency is often lacking; in medical imaging studies, for example, multiple images may lack specific class labels and carry only generalised statements about their categorisation. Similarly, in agricultural contexts, crop labels are often incomplete for individual fields, resulting in a semi-labelled dataset. Multiple-instance learning (MIL), a thoroughly investigated semi-supervised learning method [59,60,61], has emerged to mitigate this challenge. Rather than classifying individual instances, MIL predicts the labels of bags, where each bag comprises a mixture of labelled and unlabelled instances. In a traditional MIL framework, a single target class is specified, and a bag is labelled positive if it contains at least one instance of the target class and negative otherwise. Consequently, complete knowledge of instance labels is unnecessary, which makes MIL well suited to scenarios with partially labelled data [60,62]. Attention-based MIL models additionally incorporate an attention mechanism that assigns an attention score to each instance within a bag, indicating its influence on the bag classification. These scores are computed with the softmax function, so they sum to one.

Prior research has explored various approaches to bag classification. Cheplygina et al. [63] introduced bag dissimilarity vectors, representing each bag by its dissimilarities to other bags, as features in the training dataset. Chen et al. [64] leveraged instance similarities within bags to construct a feature space, extracting crucial features for traditional supervised learning. Raykar et al. [65] investigated diverse instance-level classification techniques and feature selection methods within bags, yielding promising and competitive outcomes.
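A minimal NumPy sketch of attention-based MIL pooling in the style of Ilse et al. [62] follows. Each bag is a matrix of instance embeddings (for example, hypothetically, one embedding per field of a farm). Attention scores are computed as w^T tanh(V h_k), normalised with a softmax so that they sum to one, and used to form a weighted bag embedding, from which a sigmoid gives the Bernoulli probability of the bag label. The weights are random and untrained, and the parameter names `v`, `w`, and `theta` are hypothetical; in practice they would be learned jointly with an instance encoder.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - np.max(x))    # subtract the max for numerical stability
    return e / e.sum()


def attention_mil_forward(h: np.ndarray, v: np.ndarray, w: np.ndarray, theta: np.ndarray):
    """Attention-based MIL pooling for one bag.

    h: (K, M) embeddings of the K instances in the bag
    v: (L, M) and w: (L,) attention parameters; theta: (M,) bag classifier weights
    Returns the bag-level probability and the K attention weights.
    """
    scores = np.tanh(h @ v.T) @ w                 # (K,) unnormalised scores w^T tanh(V h_k)
    a = softmax(scores)                           # attention weights, sum to one
    z = a @ h                                     # (M,) attention-weighted bag embedding
    prob = 1.0 / (1.0 + np.exp(-(z @ theta)))     # sigmoid -> Bernoulli parameter of the bag label
    return prob, a


rng = np.random.default_rng(1)
bag = rng.normal(size=(6, 8))    # hypothetical bag: 6 instances (e.g. fields), 8 features each
v, w, theta = rng.normal(size=(4, 8)), rng.normal(size=4), rng.normal(size=8)
prob, a = attention_mil_forward(bag, v, w, theta)
```

Because the bag embedding is a weighted sum, reordering the instances leaves the bag probability unchanged (the pooling is permutation invariant), and the attention vector `a` indicates how much each instance contributed. Replacing the final sigmoid with a softmax over several class outputs is one possible route to a multi-class setting; that extension is an assumption here, not part of the cited work.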

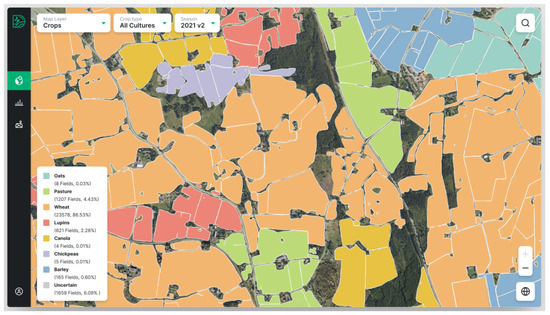

- State-of-the-art techniques: In this section, the authors present state-of-the-art research pertinent to each hypothesis stated earlier in this manuscript. The main focus is on identifying the most promising technique for cloud and noise removal and on surveying studies of crop classification using vegetation indices, to guide the selection of appropriate indices. Furthermore, the authors provide an overview of comparable state-of-the-art methods and solutions for early yield prediction and crop classification. These studies inspired the techniques and methods applied in this research and serve as benchmarks for evaluating the outcomes in the following sections.

Detecting clouds in remote sensing images to create a training dataset may pose challenges for this research study. To address this requirement, Wen et al. [16] proposed a two-step robust principal component analysis (RPCA) framework that identifies cloud regions within satellite images, removes them, and restores the affected areas. Their approach requires neither a cloud-free satellite image dataset nor any preliminary cloud detection pre-processing. Among the various promising cloud removal methods available, the recent work by Wen et al. [16] appears to be the most suitable technique for this study. Given the time constraints of this study, their methodology holds promise for mitigating noise in satellite images, potentially improving the accuracy of the relevant deep learning models.

Sharma et al. [4] conducted an extensive investigation into the temporal dynamics of crop yield prediction, emphasising the importance of early-stage observations. Their study highlighted the critical role of satellite images from the initial months of the growing season, particularly the first month, in predicting crop yields.
Through a systematic evaluation in which the images from each month were replaced with noise and the resulting RMSE was assessed, they demonstrated a significant increase in prediction error when images from the first month were removed, indicating the pivotal role of early-season data. This observation is consistent with the substantial influence of early-season farming activities on subsequent yield outcomes, as detailed in Section 2.1.1. Motivated by this insight, Sharma et al. [4] devised a framework for predicting wheat yields from raw satellite imagery. Their methodology used convolutional neural networks (CNNs) to extract relevant information from multi-band satellite images over a time series. The CNN outputs were then fed into a long short-term memory (LSTM) network, which encoded temporal features for yield prediction. Their model achieved low training and validation losses, underscoring the effectiveness of the approach. They further improved prediction accuracy by adding supplementary features, such as location and area information, to the input vector of the LSTM. The methodologies of Sharma et al. [4] are therefore expected to play a pivotal role in shaping the methodology of this research study.

Building on this foundation, researchers investigated grain yield prediction specific to Norwegian agriculture using multi-temporal satellite imagery, weather data time series, and farm information. Their work culminated in a hybrid deep neural network model comprising sets of CNNs akin to those of Sharma et al. [4], along with a gated recurrent neural network that integrates the CNN outputs with extensive weather, farm, and field features. Their hybrid model achieved a mean absolute error (MAE) as low as 76 kg/daa, demonstrating robust performance in predicting crop yields at the farm scale.
Their results were comparable in prediction accuracy to those of Sharma et al. [4]. Furthermore, the researchers attempted early yield prediction, forecasting yields during the growing season. They observed higher errors when predicting with data from weeks 10 to 26 and from weeks 10 to 21, highlighting the challenges of in-season yield forecasting. Their findings underscored the need for comprehensive feature sets, including grain types, growing areas, and production benefits, to improve the accuracy of in-season predictions. Additionally, they used various data sources relevant to Norwegian agriculture (https://www.landbruksdirektoratet.no/nb (accessed on 21 April 2024)), including Sentinel-2 satellite images (https://sentinel.esa.int/web/sentinel/home (accessed on 21 April 2024)), meteorological data (https://frost.met.no/index.html (accessed on 21 April 2024)), cadastral layers (https://www.kartverket.no/en (accessed on 21 April 2024)), and field boundaries (https://www.geonorge.no/ (accessed on 21 April 2024)), demonstrating the utility of diverse datasets for yield prediction. Given the limited availability of data related to grain production in Norway, the methodologies and datasets curated by these researchers are highly relevant to this research study. Their code base and acquired datasets have therefore been integrated into this work to streamline development and make effective use of existing computational resources.
This collaborative approach ensures continuity and builds on previous investigations, advancing crop yield prediction research for Norwegian agriculture.

Before remote sensing can be used for crop productivity and yield prediction, a crucial preliminary task is crop identification and farmland area calculation; as highlighted in the Introduction, this information is currently unavailable during the growing season in Norway. Accurate crop yield forecasting therefore requires predicting farmland area and crop content [66]. Kussul et al. [12] investigated the impact of spatial context learning on field classification performance, comparing a 2D model with a spectral-domain approach that learns from single pixels (1D). Their study employed five sets of CNNs, each comprising two convolutional and max-pooling layers followed by two fully connected layers with varying numbers of neurons, using the ReLU activation function. The 1D and 2D implementations achieved accuracies of 93.5% and 94.6%, respectively, the highest classification accuracies the authors identified in the literature. This suggests that both implementations, particularly the 2D version, are relevant to this research study, where satellite images or vegetation indices could be used as inputs. Notably, the classification accuracy for winter wheat, their sole grain type, exceeded that of the other classes by 2–3%, indicating that remote sensing data contain phenological information useful for distinguishing wheat from other crop types. Although successful crop classification studies exist, each is unique in terms of geographical environment, target crops, and available data. To our knowledge, only one previous work on crop classification in Norway has been conducted, by DigiFarm (https://digifarm.io/ (accessed on 3 May 2024)), a leading agriculture technology company in Norway.
Their system uses Sentinel images upscaled to 1 m resolution, together with field boundaries, to classify more than six crop types, achieving an accuracy of up to 92%. Although their system uses satellite images of higher resolution than those in this study, it is limited to specific regions in Norway, as shown in Figure 2. Notably, no research has yet attempted to generalise grain classification across all Norwegian grain producers.
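The CNN-then-recurrent pattern described above (a CNN applied to each image of the time series, followed by an LSTM in Sharma et al. [4] or a gated recurrent network in the Norwegian hybrid model) can be sketched in NumPy as follows. To keep the example short and self-contained, the per-date CNN is replaced by a hypothetical stand-in (spatial averaging of each band followed by one dense ReLU layer); the key point is that the same weights are applied to every date, which is what a time-distributed layer does. The GRU uses the standard update and reset gate equations. All weights are random and untrained, and the shapes (30 dates of 16 × 16 pixels with 12 bands, four classes) are illustrative assumptions.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def time_distributed_encoder(images: np.ndarray, w_enc: np.ndarray) -> np.ndarray:
    """Apply the same encoder to every date of a (T, H, W, C) stack -> (T, D)."""
    pooled = images.mean(axis=(1, 2))          # (T, C): spatial mean per band (stand-in for a CNN)
    return np.maximum(pooled @ w_enc, 0.0)      # (T, D): shared dense layer with ReLU


def init_gru(d_in: int, hidden: int, rng: np.random.Generator) -> dict:
    """Random (untrained) weights for the update (z), reset (r) and candidate (h) paths."""
    params = {}
    for gate in ("z", "r", "h"):
        params["w" + gate] = rng.normal(scale=0.1, size=(d_in, hidden))
        params["u" + gate] = rng.normal(scale=0.1, size=(hidden, hidden))
        params["b" + gate] = np.zeros(hidden)
    return params


def gru_last_state(xs: np.ndarray, p: dict) -> np.ndarray:
    """Run a GRU over a (T, D) sequence and return the final hidden state."""
    h = np.zeros(p["uz"].shape[0])
    for x in xs:
        z = sigmoid(x @ p["wz"] + h @ p["uz"] + p["bz"])               # update gate
        r = sigmoid(x @ p["wr"] + h @ p["ur"] + p["br"])               # reset gate
        h_cand = np.tanh(x @ p["wh"] + (r * h) @ p["uh"] + p["bh"])    # candidate state
        h = (1.0 - z) * h + z * h_cand
    return h


def classify_field(images, w_enc, gru_params, w_out) -> np.ndarray:
    """Class probabilities for one field time series (softmax over class logits)."""
    h = gru_last_state(time_distributed_encoder(images, w_enc), gru_params)
    logits = h @ w_out
    e = np.exp(logits - logits.max())
    return e / e.sum()


rng = np.random.default_rng(0)
images = rng.random((30, 16, 16, 12))          # hypothetical field stack: 30 dates, 16 x 16 pixels, 12 bands
w_enc = rng.normal(scale=0.1, size=(12, 32))
gru_params = init_gru(32, 64, rng)
w_out = rng.normal(scale=0.1, size=(64, 4))    # four hypothetical crop classes
probs = classify_field(images, w_enc, gru_params, w_out)
```

A GRU was chosen for the sketch because it has fewer gates than an LSTM while serving the same role of summarising the per-date features into a single state; supplementary inputs such as location or area could be concatenated to that state before the output layer.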

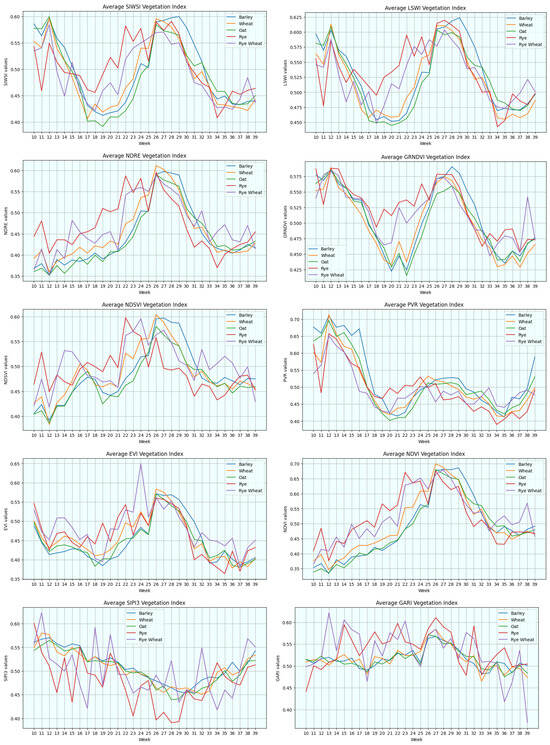

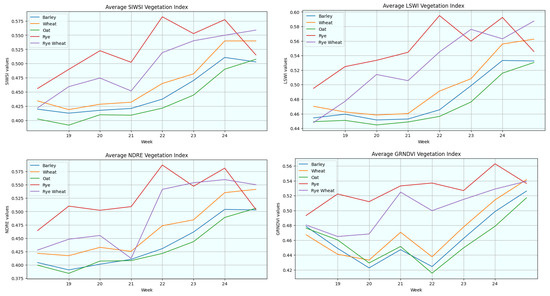

Figure 2. DigiFarm’s crop detection system. Reprinted/adapted with permission from the authors Mikkel Andreas Kvande and Sigurd Løite Jacobsen, Hybrid Neural Networks with Attention-Based Multiple Instance Learning for Improved Grain Identification and Grain Yield Predictions. Masters Thesis; published by University of Agder, Grimstad, Norway, 2022 [17].

The MIL techniques described in Section 2.1.3 represented advancements over contemporary methods. However, instance-level classification was the only approach that yielded interpretable instance label results, albeit with generally low accuracy [62]. To address these shortcomings, Ilse et al. [62] introduced attention-based multiple-instance learning (MAD-MIL). Attention-based MIL is a single-class MIL technique in which a neural network learns the Bernoulli distribution of bag labels through an attention-based mechanism. This mechanism acts as a permutation-invariant aggregation operator and is implemented as an attention layer within the network. After prediction, the weights of this layer can be used to extract interpretable information about the contribution of each instance to the bag’s label, along with indications of each instance’s label. Ilse et al. [62] outperformed several prior MIL studies, achieving results comparable to state-of-the-art solutions. Additionally, they developed a more flexible MIL system that is easier to implement. Adopting Ilse et al.’s [62] system could therefore benefit the training of a classifier on semi-labelled data for remote sensing crop classification. However, their system is designed for a single target class and must be extended to operate in a multi-class setting.

Vegetation indices play a pivotal role in agricultural applications, particularly in crop classification and mapping.
However, little research focusing exclusively on grain types within crop classification has been identified. Exploring the application of vegetation indices is therefore important, given the multitude of available indices and their potential to differentiate the common crop types in Norwegian agriculture. This section reviews state-of-the-art research on various vegetation indices; the highlighted studies either achieved high classification accuracy or revealed insightful characteristics of specific indices relevant to crop types. Hu et al. [15] employed a time series of multiple vegetation indices to discern phenological differences between corn, rice, and soybeans. Using satellite images with a spatial resolution of 500 m, they investigated the efficacy of five vegetation indices, including the LSWI and the NDSVI, in distinguishing the target classes. Their findings underscored the importance of using multiple vegetation indices over time and showed the promising utility of the LSWI and the NDSVI for crop classification. Foerster et al. [14] used the NDVI to classify 12 crop types, reporting varying accuracy across crops. The NDVI performed well for grain types, with winter wheat, barley, and rye achieving high accuracy. Their temporal analysis of NDVI profiles throughout the growing season revealed distinct trends for each crop type, offering valuable insights for crop mapping.

Peña-Barragán et al. [58] highlighted the positive impact of the NDVI and SWIR-based vegetation indices on crop type mapping, with the NDVI contributing significantly to model learning. This underscores the relevance of NDVI and SWIR-based indices in crop classification research. Ma et al. [67] investigated multiple vegetation indices derived from multi-temporal satellite images to detect powdery mildew in wheat crops.
Their findings demonstrated the efficacy of these indices in predicting crop diseases, highlighting their potential for providing early insights into crop health. Wang et al. [47] evaluated various combinations of red, green, and blue bands to develop the GRNDVI, which exhibited strong correlations with the LAI. The GRNDVI is therefore a promising index for differentiating crop types based on leaf size. Barzin et al. [48] analysed 26 vegetation indices to predict corn yields, identifying the NDRE, the GARI, the SCCCI, and the GNDVI as dominant variables for yield prediction; these findings offer valuable insights into feature selection for crop yield prediction models. Susantoro et al. [68] analysed 23 vegetation indices to map sugarcane conditions, identifying the NDVI, LAI, SIPI, ENDVI, and GDVI as pertinent indices. The SIPI thus emerges as an additional index with potential applicability in crop classification. Metternicht et al. [69] evaluated four indices, including the PVR, for distinguishing variations in crop density. The PVR is a promising index for classifying crop types based on density variations, offering valuable information for machine learning models. Collectively, these studies underscore the significance of vegetation indices in crop classification and mapping and provide a diverse set of indices with potential applicability in the agricultural domain.
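The temporal NDVI profiles analysed by Foerster et al. [14], and the multi-index time series used by Hu et al. [15], rest on a simple field-level aggregation: compute the index for every pixel and date, then average over the pixels that belong to one field. A minimal sketch follows, assuming a (T, H, W, C) reflectance stack, a Boolean field mask, and hypothetical band positions `red_idx` and `nir_idx`. Averaging over the mask is one simple aggregation choice; other statistics, such as the median, could be used instead.

```python
import numpy as np


def field_ndvi_profile(stack: np.ndarray, mask: np.ndarray, red_idx: int, nir_idx: int) -> np.ndarray:
    """Mean NDVI over the pixels inside `mask` for every date of a (T, H, W, C) stack."""
    red = stack[..., red_idx].astype(float)
    nir = stack[..., nir_idx].astype(float)
    den = nir + red
    ndvi = np.divide(nir - red, den, out=np.zeros_like(den), where=den != 0)   # (T, H, W)
    return ndvi[:, mask].mean(axis=1)                                            # (T,)


# Synthetic field: constant red reflectance, near-infrared peaking mid-season
nir_by_date = np.array([0.2, 0.3, 0.5, 0.4, 0.2])
stack = np.zeros((5, 8, 8, 2))
stack[:, 2:6, 2:6, 0] = 0.1                              # band 0: red (hypothetical layout)
stack[:, 2:6, 2:6, 1] = nir_by_date[:, None, None]      # band 1: near-infrared
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True

profile = field_ndvi_profile(stack, mask, red_idx=0, nir_idx=1)
peak_date = int(np.argmax(profile))   # index of the acquisition with the highest mean NDVI
```

Stacking such profiles for several indices gives a compact (dates × indices) feature matrix per field, which is one way to feed multi-index time series into a classifier.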


2.2. Research Methodology

This section describes the collection and pre-processing of multi-source agricultural data before their integration into AI models. Geographical, remote sensing, meteorological, and textual features, including grain production data, were employed. The collection and pre-processing of multi-spectral satellite images are elaborated earlier in this manuscript. The authors now detail the methods for collecting and pre-processing geographical and temporal meteorological data. The use of Norwegian grain production data, including grain delivery data and production subsidies, is presented in the following sections.

2.2.1. Sentinel-2A Satellite Images

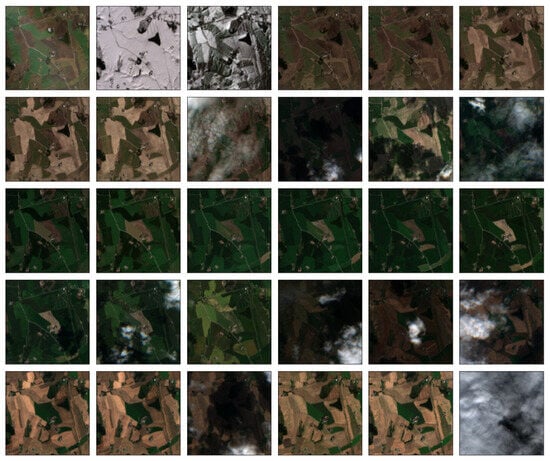

The authors used publicly accessible satellite images obtained from orbiting satellites (https://www.sentinel-hub.com/ (accessed on 4 May 2024)), which provide detailed, high-resolution observations of the Earth. Farm-based satellite images were acquired for 2017 to 2019, with 30 images per farm per year. An example of all 30 raw satellite images of a farm in a given year is shown in Figure 3. Each image comprises 12 spectral bands (https://sentinel.esa.int/web/sentinel/missions/sentinel-2 (accessed on 4 May 2024)), whose meanings, wavelengths, bandwidths, and resolutions are detailed in Table 1. The acquisition period spanned 1 March to 1 October, which aligns with Norway’s growing season.

Figure 3. An example of the 30 raw satellite images throughout a growing season of a specific farm, visualised in RGB.

Table 1. Overview of Sentinel-2A bands, their specifications, and the information they reflect.

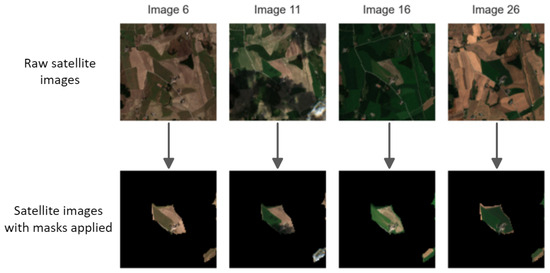

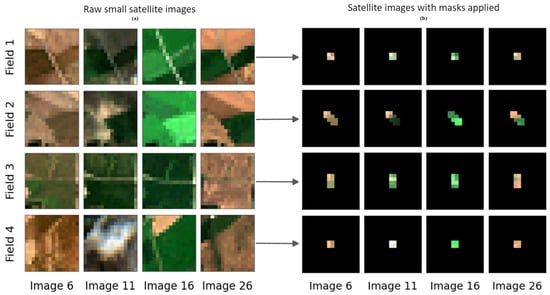

- Masking remote sensing images: Masks were applied to filter out irrelevant pixels and extract relevant information from the remote sensing images. Following the state-of-the-art work in [7], the masks were generated in three steps. First, each farm's bounding box is intersected with its cultivated fields, so that only the coordinates of the cultivated areas within the satellite images are retained. Next, the longitude and latitude map coordinates are converted to pixel locations within the satellite images. Since the images are 100 × 100 pixels, the bounding box delineates the image borders, with the top-left corner at (0,0) and the bottom-right corner at (100,100), and each point of a cultivated field is mapped to pixel coordinates according to its relative position within the bounding box. Finally, a binary matrix of zeros and ones is generated from the locations of the cultivated fields. This matrix serves as the mask, with ones indicating cultivated areas and zeros indicating non-cultivated regions within the satellite images. This research effort also generated additional masks for various types of remote sensing images. These masks were based on the farm geometry obtained from the disposed properties, which are explained later in this manuscript and represent the arable land area of the farm. The coordinates of the farm's location were used as the centre of the mask to ensure precise alignment with the satellite images. Each pixel in the mask was assigned a Boolean value, with true indicating that the pixel fell within a property boundary belonging to the farm. Before the remote sensing images were used, each of their channels was multiplied by the corresponding mask.
This operation set all pixels outside the farm's property boundaries to zero, thereby removing irrelevant information for subsequent modelling tasks. The process is depicted in Figure 4, where the left side shows four satellite images of the same farm and the right side shows the same images with the masks applied.
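The masking steps above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes each farm image covers a known bounding box `(min_lon, min_lat, max_lon, max_lat)`, treats longitude and latitude as planar within that box, and marks a pixel as cultivated when its centre falls inside a field polygon (even-odd ray casting). The helper names `lonlat_to_pixel`, `point_in_polygon`, `build_mask`, and `apply_mask` are hypothetical.

```python
from typing import List, Sequence, Tuple

LonLat = Tuple[float, float]
BBox = Tuple[float, float, float, float]  # (min_lon, min_lat, max_lon, max_lat), hypothetical layout


def lonlat_to_pixel(lon: float, lat: float, bbox: BBox, size: int = 100) -> Tuple[float, float]:
    """Map a coordinate to fractional (row, col): top-left is (0, 0), bottom-right is (size, size)."""
    min_lon, min_lat, max_lon, max_lat = bbox
    col = (lon - min_lon) / (max_lon - min_lon) * size
    row = (max_lat - lat) / (max_lat - min_lat) * size  # latitude grows upwards, rows grow downwards
    return row, col


def point_in_polygon(x: float, y: float, poly: Sequence[Tuple[float, float]]) -> bool:
    """Even-odd ray-casting test for a point against a simple polygon."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray through the point
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def build_mask(fields: Sequence[Sequence[LonLat]], bbox: BBox, size: int = 100) -> List[List[int]]:
    """Binary size x size mask: 1 where a pixel centre lies inside any field polygon, else 0."""
    pixel_polys = []
    for field in fields:
        pts = []
        for lon, lat in field:
            row, col = lonlat_to_pixel(lon, lat, bbox, size)
            pts.append((col, row))  # x = column, y = row
        pixel_polys.append(pts)
    mask = [[0] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            if any(point_in_polygon(c + 0.5, r + 0.5, p) for p in pixel_polys):
                mask[r][c] = 1
    return mask


def apply_mask(image: List[List[List[float]]], mask: List[List[int]]) -> List[List[List[float]]]:
    """Multiply every channel by the mask, zeroing pixels outside the cultivated fields."""
    return [
        [[value * m for value, m in zip(row, mask_row)] for row, mask_row in zip(channel, mask)]
        for channel in image
    ]
```

For example, on a 4 × 4 grid over the box (10.0, 59.0, 11.0, 60.0), a field covering the north-west quarter of the box switches on exactly the four top-left pixels.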

Figure 4. An example of property masking applied to four 100 × 100 satellite images of a farm spread throughout the growing season.

- Extracting field boundaries from remote sensing images: The preliminary crop classification experiment used raw satellite images. During experimentation, it became evident that each satellite image contains information on multiple fields within a farmer's land, often with different crop types, so assigning a single crop type label to every image proved impractical. To address this, new field-based images of 16 × 16 pixels were generated, each covering a single field. To create these images, the latitude and longitude of each farm and field were translated to values between 0 and 100, giving the field's central position on the raw satellite image. Where the field extended beyond the satellite image boundaries, an offset was applied. Once the field was correctly positioned, the satellite image was cropped to a 16 × 16 window, as illustrated in Figure 5.
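A minimal sketch of the cropping step, assuming the field centre has already been converted to pixel coordinates in the 0 to 100 range. Here the "offset" is realised by clamping the window so that it stays inside the image, which is one plausible reading rather than the authors' confirmed rule; `crop_field` and `crop_series` are hypothetical names.

```python
from typing import List, Sequence


def crop_field(image: Sequence[Sequence[float]], centre_row: float, centre_col: float,
               side: int = 16) -> List[List[float]]:
    """Crop a side x side window centred on a field, offset inwards if it would cross the image edge."""
    height, width = len(image), len(image[0])
    if side > height or side > width:
        raise ValueError("window is larger than the image")
    top = int(round(centre_row)) - side // 2
    left = int(round(centre_col)) - side // 2
    # Offset: clamp the window so it lies entirely inside the image.
    top = max(0, min(top, height - side))
    left = max(0, min(left, width - side))
    return [list(row[left:left + side]) for row in image[top:top + side]]


def crop_series(images: Sequence[Sequence[Sequence[float]]], centre_row: float, centre_col: float,
                side: int = 16) -> List[List[List[float]]]:
    """Apply the same crop to every acquisition date of a farm's image series."""
    return [crop_field(img, centre_row, centre_col, side) for img in images]
```

Using the same centre for every date keeps each field aligned across the growing season, which is what the time-distributed classifier needs.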

Figure 5. An example of four 16 × 16 fields throughout the growing season, created by the original satellite images in (a) and the satellite images with field masks applied in (b). Reprinted/adapted with permission from the authors Mikkel Andreas Kvande and Sigurd Løite Jacobsen, Hybrid Neural Networks with Attention-Based Multiple Instance Learning for Improved Grain Identification and Grain Yield Predictions. Masters Thesis; published by University of Agder, Grimstad, Norway, 2022 [17].

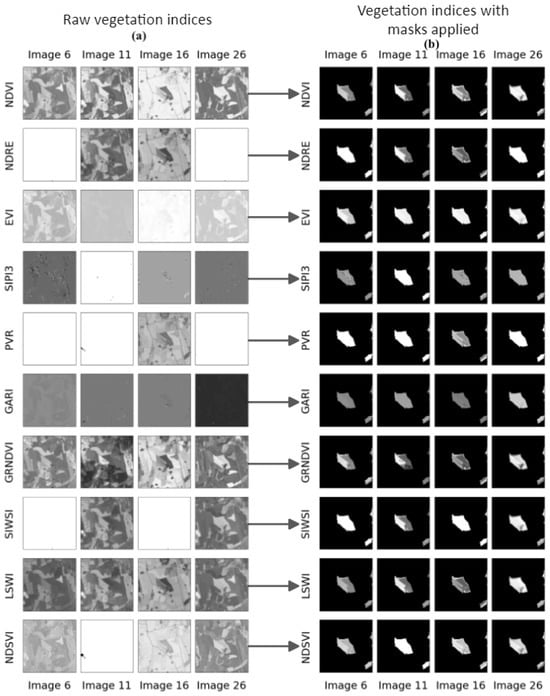

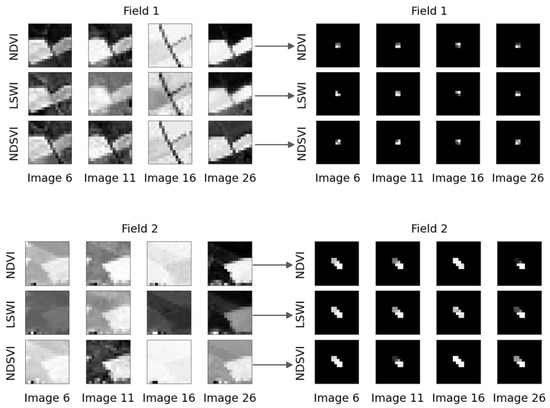

- Vegetation indices derived from satellite images: The literature reviewed in Section 2.1.2 shows that various vegetation indices are effective for classifying and mapping crop types, as demonstrated by Hu et al. [15], Ma et al. [67], Wang et al. [47], and Barzin et al. [48]. These studies highlighted the utility of vegetation indices derived from raw Sentinel images for agricultural applications, and the indices used for Norwegian agriculture were selected based on their findings. The appropriate Sentinel-2A bands for each vegetation index were determined using the wavelength information provided earlier in this manuscript, together with the studies by Kobayashi et al. [42] and Henrich et al. [26]. Formulae were then derived from the theoretical foundations presented earlier, using the band numbers of the Sentinel-2A bands listed in Table 1, and applied to every available raw satellite image in line with established methodologies in the literature. In practice, each vegetation index was computed by extracting the relevant bands from the acquired Sentinel-2A images and applying its formula.
Ten vegetation indices were calculated for each of the 30 satellite images captured throughout the growing season for each farm. These indices were then stacked into a single array spanning the 30 acquisition dates, each with ten 100 × 100 index channels. Figure 6a shows examples of the ten vegetation indices, and Figure 6b shows the same indices with the corresponding property masks applied. The images were normalised to the range [0, 1] to make the differences within them easier to see, and are shown in greyscale because each consists of a single channel. Blurry or blank areas in some images may be caused by cloud cover, noise, limitations inherent to the vegetation index, or visualisation constraints. A field-based dataset was then constructed from the vegetation index images to enable classification on the indices, following the same process used for the raw satellite images earlier in this section. The left side of Figure 7 shows two fields depicted with the NDVI, LSWI, and NDSVI indices, and the right side shows the same fields with field masks applied.
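To illustrate how three of the indices shown in Figure 7 could be computed, the sketch below applies the standard normalised-difference formulae per pixel: NDVI = (NIR - Red) / (NIR + Red), LSWI = (NIR - SWIR) / (NIR + SWIR), and NDSVI = (SWIR - Red) / (SWIR + Red). It assumes the common Sentinel-2 band assignment (B04 red, B08 near-infrared, B11 short-wave infrared), which may differ in detail from the bands selected in Table 1; the dictionary keys and function names are hypothetical. The min-max scaling to [0, 1] mirrors the normalisation used for visualisation.

```python
from typing import Dict, List, Sequence

Grid = List[List[float]]


def normalised_difference(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Grid:
    """Per-pixel (a - b) / (a + b); pixels with a zero denominator are set to 0."""
    return [
        [(x - y) / (x + y) if (x + y) != 0 else 0.0 for x, y in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def vegetation_indices(bands: Dict[str, Grid]) -> Dict[str, Grid]:
    """Compute three indices from reflectance bands (assumed B04 = red, B08 = NIR, B11 = SWIR)."""
    red, nir, swir = bands["B04"], bands["B08"], bands["B11"]
    return {
        "NDVI": normalised_difference(nir, red),
        "LSWI": normalised_difference(nir, swir),
        "NDSVI": normalised_difference(swir, red),
    }


def minmax_normalise(grid: Grid) -> Grid:
    """Rescale a single-channel image to [0, 1] for greyscale display."""
    values = [v for row in grid for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [[0.0 for _ in row] for row in grid]
    return [[(v - lo) / (hi - lo) for v in row] for row in grid]
```

Note that B11 is natively 20 m resolution while B04 and B08 are 10 m, so in practice the bands must be resampled to a common grid before the per-pixel arithmetic.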

Figure 6. Ten 100 × 100 vegetation indices derived from the satellite images in (a), spread throughout the growing season. The vegetation indices with property masks applied can be seen in (b).


Figure 7. Left side: Two sets of 16 × 16 field images throughout the growing season, for the vegetation indices NDVI, LSWI, and NDSVI, created by the vegetation images in Figure 6. Right side: The field-based vegetation indices from the left side with field masks applied. Reprinted/adapted with permission from the authors Mikkel Andreas Kvande and Sigurd Løite Jacobsen, Hybrid Neural Networks with Attention-Based Multiple Instance Learning for Improved Grain Identification and Grain Yield Predictions. Masters Thesis; published by University of Agder, Grimstad, Norway, 2022 [17].

2.2.2. Geographical Data

The authors employed geographical data, comprising disposed properties and coordinates, to delineate field shapes and boundaries. These data span the years 2017 to 2019 and exclude features such as trees, rivers, and mountains. The disposed properties represent the intersection of the Norwegian cadastral data. Although each farm is registered under a specific organisation number, the experiments revealed that the locations recorded for farms at the Norwegian Brønnøysund Register Centre (Brønnøysundregistrene) (https://data.brreg.no/fullmakt/docs/index.html (accessed on 5 May 2024)) could differ from the actual farm locations. Such inaccurate registered locations can be misleading, associating a farm with a different property within a city, sometimes far from the actual farm. To address this issue, new longitude and latitude values were computed from the polygons extracted from the disposed properties data. The updated coordinates narrowed the geographical area and proved more accurate than those used in the baseline study.
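The text does not state how the new coordinates were derived from the polygons. One plausible approach, sketched here purely as an assumption, is to take the area-weighted centroid of all property polygons belonging to a farm using the shoelace formula, treating longitude and latitude as planar over the small extent of a farm. The function names are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (lon, lat)


def polygon_area_centroid(poly: Sequence[Point]) -> Tuple[float, float, float]:
    """Return (area, cx, cy) of a simple polygon via the shoelace formula (planar approximation)."""
    twice_area = cx = cy = 0.0
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        cross = x1 * y2 - x2 * y1
        twice_area += cross
        cx += (x1 + x2) * cross
        cy += (y1 + y2) * cross
    if twice_area == 0:
        raise ValueError("degenerate polygon")
    # The signs cancel in the division, so the centroid is correct for either vertex order.
    return abs(twice_area) / 2, cx / (3 * twice_area), cy / (3 * twice_area)


def farm_location(polygons: Sequence[Sequence[Point]]) -> Point:
    """Area-weighted centroid of all property polygons assigned to one farm."""
    total = sx = sy = 0.0
    for poly in polygons:
        area, cx, cy = polygon_area_centroid(poly)
        total += area
        sx += area * cx
        sy += area * cy
    return sx / total, sy / total
```

Weighting by area keeps a small outlying plot from pulling the farm location far from where most of its arable land lies.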

2.2.3. Temporal Meteorological Data

Temperature and precipitation were considered pertinent for plant growth in Norwegian agriculture, as indicated in [7,8]. Additional meteorological features (https://forages.oregonstate.edu/ssis/plants/plant-tolerances (accessed on 5 May 2024)), including the growth degree of the plant, sunlight duration, and the state of the land surface and soil (e.g., dry surface, moist ground, or frozen ground with measurable ice), were also extracted in this study.
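The growth degree feature is not defined further here. If it corresponds to growing degree days, a common definition accumulates, for each day, the amount by which the mean of the daily maximum and minimum temperatures exceeds a base temperature. The sketch below assumes that simple averaging method and a hypothetical base temperature of 5 °C; both are illustrative choices, not values taken from this study.

```python
from typing import Sequence


def growing_degree_days(t_max: Sequence[float], t_min: Sequence[float], t_base: float = 5.0) -> float:
    """Sum of max(0, (Tmax + Tmin) / 2 - Tbase) over the days provided (simple averaging method)."""
    if len(t_max) != len(t_min):
        raise ValueError("t_max and t_min must cover the same days")
    total = 0.0
    for hi, lo in zip(t_max, t_min):
        total += max(0.0, (hi + lo) / 2 - t_base)
    return total
```

For example, three days with maxima of 15, 10, and 4 °C and minima of 5, 2, and 0 °C have daily means of 10, 6, and 2 °C, contributing 5, 1, and 0 degree days above a 5 °C base.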

2.2.4. Norwegian Grain Production Data

The authors utilised official public archives from the Norwegian Agriculture Agency containing farmer grant applications, production subsidies, and grain deliveries (https://data.norge.no/ (accessed on 5 May 2024)). Norwegian grain farmers, who rely on subsidies, submit annual grant applications detailing the crops they cultivate. Three primary reports served as the foundational data: grain delivery reports, which specify the quantities of barley, oats, wheat, rye, and rye wheat sold; agricultural production subsidy reports, which contain 174 features, including land area, crop types, and subsidy calculations; and a detailed report linking farmers' organisation numbers to cadastral units. From these sources, the authors used grain production data from the 2012 to 2017 seasons, capturing historical yields and key subsidy-related features for this study.
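To illustrate how yields in kg per 1000 m² (one decare) can be derived from these reports, the sketch below sums each farm's grain deliveries per season and divides by the cultivated area reported in its grant application, joining the two on organisation number and year. The record fields (`org_nr`, `year`, `kg`, `area_daa`) are hypothetical stand-ins for the actual report columns.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Tuple

Key = Tuple[str, int]  # (organisation number, season)


def yield_per_decare(deliveries: Iterable[Mapping], applications: Iterable[Mapping]) -> Dict[Key, float]:
    """Yield in kg per 1000 m^2: total delivered kg divided by the area (decares) in the application."""
    delivered: Dict[Key, float] = defaultdict(float)
    for d in deliveries:
        delivered[(d["org_nr"], d["year"])] += d["kg"]
    yields: Dict[Key, float] = {}
    for a in applications:
        key = (a["org_nr"], a["year"])
        # Skip farms with no recorded deliveries or no cultivated area that season.
        if key in delivered and a["area_daa"] > 0:
            yields[key] = delivered[key] / a["area_daa"]
    return yields
```

A farm delivering 40,000 kg from 80 decares in one season would thus have a yield of 500 kg per 1000 m².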

3. Results

In this section, the authors evaluate and validate the baseline methodologies proposed in [7]. This assessment involves augmenting the agricultural data corpus to improve the overall accuracy of grain yield prediction. The authors extract vegetation indices from satellite imagery to facilitate grain classification and evaluate the suitability of the attention-based multiple-instance learning (MAD-MIL) approach for semi-labelled agricultural data. Furthermore, the authors apply these models to optimise early in-season yield predictions.

3.1. Baseline Approaches: Improving Grain Yield Predictions with DNN

In the preliminary phase of this experimentation, the authors extend the training dataset with supplementary features and additional data samples beyond those used in the baseline approach. Each methodology is tested individually, as described below.

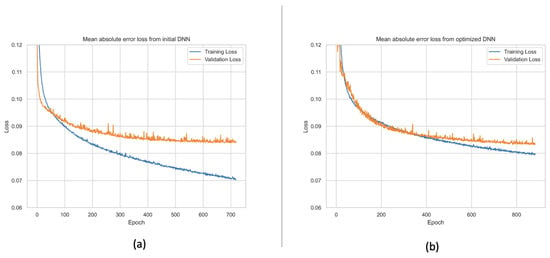

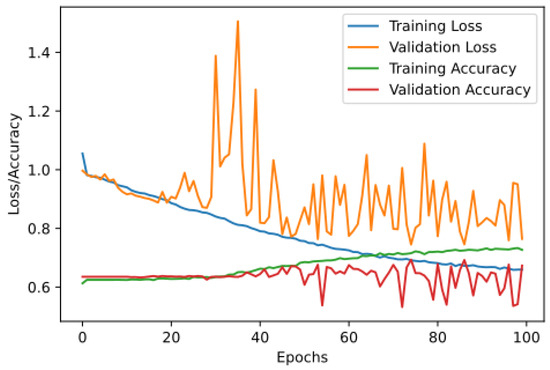

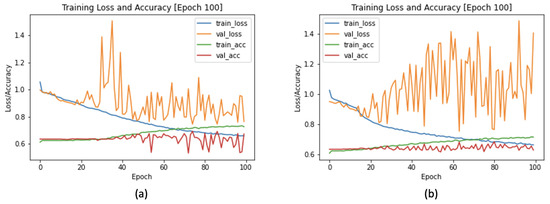

- Weather features to dense neural network: The authors enhance the data by adding three new features (sunlight duration, state of the ground, and growth degree) to the training dataset. These features are linked to the farms via the farmers' organisation numbers, expanding the feature count from 900 to approximately 1500. The authors then re-implement the dense neural network (DNN) and observe initial signs of overfitting. Consequently, the model is adjusted by reducing the size of the first dense layer to 256 units and slightly increasing the two dropout rates to 0.25 and 0.5, respectively. As illustrated in Figure 8b, the gap between training loss and validation loss is noticeably smaller than in Figure 8a, indicating that overfitting has been mitigated. The mean absolute errors (MAEs) of the baseline and the newly optimised models are 83.85 kg per 1000 m² and 83.28 kg per 1000 m², respectively; see Table 2 and Figure 8. The authors therefore adopt this optimised DNN model for subsequent experiments.
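For reference, the two quantities tuned and reported in this experiment follow standard definitions, sketched below in plain Python: inverted dropout, which during training zeroes each activation with probability p (here 0.25 or 0.5) and rescales the survivors by 1 / (1 - p) so the expected activation is unchanged, and the mean absolute error used to compare models. This illustrates the definitions only and is not the authors' training code; the function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def inverted_dropout(activations: Sequence[float], rate: float, training: bool = True,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Zero each activation with probability `rate` and scale survivors by 1 / (1 - rate) while training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # identity at inference time
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """MAE in the units of the targets, e.g. kg per 1000 m^2."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

Because MAE is in the same units as the yield targets, the reported figures can be read directly as the average error per farm in kg per 1000 m².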

Figure 8. Comparative analysis of baseline DNN model in (a) and newly proposed optimised DNN model in (b).

Table 2. Summary of the baseline models to improve grain yield predictions.

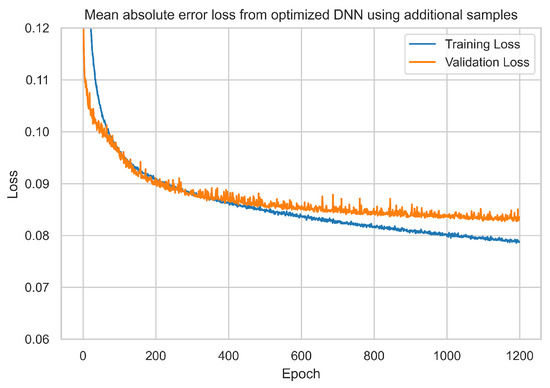

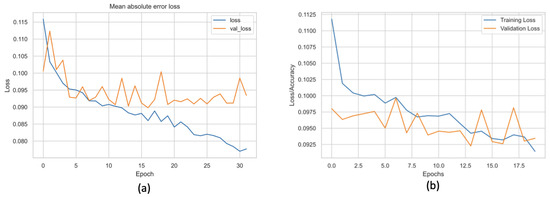

- Extended data corpus to dense neural network: The authors hypothesised that augmenting the training dataset with additional data samples could improve accuracy. To test this, they re-implemented the scripts that retrieve data from the Frost API (https://frost.met.no/ (accessed on 5 May 2024)) to expand the weather data by 33% for 2020. The augmented data were then pre-processed using interpolation and normalisation. The optimised DNN model was trained for 1200 epochs, yielding lower loss values for both the training and validation sets. The MAE for this experiment was 82.82 kg per 1000 m² (see Table 2 and Figure 9), close to that of the optimised DNN model described above.
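Interpolation and normalisation are named as the pre-processing steps, but their exact form is not given. A minimal sketch, assuming evenly spaced daily observations with missing values filled by linear interpolation (and the nearest observed value carried to the series ends), followed by min-max scaling to [0, 1], could look like this; the function names are hypothetical.

```python
from typing import List, Optional, Sequence


def interpolate_gaps(series: Sequence[Optional[float]]) -> List[float]:
    """Fill None values linearly between the nearest observations; copy the nearest value at the ends."""
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled: List[float] = []
    for i, v in enumerate(series):
        if v is not None:
            filled.append(float(v))
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled.append(float(series[right]))
        elif right is None:
            filled.append(float(series[left]))
        else:
            y0, y1 = series[left], series[right]
            filled.append(y0 + (y1 - y0) * (i - left) / (right - left))
    return filled


def minmax_scale(values: Sequence[float]) -> List[float]:
    """Scale values to [0, 1]; a constant series maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]
```

In a real pipeline the scaling bounds would normally be computed on the training split only and reused for validation data, to avoid leaking information across the split.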

Figure 9. Yield prediction loss achieved from the optimised model when adding more data to the corpus.

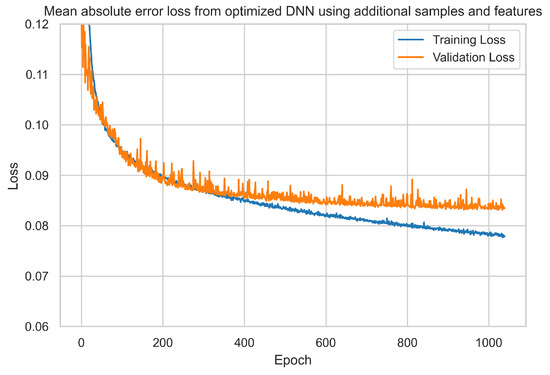

- Integration of features and data corpus to dense neural network: Building on the dataset extended with the additional weather features and data samples described above, a further 600 features and approximately 15,000 samples were incorporated. The optimised DNN model, adapted to the expanded input shape, was trained for 1200 epochs. The MAE decreased marginally, by approximately 0.7 kg per 1000 m² compared with the optimised DNN model, to 82.60 kg per 1000 m²; see Table 2 and Figure 10. Training time increased slightly, by approximately 5 to 6 min, owing to the larger data corpus.
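Adding features widens each input vector, which is why the model's input shape had to be adapted. The sketch below is an assumption about how such an integration might be done rather than the authors' pipeline: it appends extra feature columns to each farm's feature row, keyed by organisation number, and zero-fills farms with no match. The names and the zero-fill policy are hypothetical.

```python
from typing import Dict, List, Mapping, Sequence


def join_features(base_rows: Mapping[str, Sequence[float]],
                  extra_rows: Mapping[str, Sequence[float]],
                  n_extra: int) -> Dict[str, List[float]]:
    """Concatenate extra feature columns onto each farm's base row, keyed by organisation number."""
    joined: Dict[str, List[float]] = {}
    for org_nr, row in base_rows.items():
        extra = extra_rows.get(org_nr)
        if extra is None:
            extra = [0.0] * n_extra  # hypothetical policy: zero-fill farms lacking the new features
        elif len(extra) != n_extra:
            raise ValueError(f"farm {org_nr}: expected {n_extra} extra features, got {len(extra)}")
        joined[org_nr] = list(row) + list(extra)
    return joined
```

The width of the joined rows is the new input size of the first dense layer; every row must have the same width for the network to accept the batch.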

Figure 10. Yield prediction loss achieved from the optimised model when integrating the features and data corpus to the dense neural network.


3.2. State-of-the-Art Approach: Improving Grain Yield Predictions with Hybrid CNN Model