A Method for Analyzing the Phenotypes of Nonheading Chinese Cabbage Leaves Based on Deep Learning and OpenCV Phenotype Extraction

Abstract

1. Introduction

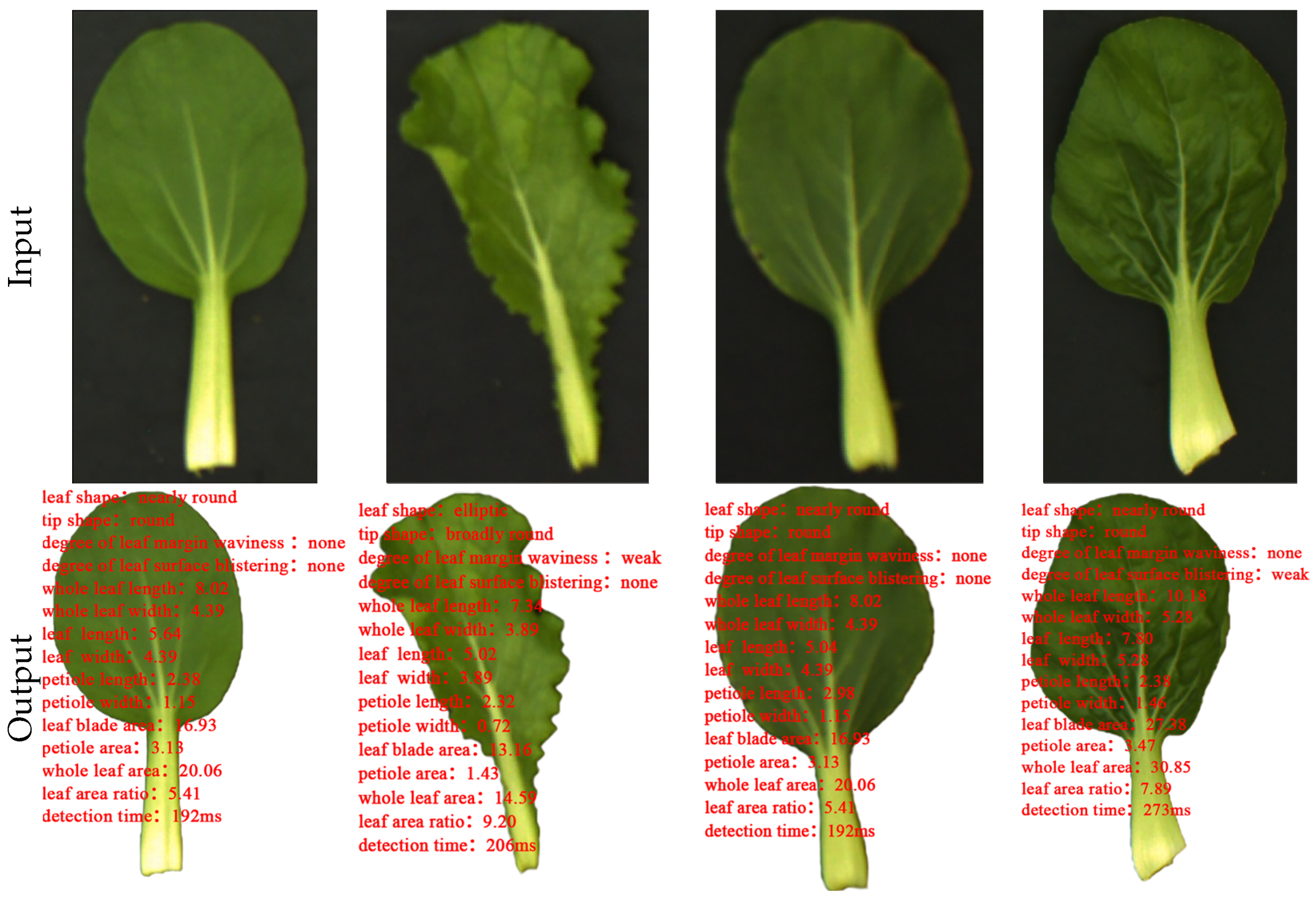

2. Materials and Methods

2.1. Construction of Test Materials and Datasets

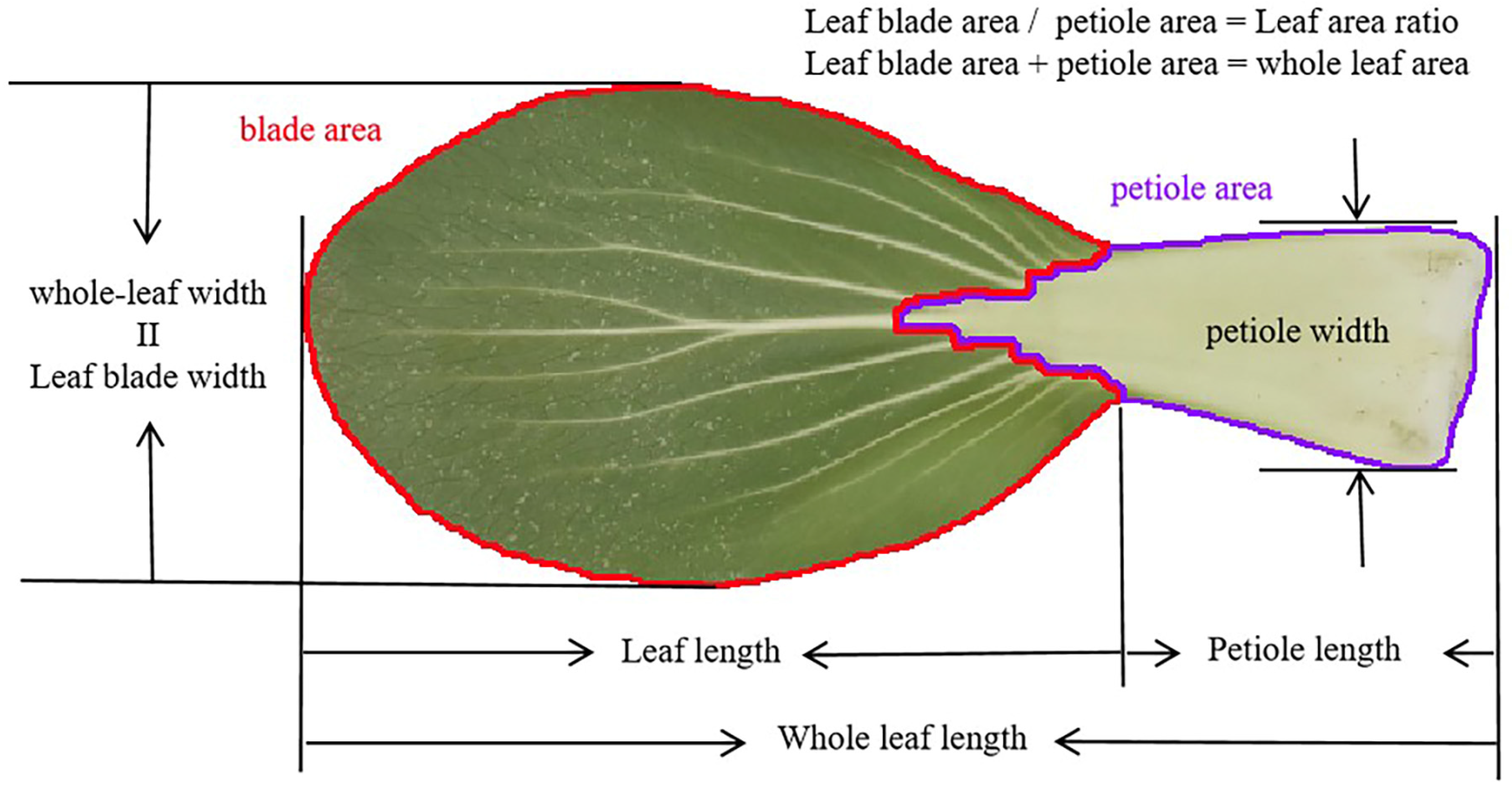

2.2. Phenotypic Traits of Nonheading Chinese Cabbage Leaves

2.3. Extraction Algorithm for Leaf Phenotypic Traits

2.3.1. Selection of the Deep Learning Model

2.3.2. OpenCV Image Processing Technology

- (1) The image is converted from the RGB color space to the HSV color space, which simplifies color identification by separating the hue, saturation, and brightness (value) components. This aids in selecting specific color ranges during subsequent background replacement. The value component is computed as follows: $V = \max(R, G, B)$

- (2) Analysis of the pixel values of the whole leaf and the background showed a significant difference in the hue component between the two, while the differences in the saturation and brightness components were smaller. Therefore, an ROI mask is created by setting maximum and minimum values for the three channels, and the background is entirely blackened to eliminate its impact on leaf edge detection. After threshold adjustment tests, the entire leaf can be extracted at a mask threshold of , the leaf blade can be segmented at , and the petiole can be segmented at . Applying the three mask thresholds to the HSV images yields images that preserve the whole leaf, the leaf blade, and the petiole, respectively, while blackening the background, as shown in Figure 5.

- (3) The three background-blackened images are then converted into the Lab color space to enhance them, providing better brightness and color invariance for subsequent processing.

- (4) Median filtering is used to remove salt-and-pepper noise from the three images.

- (5) The three images are converted into binary images. Each image is thresholded, setting pixel values below the threshold to 0 (black) and those above the threshold to 255 (white). After repeated testing, a threshold of 10 was selected, resulting in images with a black background and a white subject.

- (6) Edge detection algorithms are used to extract the coordinates of the edge contour pixels of the whole leaf, the leaf blade, and the petiole from the three images, and these coordinates are saved as arrays. From each array, the coordinates of the top-left and bottom-right corners of the contour are obtained, the length, width, and height of each part are calculated, and the area of each part is obtained from the number of pixels within the contour.

3. Experiments and Result Analysis

3.1. Classification Model Training

3.1.1. Parameter Setting

3.1.2. Evaluation Indicators

3.1.3. Model Training

3.2. Quantitative Traits

3.3. Leaf Phenotype Extraction Analysis Based on Deep Learning and OpenCV Fusion

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | Accuracy/% | Detection Speed/ms | Model Size/M |
|---|---|---|---|
| EfficientNet | 94.2 | 56.1 | 21.4 |
| ResNet | 83.4 | 162.6 | 46.3 |
| SWIN Transformer | 92.1 | 124.6 | 87.6 |
| YOLOv8 | 90.7 | 12.9 | 2.7 |
| Vision Transformer | 92.3 | 73.6 | 91.3 |

| Model | Accuracy/% | Precision/% | Recall/% | F1 Score |
|---|---|---|---|---|
| Leaf shape model | 95.56 | 96.20 | 96.18 | 0.9619 |
| Tip shape model | 92.50 | 92.56 | 92.50 | 0.9253 |
| Leaf margin waviness model | 96.88 | 98.81 | 98.75 | 0.9878 |
| Leaf surface blistering model | 96.05 | 96.78 | 97.82 | 0.9730 |
| Average | 95.25 | 96.09 | 96.31 | 0.9620 |
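The F1 scores in the table above are consistent with the usual definition of F1 as the harmonic mean of precision and recall. The short sketch below recomputes them from the tabulated precision and recall values; the function name `f1_score` is a hypothetical helper, and the assumption is that the standard definition applies.

```python
def f1_score(precision_pct, recall_pct):
    """Harmonic mean of precision and recall (given in percent), returned in [0, 1]."""
    p, r = precision_pct / 100.0, recall_pct / 100.0
    if p + r == 0:
        return 0.0
    return 2 * p * r / (p + r)


# Precision/% and Recall/% copied from the table above.
rows = {
    "Leaf shape model": (96.20, 96.18),
    "Tip shape model": (92.56, 92.50),
    "Leaf margin waviness model": (98.81, 98.75),
    "Leaf surface blistering model": (96.78, 97.82),
}

for name, (precision, recall) in rows.items():
    print(f"{name}: F1 = {f1_score(precision, recall):.4f}")
```

Under that definition, the four per-model values come out as 0.9619, 0.9253, 0.9878, and 0.9730, matching the F1 Score column.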

| Trait | Manual Measurement Average Value | OpenCV Calculation Average Value | MAE | RMSE |
|---|---|---|---|---|
| Leaf length | 11.03 | 11.07 | 0.01 | 0.19 |
| Leaf width | 5.65 | 5.64 | 0.01 | 0.1762 |
| Area | 35.13 | 35.11 | 0.02 | 0.2161 |
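For reference, the two error measures in the table above are conventionally computed from paired manual and OpenCV measurements as sketched below. The helper names `mae` and `rmse` and the sample values are hypothetical and serve only to illustrate the calculation; they are not data from the study.

```python
import math


def mae(manual, computed):
    """Mean absolute error between paired measurements."""
    return sum(abs(m - c) for m, c in zip(manual, computed)) / len(manual)


def rmse(manual, computed):
    """Root mean square error between paired measurements."""
    return math.sqrt(sum((m - c) ** 2 for m, c in zip(manual, computed)) / len(manual))


# Hypothetical paired leaf-length measurements (illustrative values only).
manual_lengths = [10.0, 12.0, 11.0]
opencv_lengths = [10.5, 11.5, 11.0]

print(f"MAE  = {mae(manual_lengths, opencv_lengths):.4f}")
print(f"RMSE = {rmse(manual_lengths, opencv_lengths):.4f}")
```

Because RMSE squares each error before averaging, it weights large individual deviations more heavily than MAE, so the RMSE is never smaller than the MAE for the same data.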


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, H.; Fu, L.; Li, J.; Lin, X.; Chen, L.; Zhong, F.; Hou, M. A Method for Analyzing the Phenotypes of Nonheading Chinese Cabbage Leaves Based on Deep Learning and OpenCV Phenotype Extraction. Agronomy 2024, 14, 699. https://doi.org/10.3390/agronomy14040699
