1. Introduction

Tomato farming is one of the most profitable agribusinesses. After potatoes, tomato is the second most important crop in the world. Tomatoes are rich in vitamins A and C as well as antioxidants. Various diseases and pests can affect tomatoes during their growing stage, and if they are not controlled promptly, lower yields or even total crop loss can follow. The most important step is therefore to identify disease types and insect pests precisely, so that appropriate control measures can be applied effectively and vegetable farmers can increase tomato yields [1].

Conventional methods of tomato disease identification rely on visual inspection by skilled agronomists, which can be time-consuming, labor-intensive, and subject to human error. As a result, there is a growing interest in leveraging the advancement in computer technologies [

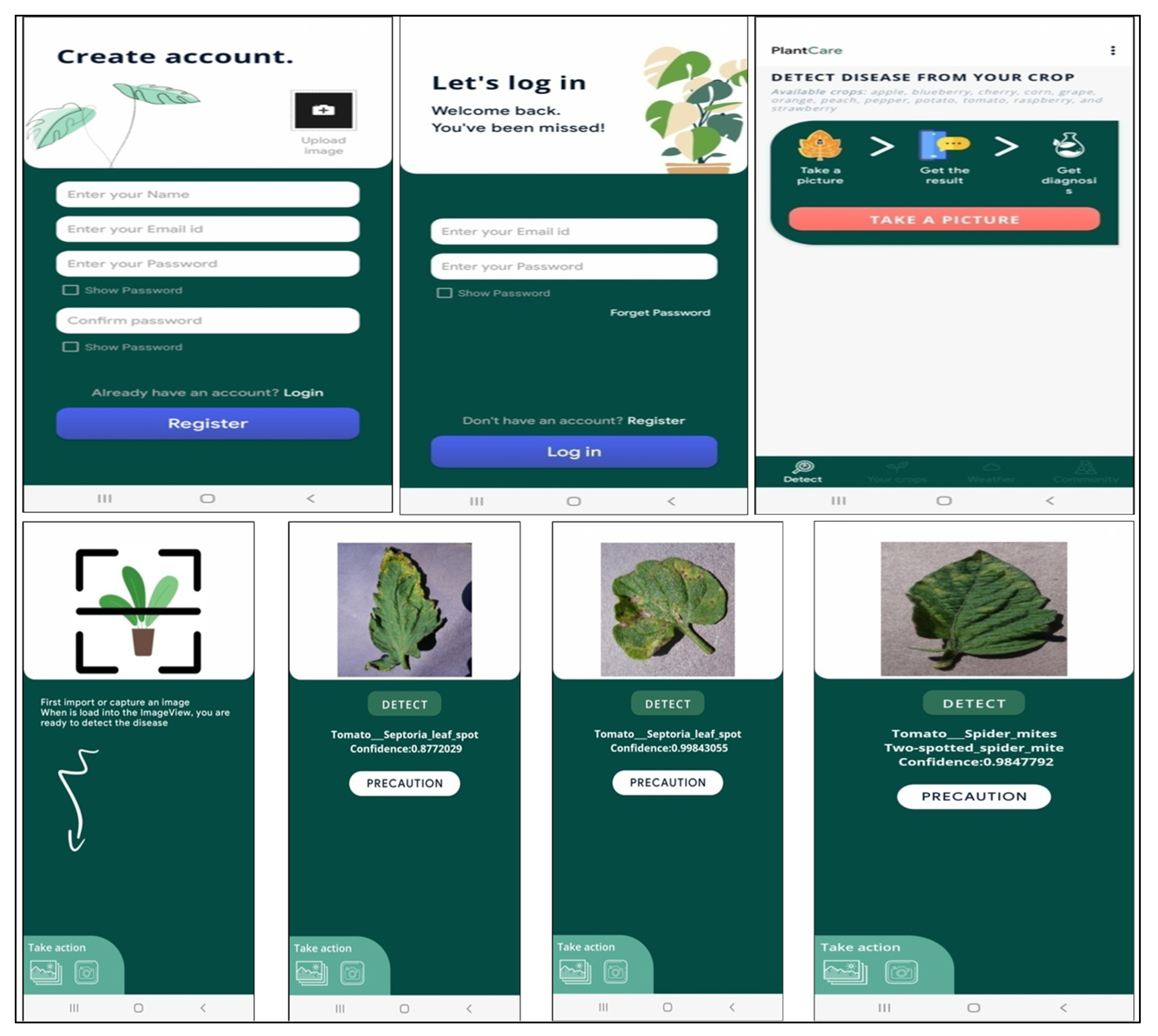

2] to automate and enhance the process of disease identification in plants. The goal of this work is to innovate technologies for preventing diseases in plant leaves and to increase farmers’ knowledge of these technologies. Since tomato is a commonly available vegetable, this work focused on machine learning and smartphone-based technology for developing an effective solution for tomato plant leaf disease detection. As opposed to manual plant leaf disease recognition, computer vision-based technology has the potential for directly detecting plant leaf diseases using raw input from camera images captured using a smartphone.

Convolutional neural networks (CNNs) have emerged as the predominant architecture for visual recognition in recent years, with notable success. The convolution operation, which imparts crucial inductive biases such as translation equivariance, is widely acknowledged as a key factor in this success in object identification. Barman et al. [3] and Agarwal et al. [4] reported successful identification of plant diseases in citrus and tomato leaves, respectively, using MobileNet and CNN models. Ahmed et al. [5] likewise reported 99.30% accuracy with MobileNet. While CNNs and pretrained models have been used extensively for tomato plant disease detection [5,6,7], Zhao et al. [7] proposed a tomato disease recognition model that does not rely on convolutions. In a similar vein, Karthik et al. [8] introduced an attention-based mechanism for detecting diseases in tomato leaves. These advances underscore the diversity of neural network approaches to the challenges of plant disease recognition.
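To make the inductive bias mentioned above concrete, the following minimal sketch checks translation equivariance: shifting the input of a convolution shifts its output by the same amount. The toy 1-D signal, the edge-detecting kernel, and the use of NumPy are assumptions for illustration only. Like CNN layers in common frameworks, the helper computes a "valid" cross-correlation.

```python
import numpy as np

def conv1d_valid(signal: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 1-D cross-correlation, the operation CNN layers actually compute."""
    k = len(kernel)
    return np.array([np.dot(signal[i:i + k], kernel) for i in range(len(signal) - k + 1)])

# Toy signal containing one "feature" (a bump) and a small edge-detecting kernel.
x = np.zeros(12)
x[3:5] = 1.0
kernel = np.array([1.0, -1.0])

shift = 4
x_shifted = np.roll(x, shift)  # move the feature 4 positions to the right

y = conv1d_valid(x, kernel)
y_shifted = conv1d_valid(x_shifted, kernel)

# Translation equivariance: convolving the shifted input equals shifting the
# convolved output (compared away from the left border, where np.roll wraps around).
print(np.allclose(np.roll(y, shift)[shift:], y_shifted[shift:]))
```

The same detector response simply moves with the feature, which is why a CNN can recognize a lesion wherever it appears on the leaf without learning separate weights for each position.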

Recent developments in deep learning have shown remarkable success in a variety of computer vision applications, including object detection and image classification. One such groundbreaking development is the Vision Transformer (ViT) architecture [9], which has shown exceptional performance on large-scale image classification tasks [10,11,12]. Unlike CNNs, which have been widely used in computer vision, ViT relies on self-attention rather than convolution, which enables it to capture global relationships between image patches and learn effective representations [13].
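A minimal single-head sketch of the scaled dot-product self-attention that ViT applies to patch embeddings is shown below. It is not the implementation used in this study: the sizes, the random projection matrices, and the use of NumPy instead of a deep learning framework are assumptions for illustration. Each row of the attention matrix relates one patch to every other patch, which is how global relationships are captured.

```python
import numpy as np

def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (num_patches, d_model) patch embeddings; w_*: (d_model, d_head) projections.
    Returns the attended outputs and the (num_patches, num_patches) weight matrix.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # every patch scored against every other patch
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
num_patches, d_model, d_head = 16, 32, 8  # illustrative sizes, not the study's configuration
x = rng.normal(size=(num_patches, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))

out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)
```

A full ViT runs several such heads in parallel and interleaves them with MLP blocks, layer normalization, and residual connections; the sketch isolates only the attention step.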

Inception, the baseline compared with ViT in this study, has also been applied to tomato disease detection: Sakkarvarthi et al. (2022) [14] reported a validation accuracy of 80%, which is lower than the accuracy obtained for Inception V3 in this study. They also reported very low accuracy with VGG and ResNet, although Zhang et al. [15] found ResNet to be effective for tomato leaf disease detection. Liu and Wang [16] reported an accuracy of 92% using an improved YOLOv3, and Ouhami et al. [17] reported accuracies of up to 95.65% for DenseNet161, 94.93% for DenseNet121, and 90.58% for VGG16.

In the context of tomato leaf disease detection through deep learning, despite the various techniques explored by researchers [18,19], several questions persist:

- (i) The challenge of assigning equal weights to all deep features in deep learning models prompts the question of whether an attention mechanism is essential [20].
- (ii) Researchers question whether integrating attention mechanisms into deep learning or transfer learning-based models could address the risk of overlooking significant features during classification.

The Vision Transformer (ViT) model holds significance in addressing these open questions in tomato leaf disease detection using deep learning:

- (i) ViT incorporates attention through its self-attention layers. Unlike traditional models, ViT dynamically assigns weights to different regions (patches) of the input image during processing. This addresses the challenge of equal weight distribution among deep features, potentially ensuring that significant features receive adequate attention during the classification of tomato leaf diseases.
- (ii) ViT leverages self-attention to focus on relevant features without manual feature extraction. Its architecture inherently supports efficient automated feature extraction, potentially mitigating concerns about excluding vital information in the detection process [21].
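The contrast between equal weighting and attention-based weighting can be illustrated with a toy example. In the hypothetical sketch below, four region features are pooled either by a plain mean (each contributes 1/N) or by softmax attention against a query vector. The feature values and the fixed query, which a real model would learn, are assumptions chosen purely for illustration.

```python
import numpy as np

def mean_pool(features):
    """Equal weighting: every region feature contributes 1/N."""
    return features.mean(axis=0)

def attention_pool(features, query):
    """Attention weighting: softmax over scaled feature-query similarity."""
    scores = features @ query / np.sqrt(features.shape[1])
    weights = np.exp(scores - scores.max())  # numerically stable softmax
    weights /= weights.sum()
    return weights @ features, weights

# Hypothetical regions: index 2 plays the role of a "lesion" region aligned with the query.
features = np.array([
    [0.1, 0.0],
    [0.0, 0.1],
    [3.0, 3.0],
    [0.1, 0.1],
])
query = np.array([1.0, 1.0])  # stands in for a learned query (assumption)

pooled_attn, w = attention_pool(features, query)
print(mean_pool(features))  # lesion signal diluted by the three background regions
print(w.round(3))           # most of the weight is placed on region 2
```

With mean pooling the informative region is averaged with background regions, whereas attention concentrates most of the weight on it; this is the intuition behind question (i).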

In essence, the ViT model, with its attention mechanisms and unique architecture, provides a promising avenue for advancing automated feature extraction and addressing the challenges posed by traditional approaches. It aligns with the evolving needs of deep learning in the context of tomato leaf disease detection, offering potential solutions to the identified questions in the field.

Considering the above points, this study used ViT, which applies a pure self-attention-based architecture to sequences of image patches and achieves performance competitive with CNNs on the challenging ImageNet classification task [5]. The outcomes of this research could substantially improve tomato disease management by providing an efficient, automated, and accurate solution for disease detection. By enabling early detection and intervention, such a solution can help farmers minimize crop losses, optimize resource allocation, and adopt sustainable and smart agricultural practices [22].

3. Results and Discussion

As discussed above, the Inception V3 and ViT models were used for tomato disease detection. First, the Inception V3 model was trained for 30 epochs, yielding an accuracy of 94.80% and a loss of 1.00%. The model was compiled with the Adam optimizer and a sparse categorical cross-entropy loss: the loss is computed at the output layer, and Adam uses its gradients to update the weights of all trainable layers. Initially, Inception V3 reached 65% training accuracy and 70% validation accuracy. As the number of epochs increased, the overall accuracy of the model increased and the losses decreased accordingly. The accuracy of the model from epoch 1 to 30 is shown in Table 5 and Figure 4.
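For reference, the sparse categorical cross-entropy used here is the mean negative log-probability that the softmax output assigns to each sample's true integer class label. The NumPy sketch below computes it for a hypothetical batch of three samples; Keras's SparseCategoricalCrossentropy computes the same quantity, up to its own numerical-stability details.

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean cross-entropy for integer class labels.

    labels: (batch,) integer class indices.
    probs:  (batch, num_classes) softmax outputs of the final layer.
    """
    probs = np.clip(probs, eps, 1.0)                # avoid log(0)
    picked = probs[np.arange(len(labels)), labels]  # probability assigned to the true class
    return float(-np.mean(np.log(picked)))

# Hypothetical batch: three samples, three classes.
labels = np.array([0, 2, 1])
probs = np.array([
    [0.90, 0.05, 0.05],
    [0.10, 0.10, 0.80],
    [0.30, 0.60, 0.10],
])
print(round(sparse_categorical_crossentropy(labels, probs), 4))
```

The "sparse" variant takes integer labels directly, so the class labels do not need to be one-hot encoded.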

As with Inception V3, Keras preprocessing layers were used for data augmentation and were then embedded in the ViT model. A multilayer perceptron (MLP) network with dense and dropout layers was defined. After the MLP, a layer that divides the images into patches was introduced; for this, we used the extract_patches function offered by TensorFlow. Once the image was divided into patches, a patch encoder was implemented that applies a linear projection to each patch and adds a learnable position embedding to the projected vector.
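The patch pipeline described above can be sketched in NumPy as follows. This is an illustrative approximation, not the study's code: the image size (72), patch size (6), projection dimension (64), and random weights are hypothetical, and the reshape-based extract_patches helper is intended to produce the same non-overlapping patch layout as tf.image.extract_patches with equal sizes and strides and "VALID" padding.

```python
import numpy as np

def extract_patches(image, patch_size):
    """Split an (H, W, C) image into non-overlapping, flattened patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C),
    with patches ordered row by row across the image.
    """
    h, w, c = image.shape
    nh, nw = h // patch_size, w // patch_size
    image = image[: nh * patch_size, : nw * patch_size]  # drop incomplete border patches
    patches = image.reshape(nh, patch_size, nw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)            # (nh, nw, p, p, c)
    return patches.reshape(nh * nw, patch_size * patch_size * c)

def encode_patches(patches, w_proj, b_proj, pos_embedding):
    """Linear projection of each patch plus a (learnable) position embedding."""
    return patches @ w_proj + b_proj + pos_embedding

rng = np.random.default_rng(0)
image_size, patch_size, projection_dim = 72, 6, 64  # hypothetical values
image = rng.random((image_size, image_size, 3))

patches = extract_patches(image, patch_size)
num_patches, patch_dim = patches.shape              # 144 patches of 108 values each
w_proj = rng.normal(scale=0.02, size=(patch_dim, projection_dim))
b_proj = np.zeros(projection_dim)
pos_embedding = rng.normal(scale=0.02, size=(num_patches, projection_dim))

encoded = encode_patches(patches, w_proj, b_proj, pos_embedding)
print(encoded.shape)
```

In a trained model, w_proj, b_proj, and pos_embedding are learned parameters (in Keras, typically a Dense layer and an Embedding layer); here they are random placeholders that only demonstrate the shapes involved.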

Next, in the compilation step, the Adam optimizer with a sparse categorical cross-entropy loss was used for optimization and loss calculation. Finally, in the training step, the model ran for 30 epochs and achieved an accuracy of 95% with a loss of 0.15%, as shown in Table 6 and Figure 5.
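The Adam optimizer used in both compilation steps updates each weight using running estimates of the gradient's mean and uncentered variance, with bias correction for their zero initialization. The sketch below applies the standard Adam update rule to a toy one-parameter loss, (x - 3)^2, standing in for the network's loss. The learning rate and step count are illustrative assumptions (Keras's default learning rate, for instance, is 0.001).

```python
import numpy as np

def adam_minimize(grad_fn, x0, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Standard Adam update rule applied to a parameter vector."""
    x = np.asarray(x0, dtype=float)
    m = np.zeros_like(x)  # first-moment (mean) estimate of the gradient
    v = np.zeros_like(x)  # second-moment (uncentered variance) estimate
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero-initialized moments
        v_hat = v / (1 - beta2 ** t)
        x = x - lr * m_hat / (np.sqrt(v_hat) + eps)
    return x

# Toy loss L(x) = (x - 3)^2 with gradient 2(x - 3); a stand-in for the network loss.
x_star = adam_minimize(lambda x: 2.0 * (x - 3.0), x0=[0.0])
print(x_star)
```

Dividing by the square root of the second-moment estimate gives each weight its own effective step size, which is one reason Adam is a common default for training both CNNs and transformers.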

From Table 6, it is observed that ViT produced very good results from epoch 10 onwards, with minimal error in the model. The accuracy and loss of Inception V3 and ViT are compared in Table 7.

Table 7 compares the performance of the Inception V3 and Vision Transformer (ViT) models in terms of accuracy and loss. ViT outperforms Inception V3, achieving a substantially higher accuracy of 97.37% compared with 89.24% for Inception V3, which indicates a superior ability to make correct predictions during the training phase. Loss measures how far the model's predictions are from the true labels, with lower values indicating better performance. ViT achieves a markedly lower loss of 0.07%, compared with 1.36% for Inception V3, which indicates that ViT is optimized more effectively and minimizes errors better during training.

Furthermore, the validation accuracy and validation loss are presented in Table 7. Validation accuracy reflects the model's performance on a separate dataset not used during training; here, ViT maintains a higher accuracy of 95.76%, compared with 88.98% for Inception V3. Similarly, ViT achieves a lower validation loss of 0.15%, compared with 1.22% for Inception V3.

The results in Table 7 highlight the superior training and validation performance of ViT over Inception V3 in terms of both accuracy and loss, showing that ViT achieves higher accuracy with better optimization and is therefore the more robust model for tomato disease detection. Because ViT surpassed Inception V3 in accuracy at a lower loss, we created a confusion matrix and computed a classification report for the ViT model.

Table 8 presents the full classification report for the ViT model, the best-performing classification algorithm in our study. The model achieves high precision, recall, and F1 scores across the classes representing different tomato diseases.

Figure 6 shows the confusion matrix of the ViT model for tomato leaf disease detection in our experiment.
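Per-class precision, recall, and F1 follow directly from a confusion matrix: precision divides each diagonal entry by its column sum (all predictions of that class), and recall divides it by its row sum (all true samples of that class). The NumPy sketch below uses a hypothetical 3-class matrix, not the study's data; in practice, scikit-learn's classification_report produces the same per-class metrics.

```python
import numpy as np

def per_class_metrics(cm):
    """Precision, recall and F1 per class from a confusion matrix.

    cm[i, j] = number of samples whose true class is i and predicted class is j.
    Assumes every class is predicted at least once and has at least one true sample.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)  # column sums: everything predicted as each class
    recall = tp / cm.sum(axis=1)     # row sums: everything truly in each class
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical 3-class confusion matrix (illustrative only).
cm = [[45, 3, 2],
      [4, 40, 6],
      [1, 2, 47]]
p, r, f1 = per_class_metrics(cm)
print(p.round(2), r.round(2), f1.round(2))
```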

For the detection of Tomato Bacterial Spot Disease, the ViT model achieves a precision of 0.95, meaning that 95% of its predictions for this class are correct. The recall of 0.91 means that it correctly identifies 91% of the actual bacterial spot samples. The corresponding F1 score, the harmonic mean of precision and recall, is 0.93, indicating a good balance between the two for this class. The testing accuracy reported for Tomato Bacterial Spot Disease is 90.99%, confirming the model's efficacy in classifying this disease.
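As a quick arithmetic check, the reported F1 score for Tomato Bacterial Spot Disease follows from its precision P = 0.95 and recall R = 0.91:

$$
F_1 = \frac{2PR}{P + R} = \frac{2(0.95)(0.91)}{0.95 + 0.91} = \frac{1.729}{1.86} \approx 0.93
$$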

A similar high-level performance is observed for other tomato diseases, with varying precision, recall, and F1 scores. For instance, Tomato Mosaic Virus detection reaches exceptional precision, recall, and F1 scores of 0.99, 0.98, and 0.98, respectively, showcasing the model’s excellence in identifying this specific disease. Additionally, Tomato Late Blight, Tomato Septoria Leaf Spot, and Tomato Spider Mite demonstrate commendable performance, with balanced precision, recall, and F1 scores.

This detailed classification report (Table 8), together with the confusion matrix (Figure 6), provides insight into the ViT model's ability to distinguish between different tomato diseases and to deliver accurate, reliable predictions across classes. These evaluation metrics underscore the model's potential for practical deployment in real-world tomato disease detection and classification.

In the comparative analysis (Table 9), we reviewed studies by various authors who classified tomato diseases using different algorithms and datasets. Here are some observations:

The Vision Transformer (ViT) model was selected for its proven efficacy in various image recognition tasks and its ability to capture global dependencies in data, particularly in plant disease classification [25]. Inception V3 was chosen for comparison to benchmark ViT against a well-established convolutional neural network (CNN) architecture that serves as a widely used reference model in plant disease classification [26]. The goal was to assess the performance of ViT relative to a recognized baseline, providing insight into the strengths and weaknesses of each model.

ViT has demonstrated strong performance on diverse datasets, making it a suitable candidate for our study. In this study, the ViT model achieved a testing accuracy of 90.99%, marginally lower than some counterparts. This comparison requires consideration of factors such as model complexity and dataset characteristics. The 88.98% validation accuracy of Inception V3 highlights the inherent variability in model performance. Interpreting these results also requires attention to additional factors such as dataset size, data preprocessing techniques, and experimental setup; a holistic evaluation that looks beyond raw accuracy numbers is therefore essential.