HHS-RT-DETR: A Method for the Detection of Citrus Greening Disease

Abstract

1. Introduction

- Model innovation: This paper proposes HHS-RT-DETR, an improved RT-DETR model. It incorporates an adaptive mechanism that handles feature maps of different sizes, enabling more precise and efficient detection of objects at different scales and making the model more robust and accurate in complex scenes.

- Resource optimization: Compared with the RT-DETR-r18 baseline, HHS-RT-DETR achieves higher detection performance while reducing the number of parameters from 20.08 × 10⁶ to 18.71 × 10⁶ and raising detection speed from 68 to 72 FPS. These savings make the model better suited to resource-constrained platforms such as mobile devices and embedded systems, and the findings offer a technical reference for real-time object detection in edge computing environments.

2. Experimental Materials

2.1. Data Collection

2.2. Dataset Production and Operation Environment

3. Research Methodology

3.1. HHS-RT-DETR Model Design

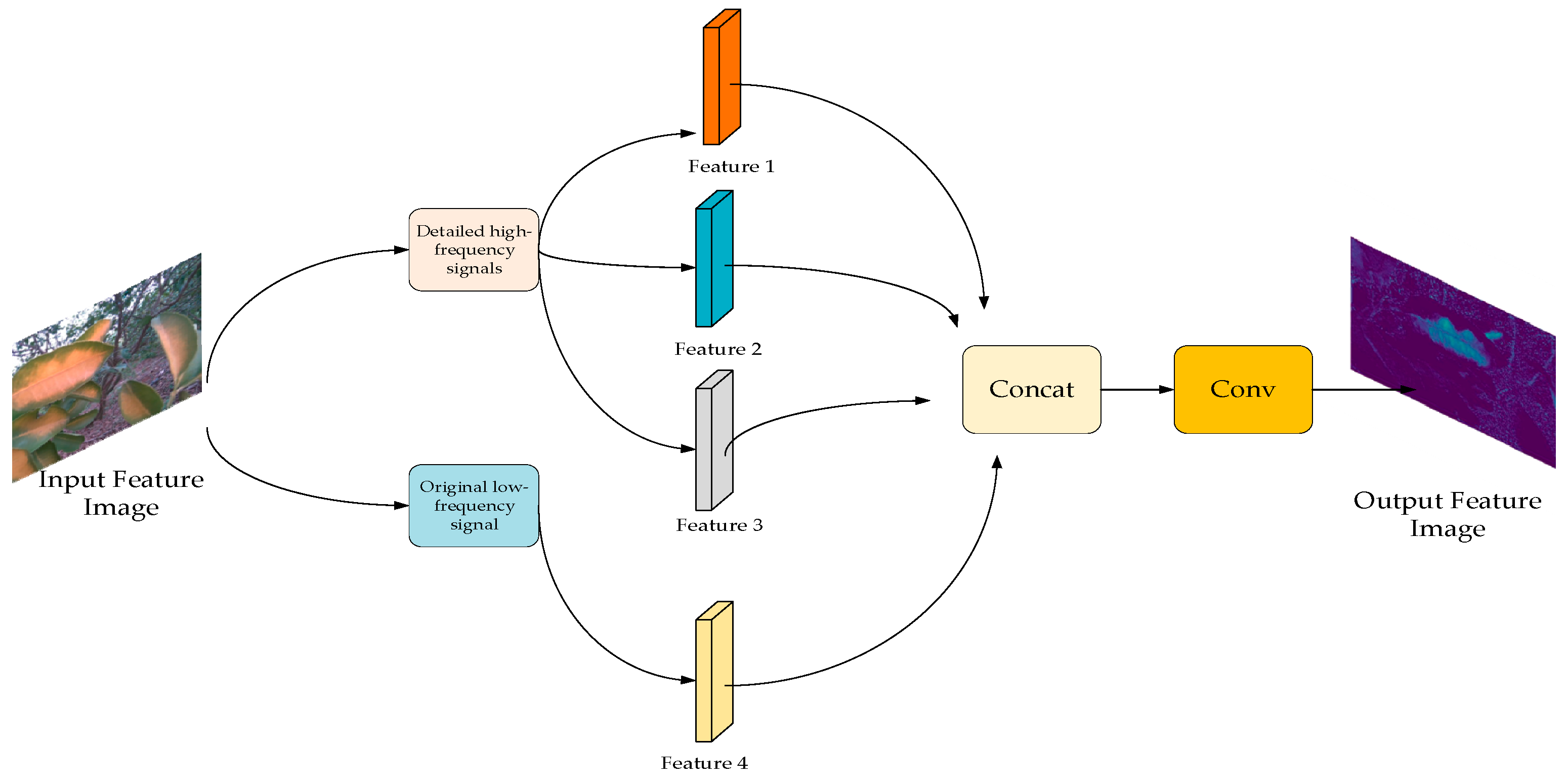

3.2. HS-FPN Network

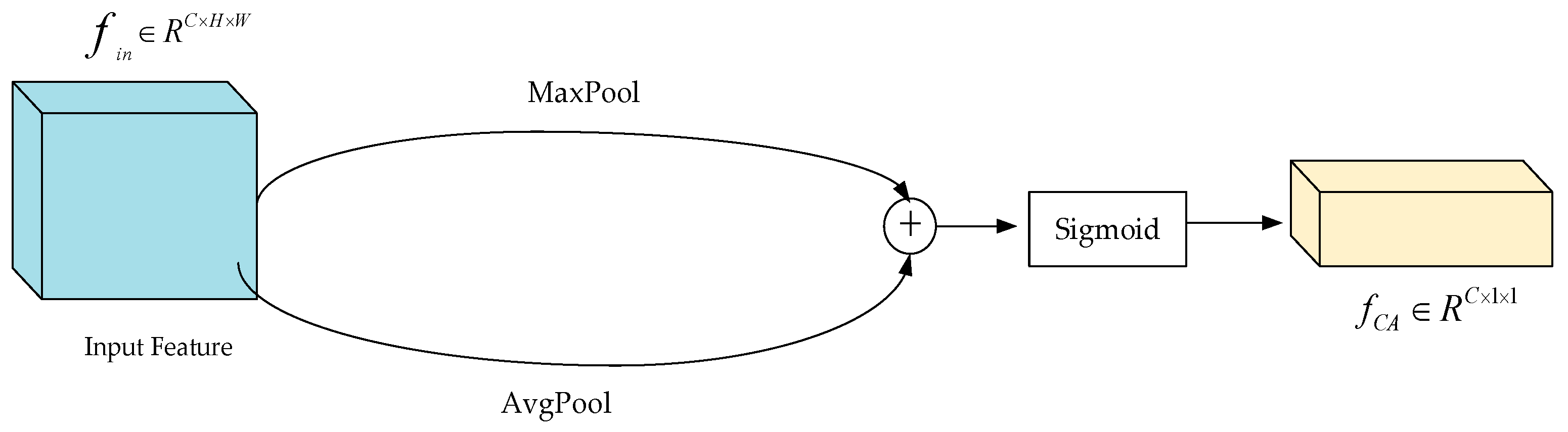

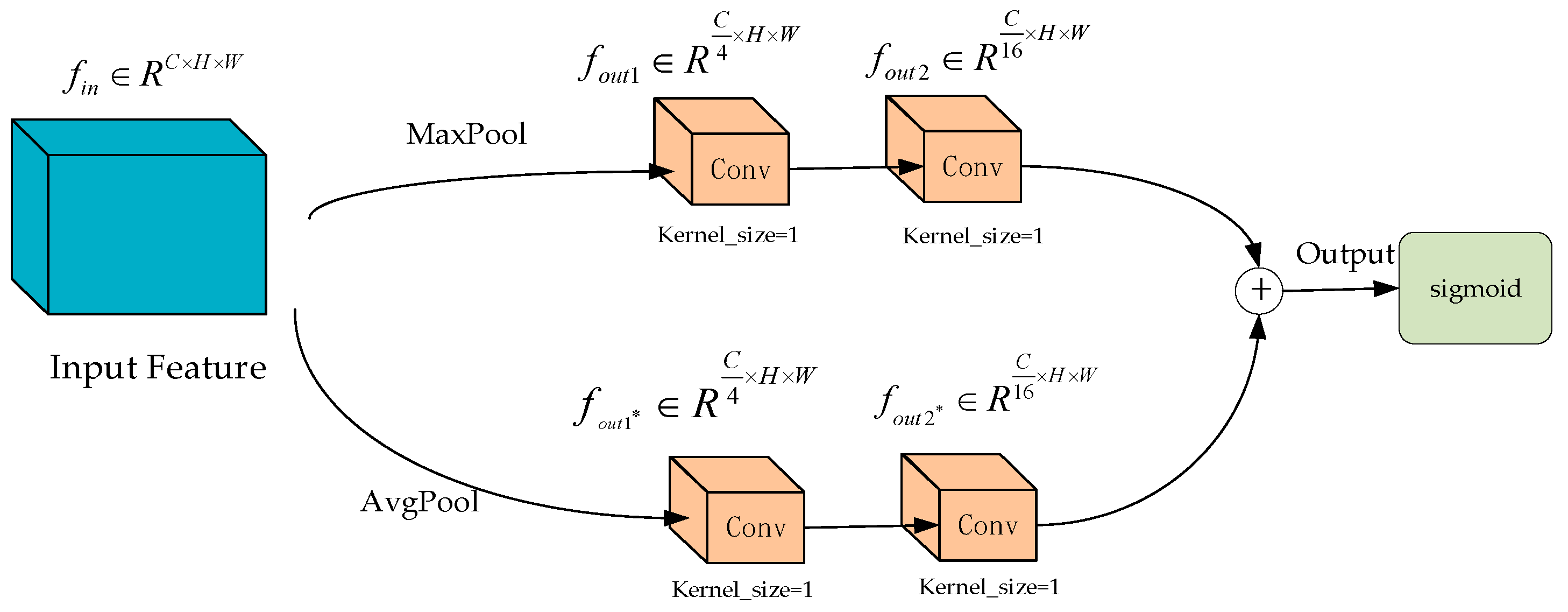

3.3. ChannelAttention_HSFPN Network Module

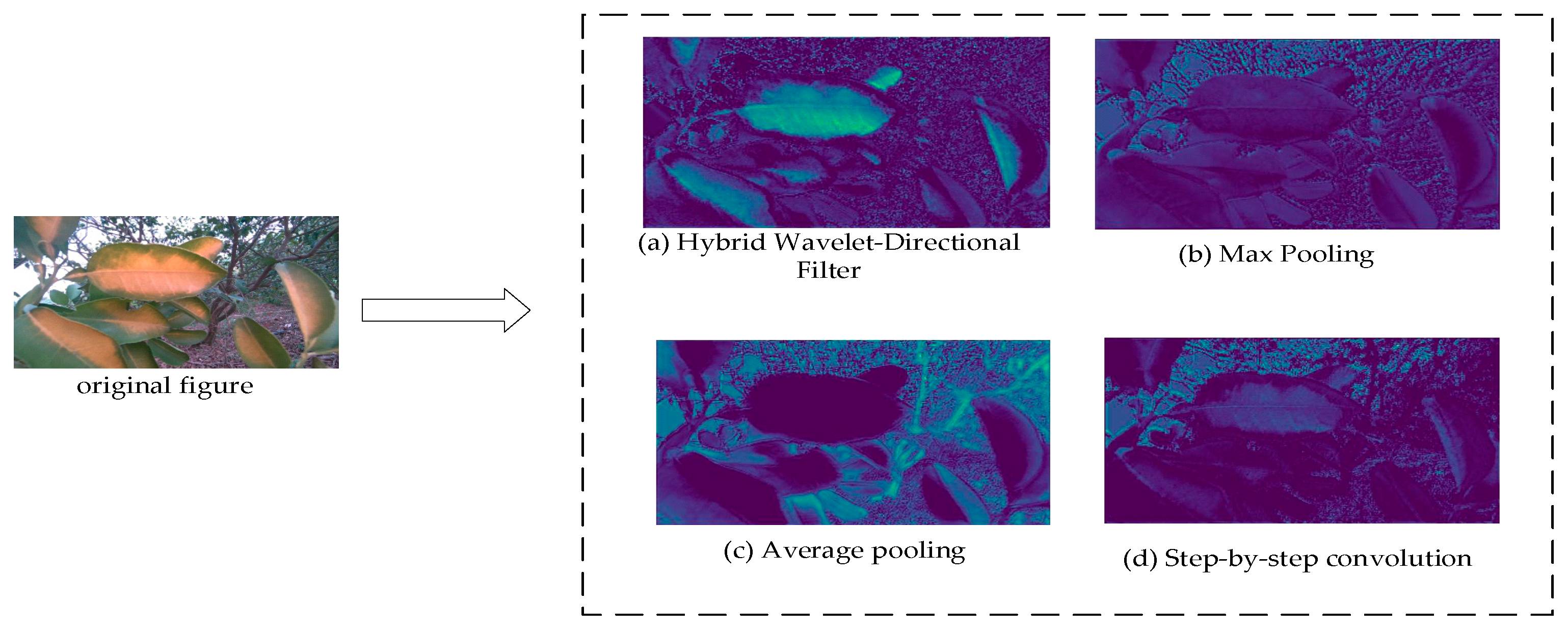
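
The appendix configuration calls `ChannelAttention_HSFPN` with default arguments on backbone features and with `[4, False]` on the upsampled high-level map, then multiplies the two paths and adds the high-level map back (layers 14–18 and 21–25). The NumPy sketch below illustrates that idea under stated assumptions: a CBAM-style channel attention (a shared two-layer 1×1 MLP applied to global average- and max-pooled descriptors, summed and passed through a sigmoid), with the first argument read as the channel-reduction ratio and the second as a switch between returning reweighted features or only the weights. The function names, the random weights, and the omitted batch dimension are hypothetical simplifications, not the authors' code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2, return_features=True):
    """CBAM-style channel attention on a (C, H, W) feature map (assumed design).

    w1 has shape (C // ratio, C) and w2 has shape (C, C // ratio); together they
    play the role of the shared 1x1 convolutions.
    """
    avg = x.mean(axis=(1, 2))  # global average pooling -> (C,)
    mx = x.max(axis=(1, 2))    # global max pooling -> (C,)

    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # 1x1 conv, ReLU, 1x1 conv

    weights = sigmoid(mlp(avg) + mlp(mx))  # one weight in (0, 1) per channel
    if return_features:
        return x * weights[:, None, None]  # reweight every channel
    return weights


def select_and_fuse(low, high, w1, w2):
    """Hypothetical fusion: high-level channel weights gate the low-level map, then add."""
    weights = channel_attention(high, w1, w2, return_features=False)
    return low * weights[:, None, None] + high


rng = np.random.default_rng(0)
C, ratio = 8, 4
w1 = rng.standard_normal((C // ratio, C))
w2 = rng.standard_normal((C, C // ratio))
low = rng.standard_normal((C, 16, 16))   # low-level features, already at the target size
high = rng.standard_normal((C, 16, 16))  # upsampled high-level features
fused = select_and_fuse(low, high, w1, w2)
print(fused.shape)  # (8, 16, 16)
```

The gating keeps the low-level map's spatial detail while letting semantically stronger high-level features decide which channels matter, which is the motivation for multiplying before adding.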

3.4. HWD Downsampling Operator
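
HWD (Haar wavelet downsampling, Xu et al., 2023) replaces strided convolution or pooling with a one-level 2D Haar transform, which halves the spatial size while moving information into four sub-bands instead of discarding it, followed by a convolution (with batch normalization and ReLU in the original module) that maps the 4C stacked sub-bands to the output channels. In the appendix configuration it appears at layers 10, 15, and 22. The NumPy sketch below assumes an orthonormal Haar basis, even H and W, and a 1×1 convolution with batch normalization omitted; `haar_downsample` and `hwd` are hypothetical names.

```python
import numpy as np


def haar_downsample(x):
    """One-level orthonormal 2D Haar transform of a (B, C, H, W) array with even H, W.

    Returns (B, 4C, H/2, W/2): the approximation and three detail sub-bands
    stacked along the channel axis.
    """
    a = x[:, :, 0::2, 0::2]  # top-left pixel of each 2x2 block
    b = x[:, :, 0::2, 1::2]  # top-right
    c = x[:, :, 1::2, 0::2]  # bottom-left
    d = x[:, :, 1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2  # low-frequency approximation
    lh = (a + b - c - d) / 2  # top row minus bottom row
    hl = (a - b + c - d) / 2  # left column minus right column
    hh = (a - b - c + d) / 2  # diagonal detail
    return np.concatenate([ll, lh, hl, hh], axis=1)


def hwd(x, weight):
    """Haar downsampling, then a 1x1 convolution and ReLU (batch norm omitted).

    weight has shape (C_out, 4 * C_in).
    """
    bands = haar_downsample(x)
    out = np.einsum("oc,bchw->bohw", weight, bands)  # 1x1 convolution
    return np.maximum(out, 0.0)


x = np.arange(2 * 3 * 8 * 8, dtype=float).reshape(2, 3, 8, 8)
bands = haar_downsample(x)
print(bands.shape)  # (2, 12, 4, 4)
y = hwd(x, np.random.default_rng(0).standard_normal((16, 12)))
print(y.shape)  # (2, 16, 4, 4)
```

Because the transform is orthonormal, the four sub-bands together carry exactly the energy of the input, which is the sense in which the encoding step loses no information before the learnable convolution.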

3.5. ShapeIoU Loss Function

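- IoU: the intersection over union of the predicted box and the ground-truth (GT) box, on which traditional regression loss functions are built.

- $\Omega^{shape}$: the shape cost, which penalizes differences in shape between the GT box and the predicted box.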
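
The terms above combine as in the Shape-IoU formulation of Zhang and Zhang (2023), $L_{\text{Shape-IoU}} = 1 - IoU + distance^{shape} + 0.5 \times \Omega^{shape}$, where shape weights $ww$ and $hh$ computed from the GT box scale both the center-distance term and the width/height mismatch terms. The single-pair Python sketch below assumes that paper's equations; the `scale = 0` and `theta = 4` defaults are assumptions rather than settings reported in this article, and the (center x, center y, width, height) box format and the name `shape_iou_loss` are chosen only for illustration.

```python
import math


def shape_iou_loss(pred, gt, scale=0.0, theta=4.0, eps=1e-7):
    """Shape-IoU loss for one pair of boxes given as (cx, cy, w, h)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt

    # Corner coordinates of both boxes.
    p_x1, p_y1, p_x2, p_y2 = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    g_x1, g_y1, g_x2, g_y2 = gx - gw / 2, gy - gh / 2, gx + gw / 2, gy + gh / 2

    # Plain IoU.
    inter_w = max(0.0, min(p_x2, g_x2) - max(p_x1, g_x1))
    inter_h = max(0.0, min(p_y2, g_y2) - max(p_y1, g_y1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Shape weights depend only on the GT box (both equal 1 when scale == 0).
    ww = 2 * gw ** scale / (gw ** scale + gh ** scale)
    hh = 2 * gh ** scale / (gw ** scale + gh ** scale)

    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(p_x2, g_x2) - min(p_x1, g_x1)
    ch = max(p_y2, g_y2) - min(p_y1, g_y1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Shape-weighted center-distance term.
    distance_shape = hh * (px - gx) ** 2 / c2 + ww * (py - gy) ** 2 / c2

    # Shape cost: relative width and height mismatch.
    omega_w = hh * abs(pw - gw) / max(pw, gw)
    omega_h = ww * abs(ph - gh) / max(ph, gh)
    omega_shape = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + distance_shape + 0.5 * omega_shape


print(round(shape_iou_loss((5, 5, 4, 2), (6, 5, 4, 2)), 4))  # 0.4345
```

In this example the boxes have the same shape, so the shape cost vanishes and the loss is $1 - 0.6$ plus a center-distance term of $1/29$.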

3.6. Model Training and Model Evaluation Indicators

- TP (true positive): predicted as positive, actually positive.

- FP (false positive): predicted as positive, actually negative.

- FN (false negative): predicted as negative, actually positive.

- TN (true negative): predicted as negative, actually negative.
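
Given these four counts, the evaluation indicators follow directly. The Python sketch below computes precision, recall, F1-score, and accuracy from counts, and AP as the area under an all-point-interpolated precision–recall curve; the interpolation scheme is an assumption, since the article does not state which one it uses, and the function names are hypothetical. With a single class, as in the appendix configuration (`nc: 1`), mAP reduces to the AP of that class.

```python
def detection_metrics(tp, fp, fn, tn=0):
    """Precision, recall, F1-score and accuracy from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    return precision, recall, f1, accuracy


def average_precision(recall, precision):
    """All-point interpolated AP; recall must be sorted in increasing order."""
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Make precision non-increasing from right to left (the precision envelope).
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_average_precision(ap_per_class):
    """mAP: the mean of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


print(detection_metrics(tp=8, fp=2, fn=2))
print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```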

4. Results and Discussion

4.1. Ablation Experiments

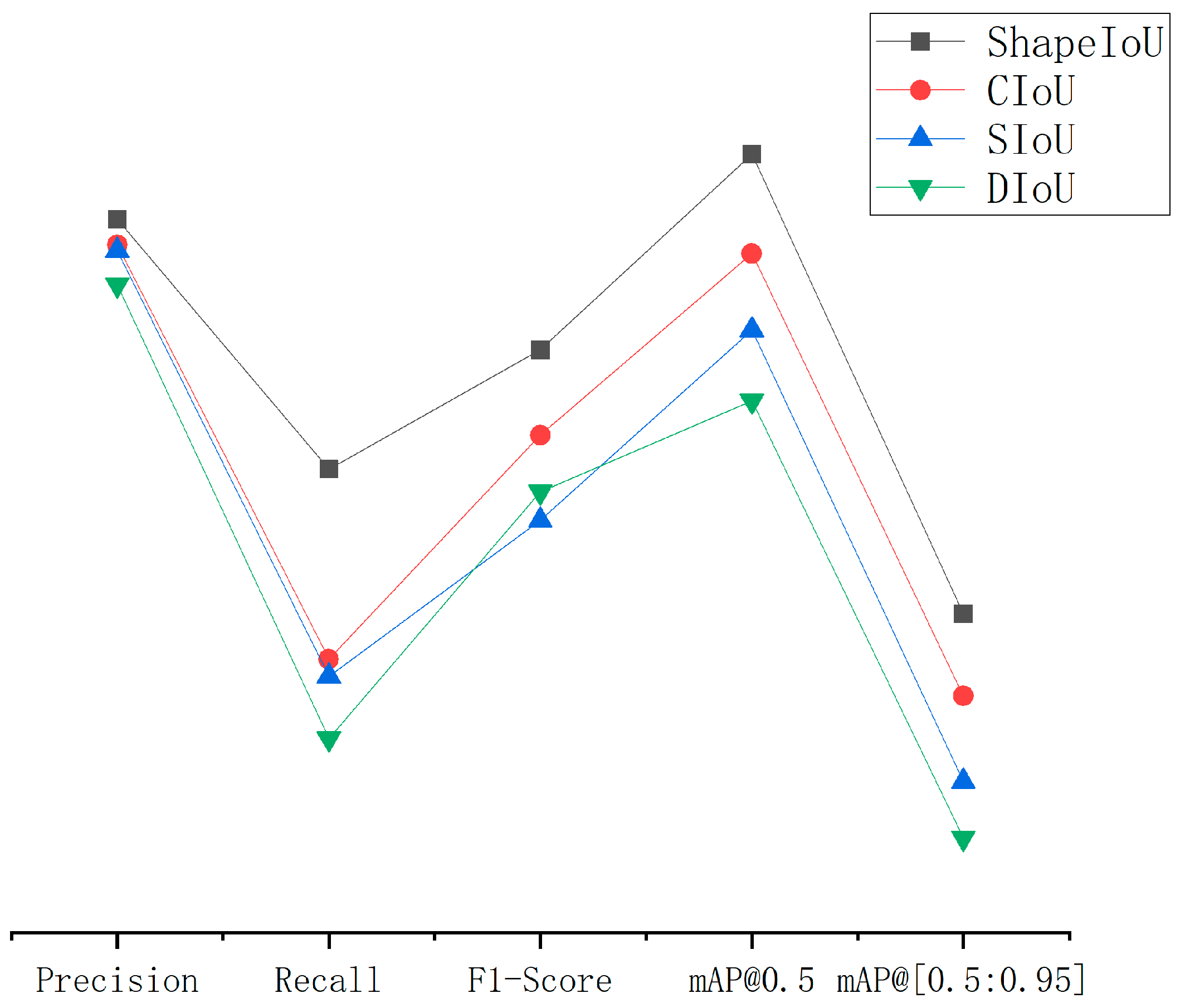

4.2. Comparison of Different Loss Functions

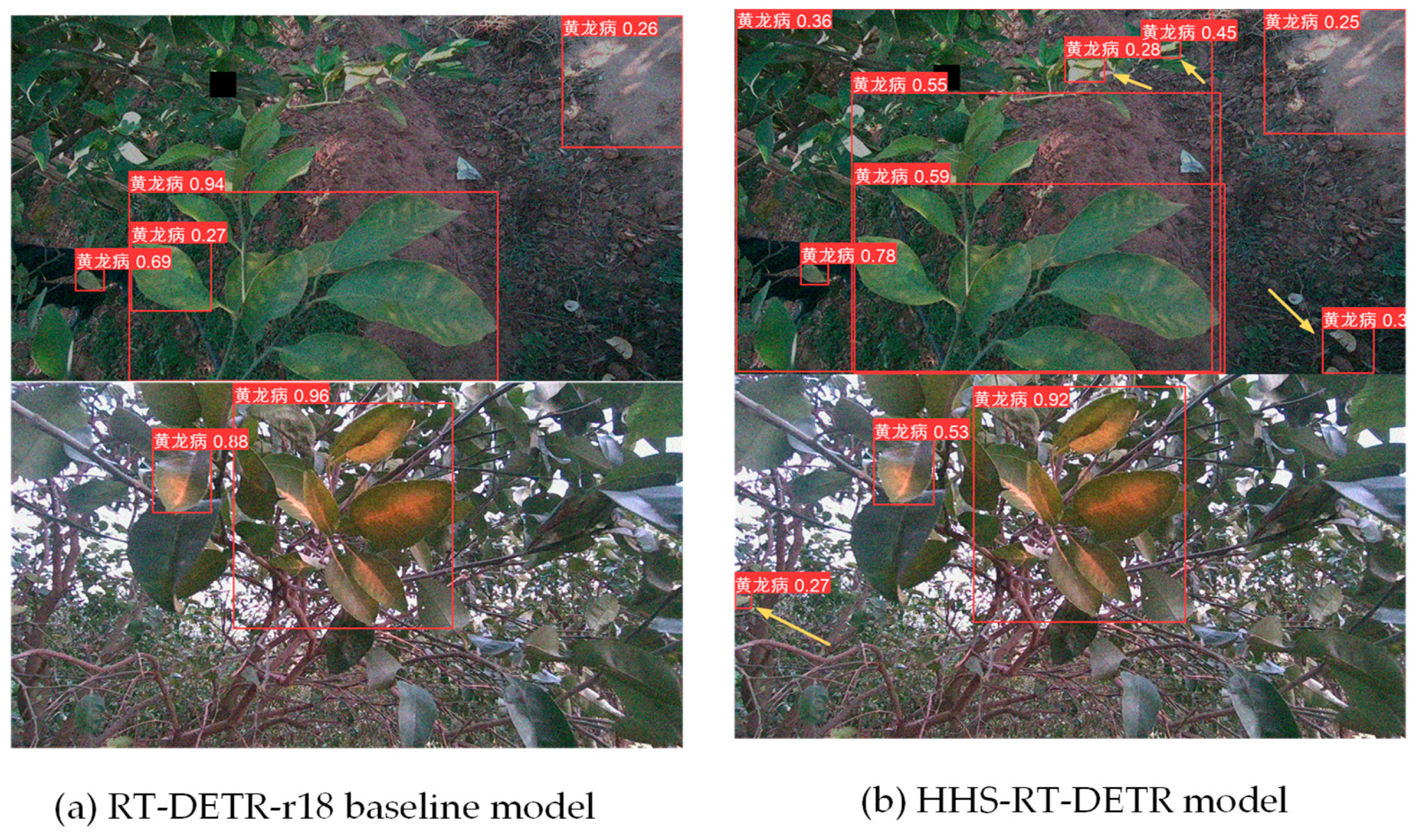

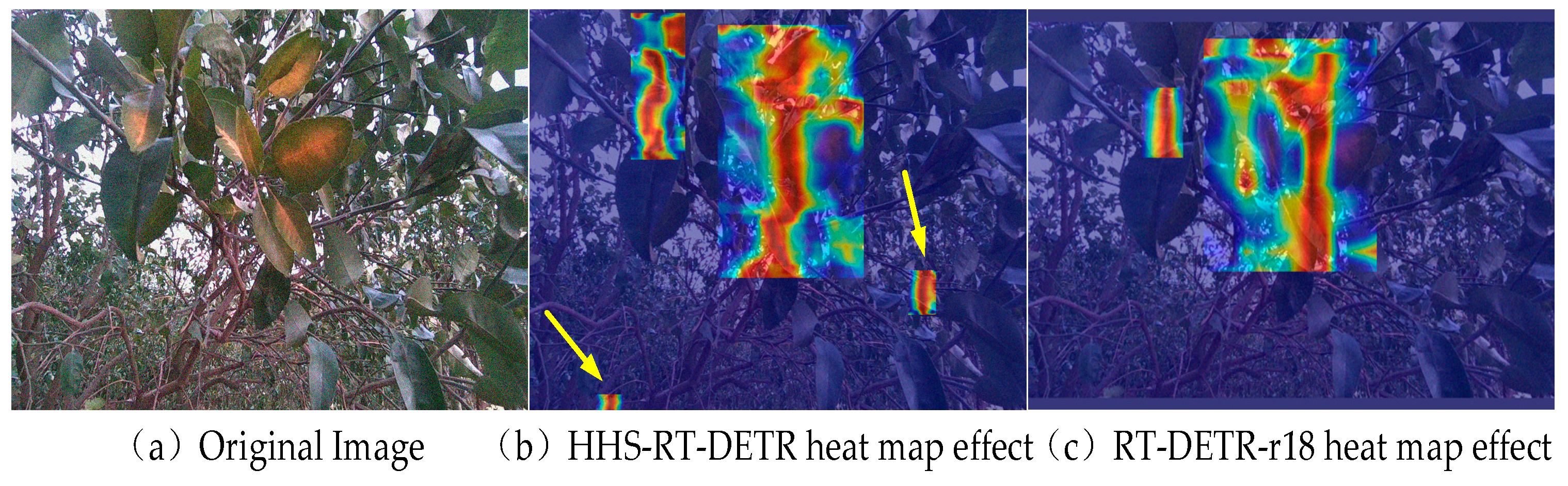

4.3. Analysis of Global Contextual Information Utilization Ability

4.4. Comparison of Different Models

- During training, the parameter updates of the improved model are more stable, so the training process as a whole is more stable and converges faster.

- The improved model generalizes better on the test set.

- Compared with the other models, the improved model is less sensitive to changes in data distribution, indicating that it remains more stable on data drawn from different distributions.

4.5. Experimental Comparison on Public Datasets

5. Conclusions

- Expanding the dataset to include a wider variety of citrus trees and different stages of citrus greening disease to enhance the model’s recognition capabilities.

- Incorporating leaf images under various lighting conditions and different weather scenarios to improve the model’s generalization performance in diverse environments.

- Deploying the mature detection model to drones and other edge devices to enable real-time monitoring and precise control of citrus greening disease.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

    # Parameters
    nc: 1
    scales:
      # [depth, width, max_channels]
      l: [1.00, 1.00, 1024]

    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, None, False, 'relu']] # 0-P1/2
      - [-1, 1, ConvNormLayer, [32, 3, 1, None, False, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, None, False, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2/4

      # [ch_out, block_type, block_nums, stage_num, act, variant]
      - [-1, 1, Blocks, [64, BasicBlock, 2, 2, 'relu']] # 4
      - [-1, 1, Blocks, [128, BasicBlock, 2, 3, 'relu']] # 5-P3/8
      - [-1, 1, Blocks, [256, BasicBlock, 2, 4, 'relu']] # 6-P4/16
      - [-1, 1, Blocks, [512, BasicBlock, 2, 5, 'relu']] # 7-P5/32

    head:
      - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 8 input_proj.2
      - [-1, 1, AIFI, [1024, 8]] # 9
      - [-1, 1, HWD, [256]] # 10, Y5, lateral_convs.0
      - [-1, 1, ChannelAttention_HSFPN, []] # 11
      - [-1, 1, nn.Conv2d, [256, 1]] # 12
      - [-1, 1, nn.ConvTranspose2d, [256, 3, 2, 1, 1]] # 13
      - [6, 1, ChannelAttention_HSFPN, []] # 14
      - [-1, 1, HWD, [256]] # 15
      - [13, 1, ChannelAttention_HSFPN, [4, False]] # 16
      - [[-1, -2], 1, Multiply, []] # 17
      - [[-1, 13], 1, Add, []] # 18
      - [-1, 3, RepC3, [256, 0.5]] # 19 P4/16
      - [13, 1, nn.ConvTranspose2d, [256, 3, 2, 1, 1, 16]] # 20
      - [5, 1, ChannelAttention_HSFPN, []] # 21
      - [-1, 1, HWD, [256]] # 22
      - [20, 1, ChannelAttention_HSFPN, [4, False]] # 23
      - [[-1, -2], 1, Multiply, []] # 24
      - [[-1, 20], 1, Add, []] # 25
      - [-1, 3, RepC3, [256, 0.5]] # 26 P3/8
      - [[26, 19, 12], 1, RTDETRDecoder, [nc, 256, 300, 4, 8, 3]] # Detect (P3, P4, P5)


| Citrus Category | Number of Complex-Background Images | Number of Simple-Background Images | Collection Time |
|---|---|---|---|
| Ice-sugar orange greening | 200 | 200 | 9:00–11:00, 15:00–17:00 |
| Wokan orange greening | 200 | 200 | 8:30–10:00, 16:00–17:25 |
| Grapefruit greening | 200 | 200 | 9:00–11:20, 14:00–16:00 |

| Experimental Environment | Experimental Configuration |
|---|---|
| CPU | AMD Ryzen 9 5900X 12-Core Processor |
| GPU | NVIDIA GeForce RTX 3090 (24 GB) |
| Operating system | Ubuntu 22.04 |
| Experimental tools | PyCharm 2021.1.3 + Python 3.8.16 + PyTorch 1.13.1 |
| CUDA | 11.7 |

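| Evaluation Indicators | Evaluation Formula |
|---|---|
| P (Precision) | $P = \frac{TP}{TP + FP}$ |
| R (Recall) | $R = \frac{TP}{TP + FN}$ |
| F1-score | $F1 = \frac{2 \times P \times R}{P + R}$ |
| Accuracy | $Accuracy = \frac{TP + TN}{TP + TN + FP + FN}$ |
| AP (Average precision) | $AP = \int_{0}^{1} P(R)\,dR$ |
| mAP (Mean average precision) | $mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$, where $N$ is the number of classes |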

| Baseline | HS-FPN | HWD | P (%) | R (%) | mAP (%) | Params (×10⁷) | FPS (frames/s) |
|---|---|---|---|---|---|---|---|
| RT-DETR-r18 | × | × | 82.6 | 73.8 | 84.9 | 2.008 | 68 |
| RT-DETR-r18 | √ | × | 86.0 | 75.2 | 86.5 | 1.832 | 73 |
| RT-DETR-r18 | √ | √ | 90.5 | 83.7 | 92.4 | 1.871 | 72 |
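
The net effect of adding both modules can be checked with a few lines of arithmetic. The Python snippet below copies the first and last rows of the ablation table (baseline RT-DETR-r18 and the model with both HS-FPN and HWD); the dictionary layout and key names are only for illustration.

```python
baseline = {"P": 82.6, "R": 73.8, "mAP": 84.9, "params_1e7": 2.008, "fps": 68}
full = {"P": 90.5, "R": 83.7, "mAP": 92.4, "params_1e7": 1.871, "fps": 72}

for key in ("P", "R", "mAP"):
    # These metrics are already percentages, so report the change in points.
    print(f"{key}: {full[key] - baseline[key]:+.1f} points")

param_drop = (baseline["params_1e7"] - full["params_1e7"]) / baseline["params_1e7"]
print(f"parameters: -{param_drop:.1%}")          # relative reduction
print(f"FPS: +{full['fps'] - baseline['fps']}")  # absolute speed-up
```

Running it reports gains of 7.9, 9.9, and 7.5 points in P, R, and mAP, a 6.8% reduction in parameters, and 4 additional frames per second.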

| Baseline | ShapeIoU | CIoU | SIoU | DIoU | P (%) | R (%) | F1-Score (%) | mAP50 (%) | mAP50:95 (%) |
|---|---|---|---|---|---|---|---|---|---|
| RT-DETR-r18 | √ | × | × | × | 82.6 | 73.8 | 78.0 | 84.9 | 68.7 |
| RT-DETR-r18 | × | √ | × | × | 81.7 | 67.1 | 75.0 | 81.4 | 65.8 |
| RT-DETR-r18 | × | × | √ | × | 81.5 | 66.5 | 72.0 | 78.7 | 62.8 |
| RT-DETR-r18 | × | × | × | √ | 80.3 | 64.3 | 73.0 | 76.2 | 60.8 |

| Method | P (%) | R (%) | F1-Score (%) | mAP50 (%) | mAP50:95 (%) | Params (×10⁶) |
|---|---|---|---|---|---|---|
| YOLO v5m | 85.3 | 75.7 | 80.3 | 85.8 | 69.8 | 2.11 |
| YOLO v5s | 86.8 | 76.2 | 81.0 | 86.9 | 70.5 | 2.50 |
| YOLO v8n | 89.7 | 83.0 | 82.0 | 92.3 | 65.7 | 3.01 |
| YOLO v6s | 82.6 | 69.5 | 76.0 | 83.3 | 67.5 | 4.23 |
| RT-DETR-r18 | 82.6 | 73.82 | 78.0 | 84.9 | 68.7 | 20.08 |
| HHS-RT-DETR | 90.5 | 83.7 | 83.0 | 92.4 | 78.5 | 18.71 |

| Method | P (%) | R (%) | F1-Score (%) | mAP50 (%) | mAP50:95 (%) |
|---|---|---|---|---|---|
| YOLO v5m | 40.5 | 38.5 | 38.0 | 34.2 | 21.6 |
| YOLO v5s | 42.0 | 39.1 | 39.0 | 35.6 | 22.0 |
| YOLO v8n | 53.2 | 46.4 | 48.0 | 47.0 | 30.6 |
| YOLO v6s | 38.4 | 35.9 | 35.0 | 32.7 | 21.8 |
| RT-DETR-r18 | 56.6 | 47.7 | 51.0 | 48.3 | 35.2 |
| HHS-RT-DETR | 58.9 | 48.9 | 53.0 | 51.8 | 38.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huangfu, Y.; Huang, Z.; Yang, X.; Zhang, Y.; Li, W.; Shi, J.; Yang, L. HHS-RT-DETR: A Method for the Detection of Citrus Greening Disease. Agronomy 2024, 14, 2900. https://doi.org/10.3390/agronomy14122900

Huangfu Y, Huang Z, Yang X, Zhang Y, Li W, Shi J, Yang L. HHS-RT-DETR: A Method for the Detection of Citrus Greening Disease. Agronomy. 2024; 14(12):2900. https://doi.org/10.3390/agronomy14122900

Chicago/Turabian Style: Huangfu, Yi, Zhonghao Huang, Xiaogang Yang, Yunjian Zhang, Wenfeng Li, Jie Shi, and Linlin Yang. 2024. "HHS-RT-DETR: A Method for the Detection of Citrus Greening Disease" Agronomy 14, no. 12: 2900. https://doi.org/10.3390/agronomy14122900

APA Style: Huangfu, Y., Huang, Z., Yang, X., Zhang, Y., Li, W., Shi, J., & Yang, L. (2024). HHS-RT-DETR: A Method for the Detection of Citrus Greening Disease. Agronomy, 14(12), 2900. https://doi.org/10.3390/agronomy14122900