Non-Destructive Measurement of Rice Spikelet Size Based on Panicle Structure Using Deep Learning Method

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials and Dataset

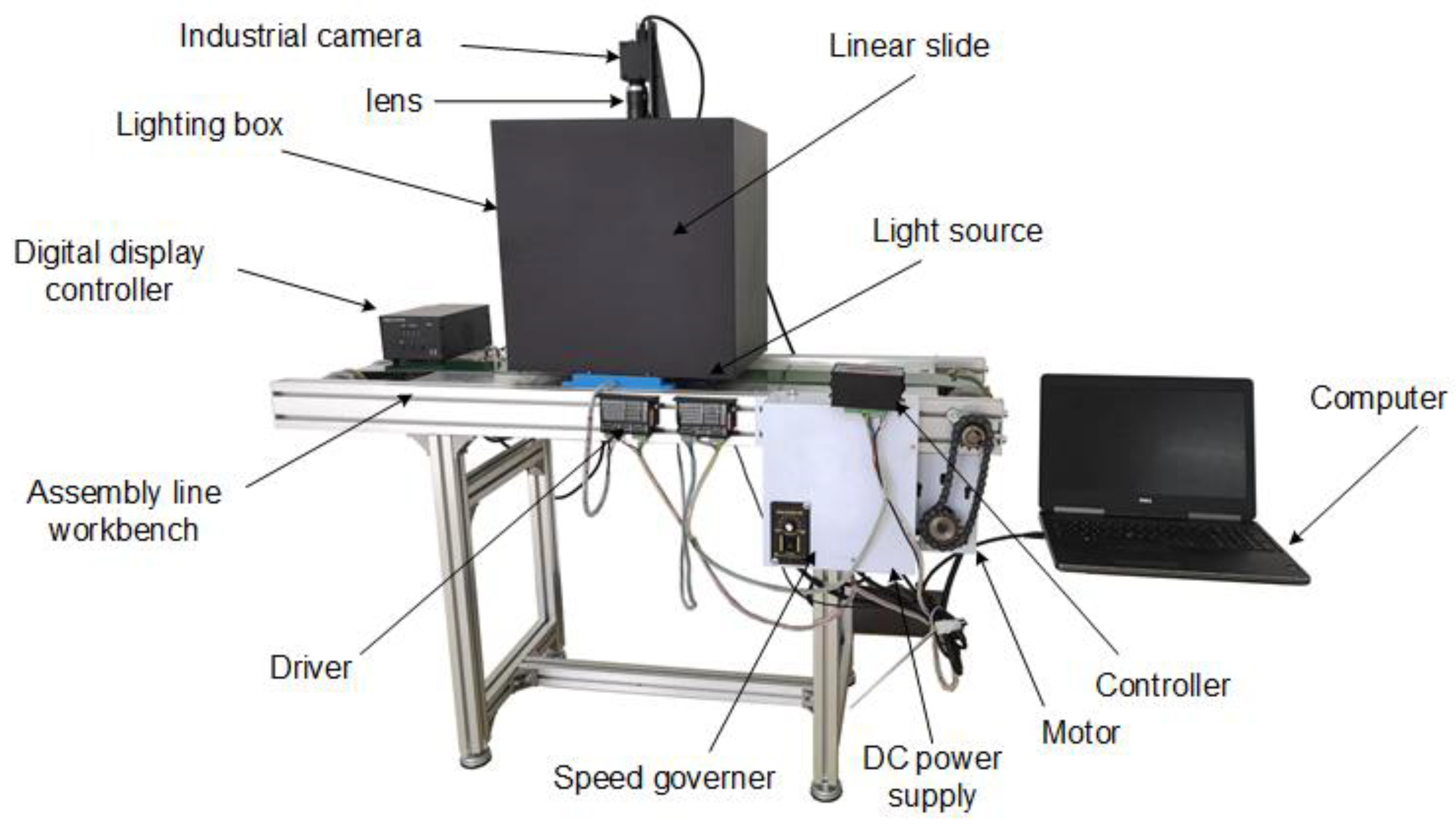

2.1.1. Description of Image Collecting Equipment

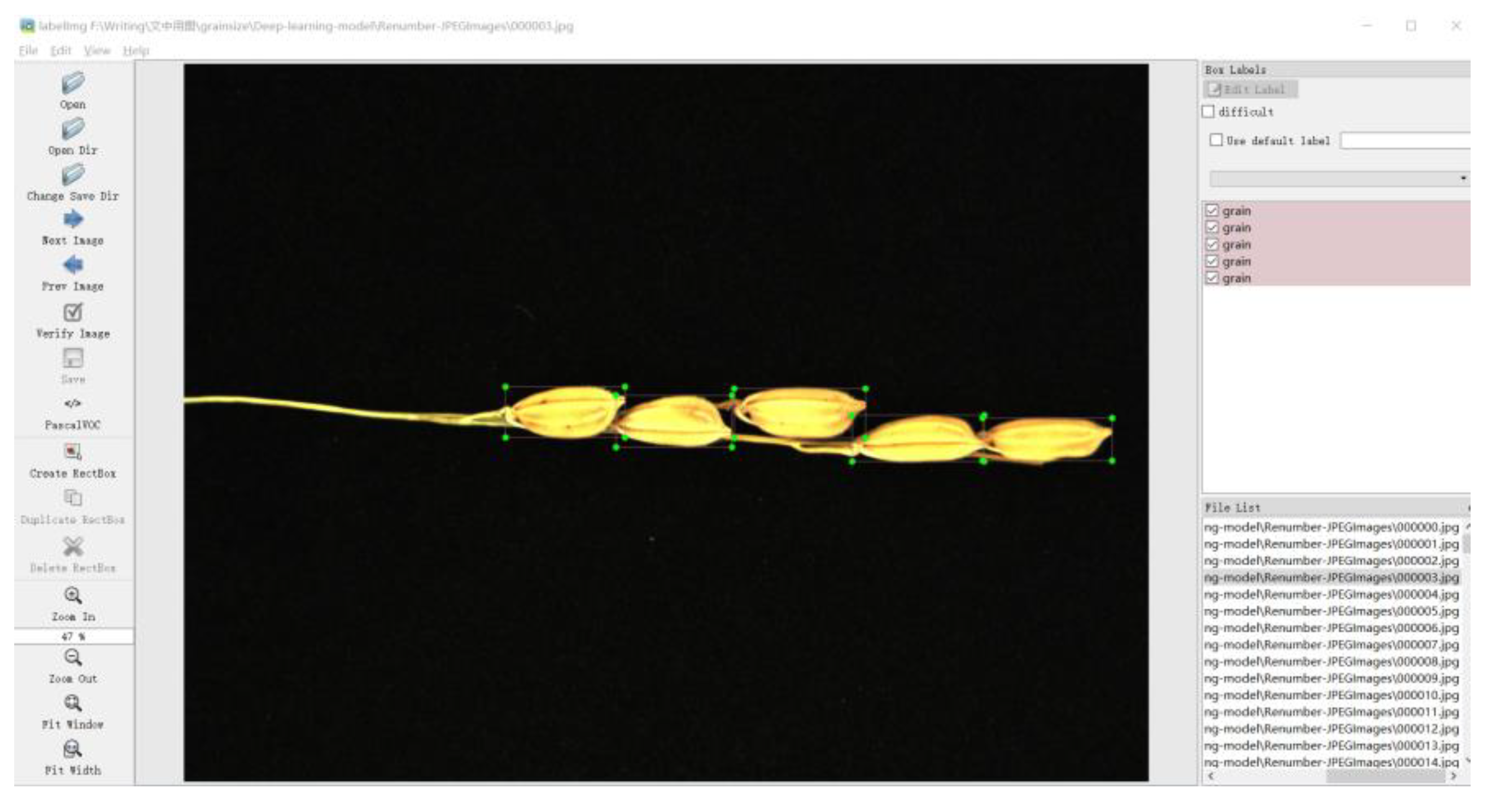

2.1.2. Image Set

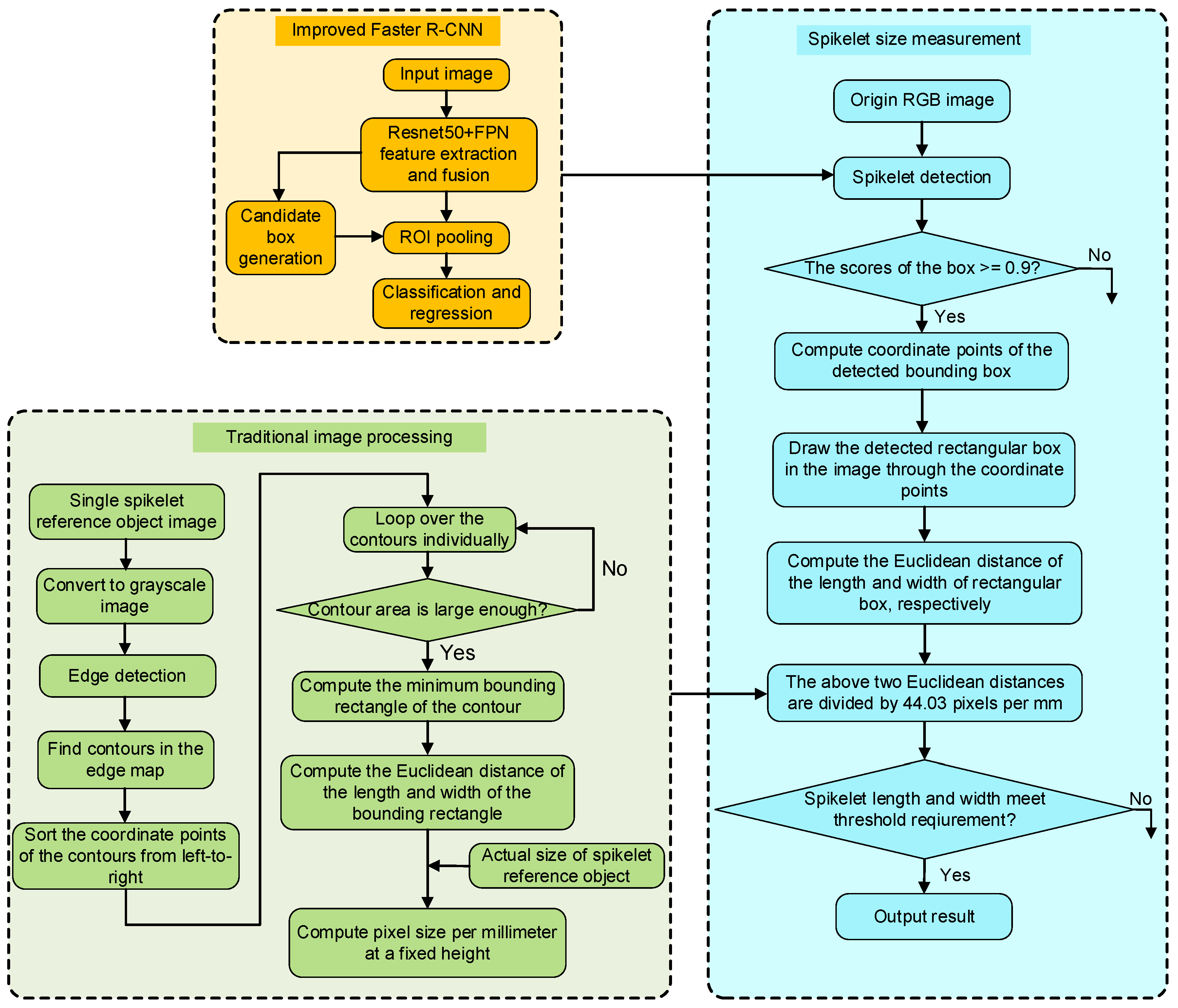

2.2. Spikelet Size Measurement Method

2.2.1. Image Preprocessing

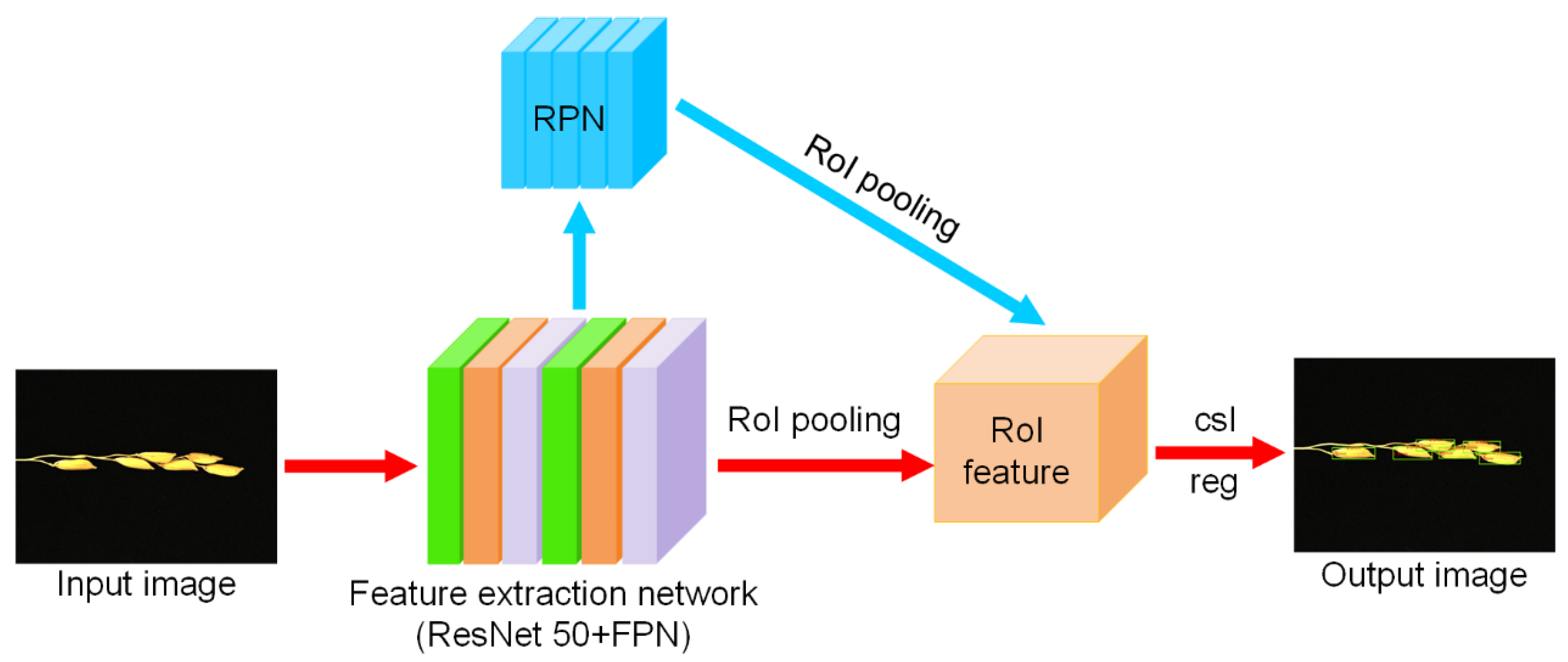

2.2.2. Spikelet Detection with Faster R-CNN
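Faster R-CNN (Ren et al.) trains its region proposal network by labeling each anchor box against the ground-truth boxes using intersection over union (IoU). An anchor is positive when its IoU with some ground-truth box is above 0.7 and negative when its best IoU is below 0.3. Anchors in between are ignored during training.

The sketch below illustrates that labeling rule for spikelet boxes in plain Python. It rests on a few assumptions:

- The box format `(x1, y1, x2, y2)` and the names `iou` and `label_anchors` are illustrative, not taken from the paper.
- It omits Ren et al.'s additional rule that the highest-IoU anchor for each ground-truth box is also marked positive.

It is not the authors' training code.

```python
from typing import List, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def label_anchors(anchors: List[Box], gt_boxes: List[Box],
                  pos_thresh: float = 0.7,
                  neg_thresh: float = 0.3) -> List[int]:
    """Label anchors 1 (spikelet), 0 (background) or -1 (ignored)
    from their best IoU with any ground-truth spikelet box."""
    labels = []
    for anchor in anchors:
        best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
        if best > pos_thresh:
            labels.append(1)
        elif best < neg_thresh:
            labels.append(0)
        else:
            labels.append(-1)
    return labels
```

Spikelets on a panicle are small and often touch, so many anchors fall in the ignored band between the two thresholds. That band keeps ambiguous overlaps out of the classification loss.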

2.2.3. Training of Spikelet Detection Model

2.3. Calculation of Unit Pixel Size Based on Traditional Image Processing

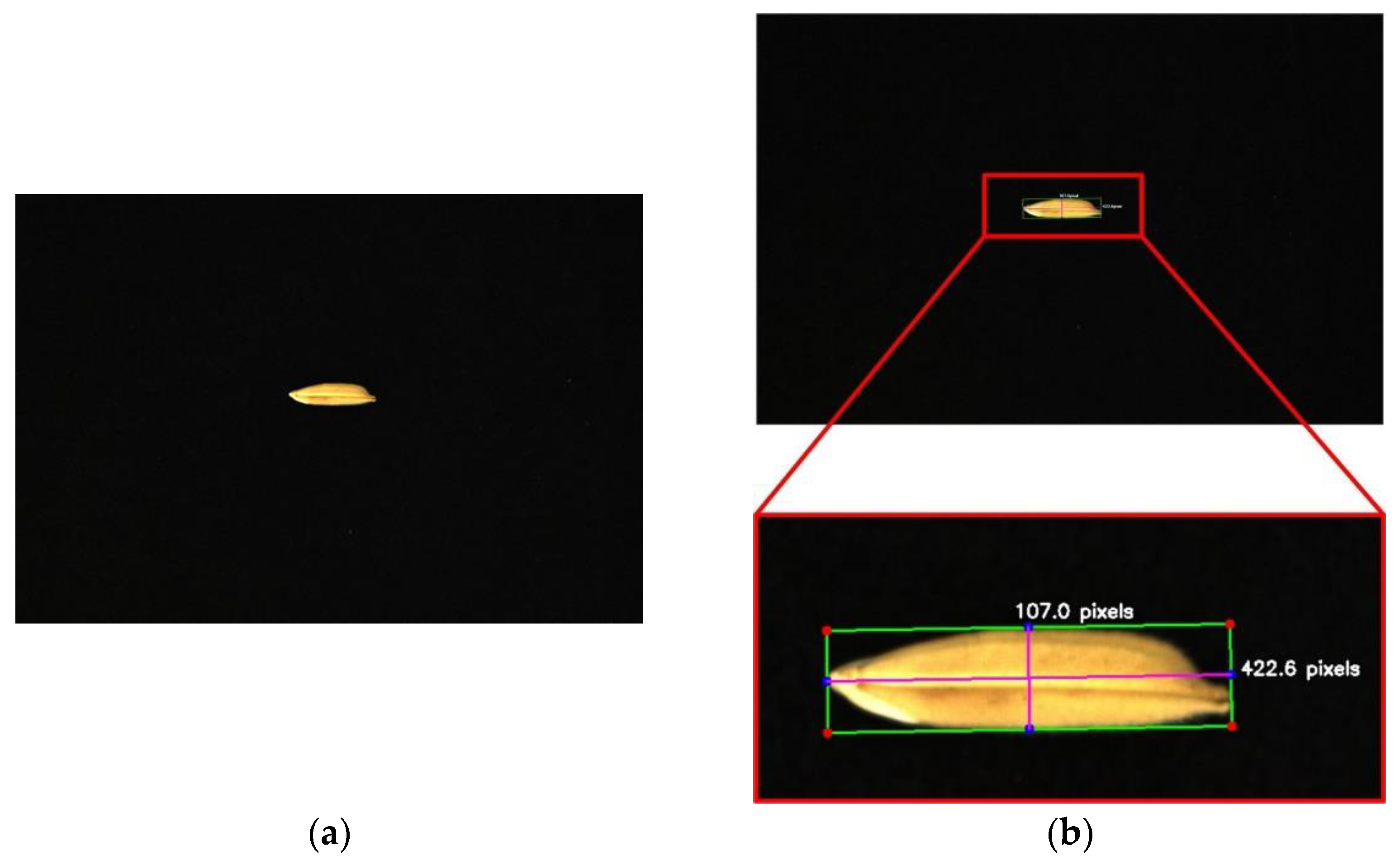

2.3.1. Image Acquisition of Spikelet Reference Object

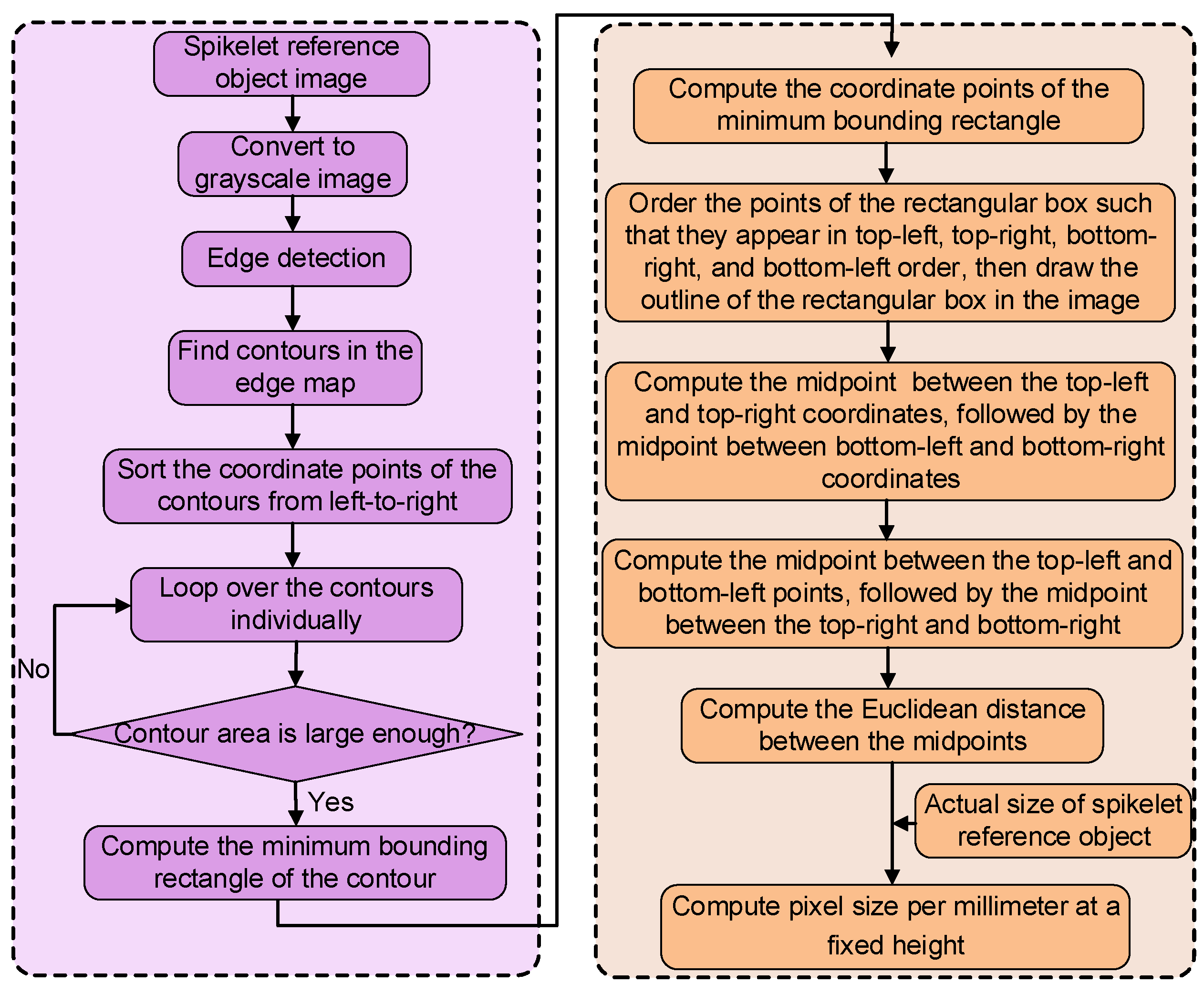

2.3.2. Measurement of Pixel Size for Spikelet Reference Object
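Traditional image processing can measure the pixel length of a reference spikelet once the spikelet has been segmented into a binary mask. This minimal sketch assumes such a mask is already available; the segmentation step and the name `principal_axis_extents` are hypothetical, not the paper's procedure.

The length and width in pixels are taken as the extent of the foreground along its principal axis and along the perpendicular axis. The principal axis is found from the 2 × 2 covariance of the foreground pixel coordinates.

```python
import math
from typing import List, Tuple


def principal_axis_extents(mask: List[List[int]]) -> Tuple[float, float]:
    """Return (length_px, width_px) of the foreground in a binary mask,
    measured along its principal (major) axis and the minor axis."""
    pts = [(x, y) for y, row in enumerate(mask)
           for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask contains no foreground pixels")
    n = len(pts)
    mx = sum(p[0] for p in pts) / n
    my = sum(p[1] for p in pts) / n
    # Entries of the 2x2 covariance matrix of pixel coordinates.
    sxx = sum((p[0] - mx) ** 2 for p in pts) / n
    syy = sum((p[1] - my) ** 2 for p in pts) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in pts) / n
    # Orientation of the eigenvector with the larger eigenvalue.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    ux, uy = math.cos(theta), math.sin(theta)  # major-axis unit vector
    vx, vy = -uy, ux                           # minor-axis unit vector
    proj_u = [(p[0] - mx) * ux + (p[1] - my) * uy for p in pts]
    proj_v = [(p[0] - mx) * vx + (p[1] - my) * vy for p in pts]
    # +1 counts the end pixels themselves (exact for axis-aligned shapes,
    # approximate for rotated ones).
    length = max(proj_u) - min(proj_u) + 1
    width = max(proj_v) - min(proj_v) + 1
    return length, width
```

For a tilted spikelet, measuring along the principal axis avoids the distortion that an axis-aligned bounding box would introduce into the length and width.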

2.3.3. Measurement of Actual Size for Spikelet Reference Object
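Combining the reference spikelet's actual length (for example, measured manually) with its length in pixels gives a unit pixel size, k = actual length / pixel length, in millimetres per pixel. Any pixel measurement taken at the same imaging distance can then be converted to millimetres.

This is a minimal sketch, not the authors' implementation. It assumes that:

- each detected spikelet is an axis-aligned box;
- the longer side of the box is the spikelet length and the shorter side the width, which holds only when spikelets lie roughly parallel to an image axis.

The names `unit_pixel_size` and `box_to_mm` are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def unit_pixel_size(actual_mm: float, pixel_length: float) -> float:
    """Millimetres represented by one pixel, from a reference object."""
    if pixel_length <= 0:
        raise ValueError("pixel_length must be positive")
    return actual_mm / pixel_length


def box_to_mm(box: Box, mm_per_px: float) -> Tuple[float, float]:
    """Convert a detection box to (length_mm, width_mm), taking the
    longer side as spikelet length (assumes near axis-aligned spikelets)."""
    w = box[2] - box[0]
    h = box[3] - box[1]
    return max(w, h) * mm_per_px, min(w, h) * mm_per_px
```

For instance, a hypothetical reference spikelet of 9.00 mm spanning 300 px gives k = 0.03 mm/px. A detected box of 310 × 90 px then converts to roughly 9.3 mm × 2.7 mm.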

2.4. Evaluation Metrics
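The result tables report an absolute error and a relative error for each variety. One common reading, assumed here because the exact definitions are not reproduced in this text, is to average per-spikelet errors:

- absolute error: the mean of |predicted − manual|;
- relative error: the mean of |predicted − manual| / manual × 100%.

Under this reading, the absolute error can exceed the gap between the two mean sizes. For Huahang 15 length, the means differ by 0.04 mm while the reported absolute error is 0.14 mm, which is consistent with per-sample averaging. The function name `error_metrics` is hypothetical.

```python
from typing import Sequence, Tuple


def error_metrics(predicted: Sequence[float],
                  manual: Sequence[float]) -> Tuple[float, float]:
    """Return (mean absolute error, mean relative error in %) between
    paired automatic and manual spikelet measurements."""
    if len(predicted) != len(manual) or not predicted:
        raise ValueError("need two equal-length, non-empty sequences")
    abs_errs = [abs(p - m) for p, m in zip(predicted, manual)]
    # Relative error of each spikelet, using the manual value as truth.
    rel_errs = [e / m * 100 for e, m in zip(abs_errs, manual)]
    n = len(abs_errs)
    return sum(abs_errs) / n, sum(rel_errs) / n
```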

3. Results and Discussion

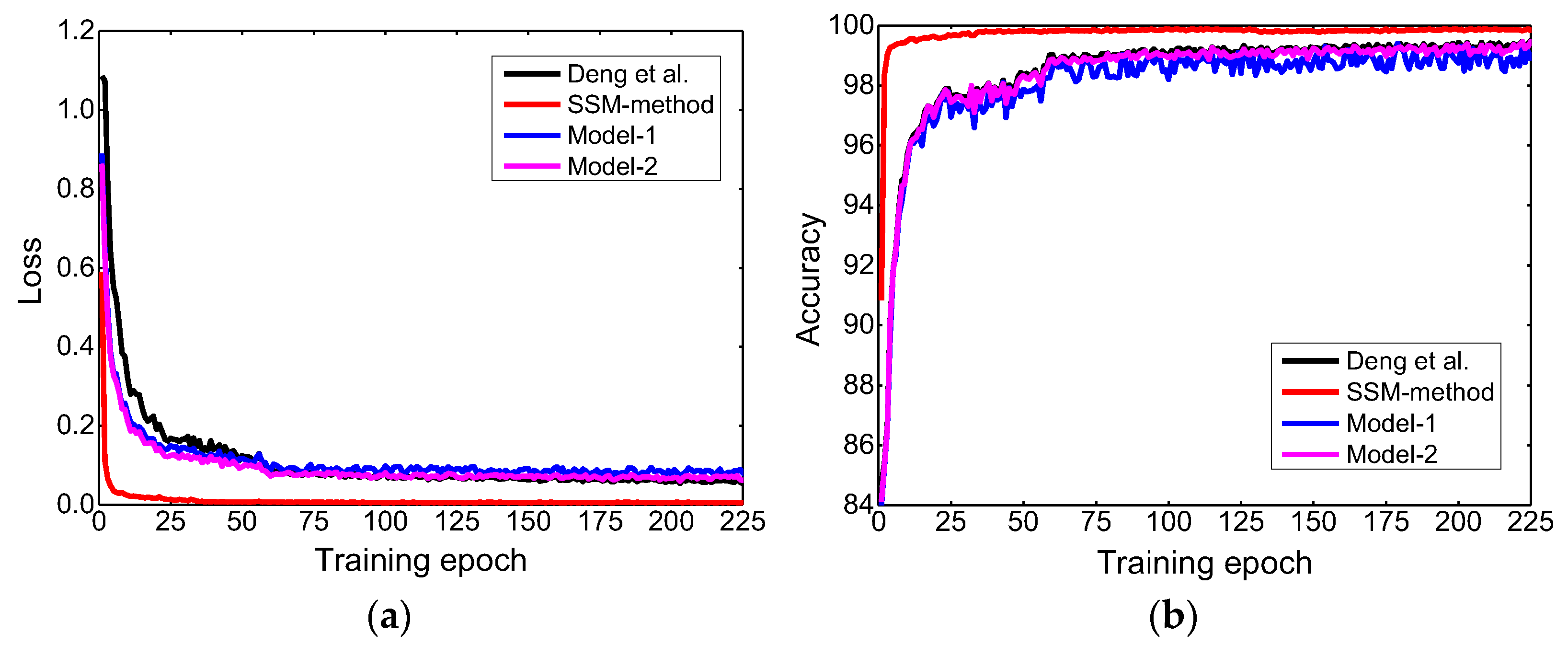

3.1. Training Results of the SSM-Method

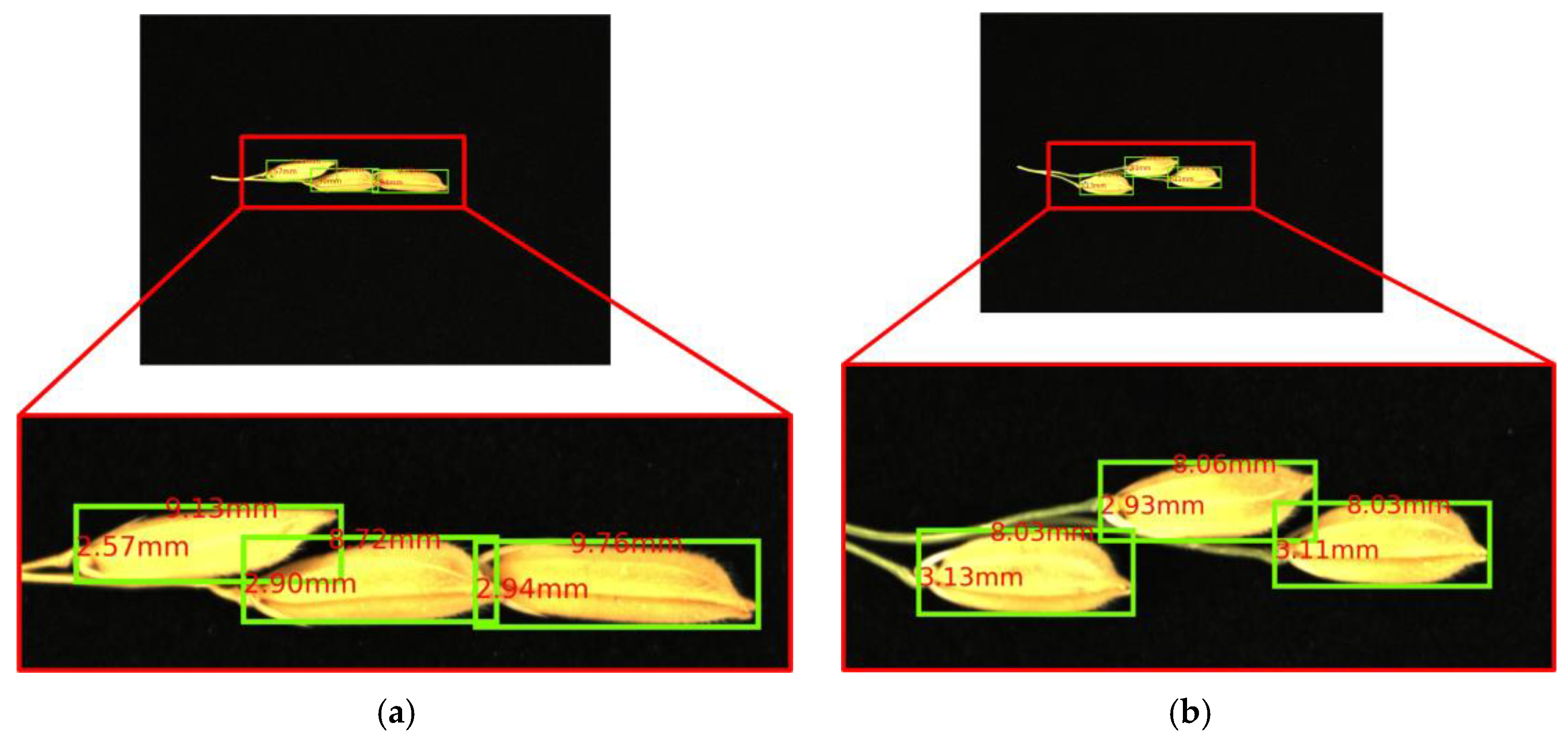

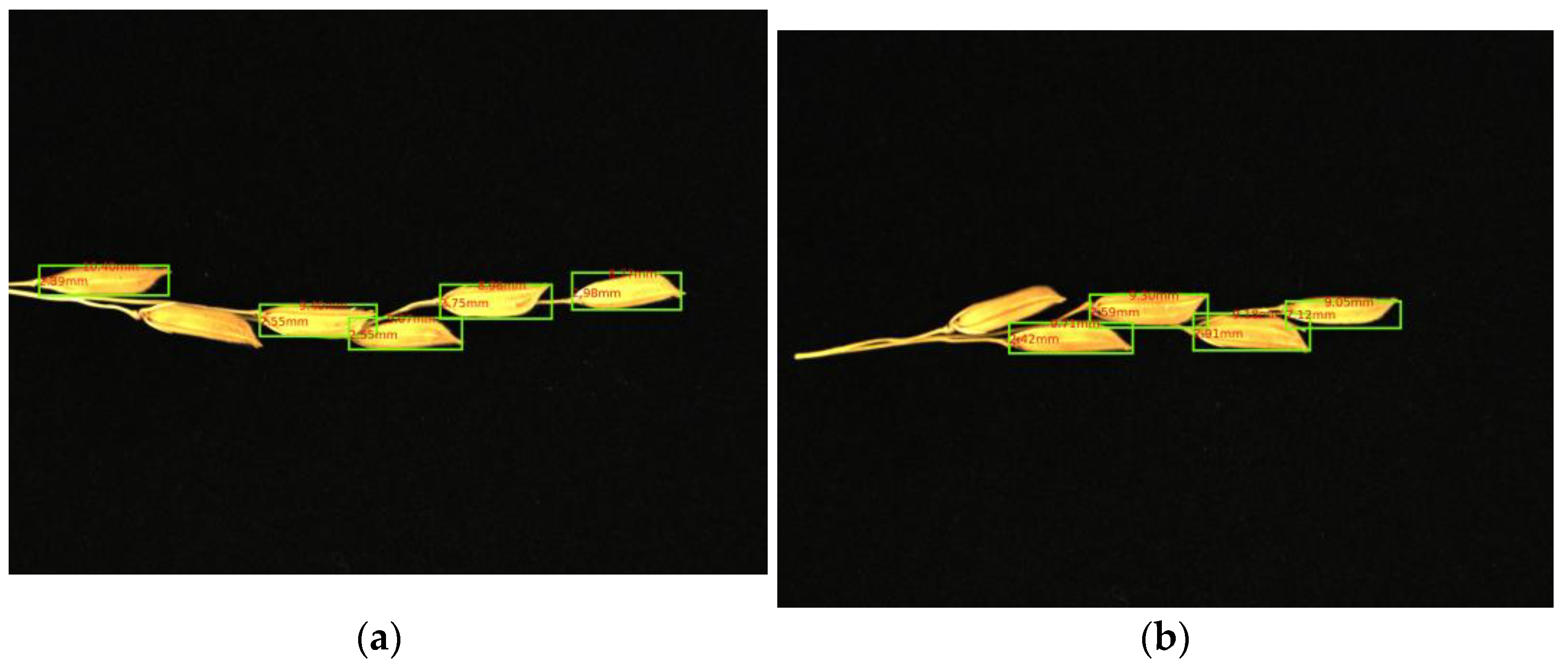

3.2. Measurement Results of the SSM-Method

3.3. Measurement Accuracy of the SSM-Method

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, N.; Xu, R.; Duan, P.; Li, Y. Control of grain size in rice. Plant Reprod. 2018, 31, 237–251.

- Singh, U. Aromatic Rices; International Rice Research Institute: New Delhi, India, 2000.

- Fan, C.; Xing, Y.; Mao, H.; Lu, T.; Han, B.; Xu, C.; Li, X.; Zhang, Q. GS3, a major QTL for grain length and weight and minor QTL for grain width and thickness in rice, encodes a putative transmembrane protein. Theor. Appl. Genet. 2006, 112, 1164–1171.

- Armstrong, B.; Aldred, G.; Armstrong, T.; Blakeney, A.; Lewin, L. Measuring rice grain dimensions with an image analyser. Quest 2005, 2, 2–35.

- Murthy, P.; Govindaswamy, S. Inheritance of grain size and its correlation with the hulling and cooking qualities. Oryza 1967, 4, 12–21.

- Mahale, B.; Korde, S. Rice quality analysis using image processing techniques. In Proceedings of the International Conference for Convergence for Technology-2014, Pune, India, 6–8 April 2014.

- Santos, M.V.; Cuevas, R.P.O.; Sreenivasulu, N.; Molina, L. Measurement of Rice Grain Dimensions and Chalkiness, and Rice Grain Elongation Using Image Analysis. In Rice Grain Quality: Methods and Protocols; Humana Press: New York, NY, USA, 2019; Volume 1892, pp. 99–108.

- Li, T.; Liu, H.; Mai, C.; Yu, G.; Li, H.; Meng, L.; Jian, D.; Yang, L.; Zhou, Y.; Zhang, H. Variation in allelic frequencies at loci associated with kernel weight and their effects on kernel weight-related traits in winter wheat. Crop J. 2019, 7, 30–37.

- Hu, Y.; Zhang, Z. GridFree: A Python package of image analysis for interactive grain counting and measuring. Plant Physiol. 2021, 186, 2239–2252.

- Yin, C.; Li, H.; Li, S.; Xu, L.; Zhao, Z.; Wang, J. Genetic dissection on rice grain shape by the two-dimensional image analysis in one japonica × indica population consisting of recombinant inbred lines. Theor. Appl. Genet. 2015, 128, 1969–1986.

- Rasband, W.S. ImageJ; US National Institutes of Health: Bethesda, MD, USA, 2011. Available online: http://imagej.nih.gov/ij/ (accessed on 9 October 2020).

- Tanabata, T.; Shibaya, T.; Hori, K.; Ebana, K.; Yano, M. SmartGrain: High-throughput phenotyping software for measuring seed shape through image analysis. Plant Physiol. 2012, 160, 1871–1880.

- Whan, A.P.; Smith, A.B.; Cavanagh, C.R.; Ral, J.-P.F.; Shaw, L.M.; Howitt, C.A.; Bischof, L. GrainScan: A low cost, fast method for grain size and colour measurements. Plant Methods 2014, 10, 23.

- Gao, K.; White, T.; Palaniappan, K.; Warmund, M.; Bunyak, F. MuSeed: A mobile image analysis application for plant seed morphometry. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 2826–2830.

- Feng, X.; Wang, Z.; Zeng, Z.; Zhou, Y.; Lan, Y.; Zou, W.; Gong, H.; Qi, L. Size measurement and filled/unfilled detection of rice grains using backlight image processing. Front. Plant Sci. 2023, 14, 1213486.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Singh, A.; Ganapathysubramanian, B.; Singh, A.K.; Sarkar, S. Machine learning for high-throughput stress phenotyping in plants. Trends Plant Sci. 2016, 21, 110–124.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

- Wang, Z.; Ding, Y.; Zhang, T.; Huang, X. Automatic real-time fire distance, size and power measurement driven by stereo camera and deep learning. Fire Saf. J. 2023, 140, 103891.

- Zhang, H.; Li, Z.; Sun, J.; Fu, Y.; Jia, D.; Liu, T. Characterization of particle size and shape by an IPI system through deep learning. J. Quant. Spectrosc. Radiat. Transf. 2021, 268, 107642.

- Park, S.E.; Eem, S.-H.; Jeon, H. Concrete crack detection and quantification using deep learning and structured light. Constr. Build. Mater. 2020, 252, 119096.

- Zhang, S.; Liang, X.; Huang, X.; Wang, K.; Qiu, T. Precise and fast microdroplet size distribution measurement using deep learning. Chem. Eng. Sci. 2022, 247, 116926.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Deng, R.; Qi, L.; Pan, W.; Wang, Z.; Fu, D.; Yang, X. Automatic estimation of rice grain number based on a convolutional neural network. J. Opt. Soc. Am. A 2022, 39, 1034–1044.

- Darrenl. LabelImg: LabelImg Is a Graphical Image Annotation Tool and Label Object Bounding Boxes in Images. 2017. Available online: https://github.com/tzutalin/labelImg (accessed on 20 October 2020).

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2016.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Deng, R.; Tao, M.; Huang, X.; Bangura, K.; Jiang, Q.; Jiang, Y.; Qi, L. Automated Counting Grains on the Rice Panicle Based on Deep Learning Method. Sensors 2021, 21, 281.

| Supplier | Resolution | Sensor Type | Sensor Model | Pixel Size |
|---|---|---|---|---|
| Hikvision, China | 5472 × 3648 | CMOS, Rolling Shutter | Sony IMX183 | 2.4 μm × 2.4 μm |

| Supplier | Focal Length | Aperture | Sensor Size | Distortion | Mount |
|---|---|---|---|---|---|
| Hikvision, China | 16 mm | f/2.8 | 1.1″ | <0.5% | C-Mount |
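The camera and lens tables fix the imaging geometry up to the working distance. At 2.4 μm per pixel, the 5472 × 3648 sensor has an active area of about 13.13 mm × 8.76 mm, with a diagonal of about 15.8 mm.

As a rough cross-check on a calibrated unit pixel size, the object-plane size of one pixel can be estimated with a thin-lens model. That model gives a magnification of f / (d − f), where d is the lens-to-object distance. The working distance is not listed in these tables, so the 300 mm used below is a hypothetical value. The estimate also ignores lens distortion, which the table puts below 0.5%.

```python
import math

PIXEL_PITCH_MM = 2.4e-3     # 2.4 μm pixel size, from the camera table
RES_X, RES_Y = 5472, 3648   # sensor resolution in pixels, from the camera table
FOCAL_MM = 16.0             # focal length, from the lens table


def sensor_size_mm():
    """Active sensor width, height and diagonal in millimetres."""
    w = RES_X * PIXEL_PITCH_MM
    h = RES_Y * PIXEL_PITCH_MM
    return w, h, math.hypot(w, h)


def object_pixel_size_mm(object_distance_mm: float) -> float:
    """Thin-lens estimate of the object-plane size of one pixel (mm/px).
    object_distance_mm (lens to panicle) is a hypothetical input."""
    if object_distance_mm <= FOCAL_MM:
        raise ValueError("object must lie beyond the focal length")
    magnification = FOCAL_MM / (object_distance_mm - FOCAL_MM)
    return PIXEL_PITCH_MM / magnification
```

At a hypothetical 300 mm working distance, `object_pixel_size_mm(300)` gives about 0.0426 mm per pixel. A panicle imaged closer or farther away scales this value accordingly, which is why a reference-object calibration at the actual imaging distance is the more reliable route.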

| Rice Variety | SSM-Method | Manual Method | Absolute Error | Relative Error |
|---|---|---|---|---|
| Huahang 15 | 9.42 mm | 9.38 mm | 0.14 mm | 1.56% |
| Qingyang | 7.85 mm | 7.79 mm | 0.19 mm | 2.39% |

| Rice Variety | SSM-Method | Manual Method | Absolute Error | Relative Error |
|---|---|---|---|---|
| Huahang 15 | 2.63 mm | 2.72 mm | 0.18 mm | 7.18% |
| Qingyang | 3.16 mm | 3.15 mm | 0.20 mm | 6.40% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, R.; Liu, W.; Liu, H.; Liu, Q.; Zhang, J.; Hou, M. Non-Destructive Measurement of Rice Spikelet Size Based on Panicle Structure Using Deep Learning Method. Agronomy 2024, 14, 2398. https://doi.org/10.3390/agronomy14102398

Deng R, Liu W, Liu H, Liu Q, Zhang J, Hou M. Non-Destructive Measurement of Rice Spikelet Size Based on Panicle Structure Using Deep Learning Method. Agronomy. 2024; 14(10):2398. https://doi.org/10.3390/agronomy14102398

Chicago/Turabian Style: Deng, Ruoling, Weisen Liu, Haitao Liu, Qiang Liu, Jing Zhang, and Mingxin Hou. 2024. "Non-Destructive Measurement of Rice Spikelet Size Based on Panicle Structure Using Deep Learning Method" Agronomy 14, no. 10: 2398. https://doi.org/10.3390/agronomy14102398

APA Style: Deng, R., Liu, W., Liu, H., Liu, Q., Zhang, J., & Hou, M. (2024). Non-Destructive Measurement of Rice Spikelet Size Based on Panicle Structure Using Deep Learning Method. Agronomy, 14(10), 2398. https://doi.org/10.3390/agronomy14102398