1. Introduction

The monitoring and quality control of ginseng (Panax ginseng C. A. Meyer), a product regarded as being at the “top of Chinese medicine”, are particularly important. The appearance and quality of ginseng have mainly been monitored manually [1]. The main drawbacks of this method are that it consumes a large amount of manpower, relies heavily on professionals, and results in a slow grading speed and a low accuracy rate. In addition, the grading standards are difficult to unify and modern management tools are lacking, making it difficult to guarantee the quality of ginseng products [2]. According to the ginseng and deer antler commodity standards [3], the identification points of ginseng [4], a survey on the status quo of commodity specification classification in the Chinese herbal medicine market [5], a study on the quality (grade) standards of ginseng and red ginseng [6], and the evidence provided by Li et al. [7], the shape of the root is the most important factor in determining the quality of ginseng. However, root morphology varies widely, even among plants from the same place of origin, whereas color and texture are affected to a lesser extent [8]. Therefore, ginseng classification mainly focuses on extracting color and texture features, which are more complex and harder to distinguish, for the purpose of grading. In summary, this paper aims to introduce deep learning technology into white ginseng grading to improve the accuracy of white ginseng quality grading.

In recent years, computer vision techniques have played an important role in plant phenotype identification. For example, Kumar et al. [9] proposed a method to identify Indian medicinal plants based on leaf color, area, and edge features, but this work was limited to the detection of mature leaves. Lee et al. [10] proposed a fast Fourier transform method for leaf images based on a distance analysis of the leaf’s contour and center of mass; feeding these features into their system yielded an average recognition accuracy of 97.19%. Kadir et al. [11] proposed a plant recognition method that extracted the texture, shape, and color features of leaves using morphological opening and classified the plants with a probabilistic neural network. Tested on the Flavia dataset, which contains leaves from 32 plant species, the method achieved an average accuracy of 93.75%. Carranza-Rojas et al. [12] fine-tuned a GoogLeNet network to process plant specimens and obtained good results on several datasets. Sun et al. [13] proposed a convolutional neural network (CNN) for herb identification and retrieval and trained a VGGNet-based model, which improved identification accuracy against cluttered backgrounds and made herb identification more suitable for practical applications. However, the average recognition accuracy was 71% and the average retrieval accuracy was 53%, so the accuracy of herb identification still needs to be improved. Liu et al. [14] proposed a deep-learning-based method to distinguish the origin of Astragalus using NIR spectroscopy and a CNN, which simplified data pre-processing while ensuring prediction accuracy and precision. The proposed method showed potential for the non-destructive and accurate assessment of ginseng quality using hyperspectral imaging. Li et al. [15] improved the assessment of ginseng quality by replacing the traditional ReLU (rectified linear unit) activation function and using a self-built dataset with data augmentation. The predictive capability of their model improved by 3.02% compared with the original model, demonstrating that deep learning can effectively classify the appearance quality of herbal medicines.

To date, researchers in China and abroad have designed a variety of deep learning modules with high accuracy and good performance. In 2018, Zhang et al. [16] employed ShuffleNet, a highly efficient CNN architecture that uses channel shuffling to reduce computational complexity while preserving information flow between channel groups; it greatly reduced the computational effort required compared with other models while maintaining comparable accuracy. In 2021, Wightman et al. [17] proposed a structure-based re-parameterization method to reconstruct a solar speckle map and re-parameterize each branch structure, which substantially improved model accuracy and saved computation time. Wang et al. [18] explored the efficient training of VGG-style super-resolution networks using structural re-parameterization and introduced a new re-parameterizable block that achieved superior performance and a better trade-off between performance and runtime than previous methods. Bello et al. [19] combined this improved module with the ConvNeXt backbone network, which has a convolutional structure. Whereas the original approach could only learn adjacent spatial positions of the feature map locally, the combined network was better able to integrate global image information, effectively improving its learning capability. Beyond increasing the depth of CNNs, attention modules have also been combined with long short-term memory (LSTM) networks to achieve better image classification results, demonstrating that fusing high-level feature information across channels can improve a network’s learning ability.

Preliminary experiments have shown that deep CNN technology can be applied to the grading of Chinese herbal medicines, but problems such as slow processing speed, low grading accuracy, and an unstable loss occur; these are the main difficulties in applying neural networks to ginseng grading in production. Therefore, inspired by the high-performance modules described above, this study designs an improved ConvNeXt [20] framework that uses information fusion to increase the network’s processing speed and address ginseng-grading performance problems in the fine-grained task of identifying ginseng appearance quality.

Section 2 of this paper introduces the data pre-processing, Section 3 introduces the improved ConvNeXt network framework, Section 4 focuses on the experimental validation and analysis of the results, and Section 5 summarizes and discusses the findings reported in this paper.

3. Building the Network Model

Ginseng images pose several challenges, including variations in shape, size, and color and the influence of illumination, orientation, and background. These factors make it difficult to classify and grade ginseng images accurately with traditional computer vision techniques. To address these challenges, the ConvNeXt network was selected as the backbone network for this study. The ConvNeXt network combines convolutional and cross-channel parameterization layers to extract features from images. Cross-channel parameterization allows the network to capture more complex relationships between different channels, which is crucial for accurately capturing the distinctive features of ginseng.

3.1. Construction of Ginseng Appearance Quality Grading Model

ConvNeXt was used as the backbone network for ginseng appearance quality classification, and an improved structural re-parameterization module and a channel shuffle method were introduced into the network. The backbone network contained four stages whose block counts are in the ratio 3:3:27:3. The number of channels C in each stage was (128, 256, 512, 1024), and the feature-map sizes were (56 × 56, 28 × 28, 14 × 14, 7 × 7).

In this paper, a connected network structure based on the improved ConvNeXt is proposed, as shown in Figure 2. The network is divided into four stages, A–D:

A-Stage: After the combined online and offline data augmentation, the input image has a size of 224 × 224. A convolution operation reduces the image height and width and increases the number of channels, yielding a feature map $F_1 \in \mathbb{R}^{56 \times 56 \times 128}$.

B-Stage: The feature map enters the modified ConvNeXt block, where it is first processed by the structural re-parameterization module, which merges a relatively small (5 × 5) kernel into a large (13 × 13) kernel via a linear transformation, and then passes through the depthwise convolution (DW) [21]. LayerNorm (LN) is used instead of batch normalization (BN). A 1 × 1 convolution then increases the number of channels, followed by the PReLU activation function and a second 1 × 1 convolution that reduces the number of channels again; the output passes through the LayerScale [22] and DropPath [23] layers and is fused with $F_1$ to obtain Fc-out.

C-Stage: Fc-out is normalized by LayerNorm (LN), and a 2 × 2 convolution then reduces the feature-map size and increases the number of channels. The features pass through the Channel Shuffle module and the ConvNeXt Blocks again; this is repeated over the four stages to extract feature information and yield the final output $F_{\mathrm{out}} \in \mathbb{R}^{7 \times 7 \times 1024}$, with the blocks of the four stages in the ratio 3:3:27:3.

D-Stage: Fout passes through the average pooling layer and is then normalized by LN. The pooled features are passed to the fully connected layer, which completes the classification.
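The stage layout above can be checked with simple arithmetic. The following Python sketch traces feature-map sizes through the four backbone stages using the standard convolution output-size formula. The 4 × 4, stride-4 stem is an assumption borrowed from the standard ConvNeXt design (the text only says "a convolution operation"), the 2 × 2 down-sampling convolutions are assumed to use stride 2, and the helper names are hypothetical.

```python
def conv_out_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_stages(image_size=224, dims=(128, 256, 512, 1024), depths=(3, 3, 27, 3)):
    """Return (height, width, channels, blocks) for each of the four backbone stages.

    Assumes a 4x4 stride-4 stem (standard ConvNeXt) and 2x2 stride-2
    down-sampling convolutions between stages.
    """
    size = conv_out_size(image_size, kernel=4, stride=4)  # stem (assumed)
    shapes = []
    for i, (channels, blocks) in enumerate(zip(dims, depths)):
        if i > 0:
            size = conv_out_size(size, kernel=2, stride=2)  # down-sampling layer
        shapes.append((size, size, channels, blocks))
    return shapes


if __name__ == "__main__":
    for h, w, c, n in trace_stages():
        print(f"{h} x {w} x {c}, {n} blocks")
```

Running it prints one line per stage; the sizes match the (56 × 56, 28 × 28, 14 × 14, 7 × 7) feature maps and (128, 256, 512, 1024) channels listed in Section 3.1.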

3.2. Improving the ConvNeXt Model

3.2.1. Introduction of the Channel Shuffle Module

To enhance cross-channel information interaction between multilayer features at different scales, the ConvNeXt module incorporates a channel shuffle operation. This operation improves the network’s ability to capture and integrate information from different channels, enabling better feature representation of ginseng images. Furthermore, to improve computational efficiency, the ConvNeXt module uses group convolution for the 1 × 1 convolutions. This approach improves both the efficiency and the accuracy of network recognition. It is particularly beneficial for grading fine-grained ginseng images, where phenotypic differences between classes in the dataset may be limited. A schematic diagram of the channel shuffle operation is shown in Figure 3. In the figure, group a shows the input feature maps grouped by channel and convolved within each group, so that no information passes between groups, which reduces the expressiveness of the feature maps. In contrast, the channel shuffle operation (groups b and c) mixes the features of each group with those of the other groups. Channel shuffling ensures that the subsequent grouped convolution takes its input from different groups, so that information can flow between groups.
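The channel shuffle operation can be illustrated on channel indices alone. The sketch below is a minimal illustration in plain Python, not the authors' implementation; it follows the ShuffleNet recipe of viewing the C channels as a (groups, C/groups) grid, transposing it, and flattening it again. The function name `channel_shuffle` is hypothetical.

```python
def channel_shuffle(channels, groups):
    """Reorder a flat list of channels: reshape to (groups, n), transpose, flatten."""
    n_total = len(channels)
    if n_total % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n_total // groups
    # channels[g * per_group + i] is channel i of group g; emitting them
    # i-major interleaves one channel from every group into each new group.
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


print(channel_shuffle(list(range(6)), 3))
```

For example, `channel_shuffle(list(range(6)), 3)` returns `[0, 2, 4, 1, 3, 5]`, so each new group of channels draws one channel from every original group. On PyTorch tensors the same reordering is commonly written as `x.view(b, g, c // g, h, w).transpose(1, 2).reshape(b, c, h, w)`.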

3.2.2. Improved Re-Parameterization Module

Structural re-parameterization [24,25,26,27,28] is a method that equivalently transforms the model structure by transforming its parameters, which improves the representational power of the model and thus enables efficient fine-grained grading without a loss of accuracy. Structural re-parameterization methods are widely used in neural network modules such as convolution (Conv), average pooling layers, and residual connections. Conv converts the input features $I \in \mathbb{R}^{C \times H \times W}$ to the output $O \in \mathbb{R}^{C' \times H' \times W'}$. The formula is as follows:

$$O = \mathrm{Conv}(I; \mathbf{W}, \mathbf{b}) = \mathbf{W} \ast I + \mathbf{b}$$

where C, H, and W are the input channel, height, and width, respectively. $\mathbf{W} \in \mathbb{R}^{C' \times C \times K \times K}$ and $\mathbf{b} \in \mathbb{R}^{C'}$ are the parameters of the convolution operator, and the values of $H'$ and $W'$ depend on several variables, including the kernel size, padding, and stride. Because the convolution operator is additive, several branches applied to the same input (with smaller kernels zero-padded to a common size) can be combined into a single convolution:

$$\mathrm{Conv}(I; \mathbf{W}_1, \mathbf{b}_1) + \mathrm{Conv}(I; \mathbf{W}_2, \mathbf{b}_2) = \mathrm{Conv}(I; \mathbf{W}_1 + \mathbf{W}_2, \mathbf{b}_1 + \mathbf{b}_2)$$

Table 3 summarizes the detailed operation space. RepVGG [24] proposes an extended 3 × 3 Conv that includes a 1 × 1 Conv and a residual (identity) connection; DBB [24] proposes a diverse branch block to replace the original K × K Conv; and RepNAS [29] uses neural architecture search (NAS) to search for DBB branches.
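The additivity property, and the way a 5 × 5 kernel can be folded into a 13 × 13 kernel, can be checked numerically. The following plain-Python sketch assumes a single channel, stride 1, no bias, and "same" zero padding for brevity (the module in Figure 4 operates on multi-channel depthwise convolutions). Summing the outputs of the two branches gives exactly the same result as one convolution whose kernel is the 13 × 13 kernel plus the zero-padded 5 × 5 kernel. All function names are hypothetical.

```python
import random


def conv2d_same(x, k):
    """Single-channel 2D cross-correlation, stride 1, zero 'same' padding (odd k)."""
    h, w, ks = len(x), len(x[0]), len(k)
    p = ks // 2
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0
            for u in range(ks):
                for v in range(ks):
                    r, c = i + u - p, j + v - p
                    if 0 <= r < h and 0 <= c < w:  # zero padding outside the map
                        s += k[u][v] * x[r][c]
            out[i][j] = s
    return out


def pad_kernel(k, size):
    """Zero-pad an odd square kernel symmetrically to size x size (keeps it centred)."""
    off = (size - len(k)) // 2
    big = [[0] * size for _ in range(size)]
    for u, row in enumerate(k):
        for v, val in enumerate(row):
            big[u + off][v + off] = val
    return big


def add(a, b):
    """Element-wise sum of two equally sized 2D lists."""
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def random_matrix(rng, n, m):
    return [[rng.randint(-3, 3) for _ in range(m)] for _ in range(n)]


rng = random.Random(0)
x = random_matrix(rng, 9, 9)          # toy single-channel feature map
k_small = random_matrix(rng, 5, 5)    # 5 x 5 branch
k_large = random_matrix(rng, 13, 13)  # 13 x 13 branch

two_branches = add(conv2d_same(x, k_small), conv2d_same(x, k_large))
merged = conv2d_same(x, add(pad_kernel(k_small, 13), k_large))
print(two_branches == merged)  # integer arithmetic, so the match is exact
```

Centred zero-padding is what makes the merge exact: with "same" padding, the padded 5 × 5 kernel touches exactly the same input pixels with the same weights as the original one.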

Figure 4 illustrates the enhanced re-parameterization module, which incorporates kernels of 5 × 5 and 13 × 13. The very large kernel enlarges the receptive field, while the smaller kernel captures specific patterns within a smaller range, leading to improved performance. In addition, the convolutional layers in the module employ batch normalization (BN) and a weight-sharing strategy that treats the entire feature map as neurons, which further enhances the accuracy of the optimized module. The experimental results show that the accuracy of the optimized module is approximately 5 percentage points higher than that of the conventional ConvNeXt network.
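In RepVGG-style re-parameterization, each branch's BN is folded into the preceding convolution before the branches are summed. For one output channel with bias-free convolution weights W and BN statistics μ, σ², scale γ, and shift β, the fused parameters are W′ = (γ / √(σ² + ε))·W and b′ = β − γμ / √(σ² + ε). The plain-Python sketch below checks this on a single dot product (one output pixel); the numbers and the `fuse_bn` name are hypothetical, and whether the authors fold BN in exactly this way is an assumption.

```python
import math


def fuse_bn(weights, gamma, beta, mean, var, eps=1e-5):
    """Fold an inference-mode BatchNorm into the preceding bias-free convolution (one channel)."""
    scale = gamma / math.sqrt(var + eps)
    fused_w = [w * scale for w in weights]
    fused_b = beta - mean * scale
    return fused_w, fused_b


def dot(w, x):
    return sum(a * b for a, b in zip(w, x))


# Hypothetical values: flattened kernel, one input patch, and BN statistics.
w = [0.5, -1.0, 2.0, 0.25]
patch = [1.0, 2.0, -0.5, 4.0]
gamma, beta, mean, var = 1.5, 0.1, 0.3, 2.0

conv_then_bn = gamma * (dot(w, patch) - mean) / math.sqrt(var + 1e-5) + beta
fw, fb = fuse_bn(w, gamma, beta, mean, var)
fused = dot(fw, patch) + fb
print(abs(conv_then_bn - fused) < 1e-12)
```

After folding, every branch is a plain convolution with a bias, so the additivity rule above merges them into a single kernel for inference.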

3.2.3. Using the PReLU Activation Function

The original ConvNeXt network used GELU (Gaussian Error Linear Unit) [30] as the activation function, but more activation functions have emerged over time. GELU incorporates the idea of stochastic regularization and is a smoother variant of ReLU (Rectified Linear Unit) [31]. It takes the form

$$\mathrm{GELU}(x) = x\,P(X \le x) = x\,\Phi(x) = \frac{x}{2}\left[1 + \mathrm{erf}\left(\frac{x}{\sqrt{2}}\right)\right]$$

where x is the neuron input, X is a standard normal random variable, and Φ is its cumulative distribution function. The larger x is, the more likely it is that x is retained in the activation output, and the smaller x is, the more likely it is that the activation result will be 0.

The GELU activation function introduces the idea of stochastic regularization to alleviate the vanishing gradient problem, but it slows convergence in its saturation region. The ReLU function, defined as $f(x) = \max(0, x)$, has the problem that when the input is less than zero, the gradient is zero and the neuron cannot learn via backpropagation. The Sigmoid function is calculated as $\sigma(x) = 1/(1 + e^{-x})$, where x is the input value, and its output range is (0, 1). However, the Sigmoid function suffers from gradient saturation: the gradient approaches zero for large positive or negative inputs, leading to vanishing gradients. Hence, this study uses the PReLU (Parametric Rectified Linear Unit) activation function, defined as

$$f(x) = \begin{cases} x, & x > 0 \\ a x, & x \le 0 \end{cases}$$

where $a$ is a learnable slope. The corresponding curve is shown in Figure 5. The PReLU activation function avoids the saturation of GELU at x < 0, transmits ginseng feature information more efficiently, and increases the nonlinear expressiveness of the ConvNeXt network model.
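The four activation functions compared in this paper can be written directly from their definitions. The sketch below uses only the Python standard library; GELU uses the exact Gaussian CDF via `math.erf` rather than the tanh approximation, and the PReLU slope `a = 0.25` is an assumed value (the default initial value of PyTorch's `nn.PReLU`), whereas in the network the slope is learned during training.

```python
import math


def gelu(x):
    """GELU(x) = x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def prelu(x, a=0.25):
    """PReLU: identity for x > 0, slope a (learnable in the network) for x <= 0."""
    return x if x > 0 else a * x


def sigmoid_grad(x):
    """Derivative of the sigmoid; it vanishes for large |x| (saturation)."""
    s = sigmoid(x)
    return s * (1.0 - s)


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  GELU={gelu(x):+.4f}  ReLU={relu(x):+.4f}  "
          f"Sigmoid={sigmoid(x):.4f}  PReLU={prelu(x):+.4f}")
```

For negative inputs, ReLU outputs zero and GELU tends to zero, whereas PReLU keeps a non-zero slope, which is the property the comparison in Section 4.4 relies on.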

4. Experimental Validation and Analysis of the Results

4.1. Test Configuration Environment

We implemented our approach in PyTorch. The experimental workstation had a Xeon 4210 processor (8-core, 2.45 GHz; Intel, Santa Clara, CA, USA) and 64 GB of memory. The GPU was an NVIDIA GeForce GTX 1080 Ti (NVIDIA, Santa Clara, CA, USA) with 11 GB of video memory. The software environment was Ubuntu 16.04, Python 3.7.0, PyTorch 1.10.1, and CUDA 10.2.

4.2. Experimental Training Process

In addition to the design of the network architecture, the training process also affects the final performance. The Vision Transformer [32], on which the original ConvNeXt design draws, brought not only a new set of modules and architectural design decisions but also different training techniques (e.g., the AdamW optimizer). These mainly concern the optimization strategy and the associated hyperparameter settings. Thus, the first step of our experiments was to train the baseline model, ConvNeXt_base, using the Vision Transformer training procedure. It has been shown [33] that the performance of a simple ResNet-50 model can be significantly improved using modern training techniques. The training parameters of the optimized ConvNeXt network model used in the present study are shown in Table 4. For optimization, we used the AdamW [34] optimizer.
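For reference, AdamW differs from Adam with L2 regularization in that weight decay is applied directly to the parameters rather than added to the gradient. The scalar sketch below illustrates the update rule; the hyperparameter defaults (learning rate 1e-3, betas (0.9, 0.999), ε = 1e-8, weight decay 0.05) are placeholders rather than the values in Table 4, and `adamw_step` is a hypothetical helper. In PyTorch, the optimizer itself is available as `torch.optim.AdamW`.

```python
import math


def new_state():
    """Per-parameter optimizer state: step count and first/second moment estimates."""
    return {"t": 0, "m": 0.0, "v": 0.0}


def adamw_step(param, grad, state, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.05):
    """Apply one AdamW update to a scalar parameter and return the new value."""
    beta1, beta2 = betas
    state["t"] += 1
    t = state["t"]
    # Decoupled weight decay: shrink the weight directly instead of adding wd * param to grad.
    param = param * (1.0 - lr * weight_decay)
    # Exponential moving averages of the gradient and squared gradient.
    state["m"] = beta1 * state["m"] + (1.0 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1.0 - beta2) * grad * grad
    # Bias correction for the zero-initialised moments.
    m_hat = state["m"] / (1.0 - beta1 ** t)
    v_hat = state["v"] / (1.0 - beta2 ** t)
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)


state = new_state()
w = 1.0
for g in (2.0, 1.5, -0.5):  # hypothetical gradients for three steps
    w = adamw_step(w, g, state)
    print(f"step {state['t']}: w = {w:.6f}")
```

With a zero gradient, only the decoupled decay acts, shrinking the weight by the factor (1 − lr · weight_decay).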

4.3. Model Evaluation

In order to comprehensively measure the effectiveness of the proposed ConvNeXt network, accuracy (Acc), recall (Rec), precision (Pre), and specificity (Spe) were used as evaluation metrics, calculated as follows:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}, \quad \mathrm{Rec} = \frac{TP}{TP + FN}, \quad \mathrm{Pre} = \frac{TP}{TP + FP}, \quad \mathrm{Spe} = \frac{TN}{TN + FP}$$

where

TP is the number of samples correctly predicted as positive samples, i.e., the number of accurately identified ginseng specimens;

TN is the number of samples correctly predicted as negative samples, i.e., the number of other accurately identified ginseng specimens;

FP is the number of samples incorrectly predicted as positive samples, i.e., the number of incorrectly identified ginseng specimens; and

FN is the number of samples incorrectly predicted as negative samples, i.e., the number of ginseng specimens identified as other grades.
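For a three-grade task, these metrics are usually computed one class at a time (the grade in question as positive, the other two grades as negative) and then averaged. The following sketch does this from a confusion matrix. It assumes rows are true grades and columns are predicted grades (the transpose of the axis layout used in Figure 7) and uses macro averaging; the matrix values are hypothetical, and whether Tables 6 and 7 report macro-averaged values is an assumption.

```python
def one_vs_rest_metrics(cm, k):
    """Acc, Rec, Pre, Spe for class k, treating all other classes as negative."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                   # true class k, predicted otherwise
    fp = sum(row[k] for row in cm) - tp    # predicted k, true class otherwise
    tn = total - tp - fn - fp
    return {
        "Acc": (tp + tn) / total,
        "Rec": tp / (tp + fn),
        "Pre": tp / (tp + fp),
        "Spe": tn / (tn + fp),
    }


def macro_average(cm):
    """Unweighted mean of the per-class metrics."""
    per_class = [one_vs_rest_metrics(cm, k) for k in range(len(cm))]
    return {name: sum(m[name] for m in per_class) / len(per_class) for name in per_class[0]}


def overall_accuracy(cm):
    """Fraction of all samples on the diagonal (multi-class accuracy)."""
    return sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)


# Hypothetical counts; rows = true (extra, first, second), columns = predicted.
cm = [[8, 1, 1],
      [0, 9, 1],
      [1, 0, 9]]
print(one_vs_rest_metrics(cm, 0))
print(macro_average(cm))
print(overall_accuracy(cm))
```

Note that the one-vs-rest accuracy of a single class (27/30 for class 0 here) differs from the overall multi-class accuracy (26/30), so reports should state which one is used.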

4.4. Impact of the Activation Function on Model Performance

The activation function is crucial in neural networks and has a significant impact on model performance. In this experiment, the original GELU activation function was replaced with three activation functions, namely, Sigmoid [35], ReLU, and PReLU, for comparison. The results show that GELU takes longer to train, while Sigmoid and ReLU differ markedly from PReLU. ReLU causes neurons with negative inputs to die (their gradient is always zero), and Sigmoid cannot cope with feature differences in the training data.

To solve these problems and improve efficiency, PReLU is used instead of GELU in this paper. As shown in Table 5, compared with the original model, PReLU improves the accuracy by 2.77%, reduces the loss value by 0.033, and saves 3.08 s of training time. PReLU alleviates the vanishing gradient problem when training deep networks and improves training efficiency. At the same time, PReLU retains more detailed information that helps to maximize inter-grade differences, such as texture, line, and color, so that hard-to-capture features are extracted more quickly, yielding better generalization and recognition and significantly improving the model’s performance.

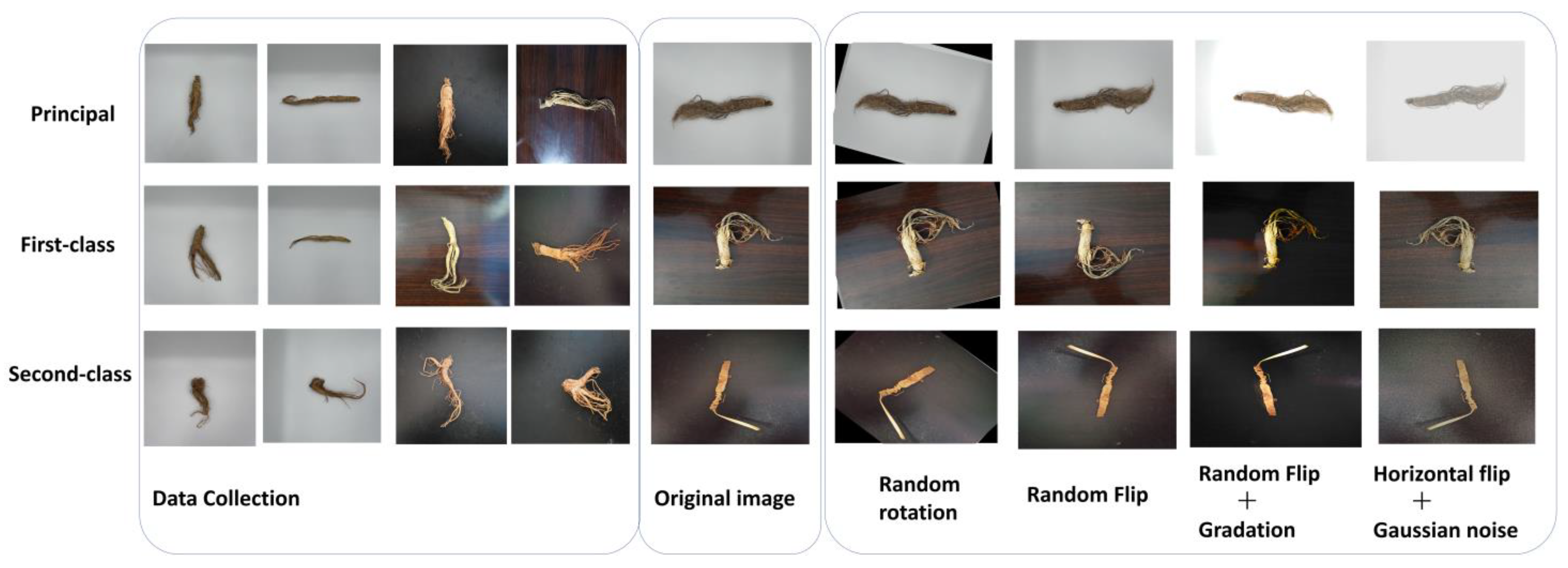

4.5. Impact of Data Enhancement on Model Performance

To enhance the recognition performance of the new network model and address the homogeneous backgrounds in the ginseng dataset, this study employs five data augmentation methods to improve the model’s generalization and robustness: (1) random rotation and flipping; (2) random flipping combined with a sharpening effect; (3) horizontal flipping combined with Gaussian blur; (4) adding a black leather background and a reddish-brown wood-grain background; and (5) an online augmentation method using the Python Imaging Library (PIL), in which each image is uniformly cropped to 256 × 256 pixels before each training round and then processed with center cropping.
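The cropping arithmetic of method (5) and the flips used in methods (1) to (3) can be sketched without any imaging library. The plain-Python helpers below are hypothetical; the 224 × 224 target size is an assumption taken from the network input described in Section 3.1, and the box they return follows the (left, upper, right, lower) convention accepted by PIL's `Image.crop`.

```python
def center_crop_box(width, height, size):
    """(left, upper, right, lower) box for a centred size x size crop."""
    left = (width - size) // 2
    top = (height - size) // 2
    return (left, top, left + size, top + size)


def hflip(img):
    """Horizontal flip of an image stored as a list of pixel rows."""
    return [row[::-1] for row in img]


def vflip(img):
    """Vertical flip: reverse the order of the rows."""
    return img[::-1]


def center_crop(img, size):
    """Centre-crop a list-of-rows image using the same box arithmetic."""
    left, top, right, bottom = center_crop_box(len(img[0]), len(img), size)
    return [row[left:right] for row in img[top:bottom]]


print(center_crop_box(256, 256, 224))  # (16, 16, 240, 240)
```

With Pillow, `Image.open(path).crop(center_crop_box(256, 256, 224))` would perform the same centre crop on a 256 × 256 image.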

Using the new network as the experimental model, the pre-expansion dataset (1680 images) and the post-expansion dataset (5116 images) were compared with all other parameters held constant. As shown in Figure 6, the test accuracies were 78.67% and 85.97%, respectively, an increase of 7.3 percentage points.

4.6. Impact of Different Module Combinations on the Experimental Results

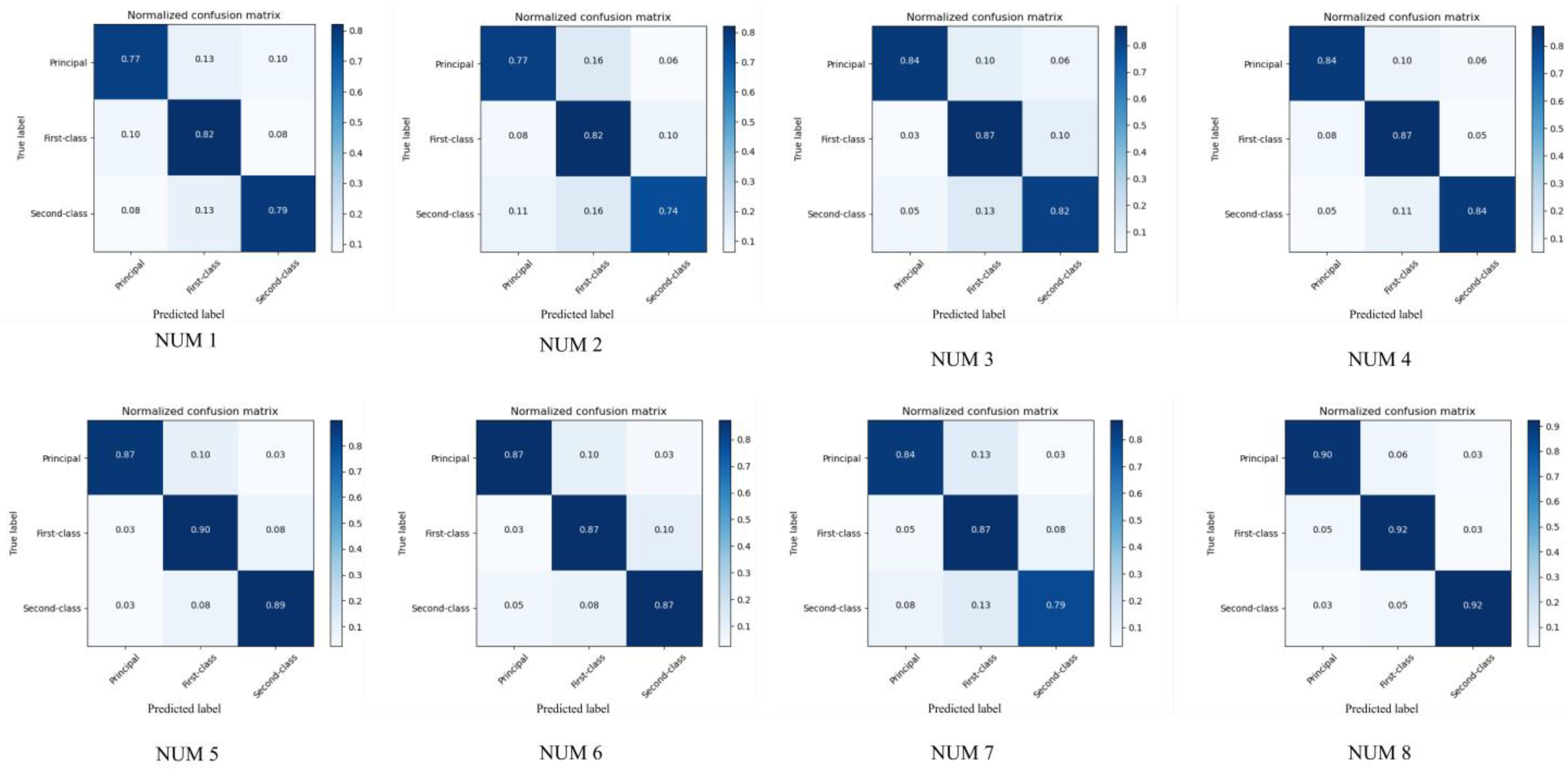

The identification results of the different module combinations on the test set are shown in Table 6, where the “↑” symbol indicates the improvement in accuracy over the original ConvNeXt model. For NUM 3, NUM 5, NUM 6, and NUM 8, the recognition accuracies were 90.12%, 92.59%, 91.76%, and 94.44%, respectively. Compared with the other three models, the NUM 8 model located and recognized ginseng features more accurately, effectively improved the accuracy of the ConvNeXt model, better balanced the grading results across the different ginseng grades, and achieved accurate identification of ginseng grades.

As shown in Table 6, incorporating the Channel Shuffle module, the re-parameterization module, and the PReLU module effectively alleviates the lower accuracy and precision of the original ConvNeXt model, making it more suitable for ginseng grade classification. Among the individual modules, the re-parameterization module has the greatest effect on overall identification, with the model’s accuracy, recall, and specificity reaching 90.12%, 85.09%, and 85.23%, respectively. Among the two-module combinations, the Channel Shuffle module together with the re-parameterization module has the best effect, improving accuracy by 7.4% over the original model. Meanwhile, the combination of PReLU and the re-parameterization module has the smallest effect on accuracy, improving it by only 3.7%, which suggests that the Channel Shuffle module offers a competitive advantage, with strong robustness and generalization ability.

Figure 7 shows the confusion matrices corresponding to Table 6, where Principal denotes extra-grade ginseng, First-class denotes first-class ginseng, and Second-class denotes second-class ginseng; the horizontal axis represents the true class and the vertical axis the predicted class. According to the confusion matrix, the original model’s accuracy in identifying Principal and Second-class ginseng is only 75%.

Including the PReLU activation function module (NUM4) improved the grading performance only for second-class ginseng. There was no improvement for extra-grade or first-class ginseng; in fact, this module increased the misclassification rate for extra-grade ginseng. When both the PReLU activation function module and the re-parameterization module were introduced (NUM7), performance decreased compared with the configuration combining the PReLU activation function module and the Channel Shuffle module (NUM6): the accuracy of the Principal and Second-class classifications decreased by 0.03% and 0.08%, respectively, and there was no improvement for First-class ginseng. Incorporating all three improved modules (NUM8) significantly improved the grading of both first-class and second-class ginseng and effectively reduced the misclassification rate, so that the recognition accuracy for every ginseng grade exceeded 90%. This demonstrates the superior recognition and generalization performance of the model and shows that the three modules together enable it to accurately distinguish the different grades of ginseng.

We analyzed the accuracy and loss curves of the improved ConvNeXt network. During training, the loss decreased and the accuracy increased rapidly over the first 75 epochs, after which the curves gradually flattened. At approximately 110 epochs, both the accuracy and loss curves leveled off, indicating that the improved ConvNeXt model had saturated and reached its highest recognition accuracy. The loss and accuracy curves follow consistent trends, indicating that the model converges well without obvious overfitting, which supports the effectiveness of the improved ConvNeXt model.

4.7. Comparison of Experimental Models with Mainstream Networks

Classical networks were trained and tested on the ginseng dataset using the frameworks and parameter settings described in their original papers. The four performance metrics in Table 7 demonstrate the good classification performance of the improved ConvNeXt network on the ginseng dataset.

Overall, the ConvNeXt network before improvement outperformed ResNet-50 [35], ResNet-101 [36], DenseNet-121 [37], and InceptionV3 [38] by 2.47%, 1.86%, 0.62%, and 4.94%, respectively, with a recall of 78.06%, indicating that the original ConvNeXt network gives more stable results and performs better than these conventional networks.

Compared with the original ConvNeXt model, the improved ConvNeXt network has 9.25%, 13.86%, 12.98%, and 7.02% higher accuracy, precision, recall, and specificity, respectively, and several of its metrics are better than those of the Vision Transformer and Swin Transformer networks. Its accuracy reaches 94.44%, for three main reasons. First, channel shuffling is added after the down-sampling layers of the backbone network, so that channel features are fully fused and the network’s accuracy improves. Second, adding the re-parameterization module to the ConvNeXt Block maintains the network’s high accuracy. Finally, the PReLU activation function increases the nonlinear expressiveness of the model and improves its training efficiency. The model can provide a valuable reference for subsequent applications in ginseng appearance quality recognition.

4.8. Comparison of the Experimental Model and Expert Identification Results

The accuracy of expert identification and of the ConvNeXt model is compared in Table 8. In the ginseng-grading task, the ConvNeXt model achieved high accuracy for all grades. Compared with expert identification, the model was 7.86 percentage points more accurate for extra-grade ginseng, 8.71 percentage points for first-grade ginseng, and 3.30 percentage points for second-grade ginseng. This indicates that the ConvNeXt model has a clear advantage in ginseng-grading accuracy over expert identification and can better capture the features of ginseng images for effective grading.

The experimental model’s high accuracy in ginseng-grading tasks highlights its practical importance. With the ConvNeXt model, the various grades of ginseng can be classified accurately, eliminating the subjectivity and human bias associated with relying on expert identification alone. Moreover, the model’s efficiency enables rapid ginseng-grade identification, significantly improving work efficiency. These results demonstrate the necessity and effectiveness of the model for the difficult problem of ginseng grading and provide an accurate and efficient solution to this task.

5. Conclusions

We found that although generic classification models achieve high accuracy on public datasets, different application scenarios have different characteristics and needs. In specific scenarios such as the one described in this paper, where ginseng only needs to be classified into three highly similar grades, a generic model cannot reach the performance level it attains on general classification tasks. The confusion matrix of the original model shows that second-class ginseng has the lowest recognition accuracy, which also indicates that, on highly similar ginseng datasets, the model’s feature extraction capability needs to be further improved to provide better recognition results.

In conclusion, this study addresses the specific challenge of classifying ginseng grades and proposes a ConvNeXt model that fuses Channel Shuffle with an improved re-parameterization module. The model effectively addresses the grading of ginseng across grades, achieving accurate and efficient ginseng-grade recognition. The analysis of data augmentation, the Channel Shuffle module, the structural re-parameterization module, the activation function, and the improved ConvNeXt model yielded the following key findings:

Data augmentation improves accuracy by 7.3 percentage points (from 78.67% to 85.97%), validating the effectiveness of this technique.

Embedding the Channel Shuffle module after the down-sampling layer improves the model’s accuracy through enhanced cross-channel information interaction.

The optimized structural re-parameterization module increases the model’s feature extraction capability, contributing to improved performance.

Using the PReLU activation function instead of GELU improves accuracy by 2.77% and reduces the loss value by 0.033, indicating that it alleviates the vanishing gradient problem and enhances detailed feature information.

The improved ConvNeXt model achieves an accuracy of 94.44% on the test set, outperforming common classification models such as ResNet-50, ResNet-101, DenseNet-121, and InceptionV3 by significant margins and demonstrating its generalization ability and robustness in accurately identifying ginseng grades.

In addition, the improved ConvNeXt model showed a high degree of agreement with expert identification results in classifying ginseng grades, indicating its reliability and effectiveness in ginseng-grading tasks. To further enhance the model’s reliability and adaptability, future work should expand the dataset to cover a wider range of ginseng species and appearance features. Integrating additional relevant information, such as geographical origin, cultivation methods, and processing techniques, could also enhance the model’s understanding and predictive ability. By continually refining the dataset and incorporating diverse information, the model’s robustness, performance, and applicability could be further improved, providing valuable technical support for the intelligent grading of ginseng.