Fuzzy Comprehensive Evaluation for Grasping Prioritization of Stacked Fruit Clusters Based on Relative Hierarchy Factor Set

Abstract

1. Introduction

2. Materials and Methods

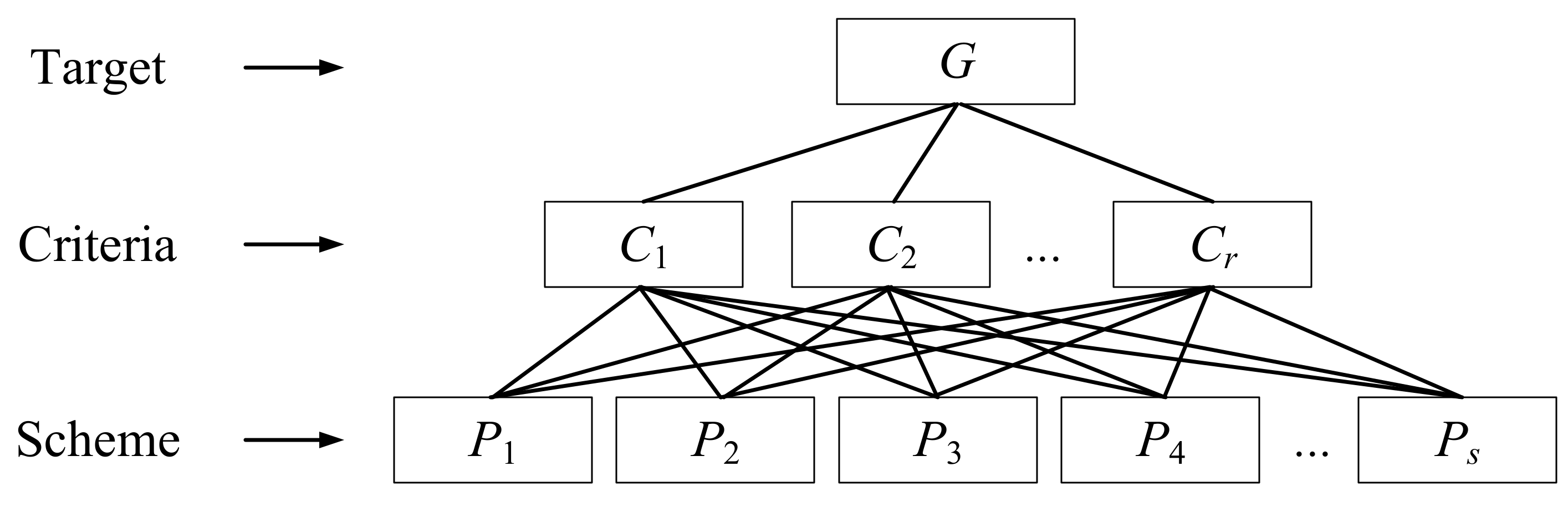

2.1. Existing Fuzzy Comprehensive Evaluation Method

2.2. Improved Fuzzy Comprehensive Evaluation Method Based on Relative Hierarchy Factor Set

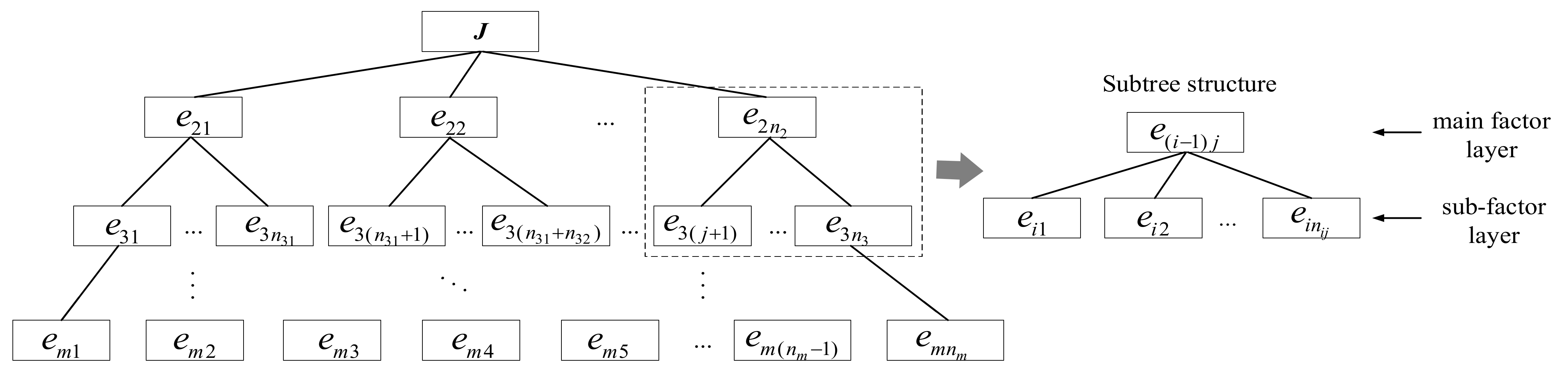

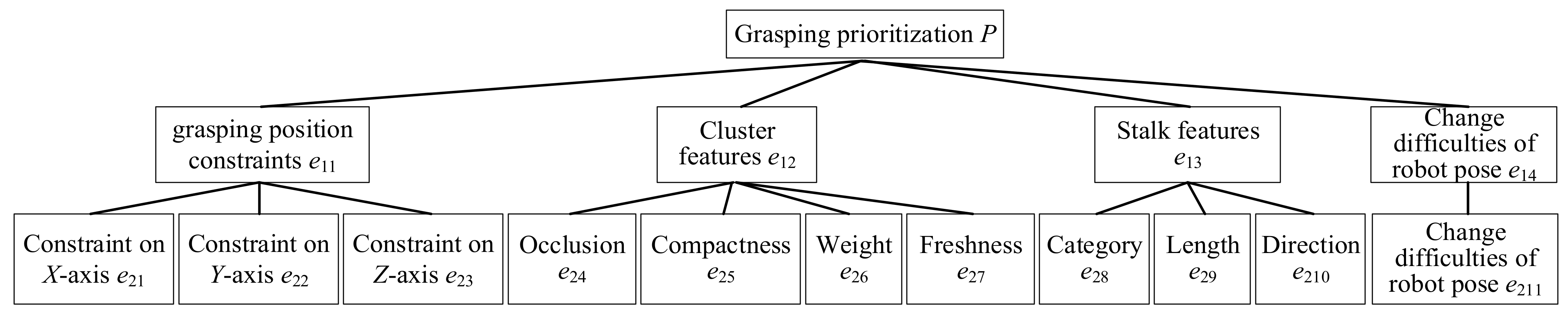

2.2.1. Hierarchical Tree Model without a Cross

2.2.2. Relative Factor Set with Positive and Negative Effects

2.2.3. Forward Construction of Weight Vector

- (1) Construction of a comparison matrix

- (2) Consistency verification of the comparison matrix

- (3) Weight calculation based on the comparison matrix
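The three steps above can be sketched with the standard analytic hierarchy process. This is a minimal illustration under stated assumptions: it uses Saaty's commonly cited random index values and the usual 0.1 acceptance threshold, not the expectation-based consistency index proposed in this paper, and the names `ahp_weights`, `consistency_ratio`, and the example matrix are hypothetical.

```python
from typing import List, Tuple

# Saaty's commonly cited random index values (assumption: the paper
# constructs its own index from mathematical expectations instead).
SAATY_RI = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix: List[List[float]], iterations: int = 100) -> Tuple[List[float], float]:
    """Return (weights, lambda_max) for a positive reciprocal comparison matrix.

    The principal eigenvector is approximated by power iteration.
    """
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        weights = [value / total for value in product]
    # Estimate the principal eigenvalue as the mean of (A w)_i / w_i.
    product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(product[i] / weights[i] for i in range(n)) / n
    return weights, lambda_max


def consistency_ratio(lambda_max: float, n: int) -> float:
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); defined as 0 for n <= 2."""
    if n <= 2:
        return 0.0
    ci = (lambda_max - n) / (n - 1)
    return ci / SAATY_RI[n]


# Hypothetical 3x3 comparison matrix built from the weights (0.6, 0.3, 0.1),
# so it is perfectly consistent by construction.
comparison = [
    [1.0, 2.0, 6.0],
    [1 / 2, 1.0, 3.0],
    [1 / 6, 1 / 3, 1.0],
]
weights, lambda_max = ahp_weights(comparison)
cr = consistency_ratio(lambda_max, len(comparison))
is_consistent = cr < 0.1  # conventional acceptance threshold (assumption)
print([round(w, 3) for w in weights], is_consistent)
```

Because the example matrix is consistent by construction, the recovered weights match the ones used to build it and the consistency ratio is numerically zero. A matrix filled in from expert judgment would generally give a positive ratio.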

2.2.4. Inverse Calculation of the Membership Matrix and Comprehensive Evaluation Value

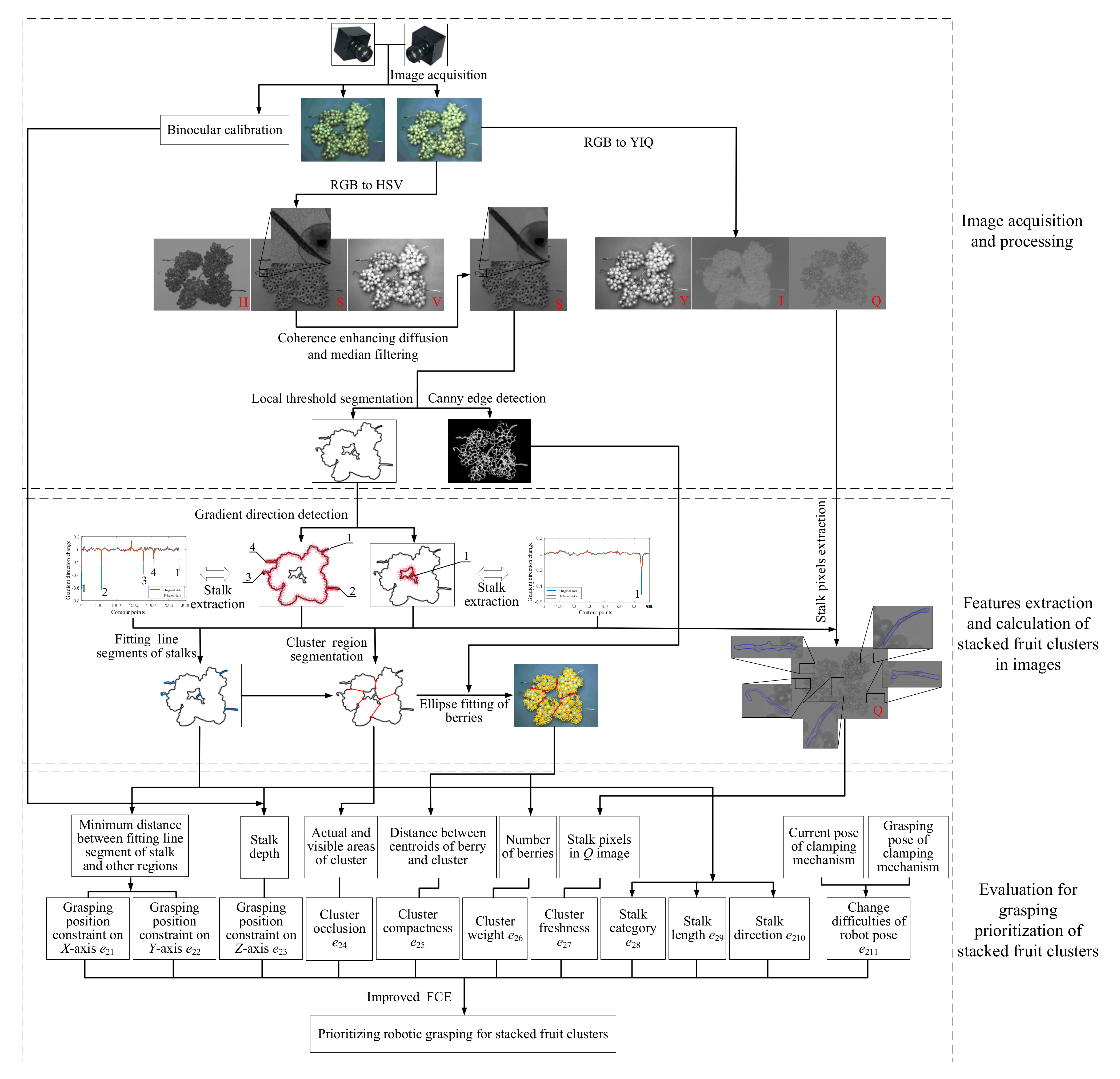

2.3. Prioritizing Robotic Grasping for Stacked Fruit Clusters Based on Improved FCE

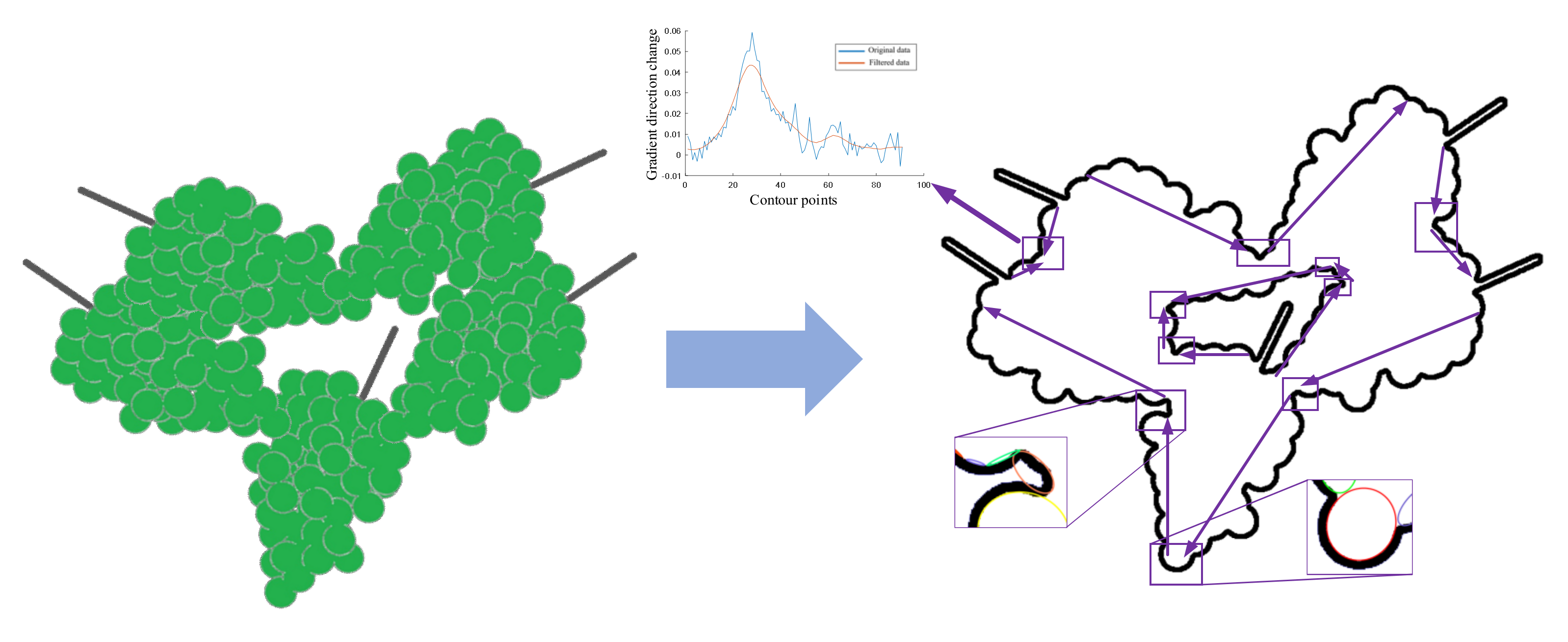

2.3.1. Image Acquisition and Processing

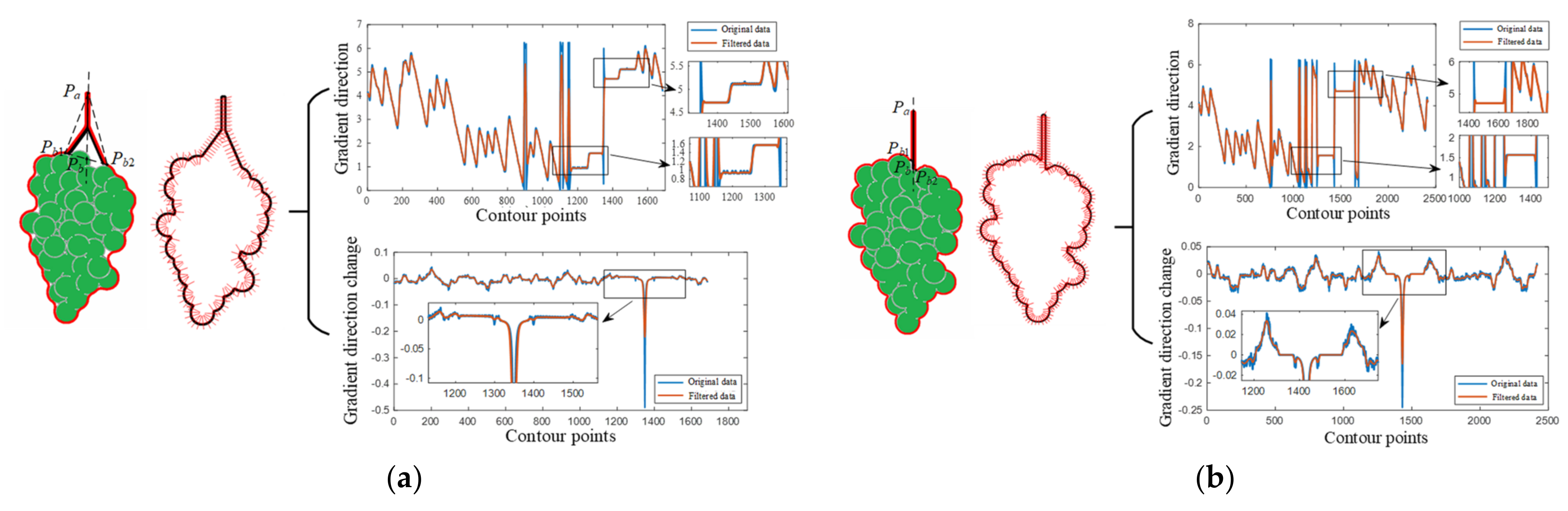

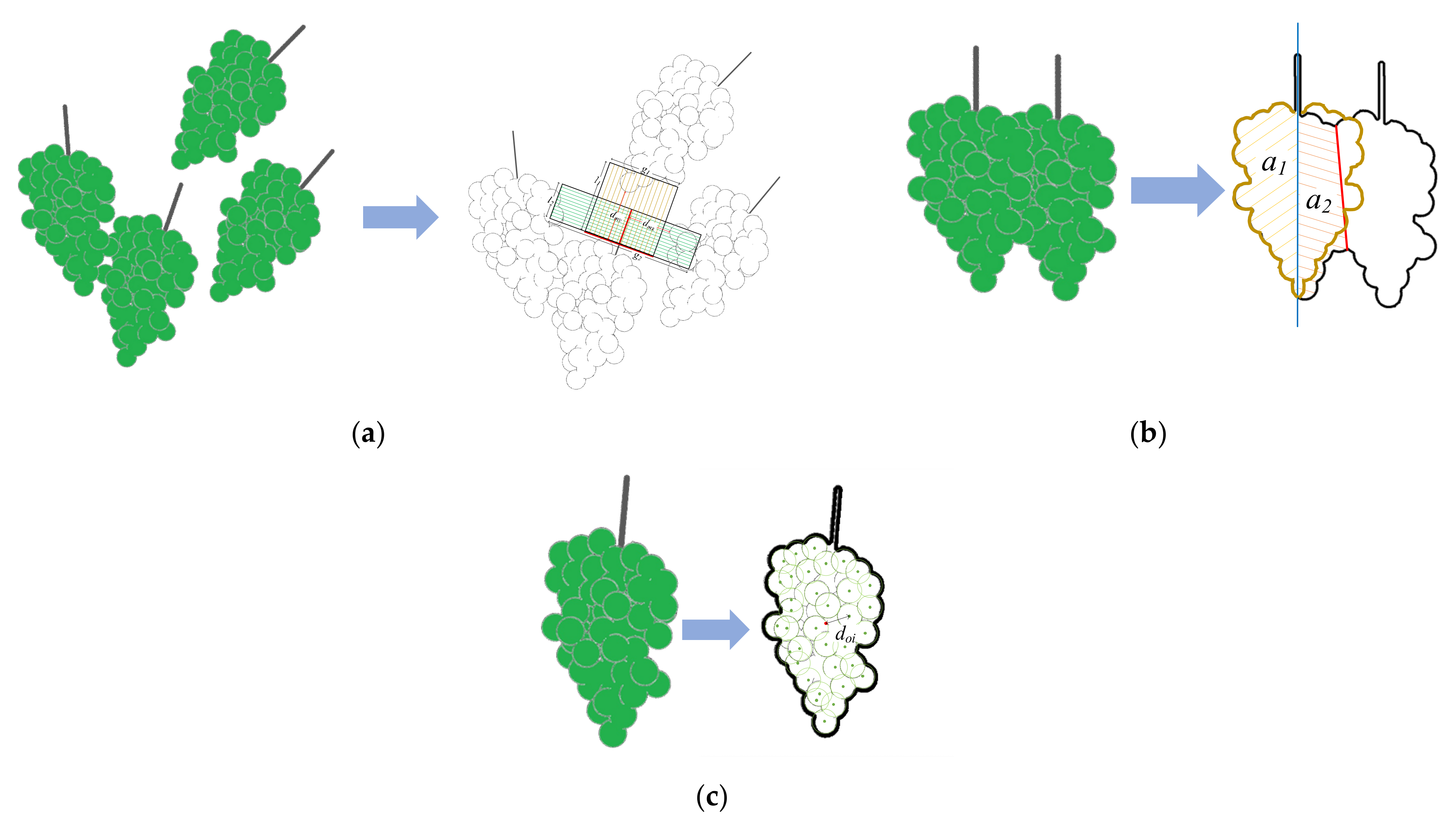

2.3.2. Features Extraction and Calculation of Stacked Fruit Clusters in Images

2.3.3. Evaluation for Grasping Prioritization of Stacked Fruit Clusters

3. Results

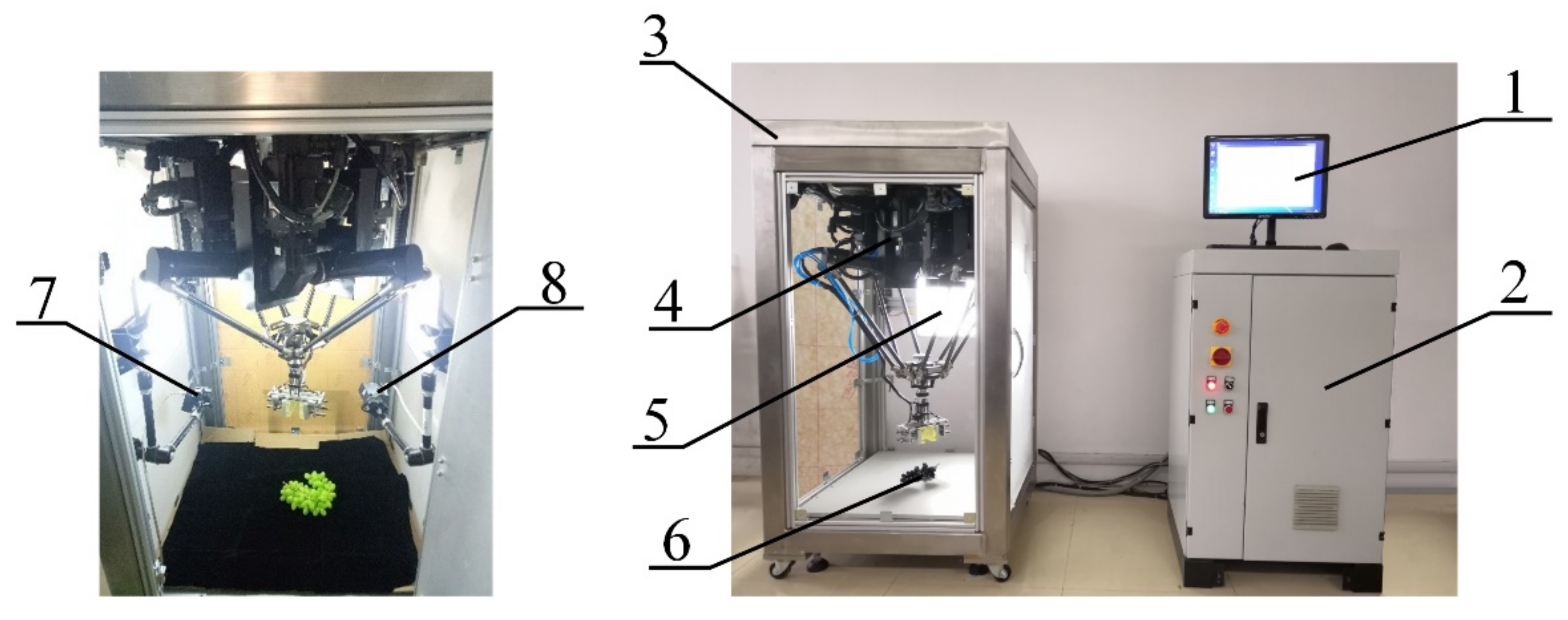

3.1. Experimental Platform

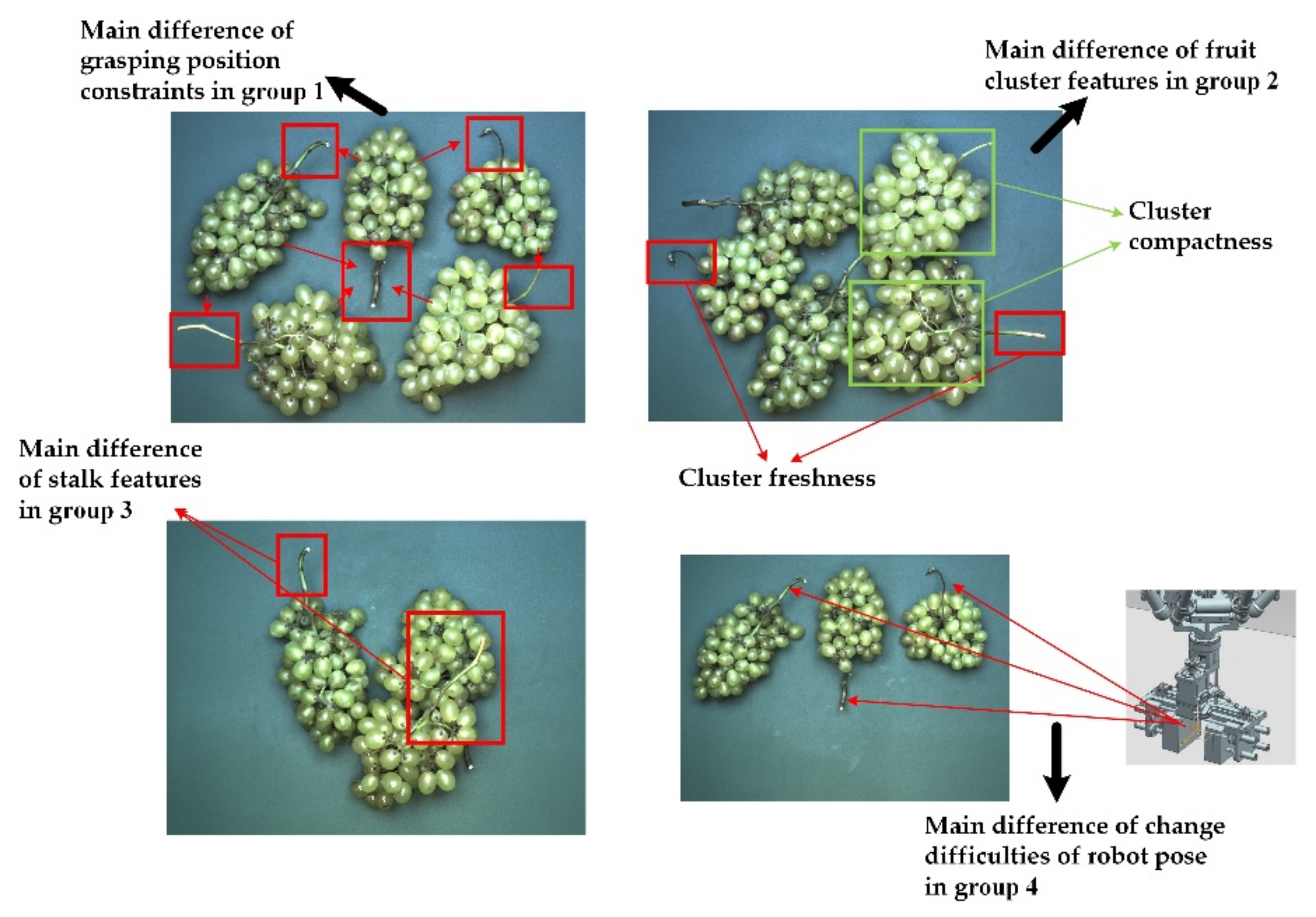

3.2. Experimental Method

3.3. Experimental Results and Discussion

4. Discussion and Conclusions

- (1) A hierarchical tree model without a cross, built on a subtree structure, is constructed from the multiple factors that affect the evaluation result. This addresses the difficulty that the existing FCE has in building a model and calculating memberships for multiple evaluation factors with unclear boundaries.

- (2) A relative factor set with positive and negative effects is constructed from the relationship between the factors within each image and from the positive or negative effect of each factor on the evaluation result. This addresses two problems: the absolute factor values that correspond to the same grasping priority are inconsistent across images, and different evaluation factors carry different influence weights on grasping prioritization.

- (3) The average random consistency index and the consistency satisfaction value are constructed from mathematical expectations. This addresses the difficulty of constructing and verifying an accurate and effective comparison matrix for grasping prioritization when the existing FCE relies on experiential data. In addition, based on the analytic hierarchy process and the subtree structure, the weight vector of the evaluation factors is constructed from the top of the model to the bottom, and the membership matrix and comprehensive evaluation value of the multi-layer factors are calculated from the bottom to the top.

- (4) The improved FCE was applied to evaluate the grasping prioritization of stacked fruit clusters in a self-developed parallel robot sorting system. The experimental results show that, compared with the existing FCE, the average precision of the grasping prioritization of stacked fruit clusters with the proposed method increased by 27.77%. The improved FCE based on the relative hierarchy factor set can therefore improve prioritization precision for grasping randomly stacked fruit clusters affected by multiple factors, which supports accurate automatic sorting.
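The bottom-up calculation described in conclusion (3) can be illustrated with a small recursive sketch. It assumes a weighted-average operator at every subtree and that a larger comprehensive value means a higher grasping priority; both are assumptions made for illustration, as are the class name `Factor`, the function `evaluate`, the tree shape, and all weights. The leaf values are taken from the first two rows of the first feature table (e″21, e″22, e″24, e″25).

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Factor:
    """A node in a hierarchical tree without a cross: every factor has one parent."""
    name: str
    weight: float  # weight relative to its siblings (siblings sum to 1)
    children: List["Factor"] = field(default_factory=list)


def evaluate(node: Factor, leaf_values: Dict[str, float]) -> float:
    """Aggregate relative factor values from the leaves up to this node."""
    if not node.children:
        return leaf_values[node.name]
    return sum(child.weight * evaluate(child, leaf_values) for child in node.children)


# Hypothetical two-level tree with illustrative weights.
tree = Factor("priority", 1.0, [
    Factor("position", 0.5, [Factor("x", 0.5), Factor("y", 0.5)]),
    Factor("cluster", 0.5, [Factor("occlusion", 0.6), Factor("compactness", 0.4)]),
])

# Relative factor values for two clusters in the same image.
clusters = {
    "A": {"x": 0.129, "y": 1.000, "occlusion": 0.000, "compactness": -0.101},
    "B": {"x": 0.000, "y": 0.000, "occlusion": 0.591, "compactness": -0.076},
}

scores = {name: evaluate(tree, values) for name, values in clusters.items()}
# Assumption: grasp the cluster with the largest comprehensive value first.
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores, ranking)
```

Since each factor belongs to exactly one subtree, the recursion visits every leaf once, and negative-effect factors lower the score simply because their relative values are negative.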

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Grasping Position Constraint on X-axis | | Grasping Position Constraint on Y-axis | | Grasping Position Constraint on Z-axis | | Cluster Occlusion | | Cluster Compactness | | Cluster Weight |
|---|---|---|---|---|---|---|---|---|---|---|
| e21(mm) | e″21 | e22(mm) | e″22 | e23(mm) | e″23 | e24(%) | e″24 | e25(pixel) | e″25 | e26(g) |
| −21.13 | 0.129 | 105.70 | 1.000 | 14.02 | 0.366 | 54.36 | 0.000 | 2.01 | −0.101 | 635.55 |
| −42.47 | 0.000 | −7.47 | 0.000 | 31.34 | 0.630 | 63.55 | 0.591 | 1.99 | −0.076 | 735.90 |
| 64.18 | 0.642 | 55.36 | 0.555 | 55.59 | 1.000 | 69.90 | 1.000 | 2.72 | −1.000 | 479.45 |
| 75.96 | 0.713 | 78.94 | 0.764 | 41.28 | 0.782 | 61.18 | 0.439 | 2.34 | −0.519 | 568.65 |
| 123.60 | 1.000 | 23.89 | 0.277 | −10.01 | 0.000 | 56.37 | 0.129 | 1.93 | 0.000 | 769.35 |

| Cluster Weight | Cluster Freshness | | Stalk Category | | Stalk Length | | Stalk Direction | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| e″26 | e27(pixel) | e″27 | e28 | e″28 | e29(mm) | e″29 | x210(mm) | x″210 | y210(mm) | y″210 |
| −0.538 | 89.15 | 0.000 | 1.00 | 0.000 | 68.70 | 0.789 | −0.97 | 0.000 | −0.24 | −0.243 |
| −0.885 | 93.33 | −0.413 | 1.00 | 0.000 | 69.59 | 0.812 | −0.89 | −0.042 | 0.40 | −0.673 |
| 0.000 | 90.84 | −0.167 | 2.00 | −0.500 | 38.52 | 0.000 | 0.92 | −1.000 | −0.36 | −0.163 |
| −0.308 | 99.26 | −1.000 | 3.00 | −1.000 | 76.77 | 1.000 | 0.79 | −0.932 | −0.60 | 0.000 |
| −1.000 | 89.50 | −0.035 | 1.00 | 0.000 | 61.24 | 0.594 | −0.36 | −0.321 | 0.89 | −1.000 |

| Stalk Direction | | Change Difficulties of Robot Pose | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| z210(mm) | z″210 | x211(mm) | x″211 | y211(mm) | y″211 | z211(mm) | z″211 | θ211(°) | θ″211 |
| −0.08 | −1.000 | −357.25 | 0.000 | 24.65 | −0.786 | −125.87 | 0.000 | 89.56 | −0.781 |
| −0.22 | −0.272 | 235.64 | −1.000 | 118.13 | −1.000 | 489.53 | −1.000 | 155.78 | −1.000 |
| −0.18 | −0.460 | 145.20 | −0.847 | −318.90 | 0.000 | 337.20 | −0.752 | −99.45 | −0.156 |
| −0.15 | −0.650 | −65.73 | −0.492 | −44.82 | −0.627 | 246.81 | −0.606 | −146.80 | 0.000 |
| −0.27 | 0.000 | −212.61 | −0.244 | 57.88 | −0.862 | −33.76 | −0.150 | 66.84 | −0.706 |
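The double-primed columns in the table above appear consistent with a min-max normalization taken over the clusters of one image, with the sign flipped for factors that have a negative effect. The sketch below reproduces the e″21 and e″25 columns under that reading. The formula is inferred from the tabulated numbers rather than quoted from the paper, and the function name `relative_values` is hypothetical.

```python
from typing import List


def relative_values(values: List[float], positive: bool) -> List[float]:
    """Scale values to [0, 1] within one image; negate for negative-effect factors."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All clusters share the same value, so the factor cannot rank them.
        return [0.0 for _ in values]
    scaled = [(v - lo) / (hi - lo) for v in values]
    # Subtract from 0.0 so that a zero stays +0.0 rather than -0.0.
    return scaled if positive else [0.0 - s for s in scaled]


# Absolute values copied from the table above (five clusters in one image).
e21 = [-21.13, -42.47, 64.18, 75.96, 123.60]  # X-axis position constraint (mm)
e25 = [2.01, 1.99, 2.72, 2.34, 1.93]          # cluster compactness (pixel)

rel_e21 = relative_values(e21, positive=True)   # treated as a positive-effect factor
rel_e25 = relative_values(e25, positive=False)  # treated as a negative-effect factor

print([round(v, 3) for v in rel_e21])
print([round(v, 3) for v in rel_e25])
```

Normalizing within each image is what makes values comparable across images: the cluster with the extreme value always maps to 0 or ±1, whatever the absolute scale of that image.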

| Grasping Position Constraint on X-axis | | Grasping Position Constraint on Y-axis | | Grasping Position Constraint on Z-axis | | Cluster Occlusion | | Cluster Compactness | | Cluster Weight |
|---|---|---|---|---|---|---|---|---|---|---|
| e21(mm) | e″21 | e22(mm) | e″22 | e23(mm) | e″23 | e24(%) | e″24 | e25(pixel) | e″25 | e26(g) |
| 31.99 | 0.381 | 21.15 | 0.073 | 21.82 | 0.355 | 77.28 | 0.722 | 2.58 | −0.374 | 401.40 |
| 43.13 | 0.574 | 15.83 | 0.000 | 16.18 | 0.153 | 86.94 | 1.000 | 3.77 | −1.000 | 869.70 |
| 67.71 | 1.000 | 47.73 | 0.440 | 29.01 | 0.614 | 52.24 | 0.000 | 2.21 | −0.179 | 713.60 |
| 10.04 | 0.000 | 88.40 | 1.000 | 39.77 | 1.000 | 69.15 | 0.487 | 1.87 | 0.000 | 535.20 |
| 37.70 | 0.480 | 26.40 | 0.146 | 11.93 | 0.000 | 72.30 | 0.578 | 3.02 | −0.605 | 613.25 |

| Cluster Weight | Cluster Freshness | | Stalk Category | | Stalk Length | | Stalk Direction | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| e″26 | e27(pixel) | e″27 | e28 | e″28 | e29(mm) | e″29 | x210(mm) | x″210 | y210(mm) | y″210 |
| 0.000 | 89.81 | 0.000 | 3.00 | −1.000 | 84.53 | 1.000 | 0.71 | −0.939 | −0.70 | −0.008 |
| −1.000 | 108.23 | −0.460 | 2.00 | −0.500 | 38.67 | 0.000 | 0.57 | −0.850 | 0.79 | −1.000 |
| −0.667 | 129.86 | −1.000 | 2.00 | −0.500 | 46.87 | 0.179 | −0.69 | −0.037 | −0.71 | 0.000 |
| −0.286 | 100.02 | −0.255 | 1.00 | 0.000 | 79.54 | 0.891 | −0.75 | 0.000 | −0.64 | −0.045 |
| −0.452 | 115.50 | −0.641 | 1.00 | 0.000 | 67.77 | 0.635 | 0.80 | −1.000 | 0.58 | −0.860 |

| Stalk Direction | | Change Difficulties of Robot Pose | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| z210(mm) | z″210 | x211(mm) | x″211 | y211(mm) | y″211 | z211(mm) | z″211 | θ211(°) | θ″211 |
| −0.07 | −1.000 | 15.64 | −0.411 | 73.60 | −0.702 | 108.95 | −1.000 | 105.67 | −1.000 |
| −0.23 | 0.000 | −112.97 | −0.182 | 268.94 | −1.000 | −145.78 | 0.000 | 15.31 | −0.671 |
| −0.13 | −0.663 | −215.40 | 0.000 | −146.32 | −0.366 | −78.25 | −0.265 | −89.76 | −0.288 |
| −0.16 | −0.426 | 347.38 | −1.000 | −386.10 | 0.000 | 14.55 | −0.629 | −168.90 | 0.000 |
| −0.14 | −0.569 | −46.80 | −0.300 | 33.65 | −0.641 | −88.45 | −0.225 | 57.25 | −0.824 |

| Grasping Position Constraint on X-axis | | Grasping Position Constraint on Y-axis | | Grasping Position Constraint on Z-axis | | Cluster Occlusion | | Cluster Compactness | | Cluster Weight |
|---|---|---|---|---|---|---|---|---|---|---|
| e21(mm) | e″21 | e22(mm) | e″22 | e23(mm) | e″23 | e24(%) | e″24 | e25(pixel) | e″25 | e26(g) |
| 26.84 | 0.132 | 53.81 | 0.632 | −3.23 | 0.000 | 54.85 | 0.000 | 2.23 | −0.333 | 691.30 |
| 15.81 | 0.000 | 43.21 | 0.524 | 12.83 | 0.187 | 59.57 | 0.364 | 2.75 | −0.778 | 657.85 |
| 99.58 | 1.000 | −8.17 | 0.000 | 43.65 | 0.546 | 63.52 | 0.668 | 1.84 | 0.000 | 524.05 |
| 88.46 | 0.867 | 89.90 | 1.000 | 82.68 | 1.000 | 54.91 | 0.005 | 3.01 | −1.000 | 747.05 |
| 68.85 | 0.633 | 42.30 | 0.515 | 36.29 | 0.460 | 67.83 | 1.000 | 1.91 | −0.060 | 635.55 |

| Cluster Weight | Cluster Freshness | | Stalk Category | | Stalk Length | | Stalk Direction | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| e″26 | e27(pixel) | e″27 | e28 | e″28 | e29(mm) | e″29 | x210(mm) | x″210 | y210(mm) | y″210 |
| −0.750 | 101.50 | −0.752 | 3.00 | −0.400 | 87.41 | 1.000 | 0.64 | −0.466 | 0.76 | −1.000 |
| −0.600 | 94.29 | −0.350 | 6.00 | −1.000 | 47.07 | 0.232 | 0.43 | 0.000 | −0.85 | 0.000 |
| 0.000 | 88.01 | 0.000 | 2.00 | −0.200 | 39.26 | 0.084 | 0.70 | −0.594 | 0.65 | −0.932 |
| −1.000 | 91.36 | −0.187 | 1.00 | 0.000 | 56.72 | 0.416 | 0.88 | −1.000 | −0.46 | −0.242 |
| −0.500 | 105.94 | −1.000 | 4.00 | −0.600 | 34.86 | 0.000 | 0.88 | −1.000 | −0.44 | −0.256 |

| Stalk Direction | | Change Difficulties of Robot Pose | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| z210(mm) | z″210 | x211(mm) | x″211 | y211(mm) | y″211 | z211(mm) | z″211 | θ211(°) | θ″211 |
| −0.09 | −1.000 | −99.65 | −0.070 | 278.42 | −1.000 | −138.50 | 0.000 | −78.24 | 0.000 |
| −0.31 | 0.000 | −10.31 | −0.240 | 55.84 | −0.585 | 98.75 | −0.459 | −55.33 | −0.124 |
| −0.30 | −0.048 | 55.80 | −0.366 | −15.76 | −0.451 | 378.91 | −1.000 | −26.70 | −0.280 |
| −0.15 | −0.724 | −136.20 | 0.000 | 44.85 | −0.564 | 307.34 | −0.862 | 94.36 | −0.938 |
| −0.19 | −0.530 | 388.50 | −1.000 | −257.90 | 0.000 | 255.90 | −0.762 | 105.80 | −1.000 |

| Grasping Position Constraint on X-axis | | Grasping Position Constraint on Y-axis | | Grasping Position Constraint on Z-axis | | Cluster Occlusion | | Cluster Compactness | | Cluster Weight |
|---|---|---|---|---|---|---|---|---|---|---|
| e21(mm) | e″21 | e22(mm) | e″22 | e23(mm) | e″23 | e24(%) | e″24 | e25(pixel) | e″25 | e26(g) |
| 22.48 | 0.000 | 26.78 | 0.379 | −7.42 | 0.000 | 61.22 | 0.369 | 1.83 | 0.000 | 535.20 |
| 63.56 | 0.846 | 18.49 | 0.269 | 3.19 | 0.154 | 70.85 | 1.000 | 1.93 | −0.105 | 713.60 |
| 52.16 | 0.611 | 57.89 | 0.793 | 61.27 | 1.000 | 64.03 | 0.553 | 2.78 | −1.000 | 680.15 |
| 71.04 | 1.000 | −1.73 | 0.000 | 37.76 | 0.658 | 58.30 | 0.178 | 2.04 | −0.221 | 602.10 |
| 45.17 | 0.467 | 73.50 | 1.000 | 30.11 | 0.546 | 55.59 | 0.000 | 2.61 | −0.821 | 434.85 |

| Cluster Weight | Cluster Freshness | | Stalk Category | | Stalk Length | | Stalk Direction | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| e″26 | e27(pixel) | e″27 | e28 | e″28 | e29(mm) | e″29 | x210(mm) | x″210 | y210(mm) | y″210 |
| −0.360 | 100.27 | −0.782 | 1.00 | 0.000 | 65.83 | 0.601 | 0.57 | −0.912 | −0.81 | 0.000 |
| −1.000 | 99.02 | −0.716 | 2.00 | −0.500 | 39.95 | 0.000 | −0.68 | −0.126 | −0.63 | −0.136 |
| −0.880 | 104.37 | −1.000 | 2.00 | −0.500 | 43.77 | 0.089 | −0.88 | 0.000 | −0.25 | −0.424 |
| −0.600 | 85.54 | 0.000 | 1.00 | 0.000 | 71.40 | 0.731 | 0.71 | −1.000 | −0.69 | −0.089 |
| 0.000 | 92.33 | −0.361 | 3.00 | −1.000 | 82.99 | 1.000 | −0.86 | −0.014 | 0.49 | −1.000 |

| Stalk Direction | | Change Difficulties of Robot Pose | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| z210(mm) | z″210 | x211(mm) | x″211 | y211(mm) | y″211 | z211(mm) | z″211 | θ211(°) | θ″211 |
| −0.15 | −0.886 | 367.25 | −1.000 | −157.98 | −0.423 | 507.45 | −1.000 | −33.76 | −0.386 |
| −0.38 | −0.078 | −57.94 | −0.436 | −355.60 | 0.000 | −167.89 | 0.000 | 64.91 | −0.794 |
| −0.40 | 0.000 | −387.30 | 0.000 | 98.67 | −0.973 | −33.68 | −0.199 | 114.60 | −1.000 |
| −0.12 | −1.000 | 218.60 | −0.803 | 102.74 | −0.981 | 336.30 | −0.747 | −126.87 | 0.000 |
| −0.14 | −0.935 | 14.70 | −0.533 | 111.45 | −1.000 | 77.89 | −0.364 | −88.35 | −0.160 |

| Groups | RP21 | RP22 | RP23 | RP24 | RP25 | RP26 | RP27 | RP28 | RP29 |
|---|---|---|---|---|---|---|---|---|---|
| Group 1 | 1.344 | 1.071 | 1.180 | 0.222 | 0.290 | 0.377 | 0.102 | 0.667 | 0.498 |
| Group 2 | 0.852 | 0.821 | 0.700 | 0.399 | 0.504 | 0.538 | 0.308 | 0.667 | 0.543 |
| Group 3 | 0.841 | 0.821 | 1.039 | 0.191 | 0.389 | 0.299 | 0.169 | 0.833 | 0.601 |
| Group 4 | 0.684 | 1.024 | 1.121 | 0.215 | 0.342 | 0.391 | 0.180 | 0.667 | 0.519 |

| Groups | RPx210 | RPy210 | RPz210 | RP210 | RPx211 | RPy211 | RPz211 | RPθ211 | RP211 |
|---|---|---|---|---|---|---|---|---|---|
| Group 1 | 2.057 | 1.672 | 2.511 | 2.080 | 2.516 | 3.700 | 1.257 | 1.942 | 2.354 |
| Group 2 | 1.928 | 1.905 | 2.135 | 1.990 | 1.620 | 2.436 | 2.338 | 2.598 | 2.248 |
| Group 3 | 0.509 | 2.112 | 2.406 | 1.676 | 1.351 | 1.926 | 1.366 | 1.740 | 1.595 |
| Group 4 | 2.234 | 2.631 | 2.382 | 2.416 | 2.055 | 4.191 | 1.331 | 2.107 | 2.421 |

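| Methods | Statistic | Group 1 | Group 2 | Group 3 | Group 4 | Average |
|---|---|---|---|---|---|---|
| -- | Total number of fruit clusters | 652 | 385 | 600 | 420 | -- |
| -- | Average number of fruit clusters in a single image | 8.15 | 4.81 | 7.50 | 5.25 | -- |
| Existing FCE | Number of correctly prioritized fruit clusters | 458 | 256 | 452 | 258 | -- |
| Existing FCE | Number of incorrectly prioritized fruit clusters | 194 | 129 | 148 | 162 | -- |
| Existing FCE | Precision (%) | 70.25 | 66.49 | 75.33 | 61.43 | 68.38 |
| Existing FCE | | 0.869 | 0.552 | 0.809 | 0.601 | -- |
| Improved FCE | Number of correctly prioritized fruit clusters | 628 | 368 | 581 | 403 | -- |
| Improved FCE | Number of incorrectly prioritized fruit clusters | 24 | 17 | 19 | 17 | -- |
| Improved FCE | Precision (%) | 96.32 | 95.58 | 96.83 | 95.95 | 96.17 |
| Improved FCE | | 1.352 | 0.815 | 1.311 | 0.906 | -- |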
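The percentage rows of the table above can be checked with a short calculation. The sketch reads the first count under each method as the number of correctly prioritized clusters, an interpretation based on the fact that the two counts under each method sum to the group total, and takes the last column as the unweighted mean over the four groups. The names `correct`, `totals`, and `precision_percent` are illustrative.

```python
from typing import Dict, List

# Counts copied from the table above, ordered Group 1 to Group 4.
totals: List[int] = [652, 385, 600, 420]
correct: Dict[str, List[int]] = {
    "Existing FCE": [458, 256, 452, 258],
    "Improved FCE": [628, 368, 581, 403],
}


def precision_percent(correct_counts: List[int], total_counts: List[int]) -> List[float]:
    """Per-group precision as a percentage: correct / total * 100."""
    return [100.0 * c / t for c, t in zip(correct_counts, total_counts)]


precision = {method: precision_percent(counts, totals) for method, counts in correct.items()}
# Unweighted mean over the four groups (assumption about the last column).
mean_precision = {method: sum(values) / len(values) for method, values in precision.items()}

for method in correct:
    per_group = [round(p, 2) for p in precision[method]]
    print(method, per_group, round(mean_precision[method], 2))
```

An unweighted mean gives every group the same influence regardless of how many clusters it contains; a mean weighted by cluster count would give a slightly different figure.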

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Q.; Zhao, Z.; Gao, G. Fuzzy Comprehensive Evaluation for Grasping Prioritization of Stacked Fruit Clusters Based on Relative Hierarchy Factor Set. Agronomy 2022, 12, 663. https://doi.org/10.3390/agronomy12030663