A Method of Grasping Detection for Kiwifruit Harvesting Robot Based on Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Description of Kiwifruit in Orchard

2.2. Description of the Grasping Pose of Manipulator

2.2.1. Grasping Pose

2.2.2. Grasping Angle

2.3. Image Acquisition

2.4. Grasping Datasets

2.5. Grasping Detection Network

2.5.1. Network Structure

2.5.2. Evaluation and Hyperparameters

3. Results and Analysis

3.1. Network Training Results

3.2. Grasping Detection Results

3.3. Verification Test of Robotic Picking

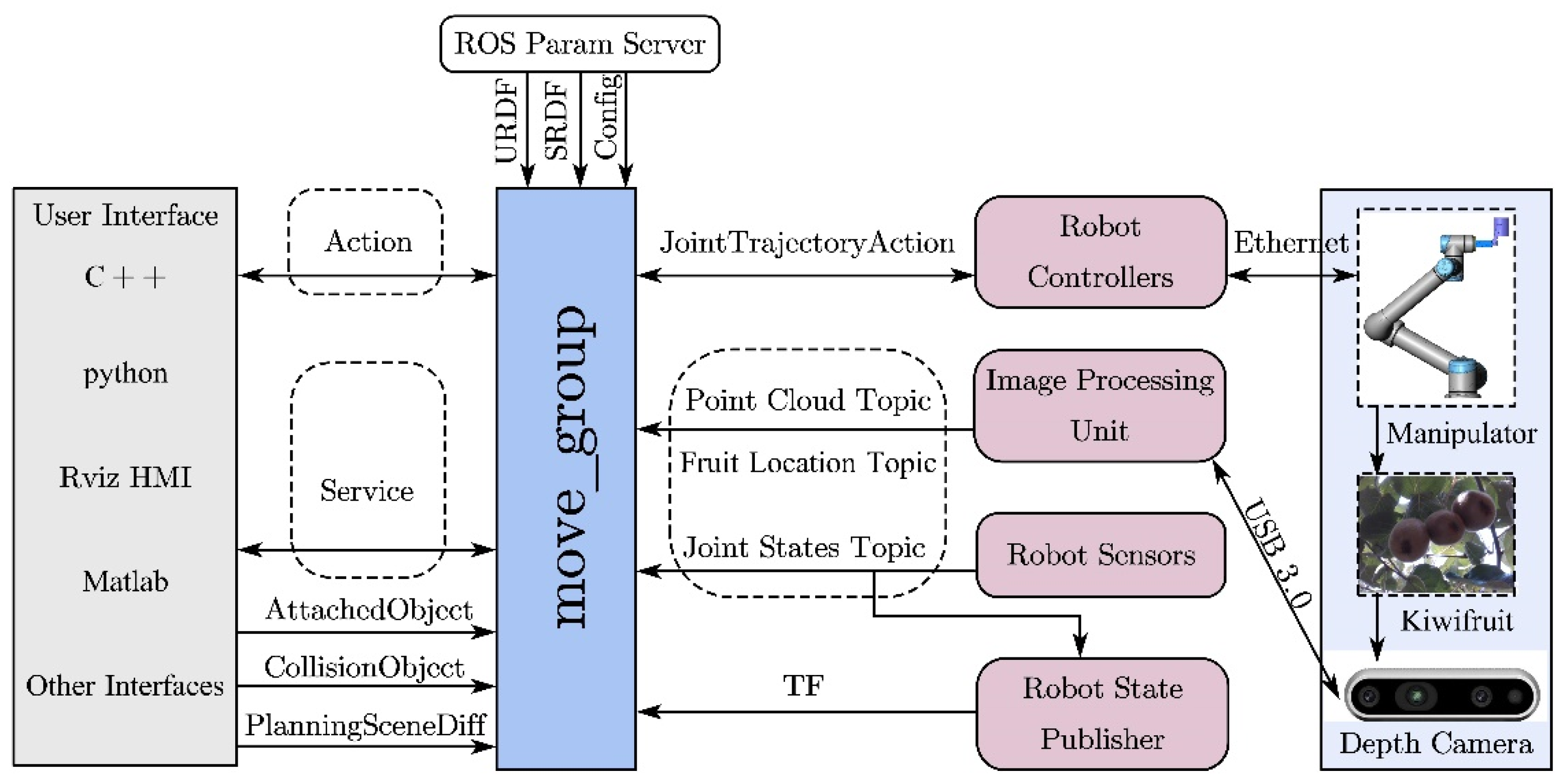

3.3.1. Overall Structure

3.3.2. Control System

3.3.3. Test Method

3.3.4. Results and Analysis

4. Conclusions

- (1) In this study, a grasping-detection method for a kiwifruit harvesting robot was proposed based on GG-CNN2. The method enables the gripper to grasp clustered fruits safely and effectively and prevents the bending action from interfering with neighboring fruits. The clustered kiwifruit were divided into three types: single fruit, linear cluster, and other cluster.

- (2) The performance tests of the grasping-detection network showed that GG-CNN2 has 66.7 k parameters, an average computation time of 58 ms per image, and an average accuracy of 76.0%. This indicates that grasp prediction can succeed in most tasks and run in real time.

- (3) The robotic picking verification tests showed that when the manipulator combined the fruit position provided by the target-detection network YOLO v4 with the grasping angle provided by the grasping-detection network GG-CNN2, it achieved a harvesting success rate of 88.7% and a fruit drop rate of 4.8%, with an average picking time of 6.5 s. Compared with the method based only on target-detection information, this method increased the harvesting success rate by 8.1 percentage points and decreased the fruit drop rate by 4.9 percentage points, while the average picking time increased slightly (from 5.8 s to 6.5 s). The grasping-detection method is therefore suitable for picking multiple kiwifruit that grow close together.
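Conclusion (3) describes combining the fruit position from YOLO v4 with the grasping angle from GG-CNN2. The sketch below illustrates one way such a combination could work. It assumes the output convention of the original GG-CNN/GG-CNN2 (Morrison et al.): per-pixel grasp-quality, cos 2θ, sin 2θ and width maps. The function name `best_grasp_in_box`, the `(x_min, y_min, x_max, y_max)` pixel box format, and the rule of taking the single highest-quality pixel inside the detected box are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def best_grasp_in_box(quality, cos2, sin2, width, box):
    """Pick the highest-quality grasp inside a detector box (hypothetical helper).

    quality, cos2, sin2, width: 2-D arrays of equal shape (GG-CNN-style output maps).
    box: assumed (x_min, y_min, x_max, y_max) pixel box, e.g. from a YOLO v4 detection.
    Returns (row, col, angle_rad, width_value).
    """
    x0, y0, x1, y1 = box
    # Restrict the search to the detected fruit so neighbouring fruits are ignored.
    region = quality[y0:y1, x0:x1]
    r, c = np.unravel_index(np.argmax(region), region.shape)
    r, c = r + y0, c + x0  # convert back to full-image coordinates
    # The angle is encoded as (cos 2θ, sin 2θ) because such a grasp is symmetric
    # under a 180° rotation; halving arctan2 recovers θ in (-π/2, π/2].
    angle = 0.5 * np.arctan2(sin2[r, c], cos2[r, c])
    return int(r), int(c), float(angle), float(width[r, c])


if __name__ == "__main__":
    q = np.zeros((10, 10))
    q[4, 6] = 0.9  # best grasp inside the box
    q[0, 0] = 1.0  # higher score, but outside the box, so it must be ignored
    theta = np.pi / 6
    c2 = np.full((10, 10), np.cos(2 * theta))
    s2 = np.full((10, 10), np.sin(2 * theta))
    w = np.full((10, 10), 30.0)
    print(best_grasp_in_box(q, c2, s2, w, (2, 2, 8, 8)))
```

Restricting the argmax to the detection box is the simplest way to let the detector decide *which* fruit to pick while the grasp maps decide *how* to approach it; a real system would also need the depth at the chosen pixel to build a 3-D grasp pose.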

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Algorithm | Parameters | Clusters | Samples | Average Accuracy | Speed (ms) |
|---|---|---|---|---|---|
| GG-CNN2 | 66.7 k | SF | 25 | 80.3% | 58 |
| | | LC | 25 | 77.7% | |
| | | OC | 25 | 70.0% | |

SF, single fruit; LC, linear cluster; OC, other cluster.
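The overall average accuracy of 76.0% quoted in the conclusions is consistent with the sample-weighted mean of the three per-cluster accuracies in the table above; because every cluster type has 25 samples, this equals the plain mean. The snippet checks that figure and converts the 58 ms per-image time into throughput. Reading the overall figure as this mean is an interpretation of the table rather than a definition stated here, and the helper names are hypothetical.

```python
# Per-cluster results transcribed from the GG-CNN2 table.
ACCURACY = {"SF": 80.3, "LC": 77.7, "OC": 70.0}  # percent
SAMPLES = {"SF": 25, "LC": 25, "OC": 25}


def weighted_mean_accuracy(acc: dict, n: dict) -> float:
    """Sample-weighted mean accuracy; equals the plain mean when all counts are equal."""
    return sum(acc[k] * n[k] for k in acc) / sum(n.values())


def images_per_second(ms_per_image: float) -> float:
    """Convert an average per-image computation time in milliseconds to throughput."""
    return 1000.0 / ms_per_image


print(f"average accuracy: {weighted_mean_accuracy(ACCURACY, SAMPLES):.1f}%")  # 76.0%
print(f"throughput: {images_per_second(58):.1f} images/s")  # 17.2 images/s
```

At roughly 17 images per second, the grasp prediction is fast compared with the 5.8 to 6.5 s picking cycle reported in the picking tests, which supports the real-time claim in the conclusions.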

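| Method | Grasping Rate (SF) | Grasping Rate (LC) | Grasping Rate (OC) | Unseparated | Dropped | Harvesting Success Rate | Average Picking Time (s) |
|---|---|---|---|---|---|---|---|
| Method I | 9/10 (90.0%) | 21/25 (84.0%) | 20/27 (74.1%) | 6 (9.7%) | 6 (9.7%) | 80.6% | 5.8 |
| Method II | 9/10 (90.0%) | 23/25 (92.0%) | 23/27 (85.2%) | 4 (6.5%) | 3 (4.8%) | 88.7% | 6.5 |

SF, single fruit; LC, linear cluster; OC, other cluster. Method I uses only the target-detection information from YOLO v4; Method II combines the YOLO v4 fruit position with the GG-CNN2 grasping angle.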
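The percentages in the picking-test table can be reproduced from the raw counts if every rate is taken relative to all 62 test fruits (10 + 25 + 27) and the harvesting success rate is taken as the total number of successful grasps divided by 62. That accounting is inferred from the table, not stated in this section; the data layout and the `summarize` helper are illustrative.

```python
# Counts transcribed from the picking-test table; per-type fruit totals are the denominators.
FRUITS_PER_TYPE = {"SF": 10, "LC": 25, "OC": 27}

RESULTS = {
    "Method I": {"grasped": {"SF": 9, "LC": 21, "OC": 20}, "unseparated": 6, "dropped": 6},
    "Method II": {"grasped": {"SF": 9, "LC": 23, "OC": 23}, "unseparated": 4, "dropped": 3},
}


def summarize(method: str) -> dict:
    """Return the table's percentages for one method, recomputed from raw counts."""
    r = RESULTS[method]
    total = sum(FRUITS_PER_TYPE.values())  # 62 fruits in the test
    grasped = sum(r["grasped"].values())
    # Inferred accounting: grasped, unseparated and dropped counts cover all 62 fruits.
    assert grasped + r["unseparated"] + r["dropped"] == total
    return {
        "grasping_rate": {k: 100 * r["grasped"][k] / n for k, n in FRUITS_PER_TYPE.items()},
        "unseparated": 100 * r["unseparated"] / total,
        "dropped": 100 * r["dropped"] / total,
        "success": 100 * grasped / total,
    }


s1, s2 = summarize("Method I"), summarize("Method II")
for name, s in (("Method I", s1), ("Method II", s2)):
    rates = {k: round(v, 1) for k, v in s["grasping_rate"].items()}
    print(name, rates, f"success {s['success']:.1f}%, dropped {s['dropped']:.1f}%")
print(f"success gain: {s2['success'] - s1['success']:.1f} points")
print(f"drop-rate reduction: {s1['dropped'] - s2['dropped']:.1f} points")
```

Computed from the counts, the gain in harvesting success rate is about 8.1 percentage points, and the reduction in drop rate is 3/62, about 4.8 points; the 4.9 points quoted in the conclusions corresponds to subtracting the rounded table values (9.7% minus 4.8%).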

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, L.; He, Z.; Zhu, Y.; Jia, L.; Wang, Y.; Ding, X.; Cui, Y. A Method of Grasping Detection for Kiwifruit Harvesting Robot Based on Deep Learning. Agronomy 2022, 12, 3096. https://doi.org/10.3390/agronomy12123096
