An Object-Based Weighting Approach to Spatiotemporal Fusion of High Spatial Resolution Satellite Images for Small-Scale Cropland Monitoring

Abstract

1. Introduction

- (1) how to depict spatial structures and change patterns well at a fine scale,

- (2) how to estimate temporal variations between the base and prediction dates,

- (3) how to account for spectral patterns of the imagery at the prediction date.

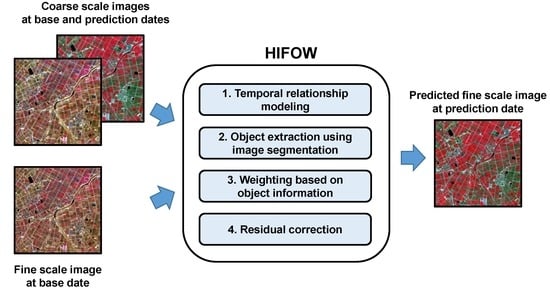

2. Methods

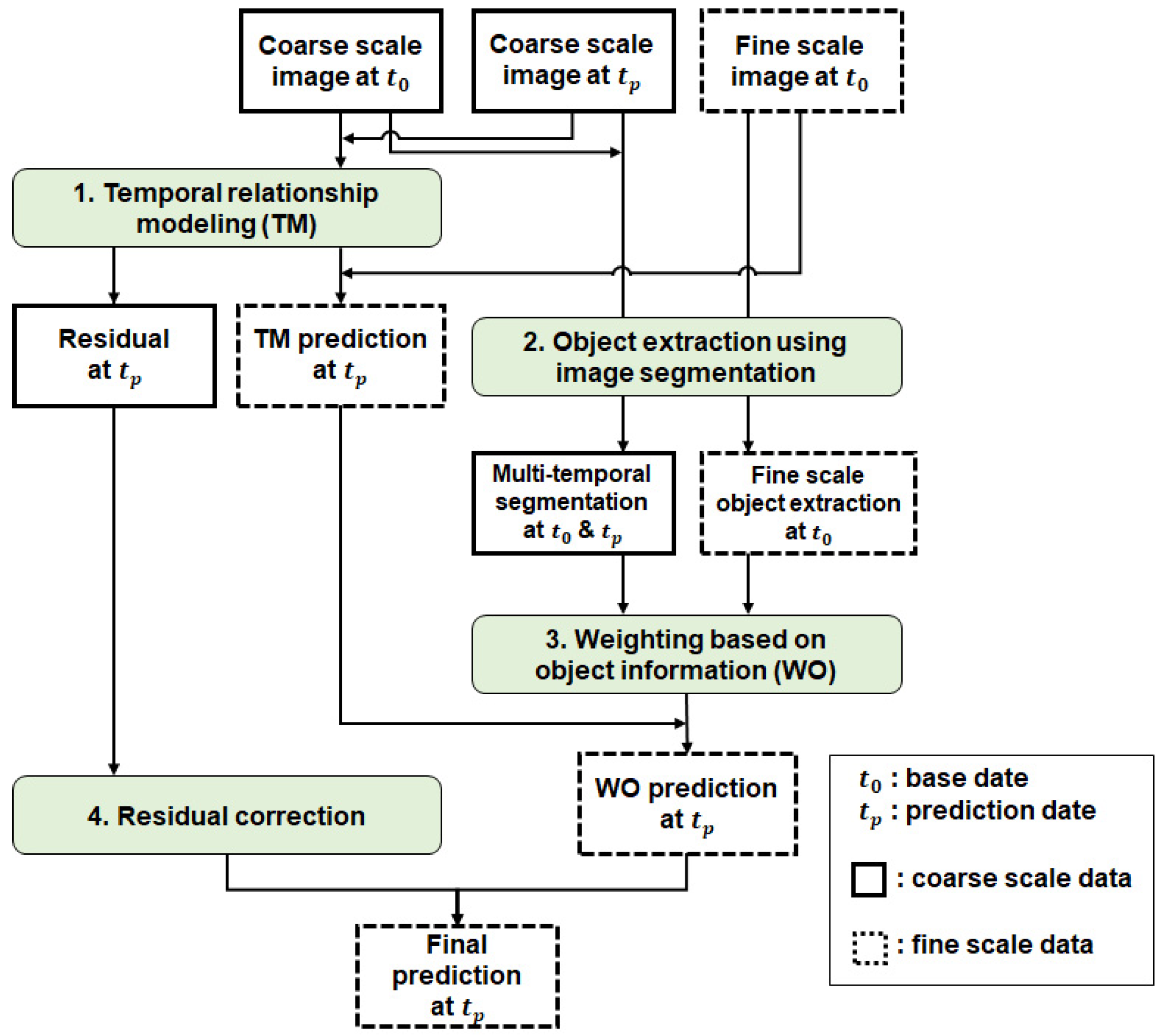

2.1. Temporal Relationship Modeling (TM)

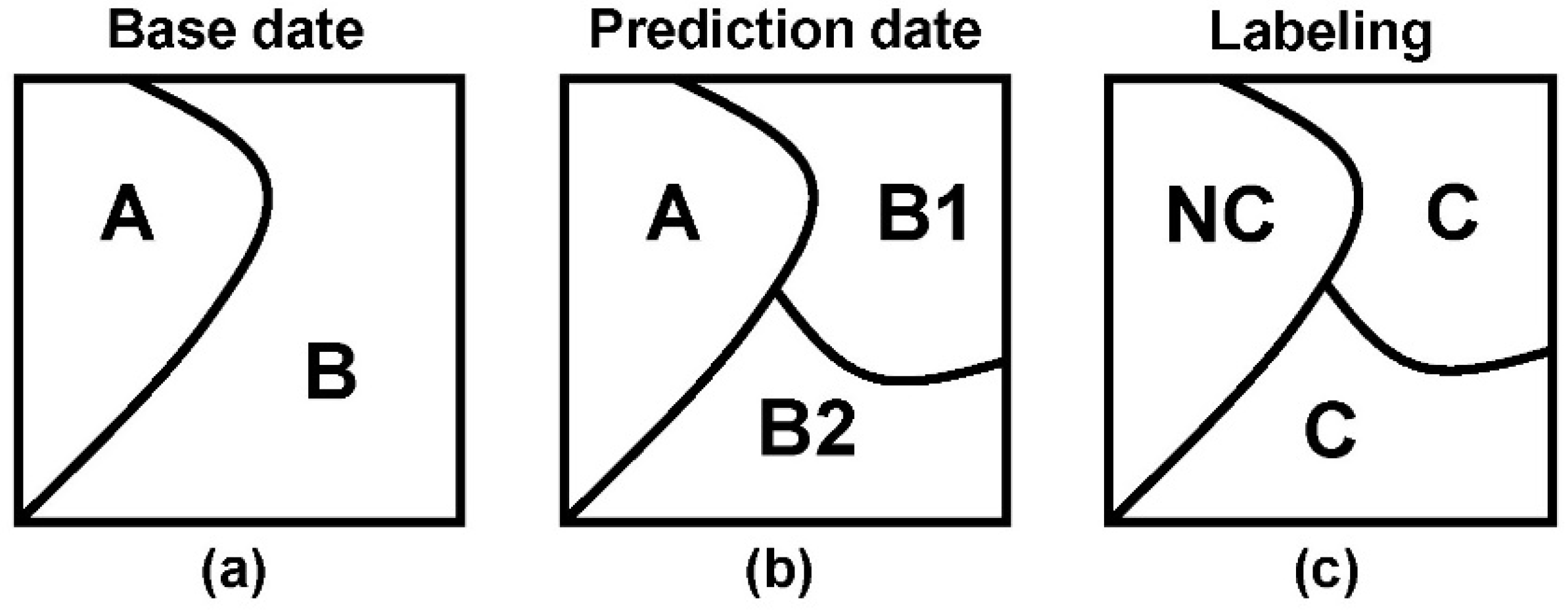

2.2. Object Extraction Using Image Segmentation

2.3. Weighting Based on Object Information (WO)

2.4. Residual Correction

3. Materials and Experimental Setup

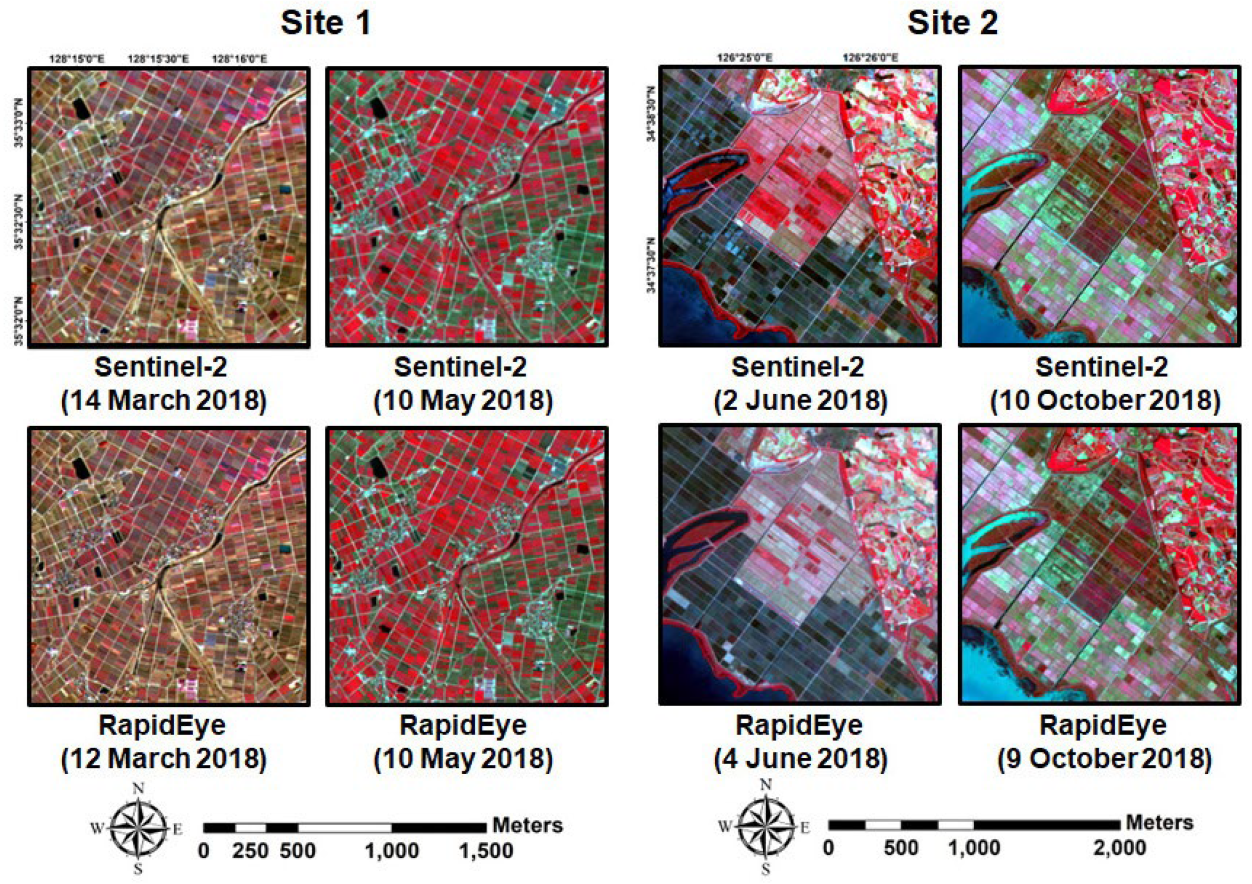

3.1. Study Areas

3.2. Satellite Images

3.3. Parameter Settings for HIFOW

3.4. Comparison and Evaluation

4. Results

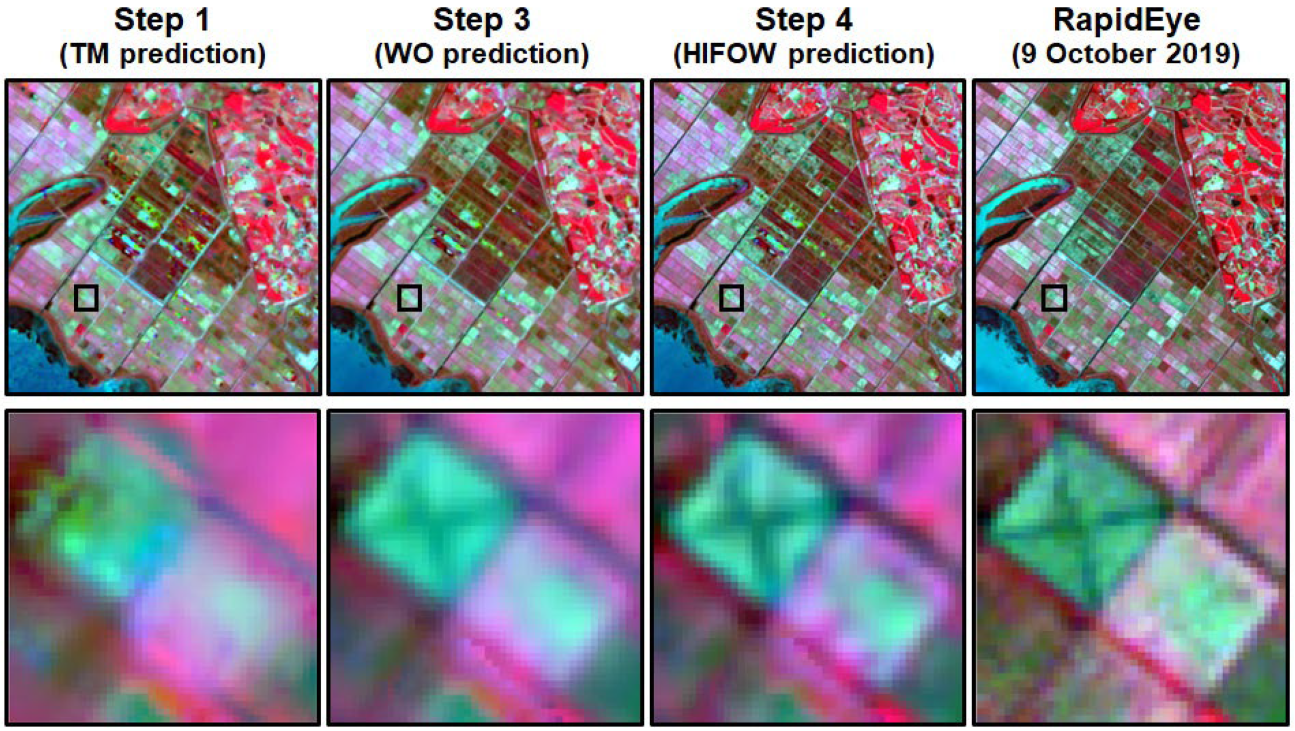

4.1. Comparison between Interim Results of HIFOW

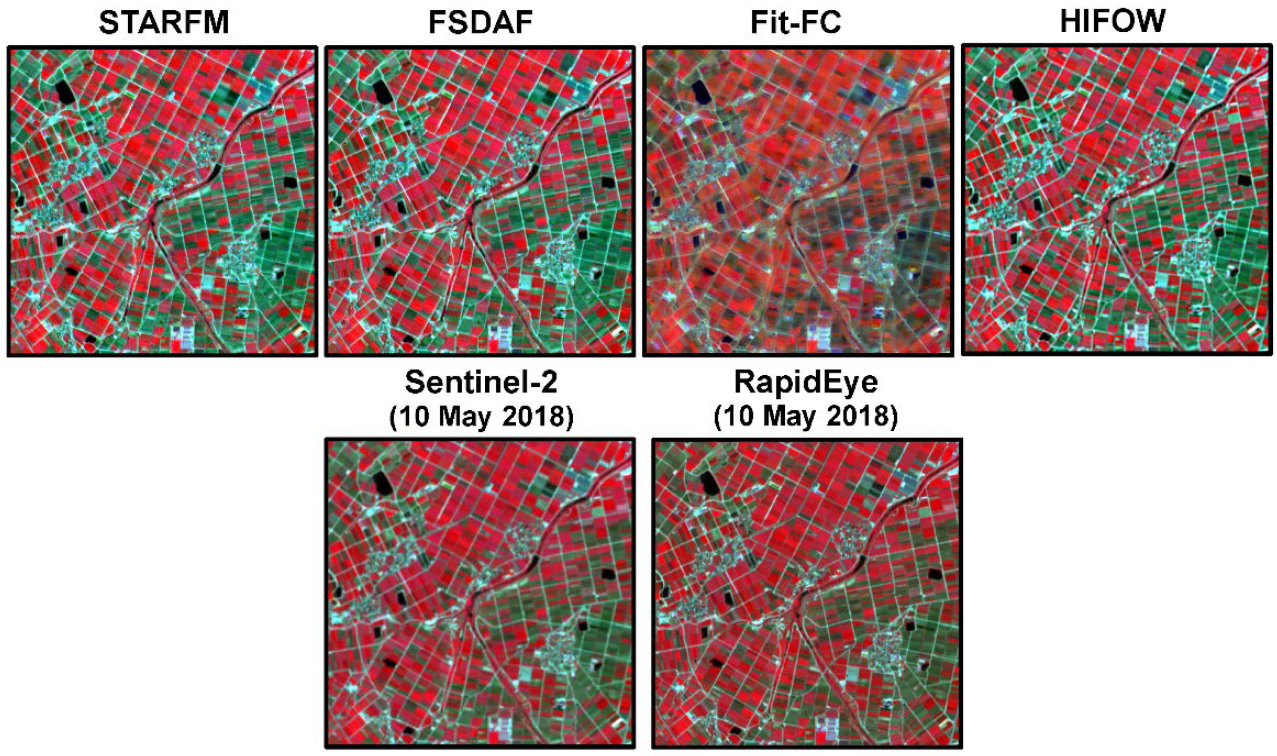

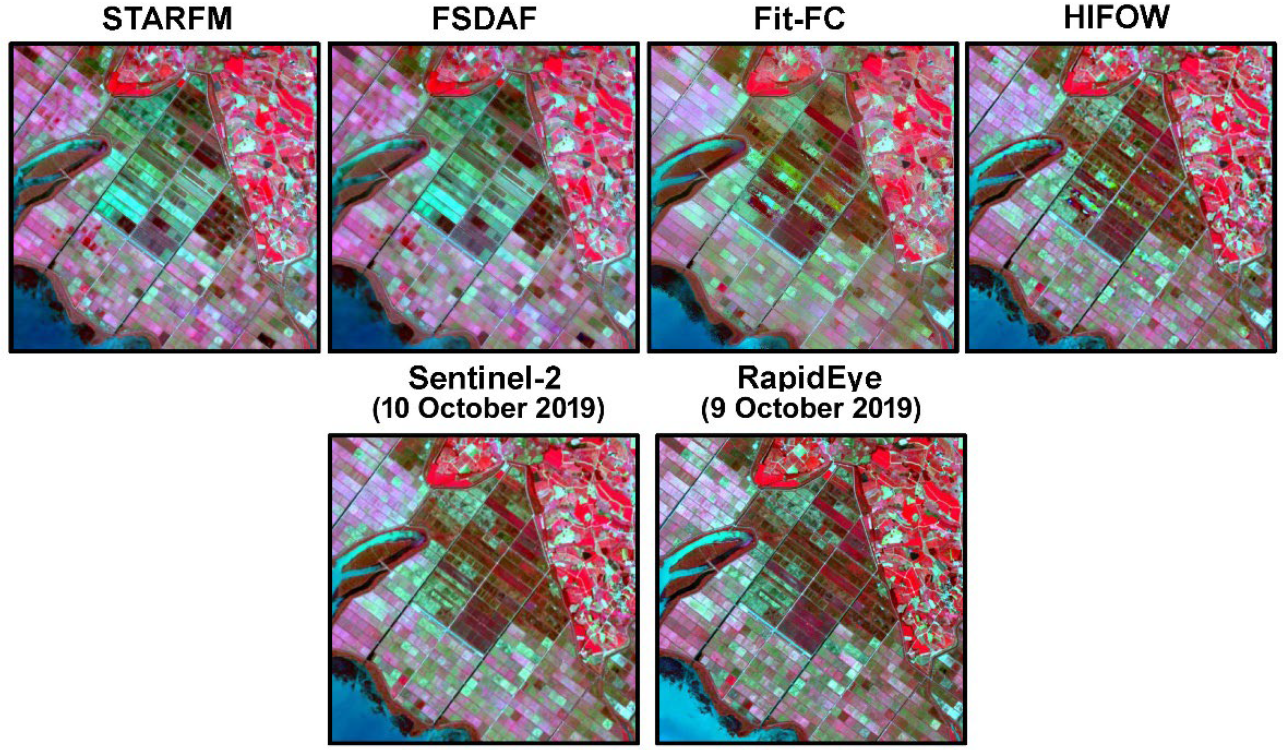

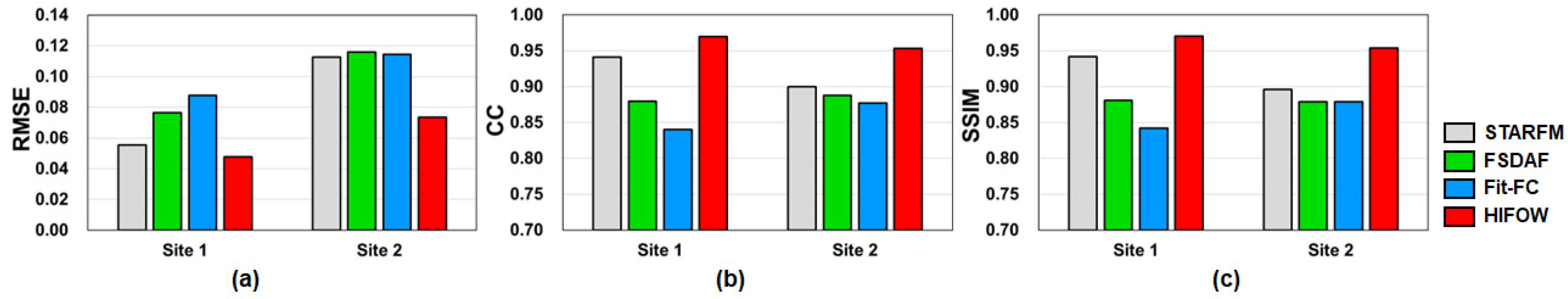

4.2. Comparison with Other STIF Models

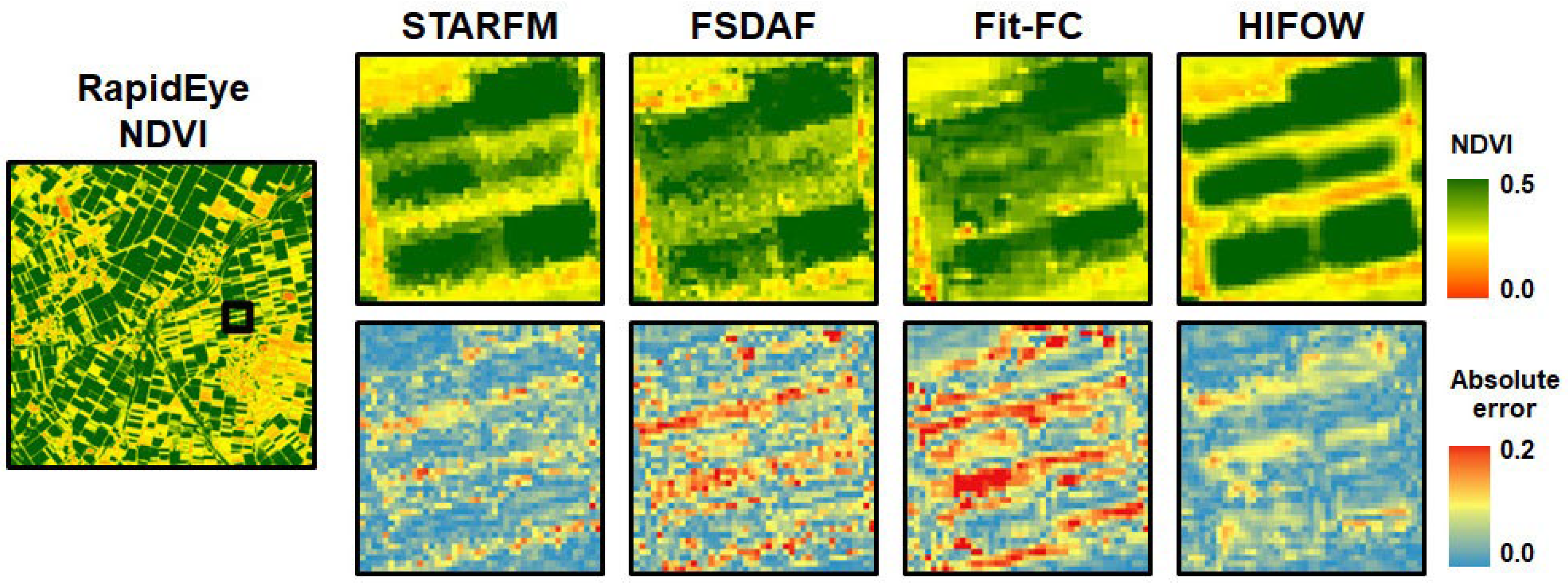

4.3. NDVI Prediction Results
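As a point of reference for this subsection, the sketch below computes NDVI from red and NIR reflectance using the standard definition, NDVI = (NIR - Red) / (NIR + Red). The function name `ndvi` and the sample reflectance values are illustrative assumptions and are not taken from the experiments; whether NDVI was derived before or after fusion is not stated here.

```python
import math


def ndvi(nir, red):
    """Standard NDVI for one pixel: (NIR - Red) / (NIR + Red).

    Returns NaN when both reflectances sum to zero, where the index is undefined.
    """
    denom = nir + red
    if denom == 0:
        return math.nan
    return (nir - red) / denom


# Hypothetical reflectance values for a vegetated pixel (illustrative only).
sample_ndvi = ndvi(nir=0.45, red=0.05)
print(f"NDVI = {sample_ndvi:.3f}")
```

The same function can be mapped over paired red and NIR bands of a predicted image to obtain an NDVI image.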

5. Discussion

5.1. Novelty of HIFOW

5.2. Future Research Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Abbreviation | Definition |
|---|---|
| CC | Correlation Coefficient |
| CTFS | Coarse Temporal resolution but Fine Spatial resolution |
| Fit-FC | regression model Fitting, spatial Filtering, and residual Compensation |
| FSDAF | Flexible Spatiotemporal DAta Fusion |
| FST | Fine SpatioTemporal resolution |
| FTCS | Fine Temporal resolution but Coarse Spatial resolution |
| HIFOW | High spatial resolution Image Fusion using Object-based Weighting |
| HISTIF | HIgh-resolution SpatioTemporal Image Fusion |
| NDVI | Normalized Difference Vegetation Index |
| NIR | Near InfraRed |
| RI | Relative Improvement |
| RMSE | Root Mean Square Error |
| rRMSE | relative Root Mean Square Error |
| SSIM | Structural SIMilarity |
| STARFM | Spatial and Temporal Adaptive Reflectance Fusion Model |
| STIF | SpatioTemporal Image Fusion |
| TM | Temporal relationship Modeling |
| WO | Weighting based on Object information |


| Specification | | Sentinel-2 | RapidEye |
|---|---|---|---|
| Product type | | Ortho Level-1C | Ortho Level-3A |
| Spatial resolution | | 10 m (Green, Red, NIR 1); 20 m (Red-edge) | 5 m |
| Spectral band (central wavelength) | | Green (560 nm); Red (665 nm); Red-edge (705 nm); NIR 1 (842 nm) | Green (555 nm); Red (658 nm); Red-edge (710 nm); NIR 1 (805 nm) |
| Acquisition date | 2 (Site 1) | 14 March 2018 | 12 March 2018 |
| | 3 (Site 1) | 10 May 2018 | 10 May 2018 |
| | 2 (Site 2) | 2 June 2019 | 4 June 2019 |
| | 3 (Site 2) | 10 October 2019 | 9 October 2019 |

| Statistics | Band | Site 1: TM prediction | Site 1: WO prediction | Site 1: HIFOW prediction | Site 2: TM prediction | Site 2: WO prediction | Site 2: HIFOW prediction |
|---|---|---|---|---|---|---|---|
| RMSE | Green | 0.0098 | 0.0199 | 0.0054 | 0.0195 | 0.0166 | 0.0165 |
| | Red | 0.0169 | 0.0120 | 0.0089 | 0.0235 | 0.0175 | 0.0174 |
| | Red-edge | 0.0208 | 0.0298 | 0.0187 | 0.0195 | 0.0159 | 0.0167 |
| | NIR | 0.0270 | 0.0182 | 0.0152 | 0.0379 | 0.0290 | 0.0285 |
| rRMSE | Green | 0.0787 | 0.1590 | 0.0433 | 0.1942 | 0.1647 | 0.1643 |
| | Red | 0.1357 | 0.0958 | 0.0714 | 0.2330 | 0.1735 | 0.1726 |
| | Red-edge | 0.1668 | 0.2387 | 0.1500 | 0.1935 | 0.1578 | 0.1658 |
| | NIR | 0.2160 | 0.1455 | 0.1220 | 0.3762 | 0.2883 | 0.2833 |
| CC | Green | 0.8206 | 0.7277 | 0.9549 | 0.7571 | 0.8755 | 0.8830 |
| | Red | 0.7909 | 0.9050 | 0.9572 | 0.7103 | 0.8450 | 0.8604 |
| | Red-edge | 0.5470 | 0.8124 | 0.7646 | 0.8256 | 0.8780 | 0.8831 |
| | NIR | 0.8980 | 0.9607 | 0.9815 | 0.9037 | 0.9477 | 0.9548 |
| SSIM | Green | 0.8477 | 0.7631 | 0.9605 | 0.8352 | 0.8741 | 0.9453 |
| | Red | 0.8099 | 0.9137 | 0.9604 | 0.8394 | 0.8855 | 0.9453 |
| | Red-edge | 0.6087 | 0.8438 | 0.7920 | 0.7486 | 0.7766 | 0.9239 |
| | NIR | 0.9010 | 0.9618 | 0.9819 | 0.8614 | 0.9076 | 0.9599 |

| Statistics | Band | Site 1: STARFM | Site 1: FSDAF | Site 1: Fit-FC | Site 1: HIFOW | Site 2: STARFM | Site 2: FSDAF | Site 2: Fit-FC | Site 2: HIFOW |
|---|---|---|---|---|---|---|---|---|---|
| RMSE | Green | 0.0084 | 0.0082 | 0.0170 | 0.0054 | 0.0248 | 0.0252 | 0.0188 | 0.0165 |
| | Red | 0.0140 | 0.0137 | 0.0229 | 0.0089 | 0.0300 | 0.0299 | 0.0215 | 0.0174 |
| | Red-edge | 0.0192 | 0.0194 | 0.0206 | 0.0187 | 0.0230 | 0.0235 | 0.0238 | 0.0167 |
| | NIR | 0.0195 | 0.0218 | 0.0274 | 0.0152 | 0.0350 | 0.0356 | 0.0358 | 0.0285 |
| rRMSE | Green | 0.0673 | 0.0657 | 0.1361 | 0.0432 | 0.2461 | 0.2508 | 0.1872 | 0.1643 |
| | Red | 0.1121 | 0.1097 | 0.1833 | 0.0713 | 0.2982 | 0.2975 | 0.2140 | 0.1726 |
| | Red-edge | 0.1537 | 0.1553 | 0.1649 | 0.1497 | 0.2285 | 0.2330 | 0.2360 | 0.1658 |
| | NIR | 0.1561 | 0.1745 | 0.2194 | 0.1217 | 0.3478 | 0.3535 | 0.3556 | 0.2833 |
| CC | Green | 0.8757 | 0.8880 | 0.6643 | 0.9549 | 0.6699 | 0.6434 | 0.7804 | 0.8830 |
| | Red | 0.8668 | 0.8748 | 0.7655 | 0.9572 | 0.6209 | 0.6151 | 0.7415 | 0.8604 |
| | Red-edge | 0.6560 | 0.6521 | 0.6199 | 0.7646 | 0.7639 | 0.7437 | 0.7732 | 0.8831 |
| | NIR | 0.9551 | 0.9460 | 0.8943 | 0.9815 | 0.9188 | 0.9145 | 0.9110 | 0.9548 |
| SSIM | Green | 0.8929 | 0.9027 | 0.7115 | 0.9605 | 0.9139 | 0.8860 | 0.8658 | 0.9453 |
| | Red | 0.8777 | 0.8848 | 0.7985 | 0.9604 | 0.8900 | 0.8815 | 0.8731 | 0.9453 |
| | Red-edge | 0.6972 | 0.6933 | 0.6667 | 0.7920 | 0.9027 | 0.8775 | 0.8665 | 0.9239 |
| | NIR | 0.9563 | 0.9475 | 0.8974 | 0.9819 | 0.9396 | 0.9267 | 0.8954 | 0.9599 |
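Two of the statistics reported above, RMSE and CC, can be illustrated with a minimal sketch. It assumes the usual definitions (root mean square error and Pearson correlation between predicted and reference reflectance); the function names and the toy pixel values are hypothetical and do not come from the experiments. rRMSE and SSIM are left out because their exact normalization and window settings are not given here.

```python
import math


def rmse(pred, ref):
    """Root mean square error between predicted and reference pixel values."""
    if len(pred) != len(ref) or not ref:
        raise ValueError("pred and ref must be non-empty and of equal length")
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def correlation_coefficient(pred, ref):
    """Pearson correlation coefficient between predicted and reference values."""
    if len(pred) != len(ref) or not ref:
        raise ValueError("pred and ref must be non-empty and of equal length")
    n = len(ref)
    mean_p = sum(pred) / n
    mean_r = sum(ref) / n
    cov = sum((p - mean_p) * (r - mean_r) for p, r in zip(pred, ref))
    var_p = sum((p - mean_p) ** 2 for p in pred)
    var_r = sum((r - mean_r) ** 2 for r in ref)
    return cov / math.sqrt(var_p * var_r)


# Toy reflectance values for four pixels (illustrative only).
ref = [0.10, 0.20, 0.30, 0.40]
pred = [0.12, 0.18, 0.33, 0.37]

print(f"RMSE = {rmse(pred, ref):.4f}")
print(f"CC   = {correlation_coefficient(pred, ref):.4f}")
```

Lower RMSE and higher CC both indicate a prediction closer to the reference image, which is how the per-band columns above can be read.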

| Band | Site 1: STARFM | Site 1: FSDAF | Site 1: Fit-FC | Site 2: STARFM | Site 2: FSDAF | Site 2: Fit-FC |
|---|---|---|---|---|---|---|
| Green | 35.7 | 34.1 | 68.2 | 33.2 | 34.5 | 12.2 |
| Red | 36.4 | 35.0 | 61.1 | 42.1 | 42.0 | 19.4 |
| Red-edge | 2.6 | 3.6 | 9.2 | 27.4 | 28.8 | 29.7 |
| NIR | 22.1 | 30.3 | 44.5 | 18.5 | 19.9 | 20.3 |
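The values in this table appear consistent with a relative improvement (RI) of HIFOW over each comparison model in terms of RMSE, expressed as a percentage. The sketch below assumes RI = 100 × (RMSE of the comparison model − RMSE of HIFOW) / RMSE of the comparison model; this formula is an assumption inferred from the numbers, not a definition stated here. It uses the Site 1 Green-band RMSE values from the model comparison table. Because the published RMSE values are rounded to four decimals, other cells may differ in the last digit.

```python
def relative_improvement(rmse_baseline, rmse_proposed):
    """Percentage reduction in RMSE of the proposed model relative to a baseline."""
    if rmse_baseline <= 0:
        raise ValueError("baseline RMSE must be positive")
    return 100.0 * (rmse_baseline - rmse_proposed) / rmse_baseline


# Site 1, Green band RMSE values taken from the model comparison table.
baseline_rmse = {"STARFM": 0.0084, "FSDAF": 0.0082, "Fit-FC": 0.0170}
hifow_rmse = 0.0054

ri_site1_green = {
    model: round(relative_improvement(value, hifow_rmse), 1)
    for model, value in baseline_rmse.items()
}
print(ri_site1_green)
```

Under this assumed definition, a positive RI means HIFOW has a lower RMSE than the comparison model for that band and site.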


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, S.; Park, N.-W.; Na, S.-i. An Object-Based Weighting Approach to Spatiotemporal Fusion of High Spatial Resolution Satellite Images for Small-Scale Cropland Monitoring. Agronomy 2022, 12, 2572. https://doi.org/10.3390/agronomy12102572