Design and Experimental Verification of the YOLOV5 Model Implanted with a Transformer Module for Target-Oriented Spraying in Cabbage Farming

Abstract

1. Introduction

2. Materials and Methods

2.1. Construction of the Cabbage-Identification Model

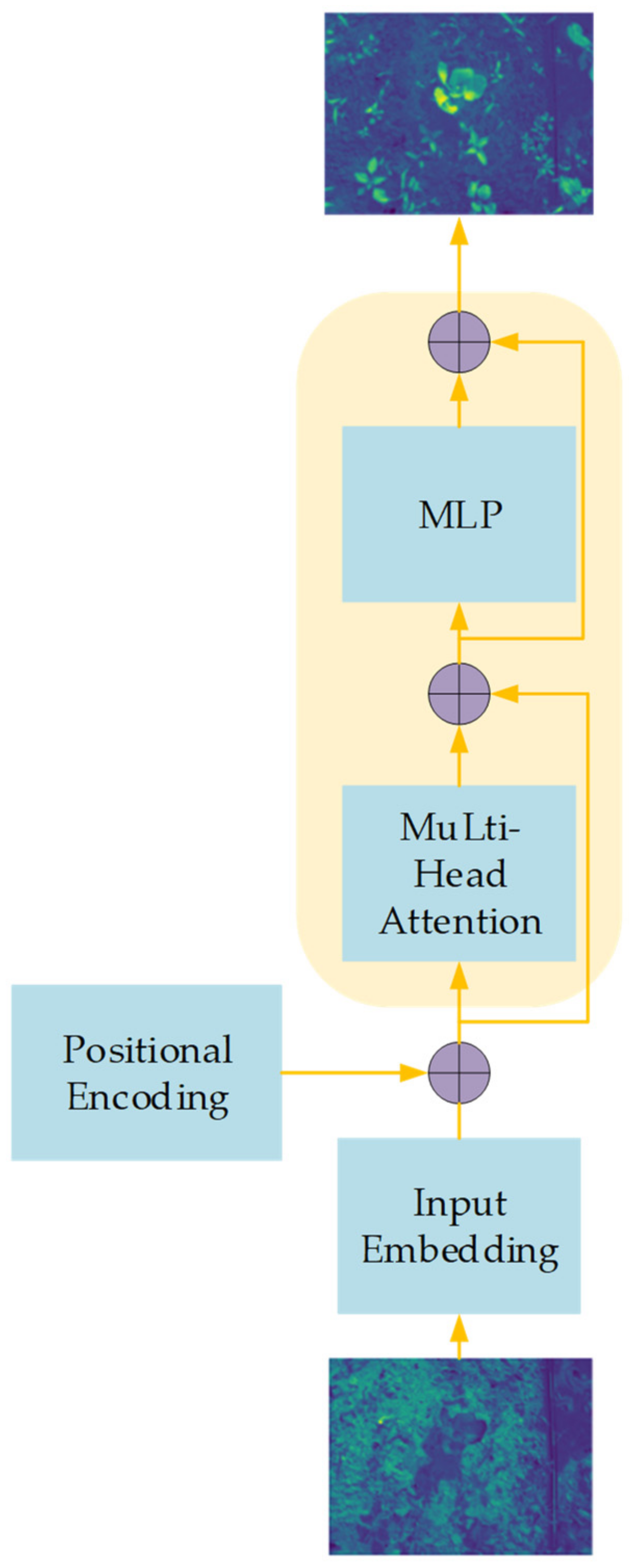

2.1.1. Implementation of the Transformer Module

2.1.2. Overall Structure of the Cabbage-Detection Model

2.1.3. Positioning Method for Cabbages

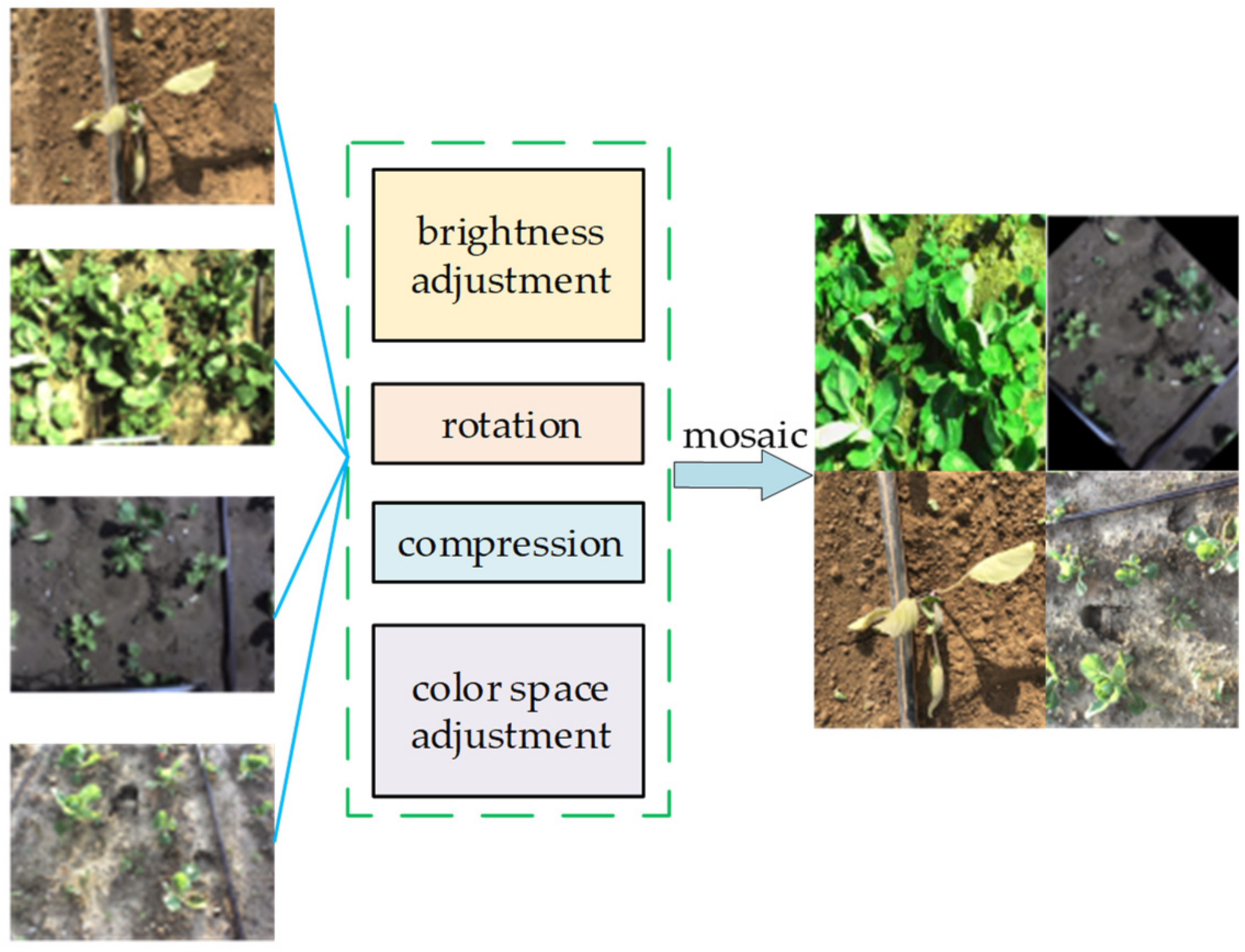

2.2. Preprocessing Method

2.3. Model Training

2.3.1. Training Platform

2.3.2. Training Strategy

2.3.3. Evaluation Method for the Cabbage-Identification Model

- TP: number of true positives.

- FP: number of false positives.

- FN: number of false negatives.

- P: precision (%).

- R: recall (%).

- AP: average precision (%).
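
The usual definitions behind these symbols are P = TP/(TP + FP) and R = TP/(TP + FN), with AP taken as the area under the precision-recall curve. The Python sketch below computes all three. The function names are illustrative. The all-point (Pascal VOC-style) interpolation used for AP is an assumption, because the interpolation scheme used in this study is not stated here.

```python
from typing import List, Tuple


def precision(tp: int, fp: int) -> float:
    """P = TP / (TP + FP), as a percentage (0 if nothing was detected)."""
    return 100.0 * tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """R = TP / (TP + FN), as a percentage (0 if there are no ground-truth objects)."""
    return 100.0 * tp / (tp + fn) if tp + fn else 0.0


def average_precision(pr_points: List[Tuple[float, float]]) -> float:
    """AP as the area under the precision-recall curve, as a percentage.

    pr_points holds (recall, precision) pairs as fractions in [0, 1], one per
    confidence threshold. Uses all-point interpolation (an assumption here).
    """
    pts = sorted(pr_points)  # ascending recall
    rec = [0.0] + [r for r, _ in pts] + [1.0]
    prec = [0.0] + [p for _, p in pts] + [0.0]
    # Precision envelope: make precision non-increasing as recall grows
    for i in range(len(prec) - 2, -1, -1):
        prec[i] = max(prec[i], prec[i + 1])
    # Sum rectangles under the envelope wherever recall increases
    return 100.0 * sum((rec[i] - rec[i - 1]) * prec[i] for i in range(1, len(rec)))


if __name__ == "__main__":
    print(precision(90, 10))  # 90.0
    print(average_precision([(0.5, 1.0), (1.0, 0.5)]))  # 75.0
```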

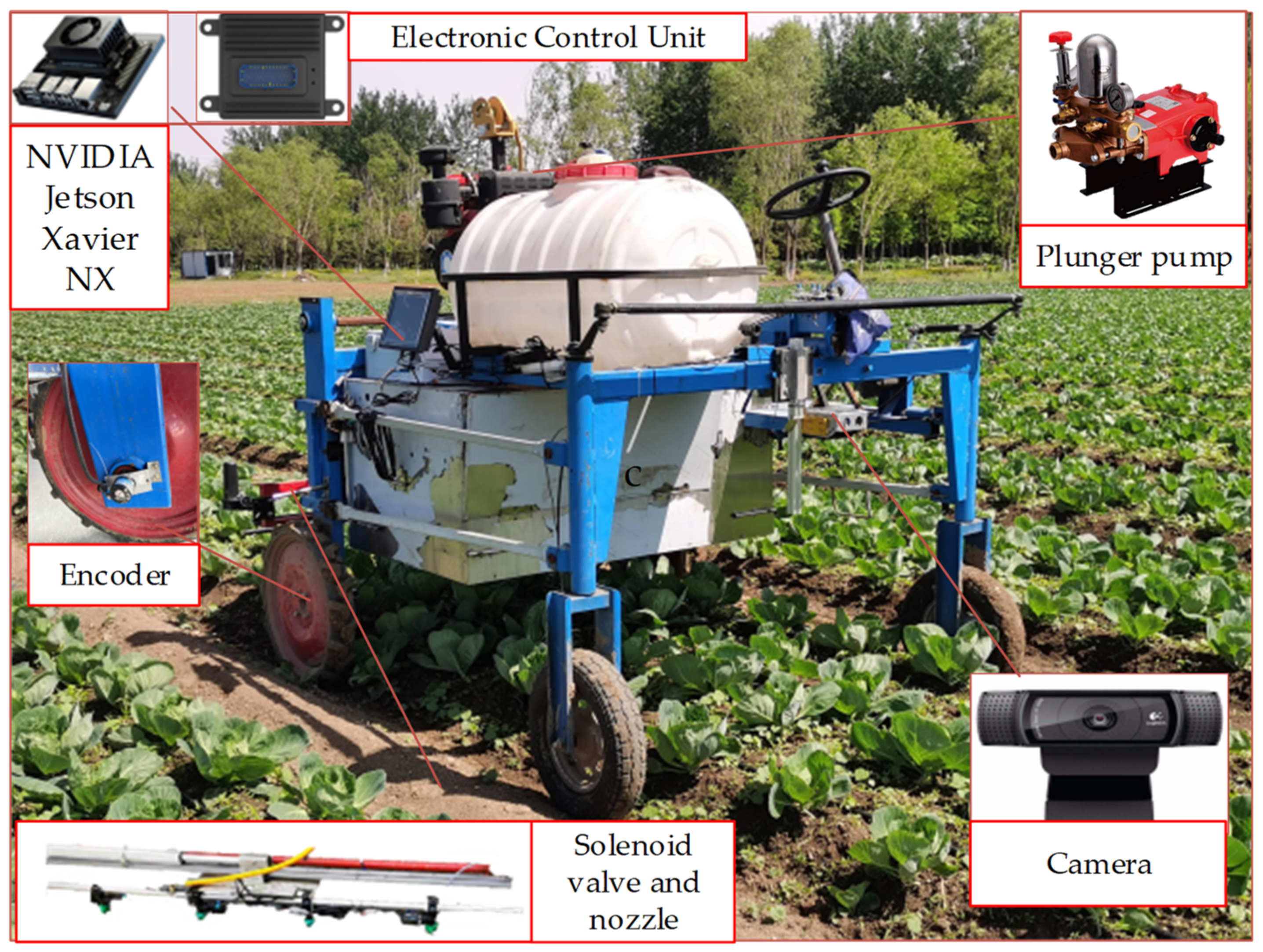

2.4. Design of the Target-Oriented Spray System for Cabbages

2.5. Field Experiment

2.5.1. Model Performance Comparison Test

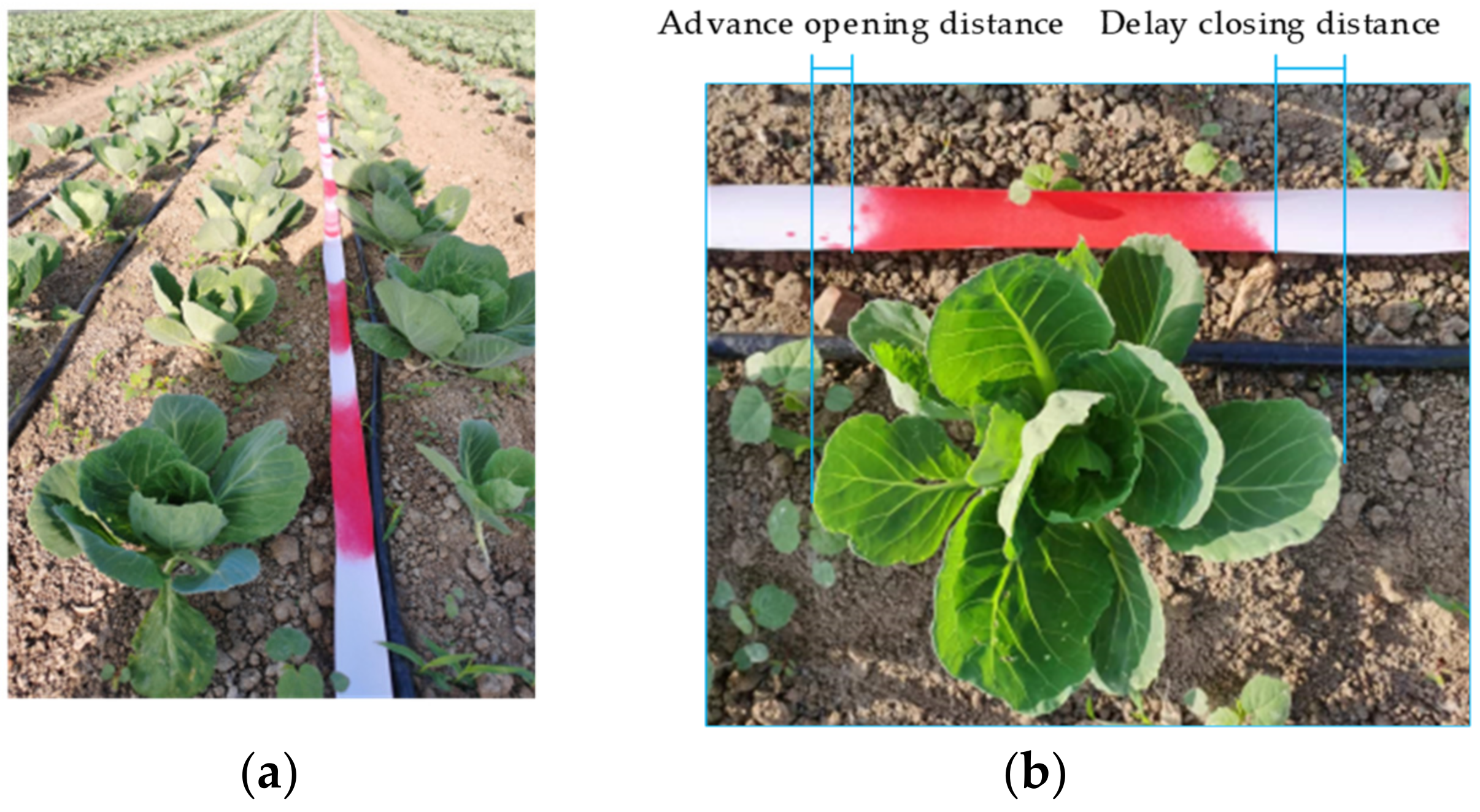

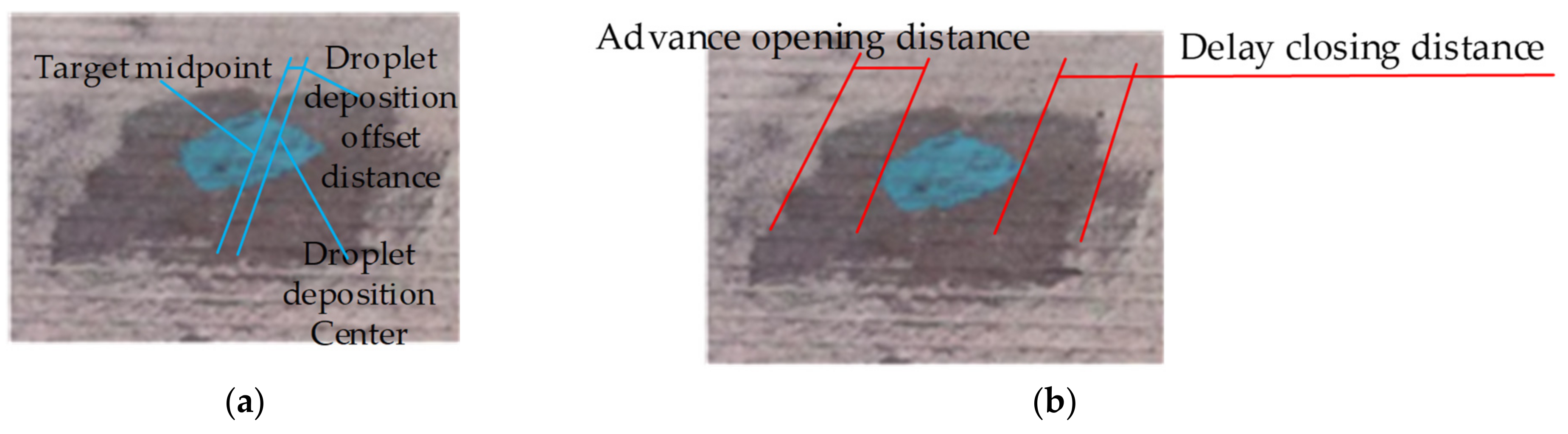

2.5.2. Target Spraying System Performance Test

3. Results

3.1. Laboratory Experimental Results

3.1.1. Comparison of the Results of the Cabbage-Identification Models

3.1.2. Experimental Results of Data Augmentation with Motion Blurring

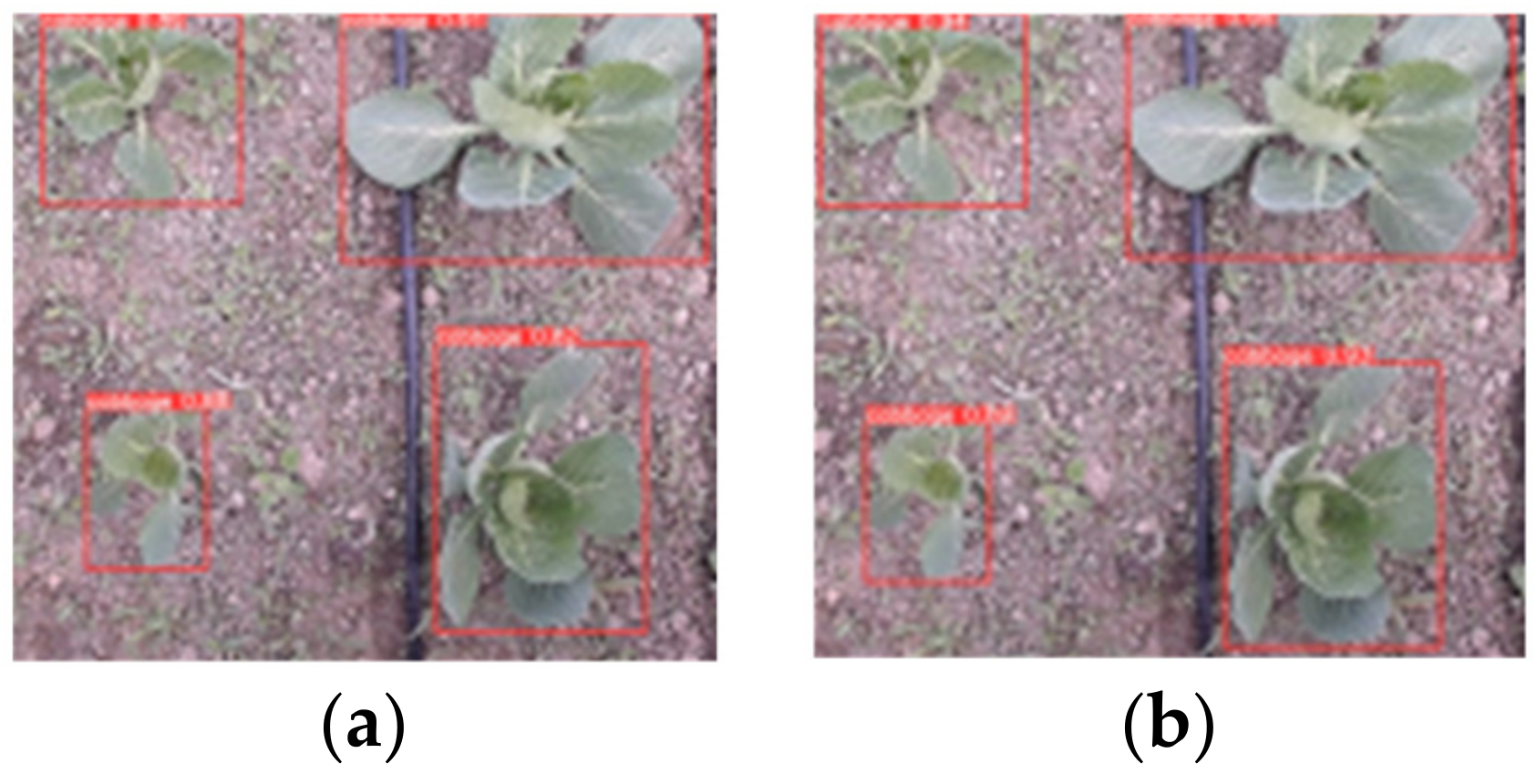

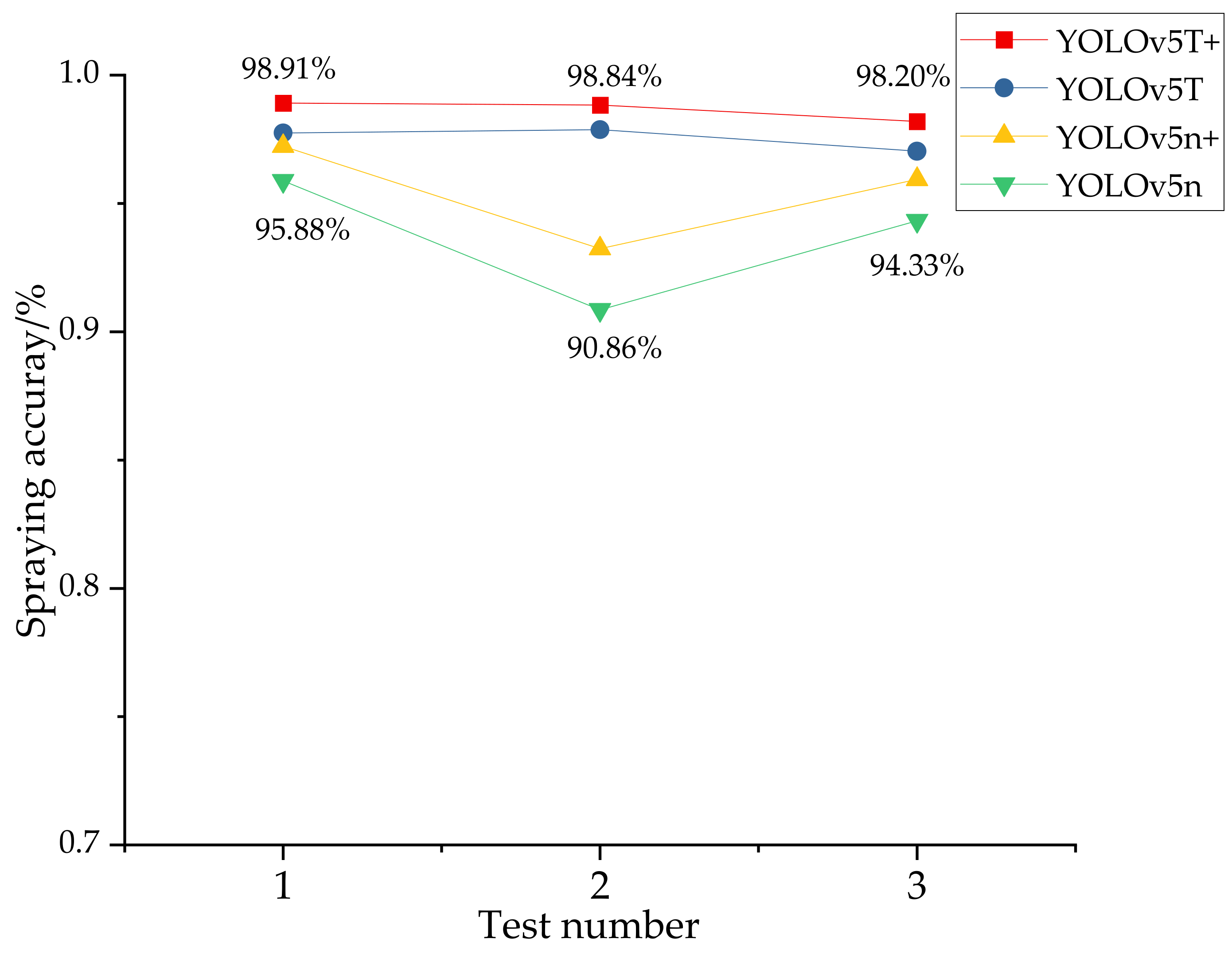

3.2. Comparative Tests of Model Spraying Accuracy in the Field

3.3. Performance Test Results of the Target Spraying System

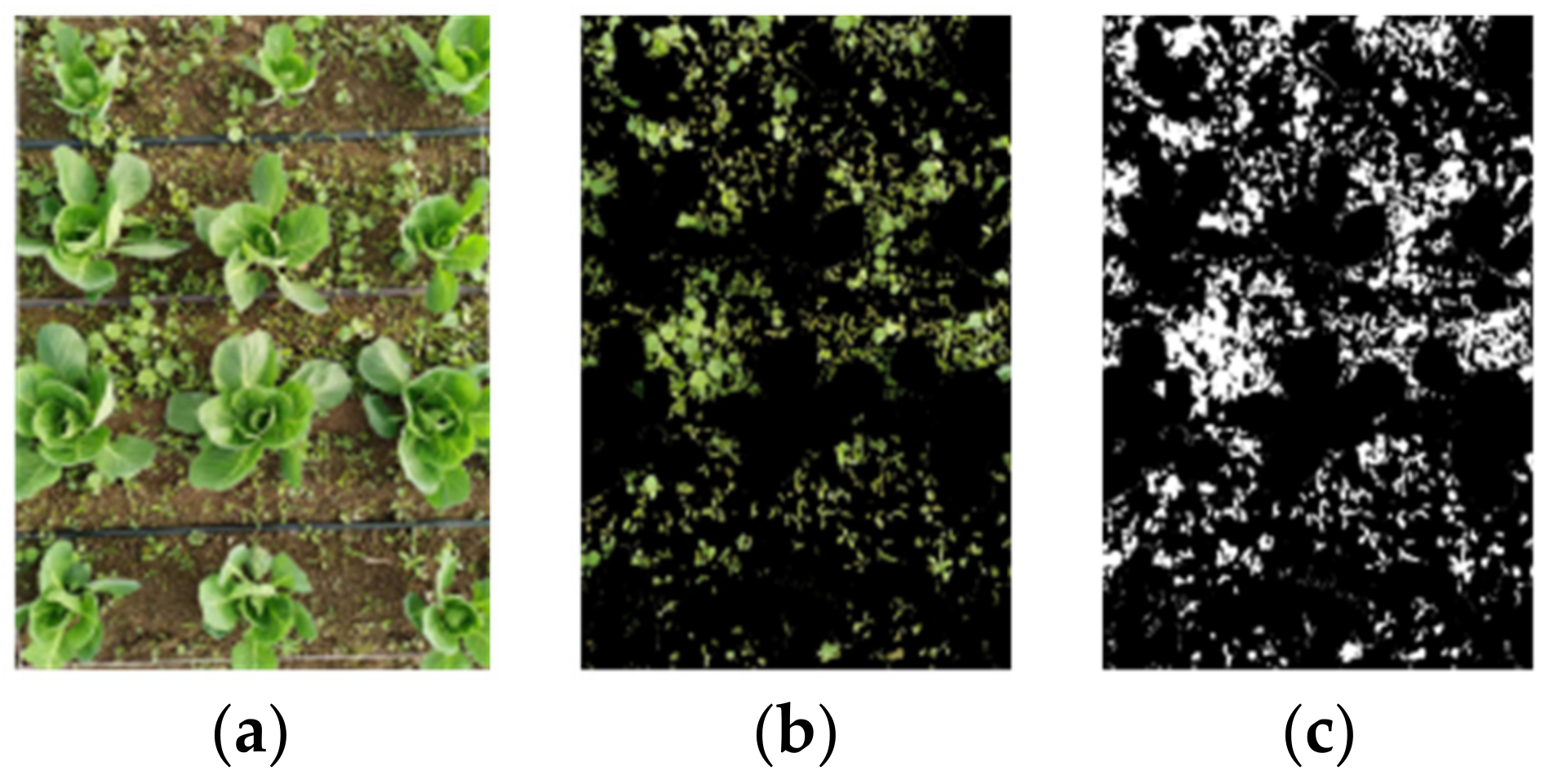

3.3.1. Measurement Results of the Weed Density

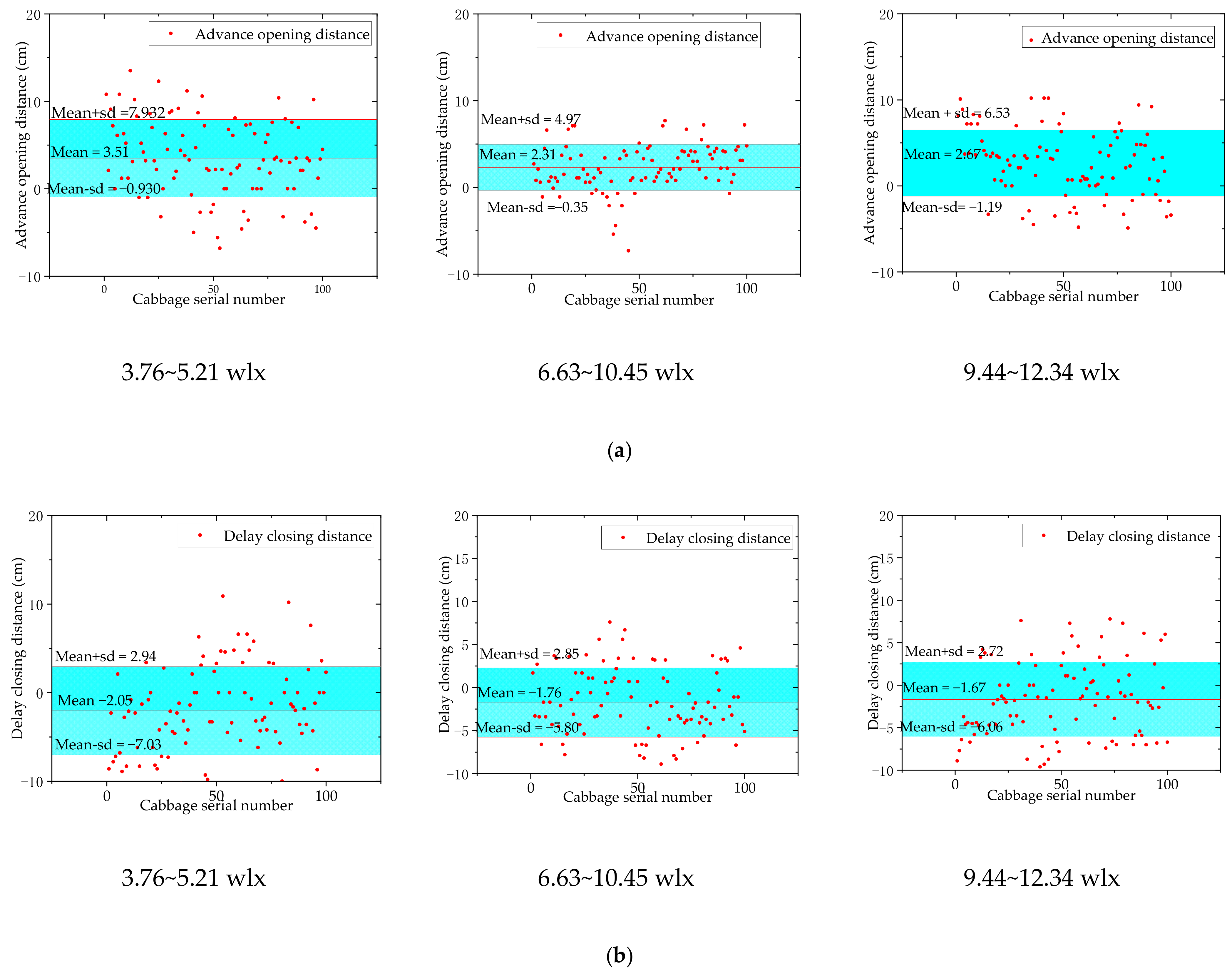

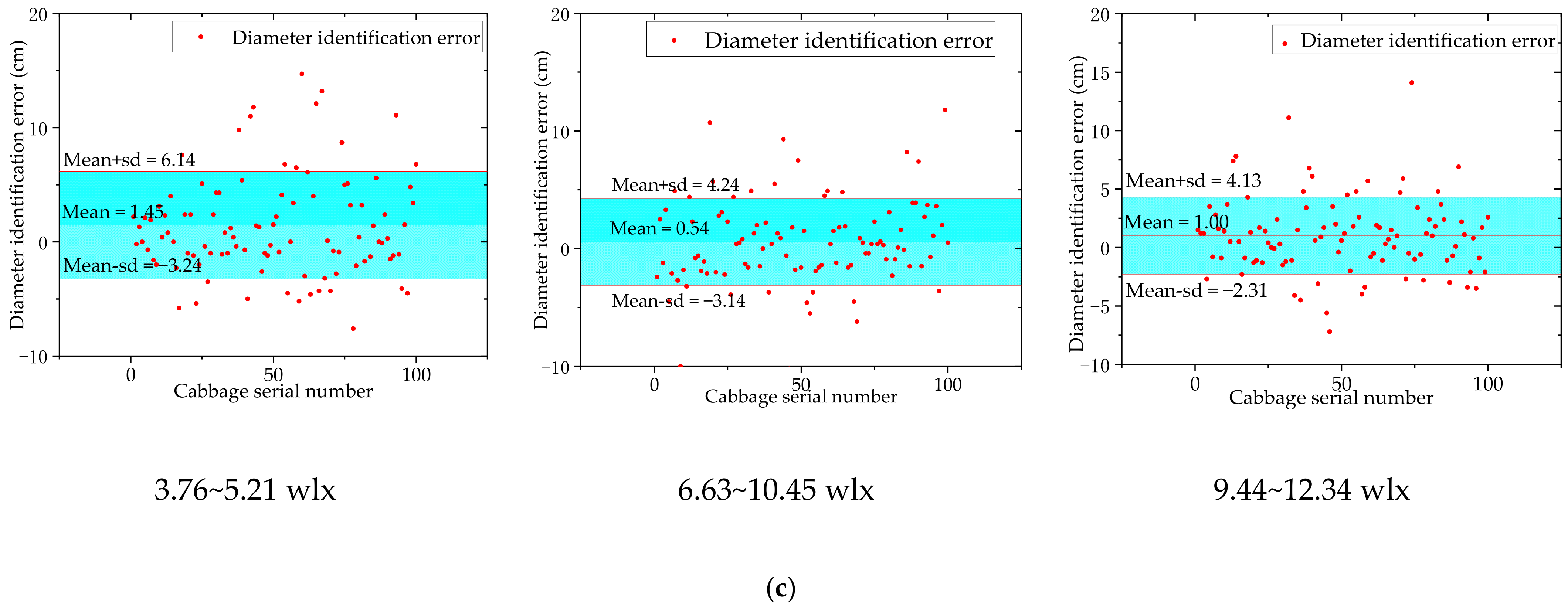

3.3.2. Experimental Target Error

3.3.3. Test Results for Savings Rate and Droplet Deposition

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| No. | Equipment | Specification | Manufacturer | Region, Country |
|---|---|---|---|---|
| 1 | Encoder | Hts-5008 encoder | Wuxi Hengte Technology Co., Ltd | ZJ, CN |
| 2 | Power supply | 100 Ah 12 V lithium battery | Dongguan Golden Phoenix Energy Technology Co., Ltd | GZ, CN |
| 3 | Converter | Xiao Boshi | Binhai Qiangsheng Electronics Co., Ltd | JS, CN |
| 4 | Display | 13.6-inch LED display | Hunan Chuanglebo Intelligent Technology Co., Ltd | HN, CN |
| 5 | Controller | HSC37 | Beijing Yingzhijie Technology Co., Ltd | BJ, CN |
| 6 | Edge computing equipment | NVIDIA Jetson Xavier NX | Hunan Chuanglebo Intelligent Technology Co., Ltd | HN, CN |
| 7 | Camera | Logitech C930 | Shanghai Liuxiang Trading Co., Ltd | SH, CN |
| 8 | Pump | Plunger pump | Hebei Deke Machinery Technology Co., Ltd | HE, CN |
| 9 | Solenoid valve | Teejet DS115880-12 | Tegent Spray Technology (Ningbo) Co., Ltd | ZJ, CN |
| 10 | Pressure sensor | 131-B | Beijing Aosheng Automation Technology Co., Ltd | BJ, CN |
| 11 | Ball valves | FRY-02T | Jiangsu Valve Ruiyi Valve Equipment Co., Ltd | JS, CN |
| 12 | Pesticide box | 200 L | Handan Daoer Plastic Industry Co., Ltd | HE, CN |

| Model | Precision | Recall | AP | Time Consumption on GPU (ms) | Time Consumption on Jetson Xavier NX (ms) |
|---|---|---|---|---|---|
| YOLOV5n | 88.21% | 89.97% | 94.24% | 10.30 | 55.90 |
| Ours | 92.90% | 93.23% | 96.14% | 9.70 | 51.07 |
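
The improvements in the table above can be checked with simple arithmetic. The sketch below uses the reported values to compute percentage-point gains, relative time savings, and the frame rate implied by each time. The frame-rate figure assumes that the reported time consumption is per image. The table does not state this, so treat it as an assumption.

```python
# Values as reported in the comparison table (YOLOV5n vs. the proposed model).
BASELINE = {"precision": 88.21, "recall": 89.97, "ap": 94.24, "gpu_ms": 10.30, "nx_ms": 55.90}
OURS = {"precision": 92.90, "recall": 93.23, "ap": 96.14, "gpu_ms": 9.70, "nx_ms": 51.07}


def gain_pp(metric: str) -> float:
    """Absolute improvement of the proposed model, in percentage points."""
    return OURS[metric] - BASELINE[metric]


def time_saving_pct(key: str) -> float:
    """Relative reduction in time consumption, in percent of the baseline."""
    return 100.0 * (BASELINE[key] - OURS[key]) / BASELINE[key]


def implied_fps(ms: float) -> float:
    """Images per second, assuming `ms` is the time spent on one image."""
    return 1000.0 / ms


if __name__ == "__main__":
    for m in ("precision", "recall", "ap"):
        print(f"{m}: +{gain_pp(m):.2f} pp")
    for k in ("gpu_ms", "nx_ms"):
        print(f"{k}: -{time_saving_pct(k):.2f}%")
    print(f"NX: {implied_fps(BASELINE['nx_ms']):.1f} -> {implied_fps(OURS['nx_ms']):.1f} fps")
```

On these numbers, the proposed model gains 4.69, 3.26 and 1.90 percentage points in precision, recall and AP. It also reduces time consumption by about 5.8% on the GPU and 8.6% on the Jetson Xavier NX, which corresponds to roughly 17.9 to 19.6 images per second under the per-image assumption.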

| Model | Total (count) | Missed (count) | Misidentified (count) | AP |
|---|---|---|---|---|
| Without motion-blur augmentation | 1428 | 63 | 18 | 95.58% |
| With motion-blur augmentation | 1428 | 31 | 6 | 97.83% |
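
Motion-blur augmentation is commonly implemented by convolving a training image with a line-shaped averaging kernel. This imitates the smear produced when the camera moves during exposure. The sketch below applies such a kernel along the rows of a grayscale image stored as nested lists. The function name, the horizontal blur direction, the odd kernel length and the edge replication are all assumptions for illustration. The kernel size and direction used in this study are not given here.

```python
from typing import List

Image = List[List[float]]


def horizontal_motion_blur(img: Image, length: int) -> Image:
    """Blur each row with a centred uniform 1 x `length` kernel.

    Hypothetical helper: border pixels are handled by replicating the
    edge value, and `length` must be a positive odd integer.
    """
    if length < 1 or length % 2 == 0:
        raise ValueError("length must be a positive odd integer")
    half = length // 2
    out: Image = []
    for row in img:
        w = len(row)
        new_row = []
        for x in range(w):
            # Average `length` neighbours along the row, clamping indices at the borders
            total = sum(row[min(max(x + d, 0), w - 1)] for d in range(-half, half + 1))
            new_row.append(total / length)
        out.append(new_row)
    return out


if __name__ == "__main__":
    # A single bright pixel is smeared over three columns
    print(horizontal_motion_blur([[0, 0, 9, 0, 0]], 3))  # [[0.0, 3.0, 3.0, 3.0, 0.0]]
```

Longer kernels produce stronger smearing. When augmenting, the blurred copies are added to the training set alongside the originals, so the labels are unchanged.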

| Light Intensity (×10⁴ lx) | Relative Humidity (%) | Wind Speed (m/s) | Temperature (°C) |
|---|---|---|---|
| 3.76–5.21 | 55.31–61.56 | 0.64–3.94 | 16.05–17.2 |
| 6.63–10.45 | 18–24.81 | 2.1–5.29 | 19.2–20.4 |
| 9.44–12.34 | 13.94–19.06 | 0.22–3.15 | 21.65–23.75 |

| Indicator | DF | Mean Square | F Value | p Value |
|---|---|---|---|---|
| Advance opening distance | 2 | 4.36 | 0.451 | 0.637 |
| Delay closing distance | 2 | 3.91 | 0.194 | 0.824 |
| Diameter | 2 | 20.52 | 1.320 | 0.268 |
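
Each row of the table above is a one-way ANOVA. A factor DF of 2 means that three groups were compared. F is the ratio of the between-group mean square to the within-group mean square. Every p value exceeds 0.05, so none of the three indicators differs significantly across groups at the 5% level. The sketch below reproduces this calculation. It assumes that the three groups are the three field-condition sets in the weather table, which is not stated explicitly here, and the sample data are hypothetical. Because the numerator has only two degrees of freedom, the p value has the closed form P(F > f) = (1 + 2f/df_within)^(-df_within/2), so no statistics library is needed.

```python
from typing import List, Tuple


def one_way_anova(groups: List[List[float]]) -> Tuple[int, int, float, float, float]:
    """Return (df_between, df_within, ms_between, F, p) for a one-way ANOVA.

    The p value uses the closed-form survival function of F(2, df_within),
    so this sketch only accepts exactly three groups (df_between == 2).
    """
    if len(groups) != 3:
        raise ValueError("closed-form p value implemented only for three groups")
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group and within-group sums of squares
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    f = ms_between / ms_within
    # P(F > f) for F(2, d) is (1 + 2f/d) ** (-d/2)
    p = (1.0 + 2.0 * f / df_within) ** (-df_within / 2.0)
    return df_between, df_within, ms_between, f, p


if __name__ == "__main__":
    # Hypothetical measurements for three groups
    data = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [3.0, 4.0, 5.0]]
    print(one_way_anova(data))  # (2, 6, 3.0, 3.0, 0.125)
```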


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fu, H.; Zhao, X.; Wu, H.; Zheng, S.; Zheng, K.; Zhai, C. Design and Experimental Verification of the YOLOV5 Model Implanted with a Transformer Module for Target-Oriented Spraying in Cabbage Farming. Agronomy 2022, 12, 2551. https://doi.org/10.3390/agronomy12102551
