Automatic Wheat Lodging Detection and Mapping in Aerial Imagery to Support High-Throughput Phenotyping and In-Season Crop Management

Abstract

1. Introduction

2. Materials and Methods

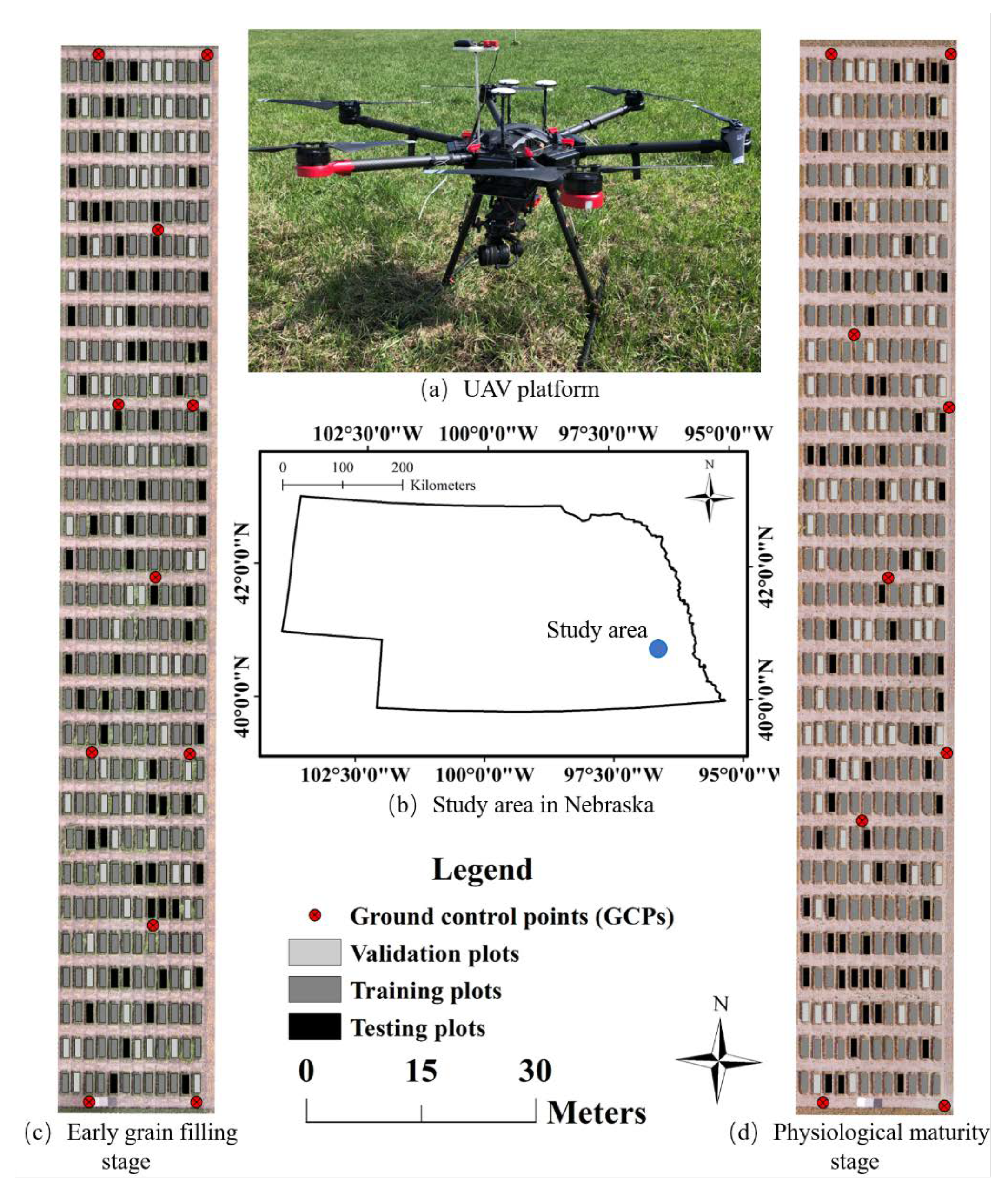

2.1. Study Site and UAV Image Collections

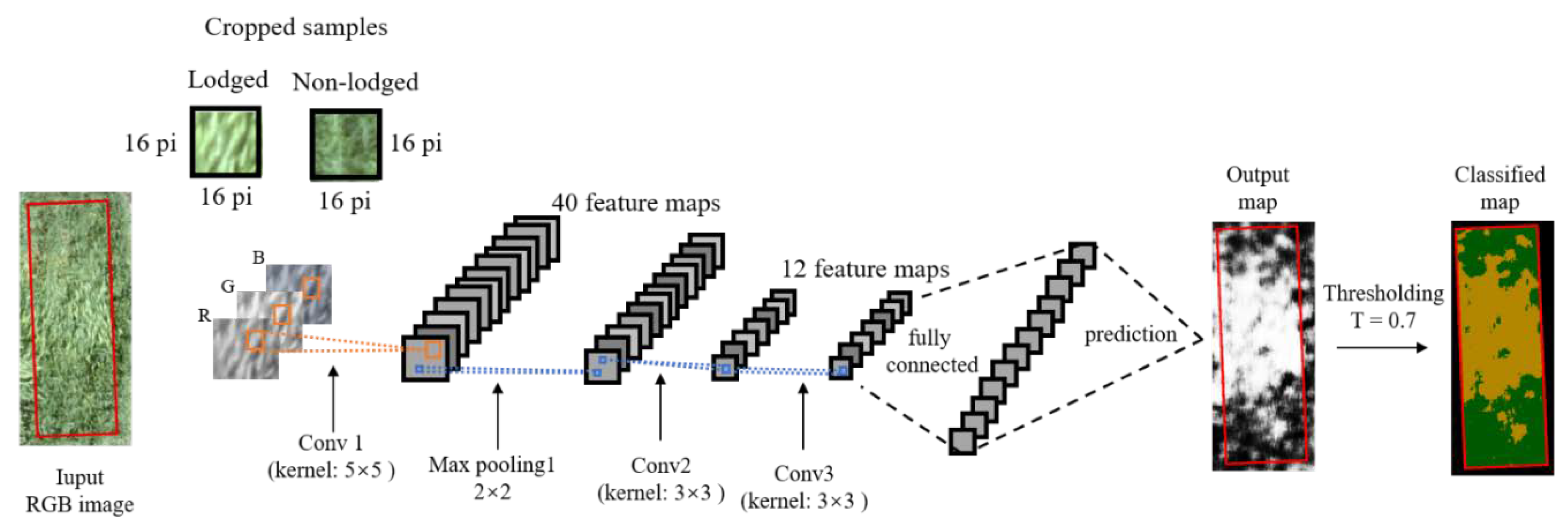

2.2. Image Processing and CNN Modeling

2.2.1. Image Pre-Processing

2.2.2. CNN Architecture and Experimental Design

2.3. Model Optimization and Accuracy Assessment

2.3.1. Model Validation

2.3.2. Accuracy Assessment of Lodging Classification in Testing Dataset

3. Results and Discussions

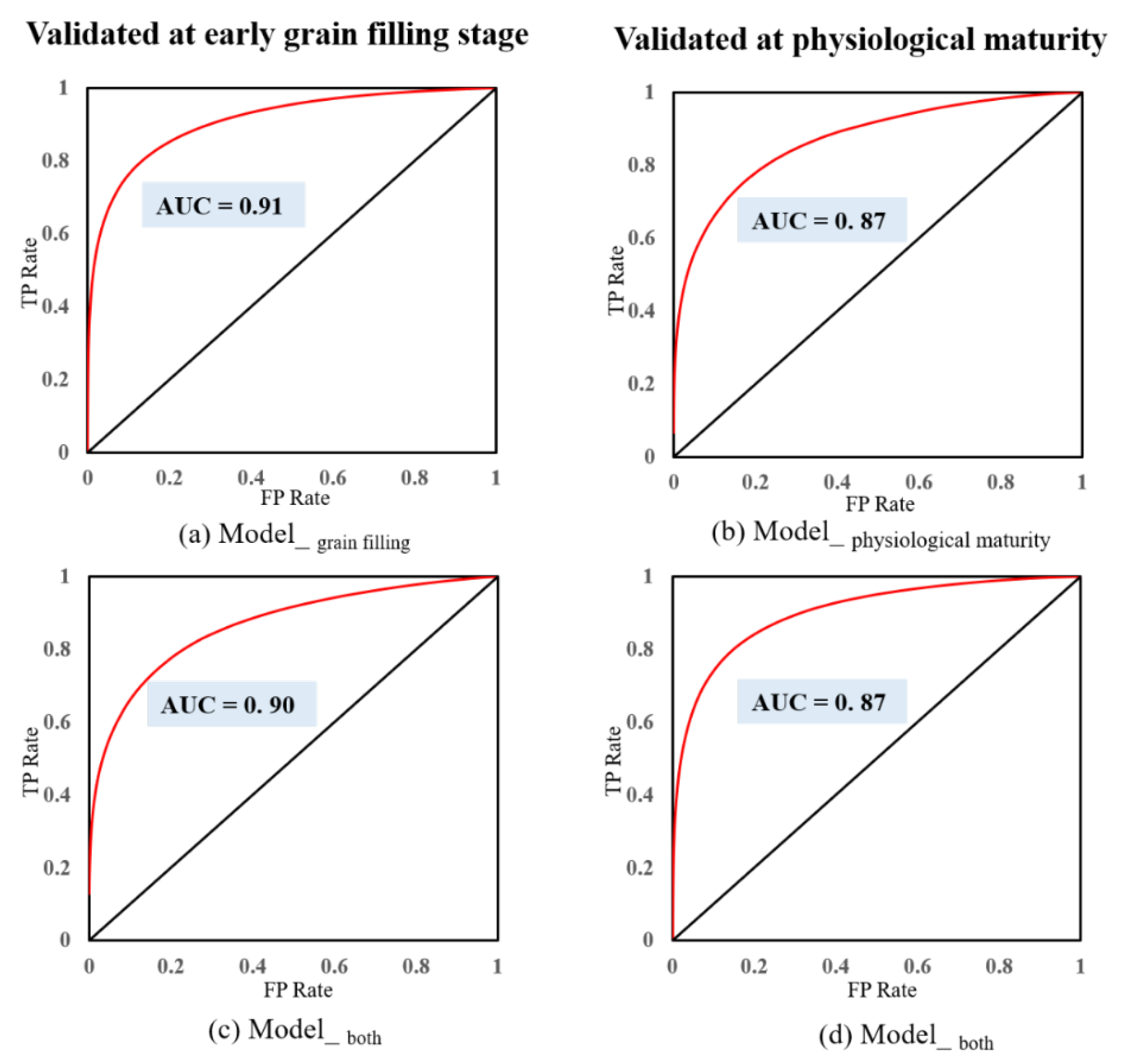

3.1. Model Validation

3.2. Accuracy of Lodging Classification and Mapping

3.2.1. Overall Quantitative Evaluation with Confusion Matrices

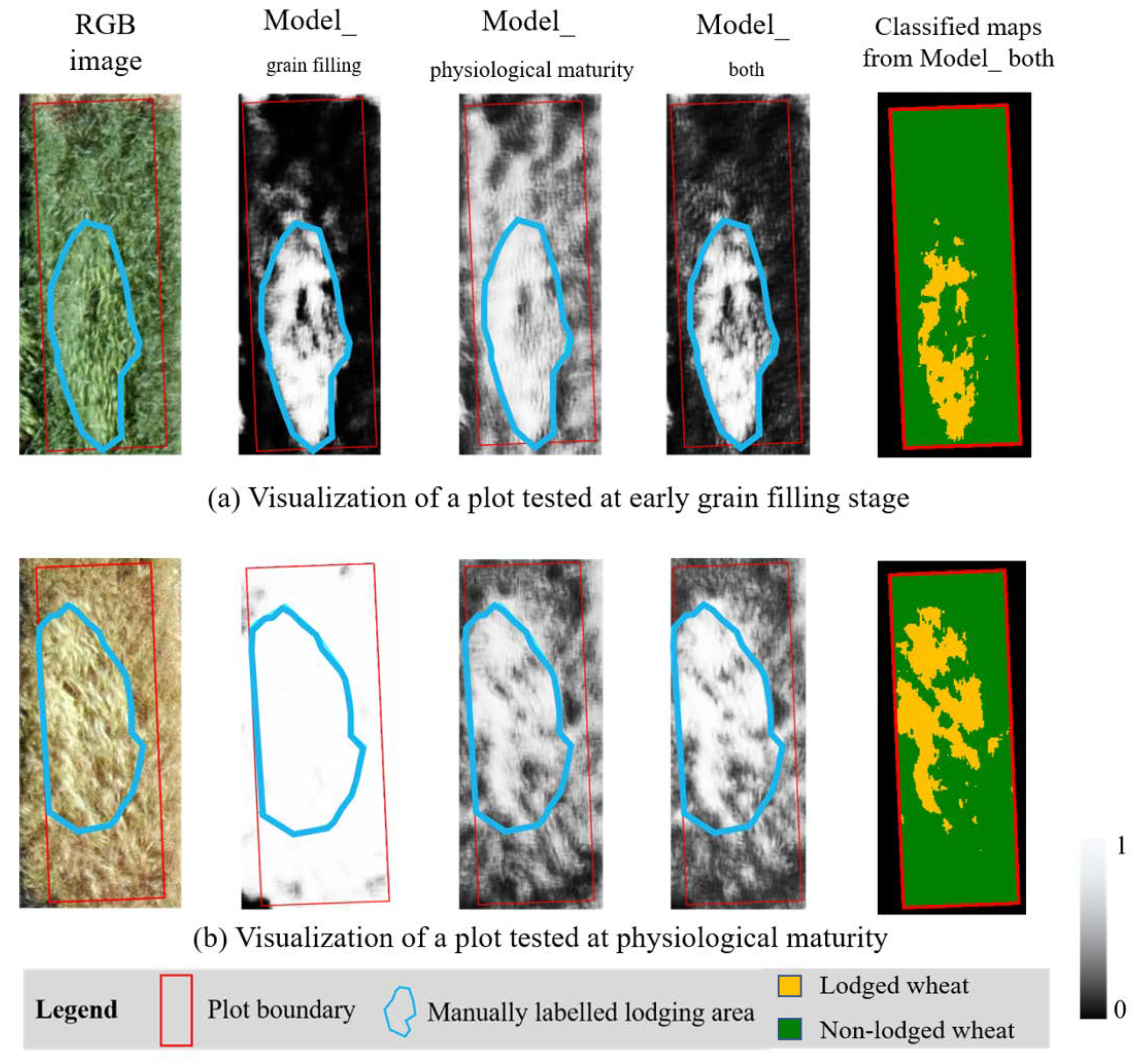

3.2.2. Visualization of Model Performance

3.3. Applications and Limitations

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| No. | Model | Trained (Validated) by Images from | Tested on Imagery at |
|---|---|---|---|
| 1 | Model_grain filling | early grain filling stage | both early grain filling stage and physiological maturity |
| 2 | Model_physiological maturity | physiological maturity | both early grain filling stage and physiological maturity |
| 3 | Model_both | both early grain filling stage and physiological maturity | both early grain filling stage and physiological maturity |

| Model † and test stage | Actual class | Predicted Lodging | Predicted Non-Lodging | Precision (%) | Recall (%) | F1-Score (%) | OA (%) | Kc |
|---|---|---|---|---|---|---|---|---|
| Model_grain filling †, tested at early grain filling stage | Lodging | 563426 | 328653 | 71.79 | 63.16 | 67.20 | 90.22 | 0.61 |
| | Non-lodging | 221430 | 4508971 | | | | | |
| Model_grain filling †, tested at physiological maturity | Lodging | 569850 | 5495 | 9.41 | 99.04 | 17.18 | 14.41 | 0.01 |
| | Non-lodging | 5487168 | 354986 | | | | | |
| Model_physiological maturity †, tested at early grain filling stage | Lodging | 320180 | 147047 | 30.03 | 68.53 | 41.79 | 84.13 | 0.32 |
| | Non-lodging | 745032 | 4410221 | | | | | |
| Model_physiological maturity †, tested at physiological maturity | Lodging | 588172 | 320238 | 49.54 | 64.75 | 56.13 | 85.67 | 0.48 |
| | Non-lodging | 599088 | 4908403 | | | | | |
| Model_both †, tested at early grain filling stage | Lodging | 620320 | 271759 | 65.96 | 69.54 | 67.70 | 89.47 | 0.61 |
| | Non-lodging | 320180 | 4410221 | | | | | |
| Model_both †, tested at physiological maturity | Lodging | 488492 | 419918 | 62.96 | 53.77 | 58.01 | 88.98 | 0.52 |
| | Non-lodging | 287324 | 5220167 | | | | | |
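
The derived columns of the confusion-matrix table can be recomputed from the pixel counts. The sketch below is illustrative only and is not the authors' code: it assumes the standard definitions of precision, recall, F1-score and overall accuracy (OA), and it assumes that Kc denotes Cohen's kappa coefficient. The class name `BinaryConfusion` and the function name `accuracy_metrics` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryConfusion:
    """Pixel counts of a lodging / non-lodging confusion matrix."""
    tp: int  # actual lodging, predicted lodging
    fn: int  # actual lodging, predicted non-lodging
    fp: int  # actual non-lodging, predicted lodging
    tn: int  # actual non-lodging, predicted non-lodging


def accuracy_metrics(cm: BinaryConfusion) -> dict:
    """Return precision, recall, F1, OA (as fractions) and kappa."""
    n = cm.tp + cm.fn + cm.fp + cm.tn
    precision = cm.tp / (cm.tp + cm.fp)
    recall = cm.tp / (cm.tp + cm.fn)
    f1 = 2 * precision * recall / (precision + recall)
    overall_accuracy = (cm.tp + cm.tn) / n
    # Chance agreement for Cohen's kappa (assumed meaning of Kc),
    # computed from the row totals and column totals.
    expected = ((cm.tp + cm.fn) * (cm.tp + cm.fp)
                + (cm.fp + cm.tn) * (cm.fn + cm.tn)) / n ** 2
    kappa = (overall_accuracy - expected) / (1 - expected)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "overall_accuracy": overall_accuracy,
        "kappa": kappa,
    }


# Counts copied from the first block of the table:
# Model_grain filling, tested at early grain filling stage.
cm = BinaryConfusion(tp=563426, fn=328653, fp=221430, tn=4508971)
for name, value in accuracy_metrics(cm).items():
    print(f"{name}: {value:.4f}")
```

Under these assumptions the first block rounds to the tabulated 71.79%, 63.16%, 67.20%, 90.22% and 0.61, which suggests the table follows the standard definitions.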

| IoU of Lodging Class | Model_grain filling † (tested at early grain filling stage) | Model_physiological maturity † (tested at early grain filling stage) | Model_both † (tested at early grain filling stage) | Model_grain filling † (tested at physiological maturity) | Model_physiological maturity † (tested at physiological maturity) | Model_both † (tested at physiological maturity) |
|---|---|---|---|---|---|---|
| Plots in Figure 4 | 0.47 | 0.55 | 0.47 | 0.45 | 0.59 | 0.59 |
| All test plots | 0.51 | 0.26 | 0.51 | 0.09 | 0.39 | 0.41 |
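
The "All test plots" row appears consistent with the pixel counts in the confusion-matrix table. As a minimal sketch, assuming the intersection over union (IoU) of the lodging class is computed pixel-wise as TP / (TP + FN + FP), the counts reproduce that row. The function name `lodging_iou` and the dictionary layout are hypothetical; the counts are copied from the confusion matrices.

```python
def lodging_iou(tp: int, fn: int, fp: int) -> float:
    """Pixel-wise IoU of the lodging class: TP / (TP + FN + FP)."""
    return tp / (tp + fn + fp)


# (model, test stage) -> (TP, FN, FP), copied from the confusion matrices.
counts = {
    ("grain filling", "early grain filling"): (563426, 328653, 221430),
    ("physiological maturity", "early grain filling"): (320180, 147047, 745032),
    ("both", "early grain filling"): (620320, 271759, 320180),
    ("grain filling", "physiological maturity"): (569850, 5495, 5487168),
    ("physiological maturity", "physiological maturity"): (588172, 320238, 599088),
    ("both", "physiological maturity"): (488492, 419918, 287324),
}

for (model, stage), (tp, fn, fp) in counts.items():
    iou = lodging_iou(tp, fn, fp)
    print(f"Model_{model}, tested at {stage}: IoU = {iou:.2f}")
```

Because IoU ignores true negatives, it is not inflated by the large non-lodging class in the way overall accuracy is, which is one reason the two measures can rank the models differently.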

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhao, B.; Li, J.; Baenziger, P.S.; Belamkar, V.; Ge, Y.; Zhang, J.; Shi, Y. Automatic Wheat Lodging Detection and Mapping in Aerial Imagery to Support High-Throughput Phenotyping and In-Season Crop Management. Agronomy 2020, 10, 1762. https://doi.org/10.3390/agronomy10111762
