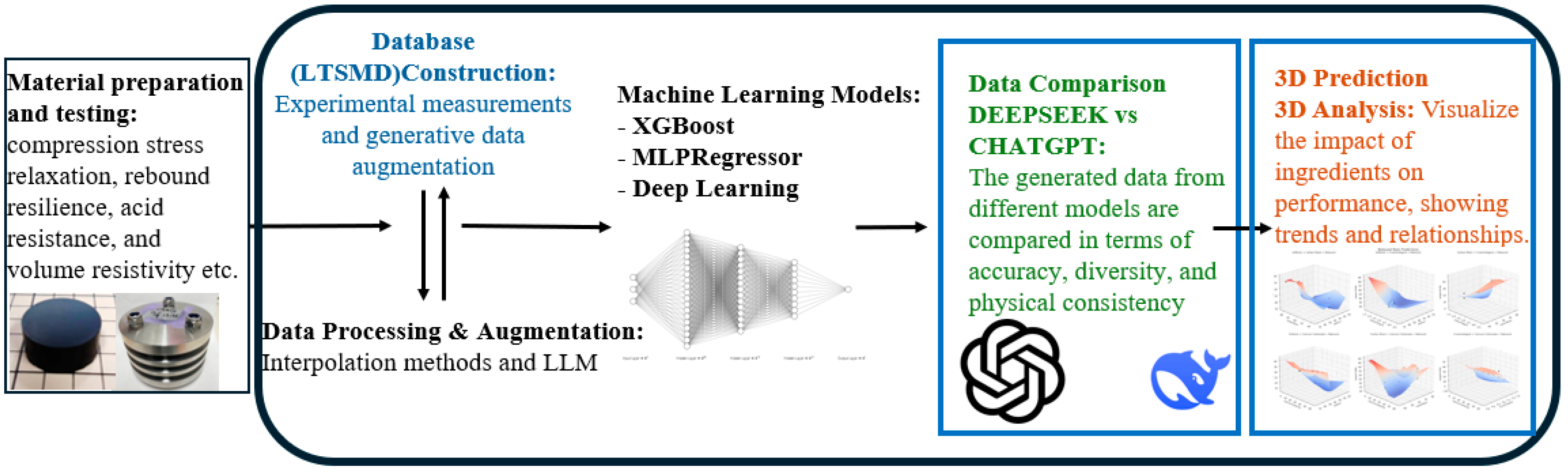

Low-Temperature Sealing Material Database and Optimization Prediction Based on AI and Machine Learning

Abstract

1. Introduction

1.1. Importance and Challenges of Low-Temperature Sealing Materials

1.2. Optimization Strategies for Low-Temperature Sealing Materials

1.3. Application of Machine Learning in Material Optimization

- Existing studies are primarily based on experimental data, lacking validation for AI-generated data augmentation methods;

- Supervised learning methods have not yet been sufficiently optimized for cryogenic sealing materials;

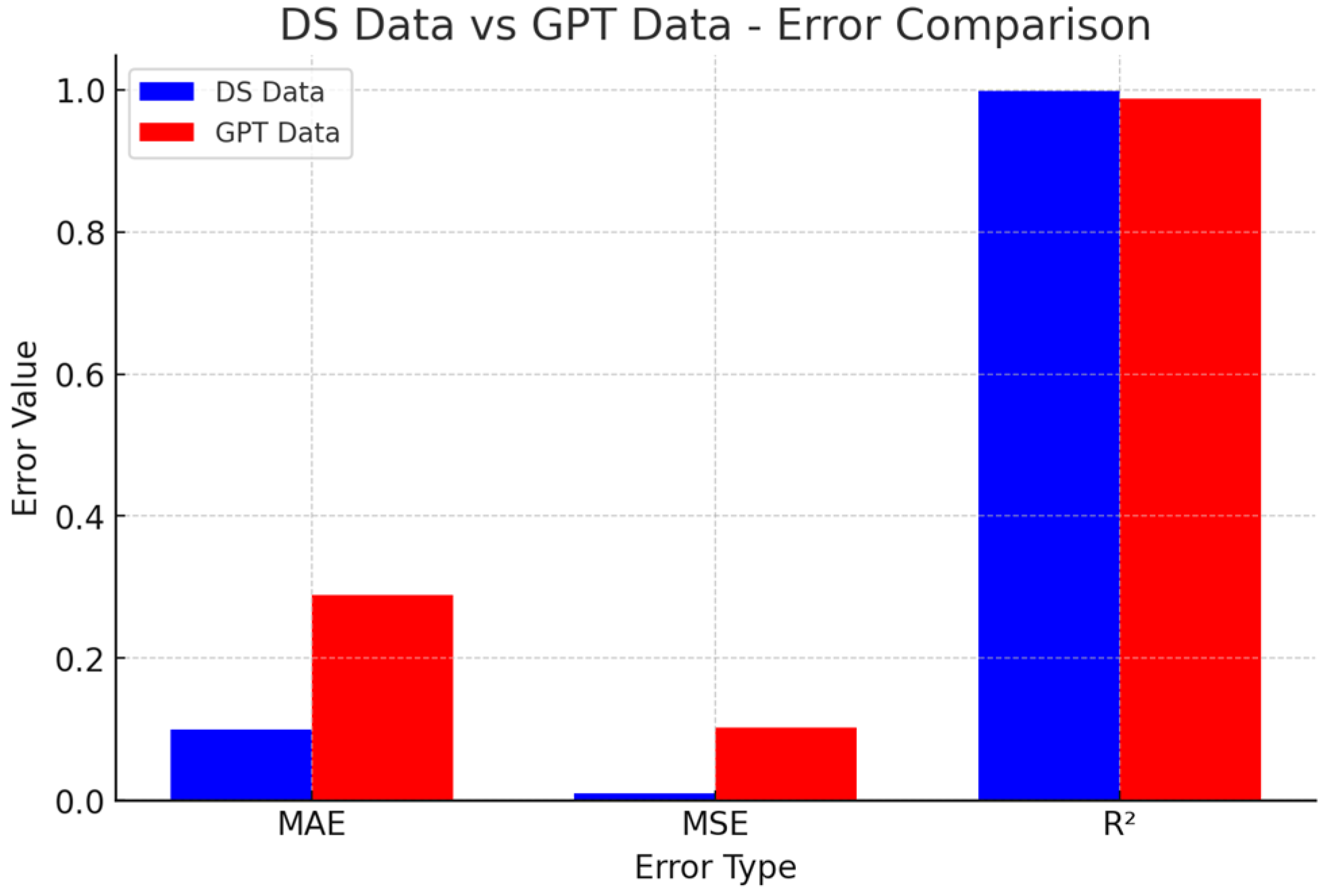

- No comparative studies have been conducted on the effectiveness of DeepSeek-v3 (DS) data versus GPT-generated data in material optimization.

- Probabilistic interpolation: generating additional data points by estimating values between known experimental samples;

- Monte Carlo simulation: using statistical sampling methods to synthesize realistic material properties;

- Generative Adversarial Networks (GANs): learning complex data distributions to generate synthetic yet highly realistic material datasets [23].
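Probabilistic interpolation, as listed above, can be illustrated as a random convex combination of two known experimental samples. The following is a minimal sketch assuming uniformly distributed interpolation weights; the function name `interpolate_samples` and the column order of the example rows are hypothetical, and this is not presented as the authors' implementation. The example rows are taken from the formulation table later in this document.

```python
import random

def interpolate_samples(samples, n_new, seed=0):
    """Create synthetic rows by random convex combination of known rows.

    samples: list of equal-length numeric rows (formulation + properties).
    For each new row, two distinct experimental rows a and b are drawn and
    a weight t ~ Uniform(0, 1) places the new point on the segment between
    them: (1 - t) * a + t * b.
    """
    if len(samples) < 2:
        raise ValueError("need at least two experimental samples")
    rng = random.Random(seed)  # seeded for reproducibility
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(samples, 2)  # two distinct experimental rows
        t = rng.random()               # interpolation weight in [0, 1)
        synthetic.append([(1 - t) * x + t * y for x, y in zip(a, b)])
    return synthetic

# Hypothetical column order: softener, co-agent, carbon black, CaCO3, rebound
rows = [
    [70, 2.5, 40, 0, 33.0],
    [70, 3.0, 40, 5, 32.8],
    [70, 3.0, 45, 0, 32.0],
]
augmented = interpolate_samples(rows, n_new=5, seed=42)
```

Because every synthetic value lies between two observed values, this kind of interpolation cannot extrapolate beyond the experimental range; that limitation is one motivation for the sampling- and GAN-based alternatives listed above.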

1.4. Research Objectives and Contributions

- Construct a comprehensive Low-Temperature Sealing Material Database (LTSMD) that unifies material composition, physical properties and experimental performance metrics;

- Address key challenges in cryogenic sealing material design, such as limited sample availability and the need to balance multiple performance criteria (e.g., rebound rate, volume resistivity and acid resistance);

- Introduce data augmentation strategies, including probabilistic interpolation, ChatGPT-4o-generated data and DeepSeek-v3-enhanced data, to expand the design space and improve prediction robustness;

- Apply and compare multiple machine learning models (e.g., XGBoost, MLPRegressor) to perform multi-objective prediction and uncover nonlinear relationships between formulation and performance;

- Clarify the differences in predictive behavior between DeepSeek-v3 and GPT-generated data, thereby providing insight into their respective contributions to materials modeling;

- Provide a validated, user-oriented tool for real-time querying, analysis and decision-making, enabling more efficient material screening and optimization.

2. Materials and Methods

2.1. Materials and Experiment

2.2. Data Collection

2.3. Data Preprocessing and Augmentation

2.3.1. Data Preprocessing

- Column name standardization: removing extra spaces and replacing special characters to ensure format consistency;

- Missing data handling: missing values were filled using forward filling to maintain data continuity;

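- Data normalization: to eliminate the impact of different variable scales, min-max scaling was applied using the following formula:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the variable, so that every scaled variable lies in $[0, 1]$.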
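The three preprocessing steps above can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' code: the function names are hypothetical, the exact character-replacement rule for column names is an assumption, and in practice a dataframe library (for example, pandas' forward-fill) would more likely be used.

```python
import re

def standardize_name(name):
    """Trim surrounding spaces and replace runs of special characters with '_'."""
    cleaned = re.sub(r"[^0-9A-Za-z]+", "_", name.strip())
    return cleaned.strip("_")

def forward_fill(column):
    """Fill None with the last observed value; leading gaps remain None."""
    filled, last = [], None
    for value in column:
        if value is None:
            value = last
        filled.append(value)
        last = value
    return filled

def min_max_scale(column):
    """Apply x' = (x - x_min) / (x_max - x_min), mapping values to [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0] * len(column)
    return [(v - lo) / (hi - lo) for v in column]

carbon_black = forward_fill([40, None, 45, None])  # -> [40, 40, 45, 45]
scaled = min_max_scale(carbon_black)               # -> [0.0, 0.0, 1.0, 1.0]
```

Note that forward filling cannot repair a missing value in the first row, so leading gaps must be handled separately before scaling.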

2.3.2. Data Augmentation

2.4. Database Construction (LTSMD)

2.4.1. Database Architecture and Data Import

2.4.2. Database Interface Overview

2.4.3. Database Functionality and Data Structure

- Material composition table: stores chemical composition and additive ratios;

- Physical properties table: includes properties such as density, hardness and modulus;

- Performance testing table: records experimental results of rebound resilience, volume resistivity and acid resistance.
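The three-table layout described above can be sketched as a relational schema. The storage engine, table names, and column names below are assumptions made for illustration (SQLite via Python's standard `sqlite3` module); the source does not specify how LTSMD is implemented.

```python
import sqlite3

# Hypothetical schema mirroring the three LTSMD tables described above.
SCHEMA = """
CREATE TABLE material_composition (
    sample_id TEXT PRIMARY KEY,
    rubber_phr REAL, softener_phr REAL, crosslink_coagent_phr REAL,
    carbon_black_phr REAL, calcium_carbonate_phr REAL
);
CREATE TABLE physical_properties (
    sample_id TEXT PRIMARY KEY REFERENCES material_composition(sample_id),
    density REAL, hardness REAL, modulus REAL
);
CREATE TABLE performance_tests (
    sample_id TEXT PRIMARY KEY REFERENCES material_composition(sample_id),
    rebound_resilience REAL, volume_resistivity REAL, acid_resistance REAL
);
"""

def build_db(path=":memory:"):
    """Create the tables in a new SQLite database and return the connection."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

def formulations_with_rebound_above(conn, threshold):
    """Join composition and test results; return rows above a rebound threshold."""
    sql = """
        SELECT c.sample_id, c.softener_phr, c.carbon_black_phr, p.rebound_resilience
        FROM material_composition AS c
        JOIN performance_tests AS p USING (sample_id)
        WHERE p.rebound_resilience > ?
        ORDER BY p.rebound_resilience DESC
    """
    return conn.execute(sql, (threshold,)).fetchall()
```

Keeping composition, physical properties, and test results in separate tables keyed by a shared sample identifier lets new test results be appended without duplicating formulation data; queries then join the tables on demand.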

2.5. Machine Learning Methods

- Independent optimization of each target: training separate models ensures that each performance indicator is optimized independently, avoiding potential interference between different targets;

- Better convergence and stability: multi-output models may introduce additional complexity, leading to slower convergence and possibly suboptimal solutions;

- Flexibility in feature selection: each target variable may have different key influencing factors, and using independent networks allows the feature selection and hyperparameters to be optimized separately for each target.
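The one-model-per-target strategy above can be sketched as follows. To keep the example dependency-free, a simple k-nearest-neighbour regressor stands in for the MLPRegressor and XGBoost models used in the study; the class name `PerTargetModels`, the feature subsets, and the values of `k` are hypothetical. The feature choices loosely follow the component effects listed in Section 3 (softener affects elasticity, calcium carbonate affects acid resistance).

```python
import math

def knn_predict(train_X, train_y, x, k):
    """Average the targets of the k nearest training rows (Euclidean distance)."""
    ranked = sorted((math.dist(row, x), y) for row, y in zip(train_X, train_y))
    nearest = ranked[:k]
    return sum(y for _, y in nearest) / len(nearest)

class PerTargetModels:
    """One independent model per target, each with its own features and k."""

    def __init__(self, config):
        # config: {target_name: {"features": [feature names], "k": int}}
        self.config = config
        self.data = {}

    def fit(self, rows):
        # rows: list of dicts holding both formulation inputs and targets
        for target, cfg in self.config.items():
            X = [[r[f] for f in cfg["features"]] for r in rows]
            y = [r[target] for r in rows]
            self.data[target] = (X, y)
        return self

    def predict(self, row):
        # Each target is predicted only from its own feature subset.
        out = {}
        for target, cfg in self.config.items():
            X, y = self.data[target]
            x = [row[f] for f in cfg["features"]]
            out[target] = knn_predict(X, y, x, cfg["k"])
        return out

config = {
    "rebound": {"features": ["softener", "coagent", "carbon_black"], "k": 1},
    "acid": {"features": ["caco3"], "k": 1},
}
```

Because each target keeps its own feature list and hyperparameters, changing the configuration for one performance indicator leaves the others untouched, which is the independence argued for above.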

2.6. Data Augmentation and Expansion Methods

2.6.1. Interpolation Method

2.6.2. Deep Learning-Based Data Augmentation

- Generator: produces synthetic data samples;

- Discriminator: differentiates between real and generated samples.
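The generator and discriminator described above are conventionally trained against each other through the standard GAN minimax objective. The text does not state which loss the authors used, so the following is only the textbook formulation, with $x$ denoting a real formulation/property vector and $z$ a random noise vector (notation assumed here):

$$\min_{G} \max_{D} \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]$$

The discriminator $D$ is pushed to output values near 1 for real samples and near 0 for generated samples $G(z)$, while the generator $G$ is pushed to produce samples that the discriminator scores as real.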

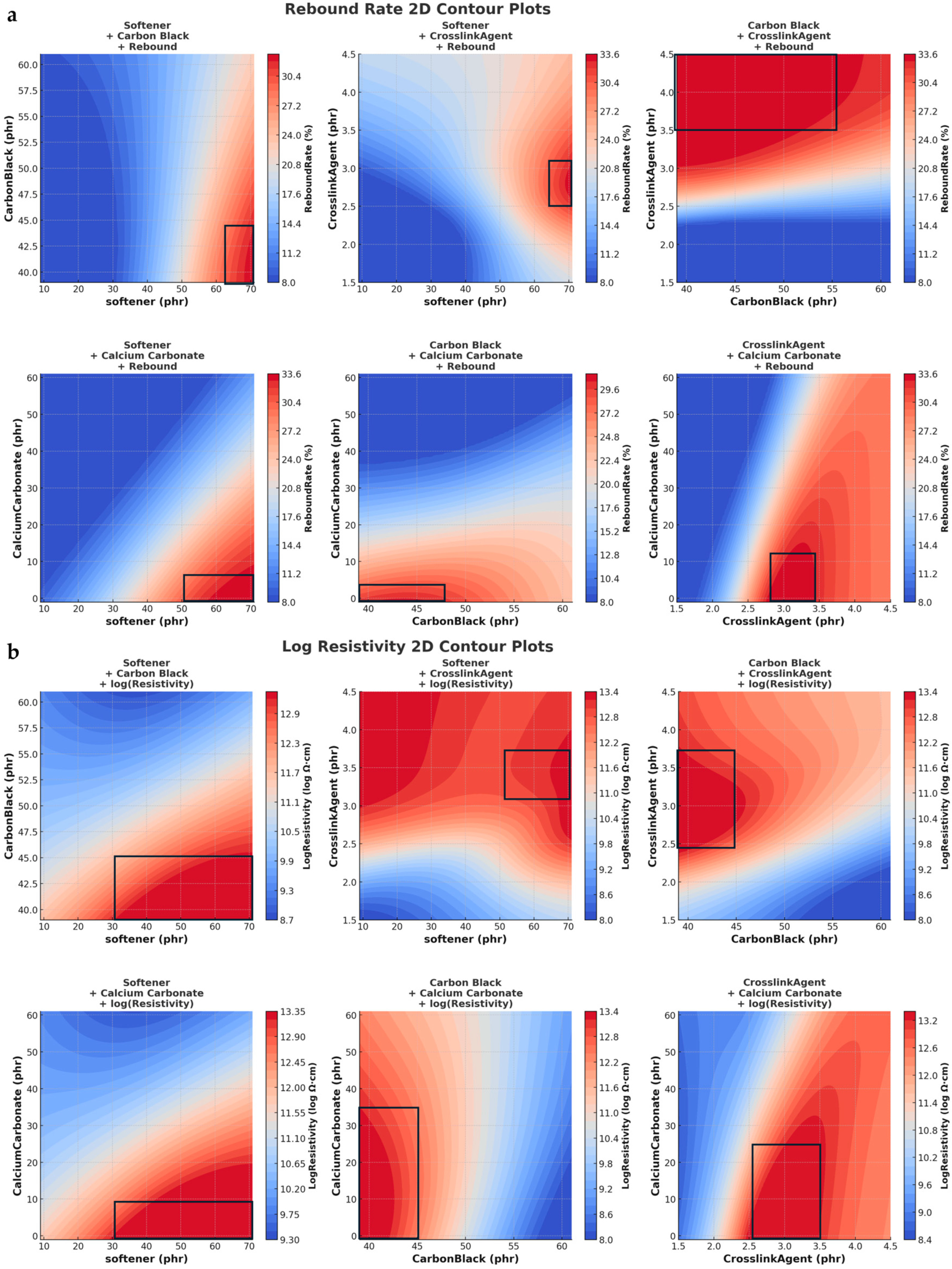

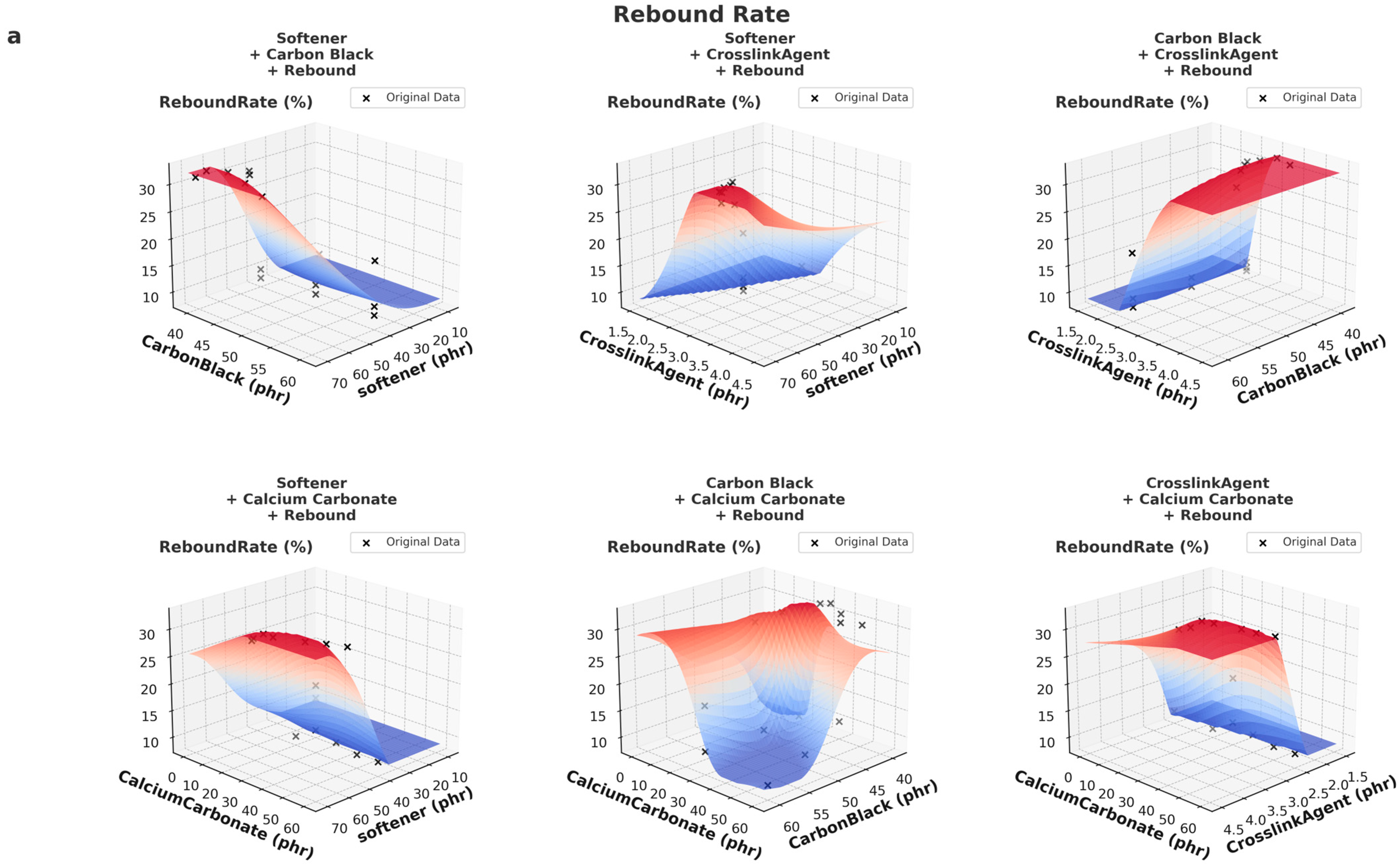

3. Experiments and Results

- Softener: affects elasticity;

- Carbon black: influences electrical conductivity;

- Crosslinking co-agent: impacts crosslink density;

- Calcium carbonate: affects acid resistance.

- Low-temperature rebound: reflects the material’s ability to recover elasticity at extremely low temperatures.

- Volume resistivity: measures the material’s resistance to electrical conduction (the reciprocal of electrical conductivity).

- Acid resistance: indicates the material’s stability in an acidic environment, quantified in the experimental data as mass change (%) after exposure.

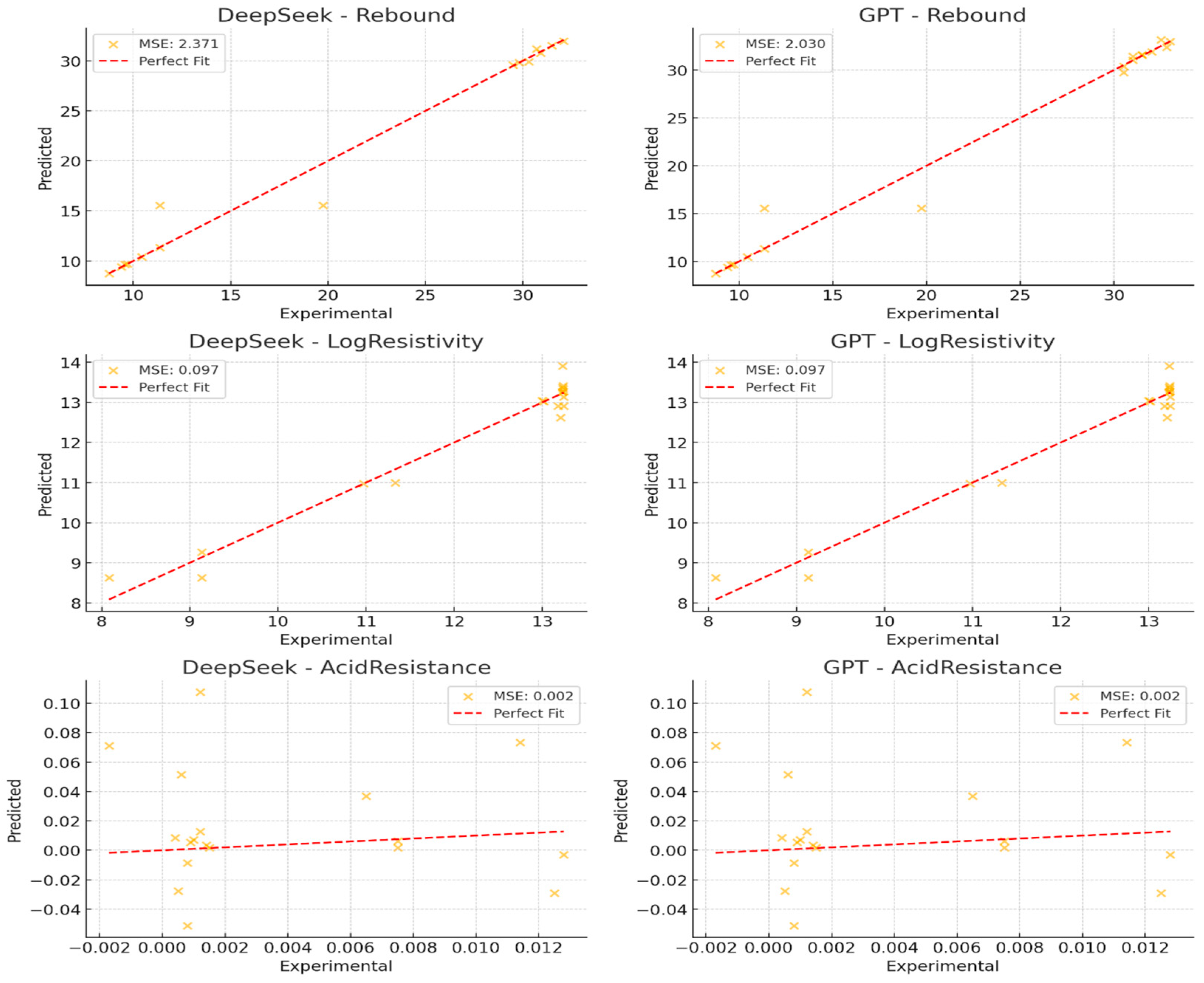

3.1. Forecast Result Analysis

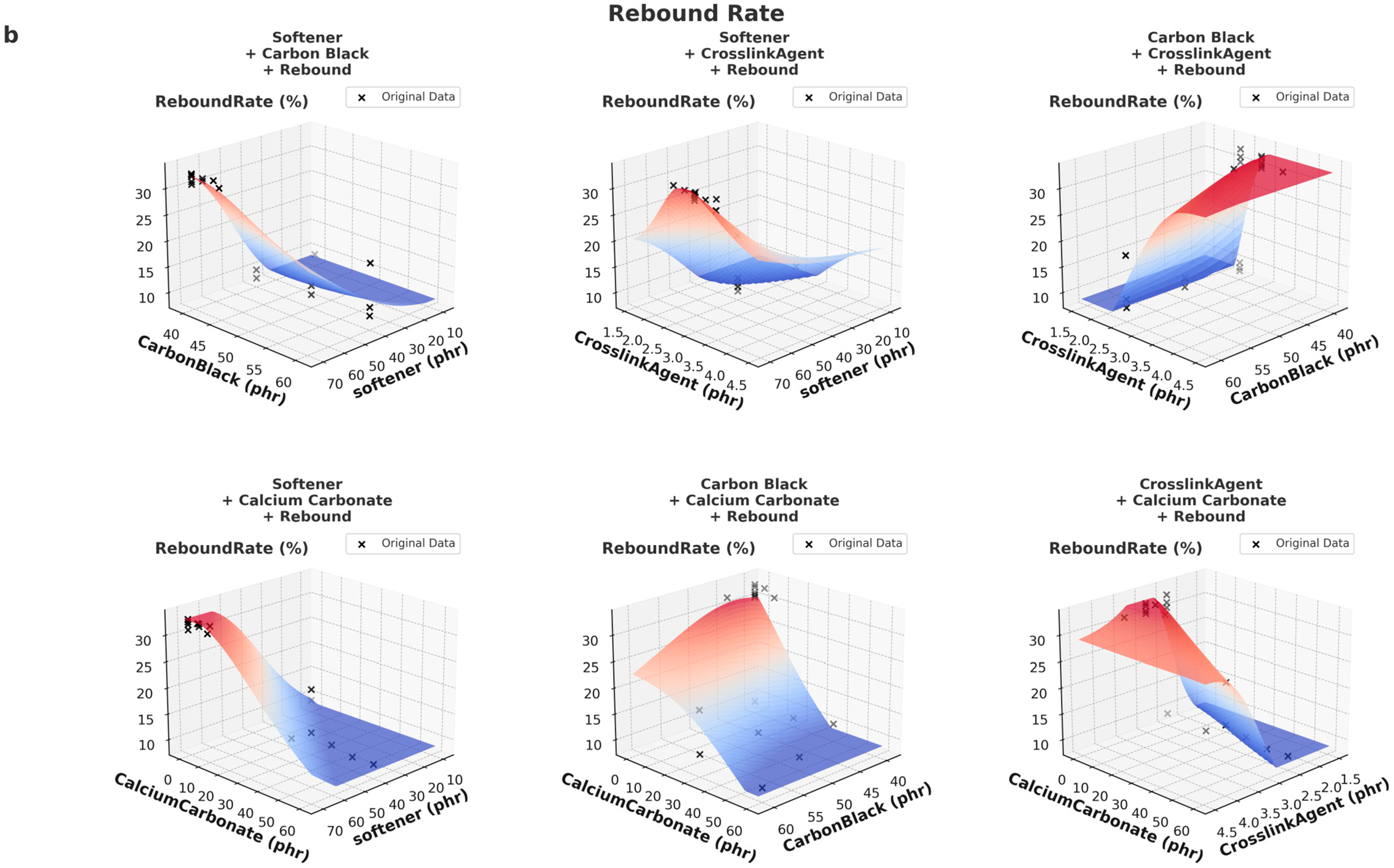

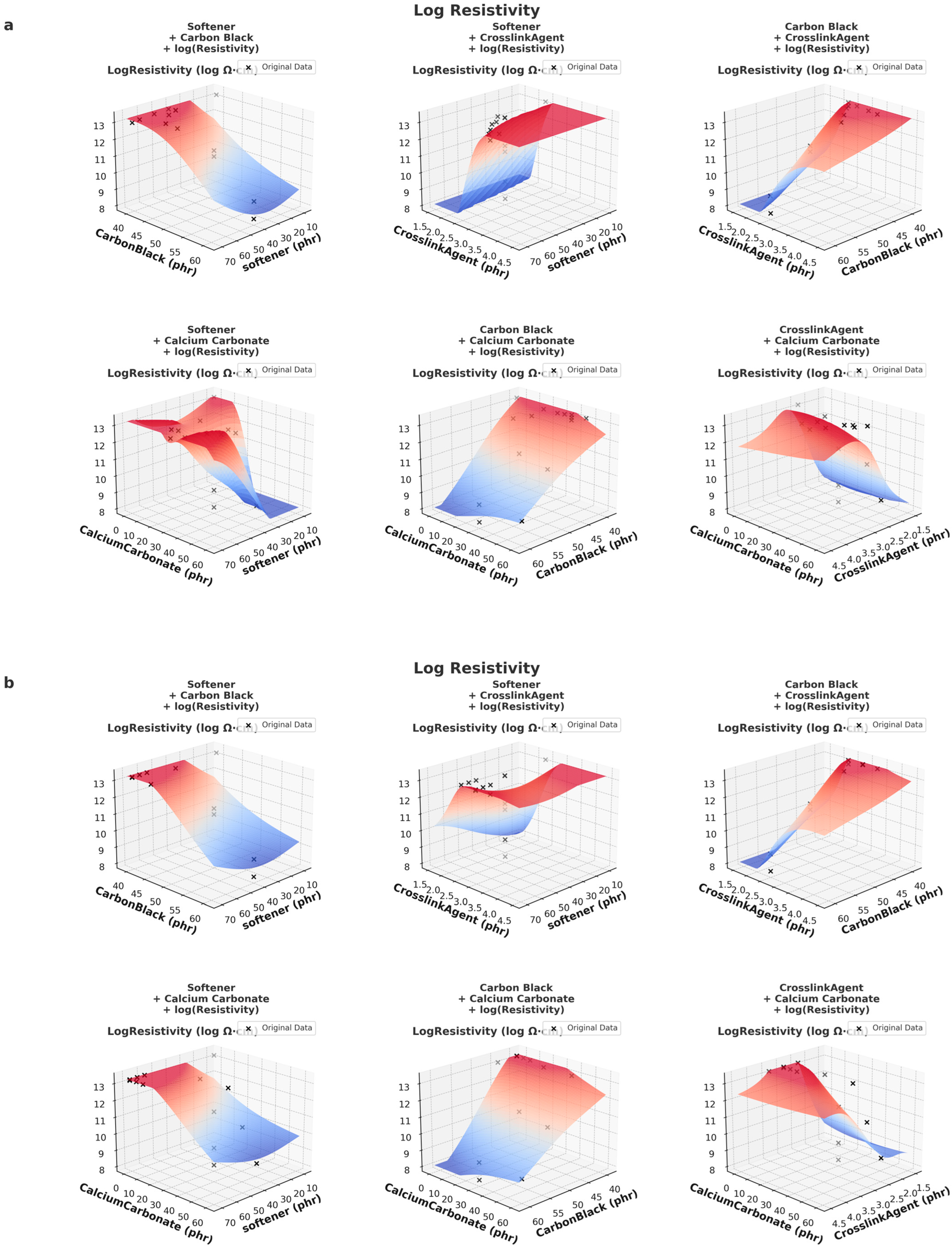

3.2. Key 3D Prediction Analysis

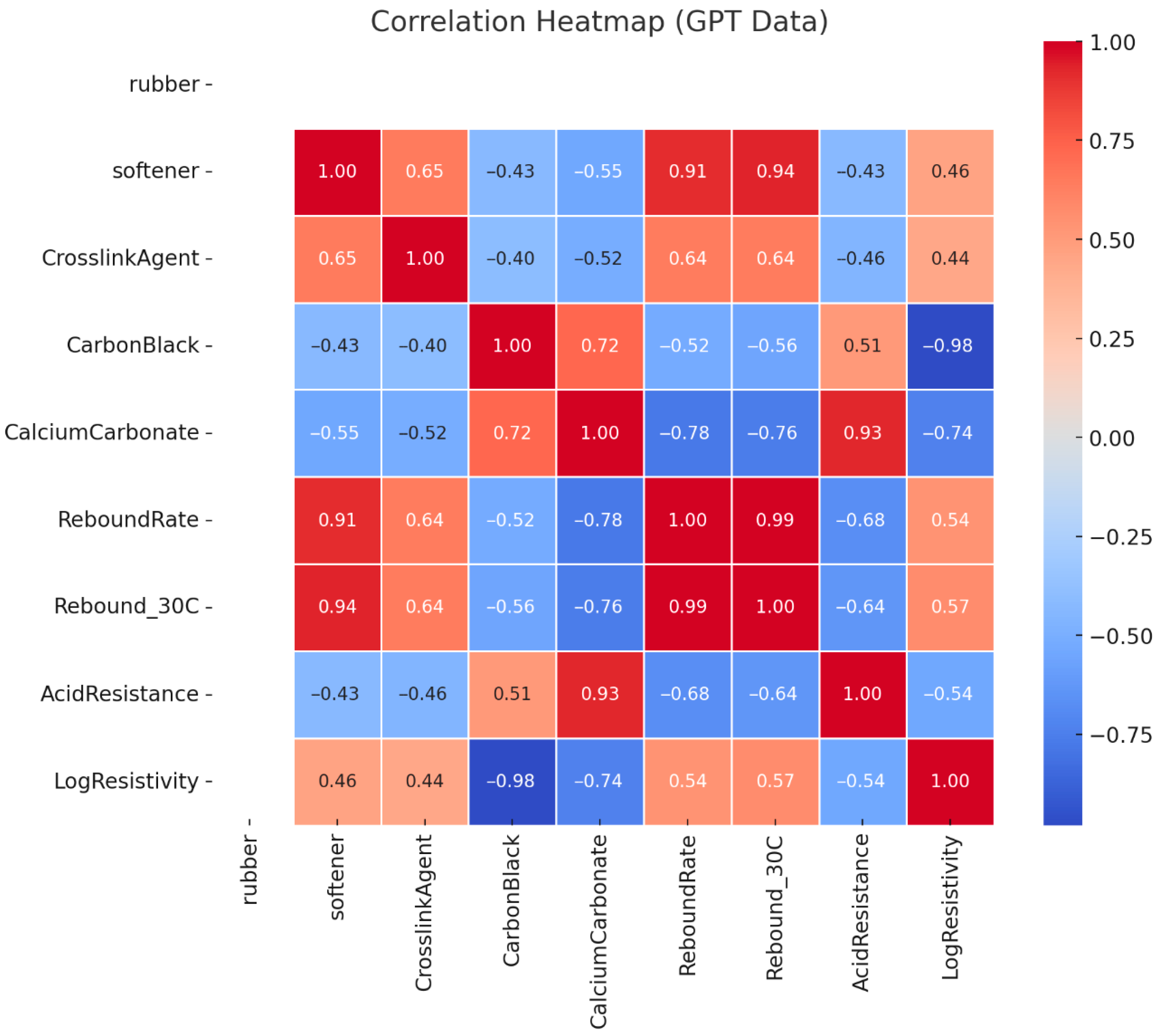

3.3. Data Relationship Distribution Diagram Analysis

4. Discussion

4.1. Applicability of Machine Learning Models

4.2. Impact of Data Characteristics on Model Prediction

4.3. Future Directions for Improvement

- (1) Improving data quality and enhancing model robustness

- (2) Exploring more advanced deep learning architectures

- (3) Optimizing feature engineering and variable selection

4.4. Summary of Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLM | large language model |
| DS | DeepSeek-v3 |
| GPT | ChatGPT |
| EPDM | Ethylene Propylene Diene Monomer |
| PTFE | Polytetrafluoroethylene |
| MLP | Multilayer Perceptron |
| LTSMD | Low-Temperature Sealing Material Database |
| GNNs | Graph Neural Networks |
| FEA | Finite Element Analysis |
| LASSO | Least Absolute Shrinkage and Selection Operator |
| SHAP | SHapley Additive exPlanations |
| PCA | Principal Component Analysis |
| SPIC | State Power Investment Corporation |
| EBT | EPDM-Based Trial Rubber (K9330M by Mitsui Chemicals) |
| PAO | Polyalphaolefin |
| Viscort#230 | Commercial crosslinking co-agent supplied by Osaka Organic Chemical Industry Ltd.; enhances crosslink density and improves mechanical performance. |
| PERHEXA-C/PERCUMYL-D | Organic peroxide curing agents produced by NOF Corporation; used for thermal vulcanization of EPDM rubber. |
| SPHERON 5200 | GPF-grade conductive carbon black manufactured by Cabot Japan K.K.; used to adjust electrical conductivity and mechanical reinforcement in rubber compounds. |
| phr | parts per hundred rubber |

References

- [1] Ellis, M.; Von Spakovsky, M.; Nelson, D. Fuel cell systems: Efficient, flexible energy conversion for the 21st century. Proc. IEEE 2001, 89, 1808–1818.

- [2] Abdelkareem, M.A.; Elsaid, K.; Wilberforce, T.; Kamil, M.; Sayed, E.T.; Olabi, A. Environmental aspects of fuel cells: A review. Sci. Total Environ. 2021, 752, 141803.

- [3] Wang, Y.; Chen, K.S.; Mishler, J.; Cho, S.C.; Adroher, X.C. A review of polymer electrolyte membrane fuel cells: Technology, applications, and needs on fundamental research. Appl. Energy 2011, 88, 981–1007.

- [4] Bockris, J.O. The hydrogen economy: Its history and prospects. Int. J. Hydrogen Energy 2013, 38, 2579–2588.

- [5] Tan, X.; Li, H. A Review of Sealing Systems for Proton Exchange Membrane Fuel Cells. World Electr. Veh. J. 2023, 15, 358.

- [6] Gittleman, C.S.; Haugh, J.R.; Hirsch, D.F. Elastomer seals for fuel cell applications: Performance and degradation mechanisms. J. Power Sources 2015, 282, 493–501.

- [7] Zhou, Y.; Li, Y.; Zhang, J. A review on the sealing structure and materials of fuel cell stacks. Clean Energy 2023, 7, 59–74.

- [8] Lange, F.F. Thermal and chemical stability of elastomeric seals in hydrogen environments. J. Mater. Chem. A 2019, 7, 14250–14261.

- [9] Smart, B.E.; Fernandez, R.E. Fluorocarbon Elastomers. In Kirk-Othmer Encyclopedia of Chemical Technology, 4th ed.; Kroschwitz, J.I., Ed.; Wiley-Interscience: Hoboken, NJ, USA, 2000.

- [10] Tan, J.; Chao, Y.J.; Wang, H.; Gong, J.; Van Zee, J. Chemical and mechanical stability of EPDM in a PEM fuel cell environment. Polym. Degrad. Stab. 2009, 94, 2072–2078.

- [11] Zhang, Y.; Zhang, Q.; Zhou, C.; Zhou, Y. Mechanical properties of PTFE coated fabrics. J. Reinf. Plast. Compos. 2010, 29, 3624–3632.

- [12] Chen, Y. Advances in UV-curable adhesives for fuel cell applications. Adv. Funct. Mater. 2020, 30, 2001259.

- [13] Lee, S.; Park, J. High-performance sealing materials for proton exchange membrane fuel cells. J. Appl. Polym. Sci. 2018, 135, 46677.

- [14] Sarang, P. Effect of oxidative aging on the performance of rubber-based fuel cell seals. Energy Environ. Sci. 2021, 14, 1492–1505.

- [15] Himabindu, V.; Kumar, R.R. Hydrogen energy and fuel cells: A clean energy solution. Renew. Sustain. Energy Rev. 2019, 101, 209–225.

- [16] Wang, J.; Li, S.; Yang, L.; Liu, B.; Xie, S.; Qi, R.; Zhan, Y.; Xia, H. Graphene-based hybrid fillers for rubber composites. Molecules 2024, 29, 1009.

- [17] Ma, Y.; Ding, A.; Dong, X.; Zheng, S.; Wang, T.; Tian, R.; Wu, A.; Huang, H. Low-temperature resistance of fluorine rubber with modified Si-based nanoparticles. Mater. Today Commun. 2022, 33, 104947.

- [18] Blaiszik, B.J.; Kramer, S.L.B.; Olugebefola, S.C.; Moore, J.S.; Sottos, N.R.; White, S.R. Self-Healing Polymers and Composites. Annu. Rev. Mater. Res. 2010, 40, 179–211.

- [19] Pihosh, Y.; Biederman, H.; Slavinska, D.; Kousal, J.; Choukourov, A.; Trchova, M.; Mackova, A.; Boldyryeva, A. Composite SiOx/fluorocarbon plasma polymer films prepared by R.F. magnetron sputtering of SiO2 and PTFE. Vacuum 2006, 81, 38–44.

- [20] Batra, R.; Dai, H.; Huan, T.D.; Chen, L.; Kim, C.; Gutekunst, W.R.; Song, L.; Ramprasad, R. Polymers for extreme conditions designed using syntax-directed variational autoencoders. arXiv 2020, arXiv:2011.02551.

- [21] Schmidt, J.; Marques, M.R.G.; Botti, S.; Marques, M.A.L. Recent advances and challenges in machine learning for materials science. Npj Comput. Mater. 2019, 5, 83.

- [22] Butler, K.T.; Davies, D.W.; Cartwright, H.; Isayev, O.; Walsh, A. Machine learning for molecular and materials science. Nature 2018, 559, 547–555.

- [23] Cai, W. Enhancing materials discovery through generative adversarial networks: A case study in fuel cell sealing materials. Comput. Mater. Sci. 2021, 184, 109874.

- [24] Pirayeh Gar, S.; Zhong, A.; Jacob, J. Machine Learning Aided Optimization of Non-Metallic Seals in Downhole Tools. Eng. Model. Anal. Simul. 2024, 1.

- [25] Jaunich, M.; Wolff, D.; Stark, W. Investigating Low-temperature Properties of Rubber Seals—13020. In Proceedings of the WM2013 Conference, Phoenix, AZ, USA, 24–28 February 2013.

- [26] Su, C.; Ding, Y.; Yang, C.; He, P.; Ke, Y.; Tian, Y.; Zhang, W.; Zhu, L.; Wu, C. Formula Design of EBT EPDM with Low-temperature Resistance and Ultra-low Compression Set. Rubber Sci. Technol. 2024, 22, 15–19.

- [27] Meng, T. Research progress of low-temperature resistant rubber materials. Rubber Plast. Resour. Util. 2014, 1, 16–18.

- [28] Pyzer-Knapp, E.O.; Manica, M.; Staar, P.; Morin, L.; Ruch, P.; Laino, T.; Smith, J.R.; Curioni, A. Foundation models for materials discovery—Current state and future directions. Npj Comput. Mater. 2025, 11, 61.

- [29] Tran, H.; Gurnani, R.; Kim, C.; Pilania, G.; Kwon, H.-K.; Lively, R.P.; Ramprasad, R. Design of functional and sustainable polymers assisted by artificial intelligence. Nat. Rev. Mater. 2024, 9, 866.

| Material | Applications | Limitations |
|---|---|---|
| Silicone Rubber | Used in cryogenic storage, aerospace and medical devices [8] | Degrades under prolonged exposure to acidic environments |
| Fluoroelastomers | Suitable for aggressive chemical environments in automotive and aerospace applications [9] | High cost limits large-scale application |
| Ethylene Propylene Diene Monomer (EPDM) | Widely used in PEM fuel cells and electrical insulation [10] | Lower chemical resistance compared to fluorinated materials |
| Polytetrafluoroethylene (PTFE) | Applied in chemical processing and corrosion-resistant gaskets [11] | Low mechanical strength; often requires structural reinforcement with other materials |

| Sample | Substrate | Compression Stress Relaxation (%) | Compression Set (%) | Rebound Resilience at Room Temperature (23 °C) (%) | Rebound Resilience at −40 °C (%) | Rebound Resilience at −30 °C (%) | Rebound Resilience at −20 °C (%) | Acid Resistance (Mass Change, %) | Rebound Resilience After High-Temperature/High-Humidity Aging (90 °C/100%) (%) | Rebound Resilience After −40 °C Damp Heat Aging (%) | Surface Resistivity (Ω/cm²) | Volume Resistivity (Ω·cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EBT-1 | EBT (K9330M) | 10.36 | 8.591 | 48.83 | 12.20 | 19.90 | 27.14 | −0.15 | 49.04 | 10.92 | 1.95 × 10¹⁰ | 3.31 × 10⁹ |
| EBT-2 | EBT (K9330M) | 11.1 | 8.938 | 51.77 | 9.04 | 20.77 | 28.24 | −0.15 | 52.41 | 10.11 | 3.42 × 10¹¹ | 5.79 × 10⁹ |
| EBT-3 | EBT (K9330M) | 11.34 | 8.938 | 53.93 | 9.73 | 26.17 | 31.87 | −0.19 | 53.39 | 8.94 | 7.79 × 10¹³ | 1.18 × 10¹³ |
| EBT-4 | EBT (K9330M) | 13.07 | 14.196 | 55.52 | 10.35 | 25.28 | 30.71 | −0.18 | 54.91 | 10.57 | 8.09 × 10¹³ | 1.80 × 10¹³ |

| Rubber | Softener | Crosslink Agent | Carbon Black | Calcium Carbonate | Rebound | Acid Resistance | log(Resistivity) |
|---|---|---|---|---|---|---|---|
| 100 | 70 | 2.5 | 40 | 0 | 33 | 0.12 | 1.70 × 10¹³ |
| 100 | 70 | 3 | 40 | 5 | 32.8 | 0.09 | 1.68 × 10¹³ |
| 100 | 70 | 3 | 40 | 0 | 32.5 | 0.1 | 1.70 × 10¹³ |
| 100 | 70 | 3 | 45 | 0 | 32 | 0.12 | 1.50 × 10¹³ |
| 100 | 70 | 3 | 40 | 10 | 31.5 | 0.14 | 1.60 × 10¹³ |
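Volume resistivity values in the tables above span roughly four orders of magnitude (about 10⁹ to 10¹³ Ω·cm), and the formulation table labels the column log(Resistivity). A common practice, assumed here rather than confirmed by the text, is to fit models on the base-10 logarithm and convert predictions back afterwards. The helper names below are hypothetical.

```python
import math

def to_log10(values):
    """Map positive resistivities (e.g. in ohm·cm) to a log10 scale for regression."""
    if any(v <= 0 for v in values):
        raise ValueError("resistivity must be positive")
    return [math.log10(v) for v in values]

def from_log10(values):
    """Invert the transform to report predictions in the original units."""
    return [10 ** v for v in values]

# Volume resistivities of EBT-1 and EBT-4 from the performance table
log_rho = to_log10([3.31e9, 1.80e13])  # approx. [9.52, 13.26]
```

On the log scale a prediction error of 0.1 means roughly a 26% relative error regardless of magnitude, so samples near 10⁹ and near 10¹³ Ω·cm are weighted comparably during training.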

| Performance | Data Source | Softener | Carbon Black | Crosslinking Co-Agent | Calcium Carbonate |
|---|---|---|---|---|---|
| Low-Temperature Rebound Rate | ChatGPT | 60–70 | 40–47.5 | 2.75–3.25 | 0–10 |
|  | DeepSeek-v3 | 60–70 | 40–45 | 3–3.5 | 30–35 |
| Volume Resistivity | ChatGPT | 10–20 | 40–45 | 2.5–3.5 | 0–10 |
|  | DeepSeek-v3 | 50–70 | 40–45 | 3–3.5 | 40–50 |
| Acid Resistance | ChatGPT | 60–70 | 40–45 | 2.75–4.5 | 0–5 |
|  | DeepSeek-v3 | 60–70 | 45–50 | 2.5–3.5 | 0–10 |
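One way to turn the component ranges above into candidate formulations is Monte Carlo sampling, one of the augmentation approaches listed in Section 1.3. The sketch below assumes independent uniform distributions within each range and encodes only the ChatGPT row for low-temperature rebound rate; the dictionary keys and the function name are hypothetical, and the source does not state that the authors sampled the ranges this way.

```python
import random

# Hypothetical encoding of one row of the range table above
# (ChatGPT-suggested ranges for low-temperature rebound rate).
RANGES = {
    "softener": (60.0, 70.0),
    "carbon_black": (40.0, 47.5),
    "crosslinking_coagent": (2.75, 3.25),
    "calcium_carbonate": (0.0, 10.0),
}

def sample_formulations(ranges, n, seed=0):
    """Draw n candidate formulations, sampling each component uniformly
    and independently within its (low, high) range."""
    rng = random.Random(seed)  # seeded for reproducibility
    return [
        {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        for _ in range(n)
    ]

candidates = sample_formulations(RANGES, n=1000, seed=0)
```

The sampled candidates would then be scored by the trained per-target models; independent uniform sampling ignores any correlation between components, which is a simplifying assumption.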


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jia, H.; Tai, Z.; Lyu, R.; Ishikawa, K.; Sun, Y.; Cao, J.; Ju, D. Low-Temperature Sealing Material Database and Optimization Prediction Based on AI and Machine Learning. Polymers 2025, 17, 1233. https://doi.org/10.3390/polym17091233
