1. Introduction

In recent years, perovskite materials have attracted widespread attention in solid oxide fuel cell (SOFC) research owing to their mixed ionic–electronic conductivity (MIEC), excellent catalytic activity for the oxygen reduction reaction (ORR), highly tunable chemical composition, and outstanding thermodynamic and chemical stability [1,2,3,4,5,6,7]. SOFCs operate under harsh conditions (temperatures of 600–1000 °C, repeated redox cycling, and exposure to toxic fuel impurities), so material stability is crucial: only a highly stable material can ensure the safety and longevity of the cell [8,9]. The chemical composition of ABO3-type perovskite structures can be tailored by hetero-ion doping at the A- and B-sites (e.g., with Sr or Ni), thereby enhancing properties such as electrical conductivity, catalytic performance, and stability [10,11,12]. Therefore, the development of doped perovskite materials holds significant scientific importance and practical value.

Traditional materials discovery requires extensive experimentation, making it a time-consuming and costly process [13]. Computational methods provide an attractive alternative for exploring a wider design space. Density functional theory (DFT) offers strong predictive capability; however, its high computational cost makes exhaustive high-throughput screening over vast compositional and configurational spaces challenging [14]. In addition to conventional high-throughput DFT calculations, global structure prediction frameworks such as the USPEX code [15,16] have been successfully employed to explore complex energy landscapes, predict stable crystal structures, and discover new compounds, including perovskite-type oxides. These evolutionary-algorithm-based approaches provide an essentially unbiased and accurate search over structural and compositional space, but their computational cost remains substantial when thousands of doped compositions or large design spaces must be systematically evaluated.

With the rapid development of artificial intelligence (AI) in materials science, machine-learning-driven approaches have shown tremendous potential in predicting and designing the properties of perovskite materials [17,18]. Li et al. compared multiple machine learning algorithms for predicting the formation energies of perovskites and demonstrated that ensemble strategies based on random forests, support vector machines, and neural networks can effectively improve predictive accuracy. These models also predicted related physical properties, such as cell volume and thermodynamic stability, with good generalization performance [19]. Emery et al. developed property prediction models based on DFT data for multiple ABX3 perovskite systems and systematically analyzed their formation energies, thermodynamic stability, and oxygen vacancy formation energies [20]. These studies highlight the promise of data-driven models as efficient surrogates for accelerating perovskite materials discovery.

Although machine learning has made significant progress in perovskite property prediction, most existing works are still limited to conventional ABO3-type compositions. In contrast, doped perovskites, which offer greater tunability and performance potential, are more relevant for practical SOFC applications yet remain underexplored. On the one hand, doped structures are severely underrepresented in existing databases and suffer from typical issues such as small sample sizes and imbalanced composition distributions. On the other hand, their higher structural and chemical complexity leads to larger discrepancies in feature distributions between simple and doped systems, increasing the difficulty of model training. As a result, traditional supervised learning models often perform poorly in such small-sample and distribution-shift scenarios, tending to overfit and exhibiting limited generalization [21]. In this context, there is a strong need for an efficient and robust framework that can exploit knowledge learned from large datasets of simple perovskites and transfer it to complex doped systems, thereby providing a fast and scalable pre-screening tool that is complementary to high-cost first-principles and evolutionary structure search methods such as USPEX.

Small-sample learning strategies, particularly transfer learning (TL), offer new ways to address these challenges and improve the prediction accuracy for complex material structures. The core concept of TL is to transfer the knowledge learned from a “source domain” with abundant data to a “target domain” with limited data, enabling good model performance even under small-data conditions [22,23,24]. In recent years, transfer learning has shown great potential in materials modeling tasks. Lee and Asahi [25] applied transfer learning to crystal graph convolutional neural networks (CGCNN). By first training on a large dataset (source domain) and then transferring the learned structure–property mapping to a small dataset (target domain), they significantly improved the predictive accuracy under small-sample conditions. They also verified that the transfer strategy was effective across different crystal systems, such as metal oxides and nitrides, offering a feasible route for deploying deep graph neural networks in materials science. Moreover, Zhou et al. [26] proposed a center–environment embedding mechanism, enabling cross-structure transfer learning from spinel oxides to perovskite oxides. Despite the scarcity of data for the target system (perovskites), the model maintained low prediction errors, demonstrating its effectiveness in capturing local structural correlations across different crystal systems. This provides a promising direction for knowledge transfer between complex structures, especially for structure–property prediction under small-data scenarios.

In summary, this work proposes a transfer learning strategy for predicting the formation energy of perovskite materials across multiple structural types. ABO3-type structures are used as the source domain, while doped structures are treated as the target domain. By constructing a unified feature representation for element and structure information, we first train a deep neural network model on the source domain and then transfer its learned weights to the small-sample target structures. This approach significantly improves prediction performance under data-scarce conditions. The proposed method is expected to provide a reference solution for small-data modeling in materials science and to accelerate the screening and design of complex doped perovskite materials. As illustrated in Figure 1, the overall workflow of this study includes data preprocessing, model training, and weight transfer, offering a clear framework for applying transfer learning in perovskite property prediction.

2. Methods

2.1. Dataset Preparation

To construct a representative multi-structure perovskite dataset, we select four typical perovskite structure types (the parent ABO3 structure and its doped derivatives) from the publicly available Materials Project database [27]. These structures are ABO3, A2B′BO6, AA′B2O6, and AA′BB′O6. A2B′BO6 is structurally equivalent to AB0.5B′0.5O3, in which the B-site is half-substituted by B′. Similarly, AA′B2O6 represents a configuration in which the A-site is half occupied by a dopant cation A′, while AA′BB′O6 incorporates dopants at both the A- and B-sites, forming a doubly substituted perovskite structure. The number of entries for each structure type is given in Table 1.

The data selection process followed these principles:

Valid Formation Energy Labels: Each sample must include a valid formation energy label (eV/atom) to ensure that each data point has a reliable target value.

Chemical Formula Duplication Handling: When records with the same chemical formula are found, only the sample with the smallest formation energy is retained to ensure that the most stable structure is selected.

Perovskite Material Scope [

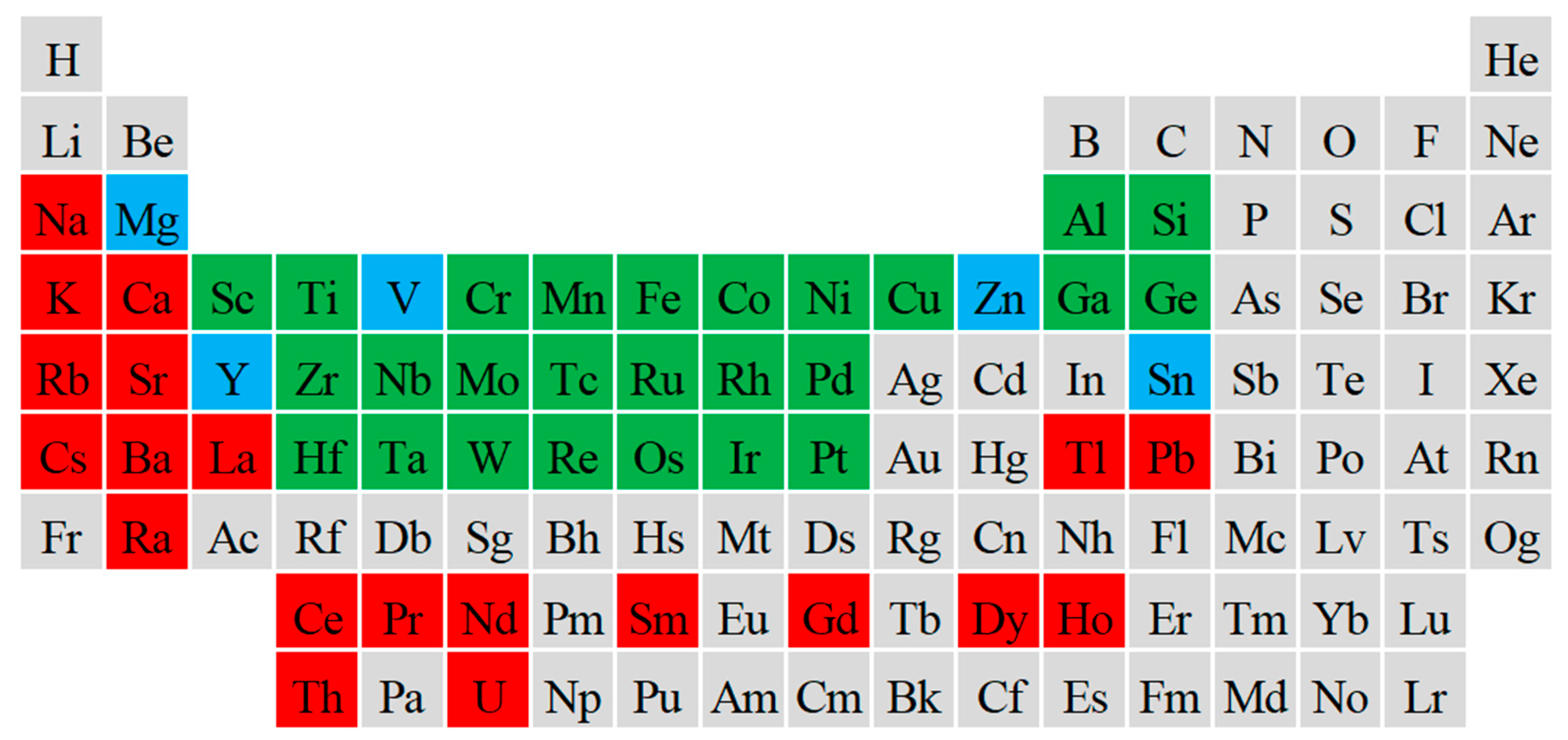

28]: In this study, the A-site elements are limited to the following candidate elements: “Ba”, “Ca”, “Cd”, “Ce”, “Cs”, “K”, “La”, “Na”, “Nd”, “Pb”, “Ra”, “Rb”, “Sm”, “Sr”, “Th”, “Tl”, “U”, “Y”, “Pr”, “Zn”, “Dy”, “Gd”, “Ho”, “Sn”, “Mg”, “Er”. The B-site elements are limited to the following candidate elements: “Al”, “Co”, “Cr”, “Cu”, “Fe”, “Ga”, “Ge”, “Hf”, “Ir”, “Mg”, “Mn”, “Mo”, “Nb”, “Ni”, “Os”, “Pd”, “Pt”, “Re”, “Rh”, “Ru”, “Sc”, “Si”, “Sn”, “Ta”, “Tc”, “Ti”, “V”, “W”, “Y”, “Zn”, “Zr”, “Nd”. Additionally, the stoichiometric ratio of the sample must satisfy A:B:O = 1:1:3, ensuring that the selected materials belong to the perovskite category.The elements used in this work are shown in

Figure 2.
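The three selection rules can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout (`formula`, `a_site`, `b_site`, `n_oxygen`, `formation_energy`), the function name `select_records`, and the truncated whitelists are hypothetical, and the energies in `demo` are placeholder numbers rather than database values.

```python
from math import isfinite

# Truncated, illustrative subsets of the A- and B-site whitelists given above.
A_SITE = {"Ba", "Ca", "Sr", "La"}
B_SITE = {"Ti", "Zr", "Hf", "Sc"}

def select_records(records):
    """Apply the three selection rules; return one record per chemical formula."""
    best = {}
    for rec in records:
        energy = rec.get("formation_energy")
        # Rule 1: require a finite numeric formation-energy label (eV/atom).
        if not isinstance(energy, (int, float)) or not isfinite(energy):
            continue
        a_counts, b_counts = rec["a_site"], rec["b_site"]
        n_a, n_b = sum(a_counts.values()), sum(b_counts.values())
        # Rule 3: whitelisted site elements and A:B:O = 1:1:3.
        if not (set(a_counts) <= A_SITE and set(b_counts) <= B_SITE):
            continue
        if n_a == 0 or n_a != n_b or rec["n_oxygen"] != 3 * n_a:
            continue
        # Rule 2: among duplicate formulas keep the lowest formation energy.
        kept = best.get(rec["formula"])
        if kept is None or energy < kept["formation_energy"]:
            best[rec["formula"]] = rec
    return list(best.values())

# Placeholder records: the energies are made-up numbers, not database values.
demo = [
    {"formula": "SrTiO3", "a_site": {"Sr": 1}, "b_site": {"Ti": 1},
     "n_oxygen": 3, "formation_energy": -3.4},
    {"formula": "SrTiO3", "a_site": {"Sr": 1}, "b_site": {"Ti": 1},
     "n_oxygen": 3, "formation_energy": -3.5},
    {"formula": "BaSrHf2O6", "a_site": {"Ba": 1, "Sr": 1}, "b_site": {"Hf": 2},
     "n_oxygen": 6, "formation_energy": -3.7},
    {"formula": "CaZrO3", "a_site": {"Ca": 1}, "b_site": {"Zr": 1},
     "n_oxygen": 3, "formation_energy": None},   # fails rule 1
    {"formula": "SrTiO2", "a_site": {"Sr": 1}, "b_site": {"Ti": 1},
     "n_oxygen": 2, "formation_energy": -3.0},   # fails rule 3
]
selected = select_records(demo)
```

In this toy input, the duplicate SrTiO3 entry with the higher energy, the unlabeled record, and the off-stoichiometry record are all discarded.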

2.2. Feature Engineering

The features used in this study are primarily constructed from elemental characteristics and crystal structure information, including the basic physicochemical properties of the A- and B-site cations and derived structural factors. All physicochemical properties are extracted automatically with pymatgen, a Python materials modeling toolkit [29]. These elemental features are further used to derive structural descriptors, such as the tolerance factor. All elemental and structural features are then integrated and used as input to the neural network.

2.2.1. Elemental Property Feature Extraction

We use pymatgen to extract eight elemental property features for each of the A- and B-site cations: atomic number Z, atomic mass M, electronegativity χ, ionization energy I, ionic radius r, Mendeleev group number G, melting point Tm, and boiling point Tb.

To ensure consistent input dimensions across different structure types, we apply the following processing methods for the A- and B-site elements: If the A-site has no dopant element, the features of the A′-site are set to be the same as those of the A-site. Similarly, if the B-site has no dopant element, the features of the B′-site are set to be the same as those of the B-site. This ensures that the elemental feature vector always has a fixed dimension of 8 × 4 = 32.
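The mirroring rule described above can be sketched as follows. The function name `build_elemental_vector`, the concatenation order (A, A′, B, B′), and the `lookup` table are assumptions made for illustration; in practice the eight properties would come from pymatgen, whereas the values below are placeholders and not real element data.

```python
N_PROPS = 8  # Z, M, electronegativity, ionization energy, radius, group, T_melt, T_boil

def build_elemental_vector(a, b, lookup, a_prime=None, b_prime=None):
    """Concatenate A, A', B, B' descriptors; an undoped site mirrors its host."""
    a_prime = a if a_prime is None else a_prime
    b_prime = b if b_prime is None else b_prime
    vector = []
    for element in (a, a_prime, b, b_prime):
        props = lookup[element]
        if len(props) != N_PROPS:
            raise ValueError(f"{element}: expected {N_PROPS} properties")
        vector.extend(props)
    return vector  # always 8 x 4 = 32 entries

# Placeholder descriptor table (NOT real element data): eight dummy numbers each.
toy_lookup = {name: [float(i)] * N_PROPS
              for i, name in enumerate(["Ba", "Sr", "Hf", "Sc"], start=1)}

undoped = build_elemental_vector("Sr", "Hf", toy_lookup)              # ABO3
doped = build_elemental_vector("Ba", "Hf", toy_lookup, a_prime="Sr")  # AA'B2O6
```

For an undoped compound the A and A′ blocks (and the B and B′ blocks) are identical, which is what keeps the input dimension fixed across structure types.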

We conceptually considered alternative schemes, such as zero-padding, using a separate “vacant” token, or adding explicit binary mask features for doped/undoped sites. However, these approaches either break the physical symmetry between chemically identical sites or introduce additional hyperparameters and complexity without clear benefit for the present data size. In contrast, the adopted mirroring strategy preserves invariance with respect to identical site occupancies and yields a deterministic, easily reproducible input representation. Importantly, this choice does not by construction favor doped over undoped systems; it only ensures that any predictive advantage arises from genuine differences in elemental descriptors rather than from arbitrary encoding artifacts. We acknowledge that more sophisticated occupancy encodings (e.g., explicit site-fraction vectors) may further improve performance for larger and more diverse datasets and leave this as an interesting direction for future work.

2.2.2. Derived Structural Feature Calculation

We include the tolerance factor and octahedral factor, which are commonly used indicators of structural stability in perovskite systems, as part of our model features.

The tolerance factor t, proposed by Goldschmidt [30], is a key geometric parameter that measures the stability of the perovskite structure and is defined as

$$t = \frac{r_A + r_O}{\sqrt{2}\,(r_B + r_O)}$$

where $r_A$, $r_B$, and $r_O$ represent the ionic radii of the A-site cation, the B-site cation, and the oxygen anion, respectively.

In multi-doped perovskite systems (such as A2B′BO6 and AA′B2O6), the same lattice site (A or B) is often jointly occupied by multiple chemical elements. To quantify the effect of doping on the lattice geometric parameters, this study uses a stoichiometry-weighted average to calculate the equivalent ionic radius of the cations at each lattice site.

For structures with n types of A-site elements, the effective ionic radius is defined as

$$r_A^{\mathrm{eff}} = \sum_{i=1}^{n} x_i\, r_{A_i}$$

where $x_i$ is the fraction of the site occupied by the i-th element, as given by the chemical formula, and $r_{A_i}$ is the Shannon ionic radius of the corresponding element in a specific oxidation state and coordination environment.

Similarly, for structures with m types of B-site elements:

$$r_B^{\mathrm{eff}} = \sum_{j=1}^{m} x_j\, r_{B_j}$$

The octahedral factor μ quantifies the size matching between the B-site cation and the oxygen octahedral cavity, reflecting the degree of octahedral distortion. The further this value deviates from the ideal range (≥0.414), the lower the structural stability [31]. It is defined as

$$\mu = \frac{r_B}{r_O}$$

where $r_B$ is the (effective) ionic radius of the B-site cation and $r_O$ is the oxygen ionic radius.
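A minimal sketch of these descriptors, assuming the standard Goldschmidt form of the tolerance factor and the usual definition of the octahedral factor as the ratio of B-site to oxygen radius. The function names are hypothetical, the weights are normalized to sum to one (an assumption), and the radii are commonly quoted Shannon values used only for illustration; they should be checked against the radii table actually used.

```python
from math import sqrt

def effective_radius(occupants):
    """Stoichiometry-weighted mean radius from (amount, radius) pairs."""
    total = sum(amount for amount, _ in occupants)
    return sum(amount * radius for amount, radius in occupants) / total

def tolerance_factor(r_a, r_b, r_o):
    """Goldschmidt tolerance factor t = (r_A + r_O) / (sqrt(2) * (r_B + r_O))."""
    return (r_a + r_o) / (sqrt(2) * (r_b + r_o))

def octahedral_factor(r_b, r_o):
    """Octahedral factor mu = r_B / r_O."""
    return r_b / r_o

# Illustrative radii in angstrom (assumed values): Ba2+ and Sr2+ in 12-fold
# coordination, Ti4+ in 6-fold coordination, and O2-.
R_BA, R_SR, R_TI, R_O = 1.61, 1.44, 0.605, 1.40

r_a_eff = effective_radius([(1, R_BA), (1, R_SR)])   # half Ba, half Sr on the A-site
r_b_eff = effective_radius([(2, R_TI)])              # undoped B-site
t = tolerance_factor(r_a_eff, r_b_eff, R_O)
mu = octahedral_factor(r_b_eff, R_O)
```

With these inputs the A-site average is 1.525 Å, and both t and μ follow directly from the two formulas.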

2.3. Transfer Learning Strategy

To address the challenges posed by small datasets, such as low prediction accuracy and limited generalization capability, this study employs a transfer learning approach. The ABO3-type perovskite structure is selected as the source domain, and a source model is trained on its 463 samples. Although the target-domain datasets, namely A2B′BO6 (449 samples), AA′B2O6 (138 samples), and AA′BB′O6 (441 samples), are at best comparable in size to the source dataset, the ABO3 structure and its doped derivatives share strong structural similarity. Therefore, transfer learning can still effectively improve the prediction accuracy for the target structures by transferring the knowledge learned from the source domain.

First, we train a deep neural network (DNN) on the source domain data (ABO3-type structures) using ten-fold cross-validation. The network consists of six fully connected layers: the input layer connects to a hidden layer with 512 units, followed by a 15% dropout layer to mitigate overfitting. Subsequent hidden layers decrease in size by half (i.e., 256, 128, 64, and 32 units), each employing the ReLU activation function. The final output layer produces the predicted formation energy. The model is trained using the mean squared error (MSE) loss function for 100 epochs, with a batch size of 32 and a learning rate of 0.0005. Among the ten trained models, the one that achieves the highest coefficient of determination (R2) on the validation set is selected as the source model and saved for transfer learning.
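To make the size of this network concrete, the sketch below lists the six fully connected layers and counts their weights and biases. The input width of 34 is an assumption (32 elemental features plus the tolerance and octahedral factors); the text does not state it explicitly, and the helper names are hypothetical.

```python
def layer_shapes(n_inputs, hidden=(512, 256, 128, 64, 32), n_outputs=1):
    """Return (fan_in, fan_out) for each fully connected layer."""
    widths = (n_inputs, *hidden, n_outputs)
    return list(zip(widths[:-1], widths[1:]))

def count_parameters(shapes):
    """Weights plus biases of all fully connected layers."""
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in shapes)

# ASSUMPTION: 32 elemental features + 2 structural factors = 34 inputs.
shapes = layer_shapes(n_inputs=34)
n_params = count_parameters(shapes)
for fan_in, fan_out in shapes:
    print(f"{fan_in:>4} -> {fan_out:<4}")
print("trainable parameters:", n_params)
```

Under that assumption the model has 192,513 trainable parameters, far more than the 463 source samples, which is consistent with the use of dropout and cross-validation described above.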

The pre-trained weights from the source model are then transferred to the target domain model and fine-tuned on the target dataset. During fine-tuning, no layers are frozen, and incremental transfer learning is not adopted, as preliminary experiments on our small datasets show that these strategies do not yield satisfactory results. Therefore, we employ full fine-tuning of all parameters, enabling the model to effectively transfer knowledge from the source domain to the target structures. This approach is particularly beneficial for small datasets, where models with randomly initialized weights often fail to converge effectively. By leveraging pre-trained weights from the source domain, especially when the target and source domains are closely related (e.g., ABO3 perovskites and their doped counterparts), transfer learning accelerates convergence and enhances prediction performance, even with limited data.
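The effect of initializing from source-domain weights and then updating all parameters can be illustrated with a deliberately tiny stand-in: a two-parameter linear model trained by gradient descent. This is not the DNN used in this work; the data, function names, and the zero "cold start" (in place of random initialization) are assumptions chosen so the example stays self-contained.

```python
def mse(w, b, data):
    """Mean squared error of the model y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fit(data, w=0.0, b=0.0, lr=0.1, steps=20):
    """Full-batch gradient descent on MSE; every parameter is updated."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Toy "source" task with many samples and a closely related "target" task
# with only three samples (synthetic numbers, for illustration only).
source = [(i / 10, 2.0 * (i / 10) + 1.0) for i in range(11)]
target = [(0.0, 0.9), (0.5, 1.95), (1.0, 3.0)]

w_src, b_src = fit(source, steps=2000)            # pre-training on the source task
warm = fit(target, w=w_src, b=b_src, steps=20)    # full fine-tuning from source weights
cold = fit(target, steps=20)                      # baseline: same budget, fresh start

print("fine-tuned MSE:", mse(*warm, target))
print("baseline MSE:  ", mse(*cold, target))
```

With the same number of update steps, the warm-started model ends at a lower target error because it begins close to the target optimum, which mirrors the argument made above for closely related source and target domains.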

3. Results and Discussion

In this section, we validate the effectiveness of transfer learning on small datasets by comparing the performance of the baseline model (i.e., the model trained directly using the target domain data) with the transfer learning model. To minimize the influence of randomness, all models were trained and evaluated using 10-fold cross-validation.

During training, the learning rate, batch size, and other hyperparameters are kept identical for both models to ensure a fair comparison. The models use the mean absolute error (MAE) as the loss function, and the coefficient of determination (R2) and root mean square error (RMSE) as performance evaluation metrics. The performance of the baseline and transfer learning models for the different structures is compared in Table 2, which supports the effectiveness of transfer learning in improving prediction accuracy for small datasets.
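For reference, the three quantities named here can be computed as in the following sketch, which uses their standard definitions. The function names and the small example arrays are illustrative only.

```python
from math import sqrt

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Illustrative numbers only (not results from this work).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
print(mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))
```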

As shown in Figure 3, the transfer learning model outperforms the baseline model across all structure types, with particularly significant improvements for structures with smaller sample sizes. The reported results are averages over 10-fold cross-validation.

As demonstrated in Table 2, for the AA′B2O6 structure, which has the smallest number of samples, transfer learning improves the R2 value from 0.919 to 0.956, while MAE and RMSE decrease by approximately 17.6% and 24.8%, respectively. This result further confirms the effectiveness of transfer learning in small-sample scenarios.

It is worth noting that transfer learning also achieves stable performance improvements for A2B′BO6 and AA′BB′O6, whose sample sizes are comparable to that of the source domain. For the A2B′BO6 structure, R2 increases from 0.909 to 0.941, MAE decreases from 0.096 to 0.079 (a reduction of about 17.7%), and RMSE drops from 0.132 to 0.108 (a reduction of about 18.2%), indicating a clear reduction in model error and improved fitting capability. For the AA′BB′O6 structure, R2 improves from 0.913 to 0.931, MAE decreases from 0.084 to 0.075 (a reduction of about 10.7%), and RMSE declines from 0.116 to 0.105 (a reduction of about 9.5%). These results demonstrate that the knowledge learned by the source model remains transferable even when the target dataset is not extremely small.
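The quoted percentage reductions follow from the relative change (baseline − transfer) / baseline, as the short check below shows. The helper name `relative_reduction` is illustrative; the input values are the MAE and RMSE figures quoted in this paragraph.

```python
def relative_reduction(baseline, transfer):
    """Percentage reduction of an error metric relative to the baseline."""
    return 100.0 * (baseline - transfer) / baseline

# (baseline, transfer) error values quoted in the text, in eV/atom.
quoted = {
    "A2B'BO6 MAE": (0.096, 0.079),
    "A2B'BO6 RMSE": (0.132, 0.108),
    "AA'BB'O6 MAE": (0.084, 0.075),
    "AA'BB'O6 RMSE": (0.116, 0.105),
}
reductions = {name: round(relative_reduction(*pair), 1) for name, pair in quoted.items()}
print(reductions)
```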

Building upon these encouraging validation results, we apply the trained models to large-scale forward prediction tasks. Specifically, for each structure type, we select the best-performing model from the 10-fold cross-validation (i.e., the one with the highest R2) as the final prediction model.

To construct the prediction datasets, we systematically recombine the A-site and B-site elements that have appeared in the training data. The core rationale behind this strategy is that the model has already learned the mapping relationships between these elemental combinations and their corresponding formation energies. Therefore, recombining within the known elemental space not only expands the material design space but also ensures that predictions remain within the effective domain of the model’s learned chemical knowledge.
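One way to generate such recombinations is sketched below. It assumes that dopant pairs on a site are unordered and distinct, and it applies no further exclusion rules; the actual enumeration rules, and therefore the candidate counts reported in this work, may differ. The function name and the small element sets are illustrative.

```python
from itertools import combinations

def enumerate_candidates(a_elements, b_elements):
    """Recombine known A- and B-site elements into the three doped structure types."""
    a_sorted, b_sorted = sorted(a_elements), sorted(b_elements)
    a_pairs = list(combinations(a_sorted, 2))   # unordered (A, A') pairs
    b_pairs = list(combinations(b_sorted, 2))   # unordered (B, B') pairs
    return {
        "A2B'BO6": [((a,), pair) for a in a_sorted for pair in b_pairs],
        "AA'B2O6": [(pair, (b,)) for pair in a_pairs for b in b_sorted],
        "AA'BB'O6": [(ap, bp) for ap in a_pairs for bp in b_pairs],
    }

# Small illustrative element sets (subsets of the lists in Section 2.1).
candidates = enumerate_candidates({"Ba", "Ca", "Sr"}, {"Hf", "Sc", "Ti", "Zr"})
for structure, entries in candidates.items():
    print(structure, len(entries))
```

With three A-site and four B-site elements this yields 18, 12, and 18 candidates, respectively, which illustrates how quickly the design space grows with the size of the element sets.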

Using this approach, we predicted the formation energies of 12,897 A2B′BO6 compounds, 10,401 AA′B2O6 compounds, and 49,723 AA′BB′O6 compounds. These candidate materials were subsequently filtered based on the following structural criteria: the tolerance factor t, the octahedral factor μ, and the modified tolerance factor τ [29], defined as

$$\tau = \frac{r_X}{r_B} - n_A\left(n_A - \frac{r_A/r_B}{\ln\left(r_A/r_B\right)}\right)$$

In this formula, $r_A$, $r_B$, and $r_X$ represent the ionic radii of the A-site cation, B-site cation, and anion, respectively, while $n_A$ is taken as the weighted average oxidation state of the A-site cations. The oxidation states used in this work are determined using the oxidation-state assignment routines implemented in pymatgen and are provided, together with the corresponding scripts and input data, in the Supplementary Data (xlsx file).
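The sketch below evaluates a modified tolerance factor, assuming it takes the form proposed by Bartel et al., τ = r_X/r_B − n_A (n_A − (r_A/r_B)/ln(r_A/r_B)), which matches the symbols described here. The function names are hypothetical, the radii are commonly quoted Shannon values used for illustration, and no screening threshold is hard-coded because the cut-offs used in this work are not reproduced in the text.

```python
from math import log

def weighted_oxidation_state(occupants):
    """Stoichiometry-weighted mean oxidation state from (amount, state) pairs."""
    total = sum(amount for amount, _ in occupants)
    return sum(amount * state for amount, state in occupants) / total

def modified_tolerance_factor(r_a, r_b, r_x, n_a):
    """tau = r_X / r_B - n_A * (n_A - (r_A / r_B) / ln(r_A / r_B))."""
    ratio = r_a / r_b
    if ratio <= 0 or ratio == 1.0:
        raise ValueError("r_A / r_B must be positive and different from 1")
    return r_x / r_b - n_a * (n_a - ratio / log(ratio))

# Illustrative inputs (assumed values): Sr2+ on the A-site, Ti4+ on the B-site, O2-.
n_a = weighted_oxidation_state([(1, 2)])
tau = modified_tolerance_factor(r_a=1.44, r_b=0.605, r_x=1.40, n_a=n_a)
print(round(tau, 3))
```

For doped A-sites, `weighted_oxidation_state` plays the same role as the weighted average radius: each dopant contributes in proportion to its share of the site.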

After applying these filters, 3389 A2B′BO6, 3002 AA′B2O6, and 13,563 AA′BB′O6 compounds are retained as potentially stable doped perovskite candidates. The predicted materials for each category are listed in Table 3; only the top ten are shown.

Among these filtered results, 821 A2B′BO6, 69 AA′B2O6, and 6 AA′BB′O6 compounds are found to have matching entries in the OQMD database, indicating that they have already been independently verified as stable doped perovskite materials.

To further assess the behavior of the model in a forward-prediction scenario, we evaluate its performance on an independent test set constructed from OQMD, which is not used during training and is generated using different DFT settings from those of the Materials Project (MP). As summarized in Table 4, the model maintains a clear correlation with the OQMD formation energies for all structure types; however, both the MAE and R2 values are noticeably degraded compared with the internal cross-validation results on the MP-based dataset (Table 2). In particular, the increase in error indicates that the present model does not exhibit uniformly strong, database-independent generalization. Instead, these discrepancies are consistent with a systematic domain shift between the MP and OQMD computational protocols (e.g., different pseudopotentials, DFT+U settings, and reference energies). Therefore, the OQMD evaluation should be interpreted as an out-of-distribution stress test: it demonstrates that the proposed transfer learning framework preserves a certain level of transferability beyond its training domain, while also revealing its limitation that the predictions remain anchored to the “DFT dialect” of the Materials Project rather than representing universally calibrated formation energies.

Finally, for each type of doped perovskite, we select the compound with the lowest predicted formation energy—that is, the most thermodynamically stable candidate—for further DFT calculations. As a result, three stable doped perovskite structures are identified: CaSrHfScO6, BaSrHf2O6, and Ba2HfNdO6.

4. Conclusions

This study proposes a transfer learning-based strategy for predicting the formation energy of perovskite materials, addressing the reduced prediction accuracy caused by small datasets. Using the ABO3-type perovskite structure as the source domain and doped perovskite structures as the target domain, we train a deep neural network on the source data and fine-tune its weights on the target structures, which significantly improves the prediction performance for small-sample target structures. The results show that the transfer learning model outperforms the baseline model across all structure types, especially for the structure with the fewest samples (AA′B2O6), where transfer learning improved prediction accuracy and effectively reduced errors.

Although this study has achieved certain results, there are still areas worth further exploration. For instance, future research could explore combining multi-source domain models with transfer learning to enhance the adaptability to complex perovskite material systems and improve model generalization ability. Furthermore, with the development of deep learning technologies, combining novel neural network architectures (such as graph neural networks) with transfer learning strategies may further improve prediction accuracy, providing more efficient tools for the design and optimization of perovskite materials.

Overall, transfer learning provides an effective approach to solving the prediction problems of perovskite materials, especially under small-dataset conditions, and has important application potential. This study offers new insights for the screening and design of perovskite materials and provides a reference path for small-sample learning issues in materials science. Furthermore, the underlying concept is not limited to perovskites: in conventional machine learning models, the network parameters are typically initialized randomly, whereas in transfer learning, the model is initialized from a chemically related source domain, which can significantly improve convergence and generalization when the target system shares similar structural motifs and descriptor space. Therefore, provided that comparable feature definitions (e.g., site-resolved elemental descriptors) and analogous structural representations can be constructed, the present framework could in principle be extended to other oxide families or non-perovskite crystal structures, offering a promising route for efficient property prediction and composition screening beyond the perovskite domain.