Breaking Evolution’s Ceiling: AI-Powered Protein Engineering

Abstract

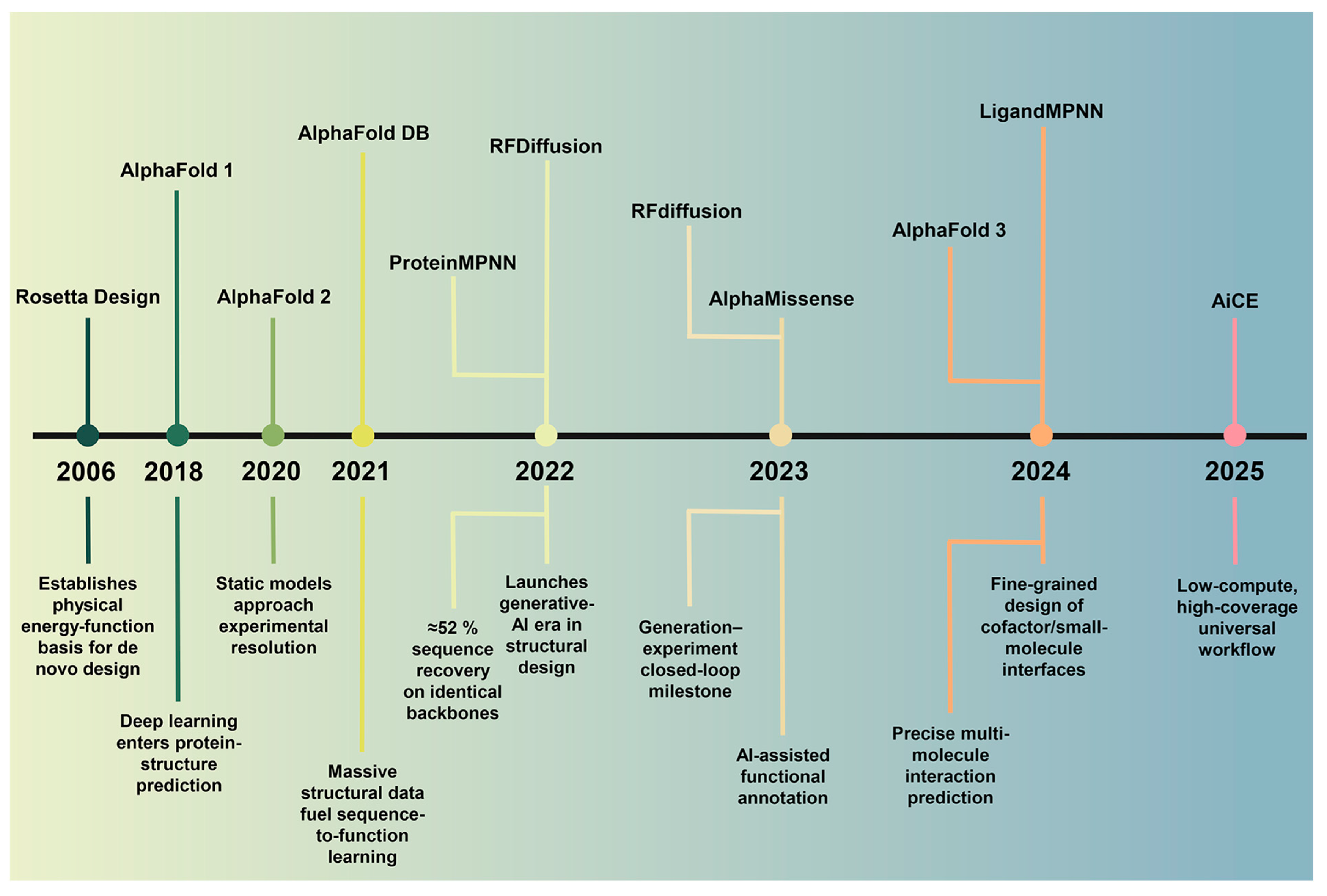

1. Introduction

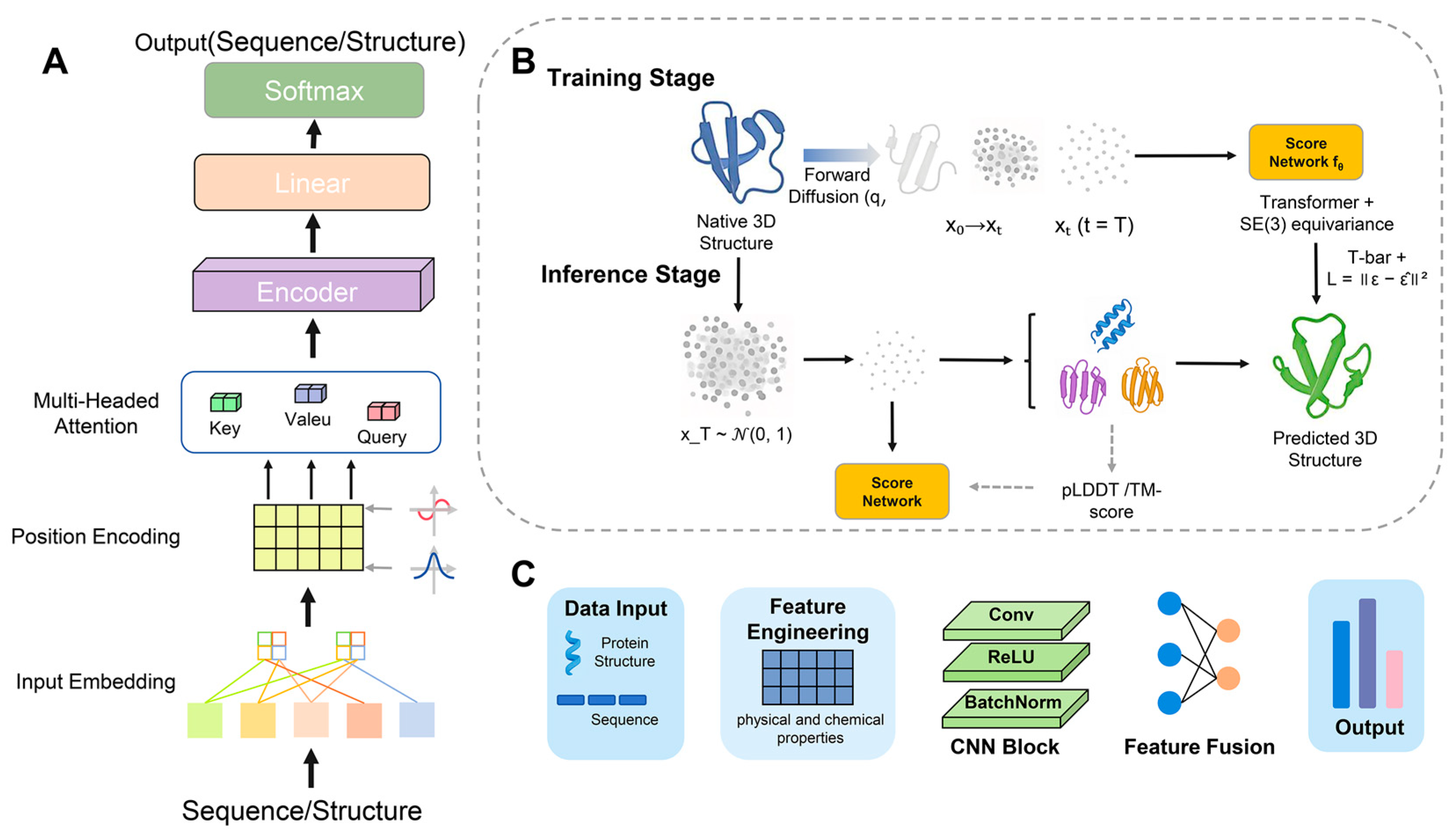

2. Machine Learning and Data Foundations

2.1. Basic Algorithms

2.2. Data Foundation for ML

3. Algorithm Architecture and Method Evaluation

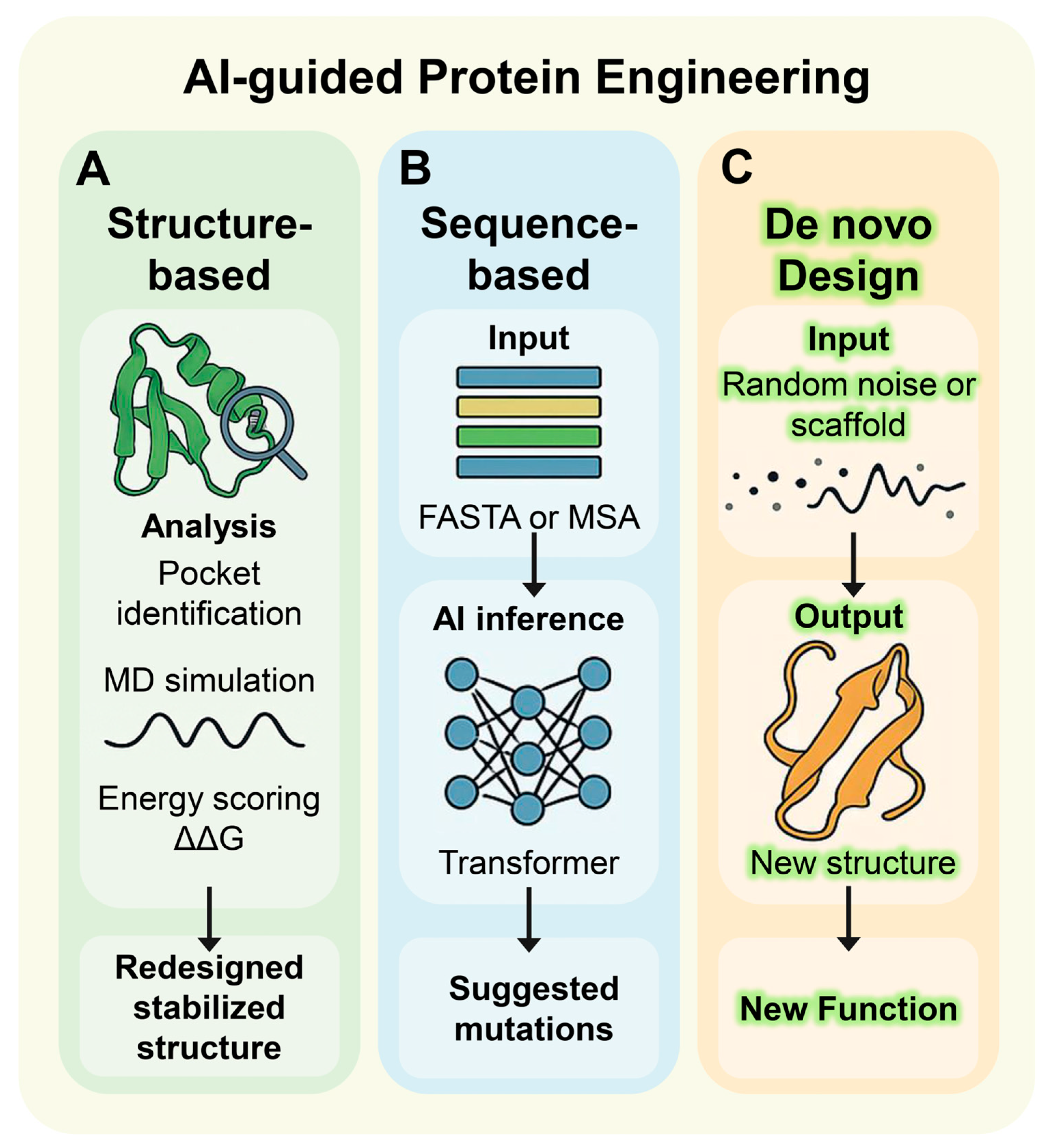

4. Structural and Sequence Parallel Computational Strategies

4.1. Structure-Based Design Strategies

| Category | AI Tools | Function | Reference |
|---|---|---|---|
| Structure Prediction | AlphaFold3 | High-accuracy prediction of protein 3D structures for single chains and complexes | [54] |
| | RoseTTAFold | Rapid structure prediction from sequence, suitable for general modeling | [55] |
| | DeepFold | De novo protein structure prediction | [56] |
| | trRosetta | Residue contact-based modeling for protein structures and multimer prediction | [51] |
| | RosettaDesign | Protein structure prediction and design platform | [57] |
| Structure Design and Generation | RFdiffusion | De novo design of protein structure and function using a diffusion model | [58] |
| | ProGen | De novo generation of protein sequences with a language model trained on amino acid sequences | [59] |
| | EvoEF | Protein function and structure prediction using evolutionary data | [60] |
| | ESM | Multimodal language models for sequence, structure, and function generation | [61] |
| | AlphaDesign | Design of novel protein sequences for enhanced function, stability, and affinity | [62] |
| Sequence Optimization and Mutation Prediction | AlphaMissense | Predicts pathogenicity of missense mutations using structure and evolutionary priors | [63] |
| | ProtBert | BERT-based pre-trained language model for protein sequence analysis | [64] |
| | µFormer | Models fitness landscapes; effective in predicting high-order mutational effects | [65] |
| | AiCE | Universal inverse folding framework combining structure and evolutionary constraints | [66] |
| | MutaGene | Prediction of mutation effects on protein structure and function | [67] |
| Binding Prediction and Affinity Estimation | DeepAffinity | Predicts protein-small molecule binding affinities | [68] |
| | AtomNet | Deep learning-based docking for drug-target interaction modeling | [69] |
| | EquiBind | Equivariant GNN for predicting protein-ligand binding poses | [70] |
| Graph Neural Network Applications | ProteinMPNN | Graph neural network-based design of function-specific proteins | [71] |
| | DynamicBind | Combines GNNs and diffusion for binding conformation prediction | [47] |
| Integrated Multitask Platforms | BioNeMo | NVIDIA’s unified AI platform for modeling structure, sequence, and function | [72] |
| | DeepSequence | Prediction of protein function and stability for mutant screening and optimization | [73] |
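The "high-order mutational effects" listed for µFormer in the table above refer to cases where combined mutations behave differently from the sum of their individual effects (epistasis). As a minimal sketch, assuming fitness is measured on an additive scale (for example, log-transformed activity), the deviation from additivity could be computed as follows. The function names and all numeric values are hypothetical illustrations and are not taken from µFormer or any other tool in the table.

```python
from typing import Sequence


def additive_prediction(wild_type: float, singles: Sequence[float]) -> float:
    """Fitness expected for a multi-mutant if single-mutation effects simply add."""
    return wild_type + sum(single - wild_type for single in singles)


def epistasis(wild_type: float, singles: Sequence[float], observed: float) -> float:
    """Deviation of the observed multi-mutant fitness from the additive expectation."""
    return observed - additive_prediction(wild_type, singles)


# Hypothetical values: wild type, two single mutants, and their double mutant.
wt_fitness = 1.0
single_fitness = [1.2, 1.3]
double_fitness = 1.9

expected = additive_prediction(wt_fitness, single_fitness)
deviation = epistasis(wt_fitness, single_fitness, double_fitness)
print(f"additive expectation: {expected:.2f}, epistasis: {deviation:+.2f}")
```

A positive deviation means the combined variant performs better than the single mutants would suggest; a purely additive model cannot capture such terms, which is presumably why models aimed at high-order effects are listed separately.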

4.2. Sequence-Based Design Strategies

4.3. De Novo Design Strategy

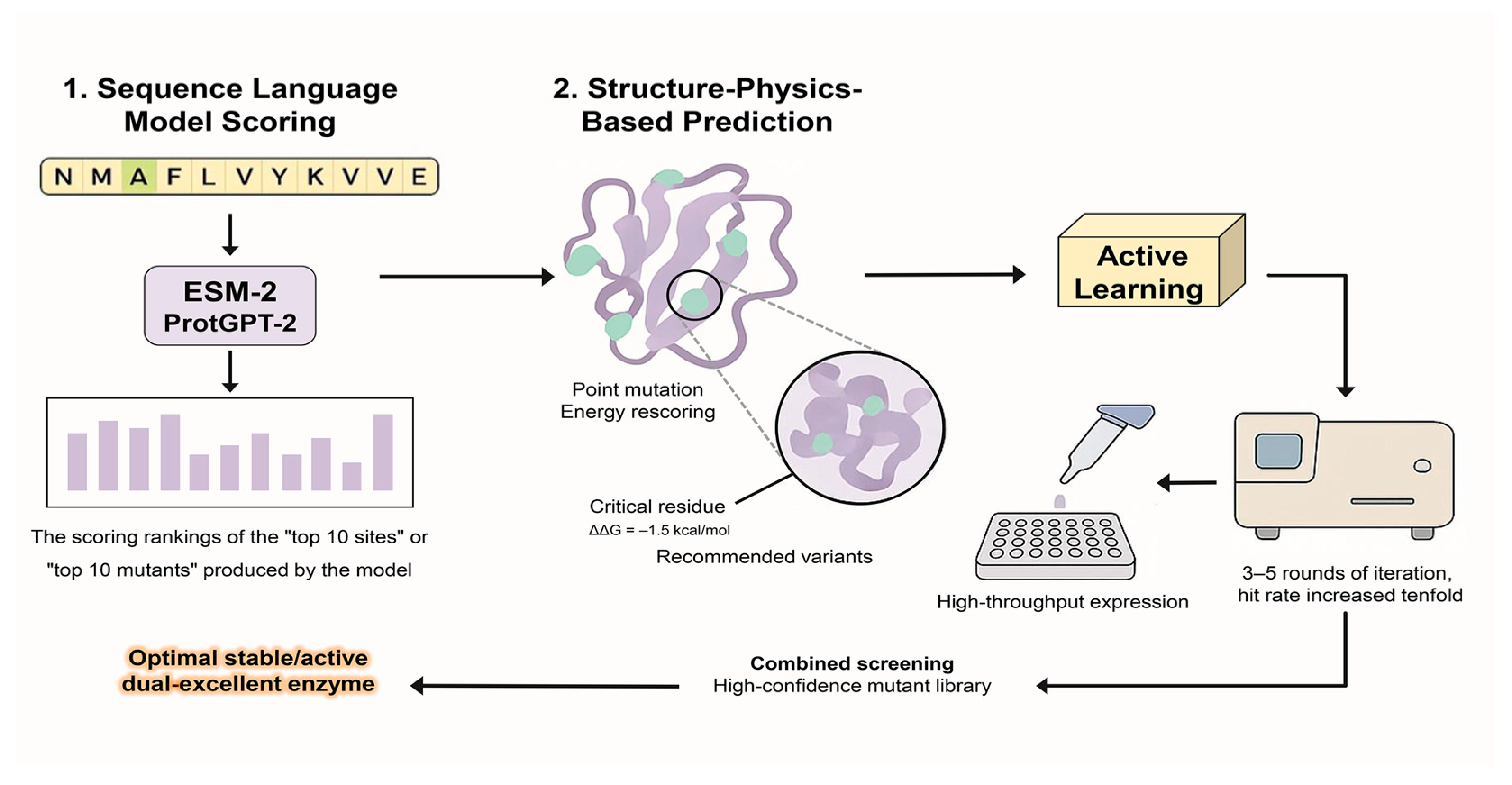

5. AI-Driven Strategies for Predicting Enzyme Mutations and Optimizing Stability

5.1. Predicting Mutation Effects and Variant Performance

5.2. Computational Strategies and Models for Enhancing Enzyme Stability

6. New Challenges of AI in Protein Engineering

6.1. Limits of Structural Prediction on Complex and Dynamic Systems

6.2. Design–Experiment Gap, Data Limitations, and Model Interpretability

6.3. Benchmarks and Fair Comparison

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Buller, R.; Lutz, S.; Kazlauskas, R.; Snajdrova, R.; Moore, J.; Bornscheuer, U. From nature to industry: Harnessing enzymes for biocatalysis. Science 2023, 382, 8615.

- De Santis, P.; Meyer, L.-E.; Kara, S. The rise of continuous flow biocatalysis–fundamentals, very recent developments and future perspectives. React. Chem. Eng. 2020, 5, 2155–2184.

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A. Highly accurate protein structure prediction with AlphaFold. Nature 2021, 596, 583–589.

- Landwehr, G.M.; Bogart, J.W.; Magalhaes, C.; Hammarlund, E.G.; Karim, A.S.; Jewett, M.C. Accelerated enzyme engineering by machine-learning guided cell-free expression. Nat. Commun. 2025, 16, 865.

- Shen, L.; Yang, W. Molecular dynamics simulations with quantum mechanics/molecular mechanics and adaptive neural networks. J. Chem. Theory Comput. 2018, 14, 1442–1455.

- Sarker, I.H. Machine learning: Algorithms, real-world applications and research directions. SN Comput. Sci. 2021, 2, 160.

- Dargan, S.; Kumar, M.; Ayyagari, M.R.; Kumar, G. A survey of deep learning and its applications: A new paradigm to machine learning. Arch. Comput. Methods Eng. 2020, 27, 1071–1092.

- Budach, L.; Feuerpfeil, M.; Ihde, N.; Nathansen, A.; Noack, N.; Patzlaff, H.; Naumann, F.; Harmouch, H. The effects of data quality on machine learning performance. arXiv 2022, arXiv:2207.14529.

- Eraslan, G.; Avsec, Ž.; Gagneur, J.; Theis, F.J. Deep learning: New computational modelling techniques for genomics. Nat. Rev. Genet. 2019, 20, 389–403.

- Kuhlman, B.; Bradley, P. Advances in protein structure prediction and design. Nat. Rev. Mol. Cell Biol. 2019, 20, 681–697.

- Mahesh, B. Machine learning algorithms-a review. Int. J. Sci. Res. (IJSR) 2020, 9, 381–386.

- Pethe, M.A.; Rubenstein, A.B.; Khare, S.D. Data-driven supervised learning of a viral protease specificity landscape from deep sequencing and molecular simulations. Proc. Natl. Acad. Sci. USA 2019, 116, 168–176.

- Zhou, P.; Wen, L.; Lin, J.; Mei, L.; Liu, Q.; Shang, S.; Li, J.; Shu, J. Integrated unsupervised–supervised modeling and prediction of protein–peptide affinities at structural level. Brief. Bioinform. 2022, 23, bbac097.

- Kim, H.R.; Ji, H.; Kim, G.B.; Lee, S.Y. Enzyme functional classification using artificial intelligence. Trends Biotechnol. 2025.

- Yang, X.; Wang, Y.; Lin, Y.; Zhang, M.; Liu, O.; Shuai, J.; Zhao, Q. A Multi-Task Self-Supervised Strategy for Predicting Molecular Properties and FGFR1 Inhibitors. Adv. Sci. 2025, 12, 2412987.

- Harshvardhan, G.; Gourisaria, M.K.; Pandey, M.; Rautaray, S.S. A comprehensive survey and analysis of generative models in machine learning. Comput. Sci. Rev. 2020, 38, 100285.

- Ertelt, M.; Moretti, R.; Meiler, J.; Schoeder, C.T. Self-supervised machine learning methods for protein design improve sampling but not the identification of high-fitness variants. Sci. Adv. 2025, 11, eadr7338.

- Fang, X.; Huang, J.; Zhang, R.; Wang, F.; Zhang, Q.; Li, G.; Yan, J.; Zhang, H.; Yan, Y.; Xu, L. Convolution neural network-based prediction of protein thermostability. J. Chem. Inf. Model. 2019, 59, 4833–4843.

- Pfeiffenberger, E.; Bates, P.A. Predicting improved protein conformations with a temporal deep recurrent neural network. PLoS ONE 2018, 13, e0202652.

- Lin, E.; Lin, C.-H.; Lane, H.-Y. De novo peptide and protein design using generative adversarial networks: An update. J. Chem. Inf. Model. 2022, 62, 761–774.

- Gong, Y.; Liu, G.; Xue, Y.; Li, R.; Meng, L. A survey on dataset quality in machine learning. Inf. Softw. Technol. 2023, 162, 107268.

- UniProt: The Universal protein knowledgebase in 2025. Nucleic Acids Res. 2025, 53, D609–D617.

- Burley, S.K.; Berman, H.M.; Kleywegt, G.J.; Markley, J.L.; Nakamura, H.; Velankar, S. Protein Data Bank (PDB): The single global macromolecular structure archive. Protein Crystallogr. Methods Protoc. 2017, 1607, 627–641.

- Zhu, J.-J.; Yang, M.; Ren, Z.J. Machine learning in environmental research: Common pitfalls and best practices. Environ. Sci. Technol. 2023, 57, 17671–17689.

- Whang, S.E.; Roh, Y.; Song, H.; Lee, J.-G. Data collection and quality challenges in deep learning: A data-centric AI perspective. VLDB J. 2023, 32, 791–813.

- Aksu, G.; Güzeller, C.O.; Eser, M.T. The effect of the normalization method used in different sample sizes on the success of artificial neural network model. Int. J. Assess. Tools Educ. 2019, 6, 170–192.

- Stock, M.; Van Criekinge, W.; Boeckaerts, D.; Taelman, S.; Van Haeverbeke, M.; Dewulf, P.; De Baets, B. Hyperdimensional computing: A fast, robust, and interpretable paradigm for biological data. PLoS Comput. Biol. 2024, 20, e1012426.

- Anowar, F.; Sadaoui, S.; Selim, B. Conceptual and empirical comparison of dimensionality reduction algorithms (PCA, KPCA, LDA, MDS, SVD, LLE, ISOMAP, LE, ICA, t-SNE). Comput. Sci. Rev. 2021, 40, 100378.

- Li, F.; Yuan, L.; Lu, H.; Li, G.; Chen, Y.; Engqvist, M.K.; Kerkhoven, E.J.; Nielsen, J. Deep learning-based kcat prediction enables improved enzyme-constrained model reconstruction. Nat. Catal. 2022, 5, 662–672.

- Venanzi, N.A.E.; Basciu, A.; Vargiu, A.V.; Kiparissides, A.; Dalby, P.A.; Dikicioglu, D. Machine learning integrating protein structure, sequence, and dynamics to predict the enzyme activity of bovine enterokinase variants. J. Chem. Inf. Model. 2024, 64, 2681–2694.

- Agarwal, V.; McShan, A.C. The power and pitfalls of AlphaFold2 for structure prediction beyond rigid globular proteins. Nat. Chem. Biol. 2024, 20, 950–959.

- AlQuraishi, M.; Sorger, P.K. Differentiable biology: Using deep learning for biophysics-based and data-driven modeling of molecular mechanisms. Nat. Methods 2021, 18, 1169–1180.

- Gao, W.; Mahajan, S.P.; Sulam, J.; Gray, J.J. Deep learning in protein structural modeling and design. Patterns 2020, 1, 100142.

- Le, N.Q.K. Leveraging transformers-based language models in proteome bioinformatics. Proteomics 2023, 23, 2300011.

- Chandra, A.; Tünnermann, L.; Löfstedt, T.; Gratz, R. Transformer-based deep learning for predicting protein properties in the life sciences. eLife 2023, 12, e82819.

- Zhang, S.; Fan, R.; Liu, Y.; Chen, S.; Liu, Q.; Zeng, W. Applications of transformer-based language models in bioinformatics: A survey. Bioinform. Adv. 2023, 3, vbad001.

- Ling, X.; Li, Z.; Wang, Y.; You, Z. Transformer in Protein: A Survey. arXiv 2025, arXiv:2505.20098.

- Rives, A.; Meier, J.; Sercu, T.; Goyal, S.; Lin, Z.; Liu, J.; Guo, D.; Ott, M.; Zitnick, C.L.; Ma, J. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc. Natl. Acad. Sci. USA 2021, 118, e2016239118.

- Raad, J.; Bugnon, L.A.; Milone, D.H.; Stegmayer, G. miRe2e: A full end-to-end deep model based on transformers for prediction of pre-miRNAs. Bioinformatics 2021, 38, 1191–1197.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Zhang, M.; Qamar, M.; Kang, T.; Jung, Y.; Zhang, C.; Bae, S.-H.; Zhang, C. A survey on graph diffusion models: Generative AI in science for molecule, protein and material. arXiv 2023, arXiv:2304.01565.

- Wu, K.E.; Yang, K.K.; van den Berg, R.; Alamdari, S.; Zou, J.Y.; Lu, A.X.; Amini, A.P. Protein structure generation via folding diffusion. Nat. Commun. 2024, 15, 1059.

- Lu, C.; Lubin, J.H.; Sarma, V.V.; Stentz, S.Z.; Wang, G.; Wang, S.; Khare, S.D. Prediction and design of protease enzyme specificity using a structure-aware graph convolutional network. Proc. Natl. Acad. Sci. USA 2023, 120, e2303590120.

- Sikander, R.; Wang, Y.; Ghulam, A.; Wu, X. Identification of enzymes-specific protein domain based on DDE, and convolutional neural network. Front. Genet. 2021, 12, 759384.

- Nosouhian, S.; Nosouhian, F.; Khoshouei, A.K. A Review of Recurrent Neural Network Architecture for Sequence Learning: Comparison Between LSTM and GRU. 2021. Available online: https://www.preprints.org/manuscript/202107.0252/v1 (accessed on 14 July 2025).

- Zhou, J.; Huang, M. Navigating the landscape of enzyme design: From molecular simulations to machine learning. Chem. Soc. Rev. 2024, 53, 8202–8239.

- Lu, W.; Zhang, J.; Huang, W.; Zhang, Z.; Jia, X.; Wang, Z.; Shi, L.; Li, C.; Wolynes, P.G.; Zheng, S. DynamicBind: Predicting ligand-specific protein-ligand complex structure with a deep equivariant generative model. Nat. Commun. 2024, 15, 1071.

- Cui, X.-C.; Zheng, Y.; Liu, Y.; Yuchi, Z.; Yuan, Y.-J. AI-driven de novo enzyme design: Strategies, applications, and future prospects. Biotechnol. Adv. 2025, 82, 108603.

- Notin, P.; Kollasch, A.; Ritter, D.; Van Niekerk, L.; Paul, S.; Spinner, H.; Rollins, N.; Shaw, A.; Orenbuch, R.; Weitzman, R. ProteinGym: Large-scale benchmarks for protein fitness prediction and design. Adv. Neural Inf. Process. Syst. 2023, 36, 64331–64379.

- Abramson, J.; Adler, J.; Dunger, J.; Evans, R.; Green, T.; Pritzel, A.; Ronneberger, O.; Willmore, L.; Ballard, A.J.; Bambrick, J. Accurate structure prediction of biomolecular interactions with AlphaFold 3. Nature 2024, 630, 493–500.

- Du, Z.; Su, H.; Wang, W.; Ye, L.; Wei, H.; Peng, Z.; Anishchenko, I.; Baker, D.; Yang, J. The trRosetta server for fast and accurate protein structure prediction. Nat. Protoc. 2021, 16, 5634–5651.

- Baek, M.; McHugh, R.; Anishchenko, I.; Jiang, H.; Baker, D.; DiMaio, F. Accurate prediction of protein–nucleic acid complexes using RoseTTAFoldNA. Nat. Methods 2024, 21, 117–121.

- Goverde, C.A.; Pacesa, M.; Goldbach, N.; Dornfeld, L.J.; Balbi, P.E.; Georgeon, S.; Rosset, S.; Kapoor, S.; Choudhury, J.; Dauparas, J. Computational design of soluble and functional membrane protein analogues. Nature 2024, 631, 449–458.

- He, X.-h.; Li, J.-r.; Shen, S.-y.; Xu, H.E. AlphaFold3 versus experimental structures: Assessment of the accuracy in ligand-bound G protein-coupled receptors. Acta Pharmacol. Sin. 2025, 46, 1111–1122.

- Lisanza, S.L.; Gershon, J.M.; Tipps, S.W.; Sims, J.N.; Arnoldt, L.; Hendel, S.J.; Simma, M.K.; Liu, G.; Yase, M.; Wu, H. Multistate and functional protein design using RoseTTAFold sequence space diffusion. Nat. Biotechnol. 2024, 43, 1288–1298.

- Lee, J.-W.; Won, J.-H.; Jeon, S.; Choo, Y.; Yeon, Y.; Oh, J.-S.; Kim, M.; Kim, S.; Joung, I.; Jang, C. DeepFold: Enhancing protein structure prediction through optimized loss functions, improved template features, and re-optimized energy function. Bioinformatics 2023, 39, btad712.

- Schmitz, S.; Ertelt, M.; Merkl, R.; Meiler, J. Rosetta design with co-evolutionary information retains protein function. PLoS Comput. Biol. 2021, 17, e1008568.

- Watson, J.L.; Juergens, D.; Bennett, N.R.; Trippe, B.L.; Yim, J.; Eisenach, H.E.; Ahern, W.; Borst, A.J.; Ragotte, R.J.; Milles, L.F. De novo design of protein structure and function with RFdiffusion. Nature 2023, 620, 1089–1100.

- Madani, A.; Krause, B.; Greene, E.R.; Subramanian, S.; Mohr, B.P.; Holton, J.M.; Olmos Jr, J.L.; Xiong, C.; Sun, Z.Z.; Socher, R. Large language models generate functional protein sequences across diverse families. Nat. Biotechnol. 2023, 41, 1099–1106.

- Huang, X.; Pearce, R.; Zhang, Y. EvoEF2: Accurate and fast energy function for computational protein design. Bioinformatics 2020, 36, 1135–1142.

- Cordoves-Delgado, G.; García-Jacas, C.R. Predicting antimicrobial peptides using ESMFold-predicted structures and ESM-2-based amino acid features with graph deep learning. J. Chem. Inf. Model. 2024, 64, 4310–4321.

- Jendrusch, M.A.; Yang, A.L.; Cacace, E.; Bobonis, J.; Voogdt, C.G.; Kaspar, S.; Schweimer, K.; Perez-Borrajero, C.; Lapouge, K.; Scheurich, J. AlphaDesign: A de novo protein design framework based on AlphaFold. Mol. Syst. Biol. 2025, 1–24.

- Cheng, J.; Novati, G.; Pan, J.; Bycroft, C.; Žemgulytė, A.; Applebaum, T.; Pritzel, A.; Wong, L.H.; Zielinski, M.; Sargeant, T. Accurate proteome-wide missense variant effect prediction with AlphaMissense. Science 2023, 381, eadg7492.

- Zhang, Y.; Zhu, G.; Li, K.; Li, F.; Huang, L.; Duan, M.; Zhou, F. HLAB: Learning the BiLSTM features from the ProtBert-encoded proteins for the class I HLA-peptide binding prediction. Brief. Bioinform. 2022, 23, 173.

- Sun, H.; He, L.; Deng, P.; Liu, G.; Liu, H.; Cao, C.; Ju, F.; Wu, L.; Qin, T.; Liu, T.-Y. Accelerating protein engineering with fitness landscape modeling and reinforcement learning. bioRxiv 2023.

- Fei, H.; Li, Y.; Liu, Y.; Wei, J.; Chen, A.; Gao, C. Advancing protein evolution with inverse folding models integrating structural and evolutionary constraints. Cell 2025, 188, 4674–4692.

- Kosicki, M.; Zhang, B.; Hecht, V.; Pampari, A.; Cook, L.E.; Slaven, N.; Akiyama, J.A.; Plajzer-Frick, I.; Novak, C.S.; Kato, M. In vivo mapping of mutagenesis sensitivity of human enhancers. Nature 2025, 643, 839–846.

- Karimi, M.; Wu, D.; Wang, Z.; Shen, Y. DeepAffinity: Interpretable deep learning of compound–protein affinity through unified recurrent and convolutional neural networks. Bioinformatics 2019, 35, 3329–3338.

- Stafford, K.A.; Anderson, B.M.; Sorenson, J.; van den Bedem, H. AtomNet PoseRanker: Enriching ligand pose quality for dynamic proteins in virtual high-throughput screens. J. Chem. Inf. Model. 2022, 62, 1178–1189.

- Stärk, H.; Ganea, O.; Pattanaik, L.; Barzilay, R.; Jaakkola, T. EquiBind: Geometric deep learning for drug binding structure prediction. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 20503–20521.

- Dauparas, J.; Anishchenko, I.; Bennett, N.; Bai, H.; Ragotte, R.J.; Milles, L.F.; Wicky, B.I.; Courbet, A.; de Haas, R.J.; Bethel, N. Robust deep learning–based protein sequence design using ProteinMPNN. Science 2022, 378, 49–56.

- John, P.S.; Lin, D.; Binder, P.; Greaves, M.; Shah, V.; John, J.S.; Lange, A.; Hsu, P.; Illango, R.; Ramanathan, A. BioNeMo Framework: A modular, high-performance library for AI model development in drug discovery. arXiv 2024, arXiv:2411.10548.

- Strodthoff, N.; Wagner, P.; Wenzel, M.; Samek, W. UDSMProt: Universal deep sequence models for protein classification. Bioinformatics 2020, 36, 2401–2409.

- Zhang, R.; Luo, X.; Li, D.; Gao, Y.; Chen, X.; Xi, Z.; Zheng, Z. Increased thermal stability and catalytic efficiency of 3-ketosteroid Δ1-dehydrogenase5 from Arthrobacter simplex significantly reduces enzyme dosage in prednisone acetate biosynthesis. Int. J. Biol. Macromol. 2024, 283, 137855.

- An, L.; Said, M.; Tran, L.; Majumder, S.; Goreshnik, I.; Lee, G.R.; Juergens, D.; Dauparas, J.; Anishchenko, I.; Coventry, B. Binding and sensing diverse small molecules using shape-complementary pseudocycles. Science 2024, 385, 276–282.

- Mahtarin, R.; Islam, S.; Islam, M.J.; Ullah, M.O.; Ali, M.A.; Halim, M.A. Structure and dynamics of membrane protein in SARS-CoV-2. J. Biomol. Struct. Dyn. 2022, 40, 4725–4738.

- Sumida, K.H.; Núñez-Franco, R.; Kalvet, I.; Pellock, S.J.; Wicky, B.I.; Milles, L.F.; Dauparas, J.; Wang, J.; Kipnis, Y.; Jameson, N. Improving protein expression, stability, and function with ProteinMPNN. J. Am. Chem. Soc. 2024, 146, 2054–2061.

- Dauparas, J.; Lee, G.R.; Pecoraro, R.; An, L.; Anishchenko, I.; Glasscock, C.; Baker, D. Atomic context-conditioned protein sequence design using LigandMPNN. Nat. Methods 2025, 22, 717–723.

- Bennett, N.R.; Watson, J.L.; Ragotte, R.J.; Borst, A.J.; See, D.L.; Weidle, C.; Biswas, R.; Yu, Y.; Shrock, E.L.; Ault, R. Atomically accurate de novo design of antibodies with RFdiffusion. bioRxiv 2025, 585103.

- Hayes, T.; Rao, R.; Akin, H.; Sofroniew, N.J.; Oktay, D.; Lin, Z.; Verkuil, R.; Tran, V.Q.; Deaton, J.; Wiggert, M. Simulating 500 million years of evolution with a language model. Science 2025, 387, 850–858.

- Jiang, K.; Yan, Z.; Di Bernardo, M.; Sgrizzi, S.R.; Villiger, L.; Kayabolen, A.; Kim, B.; Carscadden, J.K.; Hiraizumi, M.; Nishimasu, H. Rapid in silico directed evolution by a protein language model with EVOLVEpro. Science 2024, 387, eadr6006.

- Fowler, D.M.; Fields, S. Deep mutational scanning: A new style of protein science. Nat. Methods 2014, 11, 801–807.

- Reetz, M.T.; Carballeira, J. Iterative saturation mutagenesis (ISM) for rapid directed evolution of functional enzymes. Nat. Protoc. 2007, 2, 891–903.

- Bornscheuer, U.; Peds, S. Thermostabilization of an esterase by alignment-guided focussed directed evolution. Protein Eng. Des. Sel. 2010, 23, 903–909.

- Miller, S.M.; Wang, T.; Liu, D. Phage-assisted continuous and non-continuous evolution. Nat. Protoc. 2020, 15, 4101–4127.

- Esvelt, K.; Carlson, J.C.; Liu, D.R. A system for the continuous directed evolution of biomolecules. Nature 2011, 472, 499–503.

- Cheng, P.; Mao, C.; Tang, J.; Yang, S.; Cheng, Y.; Wang, W.; Gu, Q.; Han, W.; Chen, H.; Li, S. Zero-shot prediction of mutation effects with multimodal deep representation learning guides protein engineering. Cell Res. 2024, 34, 630–647.

- Bradley, P.; Misura, K.M.S.; Baker, D.J.S. Toward High-Resolution de Novo Structure Prediction for Small Proteins. Science 2005, 309, 1868–1871.

- Guerois, R.; Nielsen, J.E.; Serrano, L. Predicting changes in the stability of proteins and protein complexes: A study of more than 1000 mutations. J. Mol. Biol. 2002, 320, 369–387.

- Kellogg, E.H.; Leaver-Fay, A.; Baker, D. Role of conformational sampling in computing mutation-induced changes in protein structure and stability. Proteins 2011, 79, 830–838.

- Yu, H.; Yan, Y.; Zhang, C.; Dalby, P. Two strategies to engineer flexible loops for improved enzyme thermostability. Sci. Rep. 2017, 7, 41212.

- Peccati, F.; Alunno-Rufini, S.; Jiménez-Osés, G. Accurate prediction of enzyme thermostabilization with Rosetta using AlphaFold ensembles. J. Chem. Inf. Model. 2023, 63, 898–909.

- Shringari, S.R.; Giannakoulias, S.; Ferrie, J.J.; Petersson, E. Rosetta custom score functions accurately predict ΔΔG of mutations at protein–protein interfaces using machine learning. Chem. Commun. 2020, 56, 6774–6777.

- Zhou, B.; Zheng, L.; Wu, B.; Tan, Y.; Lv, O.; Yi, K.; Fan, G.; Hong, L. Protein engineering with lightweight graph denoising neural networks. Mach. Learn. Deep. Learn. 2024, 64, 3650–3661.

- Bryant, P.; Pozzati, G.; Zhu, W.; Shenoy, A.; Kundrotas, P.; Elofsson, A. Predicting the structure of large protein complexes using AlphaFold and Monte Carlo tree search. Nat. Commun. 2022, 13, 6028.

- Wu, F.; Jin, S.; Jiang, Y.; Jin, X.; Tang, B.; Niu, Z.; Liu, X.; Zhang, Q.; Zeng, X.; Li, S.Z. Pre-Training of Equivariant Graph Matching Networks with Conformation Flexibility for Drug Binding. Adv. Sci. 2022, 9, 2203796.

- Cheng, K.; Liu, C.; Su, Q.; Wang, J.; Zhang, L.; Tang, Y.; Yao, Y.; Zhu, S.; Qi, Y. 4D diffusion for dynamic protein structure prediction with reference and motion guidance. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; pp. 93–101.

- Kryshtafovych, A.; Montelione, G.T.; Rigden, D.J.; Mesdaghi, S.; Karaca, E.; Moult, J. Breaking the conformational ensemble barrier: Ensemble structure modeling challenges in CASP15. Proteins Struct. Funct. Bioinform. 2023, 91, 1903–1911.

- Wayment-Steele, H.K.; Ojoawo, A.; Otten, R.; Apitz, J.M.; Pitsawong, W.; Hömberger, M.; Ovchinnikov, S.; Colwell, L.; Kern, D. Predicting multiple conformations via sequence clustering and AlphaFold2. Nature 2024, 625, 832–839.

- Jing, B.; Berger, B.; Jaakkola, T. AlphaFold meets flow matching for generating protein ensembles. arXiv 2024, arXiv:2402.04845.

- Barhoon, M.; Mahdiuni, H. Exploring Protein–Protein Docking Tools: Comprehensive Insights into Traditional and Deep-Learning Approaches. J. Chem. Inf. Model. 2025, 65, 6446–6469.

- Schafer, J.W.; Lee, M.; Chakravarty, D.; Thole, J.F.; Chen, E.A.; Porter, L.L. Sequence clustering confounds AlphaFold2. Nature 2025, 638, E8–E12.

- Praetorius, F.; Leung, P.J.; Tessmer, M.H.; Broerman, A.; Demakis, C.; Dishman, A.F.; Pillai, A.; Idris, A.; Juergens, D.; Dauparas, J. Design of stimulus-responsive two-state hinge proteins. Science 2023, 381, 754–760.

- Garzon, D.; Akbari, O.; Bilodeau, C. PepMNet: A Hybrid Deep Learning Model for Predicting Peptide Properties Using Hierarchical Graph Representations. Mol. Syst. Des. Eng. 2024, 10, 205–218.

- Fang, J. A critical review of five machine learning-based algorithms for predicting protein stability changes upon mutation. Brief. Bioinform. 2020, 21, 1285–1292.

- Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting black-box models: A review on explainable artificial intelligence. Cogn. Comput. 2024, 16, 45–74.

- Von Eschenbach, W.J. Transparency and the black box problem: Why we do not trust AI. Philos. Technol. 2021, 34, 1607–1622.

- Wellawatte, G.P.; Gandhi, H.A.; Seshadri, A.; White, A.D. A perspective on explanations of molecular prediction models. J. Chem. Theory Comput. 2023, 19, 2149–2160.

- Novakovsky, G.; Dexter, N.; Libbrecht, M.W.; Wasserman, W.W.; Mostafavi, S. Obtaining genetics insights from deep learning via explainable artificial intelligence. Nat. Rev. Genet. 2023, 24, 125–137.

- White, M.; Haddad, I.; Osborne, C.; Liu, X.-Y.Y.; Abdelmonsef, A.; Varghese, S.; Hors, A.L. The model openness framework: Promoting completeness and openness for reproducibility, transparency, and usability in artificial intelligence. arXiv 2024, arXiv:2403.13784.

- Bernett, J.; Blumenthal, D.B.; List, M. Cracking the black box of deep sequence-based protein–protein interaction prediction. Brief. Bioinform. 2024, 25, bbae076.

- Joeres, R.; Blumenthal, D.B.; Kalinina, O.V. Data splitting to avoid information leakage with DataSAIL. Nat. Commun. 2025, 16, 3337.

- Greenman, K.P.; Amini, A.P.; Yang, K.K. Benchmarking uncertainty quantification for protein engineering. PLoS Comput. Biol. 2025, 21, e1012639.

- Dallago, C.; Mou, J.; Johnston, K.E.; Wittmann, B.J.; Bhattacharya, N.; Goldman, S.; Madani, A.; Yang, K.K. FLIP: Benchmark tasks in fitness landscape inference for proteins. bioRxiv 2021.

- Wang, C.; Zhong, B.; Zhang, Z.; Chaudhary, N.; Misra, S.; Tang, J. PDB-Struct: A comprehensive benchmark for structure-based protein design. arXiv 2023, arXiv:2312.00080.

- Castorina, L.V.; Petrenas, R.; Subr, K.; Wood, C.W. PDBench: Evaluating computational methods for protein-sequence design. Bioinformatics 2023, 39, btad027.

- Armer, C.; Kane, H.; Cortade, D.L.; Redestig, H.; Estell, D.A.; Yusuf, A.; Rollins, N.; Spinner, A.; Marks, D.; Brunette, T. Results of the protein engineering tournament: An open science benchmark for protein modeling and design. Proteins Struct. Funct. Bioinform. 2024.

- Rocklin, G.J.; Chidyausiku, T.M.; Goreshnik, I.; Ford, A.; Houliston, S.; Lemak, A.; Carter, L.; Ravichandran, R.; Mulligan, V.K.; Chevalier, A. Global analysis of protein folding using massively parallel design, synthesis, and testing. Science 2017, 357, 168–175.

- Qian, J.; Milles, L.F.; Wicky, B.I.; Motmaen, A.; Li, X.; Kibler, R.D.; Stewart, L.; Baker, D. Accelerating protein design by scaling experimental characterization. bioRxiv 2025.

| Database | Data Type | Data Size |
|---|---|---|
| UniProt | Protein function annotation, domain and evolutionary information | More than 240 million sequences |
| BioLiP2 | Protein-ligand interactions | More than 900,000 entries |
| BRENDA | EC numbers, kinetic parameters (kcat/Km), and mutation effect data | More than 8600 enzymes |
| AlphaFold | Protein structure prediction | More than 210 million protein sequences |
| PDB | Experimental analysis and prediction of protein structures | More than 230,000 protein structures |
| ProTherm | Protein thermal stability parameters | More than 7000 types of mutation data |
| FireProt | Thermodynamic parameters of protein mutants | More than 13,000 mutation entries |
| M-CSA | Enzyme catalytic mechanisms and sites | More than 650 detailed mechanisms |
| SoluProtMut | Protein solubility data | More than 17,000 types of mutation data |
| PhosphoSitePlus | Relationships between phosphorylation/ubiquitination sites and function | More than 58,000 protein items |
| SCOP2 | Classification of protein structures | More than 860,000 entries |
| PROSITE | Protein domains, families and functional characteristics | More than 1900 entries |
| D3DistalMutation | Effects of non-active-site (distal) mutations on enzyme activity | More than 7000 mutations |
| STITCH | Visualization of protein-ligand interaction networks and metabolic pathways | More than 70,000 compounds |
| ProtaBank | Repository of protein engineering sequence–function data (mutational scans, kinetics, stability, DMS) | More than 1.8 million unique variants and 7.7 million data points |
| Protein Design Archive | Manually curated database of designed protein sequences and structures | More than 1500 designed protein structures |
| SKEMPI 2.0 | Experimentally measured ΔΔG values for protein–protein interfaces | More than 20,000 variants |
| MaveDB | Multiplexed assays of variant effect (MAVE/DMS) with variant-effect measurements and metadata | Millions of measurements; thousands of datasets |
| FireProtDB | Manually curated protein stability data for single-point mutants (ΔΔG, Tm) with experimental context | Tens of thousands of mutations |
| ThermoMutDB | Thermodynamic parameters for missense mutations (ΔG, ΔΔG, Tm) with experimental details | More than 10,000 experimental entries |
| BindingDB | Protein–small-molecule binding affinities (Kd/Ki/IC50) with experimental conditions | Millions of binding measurements |
| PDBbind | Protein–ligand/protein–protein complexes annotated with measured binding affinities | Tens of thousands of complexes |
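Several of the databases above report ΔG and ΔΔG values for mutants. As a minimal sketch, assuming the convention ΔΔG = ΔG(mutant) − ΔG(wild type) with folding free energies in kcal/mol (so that a negative ΔΔG indicates stabilization), such records could be labeled as follows. Sign conventions differ between resources and should be checked against each database's documentation; the record fields, the 0.5 kcal/mol tolerance, and the example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StabilityRecord:
    mutation: str        # hypothetical label, e.g. "A123V"
    dg_wild_type: float  # folding free energy of the wild type, kcal/mol
    dg_mutant: float     # folding free energy of the mutant, kcal/mol


def ddg(record: StabilityRecord) -> float:
    """ΔΔG under the assumed convention: ΔG(mutant) - ΔG(wild type)."""
    return record.dg_mutant - record.dg_wild_type


def classify(record: StabilityRecord, tolerance: float = 0.5) -> str:
    """Label a mutation; |ΔΔG| within the tolerance is treated as neutral."""
    value = ddg(record)
    if value < -tolerance:
        return "stabilizing"
    if value > tolerance:
        return "destabilizing"
    return "neutral"


# Hypothetical example records, not taken from any database.
records = [
    StabilityRecord("A123V", dg_wild_type=-5.0, dg_mutant=-6.2),
    StabilityRecord("G45D", dg_wild_type=-5.0, dg_mutant=-3.0),
    StabilityRecord("S78T", dg_wild_type=-5.0, dg_mutant=-5.2),
]
for rec in records:
    print(f"{rec.mutation}: ddG = {ddg(rec):+.1f} kcal/mol ({classify(rec)})")
```

Fixing the sign convention in one place like this makes it easier to merge stability data from sources that report ΔΔG with opposite signs.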

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jin, S.; Wu, Q.; Fu, G.; Lu, D.; Wang, F.; Deng, L.; Nie, K. Breaking Evolution’s Ceiling: AI-Powered Protein Engineering. Catalysts 2025, 15, 842. https://doi.org/10.3390/catal15090842
