Abstract

We develop a model of cheap talk with transparent and monotone motives from a seller to an informed buyer. By transparent and monotone motives, we mean that the seller’s preference does not depend on the state of the world and is increasing in the choice(s) of the buyer regardless of the state of the world. We first show that if the buyer is completely uninformed, only the babbling equilibrium exists. Our main result is that, unless the buyer has too much information, full revelation can be supported by the buyer’s crosschecking strategy even if the buyer has only the slightest information, if and only if the seller has a CARA (constant absolute risk aversion) utility function. In this equilibrium, the buyer punishes a seller who sends a message far above the buyer’s information by ignoring the seller’s message. Paradoxically, both no information and too much information on the buyer’s part eliminate the fully revealing equilibrium with the crosschecking strategy. We also obtain the counterintuitive result that the seller prefers a more informed buyer to a less informed one.

Keywords:

cheap talk; crosschecking strategy; fully revealing equilibrium; informed receiver; monotone motive; tolerance in deviation; transparent motive

JEL Classification:

C7; D8

1. Introduction

People often obtain advice from experts. Lawyers give legal advice to clients. Patients obtain medical advice from doctors. Mechanics recommend repair services to customers. Academic advisers give their opinions on students’ theses. In these cases, advisees are often not completely ignorant of the subject, although they are less informed than the experts.

However, in the literature on cheap talk games that follows Crawford and Sobel (1982) [1], abbreviated as CS hereafter, the feature that the receiver (she) is also partially informed has been largely neglected1. If the receiver is informed to some extent, the message of the sender (he) will naturally be affected by the extent to which the receiver is informed. A natural question, then, is whether the receiver’s information can discipline the sender’s message. How different are a mechanic’s recommendations to a complete novice and to a customer with some expertise? Do mechanics make more honest recommendations to knowledgeable customers? Are they more fraudulent with novices? Does an academic adviser write a recommendation letter for a student differently when the reader knows the student a little than when the reader has no prior information about the student at all? Will a professor who has some information about the student interpret the same recommendation letter differently? Are lawyers more honest with professional clients in their legal services? In general, does an advisee’s partial information always make the expert’s recommendation credible, and does it make the expert worse off? If so, by how much?

As an example, borrowed from Chakraborty and Harbaugh (2010) [7] and used as the main scenario in our paper, consider a salesperson who sells a product. If salespeople are paid based on their sales performance, they have no proper incentive to be honest about the quality of the product. Such an incentive to exaggerate the quality may be disciplined if the consumer is partially informed about the quality themselves. In fact, many firms hire marketing experts who use deceitful marketing practices to sell their products, and consumers’ lack of information often tricks them into purchasing products that turn out to be unsatisfactory. A consumer who lacks the relevant information about a product may mistakenly purchase it, whereas proper information about the product may protect them from being deceived into purchasing an unnecessary amount of goods.

In this example, the seller’s utility increases with the quantity purchased by the buyer regardless of the quality of the product. In other words, the seller has a transparent motive in the sense that their preference does not depend on their information about the quality, and a monotone motive in the sense that they always prefer the buyer to purchase more2. There was a widely held conjecture that if the sender has transparent motives, credible communication is impossible and only the babbling equilibrium exists, since the interests of the sender and the receiver conflict too much. However, this conjecture was shown to be false by Seidmann (1990) [2] when the receiver has some private information and their action is one-dimensional, and by Chakraborty and Harbaugh (2010) [7] when the sender’s private information is multi-dimensional3.

Also consider the example of a mechanic, which is often used in the literature on experts starting from Pitchik and Schotter (1987) [8]. In this example, an informed expert recommends a certain kind of repair (an expensive or an inexpensive one) to a consumer. Clearly, an expert need not be honest at all if the performance of the repair is unobservable to the consumer. However, there may be several devices that discipline fraud. Pitchik and Schotter (1987) [8] considered second opinions obtained by search, and Wolinsky (1993) [9] considered the expert’s reputation. In this paper, we consider the consumer’s expertise as another disciplining device. Moreover, in the model of Pitchik and Schotter (1987) [8], an expert’s recommendation is not cheap talk but a binding option, such as a repair price. Our paper differs from theirs in that we are mainly interested in the payoff-irrelevant message of an expert.

In Crawford and Sobel (1982) [1], the preferences of the sender and the receiver are single-peaked. For example, consider the sender’s and the receiver’s utility functions $U^S(a, \theta) = -(a - \theta - \delta)^2$ and $U^R(a, \theta) = -(a - \theta)^2$, respectively, where a is the receiver’s payoff-relevant action, $\theta$ is the state of the world, and $\delta > 0$ is the conflict of interest between them. If we take two actions a and $a'$ such that $a < a'$, it is easy to see that both the sender and the receiver prefer a to $a'$ for $\theta < (a + a')/2 - \delta$, whereas both prefer $a'$ to a for $\theta > (a + a')/2$, implying that there is some common interest. Owing to this common interest, the message inducing a and the one inducing $a'$ can contain some truthful information about $\theta$. In the situation we consider in this paper, however, the preference of the sender (seller) is not single-peaked. The seller always prefers $a'$ to a for any pair of actions $a < a'$, although the receiver (buyer) may prefer a to $a'$ if $\theta$ is small and vice versa if $\theta$ is large. Thus, the communication mechanism of Crawford and Sobel (1982) [1] does not work in this setting. However, if the informed buyer has an outside option of buying from other sellers instead of this seller, that option can make credible the buyer’s threat to penalize an extremely high message from the seller, thereby dissuading the seller from inflating their message.
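The common-interest argument can be checked with a minimal Python sketch. It assumes the uniform-quadratic payoffs above, with the receiver’s ideal action at $\theta$ and the sender’s at $\theta + \delta$, and contrasts them with a seller whose utility is simply increasing in the action. The helper names (`prefers_higher`, `cs_preferences`, `monotone_seller_prefers_higher`) are hypothetical and chosen for illustration.

```python
def prefers_higher(a_low, a_high, ideal):
    """True if a player with quadratic loss around `ideal` strictly prefers a_high."""
    return abs(a_high - ideal) < abs(a_low - ideal)


def cs_preferences(a_low, a_high, theta, delta):
    """(sender prefers a_high, receiver prefers a_high) in the CS example.

    The receiver's ideal action is theta; the sender's is theta + delta.
    """
    return (prefers_higher(a_low, a_high, theta + delta),
            prefers_higher(a_low, a_high, theta))


def monotone_seller_prefers_higher(a_low, a_high, u=lambda a: a):
    """A seller with transparent, monotone motives: any increasing u, any theta."""
    return u(a_high) > u(a_low)


for theta in (0.2, 0.45, 0.9):
    print(theta, cs_preferences(0.0, 1.0, theta, delta=0.1),
          monotone_seller_prefers_higher(0.0, 1.0))
```

With $a = 0$, $a' = 1$, and $\delta = 0.1$, both players prefer a at $\theta = 0.2$ and $a'$ at $\theta = 0.9$, while they disagree at $\theta = 0.45$; the monotone seller prefers $a'$ in every case.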

Following the spirit of Chakraborty and Harbaugh (2010) [7], we consider a seller (sender) and a buyer (receiver) and address the question of whether a better-informed buyer prompts the seller to communicate truthfully when the seller has transparent and monotone motives.

We mainly argue that there is a truth-revealing communicative equilibrium in a fairly general setting with an unbounded state space. The main difficulty in our setup is that normally distributed noise with full support makes off-the-equilibrium messages vanish completely. Even if a message is very high or very far from the buyer’s information, it is a possible event, although its likelihood is very low. So, the buyer cannot infer that it results from the seller’s lying. This makes it difficult to penalize a seller who sends a message higher than the true value strongly enough. However, as long as the buyer has a two-dimensional action space (for example, if they can buy the product either from the seller or from other sellers), they can use one action (which is relevant to the seller’s utility) to discipline the seller’s message and choose the other (which is irrelevant to the seller’s utility) as their best response to the seller’s message based on the consistent posterior belief4.

To support the truth-revealing outcome as an equilibrium, we use a specific form of the buyer’s strategy, which we call a “crosschecking strategy”. Under a crosschecking strategy, the buyer responds to the seller’s message based on the belief that the message is true only if it is congruent enough with the buyer’s own information, in the sense that it falls within the normal (confidence) range, i.e., it is not too far above the buyer’s information, and that the message is exaggerated if it falls in the punishment range, i.e., it is much higher than the buyer’s information. Under the crosschecking strategy, the seller always sends a truthful message, but in equilibrium the message can fall either in the normal region or in the punishment region. In the former case, the buyer buys the amount corresponding to the seller’s message from the seller, while in the latter case, they buy a smaller amount than the seller’s message from the seller and the rest (the difference between the seller’s message and the amount purchased from the seller) from other sellers. We call the minimum distance between the buyer’s information and the punishment range the tolerance in deviation.

Our recourse to crosschecking strategies is consistent with casual observation. People often ignore the opinions of experts when those opinions strongly contradict what they believe, in particular if the experts have a strong motive to push in a certain direction5. A non-expert tends to use their own information to judge whether the expert is deceiving them, and to decide whether to resort to a second source by exercising an outside option if one is available6.

We first show that, unless both the seller’s and the buyer’s information are noiseless, the crosschecking strategy cannot induce full revelation if the seller’s utility function is linear in the buyer’s choice, since the penalty from inflating the message is then not severe enough. We next show that if the seller’s utility function is strictly concave, full honesty of the seller can be achieved with the crosschecking strategy even if the buyer has only the slightest information about the state of the world, unless that information is too precise. In this case, strict concavity of the seller’s utility function can make the penalty from inflating the message exceed the reward from it, and so it can discipline a seller who is tempted to lie. However, if the buyer is too well informed, such truthful communication may not be possible. The rough intuition is as follows. The deviation tolerance can become larger as a well-informed buyer becomes even better informed, so the seller will have an incentive to inflate their message. This surprising result, that more information on the buyer’s part may hinder effective communication and consequently hurt the buyer, runs counter to the widely held perception that better information pays off for the buyer by disciplining the seller’s message. We obtain another counterintuitive comparative static result: the seller, as well as the buyer, is better off as the buyer becomes more informed. This is mainly because a less informed buyer may bottleneck the transaction between them. Finally, we show that a CARA (constant absolute risk aversion) utility function for the seller is necessary and sufficient for the existence of a fully revealing equilibrium with the crosschecking strategy.

If the buyer has no information, or completely noisy information in the sense that its variance is infinite, clearly only the babbling equilibrium, in which no meaningful message is conveyed, exists. Interestingly, the seller’s message cannot be fully revealing in two opposite extreme cases: when the buyer has no information and when they have very precise information. In the former case, the seller can say anything because the buyer has no way to check the honesty of the message, owing to a lack of information or to useless information. In the latter case, the seller has no reason to be honest because, given the high precision of the buyer’s information, there is little possibility that the seller’s message will be unacceptable to the buyer.

There is some literature on cheap talk with transparent motives, of which Chakraborty and Harbaugh (2010) [7] and Lipnowski and Ravid (2020) [12] are the most notable examples. The term transparent motives means that the informed sender does not care about the state but only about the receiver’s action. Both papers show that cheap talk can be informative even if the sender has transparent motives. The main difference between our model and theirs is that the receiver is also (partially) informed in our model. In Chakraborty and Harbaugh (2010) [7], the informativeness of cheap talk relies on the multi-dimensionality of the state variable, which implies that the receiver cares about multiple issues rather than one; this is not assumed in our model. Lipnowski and Ravid (2020) [12] introduce the possibility of an informed receiver in an example of a salesperson selling several products, but they assume that the receiver has information not about the valuations of the products but about the valuation of their outside option. In their model, the sender’s information and the receiver’s information are independent (i.e., the receiver’s information reveals nothing meaningful about the sender’s information), so our crosschecking strategy cannot be used in their setting. The main source of the effectiveness of the crosschecking strategy in our paper is the correlation between the seller’s and the buyer’s information.

The possibility of an informed receiver in a cheap talk game has been considered by several other authors. However, this paper provides a quite different setup and a new insight. Above all, as mentioned above, we assume that the sender has transparent and monotone motives. Moreover, we consider a situation in which the support of each player’s information is unbounded under the assumption of normally distributed noise, whereas all other papers assume a finite or bounded support7. A direct consequence of the normality assumption is that there is no off-the-equilibrium message. That is, a false message can never be detected for sure, because any message is possible in equilibrium. Therefore, as long as both players’ information contains noise with unbounded support, we can neither use Lai’s (2014) [5] punishment strategy, which relies on a proper off-the-equilibrium belief arising from inconsistent supports of the sender’s message and the receiver’s information, nor have any reason to resort to Watson’s (1996) [3] matching strategy, because the sender’s message and the receiver’s information fail to match with probability one even if the sender is honest. Since there is neither an off-the-equilibrium path nor an off-the-equilibrium posterior belief in our model, the receiver cannot penalize a sender for a presumably false message. In our paper, however, the receiver can penalize the sender by a crosschecking strategy. With this strategy, the receiver chooses the action favorable to the sender if the sender’s message is not too far above their own information, and the action unfavorable to the sender if the message is too high.
Seidmann (1990) [2] also showed that cheap talk could influence the receiver’s equilibrium actions even when the sender has transparent and monotone motives if the receiver is privately informed, but did not answer the question of whether more information on the part of the receiver makes honest communication by the sender easier or more difficult. Moreover, that model is quite restrictive in the sense that the support of the players’ information is finite.

In our model, in which any message is possible in equilibrium, the crosschecking strategy can work well to discipline the sender’s incentive to inflate their information. Because the sender’s information and the receiver’s information are correlated, the difference between them gives the receiver some information about whether the sender lied. The receiver’s belief recognizes this information, and the crosschecking strategy based on that belief exploits the correlation structure between the two pieces of information. Correlation between them is essential for the outcome of full revelation. This distinguishes our paper from Seidmann’s.

Does the receiver believe that the sender lied if the sender’s message is too far above their own information? No. The receiver does believe that the sender sent a truthful message, because they know that the sender chose the equilibrium strategy of reporting truthfully, but they act strategically as if they did not believe it, ignoring the message and responding as if only their own information mattered. Otherwise, the sender would inflate their message. In other words, the receiver overrules the sender’s message not because they believe that it is likely to be wrong, but because they need to give the sender an incentive to report truthfully. This feature of the crosschecking strategy is similar to the trigger strategy of Green and Porter (1984) [16] in that a player who receives a certain signal penalizes the opponent even though they know that the opponent did not cheat, because the opponent would cheat otherwise.

The main results of Lai (2014) [5] and Ishida and Shimizu (2016) [6] share a feature with ours in that the incentive for truthful communication diminishes as the receiver becomes better informed. However, their main insight, namely that, as a result of Bayesian belief updating, the receiver’s response becomes less sensitive to the sender’s message as the receiver becomes better informed, does not apply in our model. In our model, as the receiver’s expertise becomes more precise, the possibility of truthful communication disappears discontinuously at some level of expertise. In their models, the receiver’s belief is a weighted average of their own information and the sender’s information. So, as the receiver’s information becomes more accurate, the weight on their own information increases, and they rationally respond less sensitively to the sender’s message. In our model, the receiver’s belief that directly governs the sender’s cheap talk message is not a weighted average of their information and the sender’s message.

Our results contrast sharply with Lai’s in several respects. According to Lai (2014) [5], a receiver who becomes informed can benefit only if the receiver’s information is sufficiently different from the sender’s, i.e., only if it is very useful information. In our model, reliable information can be conveyed by the sender only when the receiver’s information is sufficiently close to the sender’s. Moreover, in our model, an informed receiver is always better off than an uninformed one, whereas in Lai’s model a completely uninformed receiver may be better off than an informed one, because the sender gives an informed receiver less informative advice that yields a lower payoff. Most importantly, the results of Lai (2014) [5] and Ishida and Shimizu (2016) [6] hold only when the preferences of the sender and the receiver are sufficiently congruent, whereas our result of perfectly truthful communication holds even if their preferences conflict strongly, in the sense that the sender’s favorite action is independent of the receiver’s favorite. Another important difference is that in Lai (2014) [5], the receiver’s information does not elicit full revelation, which is not the case in our model.

The paper is organized as follows. In Section 2, we set up a cheap talk model of an informed buyer. In Section 3, we characterize the fully revealing communicative equilibrium with crosschecking strategies in the cheap talk game. In Section 4, we provide some comparative statics to examine the effect of a change in the buyer’s informativeness. Concluding remarks and some ramifications follow in Section 5. Proofs are provided in Appendix A.

2. Model

There is a seller (sender) S and a buyer (receiver) B. The state of nature $\theta$ is a random variable distributed over $\mathbb{R}$. For example, $\theta$ could be the quality of a product that a salesperson sells to a consumer. Following the Bayes–Laplace principle of indifference (insufficient reason)8, we assume that $\theta$ is uniformly distributed over the real line9, i.e., players have no information about $\theta$ a priori. Although neither the seller nor the buyer knows the exact value of $\theta$, both receive a noisy signal on the state of nature, $x_i = \theta + \varepsilon_i$ for $i = S, B$, where $\varepsilon_i$ is stochastically independent of $\theta$ and $\varepsilon_S$ and $\varepsilon_B$ are independent of each other. It is important to note that B is also partially informed. We assume that $\varepsilon_i$ follows a normal distribution10 with mean zero and variance $\sigma_i^2$, i.e., $\varepsilon_i \sim N(0, \sigma_i^2)$, and that the seller’s signal is more precise11, i.e., $\sigma_S^2 < \sigma_B^2$. This inequality reflects the feature that the seller has greater expertise about $\theta$ than the buyer.
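A quick simulation can illustrate this signal structure. The Python sketch below is illustrative only: a uniform distribution over the whole real line cannot be sampled, so it draws $\theta$ from a wide bounded interval as a stand-in, and the parameter values and the helper `draw_signals` are hypothetical. Because $\varepsilon_B - \varepsilon_S$ does not involve $\theta$, the gap $x_B - x_S$ should have mean zero and variance $\sigma_S^2 + \sigma_B^2$.

```python
import random
import statistics


def draw_signals(rng, sigma_s, sigma_b, theta_range=1_000.0):
    """One draw of (theta, x_S, x_B) with x_i = theta + eps_i, eps_i ~ N(0, sigma_i^2).

    The flat prior on the real line cannot be sampled, so theta is drawn
    from a wide uniform interval as a stand-in (an assumption of this sketch).
    """
    theta = rng.uniform(-theta_range, theta_range)
    x_s = theta + rng.gauss(0.0, sigma_s)
    x_b = theta + rng.gauss(0.0, sigma_b)
    return theta, x_s, x_b


rng = random.Random(2024)
sigma_s, sigma_b = 1.0, 2.0  # the seller is the better-informed party
draws = [draw_signals(rng, sigma_s, sigma_b) for _ in range(100_000)]
gaps = [x_b - x_s for _, x_s, x_b in draws]

# eps_B - eps_S does not depend on theta, so the gap should have mean near 0
# and variance near sigma_S^2 + sigma_B^2 = 5 for these parameters.
print(statistics.fmean(gaps), statistics.variance(gaps))
```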

The game proceeds as follows. First, the state of nature $\theta$ is realized, and then the seller and the buyer receive private signals $x_S$ and $x_B$, respectively, without observing $\theta$. After observing the private information $x_S$, S sends a payoff-irrelevant message (cheap talk) $m \in \mathbb{R}$ to B12. Then, on receiving a message m, B updates their posterior belief about $x_S$, $\mu(x_S \mid m, x_B)$, and forms their belief about $\theta$, $b(\theta \mid m, x_B)$, by using m and $x_B$. Based on this belief, they choose $a_S$ and $a_O$. The buyer’s payoff depends directly on $a = a_S + a_O$, while the seller’s payoff depends only on $a_S$. Thus, we call $a_S$ S’s payoff-relevant action and $a_O$ S’s payoff-irrelevant action. For example, when a realtor gives some information about real estate prospects in some area, the buyer may buy a house that the realtor recommends ($a_S$) or some other house in the area ($a_O$). Note that $a_S$ and $a_O$ are perfect substitutes from the buyer’s point of view, whereas they are not from the seller’s point of view. Also, note that both $a_S$ and $a_O$ are chosen from an unbounded set.

The payoff to S is given by a continuously differentiable function $u(a_S)$, and the payoff to B is given by a twice continuously differentiable function $v(a, \theta)$. Throughout the paper, we assume (A1) $u'(a_S) > 0$ and $u''(a_S) \le 0$, where $u' \equiv du/da_S$ and $u'' \equiv d^2u/da_S^2$, i.e., u is increasing and concave in $a_S$, and (A2) $\partial^2 v/\partial a^2 < 0$ and $\partial^2 v/\partial a\,\partial\theta > 0$. The buyer’s utility function thus has a unique maximum in a for all $\theta$, and the maximizer of $v(a, \theta)$, denoted by $a^*(\theta)$, is strictly increasing in $\theta$. The independence of the seller’s utility function from $\theta$ means that S has transparent motives, and the fact that it is increasing in $a_S$ means that S has monotone motives. A typical example of transparent and monotone motives is the preference of a salesperson who is paid based on the quantity they sell. One possible interpretation of $a_S$, $a_O$, and a is that a is the total amount of a consumer’s purchases, while $a_S$ and $a_O$ are the amounts purchased from the salesperson and from other sellers, respectively. Then, the salesperson’s utility increases with the consumer’s purchases from them regardless of $\theta$. The monotonic increase of $a^*(\theta)$ in $\theta$ means that the buyer wants to buy more units when $\theta$, which can be interpreted as quality, is high. For example, an exponential utility function $u(a_S) = -e^{-r a_S}$ (for $r > 0$), or any positive affine transformation of it, which is called a CARA (constant absolute risk aversion) utility function, satisfies assumption (A1), because $u'(a_S) = r e^{-r a_S} > 0$ and $u''(a_S) = -r^2 e^{-r a_S} < 0$.

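A strategy for S specifies a signaling rule given by a continuous function $m: \mathbb{R} \to \mathbb{R}$. A strategy for B is an action rule given by a function $\alpha = (\alpha_S, \alpha_O): \mathbb{R}^2 \to \mathbb{R}^2$ that determines $a_S$ and $a_O$. The equilibrium concept we employ is weak Perfect Bayesian equilibrium (wPBE). An equilibrium of this game consists of a signaling rule $m(x_S)$ for S, an action rule $\alpha(m, x_B)$ for B, and a system of beliefs $(\mu, b)$, where $\mu(\cdot \mid m, x_B)$ and $b(\cdot \mid m, x_B)$ are B’s beliefs about $x_S$ and $\theta$, respectively, such that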

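- (2-I) $m(x_S) \in \arg\max_{m'} \int u(\alpha_S(m', x_B))\, f(x_B \mid x_S)\, dx_B$, where $f(x_B \mid x_S)$ is the conditional density function of $x_B$ given $x_S$,

- (2-II) B’s posterior beliefs $\mu(x_S \mid m, x_B)$ and $b(\theta \mid m, x_B)$ are consistent with Bayes’ rule on the equilibrium path,

- (2-III) $\alpha(m, x_B) \in \arg\max_{(a_S, a_O)} \int v(a, \theta)\, b(\theta \mid m, x_B)\, d\theta$, where $a = a_S + a_O$.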

Conditions (2-I) and (2-III) are sequential rationality conditions, and (2-II) is the consistency condition. Henceforth, we simply write $f(x_B)$ for the density function conditional on $x_S$ and $F(x_B)$ for the corresponding distribution function, suppressing $x_S$. For the time being, we restrict our attention to B’s choice of $a_S$ (not of a), since our primary concern is the truth-telling incentive of the seller, whose utility depends only on $a_S$.

Before we characterize equilibria, we will adapt some standard definitions often used in the literature.

Definition 1.

A message m induces an action $a_S$ of the buyer with $x_B$ if $a_S = \alpha_S(m, x_B)$.

Definition 2.

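An equilibrium is communicative (or influential) for $a_S$ if there exist two different observations $x_S$ and $x_S'$ such that $a_S = \alpha_S(m(x_S), x_B)$ and $a_S' = \alpha_S(m(x_S'), x_B)$ for some $x_B$, where $x_S \neq x_S'$ and $a_S \neq a_S'$. Otherwise, an equilibrium is uncommunicative (or babbling) for $a_S$.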

In other words, if two different messages sent by two different types of seller induce two different payoff-relevant actions of S ($a_S \neq a_S'$) by a buyer with some information $x_B$, the equilibrium is communicative (or influential) for $a_S$, in the sense that some meaningful message that can affect the buyer’s choice of $a_S$ is conveyed by cheap talk in equilibrium13. Henceforth, we call “communicative for $a_S$” simply “communicative” for brevity.

Definition 3.

A communicative equilibrium is fully revealing if $m(x_S) \neq m(x_S')$ for any $x_S$ and $x_S'$ such that $x_S \neq x_S'$. In particular, if $m(x_S) = x_S$ for all $x_S$, a fully revealing equilibrium is called a truth-revealing equilibrium14.

Observe that, insofar as the buyer has no information, the seller’s message cannot be credible at all in this model with transparent and monotone motives. In the CS model, the payoff function of S, as well as that of B, is single-peaked, so that, given $\theta$, the favorite actions of S and B do not differ very much even if they do differ. This implies that, for some low value of $\theta$, both S and B prefer one action to another, while the reverse is true for some other, high value of $\theta$. In other words, there is room for coordination between S and B, and cheap talk in effect enables such coordination by conveying whether $\theta$ is high or low. In our model, however, the assumption of single-peaked preferences is violated, and all types of S prefer a higher level of the buyer’s action $a_S$. Thus, regardless of type, S would like to pretend to have observed as high an $x_S$ as possible in order to induce B’s highest possible action.

We summarize this observation as follows.

Proposition 1.

If B has no information about θ, there exists no communicative equilibrium.

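The proof is straightforward. Suppose that, for some distinct $x_S$ and $x_S'$, $m = m(x_S)$ and $m' = m(x_S')$ with $a_S = \alpha_S(m)$, $a_S' = \alpha_S(m')$, and $a_S \neq a_S'$ in a communicative equilibrium. If $a_S < a_S'$, S with information $x_S$ would prefer sending $m'$ to m, since $u(a_S') > u(a_S)$. If $a_S > a_S'$, S with information $x_S'$ would choose m instead of $m'$, since $u(a_S) > u(a_S')$. Either case violates the definition of an equilibrium.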

However, if B has some information about $\theta$, this argument breaks down. Suppose that m induces $a_S$ and $m'$ induces $a_S'$ with $a_S < a_S'$ for some $x_B$. We still cannot conclude that S will prefer sending $m'$ to m, because $m'$ could induce a lower level of action for some other $x_B$.

In the next section, we formally analyze cheap talk to an informed buyer.

3. Informed Buyer

As is typical in cheap talk games, even if the buyer has some noisy information about $\theta$, a babbling equilibrium for $a_S$ exists, in which the seller sends any message regardless of their information and the buyer ignores whatever the seller says. Henceforth, we are mainly interested in characterizing communicative equilibria that are fully revealing.

For our purpose of finding a fully revealing communicative equilibrium, the following specific strategy profile is useful, although it is not itself an equilibrium. The actual equilibrium strategy profile is given later in (4-I), (4-II), and (4-III):

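- (3-I) The seller with $x_S$ announces $m = x_S$.

- (3-II) The buyer believes that $x_S = m$ if $m \le x_B + k$, and that $x_S = x_B$ if $m > x_B + k$, for some $k > 0$.

- (3-III) The buyer buys the amount $a_S = m$ from the seller if $m \le x_B + k$ and $a_S = x_B$ if $m > x_B + k$, and the remaining amount $a_O = m - a_S$ from other sellers.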
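One way to read the purchasing rule in (3-III) as code is the minimal Python sketch below. It assumes that in the normal region the buyer buys the amount m named in the message from the seller, and that in the punishment region the buyer buys only $x_B$ from the seller and the difference $m - x_B$ from other sellers; the function name `crosscheck_purchase` is hypothetical.

```python
def crosscheck_purchase(m, x_b, k):
    """Return (a_S, a_O): purchases from the seller and from other sellers.

    Normal region (m <= x_b + k): trust the message and buy m from the seller.
    Punishment region (m > x_b + k): buy only x_b from the seller and the
    rest, m - x_b, from other sellers.
    """
    if m <= x_b + k:
        return m, 0.0
    return x_b, m - x_b


print(crosscheck_purchase(1.0, 0.5, k=1.0))  # message within the tolerance
print(crosscheck_purchase(3.0, 0.5, k=1.0))  # message far above x_B
```

The tolerance k is the only free parameter of the rule; the boundary case $m = x_B + k$ is assigned to the normal region, matching the weak inequality in (3-II).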

The buyer’s purchasing rule (3-III), together with the belief (3-II), will be called a “crosschecking strategy”, and k will be called the tolerance in deviation between m and $x_B$. Under this rule, the buyer punishes the seller by ignoring the seller’s message if it is too far above the buyer’s own information15.

If this were an equilibrium, there would be no off-the-equilibrium message, because any message can occur even if the seller reports truthfully, as long as $\varepsilon_i$ follows a normal distribution over $\mathbb{R}$. Therefore, the beliefs in (3-II) do not satisfy consistency, because consistent beliefs must follow Bayes’ rule using both m and $x_B$ for every m (on any equilibrium path). Nonetheless, this strategy profile gives useful insight for characterizing a wPBE.

3.1. Sequential Rationality

Setting consistency aside, we can check the sequential rationality of the strategies in (3-I) and (3-III). Since (3-III) is clearly an optimal decision of the buyer given the seller’s truth-revealing strategy and the belief in (3-II), it is enough to focus on the seller’s optimal decision. Given that $a_S = m$ if $x_B \ge m - k$ and $a_S = x_B$ if $x_B < m - k$, the seller maximizes

$$U(m) = \int_{-\infty}^{m-k} u(x_B)\, f(x_B)\, dx_B + \int_{m-k}^{\infty} u(m)\, f(x_B)\, dx_B. \qquad (1)$$

The economic reasoning behind this formula is as follows. The first term represents the punishment that the seller receives when $x_B$ is very low ($x_B < m - k$). The second term is their utility when $x_B$ falls in the normal confidence region ($x_B \ge m - k$). Thus, the effect of inflating the message on the seller’s utility is

$$U'(m) = u'(m)\,[1 - F(m - k)] - [u(m) - u(m - k)]\, f(m - k). \qquad (2)$$

The first term is the utility gain in normal cases due to the inflated announcement, and the second term is the loss that the seller expects to bear from being punished when increasing the announcement marginally.

Truthful revelation requires that $U'(x_S) = 0$, i.e., the marginal gain equals the marginal loss. This implies that

$$u'(x_S)\,[1 - F(x_S - k)] = [u(x_S) - u(x_S - k)]\, f(x_S - k). \qquad (3)$$
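The seller’s problem can be checked numerically. The Python sketch below assumes, for concreteness, the CARA case $u(a_S) = -e^{-a_S}$ and $x_B \mid x_S \sim N(x_S, \sigma^2)$ with $\sigma^2 = \sigma_S^2 + \sigma_B^2$, and it takes the seller to receive $u(m)$ when $x_B \ge m - k$ and $u(x_B)$ otherwise. Under these assumptions the truncated expectation of $-e^{-x_B}$ has a closed form, so a central finite difference of the expected payoff should match the marginal effect $u'(m)[1 - F(m - k)] - [u(m) - u(m - k)]f(m - k)$. The function names are hypothetical.

```python
import math
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution: PHI.pdf, PHI.cdf


def u(a):
    """CARA utility u(a) = -exp(-a)."""
    return -math.exp(-a)


def expected_payoff(m, x_s, k, sigma):
    """Seller's expected utility from announcing m when x_B | x_S ~ N(x_S, sigma^2).

    The seller gets u(x_B) if x_B < m - k (punishment) and u(m) otherwise.
    Uses E[-exp(-X) 1{X <= c}] = -exp(-mu + sigma^2/2) * Phi((c - mu + sigma^2)/sigma).
    """
    c = m - k
    punished = -math.exp(-x_s + sigma**2 / 2) * PHI.cdf((c - x_s + sigma**2) / sigma)
    trusted = u(m) * (1.0 - PHI.cdf((c - x_s) / sigma))
    return punished + trusted


def marginal_payoff(m, x_s, k, sigma):
    """Direct gain u'(m)[1 - F(m - k)] minus expected loss [u(m) - u(m - k)] f(m - k)."""
    z = (m - k - x_s) / sigma
    density = PHI.pdf(z) / sigma
    survival = 1.0 - PHI.cdf(z)
    u_prime = math.exp(-m)  # derivative of -exp(-a)
    return u_prime * survival - (u(m) - u(m - k)) * density


x_s, k, sigma, h = 0.3, 0.8, 1.5, 1e-5
for m in (-1.0, x_s, 2.0):
    finite_diff = (expected_payoff(m + h, x_s, k, sigma)
                   - expected_payoff(m - h, x_s, k, sigma)) / (2 * h)
    print(m, finite_diff, marginal_payoff(m, x_s, k, sigma))
```

With $k = 0.8$ and $\sigma = 1.5$, the marginal effect at $m = x_S$ is positive, so at this tolerance the seller would gain from inflating the message.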

If u is linear in $a_S$, then $u'' = 0$. It is easy to see that there is then no $k > 0$ satisfying (3). The argument exploits $u(x_S) - u(x_S - k) = k\,u'(x_S)$, which follows from the linearity of the utility function. Given this equality, the direct utility effect measured over a large range of $x_B$, which is $u'(x_S)[1 - F(x_S - k)]$, exceeds the penalty effect measured over a small range of $x_B$ for a small k, which is $k\,u'(x_S) f(x_S - k)$.
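Under the normal specification $x_B \mid x_S \sim N(x_S, \sigma^2)$, the linear case reduces to a one-variable inequality. Writing $t = k/\sigma$ and dividing (3) by $u'$, truthful revelation would require $\Phi(t) = t\,\phi(t)$, where $\phi$ and $\Phi$ are the standard normal density and distribution functions. The gap $\Phi(t) - t\,\phi(t)$ equals $1/2$ at $t = 0$ and has derivative $t^2\phi(t) > 0$, so it never reaches zero. The Python sketch below, with a hypothetical helper `linear_gap`, simply confirms this on a grid.

```python
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution


def linear_gap(t):
    """Direct gain minus penalty in (3) for linear u, with t = k / sigma."""
    return PHI.cdf(t) - t * PHI.pdf(t)


grid = [i / 100 for i in range(1, 2001)]  # t in (0, 20]
gaps = [linear_gap(t) for t in grid]
print(min(gaps))  # stays above 1/2, so no tolerance k > 0 solves (3)
```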

Proposition 2.

If the utility function is linear in $a_S$, the truth-revealing strategy (3-I) together with the crosschecking strategy (3-III) cannot satisfy sequential rationality16.

If $u'' > 0$, there is obviously no truth-revealing equilibrium with the crosschecking strategy, because $u'' > 0$ implies $u(x_S) - u(x_S - k) < k\,u'(x_S)$, so that the gain from exaggeration exceeds the loss.

If $u'' < 0$, however, $u(x_S) - u(x_S - k) > k\,u'(x_S)$, i.e., the penalty effect of exaggerating becomes more severe, so Equation (3) may have a solution k that is independent of $x_S$. We denote the solution by $k^*$.

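To confirm that an equilibrium tolerance $k^*$ exists17, take $u(a_S) = -e^{-a_S}$, which is a special form of CARA utility function. Equation (3) can then be simplified into

$$1 - F(x_S - k) = (e^{k} - 1)\, f(x_S - k), \qquad (4)$$

or equivalently,

$$\frac{1}{e^{k} - 1} = h(x_S - k), \qquad (5)$$

where $h(\cdot) \equiv f(\cdot)/[1 - F(\cdot)]$ is the hazard rate. This equation determines the equilibrium tolerance $k^*$. Moreover, since, conditional on $x_S$, $x_B - x_S$ is distributed as $N(0, \sigma_S^2 + \sigma_B^2)$, we have

$$f(x_S - k) = \frac{1}{\sigma}\,\phi\!\left(\frac{k}{\sigma}\right), \qquad (6)$$

$$1 - F(x_S - k) = \Phi\!\left(\frac{k}{\sigma}\right), \qquad (7)$$

where $\sigma \equiv \sqrt{\sigma_S^2 + \sigma_B^2}$, and $\phi$ and $\Phi$ are the standard normal density and distribution functions, respectively. Note that neither depends on $x_S$, so $k^*$ does not, either. Substituting (6) and (7) into (4), we obtain

$$(e^{k} - 1)\,\frac{1}{\sigma}\,\phi\!\left(\frac{k}{\sigma}\right) = \Phi\!\left(\frac{k}{\sigma}\right). \qquad (8)$$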
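The tolerances can be computed numerically. The Python sketch below solves $(e^{k} - 1)\,\phi(k/\sigma)/\sigma = \Phi(k/\sigma)$, the equation obtained by substituting (6) and (7) into (4), by scanning a grid of k for sign changes and refining each bracket by bisection. The scan bound $4\sigma^2 + 10$ and the helper names are assumptions of this sketch rather than part of the model.

```python
import math
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution


def cara_gap(k, sigma):
    """Left minus right side of (e^k - 1) phi(k/sigma)/sigma = Phi(k/sigma)."""
    t = k / sigma
    return math.expm1(k) * PHI.pdf(t) / sigma - PHI.cdf(t)


def bisect(fn, lo, hi, iterations=100):
    """Bisection, assuming fn changes sign on [lo, hi]."""
    lo_positive = fn(lo) > 0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if (fn(mid) > 0) == lo_positive:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def tolerance_solutions(sigma, steps=20_000):
    """Roots k > 0 of the tolerance equation, found by scanning for sign changes.

    The scan stops at 4 sigma^2 + 10, a heuristic bound for this sketch: the
    left side decays to zero once k is well beyond 2 sigma^2.
    """
    k_max = 4 * sigma**2 + 10
    grid = [k_max * i / steps for i in range(1, steps + 1)]
    values = [cara_gap(k, sigma) for k in grid]
    roots = []
    for (k0, g0), (k1, g1) in zip(zip(grid, values), zip(grid[1:], values[1:])):
        if (g0 > 0) != (g1 > 0):
            roots.append(bisect(lambda k: cara_gap(k, sigma), k0, k1))
    return roots


for sigma in (1.0, 2.0, 3.0):
    print(sigma, tolerance_solutions(sigma))
```

For $\sigma = 1$ the list is empty, while $\sigma = 2$ and $\sigma = 3$ each yield two tolerances, consistent with the pattern described for Figure 1.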

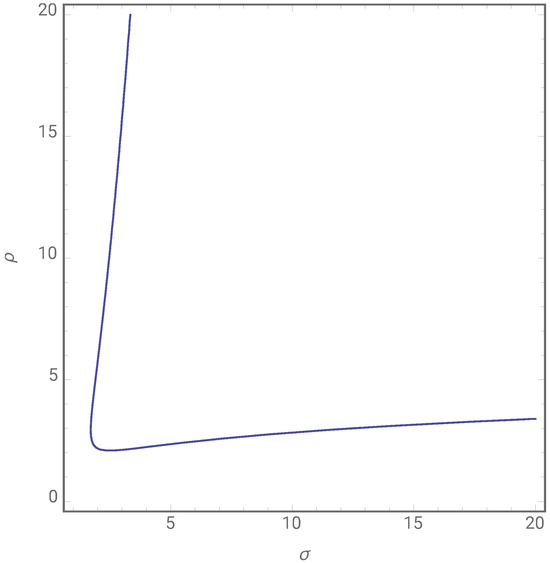

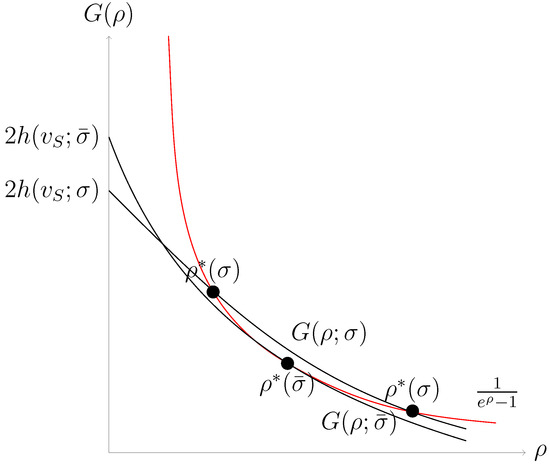

Figure 1 shows, for this particular utility function, that (i) there exists $\underline{\sigma}$ such that the first-order condition (3) is satisfied for some k whenever $\sigma \ge \underline{\sigma}$, and (ii) no such k exists for low values of $\sigma$. It also shows that there are two solutions for k except at $\sigma = \underline{\sigma}$. Note that higher values of $k^*$ change more elastically with respect to a change in $\sigma$ than lower values do. If we define the elasticity of $k^*$ with respect to $\sigma$ by $\eta \equiv (dk^*/d\sigma)(\sigma/k^*)$, we can see that $|\eta_H| > |\eta_L|$, where $\eta_H$ and $\eta_L$ are evaluated at the higher and the lower solution, respectively. Figure 2 illustrates $\underline{\sigma}$, which is the minimum $\sigma$ for sequential rationality of the fully revealing strategy and the crosschecking strategy given in (3-I)–(3-III), and above which there are two values of $k^*$: roughly speaking, an elastic one and an inelastic one.
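The threshold $\underline{\sigma}$ and the two elasticities can be approximated numerically. The Python sketch below bisects on $\sigma$ for the smallest value at which $(e^{k} - 1)\,\phi(k/\sigma)/\sigma - \Phi(k/\sigma)$ turns positive for some k, and then estimates the elasticity of each root by central differences. It assumes, as described above, that existence is monotone in $\sigma$; the brackets used for $\sigma = 3$ were picked by inspecting the sign of the gap, and all names are hypothetical.

```python
import math
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution


def cara_gap(k, sigma):
    """(e^k - 1) phi(k/sigma)/sigma - Phi(k/sigma); a zero in k is an equilibrium tolerance."""
    t = k / sigma
    return math.expm1(k) * PHI.pdf(t) / sigma - PHI.cdf(t)


def has_solution(sigma, steps=4_000):
    """True if the gap becomes positive somewhere on a grid of k values.

    The gap is negative at k = 0 and for very large k, so a positive value
    implies two sign changes, i.e. two tolerances.
    """
    k_max = 4 * sigma**2 + 10  # heuristic scan bound (assumption of this sketch)
    return any(cara_gap(k_max * i / steps, sigma) > 0 for i in range(1, steps + 1))


def sigma_threshold(lo=1.0, hi=2.0, iterations=40):
    """Bisection for the smallest sigma admitting a solution (lo fails, hi succeeds)."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if has_solution(mid):
            hi = mid
        else:
            lo = mid
    return hi


def root_in(sigma, lo, hi, iterations=100):
    """Bisection for the tolerance in [lo, hi], assuming a single sign change there."""
    lo_positive = cara_gap(lo, sigma) > 0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if (cara_gap(mid, sigma) > 0) == lo_positive:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def elasticity(sigma, lo, hi, h=0.01):
    """Central-difference elasticity (dk/dsigma)(sigma/k) of the root bracketed by [lo, hi]."""
    k = root_in(sigma, lo, hi)
    slope = (root_in(sigma + h, lo, hi) - root_in(sigma - h, lo, hi)) / (2 * h)
    return slope * sigma / k


print(sigma_threshold())
print(elasticity(3.0, 1.8, 2.6), elasticity(3.0, 14.0, 17.0))
```

Under these assumptions the threshold lies between 1.5 and 2, and at $\sigma = 3$ the higher root is elastic while the lower one is inelastic.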

Figure 1.

Optimal for various values of .

Figure 2.

Multiple Solutions for when .
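
The elasticity used in the discussion of Figure 1 can be evaluated numerically for any tolerance schedule. The sketch below assumes the standard point-elasticity definition, ε(σ) = (∂k/∂σ)(σ/k), writing k for the tolerance and σ for the standard deviation of the buyer's signal (notation assumed here). The three schedules are hypothetical and only show what "elastic" (ε > 1) and "inelastic" (ε < 1) mean in the usual sense; none of them is the equilibrium tolerance of the model.

```python
from typing import Callable


def elasticity(k: Callable[[float], float], sigma: float, h: float = 1e-6) -> float:
    """Point elasticity of a tolerance schedule k(sigma): (dk/dsigma) * sigma / k.

    The derivative is approximated by a central finite difference with step h.
    """
    dk_dsigma = (k(sigma + h) - k(sigma - h)) / (2.0 * h)
    return dk_dsigma * sigma / k(sigma)


# Hypothetical schedules chosen only to illustrate the definition;
# they are NOT the equilibrium tolerance of the model.
def proportional_schedule(sigma: float) -> float:
    return 0.8 * sigma  # elasticity 1 at every sigma


def inelastic_schedule(sigma: float) -> float:
    return sigma ** 0.5  # elasticity 0.5 < 1 at every sigma


def elastic_schedule(sigma: float) -> float:
    return sigma ** 2  # elasticity 2 > 1 at every sigma


if __name__ == "__main__":
    for name, k in [("proportional", proportional_schedule),
                    ("inelastic", inelastic_schedule),
                    ("elastic", elastic_schedule)]:
        print(name, round(elasticity(k, 2.0), 6))
```

A finite difference is used so that the function works for any schedule, including one obtained only numerically from a first-order condition.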

3.2. Consistency

Unfortunately, the beliefs given in (3-II) fail to satisfy consistency, because the consistency of a belief requires it to be updated by Bayes’ law for any m and insofar as any is an equilibrium message given the equilibrium strategy for . Thus, the unique consistent belief in this model is that

where , , and is the maximum likelihood estimator for 18, because the likelihood function is

This leads us to consider the following modified strategies and beliefs:

- (4-I)

- S with announces .

- (4-II)

- B believes and .

- (4-III)

- B chooses and .

Note that B’s choice of a relies on b, while their choice of relies on . In the example of a salesperson, the strategy (4-III) can be interpreted as purchasing in total, but purchasing only from the seller and the remaining amount from other sellers. The outside option of buying from sellers other than S can serve as a threat that induces the seller to report truthfully, even though the consistency requirement on beliefs leaves no leeway to doubt the genuineness of a suspicious message.
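
The consistent belief above rests on the maximum likelihood estimator of the state given the message and the buyer's signal. A minimal sketch, under assumptions made here only for illustration: in a truth-revealing equilibrium the message is the seller's signal, m = θ + η, the buyer observes b = θ + ε, and η ~ N(0, τ²) and ε ~ N(0, σ²) are independent. Under these assumptions the estimator is the precision-weighted average of m and b, and the code checks the closed form against a brute-force grid maximization of the likelihood. The symbols τ and σ and the function names are hypothetical labels, not the paper's notation.

```python
# Illustrative model (assumed here): m = theta + eta, b = theta + eps,
# eta ~ N(0, tau^2), eps ~ N(0, sigma^2), independent.


def log_likelihood(theta: float, m: float, b: float, tau: float, sigma: float) -> float:
    """Log-likelihood of theta given m and b, up to an additive constant."""
    return -((m - theta) ** 2) / (2.0 * tau ** 2) - ((b - theta) ** 2) / (2.0 * sigma ** 2)


def mle(m: float, b: float, tau: float, sigma: float) -> tuple[float, float]:
    """Closed-form maximizer of log_likelihood: the precision-weighted average.

    Returns (estimate, variance of the estimator).
    """
    w_m, w_b = 1.0 / tau ** 2, 1.0 / sigma ** 2  # precisions of the two signals
    estimate = (w_m * m + w_b * b) / (w_m + w_b)
    variance = 1.0 / (w_m + w_b)
    return estimate, variance


if __name__ == "__main__":
    m, b, tau, sigma = 1.0, 3.0, 1.0, 2.0
    est, var = mle(m, b, tau, sigma)
    # Brute-force check: the grid maximizer of the likelihood matches the closed form.
    grid = [i / 1000 for i in range(-5000, 5001)]
    best = max(grid, key=lambda t: log_likelihood(t, m, b, tau, sigma))
    print(est, var, best)
```

With an improper flat prior, as assumed in the paper, the same number is also the posterior mean, which is why note 18 can treat the two estimators as equivalent.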

Clearly, the beliefs given in (4-II) satisfy consistency and the buyer’s response, , which is given in (4-III), is optimal given the belief. Moreover, given the buyer’s response, , the seller’s cheap talk message given in (4-I) is also optimal for , as we showed before. Therefore, the strategies and beliefs given in (4-I)–(4-III) constitute an equilibrium and it is truth-revealing. Now, our main result follows.

Proposition 3.

There exists such that for any , there is a truth-revealing equilibrium in which (4-I), (4-II), and (4-III) constitute an equilibrium for some that is independent of and , if the utility function u is any negative affine transformation of , i.e., where .

Intuitively, if the seller’s utility function is which is strictly concave, inflating the message raises the direct utility but also raises the penalty probability, and the direct gain is outweighed by the penalty effect. Therefore, it may be optimal for the seller not to inflate their message.

This proposition says that the seller’s utility function satisfies the differential equation given by (3) for some that is independent of and ; that is, under this utility function there may exist characterizing the equilibrium crosschecking strategy, and it does not depend on and . This utility function enables the seller to reveal the truth by making the punishment larger than the direct gain when they inflate their information.
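
One way to see why a tolerance that does not depend on the signal levels is compatible with CARA utility: for u(a) = −e^{−ra} with r > 0 (a parametrization assumed here), a utility difference measured in units of marginal utility is unchanged when all quantities are shifted by a common amount c, because every term is multiplied by the same factor e^{−rc}. The sketch below checks this numerically and contrasts it with logarithmic utility, for which the ratio changes with the shift. It illustrates a property of the utility functions under arbitrary illustrative numbers and is not the proof of Proposition 3 or 4; linear utility shares the same invariance but is ruled out for a different reason by Proposition 2.

```python
import math


# Illustrative CARA parametrization u(a) = -exp(-r a), r > 0 (assumed here).
def cara(a: float, r: float = 1.0) -> float:
    return -math.exp(-r * a)


def cara_prime(a: float, r: float = 1.0) -> float:
    return r * math.exp(-r * a)


# Logarithmic utility, used only as a non-CARA contrast.
def log_utility(a: float) -> float:
    return math.log(a)


def log_utility_prime(a: float) -> float:
    return 1.0 / a


def gain_in_marginal_units(u, du, x: float, y: float, z: float, shift: float) -> float:
    """(u(x + shift) - u(y + shift)) / u'(z + shift): a utility difference measured
    in units of marginal utility after shifting every quantity by `shift`."""
    return (u(x + shift) - u(y + shift)) / du(z + shift)


if __name__ == "__main__":
    for shift in (0.0, 2.0, 5.0):
        print(shift,
              gain_in_marginal_units(cara, cara_prime, 2.0, 1.0, 1.0, shift),
              gain_in_marginal_units(log_utility, log_utility_prime, 2.0, 1.0, 1.0, shift))
```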

Is it still possible that there is a truth-revealing equilibrium for a different form of utility function? The following proposition suggests that it is not possible.

Proposition 4.

If there is a truth-telling equilibrium such that (4-I), (4-II), and (4-III) constitute an equilibrium for some that is independent of and , then the utility function must have the form of where .

Together with Proposition 3, it says that an affine transformation of for some , i.e., , is indeed a necessary as well as a sufficient condition for the existence of a fully revealing equilibrium. So, we can conclude that when the buyer is partially informed, it is possible to fully reveal the private information of the seller with the crosschecking strategy only if the seller has a utility function of this form.

This result could be interpreted as a possibility theorem in the sense that truth-telling is possible in equilibrium if the seller has this CARA utility function, or interpreted as an impossibility theorem in the sense that truth-telling is possible only if the seller has the CARA utility function. Considering the fact that the CARA function is a reasonable approximation to the real but unknown utility function19, we believe that this result is reassuring20.

Before closing this section, it is worthwhile to discuss the possibility of other communicative equilibria. Some readers may think that it is possible to construct the following trivial truth-revealing equilibrium in which , and where is a constant. First of all, it is not a strict equilibrium, although it is a wPBE: the seller’s truth-revealing strategy is not a unique best response to the buyer’s buying strategy. It relies heavily on a tie-breaking rule, whereas our equilibrium consists of a seller’s strategy (4-I), which is a unique best response to the buyer’s strategy, and a buyer’s strategy (4-III), which is also a unique best response to the belief (4-II). Hence, such an equilibrium is not robust to slight perturbations in payoffs. For example, if the seller obtains a slightly positive utility from a big lie itself, they will strictly prefer such a lie to being honest. Therefore, the argument that the seller is always truthful about their product quality because the buyer always buys the same amount from them regardless of their message is not very convincing, since for the same reason the seller could just as well lie freely. Moreover, it is not a communicative equilibrium in our definition, because no pair of different messages induces different payoff-relevant actions for the seller .

4. Comparative Statics

Is a less informed buyer more credulous? We investigate the effect of an increase in on the equilibrium tolerance . Consider the first-order condition of the seller’s incentive compatibility given by (5) as follows:

The left-hand side is the ratio of the marginal benefit to the marginal cost, in terms of utility, of inflating the message slightly. Roughly, can be interpreted as the ratio of the punishment probability to the no-punishment probability. The punishment probability is affected by both and . If increases, the probability rises because the tail probability rises. If increases, the probability falls. Therefore, if the left-hand side of (5) does not change very much for large values of , a larger should be complemented by a larger to keep constant the probability that a slightly inflated message is penalized, which means that a larger value of is associated with a larger value of . This is why the buyer uses a more lenient strategy, one that tolerates larger deviations, as their information becomes less accurate, for most values of (except for small values of and ). Of course, if and are very small, this monotonicity may not hold.
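
The two comparative-statics facts used in this argument (a noisier buyer signal raises the punishment probability, a larger tolerance lowers it) can be checked under a simple reading of the crosschecking rule: the buyer ignores the message m when m exceeds their own signal b by more than the tolerance k. Assuming, for illustration only, that conditional on the seller's signal s the buyer's signal is normal with mean s and standard deviation sd (which rises with the buyer's noise), the probability that a message inflated by δ is ignored is Φ((δ − k)/sd). The sketch evaluates this at δ = 0; the function names and the specific distributional form are assumptions made here.

```python
import math


def std_normal_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def penalty_probability(inflation: float, k: float, sd: float) -> float:
    """Probability that a message inflated by `inflation` above the seller's signal s
    is ignored, i.e. P(b < m - k) with m = s + inflation.

    Assumption (illustrative): b | s ~ N(s, sd^2).
    """
    return std_normal_cdf((inflation - k) / sd)


if __name__ == "__main__":
    print(round(penalty_probability(0.0, k=1.0, sd=1.0), 4))  # 0.1587
    print(round(penalty_probability(0.0, k=1.0, sd=2.0), 4))  # 0.3085: noisier signal, more punishment
    print(round(penalty_probability(0.0, k=2.0, sd=1.0), 4))  # 0.0228: larger tolerance, less punishment
    print(round(penalty_probability(0.0, k=2.0, sd=2.0), 4))  # 0.1587: k scaled with sd, same probability
```

The last line shows the trade-off in the text: scaling the tolerance in proportion to the buyer's noise holds the punishment probability fixed.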

Now, on the seller’s side, is the seller more likely to tell the truth as the buyer becomes more informed ( gets smaller)? Proposition 3 suggests not, because there may not exist a truth-revealing equilibrium for a very low value of . To see the intuition, consider the limiting case of and . Given any fixed , if and (with maintaining ) keep falling, the penalty probability approaches zero, and thus the expected net loss from inflating m converges to zero, implying that the seller will have an incentive to inflate their message. However, as approaches zero (so that is too small), becomes larger, and thus the penalty probability becomes even smaller; hence, there is no communicative equilibrium for low values of and .

We now briefly compare the players’ utilities when the buyer is completely uninformed and when they are partially informed. It is clear that the buyer cannot be worse off by obtaining some noisy information with any finite variance . Conditional on information m and , the buyer’s belief about is . So, assuming that the seller reveals truthfully, the buyer’s loss function, , is minimized when they choose . Since minimizes , is clearly lower than , which is the buyer’s loss when they are uninformed. On the other hand, it is also clear that the seller has no reason to prefer an informed buyer, since an informed buyer may choose , if , while an uninformed buyer will always choose .

It is more intriguing to compare utilities when the buyer is more informed and when they are less informed. Increasing has two effects on the seller’s utility: a direct effect and an indirect effect through . The direct effect is negative because the probability that is low enough to penalize S is higher. On the other hand, the indirect effect is positive, because the seller is less likely to be penalized with a larger , implying that B chooses rather than more often, as long as the seller reveals truthfully. The next proposition shows that the negative direct effect dominates the positive indirect effect, so decreases as increases for any . This runs contrary to the widely held belief that the seller prefers a less informed buyer. The intuition behind this counterintuitive result is that wrong information held by a less informed buyer may spoil the deal between the seller and the buyer, in the sense that the buyer chooses a very low quantity based on their own wildly inaccurate information.

Proposition 5.

In equilibrium, the seller’s utility decreases as increases for any , if .

Intuitively, if the tolerance schedule is inelastic with respect to a change in , the positive indirect effect through is outweighed by the negative direct effect, so the result immediately follows.

A seller’s preference for an informed buyer clearly depends on their own information . It is obvious that the seller’s utility in a communicative equilibrium increases as increases, because a higher value of shifts the conditional distribution of to the right and also has the direct positive effect of . This leads to the following proposition.

Proposition 6.

For any , there is a large such that for any , the seller with prefers an informed buyer with expertise to an uninformed buyer.

This proposition suggests that the monotonicity of with respect to for any does not extend to .

Finally, we are interested in how the buyer’s utility is affected by the accuracy of their own information.

Proposition 7.

The buyer with information is better off as their information becomes more accurate, i.e., increases.

This proposition implies that, unlike the seller’s utility, the buyer’s utility increases unambiguously with the precision of their own information. This is clear because the buyer’s objective is to minimize the mean squared error of their decision based on their estimator of given the information available to them, which amounts to minimizing the variance of their unbiased estimator . The seller’s utility depends on the effect of on because it is determined by the amount the buyer buys from them, , whereas the buyer’s utility is free from this effect because it is determined by the total amount they buy, , not just the amount they buy from the seller, .
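
The monotonicity in Proposition 7, and the earlier claim that an informed buyer is no worse off than an uninformed one, can be illustrated under a simple normal model assumed here for illustration: the truthful message is m = θ + η and the buyer's signal is b = θ + ε, with η ~ N(0, τ²) and ε ~ N(0, σ²) independent. The buyer's expected squared loss from the precision-weighted estimate is then 1/(1/τ² + 1/σ²), which rises with σ and stays below τ², the loss from relying on m alone. The sketch computes this and checks it by simulation; τ, σ, and the function names are hypothetical labels, not the paper's notation.

```python
import random


def estimator_variance(tau: float, sigma: float) -> float:
    """Variance of the precision-weighted estimate of theta from m and b, which
    equals the buyer's expected squared loss under truthful reporting (assumed model)."""
    return 1.0 / (1.0 / tau ** 2 + 1.0 / sigma ** 2)


def simulated_mse(tau: float, sigma: float, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same loss under the assumed normal model."""
    rng = random.Random(seed)
    w_m, w_b = 1.0 / tau ** 2, 1.0 / sigma ** 2
    total = 0.0
    for _ in range(n):
        theta = rng.gauss(0.0, 10.0)       # the estimation error does not depend on theta
        m = theta + rng.gauss(0.0, tau)    # truthful message = seller's noisy signal
        b = theta + rng.gauss(0.0, sigma)  # buyer's own noisy signal
        estimate = (w_m * m + w_b * b) / (w_m + w_b)
        total += (estimate - theta) ** 2
    return total / n


if __name__ == "__main__":
    tau = 1.0  # an uninformed buyer relying on m alone has expected loss tau^2
    for sigma in (0.5, 1.0, 2.0, 4.0):
        print(sigma, estimator_variance(tau, sigma), simulated_mse(tau, sigma))
```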

5. Conclusions

In this paper, we have shown that a buyer’s information can effectively deter an expert from giving false information to the buyer. We are, however, well aware of a limitation of the current analysis: there can be other equilibria, including the babbling equilibrium. The empirical validity of the equilibrium we constructed could be tested by investigating the relation between the buyer’s degree of expertise and the transaction volume between trading partners; this is a promising agenda for future research.

In reality, consumer information can protect a consumer from fraud by a salesperson by disciplining the salesperson’s deceptive motive. A salesperson’s persuasion can be credible if consumers have some relevant information about the product. This is also true of referral processes concerning the quality of a newly introduced experience good. Information diffused by word-of-mouth communication can be credible if consumers who seek opinions have some expertise. We believe that the general insight of this paper can be applied to richer economic or social situations beyond transactions between a seller and a buyer.

Author Contributions

Conceptualization, J.Y.K.; Writing—Original Draft Preparation, J.Y.K.; Writing—Review & Editing, J.Y.K.; Supervision, J.Y.K.; Formal analysis, J.J. and J.Y.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Ministry of Education of the Republic of Korea and the National Research Foundation of Korea (NRF-2022S1A5A2A0304932311).

Data Availability Statement

No data was used for this research.

Conflicts of Interest

The authors declare that there are no competing interests.

Appendix A

Proof of Proposition 2.

We have

The inequality follows from and which is because if . The last equality follows from due to the linearity of . Therefore, the first-order condition given by (3) cannot be satisfied for any . □

Proof of Proposition 3.

Lemma A1.

If , the first-order condition of the seller’s optimization given by (8) implies the second-order condition.

Proof.

We have

Therefore, we have

since , and from the first-order condition given by (4). □

Lemma A2.

If , local optimality of the seller implies global optimality.

Proof.

Since it is clear that a seller will not deviate to , we will check only the incentive to deviate to .

Since , Equation (2) can be rearranged into

We will show , .

Without loss of generality, assume that . Since is a local maximum, must satisfy the first-order condition,

or equivalently,

where is a hazard rate function. It is well known that the normal density is log-concave, so its hazard rate function is monotonically increasing, i.e., . (See Bagnoli and Bergstrom (2005) [26].) Also, we have and . Therefore, Equation (A5) has a unique solution, which is and . This completes the proof. □

Now, we can rewrite as follows:

The graph of is obtained simply by rescaling the vertical axis and translating the a-axis. This changes neither the first-order condition nor the second-order condition. □
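
The monotonicity of the normal hazard rate invoked in the proof of Lemma A2 is easy to check numerically. The sketch below evaluates the standard normal hazard rate h(x) = φ(x)/(1 − Φ(x)) on a grid and confirms that it is strictly increasing there and exceeds x, a standard Mills-ratio inequality. A grid check illustrates the property but does not prove it; the function name is a label chosen here.

```python
import math


def normal_hazard(x: float) -> float:
    """Hazard rate phi(x) / (1 - Phi(x)) of the standard normal distribution.

    The survival function 1 - Phi(x) is computed as 0.5 * erfc(x / sqrt(2))
    to avoid cancellation for large x.
    """
    pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    survival = 0.5 * math.erfc(x / math.sqrt(2.0))
    return pdf / survival


if __name__ == "__main__":
    grid = [i / 10 for i in range(-50, 51)]
    values = [normal_hazard(x) for x in grid]
    print(all(a < b for a, b in zip(values, values[1:])))  # True: strictly increasing on the grid
    print(normal_hazard(0.0), math.sqrt(2.0 / math.pi))     # both approximately 0.79788
```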

Proof of Proposition 4.

Derivation of :

The seller’s utility in the fully revealing equilibrium is given by

where and . We will use the following Leibniz rule.

Then, we obtain

Using , and , we have

where . The first term is the indirect effect, and the second and third terms are the direct effects.
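
The Leibniz rule used in this derivation presumably takes its standard form with a variable upper limit: d/dσ ∫_{−∞}^{g(σ)} f(x, σ) dx = f(g(σ), σ) g′(σ) + ∫_{−∞}^{g(σ)} ∂f(x, σ)/∂σ dx. The sketch below checks this numerically for the N(0, σ²) density with a hypothetical linear upper limit g(σ) = 1 − σ/2, comparing the Leibniz expression with a finite difference of the integral. The upper limit, the truncation of −∞ at −60, and all function names are illustrative assumptions, not the integrals of the proof.

```python
import math


def normal_pdf(x: float, sigma: float) -> float:
    """Density of N(0, sigma^2) at x."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def dpdf_dsigma(x: float, sigma: float) -> float:
    """Partial derivative of normal_pdf with respect to sigma."""
    return normal_pdf(x, sigma) * (x * x / sigma ** 3 - 1.0 / sigma)


def simpson(f, lo: float, hi: float, n: int = 20_000) -> float:
    """Composite Simpson's rule on [lo, hi] with n (even) subintervals."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(lo + i * h)
    return total * h / 3.0


LOWER = -60.0  # stands in for minus infinity; the density is negligible there


def upper(sigma: float) -> float:
    """Hypothetical moving upper limit g(sigma)."""
    return 1.0 - 0.5 * sigma


UPPER_PRIME = -0.5  # g'(sigma) for the hypothetical limit above


def F(sigma: float) -> float:
    """Integral of normal_pdf(x, sigma) from LOWER to g(sigma)."""
    return simpson(lambda x: normal_pdf(x, sigma), LOWER, upper(sigma))


def leibniz_derivative(sigma: float) -> float:
    """Boundary term f(g, sigma) * g'(sigma) plus the integral of df/dsigma."""
    boundary = normal_pdf(upper(sigma), sigma) * UPPER_PRIME
    interior = simpson(lambda x: dpdf_dsigma(x, sigma), LOWER, upper(sigma))
    return boundary + interior


def finite_difference(sigma: float, h: float = 1e-4) -> float:
    """Central finite difference of F, used as an independent check."""
    return (F(sigma + h) - F(sigma - h)) / (2.0 * h)


if __name__ == "__main__":
    s = 1.5
    print(leibniz_derivative(s), finite_difference(s))
```

Because F(σ) here equals Φ(g(σ)/σ), both numbers can also be compared with the closed-form derivative φ(g/σ)(g′σ − g)/σ².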

Proof of Proposition 5.

Let be the equilibrium utility of a seller whose information has standard deviation . We will prove that for any . To do that, we will show that (i) is increasing in , and (ii) .

(i) Let . Then, x follows a standard normal distribution. Let the density function and the distribution function of the standard normal distribution be and respectively. Then, can be rewritten as

Due to Young’s Theorem, it suffices to show that .

By using and , we have

where . By using the Leibniz rule, we have

where . Therefore, we obtain

if , where is the elasticity of with respect to .

(ii) , since , and is independent of . We want to show that .

We have

since . □

Proof of Proposition 6.

Since the seller’s utility in a babbling equilibrium is , it suffices to show that there are large such that for all , , for all .

Lemma A3.

Let . Then, as .

Proof.

Lemma A4.

For any , there exists such that for any , .

Proof.

We have

If , we have

Note that . Since and is finite, we have

Therefore, for any , there exists such that for any ,

This implies that

□

For any given and the corresponding , (A10) implies

Since and , it follows from Lemma A4 that for any . □

Proof of Proposition 7.

Since , where , , , are independent, we have

Thus, it follows that

which means

Therefore, we obtain

Hence, we have

□

Notes

| 1 | Exceptions include Seidmann (1990) [2], Watson (1996) [3], Olszewski (2004) [4], Lai (2014) [5], Ishida and Shimizu (2016) [6], etc., to name a few. |

| 2 | Seidmann (1990) [2] used the terms (IA) and (CI) for a transparent motive and a monotone motive respectively. |

| 3 | Seidmann (1990) [2] also demonstrates that the conjecture is incorrect when the receiver has no information and their action is two-dimensional, similar to Chakraborty and Harbaugh (2010) [7]. Unlike those two papers, in our model the sender cares about only a one-dimensional action of the receiver. In other words, the sender’s utility depends on only one of the two actions available to the receiver. |

| 4 | Battaglini (2002) [10] also considers the two-dimensional action space of the buyer, but in their model, the two-dimensional type space and two sellers are crucial to informative cheap talk, while our result does not rely on them. |

| 5 | For example, at trials, jurors are instructed to ignore expert testimony rather than discount it, if they find it not credible. They are free to disregard the expert opinion in whole or in part, if they determine that the testimony is motivated by some bias or interest in the case. (Moenssens, 2009) [11]. |

| 6 | One of the main reasons why we focus on this communicative equilibrium based on crosschecking strategies is that it is faithful to the Bayesian spirit, which requires exploiting all the available information, including the buyer’s information. One can think of other equilibria in which a seller always makes an honest report simply because they are indifferent between being honest and not or, more concretely, because their message is always ignored no matter what they say. However, such an equilibrium seems less intuitively appealing. For detailed discussions, see the end of Section 3. |

| 7 | These papers include Seidmann (1990) [2], Watson (1996) [3], Olszewski (2004) [4], Chen (2009) [13], Moreno de Barreda (2012) [14], Galeotti et al. (2013) [15], Lai (2014) [5] and Ishida and Shimizu (2016) [6]. The support of the receiver’s information is assumed to be binary in Chen (2009) [13], Galeotti et al. (2013) [15] and Lai (2014) [5], finite in Seidmann (1990) [2], Watson (1996) [3], Olszewski (2004) [4] and Ishida and Shimizu (2016) [6], and bounded in Moreno de Barreda (2012) [14]. |

| 8 | The principle of indifference, so-named by Keynes (1921) [17], specifies that a uniform prior distribution should be assumed when nothing is known about the true state of nature before observable data are available. |

| 9 | Note that we are assuming an improper prior distribution. |

| 10 | We assume unbounded supports for and and, correspondingly, unbounded strategy spaces to avoid the triviality that differing (inconsistent) supports can lead to. For a similar approach, see Kartik et al. (2007) [18]. |

| 11 | Degan and Li (2021) [19] and Espinosa and Ray (2022) [20] also assume normally distributed noisy signal-generating processes, as we do, but they endogenize the precision of the noise. In Degan and Li (2021) [19], the seller chooses the precision of the noise at a cost that is increasing in the precision. Since the precision is observable, it can be used as a signal of their information together with the noisy signal realization. Similarly, Espinosa and Ray (2022) [20] assume that the seller (agent) chooses the precision of the noise at some cost. In their model, however, the buyer (principal) cannot observe the precision and observes only the signal realization. |

| 12 | Since the cheap talk message of the seller, m, is payoff-irrelevant by the definition of cheap talk, the payoffs of the players ( and ) described below should not depend on m. This distinguishes our model from Pitchik and Schotter (1987) [8]. In their model, an expert makes a binding recommendation, for example, about the price, so it is not cheap talk, whereas we consider a non-binding recommendation of an expert (for example, about the quality), so that the buyer’s payoff does not depend directly on the recommendation m. |

| 13 | Some information is conveyed in equilibrium if but for some different and such that and . But such an equilibrium is not of interest here, because our main concern is the relation between the seller and the buyer and the transactions between them. |

| 14 | Since even fully-revealing strategies which are reveal the truth in equilibrium, those strategies are literally truth-revealing. So, in fact, the terms “fully-revealing” and “truth-revealing” are interchangeable. |

| 15 | Since a message farther from the buyer’s signal is more likely to be punished, it can be regarded as a costly signal as in costly signaling models with single-crossing preferences (e.g., Cho and Kreps (1987) [21]) or double-crossing preferences (e.g., Chen et al. (2022) [22]). However, the cost is indirect in our model, whereas the signaling cost is direct in costly signaling models. |

| 16 | If , a fully revealing equilibrium can be attained by the buyer’s extreme form of crosschecking strategy (), if and if or by their self-confirmatory strategy, for any m. However, our assumption that excludes this case. |

| 17 | We are abusing the word “equilibrium”. We use the term in a broader sense to mean that it satisfies sequential rationality. |

| 18 | It is well known that under the normality assumption of error terms, the maximum likelihood estimator is equivalent to the (generalized) Bayesian estimator minimizing the loss function defined by the mean square error, which is the posterior mean. |

| 19 | See Zuhair et al. (1992) [23]. |

| 20 | For empirical findings that a CARA utility function fits the data reasonably well, see Snowberg and Wolfers (2010) [24] and Barseghyan et al. (2018) [25]. |

References

- Crawford, V.; Sobel, J. Strategic Information Transmission. Econometrica 1982, 50, 1431–1451.

- Seidmann, D. Effective Cheap Talk with Conflicting Interests. J. Econ. Theory 1990, 50, 445–458.

- Watson, J. Information Transmission When the Informed Party Is Confused. Games Econ. Behav. 1996, 12, 143–161.

- Olszewski, W. Informal Communication. J. Econ. Theory 2004, 117, 180–200.

- Lai, E. Expert Advice for Amateurs. J. Econ. Behav. Organ. 2014, 103, 1–16.

- Ishida, J.; Shimizu, T. Cheap Talk with an Informed Receiver. Econ. Theory Bull. 2016, 4, 61–72.

- Chakraborty, A.; Harbaugh, R. Persuasion by Cheap Talk. Am. Econ. Rev. 2010, 100, 2361–2382.

- Pitchik, C.; Schotter, A. Honesty in a Model of Strategic Information Transmission. Am. Econ. Rev. 1987, 77, 1032–1036.

- Wolinsky, A. Competition in a Market for Informed Experts’ Services. Rand J. Econ. 1993, 24, 380–398.

- Battaglini, M. Multiple Referrals and Multidimensional Cheap Talk. Econometrica 2002, 70, 1379–1401.

- Moenssens, A. Jury Instructions on Expert Testimony. In Wiley Encyclopedia of Forensic Science; Wiley: Hoboken, NJ, USA, 2009.

- Lipnowski, E.; Ravid, D. Cheap Talk with Transparent Motives. Econometrica 2020, 88, 1631–1660.

- Chen, Y. Communication with Two-Sided Asymmetric Information; University of Southampton: Southampton, UK, 2009.

- Moreno de Barreda, L. Cheap Talk with Two-Sided Private Information; University of Oxford: Oxford, UK, 2012.

- Galeotti, A.; Ghiglino, C.; Squintani, F. Strategic Information Transmission Networks. J. Econ. Theory 2013, 148, 1751–1769.

- Green, E.; Porter, R. Noncooperative Collusion under Imperfect Price Information. Econometrica 1984, 52, 87–100.

- Keynes, J.M. A Treatise on Probability; Macmillan: London, UK, 1921.

- Kartik, N.; Ottaviani, M.; Squintani, F. Credulity, Lies, and Costly Talk. J. Econ. Theory 2007, 134, 93–116.

- Degan, A.; Li, M. Persuasion with Costly Precision. Econ. Theory 2021, 72, 869–908.

- Espinosa, F.; Ray, D. Too Good to be True? Retention Rules for Noisy Agents. Am. Econ. J. Microecon. 2022, 15, 493–535.

- Cho, I.-K.; Kreps, D.M. Signaling Games and Stable Equilibria. Q. J. Econ. 1987, 102, 179–221.

- Chen, C.-H.; Ishida, J.; Suen, W. Signaling under Double-Crossing Preferences. Econometrica 2022, 90, 1225–1260.

- Zuhair, S.; Taylor, D.; Kramer, R. Choice of Utility Function Form: Its Effect on Classification of Risk Preferences and the Prediction of Farmer Decisions. Agric. Econ. 1992, 6, 333–344.

- Snowberg, E.; Wolfers, J. Explaining the Favorite–Long Shot Bias: Is it Risk-Love or Misperceptions? J. Political Econ. 2010, 118, 723–746.

- Barseghyan, L.; Molinari, F.; O’Donoghue, T.; Teitelbaum, J. Estimating Risk Preferences in the Field. J. Econ. Lit. 2018, 56, 501–564.

- Bagnoli, M.; Bergstrom, T. Log-Concave Probability and Its Applications. Econ. Theory 2005, 26, 445–469.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).