There are two types of epistemic/doxastic models used in the game-theoretic literature: the so-called “state-space” models and the “type-space” models. We will adopt the former (note that there is a straightforward way of translating one type of model into the other). In the standard state-space model of a given game, one takes as starting point a set of states (or possible worlds) and associates with every state a strategy for every player, thus providing an interpretation of a state in terms of players’ choices. If

is a state and

is the strategy of player

i at

then the interpretation is that, at that state, player

i plays

. If the game is simultaneous (so that there cannot be any unreached information sets), then there is no ambiguity in the expression “player

i plays

”, but if the game is an extensive-form game then the expression

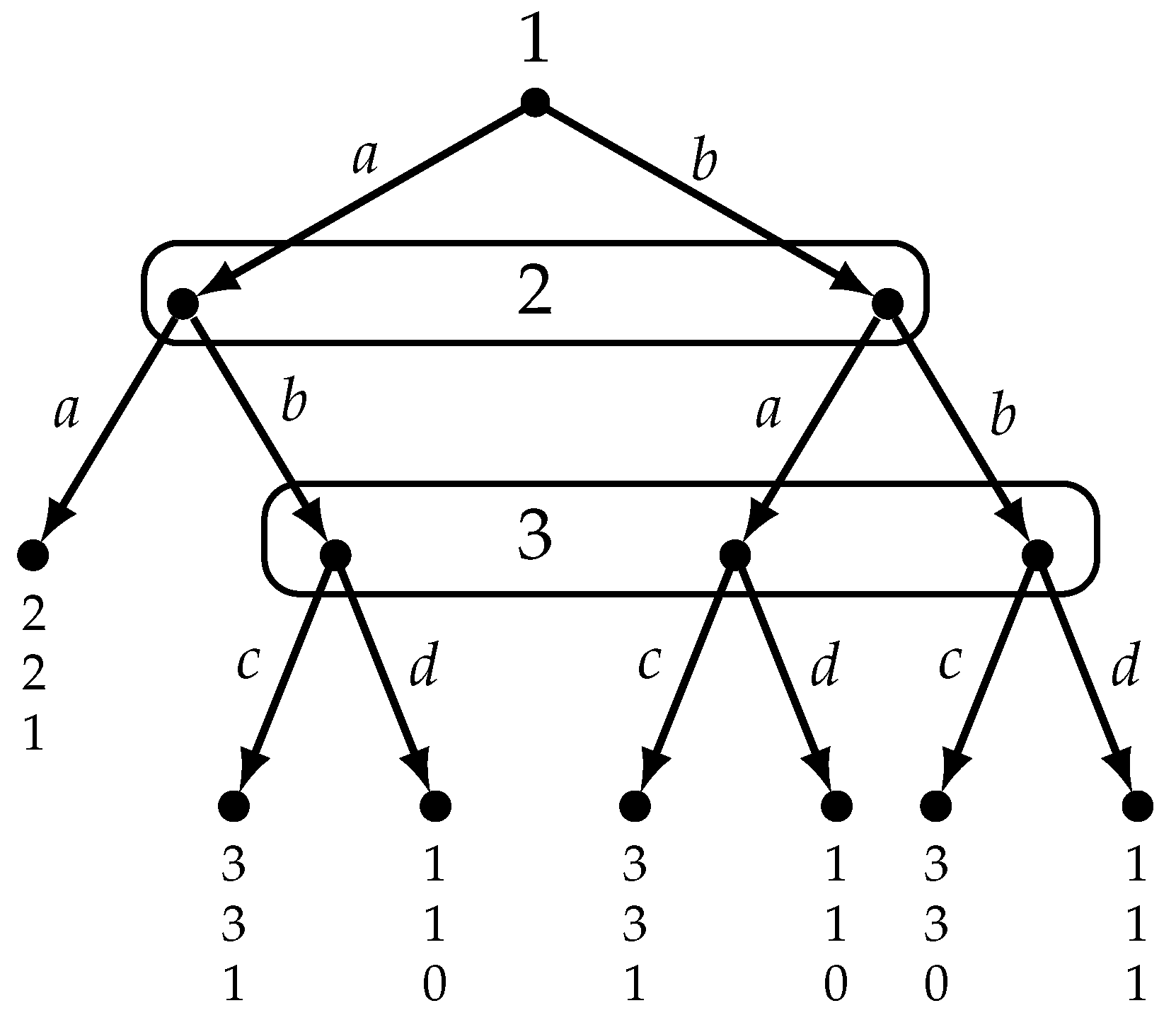

is ambiguous. Consider, for example, the game of

Figure 1 and a state

where Players 1 and 2 play

, so that Player 3’s information set is not reached; suppose also that the strategy of Player 3 associated with state

is

d. In what sense does Player 3 “play”

d? Does it mean that, before the game starts, Player 3 has made a plan to play

d if her information happens to be reached? Or does it mean (in a Stalnaker-Lewis interpretation of the counterfactual) that in the state most similar to

where her information set is actually reached, Player 3 plays

d? [This interpretation is adopted in [

22] where it is pointed out that in this type of models “one possible culprit for the confusion in the literature regarding what is required to force the backward induction solution in games of perfect information is the notion of a strategy”.] Or is Player 3’s strategy

d to be interpreted not as a statement about what Player 3 would do but as an expression of what the opponents think that Player 3 would do?

While most of the literature on the epistemic foundations of game theory makes use of strategy-based models, a few papers follow a behavioral approach by associating with each state a play (or outcome) of the game (the seminal contribution is [23], followed by [1,8,24,25]; the focus of this literature has been on games with perfect information). The challenge in this class of models is to capture the reasoning of a player who takes a particular action while considering what would happen if she took a different action. The most common approach is to postulate, for each player, a set of conditional beliefs, where the conditioning events are represented by possible histories in the game, including off-path histories ([23] uses extended information structures to model hypothetical knowledge, [8] use plausibility relations and [24] use conditional probability systems). Here we follow the simpler approach put forward in [1], which models the “pre-choice” beliefs of a player, whereas the previous literature considered the “after-choice” beliefs. The previous literature was based on the assumption that, if at a state a player takes action a, then she knows that she takes action a, that is, in all the states that she considers possible she takes action a. The pre-choice (or deliberation-stage) approach, on the other hand, models the beliefs of the player at the time when she is contemplating the actions available to her and treats each of those actions as an “open possibility”. Thus, her beliefs take the following form: “if I take action a then the outcome will be x and if I take action b then the outcome will be y”, where the conditional “if p then q” is interpreted as a material conditional, that is, as equivalent to “either not p or q” (in [26] it is argued that, contrary to a common view, the material conditional is indeed sufficient to model deliberation; it is also shown how to convert pre-choice beliefs into after-choice beliefs, reflecting a later stage at which the agent has made up her mind on what to do). This analysis does not rely in any way on counterfactuals; furthermore, only the beliefs of the active players at the time of choice are modeled, so that neither initial beliefs nor belief revision policies are postulated. The approach is described below and it makes use of the history-based definition of extensive-form game, which is reviewed in Appendix A.

3.1. Qualitative Beliefs

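Let $\Omega$ be a set, whose elements are called states (or possible worlds). We represent the beliefs of an agent by means of a binary relation $\mathcal{B} \subseteq \Omega \times \Omega$. The interpretation of $(\omega, \omega') \in \mathcal{B}$, also denoted by $\omega \mathcal{B} \omega'$, is that at state $\omega$ the agent considers state $\omega'$ possible; we also say that $\omega'$ is reachable from $\omega$ by $\mathcal{B}$. For every $\omega \in \Omega$ we denote by $\mathcal{B}(\omega)$ the set of states that are reachable from $\omega$, that is, $\mathcal{B}(\omega) = \{\omega' \in \Omega : \omega \mathcal{B} \omega'\}$.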

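$\mathcal{B}$ is transitive if $\omega \mathcal{B} \omega'$ and $\omega' \mathcal{B} \omega''$ implies $\omega \mathcal{B} \omega''$, and it is euclidean if $\omega \mathcal{B} \omega'$ and $\omega \mathcal{B} \omega''$ implies $\omega' \mathcal{B} \omega''$ (it is well known that transitivity of $\mathcal{B}$ corresponds to positive introspection of beliefs: if the agent believes an event $\mathbf{E}$ then she believes that she believes $\mathbf{E}$; and euclideanness corresponds to negative introspection: if the agent does not believe $\mathbf{E}$ then she believes that she does not believe $\mathbf{E}$). We will assume throughout that the belief relations are transitive and euclidean, so that $\omega' \in \mathcal{B}(\omega)$ implies that $\mathcal{B}(\omega') = \mathcal{B}(\omega)$. Note that we do not assume reflexivity of $\mathcal{B}$ (that is, we do not assume that, for every state $\omega$, $\omega \in \mathcal{B}(\omega)$; reflexivity corresponds to the assumption that a player cannot have incorrect beliefs: an assumption that, as [27] points out, is conceptually problematic, especially in a multi-agent context). Hence, in general, the relation $\mathcal{B}$ does not induce a partition of the set of states.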
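The frame conditions above can be checked mechanically on a finite set of states. The following minimal Python sketch is illustrative only: encoding a belief relation as a set of pairs, the function names and the state labels are assumptions, not notation from the paper. It tests transitivity and euclideanness, confirms that together they yield $\mathcal{B}(\omega') = \mathcal{B}(\omega)$ whenever $\omega' \in \mathcal{B}(\omega)$, and shows that reflexivity may fail.

```python
# A finite belief relation is encoded (hypothetically) as a set of pairs (w, v),
# read as "at state w the agent considers state v possible".

def reachable(rel, w):
    """B(w): the set of states reachable from w."""
    return {v for (u, v) in rel if u == w}


def is_transitive(rel):
    # w B v and v B z imply w B z
    return all((w, z) in rel for (w, v) in rel for (x, z) in rel if v == x)


def is_euclidean(rel):
    # w B v and w B z imply v B z
    return all((v, z) in rel for (w, v) in rel for (x, z) in rel if w == x)


def is_reflexive(rel, states):
    # w B w for every state w (not assumed in the text)
    return all((w, w) in rel for w in states)


def beliefs_constant_on_reachable(rel, states):
    # Consequence of transitivity + euclideanness: v in B(w) implies B(v) == B(w)
    return all(reachable(rel, v) == reachable(rel, w)
               for w in states for v in reachable(rel, w))


states = {"alpha", "beta", "gamma"}
# At alpha the agent considers only beta possible, so alpha is not in B(alpha).
rel = {("alpha", "beta"), ("beta", "beta"), ("gamma", "gamma")}
print(is_transitive(rel), is_euclidean(rel))        # True True
print(is_reflexive(rel, states))                    # False
print(beliefs_constant_on_reachable(rel, states))   # True
```

The relation is transitive and euclidean but not reflexive, so it does not partition the states: alpha belongs to no cell of its own beliefs.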

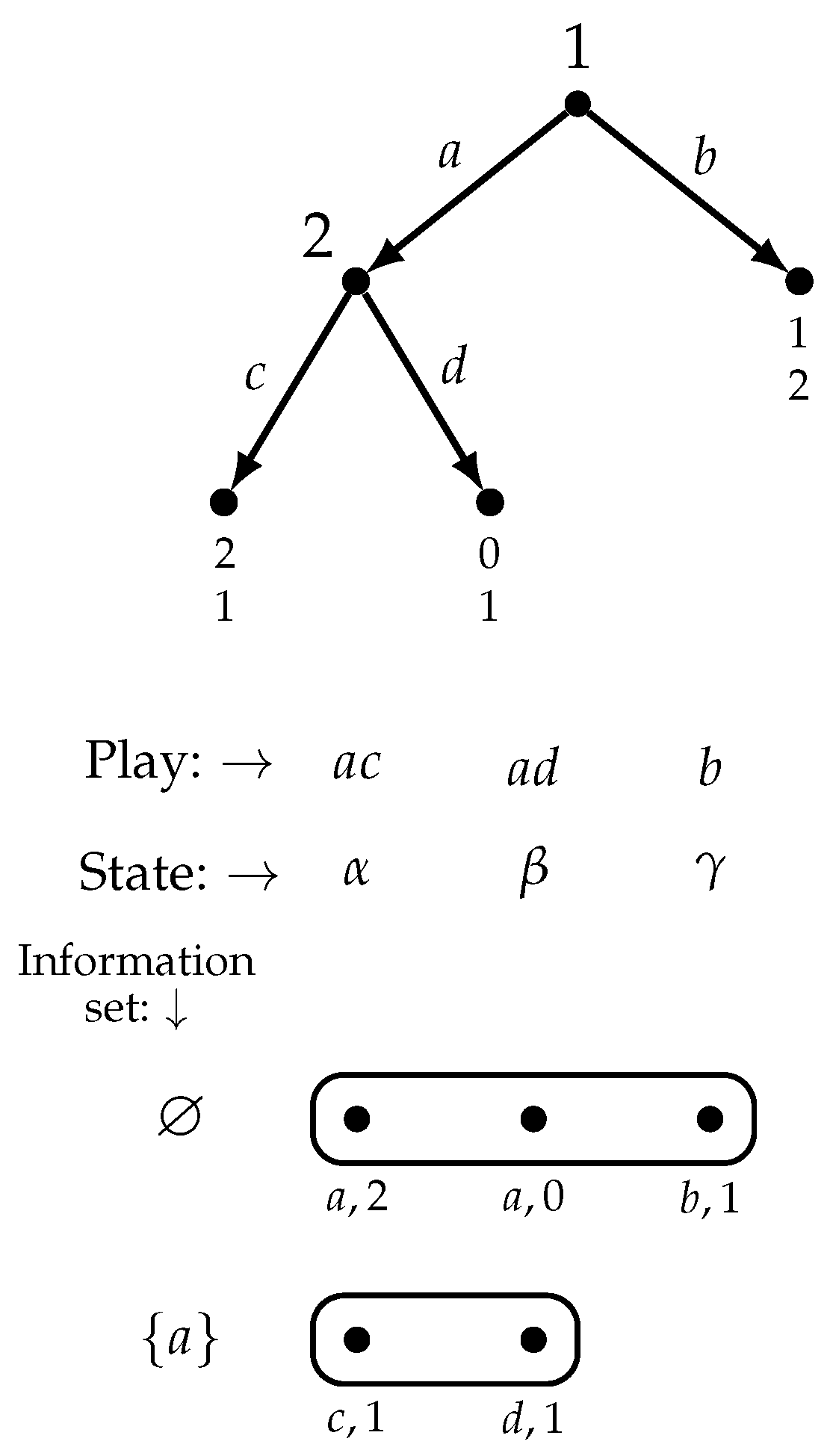

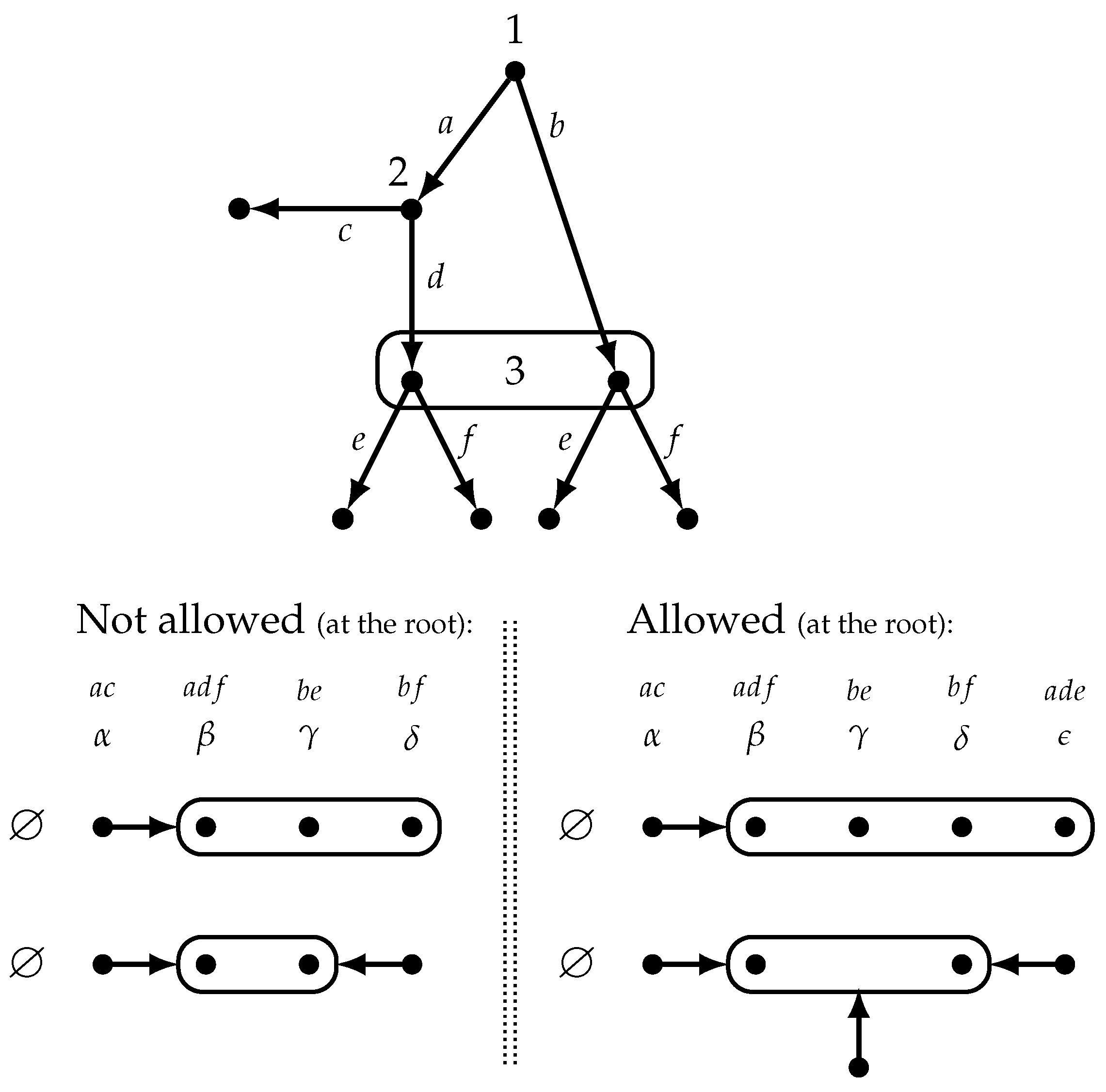

Graphically, we represent a transitive and euclidean belief relation as shown in Figure 4, where $\omega' \in \mathcal{B}(\omega)$ if and only if either there is an arrow from $\omega$ to the rounded rectangle containing $\omega'$, or $\omega$ and $\omega'$ are enclosed in the same rounded rectangle (that is, if there is an arrow from state $\omega$ to a rounded rectangle, then, for every $\omega'$ in that rectangle, $\omega' \in \mathcal{B}(\omega)$; and, for any two states $\omega$ and $\omega'$ that are enclosed in a rounded rectangle, $\omega' \in \mathcal{B}(\omega)$).

The objects of beliefs are propositions or events (i.e., sets of states; events are denoted by bold-type capital letters). We say that at state $\omega$ the agent believes event $\mathbf{E} \subseteq \Omega$ if and only if $\mathcal{B}(\omega) \subseteq \mathbf{E}$. For example, Figure 4 depicts a state at which the agent believes a particular event. We say that, at state $\omega$, event $\mathbf{E}$ is true if $\omega \in \mathbf{E}$. In the case illustrated in Figure 4, at the state just mentioned the agent erroneously believes that event, since the event is not true at that state (the state does not belong to the event). We say that at state $\omega$ the agent has correct beliefs if $\omega \in \mathcal{B}(\omega)$ (note that it is a consequence of euclideanness of the relation $\mathcal{B}$ that, even if the agent’s beliefs are objectively incorrect, she always believes that what she believes is true: if $\omega' \in \mathcal{B}(\omega)$ then $\omega' \in \mathcal{B}(\omega')$).
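The definitions of belief, truth and correct beliefs translate directly into set operations. In this illustrative Python sketch (the encoding, helper names and state labels are hypothetical and are not taken from Figure 4), the agent at state alpha believes an event that is not true there, yet, as euclideanness guarantees, she believes that what she believes is true.

```python
def reachable(rel, w):
    """B(w): states the agent considers possible at w."""
    return {v for (u, v) in rel if u == w}


def believes(rel, w, event):
    # At w the agent believes E iff B(w) is a subset of E.
    return reachable(rel, w) <= set(event)


def is_true(w, event):
    # E is true at w iff w belongs to E.
    return w in event


def has_correct_beliefs(rel, w):
    # Correct beliefs at w: w is in B(w) (local reflexivity).
    return w in reachable(rel, w)


def believes_own_beliefs_true(rel, w):
    # With a euclidean relation this always holds: v in B(w) implies v in B(v).
    return all(v in reachable(rel, v) for v in reachable(rel, w))


rel = {("alpha", "beta"), ("beta", "beta")}   # transitive and euclidean
E = {"beta"}
print(believes(rel, "alpha", E), is_true("alpha", E))  # True False: an erroneous belief
print(has_correct_beliefs(rel, "alpha"))                # False
print(believes_own_beliefs_true(rel, "alpha"))          # True
```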

3.2. Models of Games

As a starting point in the definition of a model of a game, we take a set of states $\Omega$ and provide an interpretation of each state in terms of a particular play of the game, by means of a function $\zeta : \Omega \to Z$ that associates, with every state $\omega$, a play or terminal history $\zeta(\omega) \in Z$. Each state $\omega$ also provides a description of the beliefs of the active players by means of a binary relation $\mathcal{B}_h$ on $\Omega$ representing the beliefs of $\iota(h)$, the player who moves at decision history $h$. It would be more precise to make the active player explicit in the notation for this relation, but we have chosen the lighter notation since there is no ambiguity, because we assume (see Appendix A) that at every decision history there is a unique player who is active there. Note that beliefs are specified only at histories that are reached at a given state, in the sense that $\mathcal{B}_h(\omega) \neq \emptyset$ if and only if $h \prec \zeta(\omega)$ (that is, $h$ is a proper prefix of the play $\zeta(\omega)$).

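Definition 1. A model of an extensive-form game is a tuple $\langle \Omega, \zeta, \{\mathcal{B}_h\}_{h \in D} \rangle$ where

$\Omega$ is a set of states.

$\zeta : \Omega \to Z$ is an assignment of a terminal history to each state.

For every $h \in D$, $\mathcal{B}_h \subseteq \Omega \times \Omega$ is a belief relation that satisfies the following properties: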

- 1.

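$\mathcal{B}_h(\omega) \neq \emptyset$ if and only if $h \prec \zeta(\omega)$ [beliefs are specified only at reached decision histories and are consistent: consistency means that there is no event $\mathbf{E}$ such that both $\mathbf{E}$ and its complement are believed; it is well known that, at state $\omega$, beliefs are consistent if and only if $\mathcal{B}_h(\omega) \neq \emptyset$].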

- 2.

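If $\omega' \in \mathcal{B}_h(\omega)$ then $h' \prec \zeta(\omega')$ for some $h' \in D$ such that $h' \in I(h)$ [the active player at history $h$ correctly believes that her information set that contains $h$ has been reached; recall (see Appendix A) that $h' \in I(h)$ (also written as $h' \sim h$) if and only if $h$ and $h'$ belong to the same information set of player $\iota(h)$ (thus $\iota(h') = \iota(h)$)].

- 3.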

If then (1) and (2) if with then [by (1), beliefs satisfy positive and negative introspection and, by (2), beliefs are the same at any two histories in the same information set; thus one can unambiguously refer to a player’s beliefs at an information set, which is what we do in Figures 5–9].

- 4.

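If $\omega' \in \mathcal{B}_h(\omega)$ and $h' \prec \zeta(\omega')$ with $h' \in I(h)$, then, for every action $a \in A(h')$ (note that $A(h') = A(h)$), there is an $\omega'' \in \mathcal{B}_h(\omega)$ such that $h'a \preceq \zeta(\omega'')$.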

The last condition states that if, at state $\omega$ and history $h$ reached at $\omega$ ($h \prec \zeta(\omega)$), player $\iota(h)$ considers it possible that the play of the game has reached history $h'$, which belongs to her information set that contains $h$, then, for every action a available at that information set, there is a state $\omega''$ that she considers possible at $h$ ($\omega'' \in \mathcal{B}_h(\omega)$) where she takes action a at history $h'$ ($h'a \preceq \zeta(\omega'')$). This means that, for every available action, the active player at $h$ considers it possible that she takes that action and thus has a belief about what will happen conditional on taking it. A further “natural” restriction on beliefs will be discussed later (Definition 6).
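Conditions 1, 2 and 4 of Definition 1 can be checked mechanically in a finite model. The Python sketch below is a simplification under stated assumptions: it treats only perfect-information games (so that $I(h) = \{h\}$ and condition 2 reduces to $h \prec \zeta(\omega')$), it omits condition 3, and the game, the state labels and the dictionary encoding are hypothetical.

```python
# Hypothetical perfect-information game: Player 1 chooses a or b at the empty
# history (); b ends the game; after a, Player 2 chooses c or d.
# Histories are tuples of actions; with perfect information I(h) = {h}.
ACTIONS = {(): ["a", "b"], ("a",): ["c", "d"]}
DECISION_HISTORIES = list(ACTIONS)


def is_prefix(h, play):
    return play[:len(h)] == h


def check_model(play, beliefs):
    """Check conditions 1, 2 and 4 of Definition 1 (perfect-information case).

    play:    state -> terminal history (the function zeta)
    beliefs: decision history h -> state -> set of states (B_h)
    """
    for h in DECISION_HISTORIES:
        for w in play:
            believed = beliefs[h].get(w, set())
            reached = is_prefix(h, play[w])
            if bool(believed) != reached:  # condition 1: beliefs iff h is reached
                return False
            if not all(is_prefix(h, play[v]) for v in believed):  # condition 2
                return False
            for a in ACTIONS[h]:  # condition 4: every action is an open possibility
                if believed and not any(is_prefix(h + (a,), play[v]) for v in believed):
                    return False
    return True


play = {"s1": ("a", "c"), "s2": ("a", "d"), "s3": ("b",)}
beliefs = {
    (): {w: {"s1", "s3"} for w in play},                 # Player 1: "if a then c"
    ("a",): {"s1": {"s1", "s2"}, "s2": {"s1", "s2"}},    # Player 2 active only after a
}
print(check_model(play, beliefs))  # True
```

Replacing Player 1's belief set by {"s1"} violates condition 4, since action b would no longer be an open possibility for her.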

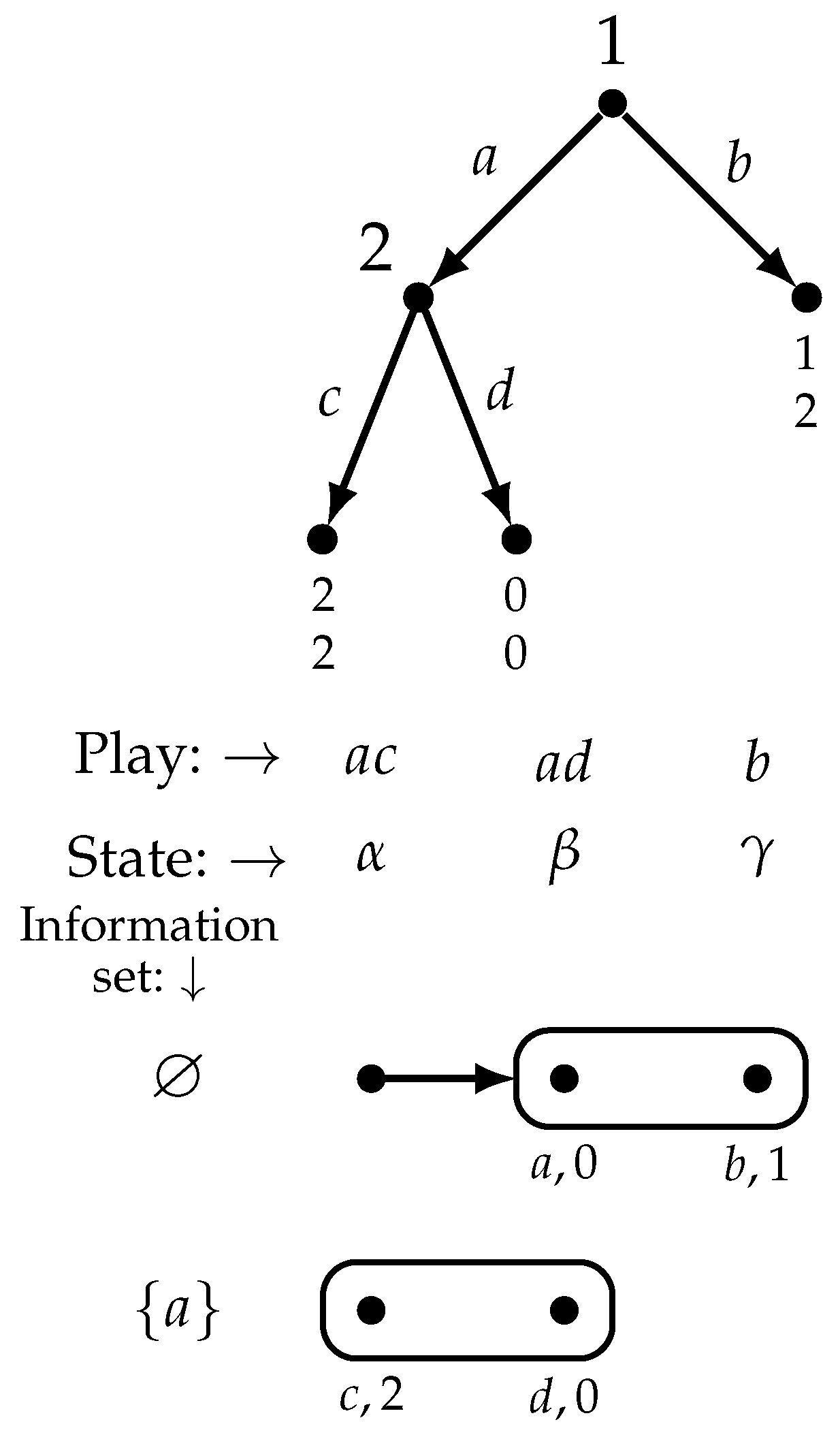

Figure 5 reproduces the game of Figure 1 and shows a model of it. For every reached decision history, under every state that the corresponding player considers possible we have shown the action actually taken by that player and the player’s payoff (at the terminal history associated with that state).

Suppose, for example, that the actual state is the one at which Players 1, 2 and 3 play a, b and d, respectively. That state encodes the following facts and beliefs.

- 1.

As a matter of fact, Player 1 plays a, Player 2 plays b and Player 3 plays d.

- 2.

Player 1 (who chooses at the null history ∅) believes that if she plays a then Player 2 will also play a (this belief is erroneous since at this state Player 2 actually plays b, after Player 1 plays a) and thus her utility will be 2, and she believes that if she plays b then Player 2 will play a and Player 3 will play d and thus her utility will be 1.

- 3.

Player 2 (who chooses at the information set that contains history $a$) correctly believes that Player 1 played a and, furthermore, correctly believes that if he plays b then Player 3 will play d and thus his utility will be 1, and believes that if he plays a his utility will be 2.

- 4.

Player 3 (who chooses at the information set that contains history $ab$) erroneously believes that both Player 1 and Player 2 played b; thus, she believes that if she plays c her utility will be 0 and if she plays d her utility will be 1.

On the other hand, if the actual state is the one at which the play is $aa$, then the beliefs of Players 1 and 2 are as detailed above (Points 2 and 3, respectively), while no beliefs are specified for Player 3, because Player 3 does not get to play (that is, Player 3 is not active at that state, since her information set is not reached).

3.3. Rationality

Consider again the model of Figure 5 and the state at which the play is $abd$. There Player 1 believes that if she takes action a, her utility will be 2, and if she takes action b, her utility will be 1. Thus, if she is rational, she must take action a. Indeed, at that state she does take action a and thus she is rational (although she will later discover that her belief was erroneous: the outcome turns out to be $abd$, not $aa$, so that her utility will be 1, not 2). Since Player 1 has the same beliefs at every state, we declare Player 1 to be rational at precisely those states where she takes action a, namely the states at which the play is $abd$ or $aa$. Similar reasoning leads us to conclude that Player 2 is rational at those states where he takes action a, and that Player 3 is rational at those states where she takes action d. If we denote by $\mathbf{R}$ the event that all the active players are rational, then in the model of Figure 5 the state at which the play is $aa$ belongs to $\mathbf{R}$ (note that at that state Player 3 is not active).

We need to define the notion of rationality more precisely. Various definitions of rationality have been suggested in the context of extensive-form games, most notably material rationality and substantive rationality [28,29]. The notion of material rationality is the weaker of the two, in that a player can be found to be irrational only at decision histories of hers that are actually reached (substantive rationality, on the other hand, is more demanding, since a player can be labeled as irrational at a decision history $h$ of hers even if $h$ is not reached). Given that we have adopted a purely behavioral approach, the natural notion for us is the weaker one, namely material rationality. We will adopt a very weak version of it, according to which, at a state $\omega$ and reached history $h$ (that is, $h \prec \zeta(\omega)$), the active player at $h$ is rational if the following is the case: if a is the action that the player takes at $h$ at state $\omega$ (that is, $ha \preceq \zeta(\omega)$) then there is no other action at $h$ that, according to her beliefs, guarantees a higher utility.

Definition 2. Let $\omega$ be a state, $h$ a decision history that is reached at $\omega$ ($h \prec \zeta(\omega)$) and $a, b \in A(h)$ two actions available at $h$.

- (A)

We say that, at $\omega$ and $h$, the active player believes that b is better than a if, for all $\omega', \omega'' \in \mathcal{B}_h(\omega)$ and for all $h', h'' \in D$ such that $h', h'' \in I(h)$ (that is, histories $h'$ and $h''$ belong to the same information set as $h$), if a is the action taken at history $h'$ at state $\omega'$, that is, $h'a \preceq \zeta(\omega')$, and b is the action taken at $h''$ at state $\omega''$, that is, $h''b \preceq \zeta(\omega'')$, then $u_{\iota(h)}(\zeta(\omega')) < u_{\iota(h)}(\zeta(\omega''))$. Thus, the active player at history $h$ believes that action b is better than action a if, restricting attention to the states that she considers possible, the largest utility that she obtains if she plays a is less than the lowest utility that she obtains if she plays b.

- (B)

We say that player $\iota(h)$ is rational at history $h$ at state $\omega$ if and only if the following is true: if $ha \preceq \zeta(\omega)$ (that is, a is the action played at $h$ at state $\omega$) then, for every $b \in A(h)$, it is not the case that, at state $\omega$ and history $h$, player $\iota(h)$ believes that b is better than a.

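Finally, we define the event that all the active players are rational, denoted by $\mathbf{R}$, as follows:

$$\mathbf{R} = \{\omega \in \Omega : \iota(h) \text{ is rational at } h \text{ at } \omega \text{ for every } h \in D \text{ such that } h \prec \zeta(\omega)\} \tag{1}$$

For example, as noted above, in the model of Figure 5 the state at which the play is $aa$ belongs to $\mathbf{R}$.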
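Definition 2 and the event $\mathbf{R}$ can be computed directly from the believed states. The following Python sketch assumes perfect information (so $I(h) = \{h\}$) and uses a hypothetical two-player game, state labels and utilities. The function `believes_better` compares every pair of outcomes, as in Definition 2(A); when both sets of outcomes are nonempty this is the same as comparing the largest utility from a with the smallest utility from b.

```python
# Hypothetical perfect-information game: Player 1 chooses a or b at (); b ends
# the game; after a, Player 2 chooses c or d. Utilities are illustrative.
ACTIONS = {(): ["a", "b"], ("a",): ["c", "d"]}
PLAYER = {(): 1, ("a",): 2}
UTILITY = {
    1: {("a", "c"): 0, ("a", "d"): 2, ("b",): 1},
    2: {("a", "c"): 1, ("a", "d"): 1, ("b",): 0},
}


def is_prefix(h, play):
    return play[:len(h)] == h


def believes_better(play, beliefs, h, w, a, b):
    """Definition 2(A) with I(h) = {h}: every believed outcome of a is worse than
    every believed outcome of b (vacuously true if either set is empty)."""
    u = UTILITY[PLAYER[h]]
    believed = beliefs[h].get(w, set())
    utils_a = [u[play[v]] for v in believed if is_prefix(h + (a,), play[v])]
    utils_b = [u[play[v]] for v in believed if is_prefix(h + (b,), play[v])]
    return all(x < y for x in utils_a for y in utils_b)


def is_rational(play, beliefs, h, w):
    """Definition 2(B): no action is believed to be better than the one taken."""
    taken = play[w][len(h)]
    return not any(believes_better(play, beliefs, h, w, a=taken, b=b)
                   for b in ACTIONS[h] if b != taken)


def event_R(play, beliefs):
    """States at which the active player at every reached history is rational."""
    return {w for w in play
            if all(is_rational(play, beliefs, h, w)
                   for h in ACTIONS if is_prefix(h, play[w]))}


play = {"s1": ("a", "c"), "s2": ("a", "d"), "s3": ("b",)}
beliefs = {
    (): {w: {"s1", "s3"} for w in play},               # Player 1: a -> 0, b -> 1
    ("a",): {"s1": {"s1", "s2"}, "s2": {"s1", "s2"}},  # Player 2 is indifferent
}
print(sorted(event_R(play, beliefs)))  # ['s3']
```

Player 1 believes that b is better than a, so she is irrational wherever she plays a; Player 2, being indifferent, is rational at every state where he moves.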

3.4. Correct Beliefs

The notion of correct belief was first mentioned in Section 3.1 and was identified with local reflexivity (that is, reflexivity at a state, rather than global reflexivity). Since, at any state, only the beliefs of the active players are specified, we define the event that players have correct beliefs by restricting attention to those players who actually move. Thus, the event that the active players have correct beliefs, denoted by $\mathbf{T}$ (‘T’ stands for ‘true’), is defined as follows:

$$\mathbf{T} = \{\omega \in \Omega : \omega \in \mathcal{B}_h(\omega) \text{ for every } h \in D \text{ such that } h \prec \zeta(\omega)\} \tag{2}$$

For example, in the model of Figure 5 the state at which the play is $aa$ belongs to $\mathbf{T}$.
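Since only the beliefs of active players are specified, membership in $\mathbf{T}$ is checked only at reached histories. A minimal Python sketch, with a hypothetical model (the states, plays and belief sets are illustrative and are not those of Figure 5):

```python
def is_prefix(h, play):
    return play[:len(h)] == h


def event_T(play, beliefs, decision_histories):
    """States at which, for every reached history h, the true state is in B_h."""
    return {w for w in play
            if all(w in beliefs[h].get(w, set())
                   for h in decision_histories if is_prefix(h, play[w]))}


# Histories are tuples of actions; Player 1 moves at (), Player 2 after a.
play = {"s1": ("a", "c"), "s2": ("a", "d"), "s3": ("b",)}
beliefs = {
    (): {w: {"s1", "s3"} for w in play},
    ("a",): {"s1": {"s1", "s2"}, "s2": {"s1", "s2"}},
}
print(sorted(event_T(play, beliefs, [(), ("a",)])))  # ['s1', 's3']
```

State s2 fails because Player 1, who is active there, does not consider it possible; state s3 passes even though Player 2's beliefs are unspecified there, since Player 2 is not active at s3.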

What does the expression “correct beliefs” mean? Consider the state in the model of Figure 5 at which the play is $aa$; call it $\omega$. There the active players (Players 1 and 2) have correct beliefs in the sense of (2) ($\omega \in \mathcal{B}_{\emptyset}(\omega)$ and $\omega \in \mathcal{B}_{a}(\omega)$). Consider Player 1. There are two components to Player 1’s beliefs: (i) she believes that if she plays a then Player 2 will also play a, and (ii) she believes that if she plays b then Players 2 and 3 will play a and d, respectively. The first belief is correct at state $\omega$, where Player 1 plays a and Player 2 indeed follows with a. As for the second belief, whether it is correct or not depends on how we interpret it. If we interpret it as the material conditional “if b then $bad$” (which is equivalent to “either not b or $bad$”), then it is indeed true at state $\omega$, but trivially so, because the antecedent is false there (Player 1 does not play b). If we interpret it as a counterfactual conditional “if Player 1 were to play b then Players 2 and 3 would play a and d, respectively”, then in order to decide whether the conditional is true or not one would need to enrich the model by adding a “similarity” or “closeness” relation on the set of states (in the spirit of [3,4]); one would then check whether, at the closest state(s) to $\omega$ at which Player 1 plays b, it is indeed the case that Players 2 and 3 play a and d, respectively. Note that there is no a priori reason to think that the closest state to $\omega$ where Player 1 plays b is the state, among those that Player 1 considers possible, at which the play is $bad$. This is because, as pointed out by Stalnaker ([30], p. 48), there is no necessary connection between counterfactuals, which capture causal relations, and beliefs: for example, I may believe that, if I drop the vase that I am holding in my hands, it will break (because I believe it is made of glass), but my belief is wrong because, as a matter of fact, if I were to drop it, it would not break, since it is made of plastic.

Our models do not have the resources to answer the question: “at the state at which the play is $aa$, is it true, as Player 1 believes, that if Player 1 were to play b then Players 2 and 3 would play a and d, respectively?” One could, of course, enrich the models in order to answer the question, but is there a compelling reason to do so? In other words, is it important to be able to answer such questions? If we are merely interested in determining what rational players do, then what matters is what actions they actually take and what they believe when they act, whether or not those beliefs are correct in a stronger sense than is captured by the material conditional.

Is the material conditional interpretation of “if I play a then the outcome will be x” sufficient, though? Since the crucial assumption in the proposed framework is that the agent considers all of her available actions as possible (that is, for every available action there is a doxastically accessible state where she takes that action), material conditionals are indeed sufficient: the material conditional “if I take action a the outcome will be x” zooms in, through the lens of the agent’s beliefs, on those states where action a is indeed taken and verifies that at those states the outcome is indeed x, while the states where action a is not taken are not relevant for the truth of the conditional.
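The argument can be made concrete with a short Python sketch (the game, the states and the helper names are hypothetical). A belief of the form “if I take a, the outcome is x” is evaluated as a material conditional at every state the player considers possible; the last call shows why it matters that every available action is considered possible, since otherwise all such conditionals would hold vacuously.

```python
def is_prefix(h, play):
    return play[:len(h)] == h


def material(p: bool, q: bool) -> bool:
    """'if p then q' read as 'either not p or q'."""
    return (not p) or q


def believes_conditional(play, believed, h, a, outcome):
    """At history h the player believes 'if I take a, the outcome is `outcome`'
    iff the material conditional holds at every believed state."""
    return all(material(is_prefix(h + (a,), play[v]), play[v] == outcome)
               for v in believed)


play = {"s1": ("a", "c"), "s2": ("a", "d"), "s3": ("b",)}
believed = {"s1", "s3"}   # each of Player 1's actions is an open possibility
print(believes_conditional(play, believed, (), "a", ("a", "c")))  # True
print(believes_conditional(play, believed, (), "a", ("a", "d")))  # False
# With no a-state among the believed states, every conditional about a holds
# vacuously; condition 4 of Definition 1 rules this out.
print(believes_conditional(play, {"s3"}, (), "a", ("a", "d")))    # True
```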

3.5. Self-Confirming Play

We have defined two events: the event $\mathbf{R}$ that all the active players are rational and the event $\mathbf{T}$ that all the active players have correct beliefs. In the model of Figure 5 the state $\omega$ at which the play is $aa$ belongs to $\mathbf{R} \cap \mathbf{T}$, and it so happens that $\zeta(\omega) = aa$ is a Nash equilibrium play, that is, there is a pure-strategy Nash equilibrium whose associated play is $aa$. However, as shown below, this is not always the case.

At a play associated with a state

, each active player’s chosen action is rationally justified by her beliefs at the time of choice (since

) and the beliefs concerning what will happen after that action turn out to be correct (since

), so that no player is faced with evidence that her beliefs were wrong. Does that mean that, once the final outcome

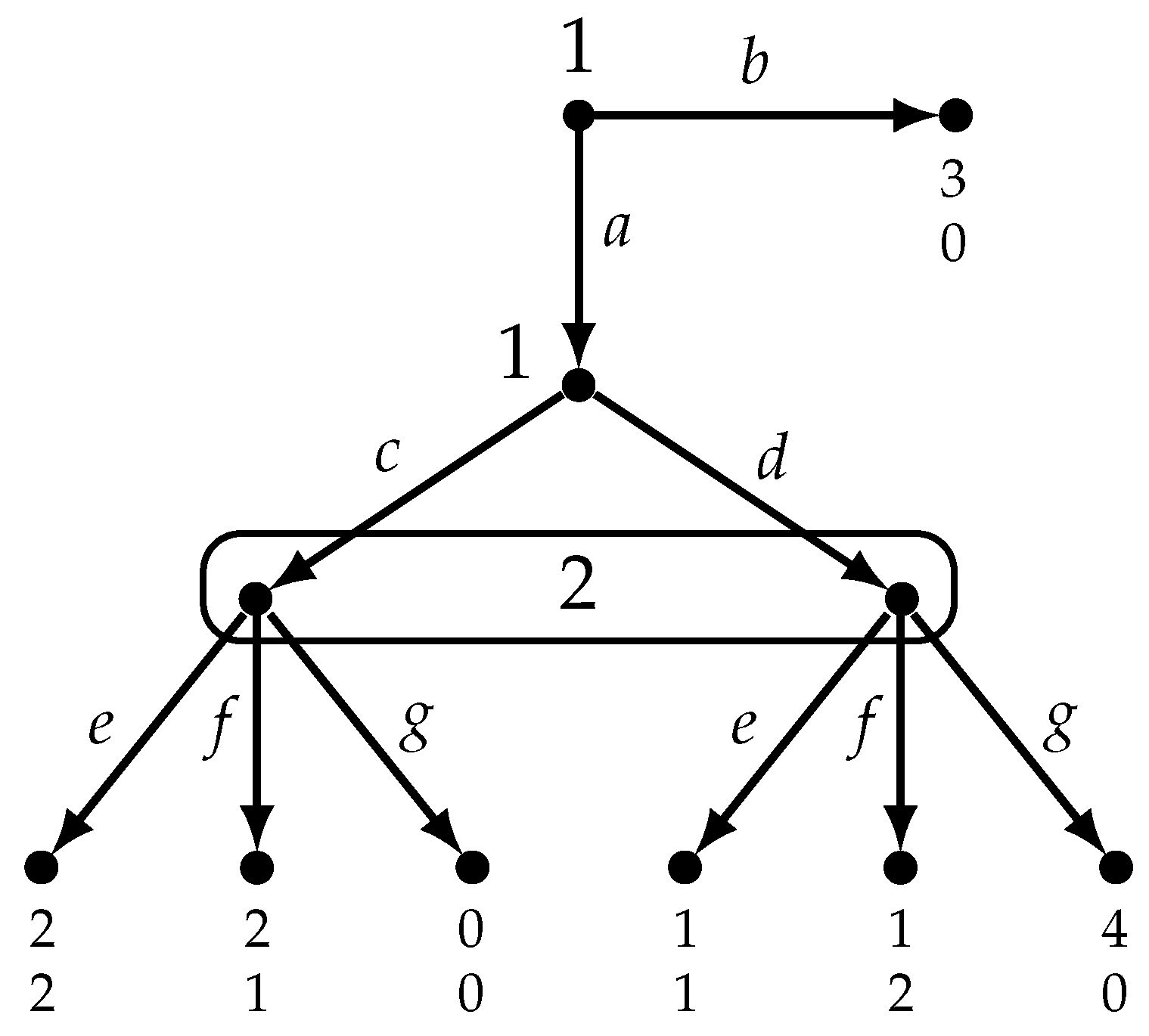

is revealed, no player regrets her actual choice? The answer is negative, because it is possible that a player, while not having any false beliefs, might not anticipate with precision the actions of the players who move after her. In the model shown in

Figure 6 we have that

, that is, at every state the active players are rational and have correct beliefs. Consider state

, where the play is

. At state

Player 1 is rational because she believes that if she plays

b her utility will be 1 and if she plays

a her utility might be 0 but might also be 2 (she is uncertain about what Player 2 will do). Thus, she does not believe that action

b is better than

a and hence it is rational for her to play

a (Definition 2). Player 2 is rational because she is indifferent between her two actions. However,

ex post, when Player 1 learns that the actual outcome is

, she regrets not taking action

b instead of

a. This example shows that, even though

,

is not a Nash equilibrium play, that is, there is no Nash equilibrium whose associated play is

.
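The regret phenomenon can be reproduced in a few lines of Python. The game below is a hypothetical stand-in chosen to match the verbal description (utility 1 from b; utility 0 or 2 from a, depending on an indifferent Player 2); it is not claimed to be the game of Figure 6, and only Player 1's beliefs are modeled.

```python
# Hypothetical game: Player 1 chooses a or b; b ends the game with utility 1 for
# her; after a, Player 2 (indifferent) chooses c (Player 1 gets 0) or d (she gets 2).
U1 = {("a", "c"): 0, ("a", "d"): 2, ("b",): 1}

play = {"s1": ("a", "c"), "s2": ("a", "d"), "s3": ("b",)}
believed_by_1 = {"s1", "s2", "s3"}   # Player 1 is uncertain about Player 2's choice


def utilities_after(action):
    """Utilities Player 1 considers possible if she takes `action` at the root."""
    return sorted({U1[play[v]] for v in believed_by_1 if play[v][0] == action})


# Definition 2: b is not believed better than a, because not every a-outcome
# is worse than every b-outcome; so playing a is rational.
b_better_than_a = all(x < y for x in utilities_after("a") for y in utilities_after("b"))

actual = "s1"                                           # actual play: a then c
correct = actual in believed_by_1                       # Player 1's beliefs are correct
regret = U1[play[actual]] < min(utilities_after("b"))   # ex post, b would have been better

print(utilities_after("a"), utilities_after("b"))  # [0, 2] [1]
print(b_better_than_a, correct, regret)             # False True True
```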

Next we introduce another event which, in conjunction with $\mathbf{T}$, guarantees that the active players’ beliefs about the opponents’ actual moves are exactly correct (note that a requirement built into the definition of a self-fulfilling equilibrium ([13], p. 523) is that “each player’s beliefs about the opponents’ play are exactly correct”). Event $\mathbf{C}$ (‘C’ for ‘certainty’) defined below rules out uncertainty about the opponents’ past choices (Point 1) as well as uncertainty about the opponents’ future choices (Point 2). Note that Point 1 is automatically satisfied in games with perfect information and thus imposes restrictions on beliefs only in imperfect-information games.

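Definition 3. A state $\omega$ belongs to event $\mathbf{C}$ if and only if, for every reached history $h$ at $\omega$ (that is, for every $h \in D$ such that $h \prec \zeta(\omega)$), for all $\omega', \omega'' \in \mathcal{B}_h(\omega)$ and for all $h', h'' \in I(h)$ (recall that $I(h)$ is the information set that contains $h$),

- 1.

if $h' \prec \zeta(\omega')$ and $h'' \prec \zeta(\omega'')$ then $h' = h''$,

- 2.

for every $a \in A(h)$, if $h'a \preceq \zeta(\omega')$ and $h''a \preceq \zeta(\omega'')$ then $\zeta(\omega') = \zeta(\omega'')$.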

Note that, concerning Point 1, a player may be erroneous in her certainty about the opponents’ past choices, that is, it may be that $\omega \in \mathbf{C}$, the actual reached history is $h$ and yet player $\iota(h)$ is certain that she is moving at history $h'$ with $h' \neq h$ (for example, in the model of Figure 5, at the state at which the play is $abd$, which belongs to event $\mathbf{C}$, and at reached history $ab$, Player 3 is certain that she is moving at history $bb$ while, as a matter of fact, she is moving at history $ab$); and, concerning Point 2, a player may also be erroneous in her certainty about what will happen after her choice (for example, in the model of Figure 5, at that same state and at history ∅, Player 1 is certain that if she takes action a then Player 2 will also play a, but she is wrong about this, because, as a matter of fact, at that state Player 2 follows with b rather than a).
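Point 2 of Definition 3 amounts to requiring that, within the believed states, each action determines a unique play. A minimal Python sketch, restricted to perfect-information games (where Point 1 holds automatically) and using a hypothetical game and hypothetical state labels:

```python
def is_prefix(h, play):
    return play[:len(h)] == h


def certain_about_future(play, believed, h, actions):
    """Point 2 of Definition 3 with perfect information (I(h) = {h}): any two
    believed states in which the same action is taken at h have the same play."""
    for a in actions:
        plays = {play[v] for v in believed if is_prefix(h + (a,), play[v])}
        if len(plays) > 1:
            return False
    return True


play = {"s1": ("a", "c"), "s2": ("a", "d"), "s3": ("b",)}
print(certain_about_future(play, {"s1", "s3"}, (), ["a", "b"]))        # True
print(certain_about_future(play, {"s1", "s2", "s3"}, (), ["a", "b"]))  # False
```

The second call fails because, after action a, the player considers two different continuations possible.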

In the model of Figure 5 $\mathbf{C} = \Omega$, while in the model of Figure 6 $\mathbf{C} = \emptyset$, because at the null history ∅ Player 1 is uncertain about what will happen if she takes action a.

If state $\omega$ belongs to the intersection of events $\mathbf{T}$ and $\mathbf{C}$ then, at state $\omega$, each active player’s beliefs about the opponents’ actual play are exactly correct. Note, however, that, as explained in Section 3.4, there is no way of telling whether or not a player is also correct about what would happen after her counterfactual choices, because the models that we are considering are not rich enough to address the issue of counterfactuals.

Definition 4. Let G be a game and z a play (or terminal history) in G. We say that z is a self-confirming play if there exists a model of G and a state $\omega$ in that model such that (1) $\omega \in \mathbf{R} \cap \mathbf{T} \cap \mathbf{C}$ and (2) $\zeta(\omega) = z$.

Definition 5. Given a game G and a play z in G, call z a Nash play if there is a pure-strategy Nash equilibrium whose induced play is z.
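Definition 5 can be checked by brute force in small games. The Python sketch below enumerates the pure-strategy profiles of a hypothetical two-player perfect-information game (its structure and payoffs are illustrative assumptions), keeps the Nash equilibria and collects the plays they induce. In this game the play ("a", "c") is not a Nash play, since Player 1 would rather deviate to b.

```python
from itertools import product

# Hypothetical game: decision history -> (player, actions); terminal -> payoffs.
GAME = {(): (1, ["a", "b"]), ("a",): (2, ["c", "d"])}
PAYOFF = {("a", "c"): (0, 1), ("a", "d"): (2, 1), ("b",): (1, 0)}
HISTORIES = list(GAME)


def induced_play(profile):
    """Follow a pure-strategy profile (history -> action) from the root."""
    h = ()
    while h in GAME:
        h = h + (profile[h],)
    return h


def is_nash(profile):
    """No player gains by changing her actions at her own decision histories."""
    for player in {p for p, _ in GAME.values()}:
        own = [h for h in HISTORIES if GAME[h][0] == player]
        current = PAYOFF[induced_play(profile)][player - 1]
        for deviation in product(*(GAME[h][1] for h in own)):
            alt = {**profile, **dict(zip(own, deviation))}
            if PAYOFF[induced_play(alt)][player - 1] > current:
                return False
    return True


def nash_plays():
    plays = set()
    for choice in product(*(GAME[h][1] for h in HISTORIES)):
        profile = dict(zip(HISTORIES, choice))
        if is_nash(profile):
            plays.add(induced_play(profile))
    return plays


print(sorted(nash_plays()))  # [('a', 'd'), ('b',)]
```

Strategies specify actions at every decision history, including unreached ones, which is why the profile in which Player 1 plays b and Player 2 plays c is an equilibrium inducing the play ("b",).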

It turns out that, in perfect-information games in which no player moves more than once along any play, the two notions of self-confirming play and Nash play are equivalent ([1], Proposition 1, p. 1012). For games with imperfect information, while it is still true that a Nash play is a self-confirming play, there may be self-confirming plays that are not Nash plays. The reason for this is that two players might have different beliefs about the potential choice of a third player.

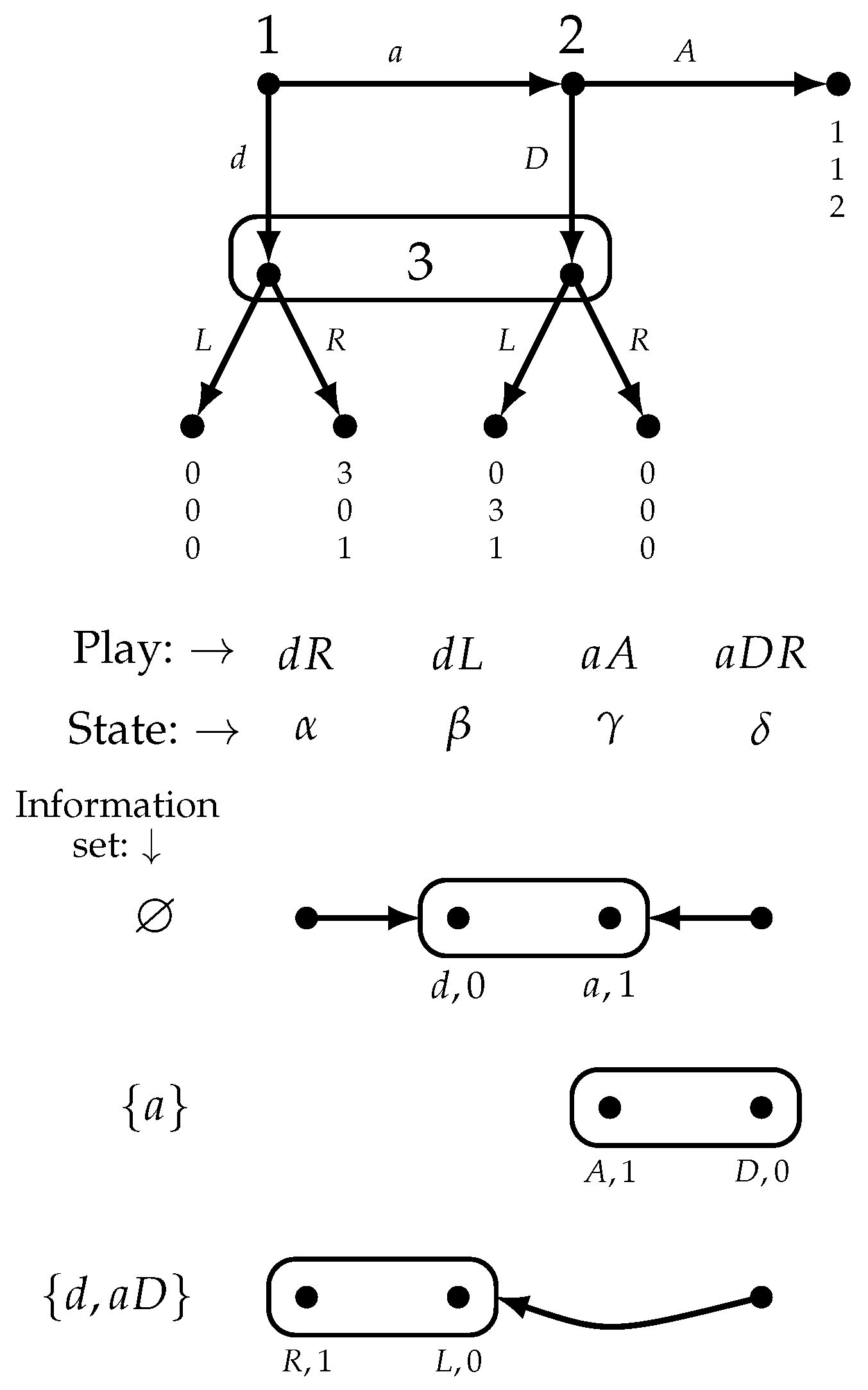

Figure 7 reproduces the game of Figure 3 together with a model of it.

In the model of Figure 7 there is a state $\omega$ such that $\omega \in \mathbf{R}$, $\omega \in \mathbf{T}$ and $\omega \in \mathbf{C}$, so that $\omega \in \mathbf{R} \cap \mathbf{T} \cap \mathbf{C}$. Thus, at state $\omega$ the active players (Players 1 and 2) are rational, have correct beliefs and have no uncertainty, and yet $\zeta(\omega)$ is not a Nash play (there is no Nash equilibrium that yields the play $\zeta(\omega)$). Players 1 and 2 have different beliefs about what Player 3 would do at her information set: at state $\omega$ Player 1 believes that if she plays d then Player 3 will play L, while Player 2 believes that if he plays D then Player 3 will play R.

Next we introduce a new event, denoted by $\mathbf{A}$ (‘A’ stands for ‘agreement’), that rules out such disagreement, and we use it to provide a doxastic characterization of Nash play in general games (with possibly imperfect information). First we need to add one more condition to the definition of a model of a game; this condition is relevant only if the game has imperfect information.

The definition of model given in Section 3 (Definition 1) allows for “unreasonable” beliefs that express a causal link between a player’s action and her opponent’s reaction to it, when the latter does not observe the former’s choice. As an illustration of such beliefs, consider a game where Player 1 moves first, choosing between actions a and b, and Player 2 moves second, choosing between actions c and d without being informed of Player 1’s choice, that is, histories a and b belong to the same information set of Player 2. Definition 1 allows Player 1 to have the following beliefs: “if I play a, then Player 2 will play c, while if I play b then Player 2 will play d”. Such beliefs ought to be rejected as “irrational” on the grounds that there cannot be a causal link between Player 1’s move and Player 2’s choice, since Player 2 does not get to observe Player 1’s move and thus cannot react differently to Player 1’s choice of a and Player 1’s choice of b. [It should be noted, however, that several authors have argued that such beliefs are not necessarily irrational: see, for example, [31,32,33,34,35,36].] A “causally correct” belief for Player 1 would require that the predicted choice(s) of Player 2 be the same, no matter what action Player 1 herself chooses.

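Definition 6. A causally restricted model of a game is a model (Definition 1) that satisfies the following additional restriction (a verbal interpretation follows; note that, for games with perfect information, there is no difference between a model and a causally restricted model, since (3) is vacuously satisfied).

- 5.

Let $\omega$ be a state, $h$ a decision history reached at $\omega$ ($h \prec \zeta(\omega)$) and a and b two actions available at $h$ ($a, b \in A(h)$). Let $h_1$ and $h_2$ be two decision histories that belong to the same information set of player $j = \iota(h_1)$ ($h_2 \in I(h_1)$) and let c and d be two actions available at $h_1$ ($c, d \in A(h_1)$). Then the following holds (recall that $I(h)$ denotes the information set that contains decision history $h$, that is, $h' \in I(h)$ if and only if $h' \sim h$):

$$\text{if } \omega_1, \omega_2 \in \mathcal{B}_h(\omega),\ h', h'' \in I(h),\ h'a \preceq h_1,\ h_1 c \preceq \zeta(\omega_1),\ h''b \preceq h_2 \text{ and } h_2 d \preceq \zeta(\omega_2), \text{ then either } c = d \text{ or there exist } \omega_3, \omega_4 \in \mathcal{B}_h(\omega) \text{ such that } h_1 d \preceq \zeta(\omega_3) \text{ and } h_2 c \preceq \zeta(\omega_4). \tag{3}$$

In words: if, at state $\omega$ and reached history $h$, player $i = \iota(h)$ considers it possible that, if she takes action a, history $h_1$ is reached and player j takes action c at $h_1$, and player i also considers it possible that, if she takes action b, then history $h_2$ is reached, which belongs to the same information set as $h_1$, and player j takes action d at $h_2$, then either c = d or, at state $\omega$ and history $h$, player i must also consider it possible that (1) after taking action a, $h_1$ is reached and player j takes action d at $h_1$ and (2) after taking action b, $h_2$ is reached and player j takes action c at $h_2$.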
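For the two-stage example discussed before Definition 6 (Player 1 picks a or b; Player 2 picks c or d without observing her choice), Condition 5 reduces to a closure property of the set of plays that Player 1 considers possible. A Python sketch under that simplification; representing a belief by its set of plays is an assumption, and only this particular game form is handled.

```python
from itertools import product

# Player 1's belief is summarised by the set of plays (x, y) she considers possible,
# where x in {a, b} is her action and y in {c, d} is Player 2's unobserving choice.


def causally_restricted(believed_plays):
    """Condition 5 specialised to this game: if (x, y) and (x2, y2) are both
    believed with y != y2, then (x, y2) and (x2, y) must be believed too."""
    for (x, y), (x2, y2) in product(believed_plays, repeat=2):
        if y != y2 and not {(x, y2), (x2, y)} <= believed_plays:
            return False
    return True


print(causally_restricted({("a", "c"), ("b", "d")}))                          # False
print(causally_restricted({("a", "c"), ("b", "c")}))                          # True
print(causally_restricted({("a", "c"), ("a", "d"), ("b", "c"), ("b", "d")}))  # True
```

The first belief set is the pattern “if I play a, then Player 2 will play c, while if I play b then Player 2 will play d”, which the restriction rules out.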

Figure 8 shows a game and four partial models of it, giving only the beliefs of Player 1 (at history ∅): two of them violate Condition 5 of Definition 6 (the ones on the left that are labeled “not allowed”), while the other two satisfy it. Note that the models shown in Figures 5, 6 and 7 are all causally restricted models.

Now we turn to the notion of agreement, which is intended to rule out situations like the one shown in Figure 7, where Players 1 and 2 disagree about what action Player 3 would take at her information set.

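Definition 7. We say that at state $\omega$ active players i and j consider future information set $I(h)$ of player $k = \iota(h)$ if there exist

- 1.

two decision histories $h_i$ and $h_j$ that are reached at $\omega$ (that is, $h_i \prec \zeta(\omega)$ and $h_j \prec \zeta(\omega)$) and belong to i and j, respectively (that is, $\iota(h_i) = i$ and $\iota(h_j) = j$),

- 2.

states $\omega_i \in \mathcal{B}_{h_i}(\omega)$ and $\omega_j \in \mathcal{B}_{h_j}(\omega)$,

- 3.

decision histories $h_i' \in I(h_i)$, $h_j' \in I(h_j)$ and $h', h'' \in I(h)$,

such that, for some $a \in A(h_i')$, $h_i' a \preceq h' \prec \zeta(\omega_i)$ and, for some $b \in A(h_j')$, $h_j' b \preceq h'' \prec \zeta(\omega_j)$.

That is, player i at $h_i$ considers it possible that the play has reached history $h_i'$ and, after taking an action at $h_i'$, information set $I(h)$ of player k is reached, and player j at $h_j$ considers it possible that the play has reached history $h_j'$ and, after taking an action at $h_j'$, that same information set $I(h)$ of player k is reached.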

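Definition 8. We say that at state $\omega$ active players i and j are in agreement if, for every future information set $I(h)$ that they consider (Definition 7), they predict the same choice(s) of player $k = \iota(h)$ at $I(h)$, that is, if player i is active at reached history $h_i$, player j is active at reached history $h_j$, and $I(h)$ is a future information set of player k that they both consider at $\omega$, then

- 1.

if $\omega' \in \mathcal{B}_{h_i}(\omega)$ and $h' \prec \zeta(\omega')$ with $h' \in I(h)$, and $h'c \preceq \zeta(\omega')$ for some $c \in A(h)$, then there exists an $\omega'' \in \mathcal{B}_{h_j}(\omega)$ such that, for some $h'' \in I(h)$, $h''c \preceq \zeta(\omega'')$, and

- 2.

if $\omega' \in \mathcal{B}_{h_j}(\omega)$ and $h' \prec \zeta(\omega')$ with $h' \in I(h)$, and $h'c \preceq \zeta(\omega')$ for some $c \in A(h)$, then there exists an $\omega'' \in \mathcal{B}_{h_i}(\omega)$ such that, for some $h'' \in I(h)$, $h''c \preceq \zeta(\omega'')$.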
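A simplified reading of the agreement condition can be sketched in Python. As assumptions of this sketch, each player's beliefs are summarised by the set of plays she considers possible, an information set counts as considered whenever some believed play passes through it, and agreement requires identical sets of predicted choices there. The game is hypothetical and only loosely modeled on the disagreement described for Figure 7; the sketch does not check that the information set lies after each player's own move.

```python
def predicted_choices(believed_plays, info_set):
    """Actions at info_set (a set of histories) that occur in some believed play."""
    return {play[len(h)] for play in believed_plays for h in info_set
            if len(play) > len(h) and play[:len(h)] == h}


def in_agreement(plays_i, plays_j, info_set):
    """If both players consider info_set, they must predict the same choices there."""
    pred_i = predicted_choices(plays_i, info_set)
    pred_j = predicted_choices(plays_j, info_set)
    if not pred_i or not pred_j:  # not considered by both: no restriction
        return True
    return pred_i == pred_j


# Hypothetical game: Player 1 picks a or d; after a, Player 2 picks A or D;
# Player 3 moves at the information set {("d",), ("a", "D")}, choosing L or R.
info_set_3 = {("d",), ("a", "D")}
player1 = {("a", "A"), ("d", "L")}        # "if I play d, Player 3 plays L"
player2 = {("a", "A"), ("a", "D", "R")}   # "if I play D, Player 3 plays R"
print(in_agreement(player1, player2, info_set_3))                        # False
print(in_agreement(player1, {("a", "A"), ("a", "D", "L")}, info_set_3))  # True
```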

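Finally we define the event, denoted by $\mathbf{A}$, that any two active players are in agreement:

$$\mathbf{A} = \{\omega \in \Omega : \text{any two players who are active at } \omega \text{ are in agreement at } \omega\}$$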

We can now state our characterization result, according to which a self-confirming play is a Nash play if and only if the beliefs of any two players are in agreement about the hypothetical choice(s) of a third player at a future information set that they both consider. As in [1], we restrict attention to games that satisfy the property that each player moves at most once along any play. Equivalently, one could consider the agent form of the game, in which a player's different information sets are assigned to different agents, all with that player's payoff function.

Proposition 1. Consider a finite extensive-form game G where no player moves more than once along any play. Then,

- (A)

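If z is a Nash play of G then there is a causally restricted model of G and a state $\omega$ in that model such that (1) $\omega \in \mathbf{R} \cap \mathbf{T} \cap \mathbf{C} \cap \mathbf{A}$ and (2) $\zeta(\omega) = z$.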

- (B)

For any causally restricted model of G and for every state $\omega$ in that model, if $\omega \in \mathbf{R} \cap \mathbf{T} \cap \mathbf{C} \cap \mathbf{A}$ then $\zeta(\omega)$ is a Nash play.

The proof of Proposition 1 is given in Appendix B.

Note that, in a perfect-information game,

. Hence Proposition 1 in [

1] is a corollary of the above Proposition.