Behavioural Isomorphism, Cognitive Economy and Recursive Thought in Non-Transitive Game Strategy

Abstract

1. Competitive Decision-Making

2. Taxonomy of Strategy in RPS

2.1. Frequency-Based Strategy

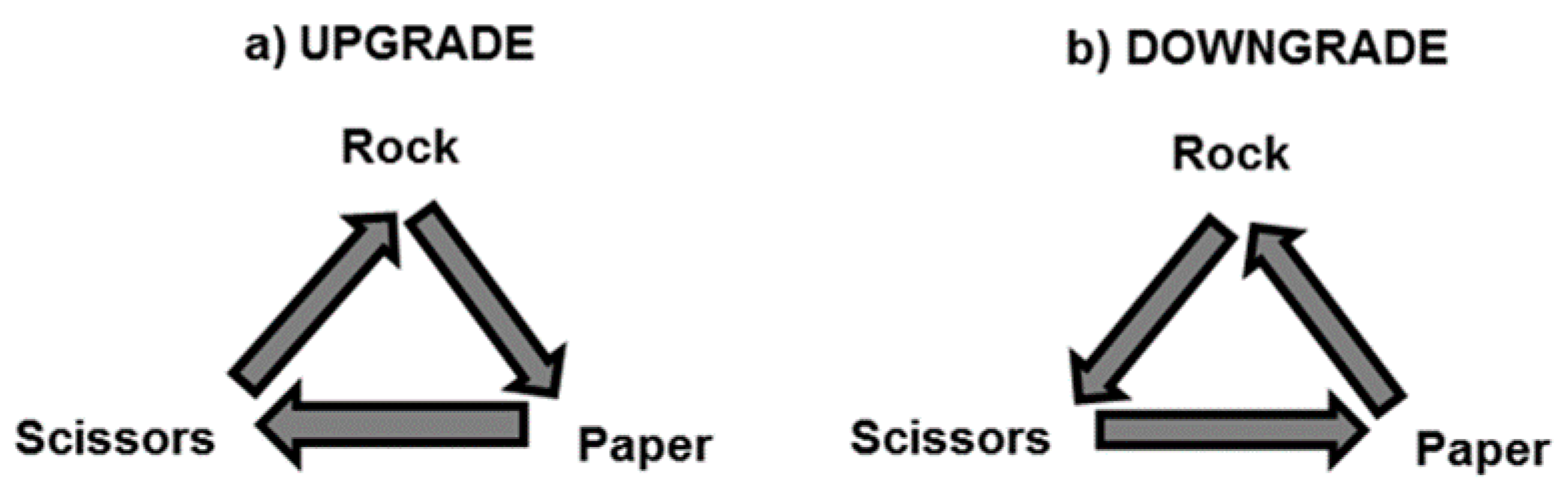

2.2. Cycle-Based Strategy

2.3. Outcome-Based Strategy

3. Behavioural Isomorphism in RPS Strategy

4. Differences in Cognitive Economy

5. Differences in Recursive Thought

$$
\left\{
\begin{array}{l}
\text{IF } S(n+1) = p \text{ THEN } O(n+1) = s, \\
\text{IF } O(n+1) = s \text{ THEN } S(n+1) = r
\end{array}
\right\}.
$$
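The two conditional rules can be traced with a minimal Python sketch. It reads the notation from the tables in this article: S is Self, O is Other, and r, p and s stand for Rock, Paper and Scissors. That reading is an assumption. The names `BEATS`, `anticipate_counter` and `recursive_response` are hypothetical, and the `levels` parameter is an illustrative extension rather than part of the original rules.

```python
# Hypothetical encoding (assumption): "r" = Rock, "p" = Paper, "s" = Scissors.
# BEATS[x] is the move that defeats x.
BEATS = {"r": "p", "p": "s", "s": "r"}


def anticipate_counter(planned_self: str) -> str:
    """First rule: IF S(n + 1) = planned_self THEN O(n + 1) = the move that beats it."""
    return BEATS[planned_self]


def recursive_response(planned_self: str, levels: int = 1) -> str:
    """Apply the two conditional rules `levels` times (levels is illustrative).

    Each level assumes Other counters Self's current plan, and Self then
    switches to the move that beats that anticipated counter.
    """
    move = planned_self
    for _ in range(levels):
        predicted_other = anticipate_counter(move)  # first rule
        move = BEATS[predicted_other]               # second rule
    return move


if __name__ == "__main__":
    # Mirrors the rules above: S(n + 1) = p -> O(n + 1) = s -> S(n + 1) = r
    print(anticipate_counter("p"), recursive_response("p"))  # prints: s r
```

Under this encoding, one level of reasoning moves the planned response to the option it beats (Paper to Rock, as in the rules above). Because the three options form a cycle, applying the step three times returns the original plan.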

6. Future Work into Attribution, Agency, and Acquisition

Supplementary Materials

Funding

Conflicts of Interest

| Other (trial n) | Self (trial n) | Other-cycle strategy | Self (trial n + 1) | Outcome (trial n) | Self-outcome strategy | Self (trial n + 1) |
|---|---|---|---|---|---|---|
| Rock | Paper | UPGRADE | Paper | Win | WIN-REPEAT | Paper |
| Rock | Scissors | UPGRADE | Paper | Lose | LOSE-DOWNGRADE | Paper |
| Rock | Rock | UPGRADE | Paper | Draw | DRAW-UPGRADE | Paper |
| Paper | Scissors | UPGRADE | Scissors | Win | WIN-REPEAT | Scissors |
| Paper | Rock | UPGRADE | Scissors | Lose | LOSE-DOWNGRADE | Scissors |
| Paper | Paper | UPGRADE | Scissors | Draw | DRAW-UPGRADE | Scissors |
| Scissors | Rock | UPGRADE | Rock | Win | WIN-REPEAT | Rock |
| Scissors | Paper | UPGRADE | Rock | Lose | LOSE-DOWNGRADE | Rock |
| Scissors | Scissors | UPGRADE | Rock | Draw | DRAW-UPGRADE | Rock |
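The isomorphism in the table above can be checked by enumerating all nine trial-n pairings, as in the minimal Python sketch below. It assumes the reading that fits every row: UPGRADE switches to the option that beats the reference move, and DOWNGRADE switches to the option the reference move beats. All function names are hypothetical.

```python
from itertools import product

MOVES = ("Rock", "Paper", "Scissors")
# Assumed reading of the table: UPGRADE[x] is the option that beats x,
# DOWNGRADE[x] is the option that x beats.
UPGRADE = {"Rock": "Paper", "Paper": "Scissors", "Scissors": "Rock"}
DOWNGRADE = {beater: beaten for beaten, beater in UPGRADE.items()}


def outcome(self_move: str, other_move: str) -> str:
    """Result of trial n from Self's point of view."""
    if self_move == other_move:
        return "Draw"
    return "Win" if UPGRADE[other_move] == self_move else "Lose"


def other_cycle(other_move: str) -> str:
    """Other-cycle UPGRADE: play the move that beats Other's previous move."""
    return UPGRADE[other_move]


def self_outcome(other_move: str, self_move: str) -> str:
    """WIN-REPEAT, LOSE-DOWNGRADE, DRAW-UPGRADE applied to Self's previous move."""
    result = outcome(self_move, other_move)
    if result == "Win":
        return self_move
    if result == "Lose":
        return DOWNGRADE[self_move]
    return UPGRADE[self_move]


def build_table() -> list:
    """One row per pairing: (Other, Self, outcome, other-cycle move, self-outcome move)."""
    return [
        (o, s, outcome(s, o), other_cycle(o), self_outcome(o, s))
        for o, s in product(MOVES, MOVES)
    ]


if __name__ == "__main__":
    for row in build_table():
        print(*row)
```

In every row, both strategies return the same trial n + 1 move. The two rules consult different information (Other's previous move versus Self's previous move and its outcome), yet produce identical behaviour.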

| Other (trial n) | Self (trial n) | Outcome (trial n) | Self-outcome strategy | Self (trial n + 1) | Other (trial n + 1) | Self, revised (trial n + 1) |
|---|---|---|---|---|---|---|
| Rock | Paper | Win | WIN-REPEAT | Paper | Scissors | Rock |
| Rock | Scissors | Lose | LOSE-DOWNGRADE | Paper | Scissors | Rock |
| Rock | Rock | Draw | DRAW-UPGRADE | Paper | Scissors | Rock |
| Paper | Scissors | Win | WIN-REPEAT | Scissors | Rock | Paper |
| Paper | Rock | Lose | LOSE-DOWNGRADE | Scissors | Rock | Paper |
| Paper | Paper | Draw | DRAW-UPGRADE | Scissors | Rock | Paper |
| Scissors | Rock | Win | WIN-REPEAT | Rock | Paper | Scissors |
| Scissors | Paper | Lose | LOSE-DOWNGRADE | Rock | Paper | Scissors |
| Scissors | Scissors | Draw | DRAW-UPGRADE | Rock | Paper | Scissors |
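The rows of the table above can be regenerated in three steps, sketched below in Python. First, the self-outcome rule yields a planned trial n + 1 move. Next, Other is assumed to anticipate that plan and play the move that beats it. Finally, Self counters the anticipated move. The UPGRADE/DOWNGRADE reading and the name `revised_row` are assumptions made for illustration.

```python
MOVES = ("Rock", "Paper", "Scissors")
# Assumption: UPGRADE[x] is the option that beats x, DOWNGRADE[x] the option x beats.
UPGRADE = {"Rock": "Paper", "Paper": "Scissors", "Scissors": "Rock"}
DOWNGRADE = {beater: beaten for beaten, beater in UPGRADE.items()}


def outcome(self_move: str, other_move: str) -> str:
    """Result of trial n from Self's point of view."""
    if self_move == other_move:
        return "Draw"
    return "Win" if UPGRADE[other_move] == self_move else "Lose"


def revised_row(other_move: str, self_move: str) -> tuple:
    """Return (outcome, planned Self, anticipated Other, revised Self) for trial n + 1."""
    result = outcome(self_move, other_move)
    # Step 1: self-outcome rule (WIN-REPEAT, LOSE-DOWNGRADE, DRAW-UPGRADE).
    if result == "Win":
        planned = self_move
    elif result == "Lose":
        planned = DOWNGRADE[self_move]
    else:
        planned = UPGRADE[self_move]
    # Step 2: Other is assumed to anticipate the plan and play the move that beats it.
    anticipated_other = UPGRADE[planned]
    # Step 3: Self revises to the move that beats the anticipated counter.
    revised = UPGRADE[anticipated_other]
    return result, planned, anticipated_other, revised


if __name__ == "__main__":
    for other in MOVES:
        for self_move in MOVES:
            print(other, self_move, *revised_row(other, self_move))
```

Running the sketch reproduces each row. It also shows the pattern visible in the table's last column: the revised Self move always coincides with Other's trial n move.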

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dyson, B.J. Behavioural Isomorphism, Cognitive Economy and Recursive Thought in Non-Transitive Game Strategy. Games 2019, 10, 32. https://doi.org/10.3390/g10030032
