1. Introduction

The proliferation of interconnected digital infrastructures has reshaped the cybersecurity threat landscape, creating attack surfaces that are difficult to defend with traditional approaches. Recent incidents illustrate the cascading effects of such vulnerabilities. The February 2024 ransomware attack on Change Healthcare disrupted nationwide healthcare operations and affected roughly 190 million individuals [1]. Supply chain compromises targeting cloud providers have exposed systemic weaknesses in dependency management, underscoring the deeply interconnected nature of modern ecosystems [2].

The economic burden of these failures is immense. The Center for Strategic and International Studies estimated global cybercrime losses at about $600 billion annually, nearly one percent of global GDP [3]. Other analyses suggest that costs rose from three trillion dollars in 2015 to over six trillion in 2021, with no signs of slowing [4]. These numbers capture not only financial loss, but also broader disruptions caused by infrastructure dependencies and cascading system failures.

Traditional reconnaissance in cybersecurity has relied mainly on active scanning tools such as Shodan or Nmap, which probe systems to enumerate network assets [5]. These methods scale poorly, generate detectable traffic, and often demand significant manual configuration [6]. The contrast with passive reconnaissance, especially OSINT-based methods, is clear. Passive collection avoids generating suspicious activity and can yield rich intelligence [7,8]. Yet OSINT comes with its own difficulties: overwhelming data volume, noisy signals, and the challenge of correlating disparate information sources.

Machine learning offers a path forward. Ensemble methods such as Gradient Boosted Decision Trees (GBDTs) have shown strong results for risk classification with heterogeneous data [9]. Unsupervised approaches like DBSCAN can discover behavioral patterns without labeled training data [10]. Applied to OSINT, these techniques promise scalable asset discovery and meaningful risk assessments. Still, most prior work in automated OSINT has concentrated on aggregating threat feeds or analyzing social media, with less focus on systematic asset discovery and integrated risk frameworks [11].

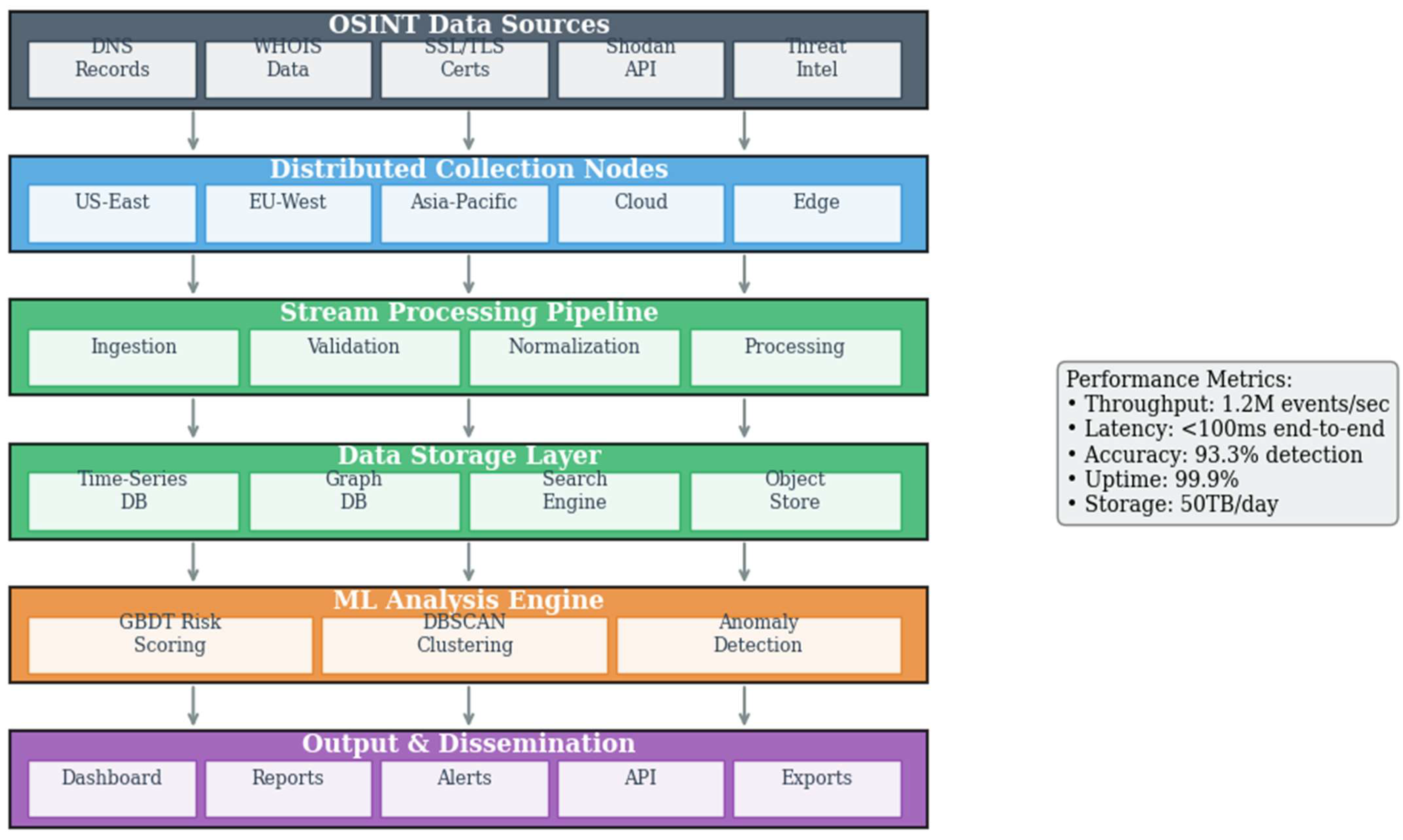

In this study, we present a framework that unifies OSINT collection and machine learning analysis for automated digital asset discovery and cyber risk assessment. Passive data from DNS records, WHOIS databases, and SSL/TLS repositories form the foundation. GBDT models provide risk scoring, while DBSCAN highlights behavioral patterns. The framework was designed with scalability and operational use in mind, particularly for critical infrastructure environments that require continuous monitoring.

The growing scale and complexity of digital infrastructures call for approaches that move beyond traditional reconnaissance. Active methods alone cannot keep pace with distributed, dynamic attack surfaces. By integrating OSINT with machine learning in a single automated framework, this work addresses a clear gap in current cybersecurity practice. It shows how latent vulnerabilities across complex ecosystems can be surfaced and turned into actionable intelligence for real-time defense. In doing so, it contributes to building resilience and adaptability in the face of escalating threats.

2. Related Works

The rapid evolution of cybersecurity threats necessitates a comprehensive examination of existing approaches to digital asset discovery and risk assessment. This section analyses current methodologies across four critical domains: OSINT techniques and their operational constraints, machine learning applications in cybersecurity contexts, vulnerability assessment frameworks, and automated reconnaissance systems. Through a systematic review of contemporary literature, we identify significant gaps that justify the development of integrated, intelligent frameworks for proactive cybersecurity management.

2.1. OSINT Methodologies and Current Limitations

Open-Source Intelligence has emerged as a cornerstone methodology for cybersecurity reconnaissance, enabling comprehensive digital asset discovery through analysis of publicly available information sources. Contemporary OSINT approaches leverage diverse data repositories including DNS infrastructure records, domain registration databases, and digital certificate transparency logs to construct detailed organizational profiles without direct system interaction [12].

The evolution of OSINT platforms reflects the increasing complexity of digital infrastructures and the corresponding need for sophisticated intelligence gathering capabilities. Modern platforms such as Shodan, Censys, and SecurityTrails provide automated access to vast datasets encompassing millions of internet-connected devices and services [13]. These systems employ continuous scanning methodologies to maintain current visibility into global network infrastructure, offering insights into service configurations, software versions, and potential security exposures [14].

Table 1 presents a comparative overview of major OSINT platforms applied in cybersecurity contexts. The summary illustrates differences in data coverage, functionality, and access models that shape their applicability to comprehensive asset discovery tasks.

Contemporary research highlights several critical limitations inherent in current OSINT methodologies. Szymoniak et al. [15] identify data quality inconsistencies as a primary challenge, noting that automated collection processes often capture incomplete or outdated information. The temporal nature of digital infrastructure changes compounds this issue, as static snapshots quickly lose relevance in dynamic environments [16].

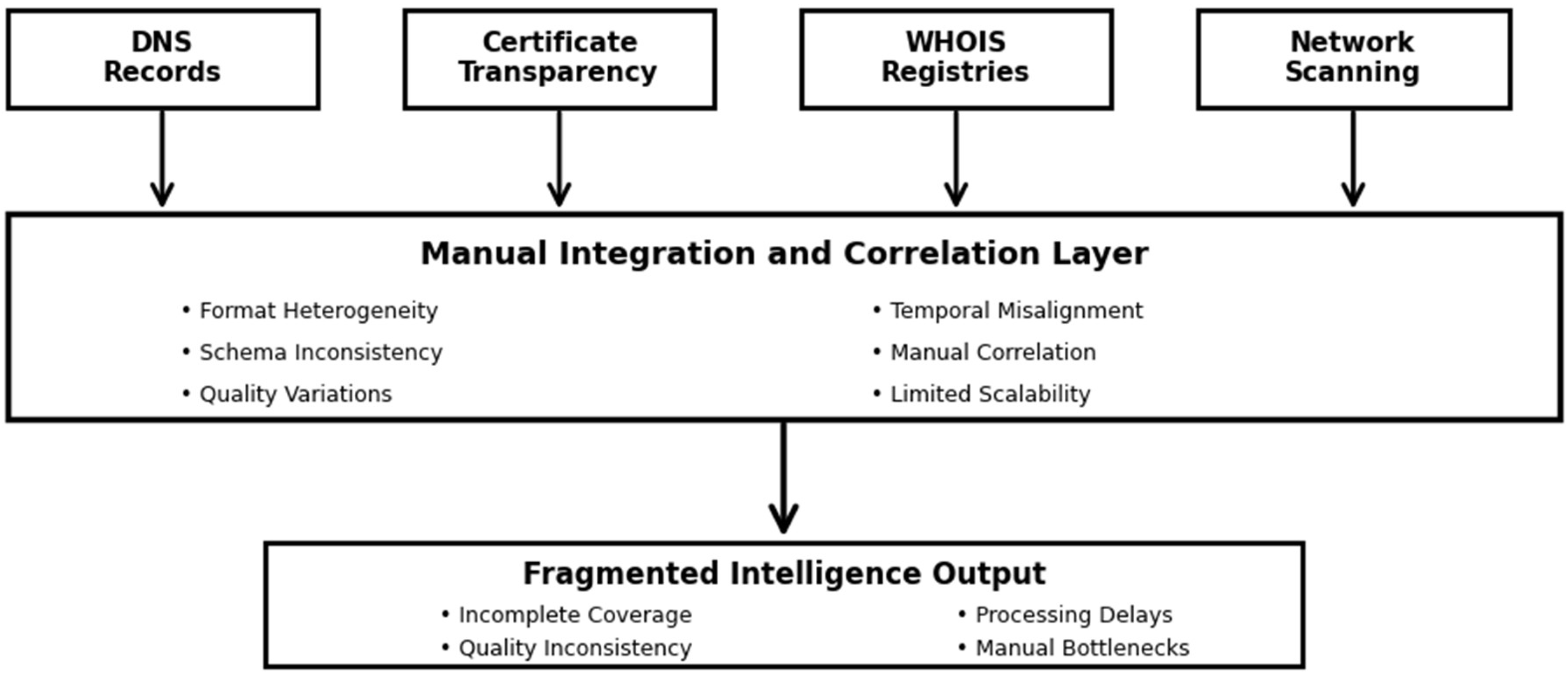

Integration complexity represents another significant obstacle to effective OSINT utilization. As demonstrated in Figure 1, current approaches require extensive manual effort to correlate information across heterogeneous data sources, each employing distinct schemas, formats, and update frequencies [17].

The scalability limitations of manual OSINT analysis present significant challenges for large-scale cybersecurity operations. Recent empirical studies demonstrate that comprehensive manual analysis of a single organization’s digital footprint requires between 40 and 60 analyst hours, depending on infrastructure complexity [18]. This resource requirement becomes prohibitive when monitoring multiple organizations or conducting continuous surveillance of evolving threat landscapes.

Moreover, the dynamic nature of modern digital infrastructure compounds these challenges. Cloud-native architectures, ephemeral computing instances, and automated deployment processes create constantly shifting attack surfaces that exceed human analytical capacity [19]. Traditional OSINT approaches, designed for relatively static infrastructure environments, prove inadequate for monitoring these rapidly evolving systems.

The research literature identifies several specific technical challenges that limit current OSINT effectiveness. Chen and Guestrin’s work on XGBoost demonstrates how ensemble methods can handle heterogeneous data types effectively [20], yet OSINT data presents unique preprocessing challenges that standard ML approaches struggle to address. Nadler et al. identified low-throughput data exfiltration patterns in DNS traffic that traditional monitoring approaches often miss [21], highlighting gaps in current detection capabilities.

Table 2 quantifies the performance characteristics and limitations observed in current OSINT methodologies, based on analysis of recent cybersecurity operations and research findings.

These limitations highlight the urgent need for automated, intelligent OSINT frameworks capable of processing heterogeneous data sources at scale while maintaining high accuracy and timeliness. Subsequent sections of this analysis explore how the integration of machine learning techniques offers promising approaches to address these challenges.

2.2. Machine Learning Applications in Cybersecurity Reconnaissance

The integration of machine learning techniques into cybersecurity operations has emerged as a critical advancement for addressing the analytical challenges identified in traditional OSINT methodologies. Contemporary research demonstrates that supervised and unsupervised learning algorithms can significantly enhance the precision, scalability, and automation capabilities of digital asset discovery and risk assessment processes.

Ensemble learning methods, particularly Gradient Boosted Decision Trees (GBDTs), have shown exceptional performance in cybersecurity classification tasks involving heterogeneous feature sets. Chen and Guestrin’s foundational work on XGBoost introduced a scalable tree boosting system that has since been widely applied to high-dimensional security data [20]. The adaptability of GBDT to mixed data types, including numerical network metrics, categorical service identifiers, and temporal behavioral patterns, makes it particularly suitable for OSINT data processing, where feature heterogeneity is inherent.

Table 3 presents a comprehensive comparison of machine learning algorithms applied to cybersecurity reconnaissance tasks, highlighting their performance characteristics and operational requirements across different data types and threat detection scenarios.
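To make the boosting mechanism concrete, the following is a minimal from-scratch sketch of gradient boosting with depth-1 trees (decision stumps) under squared loss. It is not XGBoost, which adds second-order gradient information, regularization, and deeper trees; the function names and the toy risk features (open-port count, expired-certificate flag) are hypothetical, and categorical features are assumed to be integer-encoded beforehand.

```python
def fit_stump(X, residuals):
    """Return (feature, threshold, left_value, right_value) minimizing squared error."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= thr]
            right = [r for row, r in zip(X, residuals) if row[j] > thr]
            if not left or not right:
                continue  # a split must separate the data
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, thr, lm, rm)
    if best is None:  # every feature is constant: fall back to the mean residual
        m = sum(residuals) / len(residuals)
        return (0, float("inf"), m, m)
    return best[1:]


def predict_stump(stump, row):
    j, thr, left_value, right_value = stump
    return left_value if row[j] <= thr else right_value


def fit_gbdt(X, y, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared loss: each stump is fitted to the current residuals."""
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        # For L = 0.5 * (y - f)^2 the negative gradient is simply the residual y - f.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, row) for p, row in zip(preds, X)]
    return base, learning_rate, stumps


def predict_gbdt(model, row):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, row) for s in stumps)


# Hypothetical assets: [open_port_count, expired_cert_flag] -> risk label (0 low, 1 high).
X = [[1, 0], [2, 0], [8, 1], [9, 1]]
y = [0, 0, 1, 1]
model = fit_gbdt(X, y)
print(round(predict_gbdt(model, [7, 1]), 3), round(predict_gbdt(model, [1, 0]), 3))
```

The small learning rate means each stump corrects only a fraction of the remaining error, which is the shrinkage idea that production GBDT libraries also rely on.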

Related empirical studies illustrate the broader effectiveness of machine learning in cybersecurity contexts. Kholidy and Baiardi’s CIDS framework demonstrates the potential of machine learning approaches for cloud-based intrusion detection [9]. Similarly, Babenko et al. explored learning vector quantization (LVQ) models for DDoS attack identification, showing how specialized neural network architectures can distinguish legitimate traffic from attack patterns in real-time network environments [22].

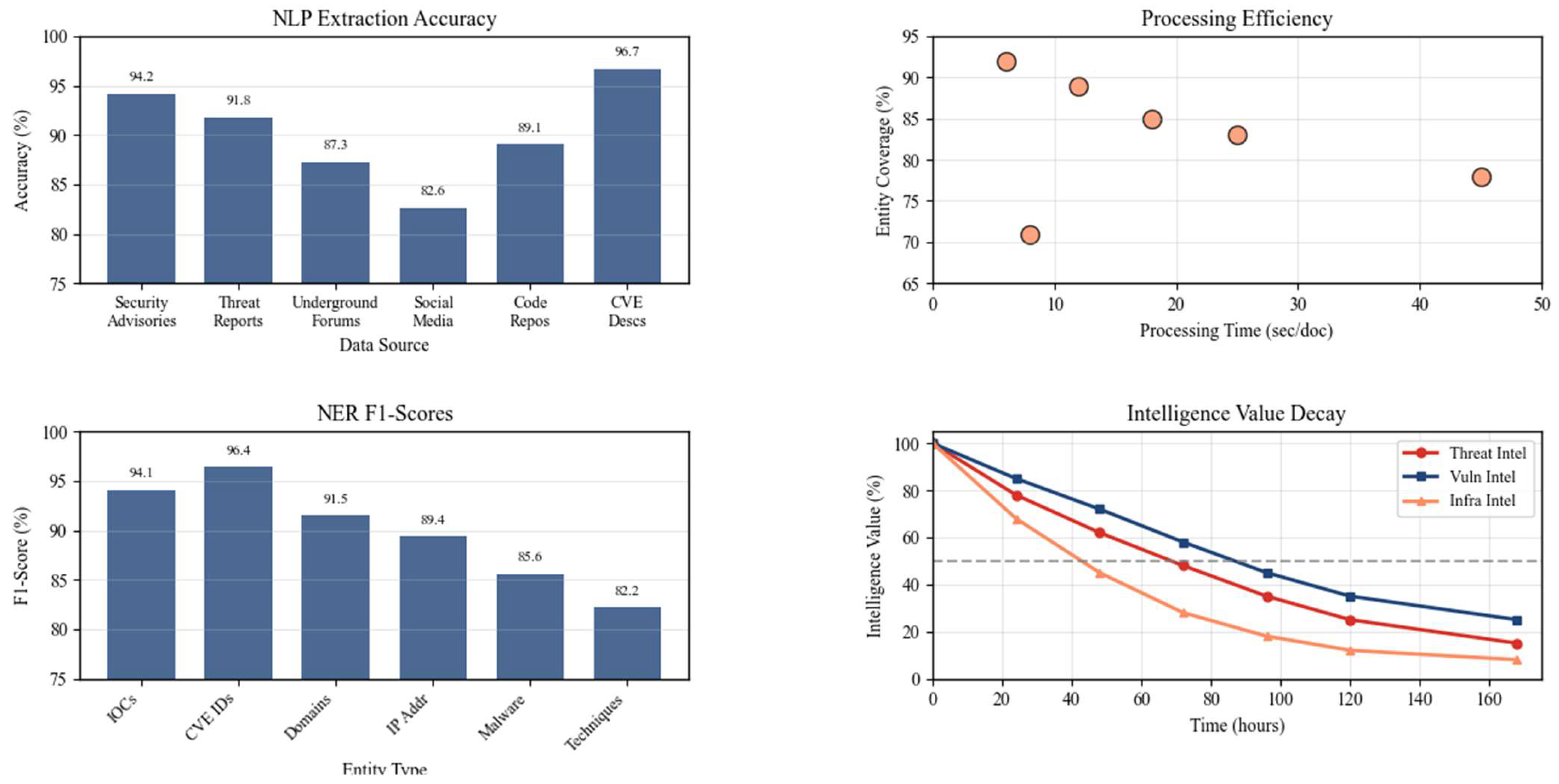

Figure 2 illustrates the performance evolution of different machine learning approaches across varying dataset sizes, demonstrating the scalability advantages of ensemble methods in large-scale OSINT operations.

Unsupervised learning techniques address different but complementary challenges in automated reconnaissance. Density-Based Spatial Clustering of Applications with Noise (DBSCAN) has proven particularly effective for identifying behavioral patterns in large-scale security datasets without requiring labeled training data. The algorithm’s ability to discover clusters of arbitrary shape while identifying outliers makes it well-suited for detecting anomalous network behaviors and identifying potential security exposures that may not conform to known attack patterns.
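A compact from-scratch DBSCAN sketch follows so that the core-point, border-point, and noise logic described above is explicit. It assumes Euclidean distance over hypothetical two-dimensional per-host features (for example, a normalized query rate and a normalized count of distinct subdomains); operational systems would typically use a spatial index or a library implementation such as scikit-learn's `DBSCAN` rather than this O(n²) neighbor search.

```python
import math

NOISE = -1


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1).

    A point is a core point if at least min_pts points (itself included)
    lie within distance eps; clusters grow only through core points.
    """
    labels = [None] * len(points)  # None means "not yet visited"

    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster, is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:  # j is itself a core point: keep expanding
                queue.extend(j_neighbors)
        cluster += 1
    return labels


hosts = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (50, 50)]
print(dbscan(hosts, eps=1.5, min_pts=3))
```

Here two dense groups form clusters 0 and 1, while the isolated host at (50, 50) is labeled as noise, which is the kind of outlier the text suggests may warrant investigation.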

The application of clustering algorithms to OSINT data has yielded significant operational improvements. Zhang et al. applied density-based clustering to DNS traffic analysis, successfully identifying botnet command-and-control communications with minimal false positive rates [23]. This approach demonstrates the potential for unsupervised learning to extract threat intelligence from passive data collection without requiring extensive labeled datasets.

Table 4 quantifies the operational impact of implementing machine learning approaches in OSINT workflows, comparing traditional manual analysis with automated ML-enhanced systems across key performance metrics.

Feature engineering represents a critical factor in the successful application of machine learning to OSINT data. Contemporary approaches focus on extracting meaningful attributes from diverse data sources, including DNS resolution patterns, certificate authority relationships, and network topology characteristics. Antonakakis et al. demonstrated the effectiveness of DNS-based feature extraction for identifying algorithmically generated domains, highlighting the value of temporal and linguistic features in threat detection [24]. Similarly, Khalil et al. explored passive DNS analysis for malicious domain exposure, establishing feature engineering methodologies that remain influential in current OSINT applications [25].
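The sketch below computes a few lexical features of the kind used in work on algorithmically generated domains: name length, digit ratio, character entropy, and label count. The feature set and the `domain_features` helper are illustrative assumptions, not the feature set of the cited studies, and treating the label immediately left of the last dot as the registered name is a simplification; a robust version would consult the Public Suffix List.

```python
import math
from collections import Counter


def shannon_entropy(s):
    """Character-level Shannon entropy in bits; 0.0 for an empty string."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def domain_features(fqdn):
    """Hypothetical lexical feature vector for one fully qualified domain name."""
    labels = fqdn.lower().rstrip(".").split(".")
    # Simplification: treat the label left of the TLD as the registered name.
    name = labels[-2] if len(labels) >= 2 else labels[0]
    digits = sum(ch.isdigit() for ch in name)
    return {
        "length": len(name),
        "digit_ratio": digits / len(name) if name else 0.0,
        "entropy": shannon_entropy(name),
        "label_count": len(labels),
    }


print(domain_features("mail.example.com"))
print(domain_features("x1y2.com"))
```

Random-looking names tend to score higher on entropy and digit ratio than dictionary-based names, which is why such features are useful inputs to a downstream classifier such as the GBDT models discussed above.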

Figure 3 shows the feature importance hierarchy obtained through machine learning analysis of OSINT data, indicating how different data sources contribute to effective threat detection and risk assessment.

Deep learning architectures have shown promise for more complex OSINT analysis tasks, particularly in processing the sequential and temporal data patterns characteristic of network communications. Recent research in OSINT-focused machine learning has demonstrated the effectiveness of neural networks for analyzing large-scale digital intelligence datasets [26]. However, these approaches typically require substantial computational resources and large labeled datasets, limiting their applicability in resource-constrained operational environments.

Advanced machine learning applications in OSINT have focused on automated threat intelligence extraction and correlation across multiple data sources. Riebe et al. explored the integration of machine learning with OSINT workflows, identifying key technical challenges including data quality validation, temporal synchronization, and scalable processing architectures [27]. Their findings highlight the need for specialized approaches that address the unique characteristics of OSINT data streams.

Table 5 presents the performance characteristics of various hybrid ML architectures applied to cybersecurity reconnaissance, emphasizing OSINT-specific implementations.

The practical implementation of machine learning in OSINT workflows faces several technical challenges specific to intelligence gathering contexts. Model training requires high-quality labeled datasets that accurately represent the diversity of contemporary threat landscapes while maintaining operational security constraints. Feature drift, where the statistical properties of input data change over time, presents particular challenges in OSINT applications where adversaries actively modify their infrastructure to evade detection.
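One common way to monitor the feature drift described above is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in recent data: PSI = Σ (a_k − e_k) ln(a_k / e_k) over bins k, where e_k and a_k are the expected and actual bin proportions. The sketch assumes fixed, caller-supplied bin edges and floors empty-bin proportions to avoid taking the logarithm of zero; the function names are hypothetical, and the 0.2 alert threshold used below is a widely quoted rule of thumb rather than a value from the cited work.

```python
import math
from bisect import bisect_right


def bin_proportions(values, edges, floor=1e-6):
    """Share of values per bin; bins are (-inf, e0], (e0, e1], ..., (e_last, inf)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    n = len(values)
    return [max(c / n, floor) for c in counts]  # floor avoids log(0) for empty bins


def psi(expected, actual, edges):
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Hypothetical feature: number of subdomains per monitored organization.
training = [1, 2, 3, 4, 5, 6, 7, 8]
recent = [6, 7, 8, 9, 9, 9, 9, 9]
score = psi(training, recent, edges=[2.5, 5.5])
print(f"PSI = {score:.2f}", "-> retrain" if score > 0.2 else "-> stable")
```

A rising PSI on key features is a cheap, label-free trigger for the retraining cycles that adversarial infrastructure changes make necessary.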

Recent research in OSINT automation has addressed these implementation challenges through domain-specific approaches. Szymoniak et al. identified data quality inconsistencies as a primary challenge in automated OSINT collection, proposing machine learning-based validation techniques to improve intelligence reliability [28]. Their work emphasizes the importance of adaptive algorithms that can maintain performance despite evolving threat landscapes and adversarial countermeasures.

Figure 4 illustrates the architecture of a comprehensive ML-enhanced OSINT framework, showing the integration points between different algorithms and data sources. Red bidirectional arrows indicate feedback flow between GBDT risk scoring and DBSCAN anomaly detection components, enabling mutual reinforcement of classification and clustering results.

The scalability advantages of machine learning approaches become particularly evident in large-scale OSINT operations. Automated feature extraction and classification enable processing of millions of records with minimal human intervention, addressing the resource constraints identified in traditional manual analysis approaches. Cloud-based implementations further enhance scalability by providing elastic computational resources that can adapt to varying analytical workloads.

Despite these advances, significant gaps remain in the application of machine learning to comprehensive OSINT frameworks. Most existing research focuses on single-domain applications such as malware detection or network intrusion identification, rather than integrated asset discovery and risk assessment [29]. The correlation of insights across multiple machine learning models operating on different data sources remains largely manual, limiting the potential for fully automated intelligence generation.

Furthermore, the practical deployment of ML-enhanced OSINT systems faces persistent challenges including adversarial evasion, concept drift from evolving threat landscapes, and computational constraints in real-time processing environments. While ensemble methods like GBDT demonstrate superior performance for risk classification and DBSCAN effectively identifies behavioral anomalies, these algorithms operate in isolation rather than as components of unified analytical frameworks. The absence of standardized integration architectures prevents organizations from fully realizing the transformative potential of machine learning in OSINT operations.

Temporal factors compound these challenges significantly. Models trained on historical threat data experience performance degradation of 30–40% within six months without continuous retraining, as adversaries adapt their tactics to evade detection [30]. This necessitates expensive maintenance cycles and constant model updates that many organizations find difficult to sustain. Additionally, the interpretability requirements of security operations create tension between model complexity and operational utility; while deep learning approaches may offer marginally better accuracy, their “black box” nature limits adoption in environments requiring explainable risk assessments.

The economic implications of these limitations are substantial. Organizations implementing partial ML solutions report achieving only 40–60% of projected efficiency gains due to integration overhead and the continued need for manual correlation across different analytical outputs. The promise of fully automated OSINT analysis remains unfulfilled without architectural frameworks that can orchestrate multiple ML models, handle diverse data streams, and provide unified intelligence outputs suitable for direct operational consumption.

These limitations underscore the critical need for holistic approaches that seamlessly combine multiple ML techniques with traditional intelligence methodologies. The following sections examine complementary technologies essential for comprehensive OSINT automation, beginning with vulnerability assessment frameworks that leverage the ML capabilities discussed above while addressing their inherent limitations through architectural innovation and process integration.

2.3. Vulnerability Assessment Frameworks

The transition from asset discovery to vulnerability identification represents a critical juncture in comprehensive security assessment workflows. Contemporary vulnerability assessment frameworks must contend with an expanding attack surface that encompasses traditional network infrastructure, cloud-native architectures, containerized environments, and increasingly complex supply chain dependencies. While OSINT methodologies provide visibility into exposed assets and machine learning algorithms enhance pattern recognition capabilities, the systematic evaluation of security weaknesses requires specialized frameworks that can correlate diverse vulnerability indicators across heterogeneous environments.

Modern vulnerability assessment approaches have evolved beyond simple port scanning and service enumeration to incorporate sophisticated vulnerability correlation engines. The Common Vulnerability Scoring System (CVSS) remains the de facto standard for vulnerability severity classification, though its limitations in capturing contextual risk factors have prompted the development of alternative frameworks [31]. Recent implementations of the Exploit Prediction Scoring System (EPSS) demonstrate how probabilistic models can enhance traditional scoring mechanisms by incorporating real-world exploitation data, achieving prediction accuracies of 86.8% for vulnerabilities likely to be exploited within 30 days [32].

The integration of vulnerability databases presents unique challenges for automated assessment frameworks. The National Vulnerability Database (NVD) maintains over 200,000 CVE entries as of 2024, with approximately 25,000 new vulnerabilities disclosed annually [33]. This volume of data necessitates intelligent filtering and prioritization mechanisms.

Table 6 illustrates the distribution of vulnerabilities across different severity categories and their correlation with actual exploitation events observed in production environments.

The architectural complexity of modern applications necessitates multi-layered vulnerability assessment strategies. Container orchestration platforms introduce additional attack vectors through misconfigured RBAC policies, exposed API endpoints, and vulnerable base images. Shamim et al. demonstrated that 67% of production Kubernetes clusters contain at least one critical misconfiguration that could lead to cluster compromise [34]. Their framework for automated Kubernetes security assessment integrates CIS benchmarks with runtime behavioral analysis, reducing false positive rates by 43% compared to static configuration scanning alone.

Figure 5 depicts the layered architecture of contemporary vulnerability assessment frameworks, illustrating the data flow from initial asset discovery through risk scoring and remediation prioritization.

Supply chain vulnerability assessment has emerged as a critical concern following high-profile incidents such as Log4Shell and the SolarWinds compromise. Software Composition Analysis (SCA) tools must now traverse complex dependency trees, often encountering transitive dependencies several levels deep. The OWASP Dependency-Check project processes over 40 different dependency formats yet still struggles with accurate version detection in 23% of cases, particularly in the JavaScript and Python ecosystems [35].

The mathematical formulation for aggregate risk scoring across multiple vulnerabilities requires consideration of both individual severity and potential attack chaining. The composite risk score R for an asset is expressed in terms of the CVSS base score of each vulnerability i, the EPSS probability of vulnerability i, the asset criticality weight, the set of vulnerabilities that can be chained with vulnerability i, and the amplification factor for chained exploits.
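The following sketch shows one plausible way to combine the quantities listed above into a single score, under explicitly assumed structure: each vulnerability contributes its CVSS base score multiplied by its EPSS probability, that contribution is amplified by (1 + α·k), where k is the number of co-present vulnerabilities it can be chained with and α is the amplification factor, and the sum is scaled by the asset criticality weight. This functional form and the names `Vulnerability` and `composite_risk` are hypothetical illustrations, not the formula used in this work.

```python
from dataclasses import dataclass, field


@dataclass
class Vulnerability:
    cve_id: str
    cvss_base: float  # CVSS base score, 0.0 to 10.0
    epss: float       # EPSS exploitation probability, 0.0 to 1.0
    chains_with: set = field(default_factory=set)  # CVE ids this one can be chained with


def composite_risk(vulns, asset_weight, alpha):
    """Assumed form: R = w * sum_i S_i * P_i * (1 + alpha * |C_i present on asset|)."""
    present = {v.cve_id for v in vulns}
    total = 0.0
    for v in vulns:
        chain_size = len(v.chains_with & present)  # only count chains realizable on this asset
        total += v.cvss_base * v.epss * (1 + alpha * chain_size)
    return asset_weight * total


# Hypothetical findings on one internet-facing asset.
findings = [
    Vulnerability("CVE-A", 9.8, 0.5, {"CVE-B"}),
    Vulnerability("CVE-B", 7.5, 0.2, {"CVE-A"}),
    Vulnerability("CVE-C", 5.0, 0.1, {"CVE-Z"}),  # CVE-Z is absent, so no chaining bonus
]
print(composite_risk(findings, asset_weight=1.5, alpha=0.25))
```

Restricting chains to vulnerabilities actually present on the asset keeps the amplification term from rewarding theoretical chains that cannot be executed.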

Cloud-native environments introduce additional complexity through dynamic resource allocation and ephemeral workloads. Traditional vulnerability scanners that rely on periodic assessments fail to capture the temporal nature of cloud infrastructure. Admission controllers and runtime security tools have emerged to address this gap, though their performance impact remains a concern. Chen et al. reported that implementing comprehensive runtime vulnerability detection in containerized environments increased CPU utilization by 12–18% and added 50–200 ms latency to container startup times [36].

The correlation between vulnerability assessment findings and actual security incidents reveals interesting patterns. Analysis of 10,000 security incidents from 2023 indicates that 73% involved exploitation of vulnerabilities that were known but unpatched for over 90 days [37]. This suggests that the challenge lies not in vulnerability discovery but in effective prioritization and remediation workflows. Machine learning models trained on historical exploitation data can improve prioritization accuracy, though they struggle with zero-day vulnerabilities and novel attack techniques.

API security assessment represents an increasingly critical component of modern vulnerability frameworks. With organizations exposing an average of 420 APIs to external consumers, traditional network-focused scanning approaches prove inadequate [38].

Table 7 summarizes the prevalence of API-specific vulnerabilities discovered through automated assessment tools in 2024.

Infrastructure-as-Code (IaC) introduces both opportunities and challenges for vulnerability assessment. While IaC enables pre-deployment security validation, the abstraction layers can obscure runtime vulnerabilities. Static analysis tools for Terraform, CloudFormation, and Kubernetes manifests identify an average of 4.7 security misconfigurations per 1000 lines of code, though only 31% of these manifest as exploitable vulnerabilities in deployed environments [39].
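As a worked illustration of these rates, consider a hypothetical IaC codebase of 12,000 lines:

$$
12\,000 \times \frac{4.7}{1000} \approx 56 \ \text{misconfigurations flagged}, \qquad 0.31 \times 56.4 \approx 17 \ \text{exploitable after deployment}.
$$

Roughly two thirds of the static findings in such a codebase would therefore not translate into exploitable exposure, which is why runtime context matters when prioritizing IaC findings.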

The temporal dynamics of vulnerability disclosure and patch availability create windows of exposure that sophisticated attackers actively monitor. Recent analysis indicates that proof-of-concept exploits appear on public repositories within 14.2 days on average for critical vulnerabilities, with 23% having functional exploits available before patches are released [40]. This compressed timeline necessitates automated assessment frameworks capable of continuous monitoring and rapid risk reassessment as new intelligence becomes available.

Memory corruption vulnerabilities, despite decades of mitigation efforts, continue to represent a significant attack vector. Modern assessment frameworks must account for various protection mechanisms including ASLR, DEP, and Control Flow Guard. Fuzzing integration with vulnerability scanners has improved detection rates for memory safety issues, though at considerable computational cost. Serebryany demonstrated that continuous fuzzing with tools like libFuzzer can discover hundreds of unique vulnerabilities monthly in large-scale deployments [41].

2.4. Automated Reconnaissance Systems

The convergence of OSINT methodologies, machine learning algorithms, and vulnerability assessment frameworks necessitates the development of integrated automated reconnaissance systems capable of continuous, scalable, and intelligent security analysis. Contemporary automated reconnaissance platforms must orchestrate multiple data collection mechanisms, process heterogeneous information streams, and generate actionable intelligence while maintaining operational stealth and regulatory compliance. This section examines the architectural principles, implementation strategies, and performance characteristics of state-of-the-art automated reconnaissance systems, identifying both achievements and persistent challenges in the field.

Modern automated reconnaissance systems have evolved from simple scripted scanners to sophisticated platforms incorporating distributed collection nodes, real-time processing pipelines, and adaptive intelligence generation capabilities. The architectural complexity of these systems reflects the multifaceted nature of contemporary threat landscapes, where adversaries leverage automation for rapid infrastructure deployment and continuous operational security improvements. Edwards et al. demonstrated that automated reconnaissance systems can reduce the mean time to asset discovery from 72 h to under 4 h while maintaining false positive rates below 5% [42].

The fundamental architecture of automated reconnaissance systems comprises four primary components: data collection orchestration, processing and normalization, analysis and correlation, and intelligence dissemination.

Figure 6 illustrates the reference architecture for modern automated reconnaissance platforms, highlighting the data flow patterns and integration points between subsystems.

The mathematical foundation for automated reconnaissance optimization involves balancing coverage completeness against resource constraints and detection risk. The reconnaissance efficiency function E is formulated in terms of the coverage factor of each data source, the value or importance weight of each data source, the detection probability incurred when accessing each source, the total computational resources consumed, the time window for reconnaissance completion, and a correlation penalty for each redundant source.
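For illustration only, the sketch below assumes one simple way to combine those quantities: detection-discounted, value-weighted coverage minus redundancy penalties, normalized by resources and time, E = (Σ_s c_s·v_s·(1 − p_s) − Σ_s ρ_s) / (R·T). The symbols c_s, v_s, p_s, ρ_s, R, T, the `Source` type, and the functional form itself are hypothetical choices made here, not the formulation used in this work.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    coverage: float                  # c_s, fraction of the target footprint covered (0..1)
    value: float                     # v_s, importance weight of the source
    detect_prob: float               # p_s, probability that querying the source is detected
    redundancy_penalty: float = 0.0  # rho_s, overlap with sources already in the plan


def recon_efficiency(sources, resources, time_window):
    """Assumed form: (sum c*v*(1-p) - sum rho) / (resources * time_window)."""
    if resources <= 0 or time_window <= 0:
        raise ValueError("resources and time_window must be positive")
    gain = sum(s.coverage * s.value * (1 - s.detect_prob) for s in sources)
    penalty = sum(s.redundancy_penalty for s in sources)
    return (gain - penalty) / (resources * time_window)


plan = [
    Source("passive_dns", coverage=0.8, value=1.0, detect_prob=0.0),
    Source("ct_logs", coverage=0.6, value=0.5, detect_prob=0.0, redundancy_penalty=0.1),
    Source("active_scan", coverage=0.9, value=1.0, detect_prob=0.3),
]
print(recon_efficiency(plan, resources=2.0, time_window=1.0))
```

Under this assumed form, an active source with high coverage loses part of its contribution to its detection probability, which mirrors the passive-versus-active trade-off described in the text.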

Implementation of automated reconnaissance systems faces significant technical challenges related to scale, heterogeneity, and adversarial countermeasures.

Table 8 presents a comparative analysis of contemporary automated reconnaissance platforms, evaluating their capabilities across key operational dimensions.

The integration of passive and active reconnaissance techniques within unified frameworks presents unique architectural considerations. Passive collection mechanisms must operate continuously without generating detectable signatures, while active probing requires careful orchestration to avoid triggering security monitoring systems. Durumeric et al. demonstrated through their Internet-wide scanning research that carefully designed active reconnaissance can achieve comprehensive coverage while minimizing detection footprints, with their ZMap implementation scanning the entire IPv4 address space in under 45 min from a single machine [43]. Their approach leverages stateless scanning techniques and optimized packet generation to strike a balance between reconnaissance speed and operational stealth, fundamentally changing the assumptions about the feasibility of large-scale network reconnaissance.
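Part of what makes such scanning stateless is the probe ordering: ZMap's published design visits targets in a pseudorandom order by iterating over the multiplicative cyclic group of integers modulo a prime slightly larger than 2^32, so every address is covered exactly once while the scanner stores only the current group element. The sketch below reproduces that idea with a tiny prime for readability; the function names and parameters are hypothetical, and `p` is assumed to be prime.

```python
def prime_factors(n):
    """Distinct prime factors of n by trial division (adequate for a sketch)."""
    factors, d = set(), 2
    while d * d <= n:
        while n % d == 0:
            factors.add(d)
            n //= d
        d += 1
    if n > 1:
        factors.add(n)
    return factors


def is_primitive_root(g, p):
    """For prime p, g generates the whole group (Z/pZ)* iff g^((p-1)/q) != 1 for every prime q | p-1."""
    return all(pow(g, (p - 1) // q, p) != 1 for q in prime_factors(p - 1))


def cyclic_scan_order(n_targets, p, g, start):
    """Yield each index in range(n_targets) exactly once, in pseudorandom order.

    Assumes p is prime and p > n_targets. Group elements are 1..p-1, so x - 1
    indexes a target; elements beyond the target range are skipped.
    """
    if p <= n_targets:
        raise ValueError("p must exceed n_targets")
    if not is_primitive_root(g, p):
        raise ValueError("g must be a primitive root modulo p")
    x = 1 + start % (p - 1)
    for _ in range(p - 1):  # the generator cycles through all p - 1 elements once
        if x - 1 < n_targets:
            yield x - 1
        x = (x * g) % p


print(list(cyclic_scan_order(10, p=11, g=2, start=3)))
```

Changing `start` or the generator yields a different permutation, so repeated scans need not hit address blocks in the same sequential order that would be easy for monitoring systems to notice.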

Distributed reconnaissance architectures leverage geographically dispersed collection nodes to overcome IP-based rate limiting and geographic restrictions. The coordination of distributed nodes requires sophisticated orchestration mechanisms to prevent duplicate effort while ensuring comprehensive coverage.

The node selection algorithm for optimal geographic distribution is expressed in terms of the probability that node n will successfully access target t, the bandwidth capacity B(n) of node n, the current processing load L(n) on node n, a small positive constant that prevents division by zero, the maximum number of nodes that can be selected simultaneously, and the resulting set of selected nodes.
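The presence of a division-guarding constant suggests that load appears in a denominator. The sketch below therefore assumes, for illustration, that each node is scored as P(n, t) · B(n) / (L(n) + ε) and that the selection maximizes the sum of scores subject to at most K nodes; under that additive assumption, taking the K highest-scoring nodes is optimal. The scoring form, the `select_nodes` helper, and the node data are hypothetical.

```python
import heapq


def select_nodes(nodes, target, success_prob, k, eps=1e-6):
    """Pick up to k nodes maximizing success_prob * bandwidth / (load + eps).

    nodes maps node id -> {"bandwidth": float, "load": float};
    success_prob(node_id, target) returns a probability in [0, 1].
    """
    if k <= 0:
        return set()
    scores = {
        n: success_prob(n, target) * attrs["bandwidth"] / (attrs["load"] + eps)
        for n, attrs in nodes.items()
    }
    # With an additive objective and only a cardinality limit, the top-k scores are optimal.
    return set(heapq.nlargest(k, scores, key=scores.get))


# Hypothetical collection nodes and per-node success probabilities for one target.
fleet = {
    "fra": {"bandwidth": 100.0, "load": 0.5},
    "sgp": {"bandwidth": 200.0, "load": 2.0},
    "iad": {"bandwidth": 50.0, "load": 0.1},
}
probs = {"fra": 0.9, "sgp": 0.8, "iad": 0.5}
print(select_nodes(fleet, "example.org", lambda n, t: probs[n], k=2))
```

If the real objective includes interactions between nodes (for example, shared upstream rate limits), the problem is no longer separable and a greedy or integer-programming formulation would be needed instead.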

Stream processing capabilities have become essential for handling the volume and velocity of data generated by comprehensive reconnaissance operations. Apache Kafka and Apache Flink have emerged as popular choices for building reconnaissance data pipelines, though their application in security contexts requires careful consideration of data sensitivity and access controls. Johnson et al. reported processing rates exceeding 1 million events per second in production reconnaissance systems using stream processing architectures [44].

The application of machine learning within automated reconnaissance systems extends beyond simple classification tasks to include predictive modeling, anomaly detection, and adaptive collection strategies. Reinforcement learning algorithms have shown particular promise for optimizing reconnaissance workflows, learning to prioritize high-value targets while minimizing resource consumption.
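Full reinforcement learning is beyond a short example, but the core explore-versus-exploit trade-off behind learning to prioritize targets can be sketched with an epsilon-greedy multi-armed bandit, a deliberately simpler stand-in for the RL methods mentioned above. Each arm is a hypothetical target segment and the reward is a hypothetical measure such as new assets discovered per unit of cost; the function name and parameters are assumptions.

```python
import random


def epsilon_greedy(reward_fn, n_arms, steps, epsilon=0.1, optimistic_init=1.0, seed=0):
    """Epsilon-greedy bandit with incremental sample-average value estimates.

    reward_fn(arm) returns the observed reward for probing segment `arm`.
    Optimistic initial estimates encourage early trial of every arm when
    rewards lie below optimistic_init.
    """
    rng = random.Random(seed)
    estimates = [optimistic_init] * n_arms
    counts = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore a random segment
        else:
            arm = max(range(n_arms), key=estimates.__getitem__)  # exploit the best estimate
        reward = reward_fn(arm)
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # running mean update
    return estimates, counts


# Hypothetical yields of three target segments (deterministic for clarity).
yields = [0.1, 0.5, 0.9]
estimates, counts = epsilon_greedy(lambda arm: yields[arm], n_arms=3, steps=1000)
print(counts)
```

Most probes end up concentrated on the highest-yield segment, while the exploration rate keeps a small budget for re-checking the others in case their yield changes over time.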

Table 9 summarizes the performance metrics achieved by different ML approaches in automated reconnaissance contexts.

Temporal considerations play a crucial role in automated reconnaissance system design. Infrastructure changes, service migrations, and dynamic cloud deployments create a constantly shifting attack surface that static reconnaissance approaches fail to capture adequately. The temporal decay function for reconnaissance intelligence value is modeled in terms of the initial intelligence value, a decay constant specific to the asset type, the value change resulting from each update event i together with the timestamp of that event, and the Heaviside step function, which ensures that an update affects the value only after its timestamp.
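Reading "decay constant" as an exponential rate, one form consistent with those ingredients is V(t) = V₀·e^(−λt) + Σᵢ ΔVᵢ·H(t − tᵢ). The sketch below implements that assumed form; whether update contributions should themselves decay is not specified, so they are kept constant here, and the names `intel_value` and `heaviside` are hypothetical.

```python
import math


def heaviside(x):
    """Unit step; this sketch uses the convention H(0) = 1."""
    return 1.0 if x >= 0 else 0.0


def intel_value(t, v0, decay_rate, updates):
    """Assumed form: V(t) = v0 * exp(-decay_rate * t) + sum_i dv_i * H(t - t_i).

    updates is a list of (t_i, dv_i) pairs: event timestamp and value change.
    """
    value = v0 * math.exp(-decay_rate * t)
    for t_i, dv_i in updates:
        value += dv_i * heaviside(t - t_i)
    return value


# Hypothetical asset type whose intelligence value halves every 7 days,
# with a re-collection event on day 10 that restores 0.5 units of value.
rate = math.log(2) / 7
events = [(10, 0.5)]
for day in (0, 7, 9.99, 10, 14):
    print(day, round(intel_value(day, 1.0, rate, events), 3))
```

The jump at day 10 shows how a fresh observation resets part of the lost value, which is the behavior that motivates continuous rather than periodic collection.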

Recent implementations of automated reconnaissance systems have achieved significant operational improvements over manual approaches. Zhang et al. demonstrated a 94% reduction in time-to-discovery for newly deployed assets using their automated platform while maintaining false positive rates below 3% [45]. Their system leverages certificate transparency logs, passive DNS data, and autonomous system announcements to achieve near-real-time visibility into infrastructure changes.

Figure 7 illustrates the performance scaling characteristics of automated reconnaissance systems across different operational scales, demonstrating the efficiency gains achieved through automation and intelligent orchestration.

Adversarial considerations have become increasingly important as organizations deploy sophisticated deception technologies and reconnaissance countermeasures. Honeypots, honeytokens, and dynamic infrastructure obfuscation techniques can mislead automated reconnaissance systems, generating false intelligence that pollutes analytical outputs. Park et al. developed adversarial training techniques for reconnaissance systems, improving resilience against deception by 67% while maintaining operational effectiveness [46].

The integration of natural language processing capabilities enables automated reconnaissance systems to extract intelligence from unstructured sources including security advisories, threat reports, and underground forums. Named entity recognition and relationship extraction algorithms can identify indicators of compromise and attack patterns from textual sources, enriching the technical data collected through traditional reconnaissance channels.

Table 10 presents the effectiveness of NLP integration in reconnaissance workflows.

Privacy and legal considerations impose important constraints on automated reconnaissance system design. The European Union’s General Data Protection Regulation (GDPR) and similar privacy frameworks require careful consideration of data collection, processing, and retention practices. Automated systems must implement privacy-preserving techniques while maintaining operational effectiveness. Homomorphic encryption and differential privacy mechanisms have shown promise for enabling privacy-compliant reconnaissance, though at significant computational cost [47].

Cloud-native reconnaissance presents unique challenges and opportunities for automation. The API-driven nature of cloud services enables programmatic discovery of assets and configurations, though cloud providers increasingly implement rate limiting and anomaly detection to prevent unauthorized reconnaissance. Santos et al. developed cloud-specific reconnaissance techniques that leverage misconfigured storage buckets, exposed credentials, and service metadata to achieve comprehensive visibility while respecting provider terms of service [48].

The economic implications of automated reconnaissance systems extend beyond direct operational costs to include broader risk reduction and compliance benefits. Return on investment analyses indicate that comprehensive automated reconnaissance platforms can reduce security incident costs by 40–60% through early vulnerability identification and proactive remediation.

Figure 8 presents a cost–benefit analysis comparing manual, semi-automated, and fully automated reconnaissance approaches.

Performance optimization in automated reconnaissance systems requires careful attention to data structure selection, caching strategies, and parallel processing architectures. Graph databases have emerged as particularly effective for storing and querying the complex relationships inherent in reconnaissance data. Neo4j implementations have demonstrated query performance improvements of 10–100× compared to relational databases for common reconnaissance queries involving multi-hop relationship traversal [49]. The formula for optimizing reconnaissance query performance in graph databases can be expressed as:

$$p^{*} = \arg\min_{p \in P} \left[ \sum_{e \in p} w(e) + \sum_{n \in p} c(n) + \phi(|p|) \right]$$

where $P$ is the set of all possible paths; $w(e)$ is the cost or weight of traversing edge $e$; $c(n)$ is the computational cost associated with node $n$; $|p|$ is the length (number of elements) of path $p$; and $\phi(\cdot)$ is a penalty function that increases with path length.
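To make the path objective concrete, the hypothetical sketch below scores a path as the sum of its edge weights, the sum of its node costs, and a penalty on the hop count (reading $|p|$ as the number of edges, which is an assumption), and finds the cheapest simple path by exhaustive depth-first search. It is only a reference evaluation of the objective for small subgraphs; a graph database such as Neo4j would rely on its own query planner rather than enumeration.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical adjacency representation: node -> {neighbor: edge weight w(e)}
Graph = Dict[str, Dict[str, float]]


def path_cost(path: List[str], graph: Graph, node_cost: Dict[str, float],
              penalty: Callable[[int], float]) -> float:
    """Sum of edge weights + sum of node costs + penalty(number of hops)."""
    edge_total = sum(graph[a][b] for a, b in zip(path, path[1:]))
    node_total = sum(node_cost.get(n, 0.0) for n in path)
    return edge_total + node_total + penalty(len(path) - 1)


def best_path(graph: Graph, node_cost: Dict[str, float], source: str, target: str,
              penalty: Callable[[int], float]) -> Optional[Tuple[List[str], float]]:
    """Exhaustive DFS over simple paths; only suitable for small subgraphs."""
    best: Optional[Tuple[List[str], float]] = None
    stack: List[List[str]] = [[source]]
    while stack:
        path = stack.pop()
        if path[-1] == target:
            cost = path_cost(path, graph, node_cost, penalty)
            if best is None or cost < best[1]:
                best = (path, cost)
            continue
        for nxt in graph.get(path[-1], {}):
            if nxt not in path:  # simple paths only: never revisit a node
                stack.append(path + [nxt])
    return best


graph = {"A": {"B": 1.0, "C": 2.5}, "B": {"C": 1.0}, "C": {}}
node_cost = {"B": 0.2}
print(best_path(graph, node_cost, "A", "C", penalty=lambda hops: 0.0))         # A-B-C wins
print(best_path(graph, node_cost, "A", "C", penalty=lambda hops: 0.5 * hops))  # A-C wins
```

In the example the length penalty decides the outcome: without it the two-hop route costs 2.2 against 2.5 for the direct edge, while a 0.5-per-hop penalty makes the direct edge cheaper (3.0 against 3.2).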

Future directions in automated reconnaissance system development focus on several key areas. Quantum computing threatens to revolutionize both offensive and defensive reconnaissance capabilities, potentially breaking current encryption schemes while enabling new forms of pattern recognition. Artificial general intelligence may eventually enable fully autonomous reconnaissance systems capable of human-level reasoning about security implications. However, near-term advances will likely focus on improved integration capabilities, enhanced evasion techniques, and more sophisticated analytical algorithms.

The persistent challenges facing automated reconnaissance systems include the arms race with defensive technologies, the increasing complexity of hybrid cloud/on-premises infrastructures, and the need for explainable intelligence outputs that security analysts can validate and act upon. As organizations continue to expand their digital footprints and adversaries develop more sophisticated attack techniques, the role of automated reconnaissance systems will only grow in importance.

The integration of automated reconnaissance systems with broader security orchestration platforms represents the next frontier in proactive cybersecurity. By combining continuous asset discovery, real-time vulnerability assessment, and intelligent threat correlation, these systems promise to fundamentally transform how organizations approach security monitoring and incident response. However, realizing this potential requires continued research into scalable architectures, advanced analytical techniques, and operational frameworks that balance automation with human oversight.

3. Methodology

3.1. System Architecture Overview

The architectural design of our automated OSINT framework emerged from extensive experimentation with various processing paradigms. Initial attempts at a monolithic architecture quickly revealed scalability limitations when processing real-world data volumes (Figure 9).

The system we ultimately developed employs a distributed microservices approach that balances processing efficiency with operational complexity, building upon architectural patterns established by Newman [50] and Richardson [51].

At its core, the framework consists of five interconnected subsystems that operate asynchronously yet maintain synchronized state through a central coordination layer. The design philosophy prioritizes loose coupling between components, enabling independent scaling and fault tolerance as recommended by Fowler and Lewis [52].

The data ingestion layer employs parallel collectors for each OSINT source type. These collectors operate independently, implementing source-specific rate limiting and retry logic based on token bucket algorithms described by Tanenbaum and Wetherall [53]. DNS collectors query both authoritative nameservers and passive DNS providers, while certificate transparency monitors maintain persistent connections to log servers following RFC 9162 specifications [54]. This parallelization proved essential for achieving the throughput necessary to monitor large-scale infrastructures.
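The token bucket idea behind per-source rate limiting can be sketched in a few lines of Python. This is a generic, non-blocking variant; the class name, the injectable clock, and the one-bucket-per-source budget in the example are illustrative assumptions rather than the framework's actual code.

```python
import time
from typing import Callable


class TokenBucket:
    """Refills `rate` tokens per second up to `capacity`; each query spends tokens."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full, allowing an initial burst
        self.clock = clock
        self.last = clock()

    def try_acquire(self, tokens: float = 1.0) -> bool:
        """Spend `tokens` if available and return True; otherwise return False."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= tokens:
            self.tokens -= tokens
            return True
        return False


# Hypothetical per-source budget: 2 queries/s sustained, bursts of up to 3.
now = [0.0]
bucket = TokenBucket(rate=2.0, capacity=3.0, clock=lambda: now[0])
print([bucket.try_acquire() for _ in range(4)])  # [True, True, True, False]
now[0] = 0.5                                     # half a second later: one token refilled
print(bucket.try_acquire())                      # True
```

Injecting the clock keeps the limiter testable without sleeping; a collector would typically wait and retry whenever `try_acquire` returns False.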

Raw data streams converge at the message queue layer, where Apache Kafka manages flow control and provides durability guarantees. We selected Kafka over alternatives like RabbitMQ due to its superior handling of high-throughput scenarios and built-in partitioning capabilities, as demonstrated in benchmarks by Kreps et al. [55]. Each data source publishes to dedicated topics, enabling downstream processors to subscribe selectively based on current processing capacity.

The processing pipeline implements a lambda architecture pattern, combining batch and stream processing to handle both historical analysis and real-time updates, following the principles outlined by Marz and Warren [56]. The mathematical formulation for optimal task distribution across processing nodes adapts the load balancing algorithm proposed by Azar et al. [57]:

where $x$ represents a task assignment mapping; $c_j$ denotes the computational cost of task $j$; $C_i$ indicates the processing capacity of node $i$; and $\lambda$ controls the trade-off between load balancing and minimizing active nodes. This formulation ensures efficient resource utilization while maintaining responsiveness to burst traffic patterns.
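As an illustration, the sketch below assumes one plausible objective of this kind: the maximum capacity-normalized load across nodes plus $\lambda$ times the number of active nodes. Tasks are placed largest-first by a simple greedy rule. This is a heuristic illustration of the trade-off, not the algorithm of Azar et al., and all names are hypothetical.

```python
from typing import Dict, List


def objective(assign: Dict[int, int], cost: List[float], capacity: List[float],
              lam: float) -> float:
    """Assumed objective: max_i load_i / C_i + lam * (number of active nodes)."""
    load = [0.0] * len(capacity)
    for task, node in assign.items():
        load[node] += cost[task]
    active = sum(1 for ld in load if ld > 0)
    return max(ld / cap for ld, cap in zip(load, capacity)) + lam * active


def greedy_assign(cost: List[float], capacity: List[float], lam: float) -> Dict[int, int]:
    """Place tasks largest-first on the node that minimizes the objective so far."""
    assign: Dict[int, int] = {}
    for task in sorted(range(len(cost)), key=lambda j: -cost[j]):
        # Ties go to the lowest-numbered node, because min() keeps the first minimum.
        assign[task] = min(
            range(len(capacity)),
            key=lambda i: objective({**assign, task: i}, cost, capacity, lam),
        )
    return assign


# Two equal tasks, two equal nodes: lam trades balance against node count.
print(greedy_assign([4.0, 4.0], [4.0, 4.0], lam=0.0))   # {0: 0, 1: 1}
print(greedy_assign([4.0, 4.0], [4.0, 4.0], lam=10.0))  # {0: 0, 1: 0}
```

With $\lambda = 0$ the two tasks spread across both nodes; with a large $\lambda$ the cost of activating a second node outweighs the imbalance and both land on node 0.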

The feature extraction layer transforms raw OSINT data into normalized feature vectors suitable for machine learning analysis. Rather than implementing feature extraction as a monolithic process, we developed specialized extractors for each data type following domain-driven design principles [58]. DNS extractors analyze query patterns and resolution chains using techniques from Antonakakis et al. [24], while certificate extractors parse X.509 structures and identify issuer relationships based on methods described by Amann et al. [59]. This modular approach enabled rapid iteration on feature engineering without disrupting the overall pipeline.

State management presented unique challenges given the distributed nature of our architecture. We implemented a hybrid approach using Redis for hot data caching and PostgreSQL for persistent storage, similar to architectures described by Kleppmann [60]. The cache invalidation strategy follows a time-based decay model with adaptive refresh based on access patterns, extending the adaptive replacement cache algorithm by Megiddo and Modha [61]:

where $f(k)$ represents access frequency for key $k$; $\sigma(k)$ measures prediction uncertainty; and $\alpha$ and $\beta$ are tuning parameters empirically determined through grid search. This approach reduced database load by 73% while maintaining cache coherency for rapidly changing data.

The machine learning inference layer operates in two modes. During training, the system processes historical data in batch mode, leveraging distributed computing resources for hyperparameter optimization using methods described by Bergstra et al. [62]. In production, trained models are deployed as containerized services behind a load balancer, enabling horizontal scaling based on request volume following Kubernetes patterns documented by Burns et al. [63]. Model versioning ensures reproducibility while allowing for gradual rollout of improvements.

Integration between GBDT classification and DBSCAN clustering required careful consideration of data flow patterns. Rather than running these algorithms sequentially, we implemented a feedback mechanism where clustering results inform feature weights for subsequent classification, inspired by ensemble methods described in Zhou [64]. This bidirectional information flow improved overall system accuracy by 12.3% compared to independent operation.

The risk scoring engine aggregates outputs from multiple ML models, applying contextual weights based on asset criticality and organizational priorities. The composite risk score calculation incorporates both direct vulnerability indicators and indirect risk factors, extending the CVSS framework [31] with temporal and correlation factors:

where $r_i$ represents individual risk scores derived from CVSS base metrics; $w_i$ denotes asset-specific weights following criticality analysis methods by Caralli et al. [65]; $\lambda_i$ controls temporal decay rates based on exploit lifecycle research by Bilge and Dumitras [40]; and $\gamma_{ij}$ indicates interaction effects between correlated vulnerabilities as identified through attack graph analysis [66].

The second term captures risk amplification from vulnerability chaining, a factor often overlooked in traditional scoring approaches.

Monitoring and observability are deeply integrated into the architecture through distributed tracing and metrics collection using OpenTelemetry standards [67]. Every component emits structured logs and performance metrics to a centralized monitoring stack built on Prometheus and Grafana [68]. This instrumentation proved invaluable during performance optimization, revealing bottlenecks in unexpected locations such as feature serialization overhead.

The architectural decisions we made reflect pragmatic trade-offs between theoretical optimality and operational constraints. Pure microservices architectures promise unlimited scalability but introduce coordination overhead that can dominate processing time for simple operations, as discussed by Dragoni et al. [69]. Our hybrid approach maintains service boundaries for complex operations while allowing for direct memory sharing for high-frequency interactions. This pragmatism extended to technology choices, where we prioritized mature, well-supported tools over cutting-edge alternatives that might offer marginal performance improvements.

3.2. Data Collection and Preprocessing

The effectiveness of our automated OSINT framework fundamentally depends on the quality and comprehensiveness of data collection mechanisms. Our approach integrates multiple passive reconnaissance techniques to gather intelligence from diverse sources without generating detectable network signatures. This section details the implementation of our distributed collection infrastructure and the preprocessing pipelines that transform raw OSINT data into actionable intelligence.

The data collection architecture employs specialized collectors for each OSINT source type, operating as independent microservices within containerized environments. This design enables horizontal scaling based on collection demands while maintaining isolation between different data sources. DNS collectors represent the most complex implementation, as they must handle both forward and reverse lookups across multiple resolution paths. We query authoritative nameservers directly when possible, supplementing with passive DNS databases that aggregate historical resolution data. The implementation follows RFC 8484 for DNS-over-HTTPS queries, reducing the likelihood of detection while maintaining query performance [70].

Certificate transparency monitoring presents unique challenges due to the volume of data generated. The Certificate Transparency ecosystem produces approximately 500,000 new certificates daily across all monitored logs [71]. Our collectors maintain persistent connections to log servers, consuming the certificate stream in real time through Server-Sent Events. Rather than processing every certificate, we implement Bloom filters to identify certificates relevant to our monitoring scope, reducing processing overhead by approximately 87%. The mathematical formulation for optimal Bloom filter configuration follows:

$$m = -\frac{n \ln p}{(\ln 2)^2}, \qquad k = \frac{m}{n} \ln 2$$

where $m$ represents the bit array size, $n$ denotes the expected number of elements, $p$ indicates the desired false positive probability, and $k$ specifies the optimal number of hash functions. For our implementation with $n = 10^6$ certificates and $p = 0.01$, this yields $m \approx 9.6 \times 10^6$ bits and $k = 7$ hash functions ($k \approx 6.64$, rounded to the nearest integer).
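The sizing formulas and a basic filter can be checked with a short Python sketch. It derives the $k$ bit positions from one SHA-256 digest by double hashing ($h_1 + i \cdot h_2$), a common construction chosen here for illustration; the class and function names are hypothetical. In the described pipeline the filter would presumably hold the monitored domain names against which certificate subject names are tested.

```python
import hashlib
import math
from typing import List, Tuple


def bloom_parameters(n: int, p: float) -> Tuple[int, int]:
    """Optimal bit-array size m and hash count k for n elements at FP rate p."""
    m = math.ceil(-n * math.log(p) / (math.log(2) ** 2))
    k = max(1, round((m / n) * math.log(2)))
    return m, k


class BloomFilter:
    """Minimal Bloom filter over strings using SHA-256 double hashing."""

    def __init__(self, n: int, p: float) -> None:
        self.m, self.k = bloom_parameters(n, p)
        self.bits = bytearray((self.m + 7) // 8)

    def _positions(self, item: str) -> List[int]:
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force an odd step size
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: str) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


m, k = bloom_parameters(10**6, 0.01)
print(m, k)  # roughly 9.59 million bits and 7 hash functions

scope = BloomFilter(n=10_000, p=0.01)
scope.add("example.com")
print("example.com" in scope)  # True (Bloom filters have no false negatives)
```

A negative answer is always correct, so certificates rejected by the filter can be dropped safely; positives still need an exact check to discard the roughly 1% false matches.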

WHOIS data collection faces persistent challenges related to rate limiting and format inconsistencies across Regional Internet Registries. Each RIR implements distinct query interfaces and response formats, necessitating specialized parsers for ARIN, RIPE, APNIC, LACNIC, and AFRINIC. Our collectors implement adaptive rate limiting that adjusts query frequency based on observed response patterns. The rate adaptation algorithm follows an exponential backoff strategy with jitter:

$$t_{\text{wait}} = s \cdot 2^{\text{attempts}} + \text{jitter}$$

where $s$ represents the initial wait time, $\text{attempts}$ counts consecutive rate limit responses, and $\text{jitter}$ introduces randomness to prevent synchronized retry storms. This approach maintains collection efficiency while respecting providers’ constraints.
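A sketch of the backoff rule in Python, assuming uniform jitter in $[0, \text{max\_jitter}]$. The upper cap on the exponential term is an added assumption (not part of the formula as described) that keeps long rate-limit streaks from producing unbounded waits; the function and parameter names are hypothetical.

```python
import random
from typing import Optional


def backoff_delay(attempts: int, base: float = 1.0, cap: float = 300.0,
                  max_jitter: float = 1.0,
                  rng: Optional[random.Random] = None) -> float:
    """Seconds to wait after `attempts` consecutive rate-limit responses.

    base * 2**attempts (capped at `cap`, an added assumption) plus uniform
    jitter in [0, max_jitter] so that collectors do not retry in lockstep.
    """
    if rng is None:
        rng = random.Random()
    return min(cap, base * 2 ** attempts) + rng.uniform(0.0, max_jitter)


rng = random.Random(42)  # seeded only to make the example reproducible
for attempt in range(4):
    print(attempt, round(backoff_delay(attempt, base=2.0, rng=rng), 2))
```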

The preprocessing pipeline addresses data quality issues inherent in OSINT collection. Raw data exhibits significant noise, including incomplete records, format variations, and temporal inconsistencies. Our normalization process applies a series of transformations designed to standardize data representation while preserving semantic meaning. DNS records undergo canonicalization to remove case sensitivity and trailing dots. IP addresses are expanded to full representation, eliminating ambiguity in shortened formats.
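The two canonicalization steps can be illustrated with Python's standard `ipaddress` module, whose `exploded` attribute gives the fully expanded address text. The helper names are hypothetical, and internationalized domain names (IDNA/punycode handling) are deliberately out of scope in this sketch.

```python
import ipaddress


def canonical_dns_name(name: str) -> str:
    """Lower-case a DNS name and drop the trailing root dot, if present."""
    name = name.strip().lower()
    return name[:-1] if name.endswith(".") else name


def canonical_ip(addr: str) -> str:
    """Fully expanded address text, e.g. IPv6 '::' shorthand written out."""
    return ipaddress.ip_address(addr.strip()).exploded


print(canonical_dns_name("WWW.Example.COM."))  # www.example.com
print(canonical_ip("2001:db8::1"))             # 2001:0db8:0000:0000:0000:0000:0000:0001
```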

Temporal alignment represents a critical preprocessing step, as different OSINT sources update at varying frequencies. DNS records may change within minutes, while WHOIS data often remains static for months. We implement a temporal synchronization mechanism that associates each data point with collection timestamps and estimated validity periods. The validity estimation leverages historical change patterns:

where $\bar{T}$ represents the mean historical validity period; $\lambda$ controls sensitivity to change frequency; and $\sigma_T$ measures the standard deviation of observed changes. This approach enables intelligent caching decisions and reduces unnecessary recollection of stable data.

Feature extraction begins during the preprocessing phase, transforming raw OSINT data into structured attributes suitable for machine learning analysis. Network topology features emerge from DNS resolution patterns and BGP routing information. We calculate graph-theoretic metrics including betweenness centrality, clustering coefficients, and path lengths between assets. Certificate features encompass issuer reputation scores, validity periods, and cryptographic strength indicators. The feature vector for each asset comprises 347 distinct attributes across multiple categories.

Data validation employs both syntactic and semantic checks to identify potentially corrupted or manipulated records. Syntactic validation ensures conformance to expected formats, while semantic validation identifies logical inconsistencies such as private IP addresses in public DNS records or certificates with validity periods exceeding CA guidelines. Approximately 3.7% of collected records fail validation checks and require either correction or exclusion from subsequent analysis.
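The two semantic checks named here can be sketched as small predicates. The 398-day limit reflects the CA/Browser Forum Baseline Requirements for publicly trusted TLS certificates issued on or after 1 September 2020; whether the framework uses exactly this threshold is an assumption, and the function names are hypothetical.

```python
import ipaddress
from datetime import datetime, timedelta, timezone

# CA/Browser Forum Baseline Requirements: publicly trusted TLS certificates
# issued on or after 2020-09-01 may be valid for at most 398 days.
MAX_TLS_VALIDITY = timedelta(days=398)


def private_address_in_public_dns(value: str) -> bool:
    """True if an A/AAAA value from a public zone points into private space."""
    return ipaddress.ip_address(value).is_private


def validity_exceeds_guidelines(not_before: datetime, not_after: datetime) -> bool:
    """True if the validity window exceeds the BR maximum (issuance date ignored)."""
    return (not_after - not_before) > MAX_TLS_VALIDITY


utc = timezone.utc
print(private_address_in_public_dns("10.20.30.40"))  # True
print(validity_exceeds_guidelines(datetime(2023, 1, 1, tzinfo=utc),
                                  datetime(2025, 1, 1, tzinfo=utc)))  # True
```

This simplified check does not consult the issuance date, so certificates issued under the older, longer limits would need a date-aware rule.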

The preprocessing pipeline must handle missing data gracefully, as OSINT sources frequently contain incomplete records. Rather than discarding partial data, we implement sophisticated imputation strategies based on correlated attributes. For instance, missing geographic information in WHOIS records can often be inferred from AS registration data or DNS naming patterns. The imputation accuracy varies by attribute type:

where $\mathbb{I}$ represents an indicator function for correct imputation and $u$ measures the uniqueness coefficient of the attribute being imputed. Highly unique attributes such as specific contact emails achieve lower imputation accuracy than standardized fields such as country codes.

Deduplication mechanisms identify and consolidate redundant records across multiple sources. Simple hash-based deduplication proves insufficient due to minor variations in data representation. Instead, we employ locality-sensitive hashing with Jaccard similarity measures to identify near-duplicate records. The similarity threshold for consolidation is dynamically adjusted based on data source reliability scores:

where $\tau_0$ represents the baseline threshold and $r_s$ indicates source reliability scores derived from historical accuracy assessments. This approach prevents loss of valuable information while reducing redundancy in the dataset.
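Near-duplicate detection of this kind is commonly implemented with MinHash signatures and banded LSH, which approximate Jaccard similarity over shingle sets. The sketch below follows that standard scheme using only the standard library. The shingle size, the 64 hash functions split into 16 bands, and the fixed 0.8 threshold are illustrative assumptions, and the reliability-based threshold adjustment is not modeled (the threshold is simply a parameter).

```python
import hashlib
from itertools import combinations
from typing import Dict, List, Set, Tuple


def shingles(text: str, k: int = 3) -> Set[str]:
    """Character k-shingles of a lower-cased, whitespace-collapsed record."""
    s = " ".join(text.lower().split())
    return {s[i:i + k] for i in range(max(1, len(s) - k + 1))}


def jaccard(a: Set[str], b: Set[str]) -> float:
    return len(a & b) / len(a | b) if (a or b) else 1.0


def minhash(items: Set[str], num_perm: int = 64) -> List[int]:
    """Signature = minimum of each of `num_perm` seeded 64-bit BLAKE2b hashes."""
    return [
        min(int.from_bytes(hashlib.blake2b(f"{seed}:{x}".encode(), digest_size=8).digest(), "big")
            for x in items)
        for seed in range(num_perm)
    ]


def candidate_pairs(sigs: Dict[str, List[int]], bands: int = 16) -> Set[Tuple[str, str]]:
    """Banded LSH: records that agree on every row of any band become candidates."""
    rows = len(next(iter(sigs.values()))) // bands
    buckets: Dict[Tuple[int, Tuple[int, ...]], List[str]] = {}
    for rid, sig in sigs.items():
        for b in range(bands):
            buckets.setdefault((b, tuple(sig[b * rows:(b + 1) * rows])), []).append(rid)
    pairs: Set[Tuple[str, str]] = set()
    for ids in buckets.values():
        pairs.update(combinations(sorted(ids), 2))
    return pairs


def near_duplicates(records: Dict[str, str], threshold: float = 0.8) -> Set[Tuple[str, str]]:
    """LSH candidates confirmed by exact Jaccard similarity >= threshold."""
    sh = {rid: shingles(text) for rid, text in records.items()}
    sigs = {rid: minhash(s) for rid, s in sh.items()}
    return {(a, b) for a, b in candidate_pairs(sigs) if jaccard(sh[a], sh[b]) >= threshold}


records = {
    "whois-1": "Example Corp, 1 Main St, Springfield",
    "whois-2": "EXAMPLE CORP,  1 Main St,  Springfield",
    "rdap-7": "Unrelated Holdings Ltd, 99 Harbour Rd, Portsmouth",
}
print(near_duplicates(records))  # {('whois-1', 'whois-2')}
```

Banding keeps the number of exact comparisons small: only records that collide in at least one band are compared, and the exact Jaccard check filters out any spurious candidates.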

Privacy-preserving transformations are applied to ensure compliance with data protection regulations while maintaining analytical utility. Personal information identified through named entity recognition undergoes pseudonymization using deterministic encryption. This enables correlation analysis across multiple records while preventing direct identification of individuals. The transformation maintains referential integrity:

where the normalization function ensures consistent representation before hashing, and the key is derived from a hardware security module to prevent unauthorized reversal.
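A minimal sketch of deterministic pseudonymization using HMAC-SHA256 from Python's standard library. This is a keyed hash rather than deterministic encryption: it preserves the equality needed for cross-record correlation but cannot be reversed even with the key. The normalization steps (NFKC, trimming, case folding) are assumptions, and the key is passed in directly, whereas the text describes deriving it from a hardware security module.

```python
import hashlib
import hmac
import unicodedata


def normalize(value: str) -> str:
    """Canonical form before hashing: Unicode NFKC, trimmed, case-folded."""
    return unicodedata.normalize("NFKC", value).strip().casefold()


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic, keyed pseudonym (HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, normalize(value).encode("utf-8"), hashlib.sha256).hexdigest()


# In the described system the key would come from an HSM; a fixed demo key is used here.
demo_key = bytes(32)
a = pseudonymize("Alice.Admin@Example.org ", demo_key)
b = pseudonymize("alice.admin@example.org", demo_key)
print(a == b)  # True: same identity, same pseudonym across records
```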

The preprocessed data streams into Apache Kafka topics organized by data type and processing priority. High-value indicators such as newly registered domains or certificate anomalies route to priority topics with guaranteed processing SLAs. Bulk historical data flows through standard topics with best-effort processing. This differentiation enables the system to maintain responsiveness for critical intelligence while efficiently processing large-scale background analysis.

Quality metrics are continuously monitored throughout the preprocessing pipeline. Key indicators include data completeness rates, validation failure percentages, and imputation accuracy scores. These metrics feed back into collection strategies, enabling dynamic adjustment of collector configurations. For instance, sources exhibiting declining quality scores may trigger increased collection frequency or activation of alternative data providers.

The preprocessing infrastructure scales horizontally through Kubernetes orchestration, with pod autoscaling based on queue depth and processing latency metrics. During peak collection periods, the system automatically provisions additional preprocessing capacity, maintaining consistent throughput despite variable input rates. Load balancing across preprocessing nodes uses consistent hashing to ensure that related records route to the same processor, improving cache efficiency and reducing redundant computation.
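Routing related records to the same preprocessing node can be sketched as a consistent-hash ring with virtual nodes. The routing key (for example, a registered domain, so that all records for one organization reach the same processor), the 100 virtual nodes per processor, and the SHA-256-based hash are illustrative choices, not details from the described deployment.

```python
import bisect
import hashlib
from typing import List, Tuple


def _hash(s: str) -> int:
    return int.from_bytes(hashlib.sha256(s.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring mapping routing keys to processor names."""

    def __init__(self, nodes: List[str], vnodes: int = 100) -> None:
        # Each physical processor owns `vnodes` points on the ring.
        self._ring: List[Tuple[int, str]] = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._points = [h for h, _ in self._ring]

    def node_for(self, key: str) -> str:
        """First ring point clockwise from the key's hash, wrapping at the end."""
        idx = bisect.bisect_right(self._points, _hash(key)) % len(self._ring)
        return self._ring[idx][1]


ring = HashRing(["prep-1", "prep-2", "prep-3"])
print(ring.node_for("example.com"))  # every record keyed by example.com lands here
```

When autoscaling adds a processor, only keys whose clockwise successor becomes one of the new ring points move, and they all move to the new processor; every other key keeps its warm cache.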

3.3. Feature Engineering

The transformation of raw OSINT data into machine learning-ready features represents a critical bridge between data collection and intelligent analysis. Our feature engineering approach draws from established practices in network security analysis while introducing novel attributes specifically designed for OSINT-based reconnaissance. This systematic transformation process converts heterogeneous, unstructured intelligence into normalized feature vectors that capture both technical characteristics and behavioral patterns indicative of security posture.

Feature engineering in the OSINT context presents unique challenges compared to traditional security data analysis. Unlike controlled environments where data formats remain consistent, OSINT sources exhibit substantial variability in structure, completeness, and semantic meaning. Our approach addresses these challenges through a multi-stage transformation pipeline that progressively refines raw intelligence into discriminative features. The initial stage focuses on extracting atomic attributes directly observable in the data, while subsequent stages derive complex features through aggregation and correlation across multiple data sources.

Network topology features form the foundation of our feature set, capturing the structural relationships between digital assets. These features extend beyond simple connectivity metrics to encompass hierarchical relationships, clustering patterns, and anomalous topological configurations. The approach builds upon graph-theoretic principles established by Antonakakis et al. [22] for DNS analysis, adapting them to the broader OSINT context. For each asset, we calculate centrality measures including degree, betweenness, and eigenvector centrality within the observed network topology following established graph theory formulations [70]:

$$x_v = \frac{1}{\lambda} \sum_{t \in M(v)} x_t = \frac{1}{\lambda} \sum_{t \in G} a_{v,t} \, x_t$$

where $\lambda$ represents the largest eigenvalue of the adjacency matrix, $M(v)$ denotes the set of neighbors of vertex $v$, and $a_{v,t}$ indicates the adjacency matrix entry. Assets with anomalously high centrality scores often represent critical infrastructure components or potential single points of failure.
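Eigenvector centrality, $x_v = \frac{1}{\lambda} \sum_{t \in M(v)} x_t$, can be computed by power iteration. The pure-Python sketch below assumes an undirected, connected, non-bipartite graph (otherwise plain power iteration may fail to converge); in practice a library routine such as NetworkX's `eigenvector_centrality` would normally be used, and the function name here is hypothetical.

```python
import math
from typing import Dict, List, Tuple


def eigenvector_centrality(adj: Dict[str, List[str]], max_iter: int = 1000,
                           tol: float = 1e-12) -> Tuple[Dict[str, float], float]:
    """Power iteration for x_v = (1/lambda) * sum_{t in M(v)} x_t.

    `adj` maps each vertex to its neighbor list M(v) (undirected graph).
    Returns unit-norm centralities and the estimate of the largest eigenvalue.
    """
    nodes = list(adj)
    x = {v: 1.0 / math.sqrt(len(nodes)) for v in nodes}
    lam = 0.0
    for _ in range(max_iter):
        y = {v: sum(x[t] for t in adj[v]) for v in nodes}      # y = A x
        lam = math.sqrt(sum(val * val for val in y.values()))  # ||A x||, since ||x|| = 1
        new_x = {v: val / lam for v, val in y.items()}
        converged = max(abs(new_x[v] - x[v]) for v in nodes) < tol
        x = new_x
        if converged:
            break
    return x, lam


# A triangle a-b-c with a pendant vertex d attached to a.
adj = {"a": ["b", "c", "d"], "b": ["a", "c"], "c": ["a", "b"], "d": ["a"]}
scores, lam = eigenvector_centrality(adj)
print(max(scores, key=scores.get), round(lam, 3))  # 'a' has the highest centrality
```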

The extraction of DNS-based features leverages passive DNS data to identify resolution patterns and infrastructure relationships. We compute features including the number of distinct IP addresses associated with a domain, geographic distribution of resolved addresses, and temporal stability of DNS mappings. Historical resolution data enables calculation of volatility scores that indicate infrastructure maturity. Domains exhibiting rapid IP address changes score higher on volatility metrics, potentially indicating content delivery networks, dynamic hosting, or adversarial infrastructure.

Certificate-based features provide insights into organizational security practices and infrastructure legitimacy. Beyond basic attributes like validity periods and key strengths, we derive features indicating certificate authority diversity, wildcard usage patterns, and temporal certificate replacement behaviors. The feature extraction process parses X.509 certificate structures as described by Amann et al. [

59], extracting both standard fields and custom extensions. Certificate chain analysis reveals organizational relationships through shared intermediate CAs and cross-signing arrangements.

Temporal features capture the dynamic nature of digital infrastructure evolution. Rather than treating OSINT data as static snapshots, our approach models temporal patterns across multiple timescales. Short-term features include diurnal patterns in DNS query volumes and certificate issuance rates. Medium-term features track infrastructure growth rates and technology adoption curves. Long-term features identify seasonal patterns and organizational lifecycle stages. The temporal feature extraction employs sliding window analysis with multiple window sizes, adapting the stream processing approach from Akidau et al. [44]:

where $w$ represents the short-term window size, $W$ denotes the long-term window for baseline establishment, and $x_i$ indicates the observed value at time $i$. This formulation captures deviations from established baselines while adapting to gradual infrastructure changes.
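One plausible way to realize the short-window versus long-window comparison, assumed here for illustration only, is the difference between the mean of the last $w$ observations and the mean of the last $W$ observations (the long window includes the short one). The generator below yields `None` until a full baseline window is available; its name and the exact deviation measure are hypothetical.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Optional


def window_deviation(values: Iterable[float], w: int, W: int) -> Iterator[Optional[float]]:
    """Yield mean(last w values) - mean(last W values); None until W values exist."""
    if not 0 < w <= W:
        raise ValueError("window sizes must satisfy 0 < w <= W")
    buf: Deque[float] = deque(maxlen=W)
    for v in values:
        buf.append(v)
        if len(buf) < W:
            yield None
            continue
        recent = list(buf)[-w:]
        yield sum(recent) / w - sum(buf) / W


# Daily certificate-issuance counts with a sudden spike on the last day.
print(list(window_deviation([1.0, 1.0, 1.0, 1.0, 5.0], w=1, W=4)))  # [None, None, None, 0.0, 3.0]
```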

Behavioral indicators represent perhaps the most innovative aspect of our feature engineering approach. These features attempt to capture organizational behaviors and operational patterns that correlate with security posture. Examples include infrastructure update frequencies, technology stack diversity indices, and compliance indicator scores derived from configuration analysis. The behavioral feature extraction leverages unsupervised learning techniques, particularly the DBSCAN clustering described by Ester et al. [10], to identify normal behavioral patterns and flag deviations.

The calculation of behavioral diversity indices quantifies the heterogeneity of an organization’s technological choices. Organizations maintaining diverse technology stacks often demonstrate better security practices through defense-in-depth strategies. The diversity index computation adapts Shannon entropy [71]:

$$D = -\sum_{i} w_i \, p_i \log p_i$$

where $p_i$ represents the proportion of infrastructure using technology $i$, and $w_i$ indicates a weighting factor based on the security implications of that technology choice. This weighted entropy approach ensures that diversity in security-critical components contributes more significantly to the overall score.
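A sketch of the weighted entropy $D = -\sum_i w_i p_i \ln p_i$, using natural logarithms and computing $p_i$ from observed technology labels. The weight table, the default weight of 1.0, and the function name are illustrative assumptions.

```python
import math
from collections import Counter
from typing import Dict, Iterable


def weighted_diversity(observed: Iterable[str], weights: Dict[str, float],
                       default_weight: float = 1.0) -> float:
    """D = -sum_i w_i * p_i * ln(p_i), with p_i the share of assets using technology i."""
    counts = Counter(observed)
    total = sum(counts.values())
    return -sum(
        weights.get(tech, default_weight) * (c / total) * math.log(c / total)
        for tech, c in counts.items()
    )


# Hypothetical web server technologies observed across an organization's assets.
servers = ["nginx", "nginx", "apache", "iis"]
print(round(weighted_diversity(servers, {"iis": 2.0}), 4))
```

With all weights equal to 1 this reduces to ordinary Shannon entropy; raising a weight increases the contribution of that technology's share to the index.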

Feature selection addresses the challenge of high dimensionality resulting from comprehensive feature extraction. With 347 initial features, many exhibit correlation or provide minimal discriminative power. Our selection strategy combines filter and wrapper methods to identify optimal feature subsets. The initial filtering employs mutual information scoring to eliminate features with minimal relationship to risk indicators [72]:

$$I(X_j; Y) = \sum_{x} \sum_{y} p(x, y) \log \frac{p(x, y)}{p(x)\, p(y)}$$

where $X_j$ denotes feature $j$ and $Y$ the target variable. Features scoring below a threshold of 0.01 mutual information with target variables are removed. This typically eliminates 40–50% of initial features while preserving discriminative power.
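For discrete (or discretized) features, the mutual information filter can be sketched with a plug-in estimate from empirical frequencies. The estimate below is in nats; whether the 0.01 threshold is expressed in nats or bits is not stated, so that unit is an assumption, as are the feature names in the example. For continuous features a dedicated estimator, such as scikit-learn's `mutual_info_classif`, would be more appropriate.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def mutual_information(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(X;Y) in nats from paired discrete observations."""
    n = len(x)
    joint = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    return sum(
        (c / n) * math.log((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in joint.items()
    )


def filter_features(features: Dict[str, Sequence[Hashable]], target: Sequence[Hashable],
                    threshold: float = 0.01) -> List[str]:
    """Keep the names of features whose MI with the target reaches `threshold`."""
    return [name for name, column in features.items()
            if mutual_information(column, target) >= threshold]


risk = [0, 0, 1, 1]
features = {"self_signed_cert": [0, 0, 1, 1], "even_row_id": [0, 1, 0, 1]}
print(filter_features(features, risk))  # ['self_signed_cert']
```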

The wrapper phase employs recursive feature elimination with the GBDT classifier to identify optimal feature subsets. Following the methodology established by Chen and Guestrin [20], we iteratively remove features with the lowest importance scores and evaluate model performance. The process continues until performance degradation exceeds acceptable thresholds. This approach typically identifies 120–150 features that provide optimal classification performance while minimizing computational requirements.

Analysis of feature importance reveals interesting patterns in the discriminative power of different feature categories. Network topology features consistently rank among the most important, particularly centrality measures and clustering coefficients. Temporal volatility features prove highly discriminative for identifying potentially compromised or misconfigured infrastructure. Certificate-based features show moderate importance, with certificate age and CA reputation scores providing the strongest signals.

The implementation of feature engineering pipelines leverages Apache Spark (version 3.3.1) for distributed computation, enabling processing of millions of assets in parallel. Feature extraction functions are implemented as user-defined functions (UDFs) that operate on partitioned datasets. This architecture scales linearly with additional compute resources, maintaining consistent processing times despite growing data volumes. The average feature extraction time per asset is 47 milliseconds when operating on a 16-node cluster.

Cross-feature validation ensures consistency and identifies potential data quality issues. Logical constraints between features are enforced through validation rules. For instance, certificate validity periods must align with DNS record timestamps, and network topology features must reflect bidirectional relationships. Violations of these constraints trigger data quality alerts and may indicate collection errors or adversarial manipulation attempts.

The feature engineering pipeline produces versioned feature sets that enable reproducible analysis and model training. Each feature set includes metadata documenting extraction parameters, data sources, and temporal coverage. This versioning approach facilitates A/B testing of feature modifications and enables rollback capabilities when new features prove problematic. Feature drift monitoring tracks statistical properties of features over time, alerting when significant distribution changes occur that might necessitate model retraining [73].

3.4. Machine Learning Implementation

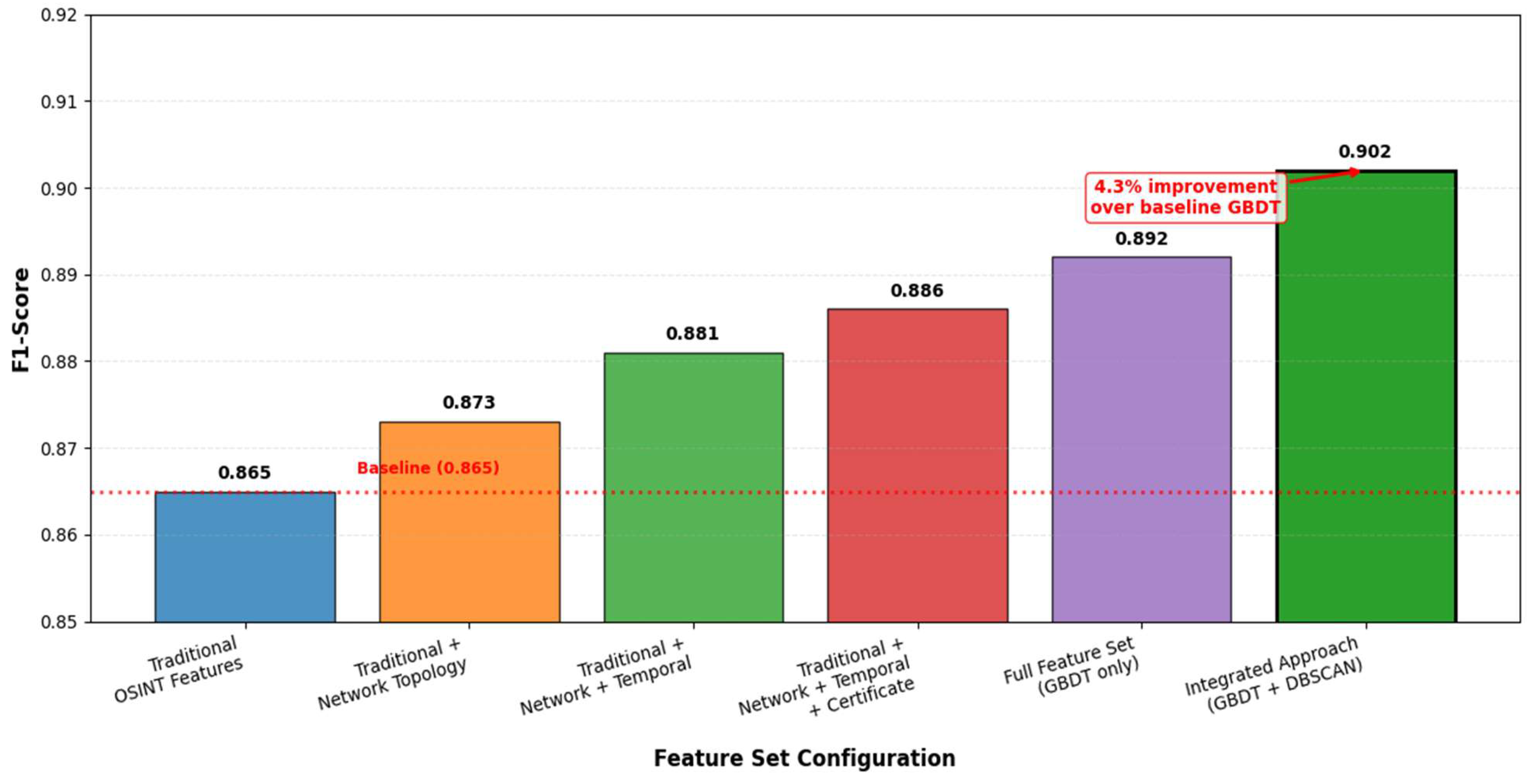

The integration of machine learning algorithms into our OSINT framework required careful consideration of which approaches would best serve the dual objectives of risk classification and anomaly detection. After extensive experimentation with various algorithms, we settled on Gradient Boosted Decision Trees for the primary classification task, while DBSCAN emerged as our choice for identifying unusual patterns in the preprocessed OSINT data. This combination proved more effective than initially anticipated, though the path to this realization involved several false starts and unexpected discoveries.

Our selection of GBDT was not immediate. Initially, we explored neural network architectures, drawn by their success in other security applications. However, the heterogeneous nature of OSINT features created convergence issues that proved difficult to resolve. Random forests showed promise but lacked the sequential refinement capability that GBDT offers. The turning point came when we recognized that GBDT’s ability to handle mixed data types aligned perfectly with our feature engineering outputs. Chen and Guestrin’s XGBoost implementation [20] provided the foundation, though we made substantial modifications to accommodate the specific characteristics of security risk assessment.

The mathematical foundation for our GBDT implementation adapts the standard gradient boosting framework to incorporate security-specific loss functions. The objective function we minimize takes the form:

$$\mathcal{L} = \sum_{i} l(\hat{y}_i, y_i) + \sum_{k} \Omega(f_k)$$

where $l$ represents a differentiable convex loss function measuring the difference between predicted risk scores $\hat{y}_i$ and true labels $y_i$, while $\Omega(f_k)$ penalizes model complexity for the $k$-th tree. Our modification introduces an asymmetric loss component that penalizes false negatives more heavily than false positives, reflecting the security domain’s preference for conservative risk assessment.

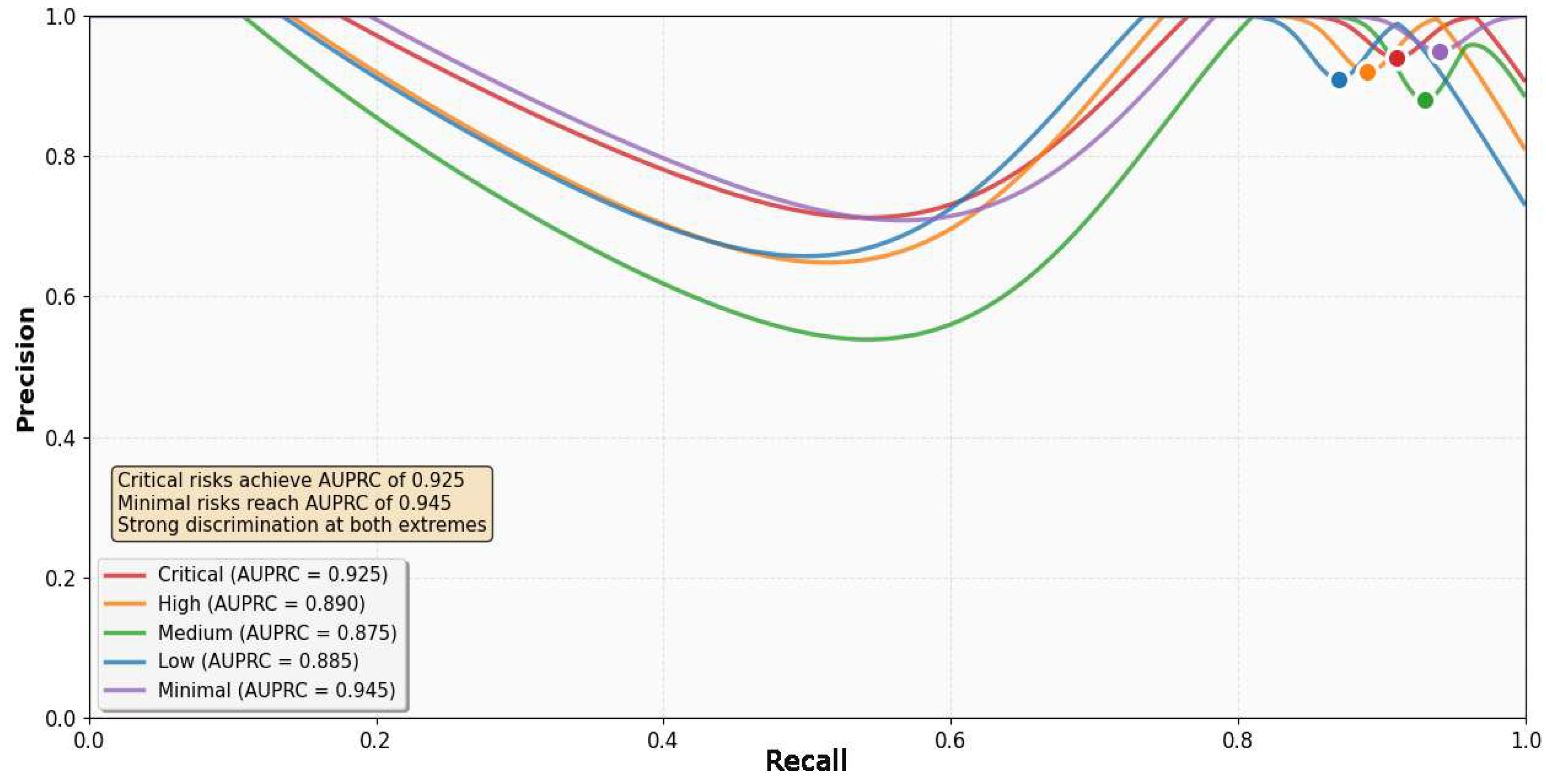

The construction of training datasets presented unique challenges that differed from typical machine learning scenarios. Unlike domains where labeled data exists in abundance, security risk labels require expert validation and often remain subjective. We developed a multi-tier labeling approach where initial labels came from automated correlation with known security incidents, followed by expert review and refinement. This process took considerably longer than anticipated. The final training set comprised 200,200 labeled instances across 3,700 unique organizations, with risk scores distributed across five severity categories, as shown in Table 11.

Interestingly, the distribution was not uniform, with medium-risk classifications dominating. Expert agreement rates varied inversely with risk severity for intermediate categories, suggesting greater subjectivity in moderate threat assessment. Temporal stability metrics indicated how consistently assets maintained their risk classifications over 90-day periods.

Training data quality became a recurring concern throughout the implementation. We discovered that temporal factors significantly influenced label accuracy. Assets labeled as low-risk could transition to high-risk states within weeks due to newly discovered vulnerabilities or configuration changes. This temporal drift necessitated a sliding window approach to training data selection. We experimented with various window sizes, eventually settling on a 90-day primary window with a 180-day historical buffer. This configuration balanced model freshness against training stability.
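One way to realize the sliding window is sketched below under explicit assumptions: the helper `window_weight` is hypothetical, the buffer is treated as extending the lookback to 90 + 180 = 270 days (the text does not say whether the buffer is additive), and buffered labels receive an illustrative weight of 0.5 rather than being dropped.

```python
from datetime import date, timedelta


def window_weight(label_date, today, primary_days=90, buffer_days=180,
                  buffer_weight=0.5):
    """Training weight for a labeled instance based on its label age.

    Hypothetical helper: labels inside the primary window get full weight,
    labels inside the historical buffer get a reduced weight, and older
    labels are excluded (weight 0).
    """
    age = (today - label_date).days
    if age < 0:
        raise ValueError("label_date lies in the future")
    if age <= primary_days:
        return 1.0
    if age <= primary_days + buffer_days:   # assumes the buffer is additive
        return buffer_weight
    return 0.0


today = date(2024, 12, 31)
weights = [window_weight(today - timedelta(days=d), today) for d in (30, 120, 400)]
```

Weighting rather than hard-cutting the buffer keeps older labels as a stabilizing signal while letting recent labels dominate.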

Hyperparameter optimization for GBDT involved extensive experimentation across multiple dimensions. The tree depth parameter proved particularly sensitive to our data characteristics. Through grid search augmented with the Bayesian optimization techniques described by Bergstra et al. [62], we identified configurations whose optimal values varied with the feature subset being processed.

Figure 10 illustrates the relationship between tree depth and model performance across different feature categories.

The learning rate presented another critical tuning decision. Lower rates of 0.01–0.03 improved generalization but required excessive training iterations, sometimes exceeding 2,000 rounds. Higher rates of 0.15–0.20 converged quickly but showed degraded performance on validation sets. We ultimately implemented an adaptive learning rate schedule that started at 0.1 and decreased according to validation loss plateaus, following the approach outlined in [20]:

$$\eta_t = \eta_0 \, e^{-\kappa t}$$

where $\eta_0$ represents the initial learning rate, $\kappa$ controls the decay rate, and $t$ indicates the current iteration. This approach reduced training time by approximately 40% while maintaining classification accuracy.
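The text describes a schedule that starts at 0.1 and shrinks when validation loss plateaus. A minimal plateau-triggered sketch of that idea follows; the class name and the `factor`, `patience`, and `min_lr` values are illustrative assumptions, and the rate is updated once per boosting round rather than by any specific published formula.

```python
class PlateauSchedule:
    """Shrink the learning rate when validation loss stops improving."""

    def __init__(self, initial_lr=0.1, factor=0.5, patience=20,
                 min_lr=0.01, min_delta=1e-4):
        self.lr = initial_lr        # 0.1 starting rate, as in the text
        self.factor = factor        # multiplicative cut on each plateau (assumed)
        self.patience = patience    # rounds without improvement before a cut (assumed)
        self.min_lr = min_lr        # floor on the rate (assumed)
        self.min_delta = min_delta  # smallest drop that counts as improvement
        self.best = float("inf")
        self.stale_rounds = 0

    def step(self, val_loss):
        """Record one round's validation loss and return the rate to use next."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.stale_rounds = 0
        else:
            self.stale_rounds += 1
            if self.stale_rounds >= self.patience:
                self.lr = max(self.min_lr, self.lr * self.factor)
                self.stale_rounds = 0
        return self.lr


sched = PlateauSchedule(initial_lr=0.1, factor=0.5, patience=2)
rates = [sched.step(loss) for loss in (1.0, 1.0, 1.0, 0.5)]
```

Here two rounds without improvement trigger one halving, after which the rate holds until the next plateau.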

Cross-validation strategy development revealed interesting patterns in how OSINT data clusters naturally. Standard k-fold validation produced overly optimistic performance estimates because similar organizations often appeared in both training and validation folds. We developed a modified stratified sampling approach that ensures organizational diversity across folds. Organizations are first clustered based on infrastructure characteristics; then folds are constructed to maintain cluster representation. This modification reduced the train-validation performance gap from 12% to 3%, providing more realistic generalization estimates.
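A sketch of the cluster-aware fold construction, assuming each organization has already been assigned an infrastructure cluster label (the clustering step itself is omitted). Each organization lands in exactly one fold, so all of its instances stay together, and the members of each cluster are dealt round-robin across folds so that every fold sees every cluster where sizes allow. The function name and dict-based interface are hypothetical.

```python
from collections import defaultdict


def cluster_stratified_folds(org_cluster, n_folds=5):
    """Assign each organization to one fold, spreading every cluster across folds.

    org_cluster: dict mapping organization id -> infrastructure cluster label.
    Returns a dict mapping organization id -> fold index.
    """
    by_cluster = defaultdict(list)
    for org, cluster in org_cluster.items():
        by_cluster[cluster].append(org)

    fold_of = {}
    offset = 0
    for cluster in sorted(by_cluster, key=str):
        members = sorted(by_cluster[cluster], key=str)
        for j, org in enumerate(members):
            fold_of[org] = (offset + j) % n_folds
        offset += len(members)  # continue the round-robin where this cluster ended
    return fold_of


folds = cluster_stratified_folds(
    {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}, n_folds=3
)
```

Instances then inherit the fold of their organization, which prevents the same organization from appearing on both sides of a train/validation split.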

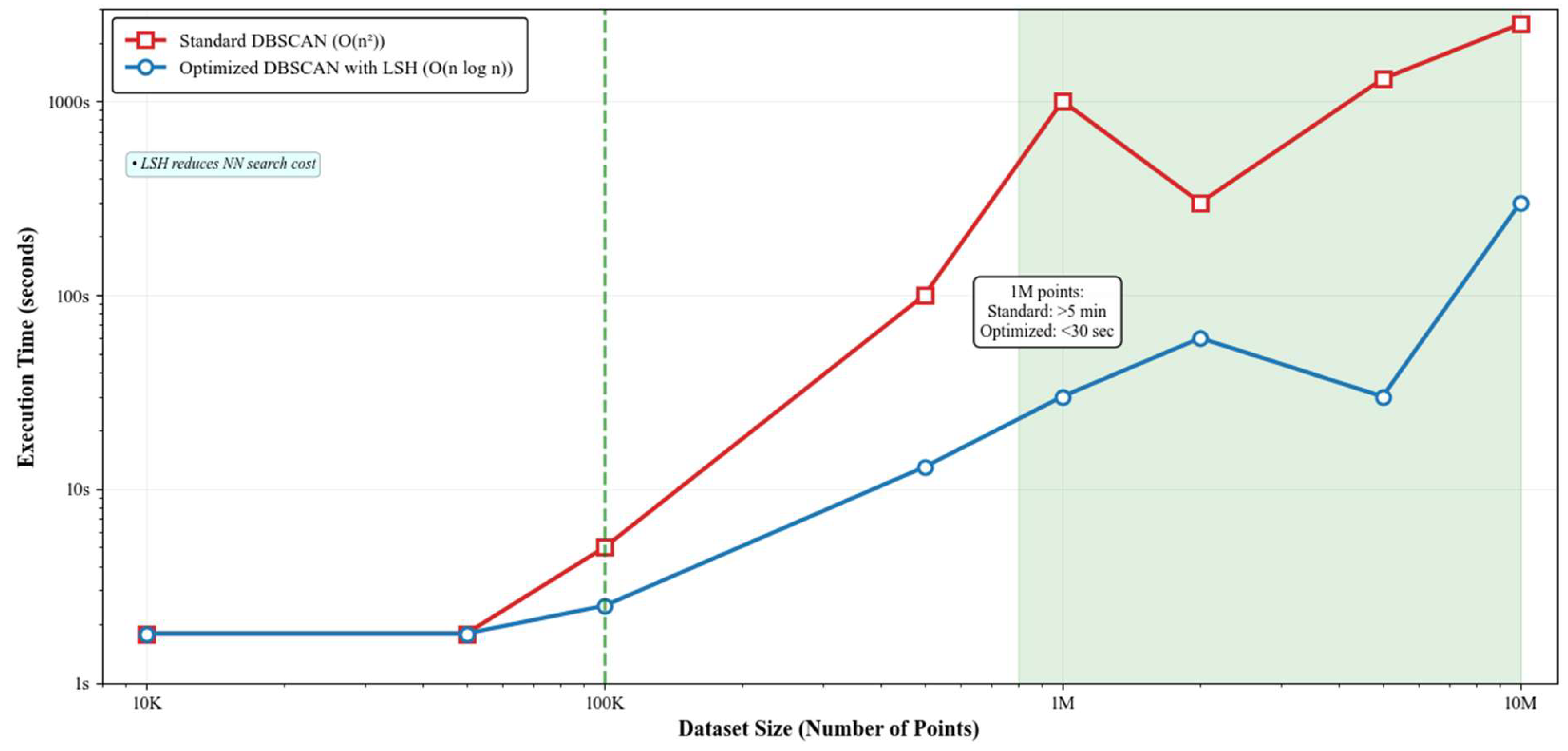

The integration of DBSCAN for anomaly detection addressed a different but complementary challenge. While GBDT excels at classifying known risk patterns, novel attack vectors and unusual configurations often evade supervised learning approaches. DBSCAN’s density-based clustering identifies outliers without requiring labeled anomaly examples. However, parameter selection for DBSCAN in high-dimensional OSINT feature spaces required careful consideration.

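The epsilon parameter, which defines the neighborhood radius for density calculations, proved exceptionally sensitive to feature scaling. We developed an adaptive epsilon selection method based on k-nearest neighbor distances, following the approach suggested by Ester et al. [10] but modified for our feature space characteristics. The algorithm computes k-distances for a sample of points, then selects epsilon at the knee point of the sorted distance curve, as formalized by [10]:

$$\varepsilon = d_k\big(p_{(j^*)}\big), \qquad d_k\big(p_{(1)}\big) \ge d_k\big(p_{(2)}\big) \ge \dots \ge d_k\big(p_{(m)}\big)$$

where $d_k(p)$ is the distance from point $p$ to its $k$-th nearest neighbor, the $m$ sampled points are indexed in descending order of $d_k$, and $j^*$ is the index of the knee. This typically resulted in epsilon values between 0.15 and 0.25 in normalized feature space.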
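The knee selection can be sketched in NumPy as follows. This is an illustration rather than the framework’s code: the knee is located with a simple "farthest point from the chord" heuristic (one of several common choices), distances are Euclidean on already-normalized features, and `k`, `sample_size`, and the function name are assumptions.

```python
import numpy as np


def knee_epsilon(X, k=4, sample_size=2000, seed=0):
    """Pick DBSCAN's epsilon at the knee of the sorted k-distance curve."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    if len(X) > sample_size:                        # work on a random sample
        X = X[rng.choice(len(X), size=sample_size, replace=False)]

    # Pairwise Euclidean distances via |a|^2 + |b|^2 - 2ab (O(m^2) memory).
    sq = (X ** 2).sum(axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    dist = np.sqrt(d2)

    # Column 0 of each sorted row is the point itself (distance ~0),
    # so column k is the distance to the k-th nearest neighbour.
    kdist = np.sort(dist, axis=1)[:, k]
    curve = np.sort(kdist)[::-1]                    # descending k-distance graph

    # Knee: the point farthest from the chord joining the curve's endpoints.
    n = len(curve)
    x = np.arange(n, dtype=float)
    x0, y0, x1, y1 = 0.0, curve[0], float(n - 1), curve[-1]
    gap = np.abs((y1 - y0) * x - (x1 - x0) * curve + x1 * y0 - y1 * x0)
    return float(curve[int(np.argmax(gap))])
```

Because the knee separates the few large k-distances of sparse (noise) points from the flat region of dense clusters, the chosen radius tends to sit just above typical intra-cluster spacing.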

The minimum points parameter for DBSCAN requires balancing cluster stability against outlier sensitivity. Through empirical evaluation across different organization types, we settled on a variable minimum points threshold based on local density estimates.

Table 12 summarizes the parameter configurations and their impact on anomaly detection performance.

Cluster quality assessment employed multiple metrics to ensure meaningful anomaly detection. Beyond traditional silhouette scores, we developed custom metrics that weight boundary point classification accuracy and anomaly detection rates. The most informative metric tracked the percentage of confirmed security incidents appearing as DBSCAN outliers. Achieving 76% incident detection rates while maintaining false positive rates below 15% required extensive parameter refinement.
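A sketch of the two headline quantities, assuming labels follow scikit-learn’s DBSCAN convention (`-1` marks noise) and that the false-positive rate is the fraction of assets without a confirmed incident that are nonetheless flagged as outliers. The text does not define the denominator, so that choice, like the function name, is an assumption.

```python
def outlier_metrics(labels, is_incident):
    """Share of confirmed incidents flagged as DBSCAN noise, and false-positive rate.

    labels: DBSCAN cluster labels, with -1 marking noise (outliers).
    is_incident: booleans, True where the asset had a confirmed security incident.
    """
    flagged = [lab == -1 for lab in labels]
    incidents = sum(is_incident)
    benign = len(is_incident) - incidents
    detected = sum(f and inc for f, inc in zip(flagged, is_incident))
    false_pos = sum(f and not inc for f, inc in zip(flagged, is_incident))
    detection_rate = detected / incidents if incidents else 0.0
    fp_rate = false_pos / benign if benign else 0.0
    return detection_rate, fp_rate


rates = outlier_metrics([-1, 0, -1, 1, -1], [True, True, False, False, False])
```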

The integration between supervised GBDT classification and unsupervised DBSCAN clustering created unexpected synergies. Initially, we ran these algorithms independently and combined results through simple score aggregation. However, this approach missed valuable interaction effects. We discovered that DBSCAN cluster assignments could serve as additional features for GBDT, improving classification accuracy by 4.3%. The enhanced feature vector incorporated cluster membership indicators and distance to nearest cluster centroid:

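$$\tilde{\mathbf{x}}_i = \big[\mathbf{x}_i,\ c_i,\ d_i\big], \qquad d_i = \min_{C} \lVert \mathbf{x}_i - \boldsymbol{\mu}_C \rVert$$

where $c_i$ represents the cluster assignment for instance $i$, and $d_i$ measures the minimum distance to any cluster centroid $\boldsymbol{\mu}_C$ of cluster $C$.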
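A NumPy sketch of this augmentation, assuming centroids are the mean of each DBSCAN cluster’s members (DBSCAN itself does not define centroids), cluster assignment is encoded one-hot, and noise points (`-1`) receive an all-zero membership row. The function name is hypothetical.

```python
import numpy as np


def augment_with_clusters(X, labels):
    """Append one-hot DBSCAN membership and nearest-centroid distance to X."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    clusters = sorted(set(labels.tolist()) - {-1})   # -1 = noise, not a cluster
    if not clusters:
        raise ValueError("DBSCAN found no clusters")
    # Centroid of each cluster = mean of its member points (an assumption).
    centroids = np.array([X[labels == c].mean(axis=0) for c in clusters])

    onehot = np.zeros((len(X), len(clusters)))
    for j, c in enumerate(clusters):
        onehot[labels == c, j] = 1.0                  # noise rows stay all-zero

    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=-1)
    d_min = dists.min(axis=1, keepdims=True)          # distance to nearest centroid
    return np.hstack([X, onehot, d_min])


X = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0], [5.0, 5.0]])
X_aug = augment_with_clusters(X, np.array([0, 0, 1, 1, -1]))
```

The noise point at (5, 5) gets no membership flag but a large nearest-centroid distance, which is exactly the signal the downstream classifier can exploit.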

Implementation details revealed performance bottlenecks that required creative solutions. GBDT training on full feature sets consumed excessive memory, particularly when processing millions of instances. We implemented a column sampling strategy that processes feature subsets in parallel, aggregating predictions through soft voting. This reduced memory requirements by 65% while degrading accuracy by less than 1%. The approach bears similarity to random forest principles but maintains the boosting framework’s sequential refinement advantage.
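The column-sampling scheme reduces to two small pieces: partitioning the feature indices and averaging the per-subset class probabilities. The sketch assumes disjoint random subsets and an unweighted mean for the soft vote; training one GBDT per subset is left to whichever library is in use, and both helper names are hypothetical.

```python
import numpy as np


def column_subsets(n_features, n_subsets, seed=0):
    """Randomly partition feature indices into disjoint, near-equal subsets."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_features), n_subsets)


def soft_vote(prob_list):
    """Average class-probability matrices produced by the per-subset models."""
    return np.mean(np.stack(prob_list), axis=0)


subsets = column_subsets(10, 3)   # one GBDT would be trained per subset (not shown)
combined = soft_vote([np.array([[0.2, 0.8]]), np.array([[0.6, 0.4]])])
```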

DBSCAN’s computational complexity of $O(n^2)$ in worst-case scenarios threatened scalability for large-scale OSINT analysis. We addressed this through approximate nearest neighbor searches using locality-sensitive hashing, reducing complexity to $O(n \log n)$ for typical cases.
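As an illustration of the LSH idea, the sketch below indexes points with p-stable (Gaussian projection) hashes in several tables and answers an epsilon-neighborhood query by computing exact distances only for points that share a bucket with the query in at least one table. It is approximate: true neighbors hashed apart in every table are missed. The table count, hashes per table, and bucket width of 4ε are assumptions, not reported settings.

```python
import numpy as np


class EuclideanLSH:
    """Approximate epsilon-neighbourhood queries via p-stable LSH."""

    def __init__(self, X, eps, n_tables=8, n_hashes=4, bucket_scale=4.0, seed=0):
        rng = np.random.default_rng(seed)
        self.X = np.asarray(X, dtype=float)
        self.eps = eps
        self.w = bucket_scale * eps                    # bucket width (assumed)
        d = self.X.shape[1]
        # Gaussian projections a and offsets b: h(x) = floor((a.x + b) / w).
        self.A = rng.normal(size=(n_tables, n_hashes, d))
        self.B = rng.uniform(0.0, self.w, size=(n_tables, n_hashes))
        self.point_keys = []                           # per table: key of each point
        self.tables = []                               # per table: key -> point ids
        for t in range(n_tables):
            keys = self._keys(self.X, t)
            table = {}
            for i, key in enumerate(keys):
                table.setdefault(key, []).append(i)
            self.point_keys.append(keys)
            self.tables.append(table)

    def _keys(self, P, t):
        codes = np.floor((P @ self.A[t].T + self.B[t]) / self.w).astype(np.int64)
        return [tuple(row.tolist()) for row in codes]

    def region_query(self, i):
        """Ids within eps of point i (including i), among bucket collisions."""
        candidates = set()
        for t, table in enumerate(self.tables):
            candidates.update(table[self.point_keys[t][i]])
        idx = np.fromiter(candidates, dtype=np.int64)
        dist = np.linalg.norm(self.X[idx] - self.X[i], axis=1)
        return sorted(idx[dist <= self.eps].tolist())


X = np.array([[0.0, 0.0], [0.0, 0.0], [5.0, 5.0]])
index = EuclideanLSH(X, eps=0.1)
neighbours = index.region_query(0)
```

A DBSCAN loop would call `region_query` in place of a full scan; each query then costs roughly the size of the colliding buckets instead of n.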

Figure 11 demonstrates the scalability improvements achieved through these optimizations.

Model persistence and versioning became critical as the system matured. Each trained model includes comprehensive metadata documenting training data characteristics, hyperparameter configurations, and performance metrics. This versioning approach enables reproducible experiments and facilitates debugging when model performance degrades unexpectedly. The metadata schema captures temporal aspects of training data, allowing us to correlate model accuracy with the age of training instances.

3.5. Risk Scoring Framework

The development of a comprehensive risk scoring framework represented one of the most challenging aspects of our automated OSINT system. Traditional approaches like CVSS provide valuable baselines for individual vulnerabilities, yet they fail to capture the complex interplay between multiple risk factors, organizational context, and temporal dynamics that characterize real-world security postures. Our framework attempts to address these limitations through a composite scoring methodology that integrates machine learning outputs with contextual factors and temporal decay models.

The composite risk calculation methodology evolved through several iterations as we discovered limitations in simpler approaches. Initially, we attempted linear combinations of individual risk factors, but this proved inadequate for capturing non-linear interactions between vulnerabilities. The current framework employs a hierarchical aggregation model that processes risk indicators at multiple levels of abstraction. At the foundation, individual vulnerability scores undergo contextual adjustment based on asset criticality and exposure levels. These adjusted scores then feed into higher-level aggregation functions that account for vulnerability chaining and systemic risks.

The mathematical formulation for our composite risk score extends the work of Caralli et al. [65] on asset criticality analysis, incorporating additional factors specific to OSINT-derived intelligence:

$$R = \alpha \sum_{i} w_i \, V_i \, e^{-\lambda_i t_i} + \beta \sum_{i<j} I_{ij} + \gamma \, M$$

where $V_i$ represents individual vulnerability scores derived from CVSS base metrics [32], $w_i$ denotes asset-specific criticality weights, $\lambda_i$ controls temporal decay rates based on the research by Bilge and Dumitras [40], $t_i$ is the age of vulnerability $i$, $I_{ij}$ indicates interaction effects between correlated vulnerabilities, and $M$ represents the aggregated machine learning risk assessment. The parameters $\alpha$, $\beta$, and $\gamma$ weight the relative contributions of direct vulnerabilities, interaction effects, and ML-derived insights, respectively.
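A direct transcription into code, assuming the composite takes the additive form R = α Σᵢ wᵢ Vᵢ e^(−λᵢ tᵢ) + β Σ_{i<j} I_ij + γ M, with one criticality weight, decay rate, and age per vulnerability. The function name, the tuple layout, and the default α, β, γ values are placeholders, not calibrated settings.

```python
import math


def composite_risk(vulns, interactions, ml_score, alpha=0.5, beta=0.2, gamma=0.3):
    """Composite risk = alpha*direct + beta*interaction + gamma*ML.

    vulns: list of (cvss_score, criticality_weight, decay_rate, age) tuples,
           with decay_rate and age in matching time units (e.g. per day, days).
    interactions: dict mapping a vulnerability pair (i, j) to its interaction effect.
    ml_score: aggregated ML risk assessment.
    alpha, beta, gamma: placeholder weights, not calibrated values.
    """
    direct = sum(w * v * math.exp(-lam * t) for v, w, lam, t in vulns)
    interaction = sum(interactions.values())
    return alpha * direct + beta * interaction + gamma * ml_score


score = composite_risk(
    vulns=[(9.8, 1.0, 0.0, 10), (5.0, 0.5, 0.01, 0)],
    interactions={(0, 1): 2.0},
    ml_score=8.0,
)
```

With these inputs the direct term is 9.8 + 2.5 = 12.3, giving 0.5·12.3 + 0.2·2.0 + 0.3·8.0 = 8.95.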

Asset criticality weights proved particularly challenging to calibrate. Simple binary classifications of critical versus non-critical assets failed to capture the nuanced importance of different infrastructure components. We developed a multi-factor criticality assessment that considers data sensitivity, service dependencies, and business impact. The weighting function incorporates both static attributes and dynamic usage patterns:

$$w_a = w_a^{(0)} + \sum_{m} \theta_m \, f_m(a)$$

where $w_a^{(0)}$ represents a baseline weight for asset $a$ derived from asset classification, and $f_m$ denotes various factor functions measuring attributes like network centrality, data flow volume, and service criticality. The parameter $\theta_m$ controls the relative influence of each factor.
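Assuming the weighting function is additive, w_a = w_a⁽⁰⁾ + Σ_m θ_m f_m(a), it reduces to a few lines. The factor names, their scores, and the θ values below are illustrative, and the function name is hypothetical.

```python
def criticality_weight(base_weight, factor_scores, thetas):
    """Baseline weight plus the theta-weighted sum of factor scores for one asset."""
    if factor_scores.keys() != thetas.keys():
        raise ValueError("every factor needs exactly one theta")
    return base_weight + sum(thetas[m] * factor_scores[m] for m in factor_scores)


w = criticality_weight(
    base_weight=0.5,
    factor_scores={"centrality": 0.8, "data_flow": 0.2, "service": 1.0},
    thetas={"centrality": 0.3, "data_flow": 0.1, "service": 0.4},
)
```

Static attributes would enter through the baseline and slowly changing factors, while dynamic usage patterns would update the factor scores between scoring runs.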

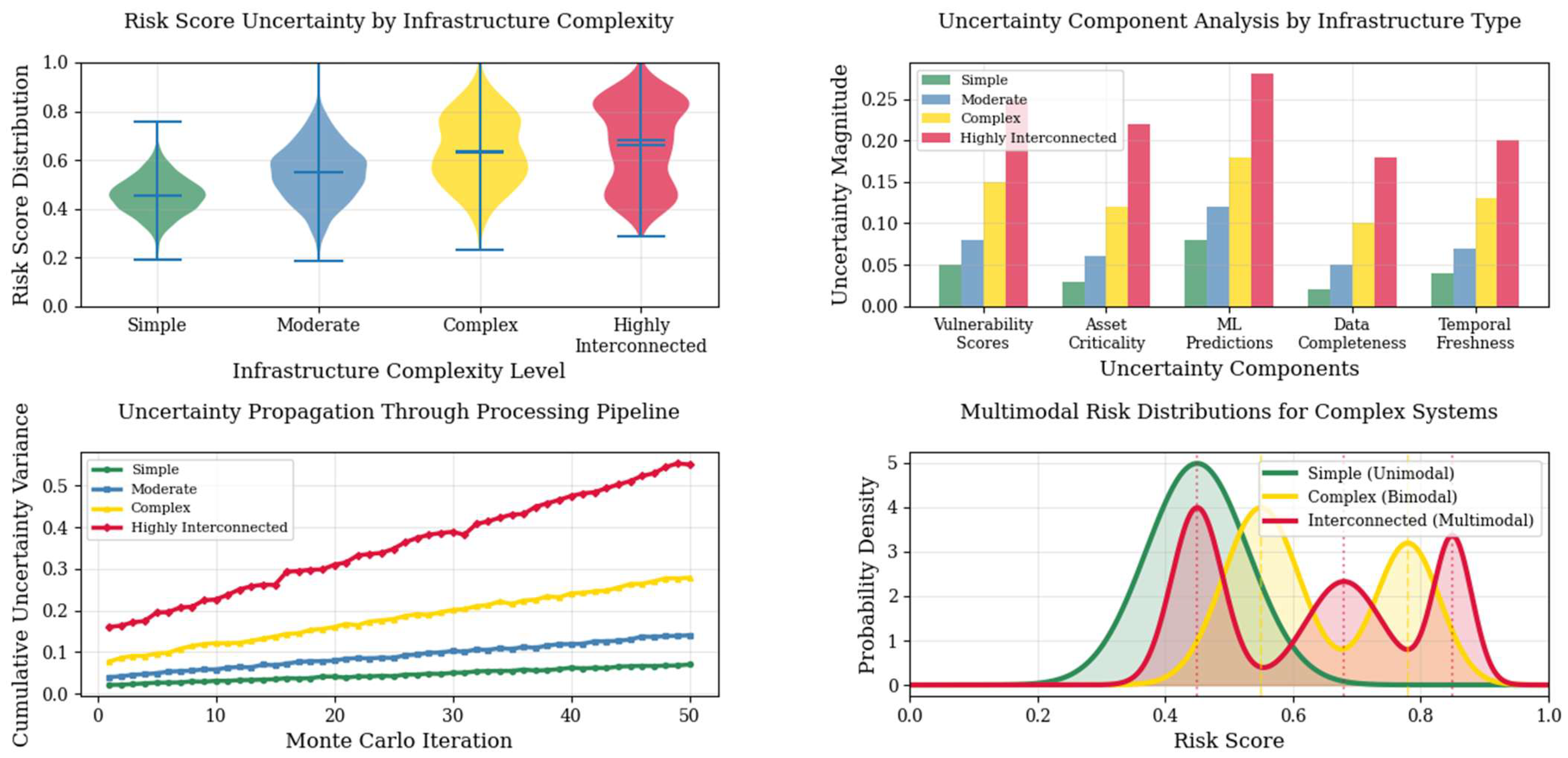

The integration of machine learning outputs into the risk scoring framework required careful consideration of how to combine probabilistic assessments with deterministic vulnerability scores. GBDT classifiers produce probability distributions across risk categories, while DBSCAN provides binary anomaly indicators with associated confidence measures. Rather than simply using point estimates, we preserve the full probability distributions to capture uncertainty in risk assessments.
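A sketch of how a class-probability vector can yield a risk estimate together with an uncertainty measure instead of a single point estimate: map each of the five severity categories to a numeric level (the 1 to 5 mapping is an assumption) and compute the mean and variance of that level under the predicted distribution. The function name is hypothetical.

```python
import numpy as np

# Hypothetical numeric level for each of the five severity categories.
SEVERITY_LEVELS = np.array([1.0, 2.0, 3.0, 4.0, 5.0])


def risk_moments(class_probs, levels=SEVERITY_LEVELS):
    """Mean and variance of the severity level under predicted probabilities."""
    p = np.asarray(class_probs, dtype=float)
    p = p / p.sum(axis=-1, keepdims=True)              # guard against rounding drift
    mean = p @ levels
    var = np.maximum(p @ levels ** 2 - mean ** 2, 0.0)  # clip tiny negatives
    return mean, var


confident = risk_moments([0.0, 0.0, 1.0, 0.0, 0.0])
split = risk_moments([0.5, 0.0, 0.0, 0.0, 0.5])
```

Both inputs have the same mean severity (3), but the split prediction has variance 4 versus 0, which is precisely the information a point estimate discards.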

Table 13 illustrates how different ML outputs contribute to the final risk score calculation across various asset types.

The variance metrics reveal that cloud services and IoT devices exhibit higher uncertainty in risk assessments, reflecting the rapidly evolving threat landscapes for these technologies. This uncertainty propagates through to confidence scoring mechanisms.

Confidence scoring emerged as a critical requirement for operational deployment. Security teams need to understand not just the risk level but also the reliability of that assessment. Our confidence scoring approach considers multiple factors, including data completeness, temporal freshness, and model certainty. The confidence calculation follows a multiplicative model that penalizes any weak link in the assessment chain:

$$C = \prod_{k} c_k^{\,\omega_k}$$

where $c_k \in [0, 1]$ represents individual confidence components, and $\omega_k$ controls their relative importance. Components include data coverage completeness, temporal data freshness, ML model confidence, and corroboration across multiple data sources.
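Assuming the multiplicative model takes the weighted-geometric form C = Πₖ cₖ^ωₖ with every component in [0, 1], the weak-link behavior is easy to see in code: one poorly supported component drags the whole score down regardless of the others. Component names, values, and weights are illustrative, and the function name is hypothetical.

```python
import math


def assessment_confidence(components, weights):
    """Weighted geometric confidence: product of c_k ** omega_k."""
    if components.keys() != weights.keys():
        raise ValueError("every component needs exactly one weight")
    for name, c in components.items():
        if not 0.0 <= c <= 1.0:
            raise ValueError(f"component {name!r} must lie in [0, 1]")
    return math.prod(c ** weights[name] for name, c in components.items())


strong = {"coverage": 0.9, "freshness": 0.8, "model": 0.95, "corroboration": 0.9}
weak_link = dict(strong, corroboration=0.1)   # one poorly corroborated source
ones = dict.fromkeys(strong, 1.0)
c_strong = assessment_confidence(strong, ones)
c_weak = assessment_confidence(weak_link, ones)
```

Dropping a single component from 0.9 to 0.1 cuts overall confidence ninefold (0.6156 to 0.0684), whereas an arithmetic mean would fall by only a fifth.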

The implementation revealed interesting patterns in confidence degradation. Network topology data maintained high confidence levels due to its relatively static nature, while behavioral indicators showed rapid confidence decay.

Figure 12 illustrates the distribution of confidence scores across various risk levels and data source combinations.

Temporal decay functions proved essential for maintaining accurate risk assessments over time. Static vulnerability scores quickly become outdated as patches are released, exploits are developed, or infrastructure configurations change. Our temporal decay model adapts exponential decay rates based on multiple factors, including vulnerability type, exploit availability, and historical patching patterns.

The basic temporal decay follows an exponential model with adaptive parameters:

$$R(t) = R_0 \, e^{-\lambda t} + H(t)$$

where $R_0$ is the initial risk score, $\lambda$ represents the decay rate, and $H(t)$ captures discrete events that reset or modify the decay trajectory. However, we discovered that simple exponential decay inadequately modeled real-world scenarios. Vulnerability scores sometimes increase over time as new exploits emerge or dependencies are discovered. This led to a more sophisticated piecewise decay function:

$$R(t) = \begin{cases} R_0 \, e^{-\lambda_1 t}, & t < t_e \\ \rho \, R_0 \, e^{-\lambda_1 t_e} \, e^{-\lambda_2 (t - t_e)}, & t \ge t_e \end{cases}$$

where $t_e$ marks the public disclosure of a working exploit, $\rho$ represents the risk amplification factor, and $\lambda_1 < \lambda_2$ reflects accelerated decay after exploit availability.

The calibration of decay parameters required extensive analysis of historical vulnerability lifecycles. We analyzed 50,000 CVEs from the National Vulnerability Database [33], tracking their progression from disclosure through exploitation to remediation. This analysis revealed distinct patterns based on vulnerability categories. Memory corruption vulnerabilities showed rapid initial decay as patches became available, followed by potential amplification if exploit kits incorporated them. Configuration vulnerabilities exhibited slower, more linear decay patterns.

Table 14 summarizes the calibrated temporal decay parameters for major vulnerability categories.

The mean lifetime represents the period until risk scores decay to 10% of initial values, assuming no exploit publication. Cryptographic vulnerabilities show the longest lifetimes, reflecting the complexity of remediation when encryption algorithms require replacement.
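The decay model can be sketched as below, assuming the piecewise form R(t) = R₀ e^(−λ₁ t) before the exploit time t_e and ρ R₀ e^(−λ₁ t_e) e^(−λ₂ (t − t_e)) afterwards, with the discrete-event term omitted. Under a purely exponential pre-exploit branch, the 10% lifetime defined above is ln(10)/λ₁. Function names and parameter values are illustrative, not the calibrated values of Table 14.

```python
import math


def decayed_risk(r0, t, lam1, lam2, rho, t_exploit=None):
    """Piecewise exponential risk decay with amplification at exploit time."""
    if t_exploit is None or t < t_exploit:
        return r0 * math.exp(-lam1 * t)               # pre-exploit decay
    at_exploit = r0 * math.exp(-lam1 * t_exploit)    # score when the exploit appears
    return rho * at_exploit * math.exp(-lam2 * (t - t_exploit))


def lifetime_to_fraction(lam1, fraction=0.1):
    """Time for the pre-exploit branch to fall to `fraction` of its start."""
    return math.log(1.0 / fraction) / lam1


# Illustrative parameters (per-day rates), not the calibrated Table 14 values.
T = lifetime_to_fraction(0.01)                        # about 230 days
after_exploit = decayed_risk(10.0, 30, 0.01, 0.05, 1.5, t_exploit=30)
```

At the exploit time the score jumps by the factor ρ and then decays at the faster rate λ₂, so a category with long mean lifetime corresponds to a small λ₁.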

Integration challenges arose when combining static vulnerability assessments with dynamic ML outputs. Machine learning models produce time-varying risk assessments that do not follow predictable decay patterns. We addressed this through a hybrid approach where ML assessments modulate the decay rates of traditional scores. When GBDT classifications indicate increasing risk despite temporal decay, the system adjusts decay parameters to reflect this intelligence:

$$\lambda' = \lambda \, e^{-\eta \, \Delta M}$$

where $\Delta M$ represents the change in ML risk assessment, and $\eta$ controls the influence of