2. Materials and Methods

This section presents the methodological framework for evaluating rubric-based student assessment with a large language model before and after fine-tuning. We describe data collection and annotation, prompt structure, the mistral-lora fine-tuning procedure, the scoring protocol, and the computational infrastructure (including Neo4j storage of responses and results). Throughout, we refer to the original instruction-tuned model as mistral-base and to the LoRA-adapted fine-tuned variant as mistral-lora.

2.1. Data Collection and Annotation

The dataset was derived from authentic university-level computer science examinations. Multiple versions of real exam sheets were used to extract open-ended and multiple-choice questions relevant to fundamental topics such as programming, artificial intelligence, and data-driven reasoning. We selected 20 open-ended questions and categorized them into two rubric types—10 technical and 10 argumentative—based on the task’s cognitive demands.

Each response was annotated according to a predefined rubric framework that included distinct criteria for answers and explanations. Answers were evaluated using a technical or an argumentative rubric, each comprising four dimensions aligned with the task’s cognitive demands. In parallel, each explanation was independently assessed using a dedicated three-criterion rubric focused on clarity, accuracy, and usefulness.

Table 2 summarizes the evaluation criteria across rubric types, reflecting the dual-layer annotation protocol.

Among these, dialecticality is the most conceptually nuanced and warrants further clarification. It refers to the respondent’s capacity to engage with contrasting viewpoints, anticipate counterarguments, or incorporate multiple perspectives in a coherent argumentative structure. Unlike coherence or originality, which focus on internal flow and novelty, dialecticality emphasizes the ability to reason within a dialogic framework, acknowledging alternative positions and constructing responses that reflect critical, debate-aware thinking. In educational settings, this dimension is essential for evaluating higher-order reasoning and argumentative maturity.

To enhance reproducibility, dialecticality was judged using four descriptor bands: (a) minimal engagement—a single asserted position with no credible alternatives; (b) emerging contrast—acknowledges at least one salient counterposition with brief engagement; (c) structured contrast—systematically contrasts multiple plausible positions and addresses counter-arguments with reasons or evidence; and (d) integrative synthesis—articulates a conditional resolution that integrates opposing claims, explicitly stating trade-offs, scope conditions, and residual uncertainty.

In the argumentative rubric, each criterion captures a distinct dimension of reasoning. Clarity evaluates the linguistic precision and readability of the response. Coherence measures logical progression and internal consistency. Originality refers to the presence of novel or non-trivial insights that go beyond rote or generic formulations. Dialecticality, as previously explained, assesses the respondent’s ability to engage critically with opposing perspectives or alternative viewpoints.

In the technical rubric, the criteria are oriented toward factual accuracy and domain-specific clarity. Accuracy measures the correctness of the content with respect to the question and underlying concepts. Clarity assesses how clearly the explanation conveys technical information without ambiguity. Completeness evaluates whether the response covers all essential aspects of the task. Terminology focuses on the appropriate and precise use of technical vocabulary relevant to the domain.

In the explanation rubric, each criterion targets the communicative and instructional value of the model’s justification. Clarity assesses whether the explanation is articulated in a concise and comprehensible manner. Accuracy evaluates the correctness of the explanation in relation to both the answer and the underlying concepts. Usefulness captures the pedagogical value of the explanation—its ability to support understanding, reinforce key ideas, or offer relevant context for the student.

Representative answers were generated by the two model variants, mistral-base and mistral-lora. The answers were intentionally varied in quality, length, and structure to reflect a realistic range of academic performance, including both strong and weaker responses. Each entry was independently reviewed to ensure clarity, coherence, and compatibility with the rubric criteria. The finalized dataset was serialized in JavaScript Object Notation Lines (JSONL) format, with each record containing the question, rubric type, input prompt, constructed response, and corresponding rubric labels. This annotated corpus was used to fine-tune mistral-lora and to enable a comparative evaluation against mistral-base (before vs. after adaptation). This base–vs–fine-tuned contrast constitutes a prompt-only baseline under identical prompts and decoding settings.
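As an illustration of the record layout described above, the following minimal sketch serializes one entry to JSONL and reads it back. The field names (`question`, `rubric_type`, `prompt`, `response`, `rubric_labels`), the sample question, and the numeric label values are assumptions made for this example; the text lists the record contents but not the actual keys.

```python
import json

# Hypothetical record: key names and values are illustrative assumptions,
# chosen to mirror the contents listed in the text.
record = {
    "question": "Explain the difference between a stack and a queue.",
    "rubric_type": "technical",  # "technical" or "argumentative"
    "prompt": "### Prompt:\nExplain the difference between a stack and a queue.\n### Response:",
    "response": "A stack removes the most recently added item first; a queue removes the oldest.",
    "rubric_labels": {"accuracy": 9, "clarity": 8, "completeness": 8, "terminology": 9},
}


def to_jsonl(records):
    """Serialize records as JSON Lines: exactly one JSON object per line."""
    return "\n".join(json.dumps(r) for r in records)


def from_jsonl(text):
    """Parse JSON Lines text back into a list of dictionaries."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


jsonl_text = to_jsonl([record])
restored = from_jsonl(jsonl_text)
```

Newlines inside the prompt are escaped by `json.dumps`, so each record stays on a single physical line, which is what makes the format safe to stream line by line.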

2.2. Fine-Tuning Procedure

The fine-tuning process aimed to adapt a general-purpose LLM to the specific linguistic and conceptual patterns characteristic of university-level computer science instruction. LLM fine-tuning has been shown to significantly improve model performance on domain-specific evaluation tasks when combined with rubric-aligned supervision [18]. We selected Mistral-7B-Instruct-v0.2 as the base model due to its instruction-tuned architecture and compatibility with parameter-efficient adaptation methods [19].

To optimize for resource-constrained training environments and maintain full control over the model’s pedagogical behavior, we used Low-Rank Adaptation (LoRA) [20]. LoRA trains small low-rank update matrices attached to selected projection layers while keeping the mistral-base parameters frozen, allowing efficient training with minimal computational overhead. Specifically, we applied LoRA to the q_proj and v_proj modules within the transformer architecture, using a rank of 8, lora_alpha = 16, and lora_dropout = 0.1. This configuration was chosen to maximize parameter efficiency while ensuring stable convergence. The resulting adapter layers were merged into the base model using a standard merge-and-unload procedure, yielding a standalone, fully integrated fine-tuned model.
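To give a sense of scale for this configuration, the sketch below records the reported hyperparameters in a dictionary whose keys mirror keyword arguments of `peft.LoraConfig`, and estimates the number of trainable adapter parameters. The projection shapes and layer count are assumptions taken from the public Mistral-7B configuration, not from the paper, so the resulting count is an estimate rather than a reported figure.

```python
# Reported LoRA hyperparameters; keys mirror peft.LoraConfig keyword arguments.
lora_kwargs = {
    "r": 8,
    "lora_alpha": 16,
    "lora_dropout": 0.1,
    "target_modules": ["q_proj", "v_proj"],
}

# ASSUMPTION: (in_features, out_features) per projection and decoder depth,
# based on the public Mistral-7B configuration (grouped-query attention).
PROJ_SHAPES = {"q_proj": (4096, 4096), "v_proj": (4096, 1024)}
NUM_LAYERS = 32


def lora_param_count(rank, shapes, targets, num_layers):
    """Count adapter weights: each target adds A (rank x d_in) and B (d_out x rank)."""
    per_layer = sum(rank * (shapes[t][0] + shapes[t][1]) for t in targets)
    return per_layer * num_layers


trainable = lora_param_count(
    lora_kwargs["r"], PROJ_SHAPES, lora_kwargs["target_modules"], NUM_LAYERS
)
# Scaling factor applied to the low-rank update (lora_alpha / r).
scaling = lora_kwargs["lora_alpha"] / lora_kwargs["r"]
```

Under these assumptions the adapters add roughly 3.4 million trainable weights, a small fraction of the roughly 7 billion frozen base parameters.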

The training dataset consisted of 643 prompt–response pairs derived from university-level course materials and structured according to a two-tier rubric (technical and argumentative). Each instance included the input prompt, the instructor-validated answer, and associated rubric metadata, all serialized in JSONL format. All examples were manually curated to ensure reproducible preprocessing. This dataset served as the input for supervised fine-tuning.

Training was configured for up to 10 epochs and stopped after 6 epochs due to early stopping, using float32 precision and a maximum sequence length of 1024 tokens. Early stopping monitored eval_loss with a patience of 2 epochs. The pipeline was optimized for CPU-only training with a per-device batch size of 1 and gradient accumulation of 4 steps (effective batch size 4), enabling stable convergence under constrained memory and computing resources.
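The stopping rule described above can be sketched in a few lines. This is a simplified stand-in for whatever callback the training framework provides, and the loss curve is hypothetical, chosen only so that the rule halts at epoch 6 as reported.

```python
def early_stopping_epoch(eval_losses, patience=2, max_epochs=10):
    """Return the 1-based epoch at which training stops.

    Training halts once eval_loss has failed to improve on its best value
    for `patience` consecutive epochs, or when `max_epochs` is reached.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(eval_losses[:max_epochs], start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return min(len(eval_losses), max_epochs)


# HYPOTHETICAL eval_loss values per epoch (not taken from the paper).
hypothetical_losses = [1.90, 1.42, 1.21, 1.15, 1.17, 1.16, 1.18, 1.20, 1.22, 1.25]
stop_epoch = early_stopping_epoch(hypothetical_losses, patience=2)

# Effective batch size = per-device batch size x gradient accumulation steps.
effective_batch_size = 1 * 4
```

With this curve the best loss occurs at epoch 4, epochs 5 and 6 bring no improvement, and training stops at epoch 6.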

2.3. Prompt Structure and Evaluation Pipeline

The response generation pipeline was designed to enable a direct comparison between mistral-base and mistral-lora. The objective was to evaluate the effect of fine-tuning on the model’s ability to generate accurate and pedagogically appropriate answers to real exam questions.

Each question was converted into a standardized prompt with explicit instructions and markup. The prompt began with the header “### Prompt:” followed by the exam question, and ended with “### Response:” as a signal for model output. This structure enforced uniformity across inputs and eliminated confounding variability unrelated to model parameters.

The same prompt was submitted independently to both mistral-base and mistral-lora. Each LLM processed the input in isolation, generating a distinct output under identical prompting conditions. All generations used deterministic decoding (do_sample = False).
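A minimal sketch of the prompt template follows. The two headers are taken from the text; the exact whitespace between header, question, and response marker is an assumption, and `build_prompt`, `extract_response`, and `GENERATION_KWARGS` are hypothetical names used for illustration.

```python
PROMPT_HEADER = "### Prompt:"
RESPONSE_HEADER = "### Response:"

# Deterministic decoding as reported; intended to be passed to the
# generation call of whichever inference library is used.
GENERATION_KWARGS = {"do_sample": False}


def build_prompt(question: str) -> str:
    """Wrap an exam question in the standardized template (whitespace is assumed)."""
    return f"{PROMPT_HEADER}\n{question.strip()}\n{RESPONSE_HEADER}"


def extract_response(generated_text: str) -> str:
    """Return the text that follows the response marker in a model output."""
    return generated_text.split(RESPONSE_HEADER, 1)[-1].strip()


prompt = build_prompt("  What is overfitting?  ")
answer = extract_response(prompt + " Overfitting occurs when a model memorizes noise.")
```

Because both models receive the string produced by the same function, any difference in output can be attributed to the model weights rather than to prompt formatting.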

All responses were stored in a Neo4j graph database, along with metadata such as the original question, rubric type, full prompt, model version, and timestamp. Each response node was linked to its corresponding prompt, model, and rubric category, enabling fine-grained analysis of evaluation behavior. This relational structure allowed for systematic queries and integration with downstream tools for automated scoring, rubric validation, and feedback comparison [21].

The pipeline consisted of four reproducible stages:

1. Selection of representative exam questions from real student assessments;

2. Construction of standardized prompt templates embedding rubric-specific instructions;

3. Independent generation of responses by both the pre-trained and fine-tuned LLM;

4. Storage of model outputs and metadata in a structured Neo4j graph database.

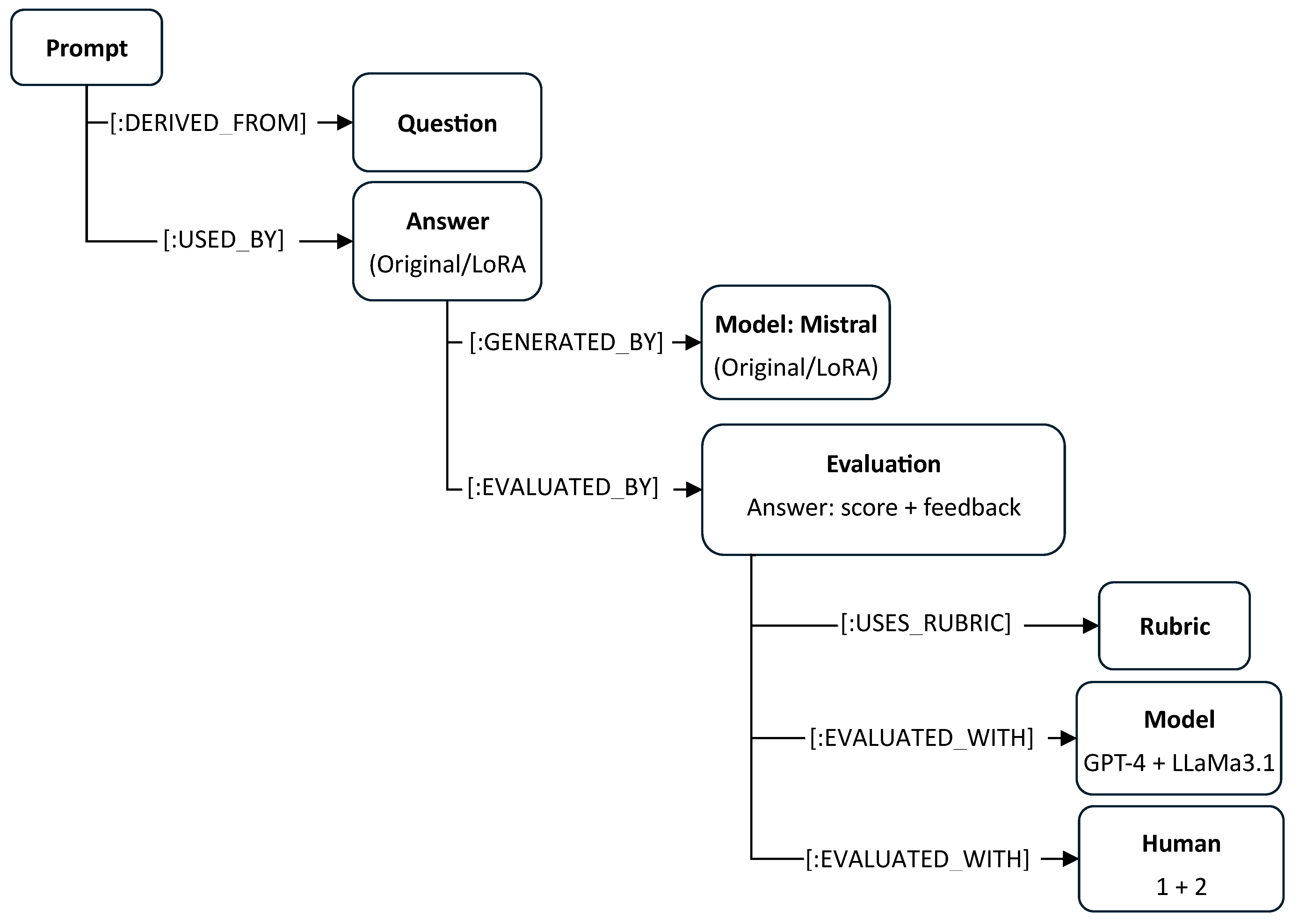

This process is illustrated in Figure 1. The pipeline begins with the manual curation of open-ended questions, which are encoded into prompt–response templates. Each prompt is submitted in parallel to both model variants. The generated responses, along with the original prompts, are then serialized and stored in a Neo4j graph schema. Nodes represent entities such as the model, the prompt, and the generated response, while edges define their relationships, such as “produced_by” or “responds_to”. This graph-based architecture enables traceable evaluation flows, supports comparative analysis of model behavior, and integrates seamlessly with rubric-based scoring tools downstream.

This architecture provides the foundation for a structured comparison between the responses generated by each model, as detailed in the next section.

2.4. Scoring and Comparison Protocol

To assess the effect of fine-tuning on rubric-based evaluation quality, we implemented a structured comparison protocol between mistral-base and mistral-lora. The evaluation focused on accuracy, clarity, and rubric alignment of generated responses to real exam questions. Each question was processed identically by both models, and the resulting answers were scored by two human raters and two LLM judges (GPT-4 and Llama-3.1).

The evaluation process was performed using two LLM judges and two human raters, who received rubric-specific prompts detailing the four criteria associated with the corresponding rubric type (technical or argumentative), as defined in Table 2. For each criterion, each rater generated both a numerical score from 1–10 and a concise textual justification. Each response was scored independently by every rater to eliminate cross-contamination and ensure that judgments reflected only the content of the answer.
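One way to enforce this protocol programmatically is to validate every evaluation before it is stored. The sketch below is illustrative: the criterion names follow the rubric descriptions in Section 2.1, while `CriterionScore` and `validate_evaluation` are hypothetical names, not part of the authors' pipeline.

```python
from dataclasses import dataclass

# Criteria per rubric type, as described in Section 2.1.
RUBRICS = {
    "technical": ("accuracy", "clarity", "completeness", "terminology"),
    "argumentative": ("clarity", "coherence", "originality", "dialecticality"),
}


@dataclass(frozen=True)
class CriterionScore:
    criterion: str
    score: float  # expected in the closed range 1..10
    justification: str


def validate_evaluation(rubric_type, scores):
    """Check that one rater's evaluation covers each criterion exactly once,
    with an in-range score and a non-empty justification."""
    expected = set(RUBRICS[rubric_type])
    seen = {s.criterion for s in scores}
    if seen != expected or len(scores) != len(expected):
        raise ValueError(f"criteria must be exactly {sorted(expected)}")
    for s in scores:
        if not 1 <= s.score <= 10:
            raise ValueError(f"{s.criterion}: score {s.score} outside 1-10")
        if not s.justification.strip():
            raise ValueError(f"{s.criterion}: justification is empty")
    return True


evaluation = [
    CriterionScore("accuracy", 9, "Definitions are correct."),
    CriterionScore("clarity", 8, "Readable, minor ambiguity."),
    CriterionScore("completeness", 7, "Omits one edge case."),
    CriterionScore("terminology", 9, "Precise vocabulary."),
]
is_valid = validate_evaluation("technical", evaluation)
```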

The use of multiple evaluators was a core design decision that enabled scalable and consistent assessment across large response sets. All scores, feedback, and metadata from all raters were stored in a Neo4j graph database. Each record preserved the link between the evaluated response, its originating model, the associated rubric, the rater, and the question. This architecture allowed for transparent comparison of model behavior across aligned prompts and cross-rater analyses, and facilitated multi-level querying of scoring dynamics [17].

Quantitative analysis included computation of absolute score differences for each rubric criterion, mean score comparison across models, and measurement of inter-model score variance. Additionally, correlation coefficients were calculated to capture the consistency of scoring trends across rubric dimensions. These metrics provided an objective view of how fine-tuning influenced numerical evaluation outcomes.
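The quantities listed above can be computed per criterion from two score lists aligned by question. The sketch below reflects one possible reading of the text: "inter-model score variance" is interpreted as the sample variance of the paired differences, and the correlation is Pearson's r between the two models' scores. Both interpretations, and the sample scores, are assumptions.

```python
from math import sqrt
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation; returns NaN when either list has zero variance."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return float("nan")
    return cov / (sx * sy)


def compare_criterion(base, lora):
    """Summarize paired scores (same question order) for one rubric criterion."""
    diffs = [lo - ba for ba, lo in zip(base, lora)]
    return {
        "mean_base": mean(base),
        "mean_lora": mean(lora),
        "mean_abs_diff": mean(abs(d) for d in diffs),
        "diff_variance": variance(diffs),  # ASSUMED reading of inter-model variance
        "pearson_r": pearson(base, lora),
    }


# HYPOTHETICAL accuracy scores for four questions.
summary = compare_criterion(base=[7, 8, 6, 9], lora=[8, 9, 8, 9])
```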

A complementary qualitative analysis focused on the semantic characteristics of the feedback. We reviewed a stratified subset of evaluations to examine tone, rubric specificity, coherence, and instructional value. Differences in language style and rubric fidelity between the two model versions revealed how fine-tuning affected not only scoring but also the pedagogical relevance of generated feedback.

In addition to scoring the main answers, we introduced a second evaluation layer dedicated to assessing the explanation component generated by the model. Each explanation was independently scored by the same set of raters using a dedicated rubric with three criteria: clarity, accuracy, and usefulness. These dimensions were selected to reflect both the linguistic quality and the instructional relevance of the justification provided. The evaluator assigned a numerical score from 1–10 for each criterion, accompanied by a brief summary comment. A composite explanation score was calculated as the arithmetic mean of the three scores. This dual-layer protocol enabled a more granular analysis of model behavior, capturing not only the correctness of the answer but also the pedagogical clarity of its rationale. All explanation-level evaluations were stored in the graph database alongside their corresponding answer evaluations, supporting structured comparisons across both output components.
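The composite explanation score is a plain arithmetic mean of the three criterion scores, as in the following sketch. The function name and the sample scores are illustrative.

```python
from statistics import mean

EXPLANATION_CRITERIA = ("clarity", "accuracy", "usefulness")


def composite_explanation_score(scores):
    """Arithmetic mean of the three explanation criteria (each scored 1-10)."""
    missing = [c for c in EXPLANATION_CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    return mean(scores[c] for c in EXPLANATION_CRITERIA)


# HYPOTHETICAL scores from a single rater for one explanation.
composite = composite_explanation_score({"clarity": 8, "accuracy": 9, "usefulness": 7})
```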

For Dialecticality, scoring was calibrated using descriptor bands embedded in the evaluation rubric and in the LLM prompts, ensuring consistent thresholding and reproducible application of the criterion.

2.5. Infrastructure and Deployment

The evaluation pipeline was implemented entirely in Python 3.11 and executed on a CPU-based server equipped with dual Intel Xeon Gold 6252N processors (Intel Corporation, Santa Clara, CA, USA) at 2.30 GHz and 384 GB RAM. The system performed all stages (LoRA-based fine-tuning, inference, rubric-based evaluation, and structured graph storage in Neo4j) using multi-core CPU processing and optimized memory execution. Transformer-based models such as Mistral have been shown to operate efficiently on CPU infrastructure when combined with parallelization techniques [22].

Model training and prompt handling relied on the Hugging Face transformers [23] and datasets libraries, while adapter-based fine-tuning was performed using the Parameter-Efficient Fine-Tuning (PEFT) library [24]. Fine-tuning was configured for up to 10 epochs and stopped after 6 epochs due to early stopping, using float32 precision, with a sequence length of 1024 tokens and a per-device batch size of 1 with gradient accumulation of 4 steps (effective batch size 4), balancing convergence with memory constraints. Both mistral-base and mistral-lora were preserved for downstream inference.

Each question from the instructional dataset was converted into a standardized prompt and independently submitted to both the mistral-base and mistral-lora. The resulting answers were evaluated by two human raters and two LLM judges using rubric-specific prompts, which directed the evaluators to assign a score between 1 and 10 for each criterion and generate brief textual feedback. Evaluations were conducted independently for each answer to ensure input invariance and minimize bias.

All artifacts (questions, prompts, answers, model identifiers, evaluation scores, feedback, and rubric metadata) were stored in a Neo4j graph database. The schema encoded entities as labeled nodes (Prompt, Answer, Model, Evaluation, Rubric) and their semantic relations via directed edges such as DERIVED_FROM, GENERATED_BY, EVALUATED_BY, USES_RUBRIC, and EVALUATED_WITH. Each evaluation node stores two sets of scores and feedback: one for the main answer and one for the accompanying explanation, enabling dual-layer analysis of content and justification quality. Neo4j has proven effective in representing educational knowledge graphs and supporting graph-based reasoning for automated evaluation tasks [25].

Figure 2 illustrates the architecture of this graph schema, showing the connections between prompts, models, answers, evaluations, and rubric criteria.
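To make the schema concrete, the sketch below generates parameterized Cypher `MERGE` statements for the node labels and relationship types named in the text. The endpoints and direction of each edge and the `id` property are assumptions, since the text names the relationship types but not which labels they connect.

```python
# ASSUMED edge endpoints and directions: (source label, relationship, target label).
SCHEMA_EDGES = [
    ("Answer", "DERIVED_FROM", "Prompt"),
    ("Answer", "GENERATED_BY", "Model"),
    ("Answer", "EVALUATED_BY", "Evaluation"),
    ("Prompt", "USES_RUBRIC", "Rubric"),
    ("Evaluation", "EVALUATED_WITH", "Rubric"),
]


def merge_edge_cypher(src_label, rel_type, dst_label):
    """Build an idempotent Cypher statement that creates both nodes and the edge.

    The `id` property and the $src_id / $dst_id parameters are hypothetical.
    """
    return (
        f"MERGE (a:{src_label} {{id: $src_id}}) "
        f"MERGE (b:{dst_label} {{id: $dst_id}}) "
        f"MERGE (a)-[:{rel_type}]->(b)"
    )


statements = [merge_edge_cypher(*edge) for edge in SCHEMA_EDGES]
```

Each statement could then be executed through a database session with concrete values bound to `$src_id` and `$dst_id`. Using `MERGE` rather than `CREATE` keeps repeated pipeline runs from duplicating nodes or edges.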

Transformer models operate efficiently on CPU infrastructure when supported by execution strategies such as adapter-based fine-tuning and parallelized inference routines. These techniques allow high-throughput deployment without relying on specialized hardware accelerators, making CPU-only configurations suitable for production-scale applications in educational and evaluative contexts. This is particularly important when implementing multi-component evaluation pipelines, such as the one proposed in this study, where both the student response and its accompanying explanation are independently assessed [26].

3. Results

This section presents the results of a comprehensive comparative evaluation between mistral-base and mistral-lora applied to rubric-based student assessment tasks. Each response generated by the two model variants consisted of two components: a final answer and an accompanying explanation. These components were evaluated independently by two LLM judges and two human raters using rubric-specific criteria. The answer was scored based on a four-dimension rubric (technical or argumentative), while the explanation was assessed using a separate rubric focused on clarity, accuracy, and usefulness. Both numerical scores and textual feedback were analyzed to assess the impact of fine-tuning on rubric fidelity, scoring consistency, and explanatory quality. The findings are organized into five subsections: score distributions, per-question drift, rubric-specific effects, feedback structure, and inter-model consistency.

3.1. Scoring Distribution of Answers and Explanations

To assess the impact of domain-specific fine-tuning on rubric-based evaluation quality, we conducted a comparative analysis between two variants of the same large language model: mistral-base and mistral-lora trained on instructional content from a university-level computer science course. Both models were presented with an identical set of open-ended questions and produced two outputs for each prompt: a final answer and an accompanying explanation. Each component was independently evaluated by two LLM judges and two human raters. The answer was scored using a rubric corresponding to the question type (technical or argumentative), while the explanation was evaluated using a separate rubric focused on clarity, accuracy, and usefulness. All scores were assigned on a 1–10 scale per criterion.

Table 3 presents detailed descriptive statistics for scores assigned to responses generated by the base model (mistral-base) and its fine-tuned counterpart (mistral-lora), across rubric types and evaluation components. The fine-tuned model consistently outperformed the base version in every condition. For argumentative tasks, the average answer score increased from 6.95 to 7.77, and explanation scores improved from 7.87 to 8.64. In technical tasks, the gains were also evident: answer scores rose from 7.94 to 8.21, while explanation scores increased from 8.38 to 8.91. These improvements were accompanied by smaller standard deviations and narrower 95% confidence intervals in technical tasks, while dispersion remained comparable on argumentative tasks, indicating enhanced scoring stability primarily in the technical setting. The results indicate that domain-specific fine-tuning improves the quality and consistency of model outputs, with gains concentrated in technical settings and consistent improvements for explanations.

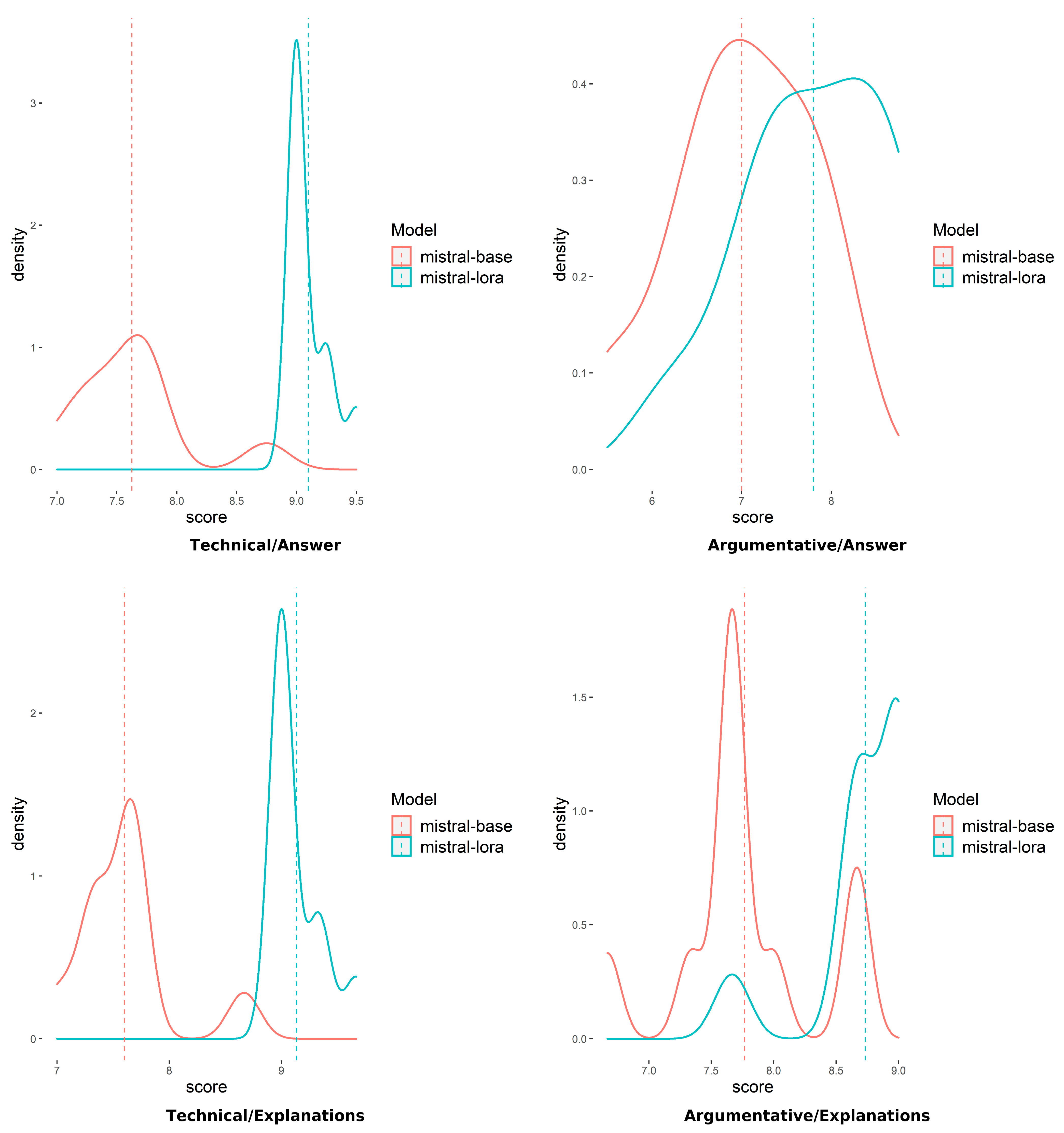

These trends are visualized in Figure 3, which presents a box-and-whisker plot of pooled item-level scores across all raters for both model variants, disaggregated by rubric type and response component. This representation highlights the central tendency, dispersion, and presence of outliers across categories. The fine-tuned model (mistral-lora) consistently outperforms the base model (mistral-base), achieving higher medians across categories and narrower interquartile ranges in the technical categories, while dispersion remains comparable in the argumentative categories. These distributional patterns are consistent with improved content quality and greater scoring stability in the technical setting, reinforcing its alignment with rubric expectations in automated assessment contexts.

Inter-rater reliability is reported for three categories: Technical Answer, Argumentative Answer, and Explanation. Agreement is shown for Human–Human and for each LLM against the human mean, using ICC(2,1), Krippendorff’s α, and quadratic-weighted Cohen’s κ (QWK) computed on pooled item sets. Detailed values are listed in Table 4.
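For readers unfamiliar with QWK, the following sketch computes it for two raters on the 1–10 scale. It assumes integer scores, so a non-integer reference such as a mean of human ratings would need to be rounded first; that rounding step is an assumption and is not described in the text.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_score=1, max_score=10):
    """Quadratic-weighted Cohen's kappa for two lists of integer ratings."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("rating lists must be non-empty and of equal length")
    k = max_score - min_score + 1
    n = len(rater_a)

    # Observed confusion matrix between the two raters.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_score][b - min_score] += 1

    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = ((i - j) ** 2) / ((k - 1) ** 2)  # quadratic disagreement penalty
            expected = hist_a[i] * hist_b[j] / n      # chance agreement from marginals
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0:
        # Degenerate case: both raters always gave the same single score.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


perfect = quadratic_weighted_kappa([1, 5, 10], [1, 5, 10])
opposite = quadratic_weighted_kappa([1, 10], [10, 1])
```

The quadratic weights penalize a disagreement of several points far more than a one-point disagreement, which suits an ordinal 1–10 scale better than unweighted κ.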

We compute 95% bootstrap confidence intervals for ICC(2,1), Krippendorff’s α, and quadratic-weighted Cohen’s κ. p-values are adjusted via Holm–Bonferroni, and we report Hedges’ g together with Levene tests for variance.
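The Holm–Bonferroni step-down procedure can be sketched as follows. The p-values in the example are hypothetical, and the family of tests over which the correction was applied is not specified in the text, so the sketch should not be read as a reproduction of the reported decisions.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return a reject/retain decision per hypothesis (True means reject)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The smallest p-value is compared with alpha/m, the next with alpha/(m-1), ...
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test is retained, all larger p-values are retained
    return reject


def holm_adjusted(p_values):
    """Return Holm-adjusted p-values, monotone in the sorted order and capped at 1."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        running_max = max(running_max, min(1.0, (m - rank) * p_values[idx]))
        adjusted[idx] = running_max
    return adjusted


# HYPOTHETICAL family of four raw p-values.
raw = [0.001, 0.04, 0.03, 0.5]
decisions = holm_bonferroni(raw)
adjusted = holm_adjusted(raw)
```

In this example only the first hypothesis is rejected: 0.03 exceeds its threshold of 0.05/3, so it and every larger p-value are retained even though 0.03 and 0.04 are below the uncorrected 0.05 level.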

Human–Human coefficients are similar for Technical and Argumentative Answers, while Explanation shows the weakest consistency. Alignment of the LLM judges with the human mean is near zero to moderate for answers and weak for explanations. Llama-3.1 is closer to the human mean on Technical Answer, while alignment is mixed on Argumentative Answer (higher Krippendorff’s α for GPT-4; higher ICC(2,1)/QWK for Llama-3.1), whereas both judges exhibit weak alignment on explanations (GPT-4 slightly higher Krippendorff’s α; Llama-3.1 slightly higher QWK). These patterns reinforce the earlier finding that explanations are harder to evaluate consistently than answers.

Table 5 reports the comparison between mistral-base and mistral-lora. We compare the fine-tuned model to the base model across three categories: Technical Answer, Argumentative Answer, and Explanation. For each rater we report the mean difference LoRA minus Base, Hedges’ g with a 95% bootstrap confidence interval, Welch’s t and degrees of freedom with two-sided p-values adjusted via Holm–Bonferroni, the Levene’s test p-value for variance equality, and the number of items (n). Rows are organized by category and then by rater, and positive values indicate an advantage for the fine-tuned model.
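The two main effect statistics in this comparison, Welch’s t with its degrees of freedom and Hedges’ g, can be computed as in the sketch below. The score lists are hypothetical. The small-sample correction uses the common approximation 1 − 3/(4·df − 1), which is assumed here rather than confirmed as the authors’ exact variant.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for a minus b."""
    va = variance(a) / len(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def hedges_g(a, b):
    """Standardized mean difference (a minus b) with small-sample bias correction."""
    na, nb = len(a), len(b)
    pooled_sd = sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))
    cohen_d = (mean(a) - mean(b)) / pooled_sd
    correction = 1 - 3 / (4 * (na + nb - 2) - 1)  # ASSUMED approximation of J
    return cohen_d * correction


# HYPOTHETICAL item scores from one rater for five questions per model.
lora_scores = [8, 9, 8, 9, 8]
base_scores = [7, 7, 8, 6, 7]
t_stat, dof = welch_t(lora_scores, base_scores)
g = hedges_g(lora_scores, base_scores)
```

Positive values of `t_stat` and `g` correspond to an advantage for the first argument, matching the LoRA minus Base convention used in Table 5.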

The technical setting shows the clearest contrast when the judge is GPT-4. The fine-tuned model exceeds the base by a mean of 1.47 points, with Hedges’ g 3.95 and a 95% bootstrap confidence interval from 2.42 to 8.91. Welch’s t is 9.21 with 11.39 degrees of freedom and the two-sided p-value is <0.0001, which remains significant after Holm–Bonferroni. Levene’s p-value is 0.0723, indicating comparable variances. Human 1 reports a small negative difference with Delta mean −0.22 and Hedges’ g −0.19, confidence interval −1.00 to 0.74, p-value 0.6624, Holm–Bonferroni decision: retain. Human 2 reports Delta mean −0.15 and Hedges’ g −0.17, confidence interval −1.05 to 0.72, p-value 0.6954, Holm–Bonferroni decision: retain. Llama-3.1 estimates a null effect with Delta mean 0.00 and Hedges’ g 0.00, confidence interval −1.01 to 0.81, p-value 1.00, Holm–Bonferroni decision: retain. Each technical contrast uses ten items.

For argumentative answers the pattern is positive yet below the multiple-testing threshold. GPT-4 shows Delta mean 0.80 and Hedges’ g 0.96, confidence interval 0.19 to 2.13, Welch’s t 2.24 with 17.99 degrees of freedom, p-value 0.0379, Holm–Bonferroni decision: retain. Llama-3.1 reports Delta mean 0.60 and Hedges’ g 0.54, confidence interval −0.27 to 1.67, p-value 0.2257, Holm–Bonferroni decision: retain. Human 1 shows Delta mean 1.40 and Hedges’ g 0.84, confidence interval 0.05 to 2.36, p-value 0.0673, Holm–Bonferroni decision: retain. Human 2 shows Delta mean 0.48 and Hedges’ g 0.52, confidence interval −0.30 to 1.64, p-value 0.2426, Holm–Bonferroni decision: retain. Levene’s p-values lie between 0.6276 and 0.8082, consistent with stable variances. Ten items are included.

For explanations the magnitude depends on the judging rater. GPT-4 indicates a large advantage with Delta mean 1.25 and Hedges’ g 2.71, confidence interval 1.82 to 4.66, Welch’s t 8.73 with 35.21 degrees of freedom, p-value <0.0001, Holm–Bonferroni decision: retain. Llama-3.1 shows a small positive contrast with Delta mean 0.33 and Hedges’ g 0.40, confidence interval −0.20 to 0.95, p-value 0.2042, Holm–Bonferroni decision: retain. Human 1 reports a moderate advantage with Delta mean 0.85 and Hedges’ g 0.87, confidence interval 0.29 to 1.64, Welch’s t 2.82 with 37.93 degrees of freedom, p-value 0.0077, Holm–Bonferroni decision: retain. Human 2 shows Delta mean 0.15 and Hedges’ g 0.18, confidence interval −0.42 to 0.86, p-value 0.5559, Holm–Bonferroni decision: retain. Levene’s p-value ranges from 0.1264 to 0.9150 and the sample comprises twenty items.

Overall, only the Technical Answer contrast judged by GPT-4 remains significant after Holm–Bonferroni; all other contrasts do not reach the multiple-testing threshold, with positive but smaller effects for Argumentative Answer and mixed magnitudes for Explanation, while variance checks remain stable throughout.

We assess statistical significance using Welch’s two-sided t-tests on judge-assigned scores for answers and explanations. For GPT-4 judge only, the effect was particularly strong for technical answers, while argumentative answers also showed a substantial improvement before multiple-testing correction. After Holm–Bonferroni correction, only the Technical Answer contrast judged by GPT-4 remains statistically significant. For explanations, both technical and argumentative components showed positive gains but did not reach the multiple-testing threshold. No additional significance is claimed for explanation-level results beyond this correction.

These findings are visually supported in Figure 4 (GPT-4 judge only), which presents estimated probability density functions of judge-assigned scores, highlighting the distributional shifts induced by fine-tuning. The plots were generated in R using ggplot2 [27] and ggdensity [28], offering a smoothed perspective over scoring distributions for answers and explanations across both models.

Taken together, these results show that supervised fine-tuning on rubric-annotated instructional data enhances both the content and the explanatory layers of model outputs. On average, the fine-tuned model outperformed the base version, with gains concentrated in Technical Answer for GPT-4 after Holm–Bonferroni correction and consistent improvements for explanations. It also exhibited lower score variability, especially in technical settings, and stronger alignment with evaluation criteria. By independently assessing both answers and explanations across human and LLM judges, our analysis provides a more granular view of model improvement and highlights the pedagogical relevance of fine-tuning. These findings support the integration of rubric-aware LLMs into automated evaluation pipelines designed for educational use while acknowledging evaluator-specific effects.

Primary findings in this section are established using two human raters and two LLM judges (GPT-4 and Llama-3.1). After Holm–Bonferroni correction, significant gains concentrate in Technical Answer for GPT-4. Human ratings show small or non-significant effects for Technical Answer and moderate improvements for Explanation. This pattern indicates that GPT-4 exhibits the clearest difference between mistral-lora and mistral-base after Holm–Bonferroni correction, whereas effects for other raters remain mixed or non-significant. From Section 3.2 onward we present GPT-4 single-judge diagnostics as exploratory, judge-specific visuals that complement the multi-rater results.

Given the limited alignment between LLM judges and human raters documented in Table 4, the subsequent GPT-4 single-judge diagnostics are presented as exploratory, judge-specific visualizations that complement the multi-rater analysis; interpretations and claims are grounded in the multi-rater results.

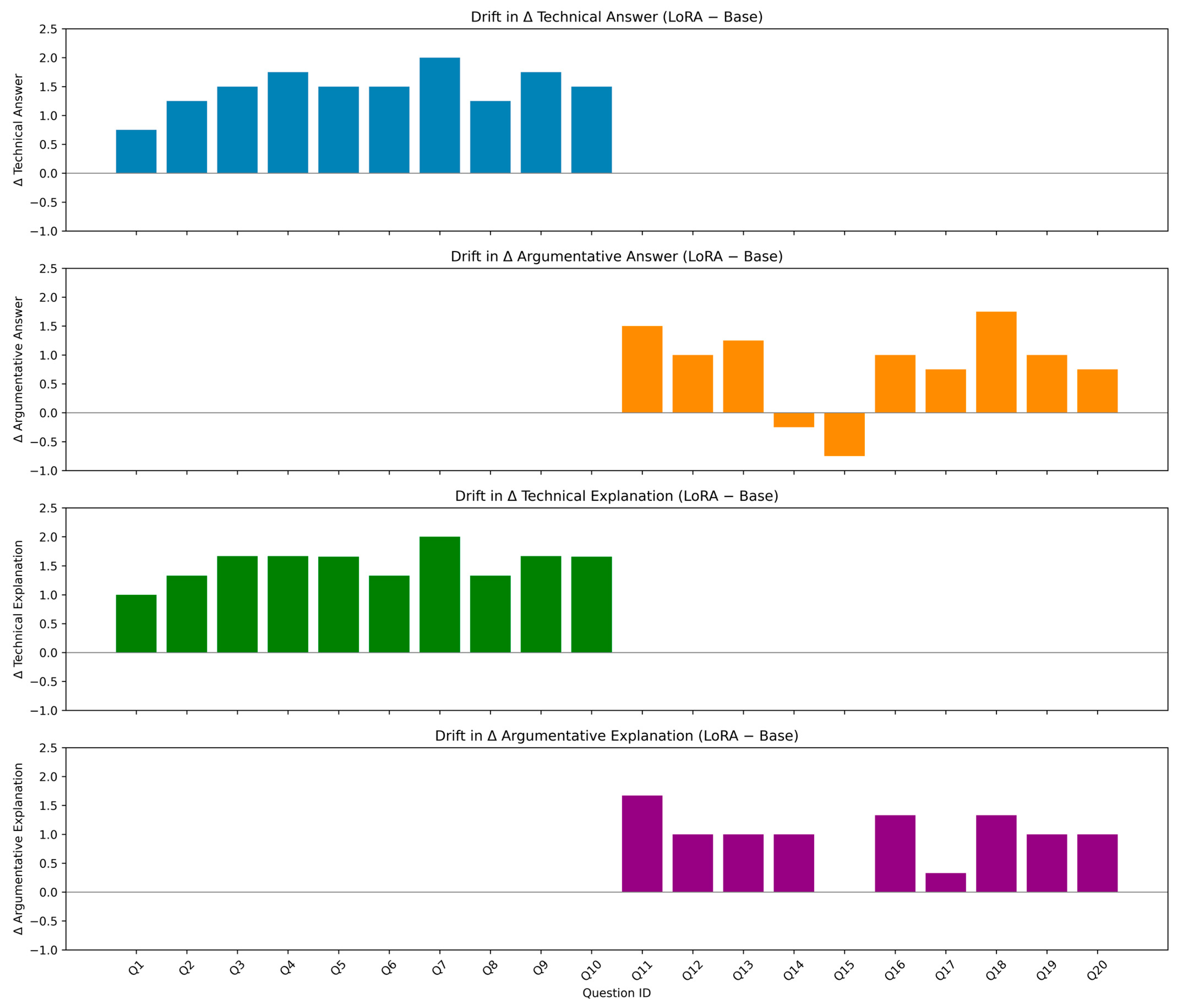

3.2. Scoring Drift per Question and Component

To assess how rubric-specific fine-tuning influenced individual responses, we computed the scoring drift for each question across four distinct evaluation categories: Technical Answer, Technical Explanation, Argumentative Answer, and Argumentative Explanation. We therefore report GPT-4 diagnostics as exploratory, judge-specific visualizations that illustrate item-level drift, motivated by the alignment patterns in Table 4; these visuals complement, rather than replace, the multi-rater analysis. Within each component, GPT-4 criterion scores were first averaged to obtain a single 1–10 item score per question and component. For each component, the drift (Δ Score = mistral-lora − mistral-base) was calculated as the difference between the GPT-4-assigned score for the fine-tuned model and that of the base model. For each question q and component c, drift was defined as:

$$\Delta_{q,c} = S^{\text{lora}}_{q,c} - S^{\text{base}}_{q,c}$$

where $S^{\text{lora}}_{q,c}$ and $S^{\text{base}}_{q,c}$ denote the GPT-4 item scores for mistral-lora and mistral-base, and values Δ > 0 indicate an advantage for mistral-lora. We summarize drift with category-level medians and interquartile ranges, and we do not perform inferential testing in this subsection.
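The drift computation and its summary can be sketched as follows. The criterion scores are hypothetical, and the quartile convention (`method="inclusive"`) is an assumption, since the text does not state how interquartile ranges were computed.

```python
from statistics import mean, median, quantiles


def item_score(criterion_scores):
    """Average the criterion scores into one 1-10 item score."""
    return mean(criterion_scores)


def drift(lora_scores, base_scores):
    """Per-question drift: item score for mistral-lora minus that for mistral-base."""
    return {
        q: item_score(lora_scores[q]) - item_score(base_scores[q])
        for q in base_scores
    }


def summarize(deltas):
    """Median and interquartile range of the drift values in one category."""
    values = sorted(deltas.values())
    q1, _, q3 = quantiles(values, n=4, method="inclusive")  # ASSUMED convention
    return {"median": median(values), "iqr": q3 - q1}


# HYPOTHETICAL criterion scores from one judge for three questions, one component.
base = {"q1": [7, 7, 8, 6], "q2": [8, 8, 8, 8], "q3": [6, 7, 6, 7]}
lora = {"q1": [8, 8, 9, 7], "q2": [8, 9, 8, 9], "q3": [8, 8, 8, 8]}

deltas = drift(lora, base)
summary = summarize(deltas)
```

Positive entries in `deltas` indicate questions where the fine-tuned model scored higher; the median and IQR give a descriptive summary per category without any inferential test.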

Figure 5 presents the disaggregated score drift across all 20 questions, structured into four separate subplots corresponding to each evaluation category (GPT-4 judge only) and plotting all Δ values with a reference line at 0 to highlight direction and magnitude.

The most substantial improvements were observed in Technical Answers, where the fine-tuned model consistently outperformed the base version. Δ Scores ranged from +0.50 to +2.00, with nearly all questions showing positive drift. This indicates that fine-tuning had a strong effect on factual accuracy, domain-specific completeness, and terminology alignment—dimensions that are explicitly operationalized in the technical rubric.

Technical Explanations exhibited a similarly strong pattern: in 8 out of 10 questions, the Δ Score exceeded +1.5, reflecting not only enhanced content alignment but also improved pedagogical clarity. These high-gain items suggest that the fine-tuned model became more proficient at justifying its answers in a structured and instructive manner, directly supporting the explanatory expectations embedded in the rubric.

By contrast, the gains in Argumentative Answers were more heterogeneous. While the majority of questions showed positive drift, the magnitude was smaller, and two items registered slight score regressions. These were primarily linked to questions requiring abstraction, contextual interpretation, or rhetorical balance—areas where rubric dimensions such as Originality and Dialecticality are more open-ended and harder to optimize through supervised fine-tuning alone. This variability suggests that, although fine-tuning improves alignment with argumentative criteria, it does so less uniformly than in technical domains.

In the case of Argumentative Explanations, the fine-tuned model still outperformed the base variant in most cases, but the score differences were more modest and occasionally flat. This could be attributed to the inherently subjective nature of pedagogical usefulness and the greater stylistic freedom afforded by argumentative tasks. Nonetheless, no major regressions were observed, and even small gains in clarity and relevance indicate a general improvement in explanation quality.

Overall, the per-question drift analysis reveals a clear asymmetry in the effectiveness of fine-tuning: technical tasks benefit the most—both at the level of answer generation and explanation—while argumentative tasks, though improved, remain more sensitive to prompt framing and rubric abstraction. These findings reinforce the pedagogical value of rubric-guided adaptation and highlight areas where targeted refinements may further enhance consistency in open-ended assessment contexts.

To further emphasize the benefits of rubric-aligned fine-tuning, Table 6 reports the most significant changes in judge-assigned scores between the base and fine-tuned models, computed separately for answers and explanations.

Table 6A showcases the top five evaluation items with the highest positive scoring drift (Δ Score = mistral-lora − mistral-base), confirming that the fine-tuned model delivers consistently superior outputs—particularly on complex, open-ended questions involving abstraction, critical reasoning, and conceptual synthesis. In contrast,

Table 6 includes the only two cases where the fine-tuned model underperformed slightly, both involving argumentative prompts and marginal score reductions. These results underscore the robustness of the fine-tuning process, which yielded widespread improvements with minimal trade-offs, reaffirming the model’s enhanced alignment with rubric criteria and evaluative expectations.

The most substantial score regressions were observed on narrowly scoped tasks, where the fine-tuned model exhibited reduced precision or overgeneralized responses. As shown in Table 6, only two evaluation items registered negative drift, both involving argumentative tasks and minor score decreases. No technical explanation received a lower score post fine-tuning, indicating that factual degradation was rare and highly localized. These isolated regressions suggest that the fine-tuned model maintained robust performance even in potentially sensitive evaluation contexts.

In contrast, Table 6 highlights the evaluation items with the highest positive scoring drift, with Δ Score values reaching up to +2.00. These gains were most evident in conceptual questions requiring elaboration, abstraction, or rhetorical structure. Fine-tuning led to clearer answers, stronger rubric alignment, and pedagogically richer explanations. The consistent improvements across both answer and explanation components confirm that the model not only improved content generation but also internalized the stylistic and structural expectations embedded in the rubric.

Taken together, these results confirm that rubric-guided fine-tuning yields disproportionate gains on tasks involving higher-order reasoning, while introducing negligible trade-offs on deterministic items. The observed asymmetry supports the use of domain-adapted LLMs in formative assessment settings and motivates further exploration of adaptive fine-tuning strategies tailored to subcategories of educational tasks.
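The drift computation described above (Δ Score = mistral-lora − mistral-base, followed by ranking the largest gains and listing regressions) can be sketched as follows. This is a minimal illustration, not the pipeline used in the study: the function names and the example base/fine-tuned scores are hypothetical, chosen only so that the two negative drifts match the magnitudes reported in the text (−0.25 and −0.75).

```python
from typing import Dict, List, Tuple


def score_drift(base: Dict[str, float], lora: Dict[str, float]) -> Dict[str, float]:
    """Return the drift (lora - base) for every item scored under both models."""
    return {item: lora[item] - base[item] for item in base if item in lora}


def top_gains(drift: Dict[str, float], k: int = 5) -> List[Tuple[str, float]]:
    """Return up to k items with the largest positive drift, largest first."""
    positive = [(item, d) for item, d in drift.items() if d > 0]
    return sorted(positive, key=lambda pair: pair[1], reverse=True)[:k]


def regressions(drift: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return all items with negative drift, most negative first."""
    negative = [(item, d) for item, d in drift.items() if d < 0]
    return sorted(negative, key=lambda pair: pair[1])


# Hypothetical per-question scores (illustrative values, not the study's data).
base_scores = {"Q1": 7.0, "Q2": 8.0, "Q14": 8.0, "Q15": 8.25}
lora_scores = {"Q1": 9.0, "Q2": 9.5, "Q14": 7.75, "Q15": 7.5}

drift = score_drift(base_scores, lora_scores)
print(top_gains(drift))
print(regressions(drift))
```

Keeping gains and regressions in separate lists mirrors the two-part layout of Table 6 and makes it easy to verify that no item is counted in both.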

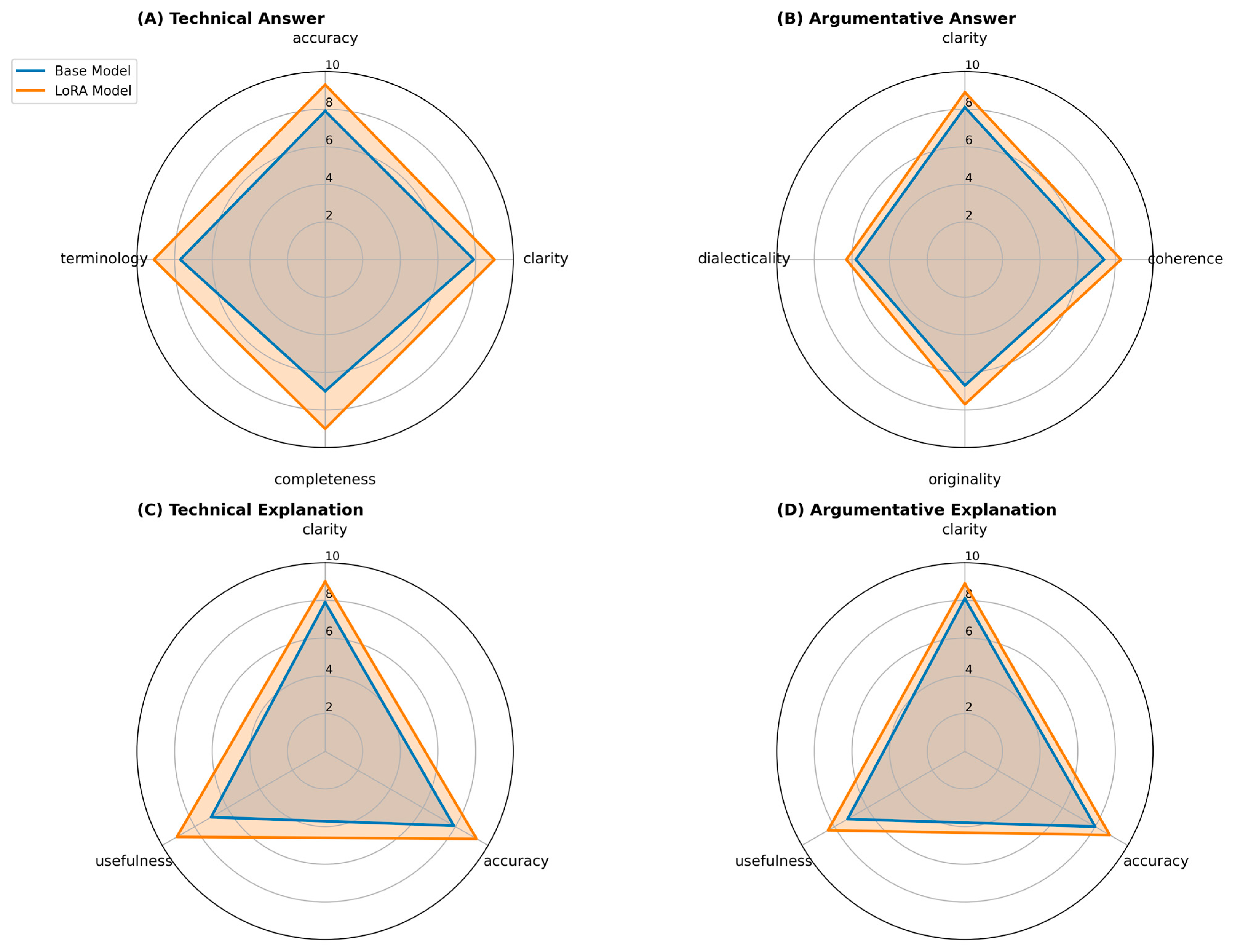

3.3. Rubric-Specific Improvements

To further examine how fine-tuning affected alignment with specific evaluation criteria, we disaggregated the judge-assigned scores across four rubric-defined categories: Technical Answer, Argumentative Answer, Technical Explanation, and Argumentative Explanation. For each of these, we computed the average score assigned to each individual rubric dimension, comparing the base and fine-tuned model outputs. This rubric-level analysis enabled a fine-grained understanding of where model adaptation had the strongest impact, and which cognitive or rhetorical dimensions were most improved through supervised instruction. No inferential testing is performed in this subsection; results are descriptive diagnostics.

Figure 6 presents the rubric-specific score averages for all four categories. In the Technical Answer component, the fine-tuned model showed pronounced improvements in Completeness (from 7.0 to 9.0) and Terminology (from 7.7 to 9.1), alongside moderate gains in Accuracy and Clarity. These changes indicate that the model developed a more structured, content-complete, and lexically precise style in its technical responses—key qualities emphasized by the rubric. This pattern is consistent with the expected benefits of domain-specific fine-tuning in factual settings.

For Technical Explanations, the fine-tuned model exhibited large gains across all three explanation criteria: Accuracy rose from 7.95 to 9.10, Clarity from 8.00 to 8.95, and Usefulness from 7.10 to 8.75. These results suggest that the model internalized not only the factual core of explanations but also the pedagogical expectations of clarity and instructional relevance, making its justifications more evaluable and aligned with learning objectives.

In the case of Argumentative Answers, the improvements were more moderate but remained consistent across all criteria. Originality increased from 6.7 to 7.7, and Dialecticality from 5.8 to 6.3, indicating a greater ability to generate novel content and engage with alternative perspectives. Clarity and Coherence also showed positive gains, although these improvements were less pronounced. This suggests that while the overall rhetorical structure of responses improved, the dimensions that require deeper critical engagement and nuanced reasoning continue to pose greater challenges for fine-tuning.

For Argumentative Explanations, the fine-tuned model also achieved higher scores across all criteria. Clarity, Accuracy, and Usefulness each improved compared to the base model, reflecting better alignment with rubric expectations. However, these improvements were less substantial than those observed in technical explanations. This indicates that while the model became more capable of justifying answers in open-ended contexts, explanatory quality in argumentative tasks, particularly those requiring interpretive reasoning, remains more difficult to enhance through rubric-based fine-tuning alone.
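As a worked check on the criterion-level means quoted in this subsection, the short sketch below computes the gain (fine-tuned minus base) for each criterion whose two means are stated in the text and ranks them. The dictionary layout and the function name are illustrative assumptions; only the numeric means come from the text, and criteria whose values are not quoted are omitted.

```python
from typing import Dict, Tuple

# (category, criterion) -> (base mean, fine-tuned mean), as quoted in the text.
reported_means: Dict[Tuple[str, str], Tuple[float, float]] = {
    ("Technical Answer", "Completeness"): (7.0, 9.0),
    ("Technical Answer", "Terminology"): (7.7, 9.1),
    ("Technical Explanation", "Accuracy"): (7.95, 9.10),
    ("Technical Explanation", "Clarity"): (8.00, 8.95),
    ("Technical Explanation", "Usefulness"): (7.10, 8.75),
    ("Argumentative Answer", "Originality"): (6.7, 7.7),
    ("Argumentative Answer", "Dialecticality"): (5.8, 6.3),
}


def criterion_gains(
    means: Dict[Tuple[str, str], Tuple[float, float]]
) -> Dict[Tuple[str, str], float]:
    """Return fine-tuned minus base for each criterion, rounded to 2 decimals."""
    return {key: round(tuned - base, 2) for key, (base, tuned) in means.items()}


gains = criterion_gains(reported_means)
ranked = sorted(gains.items(), key=lambda item: item[1], reverse=True)

for (category, criterion), gain in ranked:
    print(f"{category:22s} {criterion:15s} {gain:+.2f}")
```

Among the quoted criteria, the ranking places Completeness at the top and Dialecticality at the bottom, which matches the asymmetry between technical and argumentative dimensions described above.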

For completeness, Table 7 reports average rubric-based scores for each criterion across the four evaluation categories for both the base and the fine-tuned models. For each criterion, the table also reports the p-value from Welch’s unequal-variances t-test and the 95% confidence interval for the difference in mean scores between the fine-tuned and the base model. These quantities document the computations and robustness checks (Welch’s unequal-variances t-test, Levene’s test for homogeneity of variances, and the Holm–Bonferroni step-down adjustment). Interpretation focuses on the magnitude and precision of the estimated differences in mean scores, as reflected by the confidence intervals.
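To make two of the named procedures concrete, the sketch below computes Welch’s t statistic with the Welch–Satterthwaite degrees of freedom and applies the Holm–Bonferroni step-down adjustment to a list of p-values. It is a standard-library illustration under stated assumptions, not the authors’ analysis code: function names and example data are hypothetical, and the step from the t statistic to a p-value (which needs the t-distribution) is deliberately left out.

```python
import math
from statistics import mean, variance
from typing import List, Sequence, Tuple


def welch_t(base: Sequence[float], tuned: Sequence[float]) -> Tuple[float, float]:
    """Return (t statistic, Welch-Satterthwaite df) for the difference tuned - base."""
    n1, n2 = len(base), len(tuned)
    # Squared standard errors of each sample mean (sample variance / n).
    se1, se2 = variance(base) / n1, variance(tuned) / n2
    t_stat = (mean(tuned) - mean(base)) / math.sqrt(se1 + se2)
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t_stat, df


def holm_adjust(p_values: Sequence[float]) -> List[float]:
    """Return Holm-Bonferroni adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The rank-th smallest p-value is multiplied by (m - rank), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: adjusted values never decrease along the order.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical scores for one criterion under each model.
t_stat, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
print(round(t_stat, 3), round(df, 3))
print(holm_adjust([0.01, 0.04, 0.03]))
```

In practice a statistics library would supply the p-values and the variance check, for example `scipy.stats.ttest_ind` with `equal_var=False` and `scipy.stats.levene`; the manual version is shown only to expose the arithmetic.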

Across categories, the estimated differences generally favor the fine-tuned model, with the largest gains in structured, objective criteria (e.g., Completeness, Terminology, and Explanation Accuracy), whereas more interpretive dimensions (e.g., Dialecticality and Usefulness in argumentative contexts) exhibit smaller and more variable gains, as reflected by wider 95% confidence intervals.

For Dialecticality within argumentative answers, the 95% confidence interval for the difference in mean scores is wide, indicating greater variability and overlapping score distributions; this pattern is consistent with the variability observed for dialectical reasoning tasks in Figure 4.

Taken together, Table 7 provides a descriptive summary of criterion-level differences between the fine-tuned and base models. Gains are most pronounced for structured, objective criteria (e.g., Completeness, Terminology, and Explanation Accuracy), whereas more interpretive dimensions (e.g., Dialecticality and Usefulness in argumentative contexts) show smaller and more variable differences, as reflected by wider 95% confidence intervals. These patterns indicate opportunities for targeted adaptation in tasks that require abstraction, debate, or contextual reasoning.

3.4. Semantic Feedback Analysis

Beyond the numerical scores, the textual justifications generated by the expert evaluator (GPT-4) offer valuable insight into how the answers and explanations produced by each model variant were interpreted under rubric guidance. In this section, we examine the semantic characteristics of that feedback to better understand the evaluative impact of model-generated content. Importantly, the goal is not to assess the evaluator itself, but to analyze how the base and fine-tuned models differ in the types of feedback they elicit when exposed to rubric-driven evaluation. Specifically, we ask: does fine-tuning result in outputs that trigger more precise, rubric-aligned, and pedagogically meaningful feedback? And conversely, do base model outputs attract more vague, critical, or structurally deficient evaluations?

To address this question, we first conducted a manual review of 20 representative evaluations, evenly distributed across rubric types (technical and argumentative) and components (Answer and Explanation). For each entry, we compared the feedback generated for the two model variants. Initial patterns indicated that responses from the fine-tuned model were more likely to elicit rubric-specific language, while base model outputs attracted feedback that was more critical, vague, or structurally generic.

To extend this analysis to the full dataset, we applied a rule-based lexical classification to all textual justifications produced by the expert evaluator. Each comment was scanned for the presence of predefined expressions grouped into six semantic categories:

Rubric keywords (e.g., accuracy, completeness, originality, dialecticality);

Pedagogical cues (e.g., well contextualized, supports understanding);

Vague comments (e.g., somewhat, acceptable, reasonable);

Generic praise (e.g., good, appropriate, well done);

Structural remarks (e.g., definition, cause, sequence);

Critical remarks (e.g., unclear, incomplete, lacks, limited).

Each comment could be assigned to multiple categories. Frequency counts were computed separately for each evaluation component (Answer and Explanation) and for each model variant (mistral-base vs. mistral-lora), allowing for a comparative semantic analysis.
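The rule-based lexical classification described above can be sketched as a small keyword matcher. The six categories and their example expressions are taken from the list in the text; the full lexicon used in the study is not given there, so the lists below are only the quoted examples. The identifiers (`LEXICON`, `classify`, `category_counts`) and the whole-word, case-insensitive matching rule are assumptions made for illustration.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List, Set

# Example expressions per semantic category, as quoted in the text.
LEXICON: Dict[str, List[str]] = {
    "rubric_keywords": ["accuracy", "completeness", "originality", "dialecticality"],
    "pedagogical_cues": ["well contextualized", "supports understanding"],
    "vague_comments": ["somewhat", "acceptable", "reasonable"],
    "generic_praise": ["good", "appropriate", "well done"],
    "structural_remarks": ["definition", "cause", "sequence"],
    "critical_remarks": ["unclear", "incomplete", "lacks", "limited"],
}

# One case-insensitive, whole-word pattern per category (assumed matching rule).
PATTERNS = {
    category: re.compile(
        r"\b(?:" + "|".join(re.escape(expr) for expr in expressions) + r")\b",
        re.IGNORECASE,
    )
    for category, expressions in LEXICON.items()
}


def classify(comment: str) -> Set[str]:
    """Return every category whose expressions occur in the comment."""
    return {cat for cat, pattern in PATTERNS.items() if pattern.search(comment)}


def category_counts(comments: Iterable[str]) -> Counter:
    """Count, per category, how many comments triggered it at least once."""
    counts: Counter = Counter()
    for comment in comments:
        counts.update(classify(comment))
    return counts


# Hypothetical evaluator comments for illustration.
feedback = [
    "The answer is unclear and somewhat incomplete.",
    "Good structure; accuracy is high.",
]
print(category_counts(feedback))
```

Whole-word matching keeps "incomplete" from counting as a hit for "completeness" and "because" from counting as "cause". Running the counter once per model variant and component would yield the frequency profile that the comparison relies on.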

The semantic profile of feedback provided by the expert evaluator across model variants is summarized in Figure 7. The figure displays the frequency with which each semantic category was triggered during evaluation, separated by model (mistral-base vs. mistral-lora) and component (Answer vs. Explanation). This visualization highlights the relative prevalence of rubric-aligned language, critical remarks, vague expressions, and generic praise, enabling a comparative perspective on the evaluative discourse elicited by each model variant.

For the Answer component, base-model outputs elicited a high number of critical remarks (15) and several vague comments (6), whereas fine-tuned outputs reduced these sharply to 2 and 0, respectively. Although rubric keywords were slightly more frequent in feedback on base responses (8 vs. 4), they were often embedded in negative framing. In contrast, the fine-tuned model received more instances of generic praise (3 vs. 0), suggesting higher fluency and rubric alignment at the surface level.

For the Explanation component, the difference was even more pronounced. The mistral-base explanations triggered 7 critical remarks and only 1 rubric keyword, while mistral-lora explanations activated 4 rubric keywords, 2 instances of generic praise, and only 1 critical comment. These shifts suggest that explanations from the fine-tuned model were more coherent, complete, and easier to assess according to rubric logic.

Most notably, no pedagogical cues were detected in any feedback, across both models and both components. This suggests that the expert evaluator, as configured in our evaluation pipeline, did not spontaneously produce instructional framing in its feedback. It also implies that model outputs—even those from the fine-tuned variant—did not prompt language associated with instructional scaffolding or didactic support.

Taken together, the semantic profile of the expert evaluator feedback reveals that rubric-based fine-tuning improves not only score alignment, but also the evaluability of outputs. The reduction in vague and critical expressions—especially in explanations—indicates that responses generated by mistral-lora are clearer, more structured, and more directly aligned with rubric expectations. These effects extend beyond scoring accuracy, influencing the tone, structure, and educational value of the evaluation itself. By prompting clearer, rubric-grounded, and less ambiguous feedback, the fine-tuned model supports a more transparent and pedagogically useful assessment process—an essential property for LLMs deployed in instructional contexts.

3.5. Inter-Model Scoring Agreement

To assess performance differences between the two model variants, we computed the number of evaluation instances where the fine-tuned model outperformed, equaled, or underperformed the base model. These comparisons were made separately for both components (Answer and Explanation) and across rubric types (technical and argumentative), using the final rubric-based evaluations. The results are presented in Table 8, offering a structured overview of scoring trends across all 20 questions.

The results revealed a consistent advantage for the fine-tuned model. In 18 out of 20 cases, the LoRA variant received a higher score than the base model for the Answer component, while in the remaining two it underperformed slightly.

For the Explanation component, the trend was even stronger: LoRA outperformed the base model in 19 cases, with only one instance of equal scoring and no regressions. These results confirm that fine-tuning improved not only the quality of answers, but also the clarity and relevance of justifications, as judged by an expert LLM evaluator.
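The comparison behind these counts reduces to a per-question tally of wins, ties, and losses. The sketch below shows one way to compute it; the function name, the tolerance used to treat near-equal scores as ties, and the example scores are all hypothetical.

```python
from collections import Counter
from typing import Sequence


def tally_outcomes(
    base: Sequence[float], lora: Sequence[float], tol: float = 1e-9
) -> Counter:
    """Count questions where the fine-tuned score is higher, equal, or lower."""
    if len(base) != len(lora):
        raise ValueError("Both models must be scored on the same questions.")
    outcomes = Counter({"win": 0, "tie": 0, "loss": 0})
    for base_score, lora_score in zip(base, lora):
        diff = lora_score - base_score
        if diff > tol:
            outcomes["win"] += 1
        elif diff < -tol:
            outcomes["loss"] += 1
        else:
            outcomes["tie"] += 1
    return outcomes


# Hypothetical scores for four questions (not the study's data).
base_scores = [7.0, 8.0, 8.0, 9.0]
lora_scores = [9.0, 8.0, 7.75, 9.5]
print(tally_outcomes(base_scores, lora_scores))
```

Applied once per component, a tally of this kind would produce the Answer counts (18 wins, 2 losses) and Explanation counts (19 wins, 1 tie) reported here.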

The two questions where mistral-lora scored lower than mistral-base were both argumentative in nature—Q14 (“What is the role of AI applications in stock market analysis?”) and Q15 (“What role do recommender systems play in a video streaming platform?”). In both cases, the mistral-lora-generated answers were slightly less precise or less elaborated, leading to modest score drops of −0.25 and −0.75 points, respectively. These regressions suggest that fine-tuning, while generally beneficial, may occasionally underperform on prompts requiring concise abstraction or interpretive breadth.

Overall, the pattern of improvement, especially the absence of regressions in Explanation scores, supports the conclusion that the fine-tuned model produces outputs that are more evaluable, rubric-aligned, and pedagogically coherent—making it a more reliable foundation for automated educational assessment.

4. Discussion

This section discusses the broader implications of the evaluation results, with particular emphasis on how rubric fidelity, feedback quality, scoring consistency, and the evaluability of explanations were influenced by fine-tuning. Our multi-rater results indicate that mistral-lora improves explanation quality consistently across raters and yields a statistically robust gain for Technical Answer when judged by GPT-4, while effects for human and Llama-3.1 remain mixed. These outcomes support rubric-aware fine-tuning as a viable component in educational assessment pipelines, particularly for explanation-focused feedback and transparent criterion alignment. At the same time, the single-judge diagnostics reported in later sections are complementary rather than standalone evidence. In practice, rubric-aware LLM scoring should be paired with human oversight to mitigate evaluator bias, monitor variance, and preserve fairness in grading.

4.1. Summary of Key Findings

The evaluation results confirm that rubric-aligned fine-tuning substantially enhances the performance of LLM-generated outputs, improving both the quality of answers and the evaluability of explanations. Across the 20 questions, the fine-tuned model achieved higher scores in nearly all cases (18 of 20 for the Answer component and 19 of 20 for the Explanation component). Improvements were observed across all rubric dimensions: accuracy, clarity, completeness, and terminology for technical questions, and clarity, coherence, originality, and dialecticality for argumentative ones. These findings reinforce the hypothesis that targeted fine-tuning supports deeper alignment with educational assessment criteria, as also demonstrated in recent studies on rubric-informed LLM evaluation in Science, Technology, Engineering, and Mathematics (STEM) domains [27].

In addition to overall performance gains, score drift analysis revealed that the fine-tuned model consistently outperformed the base model across individual questions, with larger gains observed in technical prompts. This trend was confirmed by a disaggregated examination of rubric criteria, where average scores for all dimensions improved following fine-tuning. These results indicate not only global enhancement, but also local, criterion-specific benefits. Beyond raw score improvements, the integration of rubric annotations with graph-based storage ensured that these gains were traceable across criteria, allowing a more auditable evaluation process compared to traditional black-box approaches.

The expert evaluator’s textual feedback further supported these findings. Semantic analysis revealed that responses generated by the fine-tuned model elicited fewer vague or critical remarks, and more rubric-aligned justifications, particularly in the Explanation component. This shift in evaluative discourse suggests that the fine-tuned outputs were not only better aligned with scoring criteria, but also easier to interpret and justify through structured feedback, a finding consistent with previous work on AI-supported feedback generation in higher education [17]. This indicates that rubric-guided fine-tuning not only enhances the clarity of generated responses, but also reduces the interpretive burden on instructors, making automated evaluation more pedagogically actionable in real teaching contexts.

Finally, model-level scoring comparisons confirmed the stability and reliability of these improvements. The fine-tuned model outperformed its base counterpart in nearly all cases, including a complete absence of regressions in explanation quality. This consistent advantage across rubric types and components underscores the robustness of the fine-tuning method. The choice of GPT-4 as expert evaluator is methodologically grounded in prior studies that have established its effectiveness in rubric-based educational assessment [1].

Taken together, these findings demonstrate that rubric-based fine-tuning improves not only scoring accuracy, but also the evaluability, feedback quality, and pedagogical coherence of LLM-generated content—making it a promising foundation for stable, transparent, and pedagogically aligned automated assessment systems in higher education.

4.2. Interpretation and Implications

The results of this study demonstrate that fine-tuning a large language model on rubric-annotated instructional data has a profound effect on its evaluative behavior, particularly on the evaluability of explanations and the stability of rubric-aligned scoring. Mistral-lora did not merely improve average scores; it reorganized the generative structure of its responses to better reflect the logic of formal assessment. Outputs became more aligned with the cognitive demands of each rubric, displaying targeted improvements in dimensions such as completeness, originality, and dialecticality. These effects confirm that parameter-efficient fine-tuning is not limited to stylistic refinement but facilitates a deeper internalization of assessment constructs. Similar transformations have been observed in domain-specific fine-tuning for clinical tasks, where structural improvements in response composition were directly linked to rubric-constrained adaptation [28].

Equally significant is the shift in how responses were interpreted by the expert evaluator. Feedback generated for mistral-lora outputs was more rubric-specific, semantically precise, and structurally grounded. Compared to base responses, which elicited more vague and critical remarks, fine-tuned outputs triggered clearer references to rubric-aligned constructs such as completeness, originality, and coherence. This transition suggests that fine-tuned models not only generate better responses, but also produce outputs that are easier to assess using structured criteria. Prior work on rubric-constrained fine-tuning supports this interpretation, showing that such models generate feedback that is both more aligned and more interpretable for human or automated evaluators [29]. This highlights that improvements were not limited to numerical accuracy but extended to the explanatory layer, making model outputs more auditable and pedagogically actionable.

While rubrics provide a structured framework for evaluation, not all criteria lend themselves equally to objective scoring. In technical assessments, dimensions such as Accuracy and Terminology are typically grounded in factual correctness, making them less susceptible to interpretation. By contrast, criteria like Originality and Dialecticality, especially in argumentative tasks, require a higher degree of interpretive judgment. This asymmetry implies that evaluators—human or automated—must adapt their scoring behavior not just to rubric structure, but also to the epistemic nature of each criterion. The ability to distinguish between objective precision and interpretive depth is essential for producing evaluations that are both reliable and pedagogically defensible.

Traditional systems such as E-rater [30] or BERT-based scoring models [31] have demonstrated strong predictive accuracy, yet they function largely as black-box classifiers, offering limited rubric transparency and little support for evaluating explanations. Hybrid approaches that combine handcrafted linguistic features with deep learning embeddings [7] further improve accuracy, but they rarely address traceability or auditability of the evaluation process. Comprehensive reviews of automated essay scoring confirm these limitations and highlight the lack of mechanisms for interpretability and pedagogical alignment [32,33]. In contrast, CourseEvalAI emphasizes rubric fidelity, the evaluability of both answers and explanations, and transparent storage of all evaluation artifacts in a graph database. This positions the framework not only as a method for improving predictive performance, but also as a step toward interpretable, auditable, and pedagogically valid automated assessment, where both answers and explanations are explicitly evaluable and stored in a traceable workflow.

Finally, the comparative evaluation patterns suggest an increase in scoring reliability. The mistral-lora model demonstrated stronger agreement with the expert evaluator (GPT-4) and no regressions in explanation quality, even in tasks involving open-ended reasoning. This consistency is essential for the adoption of LLM-based evaluation systems in high-stakes educational contexts, where fairness, transparency, and reproducibility are critical. These findings are consistent with recent studies of automated assessment pipelines, where fine-tuned models achieved measurable gains in rubric adherence, semantic completeness, and inter-rater alignment [2]. They support the conclusion that rubric-based fine-tuning can serve as a scalable foundation for criterion-aligned evaluation in higher education, ensuring not only accuracy but also explanation-level evaluability and reproducibility.

From an AI-ethics perspective, rubric-aware evaluation advances fairness by making criteria explicit and auditable, strengthens accountability through inter-rater reliability and disagreement reporting, and improves transparency via explanation-level scoring; these benefits depend on regular calibration and bias monitoring.

Practical deployment is constrained by computational and operational costs. Rubric-aware inference increases latency and Graphics Processing Unit (GPU) time because it requires multiple prompts per response and aggregation across raters. Fine-tuning and periodic recalibration add training costs and storage overhead. Institutions with limited resources may need batching, caching, or model distillation to reduce load. Human oversight remains necessary for edge cases and appeals, which adds staff time. Privacy and compliance constraints can limit logging and data sharing, which in turn reduces auditability and slows replication.

4.3. Limitations

Despite the measurable improvements introduced by rubric-guided fine-tuning, the present framework exhibits several structural limitations that restrict its broader applicability, particularly regarding the evaluability of explanations across diverse academic settings. The framework was trained and evaluated exclusively on material from a single undergraduate Machine Learning course, using exam questions and responses written in English. This narrow scope limits its generalizability to other disciplines, educational levels, or multilingual contexts.

The fine-tuning relied on authentic exam materials from one undergraduate Machine Learning course. Given the small, domain-specific corpus, overfitting remains a risk despite LoRA’s parameter efficiency. We mitigated this by monitoring validation loss with early stopping (training stopped at epoch 6), and we note that the largest gains occur on technical items, which may reflect domain-specific adaptation. The sample size reflects the availability of authentic, instructor-validated exam data, which were curated and consistently annotated with rubric-based labels to ensure reliability, alignment with course learning objectives, and representativeness within the computer science domain; this choice prioritizes reliability over volume. Nevertheless, reliance on a single course constrains generalizability beyond this disciplinary scope.

Prior work has shown that domain-specific fine-tuning may fail to transfer when applied to contexts that require different rhetorical conventions or disciplinary epistemologies.

Another limitation concerns residual judge-related bias even under a multi-rater setup. In this study, we used two trained human raters and two LLM judges and reported inter-rater agreement (ICC(2,1), Krippendorff’s α, and quadratic-weighted Cohen’s κ (QWK) with confidence intervals), alongside judge-specific effects. Nevertheless, LLM judges remain sensitive to prompt phrasing and rubric instantiation, and rubric rigidity can constrain explanation evaluability across tasks and domains.
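Of the agreement statistics named above, quadratic-weighted Cohen’s κ is the simplest to write out. The sketch below is a generic textbook implementation, not the study’s code. It assumes that scores have been mapped to integer categories 0 to k−1 (the original scale may allow fractional scores, in which case a binning step would be needed first), and the function name is hypothetical.

```python
from typing import Sequence


def quadratic_weighted_kappa(
    rater_a: Sequence[int], rater_b: Sequence[int], n_categories: int
) -> float:
    """Cohen's kappa with quadratic weights for integer ratings in [0, n_categories)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Raters must score the same non-empty set of items.")
    if n_categories < 2:
        raise ValueError("At least two categories are required.")
    k, n = n_categories, len(rater_a)

    # Observed co-rating counts: observed[i][j] = items rated i by A and j by B.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1.0

    row_totals = [sum(row) for row in observed]
    col_totals = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2  # quadratic disagreement weight
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * row_totals[i] * col_totals[j] / n

    if weighted_expected == 0.0:
        # Both raters used one identical category; treated here as full agreement.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical ratings on a three-level scale.
print(quadratic_weighted_kappa([0, 1, 2, 2], [0, 2, 1, 2], n_categories=3))
```

For production analyses, an established implementation such as scikit-learn’s `cohen_kappa_score` with `weights="quadratic"` is preferable; confidence intervals, as reported in the study, would typically be obtained by bootstrapping over items.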

A further limitation is the absence of a LoRA ablation study (rank, target modules, fixed-epoch training), which leaves residual sensitivity to hyperparameter choices; given the ≈ 72 h training time per run on our hardware, we defer these experiments and register the plan for follow-up.

Finally, the rubric schema itself, although effective for structured evaluation, may not fully capture the complexity of student responses. The rubrics used—technical and argumentative—each consisted of four cognitive or structural dimensions (e.g., accuracy, clarity, originality, dialecticality), leaving out affective, cultural, or contextual factors that can influence interpretation. Moreover, responses involving creativity, interdisciplinarity, or nonstandard formats may not align well with rigid criteria. Expanding the rubric framework and incorporating multi-dimensional annotations could enable more flexible, inclusive, and context-aware assessment. In particular, the current design does not explicitly address multidimensional explanation quality, such as its capacity to scaffold learning or to support metacognitive reflection, which remain open challenges for future research.

Explanation scoring exhibits lower inter-rater reliability than answer scoring, exposing the evaluation pipeline to rater-specific bias and rubric rigidity. Overly prescriptive criteria may discount legitimate alternative reasoning and reduce the evaluability of explanations. To mitigate these risks, we recommend multi-rater aggregation with explicit adjudication procedures, rubric calibration using positive and negative exemplars, stability checks for LLM judges under prompt and seed perturbations, and transparent reporting of item-level disagreements and rater justifications. These practices improve fairness, interpretability, and reproducibility in educational deployments.
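One possible shape for the recommended multi-rater aggregation with explicit adjudication is sketched below. It is an illustration only: the median as aggregate, the spread threshold of 2.0 points, the rater labels, and the type and function names are all hypothetical choices rather than procedures described in the study.

```python
from statistics import median
from typing import Dict, NamedTuple


class Aggregate(NamedTuple):
    score: float              # aggregated score across raters
    spread: float             # max minus min rating, a simple disagreement measure
    needs_adjudication: bool  # True when the spread exceeds the threshold


def aggregate_ratings(ratings: Dict[str, float], max_spread: float = 2.0) -> Aggregate:
    """Aggregate one item's ratings and flag it for review if raters diverge."""
    if not ratings:
        raise ValueError("At least one rating is required.")
    values = list(ratings.values())
    spread = max(values) - min(values)
    return Aggregate(
        score=median(values),
        spread=spread,
        needs_adjudication=spread > max_spread,
    )


# Hypothetical ratings from two human raters and two LLM judges.
consistent = {"human_1": 8.0, "human_2": 7.0, "llm_1": 9.0, "llm_2": 8.0}
divergent = {"human_1": 8.0, "human_2": 7.0, "llm_1": 9.0, "llm_2": 4.0}

print(aggregate_ratings(consistent))
print(aggregate_ratings(divergent))
```

The median is used here because it is less sensitive than the mean to a single outlying judge; items flagged for adjudication would then be routed to a human reviewer together with the rater justifications.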

4.4. Pedagogical Implications

Integrating rubric-guided fine-tuning into automated assessment workflows has direct pedagogical relevance, extending beyond improvements in predictive accuracy to include the evaluability of explanations as a pedagogical resource. Transparent rubric alignment allows instructors to trace how each criterion influences model judgments, thereby enhancing accountability and reproducibility in grading [3]. By independently evaluating both answers and explanations, CourseEvalAI supports formative feedback that is not only evaluative but also instructional, reinforcing learning outcomes through clearer justifications [17]. By explicitly addressing explanation quality, automated feedback shifts from a purely summative mechanism to a formative tool that supports reflection and deeper understanding. This dual-layer approach addresses persistent concerns regarding fairness and student trust in automated grading systems, where opacity has historically undermined acceptance [32]. Moreover, the use of graph-based storage enables instructors to audit evaluation flows, facilitating the adoption of AI-based tools in higher education environments that demand not only efficiency and pedagogical validity, but also transparent, explanation-level accountability [25].

In classroom use, pairing answer-level and explanation-level scoring enables targeted formative feedback, alignment to explicit learning outcomes, and auditable justification trails that reduce grading variance and increase student trust.

In practical classroom settings, CourseEvalAI can support instructors both as a first-pass grading assistant and as a feedback generator. Instructors could use automated scores to pre-screen large cohorts of responses, focusing their attention on borderline or inconsistent cases, while students receive rubric-aligned feedback that clarifies strengths and weaknesses. Moreover, observed improvements in model scoring—such as technical answer averages increasing from 7.0 to 9.1—translate into tangible grading differences: shifting from a satisfactory level to an excellent one in typical higher-education marking schemes. This indicates that rubric-guided fine-tuning not only refines automated evaluation but also produces feedback and scoring patterns that have meaningful impact on student performance and grade interpretation.

From an implementation standpoint, we outline concrete integration pathways with learning management systems (LMS)—via plug-ins or API endpoints—to enable secure, role-based access (instructor, TA, student), gradebook synchronization, and auditable logs of automated decisions and feedback. Educator usability centers on configurable rubrics, first-pass grading with rubric-aligned feedback, disagreement review and score overrides with rationale capture, and exports to LMS gradebooks and analytics. Operational constraints include latency at class scale, inference/storage costs per assignment, batching policies that prioritize contested items, and institutional privacy/governance requirements (consent/opt-out and retention policies). These elements delineate practical pathways for integration, educator usability, and operational reliability in educational settings.

4.5. Future Work

Future research should extend the training corpus to include a broader array of academic disciplines, languages, and educational levels. The current implementation is limited to English-language instructional content from undergraduate computer science, restricting its applicability to other domains such as the humanities, law, or medicine, fields that operate under distinct rhetorical norms and assessment logics. Recent medical-AI studies comparing fine-tuning with retrieval-augmented generation indicate cross-domain applicability of LLM methods, including clinical decision support [28]. Incorporating annotated data from diverse curricular settings would enable more robust testing of rubric alignment and response quality across educational contexts. Furthermore, multilingual fine-tuning should be prioritized to ensure compatibility with institutions operating in linguistically diverse environments. Future studies should also assess whether explanation-level evaluability can be preserved across disciplines and languages, ensuring that feedback remains both interpretable and pedagogically relevant.

In addition, we envision training separate, domain-specialized large language models using instructional data from each academic field, so that rubric-guided evaluation is attuned to disciplinary and rhetorical conventions; such specialization enables more accurate scoring and more pedagogically relevant feedback within each domain, while supporting systematic analyses of cross-domain transfer and failure modes.

Another critical direction involves scaling the evaluator component beyond the present multi-rater setup. In this study, we used two trained human raters and two LLM judges and reported inter-rater agreement (ICC(2,1), Krippendorff’s α, and quadratic-weighted Cohen’s κ (QWK)) alongside judge-specific effects; future work will expand the rater pool across institutions, probe prompt/seed sensitivity more systematically, and compare aggregation protocols (e.g., majority, weighted, adjudication) to improve robustness in high-stakes deployments.
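To make the agreement statistic and the candidate aggregation protocols concrete, the sketch below computes quadratic-weighted Cohen's κ for two raters and shows simple majority, weighted, and adjudication-trigger rules. It is a minimal illustration, not the analysis code used in this study: the integer score scale, the tie-breaking rule, the rater weights, and the spread threshold are all assumptions.

```python
from collections import Counter
from statistics import median


def quadratic_weighted_kappa(a, b, min_score, max_score):
    """Quadratic-weighted Cohen's kappa for two raters on an integer scale.

    Scores are assumed to lie within [min_score, max_score].
    """
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and of equal length")
    if max_score <= min_score:
        raise ValueError("the scale needs at least two levels")
    k = max_score - min_score + 1
    n = len(a)
    # Observed confusion matrix between the two raters.
    observed = [[0] * k for _ in range(k)]
    for x, y in zip(a, b):
        observed[x - min_score][y - min_score] += 1
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    disagreement = 0.0
    chance = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            disagreement += weight * observed[i][j]
            chance += weight * hist_a[i] * hist_b[j] / n
    if chance == 0:
        # Both raters used one identical level throughout; treating this as
        # perfect agreement is a convention chosen for this sketch.
        return 1.0
    return 1.0 - disagreement / chance


def majority_vote(scores):
    """Most frequent score; ties fall back to the median (an assumption)."""
    counts = Counter(scores).most_common()
    top = counts[0][1]
    winners = [s for s, c in counts if c == top]
    return winners[0] if len(winners) == 1 else median(scores)


def weighted_mean(scores, weights):
    """Weighted aggregation, e.g., giving human raters more weight."""
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


def needs_adjudication(scores, max_spread):
    """Flag an item for adjudication when raters differ by more than max_spread."""
    return max(scores) - min(scores) > max_spread
```

For a single response scored by two human raters and two LLM judges, `majority_vote([8, 8, 9, 7])` selects the modal score, `weighted_mean` lets the protocol weight raters unequally, and `needs_adjudication` routes high-spread items to a human adjudicator.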

To sharpen these analyses, future work will replicate the question-level diagnostics at larger scale and across additional curricula, validating judge-specific effects and improving generalizability.

The rubric framework itself should also be expanded to accommodate broader pedagogical constructs. The current rubrics focus on structural and cognitive criteria such as clarity, accuracy, and dialecticality, but do not address affective or creative dimensions. Yet such dimensions, including ethical reasoning, contextual sensitivity, and innovation, are increasingly central to modern educational practice. Moreover, as shown in our semantic analysis, the absence of pedagogical cues in evaluator feedback suggests a limitation in how current rubrics activate instructional framing. Future research should explore the design and fine-tuning of multidimensional rubrics that support more formative, nuanced, and pedagogically responsive feedback mechanisms. Approaches that model complex human behaviors through non-intrusive sensing and semantic annotation have yet to be adapted to capture multidimensional explanation quality.

To establish classroom value, instructor-supervised studies (e.g., randomized A/B designs) should quantify the educational impact of explanation-level evaluation, measuring short- and longer-term learning gains (pre/post and delayed), feedback comprehension, and revision quality.

Methodologically, an ablation suite varying LoRA rank (r), target modules, and fixed-epoch training (without early stopping), while retaining the existing prompt-only baseline and adding a classical Automated Essay Scoring (AES) baseline, will delineate the contribution of each component. We will maintain the released reproducibility artifacts (single training-arguments snapshot, random seeds, and full training/evaluation logs) and extend them across domains and languages.
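One way to organize such an ablation suite is to enumerate the configurations explicitly before training. The sketch below builds a grid over LoRA rank, target modules, fixed epoch counts, and seeds. All values shown (ranks, module names such as `q_proj` and `v_proj`, epoch count, seeds) are placeholders chosen for illustration and are not the settings used in this study; in a Hugging Face PEFT setup, each entry could populate a `LoraConfig` (`r`, `target_modules`), which is likewise an assumption about the training stack.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class AblationRun:
    """One configuration in the ablation grid (hypothetical schema)."""
    lora_rank: int
    target_modules: tuple
    num_epochs: int  # fixed-epoch training, no early stopping
    seed: int

    @property
    def name(self):
        modules = "-".join(self.target_modules)
        return f"r{self.lora_rank}_{modules}_ep{self.num_epochs}_seed{self.seed}"


def build_ablation_grid(ranks, module_sets, epoch_counts, seeds):
    """Cartesian product of all ablation factors, ranks varying slowest."""
    return [
        AblationRun(rank, tuple(modules), epochs, seed)
        for rank, modules, epochs, seed in product(
            ranks, module_sets, epoch_counts, seeds
        )
    ]


# Placeholder values for illustration only.
GRID = build_ablation_grid(
    ranks=[4, 8, 16],
    module_sets=[("q_proj", "v_proj"), ("q_proj", "k_proj", "v_proj", "o_proj")],
    epoch_counts=[3],
    seeds=[0, 1],
)
```

Naming each run from its factors keeps logs and training-argument snapshots traceable to a single grid cell, which fits the reproducibility artifacts described above.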

Finally, a broader comparative analysis should benchmark the evaluator against contemporary state-of-the-art LLM evaluators and classical AES baselines to contextualize robustness and validity beyond the present setup.

5. Conclusions

This study introduced CourseEvalAI, a rubric-aligned evaluation framework powered by mistral-lora. Through systematic comparison between mistral-base and mistral-lora, we observed clear gains in rubric fidelity, semantic structure, and evaluation consistency. The fine-tuned model outperformed the base version across all rubric dimensions, generating answers and explanations that were more complete, original, and aligned with formal academic standards. These improvements were consistent across both technical and argumentative tasks, confirming the efficacy of domain-specific fine-tuning in educational evaluation contexts, particularly for enhancing rubric fidelity and the evaluability of explanations.

Beyond content quality, the structure and tone of the feedback generated by the expert evaluator indicated that outputs from the fine-tuned model were more interpretable, rubric-compliant, and easier to assess. This dual gain—enhanced response quality and increased explanation-level evaluability—positions rubric-aware LLMs as reliable tools for transparent, criterion-based assessment in higher education. The absence of scoring regressions and the increase in inter-model agreement further reinforce the robustness of the approach.

Overall, this work offers a reproducible methodology for building and validating LLM evaluators in structured academic settings. By combining prompt engineering, supervised fine-tuning, and rubric-based expert scoring, the CourseEvalAI framework lays the groundwork for scalable, transparent, and pedagogically aligned evaluation pipelines. Future research should explore multidimensional rubrics, multilingual data, and multi-agent scoring architectures to improve the adaptability, equity, explanation-level accountability, and generalizability of automated assessment systems.

We established the primary findings with two human raters and two LLM judges, and we reported GPT-4 single-judge diagnostics as complementary analyses to guide future multi-rater replication.