1. Introduction

Application-controlled cloud caches implemented with fast in-memory key-value stores, like Redis and Memcached, have become ubiquitous in modern web architectures [1]. Content providers use caches to reduce latency and increase throughput, increase user engagement and profits, and reduce infrastructure costs [1,2]. Proper configuration and tuning of these caches is critical, as sub-optimal configurations lead to increased miss rates and a resulting penalty in end-to-end performance, negatively affecting the business goals: It has been reported by Amazon that a 100 ms latency penalty can lead to a 1% sales loss, and by Google that an additional 400 ms delay in search responses can reduce search volume by 0.74% [2].

A time-tested way to improve cache performance is to optimally partition the cache [1,3,4,5,6,7,8,9,10,11,12,13,14,15], for example, by dynamically partitioning the total memory between users or applications. However, some current cloud caches support only static partitioning, while others provide no control over the partitioning. Redis (Remote Dictionary Server) is an example of the former, while Memcached is an example of the latter. While several solutions targeting cloud caches have been proposed [9,10,11,12,13,14], these have not been added to industry-grade software caches due to performance concerns.

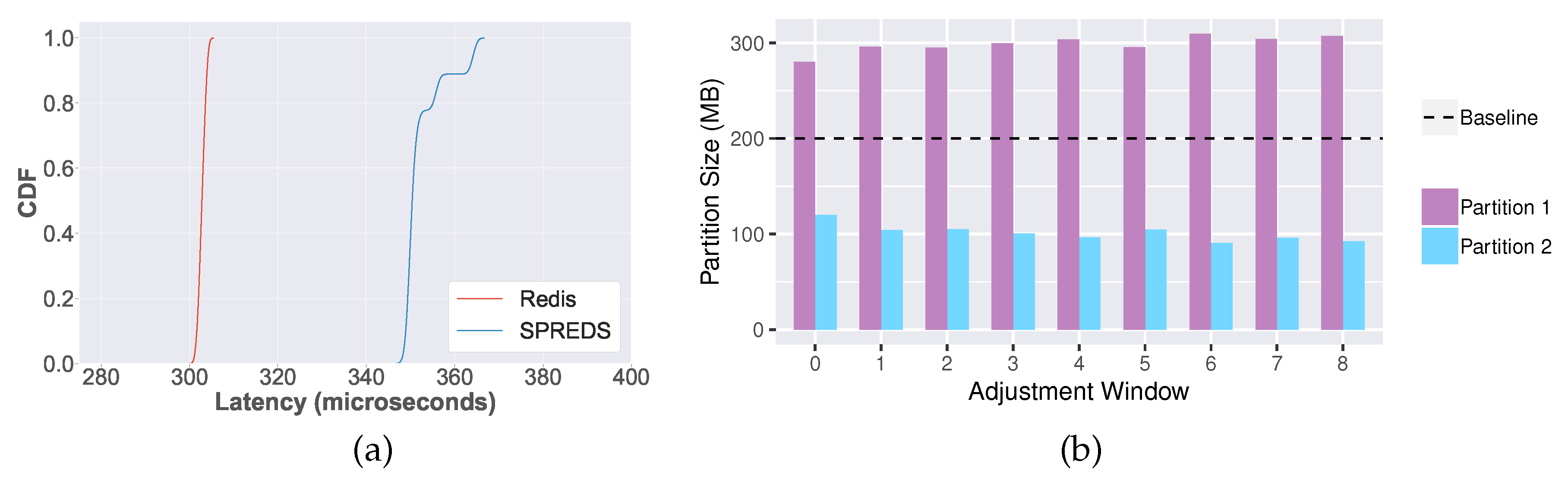

In this paper, we present a serverless computing architecture using the function-as-a-service model to optimize cloud caches for free. We implement this solution in SPREDS, a self-partitioning REDiS. SPREDS leverages modern data structures and statistical sampling methods to efficiently obtain online estimates of the real miss rate curves (MRCs) [16]. The backend performance profiles and MRCs are combined into a utility function to maximize.

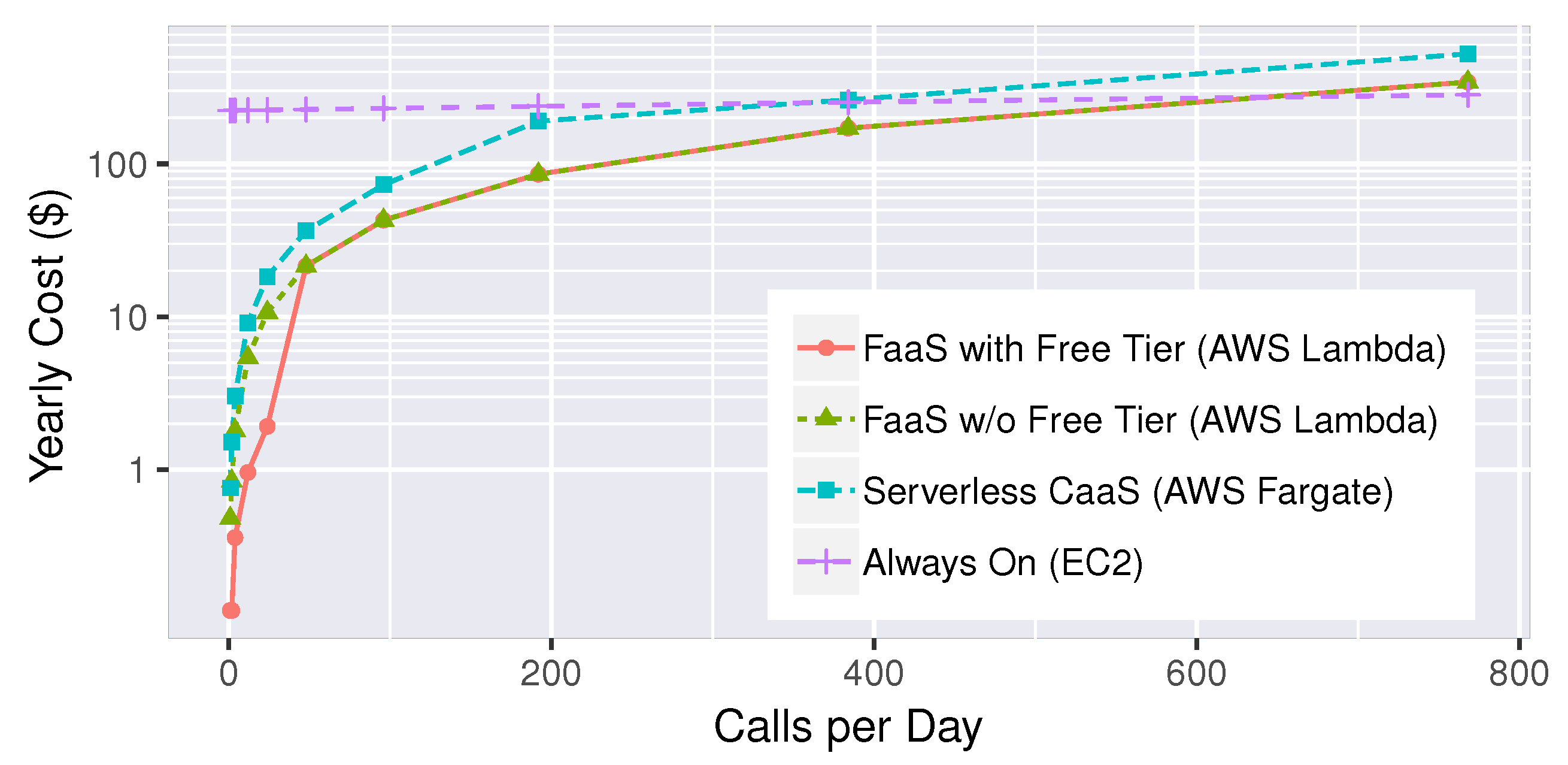

The resulting optimization problem is solved outside the cache using a serverless computing approach. Our experimental results show that the performance overhead due to monitoring the cache is low and that implementing the autonomic controller as a serverless microservice is feasible and useful. We present cloud cost calculations that show that the invocations to the controller are either free or very cheap, with the cost of running the controller as a serverless microservice being just 0.85% of the cost of the always-on alternative. Additionally, the optimization problem is solved outside of the machine running the cache and does not consume the resources of the caching nodes.

Using SPREDS as a case study, we argue that the proposed approach should be considered in future autonomic and self-* systems, as it is a low-cost and low-overhead way to calculate complex adaptation decisions applicable to systems running on public cloud providers. We end by outlining some challenges in implementing this vision and discuss how to address them.

1.1. Contributions and Paper Roadmap

This work makes the following contributions:

- We survey the most current research in the domain of self-tuning cloud caches and study the designs used by prior approaches as a way to motivate the need for a more modern, cheaper, cloud-native solution (Section 2).

- We re-visit the memory partitioning problem and model it as a mathematical optimization problem with restrictions that are specific to the multi-instance cache on a shared node studied in this paper (Section 3).

- We present a novel design for implementing autonomic cloud caches that leverages serverless computing cloud offerings to implement the autonomic controller (optimization module) at a low cost (Section 4).

- We show the feasibility of our approach through implementing SPREDS, a real implementation of our design using Redis and AWS Lambda (Section 4).

- We present real experimental results and a corresponding cost analysis that validate the usefulness of our proposal (Section 5).

- We make a case for adopting our serverless approach in other autonomic, self-tuning systems and study the challenges in realizing this vision (Section 6).

1.2. Relation to Prior Work by the Authors

The work herein builds upon prior contributions from our group [1,3,16], as described below.

In a work-in-progress paper [1] presented at the 2017 ACM/SPEC International Conference on Performance Engineering (ICPE), we formalized the memory partitioning problem for the case of multi-instance cloud caches with heterogeneous backends. SPREDS is based on an extension of that model and one of the solvers in SPREDS is based on the algorithm introduced in [1].

In a workshop paper [16] presented at the Adaptive and Reflective Middleware (ARM) workshop, which was held in conjunction with the 2017 ACM/IFIP/Usenix Middleware Conference, we described how to instrument Memcached to obtain miss rate curves (MRCs) at a low performance cost. For the current work, we adapted that design and ported it to Redis. While the engineering details differ, there are no further scientific contributions in the monitoring module, and thus, we have not listed the lightweight monitoring as a contribution in the current paper.

Finally, the present paper is an extension of our paper from the Workshop on Self-Aware Computing (SeAC), held in conjunction with Foundations and Applications of Self* Systems (FAS* 2019) [3]. As such, the contributions listed in this paper are the same ones as in [3]. However, we have extended our SeAC work with additional use cases, more detailed information on our mathematical optimization model and the genetic algorithm we proposed to solve it, a considerably larger literature review, and more experimental results.

1.3. Threats to Validity

We assume that the mathematical optimization problem to find the optimal memory partitioning can be solved in a reasonable amount of time (e.g., takes no more than 25% of the time between adaptation cycles). If solving the optimization problem takes too long, the solution proposed in this paper is not applicable.

Our solution relies on estimated miss rate curves, as getting real MRCs with a low performance overhead is not possible, to the best of the state-of-the-art knowledge in caching [17]. However, the results we present from real cloud experiments show that the accuracy of the MRCs is good enough for our purposes: SPREDS can improve performance over a static partitioning approach. Nevertheless, there may exist workloads for which the estimated curves are not good proxies of the real ones.

Automatic parameter tuning depends on being able to monitor dynamic behavior at a granularity that is useful for making informed predictions of the impact of changing specific parameters. Our solution leverages recent advances in efficient MRC estimation [16] that apply only to the caching domain. An alternative approach to be considered for future work is constructing a utility function that relies only on the metrics and information that can be obtained from system logs; this would be a zero-overhead approach, as much useful information from each system component is typically already being logged, stored, and monitored, following a modern DevOps mindset [18,19].

Finally, we argue for using a serverless computing approach in our design and present cost estimates that are considerably cheaper than the alternative of an always-on service. Whether this is actually true or not in practice depends on (1) how frequently the optimization service is invoked and (2) the costs of each of the cloud services used in its implementation. We believe the serverless approach may be the cheapest option for small and medium-sized organizations in the near future, but cheaper alternatives may arise in the long run or may already be available for larger organizations, for which an always-on service is likely a better alternative.

2. Background, Motivation, and Related Work

Key-value stores are primitive databases that support efficient insertion and lookup of data indexed by user-defined keys. In-memory key-value stores work like remote hash tables or dictionaries and can answer requests with very high throughput and low latency. At the time of this writing, the most common in-memory key-value store software products are Redis (https://redis.io) and Memcached (https://memcached.org) [20]. These products are frequently used as caches at the backend of modern web and mobile applications. Caches implemented with in-memory key-value stores are not transparent caches; the cache is invoked explicitly in the applications, serving complex business logic workflows. In this paper, we study Redis because it is the most popular key-value store as of December 2019 [20].

We show a common use case of cloud caches in Figure 1: a system that stores information (like product inventory, user profiles, and session information) in different storage backends. There is a frontend that aggregates information from these backends and presents it to the user. To improve latency and reduce pressure on the storage backends when serving user requests, the frontend typically first contacts a caching layer seeking the required information. If the data being sought is not stored in the cache (a cache miss), the application needs to contact the specific storage backend, get the data, and return it to the user. The application then contacts the cache to store a copy of the data so that future requests can be served directly from the cache. Each of these storage backends has its own unique performance profile. For example, databases supporting complex queries are much slower than object storage devices that store image files. The following is a small list that exemplifies the type of things being cached in the backends of web or mobile applications:

- User profiles, where profiles contain data pulled from several other backend systems;

- Tracking information, like user engagement counters;

- User avatars or other images shown in the frontend to the end users;

- Business reports resulting from querying multiple tables in an Online Transaction Processing (OLTP) database;

- Session information, like the per-user application system state;

- User status updates in social networks; users read the updates of their “friends”.
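The look-aside read path described above (check the cache, fall back to the backend on a miss, then store a copy in the cache) can be sketched as follows. This is only an illustration: the class name `LookAsideCache`, the in-process dictionary standing in for a Redis instance, and the `fetch_from_backend` callable are all hypothetical and are not part of SPREDS.

```python
from typing import Callable, Dict, Optional


class LookAsideCache:
    """Illustrative look-aside (explicit) cache in front of one storage backend."""

    def __init__(self, fetch_from_backend: Callable[[str], str]) -> None:
        self._store: Dict[str, str] = {}  # hypothetical stand-in for a Redis instance
        self._fetch = fetch_from_backend  # hypothetical backend accessor
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        cached: Optional[str] = self._store.get(key)
        if cached is not None:
            self.hits += 1  # served directly from the cache
            return cached
        self.misses += 1  # cache miss: contact the slower backend
        value = self._fetch(key)
        self._store[key] = value  # keep a copy for future requests
        return value

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Because the application drives both the lookup and the insertion, it remains free to apply its own admission logic before storing the fetched value.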

One important factor in the performance of a cache is how much memory it has available to store objects: The larger the cache, the higher the percentage of objects from the storage backend that it can hold and thus, the higher the likelihood of a request being a hit and not a miss.

The cache’s eviction policy determines which objects are removed from the cache to make space for new objects being added. Cloud caches support several eviction algorithms, with least recently used (LRU) or some variant of this algorithm being the most commonly used one as it is known to perform well for a wide variety of workloads. LRU assumes that recent past behavior is a good predictor of future behavior. When an object is to be evicted, it selects the object that has been used least recently because this is the one that it predicts is the least likely to be re-used in the near future. By default, Redis uses a randomized version of LRU [21] that samples x objects and evicts the one that was accessed least recently; x is configurable and set to five by default. Since version 3, the LRU implementation of Redis also takes a pool of good candidates for eviction.
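A minimal sketch of the sampled eviction idea just described: on eviction, draw x random keys and remove the one accessed least recently. It illustrates the principle only; it is not Redis code, it omits the candidate pool, and the class and attribute names are hypothetical.

```python
import random
from typing import Dict, Optional


class SampledLRUCache:
    """Approximate LRU: evict the least recently used key among a random sample."""

    def __init__(self, capacity: int, samples: int = 5,
                 seed: Optional[int] = None) -> None:
        self.capacity = capacity
        self.samples = samples  # the "x" of the text; five by default
        self.values: Dict[str, str] = {}
        self._last_access: Dict[str, int] = {}
        self._clock = 0  # logical clock instead of wall time
        self._rng = random.Random(seed)

    def _touch(self, key: str) -> None:
        self._clock += 1
        self._last_access[key] = self._clock

    def get(self, key: str) -> Optional[str]:
        if key not in self.values:
            return None
        self._touch(key)
        return self.values[key]

    def put(self, key: str, value: str) -> None:
        if key not in self.values and len(self.values) >= self.capacity:
            self._evict_one()
        self.values[key] = value
        self._touch(key)

    def _evict_one(self) -> None:
        # Sample up to `samples` keys and evict the one with the oldest access.
        k = min(self.samples, len(self.values))
        candidates = self._rng.sample(list(self.values), k)
        victim = min(candidates, key=lambda c: self._last_access[c])
        del self.values[victim]
        del self._last_access[victim]
```

With a sample size at least as large as the cache, the sketch degenerates to exact LRU; smaller samples trade eviction accuracy for lower bookkeeping cost.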

The other important factor influencing the performance of a cache is the workload characteristics [22,23], like the skewness of the distribution of the popularity of the objects and the degree of temporal locality present in the accesses to the objects in the cache. These workload characteristics are application-dependent and may be dynamic; thus, the caches must be able to self-adapt to these changes.

2.1. How Important Is (Optimal) Memory Partitioning in Cloud Caches?

To make the best use of CPU resources, modern microservices architectures favor shared-nothing and stateless approaches to distributed systems. A common scenario is for multiple Redis instances to be co-located in the same machine (see Figure 1). Each of these instances serves a different workload or application and can be configured independently. In this scenario, the machine’s memory becomes an important resource that must be adequately partitioned between the cache instances.

We surveyed recent papers tackling the problem of self-partitioning cloud caches [9,10,11,12,13,14] and found that intelligent cloud cache partitioning can lead to significant performance gains: Depending on the service-level objectives and resulting utility function, these improvements can be in terms of higher throughput, higher cache hit rate, and reduced end-to-end latency.

Table 1 and Table 2 summarize our observations. Based on the results presented by the prior studies and on our own observations, we posit that smart and adaptive memory partitioning in caches supporting different workloads leads to significant performance gains. Sadly, memory partitioning in Redis is currently only static and manual. The case of Memcached is even worse as it gives more memory to the application issuing more requests, regardless of whether this is good for the overall system [24].

2.2. Architectures for Self-Partitioning Cloud Caches

Self-adjusting capabilities that involve solving complex optimization problems can impose unacceptable performance overheads on the system being tuned. We argue that a serverless architecture approach can be used to overcome this limitation. To better explain why this is the case, we first study the architectures used in prior self-partitioning caches. We summarize their architectural decisions regarding where to locate the monitoring engine and the autonomic controller in Table 2 and analyze the limitations of these architectures next.

Prior projects have used one of the following approaches to limit the performance overhead introduced by solving the optimization problem:

- A1: Make small, gradual changes to the memory allocations exploring the configuration space to find the optimal partitioning. This approach removes the CPU cost of solving the optimization problem but introduces frequent resizing costs as the internal state and data structures of the cache need to be modified for each small step in the exploration of the configuration space. Cliffhanger [12] is an example of a system that makes small, continuous, costly changes.

- A2: Simplify the problem by limiting the number of partitions and making other (unrealistic) assumptions about the workload, thus ensuring that solving the optimization problem becomes tractable without incurring high computation costs. This approach has been suggested as a way to solve the optimization problem within the cloud cache but without consuming excessive CPU resources, which would slow down the cache requests. Dynacache [10] is an example that simplifies the problem to make it more tractable.

- A3: Take the solving of the optimization problem outside of the caching software and move it to an external controller. This is the most common approach in the literature of self-adaptive systems (e.g., see [1,25,26]).

Approach A3 is the better option because it does not incur frequent resizing costs (limitation of A1), nor does it make unrealistic assumptions about the workload (limitation of A2). For this reason, we opt for approach A3 in SPREDS.

It should be noted that while A3 overcomes the performance and accuracy limitations of A1 and A2, it may incur additional costs related to running the external controller. We are not aware of prior work comparing the monetary costs of running an external controller. These costs can differ depending on the architectural approach used to implement A3 in a real system:

(a) Always-on shared service: An always-on shared cloud adaptation service can be offered for free or at a reasonable cost by the cloud or a third-party provider. The costs are shared by tenants or absorbed by the provider seeking a competitive advantage. An example of a recent product in this domain is the self-indexing service for the Microsoft Azure SQL DB [27]. However, this approach lacks flexibility and does not encourage innovation in the tuning algorithms (the service provider controls the algorithm and tenants cannot test improved adaptation algorithms). Furthermore, some utility functions (like those that seek to minimize operational costs) may go against the provider’s business interests and the providers would not offer them to their tenants.

(b) Always-on client-managed service: The cloud tenant runs the controller as one more always-on service in their system. For example, in the database domain, OtterTune [25] has been proposed as an external database tuning system that can tune database performance as well as human experts can. The costs of the always-on client-managed service approach can be too high for small or medium organizations if the service is infrequently used. In addition, it has the added overhead of having to manage an additional online service and thus, constitutes an option only suitable for large enterprises.

(c) Serverless microservice: The approach argued for in this paper is deploying the controller as a serverless microservice using a Function-as-a-Service (FaaS) offering like Amazon Web Services (AWS) Lambda (https://aws.amazon.com/lambda/) or Azure Functions (https://azure.microsoft.com/en-us/services/functions/). FaaS offerings are gaining increasing attention in the community as they let tenants run code without provisioning or managing servers and pay only for the compute time they consume [28]. The solver is an external process owned by the client and implemented using a serverless architecture. This on-demand approach avoids service over-provisioning and reduces the costs of operating the controller. Furthermore, it lets the tenants tailor the utility function and optimization-solving algorithm according to their specific needs.

The details of our proposal are presented in Section 4. In Section 5, we experimentally validate the approach and present a cost analysis that shows that the always-on client-managed service approach is much more expensive than the serverless approach advocated for in this paper.

2.3. Why Aren’t Current Cloud Caches Already Self-Partitioning?

Some of the projects described in Table 1 involved collaborations with industry (namely, Facebook, Akamai, and Microsoft). It is possible that these (and other) companies have self-partitioning cloud caches being used in production. However, these adaptation mechanisms have not been added to the open source versions of Redis or Memcached.

Approaches that gradually explore the configuration space hoping to find an optimal solution (e.g., [12]) incur a high penalty when the cost of reconfiguration is not negligible, as has been observed in the current implementation of the resizing mechanism of Redis [1]. Furthermore, these solutions are tailored to a specific problem and do not scale to self-tuning other knobs of the caching software. A better method involves utility-driven approaches, which are flexible and can support diverse service-level objectives. However, these are deemed unscalable due to the high CPU consumption of the algorithms used to solve the optimization problem [29]. We believe the serverless architecture proposed in this paper can overcome both challenges and can facilitate adding self-adaptation functionality to cloud caches and other software in the near future.

2.4. Other Related Work

Earlier in this section, we analyzed the most relevant prior work in self-partitioning cloud caches; the results of our analysis were presented in Table 1 and Table 2. In this subsection, we discuss other prior research that is related to SPREDS.

Other uses of workload-driven partitioning schemes for caches include partitioning flash-based caches into hot/cold areas to support efficient data compression [30] or to determine the right number of replicas of each partition [31]. In addition to improving performance, others have included the notions of fairness, isolation, and strategy proofness in their partitioning schemes [24,32]; SPREDS was designed for the case in which the applications belong to the same tenant and do not try to game the system. Another consideration that can be incorporated into the optimization problem is the penalty associated with memory reallocation during partitioning cycles; solutions like the one used in pRedis [33], which factor this into the optimization problem, are orthogonal to the architectural approach proposed in this paper.

A few self-tuning database products and services have been proposed by academia and industry. OtterTune [25] is a tuning service for MySQL and PostgreSQL that automates the process of finding good settings for a database’s configuration, reusing training data gathered from previous tuning sessions. Das et al. [27] describe an auto-indexing service for Microsoft Azure SQL Database. Oracle recently released their Autonomous (cloud) Database (https://www.oracle.com/database/autonomous-database.html) and ScyllaDB offers a database with limited self-managing properties (https://www.scylladb.com/); however, the latter is a rule-based tuning solution [34].

Idreos et al. [35] recently introduced the concept of design continuums for the data layout of key-value stores and present a vision of self-designing key-value stores that automatically choose the right data layout for a specific workload and memory budget. This is a related but different problem than the one used as a case study in this paper; both self-* approaches can co-exist in the same key-value store.

In general, the idea of offering adaptations “as a service”, as a path to making autonomic systems a reality, has been argued before [1,26]. However, a traditional always-on service makes sense when the service is provided by the cloud or a third-party provider, or for large organizations. In this paper, we propose a variant of this idea, with a serverless microservice architecture, and provide a proof-of-concept implementation highlighting its usefulness.

3. The Memory Partitioning Problem

We consider a system where a virtual or physical machine hosts $n$ instances of the caching software, each serving a different application as depicted in Figure 1; these applications compete for the allocation of the total memory, $M$. The amount of memory assigned to each application $i$ is denoted by $m_i$. Our model also works for a multitenant architecture, as long as the different applications sharing the cache belong to the same organization.

Table 3 contains a reference of the parameters used in the model definition.

When application $i$ needs some data, it first looks for it in the cache. If the sought data is not there (i.e., a cache miss occurs), the application obtains the desired information from its corresponding storage backend. Each backend has its own performance profile; i.e., its own average latency to access the backend, $l_i^{b}$. For example, a database server used to build user profiles is likely slower at answering requests than a service that generates unique user identity avatars based on the client’s username or IP address (e.g., like GitHub’s identicon).

After a cache miss, the data is typically inserted in the cache (after making space for it by evicting less valuable data, if necessary) so that it will be available at the cache for future requests. However, as these are explicit—not transparent—caches, the application is free to implement a more complex admission logic.

We consider memory as the only shared resource, ignoring the sharing of CPU. In-memory key-value stores are memory-bound, not CPU-bound. This has been reported by Redis (http://redis.io/topics/faq) and observed in real Memcached deployments [12].

In this work, the goal is Pareto efficiency: fully utilize the memory, compute the ideal memory allocation $(m_1, \ldots, m_n)$, and achieve the highest overall utility given individual utility functions, $U_i$s, and the total memory constraint $M$. This can be expressed as the following optimization problem:

$$
\begin{aligned}
\underset{m_1, \ldots, m_n}{\text{maximize}} \quad & \sum_{i=1}^{n} U_i(m_i, w_i) \\
\text{subject to} \quad & \sum_{i=1}^{n} m_i = M, \\
& m_i \geq m_i^{\min}, \quad i = 1, \ldots, n,
\end{aligned}
\tag{1}
$$

where $U_i(m_i, w_i)$ is the utility function of application $i$ as a function of its assigned memory $m_i$ and configured weight $w_i$ (which lets us indicate that one application is more or less important). $m_i^{\min}$ is the minimum memory assignment for application $i$; it can be set to zero if it is acceptable for the system to decide not to cache the objects of some application.

We assume a non-adversarial model in which the applications are not trying to game the system. This is a reasonable assumption when all the applications belong to the same cloud client. Given that we consider a non-adversarial model, we do not seek strategy proofness [24]. An application could issue workloads that lead to a higher memory assignment for itself, but this would come at the cost of reduced overall system performance.

For the utility function, we consider improving average access latency to objects in the cache to be our most important optimization metric. For eviction algorithms that fulfill the inclusion principle [36] (e.g., LRU), giving more memory to the cache means that the hit rate will either stay the same or improve. Thus, the way to optimize the performance for a single application is to give it as much memory as possible. As the memory is a shared resource that the applications are competing for, we devise a utility function that combines each of the utilities as a function of how much memory we have assigned to the application, $m_i$, weighted by a user-defined weight ($w_i$) so that the tenant can declare that improving the performance of one application is more important than for others.

We posit that improving the hit rate of one application may not be as useful as improving the hit rate of another application due to differences in the performance profiles of the corresponding backends. For example, all other things being equal, if one application has a slow backend (e.g., one that processes slow, multi-table, Structured Query Language (SQL) queries), increasing the hit rate of the cache serving that application is more useful than increasing the hit rate of a cache serving a fast backend (e.g., a NoSQL database that stores session information). For this reason, for each application we calculate its effective access time (EAT) and weigh it by the inverse of the application’s access frequency ($f_i$). The formula for the EAT is calculated using the classic approach devised for two-level memory systems [37], where we consider the access latency to the two levels (the cache and the backend storage) and the probability that an object or data item will be found in the fast memory tier, which in our case is given by the cache hit rate. The access latency to these two levels is denoted by $l_i^{c}$ (object latency in the caching system) and $l_i^{b}$ (object latency in the backend system, including the time to process a cache miss). For a given application, the cache hit rate is a function of how much memory the application has been assigned, $m_i$, and can be directly obtained from the application’s miss rate curve (MRC). In other words, the $\mathrm{EAT}_i$ is the time that it takes, on average, to access an object in application $i$. We define the following per-application utility function:

where $h_i(m_i)$ is the hit rate of application $i$ as a function of assignment $m_i$, and $f_i$ is the frequency of requests of $i$.
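As a hedged illustration of the effective access time described above, the sketch below applies the classic two-level formula: hit rate times cache latency plus miss rate times backend latency. It does not reproduce the paper's utility function. The function names, the representation of a miss rate curve as sorted `(memory, miss_rate)` points, and the step-wise lookup are all assumptions made for this example.

```python
from bisect import bisect_right
from typing import List, Tuple


def hit_rate_from_mrc(mrc: List[Tuple[int, float]], memory_mb: int) -> float:
    """Hit rate at `memory_mb`, read from MRC points sorted by memory size.

    Assumption: step-wise lookup that uses the largest measured size that
    does not exceed `memory_mb`; below the smallest point, assume all misses.
    """
    sizes = [size for size, _ in mrc]
    idx = bisect_right(sizes, memory_mb) - 1
    if idx < 0:
        return 0.0
    return 1.0 - mrc[idx][1]


def effective_access_time(hit_rate: float,
                          cache_latency_ms: float,
                          backend_latency_ms: float) -> float:
    """Classic two-level EAT: hits pay the cache latency, misses the backend latency.

    `backend_latency_ms` is taken to include the time to process a cache miss.
    """
    return hit_rate * cache_latency_ms + (1.0 - hit_rate) * backend_latency_ms
```

For instance, with a hit rate of 0.75, a 1 ms cache, and a 21 ms backend, the sketch yields 0.75 × 1 + 0.25 × 21 = 6 ms, which shows why a slow backend makes each additional hit more valuable.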

Solving the Optimization Problem

Consider problem (1): If the $U_i$s are quasi-linear, the resulting optimization can be solved by solving a sequence of feasibility problems, with a guaranteed precision of $\epsilon$ in $\lceil \log_2 (R/\epsilon) \rceil$ iterations, where $R$ is the length of the search interval. If the $U_i$s are concave, we have a convex optimization problem easily solved with off-the-shelf solvers; for example, using gradient-based methods. When the $U_i$s are discontinuous, non-differentiable, or non-convex, alternative approaches are required.
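The "sequence of feasibility problems" mentioned above is usually organized as a bisection over the achievable utility level. The sketch below shows only that outer loop. The feasibility oracle `is_feasible` is a hypothetical callable, assumed to be monotone (true up to the optimum, false above it), and it would hide the actual per-level feasibility problem.

```python
from typing import Callable, Tuple


def bisect_utility_level(is_feasible: Callable[[float], bool],
                         low: float, high: float,
                         eps: float) -> Tuple[float, int]:
    """Bisection over the utility level t.

    Assumes is_feasible(low) is True and is_feasible(high) is False.
    Returns a feasible level within eps of the optimum and the number of
    feasibility problems solved.
    """
    iterations = 0
    while high - low > eps:
        mid = (low + high) / 2.0
        if is_feasible(mid):
            low = mid   # level mid is achievable: search above it
        else:
            high = mid  # level mid is not achievable: search below it
        iterations += 1
    return low, iterations
```

Each iteration halves the search interval, so an interval of length R reaches precision eps after about log2(R/eps) feasibility checks, rounded up.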

One alternative is to use a probabilistic search, in which a model generates candidate points in the search for an optimum. An adaptive mechanism may be added to the generative model to improve the performance of the sequentially generated candidates. We proposed one such approach to memory partitioning in earlier work [1] and implemented it in SPREDS (see Algorithm 1). This genetic algorithm works for any partitioning problem as it makes no assumption of the shape of the utility curves. We next describe this approach, which is one of the two solvers implemented in SPREDS.

Let

with

, satisfying

and

. We assume that the variability of

can be well modeled by a Dirichlet distribution. The Dirichlet distribution

has a density function:

expected value

, and variance

, where

. We model the variability with a Dirichlet distribution

with parameter vector

. Then, we define

where

.
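For reference, these are the standard textbook expressions for the Dirichlet density, expected value, and variance that the passage above refers to. The notation is assumed here and may differ from the authors': $x_i$ denotes the share of memory given to application $i$, $\alpha_i > 0$ the distribution parameters, and $\alpha_0$ their sum.

```latex
\begin{align}
f(x_1, \ldots, x_n; \boldsymbol{\alpha})
  &= \frac{\Gamma(\alpha_0)}{\prod_{i=1}^{n} \Gamma(\alpha_i)}
     \prod_{i=1}^{n} x_i^{\alpha_i - 1},
  \qquad x_i \geq 0, \quad \sum_{i=1}^{n} x_i = 1, \\
\mathbb{E}[x_i] &= \frac{\alpha_i}{\alpha_0}, \\
\operatorname{Var}[x_i]
  &= \frac{\alpha_i (\alpha_0 - \alpha_i)}{\alpha_0^{2} (\alpha_0 + 1)},
  \qquad \alpha_0 = \sum_{i=1}^{n} \alpha_i .
\end{align}
```

One consequence that is useful for search procedures: multiplying every $\alpha_i$ by a common factor $c > 1$ leaves the expected value unchanged and shrinks the variance by the factor $(\alpha_0 + 1)/(c\,\alpha_0 + 1)$, so samples concentrate around the same mean.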

We propose the following general approach, inspired by evolutionary strategies, for solving problem (1) in the case of non-convex and non-quasi-linear function s. We begin by setting to for all i. We then generate K points for . Note that points generated in this way satisfy all restrictions of problem (1).

Using points in set

, we construct the following prior mixture density for

:

where

Z is a normalization constant and

is a non-negative function with finite mass concentrated around

(e.g., a radial basis function centered at

). We proceed by sampling

from

g and generating points

, where

is the vector with elements

for

. Note that while random variables

and

have the same expected value,

has the effect of reducing the variance of

by a factor of

.

The above generative procedure corresponds to the following Bayesian hierarchical structure:

where

G is the distribution function corresponding to mixture density

g.

Our method proceeds by alternatively generating parameters —the exploration stage—and generating J points of conditioned on —the exploitation stage. The prior distribution for is then updated using the K best cumulatively observed points, s, and the procedure is repeated until a satisfactory solution is found. Algorithm 1 provides the details of the proposed procedure.
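The alternation between exploration and exploitation can be illustrated with the simplified sketch below. It is not the authors' Algorithm 1. As a stated simplification, it centers each round on one randomly chosen elite point instead of sampling from the mixture density, and it maps Dirichlet samples to allocations by distributing the memory left after the minimum assignments. The function names and all parameter defaults are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple


def sample_dirichlet(alpha: Sequence[float], rng: random.Random) -> List[float]:
    """Draw one Dirichlet sample by normalizing independent gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def adaptive_search(utility: Callable[[List[float]], float],
                    total_memory: float,
                    min_memory: Sequence[float],
                    k_best: int = 5, j_points: int = 20, rounds: int = 30,
                    concentration: float = 50.0,
                    seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    n = len(min_memory)
    spare = total_memory - sum(min_memory)

    def to_alloc(x: Sequence[float]) -> List[float]:
        # Shares sum to one, so allocations sum to total_memory and
        # every application keeps at least its minimum.
        return [lo + spare * xi for lo, xi in zip(min_memory, x)]

    # Initial elite set: K points drawn uniformly over the simplex.
    elite = []
    for _ in range(k_best):
        x = sample_dirichlet([1.0] * n, rng)
        elite.append((utility(to_alloc(x)), x))
    elite.sort(key=lambda p: p[0], reverse=True)

    for _ in range(rounds):
        # Exploration (simplified): choose a center among the elite points.
        _, center = rng.choice(elite)
        alpha = [max(1e-2, concentration * c) for c in center]
        # Exploitation: draw J points concentrated around that center.
        for _ in range(j_points):
            x = sample_dirichlet(alpha, rng)
            elite.append((utility(to_alloc(x)), x))
        # Keep only the K best points observed so far.
        elite.sort(key=lambda p: p[0], reverse=True)
        elite = elite[:k_best]

    best_utility, best_x = elite[0]
    return to_alloc(best_x), best_utility
```

Because every candidate is a scaled Dirichlet sample, each one satisfies the memory constraints by construction, so the search never needs a repair or rejection step.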

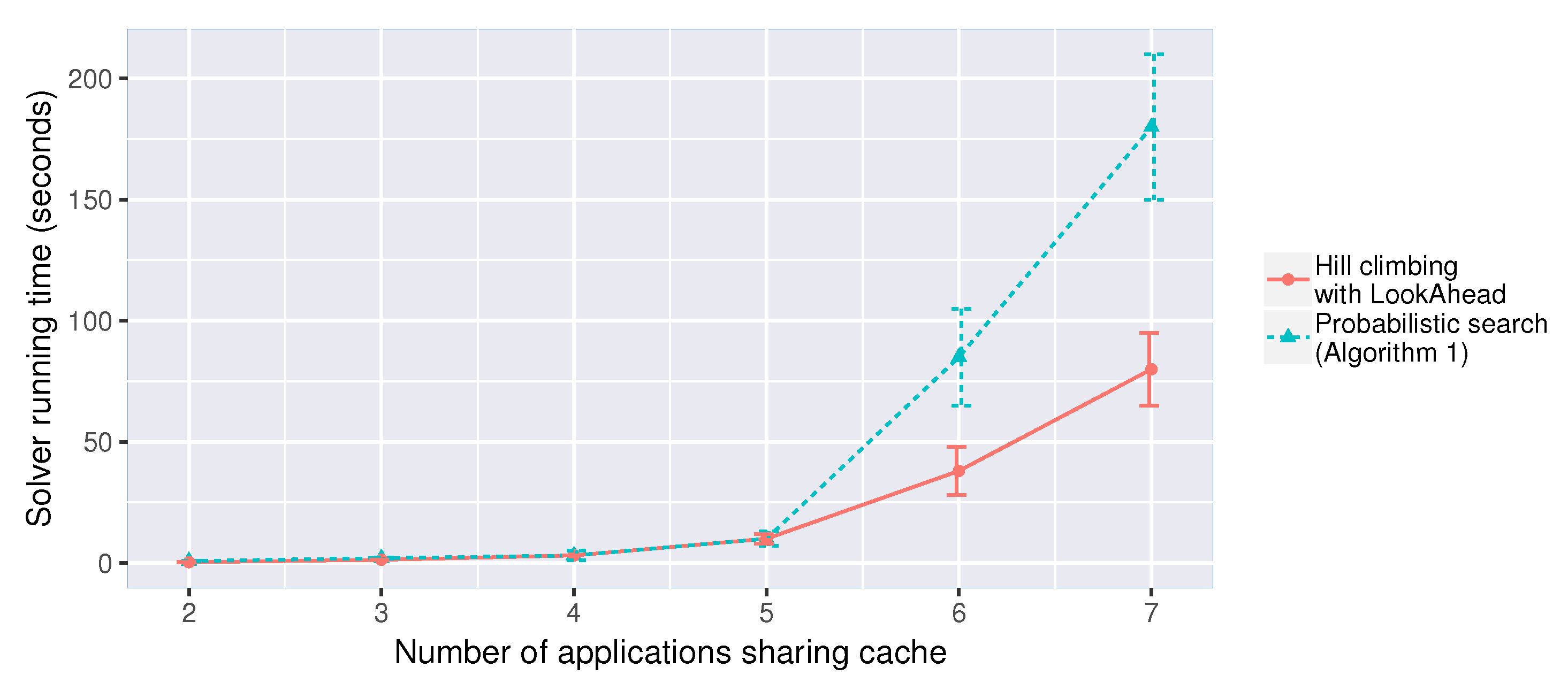

We also implemented a hill-climbing algorithm combined with a LookAhead approach [7]. We added the LookAhead approach so that this algorithm can deal with non-convex utility curves; the genetic algorithm is applicable to any shape of the utility function. SPREDS considers the number of partitions and chooses the solving method depending on the complexity of the problem. Based on experimental results presented in Section 4.3, we heuristically choose between both algorithms as follows. For deployments with a few partitions, we use the proposed genetic algorithm. For deployments with a larger number of partitions, we use the hill-climbing solver. Intelligently choosing the solver is outside of the scope of this paper [1,38].
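To make the hill-climbing idea concrete, the sketch below moves fixed-size memory chunks between applications while the total utility improves. The look-ahead shown here is a simplified stand-in, not the LookAhead algorithm of [7]: it merely tries transfers of up to `lookahead` chunks at once so that a flat or non-concave stretch of a utility curve can be crossed. All names and defaults are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def hill_climb(utility: Callable[[List[int]], float],
               allocation: Sequence[int],
               min_memory: Sequence[int],
               chunk: int,
               lookahead: int = 4,
               max_passes: int = 1000) -> Tuple[List[int], float]:
    current = list(allocation)
    best = utility(current)
    for _ in range(max_passes):
        improved = False
        for donor in range(len(current)):
            for receiver in range(len(current)):
                if donor == receiver:
                    continue
                # Try moving 1..lookahead chunks from donor to receiver.
                for step in range(1, lookahead + 1):
                    amount = step * chunk
                    if current[donor] - amount < min_memory[donor]:
                        break  # donor would fall below its minimum
                    candidate = list(current)
                    candidate[donor] -= amount
                    candidate[receiver] += amount
                    value = utility(candidate)
                    if value > best:
                        current, best, improved = candidate, value, True
                        break  # accept the first improving transfer
        if not improved:
            break  # local optimum: no single transfer helps
    return current, best
```

Each accepted move transfers memory between two applications, so the total allocation is preserved throughout and only the split changes.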

Algorithm 1: Probabilistic adaptive search

4. Design and Implementation of SPREDS

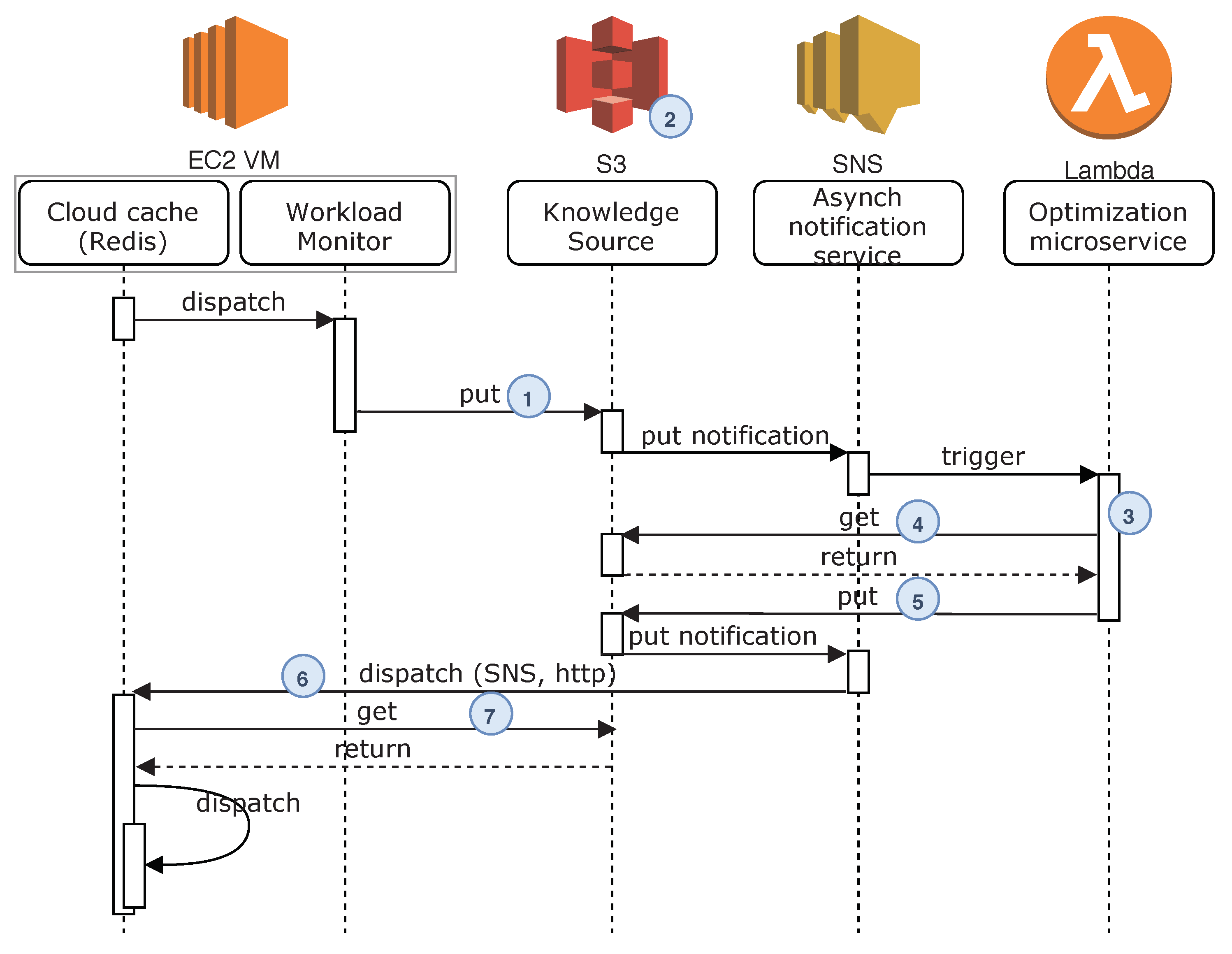

We map our design of a self-partitioning cloud cache to a MAPE-K loop [39] with its corresponding monitor, analyze, plan, and execute functions.

The monitoring component runs on the same machine as the cache and can be part of the cache or an external sidecar [40] microservice. This component uses statistical sampling techniques to minimize the monitoring impact. The monitor stores every N observations in a configurable cloud location. When a new set of observations is stored, a calculate new adaptation event is triggered. The size of the set of observations, N, is configurable and lets the user decide how frequently system adaptations are triggered, that is, how frequently to calculate the optimal memory configuration and to re-partition the cache memory if necessary. Criteria for choosing N include the cache throughput and how dynamic the application workloads are.
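The batching behavior of the monitor can be sketched as follows. The class name and the `store` callback are hypothetical; in a real deployment the callback would upload the batch to the configured cloud location, and that upload is what would trigger the calculate new adaptation event.

```python
from typing import Callable, List


class BatchingMonitor:
    """Buffer sampled observations and hand them off in batches of size n."""

    def __init__(self, n: int, store: Callable[[List[str]], None]) -> None:
        if n <= 0:
            raise ValueError("n must be positive")
        self.n = n
        self.store = store          # hypothetical upload hook
        self.buffer: List[str] = []

    def observe(self, sample: str) -> None:
        """Record one sampled request; flush once n samples are buffered."""
        self.buffer.append(sample)
        if len(self.buffer) >= self.n:
            batch, self.buffer = self.buffer, []
            self.store(batch)
```

A small N makes the system react quickly to workload changes at the price of more frequent controller invocations; a large N does the opposite.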

The controller is implemented as a serverless microservice. It performs the analyze phase when launched in response to a calculate new adaptation event. In the SPREDS implementation, this microservice solves the mathematical optimization to determine how to best partition the cache memory according to the specific set of observations captured by the monitor.

The controller generates an adaptation plan to implement the solution and stores it on a specific cloud storage location. The action of storing the adaptation plan on the cloud storage triggers an execute adaptation event, which is then received by the cache. When executed, this plan re-partitions the cache according to the most current (optimal) solution.

In our design, the knowledge source is one or more locations (e.g., buckets or directories) in a cloud storage system managed by the provider. This is where the system stores the captured metrics, monitoring data, and adaptation plan. Alternatively, one or more specialized databases could be used for this purpose. For example, the Prometheus (https://prometheus.io/) time series database is commonly used in cloud-native systems to store metrics analyzed by DevOps teams.

To validate our design, we implemented SPREDS as a modified version of the Redis key-value store that leverages several AWS services to periodically self-partition the memory, seeking to maximize overall system utility, as depicted in Figure 2. The current implementation of SPREDS extends Redis version 4. The Amazon Web Services products used in SPREDS are described in Table 4.

4.1. Summary of How SPREDS Works and Outline for the Remainder of the Section

SPREDS captures a very small percentage of the requests it receives. The sampled requests are sent in real time to the workload monitor (Section 4.2). The monitor is an external process running on the cache node; it temporarily stores the samples until a configurable number, N, of samples has been received. The monitor then stores the N samples remotely in an S3 bucket. This triggers a Simple Notification Service (SNS) event that launches an AWS Lambda function to run the solver, which in turn finds the optimal partitioning of the cache using one of the two solvers (Section 4.3). Based on the solution to the optimization problem, the Lambda function generates an adaptation plan to implement the partitioning. This plan is the output of the Lambda function, which stores it in an S3 bucket. Adding a new file to the S3 bucket triggers an execute adaptation event, which is delivered asynchronously to the cache node using an SNS notification. When the cache receives the adaptation plan, it reconfigures itself according to the optimally calculated cache partitions (Section 4.4). This loop runs continuously so that the cache can adapt to changes in workload or application demand.
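As a rough illustration of the entry point of such a Lambda function, the sketch below extracts the bucket and object key of a newly stored batch from an S3 notification delivered through SNS. It assumes the standard AWS event layout (the S3 event serialized as JSON in the SNS `Message` field, with URL-encoded object keys); the function names and the returned dictionary are hypothetical, and the solver call itself is omitted.

```python
import json
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus


def s3_objects_from_sns_event(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs for every S3 object referenced in the event."""
    objects: List[Tuple[str, str]] = []
    for record in event.get("Records", []):
        # The S3 notification arrives as a JSON string inside the SNS message.
        s3_event = json.loads(record["Sns"]["Message"])
        for s3_record in s3_event.get("Records", []):
            bucket = s3_record["s3"]["bucket"]["name"]
            # Object keys are URL-encoded in S3 event notifications.
            key = unquote_plus(s3_record["s3"]["object"]["key"])
            objects.append((bucket, key))
    return objects


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Hypothetical Lambda entry point: locate the batches to be solved."""
    batches = s3_objects_from_sns_event(event)
    # A real controller would download each batch, run the solver, and
    # write the adaptation plan to the output bucket here.
    return {"batches": batches}
```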

4.2. Workload Monitor

In earlier work, we instrumented Memcached to construct online miss rate curves (MRCs) [16]. We adopted this same approach in SPREDS, with minor changes due to the difference in internal implementation of Redis versus Memcached. To the best of our knowledge, this is the first implementation of the SHARDS [17] MRC estimation algorithm on Redis. Next, we discuss some of the main challenges in the implementation of the lightweight monitoring approach used in SPREDS.

In computing, caches are used to accelerate access to objects stored in slower memory tiers. For this reason, caches are designed to be extremely fast when answering requests. It is important that any new functionality added to the caching software has minimal or no latency overhead. In our implementation, we move the monitoring of each request out of the critical path by implementing the monitoring agent as an external process that communicates with the cache through a high-performance asynchronous inter-process communication library (ZeroMQ, https://zeromq.org).

Another challenge is the trade-off between the sampling frequency and the accuracy of the metrics obtained. We use uniform random spatial sampling [12,17,41] to keep track of caching metrics with a low overhead. A function of the hash value of the object determines whether the object should be monitored or not, ensuring that all accesses to the same object are always monitored but only accesses to a small subset of the objects are tracked. The resulting reference stream is a scaled-down, representative, and statistically self-similar version of the original reference stream [17,41,42]. Cache metrics can then be scaled up to approximate the metrics of the full reference stream [17,41]. The overhead introduced by the sampling method is very low for key-value stores, as these already hash the object keys before inserting them. This hashing operation can be used to implement a sampling filter [17] with sampling rate $R = T/P$ using the following operation:

$$\mathrm{hash}(\mathit{key}) \bmod P < T \qquad (7)$$

where T and P are configurable parameters and hash is the hashing function used by the key-value store to locate an object based on its user-defined key. A reference to an object in the cache is sent to the monitoring function if and only if it satisfies the condition in (7). Each object that passes the sampling filter condition is monitored, and it represents $1/R$ objects in the original object request stream. To generate accurate estimates of the miss rate curves (MRCs) based on this small sample, we use the SHARDS algorithm [17]. As reported by Waldspurger et al. [17], SHARDS can process up to 17M requests per second and build MRCs that closely approximate the original MRC, with a constant memory footprint. SPREDS generates one MRC for every cache partition (each of which corresponds to a workload or application).
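The hash-based sampling filter can be sketched in a few lines. This is a minimal illustration that assumes the usual SHARDS-style condition (hash of the key modulo P compared against T); the BLAKE2 hash is a stand-in for whatever hash function the key-value store already computes, and the function names are hypothetical.

```python
import hashlib


def key_hash(key: str) -> int:
    """Stand-in 64-bit hash; a real store would reuse its own key hash."""
    digest = hashlib.blake2b(key.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def is_sampled(key: str, T: int, P: int) -> bool:
    """Spatial sampling filter: a key is either always or never monitored."""
    return key_hash(key) % P < T


def scale_up(sampled_count: int, T: int, P: int) -> float:
    """Scale a count measured on the sample to the full request stream."""
    return sampled_count * P / T
```

Because the decision depends only on the key, every access to a sampled object is observed, which is what preserves reuse distances in the sampled stream. With, say, `P = 1 << 24` and `T = P // 100`, roughly 1% of distinct keys pass the filter, and each sampled object stands for about 100 objects in the original stream.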

4.3. Optimization (Solver)

We use two AWS Lambda functions, one for each of the solvers in SPREDS. AWS Lambda is a Function-as-a-Service offering from Amazon Web Services that lets users run on-demand cloud functions that can be directly invoked or triggered by events such as adding a new file to S3. AWS Lambda charges per function invocation and as a result is typically cheaper than paying for an always-on service running on a dedicated virtual machine or container.

The first Lambda function finds a solution to the optimization problem using Algorithm 1. The second function finds a solution to the problem using a local search (hill-climbing) algorithm. We use the following heuristic to choose between the two solvers: The genetic algorithm is used when the problem is small (≤7 applications sharing the cache); otherwise, we use the hill-climbing solver. We base this heuristic on observations from exploratory experiments (see Figure 3): The performance of the genetic solver degrades more rapidly than that of the hill-climbing algorithm as the complexity of the optimization increases. The workloads for each partition were chosen by randomly selecting sub-traces from the Yahoo workload; experiments with other traces yielded similar results.

4.4. Adaptation Plan and Execution

The adaptation plan instructs how to repartition the cache according to the new solution to the optimization problem. Redis supports online manual repartitioning through CONFIG SET commands, which can be communicated to the cache instances through an HTTP connection. We leverage these commands and express the adaptation plan as a set of CONFIG SET commands that change the partition sizes to match the solution found by the solver.
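One way to picture an adaptation plan is as a mapping from each partition to the command that resizes it. The sketch below assumes, purely for illustration, that each partition is bounded by Redis's standard `maxmemory` setting; the source does not state which configuration parameter SPREDS changes, and the function name and plan layout are hypothetical.

```python
from typing import Dict


def build_adaptation_plan(total_bytes: int, fractions: Dict[str, float]) -> Dict[str, str]:
    """Translate solver output (memory fractions) into CONFIG SET commands.

    Assumption: every partition is capped through the `maxmemory` parameter.
    """
    if abs(sum(fractions.values()) - 1.0) > 1e-6:
        raise ValueError("partition fractions must sum to 1")
    plan: Dict[str, str] = {}
    for partition, fraction in fractions.items():
        size = int(total_bytes * fraction)  # round down to whole bytes
        plan[partition] = f"CONFIG SET maxmemory {size}"
    return plan
```

Expressing the plan as plain commands keeps the controller stateless: it only writes the plan to storage, and the cache node applies it when the execute adaptation event arrives.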

6. Open Challenges

We argued for a serverless computing approach for the adaptation component of a self-optimizing system. Our proof-of-concept implementation and cost analysis show that this is feasible and useful. In this section, we identify four challenges that must be addressed for this vision to become a reality.

First, cloud functions have a maximum running time (AWS: 15 min, Azure: 10 min, Google: 9 min). If the calculations exceed the limit, the function expires and the work is lost. Providers may extend these limits; for example, in 2018 AWS increased its Lambda limit from 5 to 15 min (Source: https://aws.amazon.com/about-aws/whats-new/2018/10/aws-lambda-supports-functions-that-can-run-up-to-15-minutes/). Nevertheless, some problems may still take too long to solve as cloud functions. In the AWS ecosystem, the Fargate serverless container service can be used for such problems. The challenge is to design a system that can automatically use the cheapest service for the specific size (in terms of partitions) and frequency of its adaptation loop.

Second, some systems for which a serverless approach makes sense may outgrow it and require an always-on service. Again, a cloud-native solution should automatically choose the architecture that is best for the specific system configuration and complexity. This adaptation should be dynamic and transparent.

Third, we identify a challenge not specific to serverless architectures: interacting self-aware applications that use information from other systems during the adaptation decisions [45]. As the serverless approach decouples the adaptation components from the main systems, such a system could be orchestrated if we provide proper APIs and connectors.

Fourth, even though we focused on adaptation decisions (especially those related to performance tuning), the community should think about realizing the vision of fully self-aware systems [45]. Could a cloud-native architecture that leverages serverless computing offerings help in realizing such systems?