Process-Aware Enactment of Clinical Guidelines through Multimodal Interfaces

Abstract

1. Introduction

- The introduction has been partially rewritten and extended;

- A refined background section describing the characteristics of healthcare processes from different perspectives has been provided;

- The description of clinical guidelines is more detailed and complete;

- The related work section has been significantly extended with a new subsection describing the state of the art in vocal interfaces;

- An improved user evaluation section has been provided, which discusses the complete flow of experiments carried out to evaluate the effectiveness and usability of the system and also measures the statistical significance of the collected results;

- All other sections of the paper have been edited and refined to present the material more thoroughly.

2. Background

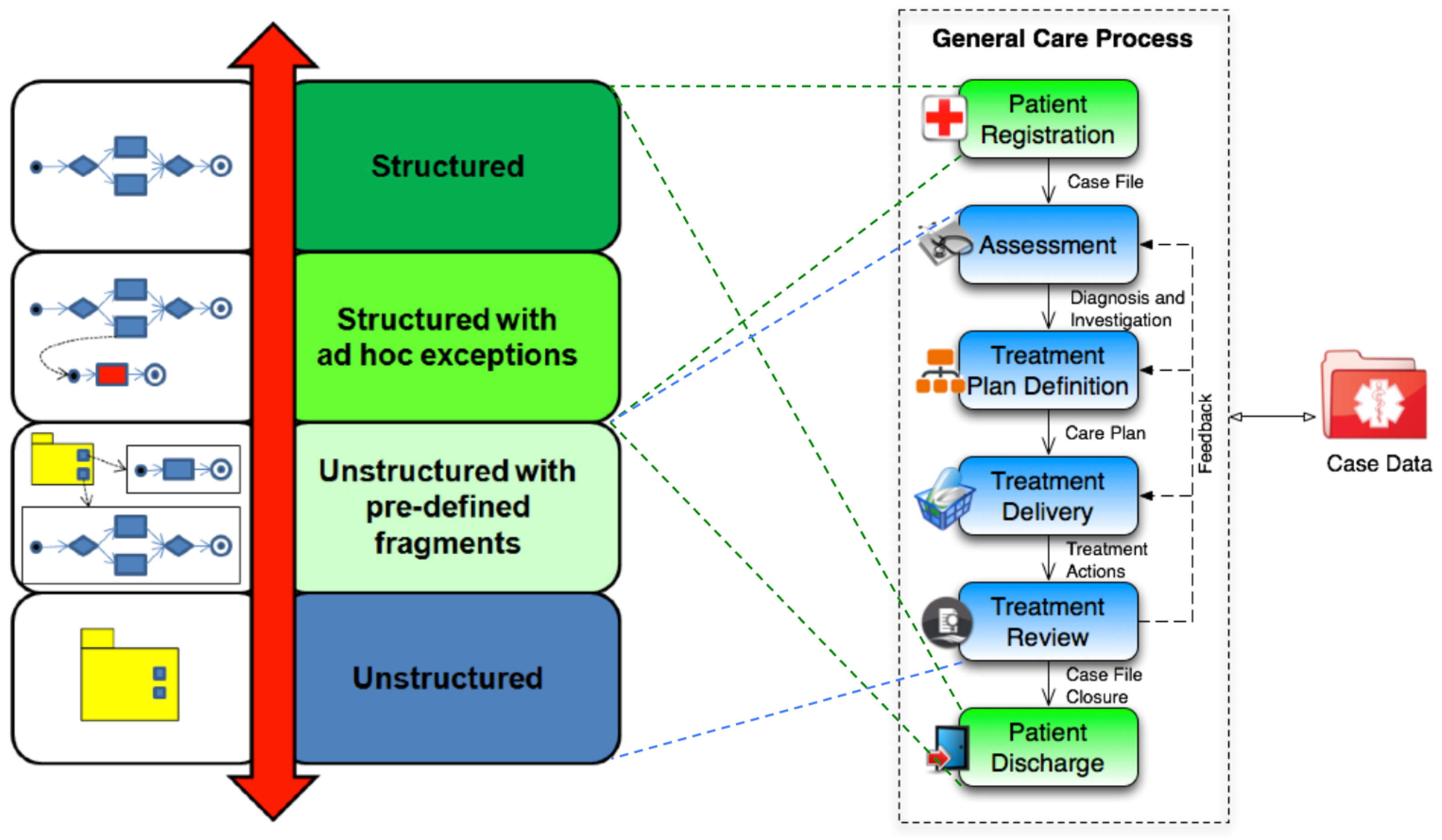

2.1. Healthcare Processes

- Elective care refers to clinical treatments that can be postponed for days or weeks [24]. According to [25], elective care can be classified into three subclasses: (i) standard processes, which are care pathways where the ordering of activities and their timing are predefined; (ii) routine processes, which are care pathways offering alternative treatments that can be followed to reach an overall clinical target; and (iii) non-routine processes, where the next step of the care pathway depends on how the patient reacts to a given treatment [26].

- Non-elective care refers to emergency care, which has to be enacted immediately, and urgent care, which can be postponed only for a short time.
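The classification above can be sketched as a small data model. This is only an illustration under stated assumptions: the names `Category`, `ProcessType`, `CATEGORY_OF` and the `next_*` helpers are hypothetical and not part of TESTMED, and the routine case assumes that each alternative treatment is itself a fixed sequence of activities. The sketch shows how the three elective subclasses differ in deciding the next activity: by position, by the chosen alternative, or by the patient's reaction.

```python
from enum import Enum
from typing import Dict, List, Optional


class Category(Enum):
    ELECTIVE = "elective"          # can be postponed for days or weeks
    NON_ELECTIVE = "non-elective"  # emergency (immediate) or urgent (short delay) care


class ProcessType(Enum):
    STANDARD = "standard"
    ROUTINE = "routine"
    NON_ROUTINE = "non-routine"
    EMERGENCY = "emergency"
    URGENT = "urgent"


# Top-level category of each process type, following the classification in the text.
CATEGORY_OF: Dict[ProcessType, Category] = {
    ProcessType.STANDARD: Category.ELECTIVE,
    ProcessType.ROUTINE: Category.ELECTIVE,
    ProcessType.NON_ROUTINE: Category.ELECTIVE,
    ProcessType.EMERGENCY: Category.NON_ELECTIVE,
    ProcessType.URGENT: Category.NON_ELECTIVE,
}


def next_standard(pathway: List[str], completed: int) -> Optional[str]:
    """Standard process: ordering is predefined, so the next activity is fixed by position."""
    return pathway[completed] if completed < len(pathway) else None


def next_routine(alternatives: Dict[str, List[str]], chosen: str, completed: int) -> Optional[str]:
    """Routine process: alternative treatments lead to the same clinical target.
    Assumption: once an alternative is chosen, it is followed in its own fixed order."""
    return next_standard(alternatives[chosen], completed)


def next_non_routine(reaction: str, rules: Dict[str, str]) -> Optional[str]:
    """Non-routine process: the next step is looked up from the patient's observed reaction.
    Returns None when no rule covers the reaction and a clinician has to decide."""
    return rules.get(reaction)


if __name__ == "__main__":
    pathway = ["A", "B", "C"]  # hypothetical activity names
    print(next_standard(pathway, completed=1))  # B
```

Keeping the decision logic in three separate functions mirrors the point of the classification: the same care pathway notation can hide very different rules for choosing what happens next.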

- patient registration, which consists of creating a medical case file;

- patient assessment, where an initial diagnosis for the patient is performed;

- treatment plan definition, which consists of creating a dedicated, individual care plan;

- treatment delivery, which consists of enacting the clinical actions provided by the care plan;

- treatment review, which consists of continuously monitoring the impact and efficacy of enacted treatments in order to provide feedback to the previous steps;

- patient discharge, which consists of closing the case file.
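The phases listed above behave like a simple state machine in which treatment review feeds back into earlier phases. The following Python sketch assumes a transition relation inferred from the list order (review may return to assessment, plan definition or delivery, or proceed to discharge); `Phase`, `TRANSITIONS` and `CaseFile` are hypothetical names used only for illustration, not the TESTMED data model.

```python
from enum import Enum
from typing import Dict, FrozenSet, List


class Phase(Enum):
    REGISTRATION = "patient registration"
    ASSESSMENT = "patient assessment"
    PLAN_DEFINITION = "treatment plan definition"
    DELIVERY = "treatment delivery"
    REVIEW = "treatment review"
    DISCHARGE = "patient discharge"


# Assumed transition relation: phases follow the listed order, and treatment review
# may feed back to an earlier clinical phase before the patient is discharged.
TRANSITIONS: Dict[Phase, FrozenSet[Phase]] = {
    Phase.REGISTRATION: frozenset({Phase.ASSESSMENT}),
    Phase.ASSESSMENT: frozenset({Phase.PLAN_DEFINITION}),
    Phase.PLAN_DEFINITION: frozenset({Phase.DELIVERY}),
    Phase.DELIVERY: frozenset({Phase.REVIEW}),
    Phase.REVIEW: frozenset({Phase.ASSESSMENT, Phase.PLAN_DEFINITION,
                             Phase.DELIVERY, Phase.DISCHARGE}),
    Phase.DISCHARGE: frozenset(),  # the case file is closed: no further phases
}


class CaseFile:
    """Hypothetical medical case file that records the phases it goes through."""

    def __init__(self, patient_id: str) -> None:
        self.patient_id = patient_id
        # Registration is what creates the case file, so it is the first recorded phase.
        self.history: List[Phase] = [Phase.REGISTRATION]

    @property
    def phase(self) -> Phase:
        return self.history[-1]

    @property
    def closed(self) -> bool:
        return self.phase is Phase.DISCHARGE

    def advance(self, target: Phase) -> None:
        """Move to `target`, rejecting transitions outside the assumed relation."""
        if target not in TRANSITIONS[self.phase]:
            raise ValueError(f"cannot move from {self.phase.value} to {target.value}")
        self.history.append(target)


if __name__ == "__main__":
    case = CaseFile("patient-001")  # hypothetical identifier
    # One review cycle sends the case back to plan definition before discharge.
    for p in (Phase.ASSESSMENT, Phase.PLAN_DEFINITION, Phase.DELIVERY, Phase.REVIEW,
              Phase.PLAN_DEFINITION, Phase.DELIVERY, Phase.REVIEW, Phase.DISCHARGE):
        case.advance(p)
    print([p.value for p in case.history])
```

Rejecting transitions that are not in `TRANSITIONS` makes the feedback loop explicit while still preventing, for instance, treatment delivery before a care plan has been defined.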

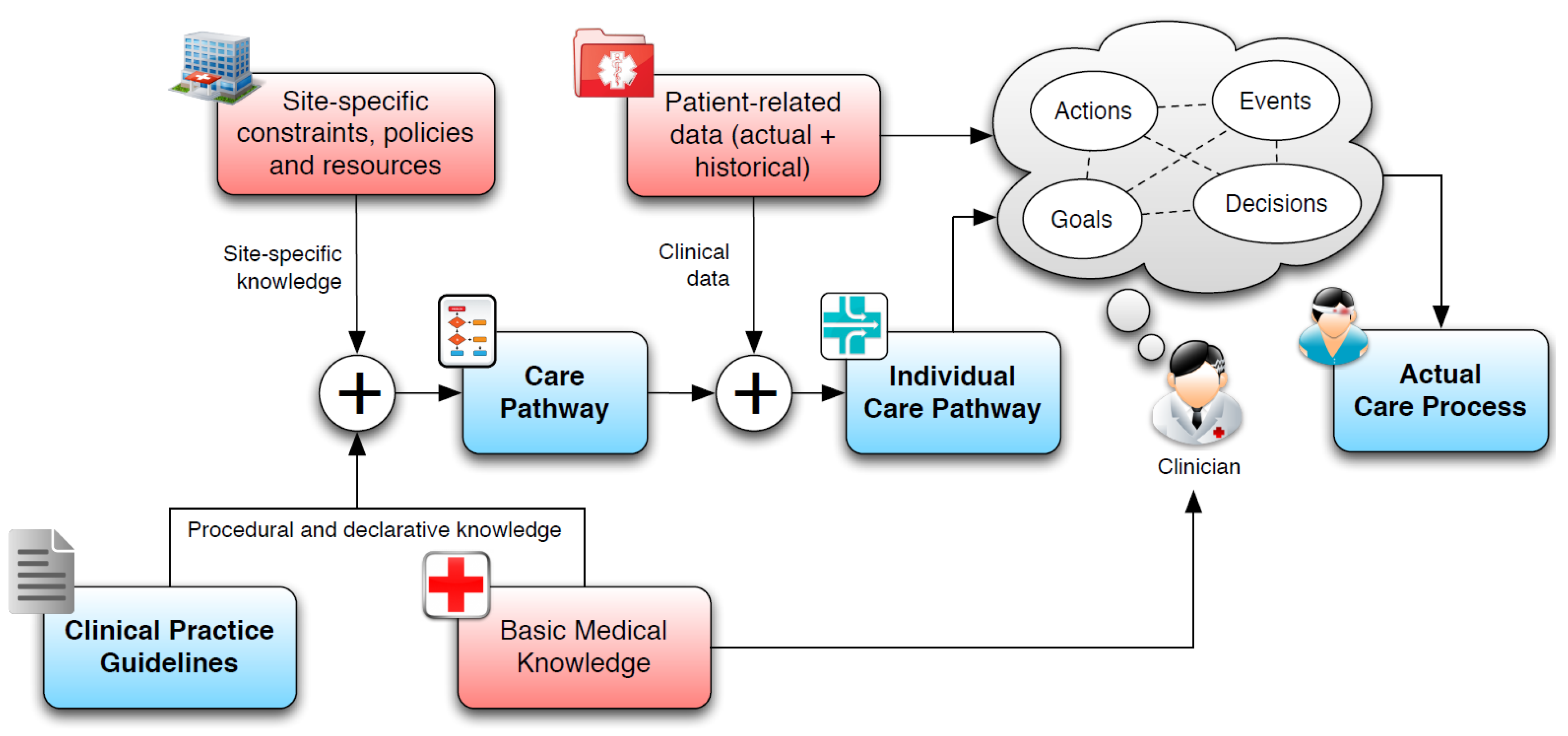

2.2. Clinical Guidelines

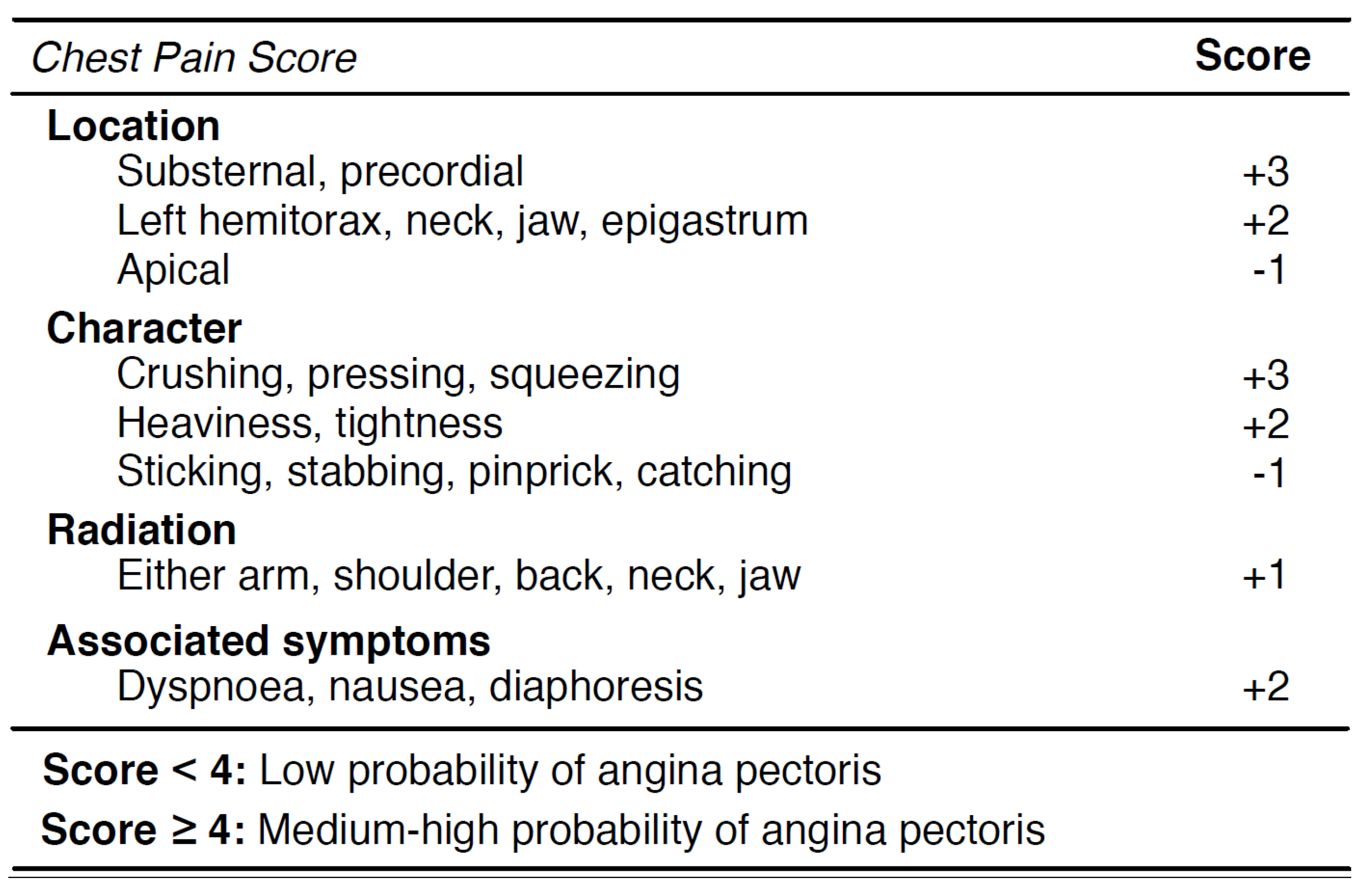

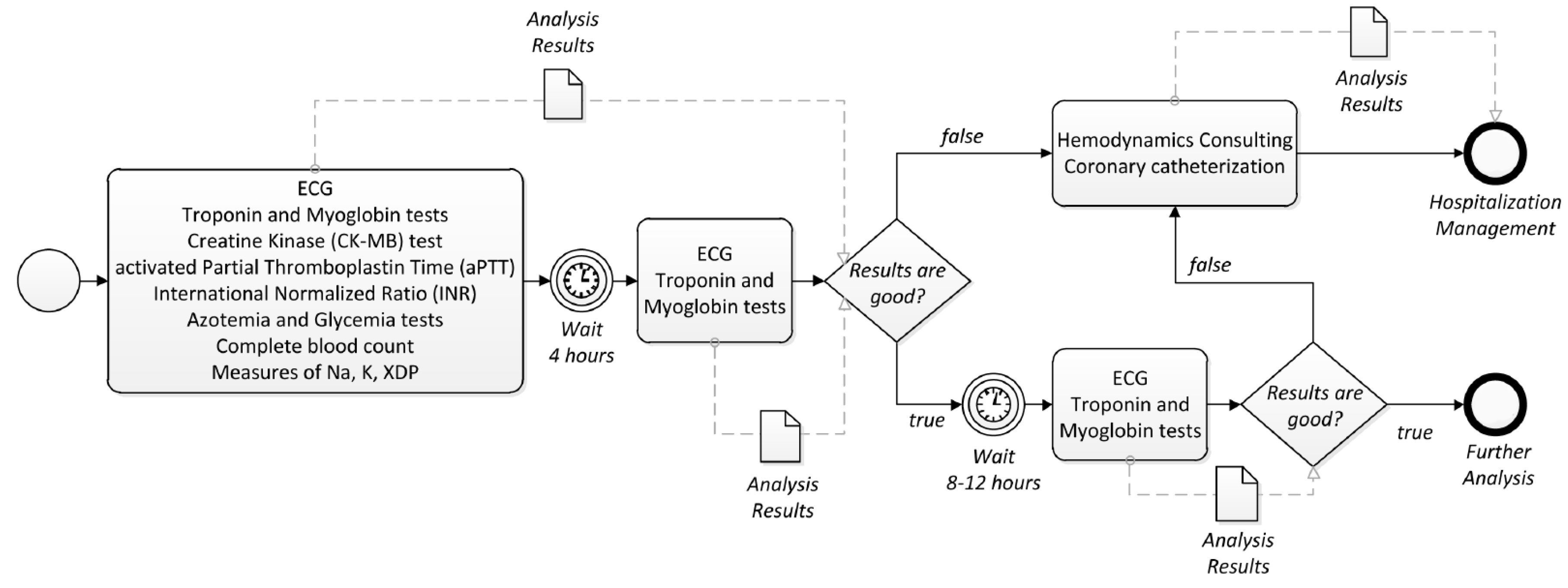

2.3. Case Study: Chest Pain

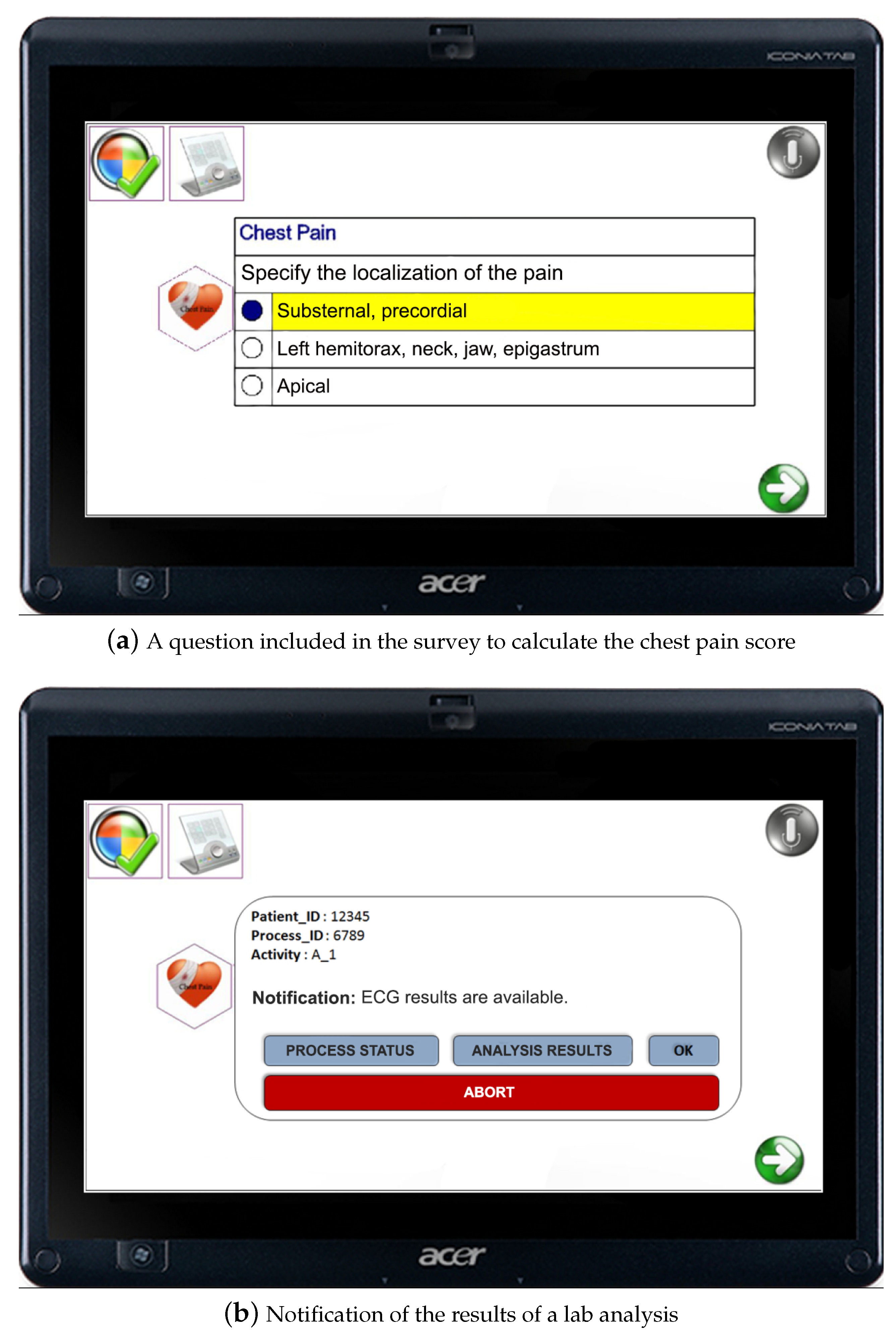

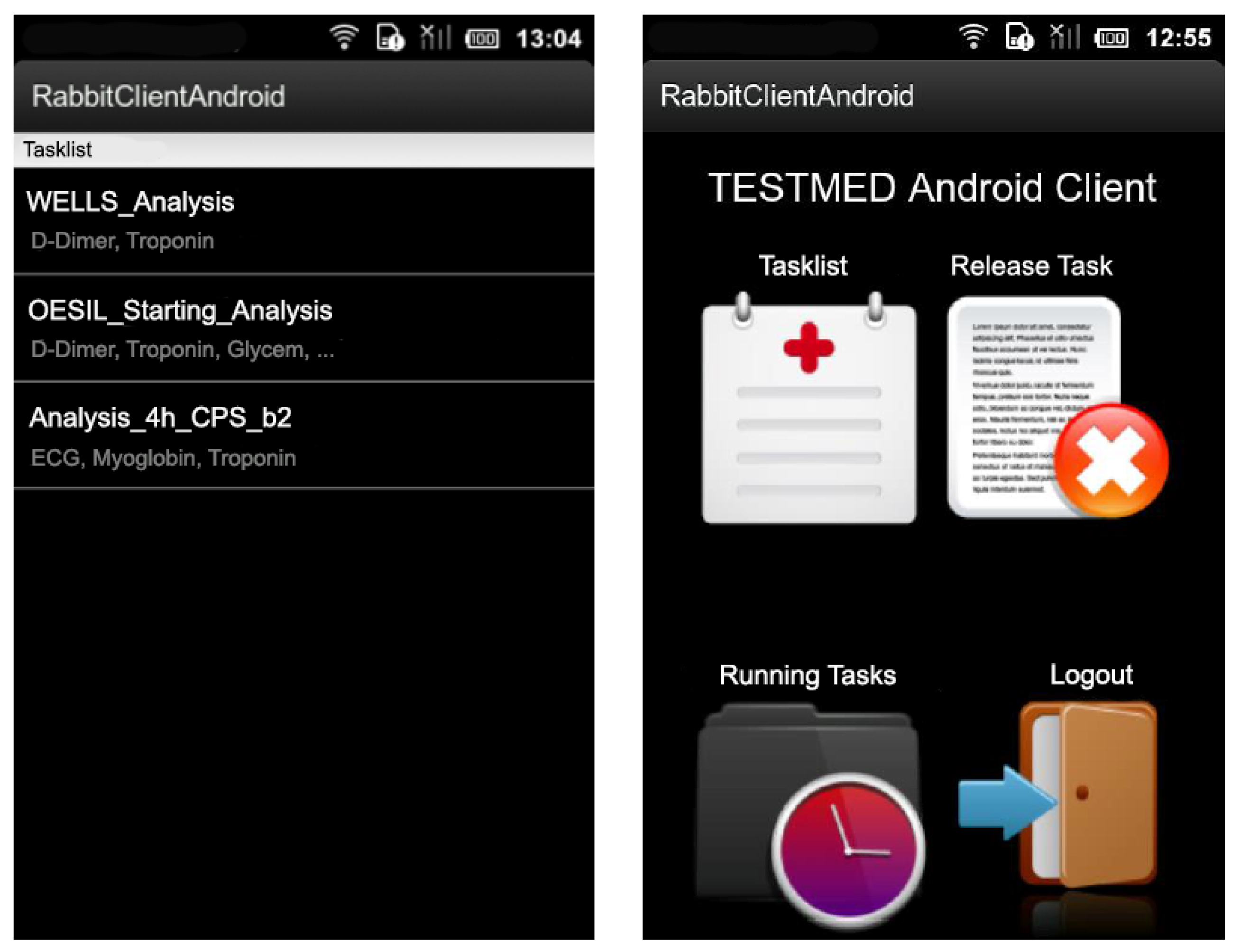

3. Enactment of Clinical Guidelines with TESTMED

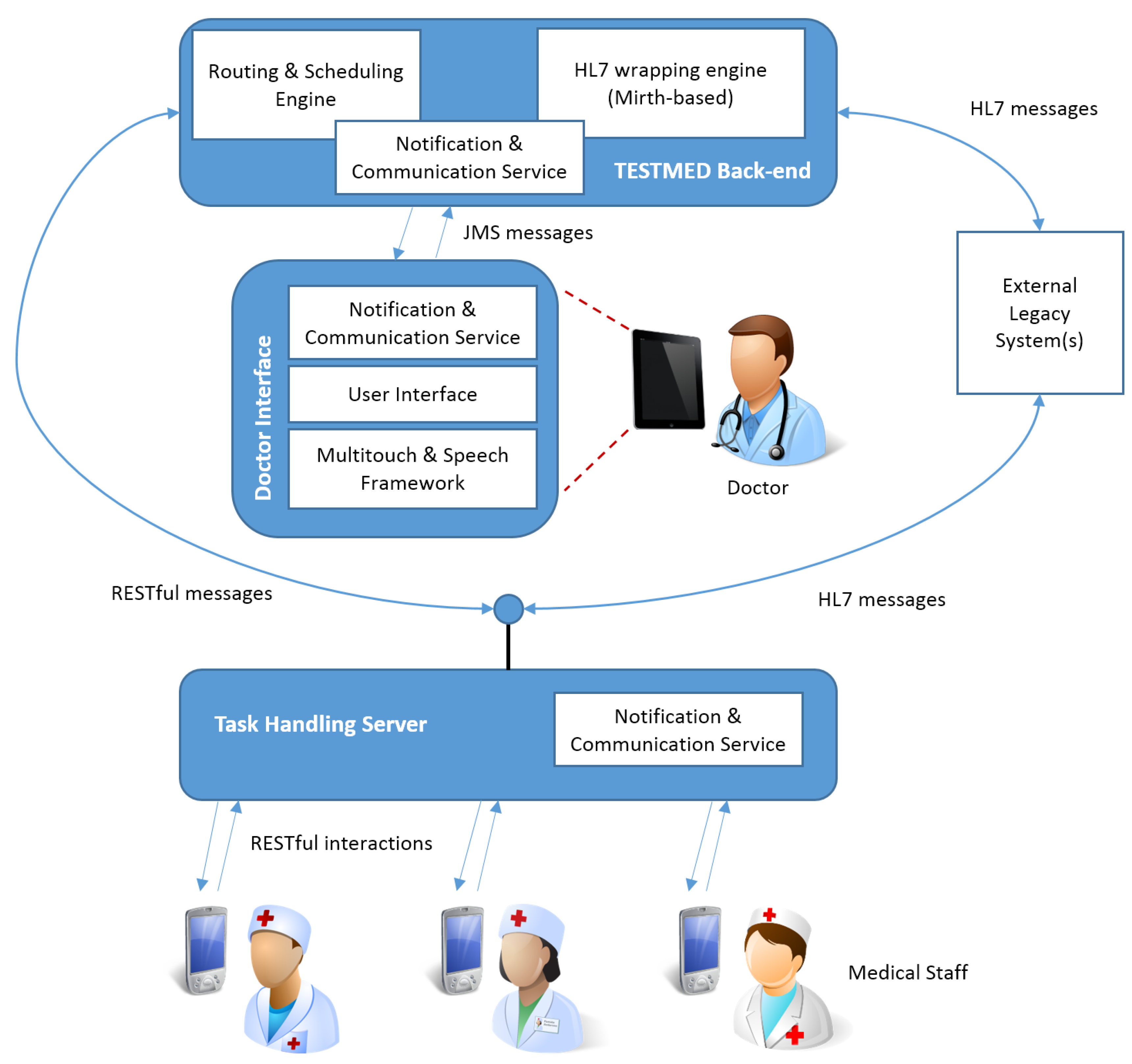

4. The Architecture of the TESTMED System

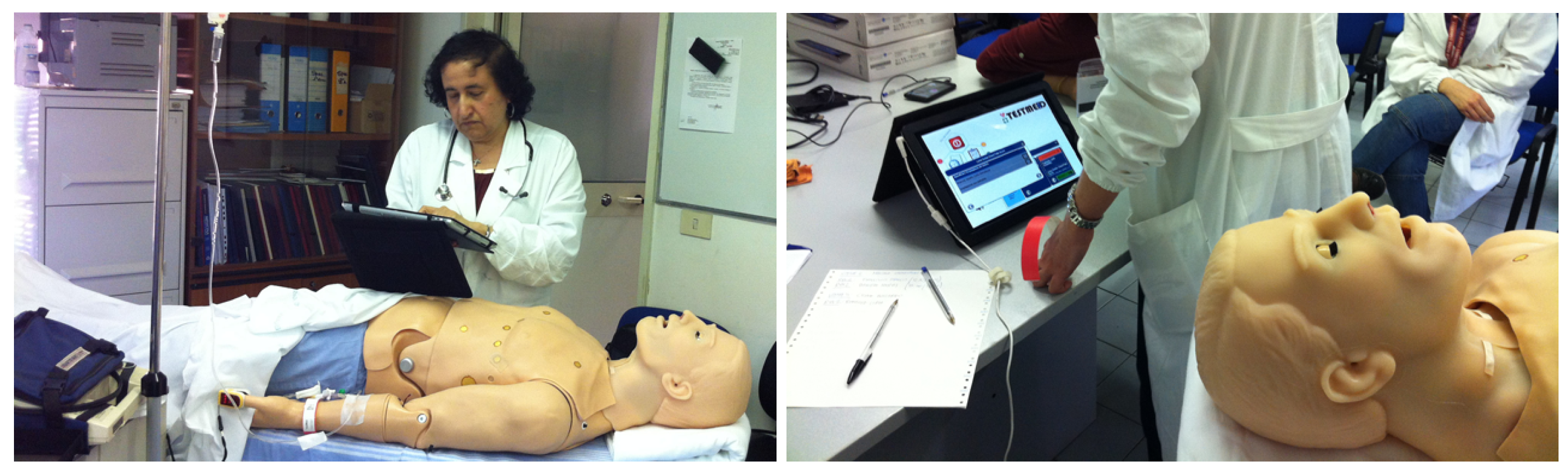

5. User Evaluation

- supporting the mobility of doctors when visiting patients;

- facilitating the continuity of the information flow by supporting instant and mobile access;

- speeding up doctors’ work while executing clinical guidelines (CGs) and making clinical decisions.

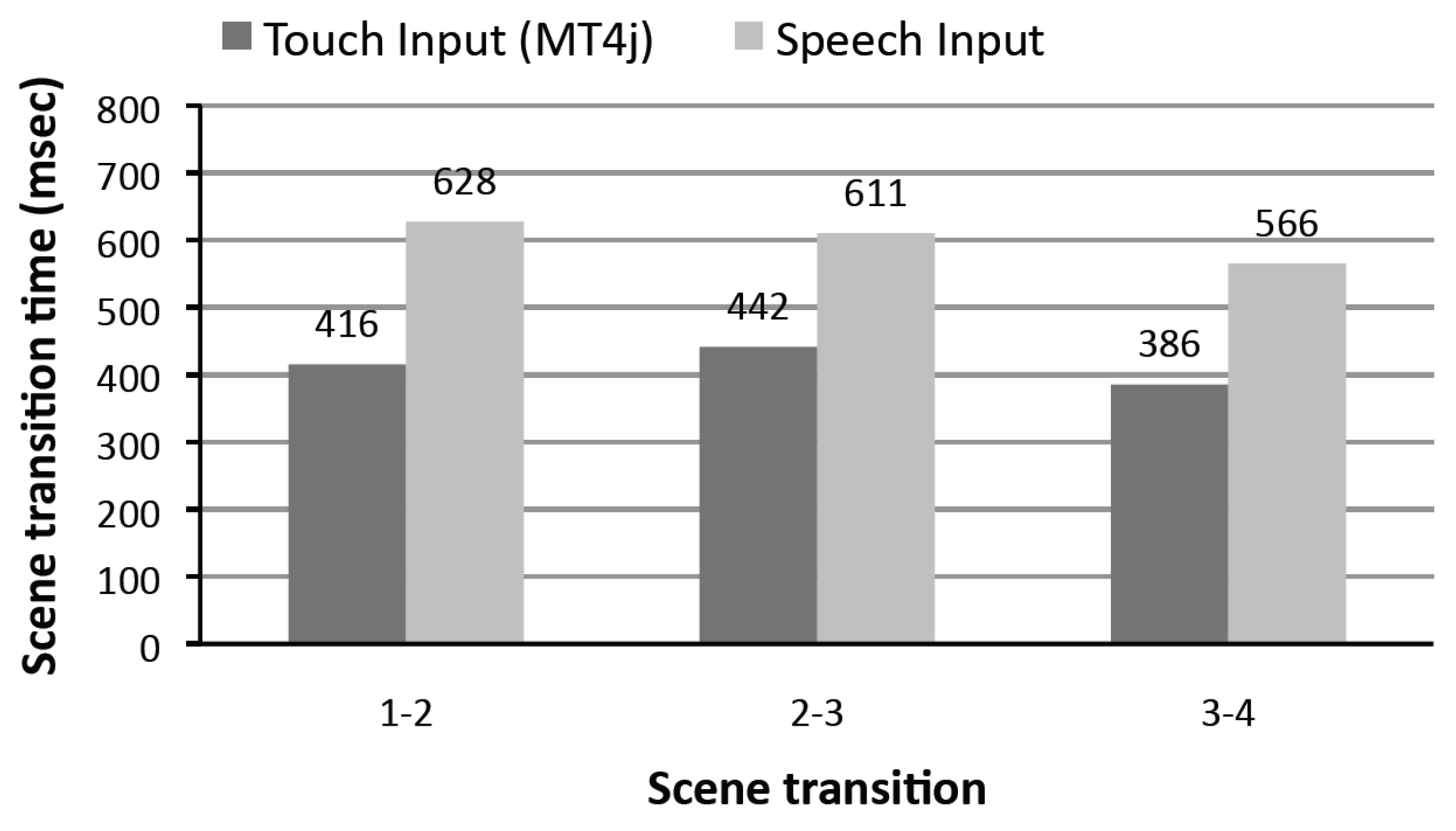

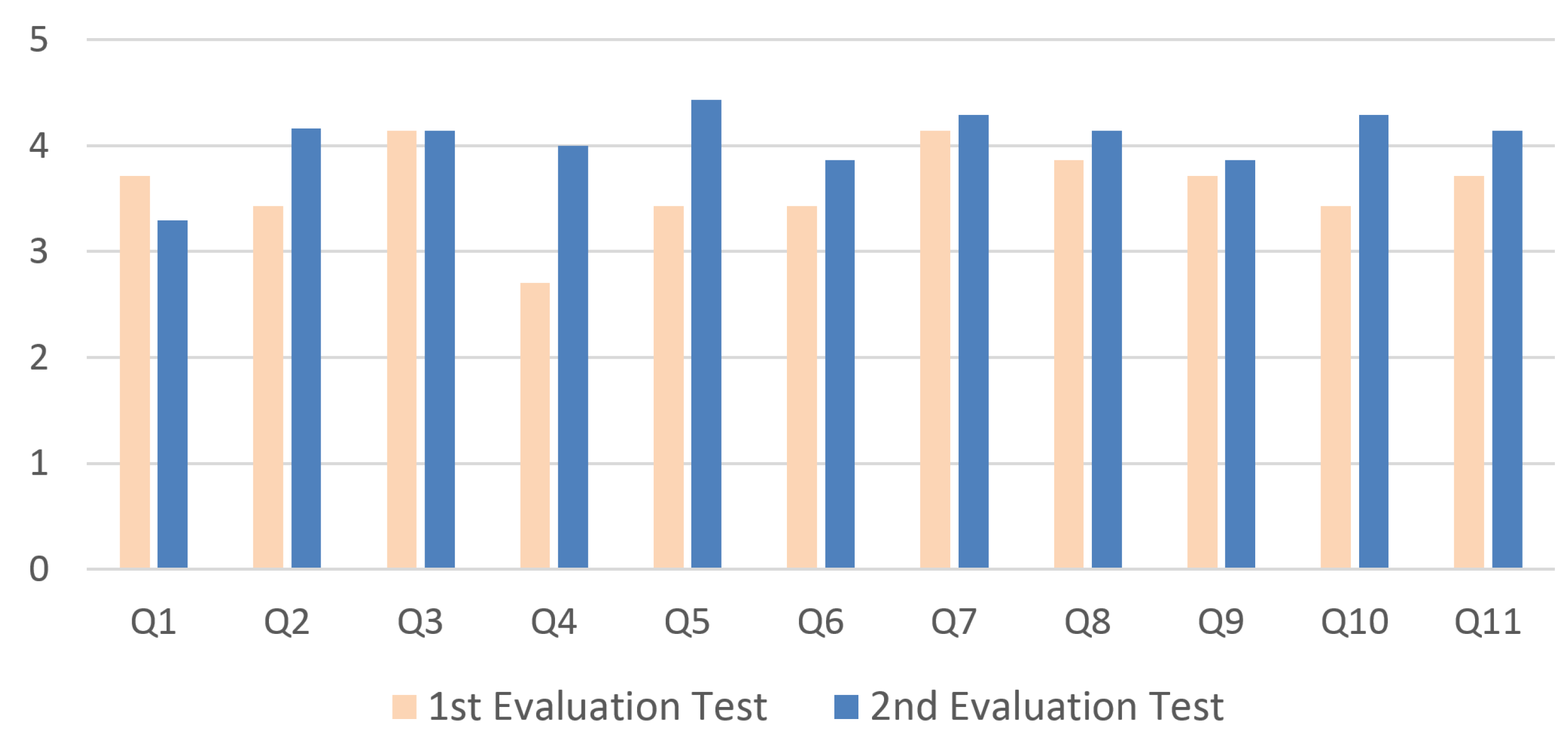

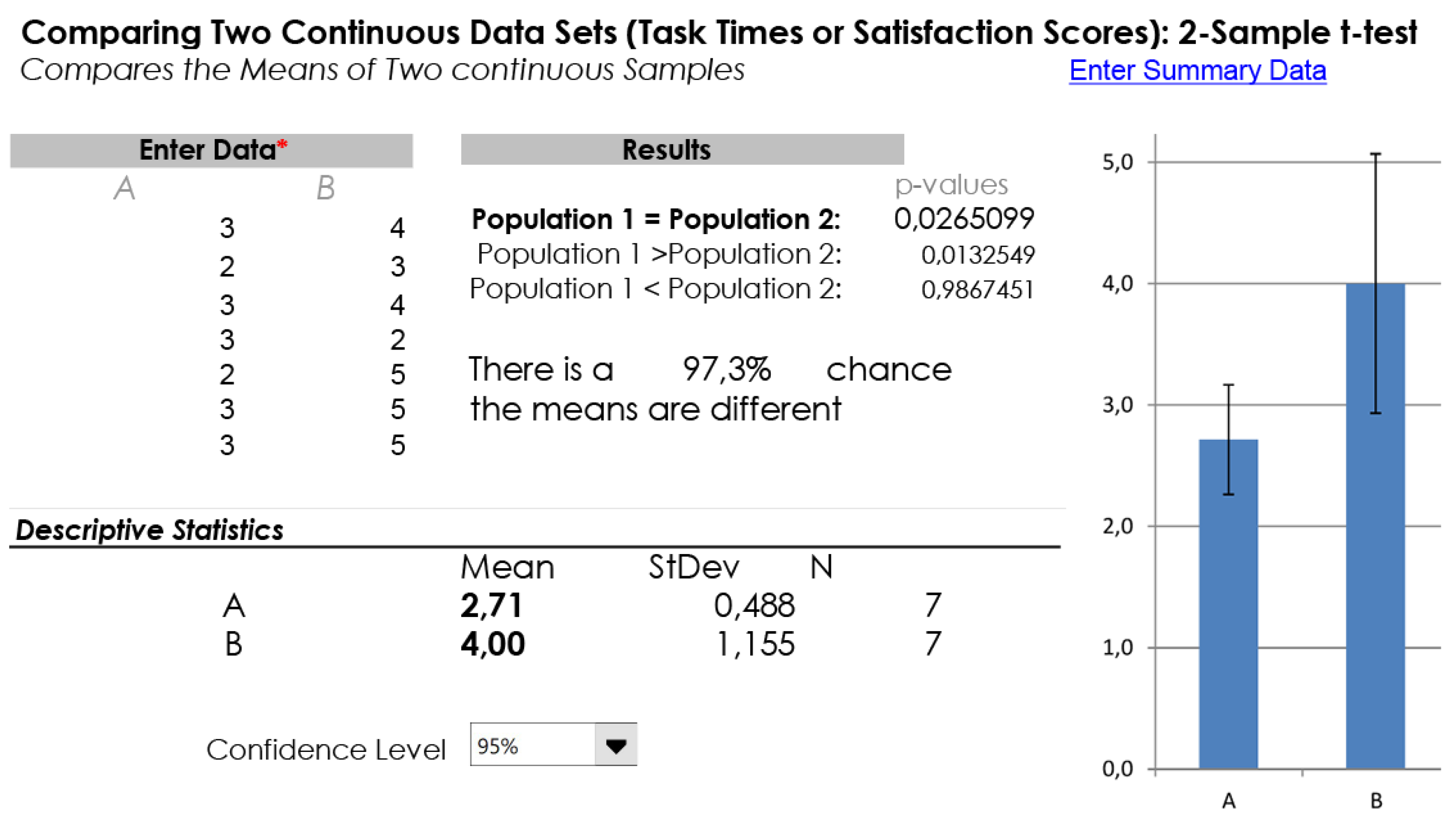

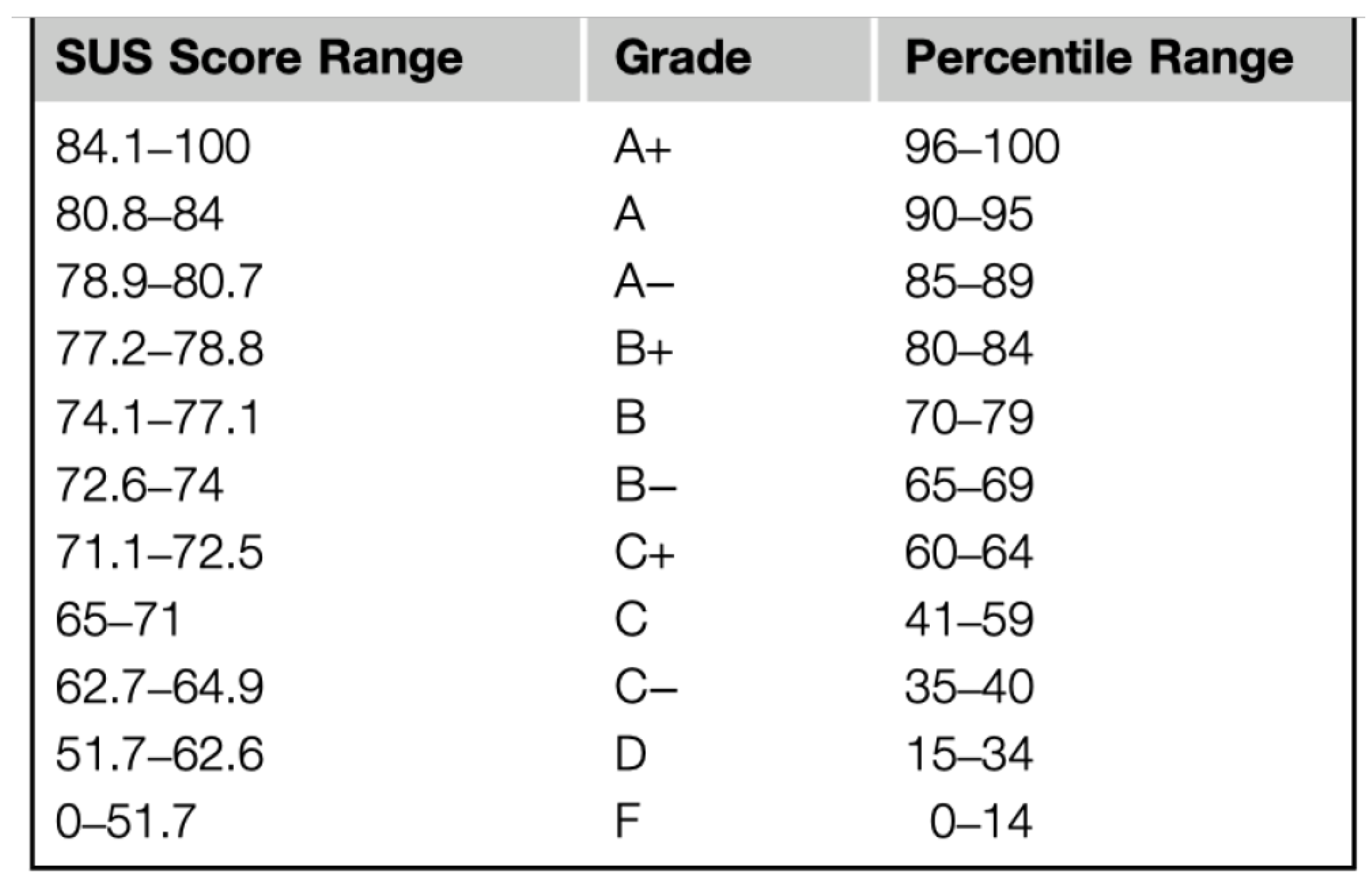

5.1. Evaluation Setting and Results of the First User Study

| Item | Statement |
|---|---|
| Q1 | I have good experience in the use of mobile devices. |
| Q2 | The interaction with the system does not require any special learning ability. |
| Q3 | I find the interaction with the touch interface very satisfying. |
| Q4 | I find the interaction with the vocal interface very satisfying. |
| Q5 | I think that the ability to interact with the system through either the touch interface or the vocal interface is very useful. |
| Q6 | The system can be used by users who are not experts in the use of mobile devices. |
| Q7 | The system allows the status of clinical activities to be constantly monitored. |
| Q8 | The system correctly guides clinicians in performing clinical activities. |
| Q9 | The doctor may, at any time, access data and information relevant to a specific clinical activity. |
| Q10 | The system is robust with respect to errors. |
| Q11 | I think that using the system could facilitate a doctor’s work in carrying out their activities. |

5.2. Evaluation Setting and Results of the Second User Study

6. Related Work

6.1. Process-Oriented Healthcare Systems

6.2. Mobile and Multimodal Interaction in the Healthcare Domain

6.3. Vocal Interfaces

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Van De Belt, T.H.; Engelen, L.J.; Berben, S.A.; Schoonhoven, L. Definition of Health 2.0 and Medicine 2.0: A systematic review. J. Med. Internet Res. 2010, 12.

- Chaudhry, B.; Wang, J.; Wu, S.; Maglione, M.; Mojica, W.; Roth, E.; Morton, S.C.; Shekelle, P.G. Systematic review: Impact of health information technology on quality, efficiency, and costs of medical care. Ann. Intern. Med. 2006, 144, 742–752.

- Cook, R.; Foster, J. The impact of Health Information Technology (I-HIT) Scale: The Australian results. In Proceedings of the 10th International Congress on Nursing Informatics, Helsinki, Finland, 28 June–1 July 2009; pp. 400–404.

- Buntin, M.B.; Burke, M.F.; Hoaglin, M.C.; Blumenthal, D. The benefits of health information technology: A review of the recent literature shows predominantly positive results. Health Aff. 2011, 30, 464–471.

- Lee, J.; McCullough, J.S.; Town, R.J. The impact of health information technology on hospital productivity. RAND J. Econ. 2013, 44, 545–568.

- Shachak, A.; Hadas-Dayagi, M.; Ziv, A.; Reis, S. Primary care physicians’ use of an electronic medical record system: A cognitive task analysis. J. Gen. Intern. Med. 2009, 24, 341–348.

- Richtel, M. As doctors use more devices, potential for distraction grows. The New York Times, 14 December 2011.

- Booth, N.; Robinson, P.; Kohannejad, J. Identification of high-quality consultation practice in primary care: the effects of computer use on doctor–patient rapport. Inform. Prim. Care 2004, 12, 75–83.

- Margalit, R.S.; Roter, D.; Dunevant, M.A.; Larson, S.; Reis, S. Electronic medical record use and physician–patient communication: An observational study of Israeli primary care encounters. Patient Educ. Couns. 2006, 61, 134–141.

- Laxmisan, A.; Hakimzada, F.; Sayan, O.R.; Green, R.A.; Zhang, J.; Patel, V.L. The multitasking clinician: Decision-making and cognitive demand during and after team handoffs in emergency care. Int. J. Med. Inform. 2007, 76, 801–811.

- Reichert, M. What BPM Technology Can Do for Healthcare Process Support. In Artificial Intelligence in Medicine; Peleg, M., Lavrač, N., Combi, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 2–13.

- Oviatt, S.; Coulston, R.; Lunsford, R. When do we interact multimodally?: Cognitive load and multimodal communication patterns. In Proceedings of the 6th International Conference on Multimodal Interfaces, State College, PA, USA, 13–15 October 2004; pp. 129–136.

- Pieh, C.; Neumeier, S.; Loew, T.; Altmeppen, J.; Angerer, M.; Busch, V.; Lahmann, C. Effectiveness of a multimodal treatment program for somatoform pain disorder. Pain Pract. 2014, 14.

- Lenz, R.; Reichert, M. IT support for healthcare processes–premises, challenges, perspectives. Data Knowl. Eng. 2007, 61, 39–58.

- Mans, R.S.; van der Aalst, W.M.P.; Russell, N.C.; Bakker, P.J.M.; Moleman, A.J. Process-Aware Information System Development for the Healthcare Domain: Consistency, Reliability, and Effectiveness. In Business Process Management Workshops: BPM 2009 International Workshops, Ulm, Germany, September 7, 2009. Revised Papers; Rinderle-Ma, S., Sadiq, S., Leymann, F., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 635–646.

- Sonnenberg, F.A.; Hagerty, C.G. Computer-Interpretable Clinical Practice Guidelines. Where are we and where are we going? Yearb. Med. Inform. 2006, 45, 145–158.

- Peleg, M.; Tu, S.; Bury, J.; Ciccarese, P.; Fox, J.; Greenes, R.; Hall, R.; Johnson, P.D.; Jones, N.; Kumar, A.; et al. Comparing Computer-Interpretable Guideline Models: A Case-Study Approach. J. Am. Med. Inform. Assoc. 2003, 10, 52–68.

- De Clercq, P.A.; Blom, J.A.; Korsten, H.H.; Hasman, A. Approaches for creating computer-interpretable guidelines that facilitate decision support. Artif. Intell. Med. 2004, 31.

- Wang, D.; Peleg, M.; Tu, S.; Boxwala, A.; Greenes, R.; Patel, V.; Shortliffe, E. Representation Primitives, Process Models and Patient Data in Computer-Interpretable Clinical Practice Guidelines: A Literature Review of Guideline Representation Models. Int. J. Med. Inform. 2002, 68, 59–70.

- Dix, A.; Finlay, J.; Abowd, G.; Beale, R. Human-Computer Interaction; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 1997.

- Cossu, F.; Marrella, A.; Mecella, M.; Russo, A.; Bertazzoni, G.; Suppa, M.; Grasso, F. Improving Operational Support in Hospital Wards through Vocal Interfaces and Process-Awareness. In Proceedings of the 25th IEEE International Symposium on Computer-Based Medical Systems (CBMS 2012), Rome, Italy, 20–22 June 2012.

- Cossu, F.; Marrella, A.; Mecella, M.; Russo, A.; Kimani, S.; Bertazzoni, G.; Colabianchi, A.; Corona, A.; Luise, A.D.; Grasso, F.; et al. Supporting Doctors through Mobile Multimodal Interaction and Process-Aware Execution of Clinical Guidelines. In Proceedings of the 2014 IEEE 7th International Conference on Service-Oriented Computing and Applications, Matsue, Japan, 17–19 November 2014; pp. 183–190.

- Mans, R.S.; van der Aalst, W.M.; Vanwersch, R.J. Process Mining in Healthcare: Evaluating and Exploiting Operational Healthcare Processes; Springer: Berlin, Germany, 2015.

- Lillrank, P.; Liukko, M. Standard, routine and non-routine processes in health care. Int. J. Health Care Qual. Assur. 2004, 17, 39–46.

- Gupta, D.; Denton, B. Appointment scheduling in health care: Challenges and opportunities. IIE Trans. 2008, 40, 800–819.

- Vissers, J.; Beech, R. Health Operations Management: Patient Flow Logistics in Health Care; Psychology Press: Hove, UK, 2005.

- Swenson, K.D. (Ed.) Mastering the Unpredictable: How Adaptive Case Management Will Revolutionize the Way that Knowledge Workers Get Things Done; Meghan-Kiffer Press: Tampa, FL, USA, 2010; Chapter 8.

- Russo, A.; Mecella, M. On the evolution of process-oriented approaches for healthcare workflows. Int. J. Bus. Process Integr. Manag. 2013, 6, 224–246.

- Di Ciccio, C.; Marrella, A.; Russo, A. Knowledge-Intensive Processes: Characteristics, Requirements and Analysis of Contemporary Approaches. J. Data Semant. 2015, 4, 29–57.

- Peleg, M.; Tu, S.W. Design patterns for clinical guidelines. Artif. Intell. Med. 2009, 47, 1–24.

- Field, M.J.; Lohr, K.N. Clinical Practice Guidelines: Directions for a New Program; Institute of Medicine: Washington, DC, USA, 1990.

- Isern, D.; Moreno, A. Computer-based execution of clinical guidelines: A review. Int. J. Med. Inform. 2008, 77, 787–808.

- Ottani, F.; Binetti, N.; Casagranda, I.; Cassin, M.; Cavazza, M.; Grifoni, S.; Lenzi, T.; Lorenzoni, R.; Sbrojavacca, R.; Tanzi, P.; et al. Percorso di valutazione del dolore toracico: Valutazione dei requisiti di base per l’implementazione negli ospedali italiani. G. Ital. Cardiol. 2009, 10, 46–63.

- Sutton, D.R.; Fox, J. The syntax and semantics of the PROforma guideline modeling language. J. Am. Med. Inform. Assoc. 2003, 10, 433–443.

- Slavich, G.; Buonocore, G. Forensic medicine aspects in patients with chest pain in the emergency room. Ital. Heart J. Suppl. 2001, 2, 381–384.

- PricewaterhouseCoopers Health Research Institute. Healthcare Unwired: New Business Models Delivering Care Anywhere; PricewaterhouseCoopers: London, UK, 2010.

- Chatterjee, S.; Chakraborty, S.; Sarker, S.; Sarker, S.; Lau, F.Y. Examining the success factors for mobile work in healthcare: A deductive study. Decis. Support Syst. 2009, 46, 620–633.

- Oviatt, S.; Cohen, P.R. The paradigm shift to multimodality in contemporary computer interfaces. Synth. Lect. Hum. Centered Inform. 2015, 8, 1–243.

- Sauro, J.; Lewis, J.R. Quantifying the User Experience: Practical Statistics for User Research; Morgan Kaufmann: Burlington, MA, USA, 2016.

- De Leoni, M.; Marrella, A.; Russo, A. Process-aware information systems for emergency management. In Proceedings of the European Conference on a Service-Based Internet, Ghent, Belgium, 13–15 December 2010; pp. 50–58.

- Marrella, A.; Mecella, M.; Sardiña, S. Intelligent Process Adaptation in the SmartPM System. ACM Trans. Intell. Syst. Technol. 2016, 8, 25.

- Marrella, A.; Mecella, M.; Sardiña, S. Supporting adaptiveness of cyber-physical processes through action-based formalisms. AI Commun. 2018, 31, 47–74.

- Dadam, P.; Reichert, M.; Kuhn, K. Clinical Workflows: The killer application for process-oriented information systems. In BIS 2000; Springer: London, UK, 2000; pp. 36–59.

- Mans, R.S.; van der Aalst, W.M.P.; Russell, N.C.; Bakker, P.J.M. Flexibility Schemes for Workflow Management Systems. In Business Process Management Workshops; Springer: Berlin/Heidelberg, Germany, 2008; pp. 361–372.

- Rojo, M.G.; Rolon, E.; Calahorra, L.; Garcia, F.O.; Sanchez, R.P.; Ruiz, F.; Ballester, N.; Armenteros, M.; Rodriguez, T.; Espartero, R.M. Implementation of the Business Process Modelling Notation (BPMN) in the modelling of anatomic pathology processes. Diagn. Pathol. 2008, 3 (Suppl. 1), S22.

- Strasser, M.; Pfeifer, F.; Helm, E.; Schuler, A.; Altmann, J. Defining and reconstructing clinical processes based on IHE and BPMN 2.0. Stud. Health Technol. Inform. 2011, 169, 482–486.

- Ruiz, F.; Garcia, F.; Calahorra, L.; Llorente, C.; Goncalves, L.; Daniel, C.; Blobel, B. Business process modeling in healthcare. Stud. Health Technol. Inform. 2012, 179, 75–87.

- Alexandrou, D.; Mentzas, G. Research Challenges for Achieving Healthcare Business Process Interoperability. In Proceedings of the 2009 International Conference on eHealth, Telemedicine, and Social Medicine, Cancun, Mexico, 1–7 February 2009; pp. 58–65.

- Van der Aalst, W.; Adriansyah, A.; De Medeiros, A.K.A.; Arcieri, F.; Baier, T.; Blickle, T.; Bose, J.C.; van den Brand, P.; Brandtjen, R.; Buijs, J.; et al. Process mining manifesto. In Proceedings of the International Conference on Business Process Management, Clermont-Ferrand, France, 29 August–2 September 2011; pp. 169–194.

- Van der Aalst, W.M.P.; Weijters, T.; Maruster, L. Workflow Mining: Discovering Process Models from Event Logs. IEEE Trans. Knowl. Data Eng. 2004, 16, 1128–1142.

- Van der Aalst, W.M. Process Mining: Data Science in Action; Springer: Berlin, Germany, 2016.

- Augusto, A.; Conforti, R.; Dumas, M.; Rosa, M.L.; Maggi, F.M.; Marrella, A.; Mecella, M.; Soo, A. Automated Discovery of Process Models from Event Logs: Review and Benchmark. IEEE Trans. Knowl. Data Eng. 2018.

- Van der Aalst, W.M.P.; Adriansyah, A.; van Dongen, B.F. Replaying history on process models for conformance checking and performance analysis. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2012, 2, 182–192.

- De Giacomo, G.; Maggi, F.M.; Marrella, A.; Patrizi, F. On the Disruptive Effectiveness of Automated Planning for LTLf-Based Trace Alignment. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 3555–3561.

- De Leoni, M.; Marrella, A. Aligning Real Process Executions and Prescriptive Process Models through Automated Planning. Expert Syst. Appl. 2017, 82, 162–183.

- De Giacomo, G.; Maggi, F.M.; Marrella, A.; Sardiña, S. Computing Trace Alignment against Declarative Process Models through Planning. In Proceedings of the Twenty-Sixth International Conference on Automated Planning and Scheduling (ICAPS 2016), London, UK, 12–17 June 2016; pp. 367–375.

- De Leoni, M.; Lanciano, G.; Marrella, A. Aligning Partially-Ordered Process-Execution Traces and Models Using Automated Planning. In Proceedings of the Twenty-Eighth International Conference on Automated Planning and Scheduling (ICAPS 2018), Delft, The Netherlands, 24–29 June 2018; pp. 321–329.

- Maggi, F.M.; Corapi, D.; Russo, A.; Lupu, E.; Visaggio, G. Revising Process Models through Inductive Learning. In Business Process Management Workshops; zur Muehlen, M., Su, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 182–193.

- Fahland, D.; van der Aalst, W.M. Repairing process models to reflect reality. In Proceedings of the International Conference on Business Process Management, Tallinn, Estonia, 3–6 September 2012; pp. 229–245.

- Maggi, F.M.; Marrella, A.; Capezzuto, G.; Cervantes, A.A. Explaining Non-compliance of Business Process Models Through Automated Planning. In Service-Oriented Computing; Pahl, C., Vukovic, M., Yin, J., Yu, Q., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 181–197.

- Anzbock, R.; Dustdar, S. Semi-automatic generation of Web services and BPEL processes: A Model-Driven approach. In Proceedings of the 3rd International Conference on Business Process Management, Nancy, France, 5–8 September 2005; pp. 64–79.

- Poulymenopoulou, M.; Malamateniou, F.; Vassilacopoulos, G. Emergency healthcare process automation using workflow technology and web services. Med. Inform. Internet Med. 2003, 28, 195–207.

- Poulymenopoulou, M.; Malamateniou, F.; Vassilacopoulos, G. Emergency healthcare process automation using mobile computing and cloud services. J. Med. Syst. 2012, 36, 3233–3241.

- Leonardi, G.; Panzarasa, S.; Quaglini, S.; Stefanelli, M.; van der Aalst, W.M.P. Interacting Agents through a Web-based Health Serviceflow Management System. J. Biomed. Inform. 2007, 40, 486–499.

- Ma, X.; Lu, S.; Yang, K. Service-Oriented Architecture for SPDFLOW: A Healthcare Workflow System for Sterile Processing Departments. In Proceedings of the IEEE Ninth International Conference on Services Computing (SCC), Honolulu, HI, USA, 24–29 June 2012; pp. 507–514.

- Capata, A.; Marrella, A.; Russo, R. A geo-based application for the management of mobile actors during crisis situations. In Proceedings of the 5th International ISCRAM Conference, Washington, DC, USA, 4–7 May 2008.

- Humayoun, S.R.; Catarci, T.; de Leoni, M.; Marrella, A.; Mecella, M.; Bortenschlager, M.; Steinmann, R. The WORKPAD User Interface and Methodology: Developing Smart and Effective Mobile Applications for Emergency Operators. In Universal Access in Human–Computer Interaction. Applications and Services; Springer: Berlin/Heidelberg, Germany, 2009; pp. 343–352.

- Marrella, A.; Mecella, M.; Russo, A. Collaboration on-the-field: Suggestions and beyond. In Proceedings of the 8th International Conference on Information Systems for Crisis Response and Management (ISCRAM), Lisbon, Portugal, 8–11 May 2011.

- López-Cózar, R.; Callejas, Z. Multimodal dialogue for ambient intelligence and smart environments. In Handbook of Ambient Intelligence and Smart Environments; Springer Science & Business Media: Berlin, Germany, 2010; pp. 559–579.

- Bongartz, S.; Jin, Y.; Paternò, F.; Rett, J.; Santoro, C.; Spano, L.D. Adaptive user interfaces for smart environments with the support of model-based languages. In Proceedings of the International Joint Conference on Ambient Intelligence, Pisa, Italy, 13–15 November 2012; pp. 33–48.

- Jaber, R.N.; AlTarawneh, R.; Humayoun, S.R. Characterizing Pairs Collaboration in a Mobile-equipped Shared-Wall Display Supported Collaborative Setup. arXiv 2019, arXiv:1904.13364.

- Humayoun, S.R.; Sharf, M.; AlTarawneh, R.; Ebert, A.; Catarci, T. ViZCom: Viewing, Zooming and Commenting Through Mobile Devices. In Proceedings of the 2015 International Conference on Interactive Tabletops & Surfaces (ITS ’15), Madeira, Portugal, 15–18 November 2015; ACM: New York, NY, USA, 2015; pp. 331–336.

- Yang, J.; Yang, W.; Denecke, M.; Waibel, A. Smart sight: A tourist assistant system. In Proceedings of the Third International Symposium on Wearable Computers, San Francisco, CA, USA, 18–19 October 1999; p. 73.

- Collerton, T.; Marrella, A.; Mecella, M.; Catarci, T. Route Recommendations to Business Travelers Exploiting Crowd-Sourced Data. In Proceedings of the International Conference on Mobile Web and Information Systems, Prague, Czech Republic, 21–23 August 2017; pp. 3–17.

- Flood, D.; Germanakos, P.; Harrison, R.; McCaffery, F.; Samaras, G. Estimating Cognitive Overload in Mobile Applications for Decision Support within the Medical Domain. In Proceedings of the 14th International Conference on Enterprise Information Systems (ICEIS 2012), Wroclaw, Poland, 28 June–1 July 2012; Volume 3, pp. 103–107.

- Jourde, F.; Laurillau, Y.; Moran, A.; Nigay, L. Towards Specifying Multimodal Collaborative User Interfaces: A Comparison of Collaboration Notations. In International Workshop on Design, Specification, and Verification of Interactive Systems; Springer: Berlin/Heidelberg, Germany, 2008; pp. 281–286.

- McGee-Lennon, M.R.; Carberry, M.; Gray, P.D. HECTOR: A PDA Based Clinical Handover System; DCS Technical Report Series; Technical Report; Department of Computing Science, University of Glasgow: Glasgow, UK, 2007; pp. 1–14.

- Iyengar, M.; Carruth, T.; Florez-Arango, J.; Dunn, K. Informatics-based medical procedure assistance during space missions. Hippokratia 2008, 12, 23.

- Iyengar, M.S.; Florez-Arango, J.F.; Garcia, C.A. GuideView: A system for developing structured, multimodal, multi-platform persuasive applications. In Proceedings of the 4th International Conference on Persuasive Technology, Claremont, CA, USA, 26–29 April 2009.

- Marx, M.; Carter, J.; Phillips, M.; Holthouse, M.; Seabury, S.; Elizondo-Cecenas, J.; Phaneuf, B. System and Method for Developing Interactive Speech Applications. U.S. Patent US6173266B1, 9 January 2001.

- Hedin, J.; Meier, B. Voice Control of a User Interface to Service Applications. U.S. Patent US6185535B1, 6 February 2001.

- Sharma, R.; Yeasin, M.; Krahnstoever, N.; Rauschert, I.; Cai, G.; Brewer, I.; MacEachren, A.M.; Sengupta, K. Speech-gesture driven multimodal interfaces for crisis management. Proc. IEEE 2003, 91, 1327–1354.

- Potamianos, G. Audio-visual automatic speech recognition and related bimodal speech technologies: A review of the state-of-the-art and open problems. In Proceedings of the 2009 IEEE Workshop on Automatic Speech Recognition & Understanding, Merano, Italy, 13–17 December 2009; p. 22.

- Hansen, T.; Eklund, J.; Sprinkle, J.; Bajcsy, R.; Sastry, S. Using smart sensors and a camera phone to detect and verify the fall of elderly persons. In Proceedings of the 3rd European Medicine, Biology and Engineering Conference, Prague, Czech Republic, 20–25 November 2005.

- Lisetti, C.; Nasoz, F.; LeRouge, C.; Ozyer, O.; Alvarez, K. Developing multimodal intelligent affective interfaces for tele-home health care. Int. J. Hum.-Comput. Stud. 2003, 59, 245–255.

- Cohn, J.F.; Kruez, T.S.; Matthews, I.; Yang, Y.; Nguyen, M.H.; Padilla, M.T.; Zhou, F.; la Torre, F.D. Detecting depression from facial actions and vocal prosody. In Proceedings of the 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009; pp. 1–7.

- Mumolo, E.; Nolich, M.; Vercelli, G. Pro-active service robots in a health care framework: Vocal interaction using natural language and prosody. In Proceedings of the 10th IEEE International Workshop on Robot and Human Interactive Communication. ROMAN 2001 (Cat. No.01TH8591), Paris, France, 18–21 September 2001; pp. 606–611.

- Cohen, P.R.; Johnston, M.; McGee, D.; Oviatt, S.; Pittman, J.; Smith, I.; Chen, L.; Clow, J. QuickSet: Multimodal Interaction for Distributed Applications. In Proceedings of the Fifth ACM International Conference on Multimedia (MULTIMEDIA ’97), Seattle, WA, USA, 9–13 November 1997; ACM: New York, NY, USA, 1997; pp. 31–40.

- Fleury, A.; Vacher, M.; Noury, N. SVM-Based Multimodal Classification of Activities of Daily Living in Health Smart Homes: Sensors, Algorithms, and First Experimental Results. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 274–283.

- Billinghurst, M.; Savage, J.; Oppenheimer, P.; Edmond, C. The expert surgical assistant. An intelligent virtual environment with multimodal input. Stud. Health Technol. Inform. 1996, 29, 590–607.

|  | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | Q11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| User1 | 4 | 3 | 4 | 3 | 2 | 3 | 4 | 4 | 4 | 3 | 3 |
| User2 | 4 | 3 | 4 | 2 | 4 | 2 | 2 | 3 | 2 | 3 | 3 |
| User3 | 5 | 3 | 4 | 3 | 5 | 2 | 5 | 4 | 5 | 4 | 4 |
| User4 | 4 | 4 | 4 | 3 | 3 | 4 | 4 | 4 | 3 | 3 | 4 |
| User5 | 3 | 3 | 4 | 2 | 3 | 4 | 4 | 4 | 4 | 3 | 4 |
| User6 | 3 | 4 | 5 | 3 | 3 | 5 | 5 | 4 | 4 | 4 | 4 |
| User7 | 3 | 4 | 4 | 3 | 4 | 4 | 5 | 4 | 4 | 4 | 4 |
| Avg | 3.71 | 3.43 | 4.14 | 2.71 | 3.43 | 3.43 | 4.14 | 3.86 | 3.71 | 3.43 | 3.71 |

|  | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | Q11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| User1 | 4 | 4 | 4 | 4 | 5 | 3 | 4 | 4 | 4 | 4 | 4 |
| User2 | 4 | 4 | 5 | 3 | 5 | 2 | 3 | 4 | 2 | 4 | 3 |
| User3 | 3 | 3 | 3 | 4 | 4 | 3 | 4 | 4 | 3 | 3 | 4 |
| User4 | 5 | 4 | 3 | 2 | 4 | 5 | 5 | 4 | 4 | 5 | 4 |
| User5 | 1 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| User6 | 3 | 5 | 4 | 5 | 4 | 5 | 4 | 4 | 4 | 5 | 5 |
| User7 | 3 | 4 | 5 | 5 | 4 | 4 | 5 | 4 | 5 | 4 | 4 |
| Avg | 3.29 | 4.14 | 4.14 | 4.00 | 4.43 | 3.86 | 4.29 | 4.14 | 3.86 | 4.29 | 4.14 |
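As a quick consistency check on the questionnaire data, the sketch below recomputes the Avg rows from the individual ratings. It assumes that each Avg value is the arithmetic mean of the seven users' ratings for that item, rounded to two decimals; `FIRST_TABLE`, `SECOND_TABLE` and `item_means` are illustrative names that simply follow the order in which the two tables appear.

```python
from statistics import mean
from typing import Dict, List

# Ratings copied from the two tables above, in their order of appearance:
# one row per user (User1..User7), one column per item (Q1..Q11).
FIRST_TABLE: List[List[int]] = [
    [4, 3, 4, 3, 2, 3, 4, 4, 4, 3, 3],
    [4, 3, 4, 2, 4, 2, 2, 3, 2, 3, 3],
    [5, 3, 4, 3, 5, 2, 5, 4, 5, 4, 4],
    [4, 4, 4, 3, 3, 4, 4, 4, 3, 3, 4],
    [3, 3, 4, 2, 3, 4, 4, 4, 4, 3, 4],
    [3, 4, 5, 3, 3, 5, 5, 4, 4, 4, 4],
    [3, 4, 4, 3, 4, 4, 5, 4, 4, 4, 4],
]
SECOND_TABLE: List[List[int]] = [
    [4, 4, 4, 4, 5, 3, 4, 4, 4, 4, 4],
    [4, 4, 5, 3, 5, 2, 3, 4, 2, 4, 3],
    [3, 3, 3, 4, 4, 3, 4, 4, 3, 3, 4],
    [5, 4, 3, 2, 4, 5, 5, 4, 4, 5, 4],
    [1, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5],
    [3, 5, 4, 5, 4, 5, 4, 4, 4, 5, 5],
    [3, 4, 5, 5, 4, 4, 5, 4, 5, 4, 4],
]


def item_means(rows: List[List[int]], digits: int = 2) -> Dict[str, float]:
    """Mean rating of each item over all users, rounded for reporting (the 'Avg' row)."""
    n_items = len(rows[0])
    return {f"Q{i + 1}": round(mean(row[i] for row in rows), digits)
            for i in range(n_items)}


if __name__ == "__main__":
    print(item_means(FIRST_TABLE)["Q4"])   # 2.71
    print(item_means(SECOND_TABLE)["Q5"])  # 4.43
```

With seven respondents every mean is a multiple of 1/7, so a reported average that is not of the form k/7 (after rounding) signals a transcription or rounding slip in either the ratings or the Avg row.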

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Catarci, T.; Leotta, F.; Marrella, A.; Mecella, M.; Sharf, M. Process-Aware Enactment of Clinical Guidelines through Multimodal Interfaces. Computers 2019, 8, 67. https://doi.org/10.3390/computers8030067


Chicago/Turabian Style: Catarci, Tiziana, Francesco Leotta, Andrea Marrella, Massimo Mecella, and Mahmoud Sharf. 2019. "Process-Aware Enactment of Clinical Guidelines through Multimodal Interfaces" Computers 8, no. 3: 67. https://doi.org/10.3390/computers8030067
