Abstract

In this paper, a set of techniques used for downsampling and upsampling of 2D images is analyzed on various image datasets. The comparison takes into account a significant number of interpolation kernels, their parameters, and their algebraical form, focusing mostly on linear interpolation methods with symmetric kernels. The most suitable metrics for measuring the performance of upsampling and downsampling filters’ combinations are presented, discussing their strengths and weaknesses. A test benchmark is proposed, and the obtained results are analyzed with respect to the presented metrics, offering explanations about specific filter behaviors in general, or just in certain circumstances. In the end, a set of filters and parameters recommendations is offered based on extensive testing on carefully selected image datasets. The entire research is based on the study of a large set of research papers and on a solid discussion of the underlying signal processing theory.

1. Introduction

Downsampling of 2D images is a technique employed in order to reduce the resolution of an input image. This is most helpful for reducing the storage size of images while preserving as much of their information as possible. Upsampling is the reverse process of the former, and it consists of obtaining an output image of a higher resolution than that of the input. In practice, the focus lies on obtaining a high confidence image—one that presents no unwanted artifacts and preserves high levels of detail.

The problem referenced throughout this paper is also known as interpolation, and it resides in inferring a continuous function from a discrete set of values. The interpolation problem is not a new one. Meijering [1] describes the history of interpolation techniques from the Babylonian astronomers up to the modern adaptive and content-aware methods.

Research on interpolation techniques is of high value, especially in the medical imaging domain, as shown by Thevenaz et al. [2], where the required quality and resolution of obtained images—such as magnetic resonance imaging (MRIs)—must be as high as possible. Reconstructions of non-uniformly sampled volumes, such as that of viruses, are also accomplished with the aid of interpolation. Some techniques such as CAD (computer-assisted diagnostics) and CAS (computer-assisted surgery) rely on certain resolution constraints, either for identifying patterns in diagnostics or for providing high fidelity imaging for specialists.

As pointed out by Meiijering et al. in [3], the study of image interpolation in medical imaging research is of extreme importance for reconstructing accurate samples of CTs (computed tomography), MRIs, DSAs (digital subtraction angiography), and PETs (positron emission tomography).

Even though most of the research performed in regards to interpolation is tied to the medical imaging field, research has also been performed on various other types of images. The metrics used in either of them are dependent on several aspects regarding their application. Mitchell and Netravalli [4] subjectively tested the effectiveness of their filtering techniques with many samples shown to multiple users. This way, they showed some thresholds and conditions that their proposed interpolation kernel must obey in order to be satisfying to the human eye.

More quantitative approaches in evaluating interpolation results are subject to active research. However, one can distinguish evaluation methods based on the frequency response of the image and on its spatial response. A survey conducted by Amanatiadis and Andreadis [5] shows different approaches towards evaluating the quality of the interpolation algorithm in use. We note that Wang et al. [6] argue that the classical usage of MSE (mean squared error) and PSNR (peak signal-to-noise ratio) as metrics for image quality assessment does not cover the localization of errors and fails to cover perceptual measurements based on the HVS (human visual system). Mughal et al. [7] also discusses the usage of SSIM (structural SIMilarity) and proposes a weighted geometric mean from luminance, contrast, and structural similarity of different images. Wang et al. [6] show that Weber’s law [8] is respected with regards to the perceptual change in the visual stimulus introduced by the error signal against the original image. While SSIM is a significant quality assessment technique that involves human visual perception in the process of evaluating errors, it is still a 2D image of the spatial distribution of error with regards to the originally sampled 2D signal. For evaluation purposes, a scalar result quantifying the image quality globally is required. The MSSIM (mean structural SIMilarity) is also presented in [6] as a means of globally describing the impact error signals on a 2D visible image. The author proposes the following formulation of the SSIM:

where:

- The signals x and y are corresponding windows inside the original image,

- is the mean of the signal x,

- is the standard deviation of the signal x,

- is the covariance of the two signals x and y,

- and are two constants taken for stability purposes in the case where either term of the denominator is close to zero.

Al-Fahoum and Reza [9] argue for a better method of quantifying image quality, stating that, even though MSE and its counterparts provide a good measurement of overall deviation from one image to another, they could provide a quantitative measurement for the edge intensity of an image, PEE (percentage edge error). They define this measurement as the difference between the ES (edge sharpness) of the original image and that of the processed image normalized by the ES of the original image and , where EI is the edge intensity of a given point. The procedure of determining the EI is further described in [9] and takes into account edge intensities on both rows and columns. Al-Fahoum and Reza [9] also propose a subjective quality index, the mean opinion score for characterizing the difference between two images on a scale from zero to five that they further utilize to show the degree of similarity between two images.

Another technique commonly employed in terms of evaluating the quality of the filters for both downsampling and upsampling is that of computing the response of the filter and comparing it to the ideal low-pass filter characteristics. In this concern, please note that an evaluation of an interpolation kernel is given by Schaum [10], who shows the aforementioned properties of well-performing interpolation kernels.

Moller et al. [11] propose a Taylor series approach to signal filter design, which aims at reconstructing derivatives and minimizing the error in the acception of Taylor series expansion of a given interpolated signal from its samples. Not only is interpolation used for upsampling/downsampling, but it is also used for applying Euclidean geometric transforms to different images in 2D. Thus, many of the envisaged interpolation techniques yield different results depending on the application (upsampling/downsampling, rotation).

Some surveys in the field of image interpolation convolution kernels are described by Meijering [1] and usually take on a quantitative approach to measuring the quality of given transforms. Parker et al. [12] present a first survey on the topic of interpolation that shows the results obtained by certain polynomial filters and analyzes their results in the time and frequency domain. In [13], Lehman and Gonner continue with a survey regarding the common methods for the interpolation of medical images and analyze the size and shapes of their frequency response. They propose a means of measuring the quality of the interpolation using the normalized cross-correlation coefficient between the original image and the transformed and then with the reversed transformed applied to it. The higher this coefficient is, the better the result is. They also point out some key aspects regarding interpolation, the differences between interpolation and approximation methods, and they show the known integral-of-one principle of an interpolator, due to which the direct current component of the signal should be preserved as a consequence of the interpolation transform. In other words, Lehmann shows through several examples that, should the energy of the window function involved in the interpolation process be higher than the unit, then light areas of the image are bound to suffer from degradation, while a lower than unit total energy does more damage to the dark portions of the image.

Thevenaz et al. [2] perform another survey and present several interpolation kernels, such as Meijering [14], Schaum [10], German [15], Keys [16], Dodgson [17], and O-MOMS (optimal-maximal order minimal support) [18,19], while comparing them to the more classical ones based on the apodization of the sinc function.

Meijering [3] perfects a survey on different interpolation methods while taking into account more window functions than his predecessors and presents his conclusions with regards to the RMSE (root mean squared error) between the undersampled and the upsampled images, focusing on medical images and evaluating the given kernels on various experiments (resampling, rotation, translation, etc.).

Sudheer [20] presents a survey comprising multiple approaches towards image interpolation while keeping in mind the linear kernel-based approach and describing several other non-linear techniques for obtaining a super-resolution image from a normal resolution one.

Many other approaches other than using linear reconstruction kernels (interpolation functions) are described in [21,22,23,24,25], to name just a few.

The purpose of the current paper is to present and evaluate some of the existing convolution-based interpolation methods. We aim to provide a comprehensive list of methods, classifying them based on their method and means to evaluate their behavior. In order to evaluate the quality of a given filter, computing and comparison of both MSE/PSNR and MSSIM are employed.

where:

- is the original signal,

- is the resampled image,

- is the maximum value of the signal x.

In the case of a bi-dimensional signal (i.e., an image), the definitions in Equation (2) are similar.

The 2D resampling tests are applied on a significant dataset of images ranging from text, pictures, biological imagery, aerial views, textures, and artificial imagery. For this purpose, we use different databases described in Section 4.

The underlying objective is the provision of an optimal filtering technique quality-wise on each given dataset. This paper’s further objective is providing means to choose an appropriate method for interpolation in more specialized applications, such as image compression (see Section 6 for current and further development on the matter).

The rest of the paper is structured as follows: in the second section, the interpolation problem is defined, and the mathematical aspects of its workings are further detailed by providing the used formulas and notations that are used throughout this paper. In the third section, currently available methods are discussed and dissected in terms of linear interpolation methods (interpolation kernel approach), which are segregated into sinc-windowing techniques with the various window polynomial interpolation. At the end of the third section, insights into adaptive non-linear approaches towards interpolation are given in a succinct fashion. The fourth section consists of the results obtained on the described datasets and a comparison of the quality of the underlying filtering technology employed. Please note that only the interpolation kernel approach is evaluated, while the non-linear techniques succinctly described in the third section are not in the scope of the current paper. In the fifth section, the conclusions of this survey are presented with regards to the paper’s main objectives, while in the sixth section, further development opportunities are presented in regards to the paper’s main topic.

2. Problem Definition

This section comprises several theoretical considerations regarding the topic of downsampling and upsampling. Proving the theorems and lemmas involved throughout this section is out of the scope of this paper.

This section is structured such that in the first part, we give empirical qualitative criteria that are emphasized for the design of proper interpolation filters; the second describes a frequency domain approach. In the third part, we describe polynomial-based techniques, while in the fourth, we give some insights into the design of adaptive interpolation techniques.

In his thesis, Wittman [21] describes the objectives of an interpolation technique while making the distinction between the desired visual characteristics and the computational stability criteria that ought to be accomplished in order to claim success over a resampling schema. In this paper, we enumerate through the properties proposed by Wittman and succinctly describe some of their meanings:

- Geometric invariance—keeping geometrical information about the image subject

- Contrast invariance

- Noise—no supplementary noise addition to the original image

- Edge preservation

- Aliasing

- Over-smoothing

- Application awareness

- Sensitivity to parameters

The theoretical support provided for this paper is inspired by Oppenheim’s classic book on discrete time signal processing [26], as well as on the course by Serbanescu et al. [27], especially with respect to the topic of multirate signal processing. An introductory text on wavelet analysis is provided in [28] since numerous wavelet-based approaches to interpolation have been developed; an example in that concern is a paper by Nguyen and Milanfar [29].

Let be a discrete time signal where x is a continuous time signal . We denote T the sampling rate of the signal x and let be its sampling frequency. We state that is the Z-transform of discrete-time sequence and denote this fact as , . Let us define the convolution of analog signals x and h as . In this respect, taking into account that a discrete-time signal x is the sampling of an original analog signal at sampling intervals , we now derive . Assuming that x is a bandlimited signal at the frequencies of , then by the Nyquist sampling theorem, one can obtain the original analog signal from the given samples if the sampling frequency .

Please note that from here on, the terms interpolation and decimation are used interchangeably with those of upsampling and downsampling, respectively. We define in Figure 1 the schema described by Youssef in [30] for the operation of downsampling/upsampling for a given 1D signal input:

Figure 1.

Decimation/interpolation schema.

In this schema, we take a discrete time signal, the box represents the operation of decimation by a factor of n, the box the operation of interpolation by a factor of n, while the two represent the filters used as decimation preprocessing and interpolation post-processing, respectively. The internals of the following boxes are described in terms of time and then frequency response. The decimator is described by the following transfer function:

and the interpolator by:

For simplicity, we assume that in our case.

The two aforementioned filters are low-pass filters, and their application on the input signal is a convolution operation. From the properties of the Z-transform, we find that , where and , x and h are discrete time signals.

Let be the Z-transform of the input signal . We deduce that , . However, since w is defined as zero on the odd indices, we have . We use in order to emphasize the frequency response (as far as the Fourier transform is concerned) of the chain of filters described above.

Following the reasoning of [30], we state that the “useful” term in the above equation is precisely the first one and can consider that the second term appears because of the inherent aliasing nature of the high-frequency response. In order for the above frequency response of the entire system to be as close as possible to X(z) and in order to accomplish this desiderate, we argue that the first term of the above equation be as close as possible to X(z) while the second one be as close as possible to zero. In reality, it is impossible to accomplish this condition on the entire range. Therefore, as described by [30], one can deduce the following:

Theorem 1.

In the ideal case, filters g and p are low-pass filters with pass-band equals to . In this situation, the signal preserves the highest possible range of low frequencies and keeps the aliasing distortion as close to zero as possible.

We observe that, as common grounds for building a signal interpolator, one must approximate (as well as possible) the shape of a low-pass filter. This is a consequence of the Nyquist theorem stated above. That being said, for a bandlimited signal, it is safe to assume that the analog signal from which it derives can be reconstructed exactly using an ideal low pass filter with cutoff frequency at .

The problems that arise using this generic signal processing approach reside in that, even though most of the natural images present the highest concentration of energy among the low-frequency components, there are several higher frequencies that get removed from the original image, which can cause loss of detail at the level of discontinuities in the image.

Another factor that forbids the use of ideal low-pass filters is that their impulse response is infinite in the time domain. Thus, the time-domain of the ideal low-pass filter is given by , where .

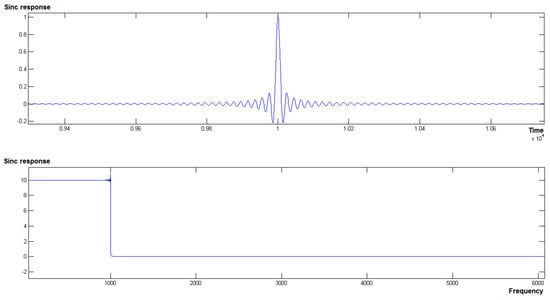

The shape of the sinc filter in the spatial domain against its shape in the frequency domain is shown in Figure 2. We note that an ideal low-pass filter cannot be obtained, since the sinc function has infinite support. One can observe the ripples around the cutoff frequency of the frequency domain of the aforementioned plot; from these, one can deduce that even though a large support was indeed employed when writing the sinc function [−1000, 1000] with a sampling rate of 0.1, one cannot ensure ideal low-pass filtering for this design.

Figure 2.

Truncated sinc function plot.

The general idea behind the design of frequency response-based filters is to try and approximate, as accurately as possible, the sinc function in the time domain while at the same time keeping the function in the FIR (Finite Impulse Response filter) constraints, since an infinite impulse response filter is not achievable in practice due to its infinite support and lack of causality (i.e., it takes into account many values from the future infinitely). In that respect, one can determine finite time computation of the convolution between the input signal and the convolution kernel, as well as come as close as possible to the ideal case described by the Nyquist sampling theorem.

As can be derived from Figure 2, we note that, even though the impulse has a large enough support domain, it still presents malformations around the cutoff frequency. Therefore, using a simple sinc function cutoff (taking only a limited time domain for the function) yields bad results in terms of quality over the amount of computation necessary.

The method of choice for accomplishing the tradeoff between the number of samples taken into account, the resolution of the filter, as well as its behavior in the stopband, is the technique of windowing or apodization [26]. A window is a function defined on the real numbers with the property that it is non-zero on a given interval and is zero outside the named interval. We continue this section with a succinct presentation of the windowing techniques and the tradeoffs one has to accomplish in order to create an efficient—and in the meantime, qualitative—approximation for the sinc filter in the frequency domain.

A windowed-sinc kernel is a function defined as:

Transforming into the frequency domain, a multiplication becomes convolution. Therefore, any sharp discontinuities result in the existence of significant high-frequency components that propagate for the entire length of the signal, because and, reciprocally, .

Three criteria define a well-performing windowed-sinc filter:

- Reduced roll-off—reducing the transition frequency difference between the passband and the stopband,

- Low ripples in the passband and the in the stopband—the deviations from the ideal amplification one in the passband and respectively zero in the stopband are to be minimized in order to obtain closeness to the ideal filter,

- Low energy in the stopband—that is to say, filters ought to produce most of their energy in the passband and close to zero in the stopband.

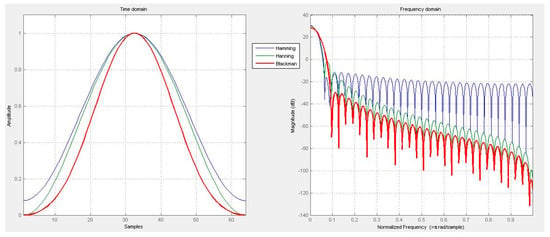

The most common windows employed in the field of finite impulse response filter design are the Hamming, the Blackman, and the Hanning windows. Many other windowing functions exist; they are described in Section 3. However, in Figure 3, we propose a comparison between a Hamming, a Hanning, and a Blackman window:

Figure 3.

Comparison between Hamming, Hanning, and Blackman windows.

From Figure 3, one can notice that the best performance in terms of stopband attenuation is obtained with the Blackman window. However, the fastest roll-off is obtained through a Hamming window, even though the latter has lower stopband attenuation. As a general rule in designing finite impulse response filters, one has to take into account the usage of a given function based on the underlying application. For example, in order to be able to better distinguish nearby frequencies, one should use the fastest transition filter in terms of passband to stopband roll-off. Such a window is the rectangular window filter or the mere truncating of the sinc function at a given sample.

These desiderates cannot be simultaneously accomplished. However, increasing the number of kernel samples usually reduces the roll-off and trades computation complexity for better filter approximation. Thorough explanations on the topic are offered in [26,31].

Appledorn [32] has a different approach to signal interpolation that involves the use of the Gaussian symmetric interpolation kernel of zero mean and linear combinations of its derivatives in order to approximate several desired characteristics resembling those of the ideal sinc kernel in both frequency and time-domain. He also brings forward results regarding the performances obtained by his proposed method.

We describe the basics of polynomial interpolation. Polynomial interpolants for discrete samples of data have been employed since the early days in mathematics. Among the best-known methods for polynomial interpolation are ones that belong to Lagrange and Newton. In fact, the latter is more adapted for computer processing, since it provides an iterative means to computing further degrees without needing to recompute the entire polynomials [33]. Important mathematicians also have their names related to polynomial forms of interpolation (Hermite, Bernstein, and Neville, to name a few).

For the purpose of this paper, we emphasize the specific image processing related matters taken into consideration. Besides the basic box and the linear interpolation kernel, there are other methods such as cubic interpolation, B-spline interpolation, piecewise symmetrical n-th order polynomials [14], and BC-splines [4], etc.

Generalizing the concept of polynomial interpolation and keeping the smoothness between several samples of interpolated data, Meijering et al. [14] manage to prove that a number of n-2 continuous derivatives are required to be continuous at the knots in order to characterize the underlying polynomials with only one parameter .

The theory of approximation comes as a handy tool for developing highly efficient yet well-behaved filters for the interpolation of data. The work of Thevenaz and Unser [2,34] provides the theoretical background for generalizing the interpolation problem with respect to the choice of more general approximation filters that are employed to perform interpolation with all the due restrictions and approximate arbitrary function of a given order. The proposed approaches employ a rewrite of an unknown function in a basis of approximating functions, as depicted in , while imposing a secondary condition that the samples match at integer values. Meanwhile, in classical interpolation, the parameters are precisely the samples of the initial function at integer nodes and the supplementary condition that and . A highly revealing paper on the matter is presented in [35], where examples of B-spline interpolation are also given.

The milder assumption resolves to solve a linear system of equations in order to find the exact coefficients after the imposition of the condition that . Furthermore, more efficient digital signal filtering techniques are described by Thevenaz and Unser in their further work [2,36,37]. Families of such functions are the B-spline functions [37], the Schaum [5] interpolator, and the O-MOMS approximation kernel [18].

In terms of B-spline interpolation, we emphasize the work of Unser [34,36,37] that strongly encourages the use of B-splines in the fields of signal and image processing, basing his works on the results previously obtained by Schoenberg [38]. The main idea behind this method resides in the expansion of a given n-th order spline polynomial in a sum of base spline polynomials:

By context-based methods, we denote the resampling techniques that do not rely on linear interpolation kernels and the application with the convolution operator. In this respect, one can emphasize multiple classes of approaches. Since defining each one of them is not in the scope of this paper, we enumerate some significant results and succinctly describe their functioning.

NEDI [24] is a statistical signal processing approach towards image interpolation. It overcomes issues such as directionality of a 2D image and surpasses the extreme calculation complexity of PDE solvers for deriving edges. It is based on the definition of an edge as a portion of the image space where an abrupt transition occurs in one direction and smooth transitions occur in the perpendicular one. It utilizes a Gaussian process assumption and the covariance matrix invariance on the image of interest and derives the interpolation weights of the covariance matrix for a set of points surrounding the value to be interpolated. As a matter of fact, the method proposed by Li is made even more computationally efficient by using classic interpolation in non-edge areas and the above approach for edge areas.

Wittman [21] proposes in his thesis a classification of existing interpolation methods other than linear convolutions, focusing on a mathematical approach to image processing. He further exemplifies each approach with a given methodology. We resume to succinctly look over the main methods proposed in [21]. PDE-based methods (anisotropic heat diffusion, Navier-Stokes, Perona-Malik), the variation of energy approaches (Mumford-Shah, Total Variation, active contours, etc.), multiscale analysis (wavelet approaches, Fourier analysis, etc.), machine learning (unsupervised learning) and statistical methods (Bayesian inference, etc.) are presented therein.

Hwang and Lee [39] also propose a method for improving the quality of linear and bicubic interpolation, stressing on the dependency of the weights involved in the process of interpolation to the local gradient measurement in all directions.

In the current paper, we focus on linear interpolation filters, which represent an efficient approach towards image upsampling, providing good trade-offs between quality and computational complexity. Our evaluation methodology is as follows:

| function RESAMPLE_EVAL(Image, Ratio, G, P): // Image is a 2D image // Ratio is the sampling ratio // G is the downsampling kernel and // P is the upsampling kernel Ds = Downsample(G(Image), Ratio) Us = P(Upsample(Ds, Ratio)) Return (MSSIM(Image, Us), MSE(Image, Us), PSNR(Image, Us), PEE(Image, Us)) |

For the approximating functions described in this chapter, the evaluation we take is slightly different. In this concern, the general architecture for evaluating such techniques is employed upon downsampling a “classical” interpolation (low-pass) filter and performs the required computations in the upsampling, adding a supplementary pre-filtering step to derive the coefficients implied therein. The technique denoted above is thoroughly described in [37].

In the next section, we present kernel functions for linear interpolation that we analyze in Section 4 and show their algebraic forms as well as their support interval. We also present the references of their definition as well as other Supplementary Information and comments regarding the choice of their parameters.

3. Available Methods

Firstly, in Table 1, we enumerate existing polynomial filtering techniques and give their algebraic expression. In the second part, we present windowed-sinc functions.

Table 1.

Polynomial interpolators and approximates.

We further present windowed-sinc techniques for signal interpolation. We describe only the window function involved without multiplying with the actual sinc function. To define terminology for the following table, we take:

- the window size,

- ,

- is a point on the sequence representing the window.

We assume that, by definition of the windowing technique, a window is defined as zero outside its given boundaries. In Table 2, we give analytical expressions of each window only on the interval where it is not null:

Table 2.

Window functions.

4. Results and Discussions

In order to test the performances of some existing approaches, several considerations are made in order to ensure consistency regarding the testing procedure as well as reduce the complexity of the required calculations. For that respect, we successfully implement a number of kernels from the list depicted above. The language of choice is MATLAB since it provides a higher-level application programming interface (API) for implementing image processing techniques. The built-in function imresize provides an extremely flexible fashion of performing the required operations with minimal effort.

Throughout the testing and the implementation stages of the current paper, we assumed that the scaling factor (for the downsampling and the upsampling operation) is to be of a power of two. In the case of our intensive testing method, we choose two and four as the scale factors to be taken into account. Also, in order to produce comparable results, the initial matrix representing the image is truncated to a size multiple by four in both width and height. The measurements employed on the image itself—MSE, PSNR, MSSIM—are relying on the fact that the original and the final images have the same size.

The aim of our analysis is to take into account multiple downsampling as well as upsampling techniques and conclude on the way they perform on given datasets. Since the operation is more dependent on the upsampling technique than on the downsampling technique, we assume that fewer downsampling methods are required in order to offer an overview of their performances. Also, due to the extreme computational costs, we choose to allow fewer degrees of freedom in the downsampling stage.

Since comparing temporal complexity is out of the scope of this paper, we provide a means of evaluating the temporal complexity of a given approach in terms of the width that the interpolation kernel is defined on. Since kernel data can be easily cached, pre-fetched, or looked up, the main complexity issue remains the window-size, since it determines the number of points that an interpolant takes into account when performing the weighting average.

The processing for performance measurements is performed onto the following datasets:

- Biological and medical imaging [50] (slice images through a frog’s body and their selection masks),

- Pictures [51] (some of the most widely-known images in image processing tasks; collection include images like mandrill, peppers, etc.),

- Textures [51] (natural textures and mosaics of textures with sharp transitions),

- Artificial imagery [51,52,53,54] (image documents, machine printed texts, complex layouts, etc.).

Further on, in Table 3, we present the evaluation matrix employed in the current approach. Each line represents an interpolation method.

Table 3.

Implemented methods.

For all the windowed-sinc functions, we use a separate parameter, namely the window width that also corresponds to the actual filter width and height. The values chosen for the given window width in these situations are in .

The implementation and testing are performed for each image in the dataset referenced, as in the following pseudocode:

| Function Analyze(Image): For scale = {2, 4} Foreach dmethod in METHODS: Foreach dparameterSet in ParameterSet(dmethod) Ds = Downsample(Image, 1 / scale, dmethod, dparameterSet); Foreach umethod in METHODS: Foreach uparameterSet in ParameterSet(umethod) Us = Upsample(Ds, scale, umethod, uparameterSet); [mse, psnr, mssim] = diff2(Us, Image); Write(mse, psnr, mssim); |

We call this experience preliminary since its results are not to be used to define specific behaviors for the given kernels but rather to predict behaviors that are to be tested in the proceedings of the experimentation.

The first surprising conclusion that comes ahead from analyzing the results obtained is that, usually, a Hamming downsampling window of size three determines very good performances for the overall image downsampling/upsampling method. The second parameter that determines good interpolation in what the upsampling is concerned with in regards to both PSNR and MSSIM is the window size. We notice that the best upsampling techniques usually consist of windowed-sinc functions with a long window support (11 is maximum). However, good results are also obtained with the aid of windows of shorter spatial support (such as seven or, even five). Since the number of tests performed is large, and following the results in their tabular format is cumbersome, we omit for the moment this raw analysis and proceed with a more fine-grained one with regards to the choice of a certain filter instead of another. We perform a set of experiments to denote the qualities and the defects of several candidates for the best upsampling filters. However, for completeness, the numerical results for the aforementioned performers with their corresponding values are presented in Supplementary Materials.

Experiment 1.

From the above observation, we take as downsampling filter the Hamming windowed-sinc with window size three and perform all the windowed sinc approaches mentioned with an increasing width from . We also take into account the B-splines of the same orders and the Appledorn Gaussian kernel of order five for the same window length. Finally, we set the scaling factor to be four. Let us take notice of the fact that some kernels stop performing as per expectations once the window is increased beyond a certain point. This is mainly due to the fact that the ripples induced by the sinc-shaped interpolation kernel create the “so-called” ringing artifacts that bring the image quality down.

Also, we note that the best performing interpolation kernel for the test-cases taken into account is the sinc function windowed by a sine window function. The analysis also reveals that the Welch and the sine window with a window-length of 11 also expose a good MSSIM value as well as a PSNR one. We also show that Lanczos windows are exposing good quality and monotonic characteristics with regards to the window length.

Experiment 2.

The second experiment we consider relies upon the validation of the previous observation that the Hamming window is indeed the best decimator to be employed in the process. We consider a fixed upsampling kernel—namely the windowed-sinc function with a Lanczos function of width four—and proceed with our reasoning as in the first experiment, except that at this moment, we vary the shape and width of the decimator instead of those of the interpolator. In this concern, we look up only for functions in the windowed-sinc family. After analyzing the measurements, it appears that our first assumption based on the preliminary experiment is close to that of a more thorough analysis. We notice good overall behaviors for the Hamming window, even though an anomaly tends to appear from the window of size three in that its performances are weaker than they are size five. That seems to be the case for multiple decimators, especially for the Lanczos filter, which appears to have a drastic turn for the worse as the window is increased for PSNR and MSSIM. The conclusion that can be drawn from this is that the best decimator achieved using this approach is the Hamming windowed-sinc function of width three. We also note that the almost linear increase of the flat top window might overcome its relatively poor results in the lower registry of the window size.

Experiment 3.

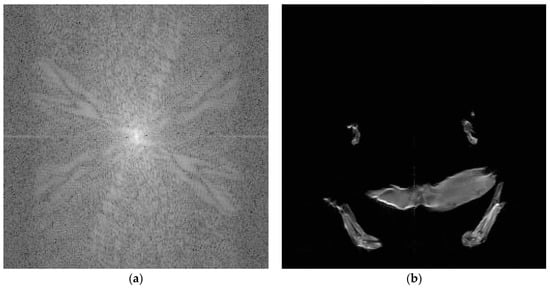

From Experiments 1 and 2, we take the best decimator/interpolator pair and apply them on more samples. Our aim is to discover the intrinsic image properties that determine a certain behavior. As performed in the previous experiments, we also use a scale of four to compare the results. We run the tests on multiple images from the dataset described in Section 2 using a Hamming windowed sinc of size three as the decimator and a sine windowed sinc of 11 as an interpolator. Some representative results are analyzed in Figure 4 and Figure 5.

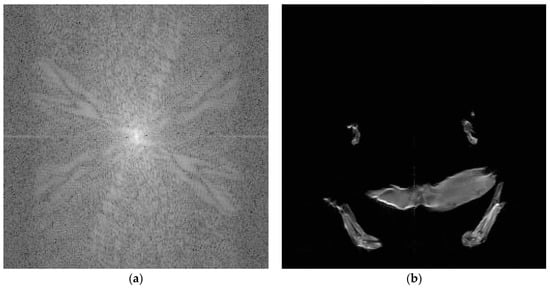

Figure 4.

Example of one image exhibiting bad interpolation results [peak signal-to-noise ratio (PSNR) = 30.61dB, mean structural SIMilarity (MSSIM) = 0.915] [50]. (a) representation in the frequency domain; (b) representation in the spatial domain.

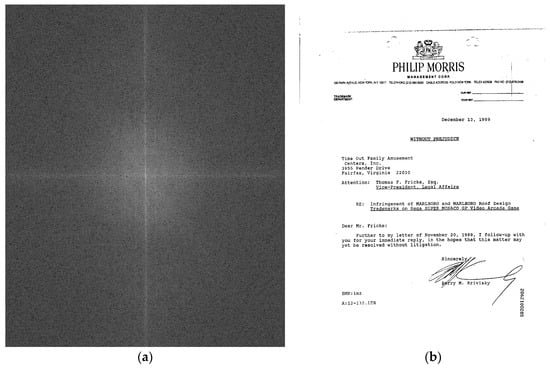

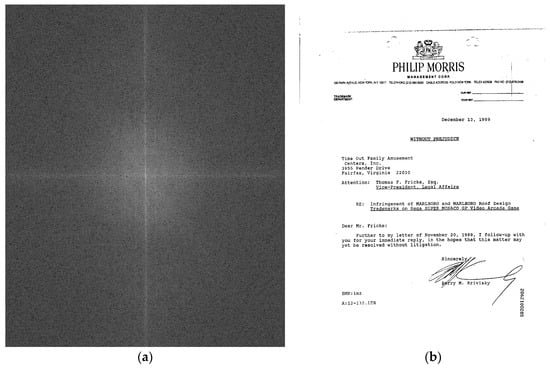

Figure 5.

Example of one image exhibiting good interpolation results (MSSIM = 0.99) [54]. (a) representation in the frequency domain; (b) representation in the spatial domain.

As we can easily notice from the previously shown analysis in the frequency domain, the image yielding good results has a very low-frequency spectrum. As most of its energy is concentrated in the center of the spectrum, little to no information is lost when low-pass filtering it as a result of the interpolation process.

The image that produces bad interpolation results has a wider bandwidth and is thus more susceptible to losses of information due to the low pass filter applied. We give the results of the respective interpolant in both cases in Figure 6.

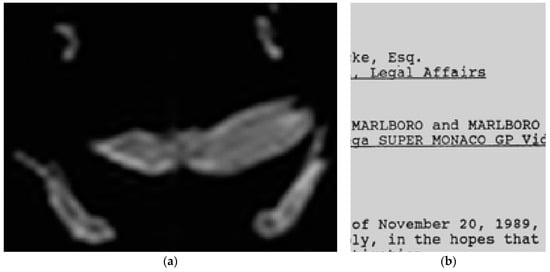

Figure 6.

Details of the interpolated versions of the images yielding bad and good results. Please note the blurriness of the “bad” image due to over-smoothing caused by the low pass filter. Since the “good” image is monochrome, the shades of gray normally would translate to white. Please note that the structure of the text is fully preserved and easily readable; (a) a detail of the image presented in Figure 4; (b) a detail of the image presented in Figure 5.

5. Conclusions

In this paper, theoretical aspects regarding the interpolation and decimation of 2D spatial images are presented, as well as an attempt at implementing various kernels for image downsampling and upsampling. Our focus resides in comparing certain techniques described in the signal processing and medical imaging literature and providing an optimal approach towards image resampling.

We emphasize the importance of the interpolators as well as that of the decimators used in the process and provide an algorithm for comparing several resampling techniques based on the restriction of knowledge of the ground truth—in our case, the original image.

The applications of the topics presented throughout this survey are numerous, ranging from medical imaging to lossy image compression. Many systematic approaches on the topic have been proposed in [1,2,3,13,20], to name a few. Although its roots lie in the digital signal processing, the theory of digital image interpolation approaches towards resampling of 2D signals have evolved well beyond. Modern approaches using PDEs, statistical processing, wavelet analysis, image inpainting, and artificial intelligence are of high interest [21,22,39]. The results obtained using the latter are proved to be more accurate, but the reticence for their usage lies in their much higher computational complexity.

Another topic of interest in the theory of interpolation resides in the liaisons with the approximation theory, as shown by Unser, Thevenaz, Schaum, and Blu et al. [10,18,34,36]. Their research has led to the development of the cardinal splines as first-order citizens in the world of interpolators. One must also be aware of the means of theoretically characterizing the performances of a given interpolation kernel, since many methods on the topic exist. Thus, frequency domain analysis together with Taylor series expansions is a notable approach to the matter.

Throughout the experiments, we observe the influence of the choice of decimators and interpolators for the task of image resampling. In this concern, our calculations show that the best decimators are variable-width windowed-sinc functions—especially the Hamming windowed-sinc with size three—while the interpolators that perform best are also windowed-sinc functions with higher window sizes, especially the sine windowed sinc function with width 11. B-splines also present good qualities in terms of interpolation.

6. Suggestions for Future Work

Future development on the matter may include a more thorough analysis of the existing techniques towards interpolation. In this respect, we stress on the centralization of more kernel-based techniques and also on the presentation of a unified method towards their characterizations.

Another aspect to be taken into account for further development on the matter is comparatively analyzing several adaptive (non-linear) methods for interpolation of sampled datasets and the tradeoffs that are accomplished between resampling quality and the computational complexity of the approach.

On the experimental side, a suggested approach could include testing subjective quality of the resampled image on given scales such as the ones used by Mitchell and Netravali in [4] or Al-Fahoum and Reza in [9].

Supplementary Materials

The following are available online at https://www.mdpi.com/2073-431X/8/2/30/s1, Table S1: Performance measurements of downsampling and upsampling filters’ combinations.

Author Contributions

Conceptualization, D.D. and C.-A.B.; Data curation, D.D. and C.-A.B.; Investigation, D.D. and C.-A.B.; Methodology, C.-A.B.; Software, D.D. and C.-A.B.; Validation, D.D. and C.-A.B.; Visualization, D.D.; Writing—original draft, D.D.; Writing—review & editing, C.-A.B.

Funding

This work was supported by a grant of the Romanian Ministry of Research and Innovation, CCCDI-UEFISCDI, project number PN-III-P1-1.2-PCCDI-2017-0689/“Lib2Life-Revitalizarea bibliotecilor si a patrimoniului cultural prin tehnologii avansate”/“Revitalizing Libraries and Cultural Heritage through Advanced Technologies”, within PNCDI III.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Meijering, E. A Chronology of Interpolation: From Ancient Astronomy to Modern Signal and Image Processing. Proc. IEEE 2002, 90, 319–342. [Google Scholar] [CrossRef]

- Thévenaz, P.; Blu, T.; Unser, M. Interpolation Revisited. IEEE Trans. Med. Imag. 2000, 19, 739–758. [Google Scholar] [CrossRef]

- Meijering, E.W.; Niessen, W.J.; Viergever, M.A. Quantitative Evaluation of Convolution-Based Methods for Medical Image Interpolation. Med. Image Anal. 2011, 5, 111–126. [Google Scholar] [CrossRef]

- Mitchell, D.; Netravali, A. Reconstruction Filters in Computer Graphics. Comput. Graph. 1988, 22, 221–228. [Google Scholar] [CrossRef]

- Amanatiadis, A.; Andreadis, I. A survey on evaluation methods for image interpolation. Meas. Sci. Technol. 2009, 20, 10. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- Mughal, B.; Muhammad, N.; Sharif, M.; Rehman, A.; Saba, T. Removal of Pectoral Muscle Based on Topographic Map and Shape-Shifting Silhouette. BMC Cancer 2018, 18. [Google Scholar] [CrossRef] [PubMed]

- Weber’s law, Encyclopædia Britannica Inc. 2016. Available online: https://en.wikisource.org/wiki/1911_Encyclop%C3%A6dia_Britannica/Weber%27s_Law (accessed on 20 April 2019).

- Al-Fahoum, A.S.; Reza, A.M. Combined Edge Crispiness and Statistical Differencing for Deblocking JPEG Compressed Images. IEEE Trans. Image Process. 2001, 10, 1288–1298. [Google Scholar] [CrossRef]

- Schaum, A. Theory and Design of Local Interpolators. Graph. Models Image Process. 1993, 55, 464–481. [Google Scholar] [CrossRef]

- Moller, T.; Raghu, M.; Yagel, R. Evaluation and Design of Filters Using a Taylor Series Expansion. IEEE Trans. Vis. Comput. Graph. 1997, 3, 184–199. [Google Scholar] [CrossRef]

- Parker, A.J.; Kenyon, R.V.; Troxel, D.E. Comparison of Interpolation Methods for Image Resampling. IEEE Trans. Med. Imag. 1983, 2, 31–39. [Google Scholar] [CrossRef]

- Lehmann, T.M.; Gonner, C.; Spitzer, K. Survey: Interpolation in Medical Image Processing. IEEE Trans. Med. Imag. 1999, 18, 1049–1075. [Google Scholar] [CrossRef] [PubMed]

- Meijering, E.H.; Zuiderveld, K.J.; Viergever, M.A. Image Reconstruction by Convolution with Symmetrical Piecewise nth-Order Polynomial Kernels. IEEE Trans. Image Process. 1999, 8, 192–201. [Google Scholar] [CrossRef]

- German, I. Short Kernel Fifth-Order Interpolation. IEEE Trans. Signal Process. 1997, 45, 1355–1359. [Google Scholar] [CrossRef]

- Keys, R. Cubic Convolution Interpolation for Digital Image Processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160. [Google Scholar] [CrossRef]

- Dodgson, N.A. Quadratic Interpolation for Image Resampling. IEEE Trans. Image Process. 1997, 6, 1322–1326. [Google Scholar] [CrossRef] [PubMed]

- Blu, T.; Thevenaz, P.; Unser, M. MOMS: Maximal-Order Interpolation of Minimal Support. IEEE Trans. Image Process. 2001, 10, 1069–1080. [Google Scholar] [CrossRef] [PubMed]

- Bozek, J.; Grgic, M.; Delac, K. Comparative analysis of interpolation methods for bilateral asymmetry. In Proceedings of the 52nd International Symposium ELMAR-2010, Zadar, Croatia, 15–17 September 2010; pp. 1–7. [Google Scholar]

- Sudheer, B.; Sreenivasa, M. A Survey on the Methods of Super-Resolution Image Reconstruction. Int. J. Comput. Appl. 2011, 15, 2. [Google Scholar] [CrossRef]

- Wittman, T. Mathematical Techniques for Image Interpolation; Report Submitted for Completion of Mathematics Department Oral Exam; Department of Mathematics, University of Minnesota: Minneapolis, MN, USA, 2005. [Google Scholar]

- Li, D.; Simske, S. Fast Single Image Super-resolution by Self-trained Filtering. In Proceedings of the 7th International Conference, ICIC 2011, Zhengzhou, China, 11–14 August 2011; pp. 469–475. [Google Scholar]

- Said, A. New Filters for Image Interpolation and Resizing; HPL-2007-179; Media Technologies Laboratory, HP Laboratories: Palo Alto, CA, USA, 2007. [Google Scholar]

- Li, X.; Orchard, M.T. New Edge-Directed Interpolation. IEEE Trans. Image Process. 2001, 10, 1521–1527. [Google Scholar] [PubMed]

- Reddy, K.; Reddy, K.L. Enlargement of Image Based Upon Interpolation Techniques. Int. J. Adv. Res. Comput. Commun. Eng. 2013, 2, 4631–4640. [Google Scholar]

- Oppenheim, A.V.; Schafer, R.W.; Buck, J.R. Discrete-time Signal Processing, 2nd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 1999. [Google Scholar]

- Serbanescu, A.; Serban, G.; Iana, G.V.; Oroian, T.; Rincu, I. Prelucrare Digitala a Semnalelor; Editura Universităţii din Piteşti: Pitesti, Romania, 2004. (In Romanian) [Google Scholar]

- Kon, M. Basics of Wavelets. Available online: http://math.bu.edu/people/mkon/Wavelets.pdf (accessed on 20 April 2019).

- Nguyen, N.; Milanfar, P. A Wavelet-Based Interpolation-Restoration Method for Superresolution. Circuits Syst. Signal Process. 2000, 19, 321–338. [Google Scholar] [CrossRef]

- Youssef, A. Analysis and Comparison of Various Image Downsampling and Unsampling Methods. In Proceedings of the DCC ’98 Data Compression Conference, Snowbird, UT, USA, 30 March–1 April 1998; p. 583. [Google Scholar]

- Smith, S.W. The Scientist and Engineer’s Guide to Digital Signal Processing, 2nd ed.; California Technical Publishing: San Diego, CA, USA, 1999. [Google Scholar]

- Appledorn, R.C. A New Approach to the Interpolation of Sampled Data. IEEE Trans. Med. Imag. 1996, 15, 369–376. [Google Scholar] [CrossRef] [PubMed]

- Vaseghi, S.V. Advanced Digital Signal Processing and Noise Reduction, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2000. [Google Scholar]

- Unser, M.; Aldroubi, A.; Eden, M. B-spline Signal Processing. I-Theory. IEEE Trans. Signal Process. 1993, 41, 821–833. [Google Scholar] [CrossRef]

- Getreuer, P. Linear Methods for Image Interpolation. 27 September 2011. Available online: http://dx.doi.org/10.5201/ipol.2011.g_lmii (accessed on 20 April 2019).

- Unser, M. Splines: A Perfect Fit for Signal and Image Processing. IEEE Signal Process. Mag. 1999, 16, 22–38. [Google Scholar] [CrossRef]

- Unser, M.; Aldroubi, A.; Eden, M. B-spline Signal Processing. II-Efficiency Design and Applications. IEEE Trans. Signal Process. 1993, 41, 834–848. [Google Scholar] [CrossRef]

- Schoenberg, I.J. Contribution to the Problem of Approximation of Equidistant Data by Analytic Functions. Q. Appl. Math. 1946, 4, 45–99. [Google Scholar] [CrossRef]

- Hwang, J.W.; Lee, H.S. Adaptive Image Interpolation Based on Local Gradient Features. IEEE Signal Process. Lett. 2004, 11, 359–362. [Google Scholar] [CrossRef]

- Hauser, H.; Groller, E.; Theussl, T. Mastering Windows: Improving Reconstruction. In Proceedings of the 2000 IEEE Symposium on Volume Visualization (VV 2000), Salt Lake City, UT, USA, 9–10 October 2000; pp. 101–108. [Google Scholar] [CrossRef]

- Cheng, F.; Wang, X. Quadratic B-Spline Curve Interpolation. Comput. Math. Appl. 2001, 41, 39–50. [Google Scholar] [CrossRef]

- Thyssen, A. ImageMagick v6 Examples—Resampling Filters. Available online: http://www.imagemagick.org/Usage/filter (accessed on 20 April 2019).

- Webster, R. A generalized Hamming window. IEEE Trans. Acoust. Speech Signal Process. 1978, 26, 176–177. [Google Scholar] [CrossRef]

- Qian, L.; Luo, S.; He, S.; Chen, G. Recursive algorithms for direct computation of generalized sliding discrete cosine transforms. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; pp. 3017–3020. [Google Scholar] [CrossRef]

- Nuttall, A. Some windows with very good sidelobe behavior. IEEE Trans. Acoust. Speech Signal Process 1981, 29, 84–91. [Google Scholar] [CrossRef]

- Duda, K.; Zieliński, T.P.; Barczentewicz, S.H. Perfectly Flat-Top and Equiripple Flat-Top Cosine Windows. IEEE Trans. Instrum. Meas. 2016, 64, 1558–1567. [Google Scholar] [CrossRef]

- Smith, J.O. Spectral Audio Signal Processing; W3K Publishing, 2011; ISBN 978-0-9745607-3-1. Available online: https://ccrma.stanford.edu/~jos/sasp/ (accessed on 22 April 2019).

- Varshney, L. On the Use of Discrete Prolate Spheroidal Windows for Frequency Selective Filter Design. Applications of Signal Processing. 23 February 2004. Available online: https://pdfs.semanticscholar.org/fa48/6f9f6478f856bf551b3db5be0859c71e73e5.pdf (accessed on 20 April 2019).

- Duda, K. DFT Interpolation Algorithm for Kaiser–Bessel and Dolph–Chebyshev Windows. IEEE Trans. Instrum. Meas. 2011, 60, 784–790. [Google Scholar] [CrossRef]

- Otto, R.; Engel, E.; Richter, D.; Newton, V.; Padilla, A.L.; Alexander, B.; Rivas, M.; Sahu, K.T.; Budinger, M.; Wong, S.; et al. LBNL DSD Whole Frog Project. Lawrence Berkeley National Laboratory. Available online: http://froggy.lbl.gov/ (accessed on 20 April 2019).

- Weber, A.G. The USC-SIPI Image Database: Version 5; USC-SIPI Report 315; University of Southern California, Viterbi School of Engineering: Los Angeles, CA, USA, 1997; pp. 1–24. [Google Scholar]

- Lewis, D.; Agam, G.; Argamon, S.; Frieder, O.; Grossman, D.; Heard, J. Building a Test Collection for Complex Document Information Processing. In Proceedings of the 29th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Seattle, WA, USA, 6–10 August 2006; pp. 665–666. [Google Scholar]

- Agam, G.; Argamon, S.; Frieder, O.; Grossman, D.; Lewis, D. The Complex Document Image Processing (CDIP) Test Collection Project; Illinois Institute of Technology: Chicago, IL, USA, 2006. [Google Scholar]

- The Legacy Tobacco Document Library (LTDL); University of California: San Francisco, CA, USA, 2007; Available online: https://www.industrydocuments.ucsf.edu/tobacco/ (accessed on 20 April 2019).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).