Global Gbest Guided-Artificial Bee Colony Algorithm for Numerical Function Optimization

Abstract

1. Introduction

2. Artificial Bee Colony Algorithm

2.1. Gbest Guided Artificial Bee Colony Algorithm

2.2. Global Artificial Bee Colony Search Algorithm

3. The Proposed 3G-ABC Algorithm

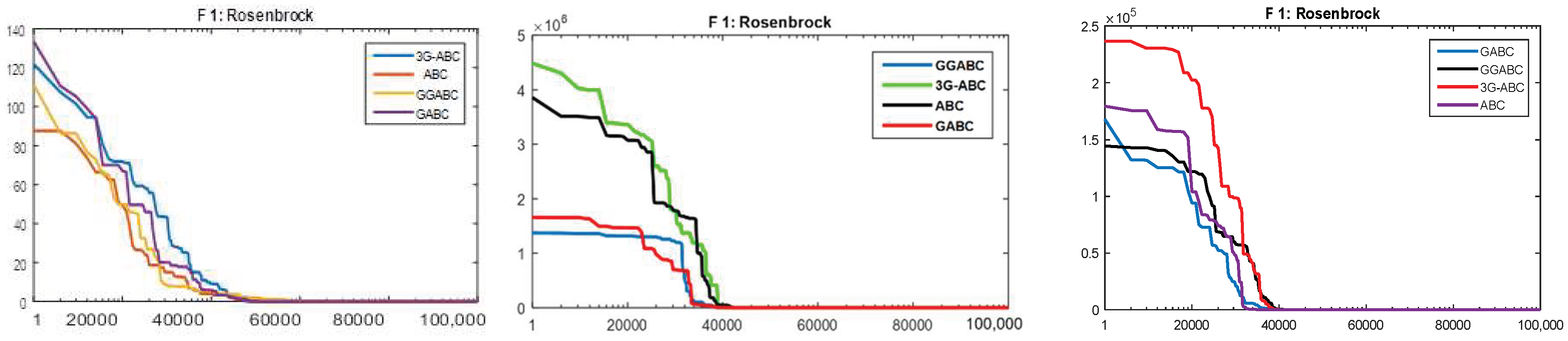

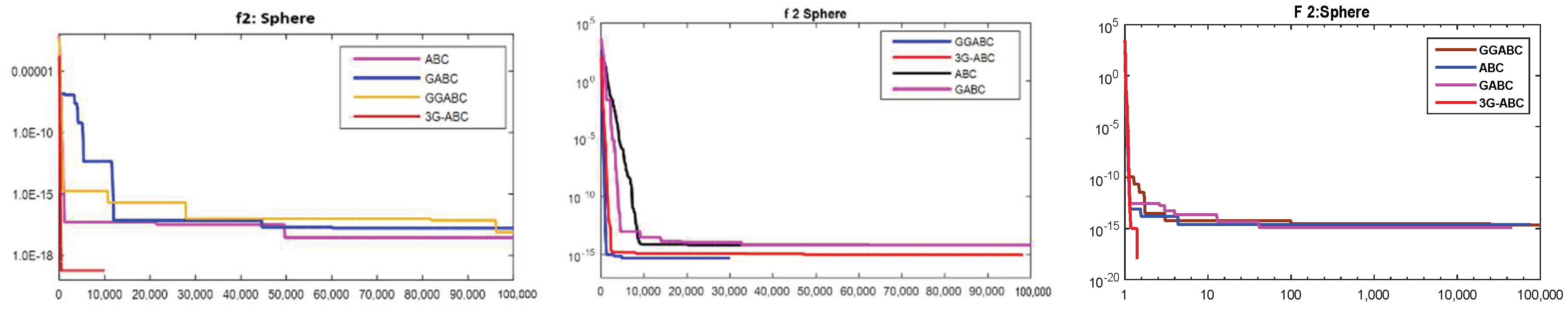

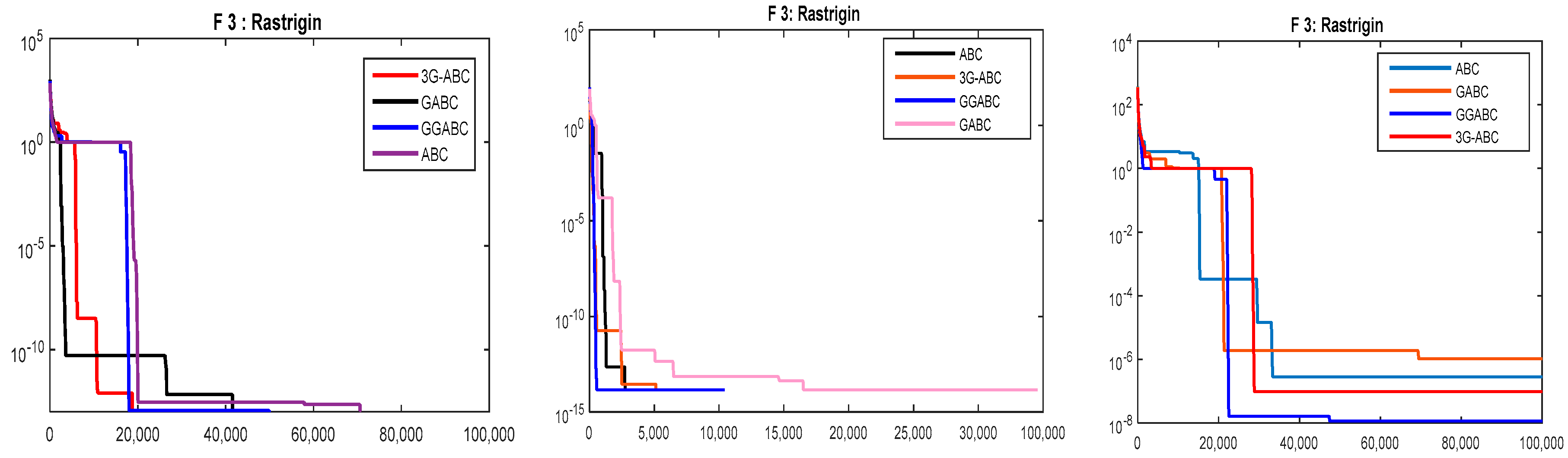

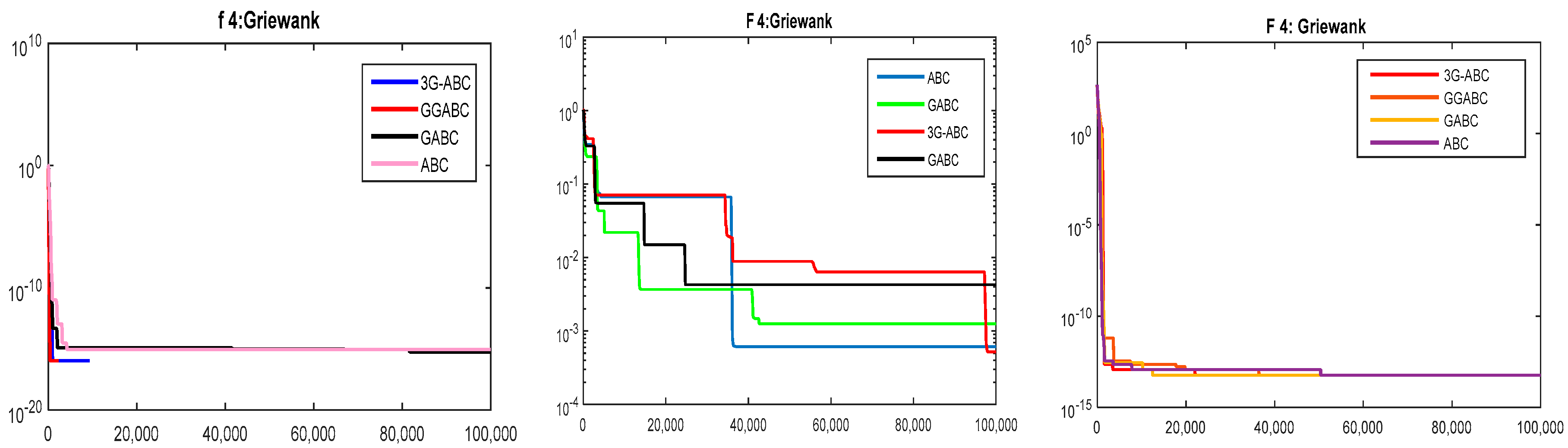

4. Simulation Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

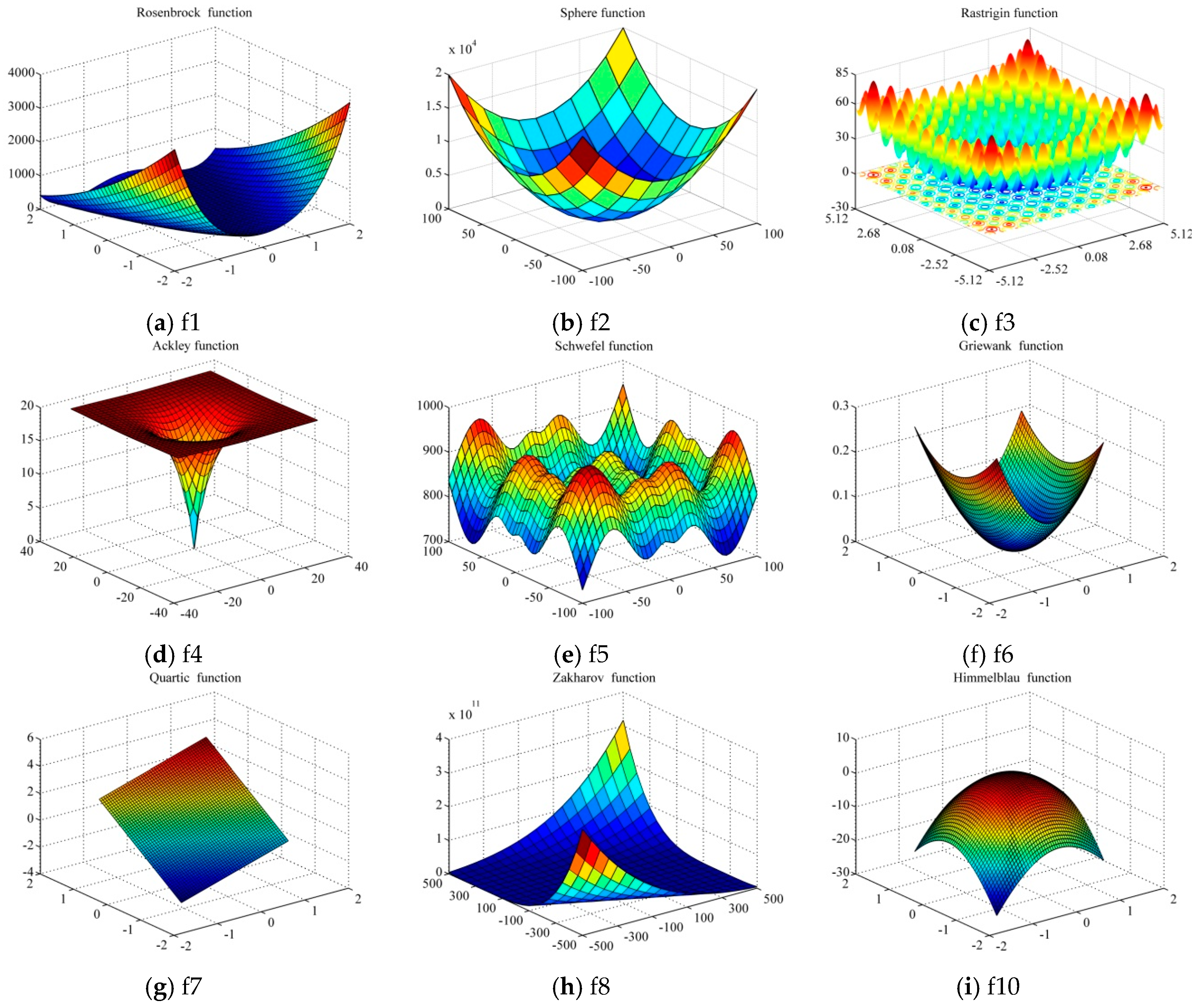

| Function Name | Function’s Formula | Search Range | f(x*) |
|---|---|---|---|
| Rosenbrock | f1(x) | [−2.048, 2.048]^D | f(1) = 0 |
| Sphere | f2(x) | [−100, 100]^D | f(0) = 0 |
| Rastrigin | f3(x) | [−5.12, 5.12]^D | f(0) = 0 |
| Ackley | f4(x) | [−32, 32]^D | f(0) = 0 |
| Schwefel | f5(x) | [−600, 600]^D | f(0) = 0 |
| Griewank | f6(x) | (−1.28, 1.28)^D | f(0) = 0 |
| Quartic | f7(x) | (−1.28, 1.28)^D | f(0) = 0 |
| Zakharov | f8(x) | [−500, 500]^D | f(420.96) = 0 |
| Weierstrass | f9(x) | (−1.28, 1.28)^D | f(0) = 0 |
| Himmelblau | f10(x) | (−1.28, 1.28)^D | f(0) = 0 |
| Shifted Rotated High Conditioned Elliptic | f11(x) | [−100, 100]^D | f(0) = 0 |
| Shifted Rotated Scaffer’s | f12(x) | [−5, 5]^D | f(0) = 0 |
| Shifted Rastrigin’s | f13(x) | [−5, 5]^D | f(0) = 0 |
| Shifted Rosenbrock’s | f14(x) | [−100, 100]^D | f(0) = 0 |
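The formula column above survives only as the placeholders f1(x) to f14(x). As a minimal sketch, the first four benchmarks can be written with their commonly used textbook definitions; these forms are an assumption, since the paper's own formulas are not reproduced here, and the function names are chosen for illustration only.

```python
import math
from typing import Sequence


def rosenbrock(x: Sequence[float]) -> float:
    """Standard Rosenbrock function; global minimum 0 at x = (1, ..., 1)."""
    return sum(
        100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
        for i in range(len(x) - 1)
    )


def sphere(x: Sequence[float]) -> float:
    """Sphere function; global minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def rastrigin(x: Sequence[float]) -> float:
    """Standard Rastrigin function; global minimum 0 at the origin."""
    return 10.0 * len(x) + sum(
        xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x
    )


def ackley(x: Sequence[float]) -> float:
    """Standard Ackley function; global minimum 0 at the origin."""
    n = len(x)
    mean_sq = sum(xi * xi for xi in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * xi) for xi in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(mean_sq)) - math.exp(mean_cos) + 20.0 + math.e


if __name__ == "__main__":
    dim = 5  # illustrative dimension, not taken from the paper
    print(rosenbrock([1.0] * dim), sphere([0.0] * dim))
    print(rastrigin([0.0] * dim), ackley([0.0] * dim))
```

Evaluating each function at the optimum listed in the f(x*) column should give zero (up to floating-point rounding for Ackley), which is a quick sanity check before plugging the functions into any optimizer.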

| Function | MSE/S.D | ABC | GABC | GGABC | 3G-ABC |
|---|---|---|---|---|---|
| f1 | MSE | 1.41 × 10^−3 | 2.75 × 10^−3 | 1.64 × 10^−2 | 2.61 × 10^−2 |
| | Std | 1.12 × 10^−2 | 3.58 × 10^−2 | 1.90 × 10^−2 | 2.87 × 10^−2 |
| f2 | MSE | 3.07 × 10^−15 | 5.96 × 10^−17 | 6.39 × 10^−17 | 0 |
| | Std | 1.67 × 10^−12 | 1.48 × 10^−17 | 1.23 × 10^−16 | 1.68 × 10^−14 |
| f3 | MSE | 6.96 × 10^−1 | 0 | 1.39 × 10^−13 | 0 |
| | Std | 7.12 × 10^−1 | 0 | 7.96 × 10^−14 | 3.16 × 10^−10 |
| f4 | MSE | 3.59 × 10^2 | 0 | 2.27 × 10^−15 | 2.27 × 10^−18 |
| | Std | 1.37 × 10^−2 | 0 | 1.24 × 10^−16 | 2.24 × 10^−16 |
| f5 | MSE | 1.85 × 10^−4 | 3.79 × 10^−15 | 1.34 × 10^−12 | 0 |
| | Std | 6.73 × 10^−5 | 9.17 × 10^−16 | 9.91 × 10^−11 | 2.11 × 10^−16 |
| f6 | MSE | 6.72 × 10^−3 | 1.48 × 10^−2 | 1.27305 × 10^−3 | 2.73 × 10^−4 |
| | Std | 2.184 × 10^−3 | 3.91 × 10^−2 | 2.13 × 10^−2 | 2.73 × 10^−3 |
| f7 | MSE | 1.09 × 10^−1 | 2.73 × 10^−3 | 4.12 × 10^−3 | 2.73 × 10^−5 |
| | Std | 1.85 × 10^−2 | 8.59 × 10^−4 | 1.01 × 10^−4 | 8.59 × 10^−5 |
| f8 | MSE | 1.09 × 10^−1 | 2.73 × 10^−3 | 4.12 × 10^−3 | 2.73 × 10^−5 |
| | Std | 1.85 × 10^−2 | 8.59 × 10^−4 | 1.01 × 10^−4 | 8.59 × 10^−5 |
| f9 | MSE | 1.73 × 10^−1 | 1.12 × 10^−2 | 4.16 × 10^−10 | 5.80 × 10^−11 |
| | Std | 2.84 × 10^−2 | 2.24 × 10^−3 | 0 | 0 |
| f10 | MSE | −73.2662 | −73.3245 | −73.45232 | −72.3221 |
| | Std | 2.98 × 10^−1 | 3.16 × 10^−2 | 2.41 × 10^−3 | 6.04 × 10^−4 |
| f11 | MSE | 2.26 × 10^2 | 3.74 × 10^3 | 4.94 × 10^2 | 2.50 × 10^2 |
| | Std | 7.65 × 10^3 | 2.02 × 10^3 | 2.21 × 10^2 | 9.77 × 10^3 |
| f12 | MSE | 2.40 | 1.80 | 1.84 | 1.33 |
| | Std | 1.39 | 1.12 | 3.13 × 10^−1 | 1.92 × 10^−1 |
| f13 | MSE | 7.86 | 4.93 | 3.42 | 3.09 |
| | Std | 1.55 | 6.34 × 10^−1 | 7.80 × 10^−1 | 6.80 × 10^−1 |
| f14 | MSE | 2.20 × 10^−3 | 2.41 × 10^−4 | 5.22 × 10^−4 | 6.58 × 10^−3 |
| | Std | 1.78 × 10^−3 | 3.33 × 10^−3 | 3.13 × 10^−4 | 7.13 × 10^−3 |
| f15 | MSE | 1.13 × 10^−2 | 2.15 × 10^−3 | 3.24 × 10^−4 | 2.23 × 10^−3 |
| | Std | 2.52 × 10^−1 | 2.63 × 10^−3 | 1.52 × 10^−3 | 1.15 × 10^−3 |

| Function | MSE/S.D | ABC | GABC | GGABC | 3G-ABC |
|---|---|---|---|---|---|
| f1 | MSE | 3.81 × 10^−2 | 2.08 × 10^−2 | 1.684 × 10^−3 | 1.183 × 10^−4 |
| | Std | 0.2273 | 0.37021 | 0.879201 | 0.29862 |
| f2 | MSE | 1.070 × 10^−10 | 0 | 6.370 × 10^−16 | 1.40 × 10^−9 |
| | Std | 1.327 × 10^−10 | 0 | 1.203 × 10^−16 | 0 |
| f3 | MSE | 1.40 × 10^−16 | 0 | 1.349 × 10^−13 | 0 |
| | Std | 0 | 0 | 1.966 × 10^−14 | 0 |
| f4 | MSE | 1.782 × 10^−15 | 0 | 1.227 × 10^−15 | 0 |
| | Std | 1.129 × 10^−11 | 0 | 0 | 0 |
| f5 | MSE | 1.921 × 10^−12 | 2.16 × 10^−14 | 1.654 × 10^−12 | 1.23 × 10^−18 |
| | Std | 2.712 × 10^−10 | 2.4 × 10^−14 | 2.891 × 10^−11 | 2.11 × 10^−15 |
| f6 | MSE | 3.790 × 10^−3 | 2.2901 × 10^−2 | 1.240 × 10^−3 | 2.73 × 10^−3 |
| | Std | 4.344 × 10^−3 | 2.1093 × 10^−2 | 2.13 × 10^−2 | 2.73 × 10^−3 |
| f7 | MSE | 1.094 × 10^−1 | 2.73 × 10^−3 | 4.12 × 10^−3 | 2.73 × 10^−5 |
| | Std | 1.855 × 10^−2 | 8.59 × 10^−4 | 1.01 × 10^−4 | 8.59 × 10^−5 |
| f8 | MSE | 1.097 × 10^−3 | 2.73 × 10^−3 | 4.12 × 10^−3 | 2.73 × 10^−5 |
| | Std | 1.858 × 10^−3 | 8.59 × 10^−4 | 1.01 × 10^−4 | 8.59 × 10^−5 |
| f9 | MSE | 1.534 × 10^−1 | 1.12 × 10^−2 | 4.16 × 10^−3 | 5.80 × 10^−4 |
| | Std | 3.94 × 10^−2 | 2.24 × 10^−3 | 4.12 × 10^−3 | 1.25 × 10^−3 |
| f10 | MSE | −77.2231 | −77.3245 | −76.45232 | −76.3221 |
| | Std | 1.134 × 10^−1 | 1.16 × 10^−2 | 1.41 × 10^−3 | 2.04 × 10^−4 |
| f11 | MSE | 2.23 × 10^2 | 1.12 × 10^3 | 2.56 × 10^4 | 1.10 × 10^2 |
| | Std | 1.63 × 10^2 | 1.13 × 10^4 | 2.21 × 10^4 | 2.12 × 10^3 |
| f12 | MSE | 1.20 | 1.30 | 1.12 | 1.102 |
| | Std | 1.02 | 2.11 × 10^−1 | 1.12 × 10^−1 | 1.107 × 10^−1 |
| f13 | MSE | 1.06 | 2.23 | 3.42 | 3.09 |
| | Std | 1.55 | 6.34 × 10^−1 | 7.80 × 10^−1 | 6.80 × 10^−1 |
| f14 | MSE | 1.03 × 10^2 | 1.07 | 1.12 × 10^2 | 1.94 × 10^−1 |
| | Std | 1.96 | 1.63 × 10^−1 | 1.42 × 10^−1 | 1.23 × 10^−1 |
| f15 | MSE | 3.65 × 10^1 | 2.04 × 10^1 | 2.14 | 2.54 |
| | Std | 3.05 | 3.34 × 10^−1 | 2.06 | 2.43 × 10^−1 |

| Function | MSE/S.D | ABC | GABC | GGABC | 3G-ABC |
|---|---|---|---|---|---|
| f1 | MSE | 6.41 × 10^−2 | 2.08 × 10^−2 | 1.686 × 10^−3 | 1.18 × 10^−3 |
| | Std | 5.75 × 10^−1 | 3.21 × 10^−1 | 0.000491 | 0 |
| f2 | MSE | 2.70 × 10^−10 | 5.96 × 10^−17 | 6.310 × 10^−16 | 1.40 × 10^−9 |
| | Std | 1.67 × 10^−10 | 1.48 × 10^−17 | 1.203 × 10^−16 | 1.68 × 10^−14 |
| f3 | MSE | 6.06 × 10^−1 | 0 | 1.39 × 10^−13 | 0 |
| | Std | 6.35 × 10^−1 | 0 | 7.96 × 10^−14 | 3.16 × 10^−10 |
| f4 | MSE | 4.53 × 10^−2 | 0 | 2.27 × 10^−15 | 2.27 × 10^−18 |
| | Std | 2.34 × 10^−2 | 0 | 1.24 × 10^−16 | 2.24 × 10^−16 |
| f5 | MSE | 4.33 × 10^−4 | 3.79 × 10^−15 | 1.34 × 10^−12 | 0 |
| | Std | 7.73 × 10^−5 | 9.17 × 10^−16 | 9.91 × 10^−11 | 2.11 × 10^−13 |
| f6 | MSE | 3.79 × 10^−3 | 4.21 × 10^−3 | 6.92 × 10^−3 | 2.13 × 10^−3 |
| | Std | 1.54 × 10^−2 | 6.93 × 10^−2 | 4.13 × 10^−1 | 2.17 × 10^−2 |
| f7 | MSE | 1.09 × 10^−1 | 2.73 × 10^−3 | 4.12 × 10^−3 | 2.73 × 10^−5 |
| | Std | 1.85 × 10^−2 | 8.59 × 10^−4 | 1.01 × 10^−4 | 8.59 × 10^−5 |
| f8 | MSE | 1.09 × 10^−2 | 2.73 × 10^−2 | 4.12 × 10^−2 | 3.73 × 10^−4 |
| | Std | 1.85 × 10^−2 | 8.59 × 10^−2 | 1.01 × 10^−2 | 1.59 × 10^−4 |
| f9 | MSE | 1.54 × 10^−1 | 1.12 × 10^−2 | 4.16 × 10^−3 | 5.80 × 10^−4 |
| | Std | 3.94 × 10^−2 | 2.24 × 10^−3 | 4.12 × 10^−3 | 1.25 × 10^−3 |
| f10 | MSE | −77.2231 | −77.3245 | −76.45232 | −76.3221 |
| | Std | 2.14 × 10^−1 | 3.16 × 10^−2 | 2.41 × 10^−3 | 6.04 × 10^−4 |
| f11 | MSE | 2.26 × 10^5 | 3.74 × 10^3 | 4.94 × 10^4 | 2.50 × 10^4 |
| | Std | 2.651 × 10^4 | 2.12 × 10^3 | 2.21 × 10^4 | 9.77 × 10^3 |
| f12 | MSE | 2.404 | 1.85 | 1.84 | 1.33 |
| | Std | 1.39 × 10^−1 | 2.13 × 10^−1 | 1.92 × 10^−1 | 2.17 × 10^−1 |
| f13 | MSE | 7.81 | 2.23 | 3.42 | 3.09 |
| | Std | 1.56 | 2.42 × 10^−1 | 7.80 × 10^−1 | 6.80 × 10^−1 |
| f14 | MSE | 1.25 × 10^1 | 2.15 × 10^1 | 2.19 × 10^1 | 1.23 × 10^1 |
| | Std | 2.34 × 10^1 | 2.274 × 10^1 | 2.28 | 1.61 × 10^1 |
| f15 | MSE | 3.12 × 10^1 | 2.13 × 10^1 | 1.22 × 10^1 | 1.71 × 10^1 |
| | Std | 2.22 | 1.182 | 1.01 | 2.63 × 10^−1 |

SUMMARY of Sphere Function

| Groups | FEs | Sum | Average | Variance |
|---|---|---|---|---|
| ABC | 10000 | 36812.73 | 3.681273 | 1634.661 |
| GABC | 10000 | 23729.47 | 2.372947 | 799.2924 |
| GGABC | 10000 | 35520.18 | 3.552018 | 1411.115 |
| 3G-ABC | 10000 | 20343.12 | 2.023499 | 0.234222 |

ANOVA

| Source of Variation | SS | df | MS | F | P-value | F crit |
|---|---|---|---|---|---|---|
| Between Groups | 86165.02 | 3 | 28721.67 | 29.87602 | 2.77 × 10^−19 | 2.605131 |
| Within Groups | 38449677 | 39995 | 961.3621 | | | |
| Total | 38535842 | 39998 | | | | |
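The MS and F columns of the ANOVA table follow arithmetically from the SS and df columns: each mean square is a sum of squares divided by its degrees of freedom, and F is the ratio of the between-group to the within-group mean square. The short sketch below recomputes them from the tabulated values; the variable and function names are illustrative, not taken from the paper.

```python
# Values copied from the ANOVA table for the Sphere function.
ss_between, df_between = 86165.02, 3
ss_within, df_within = 38449677.0, 39995
f_crit = 2.605131


def mean_square(sum_of_squares: float, dof: int) -> float:
    """Mean square = sum of squares / degrees of freedom."""
    return sum_of_squares / dof


ms_between = mean_square(ss_between, df_between)
ms_within = mean_square(ss_within, df_within)

# One-way ANOVA test statistic: ratio of the two mean squares.
f_value = ms_between / ms_within

if __name__ == "__main__":
    print(f"MS between = {ms_between:.2f}")
    print(f"MS within  = {ms_within:.4f}")
    print(f"F          = {f_value:.5f}")
    print("F exceeds F crit:", f_value > f_crit)
```

The recomputed F agrees with the tabulated 29.87602 and is well above the listed F crit of 2.605131, which is consistent with the very small P-value reported in the table.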

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shah, H.; Tairan, N.; Garg, H.; Ghazali, R. Global Gbest Guided-Artificial Bee Colony Algorithm for Numerical Function Optimization. Computers 2018, 7, 69. https://doi.org/10.3390/computers7040069