Abstract

(1) Background: A disabled student or employee at a university faces a large number of obstacles in carrying out his/her ordinary duties. An interactive smart search and communication application can support the people on the university campus and in the Science Park in a number of ways. Primarily, it can strengthen their professional network and establish a responsive eco-system. Therefore, the objective of this research work is to design and implement a unified, flexible, and adaptable interface. This interface supports an intensive search and communication tool across the university. It would benefit everybody on campus, especially People with Disabilities (PWDs). (2) Methods: In this project, three main contributions are presented: (A) Assistive Technology (AT) software design and implementation (based on user- and technology-centered design); (B) A wireless sensor network employed to track and determine the user’s location; and (C) A novel event behavior algorithm and movement direction algorithm used to monitor and predict users’ behavior and intervene with them and their caregivers when required. (3) Results: This work has developed a comprehensive and universal application with a unified, flexible, and adaptable interface to support the different conditions of PWDs. It has employed an interactive smart location-based service for establishing a smart university Geographic Information System (GIS) solution. This GIS solution has been based on location tracking services, mobility, and wireless sensor network technologies. (4) Conclusion: The proposed system empowered inter-disciplinary interaction between management, staff, researchers, and students, including PWDs. Identifying the needs of PWDs has led to the determination of the relevant requirements for designing and implementing a unified, flexible, and adaptable interface suitable for PWDs on the university campus.

1. Introduction

Presently, over a billion people, including children (about 15% of the world’s population), are estimated to be living with disabilities [1]. Absent or ineffective support services and tools can make people with disabilities (PWDs) overly dependent on their families, friends, and caregivers. As a result, they are prevented from being economically active and socially included. In a university, a PWD-friendly campus environment is needed to make PWDs economically independent and to provide them with a sense of inclusion. It is very important for designers to take into account all possible users, and their interactions with each other and with the environment, before developing any products or services. User involvement at every phase of the development process helps designers to meet the requirements of PWDs [2]. A proper solution for disabled people on the university campus can be defined as one that is universal, adaptable, heterogeneous, and user-centered. It should make PWDs’ lives easier and more productive, rather than leaving them idle and frustrated. Moreover, increased information sharing within the university campus has a positive impact on the evolution of knowledge as well as on the innovative capabilities of the academic environment. It would also give rise to opportunities and prospects for new collaborative and enriched basic and applied research.

The advances in mobile and wireless technology, and the new concept of location-based services (LBS) together with Geographic Information Systems (GIS), have led to the introduction of the smart adaptive professional network that is required for the establishment of a successful eco-system. The accelerated rapid development of the wireless network and mobile computing technologies that are the driving forces behind the mobile information services for PWDs has become an appealing research topic. In particular, the LBSs on mobile devices can convey location-based information to individual users, thus helping the users (including the PWDs) to acquire helpful and adaptive information [3]. Furthermore, the interactive LBSs enhance personalized and context-aware information communication, and maximize the gain from such dynamic information sharing by empowering the user with extended functionalities.

In this project, a unified interface has been designed to support PWDs during their movement within the campus. This new approach uses the concept of GIS within the platform of wireless technologies and develops an interactive smart context-aware GIS to enhance information sharing, communication, and dissemination within the King Saud University campus. The interface has been designed to suit everybody on campus, including PWDs. The font size, font color, window color, and the voice recognition used for controlling the user interface have been considered in the design and development according to the needs of PWDs. Consideration of all of the above aspects led to the development of an information-intensive eco-system to support knowledge, creativity, and innovation within the multi-disciplinary environment.

The paper has been divided in six sections as follows. A literature review pertaining to GIS and sensors technologies has been conducted to determine the right solution for this project (as shown in Geographic Information System and Sensors Technologies). The interface has been designed in accordance with the specified criteria applicable to the impairment conditions of the majority of PWDs (as described in Design Consideration and Methodology). A review of the existing commercialized solutions has been performed to verify their compliance with the specified criteria, as well as to establish the direction of improvement, in order to maximize the PWD users’ satisfaction (as shown in the Interface Design consideration to Support PWD Needs). The complete design of the University-Based Smart and Context Aware Solution for People with Disabilities (USCAS-PWD) can be realized in the Group Design and Implementation Consideration. A description of the group selection for the PWDs to support the different impairment conditions has been presented in the implementation of the USCAS-PWD. An implementation of the Unified Interface has been described for the indoor (inside the building) and outdoor at the university campus within the section Implementation of the Unified Interface for Indoor Network. The event behavior algorithm and the movement direction algorithm are discussed within the algorithm section.

2. Geographic Information System and Sensors Technologies

With the continuous increase in online spatial data, the adaptation of spatial content according to the user’s context has become crucial. In addition, the introduction of GIS [4] has changed the way geographical and spatial information is manipulated, accessed, and understood. For example, Li [5] developed a conceptual model based on LBS to assist the urban pedestrian. The results of his study revealed the importance of user preferences on the information requirements. Similarly, Zipf [6] and Yuxia [7] presented several approaches to realize adaptive mobile GIS services in the domain of pedestrian navigation and tourist information. The Intelligent Map Agents (IMA) architecture developed by Gervais [8] for GIS was aimed at superseding the monolithic approach through a new dynamic, lean, and customizable system supporting spatially-oriented applications. Relevant to the concept of the adaptive GIS, personalization, and context-awareness, Aoidh [9] introduced an approach that implicitly monitored the user’s activity and generated a user profile reflecting her/his information preferences based on the interactions of the user with the system, and her/his physical location and movements. Brossard et al. [10] have also proposed a solution allowing the context to be managed inside an application’s conceptual model, in order to provide more flexible web applications from the user’s point of view.

Recently, considerable interest has been shown in interactive LBS, specifically in the fields of mobile communications and wireless networking. For instance, Jung [11] proposed an interactive approach by developing social networks between mobile users through the collection of a dataset from about two million users. Chang et al. [12] developed an adaptive context-aware recommendation system. The system was built using a slow intelligence approach and was implemented as an application on the smartphone. A case study in Pittsburgh, USA was conducted, and the results of the experimental campaign were satisfactory and showed that this kind of approach is promising. Similarly, Rebollo-Monedero [13] presented a novel protocol based on user collaboration to privately retrieve location-based information from an LBS provider. In their approach, the user queries, comprising accurate locations, remained unchanged, and the collaborative protocol did not impose any special requirements on the query–response function of the LBS. The application devised by Kbar [14] enabled the user to conduct a search using a set of three modes: keyword, location, and targeted profile. The GIS was utilized within a platform of wireless technologies to develop an interactive smart context-aware GIS for a university. Until now, many technologies have been developed based on Global Positioning System (GPS) receivers and General Packet Radio Service (GPRS) transmitters, where the positions are stored and accessed via MySQL or web servers through internet connectivity [15,16,17].

Different technologies, including WiFi tags through an Access Point (AP) [18], sensor technology based on the wireless personal area network (WPAN) standard IEEE 802.15.4 [19], passive and active radio frequency identification (RFID) sensors, GPS technologies [20], etc., can be employed in tracking user location. For example, Ning et al. [21] introduced a tree-like code structure to develop a unified modeling scheme of the physical objects (in the RFID network) without an identification code. A hybrid platform based on the combination of RFID, WiFi, and GPS technologies showed better accuracy in comparison to the individual technologies [22]. In the system developed by Dao et al. [22], the user (with a smartphone) was detected by the RFID readers and the WiFi APs. Similarly, Li et al. [23] developed a system based on a radio-over-fiber (RoF) network involving the simultaneous transmission of RFID, WiFi, and ZigBee services. They integrated all the wireless signals in the electrical domain, and then modulated them onto the optical carrier through an optical modulator. The system was tested over a 2 km RoF link where RFID, WiFi, and ZigBee master nodes could communicate effectively. Meanwhile, S. Szewcyzk [24] analyzed the individual impairment behavior using machine learning techniques. They used inhabitant feedback to decrease the annotation time and improve their performance. In fact, the Wireless Body/Personal Area Network (WBPAN) developed by G. Yella Reddy [25] included low-cost, light, and small sensors for continuous health monitoring and sending instantaneous feedback to the user.

Assistive technologies (ATs) can also be used to track and monitor the activity and vital signs of elder and motor-impaired individuals through different types of wearable sensors, implantable sensors, and microsystems that can be swallowed, such as microcapsule devices [26]. Five basic features, including the automation, multi-functionality, adaptability, interactivity, and efficiency play a significant role in the design of smart homes [27]. In order to help blind people to walk on the streets, Chen and Zhi [28] proposed a solution based on sensors that detected the blind individual’s movement through an RFID tag installed on a cane. The walking path of the blind man was designed to incorporate the electronic tags underneath it. An indoor localization aid, called portable position and orientation estimation (POSE) was developed by Hesch and Roumeliotis [29] for visually-impaired persons, in order to increase their safety and independence. The 3D orientation of the cane was tracked in the first layer through measurements using a three-axis gyroscope and laser scanner. The 2D position of the person was detected in the second layer using corner features extracted from the laser-scan data, linear velocity measurements from the pedometer, and a filtered version of the cane’s yaw. Kammoun et al. [30] utilized micro-navigation (sensing immediate environments) and macro-navigation (reaching remote destinations) functions to design a system for blind people in indoor and outdoor environments. This system started searching for any object requested by the user in the captured images. After the object was detected, the system would direct the user to move their hand for grasping via voice command.

The multi-sensory (audio and force feedback) learning environment developed by Darrah [31] was used to teach visually-impaired or blind children. It consisted of a PC, a low-cost force feedback stylus-based haptic device, auditory cues, and high contrast graphics. Similarly, the electronic navigation system [32] could detect obstacles around the subject (visually-impaired and blind people) up to 500 cm in the front, left, and right directions through a network of ultrasonic sensors. Gallaghera et al. [33] also designed and tested an indoor navigation system for visually-impaired persons. This system was based on the internal hardware of the smartphone (such as the accelerometer) to measure the user’s position and direction. A navigation and way-finding system [34] for visually-impaired persons used RFID technology to estimate the position of the user. The system was designed for indoor use, and tags were installed under the floor. The tag reader was attached to a shoe or a stick that sent a query to tags. The orientation guiding system built by Ghiani et al. [35] for blind persons actually used vibrotactile feedback. The prototype used the RFID network and tags to determine the person’s surroundings, and was installed inside the museum to guide blind persons. Similarly, Colace et al. [36] developed a context-aware application based on a slow intelligence approach for cultural heritage application. The developed system was implemented as an application for the Android System. A case study with 50 users was conducted in which the users were made to visit in some rooms of the Capodimonte museum (Salerno, Italy). The results showed that the users were satisfied with the performance of the application. Another RFID-based system [37] helped blind users to identify objects in the home/classroom. The system had four major components: RFID Reader, FM Transmitter, Database Server, and RFID Tags. The system developed by El-Alamy et al. [38] allowed visually-impaired persons to catch buses safely. The framework incorporated an auditory device, a tactile interface, and a wireless communication system. It also included a sensor, a radio frequency reader (RF) that was employed in the transport station. A wearable device was designed by Jain [39] to aid blind persons in indoor navigation. The system had two major constituents: wall modules were installed in the building, and the user end included a waist-worn gadget linked to a smartphone. All instructions were communicated to the user via the Text-to-Speech (TTS) engine of the smartphone application. The “Drishti” project [40] was aimed at designing a standalone-integrated system to help blind and low-vision individuals during outdoor navigation. It consisted of a wearable computer, GPS receiver, headset, and access to a wireless network for position information and way-guidance in the form of text-to-speech (TTS) voice commands. Gomez et al. [41] also developed a system to provide visually-impaired persons with a more practical and functional device based on the sensory substitution methods (SSDs). The device was called the See ColOr, and consisted of SSD, a 3D camera, bone-phones, an iPad for tactile feedback, and a 14-inch laptop. The recognition module based on computer vision allowed the users to reach targets and avoid obstacles. Similarly, Iannizzotto et al. [42] developed a system to help blind people to discover objects in the indoor environment around them. The system read the barcode attached to all interested objects. This system used an ultrasonic sensor to detect obstacles (i.e., objects with no tags). The ultrasonic system was attached to the user’s belt. This system used a head-mounted camera to read barcode tags, and a headphone to get the voice feedback of the query about any interested object passed to the system. Ando et al. [43] developed a device based on an IR multisensory array that could help blind people in environment orientation and movement. It was based on smart signal processing to provide the user with suitable information about the position of obstacles found in his/her path.

The emergence and evolution of the concept and technologies of Ambient Intelligence (AmI) and ubiquitous computing have paved the way towards building smart environments. These smart environments exploit the key enabling technologies, which are necessary to improve the quality of life and performance and provide assistance in different kinds of environments. AmI has specific features, attributes, and aspects, including context-awareness. A context-aware system provides a predictive behavior based on knowledge of the environment. A few researchers, including Cooper and Cooper [44], have addressed the issue of quality of life for people who suffered spinal cord injuries. They stated that technology plays a critical role in promoting the well-being, activity, and participation of individuals with spinal cord injury (SCI). School, work, travel, and leisure activities can all be facilitated by the technology. They pointed out that software has made computer interfaces adaptive and intelligent, through learning of the user’s behavior and optimizing its structure. Another important concept that can be employed in an assistive workplace for PWDs is the “Internet of Things (IoT)” [45]. The IoT can offer PWDs the assistance and support they need to achieve a good quality of life, and allows them to participate in social and economic life. The IoT depicts a world of networked smart devices, where everything is interconnected and has a digital entity [46]. According to Giannetsos et al. [47], user-based technologies can never succeed without appropriate provisions addressing security and privacy. As a result of the ubiquitous nature of data emerging from the sensors carried by people, the highly dynamic and mobile setting presents new challenges for information security, data privacy, and ethics. To overcome security issues in IoT m-Health devices, Doukas et al. [48] employed a system based on digital certificates and Public Key Infrastructure (PKI) data encryption. An adaptive approach, as proposed by Yau et al. [49], can also be used to protect communication systems from various security attacks. Similarly, Templeton [50] addressed the security challenges arising from the increased use of cyber-physical devices in assistive environments. In fact, there have been many instances where AT systems were not properly secured, which left them susceptible to fraud and harm as a result of unexpected interaction with other systems.

Most of the research works presented in this section have focused on home or outdoor ATs and neglected the social inclusion of PWDs in universities. In addition, most of the developed smart solutions and ATs were not comprehensive, as they addressed only one or two impairments. Furthermore, few of these papers attempted to analyze the behavior of PWD and provide relevant intervention methods to guide them at home or in the workplace.

Furthermore, it is clear from this section that the use of technologies based on GIS and LBS in mobile applications can help PWDs and other students on the university campus. This would also help in monitoring user activities and help PWDs to access and share information flexibly. However, there are issues, such as network security, interactive applications and social networks, and the use of IoT, that require further attention. Moreover, a smart context-aware design would help in analyzing the needs of PWD users and in enabling them to perform their activities efficiently. It also goes beyond meeting the users’ requirements by designing and implementing a smart AT solution that is adaptable to different conditions according to the specific context, which helps to attain better user satisfaction and adoption. A USCAS-PWD would assist PWDs in accessing relevant information anywhere on the campus freely, flexibly, securely, and at all times. It would also help them to communicate with each other and their caregivers efficiently and effectively. The design of such a system on the university campus and within the buildings would require the identification of the needs of the users (PWDs) through a proper framework and design methodology. This methodology should be able to assess their needs and match them to relevant technologies that can satisfy them, as described in the next section. Moreover, in order to support behavior analysis and intervention, there is a need for a wireless network that can be used to track user movements within the building. A real-time tracking location system (RTLS) can be useful for behavior analysis of PWDs, as it helps to identify their location within the home, building, and work environments. RFID and sensor networks play an essential role in tracking assets within a defined environment, where they transmit the identity associated with these assets wirelessly using radio waves [51].

3. Design Methodology and Consideration

The following sub-sections describe the interface design considerations to support PWD needs, assess existing software applications for the PWDs to identify improvements, identify the design features of USCAS-PWD that covers indoor and outdoor applications, and evaluate the proposed solution through a comparison between the existing software applications and the proposed design.

3.1. AT Design Methodology to Support PWD Needs

A user-driven process that focused on technologies as well as quality of life has been proposed by Federici and Scherer [52]. This solution, which relied on AT and addressed the needs of the PWDs was achieved through the Assistive Technology Assessment (ATA) process. In the ATA process, the improvement in individual functioning can be realized in three phases: phase 1—users seeking a solution, where a professional team would interact with these users to get their requirements and then match their needs to a suitable solution; phase 2—users validate the solution provided by the professional team; phase 3—users adopt the solution and get appropriate training. Three important considerations, including accessibility, universality, and sustainability have been proposed by the authors. The criterion “accessibility” is actually used to measure the level of environmental characteristics [53,54]. Similarly, the universality consideration leads to a common solution that satisfies the majority of stakeholders. A universal system is necessary because it can support a wide range of users [55]. A universal AT with multi-modal input and multimedia output interface as proposed by Karpov and Ronzhin [56] was based on four assistive systems: automatic audio-speech recognition (relevant for blind persons), text-to-audiovisual speech synthesis (relevant for blind persons), sign language visual synthesis (relevant for deaf persons), and multimodal hand-free computer control (relevant for motor disabled people). In the same manner, sustainability can be associated with how technology can adapt over time to a person’s changing needs.

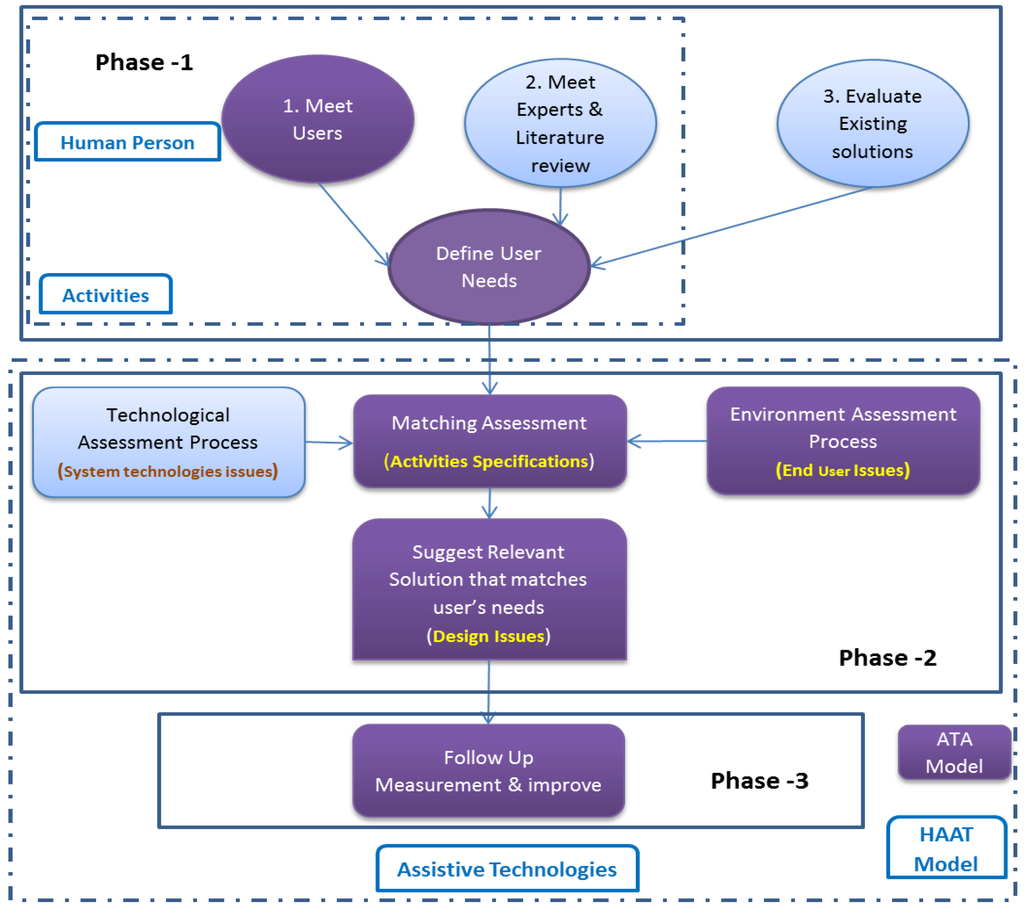

In this project, an AT proper based solution (AT-PBS) has been used to (a) improve the functional capabilities of the PWDs; (b) improve the information and communication means of the PWDs; and (c) improve the service support accessibilities of the PWDs. Two design models, including the ATA model [25] and the human activity assistive technology (HAAT) model [57], have been adopted, as shown in Figure 1. In phase 1, the objective was to define the requirements and needs of the PWDs. This was done by directly taking feedback from the PWD users, obtaining expert opinions, and analyzing the existing solutions in order to identify gaps. In phase 2, the professional team of the project identified the solution satisfying the needs of the PWDs and interacted with them to get their feedback. This was managed through environment assessment process, matching assessment and suggesting relevant solutions as in ATA. Finally, in phase 3, the proposed solution was tested by PWDs for ease of use and desired function. This has been aligned to Phase 3 of the ATA model and the assistive technologies assessment of the HAAT model.

Figure 1.

Combined design models of assistive technology assessment (ATA) and human activity assistive technology (HAAT).

3.2. AT Design Consideration for PWDs on the University Campus

To support a universal solution, there have been different ATs that can be used to support speech impairment (SI), hearing impairment (HI), visual impairment (VI), and motor impairment (MI). Table 1 presents the types of interfaces and ATs that can assist PWD with a particular impairment condition, where (x) signifies a technology that is relevant for that particular impairment.

Table 1.

Technologies essential in an Assistive System for different impairment conditions.

A smart system must have at least one input and one output to allow PWD users to interact with the system effectively. In addition, it should have mobile technology to support them while moving. For input, it should have Voice Recognition (1), Voice to text (2), and Keyboard and mouse (3), while gesture control is an optional addition. For output, it should include Text-To-Voice (5) and Display Screen (6), while Vibration and Flashing (7) is supplementary. For mobility support, it should consist of Mobile Platform devices (8) and Tracking Location and Behavior (9). The needs of PWDs (phase 1) at the university campus were identified using the following three steps: surveying PWDs to learn their requirements for accessibility solutions; obtaining expert opinions and conducting a literature review; and surveying existing technological solutions to identify their strengths as well as areas for improvement. The implementation of the interface that supports the campus network, the unified interface that takes care of internal network coverage inside the building, and the choice of the right technology for the voice recognition engine are described in the section “Implementation of the USCAS-PWD”.
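The input, output, and mobility requirements listed above can be read as a simple checklist. The following Python sketch is only an illustration of that reading, not the implementation used in USCAS-PWD; the feature identifiers (such as `voice_recognition`) and the function names are hypothetical labels chosen for this example.

```python
# Illustrative checklist for the smart-system requirements described in the text.
# All identifiers below are hypothetical names, not part of the USCAS-PWD code base.

REQUIRED_INPUTS = {"voice_recognition", "voice_to_text", "keyboard_mouse"}
OPTIONAL_INPUTS = {"gesture_control"}
REQUIRED_OUTPUTS = {"text_to_voice", "display_screen"}
OPTIONAL_OUTPUTS = {"vibration_flashing"}
REQUIRED_MOBILITY = {"mobile_platform", "tracking_location_behavior"}


def has_minimum_io(features):
    """Return True if at least one input and one output technology is present."""
    features = set(features)
    has_input = bool(features & (REQUIRED_INPUTS | OPTIONAL_INPUTS))
    has_output = bool(features & (REQUIRED_OUTPUTS | OPTIONAL_OUTPUTS))
    return has_input and has_output


def missing_recommended(features):
    """Return the recommended (non-optional) technologies that are absent."""
    recommended = REQUIRED_INPUTS | REQUIRED_OUTPUTS | REQUIRED_MOBILITY
    return recommended - set(features)


if __name__ == "__main__":
    candidate = {"keyboard_mouse", "display_screen", "mobile_platform"}
    print("Minimum I/O satisfied:", has_minimum_io(candidate))
    print("Still missing:", sorted(missing_recommended(candidate)))
```

Separating the minimum condition (one input, one output) from the fuller recommended set mirrors the distinction the text draws between what a smart system must have and what it should have.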

3.2.1. Identify the Requirements and Needs of PWDs (Phase-1)

In order to identify the needs of the PWDs, a user-centric design approach involving the surveying of PWD stakeholders is useful. Since PWDs might not be aware of the different AT solutions, there is a need to verify the relevant technologies, based on expert opinion and according to literature review. Moreover, there is a need to assess existing research and commercial technological solutions in order to identify criteria associated with the best practices and identify the right tools that can be used for voice recognition.

- Step 1: Survey PWDs to know their needs

Conventionally, the majority of consumer product design processes do not explicitly pay attention to the needs of older or disabled people, which is poor practice. A number of approaches exist for gathering input from PWD stakeholders, such as observation, surveys, and interviews. As mentioned by Green et al. [58], user needs vary depending on the product usage context. A very common practice for identifying the needs of PWDs involves discussion with the actual users or their caretakers [59].

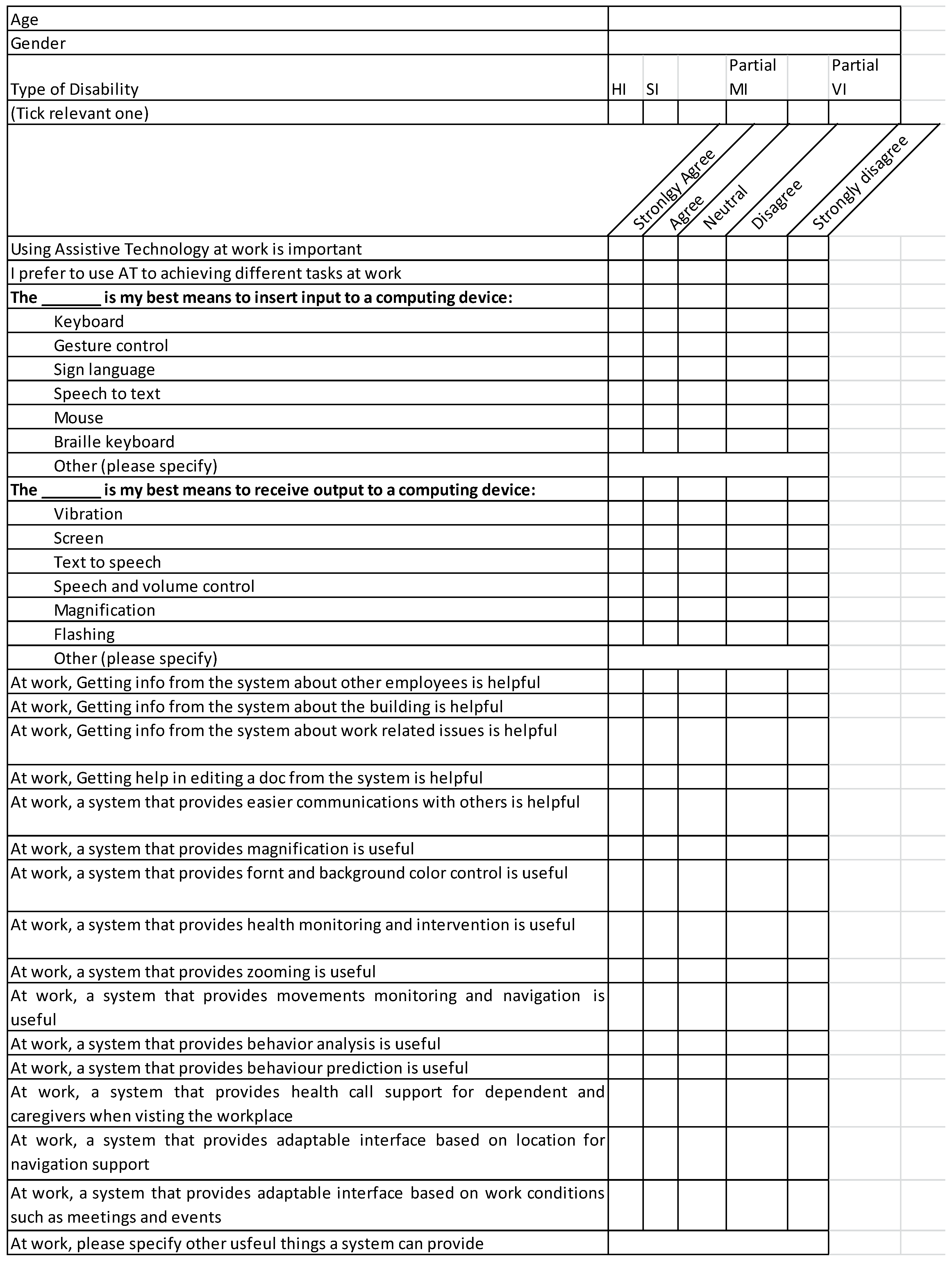

Similar to the work done in [60,61], a survey targeting PWD students has been conducted in this project using a questionnaire that identified their needs (as shown in Appendix-A1); the results of the survey are presented in Appendix-A2. The results revealed the following main requirements for designing an AT-based solution:

- Accessible system: To access any information related to the curriculum while walking on campus.

- Mobile searchable system: Ability to search for location and use of the LBS to reach their destination.

- Supported communication system: Ability to communicate with others and their teachers as well as their caregiver from anywhere on the campus, while moving.

- Auto-searchable system: Ability to find and talk to any person that matches their profile.

- Universal Interface system: To support different impairment conditions of the PWDs.

- Comprehensive solution: For information access, communicating with others, support of editing tools, and tracking users for intervention and guidance.

- Step 2: Identify the needs of PWDs through Experts and Literature Review

Another relevant technique for identifying the needs of PWD stakeholders is to draw on expert opinion and previous research work in the field. Butterfield and Ramseur [62] conducted a comprehensive literature review of the use of ATs at the workplace. They identified several needs of disabled employees: accommodation should be near the workplace; the path used for daily movement should be safe and easy; and proper navigation facilities should be provided. Yeager et al. [63] conducted research to study the barriers at the workplace for PWDs. The PWDs reported that AT helped them to overcome some of the barriers; however, some issues still needed to be addressed. Galvin and Scherer [64] wrote a book on the evaluation, selection, and use of various ATs. Out of 15 chapters, ten were written by PWDs. The book described the use of AT in various settings, such as the workplace, schools, and playgrounds, and reported on barriers, solutions, selection criteria, and future technologies. Based on the literature review and the issues highlighted in the introduction section, the following key needs for PWDs have been identified:

- AT system or application should be user friendly

- AT solution should be mobile

- AT solution should be accurate and flexible to meet user needs

- AT solution should provide good help and support documentation

- AT solution should be comprehensive in nature

- AT solution should be interoperable

- Step 3: Survey of Existing Technological Solutions for PWDs Gap Analysis

Smartphones have become very popular these days, and they can be easily owned by people to support mobility on the university campus. Therefore, many research and commercially available assistive solutions run on smartphone platforms. A total of ten research/commercial products have been evaluated (as shown in Table 2) according to the design criteria defined in Table 1. This helped the designers to identify the relevant criteria that support AT based on best practices. In addition, a brief assessment has been done based on the criteria of usability, support, and maintenance, as shown in Table 3 and Table 4 [65,66,67,68,69,70,71,72,73,74].

Table 2.

Assessment criteria for commercially available applications for people with disabilities (PWDs).

Table 3.

Assessment results of 10 commercially available assistive applications. Note that the scale runs from 0 to 10, where 0 indicates no evidence and 10 indicates full evidence.

Table 4.

Evaluation based on design consideration to support PWDs needs (Weightage: Yes, No, Partially Yes).

Table 3 provides the assessment of 10 research and commercial assistive applications. This assessment has been performed by scoring each technology (from 0 to 10) based on the evidence of child criteria of usability and maintainability, as identified in Table 2. It is clear that 3 out of 10 of these products have a good score for the majority of these criteria, and therefore they meet the specific conditions of PWD users in terms of usability and maintainability.

Innovative technologies can assist researchers and developers in providing intelligent solutions for PWDs which efficiently interact with the environment. These technologies employ different input methods, such as voice recognition, voice-to-text, and gesture control using sensors. They use different output methods, such as text-to-voice, vibration, and haptic control, in addition to controlling devices, such as wheelchair and smartphone guidance systems. The current research lacks a complete unified smart solution relevant to the PWDs at the workplace or in the university environment. Therefore, this work has addressed this issue, as presented in Table 4. The research and commercially available solutions have mainly focused on a particular group of PWDs, which means that the target group is not diverse. Such systems are inadequate if a person has more than one type of disability. Therefore, there is a need for a comprehensive solution that offers services to a wide range of PWD groups, rather than focusing on one group. In this work, a comprehensive and smart solution has been developed and implemented in an academic work environment. A supportive solution for PWDs should exhibit the following characteristics: usability in terms of flexibility, ease of use, and adaptability to user conditions, as well as good maintainability after release.

- Conclusions from Phase 1

An efficient, smart, and context-aware system on the university campus can be achieved through a universal interface that supports the needs for occupational therapy and rehabilitation (Medical model) [76], and empowers PWDs through a user-centered solution (Social model) [77]. The following features of AT should be considered:

(a) Mandatory requirements for an AT-PBS: for an AT to suit all conditions of PWDs and provide better functional capabilities, it should include the following features:

- Speech-to-text

- Text-to-speech and synthesis

- Speech/Voice command control

- Display enhancement through magnification and zooming

- Speech volume control

- Keyboard, mouse, and touch screen

(b) Enhancements to an AT-PBS: for an AT to support full information accessibility, it should include the following features:

- Automatic emergency response

- Comprehensive support of activities at the university campus, including smart help with an auto-searchable system, profile setup, noting, and communication.

(c) Enhancements to an AT-PBS: for an AT to have better service support accessibility, it should include the following features:

- Mobility support

- Tracking, behavior analysis, and intervention

- Adaptable interface according to user profile

- Design for all by supporting universality

- Overcome existing environmental and social barriers by supporting usability solutions that are adaptive to the user’s conditions.

There can be more enhancements to support extra functional capabilities, such as gesture control and video recognition, video synthesis, sign language and avatar, Braille keyboard, and controlling the movement of a wheelchair through a smart interface.

3.2.2. Identifying the Matching Solution Including USCAS-PWD System Design (Phase 2)

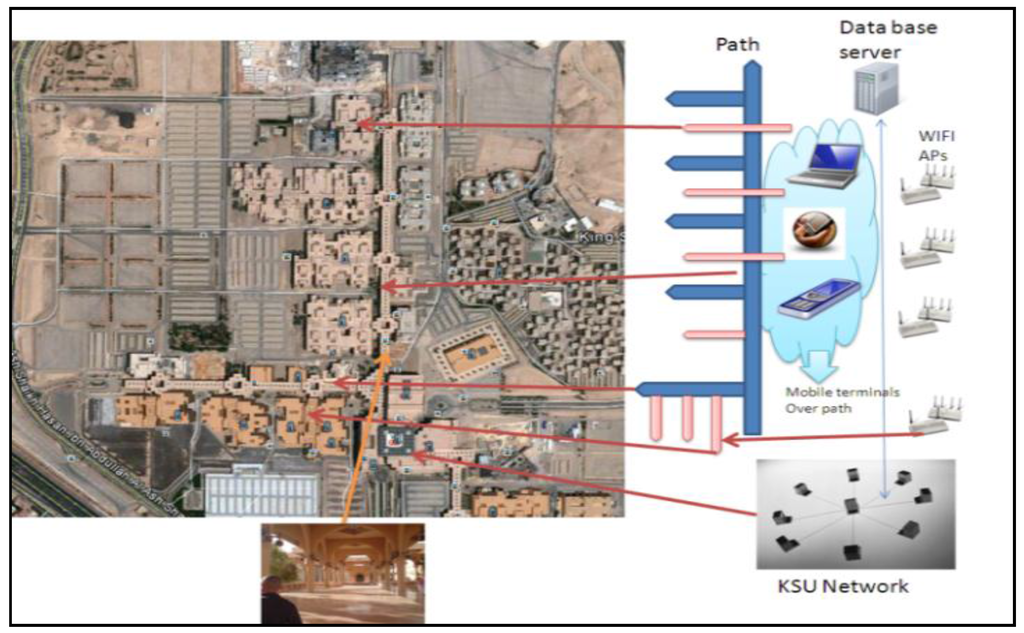

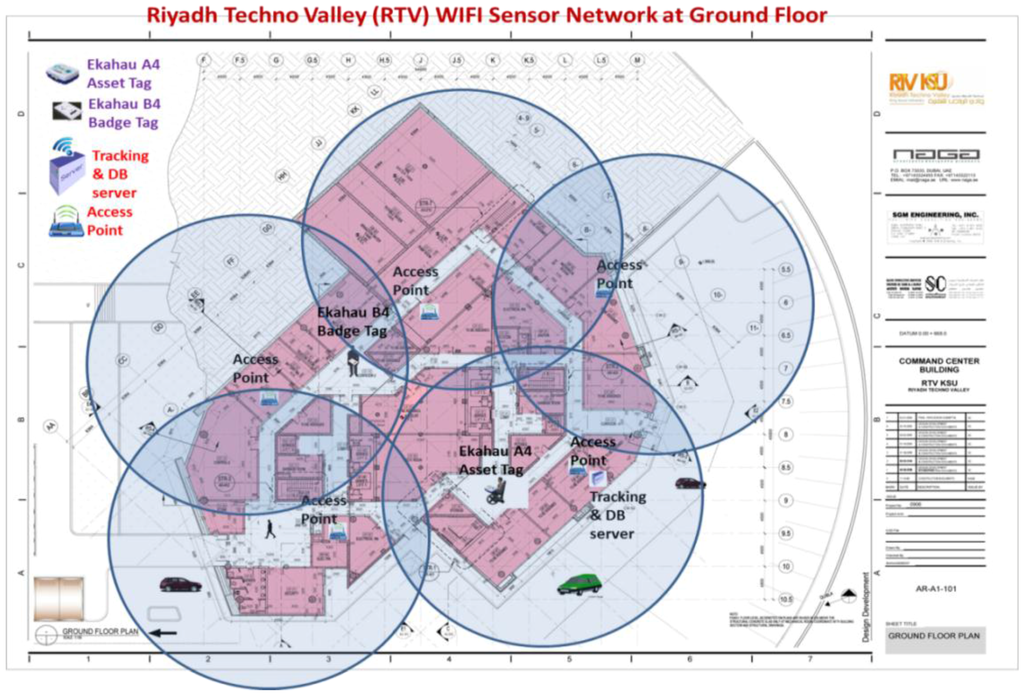

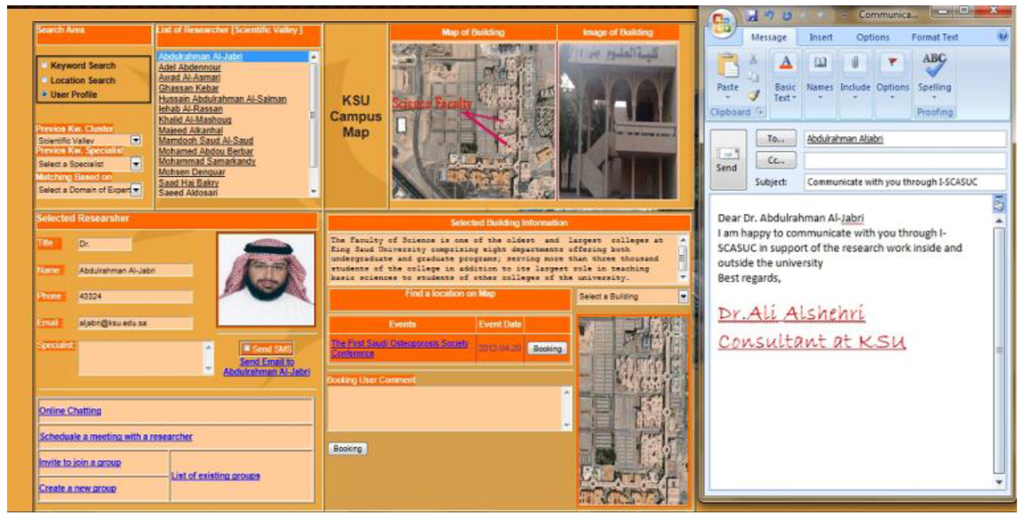

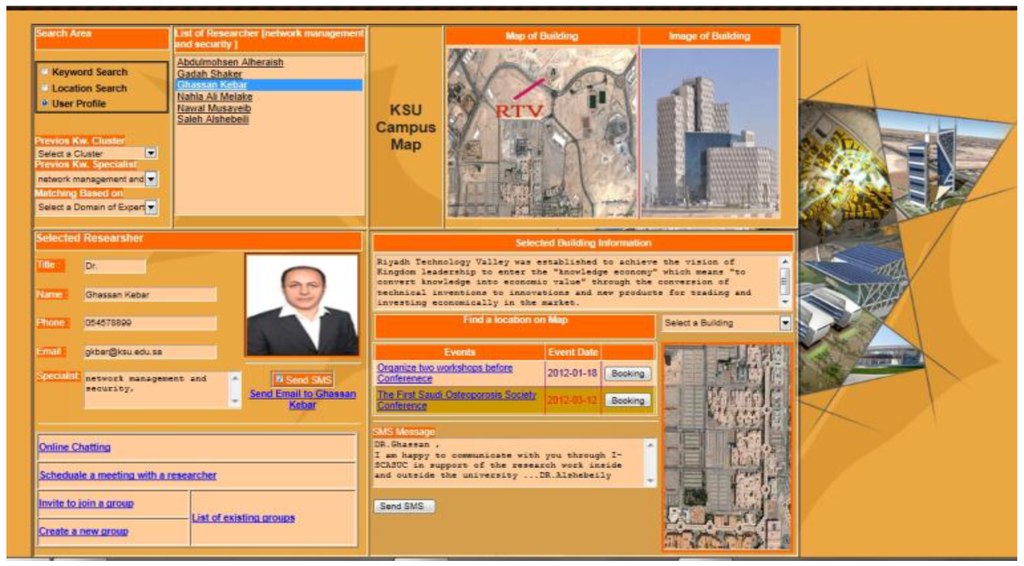

USCAS-PWD should incorporate the features identified in phase 1. As a result, a universal prototype solution that is secure, comprehensive, and supportive of the needs of the majority of PWDs at the university campus has been developed. The proposed solution is adaptive in nature; i.e., it allows its users, including PWD students, to conduct smart and regular searches on the university campus as well as inside the building. It also allows the users to interact with the selected party using different mechanisms, such as SMS, email, chatting, notification, and event booking. In addition, the solution supports a smart help feature that identifies the PWD’s user profile and adapts the interface display parameters (color, fonts, etc.) and environment settings (speaker volume, mic volume, virtual mouse, and virtual keyboard) according to the user’s requirements and the predefined, stored profile. Two program interfaces have been designed and developed to support the PWDs on the campus network and inside the building. The first interface, which has been developed using the USCAS-PWD program (based on a web-based SQL database and written in a .NET language), covers the university campus. This interface allows users to securely search for the relevant data using Wi-Fi and GPS technology anywhere on the campus while they are mobile or stationary. It also allows them to interact with the selected party effectively, as shown in Figure 2. The second program interface has been designed to cover the internal network within a building using an RFID/WiFi-based network. It covers the Riyadh Techno Valley (RTV) building and is written in the Java language; it interacts with a local SQL database and a tracking location database. This interface allows the system to monitor PWD movement and location within the building, as shown in Figure 3.
It also allows the PWDs to execute a smart helping program and smart editor program, as will be explained in the “Algorithm” section. The ATs used in the USCAS-PWD and the relevant criteria explained in the section “Design Consideration and Methodology” (Table 1) are illustrated in Table 4, along with other existing solutions.

Figure 2.

King Saud University (KSU) Smart and Context Aware University Campus Networking for PWD (USCAS-PWD).

Figure 3.

RFID–WiFi network inside RTV building.

- Comparison of Technology Design Features

Comparison of the design considerations of the USCAS-PWD interface program with the 10 existing software applications revealed that USCAS-PWD satisfied the input/output conditions for PWD groups with different impairments. However, the other products lacked a comprehensive solution to support PWDs with multiple impairment conditions, as shown in Table 4. All existing software products for PWDs listed in Table 4 lacked the support of a flexible adaptable interface, and therefore could not be used by different PWDs with multiple impairment conditions. The USCAS-PWD can support 11 groups with different impairment conditions, as discussed in the following section. The other important criterion, “Tracking Location and Behavior”, has been partially supported in two existing solutions, but is fully supported in USCAS-PWD. In addition, the USCAS-PWD supports speech recognition, as do five other existing solutions. Therefore, only USCAS-PWD satisfies the needs of the PWDs in terms of supporting all the input/output technologies that are needed by all PWD groups. It also supports tracking location and behavior to assist PWDs in the work environment and on the university campus.

- Impairment Groups (Design and Implementation Consideration)

In order to cover the different PWD categories according to impairment conditions, it was recognized that PWDs who can see or partially see, can hear or partially hear, can or cannot move their hands, and can or cannot speak can all be legitimate users of the system. The groups that have been devised are shown in Table A1 of Appendix A3.

For a person with full vision and partial vision requirements that can co-exist with three other requirements (hearing, speaking, and moving hand), there would be $2^5 = 32$ possible combination groups. Since there were constraints on these combinations (at least one input and one output must be available), the total number of groups was reduced to eight. The constraints are as follows: for output from the system, a person must see or hear; for input to the system, a person must speak or move their hand. A person with partial hearing must be able to see but may or may not be able to speak or move a hand; therefore, three further groups are applicable (group 9, group 10, and group 11), as shown in Table 5. Hence, Table 5 identifies the different combinations according to different PWD impairments and consists of the 11 groups that have been defined. The flexible interface would be designed to accommodate all conditions of these groups by adjusting the microphone volume, the speaker volume, the font color and size, and the display window size and color.

Table 5.

Different groups according to impairment conditions.

4. Implementation of the USCAS-PWD

One of the main design considerations was to choose the right technological tools for voice recognition, which could support the features of the proposed solution at the PWD interface. Hence, a comparison between different technologies to support voice recognition, text-to-voice, and voice-to-text has been carried out as follows.

4.1. Technology Consideration and Comparison to Choose Voice Recognition Software

Voice recognition software plays an essential role in providing various services for PWDs in the proposed system. The choice of this software was made after the assessment of 11 popular software applications, which simplified the development process. This assessment was done in order to select a reliable software tool from existing solutions satisfying the relevant criteria. The assessment criteria consisted of two main parameters—namely, usability and sustainability and maintainability. These two parameters were named as the “Parent” criteria used to assess the software on certain “Child” criteria, as listed in Table 6 and described as follows:

Table 6.

Assessment criteria for voice recognition software.

4.1.1. Usability Criteria

Usability included the following “Child” parameters for assessing software applications:

- Ability to understand, learn, build, and install with the right documentation: These were assessed to determine the flexibility of software—i.e., if they could be easily understood for their functionality and use. They were also assessed to determine their building process and installation procedure (simple or difficult). The scoring was done on a scale of 0 to 10, with 10 assigned to the most flexible software.

- Speech to Text (STT) and Text to Speech (TTS) facility: 5 points were given to only one feature and 10 to both features (STT and TTS).

- Web Based: Software accessible on the web was scored at 10, otherwise it was given 5.

- Downloads: Scoring from 0 to 10 was given based on the number of downloads in a specific period. For example, the software that was downloaded between 500 and 1000 times got 5 points, whereas the one with more than 2000 downloads received 10 points.
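The two usability rules above that come with explicit point values (the STT/TTS facility and web accessibility) can be written as small scoring functions. The following is only a minimal sketch of that rubric; the function names are hypothetical, and the score of 0 for software offering neither STT nor TTS is an assumption, since the text does not state it.

```python
def stt_tts_score(has_stt: bool, has_tts: bool) -> int:
    """Score the STT/TTS facility: 5 points for one feature, 10 for both.

    Assumption: software with neither feature scores 0 (not stated in the text).
    """
    return 5 * (int(has_stt) + int(has_tts))


def web_based_score(is_web_based: bool) -> int:
    """Score web accessibility: 10 if accessible on the web, otherwise 5."""
    return 10 if is_web_based else 5


# Example: a desktop-only package that offers both STT and TTS.
partial_usability = stt_tts_score(True, True) + web_based_score(False)
print(partial_usability)
```

The remaining usability criteria (documentation and downloads) are scored on a 0 to 10 scale by the assessors and are therefore not reduced to a formula here.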

4.1.2. Sustainability and Maintainability Criteria

Sustainability and maintainability included the following “Child” parameters for assessing the software:

- Identity, copyright, community usage, and accessibility: Assessment was based on clear and unique identity of the software. In addition, the evidence of current/future community of the software and the ability to download were evaluated on a scale of 0 to 10.

- Supportability, testability, and changeability: The software was assessed based on the evidence of existing and future developer support. In addition, the level of flexibility in modifying the software was scored on a scale of 0 to 10.

- Cost: Free software was assigned a score of 10, and reasonably priced software a score of 8. Expensive software got a score of 6, and software charged per subscription got only 0 to 5 points.

- Portability on operating systems: The software was assessed for its suitability on various operating systems, including Windows, Android, and iOS. The scoring was performed on a scale of 0 to 20. Software that was supported across multiple platforms scored 20. A score of 15 was given to software compatible with Windows, Android, and iOS. Windows- and Android-based software scored 10, and a Windows-only package scored 5.

- Available languages: The package that supported both English and Arabic was given a score of 10, whereas software with only one language scored 5.

- Available voices: Higher scores were given to software with a greater number of voices for converting sound from text. Software offering more than 15 voices scored 10.

- Programming languages: Support for the two languages C++ and Java attracted a high score, with preference given to Java. A score of 5 was given to software supporting only C++, while software supporting only Java got 10 points, and support for both languages received a score of 15.
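As an illustration, the sustainability and maintainability rules that have fixed point values (cost, available languages, and programming languages) can be sketched as follows. The function names and the string labels for the pricing tiers are hypothetical, and the score of 0 when no listed language is supported is an assumption that the text does not state.

```python
def cost_score(pricing: str, subscription_points: int = 0) -> int:
    """Score the cost criterion from a pricing tier label (labels are hypothetical)."""
    fixed = {"free": 10, "reasonable": 8, "expensive": 6}
    if pricing in fixed:
        return fixed[pricing]
    if pricing == "subscription":
        # Subscription-based software receives between 0 and 5 points.
        if not 0 <= subscription_points <= 5:
            raise ValueError("subscription score must be between 0 and 5")
        return subscription_points
    raise ValueError(f"unknown pricing tier: {pricing!r}")


def language_score(english: bool, arabic: bool) -> int:
    """10 points for English and Arabic, 5 for one language (0 for none is assumed)."""
    return 5 * (int(english) + int(arabic))


def programming_language_score(cpp: bool, java: bool) -> int:
    """5 points for C++ only, 10 for Java only, 15 for both (0 for none is assumed)."""
    return (5 if cpp else 0) + (10 if java else 0)


# Example: free software, English only, usable from both C++ and Java.
print(cost_score("free") + language_score(True, False) + programming_language_score(True, True))
```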

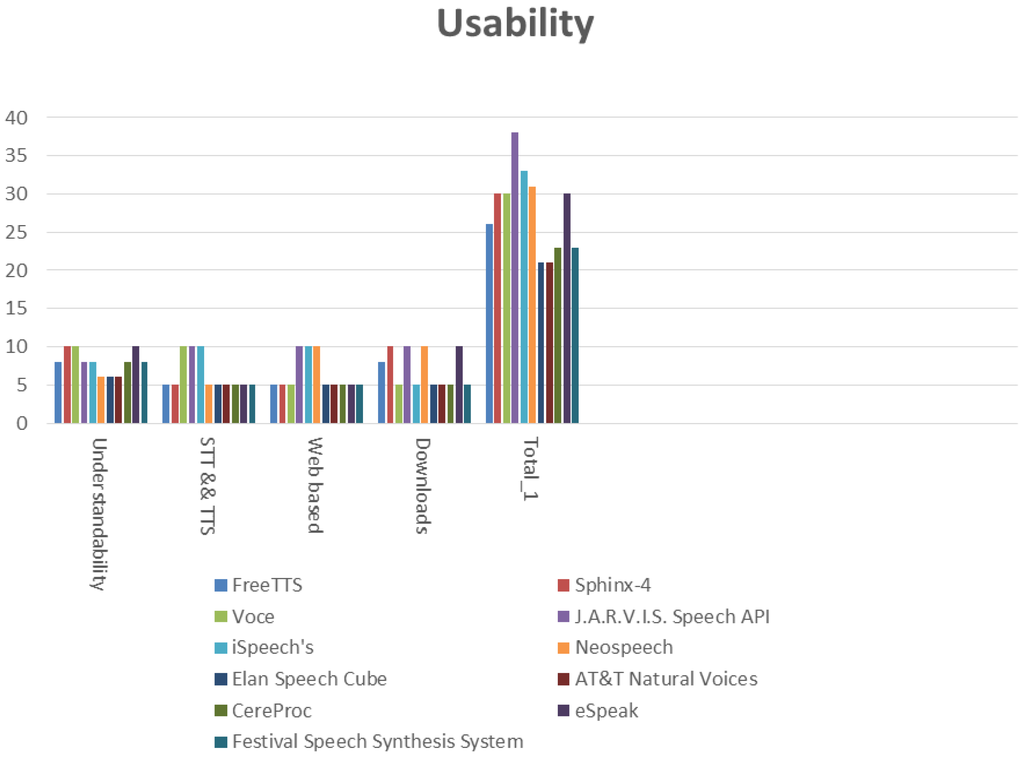

The scores given to 11 software applications were evaluated by two Engineering Programmers who developed the smart solution for the project in order to select a relevant software tool for voice recognition that can be integrated with the smart unified interface. These assessment results are based on the software application features, with evidence as shown in Table 6 [78,79,80,81,82,83,84,85,86,87], where the evidence of C3.1, C4.1, and C4.2 were given directly by the Engineers according to their test assessment. The numerical assessment is presented in Table B1 and Table B2 of Appendix B.

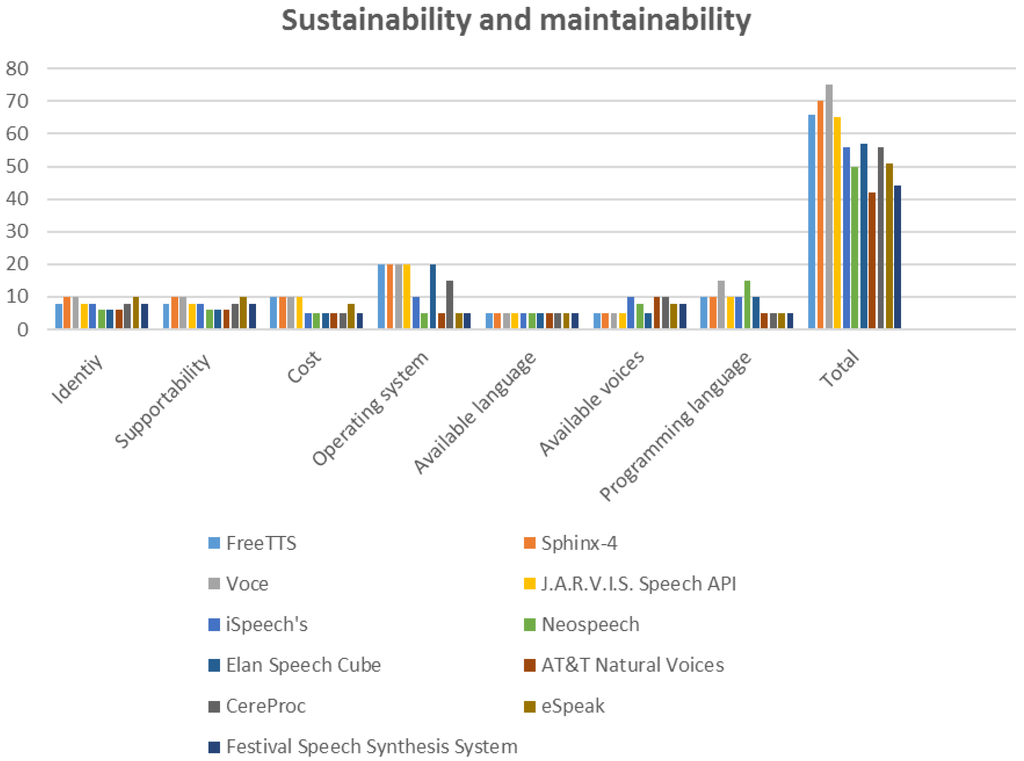

The software applications were assessed against the two main Parent criteria, i.e., Usability and Sustainability and Maintainability. The results are shown in Figure 4 and Figure 5. As can be seen in Figure 4, the J.A.R.V.I.S speech API scored the highest (38 points) on the Usability criterion, whereas VOCE ranked fourth. However, on the Sustainability and Maintainability features, VOCE attained the highest score with 75 points, Sphinx came second with 70, and the J.A.R.V.I.S speech API and iSpeech scored around 65 points each, as shown in Figure 5. Since Sustainability and Maintainability features are considered critical to our application, VOCE became a clear choice for the speech recognition system, as it also satisfied other requirements, such as supporting both Java and C++. The software was freely available and worked on all of the operating systems considered, including Windows, Android, and iOS. It also enabled both text-to-speech (TTS) and speech-to-text (STT), offered several speaking voices in the package, and worked in the English language. Although VOCE is not web-based like J.A.R.V.I.S, it was the most suitable choice, as this feature was not essential.

Figure 4.

Usability scores of voice recognition software.

Figure 5.

Sustainability and Maintainability scores of voice recognition software application.

4.2. Implementation of the Unified Interface for the Indoor Network

According to the design considerations in previous sections, the implemented solution would address the following important features:

- Universal interface that suits the majority of PWDs,

- Comprehensive solution that supports all the services needed at the workplace and on the university campus,

- Support of the mandatory requirements of AT listed in the section “Identify the requirements and Needs for PWDs”,

- Support of the enhancement of AT to support full Information accessibility and automatic emergency response,

- Support of service capabilities, including mobility, tracking and intervention, behaviour analysis, adaptable user interface, and overcoming environmental and social barriers.

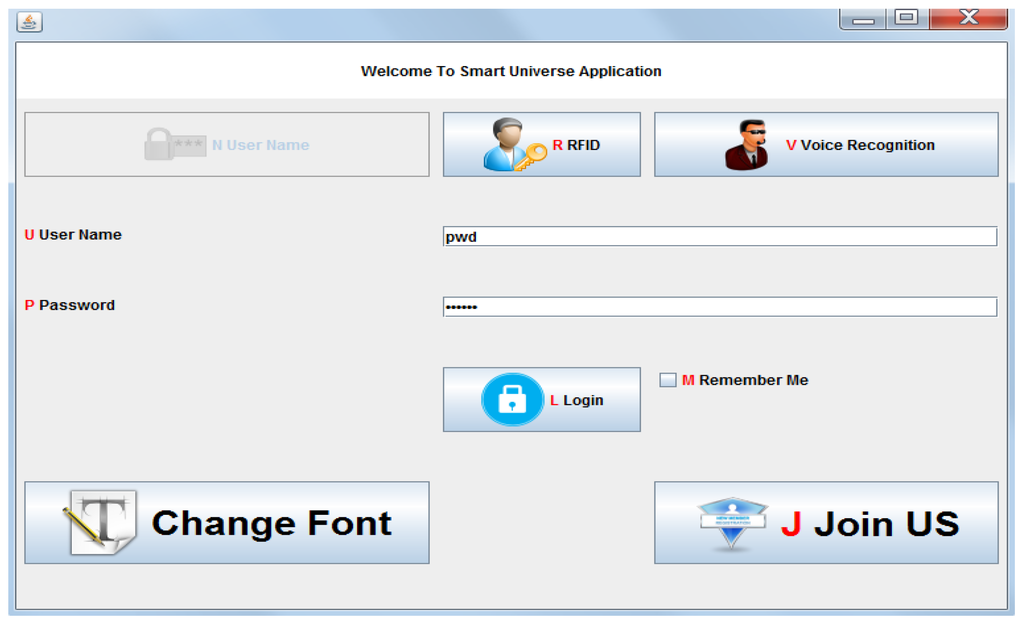

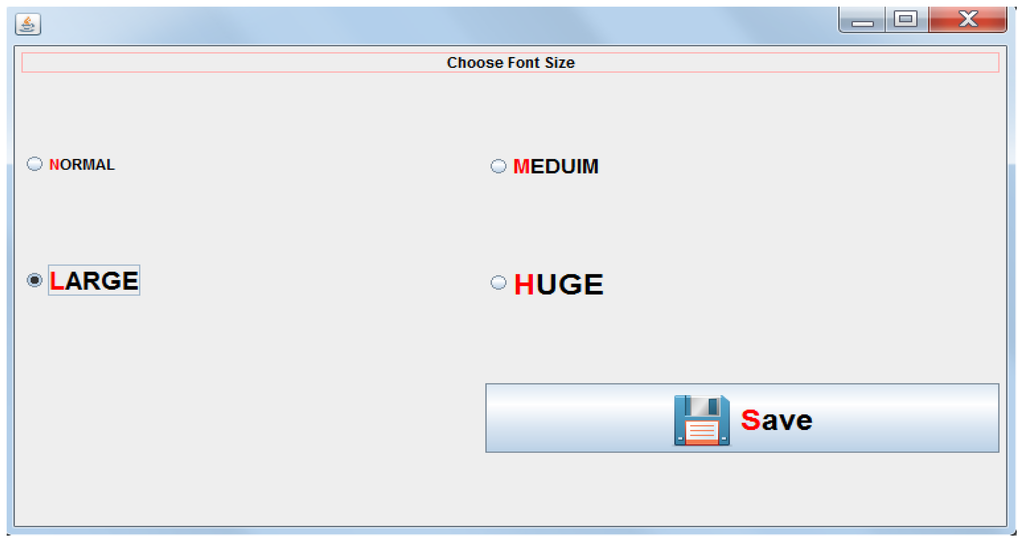

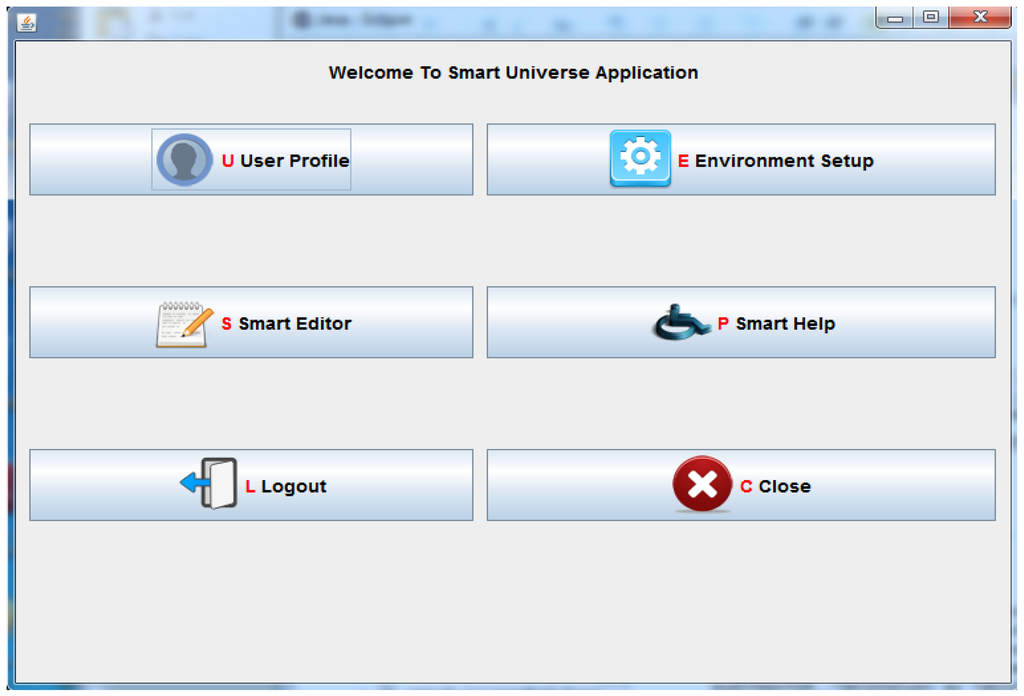

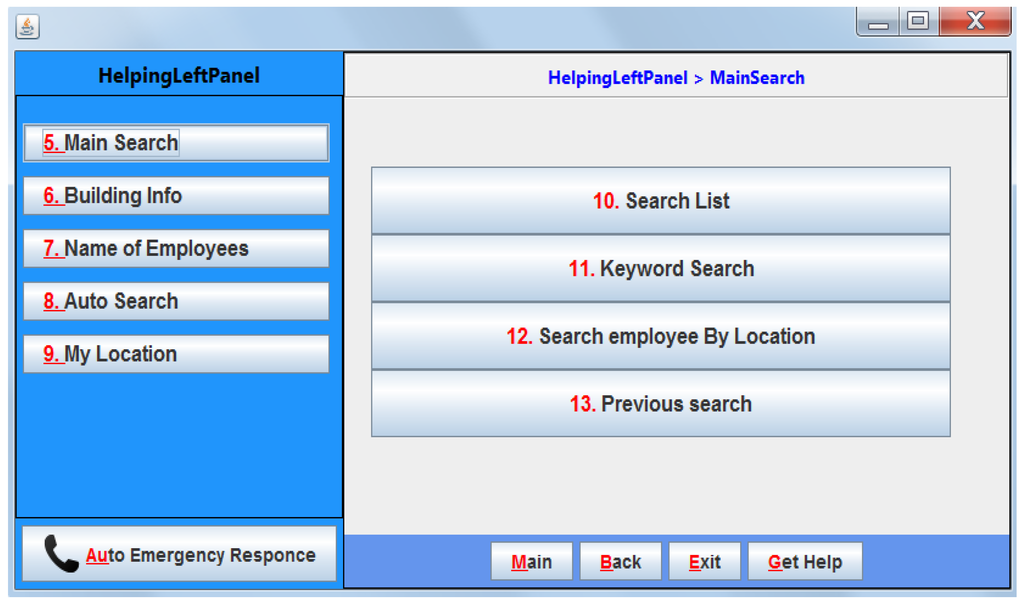

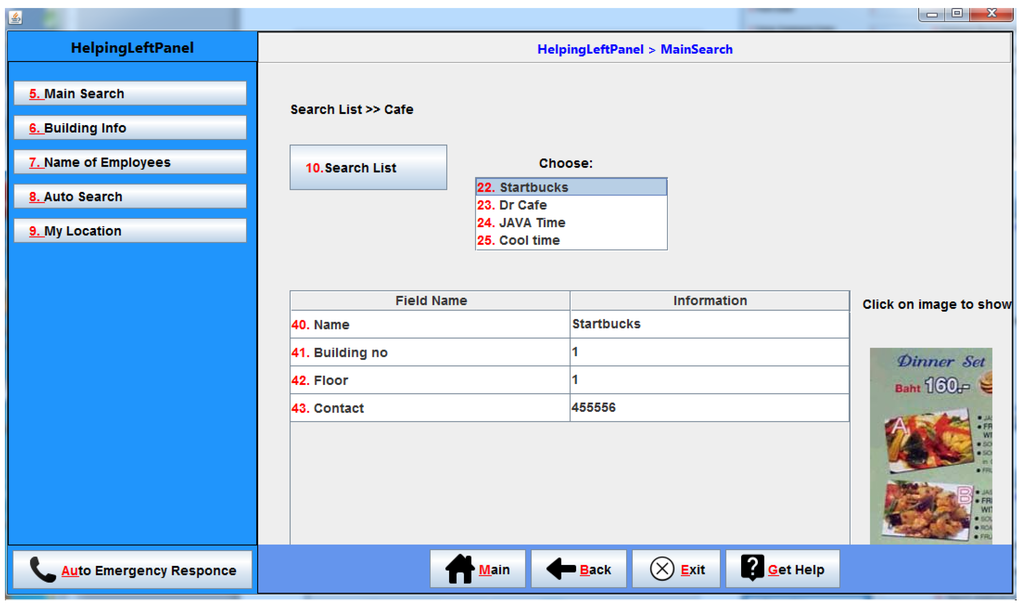

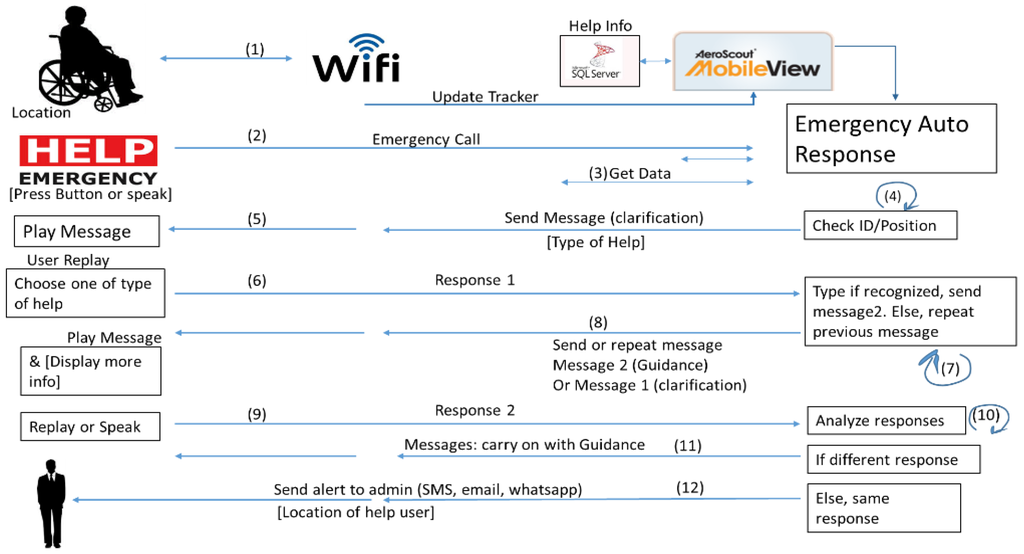

Therefore, a unified interface has been developed to allow PWD users/students to log into the system and adjust the display font size to suit them, even at the first login window (as shown in Figure 6 and Figure 7, and as presented in movie-1 of the supplementary file “CommonUnified.mp4”). The user can log in at the interface using a keyboard with the relevant font size, depending on his/her condition, or using RFID or voice recognition. According to the user profile, a different interface with the relevant font size and window color and size is displayed. The user profile is set up either according to the default group he/she belongs to, or by customization through user preference. The first indoor interface for PWDs after login allows the user to select between different applications: user profile and environment setup, smart editor, and smart help, as shown in Figure 8. Note that privacy has also been addressed in the developed solution through encryption and authentication. The user is authenticated during login using a user name and password, and authentication will be based on RFID login in a future prototype version, while his/her messages are encrypted. Three main program features, namely setting up the user profile and environment profile, the smart editor, and smart help (as shown in Figure 8), have been supported for PWDs to provide a comprehensive solution on campus. Users can drive the interface program through keyboard, mouse, or voice-to-text by using the shortcuts displayed in red in Figure 8, Figure 9, Figure 10 and Figure 11. Smart help allows the PWD users to retrieve relevant guiding information from the help server using voice recognition, which translates the voice into text and then into a command to get information from the server, as shown in Figure 9 and Figure 10, and as presented in movie-2 of the supplementary file “SmartHelpDesktop.mp4”.
As shown in Figure 9 and Figure 10, the USCAS-PWD program allows users to select among multiple search options: Main Search includes search list, keyword search, search employee by location, and search history; Building info; Name of Employee, to get full information about any employee working in the building; Auto Search, which includes search by location and search by profile, which allows search results to be matched to user properties such as interest and specialties, where a list of users that match the user properties (interest or specialty) will be displayed on the auto search interface; and My Location, which determines the current user location within the building. In the search List, PWD users are given the option to select an item from the list, where these items are related to amenities in the building, as shown in Figure 10.
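The profile setup described above (group defaults that can be overridden by user preference) can be illustrated with a short sketch. This is not the Java implementation used in USCAS-PWD; the class, the group names, and all setting values below are hypothetical placeholders chosen only to show the idea.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class InterfaceSettings:
    font_size: int
    window_color: str
    speaker_volume: int  # percent
    mic_volume: int      # percent


# Hypothetical defaults per impairment group (values are illustrative only).
GROUP_DEFAULTS: Dict[str, InterfaceSettings] = {
    "group_a": InterfaceSettings(font_size=12, window_color="white",
                                 speaker_volume=50, mic_volume=50),
    "group_b": InterfaceSettings(font_size=20, window_color="yellow",
                                 speaker_volume=90, mic_volume=50),
}


def resolve_settings(group: str,
                     preferences: Optional[dict] = None) -> InterfaceSettings:
    """Start from the group default and apply any user customization on top."""
    base = GROUP_DEFAULTS[group]
    return replace(base, **(preferences or {}))


# A user in group_b who prefers an even larger font keeps the other defaults.
settings = resolve_settings("group_b", {"font_size": 24})
print(settings)
```

Keeping the group defaults immutable and applying preferences as an overlay means a user can always fall back to the default profile of his/her group.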

Figure 6.

Login Page.

Figure 7.

Customize Font Size First Page.

Figure 8.

First Window application for USCAS-PWD.

Figure 9.

Features in Help option of the interface (Interface relevant to PWD).

Figure 10.

Main Search Example.

Figure 11.

Communication and Event Setup.

In addition, the users would be able to set up events and communicate with the selected party through different mechanisms, such as sending an SMS or email, or calling the other party, as shown in Figure 11 and as presented in movie-3 of the supplementary file “CommInter-BehAnala-ProfileSetup.mp4”. User location tracking within a building is done using the RFID–WiFi system, which has been integrated with the unified interface to assist users in determining their location as well as tracking their movement on a map to reach a particular destination. The event behavior algorithm (Section 4.4.2) has been integrated into the track server, which determines whether the user has executed the event according to schedule and sends an intervention message to him/her or his/her caregiver to advise them about a missing behavior or about the date and time of the events. Event setup allows the user to set up a new event by specifying the event’s location, date, and time, as well as to send a reminder by email or SMS, as shown in Figure 11. The intervention feature shown in Figure 11 allows the PWD user to set up and view the intervention messages that were sent to him/her as part of executing the event behavior algorithm, as described in section “Event and Noting Behavior Algorithm” and as presented in movie-3 of the supplementary file “CommInter-BehAnala-ProfileSetup.mp4”. The location tracking feature shown in Figure 11 allows the PWD user to track his/her location in real time while moving toward a destination, and an alert message is sent to him/her if he/she is moving in the wrong direction according to the movement algorithm described in Section 4.4.1. Users can disable tracking by not sending their identity through the interface if they are not willing to be tracked. The personal reminder allows the users to view and control the status of current and previous events.

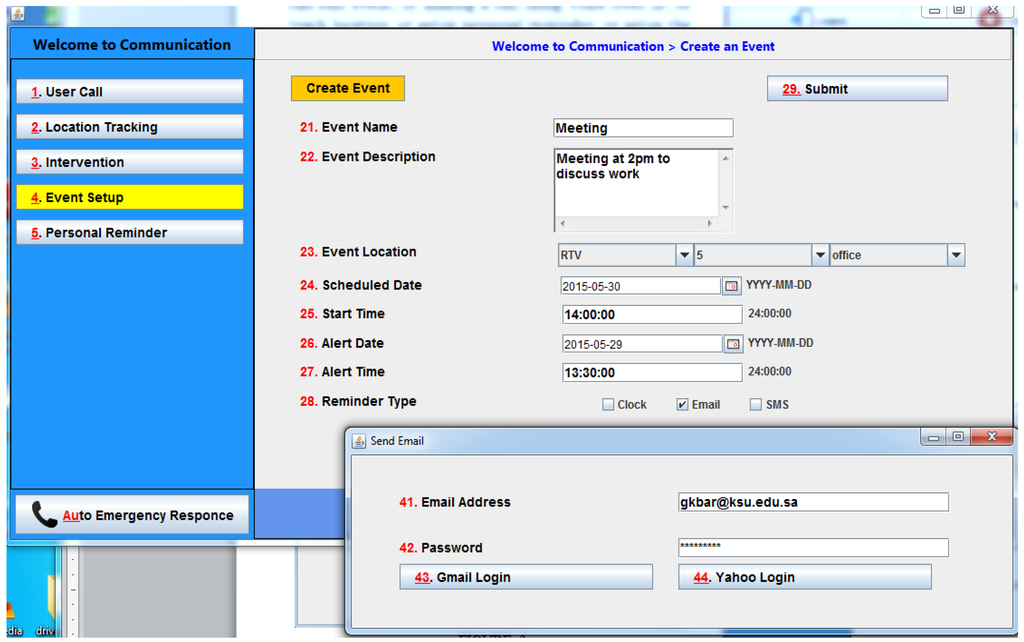

Furthermore, the solution would provide help or emergency response (as displayed in Figure 9, Figure 10 and Figure 11) to handle difficult situations, assist PWDs in getting the right help, and guide them in solving their problems. The emergency call would be handled by the Auto Emergency Response (AER) program installed on the server, as illustrated in Figure 12. The client terminal attached to the PWD user would be tracked continuously by the AER program through a WiFi AP that is connected to the AER server through a wired or wireless connection, and the location data would be stored in the MSQL server (sequence 1). If a PWD user issues an emergency call through speech or by pressing a button, a signal would be sent to the AER server (sequence 2), where the AER program would then request the location and map from the MSQL server to identify the user ID and location (sequence 3). Then, the AER server would send a reply to the PWD user terminal with a few options, asking which type of help is required. The message received at the requesting PWD terminal would be played to the PWD and displayed on the terminal so that he/she can choose one of the help options (sequence 5). The PWD user can then speak the chosen option, which would be sent to the AER server (sequence 6) for analysis by the AER program and further action (sequence 7). If the response coming from the PWD matches one of the help types that were sent to him/her, then a guidance message would be sent to the PWD again (sequence 8 with new message).

Figure 12.

Sequence diagram of Auto Emergency Response and Help program.

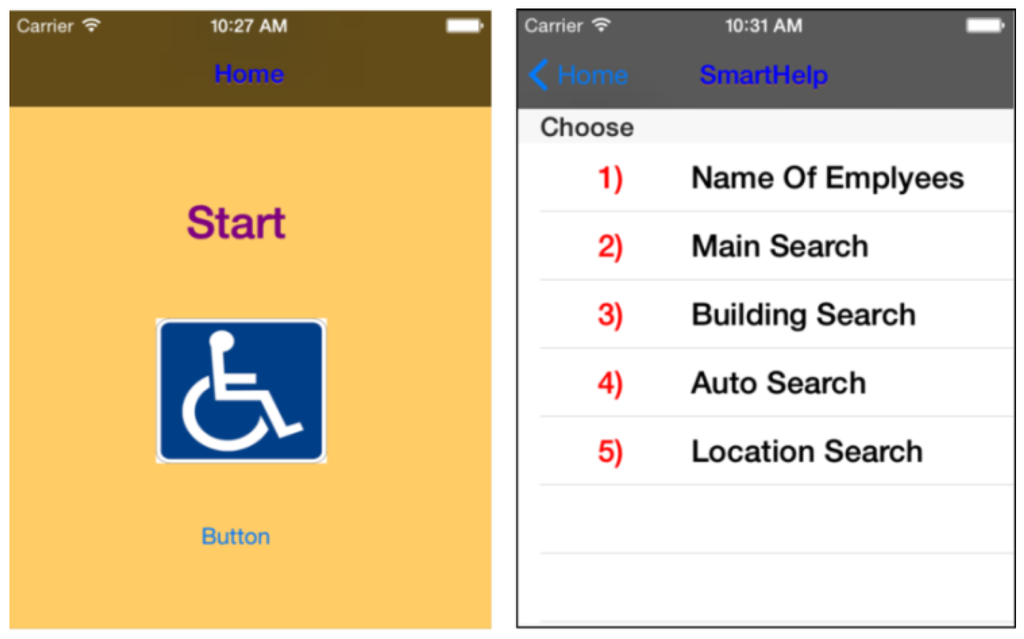

A light universal interface program has also been developed to run on a smartphone (iPhone). It allows the user to interact with the system to get information stored in the MySQL server. This has been achieved through an application running on the iPhone, which interfaces through a Web service based on the Web Services Description Language (WSDL). This web service converts the received messages in C# format so that they can be understood by the iPhone terminals, as shown in Figure 13 and Figure 14. In Figure 13, five search options are supported. In Figure 14, the execution of the search “Name of Employee” displays three names. When the character “g” is entered, two names are filtered out, and more information about the selected name is displayed. Movie-4 of the supplementary file “MobileAddDataToDB.mp4” demonstrates how to add user data to the database using an iPhone.

Figure 13.

Light Smart Universal Interface, Smart Help, for iPhone.

Figure 14.

Light Smart Employee Interface, Search, for iPhone.

4.3. Implementation of the System for Outdoor at the Campus Network

The USCAS-PWD program has been implemented using ASP.NET and an SQL database to support PWDs while they move around the campus, where the client can access the information using the web interface, as shown in Figure 3. The main relational tables represent building, researchers, cluster, events, keyword-search, specialist, researcher’s specialist, booking, and sending SMS and email. The fields associated with the building table are building ID, map, photo, IP address, location, building description, and image size. Users would be able to book an event according to the chosen location found in the building. The researcher table is linked to the building table through building ID, cluster table through cluster ID, researcher specialist table through its ID, keyword-search ID, and sending SMS and email table Section ID. It also includes many fields regarding the researchers. This would allow the users to get all the information regarding the building and its researchers, as well as information regarding the researchers that are relevant to their domain cluster and specialist. Furthermore, users would be able to communicate with a selected party using SMS, chat, or email.

The building IP address field included in the building table would be used by the USCAS-PWD program for comparison with the IP cluster read from the user’s terminal after it has associated with the access point. If an IP match is found, the USCAS-PWD program would determine the location of the user and subsequently display the search result according to the search option that the user has selected. The GIS mechanism presented in this paper provides a flexible, low-cost solution for users who do not have GPS in their terminals. The location-based search is a smart adaptive search solution that can dynamically determine the user’s location on campus using WiFi IP subnetting-based location technology or GPS technology, and then display the location, information about the location’s department and its researchers, as well as any event associated with this department. Satisfactory feedback has been obtained from PWDs, students, and the developers who tested the prototype USCAS-PWD program. King Saud University’s (KSU) students, including the PWDs, as well as researchers and RTV staff, would benefit from the USCAS-PWD program, since it allows them to display the matching research profile as well as relevant guidance, where the mobile devices would receive information regarding a building or a group of buildings in the surrounding areas and display it on the user’s terminal.
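The IP subnetting-based location lookup described above can be sketched in a few lines. The example below uses Python's standard `ipaddress` module rather than the ASP.NET code of USCAS-PWD, and the building names and subnets are hypothetical stand-ins for the IP address field of the building table.

```python
import ipaddress
from typing import Optional

# Hypothetical building table: each building is associated with the subnet
# served by its access points (the real values would come from the database).
BUILDING_SUBNETS = {
    "Engineering": ipaddress.ip_network("10.1.0.0/16"),
    "Science": ipaddress.ip_network("10.2.0.0/16"),
}


def locate_building(client_ip: str) -> Optional[str]:
    """Return the building whose subnet contains the client's IP, if any."""
    address = ipaddress.ip_address(client_ip)  # raises ValueError if malformed
    for building, subnet in BUILDING_SUBNETS.items():
        if address in subnet:
            return building
    return None  # no match: the caller could fall back to GPS


print(locate_building("10.2.3.4"))
```

Returning `None` when no subnet matches leaves room for the GPS-based alternative mentioned in the text.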

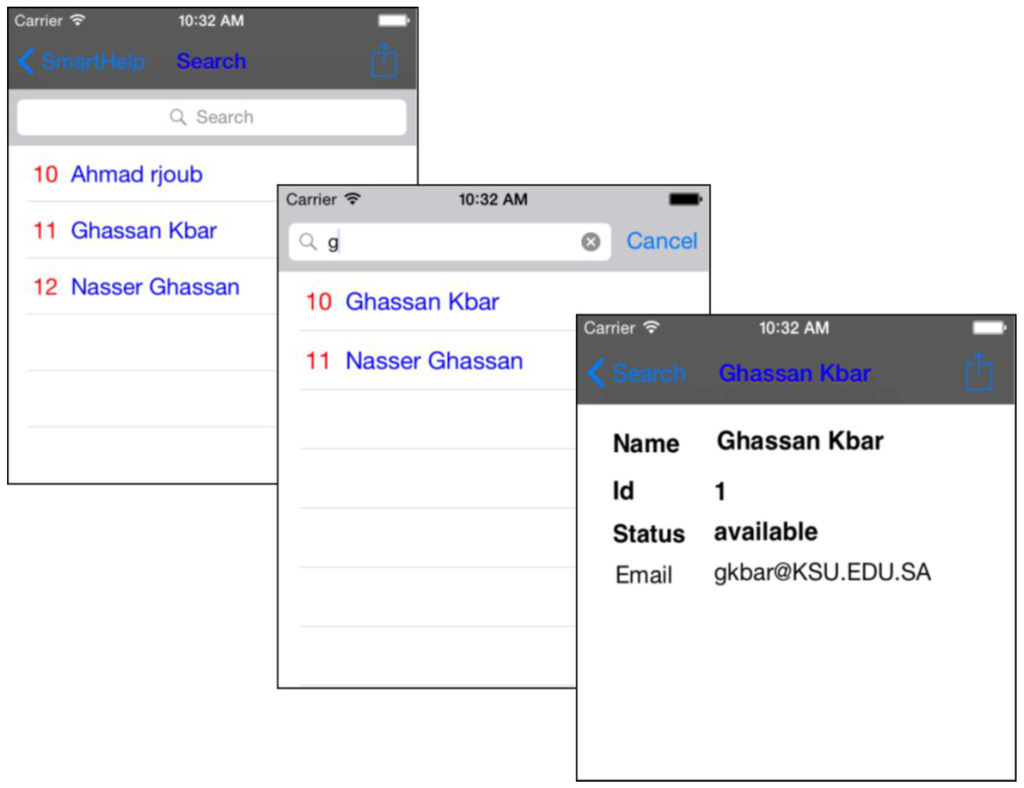

4.3.1. Search by Keyword and Booking Event

Users are able to search for any keyword associated with other researchers, clusters, or specialists anywhere on the university campus, whether they are stationary or mobile. As shown in Figure 15, when a user searches for a particular keyword in the cluster option “scientific valley”, the USCAS-PWD program displays the list of all matching researchers, gives the option to select a particular researcher from the list, and displays the relevant information about the researcher and the building he/she works in, as well as any event planned for this building. In Figure 15, many researchers appear on the matching list, and the information for the selected researcher “Saeed” and his RTV information are displayed. The user has also executed the “Find Direction” option for the Engineering department while located at the Science department, as shown in Figure 15. The arrow on the bottom right side in Figure 15 indicates the direction from the current location of the user to the destination that he/she has selected.

Figure 15.

USCAS-PWD User Interface using keyword-search and Find Direction.

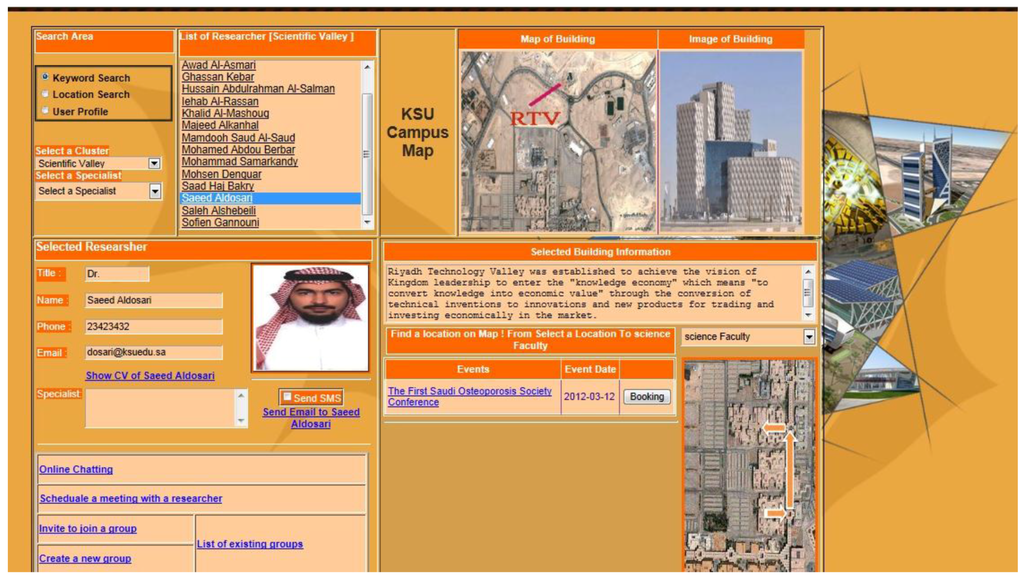

4.3.2. Auto-Search by Location and Find Direction

While the user is moving on the university campus, the USCAS-PWD program determines the user’s location and displays information about the department at that location, as well as a list of all researchers in this department. If the user selects a researcher from the list, the relevant information of the selected researcher (e.g., “Dr. Abdulmuhseen” from Science) is displayed at the bottom left of the window, and the information about the Science department and its future events is displayed in the right window, as shown in Figure 16. The user interacts with the USCAS-PWD interface by booking the event. In addition, the user has executed Find Direction from the Science building to the Engineering building, where the arrow in Figure 16 displays the direction.

Figure 16.

USCAS-PWD User Interface using Location-based search, event booking, and Find Direction on map.

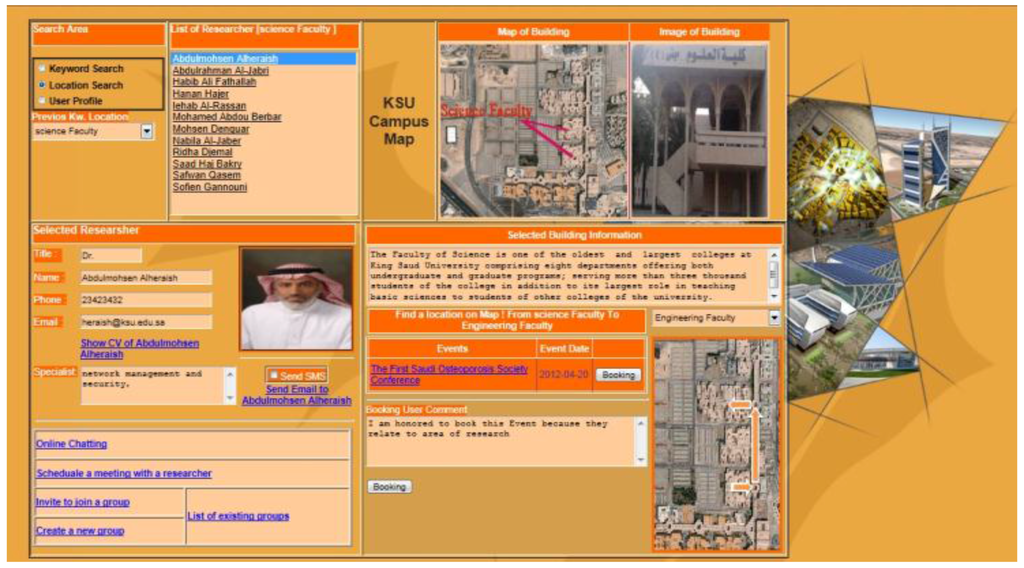

4.3.3. Auto-Search by User Profile and Sending Email and SMS

A user profile can be set explicitly by the user according to his/her predefined preferences, or implicitly by observing user actions during keyword searches, which can update the interest field. This allows the user profile to be updated dynamically according to the collection of search entries executed by the user. The auto-search by user profile first determines the user location, then identifies the researchers’ profiles that match the user’s profile and displays them in the most suitable format, as shown in Figure 17. Two options associated with the user profile are displayed for the user: specialist and interest. The interest list is populated by the user to indicate his/her interests. It can also be updated dynamically: previous keyword (KW) searches and previous Loc searches are added to the interest list if they have been selected by the same user with a threshold of n = 5 times. In Figure 17, the user has selected the previous Loc search, where the Loc Scientific valley has been chosen, and has chosen Dr Abdulrahman from the list of researchers. On the right-hand side of the USCAS-PWD interface, the user is sending an email to Dr Abdulrahman, as shown in Figure 17. If the user chooses the specialist option, and since there may be multiple keywords in his/her specialist field or in the interest field/Keyword search field, the USCAS-PWD program keeps checking the database to determine whether a matching word in the specialist or interest fields exists at the same department location, and displays the matches on the user interface. Figure 18 displays the results of the user’s profile-based search with the previous KW option of the interest list, where “Network Management” is chosen and Ghassan Kbar is selected from the displayed list. The user can also send an SMS to the same researcher, as shown in Figure 18.
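The dynamic update of the interest list can be illustrated with a small counter-based sketch. The class and method names are hypothetical, and reading the threshold as "promote a term once it has been selected at least n = 5 times" is an assumption about the rule stated above.

```python
from collections import Counter
from typing import List


class InterestTracker:
    """Promote a search term to the interest list after repeated selection."""

    def __init__(self, threshold: int = 5) -> None:
        self.threshold = threshold          # n = 5 in the text
        self.counts: Counter = Counter()    # selections per term
        self.interests: List[str] = []      # the user's interest list

    def record_search(self, term: str) -> bool:
        """Count one selection; return True if the term was just promoted."""
        key = term.strip().lower()
        self.counts[key] += 1
        if self.counts[key] >= self.threshold and key not in self.interests:
            self.interests.append(key)
            return True
        return False


tracker = InterestTracker()
for _ in range(5):
    promoted = tracker.record_search("Network Management")
print(tracker.interests)
```

Normalizing the term (trimming and lower-casing) is a design choice of this sketch so that repeated searches with different capitalization count toward the same interest.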

Figure 17.

USCAS-PWD User Interface using User Profile-based search, using previous keyword search and sending email.

Figure 18.

USCAS-PWD User Interface using User Profile-based search, using previous keyword (KW) specialist search and sending SMS.

4.4. Algorithms

The following subsections describe the algorithms that have been implemented to determine the movement direction of the PWD user toward a destination. It also describes the event and noting behavior algorithm, which determines the right actions to be taken according to scheduled events and the conditions of the PWD user, including date and location.

4.4.1. Algorithm for Determining the Direction of PWD Movement within the Building

To check the movement associated with the current behavior and predict whether it follows the expected direction, we need to check the following:

Assume that the PWD is moving to a target location (a meeting room, according to behavior a1) with room coordinate a1(x3) = $(T_x, T_y)$, that the original PWD user coordinate location is $(C_x, C_y)$, and that the updated PWD user coordinate after a period $\Delta t$ is $(C_x^{t}, C_y^{t})$.

There are four possible relative locations of the PWD in relation to the target room a1, as follows:

- $C_x > T_x$ and $C_y > T_y$ (Group location A, east–north)

- $C_x > T_x$ and $C_y < T_y$ (Group location B, east–south)

- $C_x < T_x$ and $C_y > T_y$ (Group location C, west–north)

- $C_x < T_x$ and $C_y < T_y$ (Group location D, west–south)

The following rules determine whether the movement is heading toward the target location, where $(C_x^{t}, C_y^{t})$ is the current coordinate location of the user, $(C_x^{t-1}, C_y^{t-1})$ is the previous coordinate location of the user, and $(C_x, C_y)$ is the original coordinate location of the user:

For Group A: if $C_x^{t}$ decreases (↓) compared to $C_x$ and $C_x^{t-1}$, and $C_y^{t}$ decreases (↓) compared to $C_y$ and $C_y^{t-1}$, then the object is moving in the right direction; otherwise, it is moving in a false direction.

For Group B: if $C_x^{t}$ decreases (↓) compared to $C_x$ and $C_x^{t-1}$, and $C_y^{t}$ increases (↑) compared to $C_y$ and $C_y^{t-1}$, then the object is moving in the right direction; otherwise, it is moving in a false direction.

For Group C: if $C_x^{t}$ increases (↑) compared to $C_x$ and $C_x^{t-1}$, and $C_y^{t}$ decreases (↓) compared to $C_y$ and $C_y^{t-1}$, then the object is moving in the right direction; otherwise, it is moving in a false direction.

For Group D: if $C_x^{t}$ increases (↑) compared to $C_x$ and $C_x^{t-1}$, and $C_y^{t}$ increases (↑) compared to $C_y$ and $C_y^{t-1}$, then the object is moving in the right direction; otherwise, it is moving in a false direction.

The movement algorithm assists in predicting the movement direction of PWD users toward a destination and alerts them when they are moving in the wrong direction.

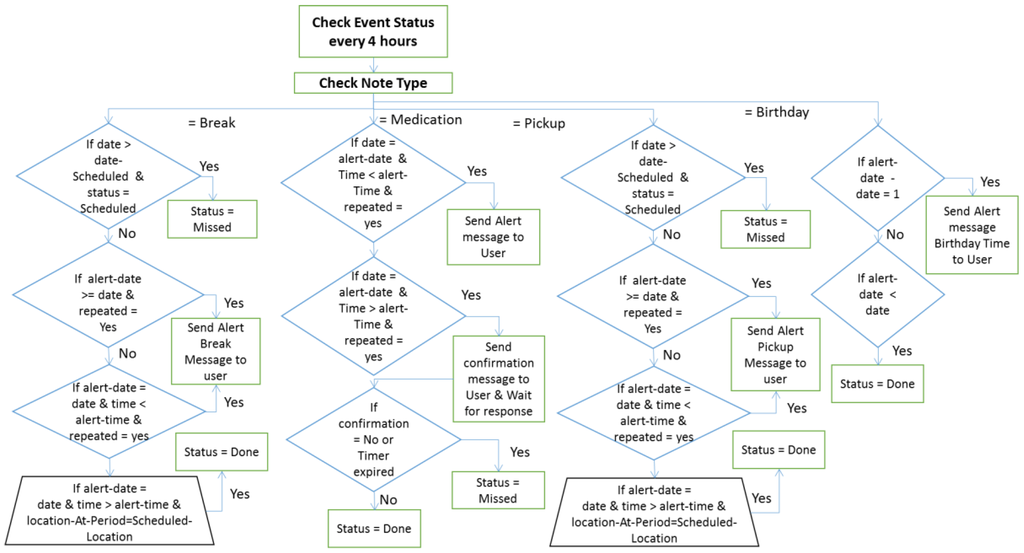
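The group classification and the per-group direction test can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the function names are hypothetical, coordinates are plain (x, y) tuples, and the case where a coordinate already equals the target's (not covered by the four groups) returns `None` rather than a verdict.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]

# Required change per axis for each group: -1 = must decrease, +1 = must increase.
REQUIRED = {"A": (-1, -1), "B": (-1, +1), "C": (+1, -1), "D": (+1, +1)}


def location_group(original: Point, target: Point) -> Optional[str]:
    """Classify the original position relative to the target into groups A-D."""
    cx, cy = original
    tx, ty = target
    if cx > tx and cy > ty:
        return "A"
    if cx > tx and cy < ty:
        return "B"
    if cx < tx and cy > ty:
        return "C"
    if cx < tx and cy < ty:
        return "D"
    return None  # a coordinate equals the target's; outside the four groups


def _axis_ok(sign: int, orig: float, prev: float, cur: float) -> bool:
    """True if `cur` moved in direction `sign` relative to both orig and prev."""
    return sign * (cur - orig) > 0 and sign * (cur - prev) > 0


def moving_toward_target(original: Point, previous: Point,
                         current: Point, target: Point) -> Optional[bool]:
    """Apply the rule of the user's group; None if no group applies."""
    group = location_group(original, target)
    if group is None:
        return None
    sx, sy = REQUIRED[group]
    return (_axis_ok(sx, original[0], previous[0], current[0])
            and _axis_ok(sy, original[1], previous[1], current[1]))
```

A `False` result corresponds to the "false direction" case, which is where an alert to the user would be raised.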

4.4.2. Event and Noting Behavior Algorithm

This section describes the noting and event algorithm, which periodically (every 4 h) monitors the events set up by PWD users, together with their location, to determine how to intervene with them and their caregivers. In addition, messages are logged in the database to be analyzed by the personal reminder interface. Figure 19 displays the event algorithm and how it tracks the user and updates the event status to “Done” or “Missed” according to the user’s location and the scheduled date. Figure 20 illustrates the algorithm for monitoring personal noting in four cases: break, medication, pickup, and birthday. Depending on the scheduled date, the alert date and time, the user’s location, and the noting status, different actions are taken for these noting events. These actions include sending an alert message to remind the user to take medication on time, or about a pickup date and time, a birthday, or a break. They also include changing the noting event status to “Done” or “Missed”, depending on the user’s location and on whether his/her response is received on time. These algorithms are executed on the server to monitor the scheduled events of all PWD users, to remind them about future events, and to inform them about event inconsistencies.

Figure 19.

Personal Reminder—Event Algorithm to run on server.

Figure 20.

Personal Reminder—Noting Algorithm to run on server.
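The status update performed by the event algorithm can be illustrated with a simplified sketch. The exact conditions are given in Figure 19 and are not reproduced here, so the rule below is an assumption: once the scheduled time has passed, an event is marked “Done” if the user's last known location matches the event location and “Missed” otherwise. The `Event` class, the `"Pending"` initial state, and the function names are hypothetical; only the 4 h period and the two final statuses come from the text.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

CHECK_INTERVAL = timedelta(hours=4)  # monitoring period stated in the text


@dataclass
class Event:
    user: str
    location: str            # where the event takes place
    scheduled: datetime      # when the event is due
    status: str = "Pending"  # hypothetical initial state


def update_event_status(event, now, user_location):
    """Assumed rule: after the scheduled time, compare locations once."""
    if event.status != "Pending" or now < event.scheduled:
        return event.status
    event.status = "Done" if user_location == event.location else "Missed"
    return event.status


def run_check(events, now, locations):
    """One periodic pass over all events; returns messages to be logged."""
    log = []
    for event in events:
        before = event.status
        after = update_event_status(event, now, locations.get(event.user))
        if after != before:
            log.append(f"{event.user}: event at {event.location} marked {after}")
    return log
```

A server-side scheduler would call `run_check` every `CHECK_INTERVAL` with the latest locations from the tracking network and store the returned messages in the database.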

5. Discussion and Conclusions

A universal interface that supports an AT solution has been designed by following the ATA and HAAT design methodologies to satisfy the needs of PWDs. The proposed solution is a University-based Smart and Context Aware Solution for PWDs (USCAS-PWD). The USCAS-PWD represents a smart solution that is universal, adaptive, mobility supportive, and flexible. It provides an effective, comprehensive solution, including search, for implementing a professional networking tool that can be used by researchers, students, and PWDs with different impairment conditions. This application interface supports PWDs on the university campus and inside a building that is equipped with an RFID–WiFi network. The USCAS-PWD program uses ASP.NET along with an SQL database for the campus network, and RFID–WiFi technology indoors to determine the user’s location. The user location-based search, together with the adaptive user profile and keyword search, makes the GIS solution smart and very effective in determining the relevant information for parties, as well as in enabling them to communicate with others effectively. The implementation presented in this paper demonstrates a successful smart, flexible search and professional networking tool that makes the working environment more productive for students, including PWDs. The Smart Help feature facilitates use of the system by PWDs through voice commands processed by a voice recognition system. Furthermore, an intervention event and behavior-based algorithm, as well as a direction movement algorithm, have been devised; they help in identifying the behavior of PWD students and in taking the right actions to inform caregivers about any issues, so that caregivers are aware of PWDs’ critical activities. This tracking of behavior assists PWDs by alerting them or their caregivers about future or missed events. Moreover, the positive feedback received from the users reflects the global acceptance and the cultural aspects of this type of technology.

The proposed universal interface, based on the USCAS solution and supporting multiple PWD users on the university campus, proved successful in meeting their needs while moving indoors, sitting in classrooms, and moving outdoors on the campus. This is achieved through smart help and communication solutions, as well as smart context-aware tools that help PWD users and students carry out their tasks effectively. However, extensive user studies that evaluate the diverse environment, together with relevant usability studies, still need to be conducted so that a better solution can be achieved. Furthermore, the handling of PWDs’ privacy needs to be improved in the future in order to accommodate all impairment conditions and cultural diversity. Future research should incorporate PWD students’ needs at the university level to address the curriculum for different departments, to integrate the student registration interface, and to allow PWD students to access their related course materials and interact with them online. Moreover, the AT smart interface should support student forums, so that feedback collected from these forums can be used to improve the interface design continuously.

In future work, the authors will evaluate the interconnectivity of the proposed system with other technologies, such as cars and bikes. They will also consider implementing the solution on different mobile platforms, such as Android and Windows.

Supplementary Materials

The following are available online at www.mdpi.com/2073-431X/5/3/18/s1, Video S1: CommInter-BehAnala-ProfileSetup.mp4; Video S2: CommonUnified.mp4; Video S3: MobileAddDataToDB.mp4; Video S4: SmartHelpDesktop.mp4.

Acknowledgments

This Project was funded by The National Plan for Science Technology and Innovation (MARRIFAH), King Abdul-Aziz City for Science and Technology, Kingdom of Saudi Arabia, Award Number (12-ELE3220–02).

Author Contributions

The first author conceived and designed the system; all authors analyzed users’ needs and determined the matching AT solution. A development team of four engineers developed the proposed solution. All authors contributed to writing the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| PWD | People with disabilities |
| RFID | Radio-frequency identification |
| GIS | Geographic Information System |
| USCAS | University-based Smart and Context Aware Solution |

Appendix A1

The following questionnaire was developed to collect more users’ requirements. It was sent to a number of users from all four groups.

Appendix A2

The results are shown in the table below. The users were different from those who participated in the other elicitation methods. A total of 100 responses were collected and analyzed (25 for SI, 25 for HI, 25 for partial VI, and 25 for partial MI).

| Statement | Strongly Agree | Agree | Neutral | Disagree | Strongly Disagree |
|---|---|---|---|---|---|
| Using Assistive Technology at work is important | 97% | 3% | 0% | 0% | 0% |
| I prefer to use AT to achieve different tasks at work | 97% | 3% | 0% | 0% | 0% |
| The _______ is my best means to insert input to a computing device: | | | | | |
| Keyboard | 48% | 2% | 3% | 2% | 45% |
| Gesture control | 30% | 23% | 19% | 5% | 23% |
| Sign language | 2% | 7% | 2% | 40% | 49% |
| Speech to text | 45% | 10% | 10% | 30% | 5% |
| Mouse | 49% | 4% | 1% | 5% | 41% |
| Braille keyboard | 0% | 3% | 1% | 26% | 70% |
| The _______ is my best means to receive output from a computing device: | | | | | |
| Vibration | 0% | 5% | 30% | 45% | 20% |
| Screen | 70% | 8% | 0% | 12% | 10% |
| Text to speech | 22% | 7% | 50% | 15% | 6% |
| Speech and volume control | 21% | 8% | 50% | 12% | 9% |
| Magnification | 80% | 15% | 5% | 0% | 0% |
| Flashing | 15% | 13% | 40% | 27% | 5% |
| At work, getting info from the system about other employees is helpful | 80% | 15% | 5% | 0% | 0% |
| At work, getting info from the system about the building is helpful | 95% | 5% | 0% | 0% | 0% |
| At work, getting info from the system about work related issues is helpful | 98% | 2% | 0% | 0% | 0% |
| At work, getting help in editing a doc from the system is helpful | 96% | 4% | 0% | 0% | 0% |
| At work, a system that provides easier communications with others is helpful | 85% | 10% | 5% | 0% | 0% |
| At work, a system that provides magnification is useful | 30% | 20% | 45% | 5% | 0% |
| At work, a system that provides font and background color control is useful | 27% | 17% | 35% | 21% | 0% |
| At work, a system that provides health monitoring and intervention is useful | 99% | 1% | 0% | 0% | 0% |
| At work, a system that provides zooming is useful | 32% | 19% | 47% | 2% | 0% |
| At work, a system that provides movements monitoring and navigation is useful | 45% | 17% | 5% | 25% | 8% |
| At work, a system that provides behavior analysis is useful | 75% | 13% | 8% | 2% | 2% |
| At work, a system that provides behavior prediction is useful | 72% | 19% | 5% | 1% | 3% |

Appendix A3

Table A1.

Different impairment classifications.

| Serial | See | Partially See | Hearing | Speak | Move Hand | Group |
|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 | 0 | NA (at least partially see) |
| 2 | 0 | 0 | 0 | 0 | 1 | NA (at least partially see) |
| 3 | 0 | 0 | 0 | 1 | 0 | NA (at least partially see) |
| 4 | 0 | 0 | 0 | 1 | 1 | NA (at least partially see) |
| 5 | 0 | 0 | 1 | 0 | 0 | NA (at least partially see) |
| 6 | 0 | 0 | 1 | 0 | 1 | NA (at least partially see) |
| 7 | 0 | 0 | 1 | 1 | 0 | NA (at least partially see) |
| 8 | 0 | 0 | 1 | 1 | 1 | NA (at least partially see) |
| 9 | 0 | 1 | 0 | 0 | 0 | NA (must hear if partially see) |
| 10 | 0 | 1 | 0 | 0 | 1 | NA (must hear if partially see) |
| 11 | 0 | 1 | 0 | 1 | 0 | NA (must hear if partially see) |
| 12 | 0 | 1 | 0 | 1 | 1 | NA (must hear if partially see) |
| 13 | 0 | 1 | 1 | 0 | 0 | NA (at least speak or move hand) |
| 14 | 0 | 1 | 1 | 0 | 1 | 8 |
| 15 | 0 | 1 | 1 | 1 | 0 | 7 |
| 16 | 0 | 1 | 1 | 1 | 1 | NA (overlap with group 7 and 8) |
| 17 | 1 | 0 | 0 | 0 | 0 | NA (at least speak or move hand) |
| 18 | 1 | 0 | 0 | 0 | 1 | 2 |
| 19 | 1 | 0 | 0 | 1 | 0 | 1 |
| 20 | 1 | 0 | 0 | 1 | 1 | 5 |
| 21 | 1 | 0 | 1 | 0 | 0 | NA (at least speak or move hand) |
| 22 | 1 | 0 | 1 | 0 | 1 | 4 |
| 23 | 1 | 0 | 1 | 1 | 0 | 3 |
| 24 | 1 | 0 | 1 | 1 | 1 | 6 |
| 25 | 1 | 1 | 0 | 0 | 0 | NA (at least speak or move hand) |
| 26 | 1 | 1 | 0 | 0 | 1 | NA (same as 2) |
| 27 | 1 | 1 | 0 | 1 | 0 | NA (same as 1) |
| 28 | 1 | 1 | 0 | 1 | 1 | NA (same as 5) |
| 29 | 1 | 1 | 1 | 0 | 0 | NA (at least speak or move hand) |
| 30 | 1 | 1 | 1 | 0 | 1 | NA (same as 4) |
| 31 | 1 | 1 | 1 | 1 | 0 | NA (same as 3) |
| 32 | 1 | 1 | 1 | 1 | 1 | NA (same as 6) |
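The exclusion rules implied by the Group column of Table A1 can be written out as a short classifier. This is a sketch inferred from the table rows rather than code from the project: the function name `classify`, the rule ordering, and the lookup dictionaries are assumptions, chosen so that enumerating all 32 capability combinations in serial order reproduces the Group column.

```python
from itertools import product

# Group number by (hearing, speak, move_hand) for users with full sight.
GROUPS_FULL_SIGHT = {
    (0, 0, 1): 2, (0, 1, 0): 1, (0, 1, 1): 5,
    (1, 0, 1): 4, (1, 1, 0): 3, (1, 1, 1): 6,
}
# Group number for users with partial sight only (hearing is then required).
GROUPS_PARTIAL_SIGHT = {(1, 0, 1): 8, (1, 1, 0): 7}


def classify(see, partial, hear, speak, move):
    """Return the group number as a string, or the NA reason used in Table A1."""
    if not see and not partial:
        return "NA (at least partially see)"
    if not see and not hear:
        return "NA (must hear if partially see)"
    if not speak and not move:
        return "NA (at least speak or move hand)"
    key = (hear, speak, move)
    if see and partial:
        # Full sight subsumes partial sight, so the row duplicates a full-sight group.
        return f"NA (same as {GROUPS_FULL_SIGHT[key]})"
    if see:
        return str(GROUPS_FULL_SIGHT[key])
    if speak and move:
        return "NA (overlap with group 7 and 8)"
    return str(GROUPS_PARTIAL_SIGHT[key])


# Serial k in Table A1 corresponds to the k-th combination in binary counting order.
TABLE = [classify(*bits) for bits in product((0, 1), repeat=5)]
```

Only eight of the 32 combinations yield a distinct group, which matches the eight numbered groups in the table.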

Appendix B

Table B1.

Details of voice recognition software applications.

| Software | TTS/STT (C3.1) | Web Based (C3.2) | Downloads Number (Last Month) (C3.3) | Cost (C4.3) | Operating System(s) (C4.4) | Available Language(s) (C4.5) | Available Voice(s) (C4.6) | Programming Language (C4.7) |
|---|---|---|---|---|---|---|---|---|
| FreeTTS [78] | TTS | No | 1965 | Free | Cross-platform | English | Several | Java |
| Sphinx-4 [79] | STT | No | 16,204 | Free | Cross-platform | English | Several | Java |
| Voce [75] | Both | No | 508 | Free | Cross-platform | English | Several | Java/C++ |
| J.A.R.V.I.S [80] Speech API | Both | Yes | 5792 | Free | Cross-platform | English | Several | Java |
| iSpeech’s [81] | Both | Yes | 900 | Commercial | Windows, Android | English | 20 | Java |
| Neospeech [82] | TTS | Yes | 6991 | Commercial | Windows | English | 13 | C/C++/Java |
| Elan Speech Cube [83] | TTS | No | NA | Free | Cross-platform | English | Several | Java |
| AT&T Natural Voices [84] | TTS | No | NA | Commercial | Windows | English | 20 | C++ |
| CereProc [85] | TTS | No | 144 | Commercial | Windows, Android, iOS | English | 21 | C/Python |
| eSpeak [86] | TTS | No | 9339 | Free | Windows | English | Several | C++ |
| Festival Speech Synthesis System [87] | TTS | No | 10 | Free | Windows | English | Several | C++ |

Table B2.

Comparison of speech-recognition software applications.

| Criterion | FreeTTS | Sphinx-4 | Voce | J.A.R.V.I.S. Speech API | iSpeech’s | Neospeech | Elan Speech Cube | AT&T Natural Voices | CereProc | eSpeak | Festival Speech Synthesis System |
|---|---|---|---|---|---|---|---|---|---|---|---|
| C1.1 | 8 | 10 | 10 | 8 | 8 | 6 | 6 | 6 | 8 | 10 | 8 |
| C1.2 | 5 | 5 | 10 | 10 | 10 | 5 | 5 | 5 | 5 | 5 | 5 |
| C1.3 | 5 | 5 | 5 | 10 | 10 | 10 | 5 | 5 | 5 | 5 | 5 |
| C1.4 | 8 | 10 | 5 | 10 | 5 | 10 | 5 | 5 | 5 | 10 | 5 |
| Tot_1 | 26 | 30 | 30 | 38 | 33 | 31 | 21 | 21 | 23 | 30 | 23 |
| C2.1 | 8 | 10 | 10 | 8 | 8 | 6 | 6 | 6 | 8 | 10 | 8 |
| C2.2 | 8 | 10 | 10 | 8 | 8 | 6 | 6 | 6 | 8 | 10 | 8 |
| C2.3 | 10 | 10 | 10 | 10 | 5 | 5 | 5 | 5 | 5 | 8 | 5 |
| C2.4 | 20 | 20 | 20 | 20 | 10 | 5 | 20 | 5 | 15 | 5 | 5 |
| C2.5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| C2.6 | 5 | 5 | 5 | 5 | 10 | 8 | 5 | 10 | 10 | 8 | 8 |
| C2.7 | 10 | 10 | 15 | 10 | 10 | 15 | 10 | 5 | 5 | 5 | 5 |
| Tot_2 | 66 | 70 | 75 | 65 | 56 | 50 | 57 | 42 | 56 | 51 | 44 |
| Total | 92 | 100 | 105 | 103 | 89 | 81 | 78 | 63 | 79 | 81 | 67 |
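The subtotals and totals in Table B2 are column sums of the criterion rows, which can be recomputed with a short script. The sketch below is only an arithmetic check; the variable names are hypothetical and the scores are transcribed from the table. Recomputing from the rows reproduces every reported total except for J.A.R.V.I.S. Speech API, whose C2 rows sum to 66 where the table reports 65 (total 104 instead of 103); the highest-scoring package is Voce in either case.

```python
SOFTWARE = [
    "FreeTTS", "Sphinx-4", "Voce", "J.A.R.V.I.S. Speech API", "iSpeech",
    "Neospeech", "Elan Speech Cube", "AT&T Natural Voices", "CereProc",
    "eSpeak", "Festival Speech Synthesis System",
]

# Criterion scores transcribed from Table B2, one list entry per package.
C1 = {
    "C1.1": [8, 10, 10, 8, 8, 6, 6, 6, 8, 10, 8],
    "C1.2": [5, 5, 10, 10, 10, 5, 5, 5, 5, 5, 5],
    "C1.3": [5, 5, 5, 10, 10, 10, 5, 5, 5, 5, 5],
    "C1.4": [8, 10, 5, 10, 5, 10, 5, 5, 5, 10, 5],
}
C2 = {
    "C2.1": [8, 10, 10, 8, 8, 6, 6, 6, 8, 10, 8],
    "C2.2": [8, 10, 10, 8, 8, 6, 6, 6, 8, 10, 8],
    "C2.3": [10, 10, 10, 10, 5, 5, 5, 5, 5, 8, 5],
    "C2.4": [20, 20, 20, 20, 10, 5, 20, 5, 15, 5, 5],
    "C2.5": [5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5],
    "C2.6": [5, 5, 5, 5, 10, 8, 5, 10, 10, 8, 8],
    "C2.7": [10, 10, 15, 10, 10, 15, 10, 5, 5, 5, 5],
}
REPORTED_TOTAL = [92, 100, 105, 103, 89, 81, 78, 63, 79, 81, 67]


def column_sums(rows):
    """Sum each package's scores across the given criterion rows."""
    return [sum(col) for col in zip(*rows.values())]


tot_1 = column_sums(C1)
tot_2 = column_sums(C2)
total = [a + b for a, b in zip(tot_1, tot_2)]

# Packages whose recomputed total differs from the reported one.
mismatches = [
    (name, reported, computed)
    for name, reported, computed in zip(SOFTWARE, REPORTED_TOTAL, total)
    if reported != computed
]
best = SOFTWARE[total.index(max(total))]
```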

References

- World Health Organization. World Report on Disability “The Way Forward: Recommendations, Chp 9”. 2011. Available online: http://www.who.int/disabilities/world_report/2011/chapter9.pdf (accessed on 26 July 2016).

- Antona, M. The Universal Access Handbook; CRC Press: London, UK; New York, NY, USA, 2009.

- Chen, C.-M. Intelligent location-based mobile news service system with automatic news summarization. Expert Syst. Appl. 2010, 37, 6651–6662.

- Longley, P.A.; Goodchild, M.F.; Maguire, D.J.; Rhind, D.W. Geographic Information Systems and Science; Wiley: New York, NY, USA, 2001.