1. Introduction

Despite increases in capacity, broadband wireless systems still suffer from bandwidth limitations [1], leading to congestion at a base-station’s (BS’s) output buffer to its mobile stations (MSs). To reduce the risk of buffer overflow, this paper proposes a customized packet-scheduling regime for data-partitioned video streams. However, taking measures to reduce the impact of congestion at a BS buffer is only one part of the story of how to achieve resilient video streaming over wireless links, which are prone to error bursts [2], leading to a lack of synchronization between video encoders and decoders. In fact, the proposed packet-scheduling regime takes place within the context of a video streaming system that makes use of two measures to counteract the impact of errors: (1) source-coded error resilience [3], i.e., within the video codec, in the form of data partitioning [4]; and (2) channel-coded adaptive Forward Error Correction (FEC) combined with error-control signaling [5]. However, these measures are powerless against packet drops through buffer overflow. Unfortunately, due to the predictive nature of video coding [6], most packet losses also have an effect that extends in time until the decoder is reset (intra refreshed).

One source-coded error resilience scheme, data partitioning, in its current form, is vulnerable to congestion due to the number of small packets that are produced. Data partitioning, which is a form of layered error resilience, can provide graceful degradation of video quality and, as such, has found an application in mobile video streaming [4]. In an H.264/Advanced Video Coding (AVC) codec [7], when data partitioning is enabled, every slice is divided into three separate partitions: partition-A has the most important data, including motion vectors (MVs); partition-B contains intra coefficients; and partition-C contains inter coefficients, the least important data in terms of reconstructing a video frame at the decoder. These data are packed into three types of Network Abstraction Layer units (NALUs) output by the codec. The importance of each NALU-bearing packet is identified in the NALU header. Though it is possible to aggregate (or segment) NALUs [8] before encapsulation in Internet Protocol (IP)/User Datagram Protocol (UDP)/Real-time Transport Protocol (RTP) packets, doing so when data partitioning is in use would neglect the advantages of retaining smaller partition-A and -B bearing packets, because smaller packets have a lower probability of channel error. Therefore, in this paper each NALU is assigned to its own packet, prior to the addition of network protocol headers.

To reduce the risk of buffer overflow for data-partitioned video streams, this paper proposes a packet-scheduling method, which can work in addition to other channel error protection methods, one of which is the adaptive rateless channel coding that is described in Section 2. Packet-scheduling schemes, which are referred to in Section 3, act irrespective of physical-layer (PHY-layer) data scheduling and may be independent of the data-link Medium Access Control (MAC) sub-layer (though some other schemes do indeed intervene at the MAC sub-layer). The proposed packet-scheduling method works by smoothing the packet-scheduling times across one or more video frame intervals, according to allowable latency. Packets are allocated a scheduling time for output to a BS buffer in proportion to their size.
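The size-proportional idea can be illustrated with a minimal Python sketch. It rests on one reading of the description above, namely that each packet is released once the fraction of the interval equal to the cumulative share of the bytes preceding it has elapsed; the precise rule is the one given in the methodology section. The function name `schedule_times` and its parameters are hypothetical.

```python
from typing import List


def schedule_times(packet_sizes: List[int], interval_s: float,
                   start_s: float = 0.0) -> List[float]:
    """Spread packet release times over an interval in proportion to size.

    Assumption (not taken from the paper): packet i is released when the
    fraction of the interval equal to the share of bytes that precede it
    has elapsed, so larger packets are followed by longer gaps.
    """
    total = sum(packet_sizes)
    if total <= 0:
        raise ValueError("packet sizes must sum to a positive value")
    times = []
    bytes_before = 0
    for size in packet_sizes:
        times.append(start_s + interval_s * bytes_before / total)
        bytes_before += size
    return times


if __name__ == "__main__":
    # Three partition-bearing packets of one frame, smoothed over 1/30 s.
    for t in schedule_times([100, 300, 600], 1 / 30):
        print(f"{t * 1000:.2f} ms")
```

Under this assumption a small partition-A packet is followed quickly by the next packet, while a large packet is given a correspondingly longer share of the interval before the next release.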

As such, the scheduling method is relatively simple to implement, which is one of its attractions owing to the need to reduce latency for interactive services such as mobile video-conferencing and video telephony. In the paper, the potential value of the application-layer (AL) scheduling approach is demonstrated in simulations that take account of PHY-layer packetization and scheduling. The paper also comments on video-content-dependent packetization issues as an aid to others planning AL packet scheduling or video-smoothing algorithms [9]. Alongside the scheduling regime, the paper also presents the gains from employing adaptive FEC as opposed to statically determining the redundancy overhead. In fact, adaptive rateless FEC is a significant feature of the proposed scheme. For codec-dependent aspects of the scheme, the reader is referred to [10] by the authors.

In general, the contribution of this paper is that a resilient video streaming scheme needs to consider both error resilience and congestion resilience, as error resilience alone is unable to protect against packet drops before the wireless channel is even reached.

Section 2 describes the design decisions made by the authors in arriving at the video streaming system, such as the decision to employ rateless channel coding, which at the application layer of the protocol stack has largely superseded [11] fixed rate codes, especially those codes with high decoding complexity. Section 3 of the paper critically reviews recent work on packet scheduling, especially for use in multimedia applications. Section 4 details the methodology of both the error resilience and the congestion resilience aspects of the video streaming scheme. This is followed in Section 5 by an evaluation of selected aspects of the proposed scheme, as simulated for a Worldwide Interoperability for Microwave Access (WiMAX) wireless link, especially the performance of adaptive, rateless coding and the video-content response for packet scheduling. Finally, Section 6 draws some conclusions about the proposed video-streaming scheme.

2. Achieving Error Resilience: Design Decisions

Robust unicast communication can be achieved by two means of error control [12,13]: (1) Automatic Repeat ReQuest (ARQ) and (2) FEC. ARQ allows a higher video data throughput than FEC provided there is a feedback channel and provided the channel is not inherently error prone. ARQs are also much simpler to process than FEC. However, as in the example of this paper, ARQ and FEC are not mutually exclusive: they can be employed together.

In best-effort wired networks, in order to add reliability to an unreliable protocol such as UDP, ARQ at the application layer can be achieved by Acknowledgments (ACKs), assuming the ACK latency does not impact upon the video display rate [14]. For example, in [15] the transmitter keeps a buffer of packets containing video frames, some of which have been transmitted but not acknowledged and others of which have yet to be transmitted. The transmitter must ensure that the buffer occupancy level does not exceed the potential transmission delay. Cyclic Redundancy Checks (CRCs) are required (and assumed in this paper) to detect errors, if the data has not already been so checked at the transport layer. For example, the UDP-Lite protocol [16] allows, when desired, data with errors to be passed up the protocol stack. UDP-Lite is a possible implementation route for the ARQ/FEC system in this paper, allowing data to be corrected at the receiver rather than lost to the wireless receiver.

In a broadband wireless link designed for multimedia traffic such as the WiMAX link of this paper, a communication frame [17] is divided into two sub-frames: the first from the base station to the mobile stations and the second from the mobile stations to the base station. Therefore, a natural way of delivering an AL ACK is by placing it in the return sub-frame, as we do in this paper. Notice that WiMAX also permits data-link layer ACKs and, alternatively, even a form of hybrid ACKs to be turned on, on a per-link basis. However, switching ACKs on at the MAC sub-layer may result in arbitrary delays that are beyond the control of a multimedia application, which is why they were not enabled in this paper’s evaluations. Instead AL ACKs were sparingly utilized, namely just once per packet. Soft ACKs can also counter the problem of arbitrary delays resulting from data-link ACKs by limiting the delay to a maximum [18], but there is a risk of poor-quality video. To reduce that problem, the source coding rate [19] can be varied according to the potential delay. However, in designing our system, it was simpler to implement AL ACKs.

To avoid the problem of delay and possible resource consumption by ACKs, FEC-based approaches transmit source-coded data with additional redundant parity data. Traditional fixed-rate channel codes cannot dynamically adapt to changing channel conditions easily, while rateless codes, with changing ratios of redundant to information data, can now adapt in a graduated fashion. However, to enable adaptation, Hybrid-ARQ (H-ARQ), e.g., as in [20], becomes necessary. In Type II H-ARQ, the transmitter only retransmits the necessary redundant data to increase the probability that the received data can now be reconstructed. In this paper, Type II H-ARQ is used. Moreover, as previously mentioned, to limit the impact of ARQs on latency, only one request for additional FEC is permitted.

Adaptive FEC with H-ARQ is still possible with fixed rate codes, but some other means of rate adaptation is required such as code puncturing [21] (the removal of parity bits) or code extension (the addition of parity bits resulting in a lower code rate). Unfortunately, some codes, for example Reed-Solomon (RS), are known to consume battery power [22], caused ultimately by their asymptotic (decoding) complexity, which is O(k^3) for the Gaussian elimination algorithm and O(k^2) for the Berlekamp-Massey algorithm, where k is the number of information symbols. Therefore, due to their computational complexity, fixed-rate codes were avoided by us. Instead, Raptor codes [23], the variety of rateless code employed herein, have linear complexity both for encoding and decoding. They permit decoding if any k encoded symbols successfully arrive at the decoder. Usually, a small percentage (approximately 5%–10%) of additional encoded symbols are transmitted for successful recovery, but in the case of the requirement for additional encoded symbols, these additional symbols can be generated by the rateless encoder, in what has been called the fountain approach [24].

Raptor codes are one of a number of concatenated codes, e.g., the Turbo-Fountain code [25], which historically were developed to reinforce Luby Transform (LT) codes [26] when it was pointed out that LT codes had high error floors [27]. High error floors imply that the risk of decoder failure does not fall away as the channel conditions improve; instead, the risk remains at the same level as for lower Signal-to-Noise Ratios (SNRs). In order to achieve this, linear-time decoding iterative belief-propagation algorithms are necessary, which can be applied both to Raptor’s outer code, a variant of Low-Density Parity Check (LDPC), and to the LT inner code. Furthermore, a systematic Raptor code can be achieved by initially applying the inverse of the inner code to the first k symbols prior to the outer coding step, which operates on the first k information symbols and an additional set of redundant symbols. Systematic channel codes separate the redundant coding data from the information data, allowing the information data to be passed directly to the decoder if no errors are detected (usually by means of CRCs). However, finding the inverse via Gaussian elimination increases the time complexity. Notice that in practice [28], Raptor codes, despite their low theoretical time complexity, are only computationally effective if an appropriate outer code is chosen, originally LDPC and latterly two concatenated LDPC-like codes.

In a traditional packet erasure rateless coding scheme, if PHY-layer correction fails due to checksum detection [29], then a packet becomes an erasure to be corrected by the rateless code at the application layer. However, in our paper, upon PHY-layer correction failure, packets are not marked as erased but their data are passed to the application layer for Raptor code correction. This is possible because, in our paper, the information symbol is no longer an erased packet but a data block within a packet (intra-packet). (Notice that in the Multimedia Broadcast Multicast Service (MBMS) implementation of rateless codes [30], blocks are in general inter-packet, not intra-packet. However, this arrangement has the potential to increase the organizational overhead and the latency of the decoding process.) For a real-time multimedia application, intra-packet symbols have the additional advantage that there is no longer a requirement to wait for k packets to successfully arrive; k data blocks within a packet can arrive. In a procedure introduced in [31], each data block is assigned a checksum. If the checksum calculation fails, that block is then marked as an erasure. As an alternative, also in [31], each block can be given a confidence value based on the log-likelihood ratio (LLR) of the bits within it. Thus, the reduced risk of decoder failure is traded off both against lower latency and also the reduced efficiency of the code. The latter is due to the risk of declaring as erased blocks that are actually valid. In our intra-packet scheme, the data block is reduced to the size of a single byte, which has the advantage that the risk of decoding failure (see next paragraph) from shortage of data symbols within a packet is reduced. However, this raises the issue of CRCs, as the overhead would be too much if each byte were to be protected by a CRC. Therefore, a hybrid scheme is more suitable, in which blocks of bytes are assumed to be protected by a single CRC and marked as erasures if the CRC fails.
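The hybrid arrangement, in which a group of bytes shares one checksum and a failed check turns the whole group into erasures, can be sketched as follows. This is only an illustration: the block size of 16 bytes, the use of CRC-32 from Python's `zlib`, and the function names are assumptions made here, not details taken from the paper.

```python
import zlib
from typing import List, Optional, Tuple

BLOCK_SIZE = 16  # hypothetical number of bytes sharing one CRC


def protect(payload: bytes, block_size: int = BLOCK_SIZE) -> List[Tuple[bytes, int]]:
    """Split a payload into blocks and attach a CRC-32 to each block."""
    blocks = []
    for start in range(0, len(payload), block_size):
        block = payload[start:start + block_size]
        blocks.append((block, zlib.crc32(block)))
    return blocks


def mark_erasures(received: List[Tuple[bytes, int]]) -> List[Optional[bytes]]:
    """Return each block if its CRC matches, or None to mark it as erased.

    Every byte (symbol) in a None block would be treated as an erasure by
    the rateless decoder; bytes in surviving blocks are used as received.
    """
    return [block if zlib.crc32(block) == crc else None
            for block, crc in received]


if __name__ == "__main__":
    sent = protect(bytes(range(64)))
    # Simulate a channel error that flips one bit in the second block.
    damaged = bytearray(sent[1][0])
    damaged[0] ^= 0x01
    sent[1] = (bytes(damaged), sent[1][1])
    survivors = mark_erasures(sent)
    print([b is not None for b in survivors])
```

The block size sets the trade-off described above: larger blocks lower the checksum overhead but cause more valid bytes to be discarded when a single error occurs.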

For the inner LT code of a Raptor code, even in an error-free channel, there is a small probability that the decoding will fail. However, that probability converges to zero in polynomial time in the number of input symbols [32] and a similar analysis applies to an outer LDPC [33]. In this paper, the probability of decoder failure is modeled statistically by the following equation from [34]:

$$P_f(m,k) = \begin{cases} 1 & \text{if } m < k \\ 0.85 \times 0.567^{\,m-k} & \text{if } m \geq k \end{cases} \qquad (1)$$

where $P_f(m,k)$ is the decode failure probability of the code with k source symbols if m symbols have been successfully received (and 1 − $P_f$ is naturally the success probability). Notice that for k > 200 [34] the model of Equation (1) almost ideally models the performance of the code. This implies that, if block symbols are used, approximately 200 blocks should be received before reasonable behavior takes place. Therefore, we require packets of at least 200 bytes in our scheme.
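As an illustration of how such a statistical model might be evaluated in a simulation, the following Python sketch assumes that the model takes the form commonly reported for Raptor codes: certain failure when fewer than k symbols arrive, and a failure probability of 0.85 × 0.567^(m−k) otherwise. The constants and the function name are assumptions made for this sketch.

```python
def decode_failure_probability(m: int, k: int) -> float:
    """Modeled probability that decoding fails.

    m: number of symbols successfully received
    k: number of source symbols
    Assumed model: failure is certain if m < k; otherwise the probability
    decays exponentially with the number of surplus symbols (m - k).
    """
    if m < k:
        return 1.0
    return 0.85 * 0.567 ** (m - k)


if __name__ == "__main__":
    k = 200
    for surplus in (0, 1, 2, 4, 8):
        p = decode_failure_probability(k + surplus, k)
        print(f"surplus symbols = {surplus}: P_f = {p:.4f}")
```

Under these assumed constants, each surplus symbol roughly halves the modeled failure probability, which is why only a handful of extra symbols is needed beyond k.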

In summary, the resulting video communication system used by us over a WiMAX link has protection at several layers of the protocol stack. Data randomization is applied at the PHY layer to avoid runs of 1s or 0s. Subsequently, one of WiMAX’s modulation and coding schemes is selected. Mobile WiMAX offers modulation by one of Binary Phase-Shift Keying (BPSK), Quadrature Phase-Shift Keying (QPSK), 16-Quadrature Amplitude Modulation (QAM), or 64-QAM in descending order of robustness. The mandatory convolutional coding rate is selected from 1/2, 2/3, 3/4, and 5/6, in descending order of protection (a rate of 1/2 carries the most redundancy). There are other WiMAX PHY-layer protection options such as Turbo coding or PHY-layer H-ARQ which were not configured in the simulation experiments of Section 5. Thus, bit-level FEC protection is first applied at the PHY layer and additional FEC is applied in our scheme at the application layer by means of a rateless channel coder. However, unlike conventional block-based AL channel coding, a variant of packet-level channel coding, we used intra-packet byte-level rateless channel coding. By selecting this form of application-layer channel coding, we were able to use adaptive rateless coding enabled by means of a simple, low-latency H-ARQ mechanism.

4. Methodology

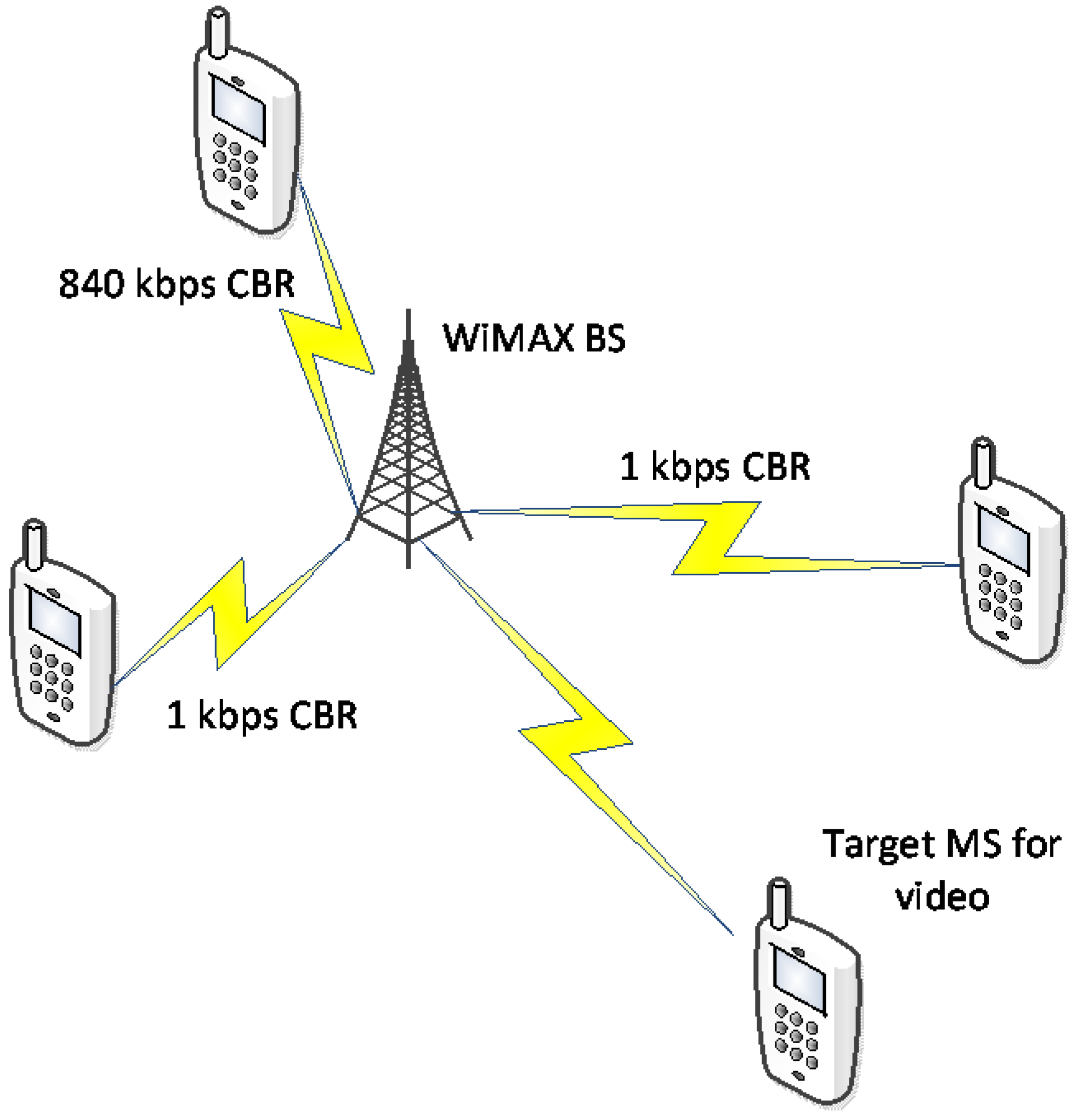

The error and congestion resilient video streaming scheme is demonstrated for IEEE 802.16, which is the standardized version of WiMAX wireless broadband technology [

50]. WiMAX continues to be rolled out in parts of the world that do not benefit from existing wired infrastructures or cellular networks. WiMAX is also cost effective in rural and suburban areas in some developed countries. It is designed to provide effective transmission at a cell’s edge by the allocation to a mobile user of sub-channels with separated frequencies to reduce co-channel interference. The transition to the higher data rates of IEEE 802.16 m [

51] indicates the technological route by which WiMAX will respond to the technological advances of its competitors, especially LTE. However, we modeled version IEEE 802.16e-2005 (mobile WiMAX), of which IEEE 802.16-2009 is an improved version [

52], as this is backwards compatible with fixed WiMAX (IEEE Project P802.16d), which still remains the most widely deployed version of WiMAX. Time Division Duplex (TDD) (

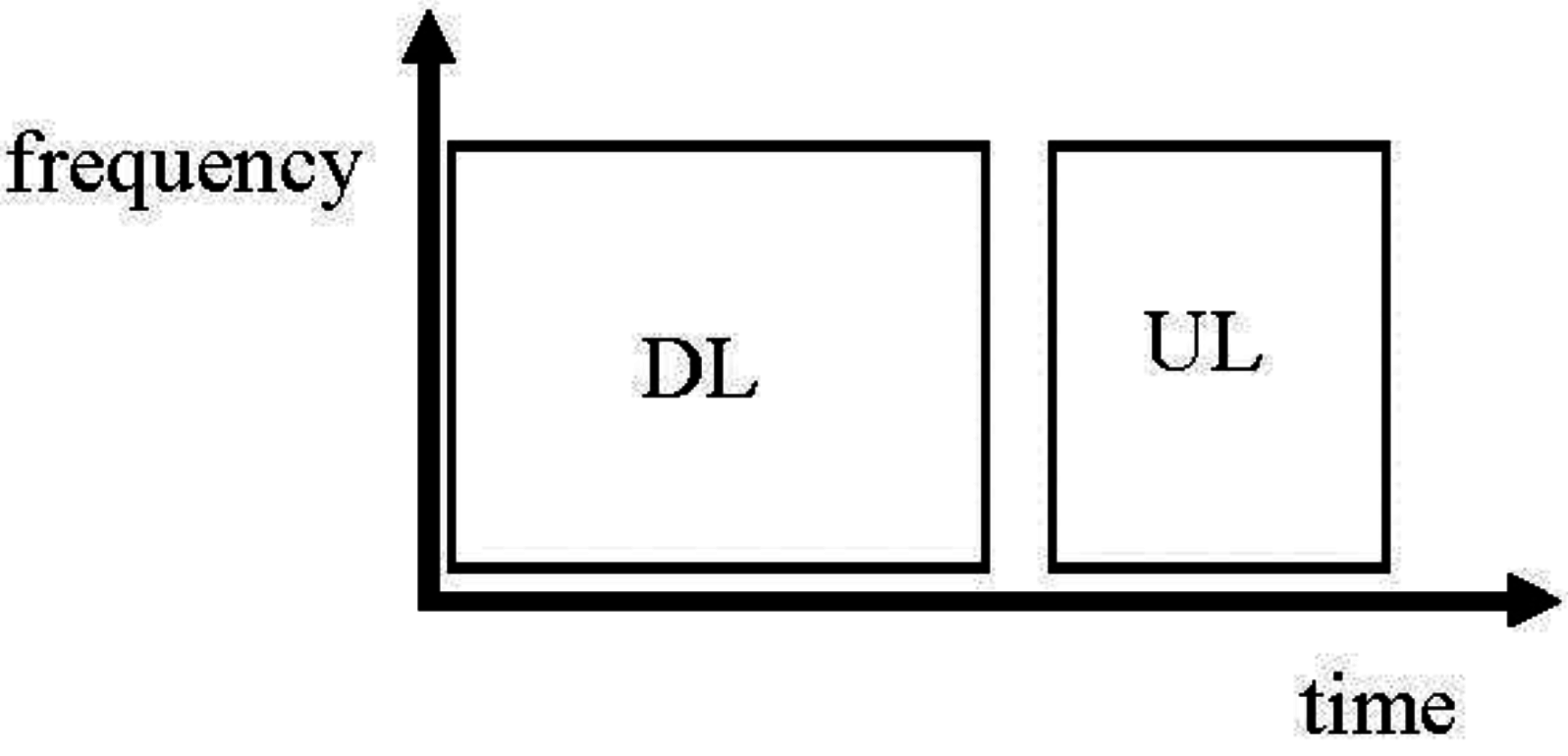

Figure 1) and effective scheduling of time slots between MSs through TDMA (not to be confused with AL packet scheduling as described in this paper) increases spectral efficiency.

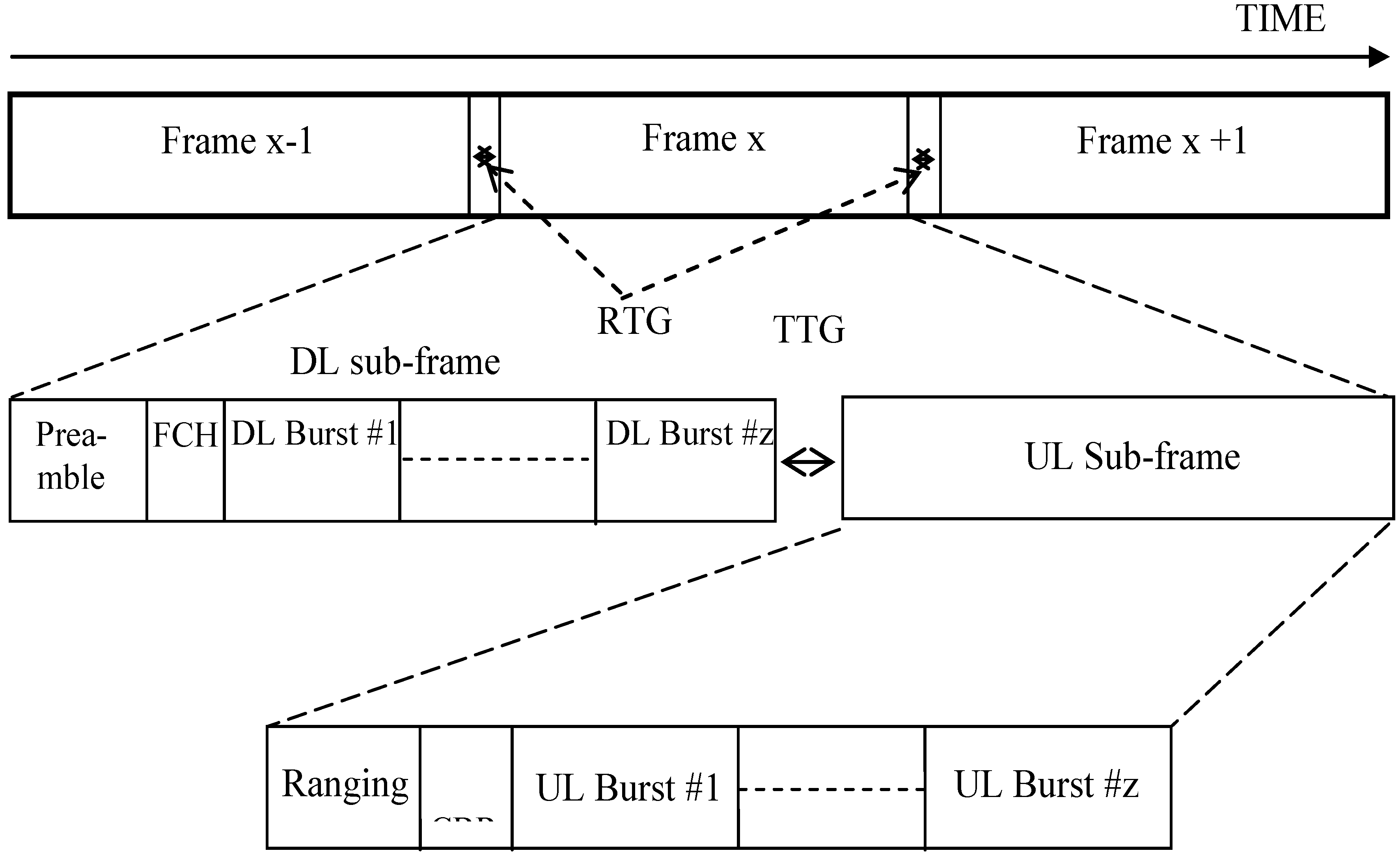

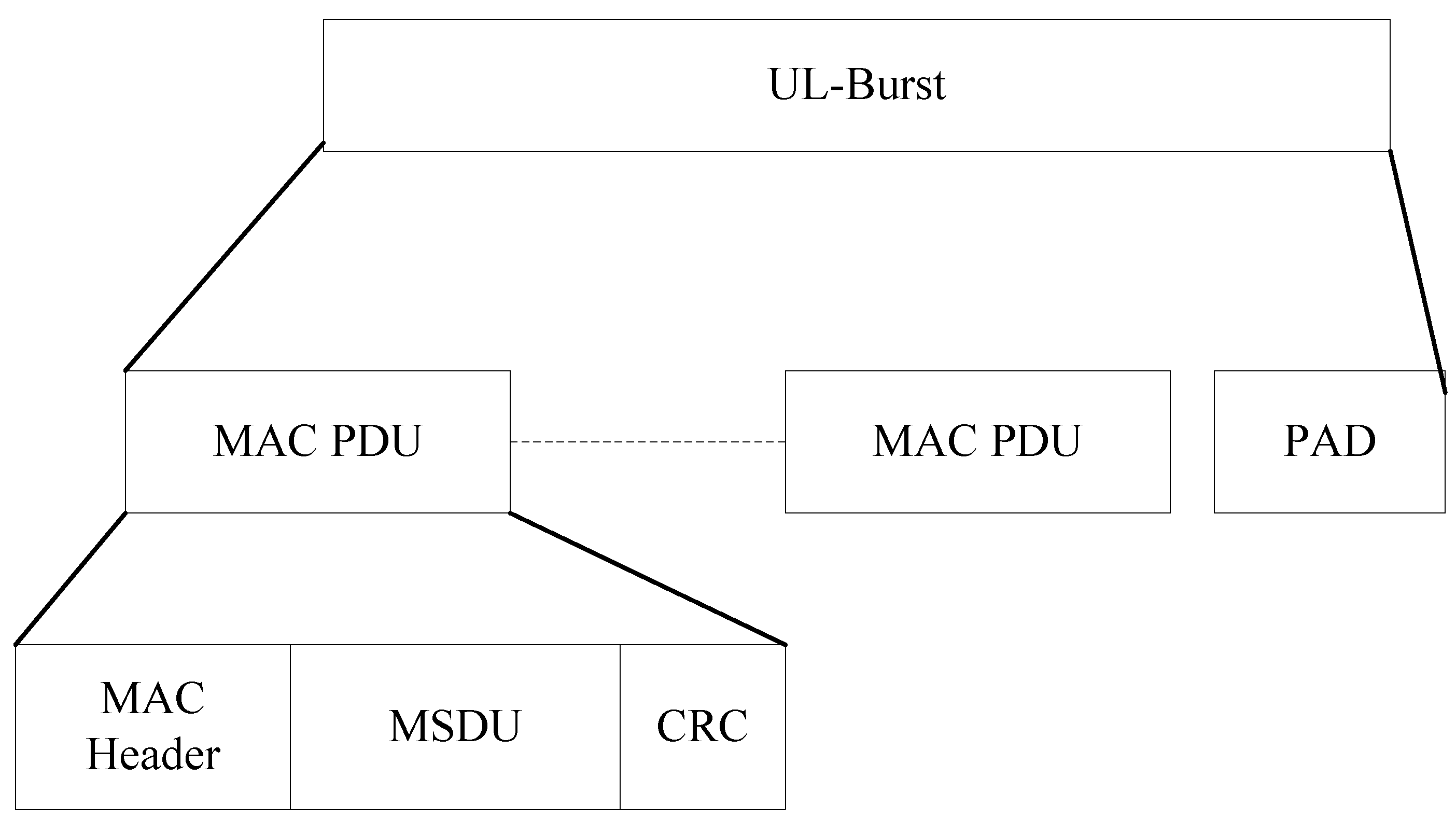

Figure 2 provides a closer look at the frame and sub-frame structure within which are contained per-MS data bursts. (For simplicity, the MS to BS sub-frame structure is illustrated.) Within each data burst are WiMAX packets, which at the MAC sub-layer are called Protocol Data Units (PDUs) and consist of a MAC header, MAC Service Data Unit (MSDU), and a CRC, as shown in Figure 3. An MSDU corresponds to a packet passed to the MAC layer from higher layers in the protocol stack, most immediately from the transport layer. It consists of an IP packet with typical IP/UDP/RTP headers attached, though, in practice, packet header compression is normally employed over wireless links in order to reduce the header overhead. The payload of this network packet will contain an NALU and additional rateless channel coding data added at the application layer, as described below.

Figure 1.

Worldwide Interoperability for Microwave Access (WiMAX) frame divided into two sub-frames separated by a guard interval, namely the downlink (DL) sub-frame from a base-station (BS) to mobile stations (MSs), and an uplink (UL) sub-frame following in time from the MSs to the BS. The time direction consists of successive Orthogonal Frequency-Division Multiple Access (OFDMA) symbols, while the frequency direction is assigned to data bursts on a per-MS basis (OFDMA is a refinement of Orthogonal Frequency Division Multiplexing (OFDM) that in mobile WiMAX allows more flexible sub-channelization in the frequency dimension). (Additional preamble, burst mapping, and ranging signaling is also present. For more details refer to [50].)


Figure 2.

Sub-frame structure showing per-MS data bursts (numbered from 1 to z). (FCH = frame control header, RTG = receive/transmit transition gap, TTG = transmit/receive transition gap.)


Figure 3.

WiMAX burst structure showing MAC Service Data Unit (MSDU) packets. The DL-Burst is similar, except that there are additional headers included prior to the MAC PDUs. (PAD = padding, as the PDU size is variable.)


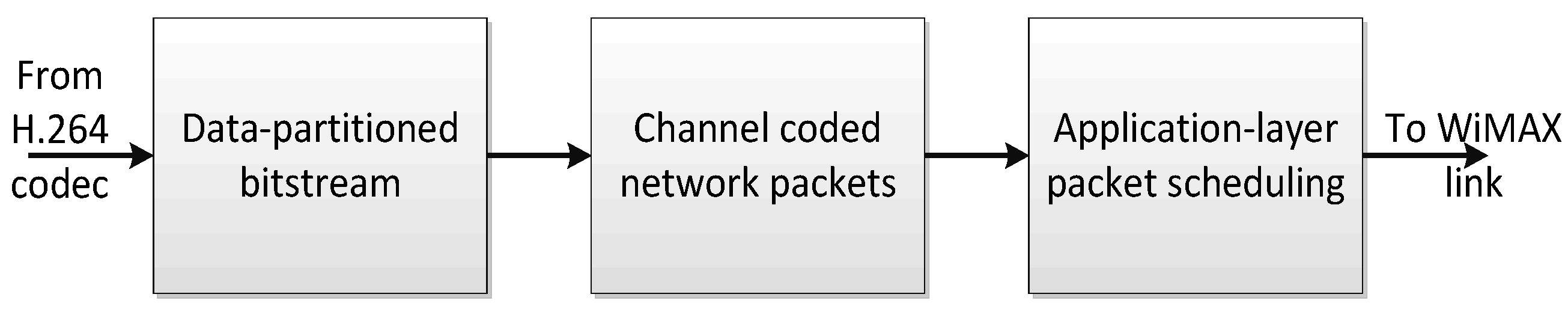

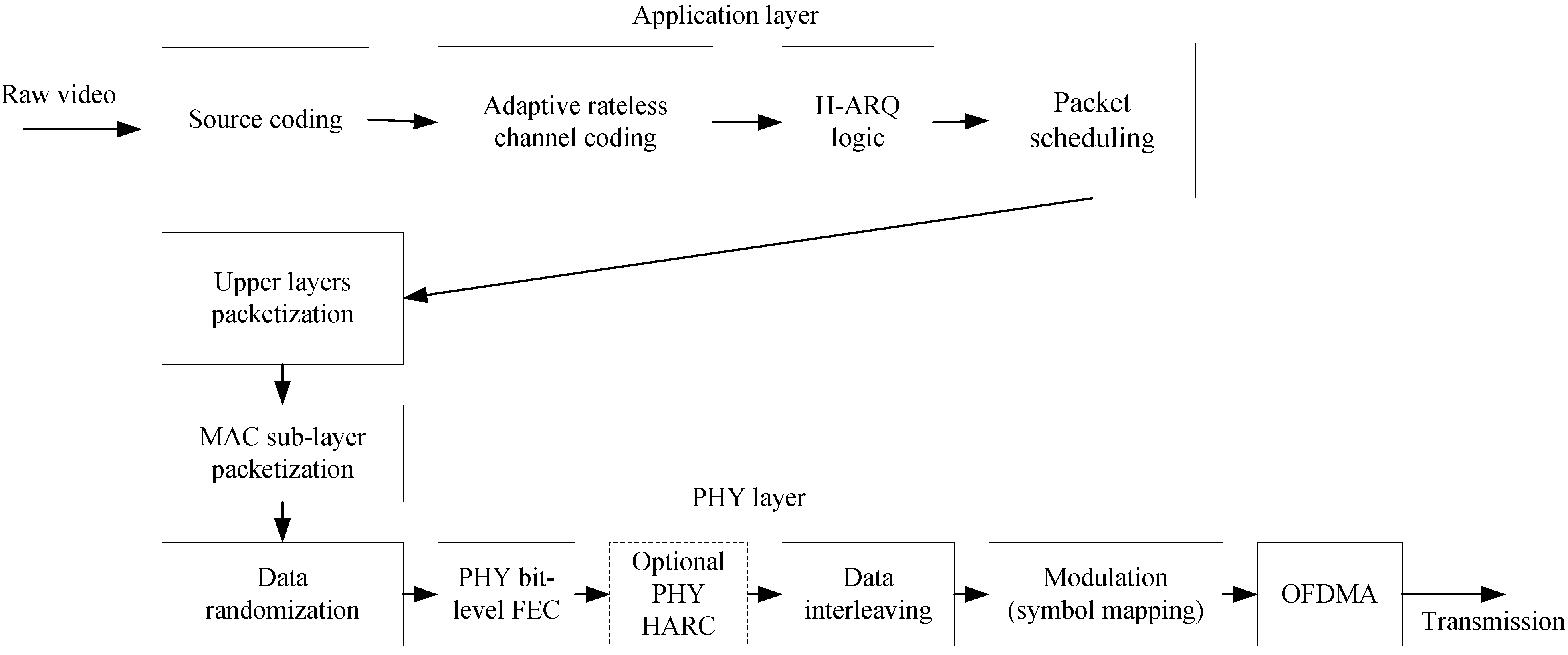

As a whole, the WiMAX video-streaming scheme, Figure 4, comprises three components: (1) source-coded data partitioning of the video bitstream; (2) adaptive rateless channel coding; and (3) AL packet scheduling. This section now describes these three components. As analyzed in Section 1, the first two of these are error resilience measures, while the third supports congestion resilience. Therefore, the following description of the methodology is split into error resilience and congestion resilience. The error-resilience description includes the way that the source packets (NALUs) are mapped to the underlying broadband wireless MAC and PHY layers.

Figure 4.

Overview of video-streaming scheme.


4.1. Error Resilience

As previously remarked, data-partitioned H.264/AVC compressed video can be an effective means of placing more important data (as far as video decoding is concerned) in smaller, less error-prone packets in a wireless channel, provided low-quality video (quantization parameter (QP) greater than 30) is not transmitted, as then the less important packets diminish in size. As is common in mobile video communication, in this paper an IPPPP… frame coding structure is employed, that is an intra-coded (I)-frame followed thereafter by predictively-coded (P)-frames. Notice that bi-predictively-coded (B)-slices are not permitted in the H.264/AVC Baseline profile, aimed at reducing the complexity of bi-predictive coding on mobile devices. Random Intra Macroblock Refresh (RIMR) (forcibly embedding a given percentage of randomly placed intra-coded macroblocks (MBs) in a P-frame) was turned on to counteract spatio-temporal error propagation that would otherwise occur in the absence of I-frames. The advantages of omitting periodic intra-coded I-frames for wireless communication are reviewed in [

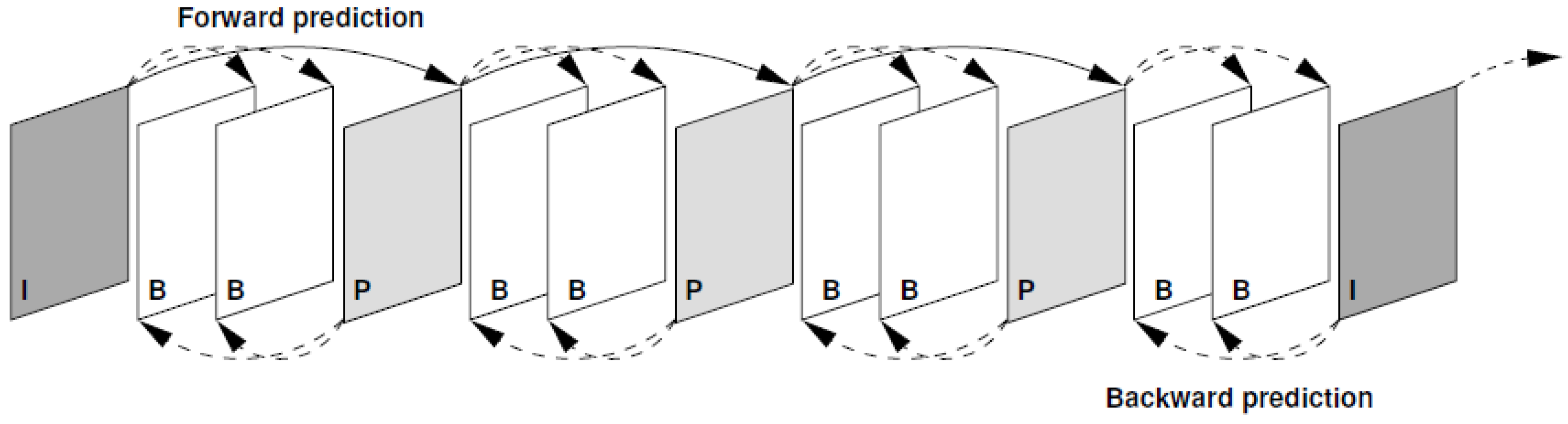

53].

Figure 5 illustrates the standard frame structure of a codec (called a Group of Pictures or GOP). In the common wireless transmission frame structure, P-frames take the place of the B-frames implying that all prediction is in the forward direction from one P-frame to the next. Moreover, the final I-frame in a GOP is also replaced by a P-frame, as I-frames are now not the means of re-setting the decoder in the event of error corruption or frame drops. The sequence of P-frames now extends to the end of the video sequence or stream. RIMR is employed by us to gradually re-set the decoding process, which implies that there will always be intra-coded MBs within each frame.

Figure 5.

Standard frame structure within a codec.


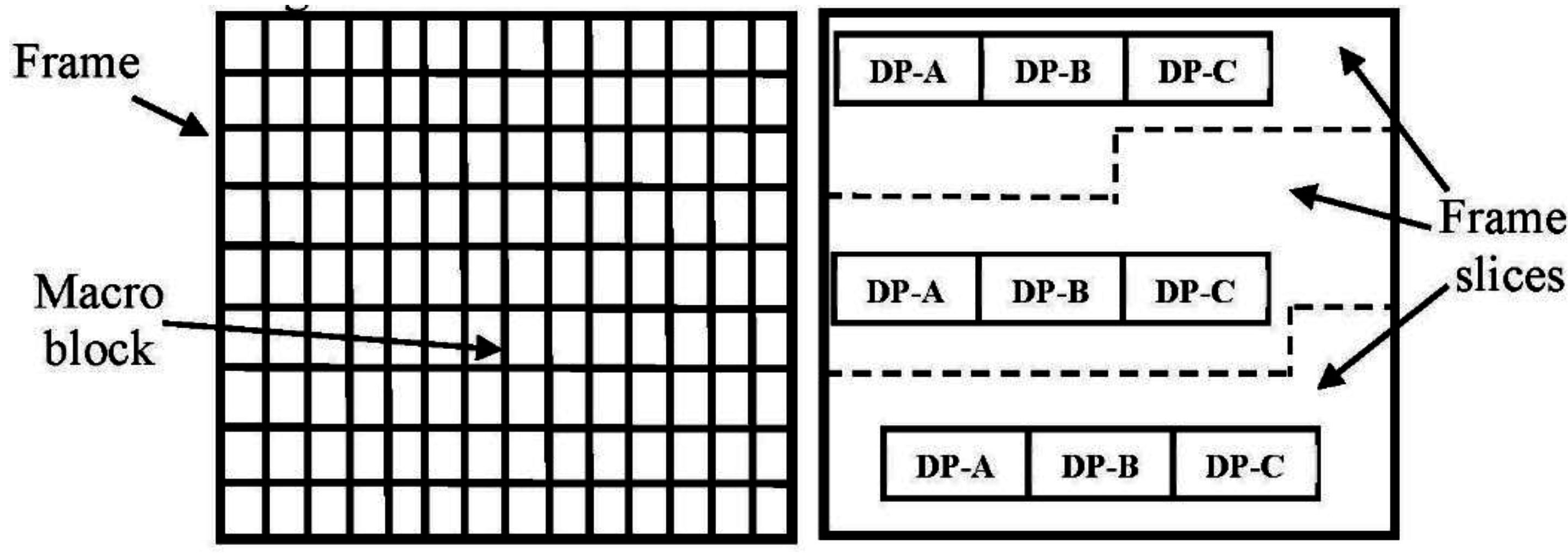

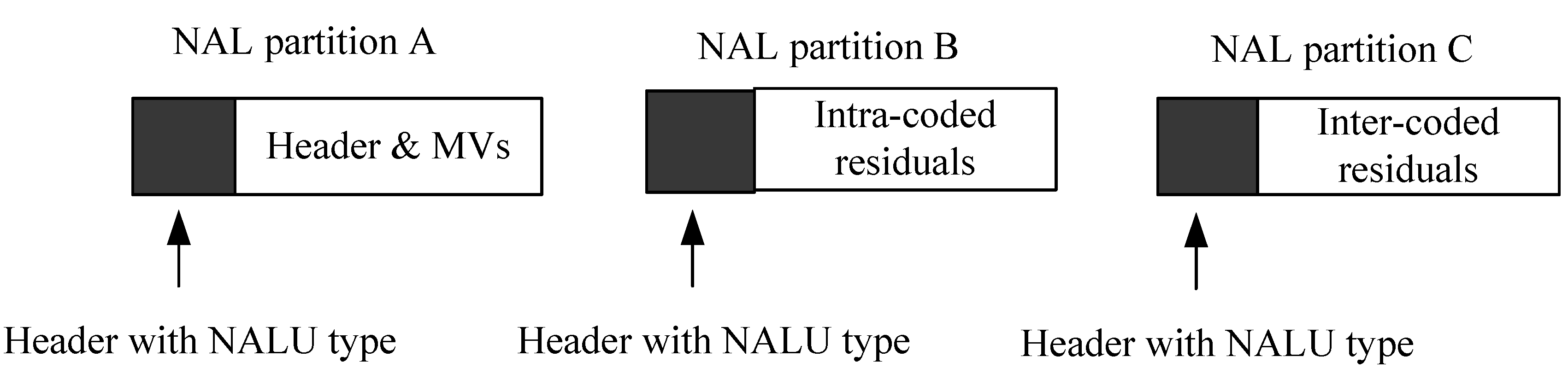

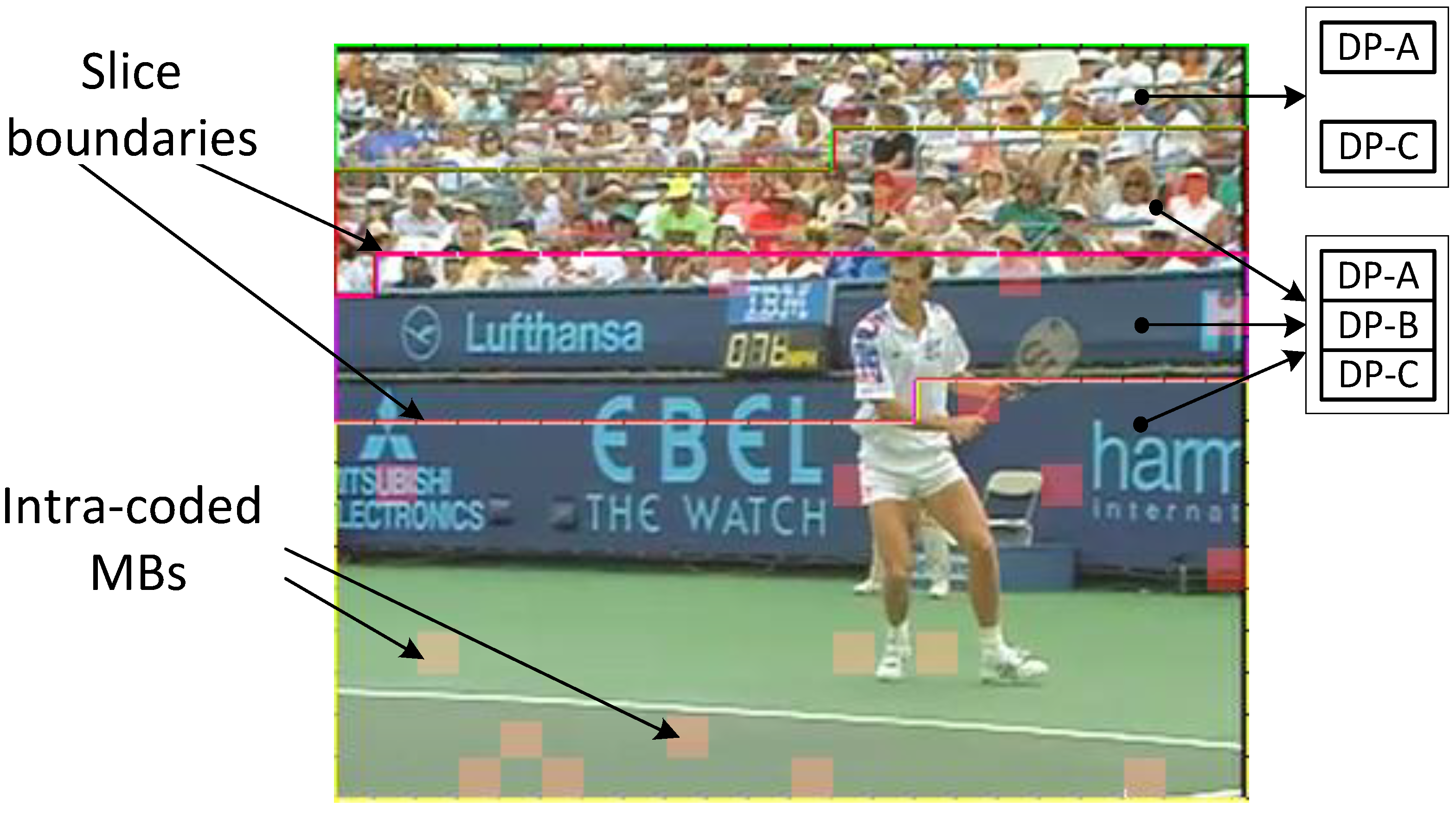

When data partitioning is enabled, every slice within a video frame (Figure 6) is divided into up to three separate partitions and each partition is encapsulated in an NALU of type 2, 3, or 4 (refer to Figure 7). Partition-A, carried in an NALU of type 2, comprises the MB addresses and types, their MVs, and QPs. If any MBs in the frames are intra-coded, their frequency-transform coefficients are packed into a type-3 NALU or partition-B. Partition-C contains the transform coefficients of the motion-compensated inter-coded MBs and is carried in an NALU of type 4. (Partition-B and partition-C also contain Coded Block Patterns (CBPs), compact maps indicating which blocks within each MB contain non-zero coefficients.)
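To make the mapping from NALU type to partition concrete, the short Python sketch below reads the one-byte H.264/AVC NALU header (one forbidden bit, two bits of `nal_ref_idc`, and five bits of `nal_unit_type`) and reports which data partition, if any, a NALU carries. The function names are hypothetical, and the example header bytes are made up for illustration.

```python
from typing import Optional, Tuple

# NALU types used for data partitioning in H.264/AVC.
PARTITION_BY_NAL_TYPE = {2: "A", 3: "B", 4: "C"}


def parse_nal_header(header_byte: int) -> Tuple[int, int]:
    """Return (nal_ref_idc, nal_unit_type) from the first NALU byte."""
    nal_ref_idc = (header_byte >> 5) & 0x03   # two-bit importance indicator
    nal_unit_type = header_byte & 0x1F        # five-bit payload type
    return nal_ref_idc, nal_unit_type


def partition_of(header_byte: int) -> Optional[str]:
    """Return 'A', 'B' or 'C' for a data-partition NALU, else None."""
    _, nal_unit_type = parse_nal_header(header_byte)
    return PARTITION_BY_NAL_TYPE.get(nal_unit_type)


if __name__ == "__main__":
    for header in (0x42, 0x23, 0x04, 0x65):
        print(hex(header), parse_nal_header(header), partition_of(header))
```

A streaming application could use such a check to identify partition-A packets from the header alone, without parsing the slice data.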

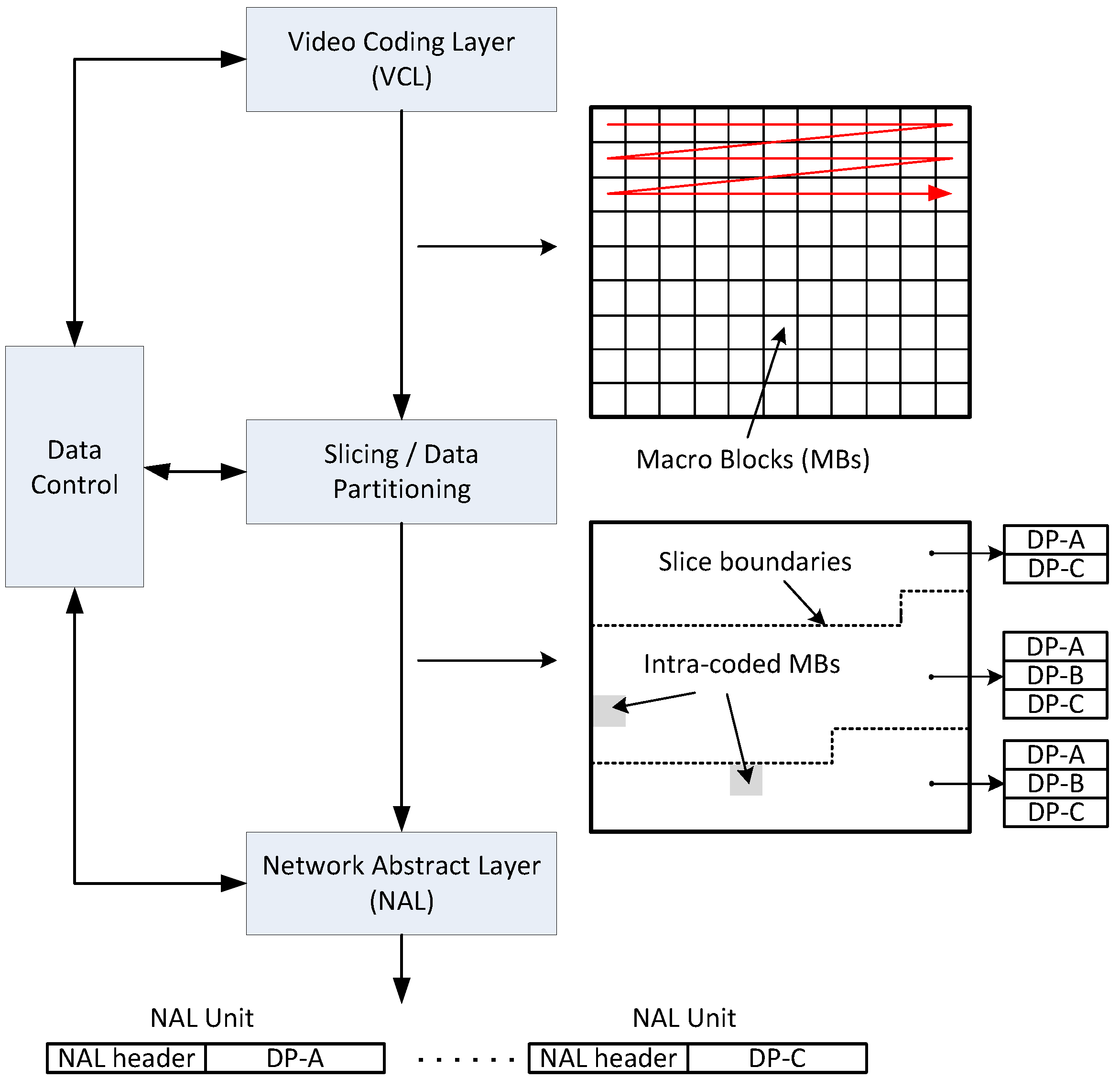

Figure 8 illustrates the overall process from the point of view of the H.264/AVC codec processing the raw video data input from a camera. A compressed video stream is output from the Video Coding Layer (VCL) after applying the normal redundancy reduction steps [6] including motion estimation and compensation, transform coding, quantization and entropy coding, which result in a compressed bit-stream. As these steps take place on a per-MB basis, it is possible to separate groups of MBs into slices, which in Figure 6 are formed from geometrically adjacent MBs, selected in raster scan order. The data-partition step separates out the per-MB data by function, e.g., all MVs of the MBs within a slice are separated out. The data-partition step subsequently assigns that data to the appropriate data-partition type. Notice that in Figure 8, one of the slices has no partition-B data, because there are no intra-coded MBs within it. That situation may arise if no RIMR MBs fall within the slice and the encoder does not itself select any MBs for intra coding (as it might do if, for example, occlusion prevents a prediction match being found). The other two slices have MBs that are spatially-coded (intra-coded). Once partitioning has taken place, the data partitions are allocated to a NALU of the appropriate type at what is termed the NAL of the codec. The FEC redundant data (Raptor code data) are also included (refer to Figure 9) within the packet payload along with checksums. If additional FEC data are requested over the uplink, that data also is included in the packet payload. As NALU aggregation was not enabled, each NALU is assigned to a separate network packet as its payload. Additionally, it is important to notice that, for our experiments, each video frame formed a single slice.

Figure 6.

Decomposing a video frame into partitions within slices.


Figure 7.

H.264/AVC data partitioning in which the partitions of a single slice are assigned to three NAL units (types 2 to 4). As described in the text, the relative size of the partitions varies with the QP setting of the encoder and is not fixed, as shown in the schematic representation of this figure.


Figure 8.

H.264/ Advanced Video Coding (AVC) data flow for combined slicing with data partitioning.


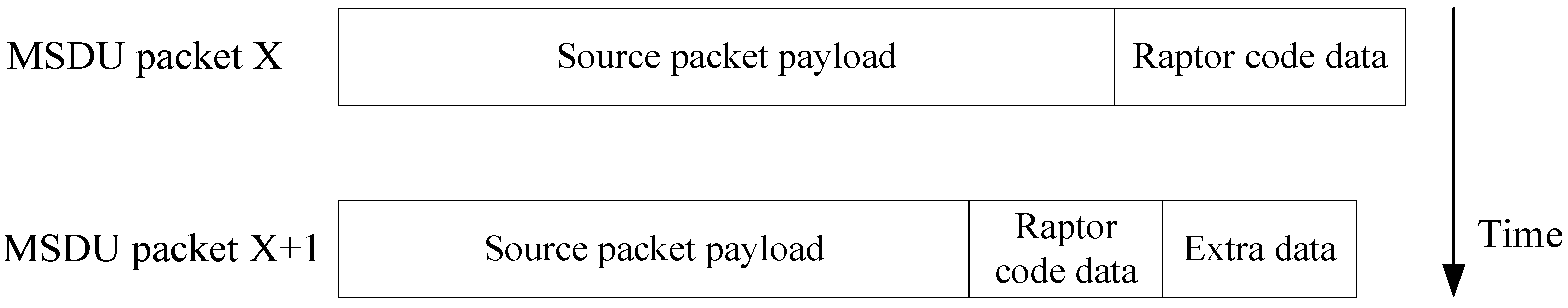

Figure 9.

WiMAX packets (MSDUs) from BS to an MS containing video source data from higher layers with additional application-layer-forward error correction (AL-FEC) added by the streaming application. Packet x + 1 contains additional piggybacked AL-FEC data requested to aid in recovery. The Raptor code data overhead is adapted to channel conditions. The amount of code data added is schematic only, as in practice around 5% would be present. Not shown are possible cyclic redundancy checks (CRCs) added by the application and Internet Protocol (IP)/User Datagram Protocol (UDP)/Real-time Transport Protocol (RTP) headers that would also be present in the source packet payload in addition to the Network Abstraction Layer units (NALU).


Reconstruction of the other partitions is dependent on the survival of partition-A, though that partition remains independent of the other partitions. Constrained Intra-Prediction (CIP) [54] (Ref. [54] introduces standardized intra CIP while proposing non-standard inter-CIP) was set in order to make partition-B independent of partition-C. When only partition-A survives, its motion vectors can then be employed in error concealment at the decoder using motion copy. When partition-A and partition-B survive, then error concealment can combine texture information from partition-B when available, as well as intra concealment when possible. When partition-A and partition-C survive, reconstruction requires discarding those partition-C data that rely on missing partition-B MBs and then reconstructing the affected MBs using either partition-A MVs alone or partition-A MVs with partition-C texture data, when it is available.

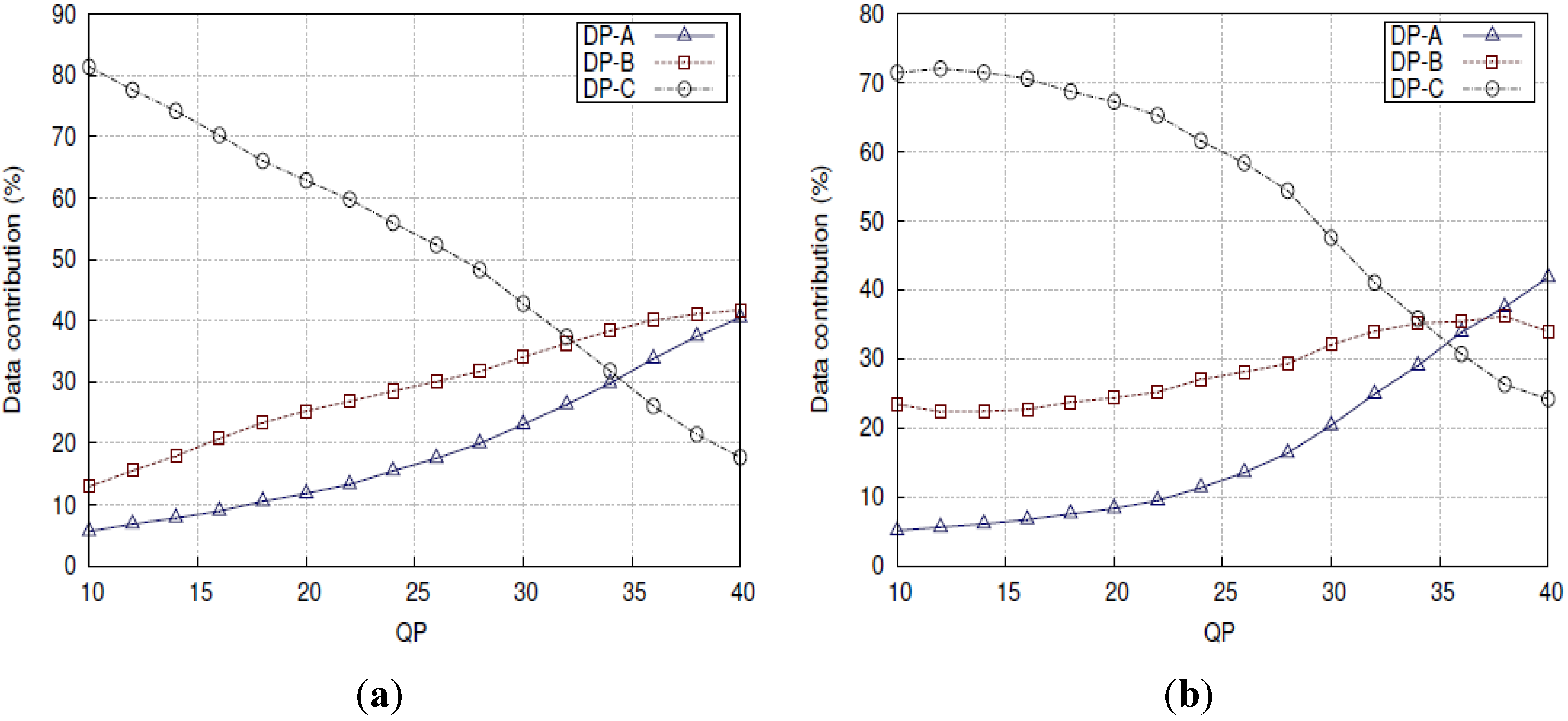

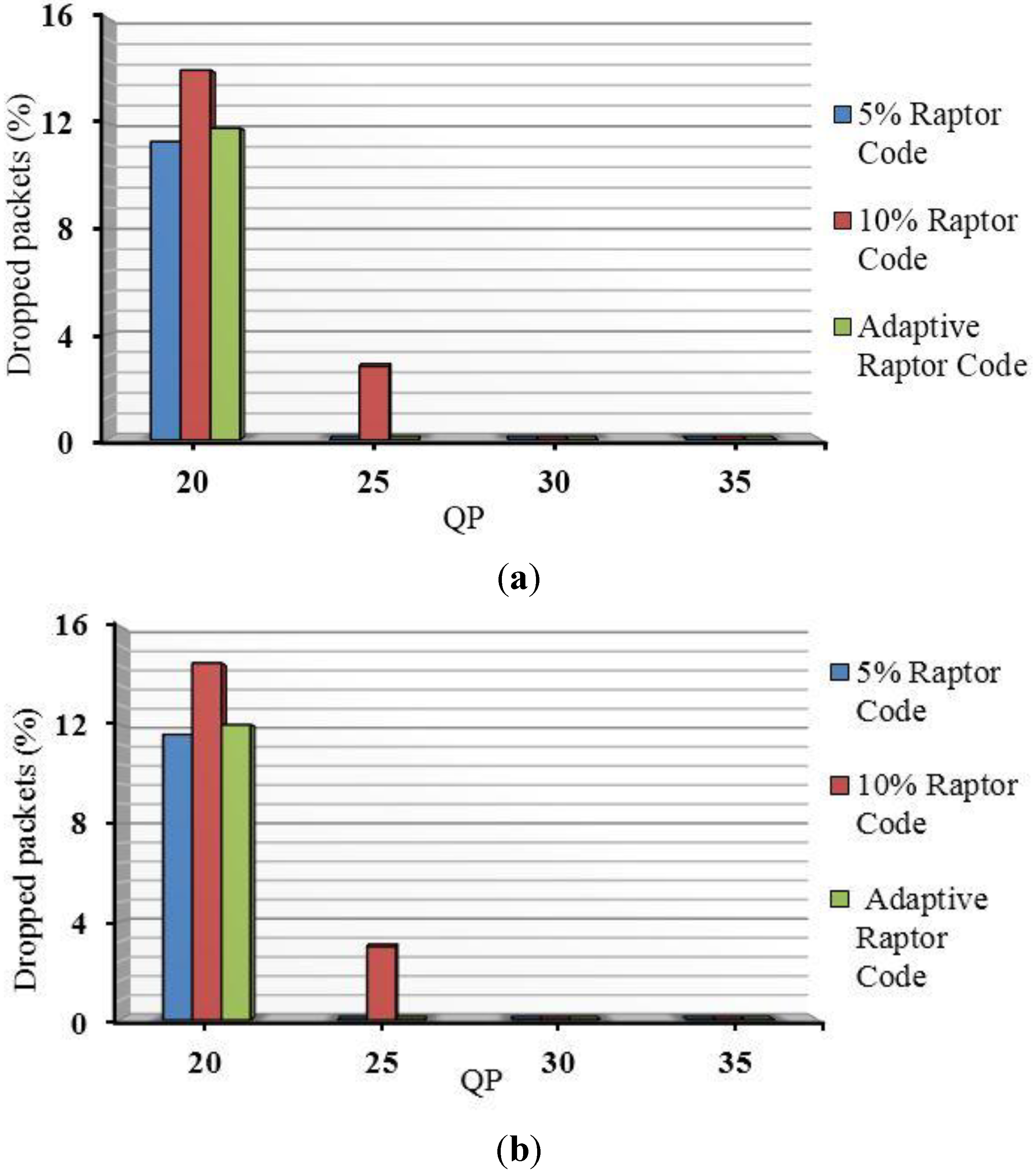

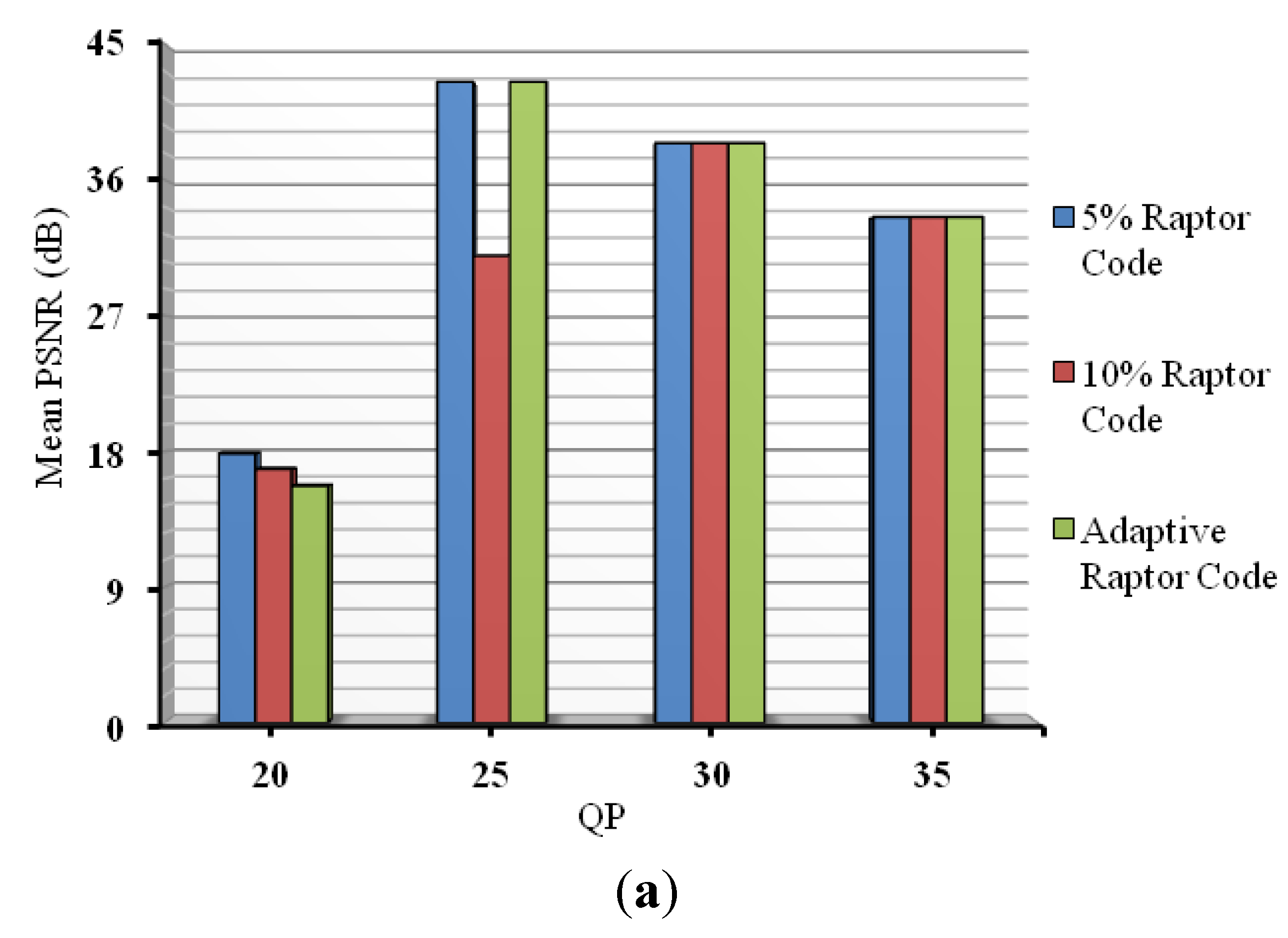

The relative size of the data-partition packets is determined by the quality of the video, which in turn is governed by the QP of the MBs. The QP is set in the configuration file of the H.264/AVC codec, prior to compression.

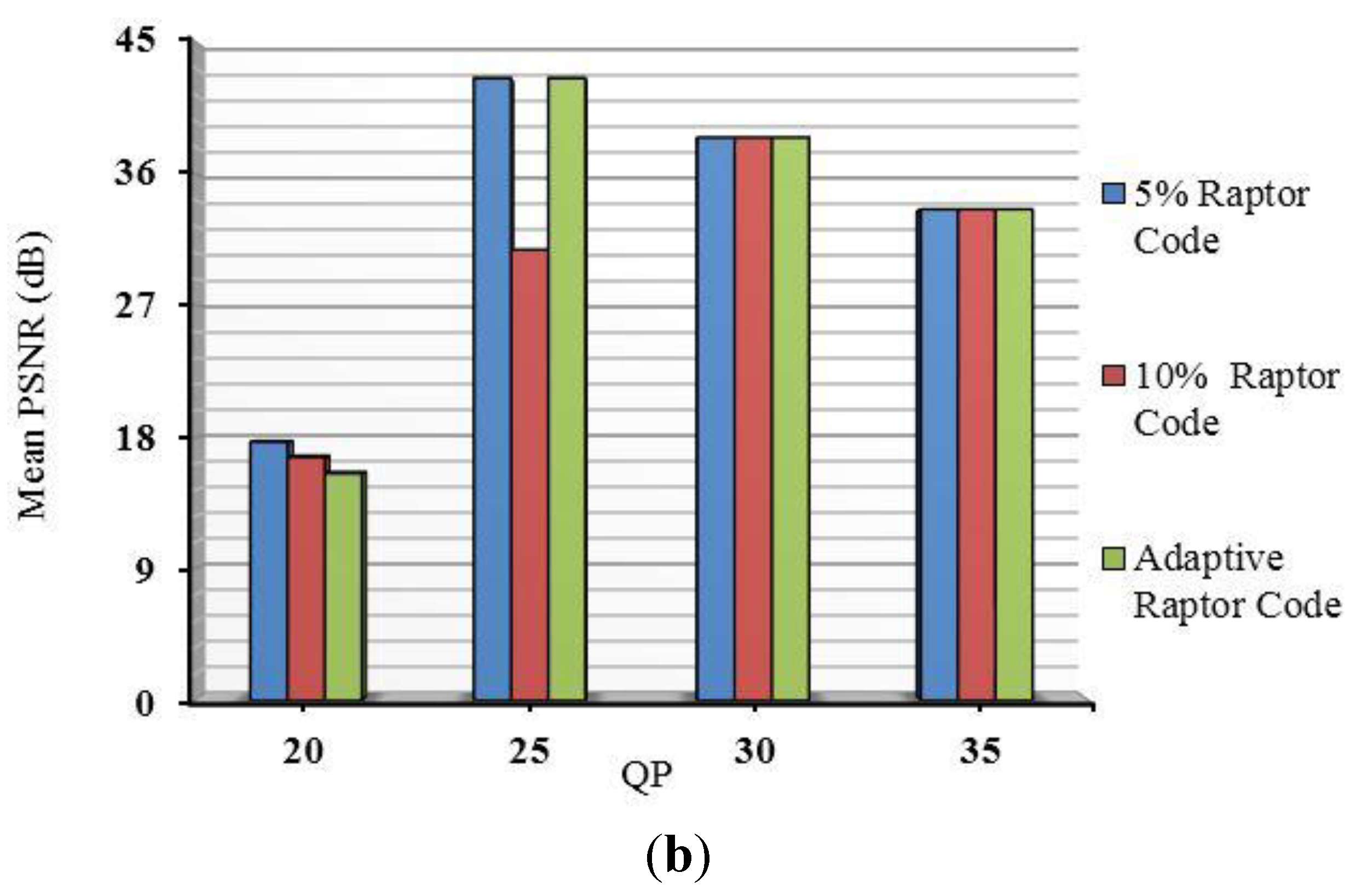

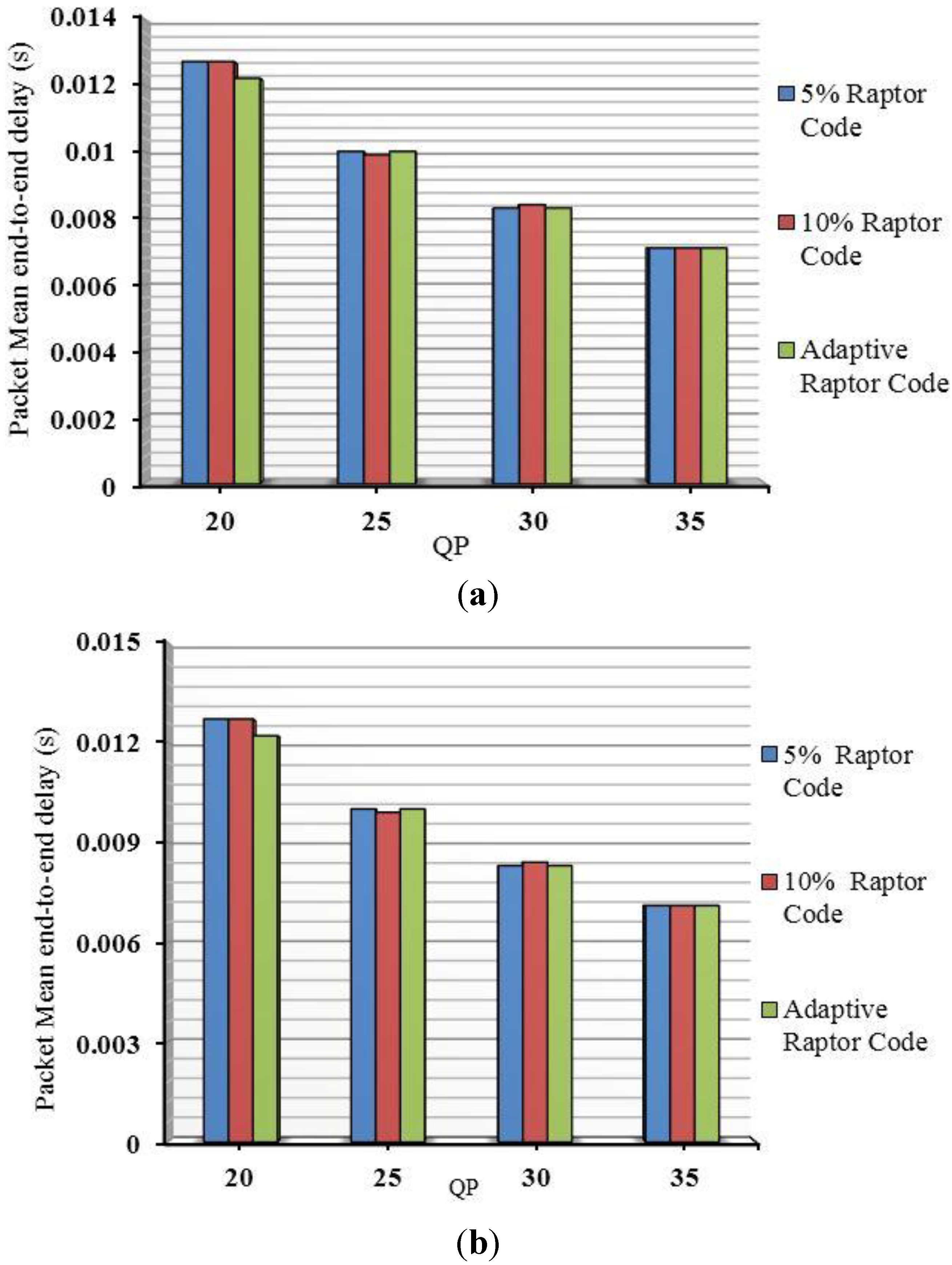

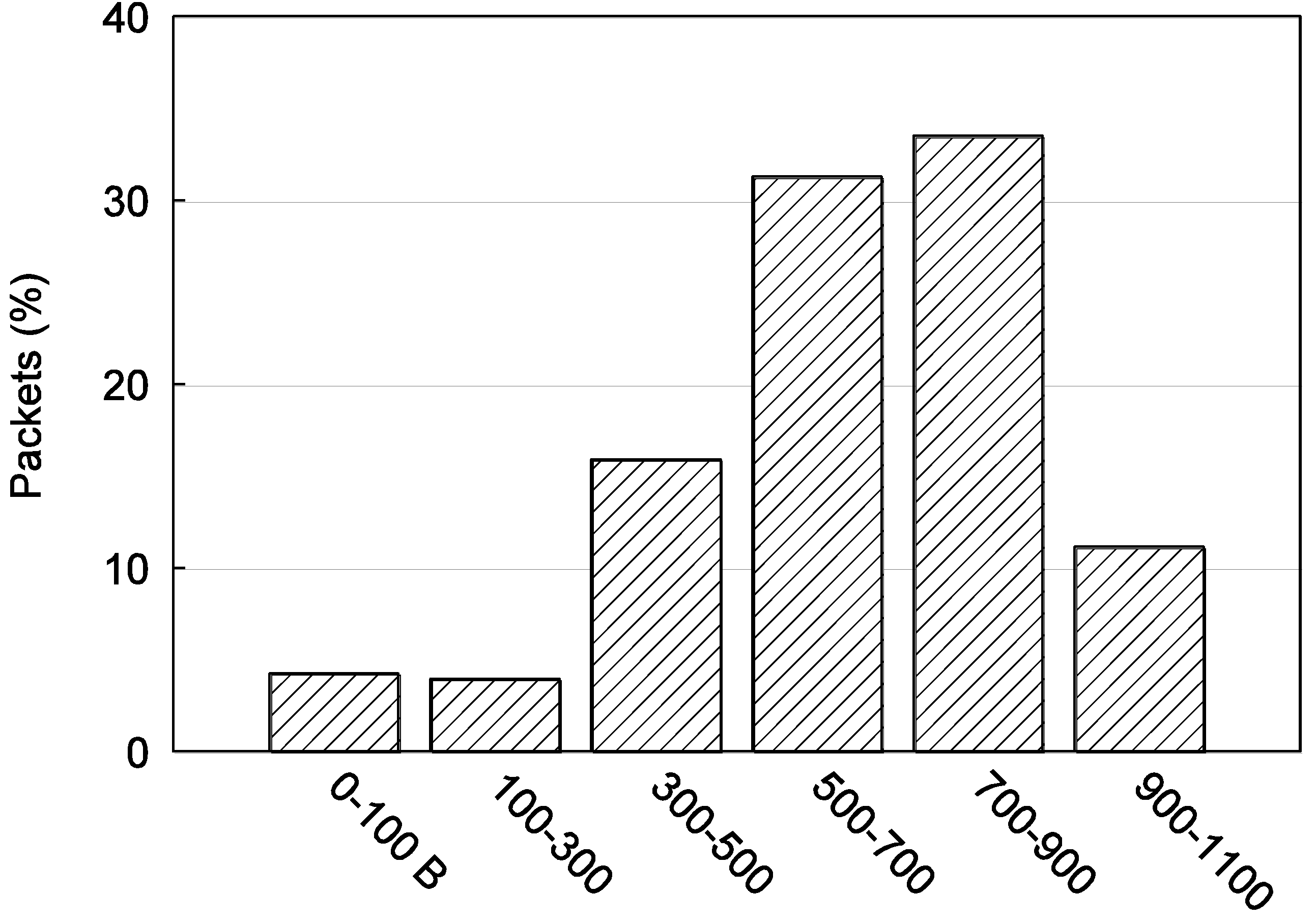

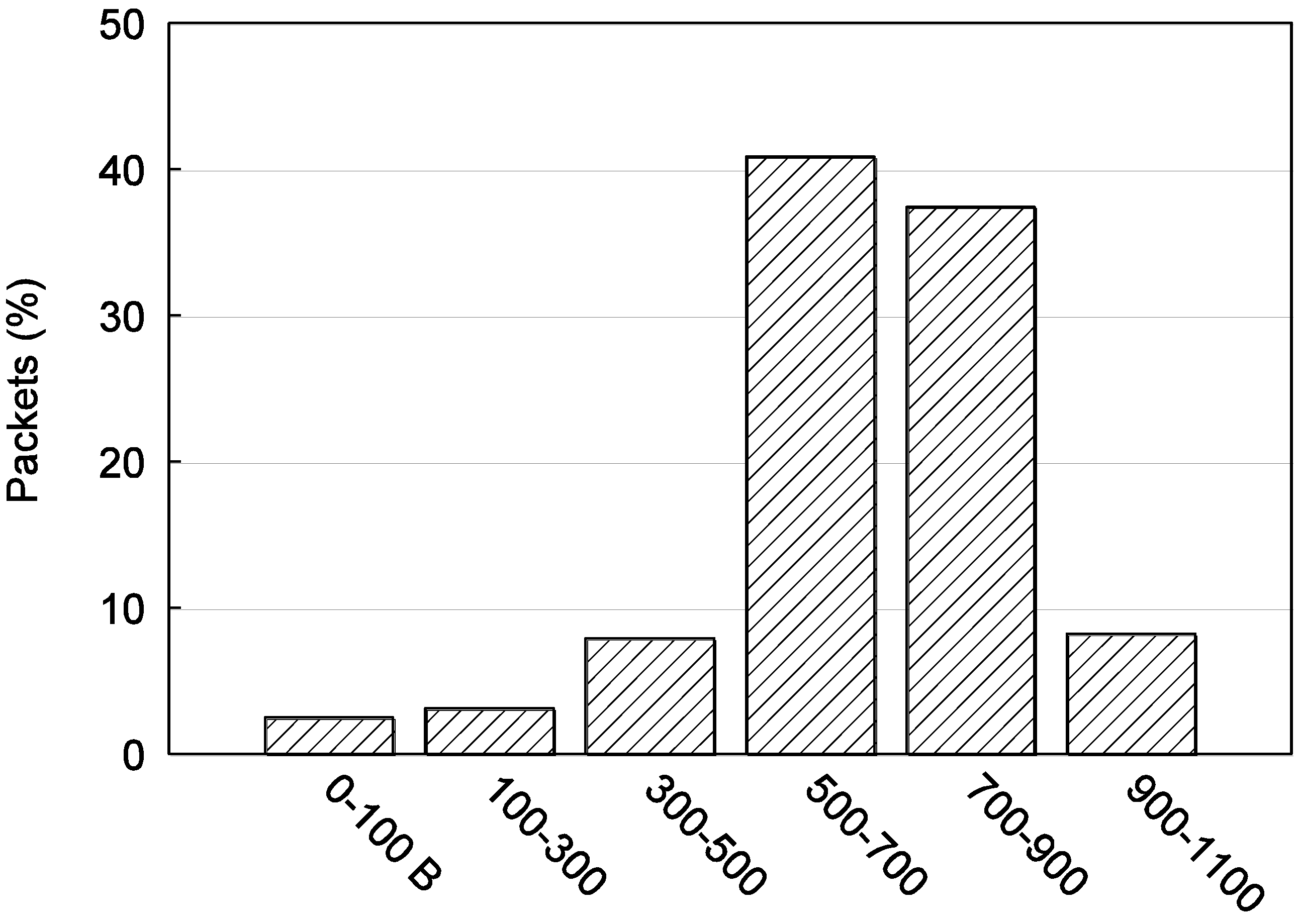

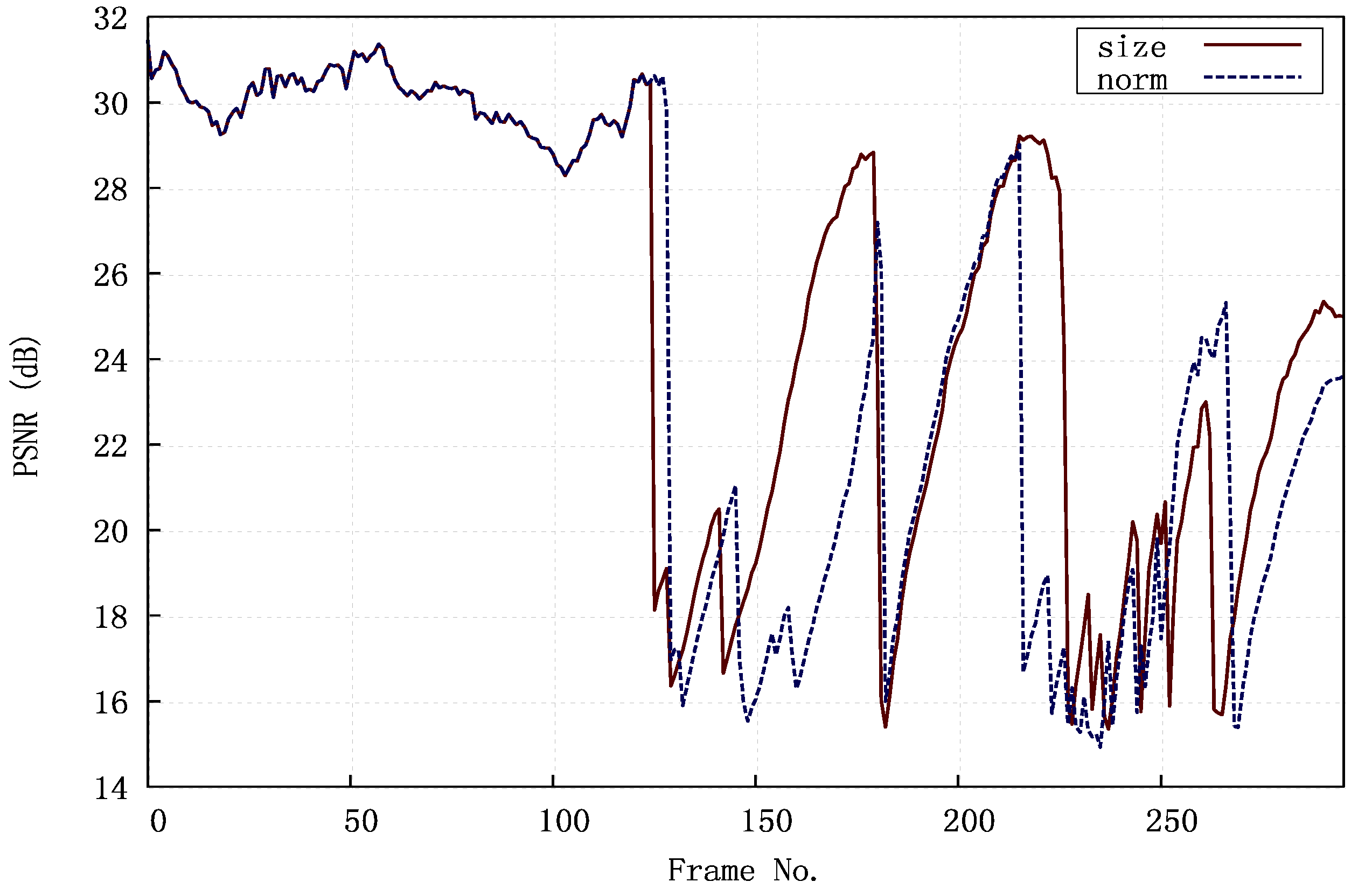

Figure 10 is a comparison between the relative sizes of the partitions according to QP for the two diverse reference video clips used later in Section 5. For the purposes of assessing the impact of the QP, Variable Bit-Rate (VBR) video was encoded in Section 5.1’s evaluation. In VBR video, the QP remains constant in order to preserve video quality. However, many broadcasters prefer Constant Bit-Rate (CBR) video, as it allows transmission bandwidth and storage to be predicted. Transmission jitter is also reduced. For that reason, CBR video is tested in Section 5.2, though the QP value may vary a little [55] in order to maintain a constant bit-rate.

Figure 10.

Percentage contributions of data partitions (DPs) A, B and C over a range of QPs for (a) Paris and (b) Stefan video clips.


Rateless Raptor code is an effective form of FEC that provides protection to the data-partitioned video stream with reduced computational complexity and capacity-approaching overhead. Rate adaptation of AL FEC is performed in order to match wireless channel conditions. The code was applied at the level of bytes within a packet in the interests of reduced latency rather than at the usual packet level. Thus, the byte forms the block symbol size and only bytes passing the CRC at the PHY layer are accepted at the application layer channel decoder. The probability of channel byte loss (BL) serves to predict the amount of redundant data to be added to the payload. In implementation, BL is found through measurement of channel conditions, which is mandatory anyway in a WiMAX mobile station. If the original packet length is L, then the redundant data is given simply by

$$R = L \times BL + L \times BL^{2} + L \times BL^{3} + \cdots = \frac{L}{1 - BL} - L \qquad (2)$$

which is arrived at by adding successively smaller additions of redundant data, based on taking the previous amount of redundant data multiplied by BL. The statistical model of Equation (1) in Section 2 was utilized to determine the Raptor code failure probability.
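The successive-additions description can be checked numerically with a small Python sketch. It assumes the additions form a geometric series with first term L × BL and ratio BL, so that the total redundancy approaches L/(1 − BL) − L; the function names, and rounding the result up to whole bytes, are choices made for this sketch.

```python
import math


def redundancy_series(length: int, byte_loss: float, terms: int) -> float:
    """Sum the first `terms` additions: L*BL + L*BL^2 + ... (assumed form)."""
    total = 0.0
    addition = length * byte_loss
    for _ in range(terms):
        total += addition
        addition *= byte_loss  # each addition is the previous one times BL
    return total


def redundancy_closed_form(length: int, byte_loss: float) -> float:
    """Limit of the series: L / (1 - BL) - L, valid for 0 <= BL < 1."""
    if not 0.0 <= byte_loss < 1.0:
        raise ValueError("byte loss probability must lie in [0, 1)")
    return length / (1.0 - byte_loss) - length


def redundant_bytes(length: int, byte_loss: float) -> int:
    """Whole number of redundant bytes to append (rounded up)."""
    return math.ceil(redundancy_closed_form(length, byte_loss))


if __name__ == "__main__":
    L, BL = 1000, 0.05
    print(redundancy_series(L, BL, 3), redundancy_closed_form(L, BL))
    print(redundant_bytes(L, BL))
```

For a 1000-byte packet and a 5% byte loss probability the overhead is a little over 5% of the payload, and only the first two or three terms of the series contribute noticeably.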

Packets with “piggybacked” repair data are also sent. Suppose a packet cannot be decoded despite the provision of redundant data. It is implied from Equation (1) that if fewer than k symbols (bytes) in the payload are successfully received (m < k), a further k − m + e redundant bytes can then be sent to reduce the risk of failure. This reduced risk arises because of the exponential decay of the risk that is evident from Equation (1), which gives rise to Raptor code’s low error probability floor. In practice, setting e = 4 reduces the probability of decoding failure to 8.7%. Only one retransmission over a WiMAX link is allowed to avoid further increasing latency. If that retransmission fails to allow reconstruction, that packet is abandoned.
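The single-request repair rule can be summarized in a few lines of Python. This is a sketch of the decision logic only, under the assumptions that a request is raised only when fewer than k bytes have arrived and that the request asks for exactly k − m + e bytes; the function name, the returned labels, and the treatment of the m ≥ k case are hypothetical.

```python
from typing import Tuple


def repair_decision(k: int, m: int, e: int = 4,
                    already_requested: bool = False) -> Tuple[str, int]:
    """Decide what the receiver does with a packet.

    k: source symbols (bytes) in the payload
    m: symbols received without error so far
    e: extra symbols requested beyond the shortfall
    Returns (action, bytes_requested), where action is one of
    'attempt_decode', 'request_repair' or 'abandon'.
    """
    if m >= k:
        # Enough symbols to try decoding; success is still probabilistic.
        return "attempt_decode", 0
    if already_requested:
        # Only one retransmission is permitted, to bound latency.
        return "abandon", 0
    return "request_repair", k - m + e


if __name__ == "__main__":
    print(repair_decision(200, 205))
    print(repair_decision(200, 190))
    print(repair_decision(200, 190, already_requested=True))
```

The repair bytes returned by the second call would be piggybacked on the next outgoing packet rather than sent in a packet of their own.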

4.2. Congestion Resilience

With respect to congestion resilience, consider Figure 11, in which a single video frame has been divided into slices of equal bit length. The geometric space taken up by any slice depends on the coding complexity of the content. Shaded MBs in Figure 11 represent intra-coded MBs. Each slice was further partitioned in source-coding space into up to three partitions. As mentioned already, partition-B may occasionally be absent (the top slice in Figure 11) if no RIMR MBs are allocated to a slice and if no naturally intra-coded MBs are assigned to the slice by the encoder. (Naturally intra-coded MBs are inserted if an encoder can find no matching MB in a reference frame or as a way of improving the quality.) Similarly, if, for example, an extremely high bit rate were allocated to the stream, partition-C might not be present, as the encoder could afford the luxury of encoding all MBs with intra coding. However, the simple packet scheduling scheme for data-partitioned streams is independent of the source-coding allocation of partitions and slices.

Figure 11.

Example video frame from Stefan split into equal bit length slices, showing each slice partitioned into up to three data partitions (DPs) (A, B, C).

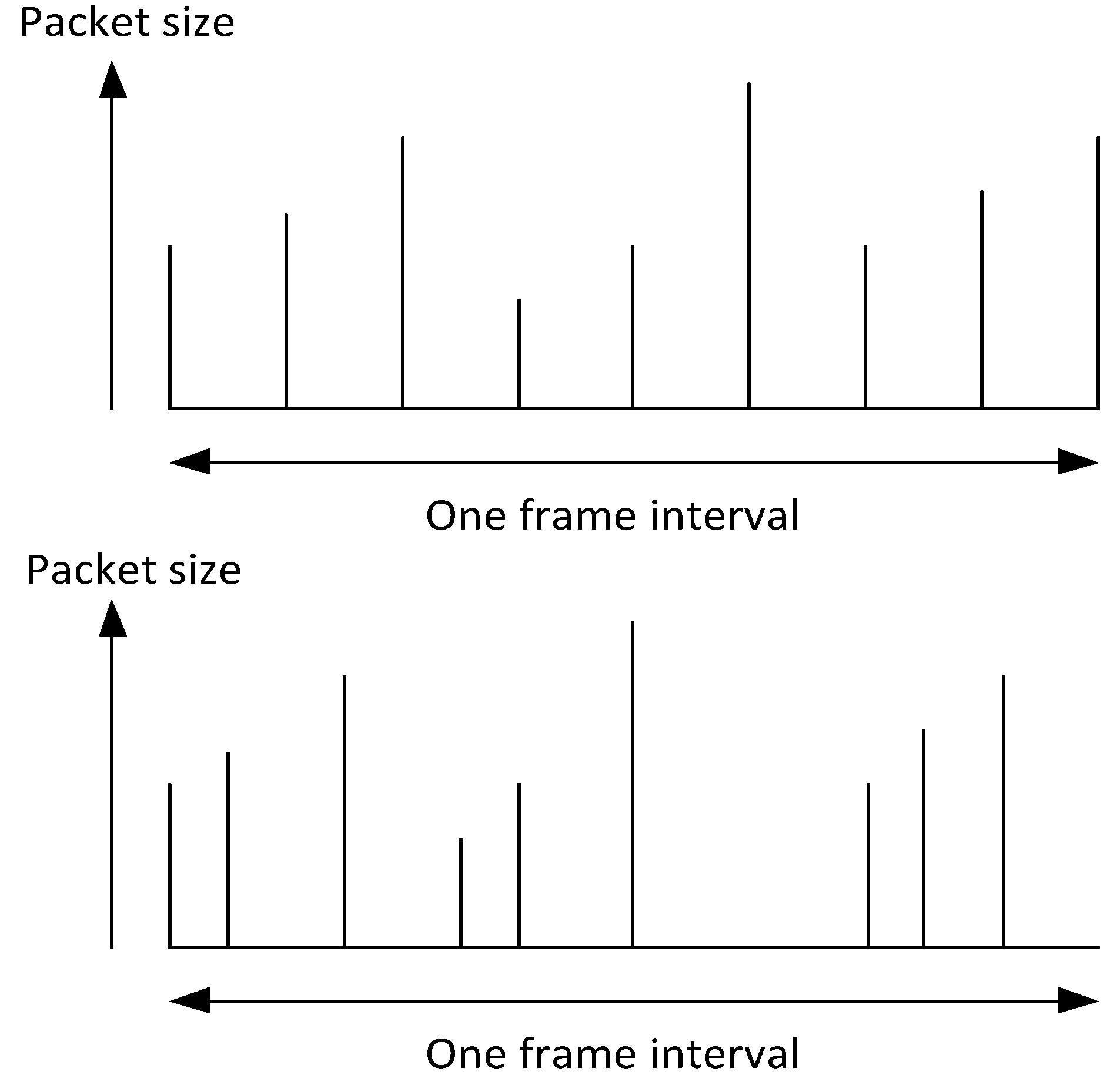

Figure 12 demonstrates the results of two alternative packet scheduling regimes. A frame interval is shown, which is 1/30 s at 30 frames/s. In the default case, packets are scheduled at equal intervals in time. We are aware that packet scheduling may never follow this ideal regime in a processor, even with a real-time operating system present. However, the regime acts as a point of comparison. In the simple scheduling scheme proposed, packets are allocated a scheduling point according to their relative size within a frame’s (or multiple frames’) compressed size. Thus, for any one packet indexed as j with length $l_j$, its scheduling time allocation $t_j$ is:

$$t_j = f \cdot \frac{l_j}{\sum_{i=1}^{n} l_i}, \qquad (3)$$

where f is the fixed frame interval, and there are n packets in that frame. The denominator of Equation (3) sums the lengths of all the packets within the frame that contains packet j, and the numerator carries the length of packet j itself, so that each packet is allocated a time interval in proportion to its share of the frame’s total length.
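The size-based allocation can be sketched in a few lines of Python. The sketch assumes that each packet's time allocation is the frame interval multiplied by the packet's share of the frame's total length, and that each packet is released at the start of its allocated slot with the original packet order preserved. The function names and the release-at-slot-start convention are assumptions made for illustration, not details confirmed by the text.

```python
from typing import List


def size_based_intervals(packet_lengths: List[int],
                         frame_interval: float) -> List[float]:
    """Time allocated to each packet: frame_interval * l_j / sum of lengths."""
    total = sum(packet_lengths)
    if total <= 0:
        raise ValueError("a frame needs at least one non-empty packet")
    return [frame_interval * length / total for length in packet_lengths]


def send_offsets(packet_lengths: List[int], frame_interval: float,
                 frame_start: float = 0.0) -> List[float]:
    """Release time of each packet, in order, within one frame interval.

    Assumption: a packet is released at the start of its allocated slot.
    """
    offsets = []
    t = frame_start
    for slot in size_based_intervals(packet_lengths, frame_interval):
        offsets.append(t)
        t += slot
    return offsets
```

Because the allocations sum to the frame interval, a frame's packets still occupy exactly one frame interval; only the spacing between them changes, with large packets followed by longer gaps than small ones.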

Figure 12.

Example of packet scheduling regimes: (top) Equally spaced in time; (bottom) Packet ordering preserved but scheduling time allocated according to packet size.

The proposed scheduling method preserves the original average bitrate, because the time allocations of Equation (3) sum to the frame interval, though there is a latency of a single video frame while the frame’s packet sizes are assessed and the scheduling takes place. In [56], it is pointed out that IEEE 802.21 Media Independent Handover (MIH) services (IEEE 802.21 WG, 2008) provide a general framework for cross-layer signaling that can be used to achieve the scheduling. In IEEE 802.21, a layer 2.5 is inserted between the layer-2 link layer and the layer-3 network layer. Upper-layer services, known as MIH users (MIHUs), communicate through this middleware with the lower-layer protocols. For mobile WiMAX and later versions of WiMAX, another WiMAX-specific set of standardized communication primitives exists as IEEE 802.16g.

Figure 13 identifies where AL packet scheduling takes place within the processing cycle and also summarizes the processing cycle as a whole. After compression by the video codec, rateless channel coding is applied at the application layer on an intra-packet basis at the byte level. A simple form of application-layer H-ARQ serves to operate the adaptive form of channel coding. AL packet scheduling then takes place for the source packets. After packetization at the upper protocol stack layers, the PHY layer is responsible for a series of protection measures, most of which have already been described. Data interleaving of each PHY FEC block in an OFDM-based system acts to map adjacent bits across non-adjacent sub-carriers. As previously stated, PHY-layer H-ARQ was not enabled in the evaluation of Section 5.

Figure 13.

Overview of protection measures and packet handling across the upper and WiMAX protocol stack layers.

6. Conclusions and Discussion of Future Work

Because of the way research is commissioned, it is sometimes not apparent that wireless links not only suffer from an increased level of errors but also remain subject to congestion at the input to the wireless channel. The errors, moreover, tend not to be isolated but are correlated in bursts. This paper proposes a scheme that is both error resilient and congestion resilient. The error resilience scheme is multi-faceted, as it includes adaptive rateless coding, ARQ error control, and source-coded data partitioning.

The proposed size-based scheduling solution, specialized to data-partitioned video streams, will certainly benefit the types of content favored by mobile viewers according to quality-of-experience studies, namely studio scenes with limited motion activity. Active sports clips, especially those with small balls, are less attractive but may become more so as the move to higher resolutions on mobile devices continues (e.g., full Video Graphics Array (VGA) format (640 × 480 pixels/frame at 30 Hz) for streaming in Apple’s FaceTime). However, in that case the problem of choosing a packet scheduling regime may become more acute. Future work will require a robust investigation of appropriate packet scheduling for congested broadband links across a range of content genres.

High Efficiency Video Coding (HEVC) [62] emerged as a successor to H.264/AVC during the period of this research. Broadly, HEVC refines and extends the structure of H.264/AVC rather than radically departing from it. However, very few error-resilience tools were included in HEVC, in part because some of these, such as Flexible Macroblock Ordering, were reportedly [63] rarely used in commercial applications. This situation nevertheless provides an opportunity for researchers themselves to add data partitioning to the HEVC source code. Another likely reason why few error-resilience tools were built into HEVC is that a full implementation is likely to be computationally demanding, so that including data partitioning would come at the cost of some other feature of the codec. In contrast, direct and efficient implementation of Raptor code has become easier to accomplish with the development of RaptorQ [64], a systematic version of Raptor code software. However, inclusion of such code in a commercial product is subject to a patent in many jurisdictions, even if it may be available for educational and research purposes. Such issues do not fall within the scope of this article, though in terms of future research, increased opportunities for confirming the error-correcting performance of Raptor codes now exist. The inner LT code of Raptor is a late-decoding type of coding scheme, as sufficient symbols need to be collected before decoding can commence. If network coding is permitted, Switched Code [65] is then a low-latency form of rateless code that is worthy of investigation for multimedia applications, as it permits early decoding of packets. Another area for future work is in error resilience. For example, intra-refresh provision in [66] is varied according to an estimate of the rate-distortion trade-off that takes into account expected errors over a wireless channel.