SemaTopic: A Framework for Semantic-Adaptive Probabilistic Topic Modeling

Abstract

1. Introduction

- How can context-sensitive semantic representation be integrated into topic models to generate more coherent, interpretable, and semantically meaningful topics?

- How can topic stability be ensured when the model is applied to evolving or partially modified corpora?

2. Related Work

- Static Embedding-based Approaches: Static word embeddings were introduced as an improvement over the bag-of-words (BoW) representation. Methods such as Word2Vec [8] and GloVe [13] learn low-dimensional, continuous vectors for words from large corpora by exploiting distributional statistics. The resulting fixed-length embeddings place semantically related words close to one another in the vector space, providing a compact representation of lexical meaning beyond sparse co-occurrence counts.

To enhance the semantic quality of text representations and improve topic modeling, several approaches have incorporated static embeddings into probabilistic frameworks, particularly Latent Dirichlet Allocation (LDA). A notable example is LDA2Vec [12], which integrates Word2Vec embeddings into the LDA architecture. This hybrid model retains the probabilistic structure of LDA while leveraging distributional semantics to enhance topic cohesion. By aligning topic inference with word-level semantic proximity, it produces more coherent and interpretable topic representations, characterized by reduced lexical noise and more precise grouping of related terms. Consequently, the extracted latent themes are finer-grained, bridging statistical modeling with semantic awareness.

The Embedded Topic Model (ETM), proposed in [11], provides a unified framework for jointly learning word and topic embeddings within the same semantic space. This integration reinforces the coherence and quality of extracted topics, particularly in sparse or short-text corpora.
ETM strikes a balance between probabilistic modeling and semantic interpretation by constraining topic–word distributions to remain consistent with continuous word representations, thereby enhancing interpretability and semantic expressiveness.

Although static embedding-based approaches such as LDA2Vec [12] and ETM [11] offer improvements over traditional bag-of-words models, they remain unable to capture context-dependent word meanings. Each word is assigned a single fixed vector regardless of usage, which causes polysemous words to be represented identically across contexts and leads to ambiguous semantic representations. For topic modeling, where precise clustering of semantically related terms is crucial, this context insensitivity poses a serious limitation to interpretability. Consequently, topics may include words that are statistically similar in the embedding space but unrelated in specific documents. Moreover, static embeddings are not adapted to domain-specific usage or linguistic variation, making them less robust for dynamic or heterogeneous corpora. Thus, despite the advances of LDA2Vec [12] and ETM [11], the reliance on static embeddings ultimately restricts their semantic expressiveness and applicability.

The authors of [14] propose a topic extraction method in which k-means [15] is directly applied to word vectors, followed by document-weighted refinement and PCA-based dimensionality reduction [16], thereby bypassing the complexity of generative models. This approach achieves faster execution and improved scalability, yielding semantic coherence scores comparable to traditional models such as LDA [1], and is particularly well-suited for resource-limited scenarios. However, it suffers from key drawbacks: it lacks a probabilistic foundation and does not provide interpretable document–topic or topic–word distributions, which are often required in downstream applications.
Moreover, its reliance on static embeddings prevents it from disambiguating polysemous words or adapting to complex linguistic variation. Thus, while computationally attractive, the method still falls short of the semantic flexibility and depth offered by contextual or neural topic modeling frameworks.

The authors of [17] propose an approach to enhance the semantic quality of classical topic models by incorporating word representations derived from static embeddings. By enriching the bag-of-words input with dense semantic vectors, the model learns more accurate word similarities and improves topic assignment. This integration enables the extraction of more coherent and interpretable topics, particularly in cases of limited lexical overlap. However, the reliance on static embeddings, which assign a single representation to each word regardless of context, limits the model’s ability to disambiguate polysemous terms or adapt to domain-specific language. Despite this constraint, the approach represents a substantial improvement over purely frequency-based methods by introducing richer lexical and syntactic information into topic models.

The authors of [18] introduce a topic modeling approach named WE-LDA, which extends LDA [1] by integrating word embeddings to enhance topic modeling in the domain of web service clustering. Experimental results on the ProgrammableWeb dataset (described in more detail in [18]) show that WE-LDA outperforms LDA and other baselines and provides more coherent and meaningful topic clusters. Nevertheless, the model has an important drawback: it relies on static embeddings, which cause ambiguity in topic assignments, especially when the same term has different meanings in different contexts.

Although static embedding-based approaches represent an improvement in semantic cohesion compared to bag-of-words models, their context-independence remains a major limitation.
Each word is mapped to a single vector regardless of usage, which prevents disambiguation of polysemous terms and adaptation to domain-specific contexts. Consequently, the resulting topics often appear semantically ambiguous and less robust when applied to heterogeneous corpora.

- Contextual Embedding-based Approaches: The advent of transformer-based models has been a major turning point. Contextual embeddings generated by BERT [5], SBERT [7], and RoBERTa [6] adapt word representations to their linguistic context, enabling more accurate and expressive semantic encoding. Inspired by these advances, recent models such as BERTopic [3] and Top2Vec [9] apply contextual embeddings to group semantically similar terms or documents, thus producing more cohesive and interpretable topics.

The authors of BERTopic [3] combine transformer-based contextual embeddings with class-based TF-IDF (c-TF-IDF) weighting to generate interpretable topic representations. The model captures rich contextual semantics through BERT-like encoders, and the c-TF-IDF mechanism prioritizes domain-relevant terms for enhanced topic distinctiveness. Because of its modular design, which facilitates interactive visualizations and dynamic topic reduction, it works especially well for exploratory data analysis. Even with these advantages, BERTopic exemplifies the wider compromises found in sophisticated topic modeling techniques. It improves interpretability and semantic granularity, but its clustering-based design also brings drawbacks. For instance, incorporating HDBSCAN [19] makes it possible to find semantically coherent document clusters, but at the risk of topic fragmentation due to over-clustering, especially with noisy and heterogeneous datasets. Furthermore, while context-aware embeddings offer greater semantic depth, they are tied to large pre-trained models, which leads to high computational costs and limited scalability in low-resource settings. Nevertheless, by combining contextual embeddings with density-based clustering, BERTopic achieves a reasonable compromise between adaptability and stability.
This design makes it possible to derive stable and interpretable topic structures across different application domains.

In contrast, the authors of Top2Vec [9] present a topic modeling technique that simultaneously embeds words and documents into a common semantic space. Without requiring a predetermined number of topics or intensive preprocessing such as stop-word removal, this approach allows the model to detect dense clusters of semantically linked content and infer topic vectors directly from the input. Top2Vec [9] produces meaningful and coherent topics by using the corpus’s natural semantic linkages, which improves the interpretability and applicability of the extracted themes. Nevertheless, Top2Vec [9] has a number of drawbacks. Its reliance on high-dimensional embeddings and dense vector operations may incur a high computational cost, which may not be suitable for large-scale or resource-limited scenarios. Moreover, the model does not have an explicit probabilistic interpretation, so it is challenging to derive the interpretable topic–word or document–topic distributions required by many analytical applications. Furthermore, the quality of the underlying embeddings and clustering methods has a direct impact on its performance, making it susceptible to instability and sensitive to parameter settings across datasets. Although Top2Vec [9] successfully improves semantic coherence and minimizes the need for manual intervention, its adoption in scaled, production-ready systems is hampered by these computational and interpretability issues.

The authors of [20] demonstrate that using contextualized document embeddings significantly enhances topic coherence: by producing semantically rich and context-aware representations, these embeddings capture complex links between concepts that conventional bag-of-words or co-occurrence-based models frequently miss.
The authors of [20] highlight the key role played by the latent semantic structures of pre-trained transformer models in improving the interpretability and robustness of topic modeling. They also show that incorporating advanced contextual encoders can significantly enhance the quality and semantic coherence of extracted topics, which remedies fundamental limitations of classic topic modeling methods.

The authors of [21] introduce ALBERT, a variant of the original BERT [5] model that aims to produce deep language models that are faster and consume less memory. It reduces the parameter count by sharing weights across layers and by decoupling the embedding size from the hidden-layer size, making it more efficient than a BERT model of similar size. ALBERT [21] also replaces BERT’s original sentence-pair prediction task with a new task called Sentence Order Prediction to learn sentence relationships more effectively. Because of this, ALBERT is more accurate and efficient on a wide range of natural language tasks. It still has drawbacks, however, such as slow inference and the need for careful tuning, as with BERT [5]. Additionally, because it shares parameters among layers, it may be less adaptable to particularly difficult tasks.

The authors of [22] present a simple three-step pipeline for semantic coherence-driven topic extraction from unlabeled text corpora. The approach uses BERT-based contextual embeddings to capture rich semantic representations and preserves structural links in a lower-dimensional space by applying UMAP [23] for dimensionality reduction. Clustering is then performed with k-means, and topic terms are obtained through TF-IDF scoring. The proposed method is simple and efficient, achieving performance equivalent to more complicated models at a lower computational cost.
It has strong topic coherence and diversity and is applicable to multilingual and resource-poor scenarios. However, like all embedding-based models, its performance depends on the quality of the pre-trained embeddings and may require fine-tuning for domain-specific uses.

The authors of [6] present several improvements over the original BERT architecture, such as removing the Next Sentence Prediction objective, using larger batch sizes and longer training durations, applying dynamic masking, and pre-training on much more data. These enhancements result in more powerful and contextually sensitive language representations, which achieve state-of-the-art performance on various NLP benchmarks. Nevertheless, the method has drawbacks as well: the enhanced performance is attained at the expense of significant processing power and extended training periods. For academics or practitioners with limited hardware, this restricts RoBERTa’s accessibility. Its dependence on massive amounts of data also raises questions about environmental impact, scalability, and the possible encoding of biases from the training corpus.

- Graph-based Approaches: Graph-based topic models (GBTMs) are a structured and semantically rich alternative to classical topic models. These models view documents as graphs, with words as nodes and their syntactic or semantic relationships as edges. This graph structure allows GBTMs to better encode both local and global semantic relationships between words, yielding more meaningful and interpretable topics.

The authors of [24] propose the Graph Topic Model (GTM), which enhances topic modeling via document relationships. It builds a graph where nodes are documents or words, and edges model word–document co-occurrence and document similarity. By applying Graph Convolutional Networks (GCNs), GTM propagates information across the graph, allowing it to learn more contextually aware and meaningful topics.

The authors of [25] propose an approach to improve the interpretability of Graph Neural Topic Models (GNTMs), which, despite their semantic power, tend to act as black boxes. They provide a local explanation framework that approximates the document-level topic predictions made by GNTMs using a simpler, interpretable surrogate model, Naïve Bayes. This method helps reveal the effect of individual words (or node attributes) on topic assignment, thereby offering local transparency and boosting trust in the model’s output. The approach is shown to provide a high-quality approximation of the original GNTM on multiple benchmark datasets. Nevertheless, the surrogate-based structure of the explanation imposes some restrictions. Despite its high fidelity in many situations, the Naïve Bayes model might not adequately capture the complexities of the deep semantic representations encoded in GNTMs, particularly when working with more abstract or hierarchical topic relationships.
Furthermore, the explanation’s local scope may not generalize across documents or themes, which would restrict its usefulness in offering global interpretability.

On the other hand, the authors of [26] propose the Pachinko Allocation Model (PAM), which aims to improve the interpretability of derived topics by taking into account inter-topic dependence through a hierarchical directed acyclic graph. Contrary to flat topic models like LDA [1], PAM [26] encodes intricate topic dependencies and co-occurrence patterns across multiple levels of abstraction. By capturing the hierarchical nature of topics directly, this model allows for a more structured and semantically informed interpretation of thematic content. The resulting topic hierarchies, however, may be difficult to understand in practice because the additional complexity can compromise clarity.

The authors of [27] introduce NET-LDA, a hybrid topic model that integrates semantic document similarity into the original probabilistic framework of LDA. By building semantic graphs from contextual similarity between documents, NET-LDA merges lexically distant but semantically similar texts, resolving the problem of vocabulary mismatch in classical LDA [1]. The interpretability and semantic coherence of the generated themes are enhanced by this semantic pre-clustering, particularly in noisy and domain-specific corpora. One significant benefit is its adaptive process, which improves the model’s flexibility and topic relevance by dynamically weighting each document group’s contribution. Additionally, the modeling process is more intuitive and user-friendly with NET-LDA since it does not require a set number of topics. Nevertheless, the method has its limitations, such as a high computational cost, since it needs both graph construction and clustering, and a possible sensitivity to the quality of the adopted semantic similarity measure.
More importantly, although it has proved to be effective in a specific controlled environment, its applicability to very diversified or multilingual corpora requires empirical confirmation.

Graph-based models effectively capture global and hierarchical semantic relations, yet their high computational demands and structural complexity limit their practical applicability.

- Neural-based Approaches: By using deep learning frameworks to identify intricate semantic patterns and contextual connections in textual data, neural topic modeling techniques constitute a substantial improvement. In contrast to standard models, they use latent neural architectures to enhance topic coherence, flexibility, and scalability across sparse and heterogeneous corpora.

The authors of [28] present a neural topic modeling approach that combines neural network architectures with probabilistic topic modeling. The resulting Neural Topic Model (NTM) reinterprets Latent Dirichlet Allocation (LDA) [1] as a neural network with two distinct hidden layers: one models word-level topic distributions using word embeddings, and the other models document-level topic mixtures. Such a model can overcome the limitations of unigram-based representations while remaining probabilistically interpretable. Additionally, the authors of [28] present a supervised extension (sNTM) that adds an extra output layer for document label prediction. This makes it possible to jointly optimize topic representation and supervised learning tasks such as regression and classification. Experiments on rating prediction, multi-class, and multi-label tasks demonstrate that NTM and sNTM perform better than conventional models like LDA [1], especially when it comes to producing themes that are coherent and rich in meaningful multi-word expressions. Notwithstanding its advantages, the model has certain drawbacks. These include a higher risk of overfitting in the supervised form, a dependence on pre-trained embeddings, and more complicated training than traditional topic modeling techniques.
Nevertheless, the proposed framework makes it possible to combine the interpretability of probabilistic topic models with the representational capability of neural models.

The authors of [29] propose a neural variational inference framework as a generalization of classical topic models such as LDA [1]. The model supports end-to-end training via neural networks and backpropagation, and it improves the scalability and flexibility of topic inference. It provides a nonparametric extension within a deep learning architecture by integrating a stick-breaking prior to support an unbounded number of topics. Despite achieving efficient learning on standard benchmarks and competitive topic coherence, the technique has significant drawbacks. These include diminished interpretability in comparison to classical approaches, since neural inference is a black box, as well as training instability and increased sensitivity to hyperparameter settings. Furthermore, the model’s dependence on intricate architectures and variational approximations may make it more difficult to evaluate and modify in real-world or resource-constrained contexts.

The authors of [30] provide an overview of the development, approaches, and applications of neural topic models (NTMs). The paper describes how NTMs go beyond classical co-occurrence-based or probabilistic graphical models by using deep learning models (such as variational autoencoders, graph neural networks, contextual embeddings, and reinforcement learning frameworks) to access richer, more flexible latent topic structures. The approaches surveyed in [30] are classified according to network architecture and application context, such as short texts, multilingual settings, and evolving corpora. Moreover, the survey reviews the ability of NTMs to deal with the challenges faced by topic modeling, such as semantic interpretability, robustness, and transferability.
However, according to the paper, challenges remain in scalability, interpretability, training stability, and computational resources. The survey in [30] therefore offers a useful starting point for future investigations that seek to optimize neural topic modeling systems for real-world, scalable, and interpretable applications.

Neural-based topic models leverage deep architectures to capture complex semantic patterns and enhance topic coherence, but their training instability, high computational cost, and reduced interpretability remain major obstacles to their widespread deployment.

- Criterion 1 (C1) Document-Level Semantic Representation: Assesses whether the model incorporates semantic information from the entire document (e.g., through contextual or document embeddings). Possible values: (i) Yes or (ii) No.

- Criterion 2 (C2) Word-Level Contextualization: Indicates whether word meanings vary with context (e.g., through models like BERT), allowing dynamic semantic interpretation. Possible values: (i) Yes or (ii) No.

- Criterion 3 (C3) Domain Context Awareness: Determines whether the model adapts to or integrates domain-specific knowledge, vocabulary, or embeddings. Possible values: (i) Yes or (ii) No.

- Criterion 4 (C4) Topic Granularity: Measures the model’s ability to identify not only broad themes but also sub-topics or hierarchical structures. Possible values: (i) Topics or (ii) Sub-Topics.

- Criterion 5 (C5) Intra-topic Coherence: Evaluates the semantic coherence of words within each topic, i.e., whether topic terms are meaningfully related. Possible values: (i) High, (ii) Medium or (iii) Low.

- Criterion 6 (C6) Topic Stability Across Corpus Variations: Indicates whether the extracted topics remain consistent when the corpus undergoes small changes (e.g., addition/removal of documents). Possible values: (i) Yes or (ii) No.

- Criterion 7 (C7) Term-Level Topic Stability: Checks whether the top terms within each topic are preserved across multiple runs or corpus versions. Possible values: (i) Yes or (ii) No.

- Criterion 8 (C8) Hyperparameter Optimization: Reflects the degree to which model tuning (e.g., for alpha, beta, or number of topics) is automated for performance improvement. Possible values: (i) No, (ii) Partial or (iii) Full.

- Criterion 9 (C9) Determinism: Indicates whether the model returns the same output under identical conditions (fixed parameters and corpus), highlighting reproducibility. Possible values: (i) Yes or (ii) No.

3. Methodology

- (A) Contextual Semantic Representation: Exploits the semantic richness provided by Large Language Models (LLMs) to generate deep, context-aware embeddings at both the document and word levels. This ensures that the model captures nuanced meanings and domain-specific variations that traditional models often overlook,

- (B) Coherent Probabilistic Topic Extraction: Builds on this semantic foundation by first applying density-based clustering to reveal natural groupings within the corpus. These clusters guide an optimized probabilistic inference step that extracts topics that are not only statistically valid but also semantically coherent and interpretable, and

- (C) Topics Stability and Robustness Analysis: Introduces a systematic evaluation mechanism to assess the robustness and reproducibility of the discovered topics in response to corpus modifications (e.g., updates, additions, or removals). This step is essential to ensure that the topics remain consistent and comprehensible over time.

3.1. Contextual Semantic Representation

- Text Preprocessing: This sub-module applies a sequence of normalization steps including tokenization, stop-word removal, lowercasing, and lemmatization that transform the texts into a more uniform representation. These steps lead to increased lexical consistency and reduced semantic ambiguity, which in turn creates a cleaner and more reliable foundation for generating contextually rich and coherent topic representations.

- Named Entity Extraction: A Named Entity Recognition (NER) model is used to recognize and preserve semantically meaningful terms such as domain-specific entities and compound expressions. This also helps retain important multi-word expressions (e.g., artificial intelligence, machine learning) that might otherwise be fragmented or lost during preprocessing. By treating them as single units, the model enhances thematic consistency and contributes to more interpretable and relevant topics.

- Document Representation via Mean-Pooled Contextual Embeddings: To represent documents at the semantic level, each text is first tokenized and encoded using a pre-trained BERT model, which generates contextualized embeddings for all tokens. These token-level representations are then aggregated into a single fixed-size vector by applying mean pooling, i.e., computing the average across all token embeddings in a document. The resulting document embedding constitutes a compact yet expressive semantic representation that captures both the dominant thematic content and the contextual nuances of the text, while attenuating the influence of noise and local variations. This representation not only provides robustness for long or heterogeneous documents but also serves as an effective prefiltering mechanism: when used as input to the clustering stage, it ensures that documents are grouped according to their semantic proximity. In turn, this facilitates the extraction of more coherent, interpretable, and stable topics in the subsequent modeling phase.
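The mean-pooling step described above can be illustrated with a minimal sketch. It assumes the token embeddings have already been produced by an encoder and are passed in as plain lists of floats. The `mean_pool` helper and the attention mask (used to skip padding tokens when documents are encoded in batches) are illustrative assumptions, not details given in the text.

```python
from typing import List, Sequence


def mean_pool(token_embeddings: Sequence[Sequence[float]],
              attention_mask: Sequence[int]) -> List[float]:
    """Average the embeddings of real (non-padding) tokens into one document vector."""
    if len(token_embeddings) != len(attention_mask):
        raise ValueError("one mask entry is required per token embedding")
    # Keep only tokens flagged as real content (mask == 1); padding is an assumption
    # that applies when documents are encoded in fixed-length batches.
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    if not kept:
        raise ValueError("document has no non-padding tokens")
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


# Toy 2-dimensional "token embeddings"; the last row is padding and is ignored.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
doc_vector = mean_pool(tokens, mask)
print(doc_vector)  # [2.0, 3.0]
```

Whatever the document length, the output has the same dimensionality as a single token embedding, which is what makes it usable as fixed-size input to the clustering stage.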

3.2. Coherent Probabilistic Topic Extraction

- Dimensionality Reduction of Embeddings: The contextual document embeddings generated by language models such as BERT are semantically rich, but they reside in high-dimensional spaces. This high dimensionality can hinder both clustering performance and semantic pattern detection due to the curse of dimensionality, where data points become increasingly sparse and nearly equidistant. To address this issue, we apply Uniform Manifold Approximation and Projection (UMAP) [23], a non-linear dimensionality reduction algorithm designed to preserve both local and global semantic relationships during the projection process. UMAP reduces the dimensionality of the embeddings to a more computationally efficient space (typically 5–15 dimensions), enabling better discrimination between semantically distinct regions and reducing noise or redundant variance. This step enhances computational efficiency and improves cluster separability, making subsequent clustering results more interpretable and semantically coherent. It serves as a crucial bridge between deep contextual representations and probabilistic topic inference.

- Semantic Document Clustering: Although document embeddings provide rich semantic information, their continuous and high-dimensional nature makes them incompatible with traditional probabilistic topic models such as LDA, which require discrete input representations. To overcome this limitation, we apply semantic clustering to group similar documents into thematically coherent subsets, thereby pre-structuring the corpus prior to probabilistic inference. Specifically, we employ HDBSCAN (Hierarchical Density-Based Spatial Clustering of Applications with Noise) [19], a density-based clustering algorithm capable of automatically inferring the number of clusters, handling varying shapes and densities, and filtering out noisy data points. In contrast to centroid-based approaches such as k-means, HDBSCAN [19] is particularly well-suited for textual corpora, which are often heterogeneous and noisy. To improve cluster separability, we integrate UMAP [23] for dimensionality reduction, as it preserves both local and global semantic relationships more effectively than alternatives such as PCA [16] or t-SNE [31]. The combination of UMAP [23] and HDBSCAN [19] enables the identification of semantically coherent clusters without requiring prior knowledge of the number of clusters, ensuring robustness to noise and enhancing the interpretability, cohesion, and accuracy of the topics extracted in subsequent modeling stages.

- Automatic Optimization of Parameters: To enhance the semantic coherence, semantic relevance, and reproducibility of the extracted topics, we integrate an automated hyperparameter tuning procedure into our extended LDA-based topic modeling module. This model, adapted to each document cluster, incorporates contextual cues from the embeddings and clustering stages, thus mitigating the limitations of classical co-occurrence-based models. Instead of relying on fixed or manually selected hyperparameters, our framework explores a predefined configuration space to identify the optimal set of values for each cluster, ensuring that topic extraction is tailored to the semantic granularity and distribution of each group. The optimization process includes the following parameters:

- $\alpha$: Controls the sparsity of the topic distribution per document. Smaller values encourage each document to focus on fewer topics (i.e., more specific themes), while larger values allow for broader topic mixtures. We sample $\alpha$ across its search range in increments of 0.1 to explore varying levels of topical concentration.

- $\beta$: Regulates the sparsity of the word distribution per topic. A smaller $\beta$ results in topics composed of a few highly distinctive terms, enhancing interpretability; a larger $\beta$ produces broader vocabularies. We similarly discretize $\beta$ across its search range in steps of 0.1.

- $K$: Defines the number of latent topics to extract per document cluster. This allows the model to adapt to clusters of varying sizes and thematic diversity, supporting both fine-grained segmentation and broader thematic overviews.
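The effect of the document–topic prior on sparsity can be checked numerically. The sketch below draws topic mixtures from a symmetric Dirichlet distribution using only the standard library and reports the average weight of the dominant topic. The helper names, the number of topics (5), the sample count, and the two prior values compared are illustrative assumptions, not settings taken from the framework.

```python
import random


def sample_dirichlet(alpha, k, rng):
    """Draw one sample from a symmetric Dirichlet(alpha) over k topics."""
    while True:
        # A Dirichlet sample is a vector of independent Gamma(alpha, 1) draws, normalized.
        draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
        total = sum(draws)
        if total > 0.0:  # guard against numerical underflow for very small alpha
            return [d / total for d in draws]


def mean_largest_share(alpha, k=5, n_samples=2000, seed=0):
    """Average weight of the dominant topic across sampled documents."""
    rng = random.Random(seed)
    total = sum(max(sample_dirichlet(alpha, k, rng)) for _ in range(n_samples))
    return total / n_samples


sparse = mean_largest_share(0.1)   # small prior: documents concentrate on few topics
broad = mean_largest_share(10.0)   # large prior: documents mix many topics
print(f"prior=0.1  -> dominant topic share ~ {sparse:.2f}")
print(f"prior=10.0 -> dominant topic share ~ {broad:.2f}")
```

With the small prior, the dominant topic takes most of the probability mass, whereas the large prior spreads mass more evenly. The same reasoning applies to the topic–word prior, with vocabulary terms in place of topics.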

The proposed framework integrates an automated, data-driven configuration tuning mechanism to optimize key hyperparameters such as $\alpha$, $\beta$, and the number of topics $K$. For each tested configuration, semantic coherence is quantitatively measured using a coherence metric that combines Normalized Pointwise Mutual Information (NPMI) with embedding-based cosine similarity. The configuration that achieves the highest semantic coherence score is automatically selected, ensuring that the resulting topics are both interpretable and contextually meaningful. The semantic coherence score for a given topic $t$ is computed from the following quantities:

- $t$: A topic extracted by the model.

- $P_t$: The set of all unordered word pairs within the top-$N$ most probable words in topic $t$, i.e., $P_t = \{(w_i, w_j) : w_i, w_j \in W_t,\ i < j\}$, where $W_t$ is the top-$N$ word list of topic $t$.

- $\mathrm{NPMI}(w_i, w_j)$: Normalized Pointwise Mutual Information between $w_i$ and $w_j$, estimated from a reference corpus using a fixed-size sliding context window.

- $\mathbf{e}_i, \mathbf{e}_j$: Contextual embedding vectors of $w_i$ and $w_j$, obtained from a transformer-based model (e.g., BERT).

- $\cos(\mathbf{e}_i, \mathbf{e}_j)$: Cosine similarity between the embeddings, serving as a proxy for semantic closeness.
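To make these quantities concrete, the sketch below computes NPMI from sliding-window co-occurrence counts, the cosine similarity between word vectors, and a per-topic score averaged over all unordered top-word pairs. The equal-weight mean of NPMI and cosine similarity is an assumption made for illustration. The exact way the framework combines the two terms is not recoverable from the text, and the function names and toy data are hypothetical.

```python
import math
from itertools import combinations


def sliding_windows(tokens, size):
    """Return the set of words seen in each fixed-size context window."""
    if len(tokens) <= size:
        return [set(tokens)]
    return [set(tokens[i:i + size]) for i in range(len(tokens) - size + 1)]


def npmi(w1, w2, windows):
    """Normalized PMI in [-1, 1], estimated from window-level co-occurrence."""
    n = len(windows)
    p1 = sum(w1 in w for w in windows) / n
    p2 = sum(w2 in w for w in windows) / n
    p12 = sum(w1 in w and w2 in w for w in windows) / n
    if p12 == 0.0:
        return -1.0  # the two words never co-occur
    if p12 == 1.0:
        return 1.0   # degenerate case: both words appear in every window
    return math.log(p12 / (p1 * p2)) / -math.log(p12)


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def topic_coherence(top_words, windows, embeddings):
    """Mean over all unordered top-word pairs of 0.5 * (NPMI + cosine).

    The equal weighting is an illustrative assumption, not the source's formula.
    """
    pairs = list(combinations(top_words, 2))
    if not pairs:
        raise ValueError("at least two top words are required")
    total = sum(
        0.5 * (npmi(a, b, windows) + cosine(embeddings[a], embeddings[b]))
        for a, b in pairs
    )
    return total / len(pairs)


# Toy reference corpus and toy 2-dimensional "embeddings" (both hypothetical).
reference = ["neural", "network", "neural", "network", "river", "bank"]
windows = sliding_windows(reference, size=2)
vectors = {"neural": [1.0, 0.0], "network": [1.0, 0.0], "river": [0.0, 1.0]}

related = topic_coherence(["neural", "network"], windows, vectors)
unrelated = topic_coherence(["neural", "river"], windows, vectors)
print(related > unrelated)  # True
```

A pair that co-occurs often and has similar vectors scores high on both terms, while a pair that never co-occurs and has orthogonal vectors is penalized by both.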

Traditional probabilistic topic models such as LDA are highly sensitive to manually set hyperparameters. In practice, exhaustive tuning is often avoided because of its substantial computational cost, and most users rely on a small number of hand-selected configurations that are often insufficient to capture the optimal semantic granularity. This limited coverage can significantly hinder topic coherence and interpretability. To overcome this limitation, the proposed framework embeds an end-to-end, coherence-based optimization pipeline that automatically searches the configuration space to maximize the semantic coherence score. The optimization process is structured into three phases, each targeting a specific aspect of parameter tuning:

- (i) Model initialization: The search covers topic numbers K in the range [2–100]. For each value of K, all combinations of the Dirichlet priors α and β on the search grid are tested. This dense sampling of the hyperparameter space yields an exhaustive, fine-grained optimization and allows the framework to identify, for each cluster, the configuration with the highest semantic coherence. It is a significant improvement over traditional manual tuning, which is often limited to a small, heuristic subset of configurations.

- (ii) Semantic coherence evaluation: Each candidate configuration from the hyperparameter grid is evaluated using the semantic coherence metric defined previously. This score provides a proxy for human interpretability by measuring the semantic consistency among top-ranked topic terms. Its systematic use ensures a fair and reliable assessment of topic quality across different model instances.

- (iii) Parameter selection: For each semantically coherent document cluster, the configuration with the highest score is automatically selected. This ensures that the resulting topic distributions are not only statistically well fitted but also thematically meaningful and contextually coherent.
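The three phases above amount to an exhaustive grid search with an argmax over coherence scores. A minimal sketch follows, assuming a user-supplied `score_fn(K, alpha, beta)` that trains a model for one cluster and returns its coherence. The helper names and the example grid bounds are hypothetical, since the exact prior interval is not stated here.

```python
from itertools import product


def frange(start, stop, step):
    """Inclusive float range, rounded to avoid accumulation error."""
    n = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 10) for i in range(n)]


def select_best_config(score_fn, k_values, alphas, betas):
    """Phases (i)-(iii): enumerate the grid, score each point, keep the best."""
    best_score, best_config = None, None
    for k, alpha, beta in product(k_values, alphas, betas):
        score = score_fn(k, alpha, beta)  # phase (ii): coherence evaluation
        if best_score is None or score > best_score:
            best_score = score
            best_config = {"K": k, "alpha": alpha, "beta": beta}
    return best_score, best_config  # phase (iii): parameter selection


if __name__ == "__main__":
    # Toy scoring function standing in for "train LDA, then measure coherence".
    def toy(k, a, b):
        return -((k - 3) ** 2) - (a - 0.31) ** 2 - (b - 0.01) ** 2

    grid = frange(0.01, 0.91, 0.1)  # assumed bounds, for illustration only
    print(select_best_config(toy, range(2, 6), grid, grid))
```

Because the search is exhaustive, its cost grows with the product of the three grid sizes, which is why the procedure is run per cluster rather than on the full corpus.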

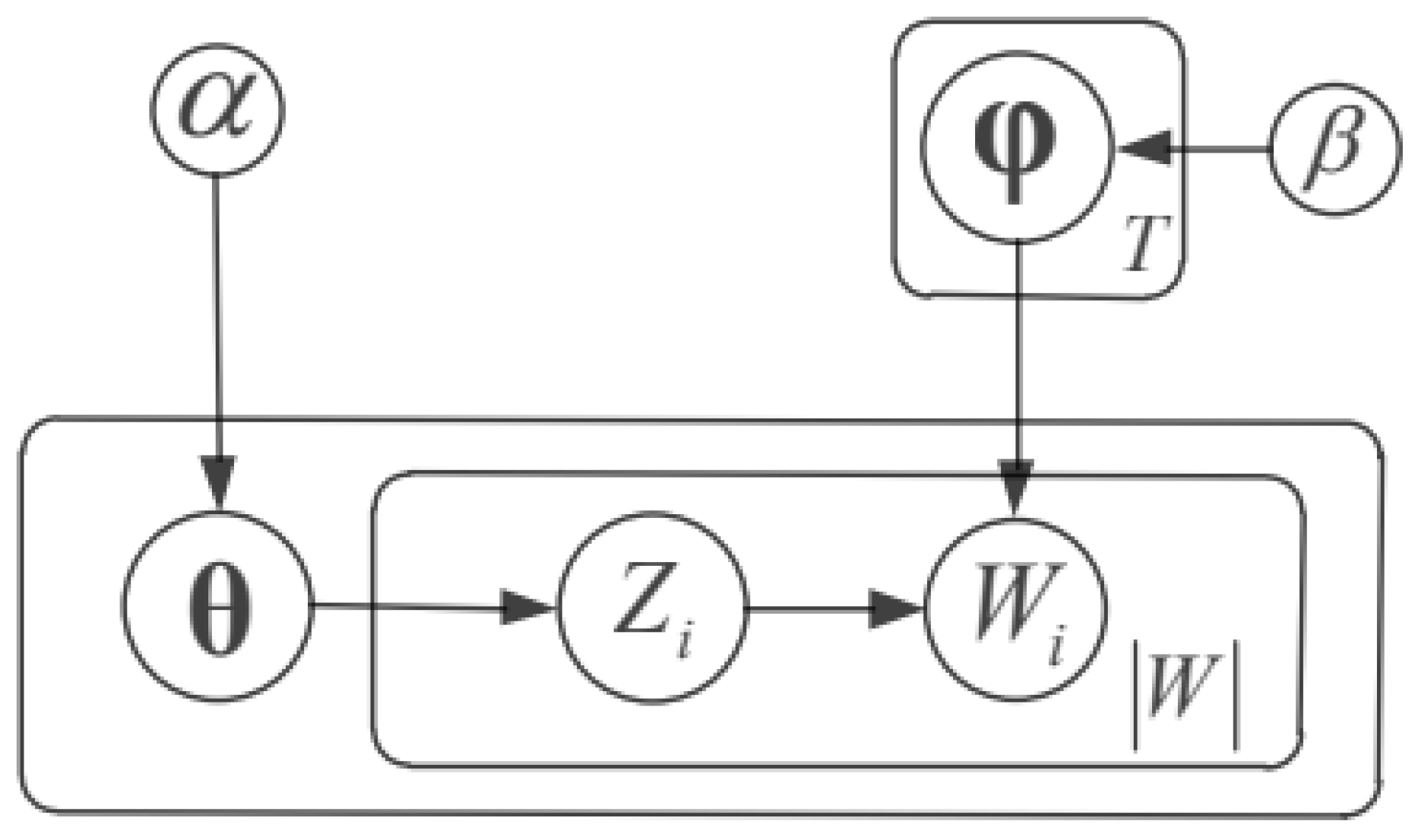

This built-in optimization procedure eliminates the need for manual parameter tuning, allowing the model to adapt dynamically to various corpus structures and domain-specific characteristics. In particular, the automatic coherence-driven hyperparameter search avoids manual trial-and-error, selects the best settings per cluster, and improves both topic quality and reproducibility, which in turn improves generalization and usability across a wide range of real-world applications.

- Topic Extraction: Once the best hyperparameter setting is found, SemaTopic extracts topics from each semantically coherent cluster with these parameters, enhancing readability and discrimination. These locally refined topics are then combined to generate a coherent, semantically rich global topic set.

Within each semantic cluster, once the optimal hyperparameters are identified, extended LDA is applied to infer the internal thematic distributions. If the inference yields a single coherent distribution, the cluster is considered a global topic. Conversely, if two or more semantically coherent distributions emerge, the cluster is modeled as a topic with sub-topics. Importantly, this decision is guided by the semantic coherence of the inferred distributions rather than by the cluster size. In this way, sub-topics arise only when the probabilistic model reveals sufficient internal separability, ensuring that granularity reflects semantic reality rather than arbitrary thresholds.

Figure 2 provides a schematic illustration of the sub-topic detection process in SemaTopic. Semantic clusters, obtained through HDBSCAN, are refined using extended LDA with optimized hyperparameters. A cluster may either remain a single global topic when only one coherent distribution is inferred, or be decomposed into multiple sub-topics when several coherent and distinct distributions are detected. The figure exemplifies the latter case, where Cluster 2 is divided into three semantically coherent sub-topics, highlighting how SemaTopic captures both macro- and micro-level thematic structures.

The full generative process of the model is detailed in Algorithm 1. Its probabilistic formulation and component interactions are illustrated in Figure 3.

SemaTopic extends classical topic modeling by introducing an automatic and context-aware framework that enhances both semantic coherence and topic distinctiveness. The method ensures interpretability and robustness by first segmenting the document space into semantic clusters, and then applying probabilistic topic modeling within each cluster. This modular architecture enables the generation of richer and more coherent topic structures, while addressing several key limitations of traditional probabilistic models, particularly their limited adaptability to diverse corpora and their reliance on manual hyperparameter tuning.

Algorithm 1 Generative Process of SemaTopic.

Require: Corpus D, pre-trained BERT model, semantic coherence metric

- 1: Generate contextual embeddings for each document using BERT
- 2: Apply UMAP to reduce dimensionality of embeddings
- 3: Cluster documents using HDBSCAN to obtain semantic clusters
- 4: for each cluster c do
    - 5: for each combination of K, α (step 0.1), and β do
        - 6: for each topic k = 1 to K do
            - 7: Draw topic–word distribution φ_k ~ Dirichlet(β)
        - 8: end for
        - 9: for each document d in cluster c do
            - 10: Draw topic proportions θ_d ~ Dirichlet(α)
            - 11: for each word in d do
                - 12: Sample topic assignment z ~ Multinomial(θ_d)
                - 13: Sample word w ~ Multinomial(φ_z)
            - 14: end for
        - 15: end for
        - 16: Evaluate semantic coherence score for current configuration
    - 17: end for
    - 18: Select (K, α, β) maximizing the semantic coherence score
    - 19: Retain topic distributions from optimal configuration
- 20: end for
- 21: Merge topics from all clusters to form the final global topic set
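The inner loops of Algorithm 1 (steps 6 to 15) follow the standard LDA generative story. The sketch below illustrates that sampling process for one cluster using only the Python standard library. The function names and the toy vocabulary are hypothetical, and symmetric Dirichlet priors are assumed.

```python
import random


def sample_dirichlet(concentration, size, rng):
    """Draw from a symmetric Dirichlet by normalizing Gamma samples."""
    draws = [rng.gammavariate(concentration, 1.0) for _ in range(size)]
    total = sum(draws)
    if total == 0.0:  # guard against underflow for very small priors
        return [1.0 / size] * size
    return [d / total for d in draws]


def generate_cluster(num_docs, doc_len, vocab, K, alpha, beta, seed=0):
    """Sample topic-word distributions, topic proportions, and words."""
    rng = random.Random(seed)
    # Steps 6-8: one topic-word distribution per topic.
    phi = [sample_dirichlet(beta, len(vocab), rng) for _ in range(K)]
    thetas, docs = [], []
    for _ in range(num_docs):
        # Step 10: per-document topic proportions.
        theta = sample_dirichlet(alpha, K, rng)
        words = []
        for _ in range(doc_len):
            # Step 12: topic assignment, then step 13: word draw.
            z = rng.choices(range(K), weights=theta)[0]
            words.append(rng.choices(vocab, weights=phi[z])[0])
        thetas.append(theta)
        docs.append(words)
    return phi, thetas, docs


if __name__ == "__main__":
    vocab = ["topic", "model", "cluster", "galaxy", "market"]
    phi, thetas, docs = generate_cluster(3, 8, vocab, K=2, alpha=0.5, beta=0.5)
    print(docs[0])
```

This only simulates the generative direction. Fitting the model to real documents requires posterior inference (for example variational Bayes or Gibbs sampling), which a library such as Gensim provides.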

Table 2 defines the main variables used in the model. It includes document-level parameters such as topic proportions (θ), word-level assignments (z), and BERT embeddings (e), as well as cluster-level components and topic–word distributions (φ). These variables describe the generative process and structure of the model.

Table 3 presents the core variables involved in the generative process of the standard Latent Dirichlet Allocation (LDA) model. The model assigns a topic distribution θ to each document, governed by the Dirichlet prior α. For each word position i, a latent topic is sampled from θ, and the actual word is drawn from the word distribution φ of the corresponding topic, which is itself drawn from another Dirichlet prior β. These probabilistic components collectively describe how documents in a corpus are generated from latent topic structures.

The plate notation of SemaTopic shown in Figure 3 is a methodological refinement of traditional probabilistic topic models that adds semantic understanding and structural granularity. Whereas traditional LDA (plate notation in Figure 4) processes the corpus as a uniform container of documents, SemaTopic first uses contextual embeddings to divide the corpus into coherent thematic clusters. In a second step, topic inference is performed in each cluster to further strengthen thematic distinctiveness and semantic coherence.

Incorporating embeddings from Large Language Models into our framework allows us to extract more coherent and semantically meaningful topic representations by capturing the context-dependent subtleties of word usage. At the same time, automatic hyperparameter tuning ensures that each semantic group is modeled under its best settings, without ad hoc tuning by the user, thus enhancing the overall quality of the extracted topics. This structured, context-aware model improves the interpretability and semantic coherence of topics while retaining the probabilistic grounding of traditional models such as LDA.

3.3. Topics Stability and Robustness Analysis

- Corpus Modification: To simulate realistic variations in input data, a perturbed version of the original corpus D is created. Two types of modifications are applied:

- Addition: New documents are added to corpus D.

- Removal: A random subset of documents is removed to simulate data loss, sampling bias, or incomplete data.

These modifications test whether the model can maintain a consistent topic structure despite changes in data distribution.

- Re-Extraction of Topics: The model is then reapplied to the modified corpus, using the same semantic clustering structure and hyperparameter settings that were applied to the original corpus D. This ensures that changes in the resulting topic set are solely due to corpus perturbation, not to differences in model configuration. The new topics are then compared to the original topic set T.

- Topic Similarity Measurement: To evaluate the degree of alignment between the original and perturbed topic sets, three complementary metrics are employed:

- Jaccard Similarity: Computes the word overlap between the top-n terms of matched topics in T and in the perturbed topic set, indicating lexical consistency.

- Cosine Similarity: Measures the similarity between full topic–word probability distributions (treated as vectors), capturing global distributional alignment.

- Topic Match Ratio: Represents the proportion of topics in the original set T that have at least one matching topic in the perturbed topic set with a similarity score above a predefined threshold.

- Validation of Topic Consistency: Topic stability under corpus perturbations is assessed from the computed similarity scores. Consistently high similarity across the evaluation metrics suggests that the model captures coherent topic structures and is robust and reliable. Conversely, significant divergence between runs may indicate sensitivity to data variance and point to the parts of the pipeline that need additional calibration or improvement.

- Jaccard similarity, based on the overlap of top-n topic words;

- Cosine similarity, computed over the full topic–word distributions treated as high-dimensional vectors;

- Average Similarity Score, for both Jaccard and Cosine, capturing the global alignment between topic structures;

- Topic Match Ratio (TMR) R, measuring the proportion of original topics in T that have at least one closely matching counterpart in the perturbed topic set, based on a predefined similarity threshold.
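The similarity measures listed above are straightforward to compute once topics are available as top-n word lists or as probability vectors over a shared vocabulary. A minimal sketch follows. The function names are illustrative, and the use of `>=` for the threshold comparison is an assumption.

```python
import math


def jaccard(words_a, words_b):
    """Jaccard overlap between two top-n word lists."""
    a, b = set(words_a), set(words_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cosine(u, v):
    """Cosine similarity between two topic-word probability vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def topic_match_ratio(original, perturbed, sim_fn, threshold):
    """Share of original topics with at least one match at or above threshold."""
    if not original:
        return 0.0
    matched = sum(
        1 for t in original
        if any(sim_fn(t, p) >= threshold for p in perturbed)
    )
    return matched / len(original)


if __name__ == "__main__":
    T = [["a", "b", "c"], ["x", "y", "z"]]
    T_perturbed = [["a", "b", "d"], ["p", "q", "r"]]
    print(topic_match_ratio(T, T_perturbed, jaccard, threshold=0.5))
```

Passing `cosine` instead of `jaccard` as `sim_fn` gives the distribution-level variant, provided both topic sets are expressed over the same vocabulary ordering.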

Algorithm 2 Robustness Evaluation of SemaTopic.

Require: Original corpus D, modified corpus, trained model, similarity threshold

4. Experiments

4.1. Environment

- Text Preprocessing: Conducted using NLTK (https://www.nltk.org/) for tokenization, lemmatization, subword normalization, and domain-adapted stopword filtering.

- Contextual Embeddings: Generated via the SentenceTransformers (https://www.sbert.net/) library, using pre-trained BERT-based models fine-tuned for sentence-level semantic similarity.

- Named Entity Recognition (NER): Applied to preserve domain-specific entities and compound concepts, thereby enhancing thematic semantic coherence.

- Dimensionality Reduction: Two techniques were employed:

- Semantic clustering: Performed using HDBSCAN [19] due to its capacity to detect arbitrary-shaped clusters and filter out noise without requiring the number of clusters in advance.

- Topic modeling and semantic coherence scoring: Managed through the Gensim (https://pypi.org/project/gensim/) library (19 July 2024), which enables probabilistic modeling via LDA and supports evaluation with the semantic coherence metric.

4.2. Execution Time by Stage

4.3. Experimental Protocol

4.3.1. Objective 1: Topic Quality and Semantic Expressiveness Evaluation

- Dataset: A domain-specific corpus of 965 scientific papers was collected from Google Scholar. The dataset spans diverse academic disciplines and is divided as follows: 169 articles in Economics, 155 in Astronomy, 130 in Management, 170 in Nutrition, 130 in Music, and 211 in Computer Science. We aimed for a thematically balanced corpus in order to provide a robust foundation for evaluating the semantic quality of the topics extracted by our model across heterogeneous knowledge domains (the dataset is available at: https://drive.google.com/drive/folders/1rVBhcPeYYSQ_bT9HMwkWRY2T1oNsTrzN?usp=drive_link, accessed on 11 July 2025).

- Evaluation metrics:

- Topic coherence: To obtain higher-precision domain semantics, the system relies on cluster-wise topic modeling. After learning document embeddings and applying unsupervised clustering with HDBSCAN, the corpus was partitioned into four clusters that correspond to semantically coherent sub-domains of the scientific literature. This segmentation enables local topic modeling, in which our model (SemaTopic) is trained on each cluster to better accommodate the granularity of its internal topics. The best topic model for each cluster is determined by an exhaustive grid search over a three-dimensional hyperparameter space comprising:

- Number of topics (K)

- Document–topic prior (α)

- Topic–word prior (β)

- Graph-based visualization: Graph-based visualization is an efficient method to qualitatively evaluate the semantic structure and separability of derived topics. It gives insights on how topic terms are structured and related within and across clusters.

4.3.2. Objective 2: Robustness and Stability Assessment

- Dataset: To evaluate the robustness of our approach, we used a domain-specific corpus composed of 946 scientific articles. A controlled perturbation was performed by randomly excluding 33 documents from the original corpus (this corpus is publicly available at https://drive.google.com/drive/folders/1F3vebIkREgKA2Bh9ScsCnRkIuR2t6lD-?usp=drive_link, accessed on 11 July 2025).

- Evaluation metrics:

- Rank-Biased Overlap (RBO): This metric was used for measuring top-term lexical similarity between model runs.

- Word-probability weight differences: This metric was used to quantify the difference of probability distribution over words in runs.

- Jaccard similarity index: This is computed on the top-n words of original and perturbed topics.

- Cosine similarity matrix: This is calculated between the topic vectors of perturbed and original corpora.

- Topic Matching Rate (TMR): This measures how well topics in the original corpus aligned with those from the perturbed corpus.
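Among the metrics above, Rank-Biased Overlap is the least obvious to compute. The sketch below implements a truncated form of RBO, evaluated only down to the length of the shorter list and without the extrapolation term. The persistence parameter `p` and the function name are illustrative, and this may differ from the exact variant used in the experiments.

```python
def rbo_truncated(ranking_a, ranking_b, p=0.9):
    """Truncated Rank-Biased Overlap between two ranked term lists.

    At each depth d, the agreement is the size of the intersection of the
    two prefixes divided by d. Agreements are weighted by p**(d - 1), so
    top-ranked terms count more than lower-ranked ones.
    """
    depth = min(len(ranking_a), len(ranking_b))
    seen_a, seen_b = set(), set()
    weighted_sum = 0.0
    for d in range(1, depth + 1):
        seen_a.add(ranking_a[d - 1])
        seen_b.add(ranking_b[d - 1])
        agreement = len(seen_a & seen_b) / d
        weighted_sum += (p ** (d - 1)) * agreement
    return (1.0 - p) * weighted_sum


if __name__ == "__main__":
    run_1 = ["topic", "model", "semantic", "cluster"]
    run_2 = ["topic", "semantic", "model", "corpus"]
    print(rbo_truncated(run_1, run_2, p=0.9))
```

Because the sum is truncated, identical lists of length k score 1 - p**k rather than exactly 1, so scores from lists of different lengths should not be compared directly.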

4.3.3. Objective 3: Comparative Analysis with State-of-the-Art Methods

- Qualitative Evaluation: Graph-based analysis was used to qualitatively analyze the structural and semantic quality of the generated topic models. This method was performed through the visualization and interpretation of topic-term bipartite networks, allowing one to analyze key features like modularity, the density of graphs, term redundancy, and cluster distance.

- Quantitative Evaluation: This module was based on the topic coherence as the main evaluation mechanism. Semantic coherence scores are numerical estimates of the resemblance between terms ranked highly in each topic. Higher scores imply better semantic alignment and, therefore, better topic quality.

4.3.4. Objective 4: Evaluation of Scalability and Multilingual Robustness

- Dataset: The evaluation was conducted on an extended corpus derived from the CEUR-WS (https://ceur-ws.org/) proceedings, comprising approximately 1700 documents from multiple workshops and academic domains. To ensure reliable semantic modeling, only documents with a textual coverage exceeding 50% of their content were retained. In addition to English texts, the dataset includes a substantial number of non-English terms (e.g., Russian), thereby providing a natural benchmark for testing multilingual adaptability. This setup enables a joint evaluation of scalability and multilingual robustness.

- Evaluation metrics:

- Scalability assessment: Execution time and memory usage were measured across the main pipeline stages (embedding generation, dimensionality reduction, clustering, and topic inference). This analysis allowed us to quantify computational efficiency and identify trade-offs associated with processing larger and more heterogeneous corpora.

- Multilingual robustness: Topic coherence scores were computed and complemented with qualitative inspections of graph-based topic visualizations. This dual perspective was employed to assess whether semantically coherent topics could still be identified despite the presence of non-English terms. The persistence of meaningful clusters across languages was considered a key indicator of multilingual robustness.

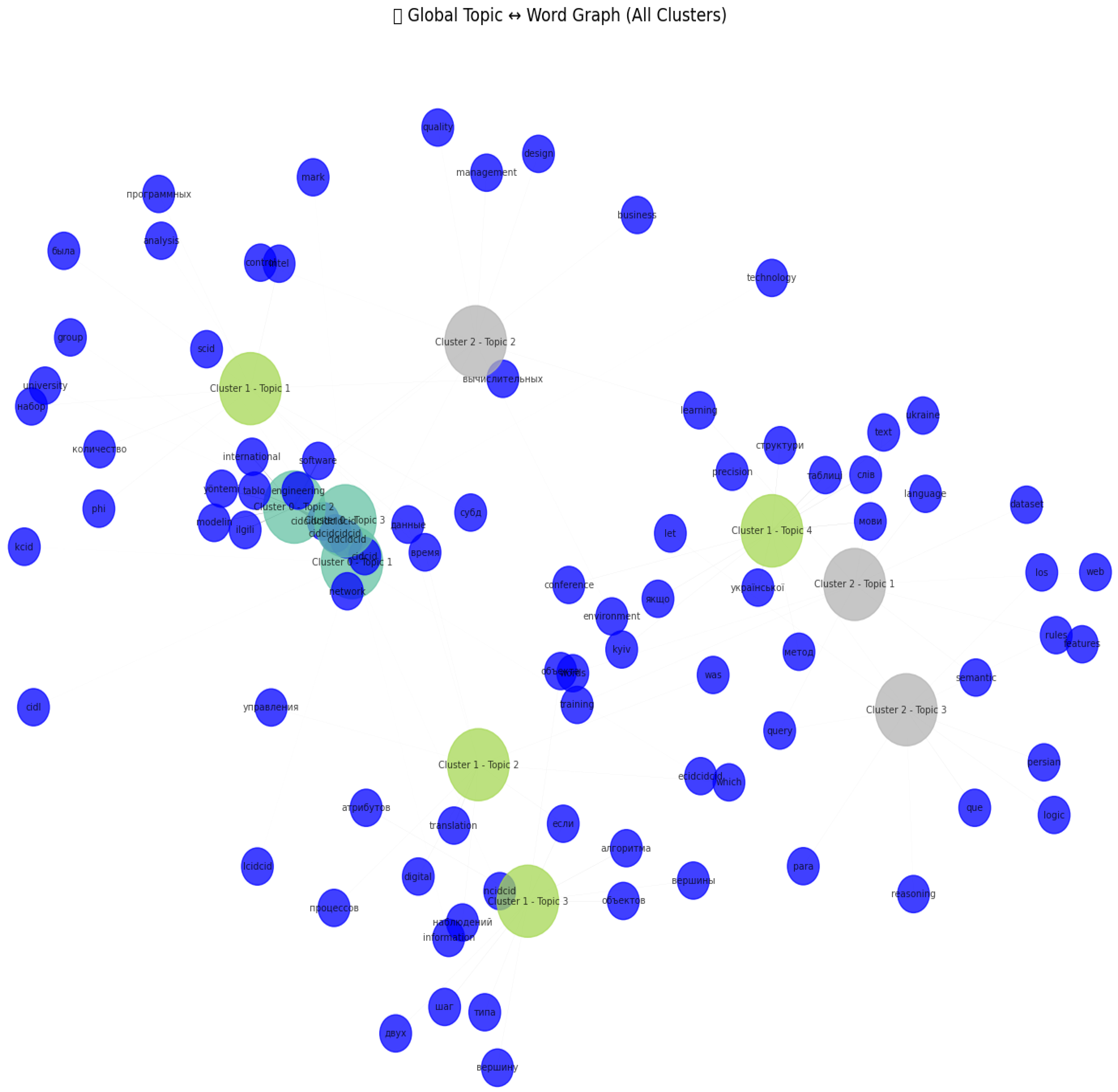

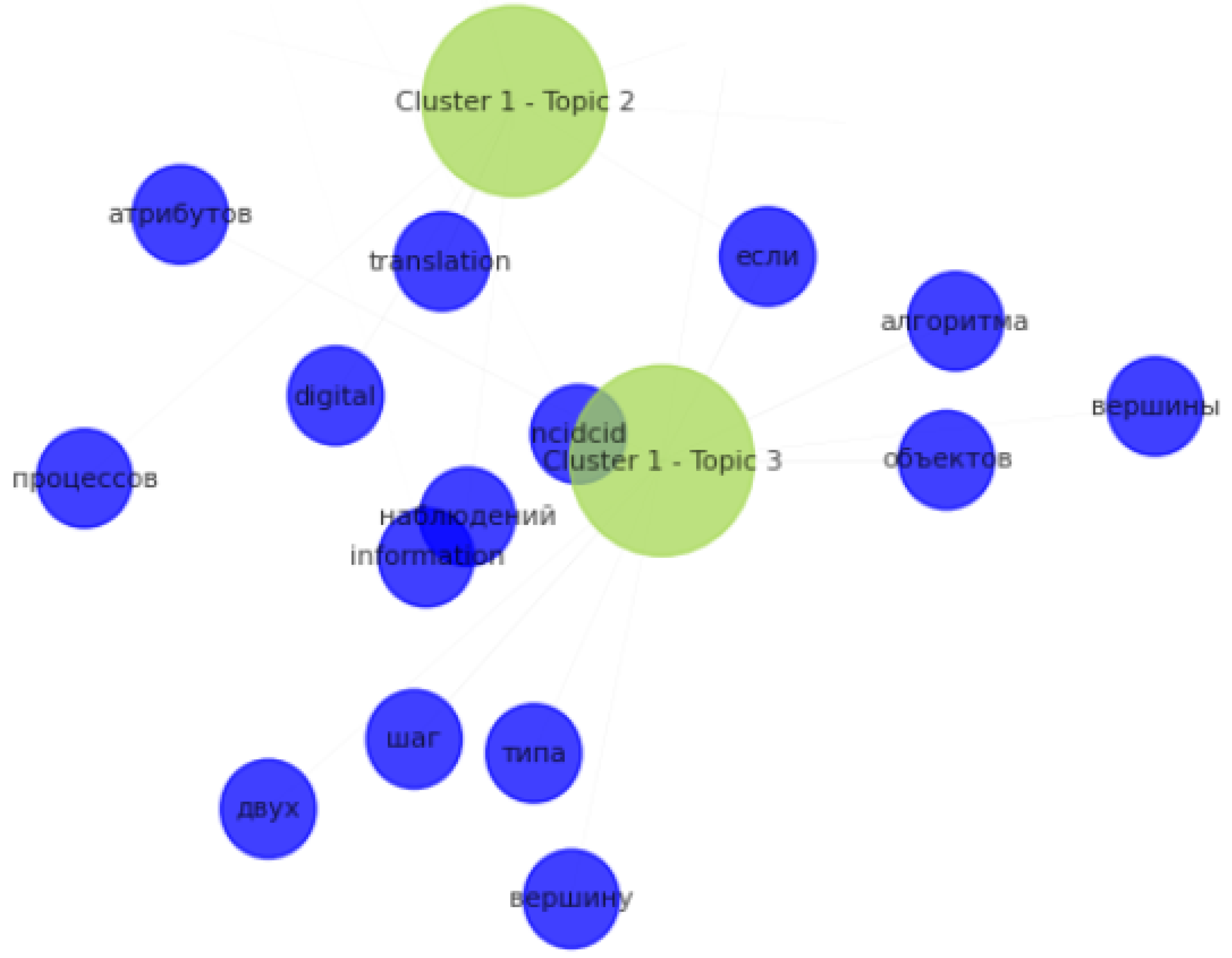

- Graph-based visualization: A global topic–word graph was generated to illustrate the semantic integration of multilingual terms within clusters. These visualizations provide additional qualitative evidence of the framework’s ability to capture cross-lingual relations while preserving internal topic coherence.
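The scalability assessment described above measures execution time and memory per pipeline stage. A minimal sketch of such instrumentation is given below, using only the standard library. The helper name `profile_stage` is hypothetical, and `tracemalloc` only tracks memory allocated through Python's allocator, so native allocations inside embedding or clustering libraries may be under-reported.

```python
import time
import tracemalloc


def profile_stage(name, fn, *args, **kwargs):
    """Run one pipeline stage and report wall-clock time and peak memory."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = fn(*args, **kwargs)
        elapsed = time.perf_counter() - start
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    stats = {"stage": name, "seconds": elapsed, "peak_bytes": peak_bytes}
    return result, stats


if __name__ == "__main__":
    # Toy stage standing in for embedding generation, reduction, and so on.
    def toy_stage(n):
        return [i * i for i in range(n)]

    _, stats = profile_stage("toy_stage", toy_stage, 100_000)
    print(stats)
```

Wrapping each of the four stages (embedding generation, dimensionality reduction, clustering, topic inference) in this helper yields a per-stage table comparable across corpus sizes.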

4.4. Evaluation Results

4.4.1. Objective 1: Topic Quality and Semantic Expressiveness Evaluation

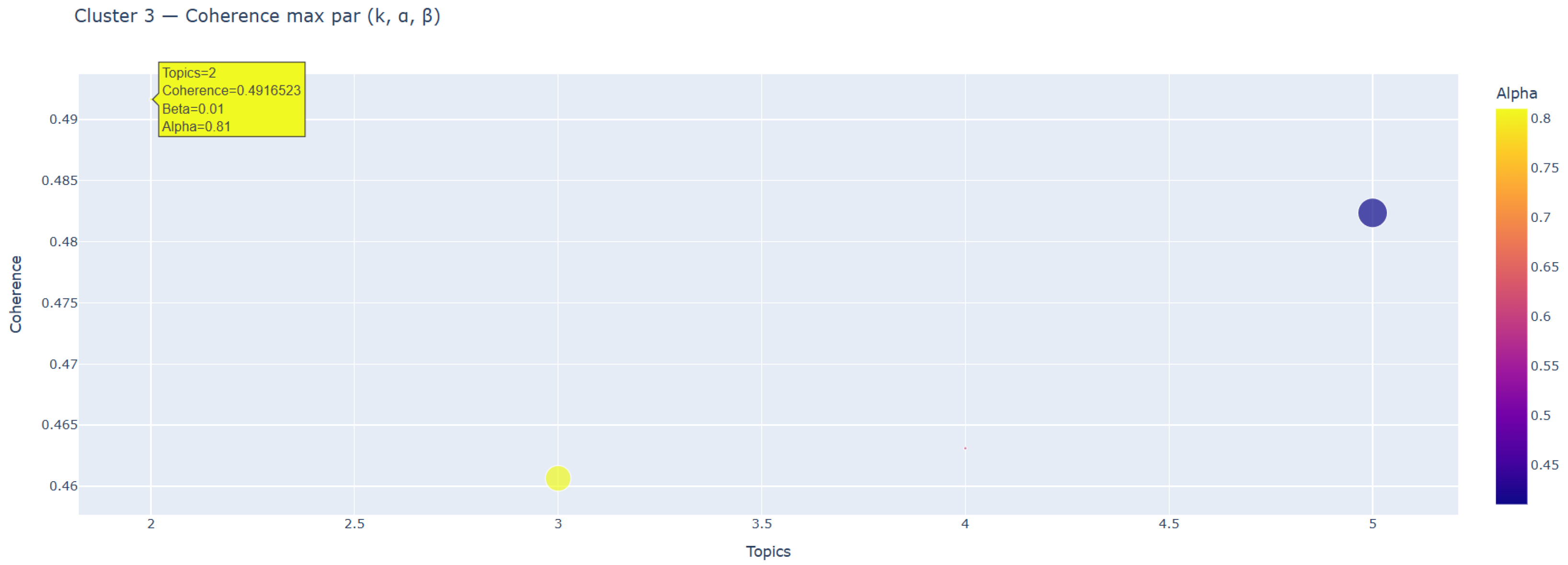

- Topic coherence: For each cluster, 100 configurations of the topic model were evaluated by varying α and β in steps of 0.1. The semantic coherence score was used to assess each candidate. It measures the semantic regularity of the top terms through word co-occurrence statistics and contextual similarity, and it drives the automatic search for the most interpretable topic structure in each cluster.

As shown in Table 5, selecting configurations by semantic coherence yields high semantic consistency and also improves the interpretability and robustness of the model. In all four semantic clusters, a two-topic solution was clearly preferred, indicating compact and thematically concentrated groups of documents. Furthermore, the differences between the optimal α and β values across clusters demonstrate the adaptive property of our proposed approach (SemaTopic). The model does not adopt a uniform set of parameters for the entire corpus but adjusts the configuration to reflect the local distributional properties of each cluster. This adaptive tuning leads to more precise and interpretable topic extraction and reinforces the effectiveness of the cluster-wise decomposition strategy of the framework.

Table 5. Optimized hyperparameters for each semantic cluster.

| Cluster | Number of Topics (K) | Document–Topic Prior (α) | Topic–Word Prior (β) |
|---|---|---|---|
| Cluster 0 | 2 | 0.81 | 0.01 |
| Cluster 1 | 2 | 0.92 | 0.21 |
| Cluster 2 | 2 | 0.87 | 0.41 |
| Cluster 3 | 2 | 0.91 | 0.01 |

Table 6 summarizes the semantic clusters used in the topic modeling process in terms of the number of documents and the total number of words assigned to each cluster. These are the clusters for which the best hyperparameters were determined (Table 5). Cluster sizes differ, with Cluster 3 being the largest (128 documents) and Cluster 1 the smallest (31 documents). Nevertheless, the word counts of the three larger clusters are quite similar (6347 to 6820 words), so their lexical representation is broadly balanced.

Table 6. Corpus statistics per semantic cluster.

| Cluster ID | Number of Documents | Words Count |
|---|---|---|
| Cluster 0 | 89 | 6795 |
| Cluster 1 | 31 | 2011 |
| Cluster 2 | 97 | 6820 |
| Cluster 3 | 128 | 6347 |

This adaptive modeling approach overcomes the drawback of uniform topic modeling by adjusting the topic granularity to the semantic alignment of each cluster. As a result, in addition to building more coherent local topics, SemaTopic can improve the overall robustness of the topic modeling pipeline, demonstrating the adaptability of the framework to domain-specific semantics.

- Overfitting Analysis: In the optimal case, each cluster forms a clearly separated and compact group in the reduced space, without overlap, indicating meaningful topic boundaries.

Figure 5 and Figure 6 show 2D visualizations of the topic assignments using UMAP [23] and t-SNE [31], respectively, which provide complementary views of the structure and semantic coherence of the topics in our proposed approach. Each point is a document, and similar documents lie closer to each other in the space. Both projections show clear, well-separated topic clusters with little sign of overlap.

This spatial separation is a strong indication that the topics are semantically coherent and non-redundant. In addition, the agreement between UMAP [23] and t-SNE [31] supports the robustness of our approach and its ability to generalize, i.e., the model learns useful patterns rather than overfitting to noise or superficial correlations.

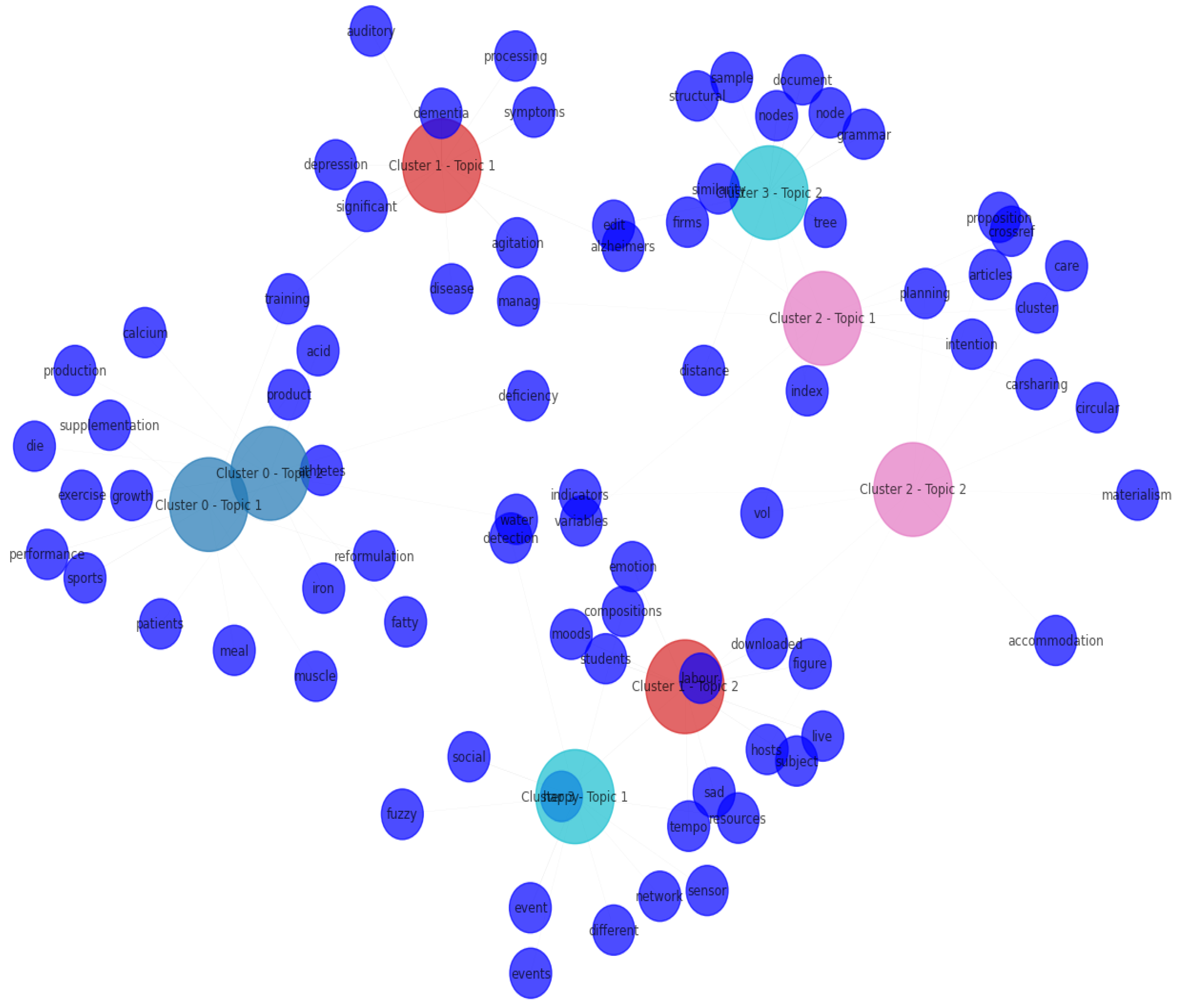

- Graph-based visualization: Figure 7 shows a topic-term bipartite graph that reflects the topic structure learned by the model on a semantically clustered scientific dataset. In this visualization:

- Large colored nodes represent topics, where each color corresponds to a different semantic cluster. The graph comprises four clusters (Cluster 0 to Cluster 3), each containing two topics, for a total of eight topic nodes.

- Smaller blue nodes correspond to the most significant terms (i.e., top-ranked words) related to each topic, as determined from their inferred topic–word distributions.

- Edges connect each topic node with its top-ranked words to give a sense of the content and uniqueness of the topic.

Figure 7. Bipartite graph representation of topics and terms across semantic clusters.

The visualization reveals several clear patterns. First, the term sets of the various topics are clearly separated, with little overlap, which indicates high topic exclusivity and strong semantic coherence. Second, intra-cluster topic pairs are more concentrated, while inter-cluster topics are spread apart in the semantic space. This supports the rationale for clustering-based decomposition before topic modeling. The separation confirms that SemaTopic not only focuses on fine-grained semantic topics inside a cluster but also maintains diversity between clusters. The graph therefore provides qualitative evidence of both topic separability and semantic clustering, supplementing the overall interpretability and semantic coherence of the resulting topic structure.

Topic-term associations in the global view (Figure 7) may look compressed because the nodes are densely layered. Figure 8 therefore provides an enlarged view of the graph around Cluster 1, Topic 1 and Cluster 3, Topic 2. This local perspective makes word boundaries more clearly visible and improves the readability of terms, which is important for a qualitative analysis of topic content.

Figure 8. Zoomed bipartite visualization highlighting topic-term structures in Cluster 1, Topic 1 and Cluster 3, Topic 2.

In Figure 8, words like dementia, symptoms, and agitation are highly associated with Cluster 1, Topic 1, indicating a cohesive theme concerning neurodegenerative disorders. In contrast, Cluster 3, Topic 2 is characterized by words like grammar, nodes, and document, which reflect a semantic focus on structural or computational linguistics. This localized visualization provides a qualitative indication of how well the model produces semantically coherent and topically distinct themes, and further demonstrates the interpretability and discriminative capability of the framework across diverse semantic contexts.

Importantly, the dataset (https://drive.google.com/drive/folders/1rVBhcPeYYSQ_bT9HMwkWRY2T1oNsTrzN?usp=drive_link, accessed on 11 July 2025) does not explicitly contain documents labeled as relating to neurodegenerative diseases or computational linguistics. Nonetheless, the discovery of thematically coherent topics, corroborated by their associated terms, shows the ability of the model to learn latent semantic structures. SemaTopic can thus uncover sensible and interpretable topics that do not depend on predefined domain annotations, which supports its suitability for unsupervised exploration of complex and heterogeneous corpora.
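The topic-term bipartite structure discussed above can be derived directly from topic–word distributions. The following sketch builds the edge list and reports terms shared by several topics, a simple indicator of the redundancy that the figures assess visually. The function names and the toy distributions are hypothetical and are not taken from the experiments.

```python
from collections import defaultdict


def build_bipartite_edges(topic_word_probs, top_n=5):
    """Connect each topic node to its top_n most probable terms."""
    edges = []
    for topic, probs in topic_word_probs.items():
        ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
        edges.extend((topic, word) for word, _ in ranked[:top_n])
    return edges


def shared_terms(edges):
    """Return terms linked to more than one topic (redundant terms)."""
    term_topics = defaultdict(set)
    for topic, term in edges:
        term_topics[term].add(topic)
    return {t: topics for t, topics in term_topics.items() if len(topics) > 1}


if __name__ == "__main__":
    toy_topics = {
        "cluster0_topic0": {"alpha": 0.5, "bravo": 0.3, "charlie": 0.2},
        "cluster1_topic0": {"charlie": 0.6, "delta": 0.3, "alpha": 0.1},
    }
    edges = build_bipartite_edges(toy_topics, top_n=2)
    print(edges)
    print(shared_terms(edges))
```

An empty result from `shared_terms` corresponds to fully exclusive topics, whereas many shared terms would appear as the dense, overlapping graphs described for less well-separated models.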

4.4.2. Objective 2: Robustness and Stability Assessment

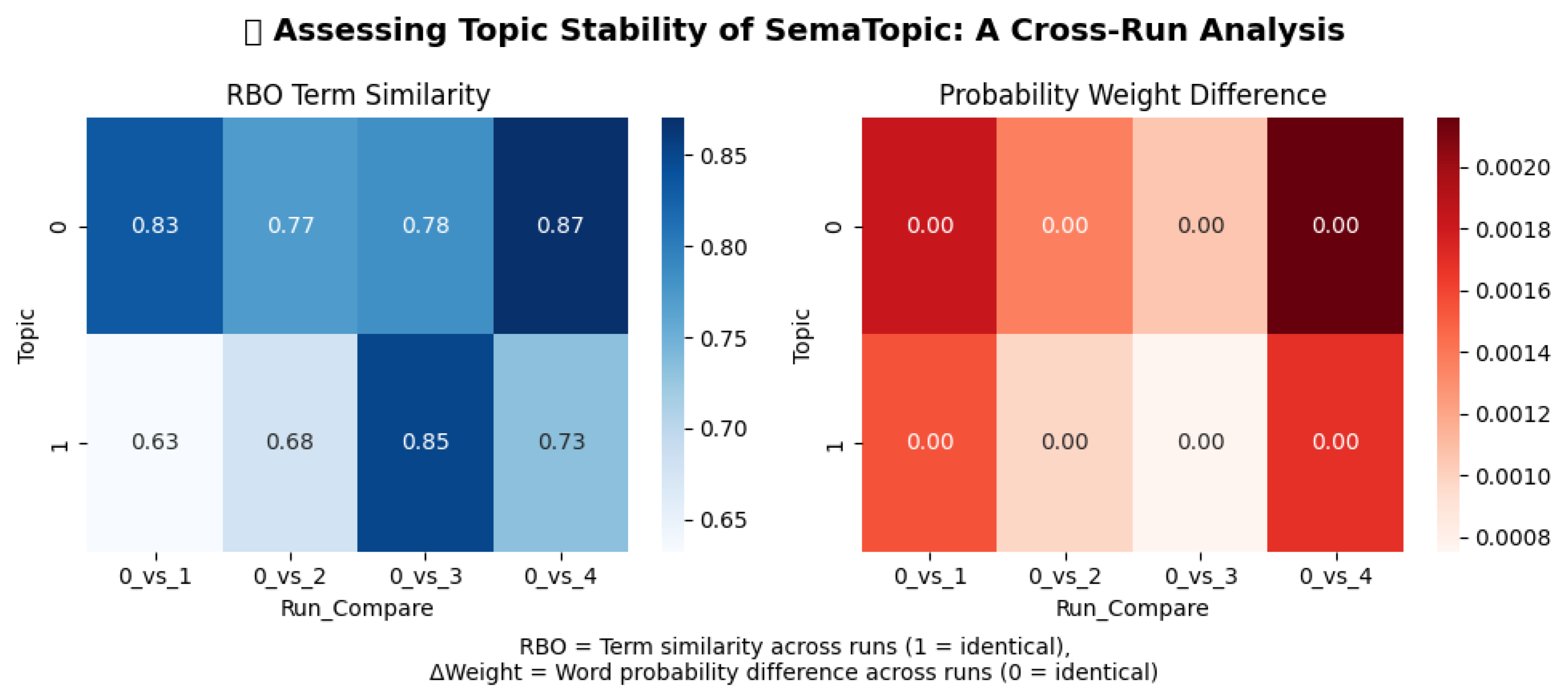

- Stability evaluation: Figure 9 presents a detailed analysis of the stability of SemaTopic across runs using two measures: Rank-Biased Overlap (RBO) for top-term similarity, and the difference in word-probability weights. The RBO scores indicate a high level of lexical consistency for Topic 0, ranging between 0.77 and 0.87, while Topic 1 fluctuates at a moderate level. At the same time, the small differences in probability weights (between 0.00 and 0.0021) show strong distributional stability across runs. Taken together, these results support the stability and reproducibility of SemaTopic under stochastic perturbations and indicate that it is a reliable method for deriving consistent topics for downstream semantic analysis.

Figure 9. Assessing topic stability in SemaTopic across runs.

- Robustness evaluation: For the robustness analysis, the model was re-run on the modified corpus to check its robustness to input variability. Despite the data reduction, the model still recovered the same number of semantic clusters (four), supporting its structural stability.

Figure 10 and Figure 11 show the cosine similarity and Jaccard similarity matrices between the original and perturbed topic sets. Several topic pairs across the runs have high cosine similarity (e.g., 0.8, 0.7, 0.6), and the Jaccard overlaps exceed 0.5 for the key matched pairs, indicating that semantic consistency is maintained both in top terms and in distributions.

Furthermore, the TMR analysis in the bar chart (Figure 12) shows that SemaTopic preserved at least a 50% topic match for all clusters, and some clusters achieved a perfect match rate (TMR = 1.0). Together, these results support the robustness of SemaTopic under realistic corpus perturbations. The model preserves the high-level topic structure of the dataset and maintains semantic coherence in topic extraction, which makes it suitable for dynamic or partially modified real-world corpora.

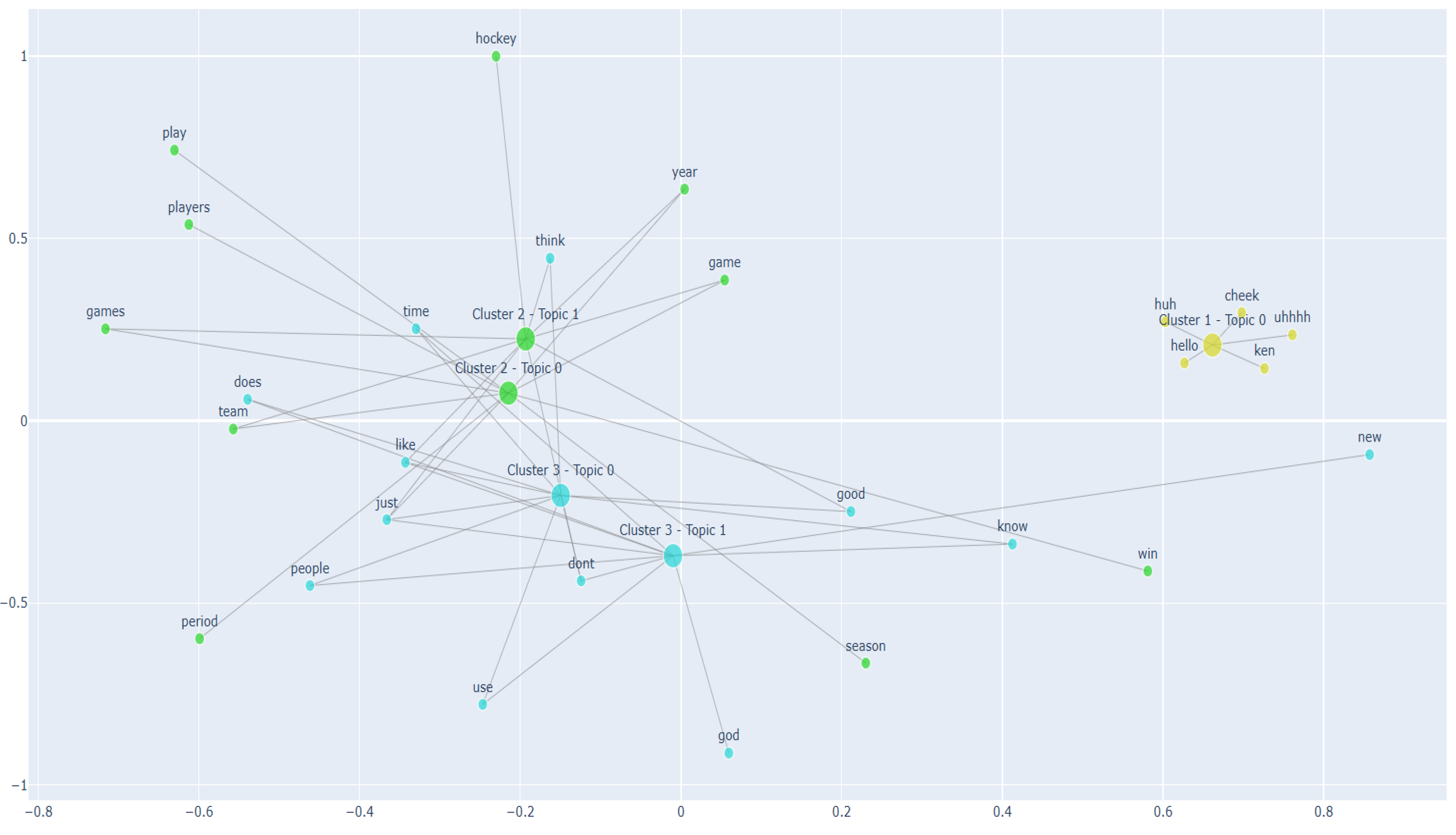

4.4.3. Objective 3: Comparative Analysis with State-of-the-Art Methods

- Qualitative evaluation: The comparison of topic-term network visualizations obtained by different topic modeling techniques (BERTopic [3] (Figure 13), LDA+ [10] (Figure 14), and SemaTopic (Figure 7)) reveals the semantic and structural improvement of SemaTopic over the others. As shown in Figure 13, BERTopic, despite its strong transformer-based embeddings, suffers from high topic fragmentation, creating an overly dense and interconnected graph in which semantic boundaries are not clearly delineated and many keywords appear redundantly in multiple topics. This lack of separation reduces interpretability and limits usefulness in domain applications. The LDA+ representation in Figure 14 is sparser and more readable, but LDA+ is a global topic model that does not capture the heterogeneous subdomains of the corpus. It can therefore produce overly generic topics that lack concrete detail and ignore local semantic variations.

By contrast, the graph in Figure 7 shows strongly connected clusters, each consisting of two coherent and clearly distinct topics tailored to the semantic area. Low redundancy and strong topic-term cohesion result in clear visual spacing and fewer edge overlaps between terms. This design ensures that each cluster is modeled with the appropriate topic granularity, leading to semantically rich and non-overlapping topics. Furthermore, the decrease in graph density and the increase in modularity show how our approach captures meaningful structure and increases interpretability and semantic coherence. In short, this comparative study highlights the robustness, scalability, and domain adaptability of SemaTopic, which is an effective alternative for obtaining high-quality, human-interpretable topics from complex textual data.

Figure 13. Topic-term bipartite graph generated by BERTopic on a domain-specific scientific corpus of 946 scientific articles.

Figure 14. The extracted topics by our previous approach (LDA+) on the domain-specific scientific corpus of 946 scientific articles.

To empirically evaluate the performance of our proposed approach against current state-of-the-art topic modeling methods, we conducted an experiment on the 20 Newsgroups dataset (https://www.kaggle.com/datasets/crawford/20-newsgroups, accessed on 1 July 2025), which consists of informal and noisy newsgroup posts, using three different models: SemaTopic, BERTopic, and LDA+. This experiment served not only for performance comparison but also for studying how semantic reinforcement mechanisms can improve topic extraction. It also allowed us to observe how differences in the quality and quantity of input data affect the properties of topic modeling.

As shown in Figure 15, although BERTopic integrates semantic clustering with class-based TF-IDF and applies transformer-based embeddings, the generated graph structure is very dense and hard to interpret, with heavily overlapping topics, redundant key phrases, and little structural separation of topics. This indicates that semantic clustering alone, when unaware of the latent structure of the corpus or when combined with weak disambiguation strategies, can inflate topic granularity without adding semantic distinction.

In Figure 16, LDA+ improves on traditional LDA through optimized priors but yields an overly sparse topic graph. The associations between topics and terms are weak or even decoupled, leading to imprecise and uninformative topics. 
This highlights the limitations of non-contextual models when confronted with short and informal texts, for which term frequency alone is not enough to capture latent topics.

By comparison, the graph produced by our model in Figure 17 presents discrete topic clusters, strong term-topic coherence, and a clear structure at the global level. These gains result from three key design choices: (1) the use of contextual embeddings to express fine-grained semantics at the document level, (2) the use of semantic clustering, where documents that share latent semantic affinity are grouped together, and (3) the adaptive tuning of our model parameters per semantic cluster to maximize topic coherence.

Figure 15. Topic-term bipartite graph generated by BERTopic using the 20 Newsgroups dataset.

Significantly, this advantage is maintained despite the noisy, low-quality nature of the dataset, which lacks neatly structured linguistic information. The results also show that data quality is a decisive factor in the performance of a topic model. In the output of our approach, some extracted topic terms seem generic, vague, or semantically weak. This is not due to poor modeling but is a direct effect of the limited lexical richness and semantic density of the available input. Even so, our approach preserves topic separability and semantic coherence at the structural level, surpassing the baseline methods (BERTopic [3], LDA+ [10]) in visual clarity and interpretability.

These findings show that semantic refinement strategies can help contain data noise, but that the quality and expressiveness of the input documents still play a determining role in topic-term quality.

To substantiate the above findings with concrete evidence, Table 7 presents a qualitative comparison of representative topics extracted by LDA+, BERTopic, and SemaTopic on the Google Scholar dataset. 
This analysis complements the graph-based visualizations by illustrating the actual topic content and highlighting differences in semantic clarity and interpretability.

As observed, SemaTopic produces semantically dense and thematically coherent topics, in which terms are conceptually aligned and readily interpretable (e.g., semantic, coherence, representation, topic). In contrast, LDA+ tends to generate overly broad and generic groupings that lack fine-grained semantic detail, while BERTopic, despite its use of transformer-based embeddings, frequently combines loosely related or noisy terms (e.g., airbnb, ukraine, gps, xml, lyft) within a single topic. These findings highlight the ability of SemaTopic to deliver topics that are both structurally well-organized and semantically faithful, thereby enhancing interpretability compared to existing baselines.

Figure 16. Topic-term bipartite graph generated by LDA+ using the 20 Newsgroups dataset.

Figure 17. Topic-term bipartite graph generated by SemaTopic using the 20 Newsgroups dataset.

Table 7. Qualitative comparison of representative topics generated by LDA+, BERTopic, and SemaTopic on the Google Scholar corpus (965 documents).

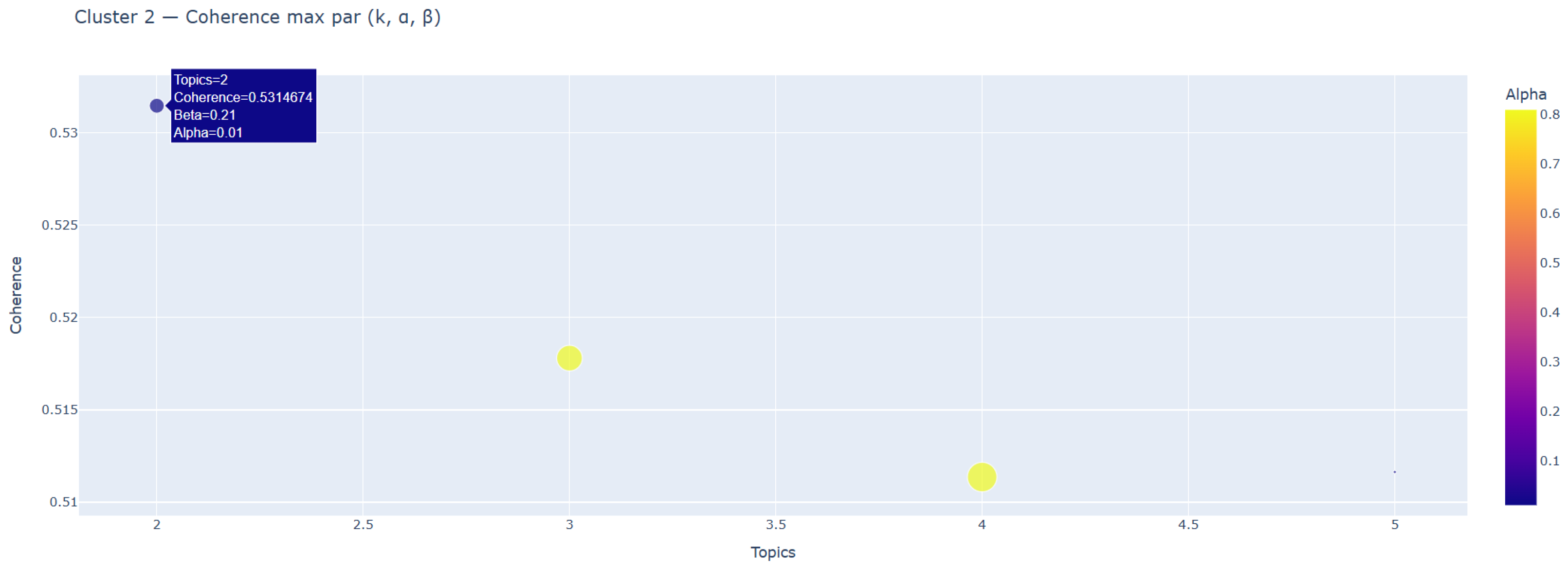

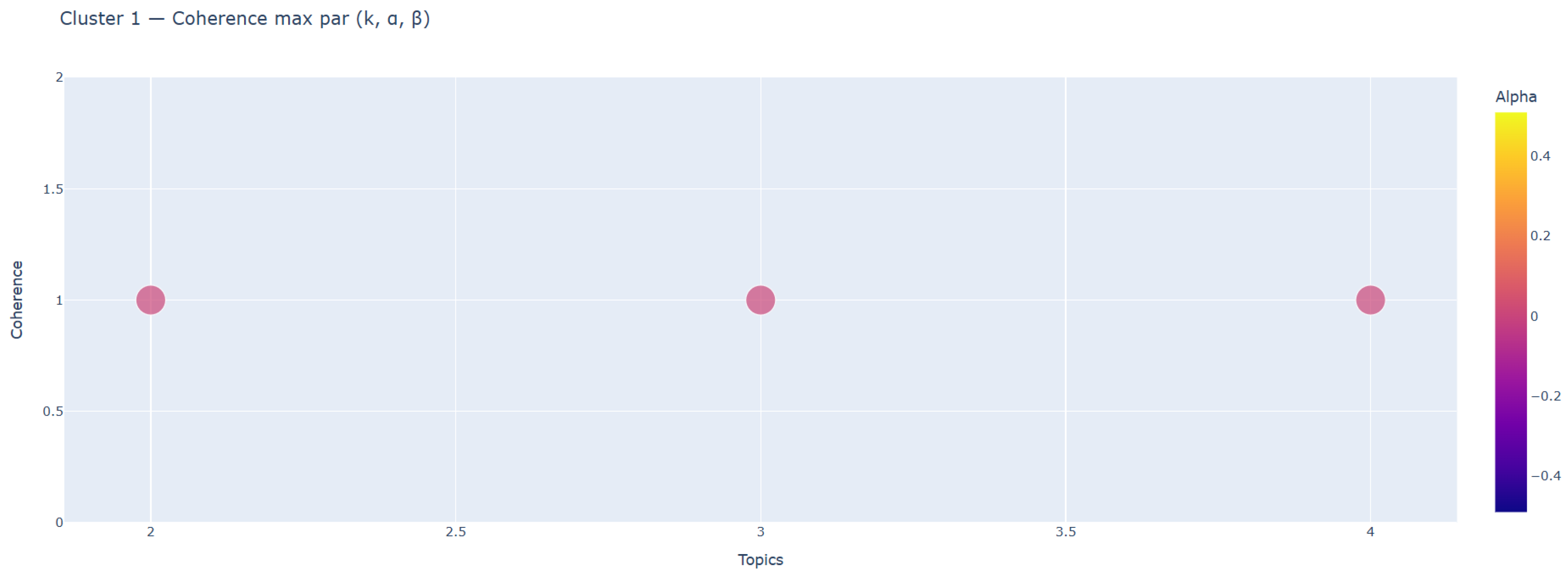

| Model | Topic 1 | Topic 2 | Topic 3 |
|---|---|---|---|
| LDA+ | data, information, user, web, media | research, study, service, social, tech | health, music, news, medical, odd |
| BERTopic | airbnb, ukraine, gps, xml, lyft | ecosystem, schizophrenia, rdf, intake, folate | bash, student, dyslexia, nutrient, taxi |
| SemaTopic (ours) | semantic, coherence, representation, topic, distribution | retrieval, index, structure, similarity, model | clustering, pattern, fuzzy, detection, accuracy |

- Quantitative evaluation: In addition to the qualitative investigation based on topic-term bipartite graphs, we performed a quantitative validation driven by semantic coherence to strengthen the comparison between the three models, using the semantic coherence metric as the benchmark measure. Experiments were performed on the 20 Newsgroups dataset, a difficult benchmark because of its noisy, tweet-like nature and low lexical diversity. To handle the semantic sparsity of the data, the maximum number of candidate topics per cluster was limited to five in the optimization process. As shown in Figure 18, Cluster 2 obtains its best topic coherence of 0.5315 under its best (α, β, K) configuration. Cluster 3 reaches a maximum semantic coherence of 0.4906 under its best (α, β, K) configuration, as shown in Figure 19. In contrast, Cluster 1, which contains 38 documents (Figure 20), yields a semantic coherence score of 1.0 in every configuration. This saturation suggests that the data are not fine-grained enough to separate a wider variety of topics, so Cluster 1 was treated as a monolithic topic and modeled as a single topic.

SemaTopic outperforms all the baselines in terms of semantic coherence. BERTopic, which also relies on semantic clustering, yielded a semantic coherence score of 0.5004, but its topics remain hard to interpret because of the densely entangled graph.
Similarly, LDA+ reached an optimum semantic coherence value of 0.4729 under its best (α, β, K) configuration, but it does not integrate fine-grained semantic context into clustering. By comparison, SemaTopic integrates contextualized embeddings with adaptive Dirichlet parameter learning, which yields more semantically coherent and discriminative topic structures.

To summarize, this dual-mode evaluation, combining visualization and semantic coherence scoring, validates the robustness and semantic faithfulness of our proposed framework. Despite the noise and structural disadvantages of the 20 Newsgroups corpus, SemaTopic effectively maintains topic separability and meaning, demonstrating the importance of semantic-aware modeling with rich contextual embeddings in low-resource text analytics.
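The per-cluster selection described above (at most five candidate topics, the best configuration chosen by semantic coherence, and a cluster treated as a single topic when coherence saturates at 1.0) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Config` tuple, `select_config`, the candidate grids, the lower bound of two topics, and the `toy_score` stand-in are all hypothetical. A real run would replace `toy_score` with a function that fits the topic model for a configuration and returns its measured coherence.

```python
from itertools import product
from typing import Callable, NamedTuple, Sequence, Tuple


class Config(NamedTuple):
    alpha: float  # document-topic Dirichlet prior (assumed meaning)
    beta: float   # topic-word Dirichlet prior (assumed meaning)
    k: int        # number of topics


def select_config(
    score: Callable[[Config], float],
    alphas: Sequence[float],
    betas: Sequence[float],
    max_topics: int = 5,
    saturation: float = 1.0,
) -> Tuple[Config, float, bool]:
    """Grid-search (alpha, beta, K) with K capped at max_topics.

    Returns the best configuration, its coherence, and a flag that is True
    when every configuration reaches the saturation value, in which case the
    cluster can be treated as a single topic.
    """
    scores = {
        cfg: score(cfg)
        for cfg in (
            Config(a, b, k)
            for a, b, k in product(alphas, betas, range(2, max_topics + 1))
        )
    }
    best = max(scores, key=scores.get)  # first maximum wins on ties
    saturated = all(s >= saturation for s in scores.values())
    return best, scores[best], saturated


def toy_score(cfg: Config) -> float:
    """Hypothetical stand-in for 'fit the model and measure coherence'."""
    return 1.0 - abs(cfg.alpha - 0.5) - 0.1 * abs(cfg.k - 3)


best, coherence, saturated = select_config(toy_score, [0.1, 0.5, 0.9], [0.01, 0.1])
print(best, round(coherence, 4), saturated)
```

Returning the saturation flag separately from the best score keeps the "monolithic topic" decision explicit instead of hiding it inside the search.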

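The topic-term bipartite graphs used in the qualitative comparison can be represented with a plain edge list and an adjacency map. The sketch below is illustrative only: it uses the SemaTopic row of Table 7 as sample data (not the 20 Newsgroups graphs of Figures 15 to 17), and `build_bipartite` and `shared_terms` are hypothetical helper names. Counting terms attached to more than one topic is one simple, assumed proxy for how entangled such a graph is.

```python
from collections import defaultdict

# Topic-term lists copied from the SemaTopic row of Table 7.
topics = {
    "Topic 1": ["semantic", "coherence", "representation", "topic", "distribution"],
    "Topic 2": ["retrieval", "index", "structure", "similarity", "model"],
    "Topic 3": ["clustering", "pattern", "fuzzy", "detection", "accuracy"],
}


def build_bipartite(topic_terms):
    """Return the (topic, term) edge list and a term -> topics adjacency map."""
    edges = []
    term_to_topics = defaultdict(set)
    for topic, terms in topic_terms.items():
        for term in terms:
            edges.append((topic, term))
            term_to_topics[term].add(topic)
    return edges, dict(term_to_topics)


def shared_terms(term_to_topics):
    """Terms linked to more than one topic (a crude overlap indicator)."""
    return sorted(t for t, ts in term_to_topics.items() if len(ts) > 1)


edges, term_to_topics = build_bipartite(topics)
overlap = shared_terms(term_to_topics)
print(len(edges), overlap)
```

An empty overlap list means every term node has degree one, which corresponds to visually separate topic clusters in the bipartite layout.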
4.5. Objective 4: Evaluation of Scalability and Multilingual Robustness

- Scalability assessment: One of the essential requirements of a topic modeling framework is its ability to scale efficiently to larger corpora without compromising topic quality. To assess this property, we compared the performance of SemaTopic on two datasets of different sizes and characteristics: the Google Scholar corpus (965 documents, primarily monolingual) and the CEUR-WS proceedings corpus (1700 documents, multilingual). Execution time was measured for the entire pipeline, which includes embedding generation, dimensionality reduction, clustering, and probabilistic topic inference.

As shown in Table 9, execution time increased from approximately 40 min for 965 documents to 500 min for 1700 documents. This supra-linear growth is mainly explained by the higher computational cost of embedding generation and density-based clustering (HDBSCAN), which become more demanding on heterogeneous corpora. Despite this increase, the pipeline consistently produced stable and interpretable topics, confirming the scalability of SemaTopic.

Table 9. Execution time of SemaTopic across different corpora.

| Corpus | Documents | Execution Time (min) | Observations |
|---|---|---|---|
| Google Scholar | 965 | 40 | Monolingual dataset; relatively fast execution. |
| CEUR-WS | 1700 | 500 | Larger, multilingual dataset; slower but stable processing. |

These findings demonstrate that SemaTopic is capable of handling corpora beyond one thousand documents while preserving semantic interpretability and coherence. This confirms the scalability of the framework, making it applicable to real-world, large-scale text-mining scenarios.

- Multilingual robustness: The results obtained on the CEUR-WS dataset highlight the ability of SemaTopic to maintain semantic consistency across multilingual and heterogeneous clusters. As shown in Table 10, Cluster 0 achieved the highest coherence score (0.60) despite containing the smallest number of documents (166), with a vocabulary size of 4824. Cluster 1, which comprised the largest portion of the dataset (1310 documents, 5970 unique terms), maintained a moderate coherence score of 0.52. Even Cluster 2, which has 224 documents and the smallest vocabulary (3135 terms), preserved a coherence level of 0.47, reflecting a non-trivial degree of semantic stability. Even this lowest coherence (0.47) is comparable to or higher than that of baselines on monolingual corpora, showing that SemaTopic can mitigate cross-lingual noise.

Table 10. Topic coherence and hyperparameter settings for the CEUR-WS corpus.

| Cluster | Documents | Vocabulary Size | Coherence | α | β | K (Topics) |
|---|---|---|---|---|---|---|
| Cluster 0 | 166 | 4824 | 0.60 | 0.71 | 0.20 | 3 |
| Cluster 1 | 1310 | 5970 | 0.52 | 0.50 | 0.01 | 3 |
| Cluster 2 | 224 | 3135 | 0.47 | 0.11 | 0.01 | 3 |

Overall, these findings indicate that SemaTopic effectively balances coherence and scalability across varying corpus sizes and linguistic diversity. The relatively stable hyperparameter settings (α, β, and K) across clusters further reinforce the robustness of the probabilistic inference layer, demonstrating that the framework can reliably adapt to multilingual input without compromising topic interpretability.

To complement these quantitative results, we further employ graph-based visualizations to qualitatively examine how multilingual terms (e.g., Russian and Ukrainian words) are integrated within clusters. This analysis provides additional evidence of the model’s ability to capture cross-lingual semantic relationships while preserving topic coherence.

- Graph-based visualization: Graph-based visualization was employed to qualitatively assess the semantic organization of topics and the integration of multilingual terms (Figure 21, Figure 22 and Figure 23). This integration of multilingual terminology confirms that SemaTopic captures cross-lingual semantic relations without degrading topic coherence. Such qualitative evidence complements the quantitative results, reinforcing the framework’s scalability and robustness for large and multilingual corpora.

These results (Figure 21, Figure 22 and Figure 23) demonstrate that, unlike many embedding-based baselines that are sensitive to corpus size or language variation, SemaTopic maintains both scalability and cross-lingual robustness. This highlights its potential for real-world deployment in heterogeneous and multilingual text-mining scenarios.
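The supra-linear growth reported in Table 9 can be made concrete with a short calculation. The sketch below uses only the two reported (documents, minutes) pairs; the power-law form t ∝ nᵖ and the helper name `scaling_exponent` are assumptions introduced for illustration. With only two runs on corpora that also differ in language mix, the resulting exponent mixes the effects of corpus size and heterogeneity and should be read as a rough indicator rather than a measured complexity.

```python
import math

# (documents, execution time in minutes) as reported in Table 9.
runs = {
    "Google Scholar": (965, 40.0),
    "CEUR-WS": (1700, 500.0),
}


def scaling_exponent(n1, t1, n2, t2):
    """Exponent p of an assumed power law t ~ n**p fitted through two points."""
    return math.log(t2 / t1) / math.log(n2 / n1)


(n1, t1), (n2, t2) = runs["Google Scholar"], runs["CEUR-WS"]

size_ratio = n2 / n1   # growth in corpus size
time_ratio = t2 / t1   # growth in execution time
exponent = scaling_exponent(n1, t1, n2, t2)

# Average processing time per document, in seconds.
seconds_per_doc = {name: 60.0 * t / n for name, (n, t) in runs.items()}

print(f"size x{size_ratio:.2f}, time x{time_ratio:.1f}, exponent ~{exponent:.2f}")
for name, secs in seconds_per_doc.items():
    print(f"{name}: {secs:.1f} s per document")
```

A corpus roughly 1.76 times larger takes 12.5 times longer, so the per-document cost itself rises between the two runs, which is consistent with the explanation that embedding generation and HDBSCAN become more demanding on the heterogeneous corpus.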

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Correction Statement

Conflicts of Interest

References

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Mersha, M.A.; Yigezu, M.G.; Kalita, J. Semantic-Driven Topic Modeling Using Transformer-Based Embeddings and Clustering Algorithms. arXiv 2024, arXiv:2410.00134v1.

- Grootendorst, M. BERTopic: Neural topic modeling with a class-based TF-IDF procedure. arXiv 2022, arXiv:2203.05794v1.

- Abdelrazek, A.; Eid, Y.; Gawish, E.; Medhat, W.; Hassan, A. Topic Modeling Algorithms and Applications: A Survey. Inf. Syst. 2022, 112, 102131. Available online: https://www.researchgate.net/publication/364447028 (accessed on 1 October 2022).

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv 2019.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- Angelov, D. Top2Vec: Distributed representations of topics. arXiv 2020, arXiv:2008.09470.

- Drissi, A.; Khemiri, A.; Sassi, S.; Tissaoui, A.; Chbeir, R.; Jemai, A. LDA+: An Extended LDA Model for Topic Hierarchy and Discovery. In Recent Challenges in Intelligent Information and Database Systems, Proceedings of the 14th Asian Conference, ACIIDS 2022, Ho Chi Minh City, Vietnam, 28–30 November 2022; Springer: Berlin/Heidelberg, Germany, 2022.

- Dieng, A.B.; Ruiz, F.J.; Blei, D.M. Topic Modeling in Embedding Spaces. 2020. Available online: https://aclanthology.org/2020.tacl-1.29/ (accessed on 1 July 2020).

- Moody, C.E. Mixing Dirichlet Topic Models and Word Embeddings to Make lda2vec. arXiv 2016.

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. Researchgate. 2014. Available online: https://www.researchgate.net/publication/284576917 (accessed on 1 January 2014).

- Sia, S.; Dalmia, A.; Mielke, S.J. Tired of Topic Models? Clusters of Pretrained Word Embeddings Make for Fast and Good Topics too! Association for Computational Linguistics. 2020. Available online: https://aclanthology.org/2020.emnlp-main.135/ (accessed on 1 January 2020).

- Likas, A.; Vlassis, N.; Verbeek, J.J. The global k-means clustering algorithm. Pattern Recognit. 2003, 36, 451–461.

- Van der Linde, A. PCA-Based Dimensionality Reduction. J. Nonparametric Stat. 2003, 15, 77–92. Available online: https://www.tandfonline.com/doi/abs/10.1080/10485250306037 (accessed on 1 October 2021).

- Nguyen, D.Q.; Billingsley, R.; Du, L.; Johnson, M. Improving Topic Models with Latent Feature Word Representations. arXiv 2018, arXiv:1810.06306v1.

- Shi, M.; Liu, J.; Zhou, D.; Tang, M.; Cao, B. WE-LDA: A Word Embeddings Augmented LDA Model for Web Services Clustering. In Proceedings of the 2017 IEEE 24th International Conference on Web Services, Honolulu, HI, USA, 25–30 June 2017.

- Campello, R.J.; Moulavi, D.; Sander, J. Density-Based Clustering Based on Hierarchical Density Estimates. In Advances in Knowledge Discovery and Data Mining, Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Gold Coast, Australia, 14–17 April 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 160–172.

- Bianchi, F.; Terragni, S.; Hovy, D. Pre-training is a Hot Topic: Contextualized Document Embeddings Improve Topic Coherence. arXiv 2021, arXiv:2004.03974.

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. arXiv 2020, arXiv:1909.11942.

- Diego, S.U. A Process for Topic Modelling Via Word Embeddings. arXiv 2023, arXiv:2312.03705.

- McInnes, L.; Healy, J.; Melville, J. UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. arXiv 2020.

- Zhou, D.; Hu, X.; Wang, R. Neural Topic Modeling by Incorporating Document Relationship Graph. arXiv 2020.

- Rajendran, B.; Vidya, C.G.; Sanil, J.; Asharaf, S. A Local Explainability Technique for Graph Neural Topic Models. Hum. Centric Intell. Syst. 2024, 4, 53–76.

- Li, W.; McCallum, A. Pachinko allocation: DAG-structured mixture models of topic correlations. In Proceedings of the ACM, ICML ’06: 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 577–584.

- Ekinci, E.; Omurca, S. NET-LDA: A Novel Topic Modeling Method Based on Semantic Document Similarity. Turk. J. Electr. Eng. Comput. Sci. 2020, 28, 2244–2260. Available online: https://www.researchgate.net/publication/340732115 (accessed on 1 July 2020).

- Cao, Z.; Li, S.; Liu, Y.; Li, W.; Ji, H. A novel neural topic model and its supervised extension. In Proceedings of the ACM, AAAI’15: Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2210–2216.

- Miao, Y.; Grefenstette, E.; Blunsom, P. Discovering Discrete Latent Topics with Neural Variational Inference. arXiv 2018, arXiv:1706.00359.

- Wu, X.; Nguyen, T.; Luu, A.T. A Survey on Neural Topic Models: Methods, Applications, and Challenges. arXiv 2024, arXiv:2401.15351v2.

- Cieslak, M.C.; Castelfranco, A.M.; Roncalli, V.; Lenz, P.H.; Hartline, D.K. t-Distributed Stochastic Neighbor Embedding (t-SNE): A tool for eco-physiological transcriptomic analysis. Mar. Genom. 2020, 51, 100723.

| Approach | Challenge 1 | Challenge 2 | |||||||

|---|---|---|---|---|---|---|---|---|---|

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | |

| lda2vec [12] (2016) | – | No | – | Topics | Medium | – | – | No | No |

| WE-LDA [18] (2017) | Yes | No | – | Topics | Medium | – | – | No | No |