AI Test Modeling for Computer Vision System—A Case Study

Abstract

1. Introduction

- The goal of computer vision is to understand the content of digital images. Typically, this involves developing methods that attempt to reproduce the capability of human vision.

- Understanding the content of digital images may involve extracting information from an image, such as an object, a text description, or a three-dimensional model.

- Computer vision aims to replicate human vision from digital images through three main processing stages, applied in order: image acquisition, image processing, and image analysis and understanding.

- Enhanced automation—With computer vision, machines can take on more complex tasks that would otherwise require human intervention. For example, robots with computer vision can perform tasks like sorting objects or detecting defects in manufacturing processes.

- Improved accuracy—Unlike humans, computers can analyze visual data with extreme accuracy and precision. This makes computer vision a valuable tool in fields like medical imaging and security, where accurate interpretation of visual data can be critical.

- Increased efficiency—Computer vision can help streamline processes and make them more efficient. For example, computer vision can be used in agriculture to detect pests or diseases in crops, helping farmers to take targeted action and save time and resources.

- New possibilities—With computer vision, there are countless new possibilities for innovation and creativity. For example, computer vision can be used to develop new forms of art or entertainment or to create new tools and applications that we cannot even imagine yet.

- Improved accessibility—Finally, computer vision can help make technology more accessible to people with disabilities. For example, computer vision can be used to develop assistive technologies, such as devices that can help people with vision impairment navigate their surroundings.

2. The Literature Review

3. Test Modeling for Computer Vision Systems

3.1. Understanding of Testing Computer Vision Systems

- Difficulty in establishing well-defined, clear, and measurable quality testing requirements;

- A lack of well-defined and practiced quality testing and assurance standards;

- A lack of well-defined, systematic quality testing methods and solutions;

- A lack of automatic test tools with well-defined, adequate quality test coverage;

- A lack of automatic, adequate quality test coverage analysis techniques and solutions.

3.2. Conventional Test Modeling

3.3. AI Test Modeling

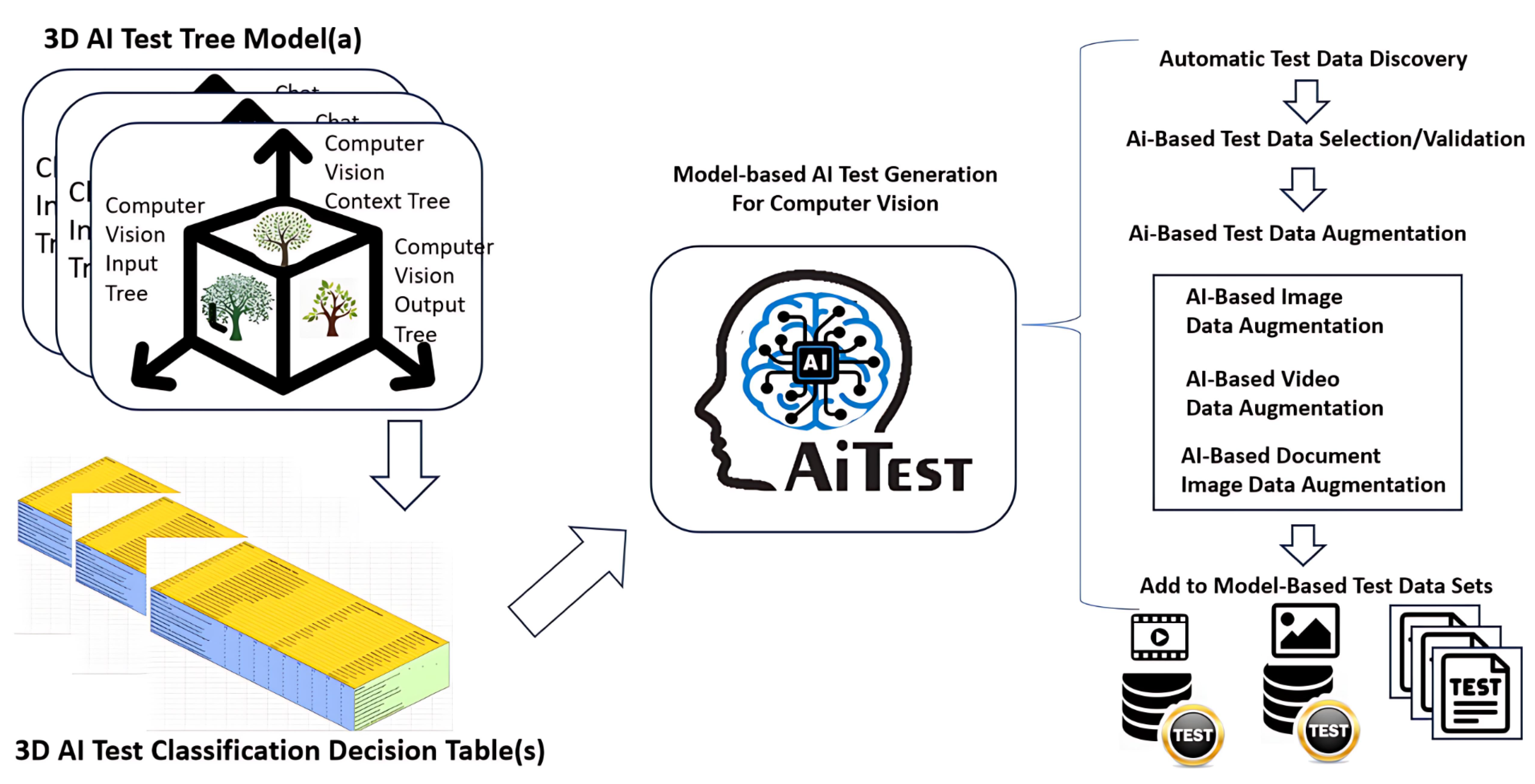

- Establish a test model using the 3D tree model below:

- (a)

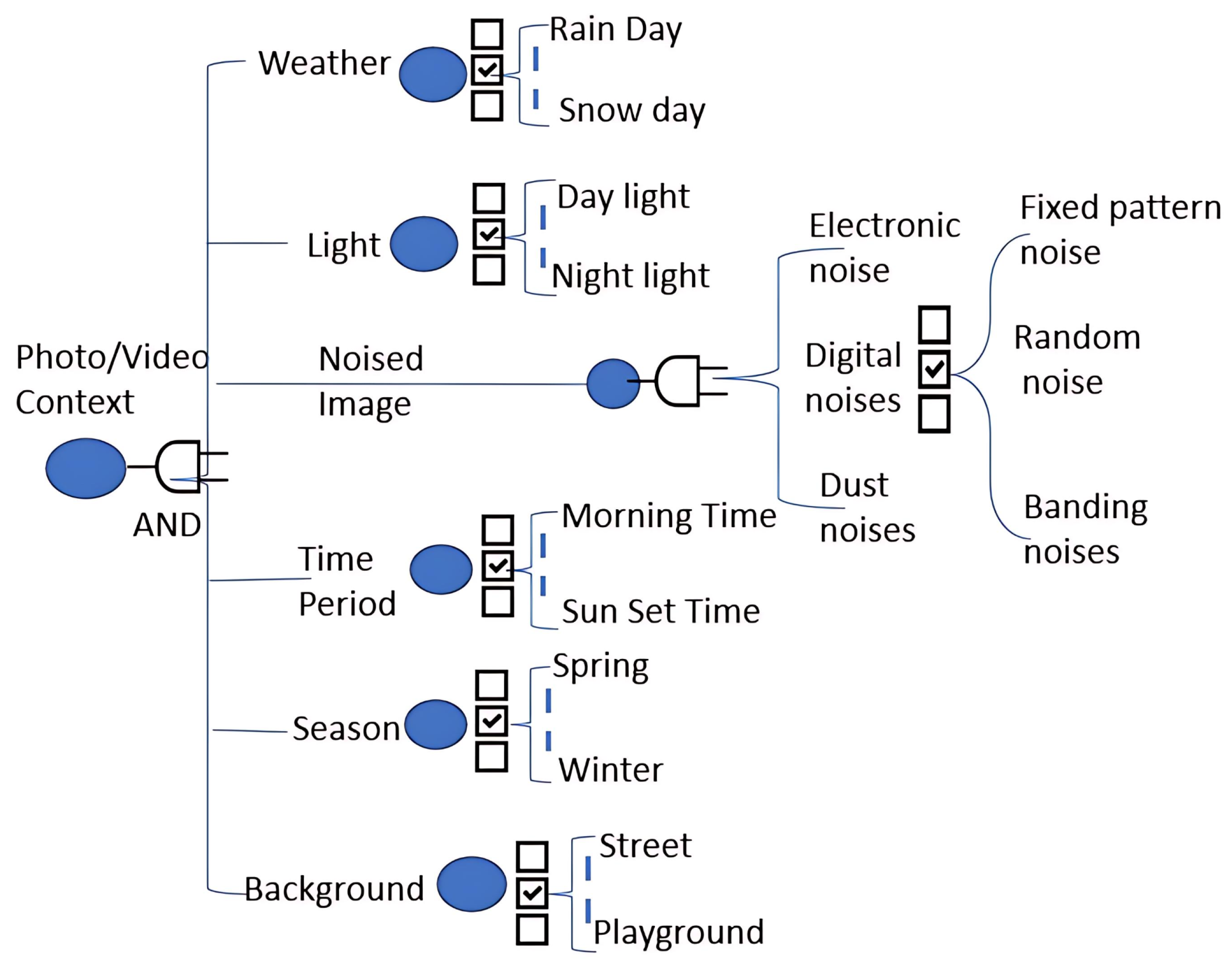

- Establish a context tree model to represent well-classified contexts for the computer vision system;

- (b)

- Establish an input tree model to represent well-classified inputs for the computer vision system;

- (c)

- Establish the corresponding output tree model to represent well-classified outputs from the computer vision system under test.

- Automatically generate a 3D classification decision table for each computer vision feature (function) based on the derived 3D tree model.
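The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' tooling: the tree contents, the nested-dictionary representation, and the `leaves` and `build_decision_table` helpers are all assumptions made for the example. For simplicity, each leaf is treated as one class, whereas a full model may combine several context conditions into a single class.

```python
from itertools import product

# Hypothetical classification trees: nested dicts with lists of leaf labels at the bottom.
context_tree = {"blur": ["low", "high"], "brightness": ["low", "normal", "high"]}
input_tree = {"species": ["insect", "flower"], "non_species": ["chair"]}
output_tree = {"result": ["correct_id", "wrong_id", "no_id"]}


def leaves(tree, path=()):
    """Yield a slash-separated path for every leaf of a nested dict/list tree."""
    if isinstance(tree, dict):
        for key, subtree in tree.items():
            yield from leaves(subtree, path + (key,))
    else:
        for label in tree:
            yield "/".join(path + (label,))


def numbered(prefix, tree):
    """Pair each leaf with an identifier such as CT-1, IT-2, or OT-3."""
    return [(f"{prefix}-{n}", leaf) for n, leaf in enumerate(leaves(tree), start=1)]


def build_decision_table(ctx, inp, out):
    """Enumerate one rule per (context leaf, input leaf, output leaf) triple."""
    table = []
    for (c_id, c), (i_id, i), (o_id, o) in product(
        numbered("CT", ctx), numbered("IT", inp), numbered("OT", out)
    ):
        table.append({"rule": (c_id, i_id, o_id), "context": c, "input": i, "output": o})
    return table


table = build_decision_table(context_tree, input_tree, output_tree)
print(len(table))  # 5 context leaves * 3 input leaves * 3 output leaves
print(table[0])
```

Enumerating the full Cartesian product keeps the sketch short; in practice, infeasible combinations would probably need to be filtered out before test cases are derived from the table.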

3.4. Context Classification Modeling

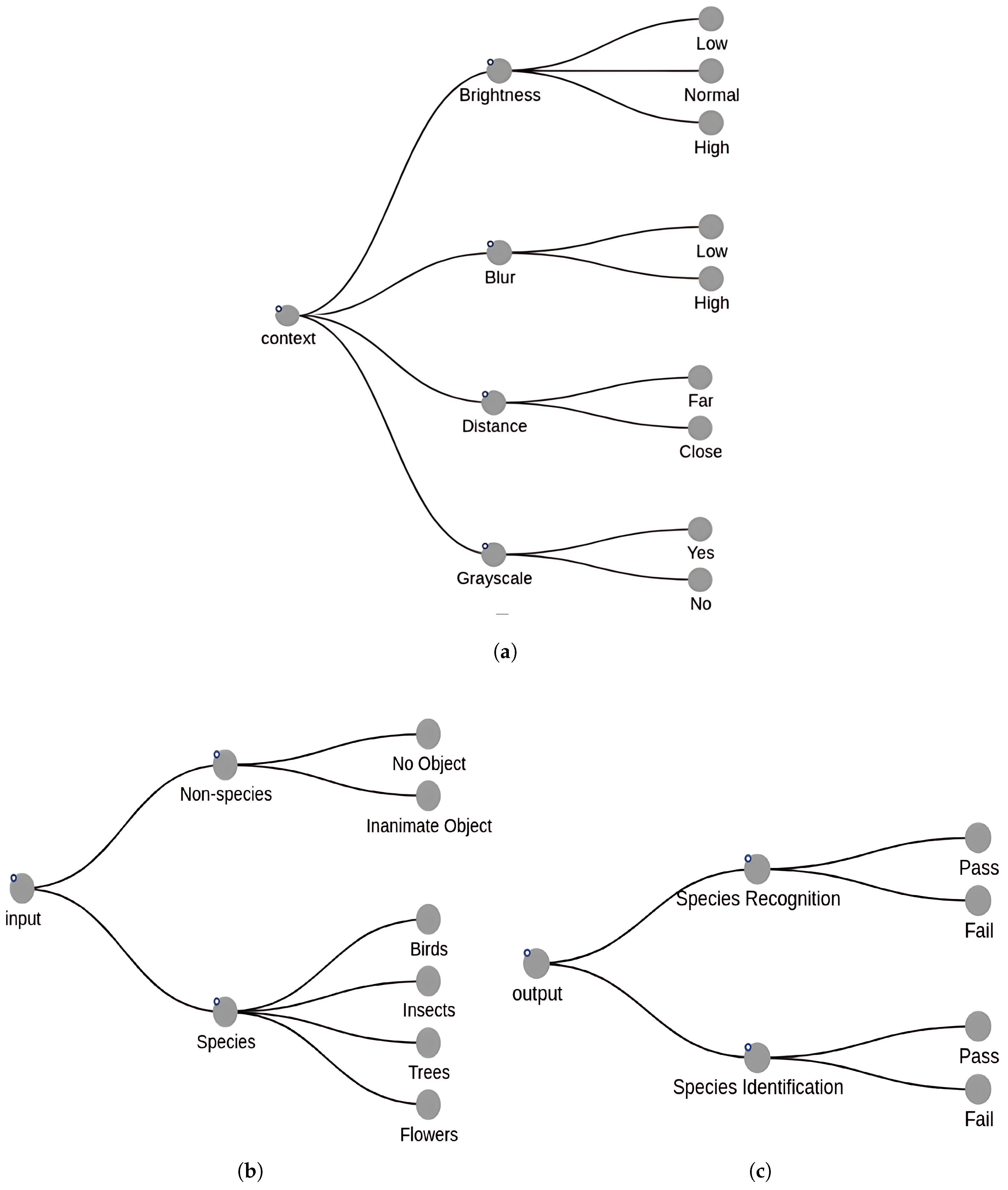

3.5. Input Classification Modeling

3.6. Output Classification Modeling

4. Test Generation and Data Augmentation for Intelligent Computer Vision Systems

4.1. Test Generation

- Test Data Discovery: Using Internet-based solutions to search for, select, and validate discovered test data for a computer vision system’s targeted computer vision feature (or function).

- Test Data Augmentation: Using diverse test data augmentation solutions and tools, including machine learning models and frameworks, to generate diverse augmented test data based on the selected test data (images, videos, and document images) for a selected computer vision feature/function in a computer vision system under test.

- Model-Based Test Data Generation: Using a model-based test data generation tool to generate diverse, well-classified test data (images, videos, and document images) for a selected computer vision feature/function in a computer vision system under test, so as to achieve a well-defined (or selected) adequate test coverage criterion.

- AI-Based Test Data Generation: Using machine learning models and AI techniques to generate the desired test data for a targeted computer vision feature (or function), based on a given test scheme for that feature, to achieve well-defined test coverage criteria.

- Real-Time On-Site Collected Test Data and Processing: Using various test data collection methods and tools to collect real-time on-site raw data (camera videos, photos, and/or document images) via computer vision system APIs. To prepare the collected data as targeted test data, machine learning models or tools are used to preprocess it and convert it into test data that meets well-defined, adequate test criteria.

4.2. Data Augmentation

5. Adequate Test Coverage and Standards for Computer Vision Systems

Model-Based Test Adequacy for a Computer Vision Intelligence System

- Three-dimensional AI test classification decision table test coverage: To achieve this coverage, the test set (3DT-Set) must include at least one test case for every 3D element (CT-x, IT-y, OT-z) in the 3D AI test classification decision table;

- Context classification decision table test coverage: To achieve this coverage, the test set (3DT-Set) must include at least one test case for every rule in a context classification decision table;

- Input classification decision table test coverage: To achieve this coverage, the test set (3DT-Set) must include at least one test case for every rule in an input classification decision table;

- Output classification decision table test coverage: To achieve this coverage, the test set (3DT-Set) must include at least one true case for every rule in an output classification decision table.
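These criteria reduce to a set-membership check: a rule is covered once at least one test case exercises it. The sketch below illustrates this under assumed data structures; the `coverage` helper, the four-rule table, and the test set are hypothetical and only show the shape of the computation.

```python
def coverage(required_rules, test_cases):
    """Return (ratio, uncovered) for the rules exercised by at least one test case."""
    required = set(required_rules)
    exercised = {tc["rule"] for tc in test_cases} & required
    uncovered = sorted(required - exercised)
    ratio = len(exercised) / len(required) if required else 1.0
    return ratio, uncovered


# Hypothetical 3D decision table with four rules and a test set that hits three of them.
rules_3d = [
    ("CT-1", "IT-1", "OT-1"),
    ("CT-1", "IT-2", "OT-1"),
    ("CT-2", "IT-1", "OT-2"),
    ("CT-2", "IT-2", "OT-2"),
]
test_set = [
    {"id": "T1", "rule": ("CT-1", "IT-1", "OT-1")},
    {"id": "T2", "rule": ("CT-1", "IT-2", "OT-1")},
    {"id": "T3", "rule": ("CT-2", "IT-1", "OT-2")},
    {"id": "T4", "rule": ("CT-1", "IT-1", "OT-1")},  # repeats T1's rule, adds no coverage
]

ratio, uncovered = coverage(rules_3d, test_set)
print(f"3D coverage: {ratio:.0%}, uncovered: {uncovered}")

# The single-dimension criteria project each rule onto one axis (here, the context axis).
context_rules = {rule[0] for rule in rules_3d}
context_ratio, _ = coverage(context_rules, [{"rule": tc["rule"][0]} for tc in test_set])
print(f"Context coverage: {context_ratio:.0%}")
```

In this example the test set reaches full context coverage while still missing one 3D element, which shows why the 3D criterion is the strictest of the four.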

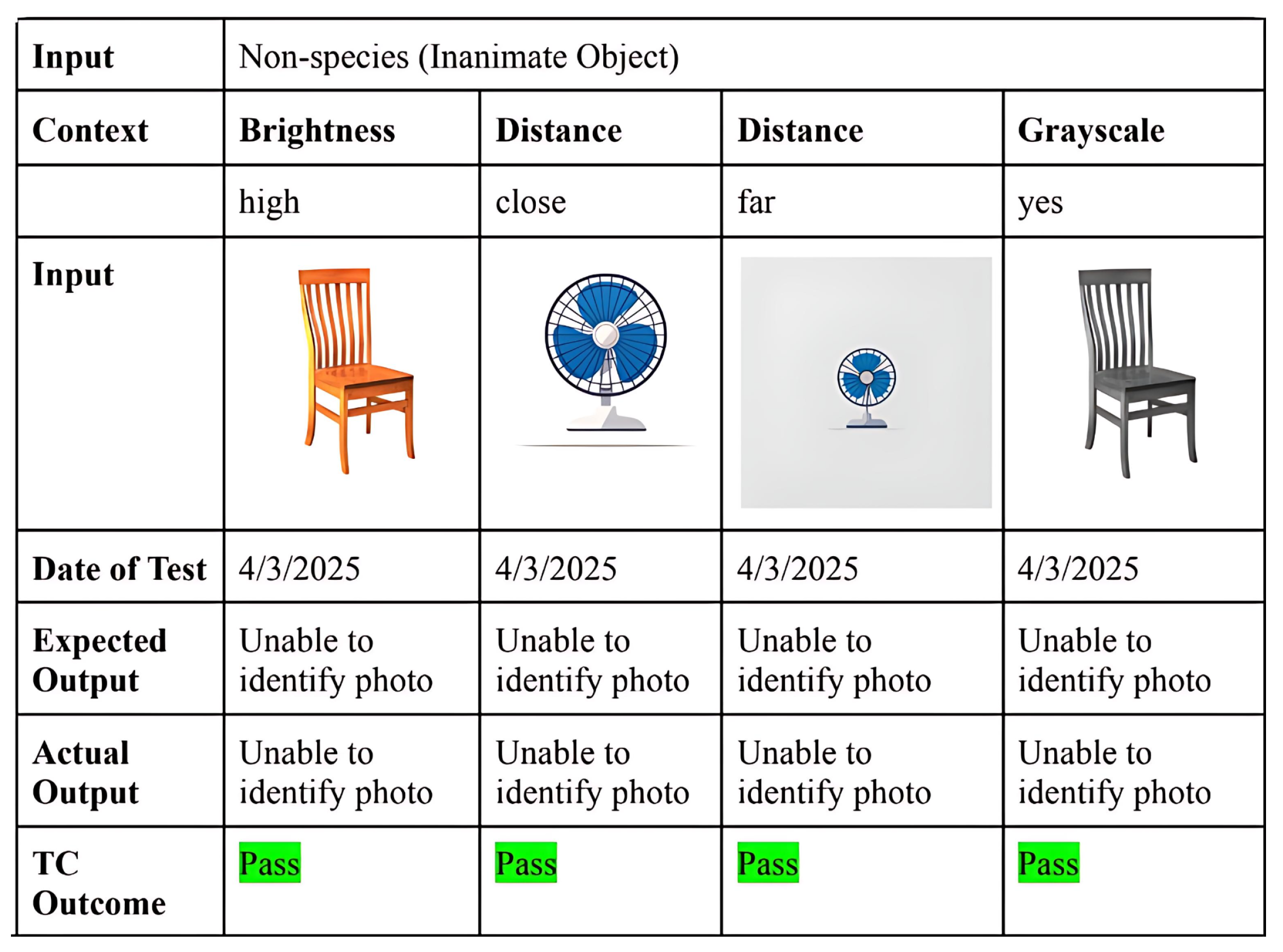

6. A Case Study—Seek by iNaturalist

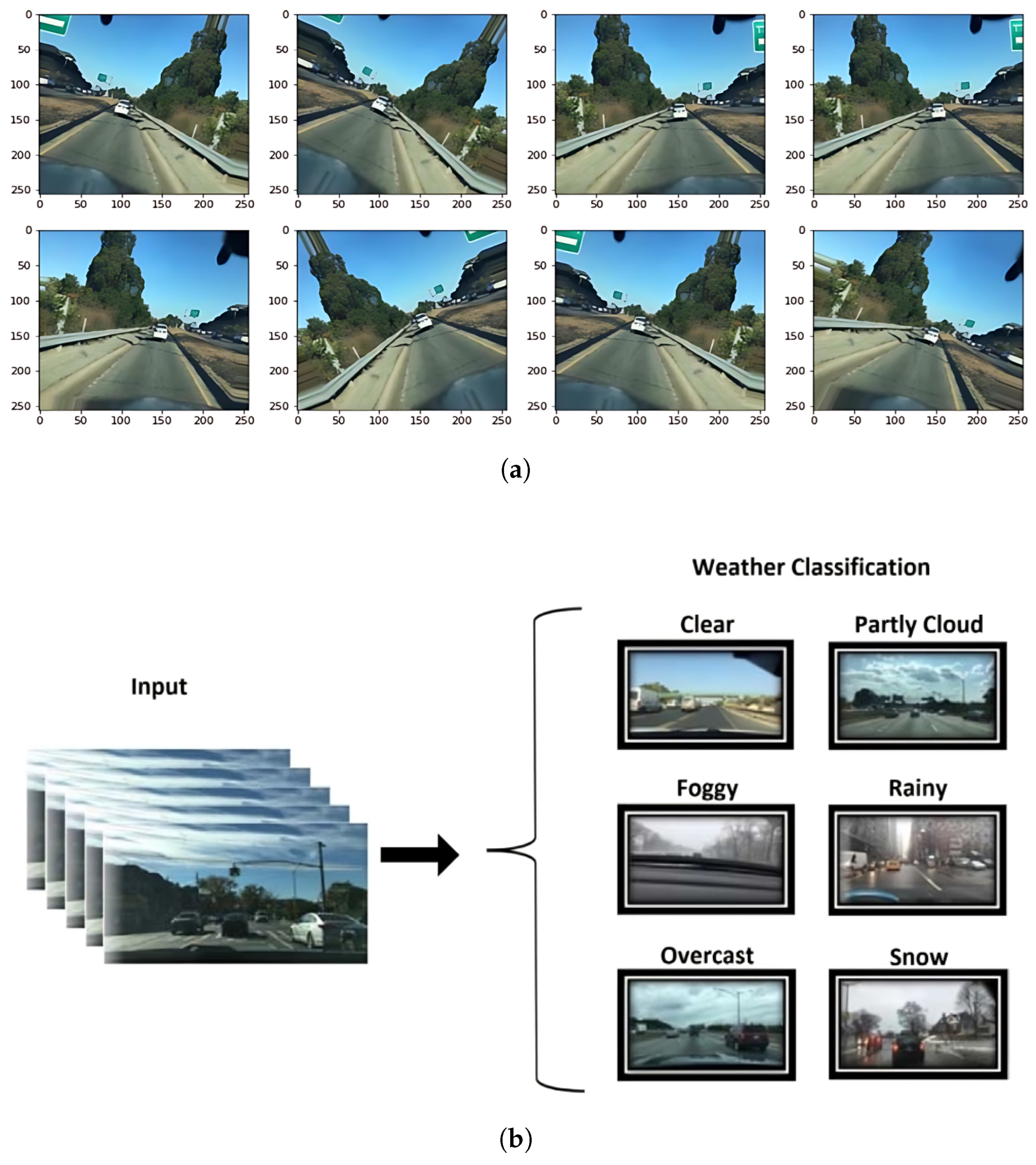

6.1. Methodology and Dataset Construction

- Image Sourcing: Species images were collected directly from the Seek app, while non-species images were supplemented with publicly available resources (e.g., household items, simple backgrounds).

- Selection Criteria: The images were selected to represent four species categories—insects, flowers, trees, and birds—and four non-species categories—chair, bottle, fan, and plain background. This ensured the inclusion of supported and unsupported inputs.

- Contextual Variation: Augmentations were systematically applied to introduce controlled variations (e.g., Gaussian blur, brightness scaling, grayscale conversion), ensuring that the test dataset reflected realistic imaging conditions encountered by end users.
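The three augmentations named above can be illustrated without any imaging library. The following pure-Python sketch operates on a tiny image stored as rows of `(r, g, b)` tuples. The function names, the fixed 3x3 kernel, the ITU-R BT.601 luminance weights, and the parameter values are assumptions chosen for the example, not the settings used in the study.

```python
def scale_brightness(image, factor):
    """Multiply every channel by `factor`, clipping to the 0-255 range."""
    return [
        [tuple(min(255, max(0, round(c * factor))) for c in px) for px in row]
        for row in image
    ]


def to_grayscale(image):
    """Convert RGB pixels to luminance using the ITU-R BT.601 weights."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


def gaussian_blur_3x3(gray):
    """Blur a grayscale image with the kernel [1 2 1; 2 4 2; 1 2 1] / 16.

    Pixels outside the image reuse the nearest edge value (edge replication).
    """
    kernel = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(h - 1, max(0, y + dy))
                    xx = min(w - 1, max(0, x + dx))
                    acc += kernel[dy + 1][dx + 1] * gray[yy][xx]
            row.append(round(acc / 16))
        out.append(row)
    return out


image = [
    [(200, 100, 50), (0, 0, 0)],
    [(255, 255, 255), (10, 20, 30)],
]
brighter = scale_brightness(image, 2.0)  # values above 255 are clipped
gray = to_grayscale(image)
blurred = gaussian_blur_3x3(gray)
print(brighter[0][0], gray[0], blurred[0])
```

A production pipeline would more likely rely on an image library, but keeping each transform explicit makes it easier to bound the parameters so that the augmented images stay realistic.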

6.2. AI Test Modeling for Selected AI Features

6.3. AI Function Test Cases, Data Generation and Test Coverage

- Contextual Relevance: Augmentations were selected to reflect typical real-world variations in image capture, including blur, brightness, distance, and grayscale changes. These variations are generally identified as the main sources of performance degradation in deployed vision systems.

- Controlled Augmentation: Data augmentation methods (e.g., rotation, brightness scaling, simulated blur) were controlled so that the generated images were realistic without imposing synthetic distortions that would not occur naturally.

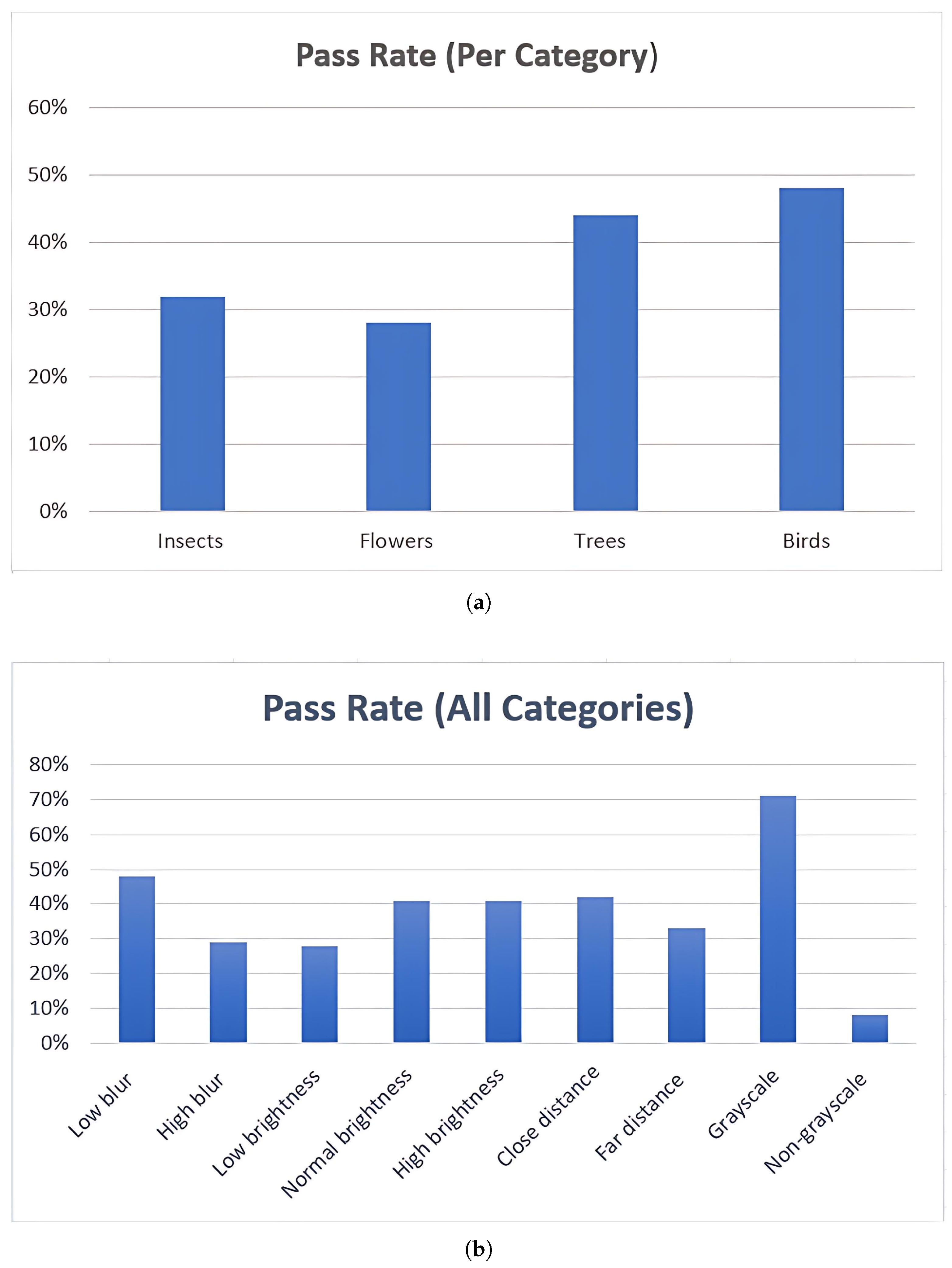

6.4. Results and Failure Mode Analysis

- Image quality failures: High blur and low brightness consistently led to misclassifications.

- Species confusion: Some flower images were misclassified as trees, indicating difficulty with fine-grained distinctions.

- Color feature weaknesses: The performance was unexpectedly better in grayscale than in color, suggesting over-reliance on texture or shape features rather than robust color feature extraction.

- Distance sensitivity: The accuracy dropped at farther distances, showing limitations in feature resolution handling.
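The per-context pass rates can be rechecked directly from the raw counts. The sketch below copies the species counts from the context pass-rate table and verifies that each context dimension partitions the same 100 species test cases and the same 38 passes. The dictionary layout and the `pass_rate` helper are illustrative and not part of the study's tooling.

```python
# (passed, total) counts for species images, copied from the context pass-rate table.
species_counts = {
    "Low blur": (23, 48),
    "High blur": (15, 52),
    "Low brightness": (10, 36),
    "Normal brightness": (14, 32),
    "High brightness": (14, 32),
    "Close distance": (22, 52),
    "Far distance": (16, 48),
    "Grayscale": (4, 52),
    "Non-grayscale": (34, 48),
}

# Levels that split the species test cases along one context dimension.
dimensions = {
    "blur": ["Low blur", "High blur"],
    "brightness": ["Low brightness", "Normal brightness", "High brightness"],
    "distance": ["Close distance", "Far distance"],
    "color": ["Grayscale", "Non-grayscale"],
}


def pass_rate(passed, total):
    """Pass rate as a percentage."""
    return 100.0 * passed / total


for name, levels in dimensions.items():
    passed = sum(species_counts[level][0] for level in levels)
    total = sum(species_counts[level][1] for level in levels)
    # Every dimension should account for all 100 cases and all 38 passes.
    assert (passed, total) == (38, 100), name
    for level in levels:
        print(f"{level:18s} {pass_rate(*species_counts[level]):5.1f}%")
```

Checking that the counts along each dimension add up to the overall species total is a cheap consistency test for any table of this kind.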

7. Discussion

- Academic Contribution: The proposed framework extends model-based testing concepts to computer vision systems, considering blur, brightness, distance, and color variability. Unlike existing model-based approaches, it explicitly incorporates adequacy metrics, automated augmentation, and systematic classification of testing scenarios.

- Practical Contribution: The proposed framework gives developers and engineers a consistent and broadly applicable way of testing CV systems. It complements ad hoc testing and benchmark-driven validation by integrating model-based design with automated augmentation.

- The proposed 3D AI test model with decision tables provides systematic, structured coverage across multiple dimensions.

- Model-based AI function testing enhances traceability and repeatability.

- Automated test data generation and augmentation improve coverage without the need for extensive manual datasets.

- Contextual variation had strong effects: grayscale surprisingly outperformed color, blur and distance degraded recognition, and brightness variations altered the outcomes.

- These findings emphasize the value of context-driven testing, which uncovers weaknesses often hidden by benchmark datasets.

- By integrating coverage, adequacy, and automated validation, the methodology addresses key shortcomings of the existing solutions.

8. Conclusions and Future Scope

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Gao, J.; Agarwal, R.; Garsole, P. AI Testing for Intelligent Chatbots—A Case Study. Software 2025, 4, 12.

- Durelli, V.H.S.; Durelli, R.S.; Borges, S.S.; Endo, A.T.; Eler, M.M.; Dias, D.R.C.; Guimarães, M.P. Machine Learning Applied to Software Testing: A Systematic Mapping Study. IEEE Trans. Reliab. 2019, 68, 1189–1212.

- Zhang, T.; Liu, Y.; Gao, J.; Gao, L.P.; Cheng, J. Deep Learning-Based Mobile Application Isomorphic GUI Identification for Automated Robotic Testing. IEEE Softw. 2020, 37, 67–74.

- Gao, Y.; Tao, C.; Guo, H.; Gao, J. A Deep Reinforcement Learning-Based Approach for Android GUI Testing. In Proceedings of the Web and Big Data: 6th International Joint Conference, APWeb-WAIM 2022, Nanjing, China, 25–27 November 2022; Proceedings, Part III. pp. 262–276.

- Gao, J.Z. UASACT 2023 Keynote Talk: Smart City Traffic Drone AI Cloud Platform—Intelligence, Big Data, and AI Cloud Infrastructure; Keynote presented at UASACT 2023, Kaohsiung Exhibition Center, Taiwan. Sponsored by TDECA, IEEE CISOSE 2023, and IEEE Future Technology; San Jose State University: San Jose, CA, USA, 2023.

- Gao, J.; Wang, D.; Lin, C.P.; Luo, C.; Ruan, Y.; Yuan, M. Detecting and learning city intersection traffic contexts for autonomous vehicles. J. Smart Cities Soc. 2022, 1, 1–27.

- Matsuzaka, Y.; Yashiro, R. AI-Based Computer Vision Techniques and Expert Systems. AI 2023, 4, 289–302.

- Ayub Khan, A.; Laghari, A.A.; Ahmed Awan, S. Machine Learning in Computer Vision: A Review. EAI Endorsed Trans. Scalable Inf. Syst. 2021, 8, e4.

- Wotawa, F.; Klampfl, L.; Jahaj, L. A framework for the automation of testing computer vision systems. In Proceedings of the 2021 IEEE/ACM International Conference on Automation of Software Test (AST), Madrid, Spain, 20–21 May 2021; pp. 121–124.

- Hassaballah, M.; Hosny, K.M. (Eds.) Recent Advances in Computer Vision: Theories and Applications, 1st ed.; Volume 1: Studies in Computational Intelligence; Springer: Cham, Switzerland, 2019; pp. 113–187.

- King, T.M.; Arbon, J.; Santiago, D.; Adamo, D.; Chin, W.; Shanmugam, R. AI for Testing Today and Tomorrow: Industry Perspectives. In Proceedings of the 2019 IEEE International Conference On Artificial Intelligence Testing (AITest), Newark, CA, USA, 4–9 April 2019; pp. 81–88.

- Marijan, D.; Gotlieb, A. Software Testing for Machine Learning. Proc. AAAI Conf. Artif. Intell. 2020, 34, 13576–13582.

- Sugali, K. Software Testing: Issues and Challenges of Artificial Intelligence & Machine Learning. Int. J. Artif. Intell. Appl. 2021. Available online: https://ssrn.com/abstract=3948930 (accessed on 17 July 2025).

- Amalfitano, D.; Faralli, S.; Hauck, J.C.R.; Matalonga, S.; Distante, D. Artificial Intelligence Applied to Software Testing: A Tertiary Study. ACM Comput. Surv. 2023, 56, 1–38.

- Baqar, M.; Khanda, R. The Future of Software Testing: AI-Powered Test Case Generation and Validation. In Proceedings of the Intelligent Computing; Arai, K., Ed.; Springer: London, UK, 2025; pp. 276–300.

- Salman, H.; Uddin, M.N.; Acheampong, S.; Xu, H. Design and Implementation of IoT Based Class Attendance Monitoring System Using Computer Vision and Embedded Linux Platform; Springer International Publishing: Cham, Switzerland, 2019; Volume 927, pp. 25–34.

- Khemasuwan, D.; Sorensen, J.S.; Colt, H.G. Artificial intelligence in pulmonary medicine: Computer vision, predictive model and COVID-19. Eur. Respir. Rev. 2020, 29, 200181.

- Gargin, V.; Radutny, R.; Titova, G.; Bibik, D.; Kirichenko, A.; Bazhenov, O. Application of the computer vision system for evaluation of pathomorphological images. In Proceedings of the 2020 IEEE 40th International Conference on Electronics and Nanotechnology (ELNANO), Kyiv, Ukraine, 22–24 April 2020; pp. 469–473.

- Shams, A.; Schekelmann, A.; Mülder, W. A proof of concept for providing traffic data by AI based computer vision as a basis for smarter industrial areas. Procedia Comput. Sci. 2022, 201, 239–246.

- Moore, S.; Liao, Q.V.; Subramonyam, H. fAIlureNotes: Supporting Designers in Understanding the Limits of AI Models for Computer Vision Tasks. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 23–28 April 2023.

- Sharma, A.; Prasad, K.; Chakrasali, S.V.; Gowda V, D.; Kumar, C.; Chaturvedi, A.; Pazhani, A.A.J. Computer vision based healthcare system for identification of diabetes & its types using AI. Meas. Sens. 2023, 27, 100751.

- Fuentes-Peñailillo, F.; Carrasco Silva, G.; Pérez Guzmán, R.; Burgos, I.; Ewertz, F. Automating Seedling Counts in Horticulture Using Computer Vision and AI. Horticulturae 2023, 9, 1134.

- Zhou, L.; Zhang, L.; Konz, N. Computer Vision Techniques in Manufacturing. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 105–117.

- Tang, Y.M.; Kuo, W.T.; Lee, C. Real-time Mixed Reality (MR) and Artificial Intelligence (AI) object recognition integration for digital twin in Industry 4.0. Internet Things 2023, 23, 100753.

- Li, C.H.; Chow, E.W.H.; Tam, M.; Tong, P.H. Optimizing DG Handling: Designing an Immersive MRsafe Training Program. Sensors 2024, 24, 6972.

- Yazdi, M. Augmented Reality (AR) and Virtual Reality (VR) in Maintenance Training. In Advances in Computational Mathematics for Industrial System Reliability and Maintainability; Springer Series in Reliability Engineering; Springer: Cham, Switzerland, 2024; pp. 169–183.

- Kalluri, P.R.; Agnew, W.; Cheng, M.; Owens, K.; Soldaini, L.; Birhane, A. Computer-vision research powers surveillance technology. Nature 2025, 643, 73–79.

| Ref. | Objective | Methodology | Application | Challenges |
|---|---|---|---|---|
| [16] | Fast, accurate IoT-based attendance system on embedded Linux | Haar cascade for face detection, LBP histogram for recognition | Face detection and recognition | Large cloud storage requirements |
| [17] | Review of AI use in pulmonary medicine for specialists | A literature review on CV in imaging, ML for prediction, and AI in COVID-19 response | CV and AI in pulmonary medicine | Potential iatrogenic risks from AI algorithms |
| [18] | CV for scoring and counting in pathomorphological images vs. manual estimation | Python 2.7 for slide analysis | Pathomorphological image evaluation | 9.2% error, 90.8% cancer marker detection accuracy |
| [19] | Scalable, low-cost AI-based CV for real-time traffic in industrial areas | Open-source ML, edge devices, ad hoc setup, modular dashboard | Traffic monitoring, decision support, smart infrastructure | Indoor setup only, heavy inference load, partner expertise needed |
| [20] | Workflow for early exploration of model behavior and failures | fAIlureNotes tool for model evaluation and failure identification | UX support in understanding AI limits | Limited AI expertise and lack of accessible tools |
| [21] | AI/ML model for improved prediction accuracy | Symptom-based diabetes classification from dataset | Diabetes type identification in healthcare | Demand for accurate, timely disease forecasting |
| [22] | Seedling counting via object detection and a mobile app | CRISP-DM for data capture, processing, and training | Horticulture for agro-process automation | Counting efficiency between 57 and 96% |
| [23] | Method survey in CV: detection, recognition, segmentation, 3D modeling | Manufacturing system with sensing, CV, decision making, actuation | Manufacturing | Implementation, preprocessing, labeling, benchmarking |
| [24] | Integrating MR with AI recognition for digital twins | Real-time MR–AI with IoT connectivity | Industry 4.0 object recognition/monitoring | High computation; accuracy–latency trade-offs |
| [25] | Designing MR-based safe training for dangerous goods (DGs) | Designing MR-based safe training for dangerous goods (DGs) | Logistics and industrial safety training | Realism of scenarios; transfer to real-world tasks |
| [26] | Applying AR/VR to enhance maintenance training | Interactive AR/VR learning modules | Industrial system maintenance | Industrial system maintenance |
| [27] | CV–surveillance link analysis | Paper–patent analysis, language pattern mining | Human-targeted detection in surveillance | Obfuscation, normalization, transparency gaps |

| Feature | Task Performed |
|---|---|
| Object extraction | It helps in the extraction of objects from an image |
| Object detection and classification | It helps with detecting and classifying an object from an image |
| Object tracking and counting | It helps with tracking and counting objects in an image |
| Object behavior detection and classification | It helps with behavior detection for objects and their classification in an image |
| Object identification, recognition, segmentation, and feature extraction | It is used to identify/recognize and segment an object along with extracting features from an image |
| Document preprocessing and classification | It helps with the preprocessing of a document and its classification in an image |
| Text/data extraction and collection from documents | It helps in text and data extraction along with its collection in a document |
| Document analysis and understanding | It helps understand and analyze a document |
| Document review and audit | It helps with auditing and reviewing a document |
| Data/text validation | It is used to validate the text and the data in a document |

| Coverage | Task Performed |
|---|---|
| Object detection and classification | The test covers domain-specific object detection and its classification |
| Object tracking | The tracking of objects is covered in this test coverage |
| Object behavior detection and classification | This covers behavior detection for objects and their classification in an image |
| Object counting | This covers counting objects within an image |
| Object segmentation | This covers the segmentation of the object from an image |
| Domain-specific object recognition | This covers the domain-specific recognition of objects in an image |
| Feature extraction | This covers the extraction of features from an image |
| Object extraction | This covers the extraction of specific objects from an image |

| Category | Pass Rate (Per Category) |
|---|---|
| Species | |
| Insects | 8/25 = 32% |
| Flowers | 7/25 = 28% |
| Trees | 11/25 = 44% |
| Birds | 12/25 = 48% |
| Total | 38/100 = 38% |
| Non-Species | |
| Chair | 6/6 = 100% |
| Fan | 2/2 = 100% |
| Blue Background | 4/4 = 100% |
| Bottle | 4/4 = 100% |
| Total | 16/16 = 100% |

| Context Included | Pass Rate (All Categories) |
|---|---|
| Species | |
| Low blur | 23/48 = 48% |
| High blur | 15/52 = 29% |
| Low brightness | 10/36 = 28% |
| Normal brightness | 14/32 = 44% |
| High brightness | 14/32 = 44% |
| Close distance | 22/52 = 42% |
| Far distance | 16/48 = 33% |
| Grayscale | 4/52 = 8% |
| Non-grayscale | 34/48 = 71% |
| Non-Species | |
| Low blur | 2/16 = 12.5% |
| High blur | 2/16 = 12.5% |
| Low brightness | 2/16 = 12.5% |
| Normal brightness | 2/16 = 12.5% |
| High brightness | 2/16 = 12.5% |
| Close distance | 2/16 = 12.5% |
| Far distance | 2/16 = 12.5% |
| Grayscale | 2/16 = 12.5% |
| Non-grayscale | 2/16 = 12.5% |
| Total | 16/16 = 100% |

| Aspect | Conventional Testing | Model-Based Testing | AI Testing | Benchmark Testing |
|---|---|---|---|---|
| Objective | Applying traditional system testing methods to support test design | Setting up the test model to support test design and automation | Automating and optimizing the test design using AI/ML testing models | Comparing the performance on fixed test sets with standardized metrics |
| Test Approach | Mostly ad hoc, scripted, conventional system function testing | Formalized using decision tables and model-based techniques | AI-driven adaptive testing with dynamic feedback loops | Test-set-driven evaluation with static metrics |
| Test Data Generation | Manual or conventional test case generation | Derived systematically from model transitions and constraints | Generated automatically using AI/ML, including domain-specific data | Predefined test sets (e.g., ImageNet, COCO), limited flexibility |
| Test Augmentation | Rare or manual augmentations | Scenario-based extension using model variations | Automated augmentation (noise, blur, rotation, adversarial perturbations) | Limited augmentation, often restricted to test set scope |
| Test Validation | Human oracle or ad hoc oracle testing, often subjective | Model oracle validation against expected behavior | AI-assisted validation, anomaly detection, bug analysis | Ad hoc test validation based on a given test set |
| Test Coverage | Limited conventional coverage achieved (e.g., test scenario coverage) | Systematic model-based coverage achieved for CV systems | Broad coverage through automated generation and augmentation | Restricted to benchmark test set scope |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, J.; Agarwal, R. AI Test Modeling for Computer Vision System—A Case Study. Computers 2025, 14, 396. https://doi.org/10.3390/computers14090396
