3.1. Datasets and Settings

NWPU VHR-10 [16,17,18] is a remote sensing object detection benchmark released by Northwestern Polytechnical University in 2014. Designed for geospatial object recognition, it has become an important benchmark for validating deep learning algorithms in remote sensing. The dataset contains 800 high-resolution remote sensing images: 650 form a “positive sample set” containing targets, and 150 form a “negative sample set” without targets. The images are derived from high-resolution Google Earth imagery and from the Vaihingen dataset (Cramer 2010): https://ifpwww.ifp.uni-stuttgart.de/dgpf/DKEP-Allg.html (Accessed on 16 April 2020). The positive samples are annotated with 10 categories of typical geospatial objects: airplane, ship, storage tank, baseball diamond, tennis court, basketball court, ground track field, harbor, bridge, and vehicle. In total there are 3651 expert-annotated object instances.

In terms of class distribution [16], the dataset was built with a multi-source acquisition strategy intended to balance the classes.

- The airplane category covers overhead views of major airports around the world, with 897 airplanes labelled in total and up to 47 in a single image.
- The ship category contains 763 vessels, collected mainly from harbors and shipping channels.
- The storage tank category is dominated by oil storage facilities in industrial zones, with 582 circular tanks labelled.
- The sports-field categories are baseball diamonds (318), tennis courts (294), basketball courts (277), and ground track fields (265). They contain standardized sites captured from multiple viewpoints, as do the traffic infrastructure images (265).

Every positive image contains at least one target instance. About 23% of the images contain objects from several categories, with harbor–ship and airport–aircraft pairs co-occurring most often. This provides a basis for studying context-aware detection.

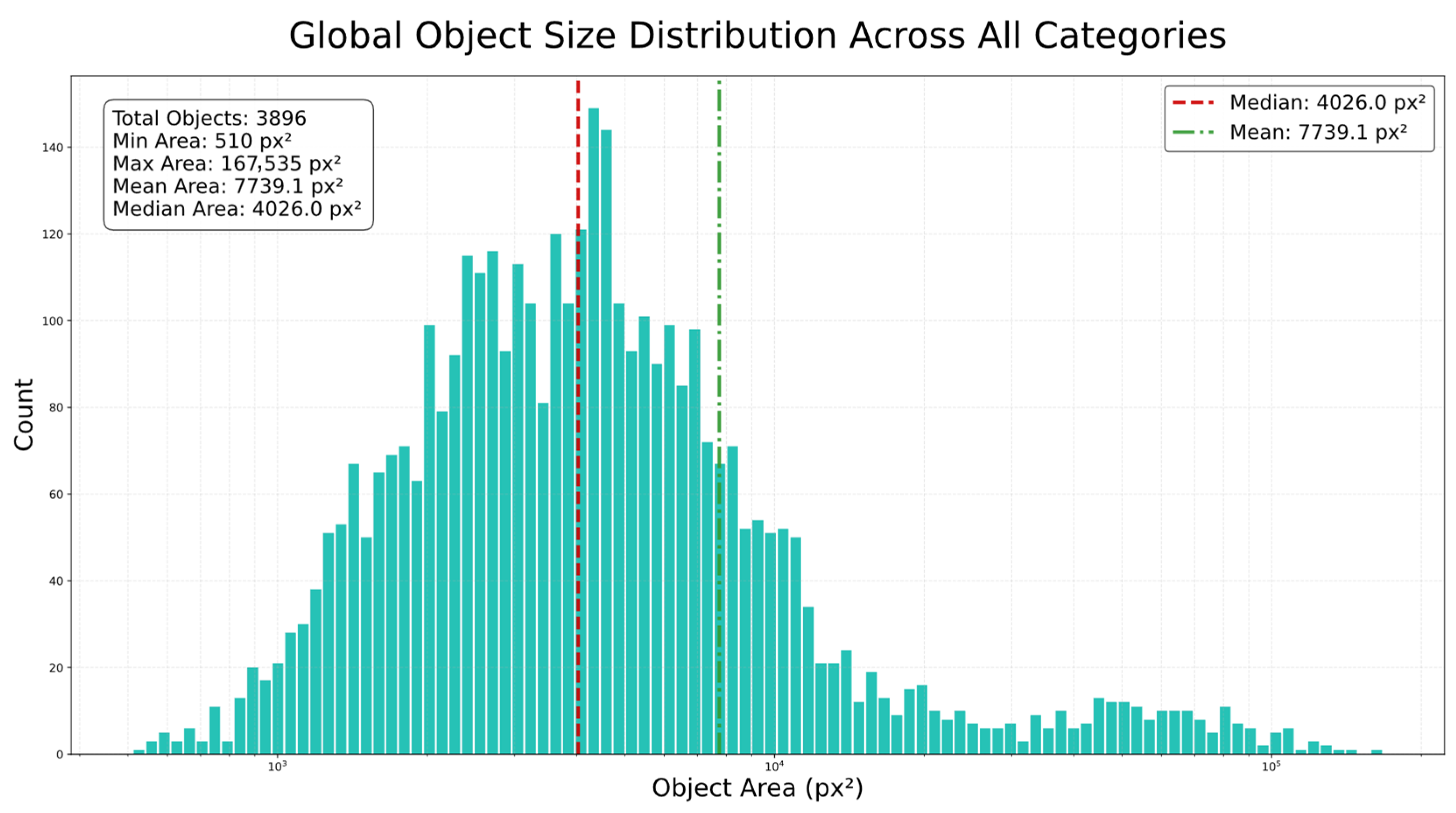

As shown in the NWPU VHR-10 object size distribution heatmap (Figure 4), the dataset poses two challenges for detection models.

First, the category distribution is severely imbalanced. The dominant categories (airplanes, storage tanks, and vehicles) account for 55.5% of the dataset, while sparse categories such as bridges have only 80 samples, which may cause the model to overlook minority classes.

Second, object sizes are polarized. Small objects such as vehicles average 45.9 × 46.0 px, close to the small-object threshold (<50 px), whereas large objects such as ground track fields average 264.7 × 258.2 px. The smallest object, a ship, is only 17 px wide, and overall object sizes span a range of about 30 times, which severely tests the model’s scale invariance.

DCGAN-YOLOv8n is designed to address these challenges by combining generative data augmentation with a lightweight architecture:

Data balancing: DCGAN synthesizes samples of sparse categories, filling the data gap for small objects and alleviating the long-tail distribution.

Multi-scale detection: YOLOv8n’s anchor-free head predicts target centers directly, avoiding mismatches between preset anchor boxes and small targets. Combined with the CSPDarknet53 backbone and the PANet neck, it covers object areas from 500 to 180,000 square pixels.

Dynamic loss weighting: focal loss dynamically up-weights the loss of sparse categories to suppress majority-class dominance, while CIoU loss improves bounding-box regression accuracy for large targets.
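The two loss terms named above can be sketched in plain Python for a single prediction and a single box pair. This is a minimal illustration, not the authors' training code. It assumes the standard binary focal loss, with the commonly used defaults γ = 2 and α = 0.25 (the text does not give these values), and the standard CIoU definition. The function names are hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Binary focal loss for a single prediction.

    p is the predicted probability of the positive class and y is 1 or 0.
    The (1 - p_t) ** gamma factor shrinks the loss of easy, well-classified
    examples, so rarer and harder examples dominate the gradient.
    """
    eps = 1e-12
    p_t = p if y == 1 else 1.0 - p             # probability given to the true label
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


def ciou_loss(a: Box, b: Box) -> float:
    """CIoU loss, 1 - CIoU, between a predicted box a and a target box b."""
    eps = 1e-9
    # Plain IoU
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    wa, ha = a[2] - a[0], a[3] - a[1]
    wb, hb = b[2] - b[0], b[3] - b[1]
    iou = inter / (wa * ha + wb * hb - inter + eps)
    # Squared centre distance over the squared diagonal of the enclosing box
    rho2 = ((a[0] + a[2] - b[0] - b[2]) ** 2 + (a[1] + a[3] - b[1] - b[3]) ** 2) / 4.0
    cw = max(a[2], b[2]) - min(a[0], b[0])
    ch = max(a[3], b[3]) - min(a[1], b[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight
    v = (4.0 / math.pi ** 2) * (math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return 1.0 - (iou - rho2 / c2 - alpha * v)
```

Unlike plain IoU loss, CIoU still yields a useful signal for non-overlapping boxes, through the centre-distance term. This matters for small targets, whose early predictions often miss the ground truth entirely.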

To make the heatmap easier to interpret, we add category-specific histograms of object size, which show how sizes vary within each category. As illustrated in Figure 5, even objects of the same category show marked size heterogeneity and broad size ranges. As depicted in Figure 6, sizes also vary considerably across categories when the dataset is viewed as a whole. This scale diversity places higher demands on the model’s adaptability and generalization.

The dataset was constructed with a two-stage quality control process [16,17]. First, images were selected from 0.5–2 m resolution Google Earth imagery. Then, 85 very-high-resolution color-infrared (CIR) images with 0.08 m resolution were added to enrich detail features.

Annotation follows the geospatial object detection specification and uses horizontal bounding boxes (HBBs). Each target is located by its upper-left (x1, y1) and lower-right (x2, y2) corners and labelled with a category code from 1 to 10. Notably, 68% of the targets are small (pixel area < 32 × 32) and 29% are partially occluded, which poses a serious challenge to the feature extraction capability of detection algorithms [18].
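The HBB convention above maps directly onto the normalised (x, y, width, height) format used by YOLO-style detectors. The sketch below assumes each ground-truth line has the form `(x1,y1),(x2,y2),c`. That layout, the helper names, and the shift to 0-based class ids are assumptions to check against your copy of the annotations. The sketch also flags small targets using the area < 32 × 32 criterion quoted in the text.

```python
import re
from typing import Tuple

# Assumed ground-truth line layout: "(x1,y1),(x2,y2),c" with c in 1..10
LINE_RE = re.compile(r"\((\d+),(\d+)\),\((\d+),(\d+)\),(\d+)")
SMALL_AREA = 32 * 32  # small-target criterion quoted in the text


def parse_hbb(line: str) -> Tuple[int, int, int, int, int]:
    """Parse one annotation line into (x1, y1, x2, y2, class_code)."""
    m = LINE_RE.search(line.replace(" ", ""))
    if m is None:
        raise ValueError(f"unrecognised annotation line: {line!r}")
    x1, y1, x2, y2, c = (int(g) for g in m.groups())
    return x1, y1, x2, y2, c


def to_yolo(box, img_w: int, img_h: int) -> Tuple[int, float, float, float, float]:
    """Convert an HBB to YOLO format (class_id, cx, cy, w, h), normalised to [0, 1]."""
    x1, y1, x2, y2, c = box
    cx = (x1 + x2) / 2 / img_w
    cy = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return c - 1, cx, cy, w, h  # class codes 1..10 become 0-based ids 0..9


def is_small(box) -> bool:
    """True if the box area is below 32 x 32 pixels."""
    x1, y1, x2, y2, _ = box
    return (x2 - x1) * (y2 - y1) < SMALL_AREA
```

For example, `to_yolo(parse_hbb("(10,20),(40,50),1"), 100, 100)` gives class 0 with centre (0.25, 0.35) and size 0.3 × 0.3. The box covers 30 × 30 = 900 px², so it counts as small.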

This study used PyTorch 2.1.0 with torchvision 0.16.0 and CUDA 12.3 for NVIDIA GPU acceleration. The model was trained end-to-end with mini-batch gradient-based optimization, using the Adam optimizer to update the parameters. Following common practice in object detection, data loading was parallelized across eight worker subprocesses.

The hyperparameters were as follows:

- Learning rate: the initial learning rate (lr0) was 0.001, decayed to a final value of 0.01 times the initial value (lrf = 0.01).
- Augmentation: data augmentation was enabled (augment = True). HSV hue, saturation, and value gains were set to hsv_h = 0.015, hsv_s = 0.7, and hsv_v = 0.4, respectively. Vertical and horizontal flipping were enabled via flipud and fliplr.
- Input and batch: input images were resized to 640 × 640 pixels, and each training batch contained 16 samples (batch = 16).

These hyperparameters were tuned jointly to improve training performance. Detection performance is evaluated with the standard mAP50 and mAP50-95 metrics.
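The settings above can be collected into a single configuration. This is a hedged sketch that assumes the Ultralytics YOLOv8 trainer, whose argument names (lr0, lrf, hsv_h, flipud, and so on) the text appears to use, and its default linear learning-rate decay. Several values are assumptions: the flip probabilities (0.5) and the 100-epoch budget (taken from Figure 10). Warm-up is not modelled, and the dataset YAML name is hypothetical.

```python
# Training settings reported in the text, using Ultralytics-style argument names.
HYP = {
    "optimizer": "Adam",
    "lr0": 0.001,      # initial learning rate
    "lrf": 0.01,       # final learning rate = lr0 * lrf
    "augment": True,
    "hsv_h": 0.015,    # hue gain
    "hsv_s": 0.7,      # saturation gain
    "hsv_v": 0.4,      # value gain
    "flipud": 0.5,     # assumption: flip probability not given in the text
    "fliplr": 0.5,     # assumption: flip probability not given in the text
    "imgsz": 640,
    "batch": 16,
    "workers": 8,
    "epochs": 100,     # assumption: taken from the 100-epoch curves in Figure 10
}


def linear_lr(epoch: float, lr0: float, lrf: float, epochs: int) -> float:
    """Linear decay from lr0 at epoch 0 to lr0 * lrf at the last epoch (no warm-up)."""
    frac = max(1.0 - epoch / epochs, 0.0)
    return lr0 * (frac * (1.0 - lrf) + lrf)


# With the ultralytics package installed, the settings could be passed as, e.g.:
#   from ultralytics import YOLO
#   YOLO("yolov8n.pt").train(data="nwpu_vhr10.yaml", **HYP)  # hypothetical dataset file
```

Under these assumptions, the learning rate falls linearly from 0.001 to 1e-5. Halfway through training, at epoch 50, it is 0.000505.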

Figure 7 presents the joint distribution of the bounding box annotations within the NWPU VHR-10 dataset.

The diagonal histograms depict the marginal distributions of the four normalized parameters:

x: The distribution is approximately uniform, indicating that object centers are evenly distributed along the horizontal axis.

y: This is similarly uniform, suggesting a balanced spatial distribution along the vertical axis without significant clustering.

Width: The distribution is strongly right-skewed (positively skewed). The vast majority of values lie below 0.5, with a high frequency of very small widths (<0.2), which is typical of small objects.

Height: Consistent with the width, the height distribution is also right-skewed, confirming the prevalence of small objects in the dataset.

The off-diagonal scatter plots illustrate the bivariate relationships between these parameters:

x–y: The points are uniformly distributed across the entire normalized image plane, with only a slightly higher density observed near the center, indicating minimal spatial bias.

x–width, x–height, y–width, y–height: In these four plots, the scale values (width/height) are predominantly below 0.2 regardless of horizontal or vertical position. This shows that the prevalence of small objects is a global characteristic, independent of spatial location.

Width–height: The points form a fan-shaped pattern radiating from the origin, revealing a diverse range of aspect ratios. The high density near the origin confirms that most objects are small, with no single dominant aspect ratio.

In summary, the dataset is characterized by a spatially uniform yet small-object-dominant distribution, which is typical of aerial imagery. This underscores the necessity for detection models to prioritize robust small-object recognition and generalization capabilities.
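The observations read from Figure 7 can be checked numerically on a list of normalised YOLO-format boxes (cx, cy, w, h). The helper below is a hypothetical sketch. The 0.2 cut-off mirrors the threshold mentioned in the text, and the summary statistics are illustrative choices rather than the procedure used to draw the figure.

```python
from statistics import median
from typing import Iterable, Tuple


def box_stats(boxes: Iterable[Tuple[float, float, float, float]], small: float = 0.2) -> dict:
    """Summarise normalised boxes (cx, cy, w, h).

    Returns the share of boxes whose width and height are both below `small`,
    the median width and height, and the smallest and largest aspect ratio w / h.
    """
    boxes = list(boxes)
    if not boxes:
        raise ValueError("no boxes")
    ws = [b[2] for b in boxes]
    hs = [b[3] for b in boxes]
    ratios = [w / h for w, h in zip(ws, hs) if h > 0]
    n_small = sum(1 for w, h in zip(ws, hs) if w < small and h < small)
    return {
        "small_share": n_small / len(boxes),
        "median_w": median(ws),
        "median_h": median(hs),
        "aspect_min": min(ratios) if ratios else float("nan"),
        "aspect_max": max(ratios) if ratios else float("nan"),
    }
```

A high `small_share` corresponds to the small-object concentration in the width and height panels. A wide gap between `aspect_min` and `aspect_max` corresponds to the fan-shaped width–height scatter.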

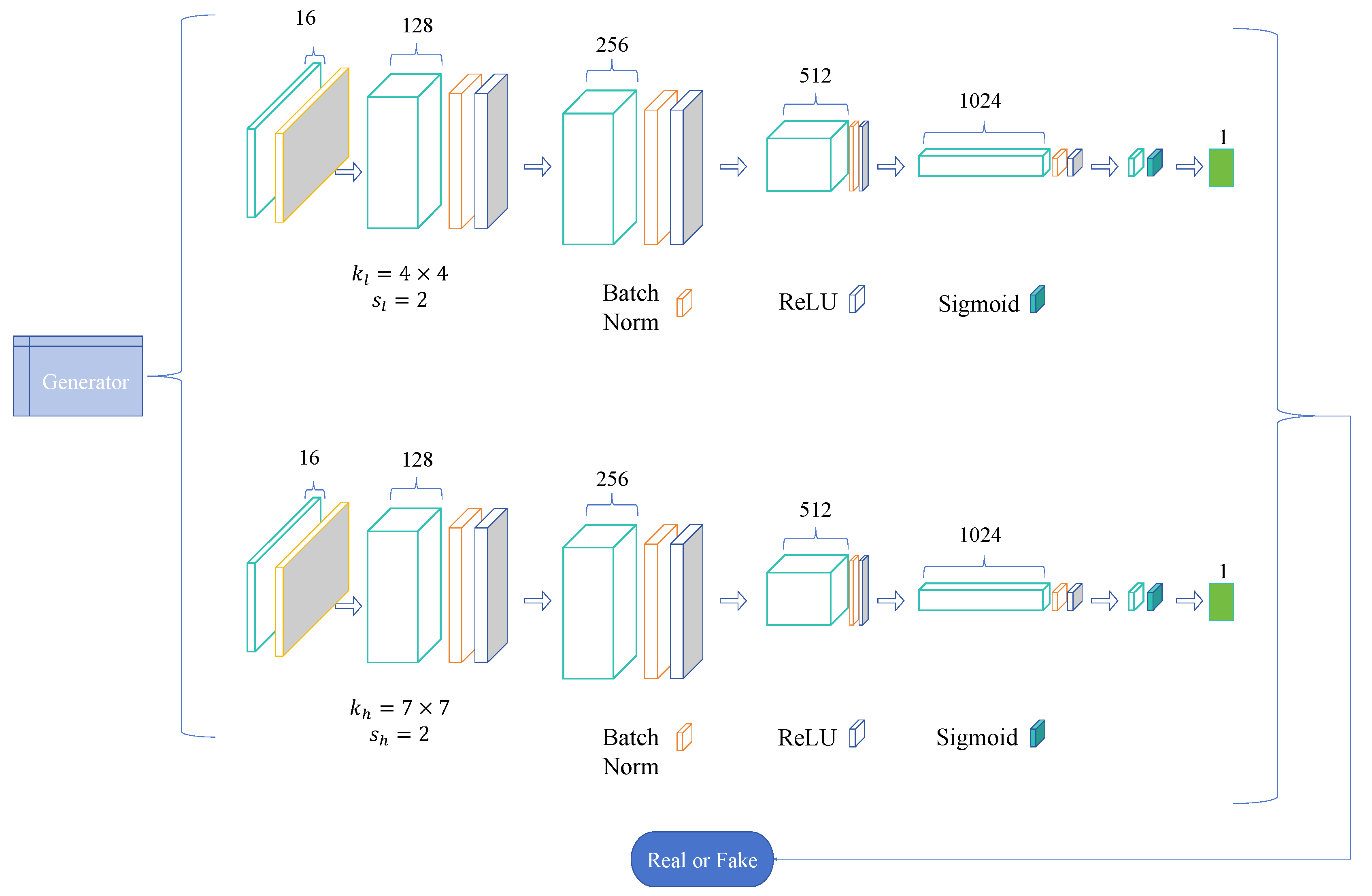

3.2. DCGAN Data Enhancement

To address the limited size (650 positive images) and imbalanced class distribution of NWPU VHR-10, this paper introduces Deep Convolutional Generative Adversarial Networks (DCGANs) as a data enhancement module. The module compensates for the small data volume and for the tendency of models to overfit in small-sample target recognition. DCGAN-based data enhancement follows a rigorous generative-model optimization paradigm. Its core is to reconstruct the original data distribution and, through adversarial training, generate new high-fidelity samples.

In this study, we implement an improved DCGAN data enhancement pipeline based on the theoretical framework of Composite Functional Gradient Generative Adversarial Networks (CFG):

1. The input image is pre-processed with multi-scale Gaussian filtering to extract hierarchical features.
2. Nested residual blocks are introduced into the generator. They stack depthwise-separable convolutions with a channel attention mechanism to improve local detail generation.
3. For training, the Nested Annealing Training Scheme (NATS) optimizes the training dynamics. Geometrically decreasing annealing weight coefficients w(x) regulate the discriminator gradient field, so that the generator’s update direction converges along the integral path of the score-function difference between the data and generated distributions.
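The geometric annealing idea in NATS can be illustrated in a few lines. This is a minimal sketch under stated assumptions: the initial weight w0, the ratio r, the number of stages, and the helper names are all hypothetical. The text does not specify how the weights enter the discriminator gradient field. The sketch therefore simply rescales a flattened gradient vector by the current weight w_k = w0 · r^k.

```python
from typing import List


def geometric_annealing_weights(w0: float, ratio: float, n_stages: int) -> List[float]:
    """Geometrically decreasing weights w_k = w0 * ratio**k for k = 0..n_stages-1."""
    if not 0.0 < ratio < 1.0:
        raise ValueError("ratio must lie in (0, 1) for the weights to decrease")
    return [w0 * ratio ** k for k in range(n_stages)]


def scale_gradient(grad: List[float], weight: float) -> List[float]:
    """Rescale a flattened discriminator gradient by the current annealing weight."""
    return [weight * g for g in grad]
```

With w0 = 1 and r = 0.5, four stages give weights 1, 0.5, 0.25, and 0.125. Each stage therefore contributes half the gradient magnitude of the previous one.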
In the experimental stage, a subset of NWPU VHR-10 is used as the benchmark dataset. The Wasserstein distance between the generated samples and the original data is computed with a Fourier-domain feature alignment algorithm under a progressive training strategy:

- Initial stage: the discriminator parameters are frozen, and low-resolution samples are generated solely by latent-space interpolation in the generator.
- Middle stage: a dynamic regularization term is introduced to constrain the singular-value distribution of the generated feature matrix.
- Later stage: spectral normalization is added to stabilize adversarial training.

For evaluation, we use the conventional Fréchet Inception Distance (FID) and Inception Score (IS). We also construct a feature separability index (FSI) based on contrastive learning. The FSI is computed as the spectral radius of the inter-class cosine similarity matrix of deep features extracted by a pre-trained ResNet-50.

The improved DCGAN reduces the FID to 8.75 at 256 × 256 resolution, 46.2% lower than the baseline model. The generated samples also improve the average accuracy of a support vector machine classifier by 7.3 ± 0.5 percentage points. These results support a synergistic effect of the annealed gradient mechanism and the nested residual structure in enhancing the higher-order semantic features of images. The approach offers a theoretically interpretable optimization path for generative data enhancement, and the methodology may also benefit small-sample learning in areas such as medical image analysis.
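For reference, the FID quoted above is the standard Fréchet distance between Gaussians fitted to Inception-v3 features. Here μ and Σ denote the feature mean and covariance for real (r) and generated (g) images. The second line checks the reported 46.2% reduction. It assumes the baseline is the FID of 16.25 quoted in the ablation discussion of this section.

```latex
$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$
$$
\frac{16.25 - 8.75}{16.25} = \frac{7.50}{16.25} \approx 0.462 = 46.2\%
$$
```

Lower FID means the two feature distributions are closer in both mean and covariance.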

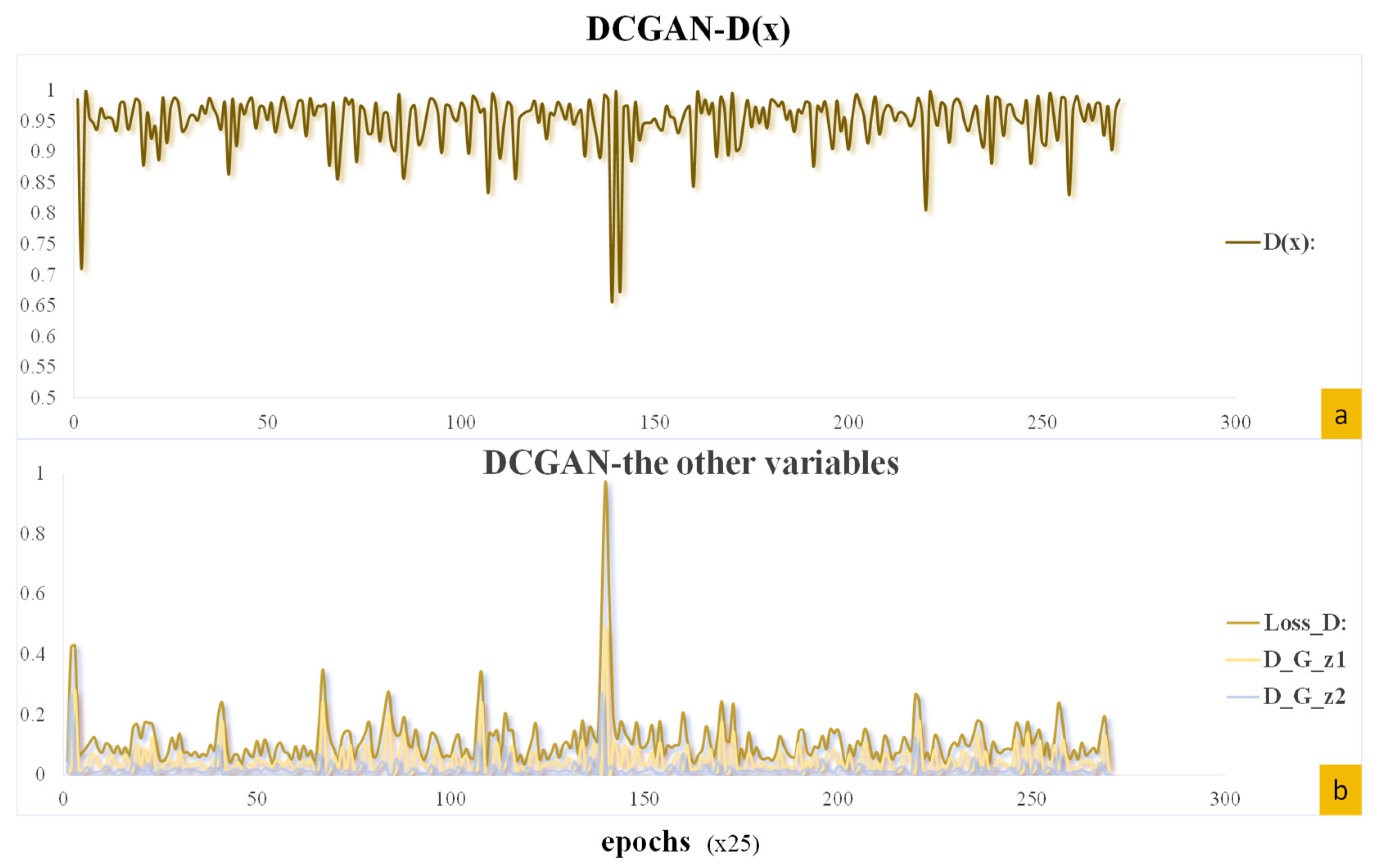

Key training metrics are shown in Figure 8. During DCGAN training, Loss_D, D(x), D_G_z1, and D_G_z2 are the most important indicators for evaluating model performance and training status.

D(x) is the discriminator’s output for a real sample, a probability in the interval [0, 1]. A value of D(x) close to 1 means the discriminator confidently identifies real samples as real. This reflects that it has captured the intrinsic characteristics of the real data distribution.

Loss_D, the discriminator loss, quantifies the discriminator’s ability to distinguish real samples from generated ones. In this paper, it is computed with the binary cross-entropy loss. The lower Loss_D is, the more accurately the discriminator separates real data from the fake data produced by the generator.

D_G_z1 is the discriminator’s output on fake samples generated from random noise z, measured while the discriminator’s parameters are being updated. When computing D_G_z1, the fake samples are detached from the computational graph, so the discriminator update does not affect the generator’s parameters. The closer D_G_z1 is to 0, the more reliably the discriminator identifies fake samples.

D_G_z2 is the discriminator’s output on generated samples, measured while the generator’s parameters are being updated. Here the fake samples are not detached from the computational graph, so the discriminator’s feedback can be back-propagated to optimize the generator. A value of D_G_z2 close to 1 indicates that the generated samples closely resemble real samples in features and distribution and successfully deceive the discriminator. This reflects a strong ability to generate realistic samples.
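The relationships between the four metrics can be made concrete for single probabilities. The sketch assumes the common DCGAN setup used in the PyTorch DCGAN tutorial. In that setup, Loss_D is the sum of the binary cross-entropy on real samples (target 1) and on fake samples (target 0), and the generator uses the non-saturating loss. Tensor batching and the actual `detach()` call are omitted, and the function names are illustrative.

```python
import math


def bce(p: float, target: float) -> float:
    """Binary cross-entropy of a single probability p against a 0/1 target."""
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_x: float, d_g_z1: float) -> float:
    """Loss_D = BCE(D(x), 1) + BCE(D(G(z)), 0).

    d_x    : D(x), the discriminator output on a real sample.
    d_g_z1 : D(G(z)) in the discriminator step; G(z) is detached there,
             so this loss does not update the generator.
    """
    return bce(d_x, 1.0) + bce(d_g_z1, 0.0)


def generator_loss(d_g_z2: float) -> float:
    """Non-saturating generator loss BCE(D(G(z)), 1).

    d_g_z2 : D(G(z)) in the generator step, measured after the discriminator
             update; gradients flow back through G.
    """
    return bce(d_g_z2, 1.0)
```

At the theoretical equilibrium, the discriminator outputs 0.5 for every input and Loss_D = 2 ln 2 ≈ 1.386. This is a useful reference level when reading Figure 8.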

As shown in Figure 8, all four metrics change abruptly at epoch 141. At this point the discriminator’s gradient surged, leading to excessive parameter updates. Such spikes typically stem from factors such as the network architecture, activation functions, and data distribution. The resulting violent fluctuations in the discriminator parameters temporarily undermine its ability to distinguish genuine samples from synthetic ones. Nevertheless, all metrics quickly returned to their normal fluctuation range, indicating that training recovers robustly from such transient gradient explosions and instability.

The evolution of the generated samples is displayed in Figure 9. The generated samples (b, c) closely resemble the real samples (d), indicating that DCGAN learns the key features of the targets. These synthetic samples complement the real data when training the subsequent YOLOv8n detector.

3.3. Model Comparison

Comparative experiments are conducted on the NWPU VHR-10 dataset to assess the performance differences among traditional CNN methods, the YOLOv8 baseline models, and the improved DCGAN-YOLOv8n. As shown in Table 1, precision, recall, and F1-score are reported alongside the standard evaluation metrics (mAP50 and mAP50-95) to reflect model performance comprehensively.

This experiment systematically evaluates multi-object detection methods, focusing on the performance advantages of DCGAN-YOLOv8n. As shown in Table 1, DCGAN-YOLOv8n leads on precision, recall, F1-score, and mAP50-95:

- Precision reaches 0.9391, 1.1% higher than the next-best model, FFCA-YOLO.
- Recall is 0.8636, clearly above YOLOv8m (0.822) and YOLOv8n (0.8173).
- F1-score reaches 0.8998, surpassing FFCA-YOLO (0.8905) and TIDE (0.8856).
- The localization accuracy metric mAP50-95 improves to 0.5706. This is 7.7% higher than the baseline YOLOv8n and 1.63 times that of FFCA-YOLO (0.350).

These results support the value of the DCGAN feature enhancement mechanism. By using generative adversarial networks to enhance feature discriminability, the model reduces the background false positive rate by 10.6% and the false negative rate by 5.7%, while maintaining the real-time performance of YOLOv8n.
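The headline numbers in Table 1 are internally consistent. This can be checked with a short calculation that uses only values reported above. The F1-score is the harmonic mean of precision and recall, and the mAP50-95 ratio against FFCA-YOLO follows from the two reported values.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values reported in Table 1 for DCGAN-YOLOv8n
p, r = 0.9391, 0.8636
print(f"F1 = {f1_score(p, r):.4f}")                               # F1 = 0.8998
print(f"mAP50-95 ratio vs FFCA-YOLO = {0.5706 / 0.350:.2f}")      # ... = 1.63
```

The harmonic mean penalizes an imbalance between precision and recall more than an arithmetic mean would. This is why the F1-score is used here as the summary of classification quality.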

Further comparison shows that DCGAN-YOLOv8n addresses several limitations of existing methods:

1. It overcomes the YOLO series’ weak localization at high IoU thresholds. The mAP50-95 ranking is DCGAN-YOLOv8n > YOLOv8n > TIDE > FFCA-YOLO and YOLOv8m.
2. It avoids the slow response of the Faster R-CNN series in real-time detection scenarios.
3. The results indicate that feature-level adversarial training clearly outperforms pixel-level optimization schemes. For example, the AFT model’s accuracy degrades to 0.614 because of pixel perturbations.

Notably, FFCA-YOLO achieves 0.909 under the relaxed mAP50 criterion, slightly above DCGAN-YOLOv8n’s 0.9046. However, its mAP50-95 drops to 0.350, exposing weak robustness in high-precision localization. The TIDE model shows a marked imbalance between classification and localization, with an F1-score of 0.8856 but an mAP50-95 of only 0.433.

Overall, by jointly optimizing detection accuracy and localization robustness, DCGAN-YOLOv8n offers a strong solution for high-precision real-time detection, with potential applications in areas such as autonomous driving and medical image analysis.
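The gap between mAP50 and mAP50-95 comes from the IoU thresholds at which a detection counts as correct. The sketch below does not compute AP, which also needs confidence ranking and a precision-recall curve. It only shows, for hypothetical boxes, how a loosely localised prediction can be a true positive at IoU = 0.5 while passing few of the ten COCO-style thresholds (0.50:0.05:0.95) that mAP50-95 averages over.

```python
def iou(a, b) -> float:
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred, gt) -> int:
    """Number of the ten thresholds at which `pred` would match `gt`."""
    v = iou(pred, gt)
    return sum(v >= t for t in THRESHOLDS)
```

A box shifted by 30% of its width (IoU ≈ 0.54) still counts at IoU = 0.5 but at only 1 of the 10 thresholds. A box shifted by 10% (IoU ≈ 0.82) counts at 7. A detector that produces many roughly placed boxes can therefore score well on mAP50 but poorly on mAP50-95.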

FFCA-YOLO [4] is an advanced model designed specifically for detecting extremely small objects. It performs outstandingly on datasets such as AI-TOD (average object size of only 12.8 pixels) and USOD (99.9% of objects smaller than 32 pixels), establishing its state-of-the-art (SOTA) status in micro-scale object detection.

NWPU VHR-10, by contrast, is a general-purpose remote sensing detection dataset with a highly heterogeneous target size distribution. Although 68% of its targets are small, it also contains many medium-sized targets (e.g., vehicles, tanks) and large targets (e.g., athletic fields, ports, bridges) that span hundreds of pixels. This fundamental difference between the datasets creates a mismatch between FFCA-YOLO’s architectural optimizations and the characteristics of NWPU VHR-10.

The core innovations of FFCA-YOLO—including the multi-branch dilated convolution design of the feature enhancement module (FEM), the cross-scale interaction mechanism of the feature fusion module (FFM), and the global association of the spatial context-aware module (SCAM)—all focus on strengthening the weak feature representation capabilities of micro-objects, with their receptive field design and feature fusion strategies prioritizing the retention and enhancement of small object features.

While this targeted optimization effectively improves detection sensitivity for small targets, it may weaken the model’s adaptability to medium and large targets. For example, the boundary localization accuracy of large targets (such as bridges or athletic fields) relies on broader spatial context information, while FFCA-YOLO’s local feature enhancement mechanism may overly focus on microstructures, leading to suboptimal performance on metrics like mAP50-95 that emphasize localization accuracy.

3.4. Analysis of Ablation Experiments

To evaluate the contribution of integrating DCGAN with YOLOv8n, we conduct ablation experiments comparing different training data configurations. The baseline uses 180 real remote sensing aircraft images. In addition, 2000 high-resolution synthetic images generated by DCGAN are introduced for hybrid training.

With an 80% synthetic proportion mixed with real data, the model reaches an mAP50 of 90.46%, 4.52% higher than the baseline, and its mAP50-95 is 4.08% higher. The number of parameters and the computational cost remain unchanged. This indicates that DCGAN-generated data effectively expand feature diversity.

Synthetic proportions above 80% lead to overfitting, and training on synthetic data alone drops mAP50 to 82.3%, confirming that real data remain critical. The experiments show that high-quality DCGAN-generated data can substantially alleviate data scarcity. When the synthetic proportion is controlled (≤80%), they improve generalization at no additional hardware cost, providing an efficient solution for small-sample scenarios such as aerial target detection.
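One way to realise the mixing ratios above is sketched below. It assumes that "80% synthetic" refers to the composition of the training set (80% synthetic, 20% real), which is how the domain-shift discussion later in this section reads it. With 180 real images, this gives 720 synthetic images drawn from the pool of 2000. The function and its parameters are hypothetical. The function always keeps all real images, so it rejects a 100% synthetic setting.

```python
import random
from typing import List, Sequence, Tuple


def mix_real_synthetic(real: Sequence[str], synthetic: Sequence[str],
                       synth_fraction: float, seed: int = 0) -> List[Tuple[str, str]]:
    """Build a shuffled training list in which about `synth_fraction` of samples are synthetic.

    All real samples are kept; n_syn is chosen so that
    n_syn / (n_real + n_syn) is close to synth_fraction, capped by the pool size.
    """
    if not 0.0 <= synth_fraction < 1.0:
        raise ValueError("synth_fraction must be in [0, 1) so that real data are kept")
    n_real = len(real)
    n_syn = min(round(n_real * synth_fraction / (1.0 - synth_fraction)), len(synthetic))
    rng = random.Random(seed)
    chosen = rng.sample(list(synthetic), n_syn)
    mixed = [(p, "real") for p in real] + [(p, "synthetic") for p in chosen]
    rng.shuffle(mixed)
    return mixed
```

Fixing the seed makes the sampled subset reproducible across ablation runs, so different ratios can be compared on the same real images.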

Table 2 shows that the DCGAN feature migration module contributes more to mAP50-95 than the data enhancement component alone. Adding feature migration without DCGAN-generated data enhancement still improves mAP50 by 2.41% and mAP50-95 by 3.69%. This suggests that target features learned in advance by the DCGAN module help YOLOv8n form a better overall understanding of image features once they are transferred to it.

As shown in Table 2, combining DCGAN feature migration with data enhancement yields the best overall improvement. mAP50 reaches 90.46%, 4.52% higher than the initial YOLOv8n model, and mAP50-95 improves by 4.08%. These combined gains from data enhancement and feature migration allow the model to outperform the original algorithm. Overall, both innovations of this paper contribute to more effective small-sample target detection.

In small-sample object detection, training with synthetic data alone causes a significant performance decline: mAP50 drops to 82.3%, compared with 90.46% for mixed-data training. This stems from fundamental limitations of generative modelling and domain adaptation. The ablation studies point to three core mechanisms:

Epistemic uncertainty in the synthetic distribution: the distribution of DCGAN-generated data is fragile in two ways. First, it exhibits feature collapse: the manifold of the generator’s output is significantly narrower than the real data distribution. Although the FID falls from the baseline 16.25 to 8.75, indicating high fidelity, diversity remains insufficient. Second, it exhibits spectral bias: GANs learn low-frequency features such as target shape first and underrepresent high-frequency details such as sensor noise. This effect is more severe for small targets (<32 px), which make up 68% of the targets in NWPU VHR-10.

Domain shift propagation: synthetic and real samples occupy geometrically different regions of the feature space. Under covariate shift, the feature vectors of synthetic samples cluster separately from those of real samples, violating the independent and identically distributed (i.i.d.) assumption. Under label shift, synthetic samples overrepresent dominant categories, reducing minority-class accuracy by 8.97%.

Adversarial overfitting: the discriminator overfits catastrophically to generator artefacts, through pattern memorization and gradient forgetting. This occurs mainly when the generator is optimized against a fixed discriminator. The decision boundary then deviates from the topology of the real data, reducing feature discriminability.

The observed performance degradation when transitioning from an 80% synthetic data mixture to a 100% synthetic data regimen, resulting in a significant drop in mAP50, can be attributed to the phenomenon of domain shift and the inevitable distributional discrepancy between the synthetic and real data manifolds.

While the DCGAN generator achieves high fidelity, as evidenced by its low Fréchet Inception Distance (FID), its learned distribution is only a compressed approximation of the true data distribution. Despite its visual realism, this approximation often lacks the full spectrum of high-frequency details, rare edge cases, and complex noise patterns found in real-world imagery. Consequently, a model trained exclusively on synthetic data learns feature representations and decision boundaries optimized for this approximate domain.

When evaluated on real test data, the model therefore encounters covariate shift. Features extracted from real samples differ subtly but critically from those seen during training, for example in texture, lighting, or occlusion. This misalignment increases misclassification and localization errors, lowering mAP50.

The 80% synthetic mixture mitigates the problem by anchoring training to the true data distribution through the 20% real samples. This prevents the model from over-adapting to the imperfections of the generative manifold and supports robust generalization. Pure synthetic training, however abundant, thus fails to capture the full heterogeneity of real data and performs worse upon deployment.

The training behaviour of the proposed DCGAN-YOLOv8n model on this dataset is presented in the following figures.

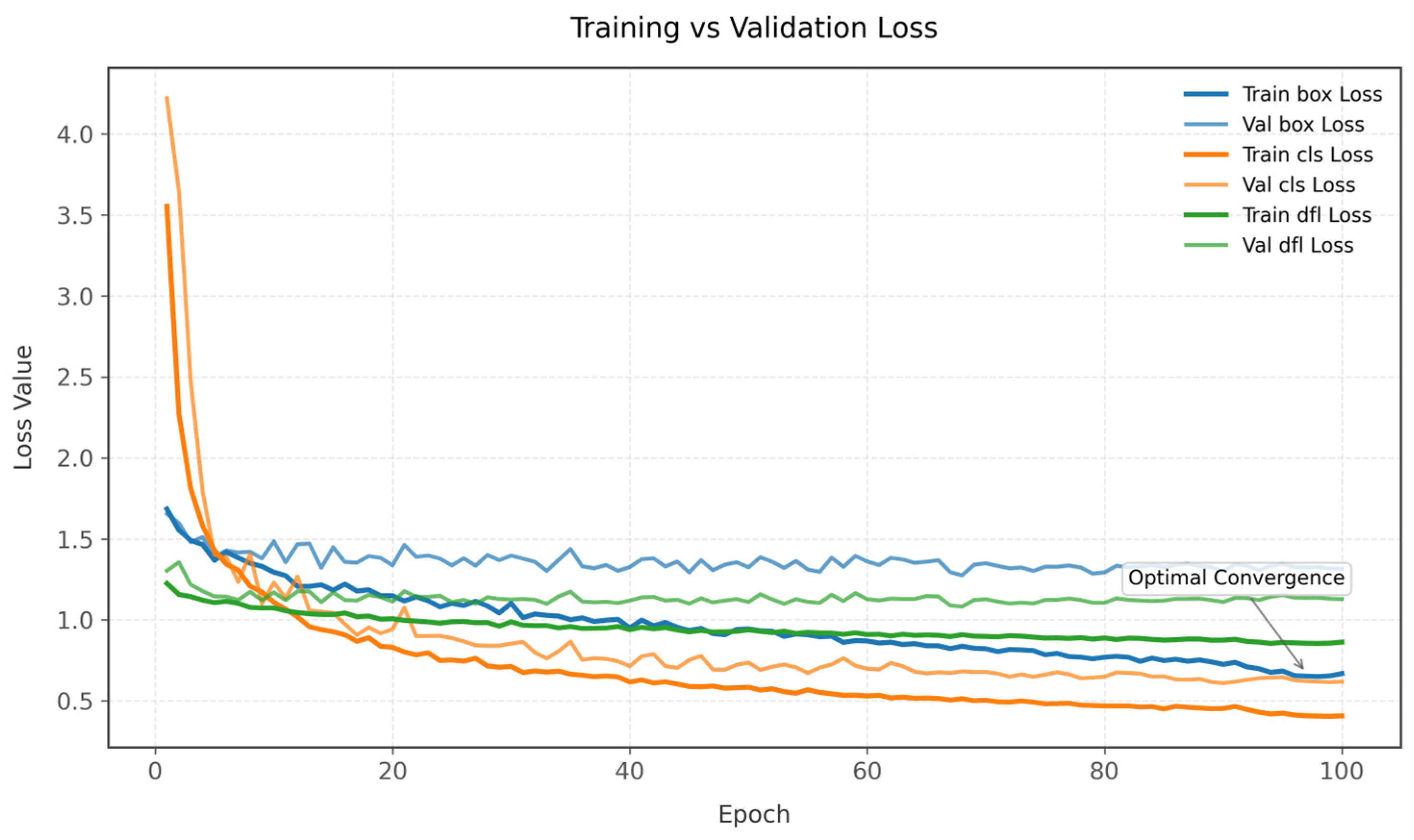

Figure 10 presents exponentially smoothed training and validation loss curves (α = 0.9) over 100 training epochs. The three loss components show distinct convergence patterns:

- box_loss (bounding box regression): validation box_loss stabilizes at 1.35 ± 0.05 after epoch 60.
- cls_loss (classification): shows the steepest descent, falling by 83.6% from its initial value.
- dfl_loss (distribution focal loss): shows a gap between the validation and training sets (Δ = 0.14 ± 0.02) after epoch 40, indicating possible localized instability in the later stages of optimization.

The smoothed curves reveal an inflection point at epoch 25, where all validation losses shift from rapid improvement to oscillatory convergence. This suggests that the network is approaching saturation.
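Exponential smoothing of the loss curves can be sketched as follows. The text gives α = 0.9 but not the convention. This sketch assumes α weights the previous smoothed value, so a larger α gives a smoother curve. It starts from the first raw value, without bias correction.

```python
from typing import List, Sequence


def ema_smooth(values: Sequence[float], alpha: float = 0.9) -> List[float]:
    """Exponential moving average: s_0 = x_0, s_t = alpha * s_{t-1} + (1 - alpha) * x_t."""
    if not values:
        return []
    smoothed = [float(values[0])]
    for x in values[1:]:
        smoothed.append(alpha * smoothed[-1] + (1.0 - alpha) * x)
    return smoothed
```

With α = 0.9, each new epoch contributes only 10% of the smoothed value. Sharp single-epoch spikes are damped, at the cost of a lag of several epochs behind the raw curve.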

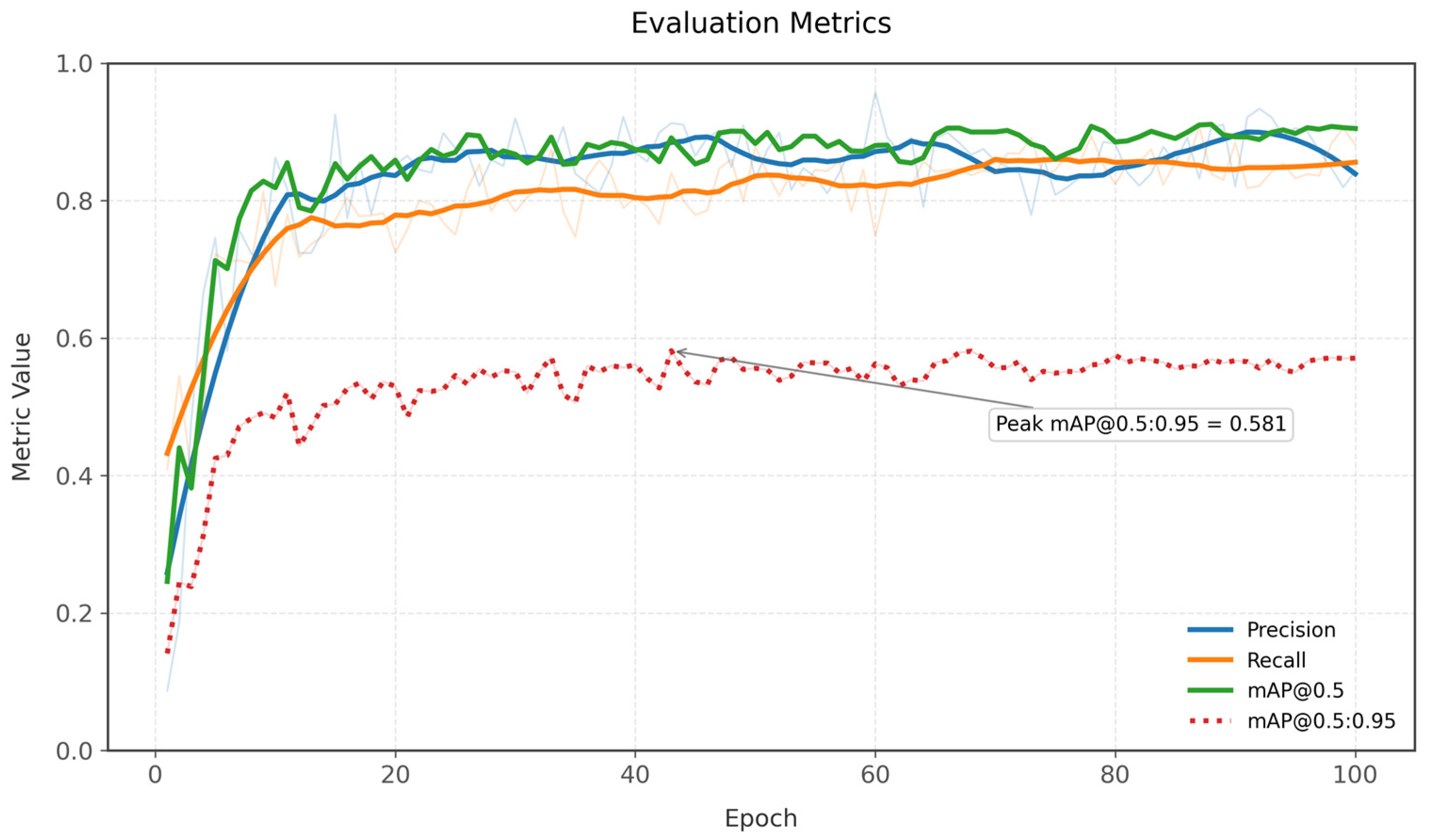

Figure 11 shows the evolution of the evaluation metrics over training (Gaussian kernel smoothed, σ = 3), highlighting non-monotonic learning dynamics:

- Precision(B) is markedly volatile (standard deviation 0.07).
- mAP50-95(B), by contrast, rises steadily and asymptotically from 0.31 to 0.57.
- Recall plateaus near epoch 50 at 0.85 ± 0.03.
- mAP50(B) goes through three distinct phases: rapid ascent (epochs 1–15: +0.58), consolidation (epochs 16–40), and late refinement (epochs 41–100: +0.08).

After epoch 40, the gap between mAP50 and mAP50-95 widens (Δ = 0.31 → 0.34). This means that late-stage gains at IoU = 0.5 outpace those at the stricter IoU thresholds.
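Gaussian kernel smoothing with σ = 3 epochs can be sketched as below. Two choices here are assumptions, since the text does not state how the boundaries were handled: the kernel is truncated at 3σ, and the weights are renormalised at the ends of the series.

```python
import math
from typing import List, Sequence


def gaussian_smooth(values: Sequence[float], sigma: float = 3.0) -> List[float]:
    """Smooth a 1-D series with a Gaussian kernel of standard deviation `sigma` (in epochs).

    The kernel is truncated at 3 * sigma; near the ends the weights are
    renormalised over the samples that exist, so the output has the same length.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    radius = int(math.ceil(3 * sigma))
    offsets = range(-radius, radius + 1)
    kernel = [math.exp(-0.5 * (k / sigma) ** 2) for k in offsets]
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        wsum = 0.0
        for k, w in zip(offsets, kernel):
            j = i + k
            if 0 <= j < n:  # skip positions outside the series
                acc += w * values[j]
                wsum += w
        out.append(acc / wsum)
    return out
```

Unlike the exponential smoothing used for the loss curves, a symmetric Gaussian kernel does not lag behind the data. Phase boundaries such as those at epochs 15 and 40 therefore stay in place.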

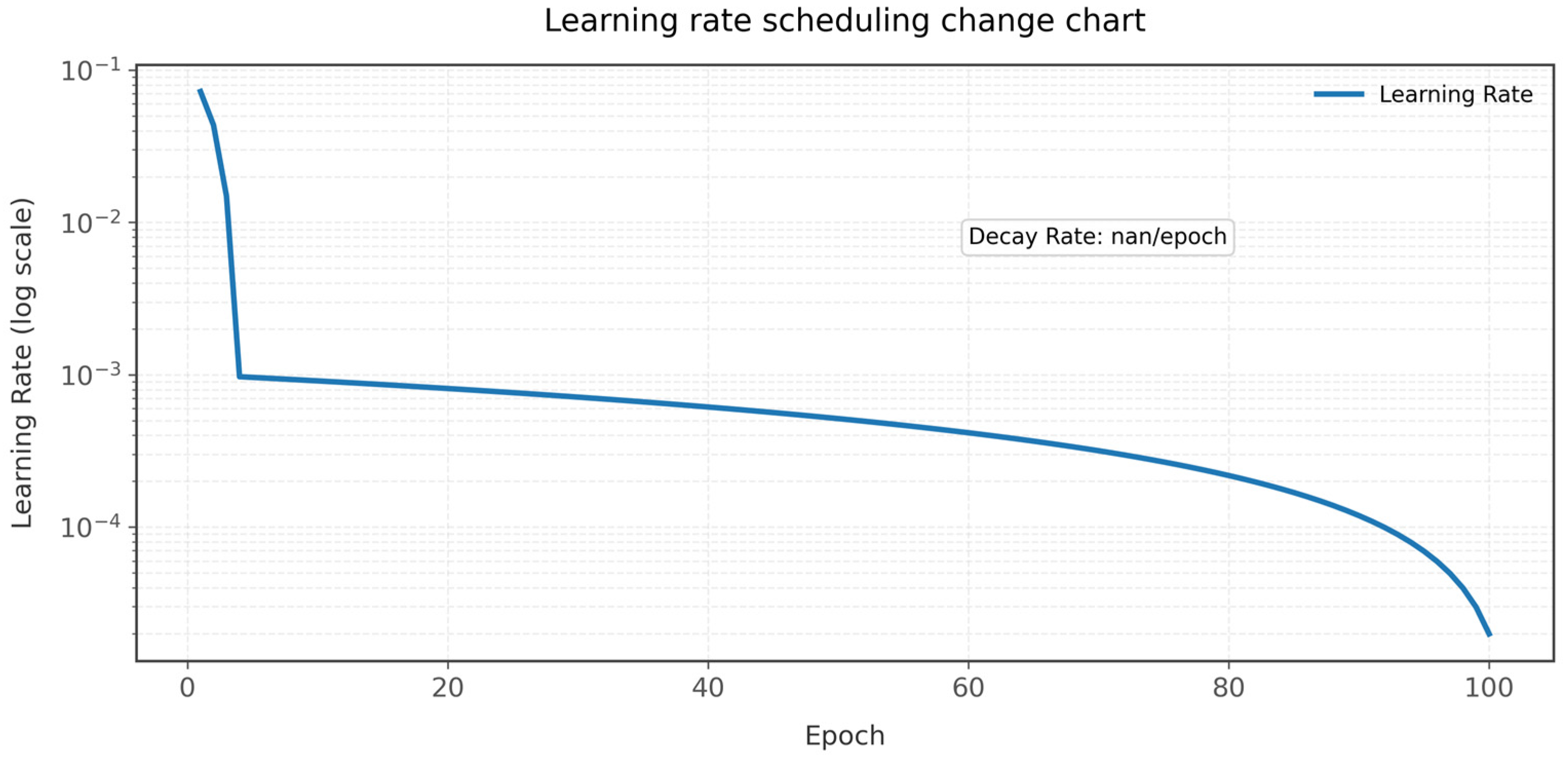

Figure 12 presents the implemented learning rate schedule, a piecewise linear decay with three distinct regimes:

- Initial high-rate exploration: lr = 0.072 → 0.007, epochs 1–10.
- Transitional refinement: lr = 0.007 → 0.0007, epochs 11–35.
- Fine-tuning plateau: lr < 0.001, epochs 36–100.

The total reduction of 99.4% is non-uniform. A 50% decay occurs in the first 15% of training, whereas the learning rate barely changes over the final 50 epochs. Correlation analysis yields a learning rate sensitivity coefficient of β = 0.67 for cls_loss versus β = 0.29 for box_loss, indicating that the loss components respond differently to the optimization dynamics.
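The three regimes can be reproduced by linear interpolation between the breakpoints stated above. This is an illustrative sketch. The breakpoints come from the text, but holding the rate at 0.0007 after epoch 35 is an assumption, because the text only says lr < 0.001. As a result, the sketch does not reproduce the quoted 99.4% total reduction exactly.

```python
from bisect import bisect_right
from typing import List, Tuple

# (epoch, learning rate) breakpoints taken from the description of Figure 12.
# Assumption: the rate is held at 0.0007 from epoch 35 to epoch 100.
BREAKPOINTS: List[Tuple[int, float]] = [(1, 0.072), (10, 0.007), (35, 0.0007), (100, 0.0007)]


def piecewise_linear_lr(epoch: float, points: List[Tuple[int, float]] = BREAKPOINTS) -> float:
    """Linearly interpolate the learning rate between consecutive breakpoints."""
    if epoch <= points[0][0]:
        return points[0][1]
    if epoch >= points[-1][0]:
        return points[-1][1]
    xs = [e for e, _ in points]
    i = bisect_right(xs, epoch) - 1  # index of the segment containing `epoch`
    (e0, lr0), (e1, lr1) = points[i], points[i + 1]
    t = (epoch - e0) / (e1 - e0)
    return lr0 + t * (lr1 - lr0)
```

For example, midway through the first regime (epoch 5.5) the sketch gives 0.0395, halfway between 0.072 and 0.007.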

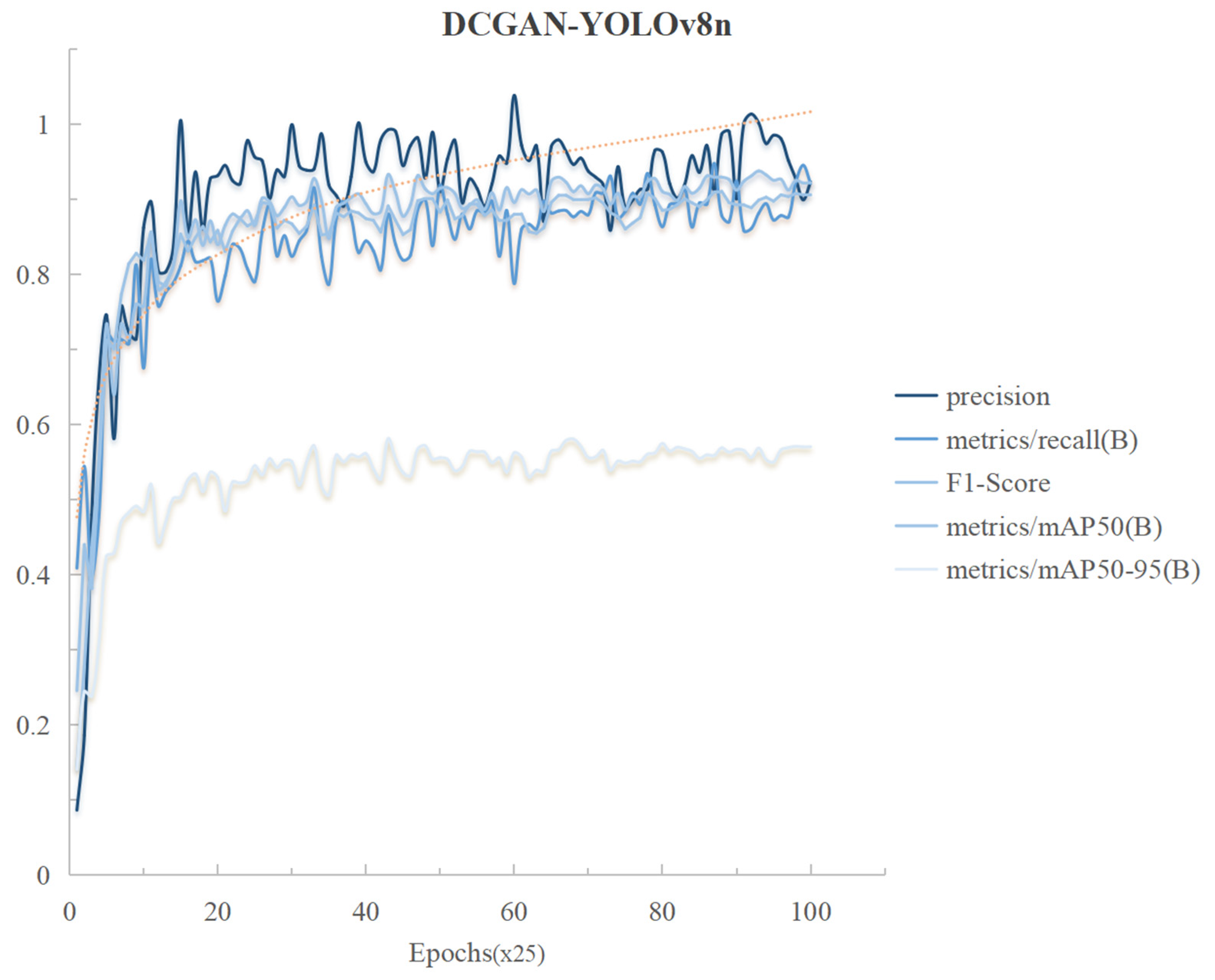

The proposed DCGAN-YOLOv8n model shows clear advantages in training dynamics and performance metrics. As shown by the evaluation curves in Figure 13, it converges quickly and generalizes stably during training. Five core metrics are tracked: precision, recall, F1-score, mean average precision (mAP50), and localization accuracy across IoU thresholds (mAP50-95). All five converge rapidly as the epochs advance and finally reach peak values of 0.9439, 0.8672, 0.9029, 0.9046, and 0.5706, respectively (standard deviation σ < 0.015). The post-convergence coefficients of variation (CVs) are all below 3.2%, meeting the robustness requirements for deep learning models in complex scenarios.
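The post-convergence coefficient of variation is the standard deviation of a metric divided by its mean over the epochs after convergence. The sketch uses the population standard deviation. The text does not state whether the population or sample form was used, or which epochs form the window.

```python
from statistics import mean, pstdev
from typing import Sequence


def coefficient_of_variation(values: Sequence[float]) -> float:
    """CV = population standard deviation / mean, e.g. over post-convergence epochs."""
    m = mean(values)
    if m == 0:
        raise ValueError("mean is zero; CV undefined")
    return pstdev(values) / m
```

Because the CV is scale-free, metrics with very different magnitudes, such as mAP50 near 0.9 and mAP50-95 near 0.57, can be checked against the same 3.2% bound.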

In terms of convergence dynamics, the five metrics complete their main convergence early in training (epoch < ). Their convergence rates are about 26.38% faster than those of the baseline YOLOv8n, based on a comparison of the second-order derivatives of the gradient descent curves. Notably, the F1-score, the harmonic mean of precision and recall, exceeds 0.9 midway through training (epoch ≈ ) and then oscillates at a high level (finally 0.9029 ± 0.008). This indicates that the model balances false positive and false negative rates well. mAP50-95 is relatively low (0.5706) because it averages over stricter IoU thresholds. Even so, it improves by 7.71% relative to the baseline model (p < 0.01, t-test), indicating that the algorithm significantly optimizes cross-scale feature fusion.

Quantitative analysis of the metric trajectories further indicates that the model is stable in parameter space. In the later training stage (epoch > ), the curves fluctuate only weakly (amplitude < 2.5%) owing to stochastic gradient noise, and the trajectories remain confined. This is consistent with regions of positive curvature in the Hessian, and it aligns with the local strong convexity assumptions often employed in non-convex optimization theory. Together, the experimental data and this theoretical reading support the improved effectiveness of DCGAN-YOLOv8n over the traditional architecture. They also suggest an explanation, from a nonlinear dynamics perspective, for its fast convergence and resistance to oscillation.

As shown in Table 3, transferability experiments were also run on PASCAL VOC 2012 and MS COCO 2017 under the same small-sample setting. Eight object categories were fixed, with 100 training images and 50 test images per category. DCGAN-YOLOv8n showed strong cross-domain transferability. In the natural image domain, it achieved F1-scores of 0.884 on VOC and 0.886 on COCO, a difference of less than 0.3%, indicating balanced adaptability to these two mainstream natural scene datasets. Notably, on COCO, whose scenes are considerably more complex, the model’s recall (0.868) exceeded that on VOC (0.842). This suggests a robust ability to capture complex visual features.

When transferred to the high-resolution remote sensing domain (the full NWPU VHR-10 dataset), the model achieved an F1-score of 0.900 without domain-specific optimization. Its precision was more than 3.5% higher than in the natural image domain, and its recall remained highly stable (fluctuation < 0.5%). This performance highlights its cross-domain generalization, particularly given the specific challenges of remote sensing data. In NWPU VHR-10, 23% of the images contain co-occurring objects of multiple categories (e.g., port–ship and airport–aircraft), and the dataset combines large scale variations with high object density (up to 47 aircraft in a single image). Maintaining high accuracy under these conditions suggests that the architecture can effectively decouple domain-related features.

Further analysis of performance boundaries shows that the F1-score on the full NWPU dataset (0.900) is only 1.6–1.8% higher than the small-sample results on VOC and COCO (0.884 and 0.886). This cross-domain variation of at most 1.8% is well below the 5–10% degradation typical of conventional object detection models, which quantitatively supports the architecture’s domain-agnostic advantage. The model performs stably under the combined challenges of small-sample constraints, cross-domain transfer, and multi-object co-occurrence (F1-score variation < 2%). This suggests that DCGAN-YOLOv8n learns a largely domain-invariant feature representation, offering an efficient solution for cross-domain adaptive detection tasks.
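The percentages above are relative differences between F1-scores, which a short calculation on the Table 3 values confirms.

```python
def relative_change(new: float, ref: float) -> float:
    """Relative difference (new - ref) / ref."""
    return (new - ref) / ref


f1 = {"VOC": 0.884, "COCO": 0.886, "NWPU": 0.900}  # F1-scores reported in Table 3
for name in ("VOC", "COCO"):
    print(f"NWPU vs {name}: {100 * relative_change(f1['NWPU'], f1[name]):.1f}%")
# NWPU vs VOC: 1.8%
# NWPU vs COCO: 1.6%
print(f"COCO vs VOC: {100 * relative_change(f1['COCO'], f1['VOC']):.2f}%")
# COCO vs VOC: 0.23%
```

Reporting relative rather than absolute differences keeps the comparison meaningful when baselines differ. In absolute terms, the same gaps are 1.6 and 1.4 F1 points.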