1. Introduction

Neurodegenerative disorders such as Parkinson’s Disease (PD) progressively impair the nervous system, underscoring the importance of early and accurate diagnosis to enable timely intervention and treatment planning. However, early-stage diagnosis remains a significant challenge due to heterogeneous symptom manifestations, evolving disease trajectories, and the fragmented nature of available clinical and biosignal data. Traditional machine learning (ML) models typically rely on a single modality, such as EEG, gait, or speech, and generalize poorly because sample sizes are small and privacy constraints hinder data pooling.

Researchers have investigated a wide range of data types, including acoustic, motion, EEG, and imaging data, to identify biomarkers for Parkinson’s disease, emphasizing the utility of non-invasive feature extraction and acknowledging challenges such as data variability and quality. Haq et al. [1] reviewed deep learning applications in PD, including preprocessing of clinical data, classification, and E-health integration. A 2023 study [2] highlighted the effectiveness of CNNs and RNNs in processing EEG, MRI, speech, and sensor-based data, particularly in handling time-series complexity and ensuring model generalization. Dixit et al. [3] examined AI methods across multimodal datasets and advocated incorporating omics and health records for early PD detection. Similarly, Pradeep et al. [4] evaluated 50 studies, analyzing ML/DL techniques, datasets, tools, and diagnostic limitations. A recent review [5] pointed to multi-biomarker ML integration as a promising evolution beyond conventional diagnostic strategies.

Speech biomarkers have been extensively investigated due to their non-invasive nature and the early impact PD has on vocal articulation and prosody. CNNs and RNNs are widely used in PD speech analysis for their ability to capture complex vocal patterns. Models trained on sustained phonation from acoustic cardioid and smartphone recordings achieved up to 94.55% accuracy and 92.40% AUC using MLP, KNN, and SVM [6], based on data by Sarkar et al. [7]. An SVM with an RBF kernel classified continuous speech from 43 PD and 9 healthy control (HC) subjects with 81.8% accuracy [8]. Deep models such as DenseNet161, evaluated on mPower data, reached 89.75% accuracy [9].

BiLSTM models have proven adept at learning temporal speech dynamics, as evidenced in experiments on datasets such as GYENNO and UCI, where leave-one-subject-out validation supported high generalization [10, 11]. Hybrid approaches, such as combining LDA with neural networks and genetic optimization, have further pushed accuracy beyond 95% [12]. Integrating principal component analysis with fuzzy KNN has also achieved commendable results [13], while novel multi-model systems combining convolutional, recurrent, and dense architectures (DMVDA) have reached accuracies near 98% [14].

Gait signals represent another critical dimension of PD diagnostics. Machine learning classifiers, including SVMs, random forests, and neural networks, have been extensively applied to kinematic features extracted from wearable sensors [15, 16, 17]. Recursive feature elimination and hybrid classifiers help identify the salient spatiotemporal features crucial for PD classification [17].

Freezing of Gait (FoG), a severe and episodic symptom, has received increasing attention in recent years. Deep learning models using time-windowed spectral features have improved classification accuracy, with systems such as DeepFoG offering real-time detection on minimal hardware [18, 19]. Supervised models, including decision trees, SVMs, and ensemble methods, have reached up to 99.4% accuracy with gait-based spatiotemporal features under 10-fold cross-validation [20]. CNN, RNN, and LSTM models have also been tested on home-recorded tri-axial sensor data, where combining spectral features with deep learning improved accuracy and response speed [21]. On datasets such as PhysioNet and Daphnet [22], LSTM and RNN models demonstrated superior performance under cross-validation, particularly when combined with transfer learning or handcrafted signal transformations [23]. In cerebral palsy (CP) gait analysis, an SVM with a GRBF kernel achieved 83.33% accuracy in classifying CP versus normal gait in children [24, 25].

Traditional PD detection methods often focus on EEG-derived neural features such as elevated delta/theta power, reduced alpha and increased beta activity, abnormal gamma and phase-amplitude coupling (PAC) patterns, and complexity measures such as approximate entropy (ApEn). Event-related potential (ERP) features aid early-stage detection. Techniques ranging from HOS-based ML [26, 27, 28] to CNNs, RNNs, hybrid RNNs, and wavelet-CSP classifiers have reported AUCs of up to 99.5% [29, 30]. Classical ML models such as SVMs perform well on neuroimaging data but depend heavily on manual feature extraction. More advanced models, such as conv-RNNs, VAEs, and FCNets applied to rs-fMRI, have achieved up to 98.5% accuracy and improved decoding of functional connectivity [31, 32, 33, 34].

A major advance comes from a multimodal study that combined EEG and MRI using LightGBM, achieving 97.17% accuracy and outperforming traditional methods [35]. Another model integrating EEG and fMRI with AdaBoost reached 93.45% accuracy [36]. While such fusion methods typically rely on homogeneous data sources, our study uniquely incorporates diverse biomarkers from varied sources. However, fusing biosignals such as speech, EEG, gait, and fMRI is challenging because the data are collected asynchronously and temporal disease progression must be tracked.

Federated Learning (FL) offers a promising solution by enabling collaborative training across decentralized datasets. Introduced by Google [37], FL allows clients to update local copies of a model, which are then aggregated centrally using strategies such as FedAvg or PFA to build robust global models [38, 39, 40, 41]. In medical applications, FL helps institutions meet HIPAA and GDPR requirements, enabling secure training across sites while counteracting limited sample sizes and demographic imbalance [40, 41]. Because it learns from multiple sources without pooling their raw data, FL improves model generalizability and mitigates small-sample limitations in medical imaging. Models such as U-Net and CNN-GNN hybrids have been adapted to FL, improving performance in MRI segmentation, histopathology, and even EEG-based motor imagery classification [42, 43]. FL is also effective in EHR systems, where it addresses temporal and data-view heterogeneity [44]. Platforms such as FLamby [45] and FRESH [46] support privacy-conscious analytics and secure sharing of wearable data. Domain-specific FL has been shown to handle non-uniform data distributions effectively. Feng et al. [47] introduced an encoder-decoder FL setup for MR reconstruction that captures domain-invariant features globally while training decoders locally. Chakravarty et al. [48] combined CNNs and GNNs to address domain shifts in X-ray imaging. Ensemble models, GANs, and contrastive learning have been used to align histopathological images and reduce dataset bias [49, 50, 51]. Contrastive learning techniques [52, 53] enhance self-supervised pretraining, aiding fine-tuning even with limited labeled data. Pre-trained U-Net encoders can be trained locally before the global model is refined [54, 55]. FL has proven valuable in brain tumor segmentation, COVID-19 detection, and diabetic retinopathy screening, while multi-task FL supports simultaneous MRI-based classification of multiple disorders [56, 57]. Knowledge distillation, as shown by Kumar et al. [58] and He et al. [59], can further enhance FL by guiding client models with pre-trained networks, improving efficiency and reducing data dependency in weakly supervised settings.

Effective model aggregation in FL integrates decentralized updates while preserving privacy. Techniques such as FedAvg, FedProx, and Progressive Fourier Aggregation (PFA) help mitigate heterogeneity. PFA improves knowledge transfer by prioritizing low-frequency components [60], while updates weighted by client training loss have refined FL applications in COVID-19 diagnostics [61]. FedSLD addresses label imbalance in medical imaging [62]. FL has also been applied successfully to pancreas segmentation [63], thyroid image classification [64], and anal cancer prediction [65], enhancing rare disease analysis. In BCI research, FL has improved EEG-based motor imagery classification using FedAvg and FedProx, demonstrating scalability with respect to the number of clients and the data volume [66]. Federated Transfer Learning (FTL) has proven superior to traditional DL in privacy-sensitive, small-sample EEG classification scenarios [67].

FL enables collaborative model training across multiple institutions or devices without centralizing sensitive data. This makes it particularly suitable for integrating PD biomarkers, which often exist across disparate datasets and patient groups. Our study leverages this capability by developing a federated diagnostic pipeline that integrates speech, EEG, fMRI, and gait data. This unified approach addresses privacy and scalability concerns while improving early-stage PD detection and supporting the detection of critical symptoms such as FoG.

To summarize, the key contributions of this work are outlined below, highlighting the novelty, methodological advances, and clinical relevance of the proposed framework:

A modular and generalizable fusion-aided Federated Learning (FL) framework for multimodal PD biomarker fusion has been developed, integrating EEG, fMRI, gait, and speech data while ensuring patient privacy.

The feasibility of the framework has been demonstrated across PD-related tasks, including Freezing of Gait (FoG) detection, EEG–fMRI-based diagnosis, and speech-based classification, thereby establishing its scalability to different symptoms and related disorders.

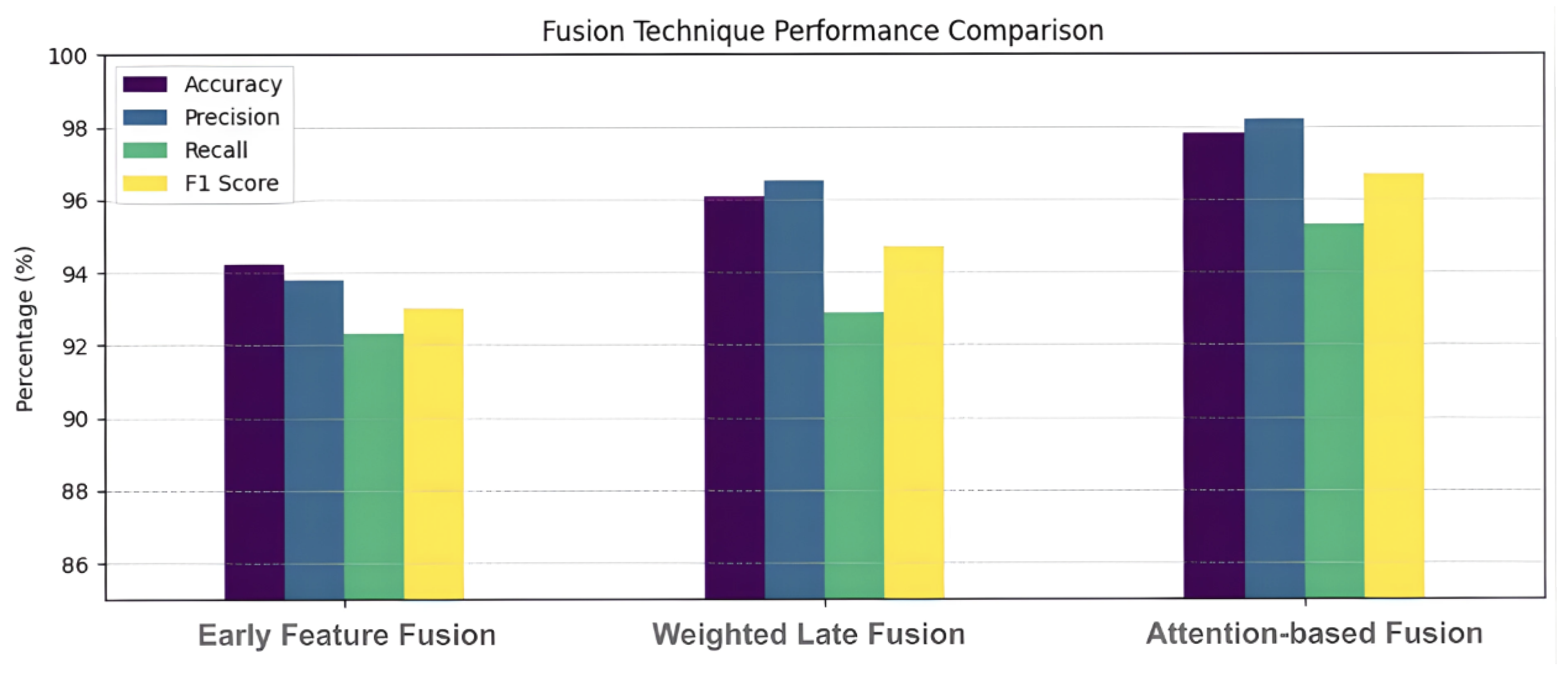

Multiple fusion strategies (early, weighted, and attention-based) have been compared and evaluated, with attention-based fusion shown to achieve the highest accuracy (up to 97.8%) for FoG detection.

Stage-specific and demographic effects have been explicitly analyzed, and potential fairness gaps have been identified, motivating the incorporation of fairness-aware FL strategies in future work.

To the best of our knowledge, this study constitutes the first application of FL to multimodal PD biomarker fusion, distinguishing it from prior biomedical FL applications (e.g., Alzheimer’s, epilepsy) by addressing heterogeneous and asynchronous modalities.

The remainder of this article is structured as follows:

Section 2 presents the methodology and federated framework;

Section 3 describes the datasets;

Section 4 reports experimental results;

Section 5 discusses limitations, implications, and future work; and

Section 6 concludes the paper.

2. Materials and Methods

The methodology for this research centers around constructing a robust Federated Learning (FL) framework that enables multimodal data integration for PD diagnosis. In the proposed architecture, individual client nodes are responsible for processing different modalities of data, including EEG, fMRI, speech, gait, EMG, and accelerometry. These client nodes operate independently, training models locally on their respective datasets to preserve data privacy and comply with regulations such as HIPAA and GDPR. Once the local models are trained, only the model updates are communicated to a central server. Our framework supports both horizontal FL (sample-partitioned data across patients) and vertical FL (feature-partitioned data across institutions), reflecting clinical scenarios where institutions contribute homogeneous or complementary modalities.
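To make the horizontal/vertical distinction concrete, the following minimal Python sketch partitions a toy feature matrix both ways. It is illustrative only: the helper names `horizontal_split` and `vertical_split` and the assignment of columns to EEG and gait are hypothetical, and in a real vertical setting the labels would usually be held by a single party rather than by every client.

```python
import numpy as np

def horizontal_split(X, y, n_clients, seed=0):
    # Horizontal FL: each client holds all features for a disjoint subset of patients.
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    return [(X[part], y[part]) for part in np.array_split(idx, n_clients)]

def vertical_split(X, feature_groups):
    # Vertical FL: each client holds the same patients but only its own feature block.
    return [X[:, cols] for cols in feature_groups]

X = np.arange(60, dtype=float).reshape(10, 6)  # 10 patients x 6 features (toy data)
y = np.array([0, 1] * 5)
h_parts = horizontal_split(X, y, n_clients=2)
v_parts = vertical_split(X, [[0, 1, 2], [3, 4, 5]])  # hypothetical: EEG cols, gait cols
print([part[0].shape for part in h_parts])  # [(5, 6), (5, 6)]
print([part.shape for part in v_parts])     # [(10, 3), (10, 3)]
```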

At the client nodes, specific machine learning models are applied based on the modality of data being processed. EEG and gait signals, which are time-series in nature, are analyzed using Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) networks. EEG data also benefit from Event-Related Potential (ERP) image conversion and are processed using Graph CNNs to capture spatial and spectral dependencies. Resting-state fMRI data are processed using ResNet-18 models, pre-trained on large-scale image data to improve feature extraction.

The central server aggregates model updates from client nodes using dynamic aggregation algorithms such as FedAvg and Fed-Dyn [68]. FedAvg computes a data-size-weighted average of the client model weights, while Fed-Dyn adds regularization terms to accommodate heterogeneous data distributions across client sites. This aggregation yields a global model that represents a more generalized understanding of Parkinson’s Disease indicators across multiple data types. For the EEG–fMRI framework (Section 2.2), the Fed-Dyn aggregation strategy was adopted because it regularizes client updates and thereby mitigates client drift under heterogeneous, non-IID data distributions.

Feature fusion is a critical component of the methodology. Early fusion concatenates features from multiple modalities before feeding them into the classifier, implicitly treating all modalities as equally relevant. Weighted fusion assigns modality-specific importance based on empirical performance or clinical relevance, allowing more significant signals to have greater influence. Attention-based fusion learns the relevance of each feature set during training, assigning weights adaptively to optimize classification performance; this method achieved the highest performance in our experiments. A simplified block diagram of the fusion-aided federated learning framework, which trains LSTMs on the client nodes and a DNN at the central node, is shown in Figure 1.
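The two non-learned strategies described above can be illustrated with a minimal NumPy sketch. The function names are hypothetical, weighted fusion as written assumes embeddings of equal dimension, and normalizing the weights to sum to one is our assumption rather than a detail stated in the text.

```python
import numpy as np

def early_fusion(feats):
    # Early fusion: concatenate per-modality feature vectors along the last axis.
    return np.concatenate(feats, axis=-1)

def weighted_fusion(feats, weights):
    # Weighted fusion: element-wise weighted sum of equally sized embeddings.
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # assumption: weights normalized to sum to 1
    return sum(w * f for w, f in zip(weights, feats))

eeg = np.array([[1.0, 2.0, 3.0]])   # one sample, toy EEG embedding
gait = np.array([[3.0, 2.0, 1.0]])  # one sample, toy gait embedding
print(early_fusion([eeg, gait]).shape)  # (1, 6)
fused = weighted_fusion([eeg, gait], [0.75, 0.25])  # emphasizes the EEG embedding
```

Early fusion grows the feature dimension with each modality, whereas weighted fusion keeps it fixed but requires the modalities to share an embedding size.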

Prior to training, extensive preprocessing is applied to all data types. EEG data undergo artifact removal using Independent Component Analysis (ICA), bandpass filtering (1–40 Hz), and normalization. ERP images are generated for improved temporal resolution. fMRI data are preprocessed using motion correction, slice timing correction, spatial smoothing (5 mm FWHM), and ICA-based noise removal, followed by co-registration with anatomical images. Speech data are segmented into 25 ms frames and denoised using bandpass filters (300–3400 Hz), while gait data (EMG and accelerometer signals) are filtered, normalized, and converted into descriptive statistical features such as entropy, zero-crossing rates, and power.
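As an illustration of the speech preprocessing step, the sketch below band-pass filters a signal to 300–3400 Hz and splits it into non-overlapping 25 ms frames using SciPy. The filter type (Butterworth), order (8), zero-phase filtering, 16 kHz sampling rate, and absence of frame overlap are all assumptions; the text does not specify them.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass(x, fs, low=300.0, high=3400.0, order=8):
    # Butterworth band-pass as second-order sections (numerically stable at high order),
    # applied forward and backward for zero phase distortion.
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x)

def frame_signal(x, fs, frame_ms=25.0):
    # Non-overlapping frames; trailing samples that do not fill a frame are dropped.
    frame_len = int(round(fs * frame_ms / 1000.0))
    n_frames = len(x) // frame_len
    return x[: n_frames * frame_len].reshape(n_frames, frame_len)

fs = 16000                                    # assumed sampling rate
t = np.arange(fs) / fs                        # 1 s of synthetic audio
x = np.sin(2 * np.pi * 1000 * t) + 0.5 * np.sin(2 * np.pi * 50 * t)  # 1 kHz tone + 50 Hz hum
frames = frame_signal(bandpass(x, fs), fs)
print(frames.shape)  # (40, 400): 40 frames of 25 ms at 16 kHz
```

The 50 Hz hum lies far below the 300 Hz cutoff and is removed, while the 1 kHz tone passes almost unchanged.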

This decentralized training process enables multimodal data integration without requiring raw data transfer, enhancing privacy and scalability. The framework is validated through a series of experiments involving different modality combinations, fusion strategies, and data distributions. The result is a flexible, generalizable, and privacy-aware diagnostic model for PD, capable of supporting real-world clinical applications.

2.1. Federated Learning Protocol

Initialization: The central server initializes a global model with weights $w^{0}$. This model is distributed to each client.

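Local training: Each client $k$ performs local training on its data $\mathcal{D}_k$ by minimizing the local loss function with gradient updates:

$$w_k^{t+1} = w_k^{t} - \eta\, \nabla \mathcal{L}\big(w_k^{t}; \mathcal{D}_k\big),$$

where $\mathcal{L}$ is the cross-entropy loss, $\eta$ is the learning rate, and $t$ denotes the local iteration.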

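Secure aggregation: After $E$ local epochs, each client $k$ sends its updated parameters $w_k$ to the server, which aggregates them using Federated Averaging (FedAvg):

$$w = \sum_{k=1}^{K} \frac{n_k}{n}\, w_k,$$

where $n_k$ is the size of client $k$’s dataset, $n = \sum_{k=1}^{K} n_k$ is the total amount of data across clients, and $K$ is the number of clients.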

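Decision-Level Fusion: Let $p_{\mathrm{LSTM}}$ and $p_{\mathrm{CNN}}$ be the outputs of the LSTM and CNN models. The final prediction is obtained via soft voting or a meta-classifier $h$:

$$\hat{y} = \alpha\, p_{\mathrm{LSTM}} + (1 - \alpha)\, p_{\mathrm{CNN}} \quad \text{or} \quad \hat{y} = h\big(p_{\mathrm{LSTM}}, p_{\mathrm{CNN}}\big),$$

where $\alpha \in [0, 1]$ balances the contribution of each model. The training setup used batch sizes of 32 to 64, the Adam optimizer with a learning rate of 0.001, an 80–20 train–test split, and 5 local epochs. To mitigate overfitting, dropout rates between 0.3 and 0.5 and L2 regularization were applied.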
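The protocol above can be sketched in NumPy as follows. This is a simplified illustration, not the authors' implementation: a logistic-regression model trained with plain gradient descent stands in for the LSTM/CNN clients (the text specifies Adam), the two synthetic clients are hypothetical, and the function names are ours.

```python
import numpy as np

def local_training(w, X, y, lr=0.1, epochs=5):
    # Client step: full-batch gradient descent on binary cross-entropy for a
    # logistic-regression stand-in model, i.e. w <- w - eta * grad L(w; D_k).
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-X @ w))
        w = w - lr * X.T @ (p - y) / len(y)
    return w

def fedavg(client_weights, client_sizes):
    # Server step: w = sum_k (n_k / n) * w_k.
    return np.average(np.stack(client_weights), axis=0,
                      weights=np.asarray(client_sizes, dtype=float))

def soft_vote(p_lstm, p_cnn, alpha=0.5):
    # Decision-level fusion: y_hat = alpha * p_LSTM + (1 - alpha) * p_CNN.
    return alpha * p_lstm + (1.0 - alpha) * p_cnn

# Two hypothetical clients holding different amounts of synthetic data.
rng = np.random.default_rng(0)
clients = []
for n in (40, 60):
    X = rng.normal(size=(n, 3))
    y = (X[:, 0] > 0).astype(float)  # label depends only on feature 0
    clients.append((X, y))

w_global = np.zeros(3)  # initialization: w^0
for _ in range(10):     # communication rounds
    local_ws = [local_training(w_global, X, y) for X, y in clients]
    w_global = fedavg(local_ws, [len(y) for _, y in clients])
```

Only the weight vectors leave each client; the raw `(X, y)` arrays are never passed to `fedavg`.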

2.2. EEG and fMRI Based FL Framework

Client-side processing is decentralized, with specialized deep learning models tailored to each modality. EEG signals (Client Node 1) are processed using a CNN with four convolutional layers and max-pooling, along with a GSP-GCN architecture that models EEG electrodes as graph nodes and their functional connectivity (Pearson correlation coefficient) as edges. The iNEAT algorithm aligns local (single-hop) and global (multi-hop) brain network topologies, enhancing robustness. A Chebyshev polynomial-based GCN then captures spectral–spatial dependencies, and saliency maps provide interpretable localization of the brain regions relevant to classification. Resting-state fMRI images (Client Node 2) are processed using a ResNet-18 model (18 layers with a 7 × 7 initial kernel, four convolutional blocks with skip connections, and ReLU activations) to extract Parkinson’s-related features. The model is initialized with pretrained AlexNet weights (five convolutional layers with 96, 256, and 384 filters; three max-pooling layers; three fully connected layers) to enhance PD-specific feature learning.
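The graph construction and Chebyshev convolution described above can be sketched as follows. Assumptions: edge weights are absolute Pearson correlations with self-loops removed, the normalized Laplacian is rescaled by its largest eigenvalue, and node features are arbitrary per-electrode descriptors; the iNEAT topology alignment and saliency maps are not reproduced, and all names are hypothetical.

```python
import numpy as np

def pearson_adjacency(eeg):
    # eeg: (channels, samples). Edge weight = |Pearson r| between electrodes; no self-loops.
    A = np.abs(np.corrcoef(eeg))
    np.fill_diagonal(A, 0.0)
    return A

def scaled_laplacian(A):
    # Normalized Laplacian L = I - D^{-1/2} A D^{-1/2}, rescaled so eigenvalues lie in [-1, 1].
    d = A.sum(axis=1)
    d_inv_sqrt = np.divide(1.0, np.sqrt(d), out=np.zeros_like(d), where=d > 0)
    n = len(A)
    L = np.eye(n) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    lam_max = np.linalg.eigvalsh(L).max()
    return 2.0 * L / lam_max - np.eye(n)

def cheb_conv(X, L_tilde, thetas):
    # Chebyshev graph convolution: sum_k T_k(L_tilde) X Theta_k, with
    # T_0 X = X, T_1 X = L_tilde X, T_k X = 2 L_tilde T_{k-1} X - T_{k-2} X.
    T_prev, T_curr = X, L_tilde @ X
    out = T_prev @ thetas[0]
    if len(thetas) > 1:
        out = out + T_curr @ thetas[1]
    for theta in thetas[2:]:
        T_prev, T_curr = T_curr, 2.0 * L_tilde @ T_curr - T_prev
        out = out + T_curr @ theta
    return out

rng = np.random.default_rng(0)
eeg = rng.normal(size=(8, 500))           # 8 electrodes, 500 samples (synthetic)
A = pearson_adjacency(eeg)
L_tilde = scaled_laplacian(A)
node_feats = rng.normal(size=(8, 4))      # hypothetical per-electrode features
thetas = [rng.normal(size=(4, 16)) for _ in range(3)]  # K = 3 filter orders
out = cheb_conv(node_feats, L_tilde, thetas)           # (8, 16)
```

Because $T_k(\tilde{L})$ is a degree-$k$ polynomial in the Laplacian, a filter of order $K$ mixes information only from electrodes within $K-1$ hops.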

All models are trained locally on an 80–20 train–test split, with the GSP-GCN model additionally validated using 5-fold cross-validation and optimized via Adam and cross-entropy loss. Only model updates are shared with the central server, preserving data privacy. The server aggregates updates using Fed-Dyn, an adaptive algorithm that accounts for client-specific variation to enhance learning from heterogeneous sources.

To improve classification robustness, the framework employs both early and late fusion strategies. In early fusion, features extracted from EEG and fMRI are combined before classification; in late fusion, modality-specific classifiers such as SVMs are trained separately and their decisions are integrated at the final stage. Two autoencoders further reduce dimensionality and extract modality-specific features before fusion. Training runs for 100 federated communication rounds, more than many standard implementations use, which helps stabilize performance without compromising efficiency. The PD-DS-I dataset [69] underpins this framework, providing heterogeneous multimodal data critical for real-world applicability.

Data preprocessing is rigorously handled to ensure signal clarity and consistency. EEG signals undergo Independent Component Analysis (ICA), bandpass filtering, and epoch segmentation using EEGLAB and Python-based tools. Event-Related Potentials (ERPs) are extracted by aligning EEG epochs with stimulus events using the MNE Python package (version 1.10.1) and converted into ERP images for use in the EEG classification pipeline. Meanwhile, fMRI preprocessing includes motion correction, slice timing, spatial smoothing, intensity normalization, and ICA-based artifact removal, executed via an automated Python-FSL pipeline. These steps are essential to remove physiological and acquisition noise, ensuring high-quality inputs for the learning models.
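ERP extraction amounts to cutting epochs around stimulus onsets, baseline-correcting them, and averaging; stacking the single-trial epochs of one channel gives an ERP image (trials × time). The NumPy sketch below illustrates this on synthetic data. In MNE-Python the equivalent steps are typically performed with `mne.Epochs` and `Epochs.average()`; the −0.2 to 0.8 s window, 250 Hz sampling rate, and helper name are assumptions.

```python
import numpy as np

def extract_epochs(signal, events, fs, tmin=-0.2, tmax=0.8):
    # signal: (channels, samples); events: stimulus-onset sample indices.
    # Assumes tmin < 0 so a pre-stimulus baseline window exists.
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    epochs = []
    for ev in events:
        if ev + start < 0 or ev + stop > signal.shape[1]:
            continue  # skip events whose window falls outside the recording
        ep = signal[:, ev + start: ev + stop]
        baseline = ep[:, :-start].mean(axis=1, keepdims=True)  # pre-stimulus mean
        epochs.append(ep - baseline)
    return np.stack(epochs)  # (n_epochs, channels, times)

fs = 250
rng = np.random.default_rng(0)
sig = rng.normal(size=(8, 10 * fs))            # 8 channels, 10 s of synthetic EEG
events = [500, 1000, 1500, 2000, 2450]         # last window runs past the end
for ev in events:
    sig[:, ev + 75: ev + 100] += 5.0           # synthetic response 300-400 ms post-stimulus
epochs = extract_epochs(sig, events, fs)       # (4, 8, 250)
erp = epochs.mean(axis=0)                      # averaged ERP per channel: (8, 250)
erp_image = epochs[:, 0, :]                    # ERP image for channel 0: trials x time
```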

Modification to the FL framework: The modified FL framework for EEG and fMRI integration builds upon the standard FedAvg framework detailed in Section 2.1 by introducing two key enhancements: dynamic control and hybrid fusion. Both approaches begin with a global model distributed to clients for local training, but the modified framework changes the client update step by adding a dynamic control variable that regularizes the model with respect to its historical trajectory. This term stabilizes training in heterogeneous (non-IID) data settings by reducing client drift, a limitation not addressed by the standard FedAvg method. Furthermore, whereas the standard framework relies solely on decision-level fusion (combining predictions from two models through a weighted average), the modified framework uses a hybrid approach: it performs model-level fusion through federated aggregation (integrating parameters learned from different modalities such as EEG and fMRI) and then applies decision-level fusion at inference. It also includes a final output stage in which predictions from the global model and the server are adaptively combined. Clients update the model using:

Global model aggregation across clients:

Dynamic control update on server:

Central server aggregation of decision-level weights:

Decision-level fusion of predictions:
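For orientation, the sketch below implements the standard FedDyn client and server updates published by Acar et al. (2021), on which the dynamic control variable described above appears to be based. It is not the authors' exact formulation and omits the decision-level weight aggregation and fusion steps; the logistic-regression clients, synthetic non-IID data, and hyperparameters (α = 0.1, 50 local steps) are assumptions.

```python
import numpy as np

def grad_bce(theta, X, y):
    # Gradient of binary cross-entropy for a logistic-regression stand-in model.
    p = 1.0 / (1.0 + np.exp(-X @ theta))
    return X.T @ (p - y) / len(y)

def feddyn_client(theta_srv, g_k, X, y, alpha=0.1, lr=0.1, steps=50):
    # Approximately solve min_theta L_k(theta) - <g_k, theta> + (alpha/2)||theta - theta_srv||^2.
    theta = theta_srv.copy()
    for _ in range(steps):
        theta -= lr * (grad_bce(theta, X, y) - g_k + alpha * (theta - theta_srv))
    # Dynamic control (state) update: g_k <- g_k - alpha * (theta_k - theta_srv).
    return theta, g_k - alpha * (theta - theta_srv)

def feddyn_server(thetas, theta_srv, h, alpha, n_clients):
    # h <- h - (alpha / m) * sum_k (theta_k - theta_srv);  theta <- mean_k theta_k - h / alpha.
    h = h - alpha * sum(t - theta_srv for t in thetas) / n_clients
    return np.mean(thetas, axis=0) - h / alpha, h

rng = np.random.default_rng(1)
clients = []
for shift in (-1.0, 1.0):  # non-IID: clients see shifted feature and label distributions
    X = rng.normal(loc=shift, size=(50, 3))
    y = (X[:, 0] + 0.5 * rng.normal(size=50) > 0).astype(float)
    clients.append((X, y))

alpha = 0.1
theta, h = np.zeros(3), np.zeros(3)
g = [np.zeros(3) for _ in clients]  # per-client dynamic control states
for _ in range(5):  # communication rounds
    results = [feddyn_client(theta, g[k], X, y, alpha) for k, (X, y) in enumerate(clients)]
    g = [r[1] for r in results]
    theta, h = feddyn_server([r[0] for r in results], theta, h, alpha, len(clients))
```

The per-client state `g_k` is what distinguishes this from FedAvg: it shifts each local objective so that, at convergence, client optima agree with the global stationary point despite the non-IID split.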

2.3. Speech-Based FL Framework

Continuous speech and vowel pronunciation data were processed at distributed client nodes employing complementary neural architectures: Long Short-Term Memory (LSTM) networks for capturing temporal dependencies, and Convolutional Neural Networks (CNNs) for extracting spectral features. Each client model was trained on locally partitioned data and transmitted model updates to a central server, where a Deep Neural Network (DNN) was trained to aggregate and refine the classification outputs. The first experiment involved models trained on distinct modalities from a single dataset, while the second evaluated FL across datasets sharing the same speech modality. A third experimental variation combined different speech modalities from separate datasets using decision-level fusion at the central server.
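Decision-level fusion at the central server can be viewed as stacking: the client output probabilities become the input features of a central model. The sketch below uses a logistic-regression meta-classifier as a deliberately simpler stand-in for the DNN described above; the client names, synthetic probabilities, and hyperparameters are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_meta(P, y, lr=0.5, epochs=500):
    # P: (n_samples, n_clients) client probabilities -> logistic-regression meta-classifier.
    Z = np.hstack([P, np.ones((len(P), 1))])  # append a bias column
    w = np.zeros(Z.shape[1])
    for _ in range(epochs):
        w -= lr * Z.T @ (sigmoid(Z @ w) - y) / len(y)
    return w

def predict_meta(w, P):
    Z = np.hstack([P, np.ones((len(P), 1))])
    return (sigmoid(Z @ w) >= 0.5).astype(int)

rng = np.random.default_rng(2)
y = rng.integers(0, 2, size=200)
p_vowel = np.clip(0.2 + 0.6 * y + rng.normal(0, 0.1, 200), 0, 1)   # informative client
p_speech = np.clip(0.4 + 0.2 * y + rng.normal(0, 0.2, 200), 0, 1)  # noisier client
P = np.column_stack([p_vowel, p_speech])
w = train_meta(P, y)
acc = np.mean(predict_meta(w, P) == y)
```

Unlike fixed soft voting, the meta-classifier learns from data how much to trust each client, which matters when one modality is noisier than the other.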

The experimental framework leveraged two speech datasets. The Italian Parkinson’s Voice and Speech Database provided continuous paragraph readings and isolated vowel phonations, yielding over 297,000 25 ms speech segments. The second dataset consisted of sustained vowel recordings annotated with clinical speech parameters (e.g., jitter, shimmer), filtered to include only Parkinson’s and healthy control samples. Both datasets underwent standardized preprocessing, including denoising via high-order bandpass filtering and time-window segmentation. The FL setup employed secure aggregation techniques and iterative federated averaging (FedAvg) for model optimization. Performance evaluation relied on standard metrics including accuracy, precision, and F1-score. Post-training fine-tuning was conducted after each learning round, confirming the potential of the proposed federated architecture as a scalable and privacy-preserving solution for multimodal PD speech diagnostics.
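For reference, the reported metrics follow the usual binary definitions based on true positives (TP), false positives (FP), and false negatives (FN): precision = TP/(TP+FP), recall = TP/(TP+FN), and F1 = 2·precision·recall/(precision+recall). A minimal pure-Python sketch, treating PD as the positive class (an assumption), is:

```python
def binary_metrics(y_true, y_pred, positive=1):
    # Accuracy, precision, recall, and F1 for a binary task (PD = positive class).
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1, 1, 1, 0, 0, 0, 1, 0]  # 1 = PD, 0 = healthy control
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
print(binary_metrics(y_true, y_pred))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```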

2.4. Gait-EEG Based FL Framework

The methodology employed in this study focuses on developing reliable and interpretable models for detecting Freezing of Gait (FoG) in individuals with Parkinson’s Disease (PD) by leveraging Electroencephalography (EEG) and gait-based signals. The approach evolves from unimodal analysis to advanced multimodal integration within a privacy-preserving Federated Learning (FL) framework. Initially, EEG and gait signals were examined separately to assess their individual discriminative capabilities. EEG data, preprocessed to capture neural markers associated with FoG episodes, were evaluated using a range of machine learning algorithms, including Support Vector Machines (SVMs) with radial basis function kernels, k-Nearest Neighbors (KNNs), boosted decision trees, Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs). A comparable suite of models was also applied to gait data, which included Electromyography (EMG) and acceleration-derived features that reflect both temporal and spectral characteristics. Owing to the limited dataset size (data from only 12 PD participants), deep neural networks were not considered.

Upon establishing benchmark performances for each modality independently, the study advanced to multimodal fusion strategies aimed at exploiting the complementary nature of EEG and gait signals. An initial feature-level fusion method was implemented by manually extracting relevant features from both modalities, concatenating them, and subsequently applying SVM classification. To maintain data privacy while enabling collaborative model training across distributed sources, a fusion-aided Federated Learning (FL) architecture was developed. The dataset was partitioned into three subsets. Client 1 processed EEG data using a CNN with four convolutional layers of decreasing kernel sizes and associated max-pooling layers. Client 2 handled gait data through a CNN comprising three convolutional layers and two fully connected layers. The central server aggregated the model outputs (logits) from both clients using the Federated Averaging (FedAvg) algorithm and trained a hybrid model capable of jointly learning from EEG and gait data.
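The manual feature-level fusion baseline can be sketched as per-channel statistical features computed on a window from each modality and concatenated into one vector, which would then be passed to an SVM (for example, scikit-learn's `SVC`). The feature set below (mean power, zero-crossing rate, histogram-based Shannon entropy) mirrors the descriptors listed in Section 2, but the 16-bin histogram, window lengths, channel counts, and function names are assumptions.

```python
import numpy as np

def window_features(x, n_bins=16):
    # x: 1-D signal window -> [mean power, zero-crossing rate, Shannon entropy (bits)].
    power = np.mean(x ** 2)
    signs = np.signbit(x)
    zcr = np.mean(signs[1:] != signs[:-1])
    hist, _ = np.histogram(x, bins=n_bins)
    p = hist[hist > 0] / hist.sum()
    entropy = -np.sum(p * np.log2(p))
    return np.array([power, zcr, entropy])

def multichannel_features(window):
    # window: (channels, samples) -> per-channel features, flattened.
    return np.concatenate([window_features(ch) for ch in window])

def fuse_features(eeg_window, gait_window):
    # Feature-level (early) fusion: concatenate EEG and gait descriptors.
    return np.concatenate([multichannel_features(eeg_window),
                           multichannel_features(gait_window)])

rng = np.random.default_rng(0)
eeg_win = rng.normal(size=(4, 500))    # hypothetical: 4 EEG channels, 0.5 s at 1000 Hz
gait_win = rng.normal(size=(3, 250))   # hypothetical: 3 accelerometer channels, 0.5 s at 500 Hz
fused = fuse_features(eeg_win, gait_win)  # shape (21,) = 4*3 + 3*3
```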

To further improve classification accuracy, the central server explored three fusion strategies: early feature fusion, weighted feature fusion, and attention-based fusion. Early feature fusion involved simple concatenation of the feature vectors from both modalities before classification. In the weighted fusion approach, feature embeddings derived from EEG and gait signals were combined by element-wise summation with modality-specific weights, offering tunable flexibility in emphasizing more informative features. Finally, the attention-based fusion mechanism dynamically assigned importance to each modality’s features according to their relevance to the classification task. We used scaled dot-product attention with randomly initialized weight matrices. The query ($Q$), key ($K$), and value ($V$) matrices were computed for each modality and refined during backpropagation using the Adam optimizer (learning rate: 0.001). The attention weights dynamically emphasized modality relevance during training, as formalized in Equation (9):

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V, \tag{9}$$

where $Q$, $K$, and $V$ represent transformed features from the EEG and gait streams, and $d_k$ is the dimensionality of the keys. Here, EEG and gait embeddings were processed separately and then integrated using an attention network that learned adaptive weighting, followed by a fully connected classifier. This hierarchical and adaptive methodology, progressing from isolated modality evaluation to multimodal fusion within an FL context, provided a comprehensive framework for assessing and enhancing FoG detection using neurophysiological and biomechanical data in PD.
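Equation (9) applied to two modality tokens can be sketched in NumPy as follows. Each sample contributes one EEG and one gait embedding; the projection matrices are randomly initialized, as in the text, but are not trained here (the Adam updates are omitted). The embedding sizes, the weight scaling, and the flattening of the attended tokens before the classifier are assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention_fusion(eeg_emb, gait_emb, Wq, Wk, Wv):
    # Treat the two modality embeddings as a 2-token sequence: (batch, 2, d_in).
    tokens = np.stack([eeg_emb, gait_emb], axis=1)
    Q, K, V = tokens @ Wq, tokens @ Wk, tokens @ Wv
    d_k = K.shape[-1]
    weights = softmax(Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k))  # (batch, 2, 2), Eq. (9)
    attended = weights @ V                                         # (batch, 2, d_v)
    return attended.reshape(len(attended), -1), weights           # flattened for the classifier

rng = np.random.default_rng(0)
batch, d_in, d_k = 4, 8, 16
eeg_emb = rng.normal(size=(batch, d_in))
gait_emb = rng.normal(size=(batch, d_in))
Wq, Wk, Wv = (rng.normal(scale=d_in ** -0.5, size=(d_in, d_k)) for _ in range(3))
fused, attn = attention_fusion(eeg_emb, gait_emb, Wq, Wk, Wv)  # fused: (4, 32)
```

Each row of `attn` sums to one, so it can be read as how strongly each modality token attends to the EEG versus the gait representation for a given sample.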

3. Dataset

To evaluate the proposed framework, we utilized three diverse and complementary datasets encompassing different biomarker modalities relevant to Parkinson’s Disease.

The PD-DS-I dataset [69], curated by the Latin American Brain Health Institute (BrainLat), includes multimodal neuroimaging and electrophysiological recordings. It contains data from 780 participants, including 530 individuals diagnosed with Parkinson’s Disease, Alzheimer’s Disease, or Multiple Sclerosis, and 250 healthy controls. Of these, 31 PD patients and 41 healthy controls with both EEG and resting-state fMRI (rs-fMRI) recordings were selected for this study. The participants span a wide age range (21–89 years) and include both genders, though a slight male predominance exists among the PD subjects. EEG data were recorded during the resting state with high-density arrays, and fMRI data were obtained using standardized scanning protocols. The dataset is particularly valuable for its inclusion of both structural and functional imaging data, enabling in-depth analysis of brain connectivity.

The Italian Parkinson’s Voice and Speech Database [70, 71] contributes acoustic biomarkers, capturing both continuous speech and sustained vowel phonations. It includes recordings from 28 PD patients and 37 healthy controls, collected under clinical supervision. Subjects were asked to read a phonetically balanced paragraph and to produce specific vowels such as /a/ and /i/. From these recordings, over 297,600 25-millisecond segments were extracted. The dataset spans a broad age distribution and includes detailed metadata, such as Hoehn and Yahr staging. Preprocessing involved high-order bandpass filtering and normalization to standardize vocal characteristics across subjects. This dataset supports the extraction of spectral and prosodic features, which serve as key acoustic biomarkers for distinguishing Parkinson’s Disease patients from healthy controls.

The second speech dataset (Vowel Pronunciation) comprises synthesized recordings of sustained vowels /A/ and /I/ produced by individuals diagnosed with Parkinson’s disease, multiple system atrophy, and progressive supranuclear palsy, as well as healthy controls [72]. It serves as a valuable benchmark for assessing pitch detection algorithms, modal fundamental frequency estimators, and sub-harmonic detectors. For this study, only the recordings from the PD (93 subjects) and healthy control (88 subjects) groups were utilized. Additionally, the dataset includes relevant acoustic features such as jitter, shimmer, Subharmonic-to-Harmonic Ratio (SHR), and Harmonic-to-Noise Ratio (HNR).

The MOVE dataset [73] provides multimodal physiological and motion signals for detecting Freezing of Gait (FoG), a severe motor complication in PD. It includes synchronized EEG, EMG, and Accelerometry (ACC) data collected during walking tasks from 12 PD patients (6 male and 6 female), most of whom exhibited FoG episodes. The dataset totals approximately 3 h and 42 min of sensor data, acquired at high sampling rates (EEG and EMG at 1000 Hz, accelerometers at 500 Hz). EEG signals were captured using a 25-channel montage, and EMG and inertial sensors were placed on the arms, shanks, and waist. Annotations were provided by clinical experts to mark FoG events, which varied in duration from 1 to over 200 s. This dataset supports detailed analysis of gait abnormalities and their neurological correlates in real time.

Together, these datasets offer a comprehensive foundation for evaluating the FL-based framework. Each contributes distinct modalities processed at separate client nodes, enabling the simulation of real-world distributed healthcare environments while supporting robust, multimodal PD classification and FoG detection.

4. Results

Extensive experiments were performed using the federated learning framework to assess the contribution of different modalities, fusion strategies, and learning architectures. Results demonstrated strong performance across all experimental setups, confirming the robustness and versatility of the proposed approach.

4.1. PD Diagnosis Using EEG + fMRI

In experiments focused on EEG and fMRI integration using the PD-DS-I dataset, we observed that multimodal fusion outperformed single-modality models. When EEG and fMRI data were used in isolation, the ROC-AUC scores were 0.75 and 0.80, respectively. The experimental setup explored various configurations of EEG and fMRI data across client nodes and the central server within an FL framework to improve PD diagnosis. The experimental details are listed in Table 1.

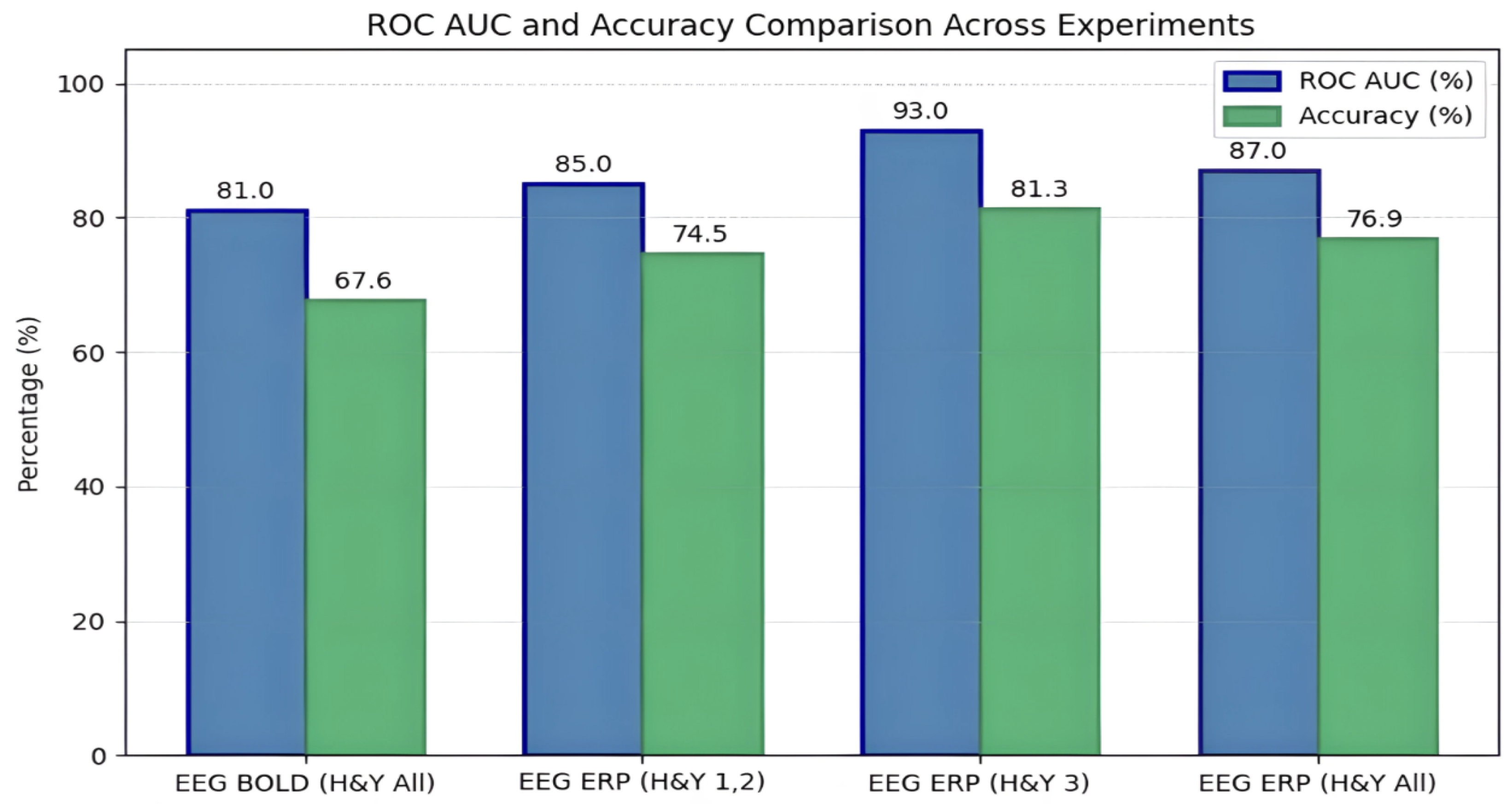

In Experiments 1 and 2, the data were strategically partitioned to simulate real-world data segregation across institutions. EEG BOLD signals and fMRI images were distributed between client nodes and the server in Experiment 1, achieving 67.6% accuracy (ROC AUC: 0.81). Experiment 2 focused on ERP images from EEG and included an additional variation with stricter stage-based training separation, leading to an improved accuracy of 74.5% and an ROC AUC of 0.85 when the central model was trained only on stage 1 data. These initial experiments highlighted the benefit of multimodal fusion and stage-specific modeling for enhanced diagnostic accuracy.

Subsequent experiments (3–8) examined the influence of disease stage and demographic segmentation. Experiment 3, which used only H&Y stage 3 data, achieved the highest ROC AUC among the reported experiments (0.93, with 81.3% accuracy), suggesting that advanced-stage data offer clearer biomarker signals. Including subjects from all H&Y stages (Experiment 4) lowered performance slightly to 76.9% accuracy (ROC AUC: 0.87).

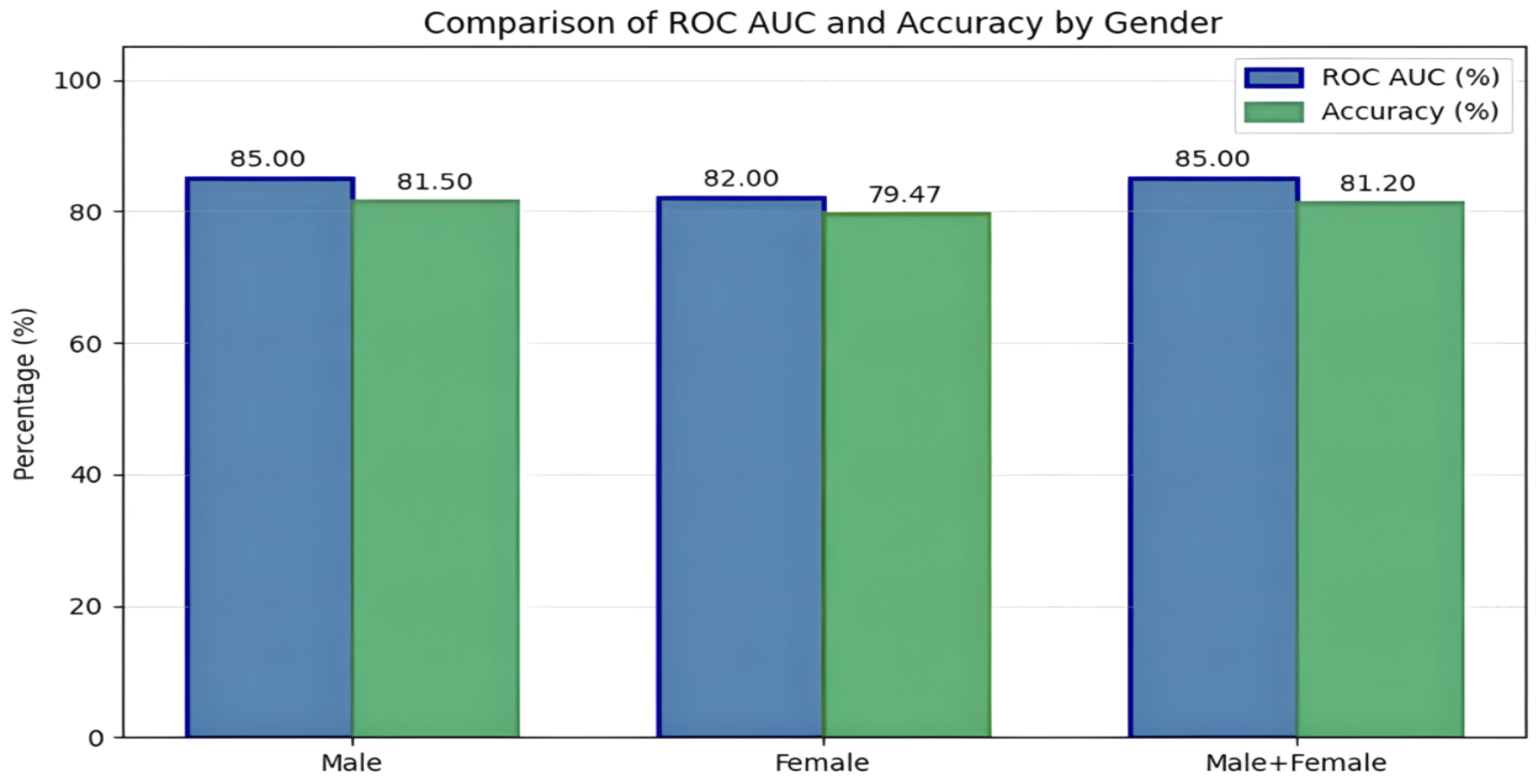

Gender-based analyses in Experiments 5–8 showed that male-only configurations marginally outperformed female-only configurations, although both performed well (up to 81.5% accuracy and an ROC AUC of 0.85 for males, versus 79.47% accuracy and an ROC AUC of 0.82 for females). Mixed-gender client configurations also yielded strong results (80.33–81.2% accuracy), underscoring the robustness of the FL system across varied subject distributions and supporting its utility in personalized, multimodal PD diagnostics. The performance of the stage-specific and gender-specific configurations is compared in Figure 2 and Figure 3.
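To make the stage- and gender-specific configurations concrete, the following is a minimal sketch, not the authors' code, of how subjects might be assigned to nodes by Hoehn and Yahr stage and gender. `Subject`, `make_cohorts`, the node names, and the first-match assignment rule are hypothetical; first-match assignment is simply one way to guarantee that no subject's data appears at more than one node.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Subject:
    subject_id: str
    hy_stage: int  # Hoehn and Yahr stage
    gender: str    # "M" or "F"


def make_cohorts(
    subjects: List[Subject], rules: Dict[str, Callable[[Subject], bool]]
) -> Dict[str, List[str]]:
    """Assign each subject to the first node whose rule accepts it.

    First-match assignment places every subject at no more than one node,
    so no subject's data is shared between clients or the server.
    Subjects accepted by no rule are excluded from that configuration.
    """
    cohorts: Dict[str, List[str]] = {name: [] for name in rules}
    for subject in subjects:
        for name, accepts in rules.items():
            if accepts(subject):
                cohorts[name].append(subject.subject_id)
                break
    return cohorts


# Hypothetical subjects and a stage-3-only, gender-split configuration.
subjects = [
    Subject("s01", 3, "M"),
    Subject("s02", 1, "F"),
    Subject("s03", 3, "F"),
    Subject("s04", 2, "M"),
]
rules = {
    "client_male_stage3": lambda s: s.hy_stage == 3 and s.gender == "M",
    "client_female_stage3": lambda s: s.hy_stage == 3 and s.gender == "F",
}
cohorts = make_cohorts(subjects, rules)
```

An all-stages or mixed-gender configuration only needs different predicates; the partitioning logic stays the same.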

4.2. Speech-Based FL Experiments

For speech biomarkers, several experiments using the Italian Parkinson’s Voice and Speech Database were designed around continuous speech and vowel phonation, as detailed in our earlier work [74]. LSTM models proved most effective for capturing time-dependent speech features, achieving testing accuracies of 83.88% (continuous speech) and 85.42% (vowels). Federated learning across two client nodes, each trained on a distinct speech type, followed by late fusion at the central server, achieved a central testing accuracy of 85% with an F1 score of 0.77. Federated setups spanning different datasets (i.e., vowel data from one dataset and continuous speech from another) further confirmed the framework’s ability to generalize across domains.

Together, the three experiments in this study show how data modality, client model choice, and data-source heterogeneity affect the performance of a Federated Learning (FL) framework for Parkinson’s Disease (PD) detection using speech biomarkers.

In the first experiment, we assessed the impact of deploying different machine learning models at the client nodes when all clients trained on the same data type from a single data source. The continuous speech and vowel pronunciation samples were each divided into three equal partitions. Each client independently trained a model on one partition, and the central server then aggregated the client outputs. Among the models evaluated (SVM, KNN, CNN, LSTM, and CRNN), LSTM outperformed the others on both data types, achieving testing accuracies of 83.88% on continuous speech and 85.42% on vowel pronunciation. CNN followed closely, particularly on continuous speech. The central server used a Deep Neural Network (DNN) with dense layers and dropout to integrate the client outputs, and this aggregation classified better than the individual clients. The precision and F1 score results reinforced LSTM’s ability to model temporal patterns in speech. The highest F1 score in this setup, 0.73, was obtained by the LSTM-LSTM model on vowel pronunciation data.
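The aggregation pattern in this first experiment (equal client partitions, with a server model that learns to combine the clients' outputs) is a form of stacking. The sketch below illustrates that pattern under deliberately simplified assumptions: synthetic two-class data stand in for the speech features, a nearest-centroid classifier stands in for the client models (SVM, KNN, CNN, LSTM, or CRNN in the study), and logistic regression stands in for the server DNN. All function names are hypothetical.

```python
import numpy as np


def fit_centroids(X, y):
    # Client model stand-in: one mean vector per class (0 = control, 1 = PD).
    return np.stack([X[y == c].mean(axis=0) for c in (0, 1)])


def client_score(centroids, X):
    # Positive score means the sample lies closer to the PD centroid.
    d0 = np.linalg.norm(X - centroids[0], axis=1)
    d1 = np.linalg.norm(X - centroids[1], axis=1)
    return d0 - d1


def fit_server(Z, y, lr=0.5, steps=500):
    # Server meta-model stand-in: logistic regression on standardized client scores.
    mu, sd = Z.mean(axis=0), Z.std(axis=0) + 1e-12
    Zs = (Z - mu) / sd
    w, b = np.zeros(Z.shape[1]), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-np.clip(Zs @ w + b, -30, 30)))
        w -= lr * Zs.T @ (p - y) / len(y)
        b -= lr * float(np.mean(p - y))
    return lambda Znew: (((Znew - mu) / sd) @ w + b > 0).astype(int)


def synthetic(rng, n, d=5):
    # Toy two-class data; NOT the speech features used in the study.
    y = rng.integers(0, 2, size=n)
    X = rng.normal(size=(n, d)) + np.where(y[:, None] == 1, 1.5, -1.5)
    return X, y


rng = np.random.default_rng(0)
X, y = synthetic(rng, 300)
parts = np.array_split(rng.permutation(len(y)), 3)  # three equal client partitions
clients = [fit_centroids(X[p], y[p]) for p in parts]

# Server data: each trained client contributes one score column.
X_srv, y_srv = synthetic(rng, 200)
Z = np.column_stack([client_score(c, X_srv) for c in clients])
predict = fit_server(Z[:100], y_srv[:100])
accuracy = float(np.mean(predict(Z[100:]) == y_srv[100:]))
```

Only client outputs (here, scores) reach the server; the raw client partitions never leave their nodes.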

The second experiment introduced a degree of heterogeneity by training the FL framework on the same data type—vowel pronunciation—but sourced from two different datasets. Each client node was trained on vowel data from a distinct source, while the central server trained on data segments from unseen subjects in the first dataset. LSTM models were again deployed at the client nodes. To study the trade-off between communication frequency and model performance, different numbers of communication rounds were tested, ranging from 5 to 200. The results showed that increasing the number of rounds up to 100 improved testing accuracy and F1 score—reaching 83.66% and 0.7651, respectively—with manageable training time. Beyond 100 rounds, however, the accuracy gains diminished while the training time increased sharply, illustrating the saturation point for communication efficiency in FL settings. This experiment validated the FL framework’s capacity to generalize across different data sources while maintaining high classification accuracy and precision, even in the presence of inter-dataset variability.
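The trade-off between communication rounds and performance can be illustrated with a minimal federated averaging loop. This is a sketch under stated assumptions: it uses plain FedAvg (averaging client weights in proportion to their sample counts) on a noise-free linear regression toy problem, whereas this paragraph does not specify the aggregation rule used in the speech runs; `local_update`, `fedavg`, and the learning rate are hypothetical. Each iteration of the outer loop is one communication round, and `epochs` corresponds to the number of local epochs E.

```python
import numpy as np


def local_update(w, X, y, epochs, lr=0.1):
    # E local epochs of full-batch gradient descent on mean squared error.
    for _ in range(epochs):
        w = w - lr * X.T @ (X @ w - y) / len(y)
    return w


def fedavg(clients, dim, rounds, epochs):
    """FedAvg: each round, clients start from the global model, train locally,
    and the server averages their weights in proportion to local sample counts."""
    w = np.zeros(dim)
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    for _ in range(rounds):  # one download/upload exchange per round
        local = [local_update(w, X, y, epochs) for X, y in clients]
        w = np.average(np.stack(local), axis=0, weights=sizes)
    return w


rng = np.random.default_rng(1)
w_true = np.array([1.0, -2.0, 0.5])
clients = []
for n in (40, 60, 80):  # three clients of unequal size (toy data)
    X = rng.normal(size=(n, 3))
    clients.append((X, X @ w_true))

w_few = fedavg(clients, dim=3, rounds=2, epochs=5)
w_many = fedavg(clients, dim=3, rounds=100, epochs=5)
```

In this toy problem the error shrinks geometrically, so each additional round buys less improvement while communication cost grows linearly with `rounds`, which mirrors the saturation behaviour reported for the speech experiments.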

The third experiment evaluated the framework’s adaptability to heterogeneous data types from different sources. Here, one client was trained on continuous speech segments from dataset 1, while the other used vowel segments from dataset 2. At the central server, late (decision-level) fusion was employed: separate DNN models processed each data type independently, and their outputs were merged at the decision stage. Combining heterogeneous data types in this way preserved, and even enhanced, classification performance. The central server achieved an overall testing accuracy of approximately 85%, with a precision of 81.8% and an F1 score of 0.77. These outcomes, summarized in Figure 4, indicate that fusing diverse but complementary acoustic features strengthens the diagnostic signal in PD classification. Notably, this performance was comparable to or slightly better than that achieved with homogeneous data types alone, underscoring the framework’s robustness in realistic scenarios involving distributed, multimodal data.
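Decision-level (late) fusion can be sketched as combining the per-sample probabilities of the two modality-specific models. The text does not state the exact merging rule, so the convex weighting below, the default weight of 0.5, and the 0.5 decision threshold are assumptions; `late_fusion` is a hypothetical helper, and a learned combiner could replace the fixed weight.

```python
import numpy as np


def late_fusion(p_speech, p_vowel, weight=0.5, threshold=0.5):
    """Decision-level fusion of two modality-specific models.

    p_speech, p_vowel: per-sample PD probabilities from each data type's model.
    weight: share given to the continuous-speech model (assumed, e.g. tuned
    on validation data). Returns hard labels and fused probabilities.
    """
    p = (weight * np.asarray(p_speech, dtype=float)
         + (1.0 - weight) * np.asarray(p_vowel, dtype=float))
    return (p >= threshold).astype(int), p


labels, fused = late_fusion([0.9, 0.2, 0.6], [0.7, 0.1, 0.3])
```

Because only the final probabilities are combined, the two models may use entirely different input representations and architectures.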

4.3. Freezing of Gait Detection (EEG + Gait)

The MOVE dataset was used to assess Freezing of Gait (FoG) detection. Client nodes processed EEG and gait (EMG and accelerometry) data independently, and fusion was conducted at the central server. Because of the limited number of participants (n = 12), we employed SVM, k-NN, and lightweight CNN classifiers with L2 regularization. Feature-level fusion combined entropy-based gait features with ERP-derived EEG embeddings. In the federated setting, a hybrid CNN model at the server, trained on the aggregated client outputs, yielded a training accuracy of 92% and a validation accuracy of 87%.
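The gait side of this feature-level fusion can be sketched as follows. The text says only that the gait features are entropy-based, so the choice of normalized spectral entropy (rather than, for example, sample or permutation entropy) is an assumption, as are the helper names and the all-zero placeholder EEG embedding; the example signals are synthetic.

```python
import numpy as np


def spectral_entropy(x):
    """Normalized Shannon entropy of the power spectrum, in [0, 1].

    Near 0 for a single dominant frequency, near 1 for a flat (noise-like) spectrum.
    """
    x = np.asarray(x, dtype=float)
    psd = np.abs(np.fft.rfft(x - x.mean())) ** 2
    p = psd / psd.sum()
    p = p[p > 0]  # skip empty bins, since 0 * log(0) is taken as 0
    return float(-(p * np.log2(p)).sum() / np.log2(len(psd)))


def gait_features(window):
    # window: (n_samples, n_channels) EMG/accelerometer segment -> one entropy per channel.
    return np.array([spectral_entropy(window[:, c]) for c in range(window.shape[1])])


def fuse(gait_feats, eeg_embedding):
    # Feature-level fusion by concatenation into one vector for the classifier.
    return np.concatenate([gait_feats, np.asarray(eeg_embedding, dtype=float)])


n = 256
t = np.arange(n)
tone = np.sin(2 * np.pi * 8 * t / n)             # single rhythmic component
noise = np.random.default_rng(0).normal(size=n)  # broadband, irregular signal
window = np.column_stack([tone, noise])
features = fuse(gait_features(window), eeg_embedding=np.zeros(4))
```

Since each modality is summarized into a fixed-length vector before concatenation, the EEG and gait streams do not need to be sample-synchronized.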

The experimental results underscore the effectiveness of both unimodal and multimodal approaches for detecting FoG in PD patients, with performance improving progressively with model complexity and data fusion. In the unimodal analysis, EEG-based classification yielded the best results, with a CNN model achieving the highest accuracy (92%), followed closely by an SVM model (90.36%); boosted decision trees, k-NN, and a Recurrent Neural Network (RNN) achieved slightly lower accuracies of 86.02%, 84.47%, and 84.36%, respectively. For gait-based detection, CNN again proved most effective, with an accuracy of 87%, ahead of boosted trees (85.61%), SVM (84.7%), and k-NN (83.2%). The results are summarized in Table 2.

Building on these individual modalities, early feature-level fusion of manually extracted EEG and gait features yielded a classification accuracy of approximately 90% with an RBF-SVM model. A fusion model applied to raw EEG and gait data improved on this, reaching 93% accuracy. Within the Federated Learning (FL) framework, the models trained on distributed EEG and gait data performed solidly on their own: the EEG-CNN reached training and validation accuracies of approximately 85% and 80%, and the gait-CNN approximately 88% and 83%, respectively. The hybrid model trained on the aggregated outputs of both clients substantially improved classification, achieving 92% training and 87% validation accuracy. The corresponding confusion matrices showed fewer false positives and false negatives, reinforcing the value of multimodal integration.

The central server further explored three advanced fusion strategies. Early feature fusion by concatenation produced an accuracy of 94.2%, a notable improvement over the earlier fusion techniques. A weighted feature fusion strategy, which adjusted the contributions of EEG and gait features according to their importance, raised accuracy further to 96.1%. The highest accuracy (97.8%) was obtained with attention-based feature fusion, which dynamically assigned relevance to the features of each modality and thereby captured nuanced relationships between the signals, optimizing the final classification output. The performance of the different fusion techniques for gait-EEG integration is compared in Table 3 and Figure 5.
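The three server-side fusion strategies can be contrasted in a few lines. This is a minimal NumPy sketch assuming fixed-length EEG and gait feature vectors per sample; the weights `w_eeg` and `w_gait`, the scale-then-concatenate form of weighted fusion, and the dot-product scoring against a `query` vector in the attention variant are illustrative assumptions, not the exact architecture used in the study. The attention variant additionally assumes both modalities have already been projected to the same width.

```python
import numpy as np


def concat_fusion(eeg, gait):
    # Early fusion: place both feature vectors side by side.
    return np.concatenate([eeg, gait], axis=-1)


def weighted_fusion(eeg, gait, w_eeg, w_gait):
    # Scale each modality by a fixed importance weight before concatenating.
    return np.concatenate([w_eeg * eeg, w_gait * gait], axis=-1)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def attention_fusion(eeg, gait, query):
    """Per-sample attention over two modality embeddings of equal width d.

    query: (d,) scoring vector, learned jointly with the classifier in practice.
    Returns the fused (batch, d) representation and the (batch, 2) modality weights.
    """
    stacked = np.stack([eeg, gait], axis=1)             # (batch, 2, d)
    scores = stacked @ query / np.sqrt(query.shape[0])  # (batch, 2)
    alpha = softmax(scores)                             # weights sum to 1 per sample
    fused = (alpha[..., None] * stacked).sum(axis=1)    # (batch, d)
    return fused, alpha


eeg = np.ones((4, 8))
gait = np.zeros((4, 8))
fused, alpha = attention_fusion(eeg, gait, query=np.ones(8))
```

The key difference is where the weighting comes from: weighted fusion applies the same modality weights to every sample, while attention recomputes them per sample from the features themselves.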

Across all fusion methods, improvements in accuracy were accompanied by increasingly balanced confusion matrices, indicating a robust capacity to identify both FoG and non-FoG events accurately. Collectively, these results validate the superiority of multimodal data fusion—particularly under a privacy-preserving federated learning framework—for enhancing the detection of FoG in Parkinson’s Disease.

4.4. Selection of Communication Rounds

Across our experiments, performance improved with the number of communication rounds and saturated near 100 rounds; beyond this point, accuracy gains diminished while training time rose sharply. This suggests that about 100 rounds offers a practical balance for clinical deployments. Preliminary testing also showed that increasing the number of local epochs (e.g., E = 5) reduced communication without significantly affecting accuracy. Future iterations of our framework will explore gradient compression, sparsification, and periodic update schemes to reduce bandwidth, which is particularly important for wearable and mobile health applications. The F1 score, testing accuracy, and training time of the central server are reported in Table 4.
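The text does not state how the 100-round operating point was chosen. One simple, hypothetical rule is to stop at the smallest tested round count after which the next tested setting adds less than a chosen accuracy gain; the helper `select_rounds` and the `min_gain` threshold below are assumptions for illustration only.

```python
def select_rounds(rounds, accuracies, min_gain=0.005):
    """Return the smallest tested round count beyond which the next tested
    setting improves accuracy by less than `min_gain` (absolute)."""
    if len(rounds) != len(accuracies) or not rounds:
        raise ValueError("need one accuracy per tested round count")
    for i in range(len(rounds) - 1):
        if accuracies[i + 1] - accuracies[i] < min_gain:
            return rounds[i]
    return rounds[-1]  # no saturation observed within the tested range
```

A training-time budget, using the times recorded alongside accuracy, could be added as a second stopping condition.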

Moreover, the gender-specific and stage-specific analyses revealed key insights into PD manifestation, supporting the case for personalized modeling. These findings indicate that the federated learning framework not only supports privacy-preserving training but also effectively combines heterogeneous modalities to enhance PD diagnosis and symptom classification across disease stages and patient demographics.

5. Discussion

The results of this study underscore the transformative potential of Federated Learning (FL) in the realm of Parkinson’s Disease (PD) diagnostics, especially when integrating heterogeneous data sources. Across all experiments, multimodal integration consistently outperformed single-modality analysis, validating the hypothesis that incorporating diverse biomarkers leads to more robust and generalizable diagnostic models.

One of the major contributions of this research is demonstrating that EEG and fMRI data, when fused within a federated architecture, can reveal underlying neural dysfunction more effectively than when analyzed separately. The performance gains in the stage-specific experiments, particularly those focusing on Hoehn and Yahr (H&Y) stage 3, suggest that more severe PD symptoms are accompanied by clearer neurobiological signals. This implies that future diagnostic systems should treat disease stage as a key parameter when training and deploying models.

The integration of speech biomarkers further adds depth to the framework by incorporating non-invasive, easily collectible data that reflect both motor and non-motor manifestations of PD. LSTM-based models performed well in capturing the temporal dependencies inherent in speech patterns, and the federated approach demonstrated resilience when integrating data from multiple databases. This validates the framework’s capacity to operate in realistic clinical scenarios where data types and sources are highly variable.

The strongest results were obtained for Freezing of Gait (FoG) detection using EEG, EMG, and accelerometry data. This is a substantial advance, as FoG is one of the most debilitating symptoms of PD. Attention-based fusion clearly outperformed both early and weighted fusion, suggesting that adaptive, relevance-based integration of multimodal features is crucial for capturing subtle gait abnormalities. The high accuracy (97.8%) achieved in this experiment supports the chosen model architecture and sets a promising benchmark for privacy-preserving gait analysis.

Furthermore, we acknowledge several limitations that merit discussion. First, while the MOVE dataset provided high-fidelity EEG and gait signals for Freezing of Gait (FoG) detection, the small sample size presents potential challenges for generalizability. To mitigate overfitting, we deliberately avoided deep neural networks and instead utilized shallow classifiers such as SVMs, k-NN, and CNNs with limited parameters. Additionally, we employed L2 regularization, dropout layers, and stratified cross-validation to enhance robustness. These strategies collectively helped reduce the risk of model overfitting given the limited dataset. Future work will incorporate power analysis to guide the design of minimally sufficient sample sizes and explore data augmentation techniques tailored to biosignals.
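Stratified cross-validation keeps the class ratio (e.g., FoG versus non-FoG) roughly equal across folds, which matters when data are scarce. Below is a dependency-free sketch of the idea; `stratified_kfold` is a hypothetical helper, and scikit-learn's `StratifiedKFold` provides the same functionality. When several windows come from the same participant, assigning whole participants to folds (as scikit-learn's `StratifiedGroupKFold` does) avoids leakage between training and test data.

```python
import numpy as np


def stratified_kfold(y, k, seed=0):
    """Yield (train_idx, test_idx) pairs whose test folds preserve the class ratio."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    folds = [[] for _ in range(k)]
    for cls in np.unique(y):
        # Shuffle this class's indices, then deal them into k near-equal chunks.
        idx = rng.permutation(np.flatnonzero(y == cls))
        for f, chunk in enumerate(np.array_split(idx, k)):
            folds[f].extend(chunk.tolist())
    all_idx = np.arange(len(y))
    for f in range(k):
        test = np.array(sorted(folds[f]), dtype=int)
        train = np.setdiff1d(all_idx, test)
        yield train, test


labels = [0] * 6 + [1] * 6  # toy labels: 6 non-FoG and 6 FoG samples
splits = list(stratified_kfold(labels, k=3))
```

Each toy fold holds out two samples of each class, so every model is evaluated on the same class balance it was trained on.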

We note the discrepancy in modality-specific sample sizes, particularly between the EEG–fMRI experiments (31 PD subjects) and the speech-based experiments (over 90 PD subjects). This variation could, in principle, introduce bias during multimodal fusion. However, fusion was performed only within paired-modality datasets (e.g., EEG with fMRI, or EEG with gait) and never across modalities with unmatched subject samples. Moreover, our weighted and attention-based fusion strategies dynamically adjusted the influence of each modality based on learned relevance, helping to reduce imbalance-induced skew. Cross-validation and equal partitioning were also used to ensure balanced model training.

The asynchronous nature of data collection across modalities (e.g., EEG and gait) raised valid concerns about temporal alignment. In our current framework, feature-level fusion was employed, where descriptive statistical and spectral features were extracted independently from each modality. These features were then fused without requiring real-time synchronization. For EEG, ERP-derived image representations captured temporal structure, while gait signals were summarized using entropy and spectral descriptors. Future iterations of our framework will explore time alignment using dynamic time warping and transformer-based multimodal encoders that are better suited for asynchronous signals.
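Dynamic time warping, named here as a direction for future work, aligns two sequences sampled at different rates or with variable timing by finding the minimum-cost monotone alignment between them. The following is a textbook O(nm) sketch with an absolute-difference cost; `dtw_distance` is a hypothetical helper and is not part of the current framework.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic dynamic time warping distance with absolute-difference cost."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n, m = len(a), len(b)
    # D[i, j] = cost of the best alignment of a[:i] with b[:j].
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend by a match, or repeat an element of either sequence.
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return float(D[n, m])
```

For example, `[0, 1, 2]` and `[0, 1, 1, 2]` have a DTW distance of zero, because warping lets the repeated sample align with a single one.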

Gender-specific analyses revealed slight performance differences, with male-only models achieving marginally higher classification scores compared to female-only models. While our dataset contained a reasonable gender balance, this finding suggests potential biological or hormonal influences on biomarker expression. The inclusion of Hoehn and Yahr staging allowed us to examine the effects of disease severity across demographic groups. Nevertheless, we did not incorporate explicit fairness-aware strategies such as adversarial debiasing or re-weighted loss functions. Future work will investigate gender-sensitive modeling strategies, including subgroup-specific federated learning and fairness constraints, to promote equity in diagnostic accuracy across populations.

Another important aspect is the scalability and privacy compliance of the proposed FL framework. Because collaborative model training proceeds without sharing raw data, the system supports compliance with HIPAA and GDPR requirements, making it suitable for clinical environments where patient privacy is paramount. Moreover, the use of FedDyn, which applies dynamic regularization to client updates, helps keep models robust on non-IID (not independent and identically distributed) data, which is common in multi-center medical research. Although this choice improved stability in our experiments, a comprehensive comparison with other federated learning strategies (e.g., FedProx, SCAFFOLD) will be pursued in future work to further validate robustness.
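The core of FedDyn can be illustrated on a toy problem. The sketch below assumes full client participation and quadratic client losses L_k(theta) = 0.5 * ||theta - c_k||^2, so each client's regularized local subproblem has a closed-form solution; in practice that subproblem is solved approximately with SGD on the actual model. The function name, the value of alpha, and the toy targets are assumptions. The update rules follow the original FedDyn algorithm: each client keeps a gradient state, and the server keeps a correction term h that is subtracted from the averaged model.

```python
import numpy as np


def feddyn_quadratic(targets, alpha=0.1, rounds=50):
    """FedDyn with full participation on toy losses L_k(t) = 0.5 * ||t - c_k||^2."""
    targets = np.asarray(targets, dtype=float)  # (m clients, d)
    m, d = targets.shape
    theta = np.zeros(d)        # server model
    grads = np.zeros((m, d))   # per-client gradient state
    h = np.zeros(d)            # server correction state
    for _ in range(rounds):
        # Local step (closed form for the toy loss):
        # argmin_t L_k(t) - <grads_k, t> + alpha/2 * ||t - theta||^2
        local = (targets + grads + alpha * theta) / (1.0 + alpha)
        # Client state update: grads_k <- grads_k - alpha * (local_k - theta)
        grads = grads - alpha * (local - theta)
        # Server update (with partial participation, h would average over all m clients).
        h = h - alpha * (local - theta).mean(axis=0)
        theta = local.mean(axis=0) - h / alpha
    return theta


client_optima = np.array([[0.0, 0.0], [2.0, 4.0], [4.0, 2.0]])
theta = feddyn_quadratic(client_optima)
```

Because the minimizer of the summed toy losses is the mean of the client targets, the returned server model should approach that mean even though each client's own optimum differs.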

From a methodological perspective, the comparative analysis of fusion techniques provides a roadmap for future research. While early fusion offers simplicity and reasonable accuracy, attention-based fusion emerges as the most effective strategy. Its ability to assign weights dynamically based on the contextual relevance of features allows for more nuanced interpretation of complex physiological and behavioral signals. Overall, this research contributes a scalable, interpretable, and privacy-aware diagnostic solution for Parkinson’s Disease. It serves not only as a validation of federated learning in neurodegenerative diagnostics but also as a blueprint for integrating additional modalities such as genomics, proteomics, and longitudinal health records in future work.

Future improvements may involve real-time model updates, cross-device personalization, and synthetic data augmentation to enhance both accuracy and generalizability. To validate the global relevance and clinical utility of the framework, benchmarking across diverse international datasets and clinical cohorts will be critical. The current FL setup assumes ideal conditions, such as consistent client participation and sufficient bandwidth, whereas real-world deployments often face intermittent client dropout, limited computational resources, and evolving data distributions. The architecture is nonetheless designed to accommodate such conditions: planned improvements include fault-tolerant asynchronous training protocols, dropout-tolerant aggregation (e.g., client sampling with redundancy), and on-device adaptation via lightweight models or federated distillation.

6. Conclusions

This extended research demonstrates the growing potential of federated multimodal learning for advancing Parkinson’s Disease diagnosis. By integrating biomarkers in specific multimodal combinations (EEG with fMRI, continuous speech with vowel phonation, and EEG with gait signals from EMG and accelerometry), the proposed framework achieves high diagnostic accuracy while preserving patient privacy through decentralized Federated Learning. Our approach addresses the limitations of unimodal models and isolated datasets, offering a scalable and privacy-compliant pathway to clinical translation.

This study also emphasizes the importance of accounting for demographic factors and modality-specific biases in multimodal learning. Our results suggest that sample size limitations can be mitigated through model simplification, regularization, and careful validation, and that asynchronous signals can still be fused through feature-level integration. Moving forward, synchronization-aware fusion, fairness-aware learning strategies, and experimental design guided by power analysis will be prioritized to further enhance the generalizability, robustness, and equity of the proposed federated diagnostic framework.

To build on this foundation, future work will expand the framework to incorporate multi-center, cross-domain datasets, enabling greater generalization across diverse populations and institutions. Particular attention will be given to refining temporal fusion strategies, which are essential for capturing dynamic disease progression and improving predictive power in early-stage PD detection. Future experiments could also integrate omics data (e.g., genomics, proteomics, and metabolomics) to provide molecular-level insights that complement the current physiological and behavioral biomarkers.

Leveraging edge-computing-enabled wearable devices to collect real-time data streams from multiple sources (e.g., smart insoles, wristbands, and EEG caps) can enable continuous monitoring and early symptom prediction. Testing personalized federated learning strategies by clustering patients based on disease stage, medication profile, or gender, and training sub-models within each subgroup before merging them via meta-fusion could improve sensitivity to clinical nuances and ensure equitable model performance.

Rigorous validation of generalization across stages, demographics, and environmental conditions will be essential. Synthetic data augmentation, domain adaptation, and cross-site transfer learning will be incorporated to further improve robustness and adaptability. Collectively, these extensions will bring the proposed system closer to real-world deployment and support its adoption in future intelligent healthcare systems.