The purpose of this section is to explore the most prominent advances in AI within the field of computer science. We have identified four key areas of focus: Internet of Things (IoT), machine learning and deep learning, natural language processing (NLP), and software development.

4.1. AI in Internet of Things

Internet of Things (IoT) has evolved from an experimental idea to a cornerstone technology in the modern digital world. Early examples can be traced back to the 1990s, such as John Romkey’s internet-connected toaster [44], but IoT has since grown into a highly advanced system of interconnected devices capable of automation and real-time data exchange. Today, the IoT is understood as a vast network of sensors, actuators, and communication technologies that enable data collection, sharing, and automation across several application domains [45].

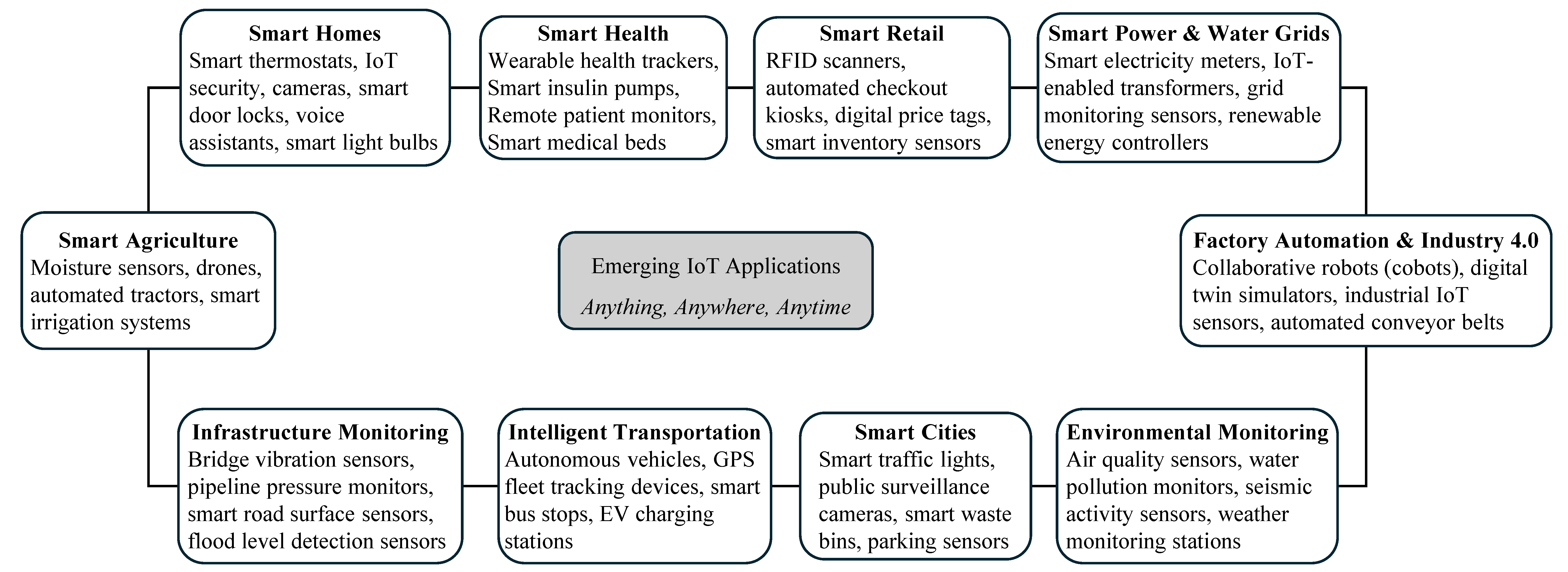

Figure 2 shows the diversity of IoT applications. As IoT networks continue to grow, AI has become instrumental in equipping devices with the power not only to collect and share data but also to make intelligent decisions. This evolution is particularly evident in various application domains, from home-based networks to industrial systems, where AI-driven IoT systems have shown improved responsiveness compared to traditional systems [46]. In addition to improving responsiveness, AI also contributes to improving performance and security in IoT networks. By integrating with blockchain, for example, AI facilitates tamper-proof, decentralized authentication mechanisms for key management. In practice, as described by [47], blockchain provides an immutable, decentralized ledger for device authentication and key distribution, whereas AI improves this framework by dynamically monitoring device behavior and applying adaptive trust policies. This synergy between AI and blockchain not only prevents key compromise and tampering but also supports real-time anomaly detection, ensuring that even valid keys are not misused for spoofing or session hijacking. The combination of AI and blockchain provides a scalable, tamper-resistant approach to IoT authentication that strengthens resilience against insider threats and man-in-the-middle exploits. Moreover, beyond the improvement in responsiveness and security, AI techniques are also used to optimize resource allocation and reduce energy consumption in various application domains (smart cities, healthcare, agriculture, industrial IoT systems, etc.). For example, artificial intelligence of things (AIoT) has been used in agriculture to optimize irrigation and crop health monitoring, thus improving both efficiency and resource management [48]. AIoT has also been applied to smart city functions, such as optimizing traffic flow [49].

Furthermore, AI is instrumental in improving real-time data processing [50]. Edge computing, in particular, enables data processing closer to the source, reducing latency and bandwidth consumption in IoT networks. Using AI on the edge, IoT applications benefit from capabilities such as predictive analytics, anomaly detection, and autonomous decision making [50,51]. A concrete example of these benefits is provided by [52], who demonstrated that coupling AI-based decision-making algorithms with IoT sensors can effectively predict system failures and optimize production processes in cyber-physical production systems (CPPS) in real time. Building on these local improvements, AI is also making its mark in next-generation communications. In particular, AI has been used to optimize network design and operation for 5G and Beyond 5G (B5G) technologies in complex environments such as massive multiple-input multiple-output (MIMO) systems [53]. Another key advancement influenced by AI, as discussed by [54], is in digital twins, where AI-enabled sensors, combined with 5G and IoT, improve the efficiency of sensing technologies and enable real-time data processing and decision making across various domains, including smart homes and robotic control systems. While digital twins exemplify its integration with virtual applications, AI’s impact extends to human-centered applications as well. This is particularly visible in domains such as elderly care and autonomous robotics, where AI-driven systems provide real-time assistance through federated platforms and edge computing [55,56].
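As a rough illustration of the edge-side anomaly detection mentioned above, the following minimal sketch flags sensor readings that deviate strongly from a rolling window of recent values. It is not taken from any of the cited works: the class name `RollingAnomalyDetector`, the window size, the warm-up length, and the z-score threshold are all assumptions chosen for illustration, and a deployed system would more likely use a trained model.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Hypothetical edge-side detector: flags readings far from the recent mean."""

    def __init__(self, window=20, threshold=3.0, warmup=5):
        self.window = deque(maxlen=window)  # most recent normal readings
        self.threshold = threshold          # z-score above which a reading is flagged
        self.warmup = warmup                # readings needed before any check is made

    def update(self, value):
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.window) >= self.warmup:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Only normal readings update the baseline, so one spike does not mask the next.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingAnomalyDetector()
    readings = [20.0, 20.1, 19.9, 20.2, 20.0, 19.8, 20.1, 35.0, 20.0]
    print([detector.update(r) for r in readings])
```

Because the check uses only a short buffer of local readings, it can run on the device itself and send only flagged events upstream, which is the latency and bandwidth argument made for edge processing.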

Figure 2. Internet of Things. Selected reference for each IoT domain: smart homes [57]; smart health [58]; smart retail [59]; smart power and water grids [60]; smart agriculture [61]; infrastructure monitoring [62]; intelligent transportation [63]; smart cities [64]; environmental monitoring [65]; factory automation and industry 4.0 [66].

Recent advancements in AI for edge computing have further enhanced IoT systems by addressing the specific security challenges faced by edge nodes (ENs). AI-driven techniques are used to improve intrusion detection systems (IDS), which detect denial of service (DoS) and distributed denial of service (DDoS) attacks with greater precision [67]. Machine learning (ML) extracts malicious patterns from previous datasets, improving IDS detection rates compared to traditional methods [68]. AI is also used to optimize access control by categorizing ENs based on privileges. This enables stricter access restrictions for high-privilege IoT devices, mitigating potential attacks [68]. The integration of AI across these systems helps provide real-time decision making and improves the scalability of IoT networks, making them more efficient and secure. Next, AI’s role in real-time detection of security attacks is emphasized by its integration with complex event processing (CEP) models, which collect and correlate data to identify threats such as DoS, malware, and privacy breaches [69]. By combining CEP with machine learning, these systems can rapidly adapt to new types of attacks, even those not explicitly modeled. This has proven to be very effective in sectors such as IoT healthcare, where real-time attack detection is critical [69]. This is further evidenced by techniques such as support vector machines (SVM) and random forest (RF), known for their high detection accuracy and commonly used for feature selection and attack classification [20]. In addition, deep neural networks (DNNs) and convolutional neural networks (CNNs) offer alternatives for learning complex features [68].

Recent research has highlighted the role of AI in enabling autonomic behaviors in next-generation computing systems, particularly in cloud, fog, and edge computing environments. AI-driven edge computing facilitates real-time data processing and autonomous decision making, reducing latency and enhancing the efficiency of IoT applications [51,70]. Moreover, AI-based resource management in edge and fog computing optimizes resource allocation while minimizing energy consumption [50]. In addition, AI has proven critical for the development of self-healing and self-protecting IoT systems, allowing automated anomaly detection and system optimization. This autonomic capacity enables IoT networks to scale efficiently and handle the huge data loads generated by billions of interconnected devices, ensuring robust security and performance [20,70].

AI has been at the core of many advancements in IoT, as supported by the literature, yet we believe there is still untapped potential to unlock, provided certain challenges are addressed. Firstly, while IoT security has improved, data privacy remains a sensitive area [71]. This is due to the pervasive nature of IoT: a widespread integration across several domains and a continuous collection and sharing of data across devices. Future AI solutions in IoT therefore need to account for data privacy. In designing them, the findings of recent research on privacy preservation methods should be considered, such as privacy partition, lightweight obfuscation, energy theft detection with energy privacy preservation in smart grids, and local differential privacy [71,72,73]. These methods ensure that privacy and security are integral components of the design process, rather than being an afterthought. Blockchain and federated learning are other techniques that can also be combined with AI to ensure data privacy. Federated learning allows training models without transferring sensitive data [74], while blockchain ensures secure and decentralized authentication [47]. Secondly, there is unpredictability and bias involved when decisions are made by AI models, especially in human-centered applications such as elderly care, smart healthcare monitoring, and AI-driven rehabilitation systems. Therefore, AI models should be provided with considerable and diverse training data to ensure the optimal accuracy of their predictions and recommendations. The research in explainable AI conducted by [21,75,76] highlights how to identify and eliminate potential biases. Explainable AI facilitates a better understanding of the reasoning behind AI predictions, improves the user’s trust, and maintains a level of accountability regarding the decision making of AI models.
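To make the idea of local differential privacy more concrete, the sketch below uses randomized response, a classic mechanism in which each device perturbs a single binary reading before sending it, so the collector never sees the true value yet can still estimate the population rate. This is an illustrative example, not the method of the cited studies; the function names and the choice of a one-bit signal are assumptions.

```python
import math
import random


def randomized_response(true_bit, epsilon, rng):
    """Report the true bit with probability e^eps / (e^eps + 1), otherwise flip it.

    The ratio between the two reporting probabilities is e^eps, which is the
    epsilon-local-differential-privacy guarantee for a single bit.
    """
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return true_bit if rng.random() < p_truth else 1 - true_bit


def estimate_rate(reports, epsilon):
    """Debias the observed fraction of 1s to estimate the true fraction."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    # E[observed] = rate * p + (1 - rate) * (1 - p); solve for rate.
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical population: 30% of devices observe the sensitive event.
    true_bits = [1] * 6000 + [0] * 14000
    reports = [randomized_response(b, epsilon=1.0, rng=rng) for b in true_bits]
    print(round(estimate_rate(reports, epsilon=1.0), 3))
```

A smaller `epsilon` gives each device stronger deniability but makes the aggregate estimate noisier, which is the privacy and utility trade-off that such designs have to balance.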

AI has enabled remarkable achievements in the Internet of Things by enhancing security, scalability, and real-time decision making. However, addressing challenges such as data privacy, unpredictability, and bias remains crucial to unlocking its future potential. As critical infrastructures continue to integrate with the Internet of Things, AI models will be needed to handle the vast amount of data that comes with them. Ensuring privacy will remain a key aspect within these systems. Future research is trending towards the use of privacy-preserving techniques like federated learning and differential privacy to protect sensitive information while enabling the efficient use of AI. As IoT expands across more sectors, the urgency of these challenges and potential solutions will likely increase.

Table 2 offers a comparative mini-synthesis of IoT-related studies, tabulating specific tasks, datasets, model families, headline metrics, and dataset limitations.

4.2. AI in Machine Learning and Deep Learning

Machine learning (ML) and deep learning (DL) are subfields of AI that have changed the way data are processed and interpreted. ML involves algorithms that make predictions and/or decisions based on patterns learned from data [77]. DL, on the other hand, employs multiple layers of artificial neural networks to find complex patterns in large datasets [78]. Both techniques have advanced enormously in recent years, owing to factors such as the availability of extensive data, growth in computational power, and algorithmic innovations [79]. Current progress in ML has focused on optimizing various learning techniques, such as supervised, unsupervised, and reinforcement learning. Refs. [75,80] discuss the use of reinforcement learning in dynamic environments where policies are continuously adapted to optimize outcomes. AI-driven decision-making systems in autonomous vehicles are an example where reinforcement learning techniques are adapted to real-time changes in traffic conditions and pedestrian behavior. Similarly, refs. [81,82] highlight advances in meta-learning, a technique that enables models to learn new tasks with minimal training time and data. It has been used effectively in personalized healthcare to diagnose rare conditions using a limited number of labeled examples.

Further advances in reinforcement learning were explored in [83] by integrating reward-shaping techniques to accelerate the learning process. Fine-tuned reward signals significantly improve the efficiency of training AI agents in complex environments such as game simulations and robotic control tasks. Active learning [84,85] is another example, in which the model selectively queries an annotator for labels on the most informative data points. With this technique, a model can achieve high accuracy despite accessing a limited amount of labeled data, making it highly suitable for applications in medical diagnostics, where obtaining labeled data is often expensive and time consuming.
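One common way to decide which data points are the most informative is uncertainty sampling, sketched below for a binary classifier. This is a generic illustration rather than the specific strategy of the cited works; the function names and the example probabilities are hypothetical, and the predicted probabilities are assumed to come from whatever model is currently being trained.

```python
import math


def binary_entropy(p):
    """Entropy (in bits) of a binary prediction with positive-class probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def select_queries(pool_probs, k):
    """Return the indices of the k unlabeled items the model is least sure about."""
    ranked = sorted(
        range(len(pool_probs)),
        key=lambda i: binary_entropy(pool_probs[i]),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical model outputs for five unlabeled scans.
    pool_probs = [0.98, 0.52, 0.10, 0.45, 0.80]
    print(select_queries(pool_probs, k=2))  # indices to send to the annotator
```

In a full loop, the selected items would be labeled, added to the training set, and the model retrained before the next round of queries.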

Deep learning architectures have seen considerable innovation, with developments in convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformers enhancing performance across various tasks. The studies of [84,86] illustrate the superior precision of transformers in natural language processing tasks. Their self-attention mechanisms allow them to capture long-range dependencies in text. For instance, refs. [87,88] illustrate examples of transformers being used in language translation, where they outperform traditional sequence-to-sequence models by producing more coherent translations. Meanwhile, CNNs have broken through in medical imaging and autonomous driving, in addition to traditional image processing. Refs. [56,89] discuss specific cases in which CNNs detect anomalies in medical scans. This has enabled the early diagnosis of conditions such as lung cancer and diabetic retinopathy in patients. Lightweight neural networks, such as MobileNet and SqueezeNet, are also efficient alternatives to traditional CNNs. They can be used in scenarios where computational resources are limited, but efficiency and accuracy are still expected. These architectures are well suited to devices with limited resources, such as smartphones and IoT sensors [90,91].

Hybrid models have emerged with the integration of ML and DL with other AI techniques. Refs. [77,92] discuss how combining ML algorithms with fuzzy logic, genetic algorithms, or traditional statistical approaches can improve decision-making processes in complex environments. For example, hybrid AI models have been used in predictive maintenance systems for manufacturing [93]. The combination of ML and expert knowledge has produced a more accurate prediction of equipment failures. ML and DL have driven significant advancements in numerous domains. In healthcare, AI-powered diagnostic systems have improved the accuracy of disease detection and patient monitoring, as demonstrated in studies by [94,95,96]. For example, deep learning algorithms trained on large medical image datasets have detected skin cancer at a level of accuracy comparable to that of expert dermatologists [97]. In robotics and autonomous systems, reinforcement learning techniques are used to improve navigation and control algorithms, enabling more adaptive and resilient robot behaviors in unstructured environments [98]. Other studies highlight how deep learning has enabled autonomous drones to perform real-time detection and avoid collisions over complex terrain [99,100].

As AI models grow in complexity, three concerns have become crucial areas of focus: scalability, privacy, and efficiency. Refs. [53,101,102] have proposed continuous improvements in hardware and algorithms to manage energy consumption and reduce costs, as potential solutions for handling the growing computational requirements of state-of-the-art models.

The research discussed by [93,103,104] emphasizes the development of distributed training techniques and model parallelism, which allow for faster training of large-scale neural networks across multiple GPUs. These techniques have been applied to large-scale language models, such as GPT-3, to significantly reduce training times and computational costs [78,105,106]. Other methods such as model pruning, quantization, and knowledge distillation have been used to reduce the computational load of AI models with little or no loss of accuracy, making them well suited to deploying AI models on edge devices [107,108]. The emergence of federated learning is another key development that addresses scalability and data privacy. Federated learning decentralizes model training across data sources while preserving data privacy. Refs. [109,110] discuss how federated learning is being applied in healthcare, where models are trained on data from multiple hospitals without sharing sensitive patient information. Beyond decentralized training, federated learning also allows personalized model refinement, as shown by [111], leading to more accurate and personalized predictions. Differential privacy is another method that offers solutions, especially for data leakage risks when training complex models [112,113].
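The federated setting described above can be sketched with federated averaging, in which each site trains locally and only model parameters, not raw records, are sent to the server. The example below is a deliberately small illustration under stated assumptions: the model is a single-weight linear regressor, the three "hospital" datasets are made up, and the function names are hypothetical. It is not the protocol of any cited system.

```python
def local_update(w, data, lr=0.05, epochs=5):
    """Run a few gradient-descent steps on one client's private data (model: y = w * x)."""
    for _ in range(epochs):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global, clients):
    """One round: every client trains locally, the server averages by sample count."""
    updates = [(local_update(w_global, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


if __name__ == "__main__":
    # Hypothetical private datasets held by three sites, all following y = 2x.
    clients = [
        [(1.0, 2.0), (2.0, 4.0)],
        [(3.0, 6.0)],
        [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
    ]
    w = 0.0
    for _ in range(20):
        w = federated_round(w, clients)
    print(round(w, 4))
```

Only the scalar `w` crosses the network in each round; the `(x, y)` pairs never leave the client that owns them. Real deployments typically add secure aggregation or differential privacy on top, since model updates themselves can leak information.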

Machine learning and deep learning have made substantial strides in advancing the field of AI, driving innovations across various applications, and improving automation, decision making, and predictive capabilities. Their success is largely driven by the increasing availability of data, advances in computational power, and the development of innovative learning algorithms. Improvements in accuracy and efficiency have been observed across several domains (healthcare, natural language processing, autonomous systems, smart agriculture, etc.) with the use of ML and DL techniques. However, challenges such as data privacy, scalability, and algorithmic bias persist. As models become more complex, researchers should focus on scalability and energy efficiency to avoid unsustainable computational costs. Likewise, protecting sensitive data and addressing bias are other issues that need to be considered. Advancements in explainable AI and hybrid models, which combine traditional methods with ML/DL techniques, will continue to improve transparency and trust in AI systems. Ultimately, the integration of ML and DL across industries will continue to shape the future of AI, opening up new possibilities in fields such as quantum computing, real-time decision making, and personalized AI applications.

Table 3 offers a comparative mini-synthesis of ML/DL-related studies, tabulating specific tasks, datasets, model families, headline metrics, and dataset limitations.

4.3. AI in Natural Language Processing

In recent years, AI-driven advances have revolutionized natural language processing (NLP), pushing the field beyond traditional rule-based approaches and statistical models. NLP, at its core, seeks to enable computers to understand, interpret, and generate human language in a way that is both meaningful and contextually relevant [114]. The early methods in NLP involved explicit programming, in which linguists crafted sets of rules that attempted to capture the structure and syntax of the language. Although these rule-based systems were pioneering at the time, they were inherently limited by their rigidity and dependence on human-defined rules, failing to capture the nuance and fluidity of human language [115]. The introduction of AI, particularly through machine learning and deep learning techniques, allowed NLP to evolve from these fixed approaches to a data-driven discipline capable of adapting to diverse language tasks [114].

The transformational shift in NLP began with the adoption of machine learning, where statistical methods allowed models to learn patterns from data without requiring explicit rules [115]. However, it was the emergence of deep learning, specifically neural networks with multiple layers, that provided the power necessary to recognize and generate complex language patterns [79]. This evolution led to the development of large-scale language models capable of understanding language context in ways previously unimaginable. AI models trained on vast datasets, comprising billions of words and sentences, started outperforming traditional approaches in tasks such as translation, summarization, and sentiment analysis [116,117,118].

Today, the capabilities of NLP are largely supported by these AI advances, which make them central to both foundational research and applied technologies in the field. A pivotal advancement in NLP came with the development of transformer architectures, which introduced a new way to process and understand language. Traditional models, such as recurrent neural networks (RNNs), struggled with long-term dependencies due to their sequential nature, which made them less effective in retaining context over longer text sequences [119]. Transformers addressed this limitation through a self-attention mechanism that allowed models to weigh the importance of each word in a sentence relative to every other word, regardless of their position. Introduced by [119], transformers replaced RNNs and quickly became the foundation for a new era of NLP models. This architecture laid the groundwork for the development of models such as bidirectional encoder representations from transformers (BERT), generative pre-trained transformer (GPT), and text-to-text transfer transformer (T5), which have become central to state-of-the-art NLP applications. As discussed in [119], through transformers, models can handle context more flexibly and accurately, allowing AI to process text at a scale and depth that mirror human comprehension. These transformer-based models are often referred to as large language models (LLMs), and they represent some of the most significant advancements in NLP. LLMs, such as BERT [120] and GPT [121], take advantage of vast datasets and billions of parameters to capture a wide range of linguistic features and patterns. In essence, LLMs operate by pre-training on extensive text corpora, where they learn underlying language structures, relationships, and contexts. During pre-training, models use objectives like masked language modeling, where certain words are hidden and the model learns to predict them, or autoregressive techniques, where models generate text one token at a time based on preceding context. This extensive training enables LLMs to generalize across many NLP tasks, including translation, question answering, and summarization, as seen with the original GPT models [122,123].
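The self-attention step described above, in which every token weighs every other token regardless of position, can be sketched as scaled dot-product attention. The version below is a simplified, single-head illustration in plain Python: it omits the learned query, key, and value projections and the multi-head structure of real transformers, and the toy two-dimensional token vectors are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one row per token."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this token's query with every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted mix of all value vectors.
        outputs.append(
            [sum(w_j * v[c] for w_j, v in zip(w, values)) for c in range(len(values[0]))]
        )
    return outputs, weights


if __name__ == "__main__":
    # Three toy token embeddings; in this sketch they serve as Q, K, and V alike.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = self_attention(tokens, tokens, tokens)
    for row in weights:
        print([round(x, 3) for x in row])
```

Each row of `weights` sums to one and is computed from all tokens at once, which is why distance in the sequence does not by itself weaken the connection between two words, in contrast to the step-by-step processing of an RNN.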

BERT was among the first models to leverage the power of transformers in a bidirectional way, which means that it could consider both the preceding and following context for each word in a sentence. This bidirectional approach was a breakthrough, as it allowed a more nuanced understanding of context, critical for tasks such as question answering and sentiment analysis [120]. Similarly, models such as the GPT introduced by OpenAI showcased the potential of unidirectional transformers for text generation. GPT models, particularly GPT-3, demonstrated the capability of few-shot learning, where the model could perform tasks with minimal examples, showcasing an unprecedented level of adaptability [124,125]. Notably, recent iterations of GPT, such as GPT-4 and beyond, have further scaled up in both parameter size and training techniques, resulting in significant improvements in understanding complex queries, providing contextual responses, and even performing domain-specific tasks with higher accuracy [126]. These large language models have had a transformative impact on the field of NLP. Not only have they set new benchmarks in terms of performance on various tasks, but they have also redefined how AI can be applied to language. Refs. [126,127] mentioned that GPT-3, for example, with its 175 billion parameters, has shown impressive performance in tasks ranging from translation to creative writing, and it can generate coherent and contextually appropriate text with minimal supervision. Such capabilities underscore the power of LLMs and the way their AI-driven architecture has enabled them to handle a wide array of language tasks with high accuracy. The sheer scale and adaptability of LLMs have made them fundamental tools in NLP, where they serve as the basis for numerous downstream applications.

Transfer learning and fine-tuning have further amplified the utility of large language models by allowing them to quickly adapt to specific tasks with limited additional data [128]. Transfer learning allows a pre-trained model to be fine-tuned on a smaller, task-specific dataset, making it feasible to apply these models in various contexts without requiring vast amounts of labeled data. For example, BERT can be pre-trained on a large general corpus and then fine-tuned on a specialized dataset for tasks such as sentiment analysis [129]. This adaptability has made transfer learning a key method in NLP, as it significantly reduces the computational costs and time associated with training models from scratch. Fine-tuning models that were pre-trained with objectives such as masked language modeling and next-sentence prediction allows them to excel in specific applications, such as classification, summarization, and information retrieval [128]. In addition to the general-purpose applications mentioned above, transfer learning has also opened doors to domain-specific adaptations. By fine-tuning on domain-specific corpora, NLP models can be customized for specialized fields such as legal, medical, and scientific NLP. In medical NLP, for example, fine-tuning on clinical notes or medical research papers allows models to interpret complex jargon and extract critical information relevant to healthcare professionals and patients [130,131]. Similarly, in the legal field, models tuned to case law and statutory language can assist in legal research by retrieving pertinent case precedents or summarizing lengthy legal documents [132,133]. These domain-specific adaptations highlight the versatility of AI-driven NLP models, which can be modified to meet the needs of different sectors without extensive re-engineering.

The role of AI in multilingual NLP is equally transformative, particularly as NLP applications expand to global audiences. Traditional NLP models often faced limitations in handling languages other than English due to a lack of labeled data in other languages [134]. However, advances in multilingual models, such as mBERT and XLM-R, have enabled models to perform cross-lingual tasks by training on multiple languages simultaneously [135,136]. These multilingual models can handle a wide range of languages, offering a level of inclusivity that supports linguistic diversity in NLP applications. For example, mBERT has been used effectively in translation tasks and zero-shot learning, where a model trained on one language can perform tasks in another language without explicit training data in that language [137].

Although earlier AI-driven NLP architectures raise their own challenges, today’s biggest challenges are centered on LLMs [138]. One of the most prominent limitations is the reliance on vast computational resources, which has made training and deploying LLMs financially and environmentally costly. Ref. [139] stated that models like GPT-3 and GPT-4 require extensive GPU or TPU resources, and their training processes consume substantial electricity, raising concerns about the sustainability of these methods. As a result, ref. [139] observed that there is an increasing focus on developing more efficient architectures and training practices, such as model pruning and quantization, to mitigate their environmental impact. These computational and sustainability concerns reflect the broader challenges discussed in Section 4.2 on machine learning and deep learning. In that context, different techniques were highlighted as strategies for reducing computational cost and improving energy efficiency, including distributed training, pruning, quantization, and knowledge distillation. Looking at LLMs, a pairing of those strategies and advancements in specialized hardware (GPUs, TPUs, and AI accelerators) can partially reduce the costs of scaling while enabling deployment in more resource-constrained environments.

Another critical issue, as discussed by [140], is the interpretability of LLMs, as these models often operate as “black boxes”, making it challenging to understand how they arrive at particular conclusions. This lack of transparency can be problematic in application domains that require accountability, such as healthcare and legal systems. Although researchers are exploring explainability techniques, achieving meaningful transparency without compromising performance remains an ongoing challenge [141,142]. Ethical concerns are also at the forefront of discussions surrounding AI-driven NLP. LLMs can inadvertently learn and perpetuate biases present in their training data, leading to output that may reinforce stereotypes or unfair representations. Ref. [143] illustrated that language models trained on Internet-based corpora can reflect and even amplify harmful biases related to gender, race, and nationality. Addressing these biases is complex, as it involves both improving the quality of the dataset and developing bias mitigation techniques during model training [144]. In addition, there are security and privacy concerns associated with NLP models, particularly those used in sensitive domains. LLMs trained on vast data repositories may unintentionally retain specific details from training data, posing risks of data leakage. Privacy-preserving methods, such as differential privacy, are being explored to protect against unintended data disclosure, although they add an additional layer of complexity to model development [145,146].

The transformative role of AI in NLP has opened new possibilities for processing, generating, and understanding human language with an unprecedented degree of accuracy and adaptability. Large language models, powered by transformers and enabled by techniques such as transfer learning, have redefined the boundaries of NLP, allowing for sophisticated applications across languages, domains, and industries. However, alongside these advances come significant challenges, particularly in areas of computational efficiency, ethical concerns, and the need for greater transparency in AI decision making. Future research will likely focus on addressing these limitations, with a push toward more sustainable, interpretable, and ethically robust models. As the field evolves, AI-driven NLP is positioned not only to broaden its applications but also to refine its approach to achieving responsible and inclusive language technology.

Table 4 offers a comparative mini-synthesis of NLP-related studies, tabulating specific tasks, datasets, model families, headline metrics, and dataset limitations.

4.4. AI in Software Development

The evolution of modern software systems has brought about increased complexity, which introduces challenges in maintaining quality, security, and reliability. Traditional software development practices often fail to meet the rigorous demands of modern systems, leading to the need for innovative solutions. AI has emerged as a transformative force in software development, bringing new dimensions of efficiency, accuracy, and scalability to the software development life cycle (SDLC). Through AI, organizations can optimize resources, automate repetitive tasks, improve quality assurance, and predict defects before they occur, ultimately improving the robustness and resilience of software applications [147,148,149]. The integration of AI into the SDLC is not simply a technological upgrade, but a paradigm shift that redefines how software is designed, developed, and maintained. This section delves into the impact of AI on each phase of the SDLC (planning and requirement analysis, design, development, testing, deployment, and maintenance), illustrating the various ways AI enhances both productivity and reliability. From predictive analytics in project planning to real-time defect detection in maintenance, AI’s role across the SDLC stages represents a holistic transformation of the software development process. As AI continues to evolve, its applications in software engineering are expected to deepen, further bridging the gaps between complex system requirements and the capabilities of development teams.

In the initial stage of planning and requirement analysis, AI assists project managers and development teams by providing predictive analytics and optimizing resource allocation [

148]. AI-driven project management tools analyze historical project data to forecast resource requirements, timelines, and potential risks. For example, machine learning models leverage past project information to predict resource constraints and optimize task allocation, supporting a more efficient workflow. Studies report that machine learning algorithms can estimate project durations and detect bottlenecks, improving planning accuracy from the outset [

148]. AI also supports early-stage defect prediction by identifying potential vulnerabilities in the proposed code or design structure based on historical data. By predicting areas where issues are likely to arise, AI enables teams to plan proactively and allocate resources to address these areas. For example, defect prediction models use logistic regression and neural networks to assess high-risk areas in code even before implementation begins [

149]. This proactive approach helps streamline resource planning and prioritization, minimizing risks early in the development cycle. Beyond project forecasting and defect prediction, AI-driven NLP tools enhance requirement gathering by automating the extraction and analysis of requirements from unstructured data. NLP can analyze user feedback, survey responses, and documentation, identifying common themes and customer needs with minimal manual intervention. For example, AI models can categorize requirements by priority or importance using sentiment analysis and entity recognition, ensuring that critical features receive attention first [

150]. AI-powered chatbots further support interactive requirement gathering by capturing detailed customer preferences in real time, allowing stakeholders to communicate needs directly to the development team [

151]. These tools help to ensure comprehensive requirement coverage, reducing misunderstandings and misaligned expectations later in development. NLP tools also help with consistency and conflict resolution by automatically detecting ambiguities or contradictions within requirement documents, allowing teams to clarify issues early in the SDLC [

152].
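The early-stage defect prediction described above can be illustrated with a minimal sketch of logistic regression trained by batch gradient descent. The two features (normalized planned module size and normalized past defect count), the six data points, and the function names are hypothetical and chosen only for illustration; they are not taken from the cited studies.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n_features = len(X[0])
    n = len(X)
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for one sample: p(defect) - label
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def defect_risk(w, b, x) -> float:
    """Estimated probability that a planned module will be defect-prone."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical historical modules:
# [normalized planned size, normalized past defect count] -> 1 if defective
X = [[0.1, 0.0], [0.2, 0.1], [0.3, 0.2], [0.7, 0.6], [0.8, 0.9], [0.9, 0.7]]
y = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(X, y)
low = defect_risk(w, b, [0.15, 0.05])   # small module, few past defects
high = defect_risk(w, b, [0.85, 0.80])  # large module, many past defects
print(f"low-risk module: {low:.2f}, high-risk module: {high:.2f}")
```

In practice, such a model would be trained on far larger project histories and validated on held-out data before being used to guide resource planning.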

During the design stage, AI applications focus on improving architecture and code structure, providing suggestions for efficient and robust designs. AI-based code generation and program synthesis tools offer pre-built templates, code structures, and design patterns that align with industry standards. For example, platforms such as GitHub Copilot and Amazon CodeWhisperer leverage large language models (LLMs) to assist developers by recommending modular design patterns and architectural frameworks based on functional requirements [

153,

154]. This not only speeds up the design process, but also enhances consistency in code structure. In addition, AI tools improve the quality of the design by identifying potential structural flaws in the codebase. AI-powered bug detection and code quality enhancement tools provide insight into logical inconsistencies or security vulnerabilities in the design phase [

155,

156]. These tools analyze design artifacts and preliminary code structures, ensuring that design choices adhere to best practices and are less likely to introduce errors at later stages. This AI-driven approach to design validation improves the overall robustness of software systems.

In the development stage, AI significantly enhances the efficiency and quality of coding. Automation of coding tasks reduces repetitive work, and machine learning models automate code generation, error detection, and refactoring. Genetic algorithms, neural networks, and transformer-based models such as GPT-3 assist developers by generating code snippets and suggesting fixes for code smells and inefficiencies [

157,

158,

159]. This automation minimizes manual intervention, helping developers stay focused on high-value coding activities. AI-driven code generation and program synthesis tools continue to play a central role in implementation, offering code suggestions based on previous successful implementations. These models interpret user input and generate functionally complete code, enhancing productivity. Various test-based and metric-based methods are used to assess the quality and accuracy of AI-generated code. Newer approaches such as CodeScore place more emphasis on functional correctness than traditional metrics do [

160,

161,

162]. This allows developers to incorporate AI-generated code into projects with greater confidence. Real-time defect prediction further assists during development by analyzing code in progress to identify areas prone to errors. AI-based defect prediction models detect potential issues in the codebase, allowing developers to resolve them early and avoid costly rework. For example, machine learning models continuously monitor code changes, flagging high-risk segments that may require closer inspection, thus streamlining the debugging process and improving code reliability [

163].
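The idea of judging generated code by functional correctness, rather than by textual similarity to a reference, can be sketched with a plain execution-based check. This is not CodeScore itself, which is a learned metric; the function `functional_pass_rate`, the two candidate implementations, and the test cases are hypothetical and serve only to illustrate the principle.

```python
from typing import Any, Callable, Iterable, Tuple

def functional_pass_rate(candidate: Callable[..., Any],
                         cases: Iterable[Tuple[tuple, Any]]) -> float:
    """Fraction of test cases for which candidate(*args) equals the expected value.

    An exception raised by the candidate counts as a failed case.
    """
    cases = list(cases)
    if not cases:
        raise ValueError("at least one test case is required")
    passed = 0
    for args, expected in cases:
        try:
            if candidate(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash is treated as a functional failure
    return passed / len(cases)

# Two hypothetical generated candidates for "return the absolute value of x"
def candidate_ok(x):
    return x if x >= 0 else -x

def candidate_buggy(x):
    return x  # ignores negative inputs

# (arguments, expected result) pairs
cases = [((3,), 3), ((-3,), 3), ((0,), 0), ((-7,), 7)]

print(functional_pass_rate(candidate_ok, cases))     # 1.0
print(functional_pass_rate(candidate_buggy, cases))  # 0.5
```

Both candidates look almost identical as text, yet only one is functionally correct, which is why execution-oriented evaluation can diverge from similarity-based metrics.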

Testing is one of the most impactful areas for AI applications within the SDLC. Software testing benefits from AI through automated test case generation, prioritization, and regression testing. By analyzing historical test results, machine learning models generate targeted test cases that focus on high-risk areas, reducing time and resource expenditure [

164,

165]. Reinforcement learning algorithms have shown effectiveness in dynamically prioritizing test cases, adjusting execution based on previous failure patterns to ensure critical areas are rigorously tested. AI-driven automation also simplifies regression testing, particularly in continuous integration environments. By identifying code segments likely affected by recent changes, AI prioritizes testing efforts to focus on these segments, ensuring that software updates do not introduce new bugs [

166]. This prioritization ensures efficient test coverage without redundant effort, which is especially valuable in large, complex systems. Bug detection and code quality enhancement during testing rely heavily on AI-driven static and dynamic code analysis tools. These tools identify code logic issues, performance bottlenecks, and security vulnerabilities before deployment. For example, the study of [

167] shows that AI models analyze the structure, logic, and execution paths of the code to detect anomalies, which in turn helps developers achieve a higher standard of quality before the software reaches users.
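The history-based prioritization of test cases discussed in this paragraph can be sketched with a simple incremental value update, in the spirit of a bandit-style reward signal. This is a deliberately simplified heuristic, not a full reinforcement learning agent and not the method of any cited study; the class name, the learning rate `alpha`, and the test names are assumptions made for illustration.

```python
from typing import Dict, Iterable, List

class FailureHistoryPrioritizer:
    """Ranks tests by an exponentially weighted estimate of their failure rate."""

    def __init__(self, test_names: Iterable[str], alpha: float = 0.5):
        self.alpha = alpha  # weight given to the most recent cycle
        self.value: Dict[str, float] = {name: 0.0 for name in test_names}

    def order(self) -> List[str]:
        """Tests sorted by estimated failure likelihood, highest first."""
        return sorted(self.value, key=lambda n: (-self.value[n], n))

    def update(self, results: Dict[str, bool]) -> None:
        """results maps test name -> True if the test failed in this cycle."""
        for name, failed in results.items():
            reward = 1.0 if failed else 0.0
            # Move the estimate a fraction alpha toward the observed outcome
            self.value[name] += self.alpha * (reward - self.value[name])

prioritizer = FailureHistoryPrioritizer(["test_login", "test_checkout", "test_search"])
prioritizer.update({"test_login": False, "test_checkout": True, "test_search": False})
prioritizer.update({"test_login": False, "test_checkout": True, "test_search": True})
print(prioritizer.order())  # ['test_checkout', 'test_search', 'test_login']
```

A learned policy would additionally take features such as test duration or recently changed files into account; the sketch only shows how feedback from earlier cycles can reorder execution.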

In the deployment phase, AI plays a critical role in facilitating smooth transitions from development to production through automation in continuous integration and continuous deployment (CI/CD) pipelines. Ref. [

168] reports that reinforcement learning models are used to prioritize test cases within CI/CD frameworks, dynamically adjusting the test execution order based on identified risks from previous tests. This targeted prioritization ensures that high-risk code segments are thoroughly evaluated prior to deployment, which helps reduce the chances of critical failures in production. As noted in the study conducted by [

169], reinforcement learning-based CI/CD tools adapt to changing project needs, thus minimizing downtime and improving software reliability. This adaptability makes AI-driven CI/CD pipelines particularly valuable in agile development environments where frequent updates and rapid deployments are the norm. AI also improves the efficiency of deployment by continuously monitoring and analyzing system performance, detecting potential problems early in the deployment process [

170]. For example, AI-based anomaly detection models in CI/CD environments analyze metrics such as response time, resource utilization, and error rates to identify unusual patterns that may signal underlying issues. This real-time feedback allows teams to proactively address issues before they impact end users, ensuring a stable deployment. As outlined by [

171], such predictive capabilities are especially beneficial in cloud-native applications, where scaling and resource allocation must be continuously optimized. AI-driven monitoring tools can dynamically adjust the infrastructure based on anticipated demand, thus reducing latency and maintaining performance even during peak loads.
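The metric-based anomaly detection described in this paragraph can be illustrated with a rolling z-score check on a single deployment metric. This statistical baseline stands in for the learned models discussed in the cited work; the function name, window size, threshold, and response-time values are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence

def detect_anomalies(values: Sequence[float], window: int = 5,
                     threshold: float = 3.0) -> List[int]:
    """Return indices whose value deviates from the mean of the preceding
    `window` samples by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is treated as unusual
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical response times (ms) sampled after a deployment
response_ms = [120, 118, 123, 121, 119, 122, 120, 480, 121, 119]
print(detect_anomalies(response_ms))  # [7]
```

Note that the spike at index 7 inflates the window statistics for the following samples, so a production monitor would typically use robust statistics or exclude flagged points from the history.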

In the maintenance phase, AI provides continuous monitoring and predictive capabilities to address issues proactively, helping to reduce downtime and maintain software performance over time. AI-driven bug detection and code quality enhancement tools monitor applications in real time, identifying code anomalies, security vulnerabilities, and performance issues as they arise [

155,

156]. These tools employ machine learning algorithms to analyze system metrics, such as response times, error rates, and resource usage patterns, enabling the detection of subtle emerging problems before they escalate. For instance, models trained on historical bug data and system usage patterns can detect recurring issues or new patterns indicative of potential defects, ensuring that maintenance efforts are prioritized for the most pressing concerns. Ref. [

170] shows that continuous monitoring allows developers to act quickly on issues, minimize impact on end users, and support high availability. In addition to monitoring, defect prediction models provide critical insight during maintenance by forecasting which parts of the code are likely to experience degradation or require updates. These AI models use historical data, including past bug reports and system logs, to predict high-risk areas in the code that may require proactive maintenance. For instance, machine learning models in maintenance environments can prioritize code segments that exhibit patterns of instability, allowing teams to preemptively address issues [

172]. Such predictive maintenance approaches help optimize resource allocation by focusing efforts on areas with the highest probability of failure, thus preventing sudden breakdowns and prolonging the useful life of the system. In addition, AI-powered self-healing systems are an emerging trend, where models can autonomously identify, isolate, and resolve minor issues, further reducing the need for human intervention [

173]. This proactive approach not only reduces manual workload but also improves software reliability, setting the stage for increasingly autonomous and resilient software ecosystems.

Table 5 summarizes selected AI methods and their contributions to the different stages of the SDLC.

Despite AI’s advancements in software development, several challenges and limitations persist. One primary challenge is the test oracle problem in AI-driven testing, where determining correct outputs is difficult due to the black-box nature of AI [

179]. In complex models, the expected outcomes are often unclear, complicating validation efforts and increasing reliance on human verification. Ref. [

179] highlights this limitation, especially when AI models operate with minimal transparency, making it difficult for developers to establish robust testing criteria. Another significant issue is data quality and diversity, particularly in defect prediction and CI/CD pipeline models [

155]. Predictive models are highly dependent on the quality and scope of their training data, and biases or limitations within these datasets can result in unreliable outcomes. This concern is noted in the study of [

180], which underscores the need for high-quality representative datasets to avoid biased predictions that could negatively impact software quality. Addressing these limitations requires an emphasis on data governance and bias mitigation techniques to ensure that AI models are built on comprehensive, diverse data. Emerging research suggests that explainable AI (XAI) will play a pivotal role in addressing transparency issues, particularly for applications in high-stakes software systems where understanding AI-driven decisions is essential. For example, refs. [

140,

181,

182] discuss how XAI can help bridge the interpretability gap, potentially mitigating the test oracle problem by making the model output more understandable. In addition to XAI, AI-driven DevOps is also expected to evolve with increasingly sophisticated real-time feedback and monitoring systems, enabling autonomous adjustments to deployment parameters. This trend aligns with the findings of [

170], where feedback mechanisms allow real-time adjustments in CI/CD workflows, ensuring continuous stability and efficiency.

Hybrid AI approaches, which combine rule-based and machine learning methods, offer promising improvements to handle complex edge-case scenarios. The research of [

172] points to the potential of hybrid approaches that improve the robustness of the model by integrating structured rule-based logic. In addition, self-healing systems represent an innovative direction, where AI autonomously identifies and corrects problems, reducing reliance on manual intervention during the maintenance phase. Early studies suggest that self-healing capabilities can streamline operations, particularly in large-scale applications with critical uptime requirements [

173,

183]. Finally, as ethical considerations gain importance, regulatory frameworks and ethical AI practices will become essential in guiding AI deployment within software development [

184,

185,

186]. The importance of transparency, fairness, and accountability in AI-driven systems should be highlighted, especially as AI assumes more autonomous roles in software engineering. This focus on ethical standards and regulatory compliance will help ensure that AI applications not only enhance efficiency but also adhere to standards that prioritize user safety and ethical integrity.
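The hybrid approach mentioned at the start of this paragraph, in which rule-based logic is combined with a learned model, can be sketched as follows. Explicit rules handle known edge cases deterministically, and a model score is consulted only when no rule applies. The rule, the field names of the change record, the verdict labels, and the toy scoring function are all hypothetical and stand in for a trained model.

```python
from typing import Callable, Optional, Sequence

Rule = Callable[[dict], Optional[str]]

def rule_auth_without_tests(change: dict) -> Optional[str]:
    """Hard rule: changes to authentication code must come with tests."""
    if change["touches_auth"] and change["tests_added"] == 0:
        return "block"
    return None  # rule does not apply

def toy_model_score(change: dict) -> float:
    """Placeholder for a learned risk model: larger changes score higher."""
    return min(1.0, change["lines_changed"] / 400)

def hybrid_decision(change: dict, rules: Sequence[Rule],
                    model_score: Callable[[dict], float],
                    threshold: float = 0.5) -> str:
    # Rules take precedence so that known edge cases are handled predictably
    for rule in rules:
        verdict = rule(change)
        if verdict is not None:
            return verdict
    # Otherwise fall back to the statistical model
    return "review" if model_score(change) >= threshold else "approve"

rules = [rule_auth_without_tests]
risky_auth = {"touches_auth": True, "tests_added": 0, "lines_changed": 10}
large_change = {"touches_auth": False, "tests_added": 0, "lines_changed": 300}
small_change = {"touches_auth": False, "tests_added": 2, "lines_changed": 40}

print(hybrid_decision(risky_auth, rules, toy_model_score))    # block
print(hybrid_decision(large_change, rules, toy_model_score))  # review
print(hybrid_decision(small_change, rules, toy_model_score))  # approve
```

Because the rule layer is explicit, its decisions are directly auditable, which also connects to the transparency and accountability concerns raised above.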

Table 6 offers a comparative mini-synthesis of software-development-related studies, tabulating specific tasks, datasets, model families, headline metrics, and dataset limitations.