Enhancement in Three-Dimensional Depth with Bionic Image Processing

Abstract

1. Introduction

2. Related Work

3. Methods

3.1. Gabor Filtering for Texture Enhancement

| Algorithm 1: Gabor filtering for texture enhancement |
|---|
| Input: gray_img, orientations |
| Output: texture_enhanced |
| 1: energy_map = zeros(gray_img.shape) # Initialize texture energy accumulator |
| 2: for θ in orientations: # Iterate over filter orientations |
| 3: gabor_real = generate_gabor(θ, type = 'real', freq = 0.15, sigma = 2) # Real part (even symmetry) |
| 4: gabor_imag = generate_gabor(θ, type = 'imag', freq = 0.15, sigma = 2) # Imaginary part (odd symmetry) |
| 5: real_resp = convolve(gray_img, gabor_real) # Convolve for real response |
| 6: imag_resp = convolve(gray_img, gabor_imag) # Convolve for imaginary response |
| 7: dir_energy = sqrt(real_resp² + imag_resp²) # Compute texture energy for current direction |
| 8: energy_map = max(energy_map, dir_energy) # Keep maximum response across directions |
| 9: end for |
| 10: enhanced_edges = 1.5 × energy_map # Amplify edges (tunable gain) |
| 11: texture_enhanced = gray_img + enhanced_edges # Blend original with enhanced textures |
| 12: return clamp(texture_enhanced, 0, 255) # Ensure valid pixel values |
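The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the source does not define `generate_gabor` or `convolve`, so the kernel size (9 × 9), the interpretation of `freq` as cycles per pixel, the DC removal and L1 normalization of the kernels, and the edge-replicated border handling are all assumptions of this sketch. The helper names `gabor_pair` and `filter2d` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20


def gabor_pair(theta, freq=0.15, sigma=2.0, size=9):
    """Even (cosine) and odd (sine) Gabor kernels for one orientation.

    ASSUMPTION: size, DC removal and L1 normalization are choices of this
    sketch; the source only gives freq = 0.15 and sigma = 2.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_rot = x * np.cos(theta) + y * np.sin(theta)  # axis along the carrier
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    phase = 2.0 * np.pi * freq * x_rot
    even = envelope * np.cos(phase)
    even -= even.mean()  # zero mean, so flat regions give no response
    odd = envelope * np.sin(phase)
    return even / np.abs(even).sum(), odd / np.abs(odd).sum()


def filter2d(img, kernel):
    """Same-size 2-D convolution with edge-replicated borders."""
    pad = kernel.shape[0] // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, kernel.shape)
    # Flip the kernel so this is a convolution rather than a correlation.
    return np.einsum("ijkl,kl->ij", windows, kernel[::-1, ::-1])


def gabor_texture_enhance(gray_img, orientations, gain=1.5):
    gray = np.asarray(gray_img, dtype=float)
    energy_map = np.zeros_like(gray)
    for theta in orientations:
        even, odd = gabor_pair(theta)
        real_resp = filter2d(gray, even)
        imag_resp = filter2d(gray, odd)
        dir_energy = np.sqrt(real_resp**2 + imag_resp**2)
        energy_map = np.maximum(energy_map, dir_energy)  # max over directions
    return np.clip(gray + gain * energy_map, 0, 255)


# Usage: a vertical step edge, filtered at four orientations.
orientations = np.linspace(0.0, np.pi, 4, endpoint=False)
img = np.zeros((32, 32))
img[:, 16:] = 100.0
out = gabor_texture_enhance(img, orientations)
```

Taking the magnitude of the even and odd responses makes the energy insensitive to the exact phase of an edge or texture, which is why both kernels are applied for each orientation.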

3.2. Retinex-Based Illumination Correction

| Algorithm 2: Retinex-based illumination correction |
|---|
| Input: rgb_img, low_light_thresh |
| Output: enhanced_rgb |
| 1: avg_lum = mean(rgb_to_gray(rgb_img)) # Compute average luminance (0–255) |
| 2: if avg_lum < low_light_thresh: # Adapt to low-light conditions |
| 3: blur_strength = 6 # Increase blur for robust illumination estimation |
| 4: reflect_weight = 0.75 # Prioritize detail (reflectance) over original |
| 5: else: |
| 6: blur_strength = 3 # Default blur for normal lighting |
| 7: reflect_weight = 0.5 # Balance original and enhanced details |
| 8: end if |
| 9: illum_map = gaussian_blur(rgb_img, blur_strength, separable = True) # Estimate illumination |
| 10: reflect_map = rgb_img / (illum_map + ε) # Extract detail (reflectance) component |
| 11: enhanced_base = (1 − reflect_weight) × rgb_img + reflect_weight × reflect_map # Blend components |
| 12: enhanced_rgb = gamma_correct(enhanced_base, gamma) # Enhance dark regions |
| 13: return clamp(enhanced_rgb, 0, 255) # Ensure valid pixel values |
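A minimal NumPy sketch of Algorithm 2 is given below, under several stated assumptions. The source does not specify the grayscale conversion, the meaning of `blur_strength`, the value of `gamma`, or how the unitless reflectance ratio is brought back to the 0–255 range before blending. This sketch assumes Rec. 601 luma weights, treats `blur_strength` as the Gaussian standard deviation in pixels, uses a hypothetical default `gamma = 0.8`, and rescales the reflectance by the mean illumination so that a uniformly lit image is left unchanged by the blend.

```python
import numpy as np


def rgb_to_gray(rgb):
    # ASSUMPTION: Rec. 601 luma weights; the source does not name a formula.
    return rgb @ np.array([0.299, 0.587, 0.114])


def gaussian_blur(img, sigma):
    """Separable Gaussian blur along axes 0 and 1 with edge-replicated borders."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x**2 / (2.0 * sigma**2))
    k /= k.sum()
    out = img
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (radius, radius)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, k, mode="valid"), axis, padded
        )
    return out


def retinex_correct(rgb_img, low_light_thresh, gamma=0.8, eps=1e-6):
    rgb = np.asarray(rgb_img, dtype=float)
    avg_lum = rgb_to_gray(rgb).mean()
    if avg_lum < low_light_thresh:  # low light: stronger blur, more detail
        blur_strength, reflect_weight = 6, 0.75
    else:
        blur_strength, reflect_weight = 3, 0.5
    illum_map = gaussian_blur(rgb, blur_strength)
    reflect_map = rgb / (illum_map + eps)
    # ASSUMPTION: bring the unitless ratio back to pixel scale.
    reflect_scaled = reflect_map * illum_map.mean()
    base = (1 - reflect_weight) * rgb + reflect_weight * reflect_scaled
    base = np.clip(base, 0, 255)
    # Gamma < 1 lifts dark regions; the value is an assumption of this sketch.
    enhanced = 255.0 * (base / 255.0) ** gamma
    return np.clip(enhanced, 0, 255)


# Usage: a dark frame with a brighter patch, threshold chosen for illustration.
frame = np.full((24, 24, 3), 30.0)
frame[8:16, 8:16] = 90.0
result = retinex_correct(frame, low_light_thresh=80)
```

Dividing by a heavily blurred copy removes slowly varying illumination while keeping local detail, so a larger blur in low light makes the illumination estimate less sensitive to noise.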

3.3. RGB Adaptive Adjustment

| Algorithm 3: RGB adaptive adjustment |
|---|
| Input: rgb_img, depth_map, target_ratios |
| Output: adjusted_rgb |
| 1: original_gray = rgb_to_gray(rgb_img) # Compute original luminance for brightness conservation |
| 2: current_ratios = mean(rgb_img, axis = (0, 1)) / 255.0 # Normalized RGB channel proportions (0–1) |
| 3: adjust_factors = target_ratios / (current_ratios + ε) # Channel-wise correction factors (avoid division by zero) |
| 4: adaptive_strength = 0.8 × exp(−0.5 × (1 − depth_map)) # Depth-dependent gain (stronger for nearer objects) |
| 5: for c in 0 to 2: # Iterate over R (0), G (1), B (2) channels |
| 6: rgb_img[…, c] = rgb_img[…, c] × adjust_factors[c] × adaptive_strength # Apply adaptive channel adjustment |
| 7: end for |
| 8: adjusted_gray = rgb_to_gray(rgb_img) # Compute luminance after channel correction |
| 9: luminance_factor = original_gray / (adjusted_gray + ε) # Factor to preserve original brightness |
| 10: adjusted_rgb = rgb_img × luminance_factor[…, None] # Apply brightness conservation (broadcast to three channels) |
| 11: return clamp(adjusted_rgb, 0, 255) # Ensure pixel values are within a valid range (0–255) |
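Algorithm 3 can be sketched in NumPy as shown below. The sketch rests on assumptions that the source does not confirm: the multiplication signs missing from the listing are read as element-wise products, the channel update is read as scaling the existing channel values, `depth_map` is taken to be normalized to [0, 1] with 1 denoting the nearest point (consistent with the "stronger for nearer objects" comment), and Rec. 601 luma weights stand in for `rgb_to_gray`.

```python
import numpy as np

# ASSUMPTION: Rec. 601 luma weights; the source does not define rgb_to_gray.
LUMA = np.array([0.299, 0.587, 0.114])


def rgb_adaptive_adjust(rgb_img, depth_map, target_ratios, eps=1e-6):
    rgb = np.asarray(rgb_img, dtype=float).copy()  # do not modify the input
    depth = np.asarray(depth_map, dtype=float)     # ASSUMPTION: in [0, 1]
    original_gray = rgb @ LUMA
    current_ratios = rgb.mean(axis=(0, 1)) / 255.0
    adjust_factors = np.asarray(target_ratios, dtype=float) / (current_ratios + eps)
    adaptive_strength = 0.8 * np.exp(-0.5 * (1.0 - depth))
    for c in range(3):  # R, G, B
        rgb[..., c] *= adjust_factors[c] * adaptive_strength
    adjusted_gray = rgb @ LUMA
    luminance_factor = original_gray / (adjusted_gray + eps)
    adjusted_rgb = rgb * luminance_factor[..., None]  # broadcast to 3 channels
    return np.clip(adjusted_rgb, 0, 255)


# Usage: remove a blue cast by targeting equal channel proportions.
img = np.empty((8, 8, 3))
img[...] = [80.0, 100.0, 140.0]
depth = np.linspace(0.0, 1.0, 64).reshape(8, 8)
balanced = rgb_adaptive_adjust(img, depth, target_ratios=[0.4, 0.4, 0.4])
```

Under this literal multiplicative reading, the depth-dependent gain is the same for all three channels of a pixel, so the per-pixel brightness conservation step divides it back out and the output depends on depth only through `eps`. If depth is meant to modulate the strength of the color correction, it would have to enter as a per-channel weight, which the source does not specify.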

4. Experimental Designs

4.1. Platform Configuration

4.2. Semi-Global Block Matching Algorithm

4.3. Evaluation

- RMSE: Root mean square error. RMSE quantifies the absolute accuracy of a depth map or disparity map as the root mean square of the pixel-wise deviation between the predicted map and the reference map. Because each deviation is squared, the metric is sensitive to outliers. Lower values indicate closer pixel-level agreement. It is suitable for evaluating the global accuracy of geometric reconstruction, such as how faithfully object shapes are restored in a VR scene.

- AbsRel: Absolute relative error. AbsRel measures the depth estimation error relative to the true depth, so the normalization weakens the influence of the absolute depth scale. Its core value lies in weighing near-range and far-range errors evenly. Lower values indicate that the perceived depth conforms more closely to the real spatial relationships. It is the key indicator for evaluating stereo perception in VR.

- SSIM: Structural similarity. SSIM evaluates the visual fidelity of an image along three dimensions (luminance, contrast, and structure) and models the sensitivity of the human eye to structural information. The closer the value is to 1, the closer the image is visually to the reference image. It is especially good at capturing distortions that affect subjective image quality, such as blur and noise.
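The three indicators can be illustrated with the following NumPy sketch. It uses the standard textbook definitions, which may differ in detail from the formulas used in the experiments. In particular, `ssim_global` is a simplification that computes SSIM once from whole-image statistics instead of averaging over local windows, and the constants 0.01 and 0.03 and the data range of 255 are the conventional defaults, assumed here.

```python
import numpy as np


def rmse(pred, ref):
    """Root mean square error between a predicted and a reference map."""
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def abs_rel(pred, ref, eps=1e-6):
    """Mean absolute error relative to the reference depth."""
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    valid = ref > eps  # skip pixels without a usable reference value
    return float(np.mean(np.abs(pred[valid] - ref[valid]) / ref[valid]))


def ssim_global(x, y, data_range=255.0):
    """SSIM from whole-image statistics (ASSUMPTION: no sliding window)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx**2 + my**2 + c1) * (vx + vy + c2)
    return float(num / den)


# Usage: a prediction that is uniformly 2 units too large.
ref = np.array([[10.0, 20.0], [30.0, 40.0]])
pred = ref + 2.0
print(rmse(pred, ref), abs_rel(pred, ref), ssim_global(pred, ref))
```

The example shows why the two error metrics complement each other: a constant offset gives the same RMSE contribution at every pixel, whereas AbsRel penalizes it more heavily where the reference depth is small.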

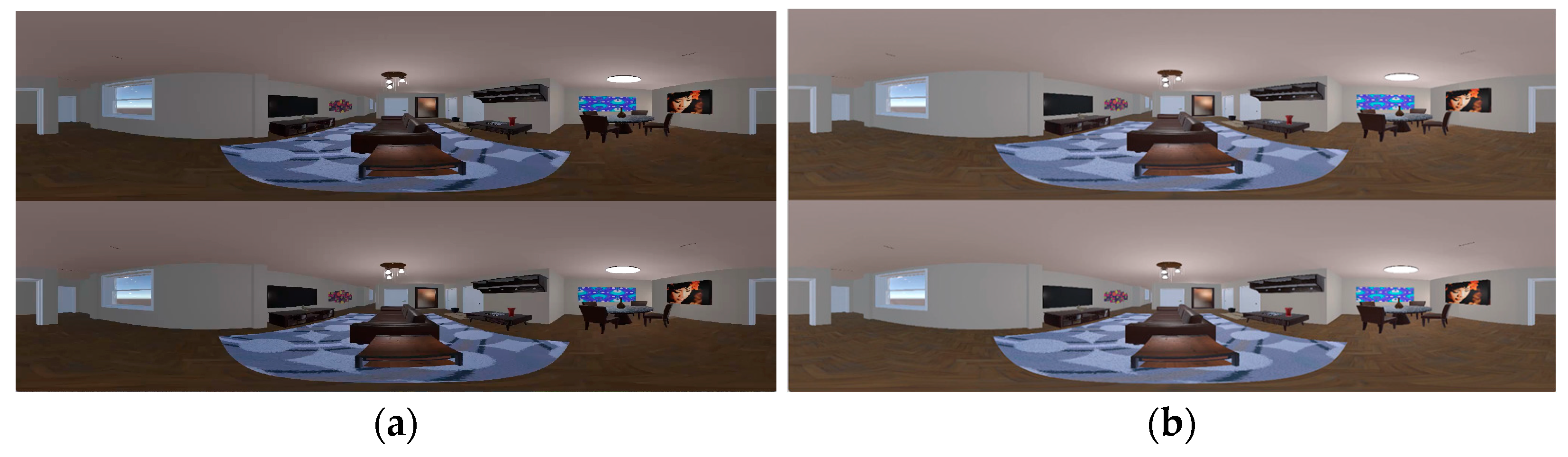

5. Results

5.1. Performance of Individual Algorithms

5.2. Integrated Bionic Algorithm

6. Discussion

6.1. Research Achievements

6.2. Limitations

6.3. Comparison with Deep-Learning Methods

7. Conclusions

- Designed a visual cortex cell simulation algorithm based on a Gabor filter, enhancing the extraction of texture features.

- Designed a human visual effects simulation algorithm based on an improved Retinex model, addressing the problem of brightness balance under complex lighting conditions.

- Developed an RGB adaptive adjustment algorithm, which enhances color naturalness and stereoscopic effect through color temperature compensation and depth perception enhancement.

- Achieved efficient modular system integration on the Unity platform, meeting the real-time requirements of VR applications.

- Verified the effectiveness of the algorithm, and its advantages over mainstream algorithms, through a combination of subjective and objective evaluation and side-by-side comparison.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

References

1. Kuasakunrungroj, A.; Kondo, T.; Rujikietgumjorn, S.; Kaneko, H.; Lie, W.-N. Comparison of 3D point cloud processing and CNN prediction based on RGBD images for bionic-eye’s navigation. In Proceedings of the 4th International Conference on Information Technology (InCIT), Bangkok, Thailand, 24–25 October 2019.

2. Hu, H.; Chen, C.P.; Li, G.; Jin, Z.; Chu, Q.; Han, B.; Zou, S.P. Bionic vision processing for epiretinal implant-based metaverse. ACS Appl. Opt. Mater. 2024, 2, 1269–1276.

3. Yang, M. Multi-feature target tracking algorithm of bionic 3D image in virtual reality. J. Discret. Math. Sci. Cryptogr. 2018, 21, 763–769.

4. Xi, N.; Ye, J.; Chen, C.P.; Chu, Q.; Hu, H.; Zou, S.P. Implantable metaverse with retinal prostheses and bionic vision processing. Opt. Express 2023, 31, 1079–1091.

5. Zhang, P.; Su, L.; Cui, M.; Cui, N. Research on 3D reconstruction based on bionic head-eye cooperation. In Proceedings of the 2020 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 13–16 October 2020.

6. Chu, Q.; Chen, C.P.; Hu, H.; Wu, X.; Han, B. iHand: Hand recognition-based text input method for wearable devices. Computers 2024, 13, 80.

7. Li, L.; Chen, C.P.; Wang, L.; Liang, K.; Bao, W. Exploring artificial intelligence in smart education: Real-time classroom behavior analysis with embedded devices. Sustainability 2023, 15, 7940.

8. Li, H.; Wang, W.; Ma, W.; Zhang, G.; Wang, Q.; Qu, J. Design and analysis of depth cues on depth perception in interactive mixed reality simulation systems. J. Soc. Inf. Disp. 2021, 30, 87–102.

9. Lew, W.H.; Coates, D.R. The effect of target and background texture on relative depth discrimination in a virtual environment. Virtual Real. 2024, 28, 103.

10. Gong, S.-M.; Liou, F.-Y.; Lee, W.-Y. The effect of lightness, chroma, and hue on depth perception. Color Res. Appl. 2023, 48, 793–800.

11. De Paolis, L.T.; De Luca, V. Augmented visualization with depth perception cues to improve the surgeon’s performance in minimally invasive surgery. Med. Biol. Eng. Comput. 2019, 57, 995–1013.

12. Salim, A.Z.; Abdul-Kareem, L.I. A review of advances in bio-inspired visual models using event- and frame-based sensors. Adv. Technol. Innov. 2025, 10, 44–57.

13. Guo, M.; Gao, H.; Liu, Y.; Song, W.; Yang, S.; Wang, Y. Experimental research on depth perception of comfortable interactions in virtual reality. J. Soc. Inf. Disp. 2025, 33, 263–273.

14. Chen, S.-C.; Duh, H. Mixed reality in education: Recent developments and future trends. In Proceedings of the 18th IEEE International Conference on Advanced Learning Technologies (ICALT), Mumbai, India, 9–13 July 2018.

15. Gabbard, J.L.; Swan, J.E., II; Hix, D. The effects of text drawing styles, background textures, and natural lighting on text legibility in outdoor augmented reality. PRESENCE-Virtual Augment. Real. 2006, 15, 16–32.

16. Gong, M.; Zhou, Z.; Ma, J. Change detection in synthetic aperture radar images based on image fusion and fuzzy clustering. IEEE Trans. Image Process. 2012, 21, 2141–2151.

17. Murin, E.A.; Sorokin, D.V.; Krylov, A.S. Method for semantic image segmentation based on the neural network with Gabor filters. Program. Comput. Softw. 2025, 51, 160–166.

18. Hu, Y.; Kundi, M. A multi-scale Gabor filter-based method for enhancing video images in distance education. Mob. Netw. Appl. 2023, 28, 950–959.

19. Kamanga, I.A. Improved edge detection using variable thresholding technique and convolution of Gabor with Gaussian filters. Signal Image Process. Int. J. 2022, 13, 1–15.

20. Li, C.; He, C. Variable fractional order-based structure-texture aware Retinex model with dynamic guidance illumination. Digit. Signal Process. 2025, 161, 105140.

21. Anila, V.S.; Nagarajan, G.; Perarasi, T. Low-light image enhancement using retinex based an extended ResNet model. Multimed. Tools Appl. 2024, 84, 29143–29158.

22. Jiang, Y.; Zhu, J.; Li, L.; Ma, H. A joint network for low-light image enhancement based on Retinex. Cogn. Comput. 2024, 16, 3241–3259.

23. Chen, L.; Liu, Y.; Li, G.; Hong, J.; Li, J.; Peng, J. Double-function enhancement algorithm for low-illumination images based on retinex theory. J. Opt. Soc. Am. A 2023, 40, 316–325.

24. Chen, P.-D.; Zhang, J.; Gao, Y.-B.; Fang, Z.-J.; Hwang, J.-N. A lightweight RGB superposition effect adjustment network for low-light image enhancement and denoising. Eng. Appl. Artif. Intell. 2024, 127, 107234.

25. Liu, C.; Ma, S.; Liu, Y.; Wang, Y.; Song, W. Depth perception in optical see-through augmented reality: Investigating the impact of texture density, luminance contrast, and color contrast. IEEE Trans. Vis. Comput. Graph. 2024, 30, 7266–7276.

26. Hildreth, E.C.; Royden, C.S. Integrating multiple cues to depth order at object boundaries. Atten. Percept. Psychophys. 2011, 73, 2218–2235.

27. Ivanov, I.V.; Kramer, D.J.; Mullen, K.T. The role of the foreshortening cue in the perception of 3D object slant. Vis. Res. 2014, 94, 41–50.

28. Kruijff, E.; Swan, J.E., II; Feiner, S. Perceptual issues in augmented reality revisited. In Proceedings of the 9th IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Seoul, Republic of Korea, 13–16 October 2010.

29. Ping, J.; Weng, D.; Liu, Y.; Wang, Y. Depth perception in shuffleboard: Depth cues effect on depth perception in virtual and augmented reality system. J. Soc. Inf. Disp. 2020, 28, 164–176.

30. Rossing, C.; Hanika, J.; Lensch, H. Real-time disparity map-based pictorial depth cue enhancement. Comput. Graph. Forum 2012, 31, 275–284.

31. Li, Y.-Z.; Zheng, S.-J.; Tan, Z.-X.; Cao, T.; Luo, F.; Xiao, C.-X. Self-supervised monocular depth estimation by digging into uncertainty quantification. J. Comput. Sci. Technol. 2023, 38, 510–525.

32. Fu, H.; Gong, M.; Wang, C.; Batmanghelich, K.; Tao, D. Deep ordinal regression network for monocular depth estimation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

33. Li, Z.; Chen, Z.; Liu, X.; Jiang, J. DepthFormer: Exploiting Long-Range Correlation and Local Information for Accurate Monocular Depth Estimation. Mach. Intell. Res. 2023, 20, 837–854.

34. Sun, Q.; Tang, Y.; Zhang, C.; Zhao, C.; Qian, F.; Kurths, J. Unsupervised estimation of monocular depth and VO in dynamic environments via hybrid masks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 2023–2033.

| Parameter | Value |
|---|---|
| Camera position | Left (−0.0325, 0, 0); Right (0.0325, 0, 0) |
| Vertical field of view | 111° |
| Near clipping plane | 0.1 |
| Far clipping plane | 50 |

| Indicator | Sensitivity | Limitation |
|---|---|---|
| RMSE | Sensitive to large errors | Ignores local error distribution |
| AbsRel | Sensitive to relative errors | Sensitive to low true values |
| SSIM | Sensitive to structural similarity | Computational complexity |

| Algorithm | RMSE | AbsRel | SSIM |
|---|---|---|---|
| Original | 18.8073 | 0.2547 | 0.8815 |
| Gabor | 18.1284 | 0.2484 | 0.9011 |
| Retinex | 18.5324 | 0.2529 | 0.8931 |
| RGB | 18.2120 | 0.2526 | 0.8875 |

| Algorithm | RMSE | AbsRel | SSIM |
|---|---|---|---|
| Original | 17.9192 | 0.3320 | 0.9185 |
| Gabor | 17.5821 | 0.3292 | 0.9194 |
| Retinex | 17.4390 | 0.3255 | 0.9199 |
| RGB | 17.5468 | 0.3294 | 0.9189 |

| Algorithm | Advantage | Limitation |
|---|---|---|
| SSAO | Enhances details and realism; stable performance in complex scenes | Possible noise and high computational complexity |
| SSR | Provides realistic reflection effects; stable performance in complex scenes | Poor performance and high computational complexity for non-specular reflective materials |
| SH | High computational efficiency and good dynamic lighting effect | Low lighting accuracy and poor effect in complex scenes |

| Algorithm | RMSE | AbsRel | SSIM |
|---|---|---|---|
| SSAO [28] | 17.9711 | 0.2384 | 0.8838 |
| SSR [29] | 18.7345 | 0.2455 | 0.8827 |
| SH [30] | 18.5761 | 0.2455 | 0.8875 |
| Bionic | 18.0125 | 0.2401 | 0.9069 |

| Algorithm | RMSE | AbsRel | SSIM |
|---|---|---|---|
| SSAO | 17.8451 | 0.3316 | 0.9195 |
| SSR | 17.5344 | 0.3267 | 0.9193 |
| SH | 17.6433 | 0.3268 | 0.9188 |
| Bionic | 17.1425 | 0.3217 | 0.9214 |

| Perception Dimension | Original | SSAO | Bionic |
|---|---|---|---|
| Depth perception clarity | 68.2 ± 9.1 | 74.3 ± 8.2 | 77.6 ± 5.4 |
| Stereoscopic/vertical depth | 65.4 ± 10.2 | 72.8 ± 8.7 | 77.1 ± 7.9 |
| Object contour clarity | 70.1 ± 8.5 | 80.2 ± 6.9 | 78.6 ± 8.1 |
| Realistic scene | 65.8 ± 9.3 | 73.5 ± 8.1 | 80.9 ± 5.7 |
| Visual comfort | 71.2 ± 8.5 | 79.3 ± 7.8 | 91.5 ± 7.3 |
| Artifact perception | 80.6 ± 7.2 | 81.2 ± 6.0 | 82.7 ± 8.5 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Y.; Chen, C.P.; Han, B.; Yang, Y. Enhancement in Three-Dimensional Depth with Bionic Image Processing. Computers 2025, 14, 340. https://doi.org/10.3390/computers14080340