Leveraging Contrastive Semantics and Language Adaptation for Robust Financial Text Classification Across Languages

Abstract

1. Introduction

- A contrastive-learning-based semantic alignment mechanism is proposed to make the model more robust in recognizing sentiment equivalence across languages; removing it lowers accuracy from 75.8 to 70.1 in the ablation study;

- A language-adaptive modulation mechanism is introduced to improve cross-lingual transferability and monolingual performance together; removing it lowers accuracy from 75.8 to 69.2;

- The effectiveness and generalization ability of the proposed method are validated on multiple cross-lingual financial sentiment classification tasks, where the full model outperforms all multilingual baselines, including the strongest one, XLM-R + SimCSE (accuracy 75.8 vs. 71.4; F1-macro 72.3 vs. 67.9).
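
The contrastive alignment idea in the first contribution can be illustrated with a minimal sketch. It assumes an InfoNCE-style objective over translation-equivalent sentence pairs, computed in one direction only; the loss form, the temperature `tau`, the function names, and the toy embeddings are all assumptions made for illustration and are not details stated in the text.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce(src, tgt, tau=0.1):
    """Mean InfoNCE loss where src[i] and tgt[i] form a positive pair.

    Every other tgt[j] in the batch (j != i) acts as a negative for src[i].
    The temperature tau is a hypothetical default, not a value from the paper.
    """
    losses = []
    for i, s in enumerate(src):
        logits = [cosine(s, t) / tau for t in tgt]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        losses.append(log_denom - logits[i])
    return sum(losses) / len(losses)

# Toy embeddings (hypothetical): row i is the same sentence in two languages.
en = [[1.0, 0.0], [0.0, 1.0]]
zh_aligned = [[0.9, 0.1], [0.1, 0.9]]   # pairs point in similar directions
zh_swapped = [[0.1, 0.9], [0.9, 0.1]]   # pairs are mismatched

loss_aligned = info_nce(en, zh_aligned)
loss_swapped = info_nce(en, zh_swapped)
print(loss_aligned < loss_swapped)
```

Under these assumptions the loss is small when each sentence is closest to its own translation and large when the pairs are mismatched, which is the behaviour an alignment objective of this kind is meant to reward.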

2. Related Work

2.1. Financial Sentiment Analysis

2.2. Multilingual Pretrained Language Models and Domain Adaptation

2.3. Contrastive Learning and Cross-Lingual Semantic Alignment

3. Materials and Methods

3.1. Data Collection

3.2. Data Preprocessing

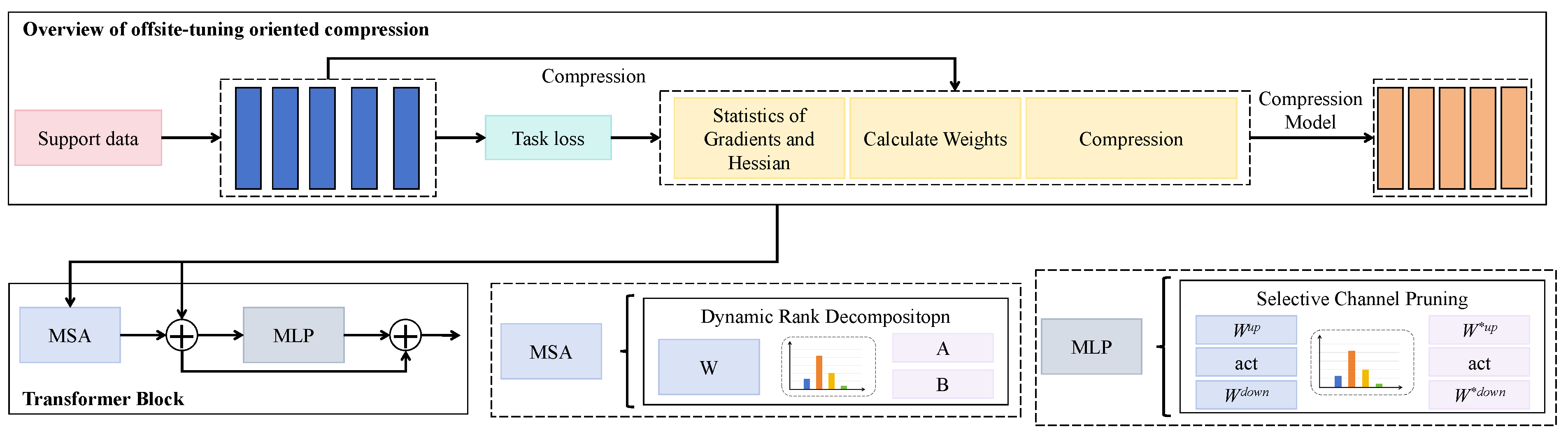

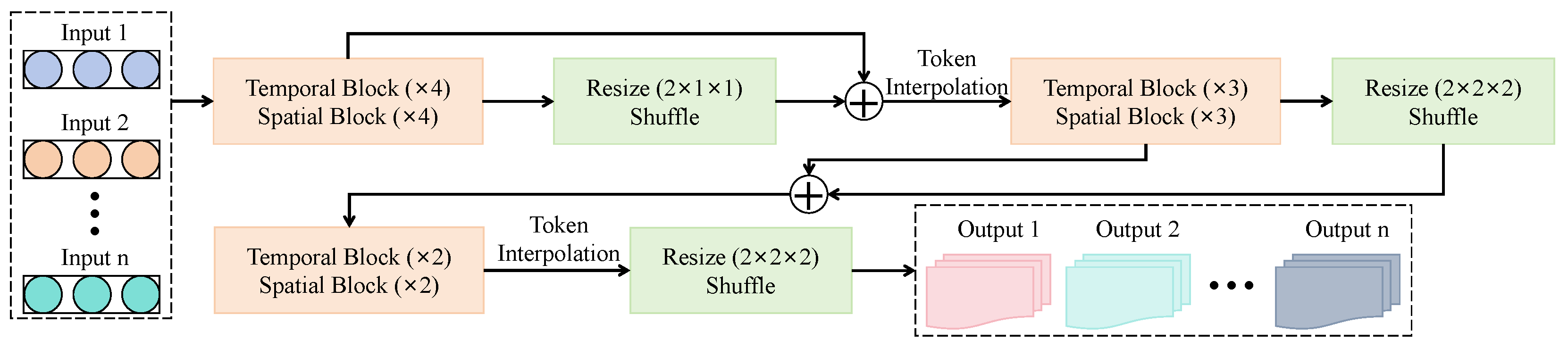

3.3. Proposed Method

3.3.1. Overall

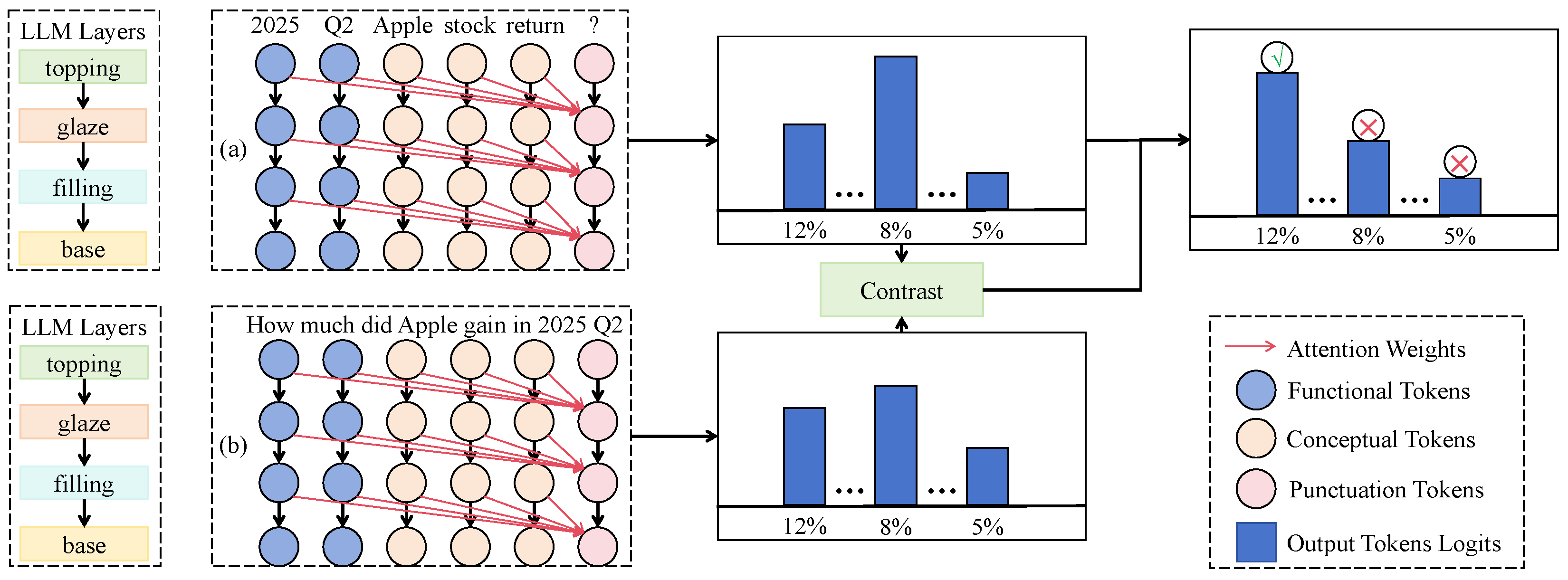

3.3.2. Semantic Contrastive Alignment

3.3.3. Language-Adaptive Tuning

3.3.4. Domain-Specific Decoder

4. Results and Discussion

4.1. Experimental Setup

4.1.1. Evaluation Metrics

4.1.2. Baseline

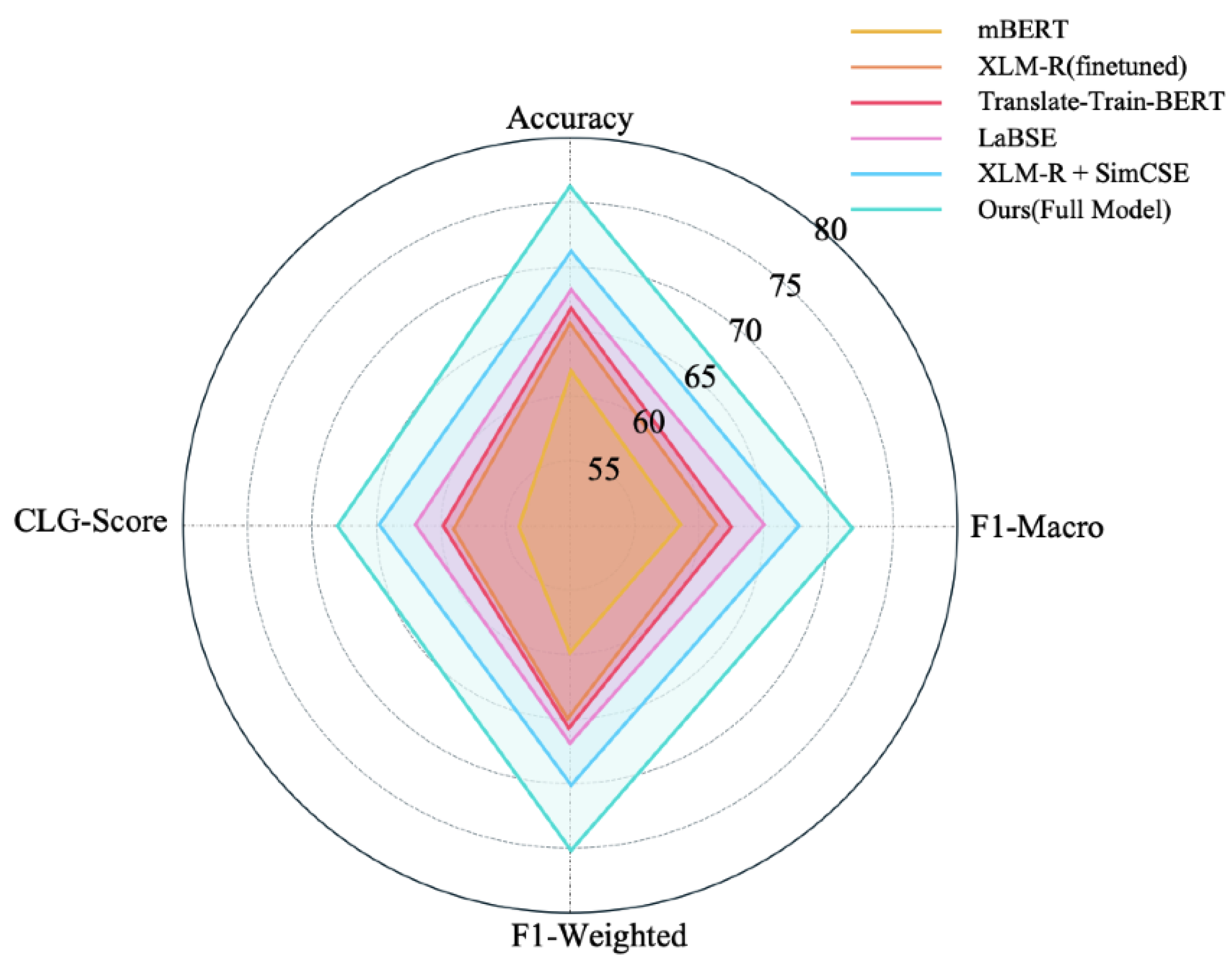

4.1.3. Software and Hardware Platform

4.2. Cross-Lingual Financial Sentiment Classification Results (Training Language → Testing Language)

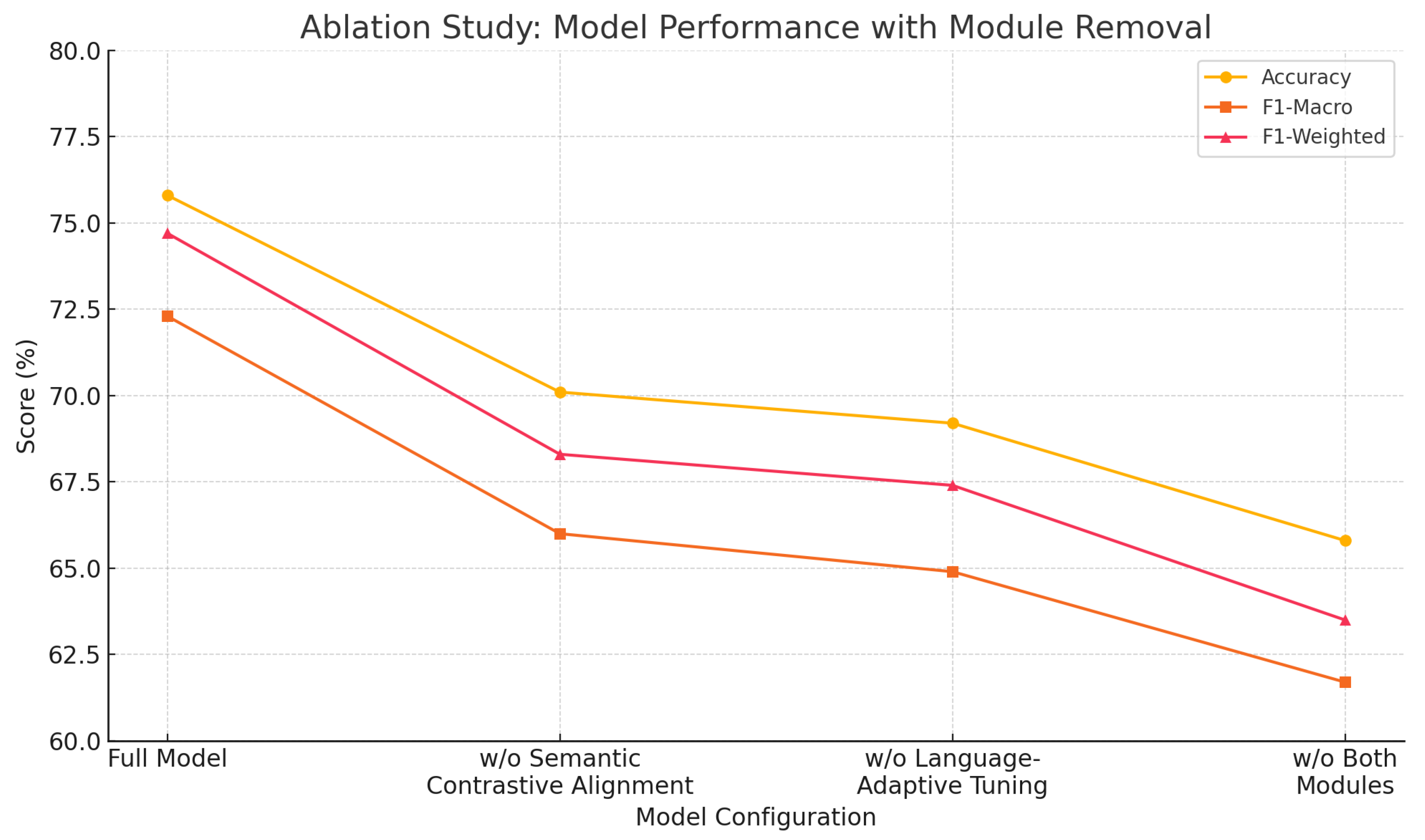

4.3. Performance Degradation Under Module Ablation

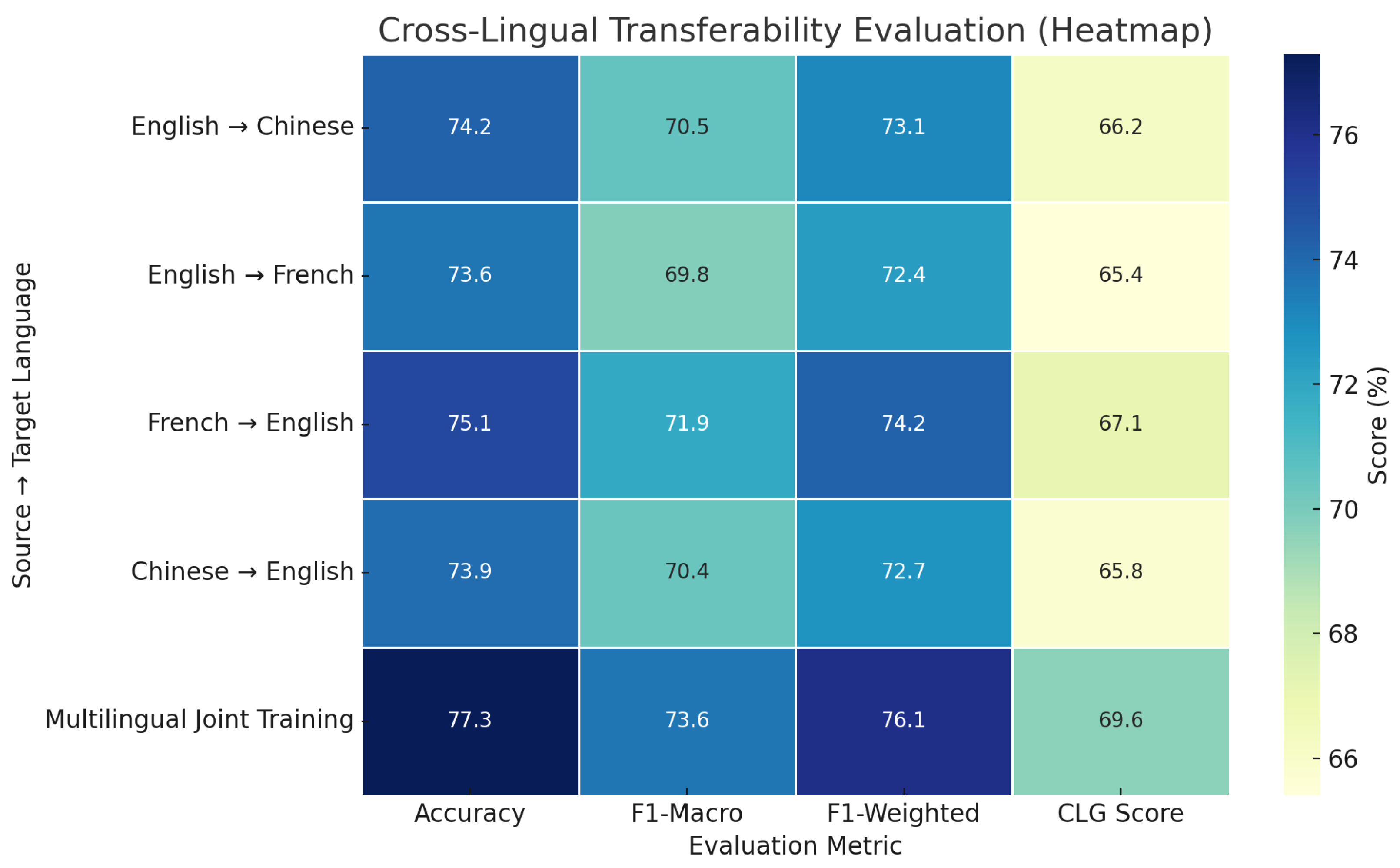

4.4. Transferability Evaluation Across Source → Target Languages

4.5. Discussion

4.6. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Language | News | Announcements | Social Media |
|---|---|---|---|
| English | 18,542 | 12,764 | 9,380 |
| Chinese | 16,308 | 14,227 | 11,106 |
| French | 12,695 | 10,024 | 8,517 |
| Total | 47,545 | 37,015 | 29,003 |

| Language | Positive | Neutral | Negative |
|---|---|---|---|
| English | 14,582 | 12,347 | 13,757 |
| Chinese | 13,276 | 14,865 | 13,500 |
| French | 10,384 | 10,928 | 9,924 |
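
The source counts and the label counts describe the same samples, so their per-language totals should agree. The short check below confirms this and derives class shares per language. The counts are copied from the two tables; the dictionary and function names are illustrative.

```python
# Counts copied from the per-source and per-label tables.
by_source = {
    "English": {"News": 18542, "Announcements": 12764, "Social Media": 9380},
    "Chinese": {"News": 16308, "Announcements": 14227, "Social Media": 11106},
    "French": {"News": 12695, "Announcements": 10024, "Social Media": 8517},
}
by_label = {
    "English": {"Positive": 14582, "Neutral": 12347, "Negative": 13757},
    "Chinese": {"Positive": 13276, "Neutral": 14865, "Negative": 13500},
    "French": {"Positive": 10384, "Neutral": 10928, "Negative": 9924},
}

def class_shares(counts):
    """Percentage of samples per sentiment label, rounded to one decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}

totals = {}
for lang in by_source:
    n_source = sum(by_source[lang].values())
    n_label = sum(by_label[lang].values())
    # Both tables should cover the same set of samples.
    assert n_source == n_label, lang
    totals[lang] = n_source
    print(lang, n_source, class_shares(by_label[lang]))
```

The totals come to 40,686 English, 41,641 Chinese, and 31,236 French samples, so French is the smallest of the three subsets.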

| Model | #Params | Pretraining Data | Architecture | Key Features |
|---|---|---|---|---|
| mBERT | ∼110 M | Wikipedia (104 langs) | Transformer, 12 layers, 768-d | Early MPLM, basic cross-lingual capability |
| XLM-R (finetuned) | ∼270 M | CommonCrawl CC-100 (100 langs) | Transformer, 24 layers, 1024-d | Strong multilingual encoder, finetuned on financial data |
| Translate-Train-BERT | ∼110 M | Wikipedia (English) + Translated corpora | Transformer, 12 layers, 768-d | Translate non-English to English before training |
| LaBSE | ∼470 M | Translation pairs (109 langs) | Dual-encoder Transformer | Optimized for multilingual sentence alignment |
| XLM-R + SimCSE | ∼270 M | CC-100 (100 langs) + contrastive finetuning | Transformer, 24 layers, 1024-d | Combines multilingual encoder with sentence-level contrastive learning |

| Model | Accuracy | F1-Macro | F1-Weighted | CLG Score |
|---|---|---|---|---|
| mBERT | 62.4 | 58.3 | 60.2 | 0.541 |
| XLM-R (finetuned) | 66.1 | 62.7 | 65.4 | 0.584 |
| Translate-Train-BERT | 67.3 | 63.5 | 66.1 | 0.597 |
| LaBSE | 68.5 | 65.2 | 67.8 | 0.614 |
| XLM-R + SimCSE | 71.4 | 67.9 | 70.2 | 0.646 |
| Ours (Full Model) | 75.8 | 72.3 | 74.7 | 0.684 |
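
The table reports both F1-Macro and F1-Weighted. A minimal sketch of how the two averages differ follows, assuming the standard definitions: macro is the unweighted mean of per-class F1, and weighted is the support-weighted mean. The function names and the toy labels are hypothetical and are not taken from the paper's data.

```python
from collections import Counter

def per_class_f1(y_true, y_pred, label):
    """F1 for one class, computed as 2*TP / (2*TP + FP + FN)."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def f1_macro_and_weighted(y_true, y_pred):
    """Return (macro F1, weighted F1) over the classes present in y_true."""
    support = Counter(y_true)
    scores = {c: per_class_f1(y_true, y_pred, c) for c in support}
    macro = sum(scores.values()) / len(scores)
    weighted = sum(scores[c] * support[c] for c in support) / len(y_true)
    return macro, weighted

# Toy labels (hypothetical), imbalanced toward the neutral class.
y_true = ["neu"] * 6 + ["pos"] * 2 + ["neg"] * 2
y_pred = ["neu"] * 6 + ["pos", "neu"] + ["neg", "neu"]

macro, weighted = f1_macro_and_weighted(y_true, y_pred)
print(round(macro, 3), round(weighted, 3))
```

In this toy case the majority class is predicted best, so the weighted average comes out higher than the macro average. Every row of the table shows the same ordering between the two columns.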

| Model Configuration | Accuracy | F1-Macro | F1-Weighted |
|---|---|---|---|
| Full Model | 75.8 | 72.3 | 74.7 |
| w/o Semantic Contrastive Alignment | 70.1 | 66.0 | 68.3 |
| w/o Language-Adaptive Tuning | 69.2 | 64.9 | 67.4 |
| w/o Both Modules | 65.8 | 61.7 | 63.5 |
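
The ablation numbers can be read as accuracy points lost relative to the full model. The short calculation below uses only the accuracy column of the table; the variable names are illustrative.

```python
# Accuracy values copied from the ablation table.
accuracy = {
    "full": 75.8,
    "no_contrastive": 70.1,
    "no_language_adaptive": 69.2,
    "no_both": 65.8,
}

def drop(config):
    """Accuracy points lost relative to the full model."""
    return round(accuracy["full"] - accuracy[config], 1)

drop_contrastive = drop("no_contrastive")
drop_adaptive = drop("no_language_adaptive")
drop_both = drop("no_both")
sum_of_single_drops = round(drop_contrastive + drop_adaptive, 1)

print(drop_contrastive, drop_adaptive, drop_both, sum_of_single_drops)
```

The single-module drops are 5.7 and 6.6 points, and removing both costs 10.0 points. The joint drop is smaller than the sum of the single drops (12.3). One plausible reading is that the two modules partly overlap in what they contribute, although the table alone does not establish this.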

| Source Language → Target Language | Accuracy | F1-Macro | F1-Weighted | CLG Score |
|---|---|---|---|---|
| English → Chinese | 74.2 | 70.5 | 73.1 | 0.662 |
| English → French | 73.6 | 69.8 | 72.4 | 0.654 |
| French → English | 75.1 | 71.9 | 74.2 | 0.671 |
| Chinese → English | 73.9 | 70.4 | 72.7 | 0.658 |
| Multilingual Joint Training | 77.3 | 73.6 | 76.1 | 0.696 |
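
Two quantities summarize the transfer table: how far the single-source rows fall below multilingual joint training on average, and how asymmetric each language pair is. The sketch below computes both from the accuracy column; the names are illustrative.

```python
# Accuracy values copied from the transferability table.
transfer_accuracy = {
    ("English", "Chinese"): 74.2,
    ("English", "French"): 73.6,
    ("French", "English"): 75.1,
    ("Chinese", "English"): 73.9,
}
joint_accuracy = 77.3

mean_transfer = round(sum(transfer_accuracy.values()) / len(transfer_accuracy), 1)
gap_to_joint = round(joint_accuracy - mean_transfer, 1)

def asymmetry(a, b):
    """Accuracy of a -> b minus accuracy of b -> a."""
    return round(transfer_accuracy[(a, b)] - transfer_accuracy[(b, a)], 1)

print(mean_transfer, gap_to_joint)
print(asymmetry("English", "Chinese"), asymmetry("English", "French"))
```

The single-source rows average 74.2 accuracy, 3.1 points below joint training. English and Chinese transfer almost symmetrically (0.3 points apart), while French → English is 1.5 points higher than English → French.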

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, L.; Lin, Q.; Meng, F.; Liang, S.; Lu, J.; Liu, S.; Chen, K.; Zhan, Y. Leveraging Contrastive Semantics and Language Adaptation for Robust Financial Text Classification Across Languages. Computers 2025, 14, 338. https://doi.org/10.3390/computers14080338