1. Introduction

In the digital era, organisations operate on a global scale, relying heavily on internet platforms for customer engagement and service delivery. Consequently, computing infrastructure has become the backbone of modern business operations. However, as technology continues to drive these operations, the threat of data breaches by malicious actors remains a persistent concern. Failure to implement adequate security measures can result in the loss of sensitive information, regulatory penalties, and reputational harm [1]. To mitigate such risks, many organisations deploy intrusion detection systems (IDSs), which monitor network activity and raise alerts on suspicious behaviour [2]. Traditional IDSs, which are predominantly signature-based, compare incoming network traffic against a database of known attack patterns. While effective at identifying previously encountered threats, these systems fall short when confronted with zero-day attacks [3]. These attacks exploit unknown vulnerabilities before corresponding detection signatures are available (Figure 1).

As a result, signature-based IDSs are inherently limited in their ability to detect and respond to emerging cyber threats. Their reliance on frequent updates and their high maintenance costs further limit their responsiveness and scalability, especially for smaller organisations [2,3]. Anomaly-based IDSs offer an alternative by modeling normal network behaviour and flagging deviations [4]. Nevertheless, they often suffer from high false positive rates. Consequently, IDSs are proving inadequate against emerging threats, especially zero-day attacks [5]. These systems often require up to 30 days to detect such threats [6], leaving organisations exposed to substantial risk during that window [3]. The consequences of zero-day attacks are severe: the average cost of recovering from a zero-day breach exceeds USD 1.2 million [6], excluding business disruption, regulatory penalties, and long-term reputational damage [7]. Because modern businesses rely on real-time digital platforms, any downtime can affect productivity and revenue. Despite investments in IDSs and non-technical mitigation strategies, organisations remain vulnerable. Moreover, attackers are becoming increasingly sophisticated, exploiting unknown vulnerabilities across diverse technologies.

Therefore, the continued use of outdated IDSs in the face of modern, evasive attacks has placed organisations at a serious disadvantage, and there is an urgent need to modernise IDS capabilities. Another challenge lies in the datasets used to train IDS models. Widely used benchmarks such as KDD-CUP99, NSL-KDD, UNSW-NB15, and CICIDS-2017 are outdated and fail to reflect the complexity of modern network threats [8]. These datasets often lack real-world zero-day attack data and, owing to poor generalisation, can lead to high false positive rates, limiting the models’ effectiveness in real deployments. Given these limitations, there is growing interest in leveraging machine learning (ML) to enhance IDS capabilities [9]. ML techniques have shown promise in detecting sophisticated and previously unseen attacks, including zero-day exploits [10]. By learning from large and diverse datasets, they can uncover hidden patterns and classify complex threat behaviours with improved accuracy and efficiency. This study utilises UGRansome [10], a contemporary dataset comprising real-world zero-day activities and modern network traffic behaviours [11]. The dataset includes three target classes, anomaly (A), signature (S), and synthetic signature (SS), which enable a more nuanced evaluation of model performance across attack types [6]. The research evaluates performance using standard metrics, namely accuracy, precision, recall, F1-score, and computational time [6,9], and investigates the effectiveness of both classical and quantum ML (QML) models in detecting zero-day attacks using the UGRansome dataset. This research is guided by the following central question:

To what extent can classical and QML models accurately classify anomaly, signature, and synthetic signature categories in the UGRansome dataset for effective zero-day attack detection?

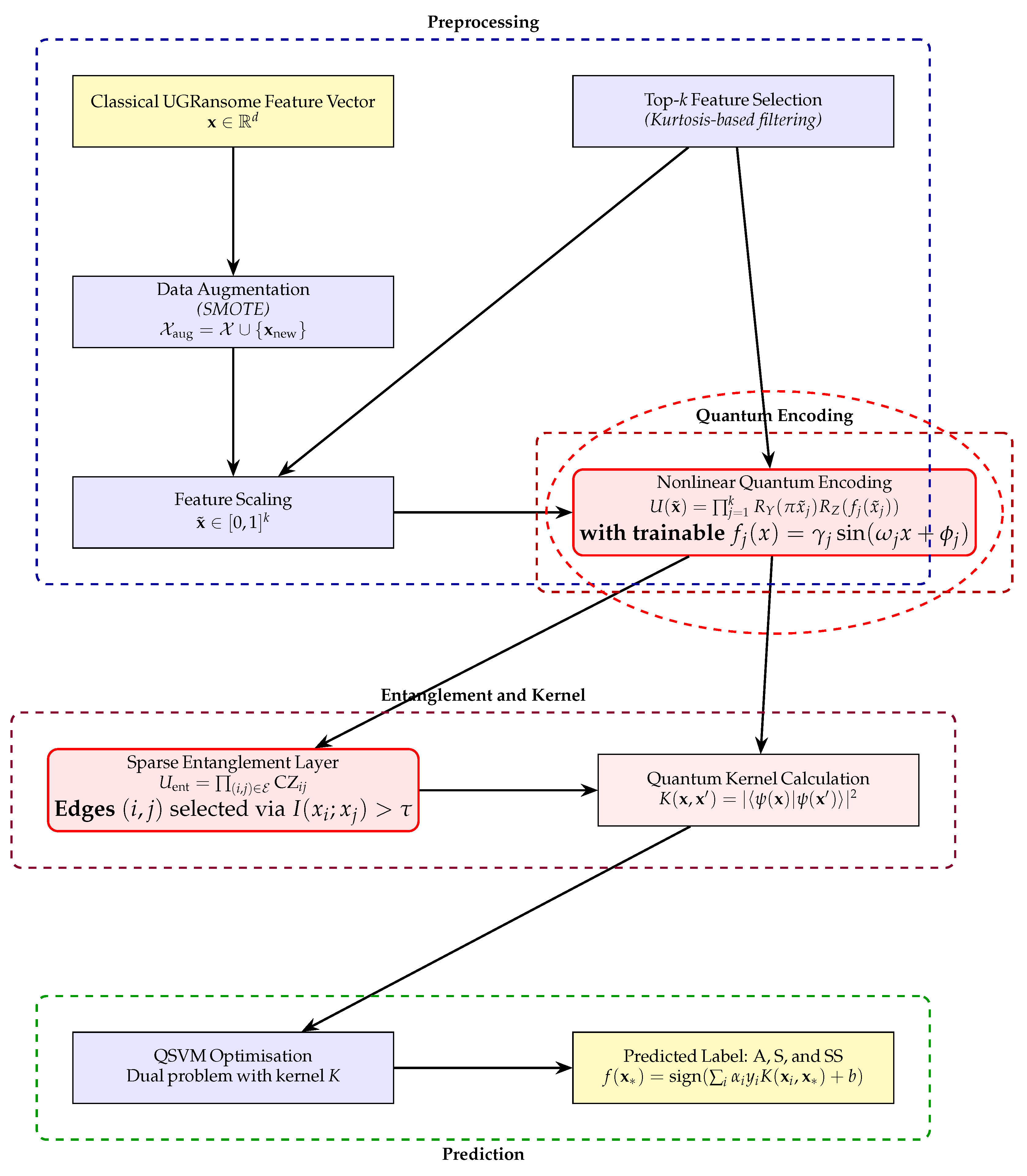

The first objective is to assess how well ML classifiers distinguish between the key target classes (A, S, SS) in the dataset, enabling the identification of previously unseen attack patterns, which is critical for advancing adaptive IDS mechanisms. The second objective is to benchmark the performance of quantum models, particularly quantum support vector machines (QSVMs), against conventional classifiers, with the aim of understanding their applicability in real-world cybersecurity settings. The third objective is to address class imbalance, which is prevalent in intrusion detection datasets and often leads to biased learning outcomes. To mitigate this, the study incorporates the synthetic minority oversampling technique (SMOTE), borderline-SMOTE, and SMOTE with edited nearest neighbours (SMOTEENN) to synthetically augment underrepresented classes, particularly those representing zero-day attacks. This study introduces a novel hybrid quantum pipeline tailored for detecting zero-day attacks, offering conceptual advancements through the integration and customisation of QML components for cybersecurity contexts. Specifically, the key innovations include the following:

Learnable nonlinear quantum encoding: Unlike standard fixed encodings, we design a parameterised and trainable encoding circuit that enables the quantum layer to adapt to the data manifold, effectively capturing latent structures specific to zero-day attack patterns.

Mutual information-guided sparse entanglement: We propose a principled method for configuring entanglement in quantum circuits based on mutual information analysis of classical features. This departs from arbitrary or full entanglement schemes by offering a data-aware circuit design, reducing quantum overhead while preserving critical interdependencies.

Quantum kernel optimisation for SVMs: We go beyond using standard QSVM kernels by constructing a quantum kernel informed by the encoded and entangled state space tailored to the cyber threat detection task, providing both theoretical and empirical improvements over classical SVM baselines.

Domain-specific quantum simulation: While QML methods have been explored in generic classification tasks, our application to zero-day detection provides a unique and practically relevant testbed. The proposed framework demonstrates superior classification performance compared to existing techniques.
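To make the mutual information-guided entanglement idea concrete, the sketch below shows one plausible way to derive a sparse entanglement map from classical features. It is an illustration under stated assumptions, not the implementation used in this study: the histogram-based mutual information estimator, the bin count, and the helper name `mi_entanglement_pairs` are hypothetical choices. Each feature is assumed to be encoded on its own qubit, so a high-scoring feature pair becomes a candidate qubit pair for a two-qubit entangling gate.

```python
import itertools

import numpy as np


def mutual_information(a, b, bins=8):
    """Histogram-based estimate of I(a; b) in nats (an illustrative estimator)."""
    joint, _, _ = np.histogram2d(a, b, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal distribution of a
    py = pxy.sum(axis=0, keepdims=True)  # marginal distribution of b
    nz = pxy > 0  # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))


def mi_entanglement_pairs(X, n_pairs, bins=8):
    """Hypothetical helper: return the n_pairs feature (qubit) pairs with the highest MI."""
    scores = {
        (i, j): mutual_information(X[:, i], X[:, j], bins)
        for i, j in itertools.combinations(range(X.shape[1]), 2)
    }
    return sorted(scores, key=scores.get, reverse=True)[:n_pairs]


rng = np.random.default_rng(0)
x0 = rng.normal(size=2000)
X = np.column_stack([
    x0,
    x0 + 0.1 * rng.normal(size=2000),  # strongly dependent on feature 0
    rng.normal(size=2000),             # independent noise feature
    rng.normal(size=2000),             # independent noise feature
])
pairs = mi_entanglement_pairs(X, n_pairs=2)
print(pairs)
```

The resulting list of index pairs could then serve as an explicit entanglement map instead of full or arbitrary entanglement; Qiskit's `ZZFeatureMap`, for instance, documents an `entanglement` argument that can take explicit qubit-index pairs as well as preset strings such as `"linear"` and `"full"`.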

Although our work builds on established components, it offers a new conceptual synthesis and methodological enhancements that address the specific challenges of quantum cybersecurity modeling, notably feature sparsity, pattern complexity, and the adversarial dynamics inherent in zero-day exploits. The manuscript is structured as follows:

Section 2 presents a review on zero-day attack classification, evaluating existing datasets and ML techniques.

Section 3 introduces the proposed QSVM for zero-day attack detection.

Section 4 details the results and analysis of the proposed QSVM, including a comparative evaluation with related works.

Finally, Section 5 concludes the study.

2. Literature Review

The origins of ML and artificial intelligence (AI) date back to the 1950s and 1960s, with foundational contributions from researchers such as Alan Turing, John McCarthy, Arthur Samuel, Allen Newell, and Frank Rosenblatt [12]. Arthur Samuel developed the first functional ML model, while Rosenblatt introduced the perceptron algorithm, inspired by biological neurons, which became the basis for artificial neural networks (ANNs) [12]. ML techniques are categorised into four groups: supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning [9,12]. Supervised learning involves training algorithms on labeled datasets in which inputs and outputs are known, enabling models to make accurate predictions. Common methods include regression and classification using algorithms such as SVMs, decision trees (DTs), random forest (RF), naïve Bayes (NB), and neural networks (NNs) [13]. In recent years, supervised learning has expanded with the emergence of QML, which applies principles from quantum computing with the aim of accelerating pattern recognition and capturing more complex patterns [14,15]. QML algorithms, such as the QSVM, use quantum bits (qubits) and superposition to represent and process information in ways that may be infeasible for classical systems. Although still in its early stages [16], QML shows promise in solving high-dimensional classification problems [14] and has the potential to transform domains such as cybersecurity [17].

2.1. Established Datasets

The KDD Cup 99 dataset, developed by the Defense Advanced Research Projects Agency (DARPA), has been widely used in the development and evaluation of IDSs [18]. It contains over 4.4 million records representing both normal and attack traffic. A major limitation is the high volume of duplicate records, which make up 78% of the training set and 75% of the testing set [18]. In addition, 14 attack types present in the test set are absent from the training data, complicating model generalisation. Approximately 88.85% of the dataset comprises attack traffic, while only 11.15% is normal. Table 1 summarises the distribution of records by attack type.
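Statistics such as the duplicate rates and class proportions quoted above can be recomputed for any tabular IDS dataset. The following sketch is illustrative only: it assumes the records are already loaded as a NumPy feature matrix and a label vector, and the helper name `audit_dataset` and the toy records are hypothetical. It treats a record as a duplicate when both its features and its label repeat an earlier row.

```python
import numpy as np


def audit_dataset(X, y):
    """Return (duplicate_fraction, class_shares) for a feature matrix and its labels."""
    records = np.column_stack([X, y])  # a duplicate must repeat both the features and the label
    n_unique = np.unique(records, axis=0).shape[0]
    duplicate_fraction = 1.0 - n_unique / len(records)
    labels, counts = np.unique(y, return_counts=True)
    class_shares = {str(lab): cnt / len(y) for lab, cnt in zip(labels, counts)}
    return duplicate_fraction, class_shares


# Toy data: 8 records, 2 of which exactly repeat earlier rows.
X = np.array([[0, 1], [0, 1], [1, 1], [2, 0], [2, 0], [3, 1], [4, 0], [5, 1]])
y = np.array([1, 1, 1, 1, 1, 1, 0, 1])  # 1 = attack, 0 = normal
dup, shares = audit_dataset(X, y)
print(f"duplicates: {dup:.0%}", shares)
```

On a real dataset the same function would be applied separately to the training and test partitions, since the duplicate rates reported above differ between the two.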

The NSL-KDD dataset was developed by the Canadian Institute for Cybersecurity as an improved version of the KDD Cup 99 dataset [19]. It addresses some of the drawbacks of KDD Cup 99, including duplication and imbalance issues (Table 1). NSL-KDD includes features such as connection duration, protocol types, services, and content features like failed login attempts and root access. Both the KDD Cup 99 and NSL-KDD datasets are unsuitable for zero-day attack detection because they contain outdated, known attack patterns and lack examples of novel threats. Their class imbalance (and, in KDD Cup 99, duplicate records) also biases models, limiting their ability to detect new and unseen attacks effectively.

The UNSW-NB15 dataset, introduced in 2015 by researchers at the University of New South Wales [20], improves on earlier datasets such as KDD Cup 99 and NSL-KDD by encompassing both legacy and modern attack types. It includes 49 features spanning statistical, content-based, and traffic-based attributes. The dataset covers nine attack categories alongside normal traffic, collected from a mix of real and synthetic network traffic in controlled environments (Table 2). Despite its comprehensive nature, UNSW-NB15 exhibits class imbalance, with normal traffic predominating (87.35%). This imbalance, coupled with its creation date, limits its effectiveness for detecting more recent and sophisticated attacks, including zero-day exploits (Table 2).

In turn, the CICIDS2017 dataset, published in 2017 by the Canadian Institute for Cybersecurity, addresses limitations of previous datasets by incorporating modern network traffic scenarios and a wider variety of attack types [21]. Featuring 84 attributes, it includes common attacks such as DoS, brute force, infiltration, port scanning, and botnet (Table 2). However, the dataset suffers from data integrity issues and an imbalance dominated by normal traffic (83.34%). Both UNSW-NB15 and CICIDS2017 are valuable resources for intrusion detection research, yet their limitations demonstrate the ongoing need for updated, balanced datasets that reflect evolving cybersecurity threats (Table 3).

2.2. The UGRansome Dataset

The dataset considered in this study is UGRansome, a recent benchmark developed at the University of Pretoria and available at https://www.kaggle.com/datasets/nkongolo/ugransome-dataset/data (accessed on 13 August 2025). UGRansome is employed for zero-day attack and ransomware detection [13] and serves as a basis for evaluating IDSs [4]. Besides benign traffic such as user datagram protocol (UDP), port scanning, scan, and secure shell (SSH), the dataset includes malicious traffic such as blacklist, botnet, DoS, nerisBotnet, and spam [22]. It captures ransomware communication patterns, providing network flow-based features such as bytes transferred, malicious addresses, duration, and protocol flags (Table 3). The dataset’s three-class structure is particularly useful for zero-day attack prediction because it captures both known (S, SS) and unknown (A) threat behaviours in a way that supports robust model generalisation (Table 4). This structure mimics real-world conditions by exposing the model to a spectrum of threat patterns, from known to entirely unknown [23], thereby enhancing its ability to detect zero-day attacks that do not match existing signatures (Table 5).

2.3. Machine Learning for Zero-Day Exploits Detection

Su [11] introduced a hybrid ML framework that integrates Chi-Square feature selection with the African vultures optimisation algorithm (AVOA) for hyperparameter tuning on the UGRansome dataset. The framework evaluates three classifiers, the extra trees classifier (ETC), extreme learning machine (ELM), and adaptive boosting classifier (ADAC), with the AVOA-optimised ETC achieving the highest accuracy of 0.981. Chi-Square selection improved both interpretability and performance by identifying “financial impact” and “clusters” as key features. The approach demonstrates strong potential for real-time deployment in cybersecurity systems [11] while highlighting the need for further work on adaptability to zero-day patterns.

Rios-Ochoa et al. [6] used the UGRansome dataset to address critical shortcomings in IDSs, including overreliance on binary classification, poor dataset quality, and the lack of real-time deployment. The authors introduced a comprehensive end-to-end framework covering feature extraction, dataset quality assessment, and comparative model training using conventional ML and deep learning (DL) classifiers. While RF achieved 100% offline accuracy, the multilayer perceptron (MLP) outperformed it in real-time deployment (70% vs. 62%).

The study emphasised that high offline performance does not guarantee real-world effectiveness, revealing that low feature variability and outdated data hinder generalisation. The authors suggest that specific models may improve detection robustness for zero-day variants.

Mohamed et al. [10] introduced a novel probabilistic composite model for zero-day exploit detection, focusing on enhancing anomaly detection in terms of accuracy, adaptability, and computational efficiency. The proposed framework integrates four innovative components: (1) an Adaptive WavePCA-Autoencoder (AWPA) for denoising and dimensionality reduction during preprocessing, (2) a Meta-Attention Transformer Autoencoder (MATA) to enhance feature extraction, (3) Genetic Mongoose-Chameleon Optimisation (GMCO) for efficient and accurate feature selection, and (4) an Adaptive Hybrid Exploit Detection Network (AHEDNet) to reduce false positives. Experimental results on the UGRansome dataset demonstrated superior performance, achieving accuracy up to 0.9919, precision up to 0.9968, and minimal error. These results confirm the model’s robustness in detecting previously unseen threats.

Yan et al. [13] implemented a two-layer ML framework for malware detection using the UGRansomware (UGRansome) dataset. The first layer employs a stacked ensemble model combining six classifiers, and the second layer uses a light gradient boosting machine (LightGBM) to classify the detected malware into specific families. While this second layer performs well on major families, it struggles with less represented categories, showing a noticeable drop in accuracy. The study highlights the challenges of fine-grained malware family attribution, particularly under class imbalance.

Sokhonn et al. [24] proposed a privacy-preserving hierarchical clustering method applied to the UGRansome dataset, demonstrating the feasibility of clustering encrypted network traffic data without exposing the underlying information. The results of the proposed encrypted method were visually consistent with SciPy’s plaintext clustering output. However, owing to fixed-point arithmetic and approximate operations, minor discrepancies were observed in cluster assignments, which may affect applications where clustering fidelity is critical.

Chaudhary and Adhikari [25] investigated the effectiveness of DT, SVM, and MLP for malware detection using the UGRansome dataset. In a comparative analysis based on accuracy, precision, recall, and F1-score, the DT emerged as the most effective model. While the study evaluates ransomware detection, it does not explicitly simulate zero-day attacks with novel ransomware variants.

2.4. QML for Zero-Day Exploits Detection

QML utilises principles of quantum computation to enhance classical ML tasks [26]. A QML model consists of three key components: quantum data encoding, parameterised quantum circuits (PQCs), and quantum measurements for classification or regression [27]. Quantum information is represented using qubits, which are unit vectors in a two-dimensional Hilbert space $\mathcal{H} \cong \mathbb{C}^{2}$ [26,27]. A single qubit can be written as a linear superposition of the orthonormal basis states $|0\rangle$ and $|1\rangle$ (Equation (1)):

$$|\psi\rangle = \alpha|0\rangle + \beta|1\rangle, \qquad \alpha, \beta \in \mathbb{C}, \qquad |\alpha|^{2} + |\beta|^{2} = 1. \tag{1}$$

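An $n$-qubit system lives in a $2^{n}$-dimensional space $\mathcal{H}^{\otimes n} \cong \mathbb{C}^{2^{n}}$, enabling QML models to represent complex data structures compactly [28]. Classical input data $\mathbf{x} \in \mathbb{R}^{d}$, with $d$ features, must be encoded into quantum states using a quantum feature map (QFM) represented by a unitary operator $U_{\phi}(\mathbf{x})$ acting on the initial state $|0\rangle^{\otimes n}$ (Equation (2)):

$$|\psi(\mathbf{x})\rangle = U_{\phi}(\mathbf{x})\,|0\rangle^{\otimes n}. \tag{2}$$

Here, $U_{\phi}(\mathbf{x})$ is a unitary operator parameterised by the classical input $\mathbf{x}$, which encodes the data into the quantum state $|\psi(\mathbf{x})\rangle$ [28,29]. Learning in QML is driven by parameterised unitary transformations $U(\boldsymbol{\theta})$, where $\boldsymbol{\theta}$ are trainable parameters (e.g., rotation angles of quantum gates such as $R_X$, $R_Y$, and $R_Z$). The overall parameterised quantum state is obtained by applying $U(\boldsymbol{\theta})$ after the data encoding, yielding Equation (3):

$$|\psi(\mathbf{x};\boldsymbol{\theta})\rangle = U(\boldsymbol{\theta})\,U_{\phi}(\mathbf{x})\,|0\rangle^{\otimes n}. \tag{3}$$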
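Equations (2) and (3) can be illustrated with a small state-vector calculation in NumPy. This is a toy sketch under explicit assumptions, not the circuit used in this study: two features are angle-encoded as $U_{\phi}(\mathbf{x}) = R_Y(x_1) \otimes R_Y(x_2)$, the trainable block $U(\boldsymbol{\theta})$ is one $R_Y$ rotation per qubit followed by a CNOT, and plain matrices stand in for a quantum SDK.

```python
import numpy as np


def ry(angle):
    """Single-qubit rotation R_Y(angle) = exp(-i * angle * Y / 2)."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


# CNOT with qubit 0 (left tensor factor) as control and qubit 1 as target.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)


def feature_map(x):
    """U_phi(x): angle-encode feature x[k] on qubit k (assumed encoding)."""
    return np.kron(ry(x[0]), ry(x[1]))


def variational_block(theta):
    """U(theta): a trainable R_Y layer followed by an entangling CNOT (assumed ansatz)."""
    return CNOT @ np.kron(ry(theta[0]), ry(theta[1]))


def prepare_state(x, theta):
    """|psi(x; theta)> = U(theta) U_phi(x) |00>, i.e. Equation (3)."""
    zero = np.zeros(4, dtype=complex)
    zero[0] = 1.0  # the initial state |00>
    return variational_block(theta) @ feature_map(x) @ zero


psi = prepare_state(x=np.array([0.3, 1.2]), theta=np.array([0.5, -0.4]))
print(psi.shape, np.vdot(psi, psi).real)  # 2**2 amplitudes with unit norm
```

Because $n = 2$ here, the state has $2^{2} = 4$ complex amplitudes; each added qubit doubles that count, which is why classical state-vector simulation becomes expensive as circuits grow.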

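The final state $|\psi(\mathbf{x};\boldsymbol{\theta})\rangle$ is measured to obtain classical outcomes for downstream tasks. For binary classification, a common observable is the Pauli-Z operator $Z$, typically applied to a designated qubit (with identities on the remaining qubits) within the tensor product of Pauli operators that defines the measurement basis [30]. The expected value of this measurement is given by Equation (4):

$$f(\mathbf{x};\boldsymbol{\theta}) = \langle \psi(\mathbf{x};\boldsymbol{\theta}) |\, Z \,| \psi(\mathbf{x};\boldsymbol{\theta}) \rangle \in [-1, 1]. \tag{4}$$

The scalar value $f(\mathbf{x};\boldsymbol{\theta})$ is then mapped to a class probability (e.g., by a sigmoid function) and thresholded to produce the predicted label [31]. To train the QML model, a cost function $\mathcal{L}(\boldsymbol{\theta})$ is defined based on cross-entropy loss for classification or mean squared error (MSE) for regression [31,32]. The goal is to find the optimal parameters $\boldsymbol{\theta}^{*}$ that minimise this cost function, often using hybrid quantum-classical optimisation, in which a classical optimiser updates $\boldsymbol{\theta}$ using measurements from the quantum circuit (Equation (5)):

$$\boldsymbol{\theta}^{*} = \arg\min_{\boldsymbol{\theta}} \mathcal{L}(\boldsymbol{\theta}). \tag{5}$$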
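The measurement and training steps of Equations (4) and (5) can be sketched for a similar two-qubit toy circuit. Assumptions: Pauli-Z is measured on the second qubit (the CNOT target), $f$ is multiplied by a hypothetical factor `SCALE = 5` before the sigmoid so that probabilities are not confined near 0.5, the loss is mean binary cross-entropy, and gradients come from the parameter-shift rule, which is exact for gates of the form $R_Y(\theta) = e^{-i\theta Y/2}$ when each parameter appears in a single gate. A classical gradient-descent loop closes the hybrid quantum-classical optimisation.

```python
import numpy as np


def ry(angle):
    """Single-qubit rotation R_Y(angle) = exp(-i * angle * Y / 2)."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


CNOT = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]], dtype=complex)
Z_TARGET = np.kron(np.eye(2), np.diag([1.0, -1.0]))  # Pauli-Z on qubit 1, identity on qubit 0
SCALE = 5.0  # assumed scaling of <Z> before the sigmoid


def expect_z(x, theta):
    """Equation (4) for the toy circuit: <psi(x; theta)| Z | psi(x; theta)>."""
    psi = np.zeros(4, dtype=complex)
    psi[0] = 1.0
    psi = np.kron(ry(x[0]), ry(x[1])) @ psi                  # U_phi(x): angle encoding
    psi = CNOT @ np.kron(ry(theta[0]), ry(theta[1])) @ psi   # U(theta): trainable block
    return float(np.real(np.vdot(psi, Z_TARGET @ psi)))


def parameter_shift_grad(x, theta):
    """d<Z>/d(theta_j) from two circuit evaluations shifted by +/- pi/2."""
    grad = np.zeros_like(theta)
    for j in range(len(theta)):
        shift = np.zeros_like(theta)
        shift[j] = np.pi / 2
        grad[j] = 0.5 * (expect_z(x, theta + shift) - expect_z(x, theta - shift))
    return grad


def loss_and_grad(X, y, theta):
    """Mean binary cross-entropy of sigmoid(SCALE * <Z>) and its gradient w.r.t. theta."""
    loss, grad = 0.0, np.zeros_like(theta)
    for xi, yi in zip(X, y):
        p = 1.0 / (1.0 + np.exp(-SCALE * expect_z(xi, theta)))
        loss -= yi * np.log(p) + (1 - yi) * np.log(1 - p)
        grad += SCALE * (p - yi) * parameter_shift_grad(xi, theta)  # chain rule through the sigmoid
    return loss / len(y), grad / len(y)


rng = np.random.default_rng(1)
X = rng.uniform(0, np.pi, size=(40, 2))
y = (X[:, 0] < np.pi / 2).astype(float)  # toy binary labels

theta = np.array([0.1, 0.1])
initial_loss, _ = loss_and_grad(X, y, theta)
for _ in range(50):  # classical optimiser step of the hybrid loop
    _, grad = loss_and_grad(X, y, theta)
    theta = theta - 0.05 * grad
final_loss, _ = loss_and_grad(X, y, theta)
print(f"BCE: {initial_loss:.3f} -> {final_loss:.3f}")
```

For this circuit $f(\mathbf{x};\boldsymbol{\theta}) = \cos(x_1+\theta_1)\cos(x_2+\theta_2)$, so the parameter-shift gradient can be checked against the analytic derivative. On hardware, each `expect_z` call would instead be estimated from repeated measurements.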

This mathematical formalism underpins QML models such as quantum convolutional neural networks (QCNNs) [33], variational quantum classifiers (VQCs) [30], and hybrid quantum deep learning (HQDL) [15]. These models can potentially achieve better generalisation on high-dimensional data by exploiting quantum parallelism and entanglement [34].

2.5. Review of QML Approaches in Cyber Threat Detection

Elsedimy et al. [35] introduced a novel intrusion detection framework, QSVM-GWO, which combines a QSVM with the grey wolf optimiser (GWO) to improve detection performance and reduce false positive rates in hybrid IDSs (HIDSs). The GWO fine-tunes the QSVM parameters, while the QSVM performs binary classification with a kernel function selected to maximise the margin of the decision boundary. Experimental evaluation was conducted on an Internet of Things (IoT) dataset.

The performance of QSVM-GWO was benchmarked against several state-of-the-art models, achieving a training accuracy of 99.11% and outperforming existing models. Similarly, Eze et al. [36] compared classical models (SVM, CNN) with their quantum counterparts (QSVM, QCNN) for malicious uniform resource locator (URL) detection, demonstrating quantum kernel advantages for the QSVM, although the QCNN lagged behind owing to hardware constraints.

Vijayalakshmi et al. [37] implemented QSVMs to detect anomalies in network traffic using the NSL-KDD dataset. Four kernel functions (linear, polynomial, radial basis function (RBF), and sigmoid) were applied to evaluate their impact on QSVM performance. The results showed that the RBF kernel provided the clearest decision boundaries between normal and anomalous traffic.
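To make the kernel comparison concrete, the four kernel functions named above can be compared on a classical SVM in a few lines. This sketch uses scikit-learn's `SVC` on a synthetic, non-linearly separable dataset (`make_circles`); it illustrates how the kernel choice changes the achievable decision boundary and does not reproduce the QSVM experiments of Vijayalakshmi et al.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

# Concentric circles: no straight line separates the two classes.
X, y = make_circles(n_samples=400, noise=0.05, factor=0.5, random_state=0)

scores = {}
for kernel in ["linear", "poly", "rbf", "sigmoid"]:
    clf = SVC(kernel=kernel, gamma="scale")  # default C=1.0; degree=3 for "poly"
    scores[kernel] = cross_val_score(clf, X, y, cv=5).mean()

for kernel, acc in scores.items():
    print(f"{kernel:>8}: mean 5-fold accuracy = {acc:.2f}")
```

On this concentric-circles data the RBF kernel can separate the classes while the linear kernel cannot; on real traffic features the ranking has to be established empirically.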

Mahdian and Mousavi [38] implemented QSVMs on quantum hardware to detect and classify quantum entanglement using VQCs. The model achieved 90% classification accuracy, even on noisy intermediate-scale quantum (NISQ) devices. The authors tested various circuit configurations across different quantum processors, including Perth, Lagos, and Nairobi, to evaluate the model’s robustness. The results demonstrate that the proposed approach not only performs well on two-qubit systems but also extends effectively to three-qubit entangled states.

In contrast, Tripathi et al. [39] developed a quantum long short-term memory (QLSTM) architecture using VQCs to analyse malware system calls, optimising quantum circuit depth and qubit configurations to overcome hardware limitations. The QLSTM detected distributed DoS (DDoS) attacks in network traffic, reporting consistent gains over the LSTM-based framework of Saeed [40]. Abreu et al. [16] developed and evaluated QuantumNetSec, an HIDS that combines quantum and classical computing techniques to improve network threat detection. By applying tailored QML methods, the system identified both binary and multiclass threats.

Testing on publicly available datasets showed that QuantumNetSec outperformed conventional models. Extending these efforts, Sridevi et al. [41] introduced a hybrid quantum-classical neural network (HQCNN) integrating latent Dirichlet allocation (LDA) and wavelet transforms with CNN and quantum layers, achieving superior performance in Android malware and DDoS classification on real hardware.

Durgut et al. [15] explored the use of a hybrid quantum-classical deep neural network (HQCDNN) to identify security vulnerabilities in smart contracts, targeting access control issues, arithmetic errors, time manipulation, and DoS. The SmartBugs wild dataset was used for training and evaluation, with term frequency–inverse document frequency (TF-IDF) employed for feature extraction. Experiments were conducted with two-qubit and four-qubit quantum layers, alongside a classical deep neural network (DNN) as a baseline. The model achieved 78.2–96.4% accuracy and an F1-score of 80.2–96.6%, surpassing conventional models.

This review emphasises that QML’s practical advantage in intrusion detection hinges on both hardware maturity and the choice of target problem. Our study contributes to this growing body of work by empirically validating quantum kernel superiority and proposing a reproducible QSVM pipeline for secure threat detection, while addressing deployment feasibility under current hardware constraints [42].

4. Results

The UGRansome dataset is divided into training and testing sets, with 80% (89,523 samples) allocated to training and 20% (22,381 samples) to testing. The training set, comprising the input features ($X_{\text{train}}$) and corresponding labels ($y_{\text{train}}$), is used to train the ML models by learning the relationships and patterns within the data. The test set ($X_{\text{test}}$) evaluates model generalisation on unseen samples. This setup enables unbiased performance evaluation based on metrics such as accuracy, precision, recall, and F1-score. The models tested include SVM, NB, RFC, XGBoost, a voting ensemble, and QSVM, with the lower-performing classifiers enhanced using SMOTE-based data-balancing techniques.
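The 80/20 partition can be reproduced with scikit-learn's `train_test_split`. The sketch uses synthetic placeholder arrays with the same total of 111,904 rows (89,523 + 22,381); the class mix and `stratify=y` are assumptions, since the text does not state whether the split was stratified. Stratification keeps the A/S/SS proportions equal across the two partitions.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder arrays with the same row count as the reported split (89,523 + 22,381 = 111,904).
n_samples = 111_904
rng = np.random.default_rng(42)
X = rng.normal(size=(n_samples, 6))
y = rng.choice(["A", "S", "SS"], size=n_samples, p=[0.5, 0.3, 0.2])  # assumed class mix

# stratify=y preserves the A/S/SS proportions in both partitions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
print(len(X_train), len(X_test))
```

With `test_size=0.2`, scikit-learn rounds the test count up (0.2 × 111,904 = 22,380.8, giving 22,381), which matches the partition sizes reported above.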

1. Support Vector Machine

To establish a classical benchmark, we employ

LinearSVC from the

Scikit-Learn library, training on the top-

k encoded features with default hyperparameters and up to 100 iterations. As shown in

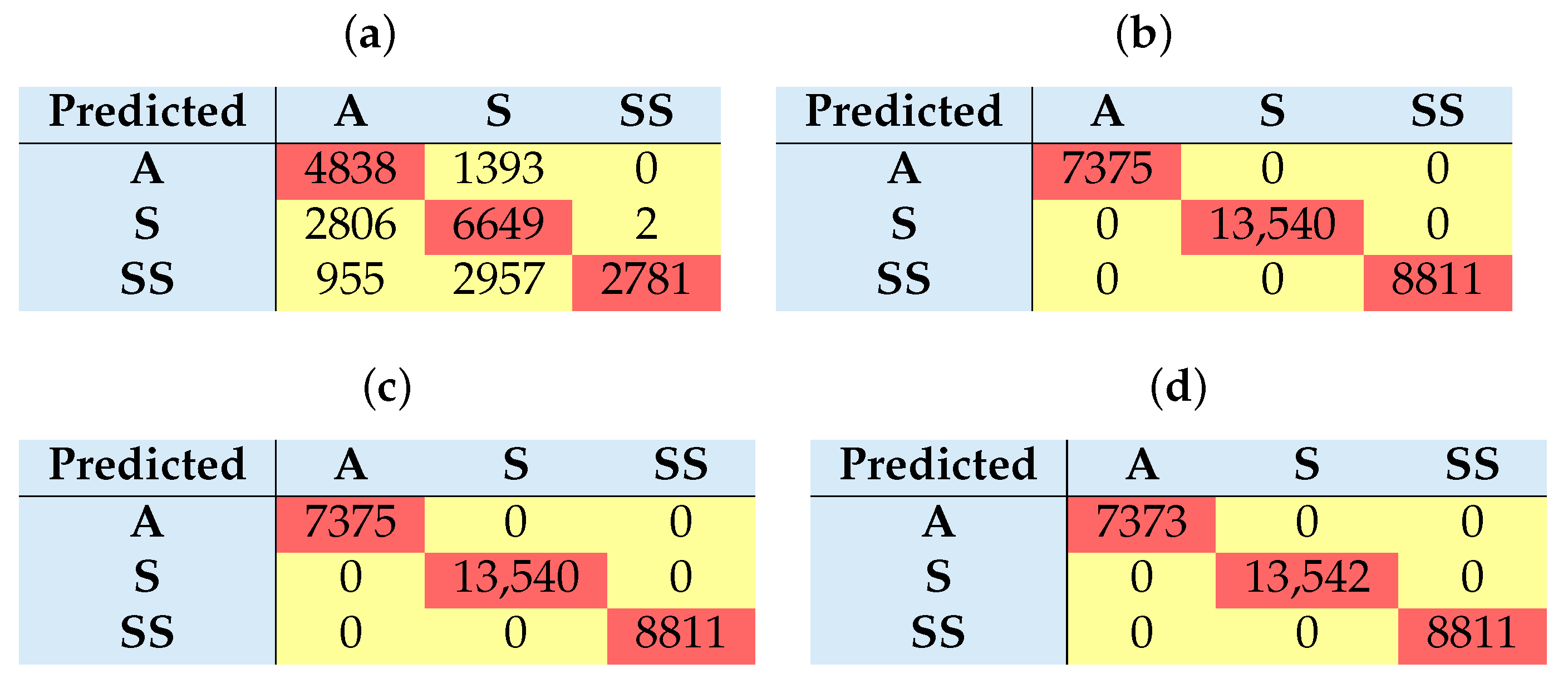

Table 8, the model achieves balanced F1-scores of 65% for both anomaly (A) and signature (S) classes but performs poorly on the synthetic signature (SS) class with a sharp recall drop to 42%, despite an unusually high precision of 1.00. This imbalance indicates that the classifier is overly conservative, confidently predicting SS only in clear-cut cases, leading to a high false negative rate—a critical flaw in the context of detecting zero-day threats. The confusion matrix in

Figure 6a further reveals a bias toward misclassifying samples, exhibiting the model’s reliance on linear separability and its limited ability to capture nuanced attack behaviour. These results highlight structural limitations rooted in class imbalance, insufficient feature discrimination, and the inadequacy of linear decision boundaries.
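A minimal version of the linear baseline can be written as a scikit-learn pipeline. This is a sketch under assumptions: synthetic data from `make_classification` stands in for the encoded UGRansome features (class 2 plays the minority SS role), and `k = 6` mirrors the six features retained for the QSVM later in this section rather than a value stated for the baseline. `MinMaxScaler` precedes `SelectKBest(chi2)` because the chi-squared score requires non-negative inputs.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import LinearSVC

# Synthetic 3-class stand-in for the encoded features; class 2 is the minority.
X, y = make_classification(n_samples=3000, n_features=12, n_informative=6,
                           n_classes=3, weights=[0.45, 0.45, 0.10], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

baseline = make_pipeline(
    MinMaxScaler(),           # chi2 needs non-negative inputs, so scale to [0, 1] first
    SelectKBest(chi2, k=6),   # keep the top-k features (k=6 is an assumption)
    LinearSVC(max_iter=100),  # iteration cap as described; other settings left at defaults
)
baseline.fit(X_train, y_train)
y_pred = baseline.predict(X_test)
print(classification_report(y_test, y_pred, target_names=["A", "S", "SS"], zero_division=0))
```

With `max_iter=100`, `LinearSVC` may emit a `ConvergenceWarning` on harder data; the per-class rows of the report are where an SS recall gap like the one in Table 8 would show up.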

The inability of the linear SVM to reliably detect SS attacks poses a security risk. In practice, such blind spots mean that obfuscated threats, often representative of zero-day exploits, can evade early detection, undermining system resilience. The model’s tendency to misclassify SS samples into more familiar categories reflects a deeper issue (Table 8): linear classifiers may be unsuited to capturing the behavioural deviations that characterise modern threats. Consequently, we pivot to more expressive models and techniques, specifically quantum-enhanced classification combined with data-level augmentation, as suggested by Mohanty et al. [69], to address these weaknesses and improve detection.

2. SVM with Borderline-SMOTE

To overcome the limitations observed in the baseline SVM, particularly the poor detection of SS attacks, we employed the

SGDClassifier with hinge loss to approximate a linear SVM, coupled with

CalibratedClassifierCV for probability estimation. Borderline-SMOTE was applied to generate synthetic samples concentrated near the decision boundary of the minority SS class, enhancing the model’s ability to separate overlapping regions. This approach led to near-perfect classification, with precision, recall, and F1-scores approaching 1.00 across all classes (

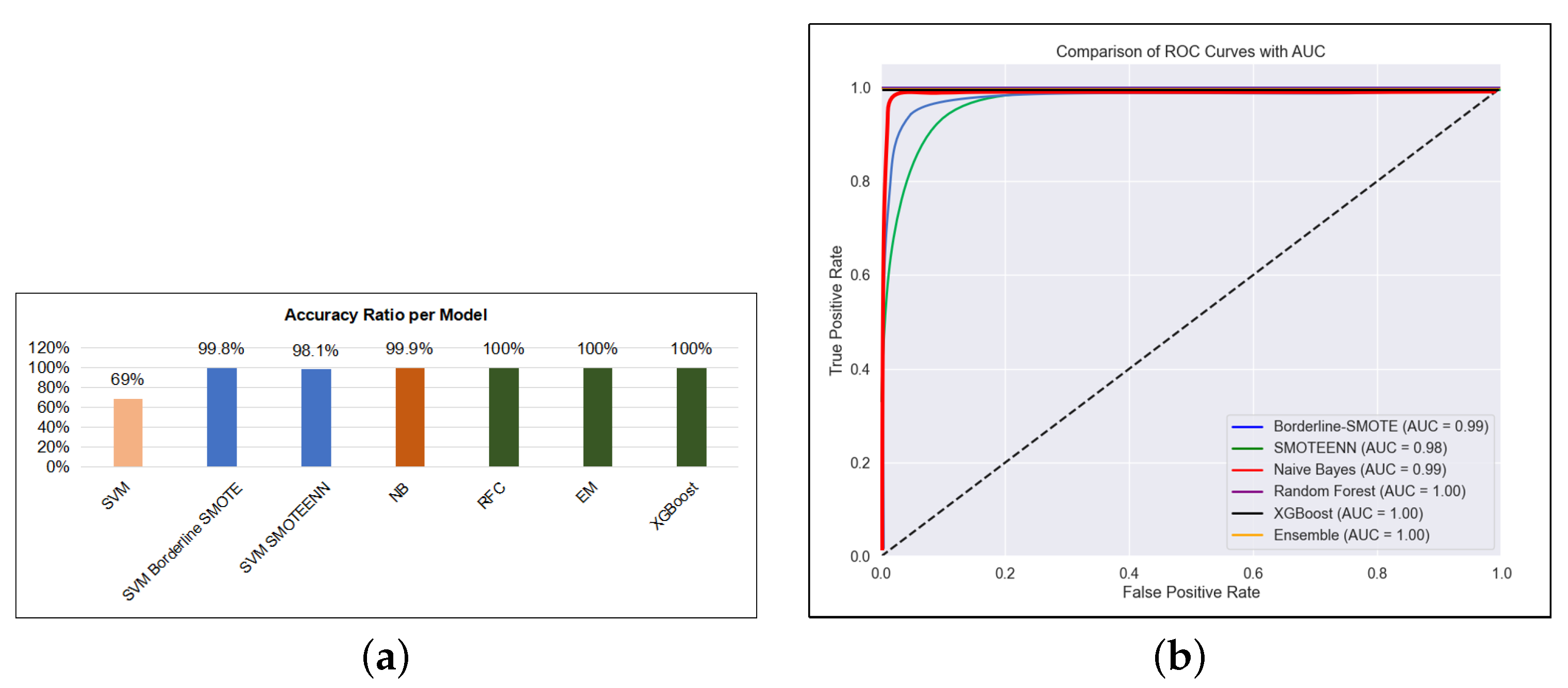

Table 9). In particular, the recall for SS rose from 42% in the baseline to 100%, eliminating the prior detection gap for emerging threats. The confusion matrix (

Figure 6) and ROCs (

Figure 7) support this, showing minimal misclassification and perfect AUCs (

Table 9).

However, such high performance raises concerns about possible overfitting due to the synthetic nature of the training data. Because borderline-SMOTE constructs new data points from existing borderline instances, it may inadvertently introduce redundancy or amplify noise, especially if the original minority class was poorly sampled. Thus, while the results are promising, further evaluation is necessary to validate the model’s generalisation capability (Figure 8).
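The borderline-SMOTE step and the calibrated hinge-loss classifier can be sketched as follows. The oversampler is a simplified NumPy re-implementation of the Borderline-SMOTE-1 idea: only minority points whose neighbourhoods are dominated, but not entirely occupied, by other classes are used as interpolation seeds. In practice one would more likely use `BorderlineSMOTE` from the imbalanced-learn package. The data, the neighbourhood sizes (`m=10`, `k=5`), and the number of synthetic samples are assumptions.

```python
import numpy as np
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier
from sklearn.neighbors import NearestNeighbors


def borderline_smote(X, y, minority, n_new, m=10, k=5, seed=0):
    """Borderline-SMOTE-1 sketch: synthesise minority samples only from 'danger' points."""
    rng = np.random.default_rng(seed)
    X_min = X[y == minority]
    # Step 1: count other-class samples among each minority point's m nearest neighbours.
    idx = NearestNeighbors(n_neighbors=m + 1).fit(X).kneighbors(X_min, return_distance=False)[:, 1:]
    n_other = (y[idx] != minority).sum(axis=1)
    # Danger: at least half the neighbours belong to other classes, but not all (those count as noise).
    danger = X_min[(n_other >= m / 2) & (n_other < m)]
    if len(danger) == 0:
        return X, y
    # Step 2: interpolate between danger points and randomly chosen minority neighbours.
    nbrs = NearestNeighbors(n_neighbors=k + 1).fit(X_min).kneighbors(danger, return_distance=False)[:, 1:]
    base = rng.integers(0, len(danger), size=n_new)
    partner = X_min[nbrs[base, rng.integers(0, k, size=n_new)]]
    synthetic = danger[base] + rng.random((n_new, 1)) * (partner - danger[base])
    return np.vstack([X, synthetic]), np.concatenate([y, np.full(n_new, minority)])


X, y = make_classification(n_samples=2000, n_features=8, n_informative=4, n_classes=3,
                           weights=[0.47, 0.47, 0.06], class_sep=0.8, random_state=0)
X_res, y_res = borderline_smote(X, y, minority=2, n_new=600)

# Hinge-loss SGD approximates a linear SVM; calibration adds predict_proba on top of its scores.
model = CalibratedClassifierCV(SGDClassifier(loss="hinge", random_state=0), cv=3)
model.fit(X_res, y_res)
proba = model.predict_proba(X[:5])
print(np.bincount(y), "->", np.bincount(y_res))
```

In a full experiment, the oversampler would be applied only to the training partition, after the train/test split, so that no synthetic point is derived from a test sample.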

3. SVM with SMOTEENN

To further address class imbalance, the model incorporates SMOTEENN, which combines K-NN-based synthetic minority oversampling with edited nearest neighbours (ENN) to remove noisy majority samples. This hybrid approach is implemented using a

SGDClassifier with a

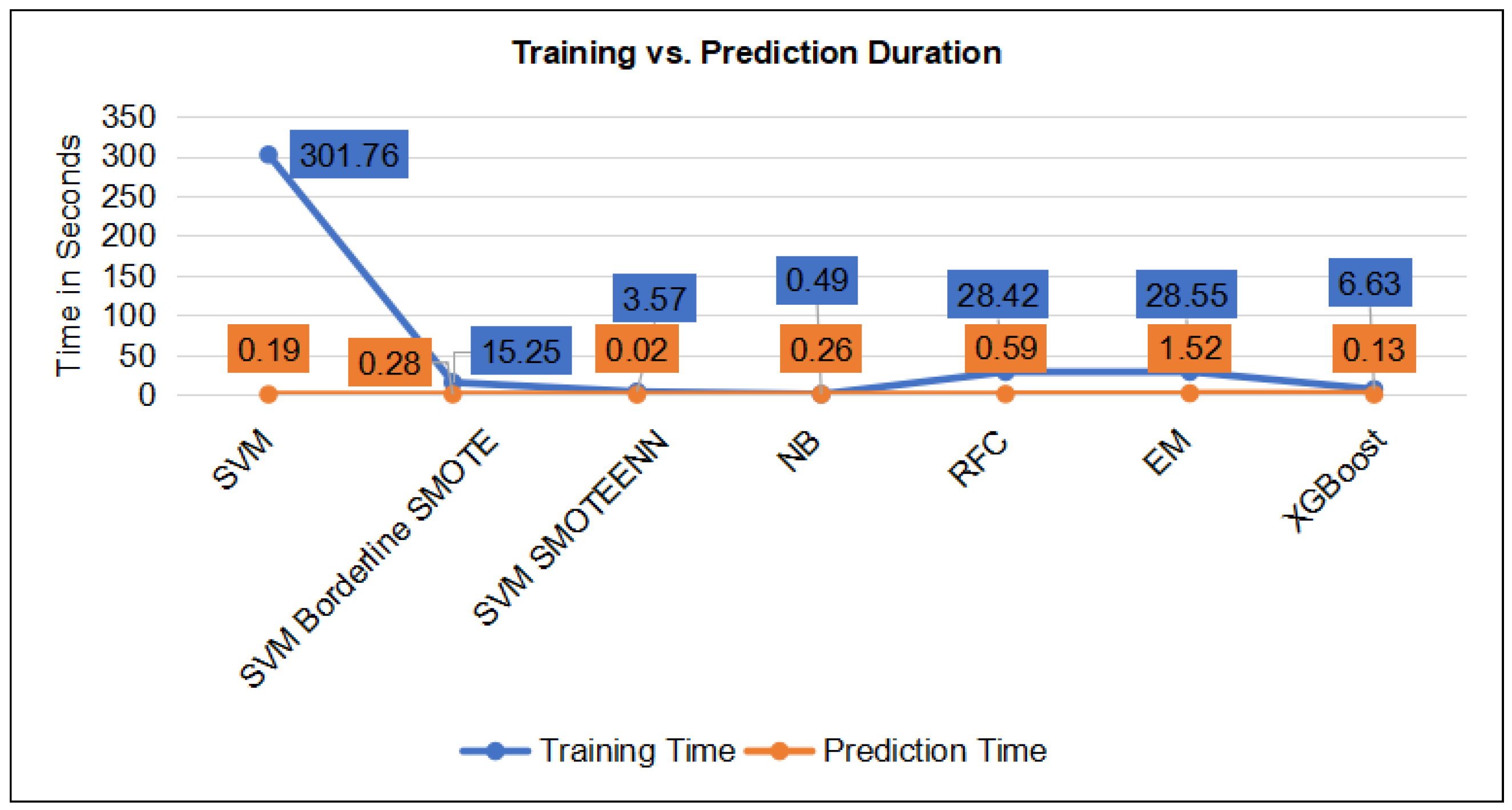

StandardScaler, offering efficient linear approximation and stable convergence. The model trained in 3.76 s and made predictions in 0.05 s (

Table 10). While overall classification metrics are high, the SS class often linked to zero-day or synthetic threats exhibited a slight drop in recall, suggesting residual difficulty in modeling its overlapping patterns. This limitation is visualised in the confusion matrix (

Figure 6), though the ROCs remain strong with AUCs of 1.0 across all classes (

Figure 9). Despite the high discriminative power, time complexity remains a critical consideration. As shown in

Figure 8, even small delays in training or inference can reduce a model’s effectiveness in fast-evolving threat environments. Models that require longer training cycles may struggle to adapt to rapidly changing attack vectors, such as zero-day exploits, which often demand continuous learning on large data streams.
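SMOTEENN can likewise be sketched in two stages: plain SMOTE interpolation for the minority class, followed by ENN cleaning. In this simplified version, ENN drops any sample whose label disagrees with the majority of its three nearest neighbours and is applied to all classes (imbalanced-learn's `SMOTEENN` also cleans all classes by default, although its ENN default keeps a sample only when all neighbours agree, which is stricter than the majority rule used here). The classifier is an `SGDClassifier` (default hinge loss) behind a `StandardScaler`, as described above; data and parameters are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier
from sklearn.neighbors import NearestNeighbors
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def smote(X, y, minority, n_new, k=5, seed=0):
    """Plain SMOTE: interpolate between random minority points and their k nearest minority neighbours."""
    rng = np.random.default_rng(seed)
    X_min = X[y == minority]
    nbrs = NearestNeighbors(n_neighbors=k + 1).fit(X_min).kneighbors(X_min, return_distance=False)[:, 1:]
    base = rng.integers(0, len(X_min), size=n_new)
    partner = X_min[nbrs[base, rng.integers(0, k, size=n_new)]]
    synthetic = X_min[base] + rng.random((n_new, 1)) * (partner - X_min[base])
    return np.vstack([X, synthetic]), np.concatenate([y, np.full(n_new, minority)])


def edited_nearest_neighbours(X, y, k=3):
    """ENN cleaning: keep a sample only if most of its k nearest neighbours share its label."""
    idx = NearestNeighbors(n_neighbors=k + 1).fit(X).kneighbors(X, return_distance=False)[:, 1:]
    keep = (y[idx] == y[:, None]).sum(axis=1) > k / 2
    return X[keep], y[keep]


X, y = make_classification(n_samples=2000, n_features=8, n_informative=4, n_classes=3,
                           weights=[0.47, 0.47, 0.06], random_state=1)
X_res, y_res = smote(X, y, minority=2, n_new=700)
X_res, y_res = edited_nearest_neighbours(X_res, y_res)

clf = make_pipeline(StandardScaler(), SGDClassifier(random_state=0))  # hinge loss by default
clf.fit(X_res, y_res)
print(np.bincount(y), "->", np.bincount(y_res))
```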

Similarly, slower prediction times can hinder real-time intrusion detection, reducing the system’s ability to contain threats before lateral movement occurs.
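Timing figures such as the 3.76 s training and 0.05 s prediction times reported above depend on hardware and on how they are measured. One simple protocol, assumed here rather than taken from the study, is sketched below: wall-clock timing with `time.perf_counter`, repeated a few times, keeping the best run to reduce interference from other processes. The models and data are placeholders.

```python
import time

from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier
from sklearn.naive_bayes import GaussianNB


def time_fit_predict(model, X_train, y_train, X_test, repeats=3):
    """Return the best-of-`repeats` wall-clock times (seconds) for fit and predict."""
    fit_times, predict_times = [], []
    for _ in range(repeats):
        start = time.perf_counter()
        model.fit(X_train, y_train)
        fit_times.append(time.perf_counter() - start)
        start = time.perf_counter()
        model.predict(X_test)
        predict_times.append(time.perf_counter() - start)
    return min(fit_times), min(predict_times)


X, y = make_classification(n_samples=20_000, n_features=10, n_classes=3,
                           n_informative=5, random_state=0)
X_train, X_test, y_train = X[:16_000], X[16_000:], y[:16_000]

models = {"NB": GaussianNB(), "SGD-SVM": SGDClassifier(random_state=0)}
timings = {name: time_fit_predict(m, X_train, y_train, X_test) for name, m in models.items()}
for name, (fit_s, pred_s) in timings.items():
    print(f"{name}: train {fit_s:.3f} s, predict {pred_s:.4f} s")
```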

Table 11 summarises the comparative performance, with ensemble learning methods standing out as the most robust approach, achieving perfect precision, recall, and F1-scores across all attack categories (Figure 9a,b).

The XGBoost model stands out as the most effective and practical choice for zero-day attack detection (Figure 9), delivering perfect accuracy, precision, recall, and F1-score alongside efficient training (6.63 s) and prediction (0.13 s) times. While the RFC and ensemble models also achieved flawless detection rates, their comparatively longer training and inference times (Figure 8) may hinder timely deployment in fast-evolving threat environments. The NB model offers rapid processing and high overall accuracy (99%), but its lower recall on zero-day anomalies risks missing critical attacks, potentially increasing system vulnerability. The baseline SVM, though initially underperforming with 64% accuracy and high training overhead, benefited from SMOTE augmentation, which improved accuracy to over 98% but introduced false positives in non-zero-day classes that could increase operational costs. These findings demonstrate the importance of balancing detection performance with computational efficiency and false alarm rates. By maintaining this balance, XGBoost emerges as the most reliable solution, providing prompt, accurate detection of zero-day exploits while minimising resource consumption and false alerts (Figure 9).

4.1. Threat Classification Divergence and Model Behaviour

The experiment reveals notable divergence in classification outcomes, stemming from the fundamentally different feature representations produced by SMOTE-based oversampling methods and quantum kernels. While both approaches seek to improve performance under class imbalance, they influence decision boundaries through distinct mechanisms. Quantum kernels, utilised in QSVMs, map data into higher-dimensional spaces where complex, nonlinear relationships, particularly in densely populated regions, can be better captured to improve generalisation. However, this can also shift class boundaries in unexpected ways [70], as evidenced by instances where samples originally classified as anomalies (A) are reassigned to the signature-based (S) class. Conversely, oversampling methods such as borderline-SMOTE and SMOTEENN explicitly rebalance the dataset by generating synthetic minority samples, directly reshaping the classifier’s decision boundaries toward underrepresented threat classes. This fundamental difference explains why classical and quantum-enhanced models interpret borderline regions differently, particularly in the imbalanced UGRansome dataset with its three distinct threat classes. These divergences are critical because they affect the model’s ability to differentiate subtle threat behaviours, which is essential for reliable zero-day attack detection. Understanding these shifts highlights the importance of evaluating per-class confidence levels and applying model calibration techniques to mitigate misclassification risks. Thus, this analysis not only contextualises the performance differences observed across models but also motivates further investigation of their interpretability and operational reliability in various cybersecurity environments.
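The A-to-S reassignments described above can be quantified by cross-tabulating the predictions of two models on the same test samples, and the per-class confidence check can be computed from predicted probabilities. Both helpers below (`reassignment_matrix`, `mean_confidence_per_class`) and the example predictions and probabilities are hypothetical.

```python
import numpy as np

CLASSES = ["A", "S", "SS"]


def reassignment_matrix(pred_before, pred_after, classes=CLASSES):
    """Entry [i, j] counts samples predicted as classes[i] by the first model and
    classes[j] by the second; off-diagonal cells are reassignments."""
    index = {c: k for k, c in enumerate(classes)}
    counts = np.zeros((len(classes), len(classes)), dtype=int)
    for before, after in zip(pred_before, pred_after):
        counts[index[before], index[after]] += 1
    return counts


def mean_confidence_per_class(proba, classes=CLASSES):
    """Average top-class probability, grouped by the predicted class (absent classes are skipped)."""
    predicted = np.argmax(proba, axis=1)
    top = proba.max(axis=1)
    return {c: float(top[predicted == k].mean()) for k, c in enumerate(classes) if np.any(predicted == k)}


# Hypothetical predictions from a SMOTE-trained classical SVM and a QSVM on the same six samples.
classical = ["A", "A", "S", "SS", "A", "S"]
quantum = ["A", "S", "S", "SS", "S", "S"]
M = reassignment_matrix(classical, quantum)
print(M)
print("A -> S reassignments:", M[0, 1])

proba = np.array([[0.9, 0.05, 0.05], [0.2, 0.7, 0.1], [0.6, 0.3, 0.1]])
print(mean_confidence_per_class(proba))
```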

4.2. QSVM Evaluation

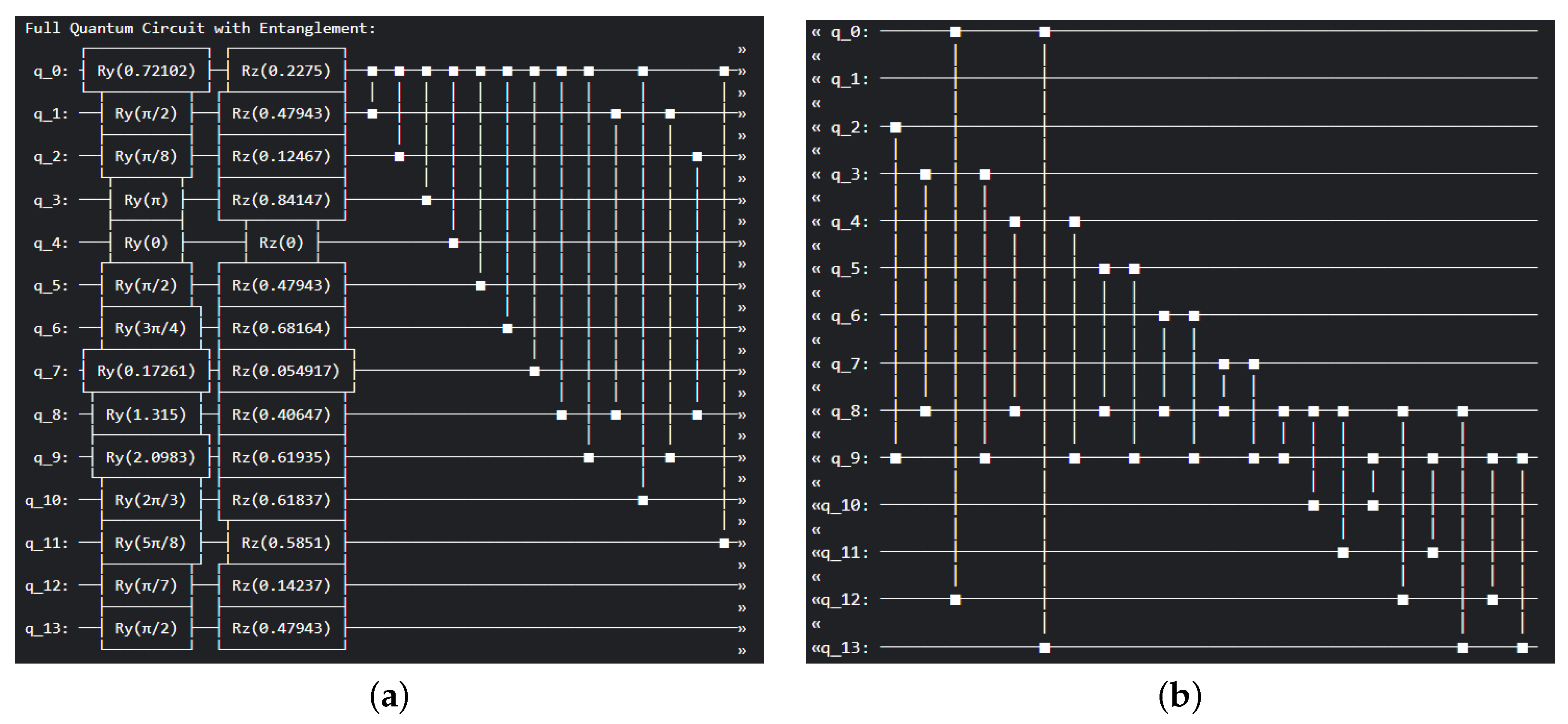

Building on the observed divergence in threat classification behaviour, the QSVM model was implemented using Qiskit and integrated with scikit-learn to enable hybrid quantum-classical learning [71]. To investigate how quantum feature encoding affects decision boundaries, the model employed the ZZFeatureMap with sparse entanglement and two repetitions, selected for its ability to map nonlinear relationships in the input space [71,72,73]. Both three-qubit and four-qubit circuits were evaluated to assess how increasing quantum state dimensionality affects the model’s capacity to differentiate between threat behaviours (Table 12). This setup provides insight into how quantum-enhanced kernels influence class separability, especially in the borderline or imbalanced regions identified in earlier comparisons. To further strengthen the performance results and explain model behaviour, multiple kernel types were tested, including linear, polynomial, and RBF kernels for classical SVMs, and compared against the quantum kernels used in the QSVM implementation.
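As an illustration of what the quantum kernel computes, the following pure-Python sketch builds a ZZFeatureMap-style state and evaluates the fidelity kernel k(x, y) = |⟨φ(x)|φ(y)⟩|². It follows the gate pattern of Qiskit’s default ZZFeatureMap as we understand it (a Hadamard layer, single-qubit phases 2x_i, and CX-P-CX blocks with angle 2(π − x_i)(π − x_j)), simulated directly on a statevector. The entangling pair list is a hypothetical stand-in for the “sparse entanglement” used in this study, whose exact topology is not specified here; the helper names are likewise illustrative, and in practice Qiskit itself would be used.

```python
import cmath
import math

def hadamard_all(state, n):
    """Apply a Hadamard gate to each of the n qubits of a statevector."""
    s = list(state)
    h = 1 / math.sqrt(2)
    for q in range(n):
        bit = 1 << q
        for idx in range(len(s)):
            if not idx & bit:  # pair |..0..> with |..1..> on qubit q
                a, b = s[idx], s[idx | bit]
                s[idx], s[idx | bit] = h * (a + b), h * (a - b)
    return s

def zz_feature_state(x, pairs, reps=2):
    """Statevector of a ZZFeatureMap-style encoding of the feature vector x.

    Each repetition applies H to all qubits, then the diagonal phase
    2*x_i*z_i (P gates) plus 2*(pi - x_i)*(pi - x_j)*(z_i XOR z_j)
    (CX-P-CX blocks) for every entangled pair (i, j) to basis state |z>.
    """
    n = len(x)
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j  # start in |0...0>
    for _ in range(reps):
        state = hadamard_all(state, n)
        for z in range(2 ** n):
            bits = [(z >> q) & 1 for q in range(n)]
            phase = sum(2 * x[q] * bits[q] for q in range(n))
            phase += sum(
                2 * (math.pi - x[i]) * (math.pi - x[j]) * (bits[i] ^ bits[j])
                for i, j in pairs
            )
            state[z] *= cmath.exp(1j * phase)
    return state

def fidelity_kernel(x, y, pairs, reps=2):
    """Quantum kernel value k(x, y) = |<phi(x)|phi(y)>|^2."""
    sx = zz_feature_state(x, pairs, reps)
    sy = zz_feature_state(y, pairs, reps)
    overlap = sum(a.conjugate() * b for a, b in zip(sx, sy))
    return abs(overlap) ** 2

# Hypothetical "sparse" topology: a linear chain over three qubits.
pairs_3q = [(0, 1), (1, 2)]
k_xy = fidelity_kernel([0.1, 0.5, 0.9], [0.3, 0.2, 0.7], pairs_3q)
```

An n-qubit state has 2^n amplitudes, so moving from three to four qubits doubles the embedding dimension from 8 to 16. A Gram matrix of such kernel values can then be passed to a classical SVM as a precomputed kernel (for example, scikit-learn’s `SVC(kernel="precomputed")`).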

The quantum experiments were conducted using the aer_simulator_statevector backend with 1024 shots, offering a high-fidelity, noise-free environment to emulate quantum state evolution. Although real quantum hardware such as ibm_nairobi (a superconducting qubit processor) was considered [72], it was not employed due to practical constraints such as gate errors, decoherence, and execution latency, which can undermine result consistency.

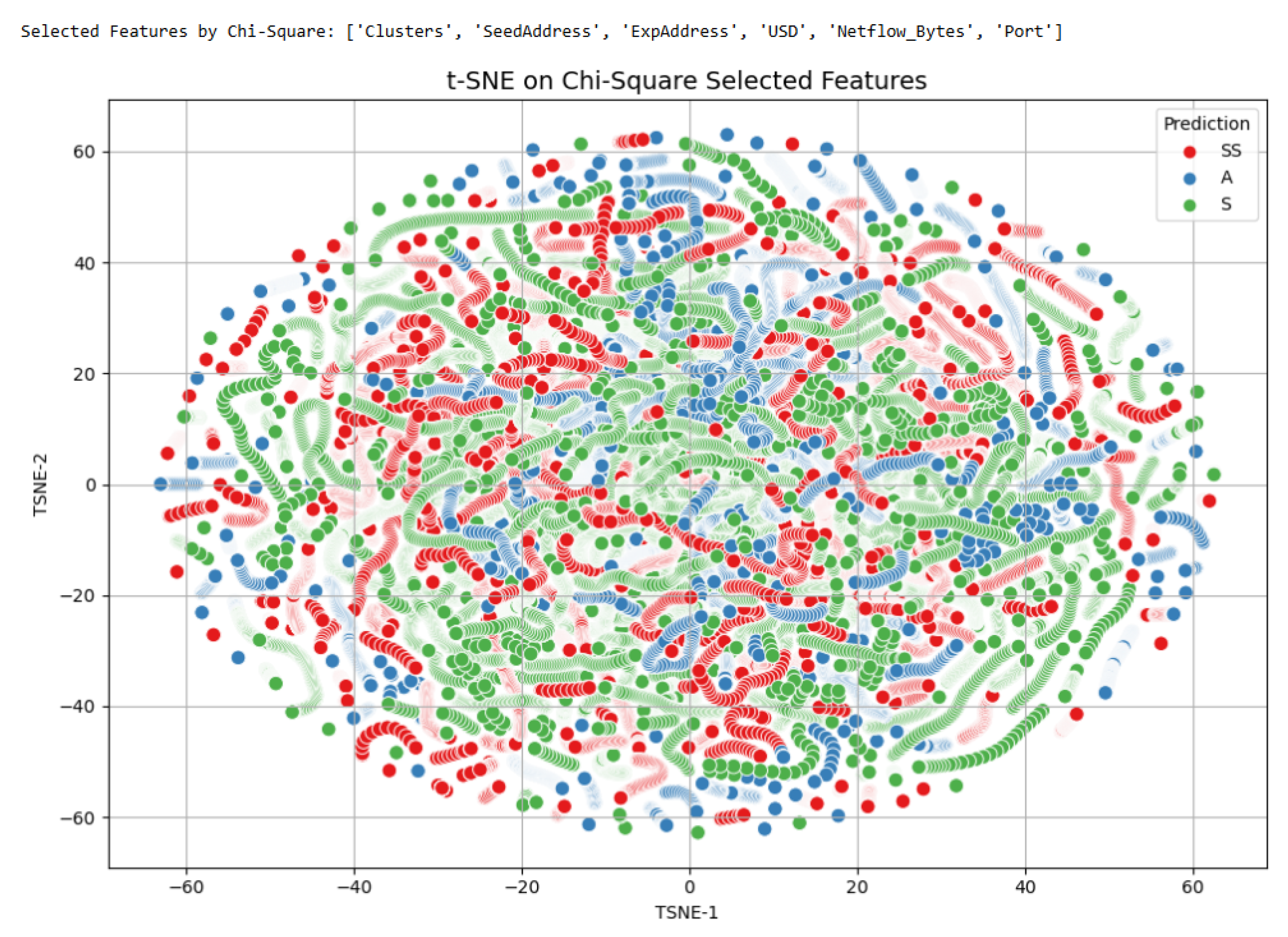

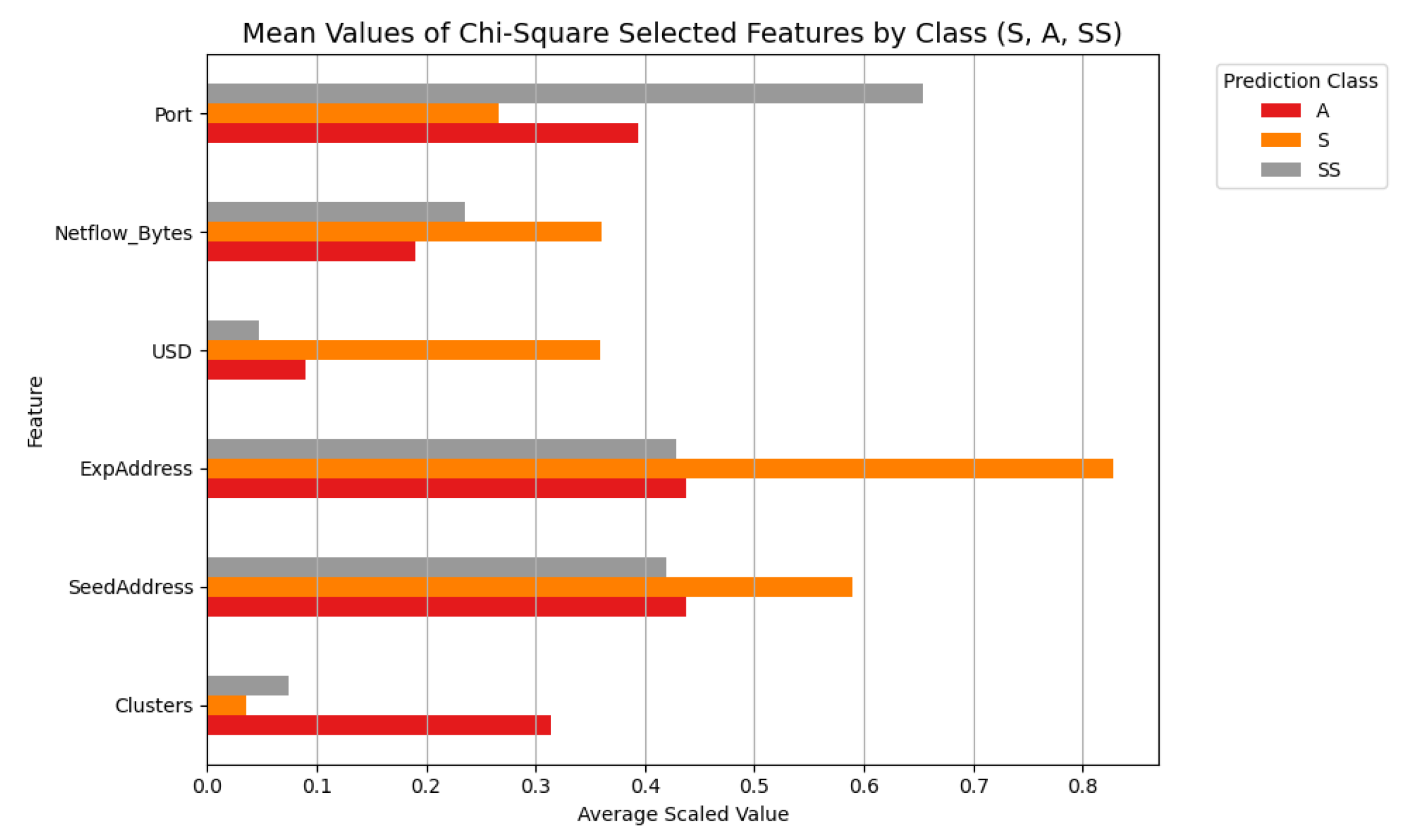

The simulator-based setup thus ensured reproducibility and eliminated performance variance caused by hardware noise [73], allowing a focused evaluation of the QSVM’s structural advantages. Feature selection was conducted using SelectKBest with the Chi-Squared (χ²) statistic, retaining the six most discriminative features following MinMaxScaler normalisation. The QSVM model consistently outperformed its classical counterparts in both three-qubit and four-qubit configurations, as shown in Table 12, reinforcing the positive impact of the QFM on classification boundaries discussed by Sihare [74]. The QSVM exhibited stronger generalisation to minority classes, particularly the anomaly (A) class representing zero-day threats. High recall on this class indicates robust detection of true positives, which is critical for early response in dynamic threat environments. Concurrently, high precision reflects the model’s ability to reduce false alarms, enhancing trust and operational feasibility. These results support the effectiveness of quantum-enhanced learning under realistic class imbalance and the case for integrating quantum models into threat classification pipelines [75].

The experiment reveals a clear trend: increasing the number of qubits from three to four enhances the QSVM’s ability to capture complex patterns associated with zero-day attacks (Table 12). Specifically, the four-qubit model improves recall for the anomaly class from 99.61% to 99.89%, with a corresponding gain in F1-score and overall accuracy. We attribute this gain to the increased representational capacity of the QFM: each additional qubit doubles the dimension of the Hilbert space in which data is embedded (from 8 to 16 amplitudes here), enabling the model to construct more expressive, nonlinear decision boundaries. Such expressiveness is particularly important for detecting zero-day threats, which often deviate from known patterns. This improvement is consistent with a central hypothesis of QML: higher-dimensional quantum encodings yield richer, more discriminative kernel spaces, thereby improving generalisation to previously unseen data. The gain in recall does not come at the cost of precision, indicating that the QSVM keeps false positives low even as its sensitivity increases. This balance is essential in security applications, where false negatives may allow critical threats to go undetected. Further supporting this conclusion, Table 13 shows that the QSVM achieves high recall for high-risk attack classes such as APT (0.9989) and nerisBotnet (0.9831), with correspondingly high precision and F1-scores. These findings indicate the model’s ability to generalise across diverse threat profiles, supporting its potential utility in real-world cybersecurity environments. Overall, the results suggest that scaling the quantum kernel through increased qubit encoding translates into measurable improvements in zero-day exploit detection, positioning the QSVM as a promising tool for proactive and resilient cyber defence systems.
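The feature selection step described in this subsection (MinMaxScaler normalisation followed by SelectKBest with the χ² statistic) can be sketched in plain Python as below. The χ² score here mirrors the formulation used by scikit-learn’s `chi2` as we understand it (observed per-class feature sums compared with class frequency times the feature total), which is why features must be non-negative and why min-max scaling is applied first. Function names and the toy data are hypothetical; in practice scikit-learn’s `MinMaxScaler` and `SelectKBest(chi2, k=6)` would be used.

```python
def min_max_scale(X):
    """Rescale each column of X (list of rows) to [0, 1]; constant columns become 0."""
    cols = list(zip(*X))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [
        [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
        for row in X
    ]

def chi2_scores(X, y):
    """Chi-squared score per non-negative feature against class labels y.

    observed = sum of the feature within each class;
    expected = (class frequency) * (feature total over all samples).
    """
    n = len(y)
    classes = sorted(set(y))
    scores = []
    for f in range(len(X[0])):
        total = sum(row[f] for row in X)
        score = 0.0
        for c in classes:
            observed = sum(row[f] for row, label in zip(X, y) if label == c)
            expected = total * y.count(c) / n
            if expected > 0:  # all-zero feature: contributes nothing
                score += (observed - expected) ** 2 / expected
        scores.append(score)
    return scores

def select_k_best(X, y, k):
    """Indices of the k highest-scoring features, best first."""
    scores = chi2_scores(X, y)
    return sorted(range(len(scores)), key=lambda f: scores[f], reverse=True)[:k]

# Toy data: feature 0 separates the classes, feature 1 is constant.
X = [[1, 5, 3], [2, 5, 1], [9, 5, 2], [10, 5, 3]]
y = [0, 0, 1, 1]
selected = select_k_best(min_max_scale(X), y, k=2)
```

Being univariate, this score judges each feature in isolation, which matches the limitation noted later that χ² selection may overlook feature interactions.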

4.3. Impact of Quantum Feature Mapping

Beyond the performance gains attributed to increased qubit capacity, the choice of QFM plays a central role in shaping the QSVM’s effectiveness. As shown in Table 13, the model consistently achieves high recall across diverse attack types, including evasive threats such as APT, cryptohitman, and nerisBotnet, highlighting its capacity to minimise false negatives, a critical requirement in cyber threat intelligence. This sensitivity, however, comes with slightly reduced precision in certain categories (e.g., blacklist: 93.11%; exploratory domain attack (EDA): 92.07%), reflecting a modest increase in false positives. Such a trade-off is common in security-focused applications, where failing to detect an attack is more costly than triggering a false alarm. In the proposed framework, this balance is implicitly governed by hyperparameter tuning and by the structure of the QFM, which determines the expressivity of the induced kernel space. The proposed QFMs embed classical data in a way that allows nonlinear patterns to be separated. A more expressive map tends to improve recall by capturing subtle feature relationships but may also increase boundary overlap, thereby lowering precision. Conversely, simpler mappings reduce the risk of overfitting and false positives. In our configuration, the selected QFM strikes an effective balance, enhancing detection across threat classes while maintaining F1-scores above 0.93. Moreover, circuit design choices, such as the entanglement strategy, repetition depth, and number of qubits, interact with the QFM to fine-tune this trade-off, as recommended by Wang [76]. Adapting the quantum circuit to the characteristics of specific threat landscapes is therefore essential for achieving optimal detection performance in real-world scenarios.
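The precision/recall trade-off discussed above is summarised per class by the F1-score, F1 = 2PR / (P + R). A minimal sketch of the one-vs-rest computation follows; the function name and toy labels are hypothetical and do not reproduce the study’s data.

```python
def per_class_metrics(y_true, y_pred, label):
    """One-vs-rest precision, recall and F1 for a single class label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)

# Toy labels: one S-type sample is flagged as A-type (a false positive for A).
y_true = ["A", "A", "A", "A", "S", "S"]
y_pred = ["A", "A", "A", "A", "A", "S"]
p_a, r_a, f1_a = per_class_metrics(y_true, y_pred, "A")
```

In this toy example the single misclassified S sample lowers precision for class A to 0.8 while recall stays at 1.0, giving F1 ≈ 0.889; this is the same recall-favouring pattern described for classes such as blacklist and EDA.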

4.4. Discussion

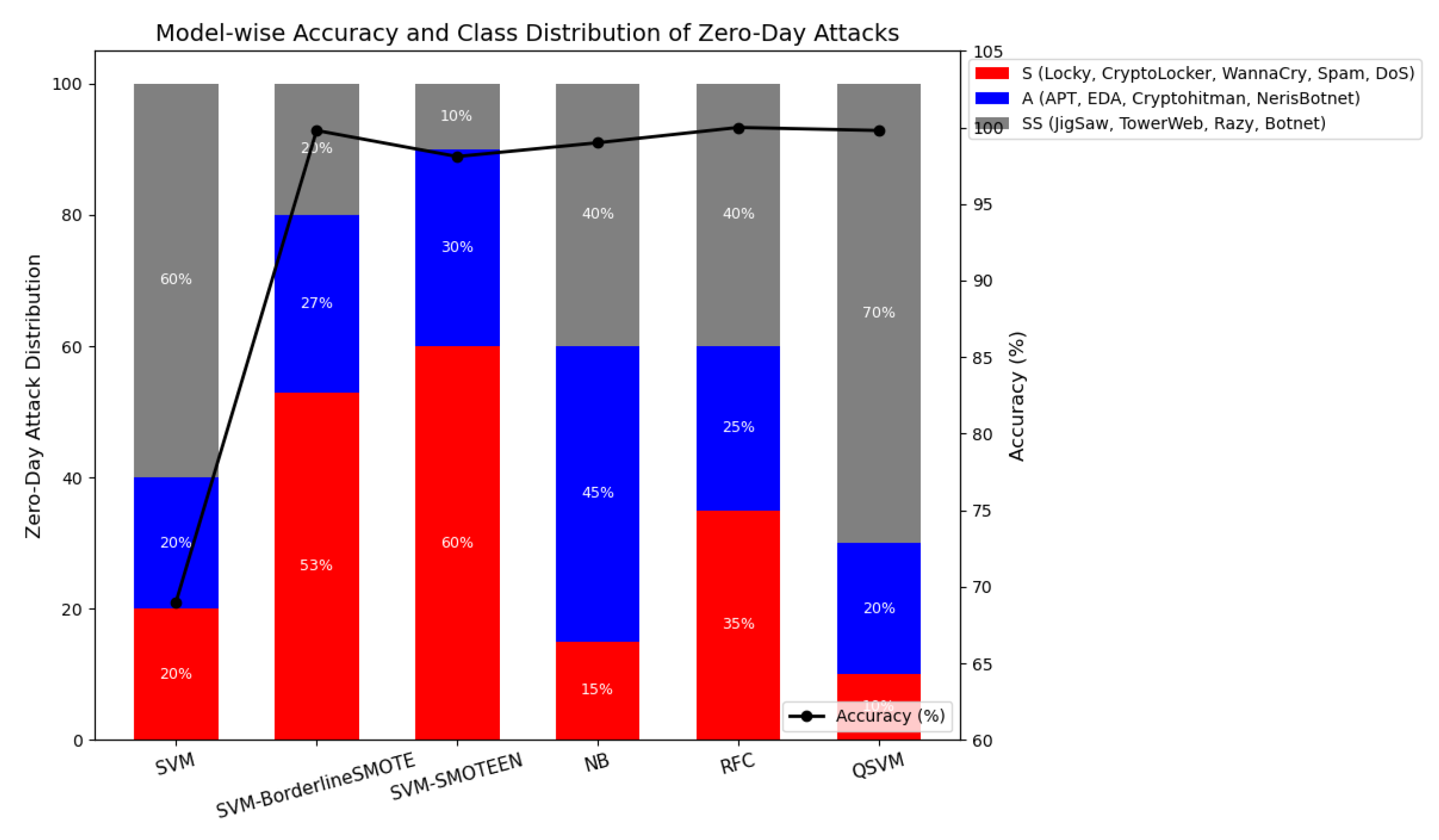

The experimental results presented in Figure 10 provide insight into the performance of various models for detecting zero-day threats, categorised into three primary classes: A-type (e.g., APT, cryptohitman, nerisBotnet), S-type (e.g., locky, cryptoLocker, wannaCry, spam, DoS), and SS-type (e.g., jigSaw, towerWeb, razy, botnet). Among the evaluated models, the QSVM achieved an accuracy of 99.8%, with 70% of its predictions falling in the SS class. This suggests that quantum kernels are well suited to capturing complex and latent relationships, particularly those present in polymorphic attack vectors. However, the QSVM exhibited limited sensitivity to S-type threats, with only 10% of its predictions in that category. This gap may reflect a bias of the quantum model toward novel or entangled patterns. In contrast, the RFC achieved an accuracy of 100%, with a well-balanced detection profile across classes: 35% S-type, 25% A-type, and 40% SS-type. The ensemble architecture of the RFC enables it to generalise across diverse attack landscapes, making it particularly effective in heterogeneous environments. The NB classifier attained 99% accuracy but exhibited a skewed detection preference, capturing 45% A-type threats, which suggests potential overfitting to structured attack patterns, while underperforming in the S-type category (15%).

SVMs enhanced with data-balancing strategies showed varied behaviour. The SVM augmented with borderline-SMOTE delivered a strong accuracy of 99.8%, achieving the most balanced detection across classes: 53% S-type, 27% A-type, and 20% SS-type. This outcome highlights the effectiveness of focusing synthetic samples near decision boundaries to mitigate class imbalance and improve generalisability. Conversely, the SVM using SMOTEENN reached 98.1% accuracy but skewed heavily toward S-type threats (60%), likely due to the inclusion of noisy examples during oversampling, which may distort decision boundaries. The baseline SVM, with no resampling, yielded the lowest performance (69% accuracy) and uniform class distributions, reinforcing the importance of preprocessing and imbalance mitigation in zero-day modeling. Overall, the results demonstrate that the QSVM and RFC are both highly effective in detecting emerging SS and A-type threats, while the SVM with borderline-SMOTE offers the most balanced performance across all categories, making it a compelling option for real-world zero-day detection scenarios.
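Borderline-SMOTE differs from plain SMOTE in which minority samples it oversamples. Under the usual formulation, a minority sample is placed in a “danger” set when at least half, but not all, of its m nearest neighbours (searched over the whole training set) belong to other classes; samples whose neighbours are all from other classes are treated as noise, and those surrounded mostly by their own class as safe. Synthetic points are then interpolated only from the danger set. The sketch below shows just this selection step, with hypothetical names and a Euclidean metric; libraries such as imbalanced-learn provide complete `BorderlineSMOTE` and `SMOTEENN` implementations.

```python
import math

def danger_set(X, y, minority_label, m=3):
    """Indices of minority samples in the borderline-SMOTE 'danger' set.

    A minority sample is 'danger' when at least half, but not all, of its m
    nearest neighbours (searched over the whole training set) are non-minority.
    All-majority neighbourhoods are treated as noise and are skipped.
    """
    danger = []
    for i, (xi, yi) in enumerate(zip(X, y)):
        if yi != minority_label:
            continue
        neighbours = sorted(
            (j for j in range(len(X)) if j != i),
            key=lambda j: math.dist(xi, X[j]),
        )[:m]
        n_other = sum(1 for j in neighbours if y[j] != minority_label)
        if m / 2 <= n_other < m:
            danger.append(i)
    return danger

X = [(0, 0), (0, 1), (1, 0), (1, 1),   # majority cluster
     (1.5, 0.5), (2, 0.5),             # minority points near the boundary
     (5, 5), (5, 6), (6, 5)]           # minority points far from it
y = [0, 0, 0, 0, 1, 1, 1, 1, 1]
borderline = danger_set(X, y, minority_label=1)
```

Only the two minority points adjacent to the majority cluster are selected, so synthetic samples concentrate near the decision boundary, which is the behaviour credited above for the balanced results of the borderline-SMOTE SVM.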

4.5. Discussion on Perfect Performance Results

While several models, including ensembles such as XGBoost, achieved perfect accuracy on the evaluated dataset, such results are uncommon in real-world cybersecurity scenarios and warrant cautious interpretation. To mitigate the risks of overfitting and data leakage, we adhered to rigorous evaluation protocols involving clean dataset splits, stratified cross-validation, statistical significance testing, and comprehensive preprocessing to prevent label leakage or sample duplication [77]. Nonetheless, these exceptional scores may partly reflect dataset-specific factors, including the limited diversity of zero-day variants and the use of synthetic balancing techniques that can simplify classification. Given the dynamic and evolving nature of zero-day attacks, which often present unpredictable patterns, ongoing validation through real-time deployment and adaptive learning is essential to assess the true operational robustness of these models. Among the approaches reviewed, ensemble learning methods demonstrate superior performance, particularly in terms of classification accuracy (Figure 11). Models such as ETC, XGBoost, and RF achieve high detection rates, though few of these studies assess zero-day attack scenarios. Additionally, quantum-based models such as QSVM and QuantumNetSec show promising results but face practical limitations (Table 14), including restricted qubit counts and the absence of real-time or explainable outputs.
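One safeguard mentioned above, stratified cross-validation with any resampling confined to the training folds, can be sketched as follows. Indices are assigned round-robin within each class so that every fold preserves the class proportions, and an oversampler would be fitted on the training indices only. This is a hypothetical illustration, not the study’s evaluation code; scikit-learn’s `StratifiedKFold` provides the same kind of splitting in practice.

```python
import random
from collections import defaultdict

def stratified_folds(y, k=5, seed=0):
    """Split sample indices into k folds that preserve class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(y):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)
        for pos, idx in enumerate(members):
            folds[pos % k].append(idx)  # round-robin within each class
    return folds

def train_test_splits(y, k=5, seed=0):
    """Yield (train, test) index lists; oversampling must use train only."""
    folds = stratified_folds(y, k, seed)
    for f, test_idx in enumerate(folds):
        train_idx = [i for g, fold in enumerate(folds) if g != f for i in fold]
        # A resampler such as SMOTE would be fitted on train_idx here,
        # never on test_idx, so no synthetic point leaks into evaluation.
        yield train_idx, test_idx

labels = ["A"] * 10 + ["S"] * 20
splits = list(train_test_splits(labels, k=5))
```

With 10 A-type and 20 S-type labels, each test fold receives 2 and 4 samples respectively, so the 1:2 class ratio is preserved in every evaluation split.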

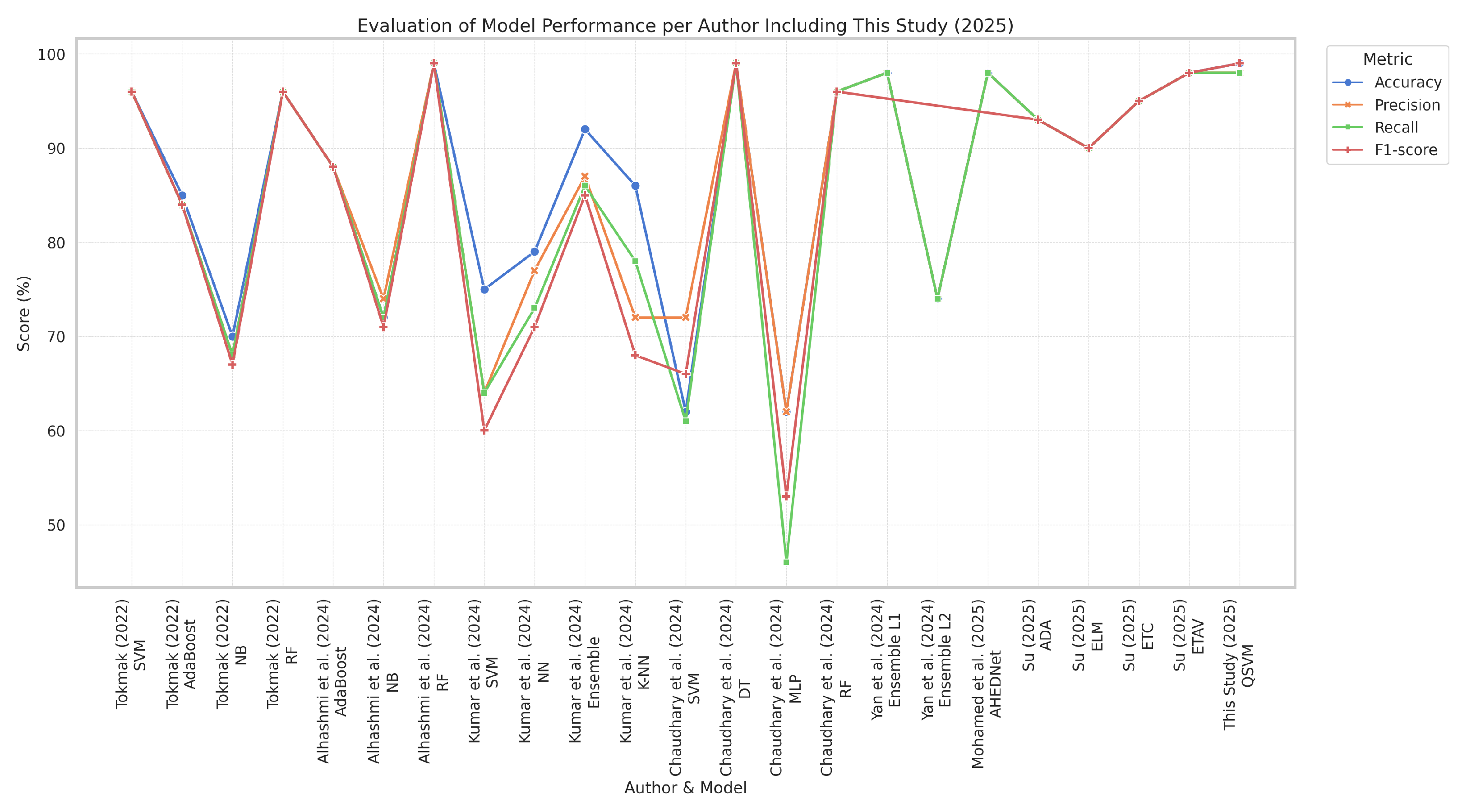

4.6. Comparison with Existing Studies

As shown in Table 14, the majority of studies utilising the UGRansome dataset for IDSs do not implement QML for zero-day exploit detection (Figure 11).

Figure 11 shows the proposed QSVM outperforming several classical methods evaluated in prior studies (Table 14). With accuracy and F1-scores of 99%, our QSVM demonstrated superior detection capabilities, particularly for zero-day attacks. Compared to models such as AdaBoost (85–93%), NB (68–74%), and conventional SVM (62–96%), the proposed QSVM showed higher precision and recall. These gains reflect the model’s enhanced ability to generalise and to capture the complex, high-dimensional data structures typical of novel threat patterns. The improvement in F1-score indicates a balanced optimisation of precision and recall, which is critical for minimising both false positives and false negatives in IDSs. While QML and data-balancing techniques such as SMOTE are established in isolation, this study goes beyond a mere aggregation of known methods. We propose a novel, modular QSVM encoding framework grounded in information theory that introduces nonlinear, learnable QFMs that adapt to zero-day attacks. Furthermore, we establish a mutual information-based criterion for entanglement, enabling dynamic qubit connectivity that reflects data dependencies rather than fixed entanglement patterns. This architecture supports scalable and interpretable circuit design, bridging the gap between explainability and quantum kernel methods. Our contribution thus extends beyond engineering optimisation and introduces methodological innovation relevant to real-world cybersecurity modeling in quantum-enhanced systems.
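A mutual information-based entanglement criterion can be illustrated by scoring every feature pair (one feature per qubit) by empirical mutual information and entangling only the strongest pairs. The sketch below is a hypothetical reading of that idea, not the authors’ procedure: it assumes discretised features, the plug-in MI estimator, and a fixed budget of pairs, none of which are specified in this section, and the function names are illustrative.

```python
import math
from collections import Counter
from itertools import combinations

def mutual_information(a, b):
    """Plug-in estimate of mutual information (nats) between two discrete sequences."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    mi = 0.0
    for (u, v), count in pab.items():
        p_uv = count / n
        mi += p_uv * math.log(p_uv / ((pa[u] / n) * (pb[v] / n)))
    return mi

def mi_entanglement_pairs(features, max_pairs):
    """Rank qubit pairs (one qubit per feature column) by MI; keep the top ones."""
    scored = [
        (mutual_information(features[i], features[j]), (i, j))
        for i, j in combinations(range(len(features)), 2)
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [pair for _, pair in scored[:max_pairs]]

f0 = [0, 0, 1, 1, 0, 0, 1, 1]
f1 = list(f0)                   # fully dependent on f0
f2 = [0, 1, 0, 1, 0, 1, 0, 1]   # independent of f0 in this sample
edges = mi_entanglement_pairs([f0, f1, f2], max_pairs=1)
```

The resulting pair list could replace a fixed topology such as the linear chain, so that entangling gates are placed only between qubits whose features are statistically dependent.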

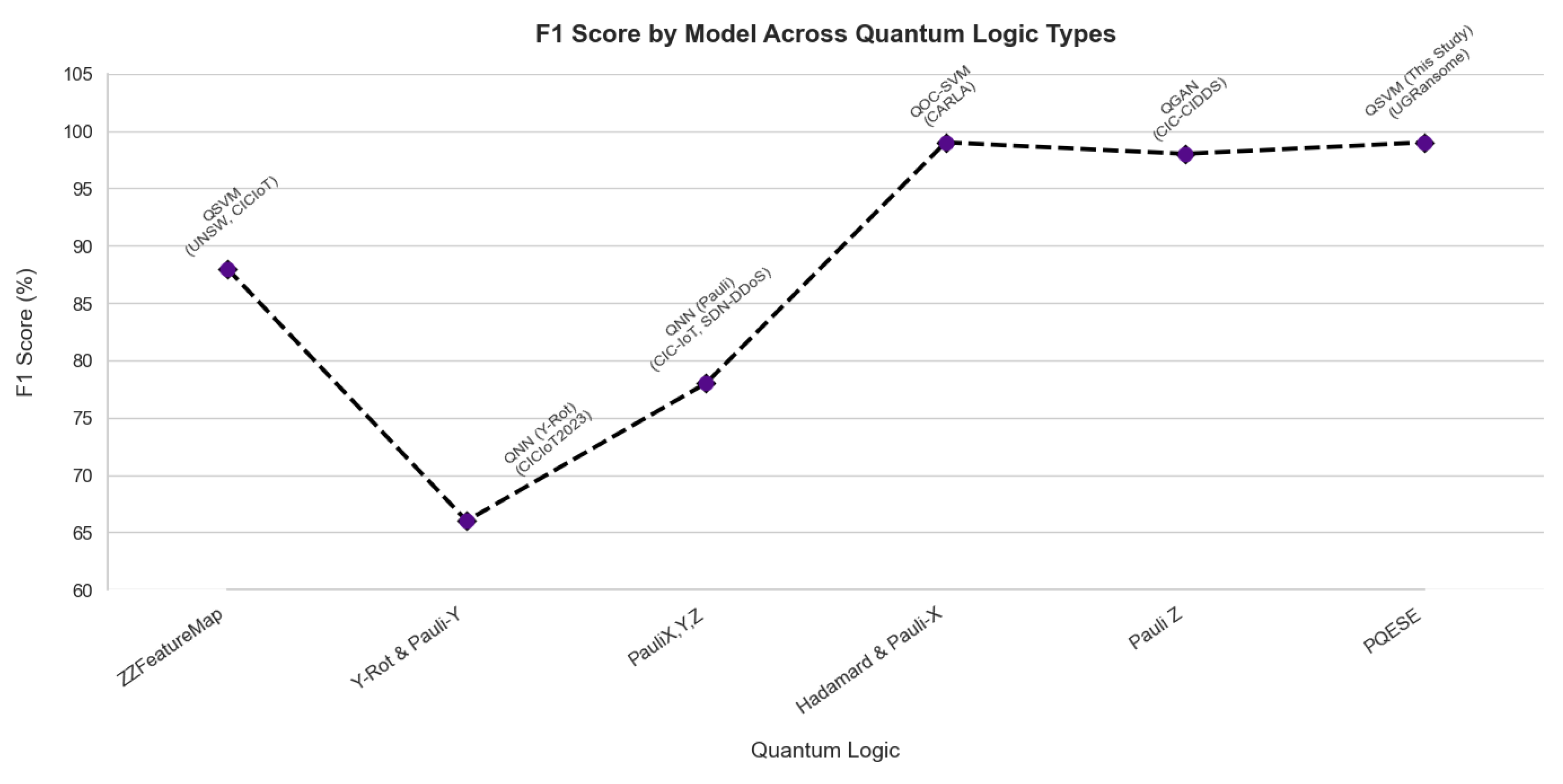

Table 15 provides a comparative analysis of recent QML approaches for intrusion detection.

Unlike prior works that mostly rely on standard feature maps (e.g., ZZFeatureMap or Pauli encodings) and limit experimentation to benchmark datasets such as CICIoT2023 or CIC-IDS2017 (Figure 12), our study introduces an adaptive nonlinear encoding with data-driven sparse entanglement, tested on UGRansome for zero-day exploit detection.

Unlike prior QML applications in cybersecurity, our contribution extends beyond merely integrating quantum subroutines. We provide both theoretical and empirical justification for their role in enhancing feature representation and classification robustness. Central to our approach is a mutual information-guided entanglement mechanism that injects domain-relevant correlations into quantum state preparation, enabling a more expressive feature space and introducing an inductive bias that classical kernels struggle to replicate without significant computational overhead. We design a parameterised quantum circuit (PQC) that encodes 14 zero-day exploit features into multi-qubit states using a hybrid of amplitude encoding and variational entanglement layers. This encoding is informed by prior statistical analysis of feature importance, thereby improving generalisability. To evaluate quantum advantage, we compare our parameterised quantum encoding with sparse entanglement (PQESE) against state-of-the-art classical and quantum kernels. While classical models achieve high accuracy, they often overfit in low-data regimes. In contrast, our quantum model maintains competitive accuracy with reduced variance and improved decision boundaries across imbalanced classes. Achieving 99% accuracy and F1-score on the UGRansome dataset, our model outperforms existing approaches (85–94%) not only in performance but also in architectural innovation (Figure 12).

Unlike prior work that omits implementation details or focuses on static features, our method addresses hardware constraints and generalisation challenges. Specifically, the QSVM leverages a hybrid encoding scheme combining parameterised rotation gates and controlled-Z (CZ) entanglement, using sparse topologies to retain computational efficiency. This configuration enables precise modeling of the high-dimensional behaviours typical of zero-day attacks. Compared to quantum models employing simpler encodings (e.g., Hadamard or Pauli-Y mappings), our PQESE configuration yields improved generalisation under adversarial variability (Figure 12). These results demonstrate the architectural benefits of coupling expressive embeddings with entanglement-efficient connectivity [86], especially for intrusion detection, where robustness is critical. Although classical models such as XGBoost achieve near-perfect accuracy, our QSVM offers structural advantages beyond marginal gains. Quantum kernel estimation enables feature spaces whose dimension scales exponentially with qubit count, capturing complex correlations in network traffic that classical methods approximate less effectively (Table 15). Additionally, our QSVM uses fewer support vectors and produces simpler decision boundaries, potentially yielding computational benefits as hardware advances. While our findings are based on noiseless simulations, they lay the groundwork for real-world deployment, where quantum-induced embeddings could enhance generalisation in adversarial contexts.
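The amplitude-encoding component mentioned above can be illustrated with a short sketch: a feature vector is zero-padded to the next power of two and L2-normalised so that it forms a valid quantum state. For the 14 features described here, this gives 16 amplitudes, i.e. ⌈log₂ 14⌉ = 4 qubits. The padding and normalisation choices, the function name, and the feature values are assumptions for illustration; the variational rotation and CZ layers that follow in the actual circuit are not shown.

```python
import math

def amplitude_encode(features):
    """Zero-pad features to a power-of-two length and L2-normalise them.

    Returns (n_qubits, amplitudes); the amplitudes have unit norm, as a
    quantum state requires.
    """
    n_qubits = max(1, math.ceil(math.log2(len(features))))
    dim = 2 ** n_qubits
    padded = list(features) + [0.0] * (dim - len(features))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0:
        raise ValueError("cannot amplitude-encode an all-zero vector")
    return n_qubits, [v / norm for v in padded]

# 14 illustrative (made-up) feature values -> 16 amplitudes on 4 qubits
n_qubits, amplitudes = amplitude_encode([float(i) for i in range(1, 15)])
```

Amplitude encoding is compact (logarithmic in the number of features), whereas angle-style maps such as the ZZFeatureMap use one qubit per feature; this compactness is one motivation for combining the two in a hybrid scheme.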

4.7. Implications for Critical Infrastructure Security

In critical infrastructure environments such as power grids, financial systems, and healthcare networks, any undetected zero-day threat can result in severe operational, financial, or safety consequences. The demonstrated sensitivity of QSVM models to novel attack patterns suggests their potential to function as a high-assurance anomaly detection component within such environments. The quantum-enhanced kernel using PQESE enables the system to better model nonlinear and complex feature interactions, which are often missed by classical models. As a result, early detection of polymorphic threats becomes more feasible, enhancing both resilience and response time in real-world deployments.

4.8. Explainability of Zero-Day Exploits Detection

Local interpretable model-agnostic explanations (LIME) is employed to quantify the contribution of each feature to the model’s predictions, providing interpretable insights into the classification process (Figure 13) [11].

Figure 13 depicts the distribution of LIME feature importance values across the three threat classes. In this study, these values highlight which features most strongly influence the model’s decision to classify a traffic flow as belonging to the zero-day (A) category. Each horizontal bar corresponds to the cumulative contribution of specific features, such as port, netflow bytes, and expAddress, towards a particular class prediction. The length of each bar along the x-axis represents the magnitude of the feature’s influence [11]. Key observations include the following:

High importance scores for the port feature within the SS class suggest that specific port usage patterns are critical in distinguishing benign from malicious behaviours.

Elevated LIME values for seedAddress and clusters in the A class suggest that these features are strong indicators of zero-day exploit activity.

In real-world scenarios, these feature contributions empower cybersecurity analysts to trace model predictions back to observable network attributes, providing essential transparency. This explainability is especially critical for zero-day attacks, as it helps justify automated alerts and supports forensic investigations of previously unseen threat behaviours.
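To show how such LIME values are produced, the following simplified LIME-style sketch perturbs one instance with Gaussian noise, weights each perturbed sample by an exponential kernel on its distance to the original, and fits a weighted linear surrogate whose slopes serve as local feature importances. It is a simplification of LIME (no discretisation or feature selection, and a plain weighted least-squares fit rather than ridge regression), and `predict` stands for any hypothetical black-box scoring function, for example a class probability from the QSVM; the noise scale and kernel width are assumed values.

```python
import math
import random

def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w

def lime_weights(predict, x, n_samples=500, scale=0.1, width=0.5, seed=0):
    """Fit a locally weighted linear surrogate around x; return per-feature slopes."""
    rng = random.Random(seed)
    d = len(x)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]          # perturb the instance
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        rows.append([1.0] + z)                                 # intercept + features
        targets.append(predict(z))
        weights.append(math.exp(-dist2 / width ** 2))          # proximity kernel
    # Weighted normal equations: (X^T W X) w = X^T W y
    A = [[sum(wt * r[i] * r[j] for r, wt in zip(rows, weights)) for j in range(d + 1)]
         for i in range(d + 1)]
    b = [sum(wt * r[i] * t for r, wt, t in zip(rows, weights, targets))
         for i in range(d + 1)]
    return solve(A, b)[1:]  # drop the intercept

# Hypothetical linear black box: feature 0 matters most, feature 2 not at all.
importances = lime_weights(lambda z: 3 * z[0] - 2 * z[1] + 1.0, [0.5, 0.2, 0.8])
```

For this linear black box the surrogate recovers slopes of about 3, −2, and 0, so the bar lengths in a LIME plot would rank feature 0 first and show feature 2 as irrelevant.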

4.9. Ablation Analysis

To evaluate the contribution of the t-SNE-based feature reduction step, we conducted an ablation study on QSVM performance. As presented in Table 16, removing t-SNE led to a consistent drop across all metrics (Table 17).

For instance, the four-qubit QSVM saw its F1-score fall from 0.9825 (with t-SNE) to 0.9328, and the three-qubit version declined from 0.9796 to 0.9085. These reductions highlight t-SNE’s role in enhancing feature separability. To assess statistical significance, we applied the Wilcoxon signed-rank test [87] using 10-fold cross-validation. Mean values of accuracy, precision, recall, and F1-score were reported, and the resulting p-value of 0.031 (Table 17) indicates that the performance differences are statistically significant at the 5% level. These results support t-SNE as a critical component of the QSVM pipeline.

To mitigate overfitting and reduce computational complexity, the Chi-Square feature selection retained the six most discriminative features. This decision was supported by feature importance scores and preliminary ablation results, which showed marginal or negative returns when including more than six features; configurations using 10 or more features led to slight overfitting. As shown in Table 16, the six-feature setup achieved an F1-score of 0.93, performing comparably to, and in some cases better than, larger feature sets. While Chi-Square is a univariate method, it proved both interpretable and computationally efficient in our context.
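For reference, the Wilcoxon signed-rank test used in this ablation can be computed exactly for small numbers of paired observations, such as per-fold scores from 10-fold cross-validation, by enumerating all 2^n sign assignments of the ranked differences. The sketch below drops zero differences and averages ranks over ties; it is an illustration with hypothetical names and made-up scores, and `scipy.stats.wilcoxon` is the usual library route.

```python
from itertools import product

def wilcoxon_exact(a, b):
    """Two-sided exact Wilcoxon signed-rank p-value for paired samples a, b."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences dropped
    n = len(diffs)
    if n == 0:
        return 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to tied |differences|
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1
        i = j + 1
    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    observed = min(w_plus, total - w_plus)
    # Under H0 each sign is equally likely: enumerate all 2**n sign patterns.
    extreme = 0
    for signs in product((False, True), repeat=n):
        s = sum(r for r, positive in zip(ranks, signs) if positive)
        if min(s, total - s) <= observed + 1e-9:
            extreme += 1
    return extreme / 2 ** n

# Ten made-up per-fold F1 scores, with and without a pipeline component.
with_component = [0.98, 0.97, 0.99, 0.96, 0.98, 0.97, 0.99, 0.98, 0.97, 0.96]
without_component = [0.93, 0.91, 0.94, 0.92, 0.90, 0.93, 0.92, 0.94, 0.91, 0.93]
p_value = wilcoxon_exact(with_component, without_component)
```

With ten folds that all favour the same configuration, the smallest attainable two-sided exact p-value is 2/1024 ≈ 0.002, which bounds how strong the evidence from a 10-fold comparison can be.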

4.10. Limitations and Future Work

Despite promising results, this study has several limitations that warrant further investigation. Firstly, the QSVM relied on simulated quantum kernels due to current hardware limitations. These simulations do not capture the stochastic nature of real-world quantum noise, limited qubit connectivity, decoherence effects, or the gate fidelity issues prevalent in noisy intermediate-scale quantum (NISQ) devices. Consequently, while results on classical simulators are encouraging, performance on real devices may vary substantially. Secondly, the UGRansome dataset, while offering a structured benchmark for zero-day attack detection, represents a narrow slice of network environments. Its focus on specific patterns constrains its generalisability across diverse infrastructures. Notably, advanced threats such as zero-click exploits and fileless malware remain underrepresented [88]. Zero-click attacks, executed without user interaction, often evade detection by leaving minimal traces, whereas fileless malware operates entirely in memory using legitimate system tools (e.g., PowerShell), bypassing traditional endpoint monitoring [88]. The absence of granular system telemetry, volatile memory dumps, or behavioural traces in the dataset limits the QSVM’s capacity to detect such threats. Although the QSVM is theoretically capable of modeling high-dimensional feature spaces, its performance is contingent on the quality and scope of its input features. Integrating system-level forensic attributes, enriched behavioural metadata, and memory features may enhance detection fidelity in future implementations.

Furthermore, while Chi-Square feature selection reduced dimensionality and computational overhead, it may overlook feature interactions essential to adversarial behaviour. Similarly, t-SNE facilitated visual separability, but its use as a feature reduction method introduces challenges in preserving global structure and interpretability. Class imbalance was addressed using SMOTE; however, its interpolative nature may fail to reflect the dynamic, temporal, and adversarial properties of real-world zero-day attacks. Although oversampling was limited to training folds during cross-validation, SMOTE may still introduce optimistic bias. Future work should explore generative augmentation techniques (e.g., variational autoencoders) that simulate realistic attack behaviours and their evolution over time. Future directions include:

Investigating dynamic or adaptive quantum kernels that update based on feedback from training performance;

Exploring more expressive encoding strategies, such as data re-uploading circuits or higher-order entanglement architectures;

Employing hybrid quantum-classical encoding (HQCE) to increase representational capacity while maintaining scalability;

Integrating quantum explainability frameworks (e.g., QLIME) to improve interpretability and forensic traceability of quantum predictions.

To validate real-world viability, future research will involve deploying the proposed QSVMs on physical quantum processors and evaluating them under noisy conditions. In parallel, quantum error mitigation techniques tailored to the specific encoding and circuit depth will be assessed to ensure robustness. Finally, generalisation capabilities will be benchmarked against heterogeneous datasets to ensure adaptability across threat landscapes. In sum, while this study demonstrates the potential of PQESE for zero-day attack detection, it also opens numerous avenues for enhancement, robustness, and interpretability. These directions will be critical to translating QML from experimental simulations to operational cybersecurity systems.

5. Conclusions

This study assessed various ML classifiers for zero-day attack detection using a labelled network traffic dataset. Among the evaluated models, ensemble learning techniques, most notably XGBoost, achieved exceptional performance, correctly identifying zero-day instances with no false positives and demonstrating high efficiency in training and prediction. In contrast, classical SVMs yielded limited performance; however, when augmented with data-balancing strategies such as borderline-SMOTE and SMOTEENN, their accuracy improved significantly. To address the remaining limitations of classical SVMs in capturing complex threat patterns, a QSVM was implemented with three-qubit and four-qubit quantum layers. The inclusion of quantum-enhanced feature mappings led to further performance gains, with high accuracy and F1-scores. Compared to conventional models, the QSVM outperformed ensemble-based and probabilistic classifiers. Looking ahead, future work will explore deploying the QSVM on physical quantum hardware to assess its viability under real-world noise conditions. Additional research will also focus on incorporating federated and continual learning paradigms to support adaptive threat detection across decentralised systems.

Key Takeaway: Quantum-enhanced learning models, especially QSVMs, demonstrate strong potential for elevating zero-day exploit detection in terms of accuracy and interpretability, paving the way for more resilient and intelligent quantum NIDSs (QNIDSs).

List of Abbreviations: the abbreviations used throughout this manuscript.