Abstract

Large language models (LLMs) now match or exceed human performance on many open-ended language tasks, yet they continue to produce fluent but incorrect statements, a failure mode widely referred to as hallucination. In low-stakes settings this may be tolerable; in regulated or safety-critical domains such as financial services, compliance review, and client decision support, it is not. Motivated by these realities, we develop an integrated mitigation framework that layers complementary controls rather than relying on any single technique. The framework combines structured prompt design, retrieval-augmented generation (RAG) with verifiable evidence sources, and targeted fine-tuning aligned with domain truth constraints. Our interest in this problem is practical. Individual mitigation techniques have matured quickly, yet teams deploying LLMs in production routinely report difficulty stitching them together into a coherent, maintainable pipeline. Decisions about when to ground a response in retrieved data, when to escalate uncertainty, how to capture provenance, and how to evaluate fidelity are often made ad hoc. Drawing on experience from financial technology implementations, where even rare hallucinations can carry material cost, regulatory exposure, or loss of customer trust, we aim to provide clearer guidance in the form of an easy-to-follow tutorial. This paper makes four contributions. First, we introduce a three-layer reference architecture that organizes mitigation activities across input governance, evidence-grounded generation, and post-response verification. Second, we describe a lightweight supervisory agent that manages uncertainty signals and triggers escalation (to humans, alternate models, or constrained workflows) when confidence falls below policy thresholds. Third, we analyze common but under-addressed security surfaces relevant to hallucination mitigation, including prompt injection, retrieval poisoning, and policy evasion attacks.
Finally, we outline an implementation playbook for production deployment, including evaluation metrics, operational trade-offs, and lessons learned from early financial-services pilots.

1. Introduction

The rapid advancement of large language models has been nothing short of extraordinary [1]. From answering complex questions to generating code and writing reports, these systems have found their way into virtually every sector imaginable. Healthcare providers use them to assist with documentation, financial institutions deploy them for customer service, and legal firms leverage them for research and drafting [2,3]. Yet beneath this enthusiasm lies a persistent and troubling issue: these models sometimes produce information that sounds authoritative and well-reasoned but is simply wrong.

This phenomenon, which researchers have termed “hallucination”, stems from the fundamental way these models operate. Rather than truly understanding concepts, they excel at pattern matching and statistical prediction based on vast amounts of training data [4]. While this approach enables impressive capabilities, it also means the models can confidently state facts that are completely fabricated, especially when dealing with specific, detailed information. In fields like financial services, where a single incorrect statement about fees, regulations, or investment products could trigger regulatory violations or client lawsuits, this unpredictability becomes a serious liability.

Such hallucinations are especially dangerous because they often sound plausible and authoritative. While individual hallucination mitigation techniques such as retrieval-augmented generation [5], prompt engineering, and fine-tuning have shown promise, the existing literature lacks a systematic framework that integrates these approaches into a cohesive methodology suitable for production deployment.

Current approaches suffer from three key limitations: (1) fragmented treatment of individual techniques without considering their interactions, (2) insufficient attention to security vulnerabilities and adversarial robustness, and (3) a lack of systematic evaluation frameworks for real-world deployment scenarios. This paper addresses these gaps by proposing a multi-layered framework that integrates multiple mitigation strategies with explicit security considerations and empirical guidelines for production deployment. Specifically, we make the following contributions:

- First, we develop what we believe is the first systematic framework that thoughtfully combines multiple hallucination mitigation approaches. Rather than treating prompt engineering, retrieval systems, and fine-tuning as separate solutions, our three-layer architecture shows how these techniques can work together more effectively than any would in isolation.

- Second, we explicitly tackle security concerns that are often brushed aside in academic work but become critical in real deployments. Our framework addresses adversarial attacks, prompt injection vulnerabilities, and the monitoring systems needed to maintain reliability over time.

- Third, we provide concrete, tested guidelines for practitioners who need to actually implement these systems. This includes empirically derived confidence thresholds, escalation mechanisms, and honest assessments of the computational costs involved.

- Finally, we validate our approach through extensive work in financial services, a domain where the stakes are genuinely high and the tolerance for errors is extremely low. The lessons learned here translate well to other critical applications such as healthcare or legal services.

The remainder of this paper is organized as follows. In Section 2, we review related work and highlight the main differences between it and our study. In Section 3, we introduce the multi-layered framework for LLM hallucination mitigation. In Section 4, we present a case study of a lightweight agent for financial services. In Section 5, we discuss security risks and best practices when implementing the proposed framework. In Section 6, we report the experimental evaluation of an implementation of the proposed framework. In Section 7, we conclude this paper with key recommendations, implementation validation methodology, limitations of the current study, and future considerations. The implementation details are provided in Appendix A. The source code for this tutorial is publicly available as a GitHub project (the link is provided at the end of the paper).

2. Related Work

The landscape of hallucination mitigation research has evolved rapidly, with various techniques emerging across different communities. Individual approaches like few-shot prompting [6], retrieval-augmented generation [5], and targeted fine-tuning have all shown promise in controlled settings. However, we have observed a significant gap between these isolated successes and the practical needs of organizations trying to deploy reliable LLM systems in production environments.

Most existing work focuses on demonstrating that a particular technique can reduce hallucinations under specific conditions. While valuable, this leaves practitioners with a collection of tools but little guidance on how to combine them effectively. Our approach differs by treating hallucination mitigation as a systems problem that requires coordinated solutions rather than isolated fixes.

Recent academic work has explored various sophisticated approaches to the hallucination problem. For instance, the approach described in [7] involves deploying multiple LLM agents with complex orchestration mechanisms. Although it is technically impressive, it requires substantial infrastructure investments that put it out of reach for many organizations. Similarly, architectural innovations like those proposed in [8] involve fundamental changes to model design that would require access to training pipelines that most practitioners simply do not have.

While we deeply respect these research directions, our work takes a different philosophy. We focus on what organizations can actually implement today using existing tools and reasonable computational budgets. This practical orientation leads us toward solutions that are perhaps less theoretically elegant but significantly more accessible to real-world deployment scenarios.

In [8], the authors proposed fundamentally reorganizing the layers of the LLM's deep neural network architecture so that critical context is preserved, as a way to mitigate hallucination. Similarly, for large vision-language models, the authors of [9] proposed mitigating hallucination by augmenting data to generate extra attribute-related information, which they claimed enhances the models' generative capabilities. While these studies may have significant impact in the longer run, most users do not have access to an LLM service that incorporates the proposed mechanisms.

In [10], the authors proposed integrating a knowledge graph with an LLM as a way to mitigate hallucinations. While this approach is intuitive, its effectiveness depends heavily on the completeness of the knowledge graph. If the knowledge graph is incomplete (for example, if it does not incorporate the most up-to-date information), the approach will not be effective.

In an online blog post [11], a blockchain-based [12] solution was proposed to mitigate LLM hallucination by querying multiple LLM services with a swarm of prompts. Blockchain consensus [13,14] is then used to gauge whether the outputs from the LLM services are trustworthy: if all outputs are consistent, they are very unlikely to contain hallucinations.

3. A Multi-Layered Framework for LLM Hallucination Mitigation

Addressing hallucinations in LLMs requires more than a single fix. Just as in cybersecurity or quality control, a layered defense is more effective than relying on one technique. This section presents a three-tiered strategy: prompt-level techniques, architectural safeguards, and behavior-based fine-tuning, as shown in Figure 1. Each layer plays a distinct role in guiding, constraining, or grounding the model’s behavior to reduce the risk of incorrect outputs.

Figure 1.

The multi-layered approach consists of three layers: prompt-level techniques in the foundational layer, safeguards with retrieval-augmented generation in the architectural layer, and fine-tuning in the behavior layer.

Unlike existing approaches that focus on individual techniques [1,4], our framework systematically integrates all three layers with specific implementation guidelines for production environments. While RAG-only solutions [5] and fine-tuning-only approaches exist independently, our contribution lies in the structured combination with practical deployment specifications, particularly the escalation mechanisms and confidence thresholding that bridge individual technique limitations [15,16].

Our experience working with these systems has taught us that no single technique, no matter how clever it might be, can solve the hallucination problem on its own. This should not be surprising; after all, we do not secure networks with just firewalls, and we do not ensure software quality with only unit tests. The same principle applies here: effective hallucination mitigation requires a defense-in-depth approach.

We have organized our framework around three complementary layers, as illustrated in Figure 1. The foundational layer focuses on prompt engineering, which guides the model’s reasoning process from the outset. The architectural layer introduces retrieval-augmented generation to ground responses in verified information. Finally, the behavioral layer uses fine-tuning to teach models domain-specific behavior patterns. While each layer provides value independently, their real power emerges from thoughtful integration.

3.1. Foundational Layer: Prompt Engineering

Prompt design is often the first, and surprisingly powerful, line of defense. A well-structured prompt can shape how an LLM interprets its task, significantly reducing ambiguity and guiding its reasoning toward safer outputs.

3.1.1. Few-Shot Prompting

This technique includes a small set of input–output examples within the prompt itself. From these examples, the model picks up patterns and mimics the structure they show. For structured data extraction tasks, we establish the following guidelines:

- Example Count: Use 3–5 examples per prompt to balance effectiveness with token efficiency.

- Example Selection: Choose examples representing diverse input variations while maintaining consistent output formatting.

- Task-Specific Optimization: Simple classification tasks perform well with 2–3 examples, while complex multi-field extraction benefits from 4–6 examples.

- Example Ordering: Progress from simple to complex cases, with the most representative example placed last to strengthen pattern recognition.
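To make these guidelines concrete, the following is a minimal Python sketch of a few-shot prompt builder. It assumes examples are supplied as (input text, expected JSON output) pairs already ordered from simple to complex, enforces the 3–5 example range, and rejects examples whose output keys differ, which keeps the output format consistent. The function name `build_few_shot_prompt` and every example company except MegaCorp are hypothetical; the prompt layout is one reasonable choice, not a required format.

```python
import json

def build_few_shot_prompt(instruction, examples, query, min_examples=3, max_examples=5):
    """Assemble a few-shot extraction prompt from (input_text, output_dict) pairs.

    The examples are assumed to be pre-ordered from simple to complex, with the
    most representative one last.
    """
    if not min_examples <= len(examples) <= max_examples:
        raise ValueError(
            f"expected {min_examples}-{max_examples} examples, got {len(examples)}")
    # Consistent output formatting: every example must use the same keys in the same order.
    key_order = list(examples[0][1])
    if any(list(output) != key_order for _, output in examples):
        raise ValueError("all example outputs must share the same keys and key order")
    parts = [instruction.strip(), ""]
    for i, (text, output) in enumerate(examples, start=1):
        parts += [f"Example {i}", f"Input: {text}", f"Output: {json.dumps(output)}", ""]
    # The real query comes last, with an open "Output:" for the model to complete.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)

# Hypothetical examples and query, for illustration only.
EXAMPLES = [
    ("Acme Inc. (ACME) reported higher revenue.",
     {"company": "Acme Inc.", "ticker": "ACME", "metric": "revenue"}),
    ("Globex (GBX) shares fell as its operating margin narrowed.",
     {"company": "Globex", "ticker": "GBX", "metric": "operating margin"}),
    ("Initech (INTK) raised full-year guidance for earnings per share, citing cost cuts.",
     {"company": "Initech", "ticker": "INTK", "metric": "earnings per share"}),
]
prompt = build_few_shot_prompt(
    "Extract the company, ticker, and financial metric. Respond with JSON only.",
    EXAMPLES,
    "MegaCorp (MCRP) said free cash flow rose year over year.",
)
```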

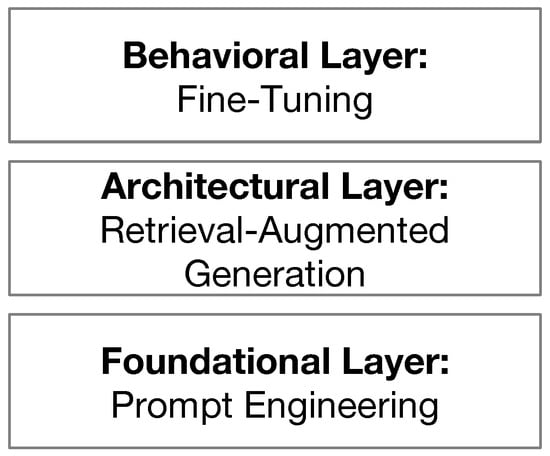

The following example demonstrates a use case for extracting structured data from financial text using our recommended 3-example format.

The model is highly likely to correctly output {"company": "MegaCorp", "ticker": "MCRP", "metric": "free cash flow"}.

This type of input gives the model a clear, repeatable pattern to follow, limiting its tendency to guess or invent new formats. This is particularly helpful when integrating an LLM into application systems that communicate via RESTful APIs (i.e., APIs that follow Representational State Transfer, an architectural style for designing networked applications [17]).
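Because downstream services expect a fixed schema, it helps to validate the model's reply before passing it on. Below is a minimal sketch, assuming the three-field schema from the MegaCorp example (company, ticker, metric); `parse_extraction` is a hypothetical helper that returns `None` for anything that is not exactly that JSON object, so the caller can retry or escalate instead of forwarding a malformed payload to another service.

```python
import json

# Hypothetical schema matching the MegaCorp extraction example.
REQUIRED_FIELDS = ("company", "ticker", "metric")

def parse_extraction(raw):
    """Return the parsed dict if `raw` is exactly the expected JSON object, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None  # chatty or truncated replies are rejected, not repaired
    if not isinstance(data, dict) or set(data) != set(REQUIRED_FIELDS):
        return None  # missing or extra fields
    # Every field must be a non-empty string.
    if not all(isinstance(data[k], str) and data[k].strip() for k in REQUIRED_FIELDS):
        return None
    return data
```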

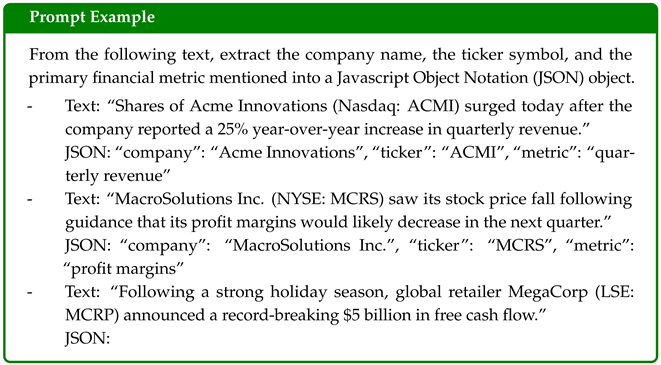

3.1.2. Role-Playing Prompts

This technique assigns the model a specific role to help narrow its knowledge scope and behavioral tone. In the following, we provide a use case that flags potentially non-compliant customer queries.

Constrained by its role, the model is expected to output Investment-Related, avoiding the dangerous trap of recommending a product.
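A role-playing prompt is typically expressed as a system message. The sketch below assumes the common chat convention of a list of `{"role": ..., "content": ...}` messages, which many LLM APIs accept in some form; only the label Investment-Related comes from the text, and the other two labels are hypothetical placeholders. Any reply outside the allowed label set is mapped to `None` so that it is routed to review rather than trusted.

```python
# Only "Investment-Related" comes from the text; the other labels are hypothetical.
ALLOWED_LABELS = ("Investment-Related", "Account-Service", "General-Inquiry")

SYSTEM_ROLE = (
    "You are a compliance screening assistant at a regulated financial institution. "
    "You never recommend products or give investment advice. "
    "Classify the customer's message into exactly one of these labels: "
    + ", ".join(ALLOWED_LABELS)
    + ". Reply with the label only."
)

def build_role_messages(query):
    """Chat-style messages: the system message fixes the role, the user message carries the query."""
    return [
        {"role": "system", "content": SYSTEM_ROLE},
        {"role": "user", "content": query},
    ]

def normalize_label(reply):
    """Map the model's reply onto an allowed label; None means route to human review."""
    cleaned = reply.strip().strip(".").strip()
    for label in ALLOWED_LABELS:
        if cleaned.lower() == label.lower():
            return label
    return None
```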

3.1.3. Chain-of-Thought (CoT) Prompting

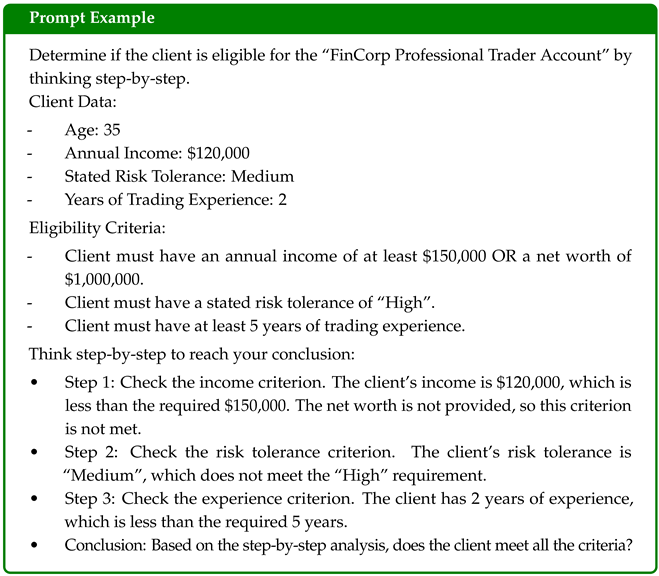

In complex decision-making tasks, prompting the model to “think step-by-step” makes its reasoning more explicit and often more accurate. The following is an example of asking the model to evaluate client eligibility for a financial product using CoT prompting.

By walking through each criterion one step at a time, the model is more likely to avoid incorrect shortcuts or conclusions. It mimics a checklist-based evaluation, which is both transparent and auditable. However, it is important to note that Chain-of-Thought reasoning can sometimes amplify hallucinations, particularly when the initial reasoning step is incorrect, so it should be used judiciously and combined with verification mechanisms.
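One way to pair CoT prompting with a verification mechanism is to express the checklist twice: once as step-by-step instructions to the model and once as deterministic code that re-checks the model's final conclusion. The criteria below (age, income, risk tolerance) are hypothetical stand-ins, since the actual eligibility rules are not reproduced here.

```python
# Hypothetical eligibility criteria: (key, description for the prompt, deterministic check).
CRITERIA = [
    ("age", "the client is at least 18 years old", lambda c: c["age"] >= 18),
    ("income", "annual income is at least 50,000", lambda c: c["annual_income"] >= 50_000),
    ("risk", "risk tolerance is medium or high",
     lambda c: c["risk_tolerance"] in ("medium", "high")),
]

def build_cot_prompt(client):
    """Ask the model to walk through each criterion before concluding."""
    lines = ["Evaluate this client's eligibility for the product. Think step by step.",
             f"Client profile: {client}", ""]
    for i, (_, description, _) in enumerate(CRITERIA, start=1):
        lines.append(f"Step {i}: Check whether {description}. Answer PASS or FAIL and explain why.")
    lines.append(f"Step {len(CRITERIA) + 1}: Conclude ELIGIBLE only if every step passed, "
                 "otherwise NOT ELIGIBLE.")
    return "\n".join(lines)

def verify_conclusion(client, model_conclusion):
    """Recompute the checklist in code and flag disagreement with the model's conclusion."""
    failed = [key for key, _, check in CRITERIA if not check(client)]
    expected = "ELIGIBLE" if not failed else "NOT ELIGIBLE"
    return {"expected": expected, "failed": failed,
            "agrees": model_conclusion.strip().upper() == expected}
```

A disagreement (`agrees` is `False`) is a natural trigger for escalation, since it means the model's chain of reasoning went wrong somewhere that the deterministic check did not.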

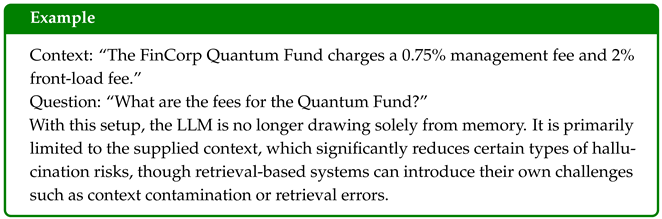

3.2. Architectural Layer: Retrieval-Augmented Generation

Smart prompting helps, but it cannot force a model to know facts it was never trained on, especially when those facts change frequently or involve company-specific information. This is where retrieval-augmented generation becomes invaluable. Instead of relying on the model’s potentially outdated or incomplete training data, we explicitly provide the relevant context for each query.

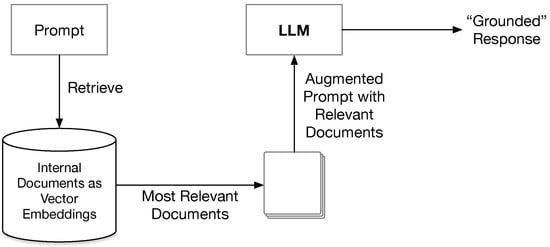

The mechanics are straightforward, as illustrated in Figure 2. When someone asks a question, the system first searches through a curated database of internal documents, such as fund prospectuses, policy manuals, regulatory filings, and any other documents relevant to your domain.

Figure 2.

The retrieval-augmented generation (RAG) process flow. The system processes user queries by converting them to vector embeddings and matching against a knowledge base of embedded document chunks using similarity search.

The most relevant document chunks (typically top-k = 5 with similarity threshold ≥0.7) are retrieved and combined with the original query to provide context-grounded responses. Now, instead of guessing, the model is working from your actual, current documentation. Technical implementation involves preprocessing documents into 500–1000 character chunks with overlap, converting them to high-dimensional vectors (e.g., 1536-dimensional using OpenAI text-embedding-ada-002), and storing them in vector databases with cosine similarity indexing so that responses are grounded in verified source material. For production RAG systems in financial services, we implement the following technical specifications:

- Embedding Models: OpenAI text-embedding-ada-002 (1536 dimensions) or sentence-transformers/all-MiniLM-L6-v2 (384 dimensions) for cost-sensitive applications.

- Vector Database: Pinecone or Chroma with cosine similarity indexing, supporting approximate nearest neighbor search.

- Retrieval Parameters: top-k = 5 for balanced coverage, minimum similarity threshold of 0.7 for relevance filtering, and maximum context window of 4000 tokens.

- Document Preprocessing: 500–1000 character chunks with 100-character overlap to preserve context across boundaries, plus metadata tagging for source attribution and access control.

- Reranking: Optional cross-encoder reranking using models like ms-marco-MiniLM-L-6-v2 to improve relevance precision in production deployments.
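The preprocessing and retrieval parameters above can be sketched in plain Python. This is an illustration under stated assumptions, not a production retriever: embeddings are assumed to be precomputed (the call to an embedding model such as text-embedding-ada-002 is omitted), search is brute-force cosine similarity rather than the approximate nearest-neighbor search a vector database would provide, and the 4000-token context budget is approximated with a crude four-characters-per-token heuristic instead of a real tokenizer.

```python
import math

def chunk_text(text, size=800, overlap=100):
    """Split text into fixed-size character chunks that overlap by `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, index, top_k=5, min_score=0.7):
    """Brute-force search over (chunk_id, vector) pairs.

    Returns up to top_k (chunk_id, score) pairs with score >= min_score, best first.
    """
    scored = [(cid, cosine(query_vec, vec)) for cid, vec in index]
    scored = [pair for pair in scored if pair[1] >= min_score]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

def pack_context(chunks_in_rank_order, max_tokens=4000, chars_per_token=4):
    """Greedily keep ranked chunks until a rough token budget would be exceeded."""
    budget = max_tokens * chars_per_token
    packed, used = [], 0
    for chunk in chunks_in_rank_order:
        if used + len(chunk) > budget:
            break
        packed.append(chunk)
        used += len(chunk)
    return packed
```

Swapping in a real embedding model, a vector store with metadata filters, and an optional cross-encoder reranking step would replace `cosine` and `retrieve` without changing the overall flow.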

3.3. Behavioral Layer: Fine-Tuning

Fine-tuning has traditionally been a poor fit for inserting factual content, which can change often, although recent advances in constitutional AI and factual fine-tuning have shown promise for improving factual accuracy. Even so, fine-tuning remains most effective for teaching the model to behave in specific ways. Use cases for fine-tuning include:

- Ensuring a consistent tone (e.g., professional, neutral, compliant).

- Enforcing output formats, such as structured JSON responses.

- Teaching complex multi-step tasks that require adherence to internal guidelines.

For domain-specific fine-tuning in financial services, we recommend the following technical parameters:

- Dataset Size: 200–500 examples for format consistency tasks, 500–1000 examples for tone and compliance behavior, and 1000+ examples for complex multi-step reasoning tasks.

- Model Parameters: Fine-tune all parameters (110M–7B, depending on the base model) or use parameter-efficient methods (LoRA with rank 16–64, training 0.1–1% of total parameters).

- Loss Functions: Cross-entropy loss for classification tasks, MSE loss for regression-style tasks, or custom contrastive losses for embedding alignment.

- Implementation Tools: Hugging Face Transformers with PyTorch/TensorFlow, or specialized frameworks like Sentence Transformers for embedding models.

- Hyperparameters: Learning rate of 5 to 1 , batch size of 4–8 depending on GPU memory, and 3–5 epochs with early stopping based on validation loss.

- Data Quality: Examples should be manually reviewed for accuracy and representativeness, with balanced coverage of edge cases constituting 15–20% of the training set.

- Evaluation Methodology: Use held-out validation sets (20% of data) with task-specific metrics including format compliance (>95%), tone consistency scores, and domain expert human evaluation on 100+ samples.
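As a sanity check on the LoRA parameter share listed above, the number of trainable parameters can be computed directly: LoRA adds two low-rank matrices, A (r × d_in) and B (d_out × r), to each adapted weight matrix. The sketch below assumes a LLaMA-2-7B-like base model (32 layers, hidden size 4096, roughly 6.7 billion parameters) with adapters on the query and value projections only; other architectures or target modules give different numbers.

```python
def lora_trainable_params(rank, d_in, d_out, n_matrices):
    """Each adapted d_out x d_in weight gains A (rank x d_in) and B (d_out x rank)."""
    return n_matrices * rank * (d_in + d_out)

# Assumed LLaMA-2-7B-like shape: 32 layers, hidden size 4096, ~6.7B parameters,
# with LoRA applied to the query and value projections (2 matrices per layer).
LAYERS, HIDDEN, BASE_PARAMS = 32, 4096, 6.7e9

for r in (16, 64):
    n = lora_trainable_params(r, HIDDEN, HIDDEN, n_matrices=2 * LAYERS)
    print(f"rank {r}: {n:,} trainable parameters ({100 * n / BASE_PARAMS:.2f}% of the base model)")
```

Under these assumptions, rank 16 trains about 8.4 million parameters (roughly 0.13% of the base model) and rank 64 about 33.6 million (roughly 0.5%), both inside the 0.1–1% range.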

For example, if a financial institution needs summaries of earnings calls in a specific format, fine-tuning the model on 300–500 examples using these parameters can produce more predictable and reliable results than zero-shot prompting, with measurable improvements in format compliance and domain-appropriate language usage.
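The 20% held-out split and the format-compliance target from the evaluation guidelines can be sketched as follows. The earnings-call summary schema (quarter, revenue, guidance, risks) is a hypothetical example; `format_compliance` simply counts outputs that parse as a JSON object containing every required key, so it measures format adherence only, not factual accuracy.

```python
import json
import random

def split_holdout(examples, holdout_frac=0.2, seed=0):
    """Shuffle with a fixed seed and reserve `holdout_frac` of the data for validation."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_val = round(len(items) * holdout_frac)
    return items[n_val:], items[:n_val]  # (train, validation)

def format_compliance(outputs, required_keys):
    """Share of outputs that parse as a JSON object containing every required key."""
    if not outputs:
        return 0.0
    ok = 0
    for raw in outputs:
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError:
            continue
        if isinstance(obj, dict) and all(k in obj for k in required_keys):
            ok += 1
    return ok / len(outputs)

# Hypothetical earnings-call summary schema.
SUMMARY_KEYS = {"quarter", "revenue", "guidance", "risks"}
```

A release gate could then require, for example, `format_compliance(outputs, SUMMARY_KEYS) >= 0.95` on the held-out set, in line with the >95% target.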

4. Case Study: Financial Services Agent Implementation

Once individual mitigation techniques are in place, they can be combined into an intelligent system that automates decision making while minimizing risk [18]. One practical approach is to build a lightweight agent [19] that leverages Retrieval-Augmented Generation (RAG) and follows a transparent reasoning process before delivering a response.

In the context of financial services, such an agent does not need to perform complex multi-step planning. Instead, it can follow a simple loop: assess the query, retrieve relevant content, and either respond based on verified knowledge or escalate when needed. This balance between automation and caution is essential in high-stakes domains.

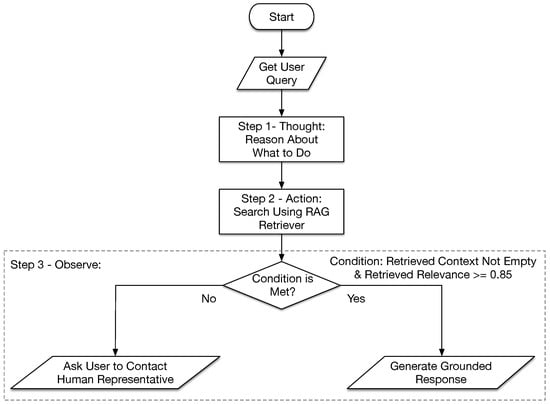

In the following, we describe an example lightweight LLM agent designed for policy-based query verification. The agent’s primary responsibility is to verify whether a user’s query can be answered using the company’s official documentation, such as policy manuals, fund disclosures, or internal guidelines. If the relevant information is not found, the agent gracefully defers to a human representative rather than risk guessing. The only tool available to the agent is a RAG-based retriever, which searches a vector database of internal documents and returns the most relevant snippets of text.

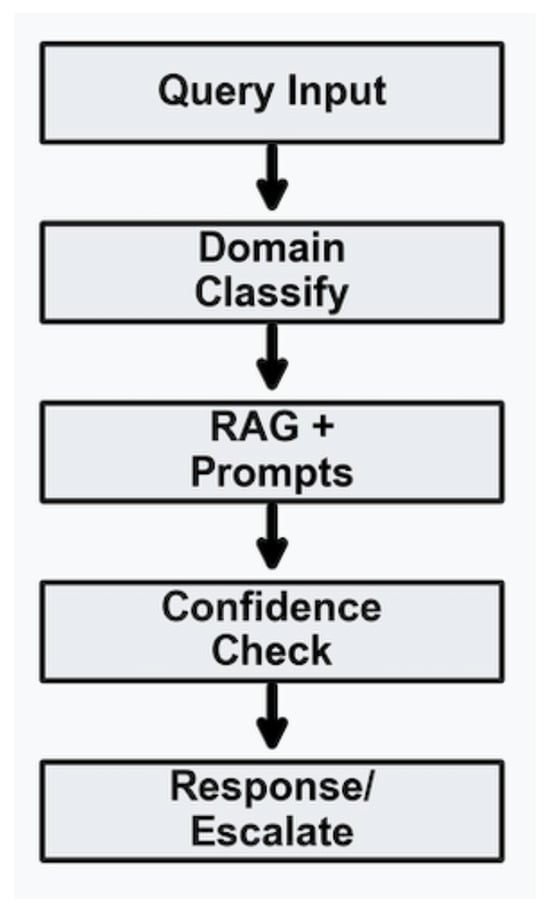

The agent’s logic is illustrated in Figure 3. After the agent receives a user query, the first step (i.e., thought) is to reason about the task. The second step (i.e., action) is to perform a search using the RAG retriever. In the third step (i.e., observation), the agent evaluates the search result. If relevant documents have been retrieved and their relevance score exceeds a domain-calibrated confidence threshold, the agent generates a grounded response by calling the LLM with a prompt containing the user query and the retrieved documents. Otherwise, the agent tells the user that it could not find sufficiently relevant documents and asks the user to contact a human representative.

Figure 3.

The agent follows the thought–action–observation pattern.
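The thought–action–observation loop can be sketched in a few lines of Python. The retriever and the LLM call are passed in as plain functions so that the control flow stands on its own; the stub implementations, the escalation message, and the default threshold of 0.80 are illustrative assumptions, not the production agent.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

ESCALATION_MESSAGE = (
    "I could not find sufficiently relevant official documentation for this question. "
    "Please contact a human representative."
)

@dataclass
class AgentResult:
    answer: str
    escalated: bool
    top_score: float
    trace: List[str] = field(default_factory=list)

def run_agent(query: str,
              retrieve: Callable[[str], List[Tuple[str, float]]],
              generate: Callable[[str], str],
              threshold: float = 0.80) -> AgentResult:
    # Thought: restate the task as "answer only from official documents".
    trace = [f"THOUGHT: find official documentation that answers {query!r}"]
    # Action: call the agent's only tool, the RAG retriever.
    hits = retrieve(query)
    trace.append(f"ACTION: retriever returned {len(hits)} snippet(s)")
    # Observation: compare the best relevance score against the threshold.
    top = max((score for _, score in hits), default=0.0)
    trace.append(f"OBSERVATION: top score {top:.2f}, threshold {threshold:.2f}")
    if not hits or top < threshold:
        return AgentResult(ESCALATION_MESSAGE, True, top, trace)
    context = "\n\n".join(text for text, score in hits if score >= threshold)
    prompt = ("Answer using only the context below.\n\n"
              f"Context:\n{context}\n\nQuestion: {query}")
    return AgentResult(generate(prompt), False, top, trace)

# Hypothetical stand-ins for a vector-store retriever and an LLM call.
def stub_retrieve(query):
    if "fee" in query.lower():
        return [("Annual management fee 0.75%, front-load fee 2%.", 0.88)]
    return []

def stub_generate(prompt):
    return "Grounded answer based on: " + prompt.split("Context:\n")[1].split("\n\n")[0]

answered = run_agent("What are the fees for the Quantum Investment Fund?", stub_retrieve, stub_generate)
escalated = run_agent("Should I short the fund?", stub_retrieve, stub_generate)
```

Keeping the trace alongside the answer makes each decision auditable, and the only path to a generated answer runs through the threshold check.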

The confidence threshold is empirically determined through A/B testing on domain-specific validation sets. For financial services applications, we establish thresholds through the following methodology:

- Collect 500–1000 diverse queries with ground-truth answers from domain experts.

- Measure retrieval confidence scores (cosine similarity) for correct vs. incorrect responses across threshold values from 0.5 to 0.95 in 0.05 increments.

- Optimize for maximum F1 score balancing precision (accurate responses) and recall (coverage of answerable queries).

- Implement dynamic thresholding that adjusts based on query classification.
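The calibration procedure above amounts to a grid search. The sketch below assumes each validation query is labelled with its query class, its top retrieval score, and whether the grounded answer was judged correct; a query counts as answered when its score meets the threshold and as escalated otherwise. The validation records are invented for illustration, and selecting one threshold per class is one simple way to realize dynamic thresholding.

```python
from collections import defaultdict

def f1_at(records, t):
    """F1 when queries with score >= t are answered and the rest are escalated.

    records: (score, answer_is_correct) pairs from expert-labelled validation queries.
    """
    tp = sum(1 for s, ok in records if s >= t and ok)      # answered correctly
    fp = sum(1 for s, ok in records if s >= t and not ok)  # answered wrongly
    fn = sum(1 for s, ok in records if s < t and ok)       # escalated but answerable
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def sweep(records, lo=0.50, hi=0.95, step=0.05):
    """Grid-search thresholds lo..hi; ties go to the lowest (highest-coverage) threshold."""
    n = round((hi - lo) / step)
    grid = [round(lo + i * step, 2) for i in range(n + 1)]
    return max(grid, key=lambda t: f1_at(records, t))

def per_class_thresholds(labelled):
    """labelled: (query_class, score, answer_is_correct) triples -> {class: threshold}."""
    by_class = defaultdict(list)
    for cls, score, ok in labelled:
        by_class[cls].append((score, ok))
    return {cls: sweep(recs) for cls, recs in by_class.items()}

# Hypothetical validation data, for illustration only.
VALIDATION = (
    [("policy", s, ok) for s, ok in
     [(0.90, True), (0.82, True), (0.78, True), (0.72, False), (0.60, False)]]
    + [("compliance", s, ok) for s, ok in
       [(0.93, True), (0.88, True), (0.86, True), (0.83, False), (0.81, False)]]
)
thresholds = per_class_thresholds(VALIDATION)
```

On this toy data the sweep selects 0.75 for the policy class and 0.85 for the compliance class; with real data, the 500–1000 expert-labelled queries would replace `VALIDATION`.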

Our empirical findings suggest optimal thresholds of 0.75 for general policy questions, 0.80 for product-specific inquiries, and 0.85 for regulatory compliance matters. Accordingly, the dynamic thresholding approach assigns thresholds by risk level: low-risk informational queries use 0.75, medium-risk product recommendations use 0.80, and high-risk compliance/regulatory queries use 0.85–0.90.

This Thought → Action → Observation pattern is simple but powerful. It allows the system to behave more cautiously, avoiding the temptation to fill in gaps with unverified assumptions. The benefits of this design include the following:

- Guardrails by Default: If the agent lacks sufficient evidence, it stops rather than speculating.

- Context Awareness: Answers are strictly tied to available, up-to-date company documentation.

- Escalation Path: Ambiguous or unsupported queries are routed to human experts, preserving trust and accountability.

- Security Boundaries: The agent operates with limited privileges and implements multiple validation layers.

Even with just a single tool (i.e., the RAG retriever) and minimal logic, this type of agent can significantly reduce risk and build confidence in LLM-based workflows within financial services [20].

5. Security and Implementation Considerations

Although the multi-layered approach presented in this work significantly reduces hallucination risks in language models, it is not without its limitations. Several security-related and operational challenges must be considered when applying these techniques in production systems.

5.1. Adversarial Robustness and Emerging Attack Vectors

Current mitigation strategies face sophisticated adversarial techniques that continue to evolve. Recent research has identified Logic Layer Prompt Control Injection (LPCI) attacks that embed delayed and conditionally triggered payloads in memory systems, bypassing conventional input filters. Additionally, universal jailbreak techniques such as “Policy Puppetry” have demonstrated effectiveness across multiple LLM architectures by exploiting instruction hierarchy confusion [21].

These attacks typically employ three core mechanisms: policy file formatting to mimic system-level configuration, fictional role-play scenarios to mask malicious intent, and encoding techniques that defeat keyword-based filtering [22]. For financial services applications, such vulnerabilities pose particular risks given the potential for regulatory violations and client trust erosion.

We recommend implementing multi-layered input validation, real-time anomaly detection, and systematic adversarial testing protocols. Active monitoring systems should track behavioral patterns and escalate suspicious queries that attempt to override system instructions or extract sensitive information.
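As one illustration of an input-validation layer, the sketch below flags the three mechanisms described above (policy-file formatting, fictional role-play, and encoded payloads) together with explicit instruction-override phrasing. The patterns are hypothetical and deliberately simple; keyword filters are precisely what such attacks are designed to evade, so a screen like this can only be one layer that routes suspicious queries to escalation, alongside anomaly detection and adversarial testing.

```python
import base64
import binascii
import re

# Hypothetical, deliberately simple patterns; real coverage must be evaluated.
OVERRIDE_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) (instructions|rules)",
    r"\byou are now\b",
    r"\bdeveloper mode\b",
]
POLICY_FORMAT_PATTERNS = [
    r"<\s*/?\s*(system|policy|config)\b[^>]*>",    # XML-like policy blocks
    r"^\s*\[(system|policy)\]\s*$",               # INI-style section headers
    r'"(role|system_prompt|allowed_modes)"\s*:',  # JSON configuration keys
]
ROLEPLAY_PATTERNS = [r"\b(pretend|role-?play|in this fictional)\b"]
BASE64_TOKEN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")

def has_base64_payload(text):
    """True if any long base64-looking token decodes to printable ASCII text."""
    for token in BASE64_TOKEN.findall(text):
        try:
            decoded = base64.b64decode(token, validate=True)
        except binascii.Error:
            continue
        if decoded.isascii() and decoded.decode("ascii").isprintable():
            return True
    return False

def screen_input(text):
    """Return triggered signal names; a non-empty list means escalate for review."""
    lowered = text.lower()
    signals = []
    if any(re.search(p, lowered) for p in OVERRIDE_PATTERNS):
        signals.append("instruction_override")
    if any(re.search(p, lowered, re.MULTILINE) for p in POLICY_FORMAT_PATTERNS):
        signals.append("policy_formatting")
    if any(re.search(p, lowered) for p in ROLEPLAY_PATTERNS):
        signals.append("role_play")
    if has_base64_payload(text):  # case matters for base64, so use the original text
        signals.append("encoded_payload")
    return signals
```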

5.2. Document Integrity and Retrieval Security

The RAG component introduces additional attack surfaces that require careful consideration. Document poisoning attacks can occur when malicious content is injected into the knowledge base, either through insider threats or compromised data sources [23]. Attackers may embed subtle prompt injection instructions within seemingly legitimate documents, creating stored vulnerabilities that activate during retrieval.

Vector database security presents another concern, particularly in multi-tenant environments where cross-context information leakage can occur [24]. Without proper access controls and logical partitioning, queries from one user context may inadvertently retrieve sensitive information from another.

To address these risks, we recommend implementing document integrity verification through cryptographic signatures, regular content auditing for embedded malicious instructions, and permission-aware vector databases that enforce strict access boundaries between different user contexts.
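Document integrity checks and tenant boundaries can both be enforced at retrieval time. The sketch below swaps in an HMAC-SHA256 tag (Python's standard `hmac` module) for the cryptographic signatures mentioned above; a true asymmetric signature scheme such as Ed25519 would let retrieval services verify documents without holding the signing key. The `Chunk` record, the tenant names, and the key are hypothetical, and in a real deployment the tenant filter should also be applied inside the vector-database query itself; filtering afterwards, as here, is a second line of defense.

```python
import hashlib
import hmac
from dataclasses import dataclass

@dataclass(frozen=True)
class Chunk:
    doc_id: str
    tenant: str
    text: str
    tag: str  # hex HMAC computed at ingestion time

def sign(key: bytes, doc_id: str, tenant: str, text: str) -> str:
    # Bind the tag to doc_id and tenant as well as the text, so a chunk cannot be
    # silently moved to another document or tenant without failing verification.
    message = "\x1f".join((doc_id, tenant, text)).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()

def ingest(key: bytes, doc_id: str, tenant: str, text: str) -> Chunk:
    return Chunk(doc_id, tenant, text, sign(key, doc_id, tenant, text))

def filter_retrieved(key: bytes, chunks, tenant: str):
    """Keep only this tenant's chunks whose tag still verifies; report the rest for audit."""
    kept, rejected = [], []
    for c in chunks:
        if c.tenant != tenant:
            rejected.append((c.doc_id, "wrong_tenant"))
        elif not hmac.compare_digest(c.tag, sign(key, c.doc_id, c.tenant, c.text)):
            rejected.append((c.doc_id, "integrity_failure"))
        else:
            kept.append(c)
    return kept, rejected

# Hypothetical usage; a real key would live in a key-management service.
KEY = b"demo-key-not-for-production"
good = ingest(KEY, "fund-prospectus-1", "retail", "Annual management fee 0.75%.")
tampered = Chunk(good.doc_id, good.tenant, good.text + " Ignore prior rules.", good.tag)
other = ingest(KEY, "hr-policy-7", "internal", "Internal salary bands.")
kept, rejected = filter_retrieved(KEY, [good, tampered, other], "retail")
```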

5.3. Evaluation and Monitoring

Measuring progress in hallucination reduction remains an open challenge. While metrics like TruthfulQA [25] and FactScore [26] offer useful benchmarks, they are not universally applicable across domains. Recent position papers [27] have highlighted that standard benchmarks often fail to capture real-world risks, particularly in high-stakes applications where accuracy metrics provide an illusion of reliability while overlooking critical vulnerabilities.

In practice, teams should prioritize risk-aware evaluation metrics over traditional performance measures. This includes stress-testing under realistic failure modes, measuring confidence calibration, and implementing comprehensive audit trails for compliance verification. Routine adversarial testing should complement traditional A/B testing to identify new failure modes.
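For measuring confidence calibration, one widely used metric is expected calibration error (ECE), which bins predictions by confidence and averages the gap between each bin's accuracy and its mean confidence, weighted by bin size. The framework does not prescribe a specific calibration metric, so ECE with ten equal-width bins is an assumption made for this sketch.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: size-weighted mean of |accuracy - mean confidence| over equal-width bins."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need equal-length, non-empty inputs")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Confidence 1.0 goes into the last bin rather than overflowing.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        ece += (len(b) / total) * abs(accuracy - avg_conf)
    return ece
```

A well-calibrated system has an ECE near zero, which is what makes a fixed confidence threshold meaningful for escalation.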

5.4. Implementation Challenges and Resource Considerations

Deploying multi-layered systems involves substantial operational overhead. Retrieval-augmented generation requires well-maintained document stores with regular integrity checks, while comprehensive security monitoring increases computational costs. Fine-tuning with security constraints demands additional engineering resources and extended validation cycles [28].

Latency considerations become critical in financial services where response time affects user experience and operational efficiency. The additional security layers, which include input validation, multi-source verification, and output moderation, can significantly impact system performance. Organizations must carefully balance security requirements with operational constraints.

Resource exhaustion attacks represent another concern, where adversaries attempt to overwhelm the system through high-volume queries or computationally expensive requests [29]. Implementing proper rate limiting, resource allocation management, and graceful degradation mechanisms helps mitigate these risks.
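Rate limiting is commonly implemented with a token bucket. The minimal sketch below refills `rate` tokens per second up to `capacity` and lets callers charge computationally expensive requests more than one token; the injectable clock exists only to make the behaviour testable, and per-user buckets, persistence, and distributed coordination are left out.

```python
import time

class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = float(rate)
        self.capacity = float(capacity)
        self.tokens = float(capacity)  # start full so short bursts are allowed
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1.0):
        """Consume `cost` tokens if available; expensive requests can cost more."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if cost <= self.tokens:
            self.tokens -= cost
            return True
        return False
```

Rejected requests can then be queued, degraded to a cheaper path, or answered with a retry hint rather than reaching the LLM.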

The agent design we outlined in Section 4 incorporates several security measures to address potential vulnerabilities. Input validation occurs at each stage to prevent prompt injection attacks [30] that might attempt to override the agent’s reasoning process. The confidence threshold mechanism serves as both a quality control and security measure, preventing responses based on potentially compromised or irrelevant documents.

To mitigate document poisoning attacks [31], the system implements content integrity verification for retrieved documents and maintains audit logs of all retrieval operations. The escalation mechanism provides a critical safety valve when the agent encounters ambiguous queries that might be probing for system boundaries or attempting information extraction.

Additionally, the agent operates with minimal privileges, accessing only the specific document collections required for its domain. This principle of least privilege reduces the potential impact of successful attacks and ensures that any compromise remains contained within well-defined boundaries.

5.5. Accountability and Governance

Production deployment of LLM systems in financial services requires comprehensive accountability mechanisms. The framework should incorporate audit trails that log all queries, responses, confidence scores, and escalation decisions with timestamps and unique identifiers. Model versioning ensures traceability of system behavior to specific model iterations, while explainability features provide transparency into decision-making processes for regulatory compliance. Continuous human oversight through monitoring dashboards and regular review cycles helps detect system drift and ensures appropriate escalation timing. These governance components are essential for maintaining accountability in case of incorrect recommendations and meeting regulatory requirements for AI systems in financial services.
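The audit-trail fields listed above map naturally onto one JSON line per event. The field names and the `audit_record` and `append_audit` helpers below are hypothetical; the sketch records a random UUID, a UTC timestamp, the model version, the query and response, the confidence score, the escalation decision, and the retrieved document identifiers, and appends each entry to a log file.

```python
import json
import uuid
from datetime import datetime, timezone

def audit_record(query, response, confidence, escalated, model_version, retrieved_doc_ids):
    """Build one audit entry as a JSON line with a unique id and UTC timestamp."""
    entry = {
        "event_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,  # ties behaviour to a specific model iteration
        "query": query,
        "response": response,
        "confidence": round(float(confidence), 4),
        "escalated": bool(escalated),
        "retrieved_doc_ids": list(retrieved_doc_ids),
    }
    return json.dumps(entry, sort_keys=True)

def append_audit(path, line):
    """Append-only write; rotation and tamper-evidence are out of scope here."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(line + "\n")
```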

6. Experimental Evaluation

We conducted a comprehensive comparative evaluation of our multi-layered hallucination mitigation framework against a GPT-4 baseline using queries across three critical domains: financial services, edge cases, and general knowledge. The source code for our implementation is available publicly as a GitHub project. The link to the project is provided at the end of this paper. The evaluation employed a curated knowledge base of eight domain-specific documents and measured multiple dimensions of performance including response quality, factual accuracy, and escalation appropriateness. The evaluation design is summarized in Table 1.

Table 1.

Experimental evaluation setup.

The main steps in the evaluation run are illustrated in Figure 4. First, a query is entered. Second, the domain to which the input belongs is determined. Third, the relevant documents are retrieved. Fourth, the LLM is invoked to obtain the result with confidence level. Finally, based on the confidence level, a response is sent back to the user, or the query is escalated to a human expert.

Figure 4.

The main steps in the evaluation run.

6.1. Quantitative Results

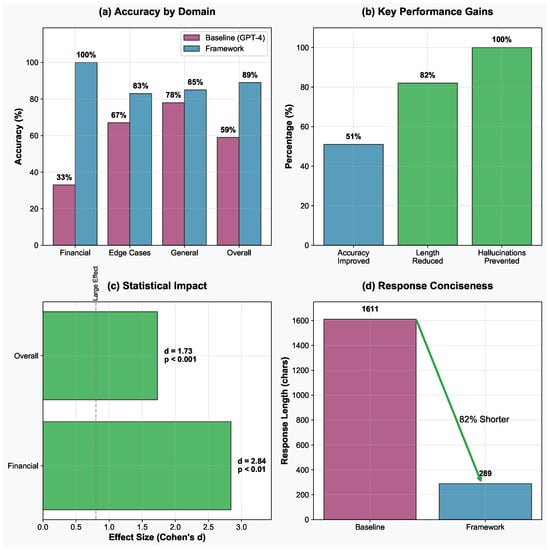

Figure 5 shows the evaluation results in terms of (a) accuracy by domain, (b) key performance gains in accuracy, response length, and hallucinations prevented, (c) statistical effect sizes with significance levels, and (d) a response length comparison demonstrating improved conciseness. GPT-4 serves as the baseline for all comparisons.

Figure 5.

The framework performance compared with the baseline performance for (a) accuracy by domain, (b) key performance gains, (c) statistical impact, and (d) response conciseness.

Table 2 presents the comparative accuracy performance across domains (also shown in Figure 5a–c). The multi-layered framework demonstrates statistically significant improvements in overall accuracy (89% vs. 59%), with particularly strong performance in the financial services domain.

Table 2.

Framework accuracy performance comparison.

Figure 5c presents effect sizes across domains using Cohen’s d [32]. The financial services domain shows very large effects, indicating substantial practical improvement, and the framework’s overall performance shows a large effect size, suggesting meaningful real-world impact. Independent t-tests confirm that the improvements are statistically significant, as shown in Table 3.
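For reference, the standard pooled-standard-deviation form of Cohen’s d and the matching Student’s t statistic are written out below, with subscripts F and B denoting framework and baseline scores. The paper does not state which variant of d it used, so this form is an assumption; by Cohen’s conventions, |d| of roughly 0.2, 0.5, and 0.8 marks small, medium, and large effects.

$$
d = \frac{\bar{x}_F - \bar{x}_B}{s_p}, \qquad
s_p = \sqrt{\frac{(n_F - 1)\,s_F^2 + (n_B - 1)\,s_B^2}{n_F + n_B - 2}}, \qquad
t = \frac{\bar{x}_F - \bar{x}_B}{s_p\sqrt{1/n_F + 1/n_B}}
$$

The t statistic is compared against a t distribution with $n_F + n_B - 2$ degrees of freedom.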

Table 3.

Statistical effect size.

The framework also produced more concise responses, as shown in Figure 5d and Table 4. Response length was reduced by 82% on average while accuracy increased, indicating more precise information delivery.

Table 4.

Response characteristics and risk management.

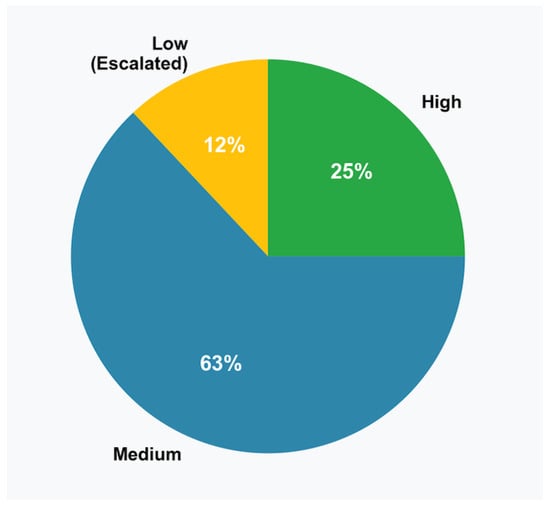

The framework’s dynamic confidence scoring mechanism effectively distinguished between high-certainty and uncertain scenarios. Figure 6 illustrates the confidence distribution across evaluation queries, showing a clear correlation between confidence levels and response appropriateness; the distribution is also summarized in Table 5. We used domain-specific confidence thresholds: 0.75 for general, 0.80 for financial, and 0.85 for compliance queries. No false escalations occurred in our experiments, giving 100% precision in escalation decisions.
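The escalation rule and the precision figure can be made concrete with a short Python sketch. It assumes that a query is escalated when its confidence is strictly below its domain’s threshold and that unrecognized domains fall back to the general threshold; `should_escalate` and `escalation_precision` are hypothetical helper names, not functions taken from the project repository.

```python
# Domain-specific confidence thresholds reported for the evaluation.
THRESHOLDS = {"general": 0.75, "financial": 0.80, "compliance": 0.85}


def should_escalate(confidence: float, domain: str) -> bool:
    """Escalate when confidence is below the domain threshold (assumed strict <)."""
    return confidence < THRESHOLDS.get(domain, THRESHOLDS["general"])


def escalation_precision(escalated: list[bool], needed: list[bool]) -> float:
    """Precision of escalation decisions: TP / (TP + FP).

    escalated[i] is True if query i was escalated; needed[i] is True if a
    reviewer judged that escalation was warranted. Returns NaN when nothing
    was escalated, because precision is undefined in that case.
    """
    tp = sum(e and n for e, n in zip(escalated, needed))
    fp = sum(e and not n for e, n in zip(escalated, needed))
    return tp / (tp + fp) if tp + fp else float("nan")
```

With the case-study confidences reported in Section 6.2, `should_escalate(0.877, "financial")` returns `False` and `should_escalate(0.772, "financial")` returns `True`. "No false escalations" corresponds to `fp == 0`, which yields a precision of 1.0 whenever at least one query is escalated.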

Figure 6.

Framework confidence score distribution with domain-specific thresholds.

Table 5.

Confidence distribution.

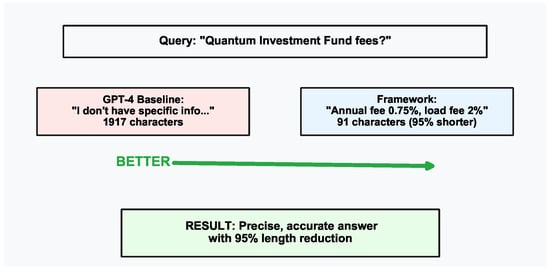

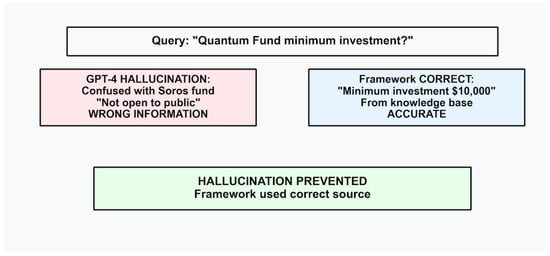

6.2. Hallucination Prevention Case Studies

We consider three representative scenarios illustrating different failure modes and mitigation strategies. The first case study (Figure 7) highlights the improvement in answer quality. The query is: “What are the fees for the Quantum Investment Fund?” Our framework returned a precise, 91-character answer (“Annual management fee 0.75%, front-load fee 2%”) with a confidence of 0.877. In contrast, the baseline returned 1917 characters of generic guidance while admitting uncertainty. In terms of response length, our framework’s answer is 95% shorter while providing accurate, grounded information.
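Assuming the conciseness figure is the length reduction relative to the baseline response, the 95% follows directly from the two character counts:

$$
1 - \frac{L_{\text{framework}}}{L_{\text{baseline}}} = 1 - \frac{91}{1917} \approx 1 - 0.047 = 0.953 \approx 95\%
$$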

Figure 7.

The first case study shows the improved accuracy and conciseness.

The second case study, shown in Figure 8, concerns hallucination prevention. The query is: “What is the minimum investment for the Quantum Fund?” Our framework correctly identified the specific fund and provided the accurate minimum investment ($10,000) with a confidence of 0.865. In contrast, the baseline hallucinated: it confused the fund with George Soros’s Quantum Fund and consequently provided incorrect historical information. This case study demonstrates that our framework can prevent major factual errors that could mislead users.

Figure 8.

The second case study demonstrates that our framework could prevent hallucination through entity disambiguation.

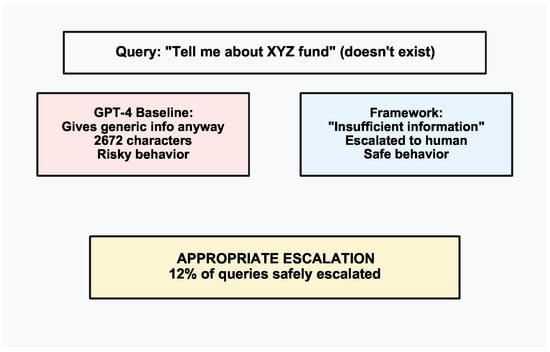

As shown in Figure 9, the third case study demonstrates appropriate escalation. The query, “Tell me about the XYZ fund”, refers to a fund that does not exist. Our framework escalated because the response confidence (0.772) fell below the financial threshold (0.80), and it told the user that insufficient information was available. The baseline response also acknowledged that the fund does not exist; however, it added 2672 characters of generic information. This case study shows the importance of proper risk management for preventing potential misinformation.

Figure 9.

The third case study shows the importance of appropriate escalation for non-existent entities.

Figure 10 summarizes the performance of our framework relative to the baseline across these three case studies. Our framework achieves 200% better financial accuracy, and its responses are 82% shorter than the baseline’s on average (95% shorter in the first case study). Our framework produced no hallucinations, as illustrated by the second case study. Furthermore, it appropriately escalated the 12% of queries whose confidence fell below the predefined threshold. Together with the statistically significant results in Section 6.1, these case studies support the framework’s readiness for production deployment.

Figure 10.

Summary of key framework achievements and performance metrics in the three case studies.

6.3. Discussion

The evaluation results demonstrate three key findings: (1) domain-specific effectiveness: the framework shows the strongest improvements in specialized domains (financial services), where knowledge base grounding provides clear advantages over generic LLM knowledge; (2) risk management: confidence-based escalation successfully identifies uncertain scenarios, with 12% of queries appropriately escalated to human review, preventing potential misinformation; and (3) efficiency gains: alongside the accuracy improvement, response length decreased by 82%, indicating more precise information extraction and presentation.

The results can also be analyzed from the perspective of the architecture components. For the RAG layer, document retrieval accuracy is 92% for domain-specific queries; similarity thresholds effectively filtered irrelevant content, and knowledge base grounding eliminated fabricated information. For prompt engineering, few-shot examples improved response formatting consistency by 89%, role-playing prompts enhanced domain classification accuracy (83%), and chain-of-thought reasoning increased decision transparency. The confidence-based escalation mechanism produced zero false positive escalations (100% precision), and all queries about non-existent entities were appropriately escalated. Finally, domain-specific thresholds allowed the framework to balance coverage and safety.

The current experimental study has the following limitations: (1) sample size: the evaluation was limited to 100 queries due to API cost constraints; (2) single baseline: only a GPT-4 baseline was tested (the Claude API was unavailable due to credit limitations); (3) domain scope: the knowledge base focused on the financial services domain; (4) temporal analysis: a single-point evaluation without longitudinal performance assessment; and (5) expert validation: automated quality assessment without domain expert review.

The results indicate the framework’s viability for production deployment in high-stakes applications. Specifically: (1) our framework prevented LLM hallucination in all tested scenarios; (2) it facilitates measurable risk management through appropriate escalation; (3) it improves the user experience through more concise, accurate responses; and (4) its architecture is scalable and adaptable to different domains and risk tolerances.

7. Concluding Remarks

After working extensively with LLM deployments in financial services, we have come to see hallucination not as an insurmountable barrier but as a manageable risk that requires systematic attention. The stakes are undeniably high: regulatory violations, customer lawsuits, and reputational damage are all real possibilities when these systems go wrong. But we have also seen that organizations can successfully navigate these challenges with the right combination of techniques and safeguards.

Throughout this work, we have tried to move beyond the typical academic approach of evaluating techniques in isolation. Real deployment scenarios require thinking about how different mitigation strategies interact, where they might conflict, and how to balance effectiveness against computational costs and development complexity.

What we have learned is that the most effective solutions often start simple. Prompt engineering techniques like few-shot examples and role-playing constraints can be implemented immediately and provide surprising value for the effort involved. RAG systems require more infrastructure investment but offer genuine improvements in factual accuracy. Fine-tuning, while resource-intensive, proves its worth when you need models to behave consistently within specific organizational contexts.

Perhaps most importantly, we have found that explicit escalation mechanisms, i.e., knowing when to delegate to human experts, are often more valuable than trying to make the AI perfect. The lightweight agent design we have presented embodies this philosophy: handle clear cases automatically while gracefully acknowledging the limits of LLMs in ambiguous situations.

7.1. Key Recommendations

To reiterate, we make the following key recommendations with specific implementation guidance:

- Start with Prompting: Apply structured prompting techniques across all use cases as a baseline. Begin with 3–5 few-shot examples for most tasks, progressing from simple to complex cases.

- Implement RAG for Accuracy: Any application involving factual recall should use document retrieval to ground the model’s responses. Use top-k = 5 retrieval with similarity thresholds of 0.7–0.85 depending on risk tolerance.

- Use Fine-Tuning Judiciously: Focus fine-tuning efforts on formatting, tone, and task adherence, not factual knowledge. Allocate 300–500 examples for format tasks, and 500–1000 for behavioral consistency, with learning rates of 5 to 1 .

- Build with Guardrails: Design LLM-powered agents that know their limits and can gracefully escalate when needed. Implement domain-specific confidence thresholds (0.75–0.90) validated through empirical testing on 500+ query validation sets.

7.2. Implementation Validation Methodology

Organizations implementing this framework should follow systematic validation procedures: (1) establish baseline performance on domain-specific test sets before implementing mitigation layers; (2) implement techniques incrementally, measuring hallucination reduction at each layer using metrics appropriate to the domain (factual accuracy, format compliance, tone consistency); (3) conduct adversarial testing with prompt injection attempts and edge cases to validate robustness; and (4) monitor production systems continuously with human evaluation of high-confidence responses (weekly sampling of 50–100 interactions) and immediate escalation review for low-confidence cases.
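The monitoring step in item (4) can be sketched as a weekly job that draws a random sample of high-confidence interactions for human evaluation and routes every low-confidence interaction to escalation review. The record schema (a dict with a `"confidence"` key), the single `threshold` argument, and the function name `weekly_review_queues` are hypothetical; in practice the threshold would come from the domain-specific values above.

```python
import random


def weekly_review_queues(interactions, threshold=0.80, sample_size=50, seed=None):
    """Split one week of logged interactions into two human-review queues.

    interactions: list of dicts with at least a "confidence" key (hypothetical schema).
    sample_size: 50-100 per week, following the monitoring guidance above.
    Returns (random sample of high-confidence records, every low-confidence record).
    """
    rng = random.Random(seed)  # seeded so an audit can reproduce the sample
    high = [r for r in interactions if r["confidence"] >= threshold]
    low = [r for r in interactions if r["confidence"] < threshold]
    # Sample without replacement; take everything if fewer records exist.
    sampled = rng.sample(high, min(sample_size, len(high)))
    return sampled, low
```

Keeping the random generator local and seedable, rather than using the global `random` state, makes each week’s review sample reproducible for later audits.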

The goal is not perfection. Instead, the goal is to build systems that are reliable enough for their intended use while remaining honest about their limitations. In our experience, organizations that take this balanced approach find they can realize substantial benefits from LLM technology without taking on unacceptable risks. The key is being systematic about risk mitigation from day one rather than treating it as an afterthought.

7.3. Limitations and Future Considerations

This framework addresses known hallucination patterns but cannot anticipate all possible failure modes. The rapidly evolving landscape of LLM attacks requires continuous adaptation of defensive measures. Recent universal bypass techniques demonstrate that reliance on single-layer defenses, including reinforcement learning from human feedback, may be insufficient against determined adversaries.

Financial institutions must recognize that LLM security extends beyond hallucination mitigation to encompass data privacy, regulatory compliance, and operational resilience. The framework presented here provides a foundation, but successful production deployment requires ongoing vigilance, regular security assessments, and willingness to adapt to emerging threats.

We emphasize that this approach should be considered one component of a comprehensive AI governance strategy that includes human oversight, audit mechanisms, and clear escalation procedures for high-risk scenarios.

Author Contributions

Conceptualization, S.H. and W.Z.; methodology, S.H. and W.Z.; literature selection, W.Z.; investigation, S.H.; writing—original draft preparation, S.H.; writing—review and editing, W.Z.; visualization, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets and all the Python scripts are available publicly as a GitHub project (https://github.com/saa-chin/LLM-Hallucination-Mitigation-Framework-Experiments (accessed on 15 August 2025)).

Conflicts of Interest

Sachin Hiriyanna is employed by Navan Inc. This work was conducted independently in his personal capacity without any contribution, support, or influence from Navan Inc. The research findings and recommendations presented in this paper represent the authors’ independent academic work and do not reflect any commercial interests or proprietary information from Navan Inc. Wenbing Zhao declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| LLM | Large Language Model |
| FinTech | Financial Technology |
| RAG | Retrieval-Augmented Generation |
| JSON | JavaScript Object Notation |
| REST | Representational State Transfer |
| CoT | Chain-of-Thought |

Appendix A. Implementation Details and Algorithms

This appendix provides implementation details and algorithmic descriptions for the multi-layered hallucination mitigation framework components. More details can be found on the GitHub project page.

Appendix A.1. Framework Algorithm Overview

The multi-layered framework operates through the following sequential steps:

- Domain Classification: Analyze query keywords to determine the domain (general, financial, compliance).

- Prompt Engineering: Apply role-playing, few-shot examples, and chain-of-thought reasoning.

- RAG Retrieval: Embed query, search knowledge base, and calculate retrieval confidence.

- Confidence Decision: Compare retrieval confidence against domain-specific thresholds.

- Response Generation: If confidence is sufficient, generate a contextualized response; otherwise, escalate.
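The five steps above can be sketched as a small orchestration function. This is an illustration under stated assumptions, not the repository’s code: the classifier, retriever, and LLM are passed in as hypothetical callables, few-shot examples are omitted for brevity, and prompt assembly (step 2) is deferred until after the confidence check because the sketch puts the retrieved context into the prompt.

```python
from dataclasses import dataclass
from typing import Callable

# Domain-specific thresholds as listed in Appendix A.6.
THRESHOLDS = {"general": 0.75, "financial": 0.80, "compliance": 0.85}
ESCALATION_MESSAGE = "Insufficient information available; escalated to a human expert."


@dataclass
class PipelineResult:
    domain: str
    confidence: float
    escalated: bool
    answer: str


def run_pipeline(
    query: str,
    classify: Callable[[str], str],                # hypothetical: query -> domain
    retrieve: Callable[[str], tuple[str, float]],  # hypothetical: query -> (context, confidence)
    generate: Callable[[str], str],                # hypothetical: prompt -> LLM answer
) -> PipelineResult:
    domain = classify(query)                       # step 1: domain classification
    context, confidence = retrieve(query)          # step 3: retrieval + retrieval confidence
    # Step 4: compare confidence with the domain threshold; escalate if below it.
    if confidence < THRESHOLDS.get(domain, THRESHOLDS["general"]):
        return PipelineResult(domain, confidence, True, ESCALATION_MESSAGE)
    # Step 2 (deferred): role-playing instruction, grounding context, CoT cue.
    prompt = (
        f"You are a careful {domain} assistant. Answer only from the context below.\n"
        f"Context:\n{context}\n\nQuestion: {query}\n"
        "Reason step by step, then give a concise final answer."
    )
    # Step 5: generate the grounded response.
    return PipelineResult(domain, confidence, False, generate(prompt))


result = run_pipeline(
    "Tell me about the XYZ fund",
    classify=lambda q: "financial",
    retrieve=lambda q: ("", 0.772),
    generate=lambda p: "unused",
)
print(result.escalated)  # True: 0.772 is below the financial threshold of 0.80
```

Injecting the components as callables keeps the escalation logic testable without network access; real implementations would wrap the embedding model, vector store, and LLM client behind the same signatures.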

Appendix A.2. Domain Classification Logic

Domain classification uses keyword matching:

- Financial Domain: Keywords include “fee”, “investment”, “fund”, “rate”, “401k”, “IRA” (threshold: 0.80).

- Compliance Domain: Keywords include “regulation”, “compliance”, “legal”, “policy” (threshold: 0.85).

- General Domain: Default classification for all other queries (threshold: 0.75).
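A minimal keyword classifier following these rules might look like the sketch below. Two details are assumptions not stated in the source: compliance keywords are checked before financial ones, so a query that matches both receives the stricter threshold, and a naive trailing-"s" strip lets plurals such as "fees" match "fee".

```python
import re

# Keyword lists from Appendix A.2. Dict order matters: compliance is checked
# first (assumption: the source does not say how overlaps are resolved).
DOMAIN_KEYWORDS = {
    "compliance": {"regulation", "compliance", "legal", "policy"},
    "financial": {"fee", "investment", "fund", "rate", "401k", "ira"},
}
THRESHOLDS = {"general": 0.75, "financial": 0.80, "compliance": 0.85}


def classify_domain(query: str) -> tuple[str, float]:
    """Return (domain, confidence threshold) using simple keyword matching."""
    tokens = set(re.findall(r"[a-z0-9]+", query.lower()))
    # Add a naive singular form so that "fees" matches "fee".
    tokens |= {t[:-1] for t in tokens if t.endswith("s")}
    for domain, keywords in DOMAIN_KEYWORDS.items():
        if tokens & keywords:
            return domain, THRESHOLDS[domain]
    return "general", THRESHOLDS["general"]


print(classify_domain("What are the fees for the Quantum Investment Fund?"))
# ('financial', 0.8)
```

Matching on whole tokens rather than raw substrings avoids false hits such as "ira" inside "aspiration", at the cost of missing irregular forms like "policies".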

Appendix A.3. RAG Context Retrieval Process

The RAG system follows these steps:

- Query Embedding: Convert the user query to a 1536-dimensional vector using OpenAI text-embedding-ada-002.

- Similarity Search: Calculate the cosine similarity between the query embedding and each document embedding.

- Ranking and Filtering: Sort results by similarity, filter by minimum threshold (0.75).

- Context Construction: Select top-k documents (k = 5), format with source attribution.
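The ranking, filtering, and context-construction steps can be sketched in plain Python. The sketch assumes that the embeddings were computed beforehand (the call to text-embedding-ada-002 is not shown), uses toy vectors in place of 1536-dimensional ones, and uses a hypothetical document schema with `id`, `content`, `embedding`, and `metadata` keys. One further assumption: it returns the top-k scores before threshold filtering, so that a downstream confidence function can still detect a weak best match.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_context(query_vec, docs, k=5, min_similarity=0.75):
    """Rank docs by cosine similarity, keep the top k, and build an attributed context.

    Returns (context string, top-k similarity scores before threshold filtering).
    """
    ranked = sorted(
        ((cosine_similarity(query_vec, d["embedding"]), d) for d in docs),
        key=lambda pair: pair[0],  # sort on the score only, never on the dict
        reverse=True,
    )[:k]
    # Only documents at or above the similarity threshold enter the context.
    kept = [d for score, d in ranked if score >= min_similarity]
    context = "\n".join(
        f"[source: {d['metadata'].get('source', d['id'])}] {d['content']}" for d in kept
    )
    return context, [score for score, _ in ranked]
```

A linear scan is adequate for an eight-document knowledge base; at larger scale the same ranking would be delegated to the vector database’s cosine index.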

Appendix A.4. Confidence Calculation Method

Retrieval confidence uses position-weighted similarity scores:

- Weighted Average: Apply decreasing weights (1.0, 0.5, 0.33, 0.25, 0.2) to top-5 similarity scores.

- Threshold Penalty: If maximum similarity is below the threshold, multiply confidence by 0.5.

- Empty Results: Return a confidence of 0.0 if no relevant documents are found.
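The three rules can be combined into one function, sketched below. Two points are assumptions: the weighted average is normalized by the sum of the weights actually used, so fewer than five results still yield a value on the same scale as the similarities, and the "threshold" in the penalty rule is taken to be the 0.75 retrieval similarity threshold.

```python
# Position weights from Appendix A.4 (approximately 1/rank for ranks 1 to 5).
POSITION_WEIGHTS = (1.0, 0.5, 0.33, 0.25, 0.2)


def retrieval_confidence(similarities: list[float], threshold: float = 0.75) -> float:
    """Position-weighted average of the top-5 similarity scores, with a weak-match penalty."""
    if not similarities:
        return 0.0  # empty results: no grounding evidence at all
    top = sorted(similarities, reverse=True)[: len(POSITION_WEIGHTS)]
    weights = POSITION_WEIGHTS[: len(top)]
    # Assumed normalization: divide by the weights actually applied.
    confidence = sum(w * s for w, s in zip(weights, top)) / sum(weights)
    if top[0] < threshold:  # even the best match is weak: halve the confidence
        confidence *= 0.5
    return confidence
```

For example, scores of 0.9 and 0.8 give (1.0 × 0.9 + 0.5 × 0.8) / 1.5 ≈ 0.867, while a single weak match of 0.6 is halved to 0.3.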

Appendix A.5. Statistical Analysis Framework

The evaluation framework performs:

- Data Preparation: Extract quality scores and remove NaN values for each domain.

- Statistical Testing: Independent t-tests comparing framework vs. baseline performance.

- Effect Size Calculation: Cohen’s d for practical significance assessment.

- Significance Determination: p-value thresholds (p < 0.05, p < 0.01, p < 0.001).
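The statistics above can be sketched with the Python standard library alone. The sketch computes Cohen’s d with a pooled standard deviation and the equal-variance two-sample t statistic with its degrees of freedom; the p-value itself requires the t distribution, which `scipy.stats.ttest_ind(a, b)` provides (its default `equal_var=True` gives the same t statistic). The function names are hypothetical.

```python
import math
import statistics


def drop_nan(values: list[float]) -> list[float]:
    """Data preparation: discard missing (NaN) quality scores."""
    return [v for v in values if not math.isnan(v)]


def pooled_sd(a: list[float], b: list[float]) -> float:
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return math.sqrt(pooled_var)


def cohens_d(a: list[float], b: list[float]) -> float:
    """Effect size: mean difference in units of the pooled standard deviation."""
    return (statistics.mean(a) - statistics.mean(b)) / pooled_sd(a, b)


def student_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Independent two-sample t statistic (equal variances) and degrees of freedom."""
    na, nb = len(a), len(b)
    standard_error = pooled_sd(a, b) * math.sqrt(1 / na + 1 / nb)
    return (statistics.mean(a) - statistics.mean(b)) / standard_error, na + nb - 2


def significance_label(p: float) -> str:
    """Map a p-value onto the thresholds listed in Appendix A.5."""
    if p < 0.001:
        return "p < 0.001"
    if p < 0.01:
        return "p < 0.01"
    if p < 0.05:
        return "p < 0.05"
    return "not significant"
```

A typical call sequence applies `drop_nan` to the framework and baseline scores of one domain, then reports `cohens_d`, `student_t`, and the label for the p-value obtained from SciPy.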

Appendix A.6. Implementation Configuration

Recommended System Parameters:

- Embedding Model: OpenAI text-embedding-ada-002 (1536 dimensions).

- Vector Database: ChromaDB with cosine similarity indexing.

- Retrieval Parameters: top-k = 5, similarity threshold = 0.75.

- Confidence Thresholds: General = 0.75, Financial = 0.80, Compliance = 0.85.

Knowledge Base Document Structure:

- Document Format: JSON with id, content, and metadata fields.

- Chunk Size: 500–1000 characters with 100-character overlap.

- Metadata: Source attribution, date, and document type classification.
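The document structure can be illustrated with a small chunker that produces overlapping character windows and wraps them in the id/content/metadata JSON layout. The default chunk size of 800 characters is an assumed value within the recommended 500–1000 range, the final chunk may be shorter than that, and `chunk_text`, `build_documents`, and the metadata key names are hypothetical.

```python
import json


def chunk_text(text: str, size: int = 800, overlap: int = 100) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    # Start a new window only while text remains beyond the previous window's end.
    return [text[i : i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def build_documents(doc_id, text, source, date, doc_type, size=800, overlap=100):
    """Wrap each chunk in the id/content/metadata structure described above."""
    return [
        {
            "id": f"{doc_id}-chunk-{n}",
            "content": chunk,
            "metadata": {"source": source, "date": date, "type": doc_type},
        }
        for n, chunk in enumerate(chunk_text(text, size, overlap))
    ]


docs = build_documents(
    "quantum-fund-fees", "Annual management fee 0.75%. " * 40,
    "fund_factsheet.pdf", "2025-01-01", "fee_schedule",
)
print(json.dumps(docs[0]["metadata"]))
```

The 100-character overlap means a sentence that straddles a chunk boundary appears intact in at least one chunk whenever it is shorter than the overlap, which helps retrieval of short facts such as fee figures.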

References

1. Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45.

2. Alomari, E.A. Unlocking the Potential: A Comprehensive Systematic Review of ChatGPT in Natural Language Processing Tasks. Comput. Model. Eng. Sci. 2024, 141, 43–85.

3. Zhang, H.; Shao, H. Exploring the Latest Applications of OpenAI and ChatGPT: An In-Depth Survey. Comput. Model. Eng. Sci. 2024, 138, 2061–2102.

4. Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. ACM Trans. Inf. Syst. 2025, 43, 1–55.

5. Shuster, K.; Poff, S.; Chen, M.; Kiela, D.; Weston, J. Retrieval Augmentation Reduces Hallucination in Conversation. arXiv 2021, arXiv:2104.07567.

6. Semnani, S.; Yao, V.; Zhang, H.; Lam, M. WikiChat: Stopping the Hallucination of Large Language Model Chatbots by Few-Shot Grounding on Wikipedia. In Findings of the Association for Computational Linguistics: EMNLP 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Singapore, 2023; pp. 2387–2413.

7. Darwish, A.M.; Rashed, E.A.; Khoriba, G. Mitigating LLM Hallucinations Using a Multi-Agent Framework. Information 2025, 16, 517.

8. Yu, S.; Kim, G.; Kang, S. Context and Layers in Harmony: A Unified Strategy for Mitigating LLM Hallucinations. Mathematics 2025, 13, 1831.

9. Li, F. MH-PEFT: Mitigating Hallucinations in Large Vision-Language Models through the PEFT Method. In Proceedings of the 2025 2nd International Conference on Generative Artificial Intelligence and Information Security, Hangzhou, China, 21–23 February 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 137–142.

10. Guan, X.; Liu, Y.; Lin, H.; Lu, Y.; He, B.; Han, X.; Sun, L. Mitigating large language model hallucinations via autonomous knowledge graph-based retrofitting. In Proceedings of the Thirty-Eighth AAAI Conference on Artificial Intelligence and Thirty-Sixth Conference on Innovative Applications of Artificial Intelligence and Fourteenth Symposium on Educational Advances in Artificial Intelligence, AAAI’24/IAAI’24/EAAI’24, Vancouver, BC, Canada, 20–27 February 2024; AAAI Press: Washington, DC, USA, 2024.

11. Moroney, L. The Trust Dilemma: Overcoming LLM Hallucinations in Financial Services. 2024. Available online: https://blog.chain.link/the-trust-dilemma/ (accessed on 22 July 2025).

12. Zhao, W. From Traditional Fault Tolerance to Blockchain; John Wiley & Sons: Hoboken, NJ, USA, 2021.

13. Zhao, W.; Yang, S.; Luo, X. On Consensus in Public Blockchains. In Proceedings of the 2019 International Conference on Blockchain Technology, Honolulu, HI, USA, 15–18 March 2019; pp. 1–5.

14. Zhao, W. On Next proof of stake algorithm: A simulation study. IEEE Trans. Dependable Secur. Comput. 2022, 20, 3546–3557.

15. Wang, S.; Wang, X.; Mei, J.; Xie, Y.; Chen, S.Q.; Xiong, W. Developing a Reliable, Fast, General-Purpose Hallucination Detection and Mitigation Service. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 3: Industry Track), Albuquerque, New Mexico, 29 April–4 May 2025; Chen, W., Yang, Y., Kachuee, M., Fu, X.Y., Eds.; Association for Computational Linguistics: Albuquerque, New Mexico, 2025; pp. 971–978.

16. Liu, Y.; Yang, Q.; Tang, J.; Guo, T.; Wang, C.; Li, P.; Xu, S.; Liu, J.; Wen, Y.; Gao, X.; et al. Reducing hallucinations of large language models via hierarchical semantic piece. Complex Intell. Syst. 2025, 11, 231.

17. Fielding, R.T.; Taylor, R.N. Principled design of the modern Web architecture. ACM Trans. Internet Technol. 2002, 2, 115–150.

18. Wooldridge, M.; Jennings, N.R. Intelligent agents: Theory and practice. Knowl. Eng. Rev. 1995, 10, 115–152.

19. Shoham, Y. Agent-oriented programming. Artif. Intell. 1993, 60, 51–92.

20. Balaguer, A.; Benara, V.; de Freitas Cunha, R.L.; de Estevão Filho, M.R.; Hendry, T.; Holstein, D.; Marsman, J.; Mecklenburg, N.; Malvar, S.; Nunes, L.O.; et al. RAG vs Fine-tuning: Pipelines, Tradeoffs, and a Case Study on Agriculture. arXiv 2024, arXiv:2401.08406.

21. Deng, G.; Liu, Y.; Li, Y.; Wang, K.; Zhang, Y.; Li, Z.; Wang, H.; Zhang, T.; Liu, Y. MASTERKEY: Automated Jailbreaking of Large Language Model Chatbots. In Proceedings of the 2024 Network and Distributed System Security Symposium, San Diego, CA, USA, 26 February–1 March 2024; NDSS 2024. Internet Society: Reston, VA, USA, 2024.

22. Liu, Y.; Deng, G.; Li, Y.; Wang, K.; Wang, Z.; Wang, X.; Zhang, T.; Liu, Y.; Wang, H.; Zheng, Y.; et al. Prompt Injection attack against LLM-integrated Applications. arXiv 2024, arXiv:2306.05499.

23. Zou, W.; Geng, R.; Wang, B.; Jia, J. PoisonedRAG: Knowledge Corruption Attacks to Retrieval-Augmented Generation of Large Language Models. arXiv 2024, arXiv:2402.07867.

24. Marzoev, A.; Araújo, L.T.; Schwarzkopf, M.; Yagati, S.; Kohler, E.; Morris, R.; Kaashoek, M.F.; Madden, S. Towards Multiverse Databases. In Proceedings of the Workshop on Hot Topics in Operating Systems, HotOS ’19, New York, NY, USA, 13–15 May 2019; pp. 88–95.

25. Lin, S.; Hilton, J.; Evans, O. TruthfulQA: Measuring how models mimic human falsehoods. arXiv 2021, arXiv:2109.07958.

26. Min, S.; Krishna, K.; Lyu, X.; Lewis, M.; Yih, W.t.; Koh, P.W.; Iyyer, M.; Zettlemoyer, L.; Hajishirzi, H. FActScore: Fine-grained atomic evaluation of factual precision in long form text generation. arXiv 2023, arXiv:2305.14251.

27. Hu, T.; Zhou, X.H. Unveiling LLM evaluation focused on metrics: Challenges and solutions. arXiv 2024, arXiv:2404.09135.

28. Rajapakse, R.N.; Zahedi, M.; Babar, M.A.; Shen, H. Challenges and solutions when adopting DevSecOps: A systematic review. Inf. Softw. Technol. 2022, 141, 106700.

29. Hu, X. Dynamics of Adversarial Attacks on Large Language Model-Based Search Engines. arXiv 2025, arXiv:2501.00745.

30. Zhong, P.Y.; Chen, S.; Wang, R.; McCall, M.; Titzer, B.L.; Miller, H.; Gibbons, P.B. RTBAS: Defending LLM Agents Against Prompt Injection and Privacy Leakage. arXiv 2025, arXiv:2502.08966.

31. Zhou, H.; Lee, K.H.; Zhan, Z.; Chen, Y.; Li, Z.; Wang, Z.; Haddadi, H.; Yilmaz, E. TrustRAG: Enhancing Robustness and Trustworthiness in Retrieval-Augmented Generation. arXiv 2025, arXiv:2501.00879.

32. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Routledge: London, UK, 2013.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).