Spectral Graph Compression in Deploying Recommender Algorithms on Quantum Simulators

Abstract

1. Recap of the Problem Statement

- Large User Profiles: As mentioned earlier, financial recommender systems must support user profiles comprising 50 to 100 fields—as mandated by regulation—far exceeding the three to five fields typically used in e-commerce platforms.

- Regulatory Constraints: Recommendations must be tailored to investor suitability, often requiring piecewise linear outputs that map precisely to client-specific financial goals and risk profiles.

- Expert System Integration: Recommendations are also subject to human oversight, typically provided by certified financial analysts or licensed portfolio managers. Our implementation incorporates a domain-specific expert system inspired by the investment analytics engines used by the largest global asset managers. To further improve scalability and compliance acceptance, we plan to integrate expert token routing (ETR) techniques—an evolution beyond the standard mixture-of-experts approach. ETR enables more efficient routing of expert insights across high-dimensional and causal datasets, which are common in financial contexts involving hundreds of interacting factors such as asset type, geography, sector performance, financial disclosures, and news sentiment.

2. Summary of Previous Work: Quantum-Inspired Investor Clustering for Financial Recommendations

2.1. Problem Statement and Modeling

2.2. Graph Representation

- Modeled investors as nodes in graph .

- Edges represent profile similarity with weights ; one example is the Pearson correlation:where denotes the k-th feature of investor i. We transformed weights to to ensure positivity.

2.3. Max-Cut Clustering

- Formulated investor clustering as the Max-Cut problem:

- Addressed NP-completeness through quantum approximation.

2.4. Quantum Optimization (QAOA)

- Initialized superposition: ;

- Applied problem Hamiltonian: , ;

- Applied mixer Hamiltonian: ;

- Optimized parameters classically to minimize .

2.5. Experimental Results

- Cirq-GPU showed optimal speed-accuracy tradeoff;

- Observed exponential time complexity in brute-force: ;

- cuQuantum provided highest accuracy but poor scalability;

- IonQ simulator showed lowest accuracy.

2.6. Practical Considerations

- Addressed scalability challenges for financial datasets (with profiles of 50 to 100 data fields);

- Prioritized gate-based quantum devices over annealers for commercial viability;

- Identified hardware constraints (qubit count, coherence time), likely requiring hybrid approaches;

- Future work to focus on graph sparsification for real QPU deployment.

3. Graph Compression Methodology and Validation

3.1. Attribute Selection and Feature Ranking

- ANOVA F-value: Measures the degree to which each feature is linearly associated with the categorical label y, using the one-way ANOVA test. The F-score is computed as:This method is applied when X consists of continuous numerical values.

- Chi-Squared Test: Assesses the dependence between each feature and the class label, useful for categorical or discretized attributes. The chi-squared statistic for feature is given by:where O and E are the observed and expected frequencies, respectively.

- Mutual Information (MI): Captures the mutual dependence between feature and label y, estimating how much knowing reduces any uncertainty about y. MI is computed as:

- Random Forest Feature Importance: A tree-based ensemble method that provides an importance score based on how often and how significantly a feature contributes to decision splits across all trees. Let be the average reduction in impurity when splitting on feature j.

3.2. Graph Compression via Laplacian Spectral Embedding

3.2.1. Motivation: Quantum Hardware Constraints and Need for Compression

3.2.2. Compression Using the Normalized Graph Laplacian

3.2.3. Construction of the Compressed Graph

3.2.4. Inverse Mapping: Reconstructing Original Node Labels

3.2.5. Approximate Reconstruction of Original Graph

3.2.6. Correctness

- Spectral clustering theory shows that the eigenvectors of the graph Laplacian approximate solutions to the normalized graph cut problem.

- The low-frequency eigenvectors of the Laplacian encode the community structure in the graph.

- The projection maintains inter-cluster relationships, while mapping them into a lower-dimensional space.

- The reconstruction serves as a best-rank-k approximation under the Frobenius norm, minimizing reconstruction error.

3.3. Model Training

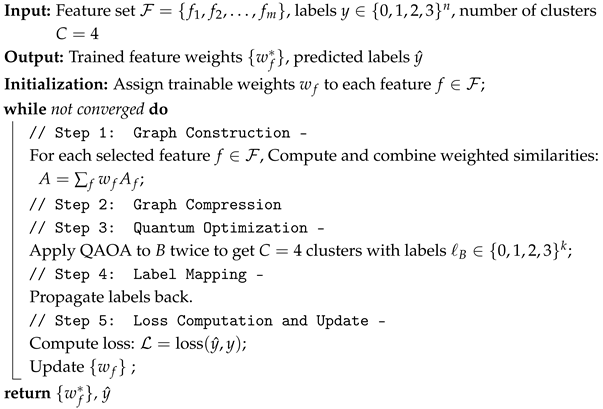

| Algorithm 1: Quantum-assisted investor segmentation via spectral compression |

|

3.4. New Investor Recommendation Based on Nyström Extension

3.4.1. Process

3.4.2. Initial Setup

3.4.3. Nyström Extension

- is a row vector representing the edge connections of the new node;

- U is the matrix of eigenvectors from the original graph;

- is the inverse of the diagonal matrix of eigenvalues corresponding to the eigenvectors in U.

3.4.4. Label Prediction

3.4.5. Correctness of the Nyström Extension

| Algorithm 2: Label prediction for new investor using Nyström extension |

Input: Trained weights , new investor feature , original eigenvectors U, eigenvalues |

Output: Predicted label |

// Step 1: Compute Similarity to Existing Nodes |

// Step 2: Nyström Embedding |

// Step 3: Assign Label |

Compute label where is cluster c’s centroid |

return

|

3.5. Noise Simulation in Quantum Computing

- Test the error resilience of quantum algorithms;

- Validate quantum error correction schemes;

- Benchmark performance under realistic noise conditions.

3.6. Correctness Validation of Graph Compression

3.6.1. Experimental Methodology

- Graph Corpus: 100 Erdős–Rényi graphs ( model) with nodes, edge probability , and no self-loops.

- Compression Regimes: representing compression ratios from 83% () to 17% ().

- alidation Workflow:

- Compute exact MaxCut via brute-force search;

- Apply spectral coarsening via Laplacian eigen decomposition;

- Solve compressed graph using exact methods;

- Project solution to original space via cluster assignments;

- Calculate approximation ratio .

- Metrics: Mean approximation ratio (), range , standard deviation ()

3.6.2. Experimental Results

3.6.3. Key Empirical Observations

- Monotonic Improvement: Solution fidelity increases with k:

- Asymptotic Convergence:

- Diminishing Returns: Relative improvement decreases by 44% from versus

3.6.4. Practical Implications

- Quantum Advantage Threshold: () maintains with 67% compression

- Optimal Operating Point: () balances 50% compression with

- High-Fidelity Region: () ensures with error

3.6.5. Conclusion

- Spectral compression preserves MaxCut structure with even at 83% compression;

- Solution fidelity improves logarithmically with decreasing compression;

- Optimal quantum advantage occurs at (67% compression).

| Validation Insight: At 50% compression (), the method achieves 89.4% approximation accuracy with just 1.57% standard deviation—demonstrating robust performance for quantum hardware reduction. |

3.7. Graph Compression Framework for Quantum Readiness

3.7.1. Louvain-Based Hierarchical Coarsening

- Community Detection:where Q denotes the modularity function.

- Size-Constrained Aggregation: This is shown as Algorithm 3 as follows:

| Algorithm 3: Community merging algorithm |

|

- Supernode Construction: Each final cluster forms a supernode in the compressed graph .

3.7.2. Edge Weight Aggregation

3.7.3. Output Compression

3.8. Validation Methodology

3.8.1. Small-Graph Verification

3.8.2. Large-Scale Consistency Testing

- Testbed: 1000 synthetic graphs generated using:

- Metrics:

3.9. Experimental Results

4. Real-World Case Study on Financial Products Recommendation

- Graph construction from investor similarity metrics;

- Quantum-accelerated graph partitioning into product-based clusters;

- Nyström Extension for real-time recommendations to new investors.

4.1. Success Criteria

4.1.1. Precision at K (P@K)

- A recommendation is “correct” if the predicted product matches the ground-truth product assignment for the investor.

- Precision@1 quantifies the model’s ability to prioritize the single most relevant product, critical in high-stakes domains like insurance, where incorrect recommendations can lead to financial or reputational risks.

4.1.2. Recall at K (R@K)

- Each investor has exactly one ground-truth relevant product (e.g., the product they purchased historically or were manually assigned). Thus, the denominator equals the total number of investors.

- Recall@1 ensures the model does not overlook valid investor–product relationships, even when constrained to a single recommendation.

4.1.3. Normalized Discounted Cumulative Gain at K (NDCG@K)

- Discounted Cumulative Gain (DCG@1):where is the relevance score of the recommended product (1 if correct, 0 otherwise).

- Ideal DCG (IDCG@1): For a perfect ranking, the ideal DCG is as follows:

- NDCG@1:

- The final NDCG@1 is the average of across all investors, equivalent to Precision@1 and Recall@1.

- NDCG@1 emphasizes the criticality of ranking the single most relevant product at the top position, mirroring real-world investor behavior where only the first recommendation is typically considered.

4.1.4. Metric Interpretation and Threshold Justification

- Unified Target (80%): All metrics converge to the same threshold due to the single-recommendation constraint. This ensures consistency and simplicity in evaluation.

- Alignment with Industry Standards: The 80% threshold is consistent with commonly accepted benchmarks for high-accuracy recommendation systems in regulated domains.

4.2. Stability Analysis

4.3. Experimental Methodology and Results

4.3.1. Dataset and Feature Selection

- 6: Citizen/Permanent Resident/Foreigner;

- 7: Ethnicity;

- 8: Illiquid Assets (Real Estate);

- 9: Liquid Assets (including Public Retirement Funds);

- 10: Liabilities;

- 12: Monthly Income from Employment;

- 13: Typical Variable Monthly Income;

- 17: Additional Protection Required.

4.3.2. Graph-Based Model Training and Validation

4.3.3. Results and Analysis

5. Conclusions

- Effective Graph Compression: Spectral Laplacian embedding reduces graph dimensionality by up to 50% while preserving structural integrity, achieving an approximation ratio of 89.4% in Max-Cut solutions. This compression is essential for overcoming current limitations in qubit count and connectivity.

- Quantum-Classical Synergy: QAOA, when combined with classical preprocessing (feature selection and spectral compression), provides a scalable solution for high-dimensional financial datasets, outperforming brute-force methods in computational efficiency while maintaining competitive accuracy.

- Regulatory Compliance: The framework adheres to stringent financial industry requirements by ensuring stability in recommendations—new investor inferences via the Nyström Extension do not perturb existing assignments, a critical feature for auditability and compliance.

- Practical Validation: Real-world testing on insurance product recommendations achieved good precision in label retention for existing investors, demonstrating the model’s robustness in mission-critical applications.

- Integrating quantum-specific compression techniques (e.g., entanglement-guided sparsification) to further reduce resource overhead.

- Deploying the pipeline on actual quantum processing units (QPUs) to benchmark real-world performance against simulated results.

- Extending the framework to dynamic graph settings for real-time portfolio rebalancing.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fabozzi, F.J.; Markowitz, H.M. Equity Valuation and Portfolio Management; John Wiley & Sons: Hoboken, NJ, USA, 2011. [Google Scholar]

- Markowitz, H. Portfolio Selection. J. Financ. 1952, 7, 77–91. [Google Scholar] [CrossRef]

- Goemans, M.X.; Williamson, D.P. Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM 1995, 42, 1115–1145. [Google Scholar] [CrossRef]

- Karp, R.M. 36 Reducibility among Combinatorial Problems (1972). In Ideas That Created the Future; MIT Press: Cambridge, MA, USA, 2021. [Google Scholar]

- Dagum, P.; Luby, M. Approximating probabilistic inference in Bayesian belief networks is NP-hard. Artif. Intell. 1993, 60, 141–153. [Google Scholar] [CrossRef]

- Albash, T.; Lidar, D.A. Adiabatic quantum computation. Rev. Mod. Phys. 2018, 90, 015002. [Google Scholar] [CrossRef]

- Kremenetski, V.; Hogg, T.; Hadfield, S.; Cotton, S.J.; Tubman, N.M. Quantum Alternating Operator Ansatz (QAOA) Phase Diagrams and Applications for Quantum Chemistry. arXiv 2021, arXiv:2108.13056. [Google Scholar] [CrossRef]

- Punnen, A.P.; Punnen, A.P. The Quadratic Unconstrained Binary Optimization Problem: Theory, Algorithms, and Applications, 1st ed.; Springer International Publishing AG: Cham, Switzerland, 2022. [Google Scholar] [CrossRef]

- Hrga, T.; Povh, J. MADAM: A parallel exact solver for max-cut based on semidefinite programming and ADMM. Comput. Optim. Appl. 2021, 80, 347–375. [Google Scholar] [CrossRef]

- Farhi, E.; Goldstone, J.; Gutmann, S. A Quantum Approximate Optimization Algorithm. arXiv 2014, arXiv:1411.4028. [Google Scholar] [CrossRef]

- Cameron, I.; Tomesh, T.; Sasycamorem, Z.; Safro, I. Scaling Up the Quantum Divide and Conquer Algorithm for Combinatorial Optimization. arXiv 2024, arXiv:2405.00861. [Google Scholar] [CrossRef]

- Harrigan, M.P.; Sung, K.J.; Neeley, M.; Satzinger, K.J.; Arute, F.; Arya, K.; Atalaya, J.; Bardin, J.C.; Barends, R.; Boixo, S.; et al. Quantum approximate optimization of non-planar graph problems on a planar superconducting processor. Nat. Phys. 2021, 17, 332–336. [Google Scholar] [CrossRef]

- Kochenberger, G.; Hao, J.K.; Glover, F.; Lewis, M.; Lü, Z.; Wang, H.; Wang, Y. The unconstrained binary quadratic programming problem: A survey. J. Comb. Optim. 2014, 28, 58–81. [Google Scholar] [CrossRef]

- Friedman, H.M. Long Finite Sequences. J. Comb. Theory. Ser. A 2001, 95, 102–144. [Google Scholar] [CrossRef]

- Bayraktar, H.; Charara, A.; Clark, D.; Cohen, S.; Costa, T.; Fang, Y.L.; Gao, Y.; Guan, J.; Gunnels, J.; Haidar, A.; et al. cuQuantum SDK: A High-Performance Library for Accelerating Quantum Science. arXiv 2023, arXiv:2308.01999. [Google Scholar] [CrossRef]

- Quantum AI Team. Cirq: A Python Framework for Creating, Editing, and Optimizing Quantum Circuits. GitHub. 2024. Available online: Https://github.com/quantumlib/Cirq (accessed on 4 April 2025).

- Lee, W.B.; Carney, E.T.; Constantinides, A.G. Computational Results from Portfolio Graph Cut Simulations. In Proceedings of the Annual Meeting of the American Statistical Association, Joint Statistical Meeting, Virtual, 8–12 August 2021. [Google Scholar]

- Lee, W.B.; Constantinides, A.G. Computational Experiments for a Quantum Computing Application in Finance. In Proceedings of the IEEE International Conference on Quantum Computing and Engineering, Bellevue, WA, USA, 17–22 September 2023. [Google Scholar]

- Deb, S.K.; Pan, W.D. Quantum Image Compression: Fundamentals, Algorithms, and Advances. Computers 2024, 13, 185. [Google Scholar] [CrossRef]

- Casals, M.; Belis, V.; Combarro, E.F.; Alarcón, E.; Vallecorsa, S.; Grossi, M. Guided Graph Compression for Quantum Graph Neural Networks. arXiv 2025, arXiv:2506.09862. [Google Scholar] [CrossRef]

- Liu, C.; Lee, W.B.; Constantinides, A.G. Quantum Testing of Recommender Algorithms on GPU-Based Quantum Simulators. Computers 2025, 14, 137. [Google Scholar] [CrossRef]

- Morris, O.J.; Lee, M.J.; Constantinides, A.G. Graph Theory for Image Analysis: An Approached Based on the Shortest Spanning Tree. IEEE Proc. F Commun. Radar Signal Process. 1986, 133, 146–152. [Google Scholar] [CrossRef]

- Scanlon, J.; Deo, N. Graph-theoretic algorithms for image segmentation. Proc. IEEE Int. Symp. Circuits Syst. 1999, 6, VI-141–VI-144. [Google Scholar]

- Li, P.; Tao, H.; Zhou, H.; Zhou, P.; Deng, Y. Enhanced Multiview attention network with random interpolation resize for few-shot surface defect detection. Multimed. Syst. 2025, 31, 36. [Google Scholar] [CrossRef]

| Simulator | Relative Error | Processing Time (s) |

|---|---|---|

| cuQuantum | 23.07% | 6930.77 |

| Cirq-GPU | 24.18% | 15.67 |

| Cirq-IonQ | 50.90% | 252.57 |

| Brute-force | 0% | 112.62 |

| k | Approximation Ratio () | Error | ||||

|---|---|---|---|---|---|---|

| 5 | 0.17 | 0.7346 | 0.6892 | 0.8014 | 0.0300 | 0.2654 |

| 10 | 0.33 | 0.8369 | 0.7872 | 0.8828 | 0.0290 | 0.1631 |

| 15 | 0.50 | 0.8937 | 0.8667 | 0.9328 | 0.0157 | 0.1063 |

| 20 | 0.67 | 0.9293 | 0.8915 | 0.9781 | 0.0215 | 0.0707 |

| 25 | 0.83 | 0.9617 | 0.9366 | 0.9924 | 0.0142 | 0.0383 |

| Metric | Value | Statistical Significance |

|---|---|---|

| Mean ARI | 0.720 ± 0.004 | p < 0.001 (vs. random) |

| Median ARI | 0.685 | 95% CI [0.675, 0.695] |

| Standard Deviation | 0.167 | IQR = 0.21 |

| Minimum ARI | 0.260 | |

| Maximum ARI | 1.000 | 12% of samples |

| ID | Attribute |

|---|---|

| 1 | Family Status |

| 2 | Number of Children |

| 3 | Number of Grandchildren |

| 4 | Occupation Sector |

| 5 | Seniority Level |

| 6 | Citizen/Permanent Resident/Foreigner |

| 7 | Ethnicity |

| 8 | Illiquid Assets (Real Estate) |

| 9 | Liquid Assets (inc. Public Retirement Funds) |

| 10 | Liabilities |

| 11 | Monthly Expenses |

| 12 | Monthly Income from Employment |

| 13 | Typical Variable Monthly Income |

| 14 | Additional Emergency Funds |

| 15 | Existing Coverage |

| 16 | Existing Group Coverage |

| 17 | Additional Protection Required |

| 18 | Readiness to Pay for Insurance |

| 19 | Intended to Access Cash Value |

| 20 | Growth Asset Focus |

| 21 | Investment Experience |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, C.; Lee, W.B.; Constantinides, A.G. Spectral Graph Compression in Deploying Recommender Algorithms on Quantum Simulators. Computers 2025, 14, 310. https://doi.org/10.3390/computers14080310

Liu C, Lee WB, Constantinides AG. Spectral Graph Compression in Deploying Recommender Algorithms on Quantum Simulators. Computers. 2025; 14(8):310. https://doi.org/10.3390/computers14080310

Chicago/Turabian StyleLiu, Chenxi, W. Bernard Lee, and Anthony G. Constantinides. 2025. "Spectral Graph Compression in Deploying Recommender Algorithms on Quantum Simulators" Computers 14, no. 8: 310. https://doi.org/10.3390/computers14080310

APA StyleLiu, C., Lee, W. B., & Constantinides, A. G. (2025). Spectral Graph Compression in Deploying Recommender Algorithms on Quantum Simulators. Computers, 14(8), 310. https://doi.org/10.3390/computers14080310