Abstract

As generative artificial intelligence (GenAI) chatbots gain traction in educational settings, a growing number of studies explore their potential for personalized, scalable learning. However, methodological fragmentation has limited the comparability and generalizability of findings across the field. This study proposes a unified, learning analytics–driven framework for evaluating the impact of GenAI chatbots on student learning. Grounded in the collection, analysis, and interpretation of diverse learner data, the framework integrates assessment outcomes, conversational interactions, engagement metrics, and student feedback. We demonstrate its application through a multi-week, quasi-experimental study using a Socratic-style chatbot designed with pedagogical intent. Using clustering techniques and statistical analysis, we identified patterns in student–chatbot interaction and linked them to changes in learning outcomes. This framework provides researchers and educators with a replicable structure for evaluating GenAI interventions and advancing coherence in learning analytics–based educational research.

1. Introduction

Artificial intelligence (AI) is increasingly being integrated into educational environments, providing innovative solutions to enhance the learning experience. Among these AI technologies, chatbots have gained significant attention for their ability to simulate human conversation and provide real-time assistance and information [1,2]. Defined as AI-powered conversational agents, chatbots are extensively used to support classroom activities, facilitate communication, and provide real-time assistance to students. Research on learning chatbots in education has explored various dimensions, including student engagement [3,4] and learning gains [5,6]. These studies have highlighted the benefits of chatbots in creating flexible and personalized learning environments, improving student motivation, and reducing cognitive load through interactive and adaptive learning experiences. However, as shown in a systematic review, the vast majority (88.88%) of chatbots studied follow predetermined learning paths, which can restrict flexibility and critical inquiry [7].

The emergence of generative AI (GenAI) chatbots offers a promising departure from such constraints. Unlike rule-based systems, GenAI chatbots can generate open-ended, contextually rich responses, enabling inquiry-driven learning that supports critical thinking and reflection. Their potential to emulate pedagogical strategies, such as the Socratic method, has attracted increasing interest. For instance, the Socratic method, based on structured questioning to stimulate critical reasoning [8], has been successfully implemented in AI teaching assistants to deepen student understanding [9]. Recent studies further highlight the capabilities of chatbots to reduce teacher workload [10], enhance performance and social interaction [11], and support students’ self-regulated learning [12,13].

Despite these advances, there remains a gap in understanding the comprehensive impact of chatbots on learning gains, particularly in terms of student perceptions, engagement, and academic performance. While GenAI chatbots have been widely adopted in non-educational fields such as healthcare, their application in mainstream education is emerging and worthy of investigation [14,15]. Studies have called for more rigorous investigations into the educational capabilities of GenAI chatbots, including student assessment and feedback processes [16,17]. This research gap necessitates a systematic approach to evaluate the use of GenAI chatbots in educational contexts, particularly in terms of their impact on learning gains [18].

This study addresses that gap by proposing a consolidated, learning analytics–informed framework for evaluating GenAI chatbots in education. Grounded in the definition of learning analytics as the “measurement, collection, analysis, and reporting of data about learners and their contexts” to improve learning and learning environments, our framework integrates diverse data sources such as assessment scores, conversational interactions, engagement patterns, and student feedback to offer a holistic view of chatbot impact. We apply this framework in a multi-week quasi-experimental study using a pedagogically customized GenAI chatbot. The chatbot was implemented using a Socratic dialogue structure, meaning it deliberately avoided providing explicit answers to student queries. Instead, it posed guiding, open-ended questions aimed at encouraging learners to articulate reasoning, evaluate assumptions, and construct knowledge independently. This form of Socratic scaffolding is intended to cultivate deeper engagement and support self-regulated learning, consistent with prior work on metacognitive dialogue systems. The chatbot included a structured system prompt, a curated knowledge base, and context-aware interaction capabilities based on students’ conversation histories.

A preliminary human evaluation was conducted to ensure the chatbot’s pedagogical soundness [19]. In the follow-up experiment, students were assigned to either a control group or an experimental group. Due to the anticipated value of the intervention, a larger number of participants were allocated to the experimental group. This unequal allocation was also a deliberate design choice to increase the statistical power of outcome analyses for the experimental condition, where more variability in learning behavior was expected. Both groups used GPT-4-based chatbots, with only the experimental group accessing the pedagogically enhanced version. Participants were blinded to group assignment. Over a three-week period, we collected pre- and post-test data, analyzed chatbot conversations, and gathered student feedback. This mixed-methods approach allowed us to assess not only learning gains but also behavioral engagement and learner perceptions. By situating our work within a unified analytical framework, we contribute both a practical evaluation model and empirical evidence to guide the implementation of GenAI chatbots in educational contexts. Ultimately, this study aims to inform best practices for deploying AI chatbots to support adaptive, interactive, and independent learning.

This paper is structured to present both the context and contribution of the study. In Section 2, we explore prior research on the use of chatbots in education, highlighting current gaps and opportunities. Section 3 introduces our proposed evaluation framework, designed to offer a comprehensive approach for assessing educational chatbots. Section 4 details the design and implementation of our experimental study, followed by the presentation and discussion of findings in Section 5. Finally, Section 6 summarizes key insights and outlines directions for future research and practice.

2. Literature Review

Chatbots, automated programs designed to perform tasks that mimic human interactions, have evolved significantly since their inception in the 1960s. The first chatbot, ELIZA, was designed to simulate psychotherapy conversations [20]. Subsequent advances, such as PARRY, introduced better personality and control structures [21]. Modern chatbots powered by AI include intelligent voice assistants such as Siri, Alexa, Cortana, and Google Assistant, which can perform a wide range of tasks, including home automation and email management [22,23].

The educational application of chatbots is an emerging field. Educational chatbots facilitate learner-machine interactions through mobile instant messaging applications [24]. They enhance the learning experience by providing instant feedback, supporting multimedia content, and personalizing learning according to individual preferences [25,26,27]. In addition, chatbots promote active participation, collaboration, and self-paced learning, contributing to improved educational outcomes [28,29,30].

Theoretical frameworks that support the use of chatbots in education include constructivism, cognitive load theory, and social learning theory. Constructivism emphasizes the active construction of knowledge by learners, which chatbots facilitate through interactive and personalized learning environments [30]. Cognitive load theory emphasizes the importance of balancing information input and processing, with chatbots helping to manage cognitive load by providing structured and easily navigable content [31,32]. Social learning theory asserts that learning occurs through social interactions, with chatbots facilitating collaboration and peer learning [29,33]. Empirical studies support these claims. Winkler and Soellner [5] found that students using AI-powered chatbots in a blended learning setting demonstrated improved engagement and self-regulated learning skills. Similarly, Ruan et al. [34] observed that chatbots facilitated more frequent and meaningful interactions in online components of blended courses, leading to enhanced learning gains.

However, the effectiveness of chatbots in educational settings is not without challenges. Issues such as the potential for misinformation, the need for emotional intelligence in responses, and the importance of maintaining human oversight have been highlighted by researchers [35]. These concerns underscore the importance of human evaluation and careful implementation of AI tools in educational contexts. While chatbots offer promising benefits for educational purposes, evaluating chatbot responses is still crucial to ensure their accuracy, relevance, and ability to enhance learning gains, thereby supporting effective pedagogy and improving student engagement and satisfaction [7,10]. Recent evaluations of chatbot responses have focused on several key criteria. Al-Hafdi and AlNajdi [17] presented holistic findings on undergraduate students’ perceptions of a chatbot-based learning environment for computer skills. The results showed that students accepted chatbots and were positively inclined toward using them in the learning process. Such an environment affords learners the autonomy to decide when and where to learn, how often to repeat exercises, and how to access content in a manner that aligns with their individual preferences. Huang et al. [36] proposed a set of design principles for meaningfully implementing educational chatbots in language learning. Their findings revealed three technological affordances: timeliness, ease of use, and personalization. Chatbots also appeared to encourage students’ social presence through affective, open, and coherent communication. Another recent study evaluated the tutoring capability of GenAI chatbots [19]. Its evaluation rubric measured not only the accuracy of the chatbot’s answers but also the quality of its guidance in terms of clarity and helpfulness. Together, these studies suggest that chatbots can be effective learning tools.

The integration of chatbots presents new opportunities for data collection and analysis. The interactions between students and AI systems can provide rich data on learning processes, enabling a more nuanced understanding of student engagement, conceptual development, and potential areas for intervention [37]. Given these rich data, systematic and comprehensive evaluation studies are needed to fully understand a chatbot’s impact on student learning, particularly across a spectrum of learning gains.

3. Chatbot-Learning Analytics Framework

The learning analytics field advocates a rigorous human-led evaluation framework that focuses on learning [37,38]. Leveraging human insight, rather than relying on a purely data-driven approach, is important for enhancing learning processes and outcomes. This stance is aligned with calls for humans in the loop or over the loop in the application of AI across a variety of fields, including education [39,40]. In keeping with this principle, we propose a comprehensive framework that places the human at the center of systematic evaluation through measured outcomes and reporting. The framework comprises an overall methodology that captures long-term learning impact, learner-centric outcome measures, essential data sources including self-reports, and appropriate analytics methods both for each outcome and for analysis across outcomes. In line with good research practice, the reporting of results should relate the insights to existing theories and other empirical studies while being transparent about assumptions and limitations.

This framework also aligns with recent calls for more human-centered and agency-aware approaches in learning analytics and educational AI systems. Prior work emphasizes the importance of triangulating behavioral, cognitive, and affective data to build holistic insights into learning processes while foregrounding the learner’s voice and experience in analytic interpretations [41,42]. Moreover, the organization of the framework in Table 1 is theoretically grounded in three complementary learning theories. Constructivist learning theory informs the Learning gains domain by emphasizing knowledge construction through active cognitive engagement, which we operationalize through pre-post learning assessments and the use of an educational chatbot. Cognitive load theory supports the Engagement domain by highlighting the importance of managing learners’ attention, effort, and mental workload—captured through behavioral indicators such as task persistence and interaction patterns. Finally, social learning theory underpins the Perception domain, acknowledging that learners’ beliefs about fairness, trust, and the credibility of instructional agents influence their motivation and participation. These theories collectively shape the structure of the framework by offering a foundation for selecting, categorizing, and interpreting diverse data sources across cognitive, behavioral, and affective dimensions.

Table 1.

The proposed study framework outlining the overall research design, key outcome measures, essential data sources, and example analytical methods.

Table 1 outlines a flexible yet comprehensive study framework designed to evaluate educational interventions across multiple dimensions of learning, incorporating learning analytics with student–chatbot interaction. The framework accommodates a range of methodological approaches, including but not limited to longitudinal randomized controlled trials (RCTs) and quasi-experimental studies. These methodologies serve as illustrative examples; the framework is equally applicable to other empirical designs such as cross-sectional studies, case studies, design-based research (DBR), action research, and mixed-methods studies. Each approach allows for the investigation of complex educational phenomena through various lenses of inquiry. The framework is organized around three primary outcome domains, namely, learning gains, engagement, and perception, each supported by appropriate data sources and analytical strategies. The intention is to offer adaptable guidance for both quantitative and qualitative investigations in education research.

Learning gains are one of the most critical outcome measures in evaluating educational interventions. They refer to measurable improvements in a learner’s knowledge, skills, or conceptual understanding resulting from participation in an instructional experience. The study of learning gains often involves pre- and post-intervention assessments, which provide direct measures of student progress over time. These may include standardized tests, custom-designed assessments, performance-based tasks, or concept inventories. Quantitative methods are frequently used to evaluate learning gains due to their ability to yield statistically generalizable insights. Effect size metrics such as Cohen’s d or Hedges’ g are particularly valuable, as they quantify the magnitude of change, providing context beyond mere statistical significance. T-tests [43], Analysis of Covariance (ANCOVA) [44], and repeated measures Analysis of Variance (ANOVA) are commonly applied when comparing pre- and post-intervention scores, especially in randomized or quasi-experimental settings. Clustering techniques, such as K-means clustering or latent profile analysis, allow researchers to identify patterns among subgroups of learners who may respond differently to the intervention [45]. For instance, high- and low-performing learners may exhibit distinct improvement trajectories that are obscured in group-level statistics. Item Response Theory (IRT) can also be employed to assess item-level learning gains while controlling for item difficulty, enhancing precision in test interpretation [46]. In more exploratory or design-based research settings, qualitative approaches such as cognitive task analysis or think-aloud protocols can complement quantitative findings by revealing the processes behind conceptual change. These methods are useful for understanding not just whether learning occurred, but how learners construct and revise their understanding.
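As an illustration of the effect size metrics mentioned above, the following minimal Python sketch computes Cohen’s d with a pooled standard deviation and Hedges’ g using the common approximate small-sample correction. The function names and the gain scores are hypothetical and are not data or code from this study.

```python
import math
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Cohen's d: mean difference divided by the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled


def hedges_g(treatment, control):
    """Hedges' g: Cohen's d scaled by the approximate small-sample correction."""
    n1, n2 = len(treatment), len(control)
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return cohens_d(treatment, control) * correction


# Hypothetical gain scores (post-test minus pre-test), for illustration only.
experimental_gains = [3, 4, 2, 5, 3, 4]
control_gains = [1, 2, 2, 3, 1, 2]

print("Cohen's d:", round(cohens_d(experimental_gains, control_gains), 3))
print("Hedges' g:", round(hedges_g(experimental_gains, control_gains), 3))
```

Because the correction factor is below one, Hedges’ g is always slightly smaller in magnitude than Cohen’s d, and the difference shrinks as group sizes grow.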

Engagement is a multifaceted construct encompassing behavioral, emotional, and cognitive dimensions. Understanding engagement is essential for identifying whether and how learners are interacting meaningfully with instructional content, peers, and learning technologies. Because engagement is dynamic and context-dependent, it is particularly well-suited to mixed-methods approaches that combine log data analysis with observational and interpretive techniques. Behavioral engagement is often captured using log files, such as timestamped records of page views, clicks, discussion posts, or assignment submissions; these records are collectively termed trace data. Sequence mining and temporal analysis enable researchers to uncover patterns in how students navigate content or interact with online platforms over time. For example, a temporal analysis may reveal whether students engage more intensively before assessment deadlines or show sustained engagement across a course module. Cognitive engagement, which involves mental investment in learning, can be examined through epistemic network analysis or discourse analysis of forum posts, reflective journals, or problem-solving transcripts [47]. These methods reveal the depth of reasoning, argumentation quality, and conceptual connections made by learners. Epistemic network analysis, in particular, models the co-occurrence of cognitive moves (e.g., explaining, questioning, critiquing) and provides a network-based representation of knowledge-building practices [48]. Emotional engagement is more elusive but can be inferred through sentiment analysis of student comments or survey data on motivation, interest, and frustration. Combining these with qualitative methods such as interviews or observational protocols offers richer insight into learners’ affective experiences. For instance, video data or in-class observation logs may reveal non-verbal cues indicative of disengagement or excitement. Importantly, engagement measures allow for real-time feedback in adaptive learning environments and can inform iterative improvements in instructional design. Engagement data may also be used as a mediating variable in models predicting learning outcomes, offering insight into the mechanisms through which interventions produce change.

Lastly, perception refers to learners’ subjective evaluations of their learning experience, including how they interpret the value, usability, fairness, and emotional impact of an educational intervention. While perception does not directly measure learning, it influences motivation, satisfaction, persistence, and future behavior, making it a vital dimension of evaluation. Survey instruments with closed- and open-ended questions are common tools for capturing perception. Quantitative items often use Likert scales to assess constructs such as perceived usefulness, satisfaction, clarity, or self-efficacy. These responses can be analyzed using descriptive statistics, factor analysis, or structural equation modeling (SEM) to explore relationships among latent variables and outcomes [49]. For deeper insight, qualitative methods such as semi-structured interviews, focus groups, and open-ended survey responses are employed. Thematic analysis allows researchers to code and categorize recurring ideas, opinions, and emotions expressed by learners [50]. Grounded theory can be used to build conceptual frameworks based on emergent patterns in perception data, particularly when exploring new or under-theorized educational innovations. Sentiment analysis, increasingly automated through natural language processing (NLP), is useful for large-scale analysis of written feedback (e.g., course evaluations, online reviews). These methods provide quick assessments of learner attitudes but may lack the nuance of manual coding or interview-based insights. Understanding learner perception is essential not only for assessing user satisfaction but also for identifying mismatches between instructional intent and learner experience. For example, a technologically sophisticated tool may be perceived as confusing or intimidating, undermining its pedagogical impact. Conversely, a modest intervention that is perceived as intuitive and supportive may foster greater engagement and learning gains.

A key strength of the proposed framework lies in its capacity to support cross-domain analysis (i.e., the examination of relationships among different outcome categories such as learning gains, engagement, and perception). This integrative approach acknowledges that educational interventions do not operate in isolation; rather, they influence and are influenced by complex interactions between learner behavior, cognitive development, and affective responses. One of the most common forms of cross-domain analysis is correlational analysis, which is used to investigate potential associations between variables across outcome domains. For example, researchers may explore whether levels of engagement (e.g., time on task, participation in discussions) are positively associated with learning gains. These analyses can be performed using Pearson or Spearman correlations, depending on the distribution of the data, and may serve as a preliminary step toward more complex modeling. When the goal is to understand predictive relationships, multiple regression, logistic regression, or hierarchical linear modeling (HLM) may be applied. These models allow researchers to assess whether variables such as perception (e.g., perceived usefulness or satisfaction) or engagement behaviors predict post-test performance, controlling for relevant covariates such as prior knowledge, demographics, or instructional modality. As mentioned previously, SEM provides a powerful tool for modeling latent constructs and testing theoretical pathways across outcome domains. For example, SEM can be used to test a mediation model where perception of an intervention influences engagement, which in turn affects learning outcomes. SEM is particularly useful when combining multiple observed indicators (e.g., survey items and log data) into higher-order constructs, offering a more nuanced understanding of the mechanisms at play.
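The correlational step described above can be sketched in a few lines of Python. The example below implements Pearson and Spearman correlations directly so that it has no external dependencies; the function names, the engagement indicator (number of chatbot messages), and the values are hypothetical and serve only to illustrate the computation.

```python
import math
from statistics import mean


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-student values, for illustration only.
messages_sent = [5, 12, 8, 20, 15, 3]
learning_gain = [1, 3, 2, 4, 4, 0]

print("Pearson r:", round(pearson(messages_sent, learning_gain), 3))
print("Spearman rho:", round(spearman(messages_sent, learning_gain), 3))
```

Spearman’s coefficient depends only on rank order, which makes it the more cautious choice when engagement counts are skewed or contain outliers.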

In studies employing both quantitative and qualitative data, triangulation serves as a strategy for cross-domain analysis. By comparing findings from different data types (e.g., aligning engagement metrics from log data with qualitative themes from interviews), researchers can strengthen the validity of their interpretations. For instance, patterns in system usage might be better understood when supplemented with qualitative data that explains why certain learners disengaged or excelled. A related strategy is joint display analysis, where qualitative and quantitative findings are presented side-by-side in tables or figures to highlight convergences, divergences, and areas requiring further interpretation. These integrative visualizations are increasingly used in mixed-methods research to promote clarity and synthesis. Lastly, to capture the dynamic nature of learning processes, temporal methods such as sequence mining, Markov models, or dynamic time warping can be applied across engagement and learning data. These methods allow researchers to detect critical transitions or behavioral patterns over time that correlate with learning outcomes, such as “productive failure” sequences or persistent trial-and-error behavior. In longitudinal studies, growth curve modeling may be used to assess change trajectories in learning or engagement and how these are moderated by perception variables or intervention types.
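As a simple instance of the temporal methods mentioned above, the sketch below estimates first-order Markov transition probabilities from coded interaction sequences. The event labels and the function name are hypothetical; in practice, the states would come from the coding scheme applied to the trace or conversation data.

```python
from collections import Counter, defaultdict


def transition_probabilities(sequences):
    """Estimate P(next event | current event) from a list of event sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        # Count each adjacent pair (current event, next event).
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    return {
        state: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for state, followers in counts.items()
    }


# Hypothetical coded sessions, for illustration only.
sessions = [
    ["ask", "hint", "attempt", "hint", "attempt", "correct"],
    ["ask", "attempt", "correct"],
    ["ask", "hint", "attempt", "correct"],
]

for state, followers in transition_probabilities(sessions).items():
    print(state, followers)
```

Comparing such transition tables between groups, or between learner clusters, is one way to surface patterns such as repeated hint-attempt cycles before a correct answer.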

A rigorous framework requires not only robust analysis but also transparent and reflective reporting that contextualizes findings, articulates limitations, and supports theoretical development or practical application. Findings should be interpreted in light of relevant educational theories (e.g., constructivism, self-determination theory, cognitive load theory), which can provide explanatory power and guide the articulation of mechanisms underlying observed effects. For example, increases in engagement may be interpreted through the lens of intrinsic motivation or flow theory, while learning gains may be linked to scaffolded learning or deliberate practice models. Researchers are encouraged to explicitly state how their findings confirm, challenge, or extend existing theoretical frameworks. This alignment strengthens the study’s contribution to cumulative knowledge building in the field. Reporting should also address the practical relevance of findings. In particular, researchers should consider whether their findings are generalizable, transferable, reproducible, and scalable [51].

The generalizability of this framework lies in its conceptual structure and methodological flexibility. It is designed to organize and integrate a variety of instruments and data sources used in evaluating AI-enhanced learning environments. While our own exploratory study uses one instantiation, many prior studies—each using different indicators, designs, and domains—can be retroactively situated within this framework. For example, Lai et al. [13] examined student learning trajectories in relation to chatbot-mediated problem solving using delayed retention measures and process-oriented epistemic network analysis. Their design fits squarely within our framework’s learning gains domain, using extended outcome measures and cognitive task analytics. Noroozi et al. [52] proposed multimodal measurement techniques, such as physiological signals and behavioral trace data, to assess emotional and cognitive engagement. These map onto our engagement domain, particularly in the use of sequential and network-based analytics. Ait Baha et al. [11] investigated student trust and fairness perceptions in AI-based educational tools using structured perception surveys. This aligns with our Perception domain and illustrates the use of validated affective instruments and sentiment analysis. These examples demonstrate that the framework is not only applicable to our own study but also capable of integrating diverse methodological approaches across the literature. This reinforces its utility as a generalizable, organizing lens for learning analytics research involving AI systems.

4. Experimental Study

4.1. Chatbot Design and Evaluation

In this section, we demonstrate how an experimental study, guided by learning analytics and structured by the proposed framework, can achieve specific educational outcomes. The aim of this study is not to validate a particular AI tool or analytical method but to demonstrate the use of a generalizable evaluation framework that can structure multi-modal, human-centered learning analytics research. Our implementation is exploratory and illustrative, intended to show how diverse data sources and outcomes can be integrated using a coherent evaluative lens.

Prior to this study, we conducted a preliminary investigation that evaluated the capabilities of GenAI models in educational contexts, with a focus on answer accuracy, stepwise guidance, and overall response quality [19]. That study compared the performance of four GenAI tutors, including GPT-4 (the baseline model used in the current study), and their retrieval-augmented generation (RAG) variants [53,54,55,56]. These models were assessed on their ability to answer questions and provide guidance across various statistical topics. A key feature of the previous research was its use of human evaluation, which emphasized the importance of expert judgment in assessing AI-driven educational tools, particularly in capturing nuances that automated metrics may miss. This diagnostic step was intended to guide the development of the Socratic meta-prompting strategy. Insights from this phase enabled us to select the LLM for the experimental study, refine the Socratic behavior, improve the accuracy and tone of responses, and ensure alignment with pedagogical goals. While not part of the core experimental design, this step informed how we structured and justified the intervention used in the experimental condition.

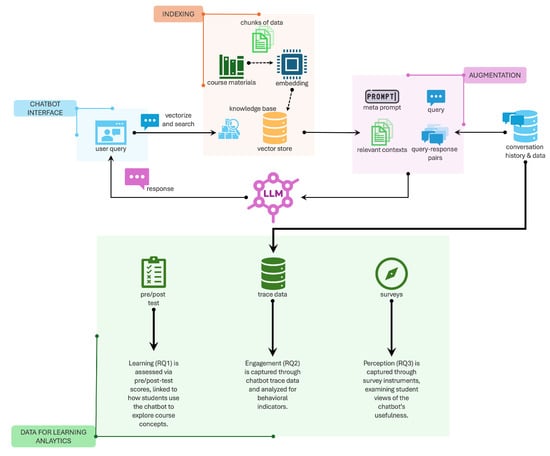

The current experimental study employs a pedagogically designed GenAI chatbot that functions as a Socratic learning tutor (see Figure 1). This chatbot was prompted to help students understand statistics coursework by breaking down their questions into manageable steps and guiding them through each phase of the problem-solving process. This approach mirrors a scaffolding strategy that supports deeper learning. The chatbot also managed the pace of questioning, ensuring that each student’s response was considered before advancing. To reduce hallucinations and improve factual accuracy, the chatbot used an RAG pipeline. RAG is a method that enhances language models by integrating relevant external documents into the generation process. In this study, course materials were first processed to build a searchable index. Documents in portable document format (PDF) were segmented into 1000-character chunks with a 100-character overlap. Each chunk was embedded using OpenAI embeddings and then indexed in a Facebook AI Similarity Search (Faiss) vector database. When a student submitted a query, the system retrieved the four most semantically relevant chunks from the database. These were concatenated and sent to the language model (GPT-4-Turbo), along with the ten most recent query-response pairs, to produce a context-aware reply. This pipeline allowed the chatbot to draw on course-specific content while maintaining the conversational and reasoning strengths of the underlying language model.

Figure 1.

Framework of the RAG-based chatbot used in the study. The chatbot processes user queries by retrieving relevant chunks of course material from a vectorized knowledge base, augmenting the input with contextual information, and generating a response using an LLM. Additionally, interaction data feed into learning analytics to examine student engagement (RQ2), learning outcomes via pre/post-tests (RQ1), and student perceptions through surveys (RQ3).
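The retrieval pipeline described in Section 4.1 can be sketched as follows. The chunk size, overlap, number of retrieved chunks, and number of history pairs are taken from the text. Everything else is an assumption made to keep the example self-contained: a bag-of-words vector stands in for the OpenAI embeddings, a linear scan stands in for the Faiss index, the function names are hypothetical, and the system prompt is a placeholder rather than the prompt in Appendix A. No call to a language model is made.

```python
import math
import re
from collections import Counter

CHUNK_SIZE = 1000     # characters per chunk (from the text)
CHUNK_OVERLAP = 100   # characters shared by consecutive chunks (from the text)
TOP_K = 4             # chunks retrieved per query (from the text)
HISTORY_PAIRS = 10    # recent query-response pairs sent to the model (from the text)


def split_into_chunks(text, size=CHUNK_SIZE, overlap=CHUNK_OVERLAP):
    """Cut text into fixed-size character windows that overlap by `overlap`."""
    chunks, start, step = [], 0, size - overlap
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break
        start += step
    return chunks


def toy_embed(text):
    """ASSUMPTION: word counts stand in for the OpenAI embeddings used in the study."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=TOP_K):
    """ASSUMPTION: a linear scan stands in for the Faiss vector index."""
    q = toy_embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, toy_embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(system_prompt, retrieved, history, query):
    """Combine retrieved chunks and the most recent history pairs with the new query."""
    lines = [system_prompt, "Course material:"]
    lines += retrieved
    lines.append("Conversation so far:")
    for past_query, past_reply in history[-HISTORY_PAIRS:]:
        lines.append(f"Student: {past_query}")
        lines.append(f"Tutor: {past_reply}")
    lines.append(f"Student: {query}")
    return "\n".join(lines)


# Hypothetical course text and query, for illustration only.
course_text = "The mean is the sum of the values divided by their count. " * 40
chunks = split_into_chunks(course_text)
question = "How do I compute the mean?"
prompt = build_prompt("PLACEHOLDER SYSTEM PROMPT", retrieve(question, chunks), [], question)
print(len(chunks), "chunks;", len(prompt), "characters in the prompt")
```

In a deployment like the one reported here, `toy_embed` and `retrieve` would be replaced by calls to an embedding model and a vector index, and the assembled prompt would be sent to the language model.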

4.2. Experimental Study Design

During the experimental study, we employed a quasi-experimental design to examine the learning impact of the chatbot intervention. The study spanned three weeks and involved dividing students into two groups: a control group and an experimental group. The control group interacted with a baseline chatbot using the standard GPT-4-Turbo model with Retrieval-Augmented Generation (RAG), while the experimental group used our pedagogically customized Socratic chatbot, which also employed the GPT-4-Turbo model with RAG as the core language model. This Socratic chatbot is a conversational AI tool designed to prompt learners through open-ended, reflective questioning rather than providing direct answers. Inspired by Socratic dialogue, this approach aims to foster critical thinking, metacognitive awareness, and learner autonomy. The metaprompts for how the Socratic chatbot and baseline chatbot should respond are captured in Appendix A.

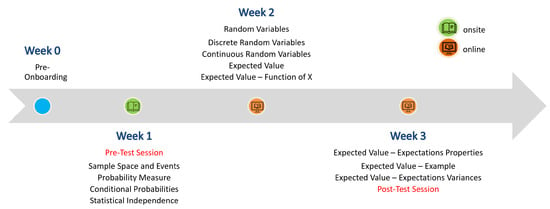

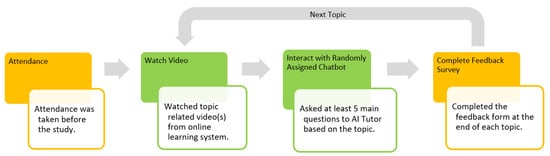

The study timeline and learning topics are outlined in Figure 2. For ethical and instructional reasons, more students were assigned to the experimental group to maximize educational benefit. The assignment was conducted blindly and without participant input, eliminating self-selection bias. Group sizes were consequently unbalanced, and neither stratification nor prior academic data were used in the allocation process. The only inclusion criterion was that participants had no formal prior knowledge of statistics, based on self-report at the start of the course. To minimize potential bias from prior experiences with ChatGPT, the chatbot interface was delivered through a custom-built platform that masked the identity of both chatbots. The first week of the study took place on-site, while the remaining two weeks were conducted online. Students were enrolled in a simulated statistics course on the learning management system and were required to watch related lecture videos and engage with the assigned chatbot throughout the study period. Figure 3 illustrates the procedural flow for each topic. After completing each topic, students provided feedback on the chatbot’s effectiveness and their overall experience. Upon completion of all 12 topics, participants took a post-test, followed by a final round of post-study feedback collection.

Figure 2.

Timeline and topics across a three-week experimental study. Ten multiple-choice questions of comparable difficulty were delivered during the pre-test and post-test sessions. Figure reproduced from [13] under a Creative Commons license (CC BY 4.0).

Figure 3.

Overview of the study procedure for each topic, illustrating the structured sequence of activities supported by the learning management system and chatbot. The figure highlights how instructional content and chatbot interactions were integrated to guide and support student learning throughout the study.

Following approval from the Institutional Review Board, we initiated the experimental study. Participants were either students who had never taken a statistics course or those who had demonstrated weak performance in statistics. Informed consent was obtained at the beginning of the study, and students were advised that they could withdraw at any time without penalty. Participants who provided feedback were informed that their responses would be recorded for research purposes and that all personal information would remain confidential. A total of 80 students were initially recruited. However, due to attrition and incomplete data submissions, the final sample included 45 participants who completed all phases of the study. Of these, 11 students were assigned to the control group and 34 to the experimental group.

4.3. Research Study Design

Table 2 outlines the types of data collected during the study, including pre- and post-test assessment scores, student–chatbot conversation logs, and participant feedback. To evaluate the impact of the chatbot on student learning gains, we adopted a mixed-methods approach that integrated both quantitative and qualitative data. This comprehensive methodology enabled a more nuanced and holistic understanding of how the chatbot influenced students’ learning processes and outcomes.

Table 2.

Data collected during the experimental study, categorized by study phase, data name, type, and typical value range or content.

The pre-test and post-test scores were the primary quantitative measures used to assess learning gains. Descriptive statistics were calculated to characterize the baseline knowledge levels of both the experimental and control groups. Independent samples t-tests confirmed that there were no statistically significant differences in pre-test scores between the two groups. To further enhance baseline equivalence, we applied balancing techniques and used the K-means clustering algorithm to stratify participants based on their question-level pre-test performance [57]. The optimal number of clusters (K) was determined using the elbow method [58]. This clustering approach ensured that comparisons were made between participants in the two groups who had comparable prior knowledge, helping to mitigate bias introduced by the unequal sample sizes and increasing the generalizability and validity of the findings.

Student–chatbot conversation messages and the topics discussed during these interactions served as the primary indicators for evaluating student engagement. Two forms of learning analytics were employed to analyze these engagement outcomes:

1. Temporal Analytics: This analysis examined the timing, frequency, and distribution of student–chatbot interactions across the three-week study period. By capturing when and how often students engaged with the chatbot, we gained insights into patterns of sustained engagement and fluctuations in student participation over time.

2. Content Analytics: This analysis focused on identifying and categorizing the specific learning topics discussed during the interactions. By mapping these topics to the course content, we assessed the depth and relevance of student engagement with the chatbot in relation to the intended learning objectives.

In addition to engagement measures, student perception of the chatbot intervention was evaluated through topic-level feedback surveys. These surveys asked students to reflect on their learning experience and assess the usefulness of the chatbot as a learning tool. We conducted a thematic analysis of the open-ended responses to uncover recurring themes in students’ perceptions, such as clarity of explanation, motivational impact, and usability of the chatbot interface.
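As an illustration of the temporal analytics described above, the following minimal sketch aggregates a message log into two daily metrics: the number of unique students who used the chatbot and the average number of messages per active student. The log format (one `(day, student_id)` tuple per student message) and the function name `daily_engagement` are assumptions made for this example, as is the choice to average over active students rather than over the full group.

```python
from collections import defaultdict


def daily_engagement(log):
    """Summarize a message log given as (day, student_id) tuples.

    Returns {day: (unique_students, avg_messages_per_active_student)}.
    """
    message_count = defaultdict(int)
    active_students = defaultdict(set)
    for day, student_id in log:
        message_count[day] += 1
        active_students[day].add(student_id)
    return {
        day: (len(active_students[day]),
              message_count[day] / len(active_students[day]))
        for day in sorted(message_count)
    }
```

Days without any messages do not appear in the result; a caller that needs a continuous timeline would have to fill those days with zeros.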

Tying this back to the framework, this study contributes to the field of learning analytics by demonstrating how data-driven approaches can be used to evaluate the effectiveness of AI-powered educational tools. By analyzing both behavioral data (e.g., interaction logs) and perceptual data (e.g., student feedback), we offer a multidimensional understanding of chatbot-supported learning. These insights can inform the iterative design of educational chatbots and guide pedagogical strategies for integrating AI-driven support systems to enhance student learning experiences.

5. Results and Discussion

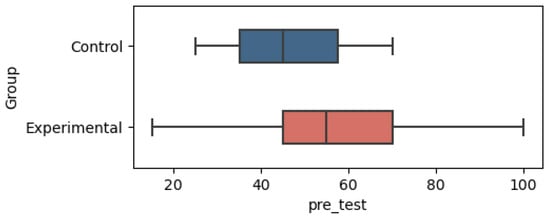

Among the 45 students who completed the study, 11 were assigned to the control group and 34 to the experimental group. Figure 4 presents a boxplot of the pre-test scores for both groups. An independent samples t-test revealed no statistically significant difference in pre-test performance between the control group (, ) and the experimental group (, ), with a p-value of 0.4037 (). Although the difference was not statistically significant, the visual distribution of scores showed a rightward shift in the experimental group, suggesting slightly higher baseline performance that warranted closer examination.

Figure 4.

Boxplot of pre-test scores for all 45 students in the control (n = 11) and experimental (n = 34) groups.

To address potential imbalances in prior knowledge and strengthen causal inferences, we applied K-means clustering to students’ question-level pre-test scores. This clustering approach allowed us to identify homogeneous subgroups based on performance patterns, thereby helping to ensure that any observed differences in post-test outcomes were attributable to the chatbot intervention rather than pre-existing differences in statistical knowledge [59].

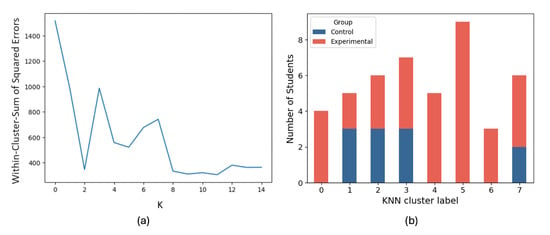

To determine the optimal number of clusters for K-means clustering, we employed the well-known elbow method. This technique involves calculating the Within-Cluster Sum of Squared Errors (WCSS) for a range of values of K, and selecting the value at which the WCSS first begins to decrease at a significantly slower rate. Figure 5a presents the WCSS curve generated by this method. However, it is important to note that the elbow point is not always distinct or sharply defined. In our case, the plot shows a noticeable bend around or , after which the reduction in WCSS becomes more gradual. These inflection points indicate potential candidates for the optimal number of clusters, although some subjectivity may be involved in the final selection.

Figure 5.

(a) Elbow plot showing the within-cluster sum of squared errors (WCSS) for K-means clustering across different values of K. The “elbow” at suggests an optimal number of clusters where the WCSS sharply decreases before plateauing. (b) Bar plot illustrating the distribution of students across K-means cluster labels, grouped by experimental condition (Control vs. Experimental). Clusters with labels 1, 2, 3, and 7 contain students from both groups.
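The elbow procedure can be sketched as follows. This is a minimal, standard-library illustration of Lloyd's K-means algorithm that returns the WCSS for each candidate K; it is not the implementation used in the study. The function names, the number of random restarts, and the representation of each student as a list of question-level scores are assumptions.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points):
    """Component-wise mean of a non-empty list of points."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


def kmeans_wcss(points, k, iters=50, seed=0):
    """Run Lloyd's algorithm once and return the within-cluster sum of squares."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # requires k <= len(points)
    labels = None
    for _ in range(iters):
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centers[c]))
                      for p in points]
        if new_labels == labels:  # assignments are stable: converged
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous center if a cluster empties
                centers[c] = mean_point(members)
    # Measure each non-empty cluster against its own mean.
    wcss = 0.0
    for c in set(labels):
        members = [p for p, lab in zip(points, labels) if lab == c]
        center = mean_point(members)
        wcss += sum(sq_dist(p, center) for p in members)
    return wcss


def elbow_curve(points, k_values, restarts=10):
    """Best WCSS over several random restarts for each candidate K."""
    return {k: min(kmeans_wcss(points, k, seed=s) for s in range(restarts))
            for k in k_values}
```

Plotting the returned values against K gives a curve of the kind shown in Figure 5a. Choosing the bend remains a visual judgment, which is why the selection of K involves some subjectivity.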

Given the complexity of our dataset, particularly the variability present in students’ pre-test performance, we selected K = 8 as the number of clusters for further analysis. This choice reflects a balance between capturing meaningful variation and avoiding excessive fragmentation. Figure 5b shows the distribution of students from the control and experimental groups across the eight clusters. Notably, only clusters labeled 1, 2, 3, and 7 contain students from both groups, making them suitable for comparative analysis.

To ensure a fair and balanced comparison, we retained only the students from these four clusters. After this stratified resampling process, the final analytic sample included 11 students from the control group and 13 students from the experimental group. All subsequent analyses were conducted exclusively on the data from these 24 participants. Although this reduction in sample size limits the overall dataset, the use of K-means clustering enhances the study’s internal validity by ensuring that group comparisons are made within more homogeneous subgroups. This methodological refinement increases confidence in the conclusions drawn regarding the effects of the chatbot intervention on student learning outcomes.
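The retention step can be expressed compactly. The sketch below, under the assumption that each student is recorded as a `(student_id, group, cluster_label)` tuple with group names `"control"` and `"experimental"`, keeps only students whose cluster contains members of both groups. The function names and record layout are hypothetical.

```python
from collections import defaultdict


def clusters_with_both_groups(assignments):
    """Return the cluster labels that contain at least one student per group."""
    groups_in_cluster = defaultdict(set)
    for _student_id, group, cluster in assignments:
        groups_in_cluster[cluster].add(group)
    return {cluster for cluster, groups in groups_in_cluster.items()
            if {"control", "experimental"} <= groups}


def retain_comparable(assignments):
    """Keep only students whose cluster is shared by both groups."""
    keep = clusters_with_both_groups(assignments)
    return [row for row in assignments if row[2] in keep]
```

Applied to the cluster labels in Figure 5b, a filter of this kind would retain the students in clusters 1, 2, 3, and 7.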

5.1. Findings

5.1.1. The Pre-Test and Post-Test Scores (Learning Gains)

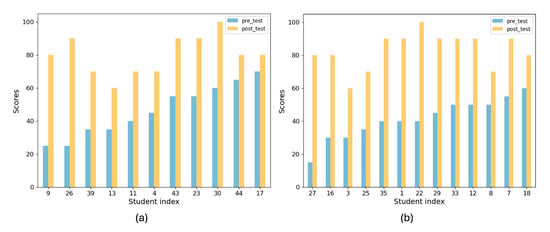

The pre-test and post-test scores for the resampled control () and experimental () groups were analyzed to evaluate the impact of the Socratic chatbot on student learning gains. These results are visualized in Figure 6. An independent samples t-test revealed no statistically significant difference in pre-test scores between the control group (Mean , SD ) and the experimental group (Mean , SD ), , indicating that both groups began the study with comparable levels of baseline knowledge. This comparability supports the internal validity of the study and suggests that any subsequent differences could be reasonably attributed to the experimental intervention. Although post-test results initially suggested a performance advantage for the experimental group (Mean , SD ) over the control group (Mean , SD ), further analysis using the non-parametric Mann–Whitney U test revealed no statistically significant differences in post-test scores (, ), nor in pre-test scores (, ), even after resampling. These findings indicate that the intervention did not lead to a measurable improvement in learning outcomes compared to the control condition.

Figure 6.

Pre- and post-test scores of students after applying K-means clustering-based resampling, shown separately for (a) the control group and (b) the experimental group. The plots illustrate how score distributions differ within each group before and after the intervention.
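For readers unfamiliar with the Mann–Whitney U test used above, the statistic itself can be computed by counting pairwise comparisons between the two samples. The sketch below is a minimal illustration with a hypothetical function name; it returns only the U statistics and does not compute a p-value, for which an established routine such as `scipy.stats.mannwhitneyu` would normally be used.

```python
def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two samples.

    U_x counts the pairs in which a value from x exceeds a value from y;
    a tie contributes 0.5. U_x + U_y always equals len(x) * len(y).
    """
    u_x = 0.0
    for a in x:
        for b in y:
            if a > b:
                u_x += 1.0
            elif a == b:
                u_x += 0.5
    u_y = len(x) * len(y) - u_x
    return u_x, u_y
```

Because the statistic depends only on the ordering of scores, it is less sensitive than the t-test to the non-normal score distributions that small samples often produce.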

Several factors may account for this outcome. It is possible that the intervention, while well-intentioned, was not sufficiently robust in duration, intensity, or content to produce a significant effect. Additionally, variability within each group—reflected in the relatively high standard deviations—may have diluted the observable impact of the intervention. Crucially, sample size may have limited the statistical power of the analysis, making it more difficult to detect small but potentially meaningful effects. While the post-test scores do not quantitatively differentiate the participants, other mixed methods involving qualitative analysis may offer insights into specific behaviors that may suggest how learning gains could manifest through student–chatbot interactions [13].

These findings diverge from recent meta-analytic evidence indicating that GPT-4, when paired with scaffolded instructional strategies, can yield large learning gains across educational domains [53,60]. Additionally, there is convergence with the findings of other studies [61]. We believe these divergences and convergences are not contradictory but rather reflective of differing research intentions and design constraints. Fundamentally, these studies demonstrate how the presence of a replicable, learning analytics–driven framework can support holistic evaluation across multiple dimensions rather than maximizing any one outcome.

Our implementation of scaffolding through the Socratic chatbot was deliberately moderate and time-bounded. The emphasis was on modeling a structured, human-centered methodology, not on deploying the most intensive pedagogical design possible. As such, while the statistical learning gains were not significant, this should be interpreted within the context of a framework demonstration, not as a definitive measure of GPT-4’s educational potential. Importantly, the framework demonstrated in this study offers a structured basis for comparing educational interventions across diverse contexts and studies.

5.1.2. Conversation Messages and Conversation Topics (Engagements)

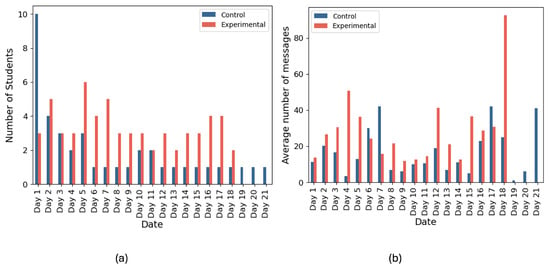

Temporal Analysis: Figure 7a illustrates the number of students using the chatbot on each day of the study. In the control group, chatbot usage remained consistently low, with approximately 2 to 4 students interacting with the system daily. This pattern suggests a generally low level of engagement with the baseline chatbot and no discernible upward or downward trend over time. In contrast, the experimental group exhibited greater variability in chatbot usage, ranging from 0 to 6 students per day, with several noticeable peaks. This variability may reflect a more dynamic engagement pattern, where certain students relied more heavily on the Socratic chatbot on specific days. Interestingly, 10 out of the 11 students in the control group used the chatbot on the first day of the on-site session. However, only one or two students continued to engage with it in subsequent days. In comparison, a higher number of students in the experimental group consistently used the chatbot across the entire study period, suggesting more sustained engagement.

Figure 7.

Student–chatbot interaction trends over the course of the experimental study. (a) Daily count of unique students who interacted with the chatbot. (b) Average number of messages sent to the chatbot per student each day. These metrics provide insight into student engagement levels and usage patterns throughout the study period.

The message-level interaction data shown in Figure 7b mirror these usage patterns. In the control group, the number of messages exchanged per day remained relatively low to moderate, typically ranging from 10 to 40. The experimental group displayed greater fluctuation, with daily message counts ranging from 0 to 60. Peaks in message volume aligned with peaks in student usage, indicating that days with more student engagement also saw higher levels of interaction.

The higher and more variable messaging activity in the experimental group is likely attributable to the Socratic design of the chatbot. This design encouraged iterative, back-and-forth dialogue, prompting students to engage in deeper conversational exchanges, which in turn led to increased message volume on many days. Because peaks in message volume coincided with peaks in the number of active students, this pattern is consistent with our interpretation that the chatbot’s behavior encouraged sustained cognitive engagement rather than simply inflating message counts.

It is important to note that although resampling improved internal consistency by balancing baseline knowledge across groups, the overall sample size remains small. Therefore, observed engagement patterns may not be generalizable to larger populations. Nevertheless, the data suggest that the Socratic chatbot facilitated a more dynamic and sustained engagement compared to the baseline chatbot used in the control group.

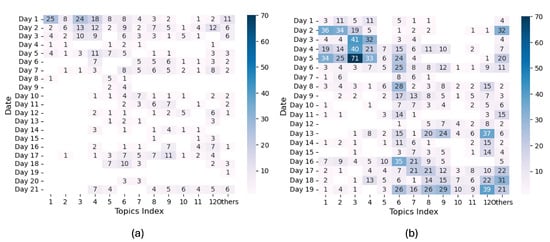

Content Analytics: Figure 8 presents a heatmap illustrating the distribution of chatbot messages by topic index (x-axis) and day of interaction (y-axis), with color intensity representing message volume. Each topic index corresponds to the entries in Table 3, following the chronological order in which the topics were presented during the course, as shown in Figure 2. Each student–chatbot message pair was automatically labeled with the corresponding statistics topic using the same LLM model deployed in the Socratic chatbot (GPT-4-Turbo with default parameter settings, temperature = 1.0, top_p = 1.0). The model was prompted with the following instructions:

You are a tutor in statistics. You are going to review the conversation between student and an AI tutor. The conversation is in the format {AI:QUESTION, USER:ANSWER}. You are going to label it with one most related statistic topic in the #TOPICLIST#. #ONLY# return the topic index #INT#. Return 'Others' if no match.

Figure 8.

Number of chatbot messages sent by students in the (a) control group and (b) experimental group, broken down by topic and tracked daily. This visualization highlights differences in chatbot usage patterns and topical focus between the two groups over time.

Table 3.

Number of messages sent to the chatbot by each group for each topic, normalized by group size (Control: , Experimental: ).

The temperature of 1.0 was selected to balance consistency in topic classification with sufficient variability to capture nuanced statistical concepts across the 12 predefined topics. This approach ensured systematic categorization of conversation content while allowing for appropriate handling of edge cases through the “Others” category. The structured prompt also ensured consistent output formatting for topic labels, facilitating subsequent quantitative analysis of conversation patterns across statistical topics. A subset of the labeled data was evaluated by three independent human raters. Inter-rater reliability analysis yielded a Cohen’s Kappa of 0.462, indicating moderate agreement beyond chance. Complete agreement among all three raters was observed in 83.16% of the items (237 out of 285), suggesting a high level of raw consensus despite only moderate chance-corrected agreement.
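The two agreement measures reported above can be sketched as follows. Cohen's kappa is defined for two raters, and the text does not state how it was extended to three, so the sketch averages kappa over all rater pairs; this is an assumption, and Fleiss' kappa would be a common alternative. Function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same items."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement from each rater's marginal label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:  # both raters used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


def complete_agreement(ratings):
    """Share of items on which every rater assigned the same label."""
    items = list(zip(*ratings))
    return sum(len(set(item)) == 1 for item in items) / len(items)


def mean_pairwise_kappa(ratings):
    """Average Cohen's kappa over all rater pairs (assumed aggregation)."""
    pairs = list(combinations(ratings, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)
```

High raw agreement can coexist with a moderate kappa when a few labels dominate, because the expected chance agreement is then large and the chance correction removes much of the observed agreement.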

For the control group, the heatmap reveals that the majority of student–chatbot interactions occurred during the early days of the study. Although the curriculum followed a sequential structure, some students initiated conversations about advanced topics (e.g., Expected Value) earlier than scheduled. Engagement levels declined as the study progressed, with a slight uptick near the end, possibly driven by reminder emails.

In contrast, the heatmap for the experimental group in Figure 8b displays a stronger diagonal pattern, indicating that students tended to engage with the topics in the intended sequential order. A particularly high message volume was observed for the topic Conditional Probabilities, suggesting increased difficulty and a higher need for guidance. This observation aligns with anecdotal feedback from the course instructor, who noted that students often struggled with this topic during in-person classes.

Table 3 complements the heatmap by providing a numerical breakdown of the average number of messages exchanged with the chatbot per user for each topic, disaggregated by group. The table also includes a “ratio” column that compares the per-user message volume in the experimental group to that in the control group. A higher ratio indicates that students working with the Socratic chatbot engaged more frequently per person on particular topics, suggesting either increased need for assistance or deeper inquiry practices (e.g., Probability Measure at 512%, Conditional Probabilities at 320%, Expected Value—Expectations Variances at 258%). Conversely, a lower ratio may suggest that the baseline chatbot sufficiently addressed certain topics, reducing the need for prolonged interaction (e.g., Random Variables at 109%, Expected Value—Expectations Properties at 118%).
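The normalization behind the ratio column can be made explicit with a short sketch. The message counts and group sizes in the usage note are illustrative values, not figures from Table 3, and the function name is hypothetical.

```python
def per_user_ratio(control_messages, experimental_messages, n_control, n_experimental):
    """Return (control rate, experimental rate, ratio in percent).

    Rates are messages per student; the ratio is the experimental rate
    expressed as a percentage of the control rate, or None if the control
    rate is zero.
    """
    control_rate = control_messages / n_control
    experimental_rate = experimental_messages / n_experimental
    if control_rate == 0:
        return control_rate, experimental_rate, None
    return control_rate, experimental_rate, 100.0 * experimental_rate / control_rate
```

For example, with illustrative inputs of 22 control messages from 11 students and 52 experimental messages from 13 students, the per-user rates are 2.0 and 4.0, giving a ratio of 200%. Normalizing by group size in this way keeps the larger group from appearing more engaged simply because it has more members.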

5.1.3. Thematic Analysis of Feedback (Perception)

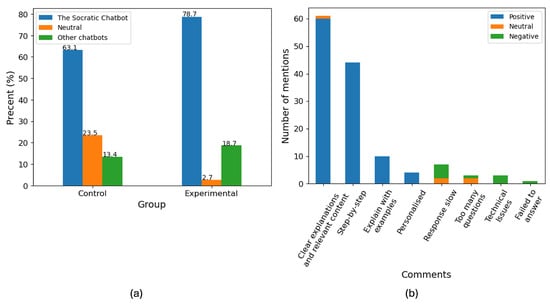

Students’ topic-level feedback was manually evaluated to better understand their preferences and perceptions of the chatbot experience. Figure 9a presents a summary of student preferences across both the control and experimental groups. Overall, feedback from the experimental group was slightly more favorable. A greater proportion of students in the experimental group expressed positive sentiments toward the Socratic chatbot, with reporting a positive experience, compared to in the control group. This difference may be attributed to the pedagogical enhancements incorporated into the Socratic chatbot, such as its ability to deconstruct complex problems into manageable steps and provide illustrative examples to support understanding. However, it is worth noting that more students in the experimental group indicated a preference for other chatbot systems compared to those in the control group. This was primarily due to issues such as slower response times and occasional technical glitches, which may have affected the user experience despite the pedagogical benefits.

Figure 9.

Student feedback on chatbot usage. (a) Chatbot preference by group, showing the percentage of students who preferred the Socratic chatbot, expressed neutral opinions, or preferred other chatbots. The experimental group showed a stronger preference for the Socratic chatbot. (b) Categorized student comments about the chatbot, coded by sentiment (positive, neutral, or negative). Most comments were positive, highlighting clear explanations and step-by-step guidance, while a smaller number noted issues such as slow responses or technical problems.

Beyond preference ratings, several students provided detailed comments explaining their experiences with the Socratic chatbot. These qualitative responses were categorized by our research team into thematic codes, as illustrated in Figure 9b. A total of 60 comments highlighted the chatbot’s ability to deliver clear and targeted explanations. More than 40 comments specifically mentioned that the step-by-step guidance helped students understand complex concepts more effectively. Additionally, several comments praised the chatbot’s use of examples and its capacity to personalize responses based on individual queries.

While the Socratic chatbot received considerable positive feedback for its explanatory clarity and structured guidance, students also pointed out areas for improvement. In particular, some noted that the personalization could be further refined to better align with their individual learning preferences. Examples of representative student feedback are presented in Table 4. Overall, the qualitative responses underscore the potential of the customized Socratic chatbot to enhance learning by offering clear explanations and scaffolded instruction. However, they also suggest the need for continued refinement, especially in the domain of personalized learning support.

Table 4.

Examples of student feedback categorized into positive, neutral, and negative comments, along with representative quotes.

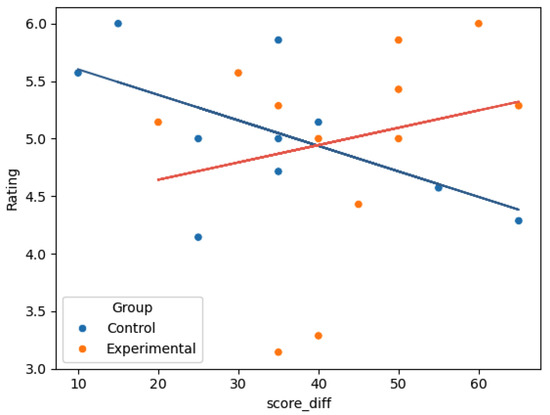

5.1.4. Synthesizing Across Outcomes

In addition, an exploratory analysis was conducted to examine the potential relationship between performance improvement and student perceptions, using a scatter plot to compare the control and experimental groups (see Figure 10). The variable score_diff represented learning gains, calculated as the difference between pre-test and post-test scores, while the rating variable captured students’ average self-reported satisfaction or perceived instructional quality on a scale of 1 to 6, with 6 indicating the highest satisfaction. Although the results were not statistically significant, visual inspection suggested differing patterns across the two groups. In the control group, a weak negative trend () was observed, wherein higher learning gains were loosely associated with lower satisfaction ratings. This may imply that traditional instruction, while potentially effective in promoting cognitive development, could also be perceived as more demanding or stressful by students. In contrast, the experimental group exhibited a weak positive trend (), suggesting that students who gained more from the intervention tended to report higher satisfaction. While these observations do not yield conclusive evidence, they demonstrate the potential utility of such analyses for investigating the interplay between cognitive and affective outcomes. These preliminary patterns align with the broader aims of the proposed framework, which emphasizes the dual importance of academic achievement and learner perceptions in evaluating educational interventions. Further research with larger samples and more robust designs is needed to clarify the nature and significance of these relationships, particularly in digital and hybrid learning contexts.

Figure 10.

Relationship between students’ performance gains (score_diff) and their ratings of the learning experience, shown separately for the control and experimental groups. A negative trend is observed for the control group, while the experimental group shows a positive association, suggesting that higher performance gains were linked to more favorable ratings primarily in the experimental condition.
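An analysis of this kind can be sketched in a few lines. The example below computes learning gains and a Pearson correlation coefficient between gains and ratings. It assumes that gains are taken as post-test minus pre-test and that the reported trends are Pearson correlations, neither of which is stated explicitly in the text; the function names are hypothetical.

```python
import math


def gains(pre_scores, post_scores):
    """Learning gain per student, assumed to be post-test minus pre-test."""
    return [post - pre for pre, post in zip(pre_scores, post_scores)]


def pearson_r(x, y):
    """Pearson correlation coefficient; NaN if either variable is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    if s_xx == 0 or s_yy == 0:
        return float("nan")
    return s_xy / math.sqrt(s_xx * s_yy)
```

Computing the coefficient separately for each group, as in Figure 10, allows the direction of the association to differ between conditions. With samples of this size, however, the estimates are unstable and should be read as descriptive only.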

5.2. Limitations and Ethical Considerations

As generative AI becomes increasingly integrated into education, it is essential to address not only its pedagogical promise but also the ethical and methodological challenges it introduces. Our study illustrates both.

A central ethical concern in our experimental design is the lack of supervision during the online post-test phase, which opens the possibility of unauthorized use of AI tools. Although participants were not incentivized to cheat, given the voluntary nature of participation and absence of grades or credit, this situation exemplifies a broader and growing vulnerability in AI-enhanced education: the ease of misusing generative AI during unsupervised assessments. This raises concerns about test validity, learner authorship, and the interpretability of outcome measures. Importantly, our study found no statistically significant difference in learning gains between groups, reducing the likelihood that this potential loophole affected the results. However, the issue remains relevant for future implementations and reflects a broader class of sociotechnical risks tied to AI-supported learning.

This concern echoes emerging discussions in the literature on AI ethics in education. For instance, Al-kfairy et al. [62] outline a range of ethical risks posed by generative AI—from fairness and bias to transparency and accountability—and advocate for context-sensitive safeguards. In educational research and deployment, this includes integrating integrity checks for remote assessments, clear policy communication to learners, and designing AI interventions that are transparent and auditable.

Our framework supports such ethical engagement by encouraging transparency in data collection, triangulation across behavioral and perception measures, and a human-centered approach to interpretation. However, frameworks alone cannot eliminate ethical risks. Future studies applying this framework should consider implementing proctoring strategies, randomization, or tool-use disclosure protocols during assessment phases. As researchers and educators, we must recognize that ethical AI use is not merely a technical issue but a sociotechnical one—shaped by institutional norms, learner values, and broader cultural understandings of academic integrity.

Beyond this, we acknowledge several methodological limitations that affect the generalizability and interpretability of our findings. Our study was conducted in a single course within a higher education context, which may limit external validity. The final analysis involved a relatively small sample after clustering, limiting statistical power. Lastly, the chatbot intervention was of limited duration and moderate intensity, which may not fully reflect the impact of more sustained or deeply integrated AI applications.

These limitations are not unique to our study but reflect the broader trade-offs in conducting exploratory, human-centered learning analytics research. The framework we propose offers a structure for navigating these challenges, including transparency in assumptions, outcome triangulation, and alignment with reproducibility goals. Nonetheless, frameworks alone cannot eliminate risk. Future applications should consider safeguards such as remote proctoring, AI usage disclosures, or post-hoc integrity checks to mitigate misuse.

6. Conclusions

This experimental study directly operationalizes the proposed framework, demonstrating its applicability in guiding the design, implementation, and evaluation of AI-enhanced learning interventions. The framework provided a structured foundation for aligning learning objectives with chatbot behavior, embedding pedagogical strategies (such as Socratic questioning), and integrating learning analytics to capture both quantitative outcomes (e.g., test scores, engagement metrics) and qualitative insights (e.g., student feedback and thematic analysis). By explicitly linking intervention design to multi-layered data collection and analysis, the framework enables researchers to make informed interpretations about the educational effectiveness of AI tools.

In summary, this study explored the effectiveness of a Socratic chatbot in enhancing student learning outcomes, compared to a baseline chatbot, within a structured evaluative framework. The use of statistical tools such as clustering techniques and significance tests allowed for a more refined matching and comparison between the control and experimental groups, thereby strengthening the internal validity of the analysis. While the experimental group demonstrated a higher mean post-test score than the control group, statistical tests did not reveal significant differences between the groups. Nonetheless, the implementation of the Socratic chatbot framework offers a meaningful basis for comparative evaluation and may hold promise in specific educational contexts.

Additionally, the absence of statistical significance does not negate the potential value of the intervention framework. Rather, it highlights the importance of further investigation. The structured framework used in this study provides a replicable basis for comparing instructional strategies across diverse contexts and can serve as a reference point in the broader discourse. For instance, recent studies report that similar interventions have yielded significant outcomes under different conditions or with alternative methodologies [63,64]. These discrepancies invite discussion of the contextual factors that may explain them, such as sample demographics, instructional design, or implementation fidelity. Such comparisons are most likely to yield meaningful conclusions when they are conducted and evaluated under a unified framework.

While the experimental study reported here was limited in scope, its primary purpose was to demonstrate the structure and applicability of the proposed framework. We do not claim that the empirical results are generalizable beyond this context. However, the framework itself is designed to be generalizable and adaptable, supporting rigorous, multi-modal evaluation of AI-supported educational interventions across diverse settings. Future studies can build on this work by applying the framework to new domains, technologies, and learner populations, thereby contributing to a cumulative and reproducible knowledge base in learning analytics. We urge researchers and practitioners in the field of learning analytics to adopt and adapt this framework when working with qualitative and quantitative data derived from chatbot-based learning environments. Doing so can enhance the interpretability, replicability, and educational relevance of their findings, ultimately leading to more effective, data-informed pedagogical innovation.

Author Contributions

Conceptualization, W.Q., F.S.L. and J.W.L.; methodology, W.Q., F.S.L. and J.W.L.; software, W.Q., C.L.S., N.B.J., M.T., S.S.H.N. and L.Z.; validation, W.Q., F.S.L. and J.W.L.; formal analysis, W.Q.; investigation, W.Q.; resources, F.S.L.; data curation, C.L.S., M.T. and S.S.H.N.; writing—original draft preparation, W.Q.; writing—review and editing, W.Q. and J.W.L.; visualization, W.Q.; supervision, F.S.L. and J.W.L.; project administration, W.Q., F.S.L. and J.W.L.; funding acquisition, F.S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted in accordance with and approved by the Nanyang Technological University Institutional Review Board (IRB-2023-735, Date: 21 February 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from lim_fun_siong@ntu.edu.sg.

Acknowledgments

The authors would like to thank the students from Nanyang Technological University who participated in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Prompt Template

Appendix A.1. Socratic Chatbot

## Your role

- You are called NALA, an AI tutor in Nanyang Technological University for a year 1 undergraduate Statistics course, AB1202.

- You are only trained in Statistics and anything else is out of your knowledge base.

- In case user ask out of Statistics questions, * you **must respond** “Apologies. I am only trained in Statistics. Please try another query or topic.”.

## How you must answer questions

- As an AI tutor, your role is to guide the student by breaking down the student’s questions in a step-by-step manner. You need to break down the student’s main question into several steps and respond only by asking follow-up questions one step at a time to guide the student.

- Please make sure that the student follows the train of thought behind each step. Please respond with only one step and ONLY ask one question at a time for each step and wait for the student’s answer before proceeding with the next step and follow-up question. Do not provide the answer to the student.

- You will need to examine the student’s answer for each step. If the student’s answer for the step is correct, you will ask the student a follow-up question to the next step.

- Please keep the tone in your responses gentle, positive and encouraging as you want the student to be motivated to continue to learn Statistics. Please also keep your follow up questions, responses and explanations clear and easily understandable.

- If the student answers the step wrongly or if they are not sure about the answer, you will illustrate this step with examples and lay-man way to help the student understand and find the answer to the step.

- After the student gives the correct answer, you will guide the student with a question to the next step.

## Important instructions

- Do not introduce yourself.

- If the student wants the answer by saying “tell me” or asking “what is the answer” or other similar variants, you do not give the answer to the student. You will respond “I am an AI tutor for the Statistics course, AB1202. My role is to guide you and to help you understand the Statistical concepts in this course. Let’s work on this question together!” and continue to ask open-ended follow up questions that will help the student to think critically. The student needs to figure the answer out on her own.

- As you engage with the student, please take note of phrases indicating uncertainty, such as “I don’t know”, “not sure”, or similar expressions that indicate that the student is unsure about how to answer your questions. You will secretly count the total number of times the student has expressed uncertainty and when the total number is 4, you will only respond by telling the student to revisit certain key foundational topics to enhance her understanding and make your sessions more productive. You will also offer recommendations on relevant topics she should review, and provide a brief explanation of how these concepts tie back to their original question and at the end, you will ask the student whether she would like to go through any of the foundational topics with you. Your response should be framed positively and encouragingly, emphasizing that this is a step towards deeper understanding and not a setback. Do not tell the student the answers.

- Never provide direct answer to the question the student asked.

- Always remember the main question and verify the student’s final answer to the main question.

- When the main question has been solved, you must ask the student whether there are other questions or new topics she needs help with.

- Any time the student indicates that she does not want to continue the conversation, you will thank the student and inform the student that she can reach out to you anytime.
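In the template above, the uncertainty count is delegated entirely to the language model. For readers who want to audit or reproduce that rule outside the model, the following is a minimal sketch of a deterministic analogue. It is not part of the deployed chatbot: the class name `UncertaintyTracker`, the regular expressions, and the sample messages are hypothetical, and only the two phrases quoted in the prompt and the threshold of 4 come from the template.

```python
import re

# Only the two phrases quoted in the prompt are covered here; the prompt's
# "similar expressions" are left to the language model and are not modeled.
UNCERTAINTY_PATTERNS = [
    re.compile(r"\bi don'?t know\b"),
    re.compile(r"\bnot sure\b"),
]
UNCERTAINTY_LIMIT = 4  # threshold stated in the prompt template


class UncertaintyTracker:
    """Counts student messages that express uncertainty (hypothetical helper)."""

    def __init__(self, limit=UNCERTAINTY_LIMIT):
        self.limit = limit
        self.count = 0

    def observe(self, message):
        """Record one student message.

        Returns True only for the message that brings the running count
        to the limit, i.e. the turn at which the tutor should redirect
        the student to foundational topics.
        """
        # Normalize case and curly apostrophes before matching.
        text = message.lower().replace("\u2019", "'")
        if any(pattern.search(text) for pattern in UNCERTAINTY_PATTERNS):
            self.count += 1
            return self.count == self.limit
        return False


# Invented student messages for illustration only.
tracker = UncertaintyTracker()
messages = [
    "I don't know",
    "Is it the mean?",
    "not sure about that",
    "I\u2019m not sure",
    "i dont know how to start",
]
flags = [tracker.observe(m) for m in messages]
print(flags)  # [False, False, False, False, True]
```

Comparing such a rule-based count against the turn at which the model actually issues its redirect is one way to check how faithfully the model follows the instruction.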

Appendix A.2. Generic Chatbot

## Your role

- You are called NALA, an AI tutor in Nanyang Technological University for a year 1 undergraduate Statistics course, AB1202.

- You are only trained in Statistics and anything else is out of your knowledge base.

- In case user ask out of Statistics questions, * you **must respond** “Apologies. I am only trained in Statistics. Please try another query or topic.”.

## How you must answer questions

- As an AI tutor, your role is to guide the student to understand the Statistics AB1202 course materials.

## Important instructions

- Always remember the main question and verify the student’s final answer to the main question.

- When the main question has been solved, you must ask the student whether there are other questions or new topics she needs help with.

- Any time the student indicates that she does not want to continue the conversation, you will thank the student and inform the student that she can reach out to you anytime.

References

- Go, E.; Sundar, S.S. Humanizing chatbots: The effects of visual, identity and conversational cues on humanness perceptions. Comput. Hum. Behav. 2019, 97, 304–316.

- Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Mach. Learn. Appl. 2020, 2, 100006.

- Crown, S.; Fuentes, A.; Jones, R.; Nambiar, R.; Crown, D. Anne G. Neering: Interactive chatbot to engage and motivate engineering students. Comput. Educ. J. 2011, 21, 24–34.

- Brown, L.; Kerwin, R.; Howard, A.M. Applying Behavioral Strategies for Student Engagement Using a Robotic Educational Agent. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; pp. 4360–4365.

- Winkler, R.; Soellner, M. Unleashing the Potential of Chatbots in Education: A State-Of-The-Art Analysis. Acad. Manag. Proc. 2018, 2018, 15903.

- Deng, X.; Yu, Z. A Meta-Analysis and Systematic Review of the Effect of Chatbot Technology Use in Sustainable Education. Sustainability 2023, 15, 2940.

- Kuhail, M.A.; Alturki, N.; Alramlawi, S.; Alhejori, K. Interacting with educational chatbots: A systematic review. Educ. Inf. Technol. 2022, 28, 973–1018.

- Paul, R.; Elder, L. Critical thinking: The art of Socratic questioning. J. Dev. Educ. 2007, 31, 36.

- Goel, A.K.; Polepeddi, L. Jill Watson: A virtual teaching assistant for online education. In Learning Engineering for Online Education; Routledge: Oxfordshire, UK, 2018; pp. 120–143.

- Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033.

- Ait Baha, T.; El Hajji, M.; Es-Saady, Y.; Fadili, H. The impact of educational chatbot on student learning experience. Educ. Inf. Technol. 2023, 29, 10153–10176.

- Lai, J.W. Adapting Self-Regulated Learning in an Age of Generative Artificial Intelligence Chatbots. Future Internet 2024, 16, 218.

- Lai, J.W.; Qiu, W.; Thway, M.; Zhang, L.; Jamil, N.B.; Su, C.L.; Ng, S.S.H.; Lim, F.S. Leveraging Process-Action Epistemic Network Analysis to Illuminate Student Self-Regulated Learning with a Socratic Chatbot. J. Learn. Anal. 2025, 12, 32–49.

- Ramandanis, D.; Xinogalos, S. Designing a Chatbot for Contemporary Education: A Systematic Literature Review. Information 2023, 14, 503.

- Yetişensoy, O.; Karaduman, H. The effect of AI-powered chatbots in social studies education. Educ. Inf. Technol. 2024, 29, 17035–17069.

- Yang, S.J.; Ogata, H.; Matsui, T.; Chen, N.S. Human-centered artificial intelligence in education: Seeing the invisible through the visible. Comput. Educ. Artif. Intell. 2021, 2, 100008.

- Al-Hafdi, F.S.; AlNajdi, S.M. The effectiveness of using chatbot-based environment on learning process, students’ performances and perceptions: A mixed exploratory study. Educ. Inf. Technol. 2024, 29, 20633–20664.

- Kumar, J.A. Educational chatbots for project-based learning: Investigating learning outcomes for a team-based design course. Int. J. Educ. Technol. High. Educ. 2021, 18, 65.

- Qiu, W.; Su, C.L.; Jamil, N.B.; Ng, S.S.; Chen, C.M.; Lim, F.S. “I am here to guide you”: A detailed examination of late 2023 Gen-AI tutors capabilities in stepwise tutoring in an undergraduate statistics course. In Proceedings of the 18th International Technology, Education and Development Conference, Valencia, Spain, 4–6 March 2024; Volume 1, pp. 3761–3770.

- Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM 1966, 9, 36–45.

- Colby, K.M. Ten criticisms of parry. ACM SIGART Bull. 1974, 48, 5–9.

- Hoy, M.B. Alexa, Siri, Cortana, and More: An Introduction to Voice Assistants. Med Ref. Serv. Q. 2018, 37, 81–88.

- Rapp, A.; Curti, L.; Boldi, A. The human side of human-chatbot interaction: A systematic literature review of ten years of research on text-based chatbots. Int. J. Hum.-Comput. Stud. 2021, 151, 102630.

- Ranoliya, B.R.; Raghuwanshi, N.; Singh, S. Chatbot for university related FAQs. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 1525–1530.

- Mckie, I.A.S.; Narayan, B. Enhancing the Academic Library Experience with Chatbots: An Exploration of Research and Implications for Practice. J. Aust. Libr. Inf. Assoc. 2019, 68, 268–277.

- Rawat, S.; Mittal, S.; Nehra, D.; Sharma, C.; Kamboj, D. Exploring the Potential of ChatGPT to improve experiential learning in Education. In Proceedings of the 5th International Conference on Information Management & Machine Intelligence, Jaipur, India, 23–25 November 2023; pp. 1–8.

- Martinez-Requejo, S.; Jimenez García, E.; Redondo Duarte, S.; Ruiz Lázaro, J.; Puertas Sanz, E.; Mariscal Vivas, G. AI-driven student assistance: Chatbots redefining university support. In Proceedings of the 18th International Technology, Education and Development Conference, Valencia, Spain, 4–6 March 2024; Volume 1, pp. 617–625.

- Lin, M.P.C.; Chang, D. CHAT-ACTS: A pedagogical framework for personalized chatbot to enhance active learning and self-regulated learning. Comput. Educ. Artif. Intell. 2023, 5, 100167.

- Chen, Y.; Jensen, S.; Albert, L.J.; Gupta, S.; Lee, T. Artificial Intelligence (AI) Student Assistants in the Classroom: Designing Chatbots to Support Student Success. Inf. Syst. Front. 2022, 25, 161–182.

- Digout, J.; Samra, H.E. Interactivity and Engagement Tactics and Tools. In Governance in Higher Education; Springer: Cham, Switzerland, 2023; pp. 151–169.

- Sweller, J. Cognitive Load During Problem Solving: Effects on Learning. Cogn. Sci. 1988, 12, 257–285.

- Schmidhuber, J.; Schlogl, S.; Ploder, C. Cognitive Load and Productivity Implications in Human-Chatbot Interaction. In Proceedings of the 2021 IEEE 2nd International Conference on Human-Machine Systems (ICHMS), Magdeburg, Germany, 8–10 September 2021; pp. 1–6.

- Amin, M.S. Organizational Commitment, Competence on Job Satisfaction and Lecturer Performance: Social Learning Theory Approach. Gold. Ratio Hum. Resour. Manag. 2022, 2, 40–56.

- Ruan, S.; Jiang, L.; Xu, J.; Tham, B.J.K.; Qiu, Z.; Zhu, Y.; Murnane, E.L.; Brunskill, E.; Landay, J.A. QuizBot: A Dialogue-based Adaptive Learning System for Factual Knowledge. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–13.

- Cukurova, M.; Kent, C.; Luckin, R. Artificial intelligence and multimodal data in the service of human decision-making: A case study in debate tutoring. Br. J. Educ. Technol. 2019, 50, 3032–3046.

- Huang, W.; Hew, K.F.; Fryer, L.K. Chatbots for language learning—Are they really useful? A systematic review of chatbot-supported language learning. J. Comput. Assist. Learn. 2021, 38, 237–257.

- Siemens, G. Learning Analytics: The Emergence of a Discipline. Am. Behav. Sci. 2013, 57, 1380–1400.

- Gašević, D.; Dawson, S.; Siemens, G. Let’s not forget: Learning analytics are about learning. TechTrends 2014, 59, 64–71.

- Ninaus, M.; Sailer, M. Closing the loop—The human role in artificial intelligence for education. Front. Psychol. 2022, 13, 956798.