Abstract

The rapid evolution of cyber threats, particularly Advanced Persistent Threats (APTs), poses significant challenges to the security of information systems. This paper explores the pivotal role of Artificial Intelligence (AI) in enhancing the detection and mitigation of APTs. By leveraging machine learning algorithms and data analytics, AI systems can identify patterns and anomalies that are indicative of sophisticated cyber-attacks. This study examines various AI-driven methodologies, including anomaly detection, predictive analytics, and automated response systems, highlighting their effectiveness in real-time threat detection and response. Furthermore, we discuss the integration of AI into existing cybersecurity frameworks, emphasizing the importance of collaboration between human analysts and AI systems in combating APTs. The findings suggest that the adoption of AI technologies not only improves the accuracy and speed of threat detection but also enables organizations to proactively defend against evolving cyber threats, potentially reducing alert volume by about 75%.

1. Introduction

Artificial Intelligence (AI) systems differ from traditional rule-based software by learning from data to identify patterns and inform decisions. This capability makes AI a strong candidate for automating the detection of Advanced Persistent Threats (APTs), a class of multi-stage, stealthy attacks that increasingly target enterprise and government networks. These threats often result in the unauthorized extraction of sensitive data, such as intellectual property or national security intelligence.

APT detection involves distinct, yet interrelated challenges primarily addressed by two types of systems. Intrusion Detection Systems (IDSs) detect early-stage activities such as reconnaissance and malware deployment. Meanwhile, User and Entity Behavior Analytics (UEBA) identifies abnormal user behaviors linked to data theft or lateral movement. Despite these advancements, APT detection remains semi-automated, requiring analysts to manually review semi-structured alerts that describe potentially suspicious events with varying confidence levels and metadata.

These alerts—generated across diverse systems—often pertain to the same underlying incident, necessitating manual correlation, prioritization, and investigation. Analysts must identify which incidents require attention, determine root causes, and recommend remediation actions. This process is resource-intensive and susceptible to alert fatigue.

To address these challenges, the **RANK architecture** offers a structured AI-based framework that enhances automation in APT detection workflows. It consists of four core components: Rank (prioritize alerts by threat likelihood), Aggregate (group related alerts into incidents), Normalize (standardize data formats from multiple sources), and Knowledge (integrate contextual threat intelligence). By applying RANK, security operations can streamline alert handling, reduce false positives, and focus analysts on high-risk threats, enabling a more scalable and effective cybersecurity response.
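The four components described above can be illustrated with a minimal sketch. It is not the RANK implementation itself: the raw record fields (`src_host`, `priority`, `entity`, `risk`), the severity scaling, and the fixed watchlist bonus are all hypothetical choices made only to show how normalization, aggregation, contextual knowledge, and ranking might fit together.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    source: str
    host: str
    timestamp: float
    severity: float  # common scale, 0.0 (benign) to 1.0 (critical)


def normalize_ids(raw: dict) -> Alert:
    # Hypothetical IDS record: priority 1 (highest) to 4 (lowest).
    return Alert("ids", raw["src_host"], float(raw["ts"]), (5 - raw["priority"]) / 4)


def normalize_ueba(raw: dict) -> Alert:
    # Hypothetical UEBA record: risk score from 0 to 100.
    return Alert("ueba", raw["entity"], float(raw["time"]), raw["risk"] / 100)


def aggregate(alerts):
    # Group alerts into incidents; here one incident per host (an assumption).
    incidents = {}
    for alert in alerts:
        incidents.setdefault(alert.host, []).append(alert)
    return incidents


def rank(incidents, watchlist):
    # Knowledge step: hosts on a threat-intelligence watchlist get a fixed bonus.
    def score(item):
        host, group = item
        base = max(a.severity for a in group)
        return base + (0.5 if host in watchlist else 0.0)

    ordered = sorted(incidents.items(), key=score, reverse=True)
    return [host for host, _ in ordered]


alerts = [
    normalize_ids({"src_host": "h1", "ts": 10, "priority": 1}),
    normalize_ueba({"entity": "h2", "time": 12, "risk": 60}),
]
incidents = aggregate(alerts)
```

Calling `rank(incidents, watchlist={"h2"})` places `h2` ahead of `h1` because of the watchlist bonus, whereas an empty watchlist leaves the ordering to severity alone.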

2. Advanced Persistent Threats (APTs)

Advanced Persistent Threats (APTs) have become a buzzword in recent years among security professionals and researchers. In this paper, APTs are defined as sophisticated, multi-step, resourceful, and targeted attacks orchestrated against government and enterprise networks. They are called “Persistent” because a successful attack might remain undetected for years, navigating around different detection systems and aiming toward a target of high value, such as retrieving sensitive information and documents [1]. Examples of such attacks include APT28, APT29, and many others. APTs are usually orchestrated by hackers with vast resources and funding from governments or institutions. APT detectors capitalize on knowledge of the underlying threat and dedicate substantial resources to thwart the attack while it is being conducted. Detecting APTs is a challenging task that cannot be solved perfectly in an automated fashion. However, vendor-agnostic User and Entity Behavior Analytics (UEBA) systems can analyze security data based on simple cut-off rules combined with machine learning and statistical techniques. In addition, Intrusion Detection Systems (IDSs) can detect network-based attacks against a wider scope of threats due to the abundance of sensors.

UEBA systems and other data sources can produce 5000 alerts a day in a medium-scale enterprise, while only a few dozen cases are actually investigated; roughly 99% of these alerts are false positives or irrelevant incidents. This creates an alert overload problem that hampers the detection of APTs. Analysts want to focus as soon as possible on the incidents that matter, such as breaches that core products fail to detect. APT detection is a suitable problem for automation through Artificial Intelligence (AI). Typically, AI adopts a multi-step approach, in which alerts enter as raw input and are ultimately output as incidents of interest. This common approach is used in most of the literature within the security domain and beyond.
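The figures quoted above can be checked with a short calculation. The alert volume and false-positive share come from the text; the two minutes of review time per alert is purely an assumed value, added to give a sense of the manual workload.

```python
def triage_load(alerts_per_day, false_positive_rate, minutes_per_alert):
    """Split a daily alert volume into false and relevant alerts.

    minutes_per_alert is an assumed manual review cost, not a measured value.
    """
    false_alerts = round(alerts_per_day * false_positive_rate)
    relevant = alerts_per_day - false_alerts
    review_hours = alerts_per_day * minutes_per_alert / 60
    return {
        "false_alerts": false_alerts,
        "relevant": relevant,
        "review_hours": review_hours,
    }


load = triage_load(5000, 0.99, minutes_per_alert=2)
print(load["false_alerts"], load["relevant"])  # 4950 50
```

The 50 remaining alerts are consistent with the "few dozen" investigated cases, while reviewing all 5000 alerts by hand would, under the assumed review time, take well over a hundred analyst hours per day.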

The exponential growth of technology has drastically changed the way modern governments and enterprises operate. Services such as cloud computing, inter-organizational collaboration, and mobile clients provide organizations with fresh opportunities, but they also give skilled adversaries access to sophisticated techniques to attack their targets. An Advanced Persistent Threat (APT) is a multi-step attack that is planned and executed by skilled adversaries against modern government and enterprise networks. APTs are a significant issue in the information security domain, which has led to an increasing demand for efficient techniques to aid security analysts in their detection. While a variety of techniques have been presented in the literature to detect APTs, Intrusion Detection Systems (IDSs) and User and Entity Behavior Analytics (UEBA) are commonly employed in practice [1].

As the sophistication of attacks grows, so does the amount of data generated by security tools in response to events occurring in the network. Although this data is extensively processed and reduced, it still arrives at an enormous volume and speed, which poses a challenge. An analyst monitoring this stream of alarms must decide what to investigate before the alarm becomes stale. With the number of alerts increasing daily, hundreds of thousands could be triggered by devices in a network. Analyzing this data requires deploying various cyber-situation-aware systems to process the alerts. Currently, such tools do not coordinate their responses, leading to missed alerts or time wasted on alerts that have already been investigated. Additionally, a single event can trigger hundreds of detections for multiple reasons, many of them false positives. Because any of these may still be a valid alert, human feedback is necessary to fine-tune the systems. Below, a sandbox is introduced to verify suspicious files.

To assist security analysts in detecting APTs, the authors present the first study and implementation of an end-to-end AI-assisted architecture for identifying Advanced Persistent Threats (APTs). One of the benefits of this architecture is automation, with a focus on APTs as attacks that typically occur over a longer duration and exhibit gradual patterns, rather than on very tactical threats like zero-day vulnerabilities, which are instantaneous events. The architecture’s goal is to automate the complete pipeline from data sources, such as IDS and UEBA systems, to a final set of incidents (vulnerabilities, compromised hosts, etc.) for analyst review.

3. APTs Specific Characterization

3.1. Definition and Characteristics

Advanced Persistent Threats (APTs) are stealthy, long-term network attacks in enterprise, commercial, and government environments that exploit vulnerabilities in technology, humans, and processes to gain an initial foothold. After identifying a feasible entry point, an APT group attempts to exfiltrate information from the target network and deploy “persistent” payloads to gather data in a largely unnoticed manner. In the enterprise and commercial domains, this security event space is of significant economic importance, whereas in the government domain, it may target national security events. Despite aiding in monitoring and safeguarding systems such as networks, databases, and codebases, Information Technology (IT) Operations, Security Operations (SecOps), and Application Developers face information overload due to the increasing number of alerts and incidents raised, which heightens workload and complicates the assessment of the salience and impact of information [1].

Detection technologies, such as Intrusion Detection Systems (IDSs) and User and Entity Behavior Analytics (UEBA), have been developed to help monitor and detect APT alerts. However, given the pace at which APT actions occur, the question of how to deal with excessive alerts for detection systems and technologies to be “useful” arises. The analyst of a detection system perceives and assesses alerts in different ways, and pre-screening alerts before review is almost always employed. Aliases, duplicates, and “low impact” events are filtered, either by using the detection system’s built-in capabilities or by sifting through and clustering alerts that share properties. Sophisticated (non-obvious) attacks increase the complexity involved in detection, making it challenging to differentiate between false positives, which constitute most alerts, and true positives (TPs) (high-impact events).
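The pre-screening step described above (dropping duplicates and low-impact events, then clustering alerts that share properties) could look roughly like the following sketch. The alert fields (`host`, `rule`, `timestamp`, `severity`) and the severity cut-off are hypothetical; real detection systems expose their own schemas and filtering options.

```python
from collections import defaultdict


def prescreen(alerts, min_severity=0.2):
    """Drop exact duplicates and low-impact alerts, then cluster the rest.

    Alerts are dicts with hypothetical keys: host, rule, timestamp, severity.
    Clusters are keyed by the shared (host, rule) pair.
    """
    seen = set()
    clusters = defaultdict(list)
    for alert in alerts:
        identity = (alert["host"], alert["rule"], alert["timestamp"])
        if identity in seen:
            continue  # exact duplicate of an alert already processed
        seen.add(identity)
        if alert["severity"] < min_severity:
            continue  # "low impact" event, filtered before review
        clusters[(alert["host"], alert["rule"])].append(alert)
    return dict(clusters)


raw_alerts = [
    {"host": "web01", "rule": "port-scan", "timestamp": 100, "severity": 0.6},
    {"host": "web01", "rule": "port-scan", "timestamp": 100, "severity": 0.6},
    {"host": "web01", "rule": "port-scan", "timestamp": 160, "severity": 0.7},
    {"host": "db02", "rule": "failed-login", "timestamp": 120, "severity": 0.1},
]
clusters = prescreen(raw_alerts)
```

Here the four raw alerts collapse into a single cluster of two alerts for `web01`; the duplicate and the low-severity login failure never reach the analyst.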

3.2. Historical Context

Advanced Persistent Threats (APTs) are increasingly proliferating as products due to their effectiveness and persistence against targets. APTs represent a category of sophisticated multi-step attacks or cyber-attacks that exploit various weaknesses in modern government and enterprise networks. They are regarded as one of the most advanced classes of attacks targeting sensitive data, often taking weeks to months to execute. Commonly exploited attack vectors include endpoint devices, web servers, domain controllers, and mail servers. Typically, an APT comprises multiple steps involving various actions to infiltrate the network, expand compromised access, and retrieve sensitive data for exfiltration. However, the multi-step nature of APTs presents a unique challenge for detection, making alert-generating detection methods such as Intrusion Detection Systems and User and Entity Behavior Analytics essential tools for security analysts in identifying APTs.

Detecting APTs has recently attracted attention. APT detection is recognized as a complex, long-standing problem with both technical and non-technical causes. As it involves sequential data with patterns that can be learned from observations, the issue became a candidate for automation through Artificial Intelligence. Given the large and growing need for detection, AI-assisted APT detection has been explored. This includes the first study and implementation of an end-to-end AI-assisted architecture for detecting APTs. As reported, this architecture automates the complete pipeline from data sources to a final set of incidents for analyst review, including alert templating and merging, alert graph construction, alert graph partitioning into incidents, and incident scoring and ordering.
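The later stages of this pipeline (alert graph construction, partitioning into incidents, and incident scoring and ordering) can be sketched as follows. This is an illustration under simplifying assumptions rather than the reported architecture: alerts are linked whenever they share an entity, incidents are the connected components of the resulting graph, and an incident's score is the sum of its alert scores. Alert templating and merging are omitted.

```python
from collections import defaultdict


def build_alert_graph(alerts):
    """alerts: dict of alert id -> {"entities": set, "score": float}."""
    by_entity = defaultdict(list)
    for alert_id, alert in alerts.items():
        for entity in alert["entities"]:
            by_entity[entity].append(alert_id)
    graph = {alert_id: set() for alert_id in alerts}
    for ids in by_entity.values():
        for i in ids:
            graph[i].update(j for j in ids if j != i)
    return graph


def partition(graph):
    """Return the connected components of the alert graph as incidents."""
    seen, incidents = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(graph[node] - component)
        seen |= component
        incidents.append(component)
    return incidents


def score_and_order(incidents, alerts):
    """Score each incident by summed alert score, highest first."""
    scored = [
        (sum(alerts[a]["score"] for a in incident), sorted(incident))
        for incident in incidents
    ]
    return sorted(scored, reverse=True)


alerts = {
    "a1": {"entities": {"host1", "user1"}, "score": 0.5},
    "a2": {"entities": {"user1", "host2"}, "score": 0.4},
    "a3": {"entities": {"host3"}, "score": 0.7},
}
ordered = score_and_order(partition(build_alert_graph(alerts)), alerts)
```

In this example `a1` and `a2` share `user1` and form one incident that outranks the isolated alert `a3`, so the analyst reviews two incidents instead of three alerts.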

This architecture is evaluated against a dataset and a real-world private dataset from a medium-scale enterprise. Extensive results show a three-order-of-magnitude reduction in the amount of data to be reviewed by the analyst compared to the non-AI-assisted method. The contributions of the proof-of-concept implementation and the dataset are further described.

3.3. Impact on Organizations

Advanced Persistent Threats (APTs) are stealthy environmental attacks targeting modern-day enterprises and government networks. Infection is sought through vulnerabilities in systems or social engineering, followed by lateral movement across the network. The prolonged period during which APTs can remain undiscovered allows them to harvest vast amounts of data from enterprise networks. In addition to the high costs associated with inefficient detection, data leaks caused by advanced persistent threats pose a much greater risk to personal privacy and national security. Therefore, the development of ideas and tools for detecting APTs has become a significant topic in both academia and industry.

Due to network compromises occurring over a series of stages lasting from days to months, detecting APTs is a challenging task. Even security analysts armed with many tools and alert reports suffer from detection overload. With a multitude of guesses at hand, determining which to focus on can be a grueling task. Traditional evaluation methods that rely on rule-based systems become costly as the number of possible alerts increases exponentially with the number of inputs into the detection pipeline. To effectively detect APTs, traditional rule-based IDSs must be augmented with the ability to learn from experience. In recent years, the rise in complex and highly interconnected systems such as the Internet, social networks, and the power grid has resulted in increased interest in unprecedented emergent global behaviors and vulnerabilities.

In this paper, key challenges of detecting APTs are addressed. APT detection options are surveyed, and practical and transient APT detection queries are considered in general terms. A proof-of-concept tool is shown, called the indicators of compromise graph analysis tool (IGAT), capable of demonstrating query formulation and result exploration for rapid investigation of vast amounts of security alerts related to APT detections. Novel approaches to better leverage, visualize, and analyze large volumes of alerts, logs, and system performance data within temporal windows of interest are discussed. It is concluded that to enable the systematic detection of subtle APT behaviors across complex systems at unprecedented scales, significant advances in query expressiveness and implementation efficiency, as well as the seamless exploitation of relevant domain knowledge, are paramount [2].

4. Artificial Intelligence Overview

Artificial Intelligence (AI) is not merely a futuristic concept or buzzword—it is a rapidly maturing discipline that is quietly transforming the backbone of critical industries. At its core, AI refers to systems designed to simulate cognitive functions such as learning, reasoning, and self-correction. Yet, the most significant evolution in AI lies not in theoretical models but in its increasingly seamless integration into practical workflows across business, science, and public infrastructure.

Modern AI systems typically operate on vast datasets that require preprocessing, labeling, and feature engineering, processes that were once the domain of seasoned data scientists but are now increasingly automated. This shift has been driven in part by advances in neural network architectures (e.g., transformers and convolutional networks) and frameworks like TensorFlow and PyTorch, which enable scalable model deployment. However, these tools are only as effective as the data they are trained on, raising new concerns about bias, ethics, and explainability.

For instance, in financial services, AI is not just about chatbots or fraud detection; it powers real-time credit scoring and algorithmic compliance auditing—systems that can adjust dynamically to regulatory changes. In precision agriculture, AI-driven drones combine real-time imaging with plant health models to recommend hyper-localized irrigation strategies, directly impacting yields and sustainability. Meanwhile, in national defense, AI models are being trained on classified surveillance feeds to autonomously identify emerging threats, which raises security and accountability challenges.

Crucially, the rapid adoption of AI has outpaced the development of corresponding governance. While responsible AI frameworks are emerging—focusing on fairness, transparency, and robustness—most organizations are still in the early stages of maturity, often deploying AI in silos without clear oversight.

The true challenge ahead lies not in building more powerful AI systems but in integrating AI in ways that align with human values, mitigate risks, and augment rather than replace expertise. Whether managing urban traffic through predictive analytics or detecting early-stage cancers using multimodal imaging, the future of AI will be shaped not just by innovation but also by intentional, cross-disciplinary collaboration.

Artificial Intelligence in healthcare is moving well beyond pilot projects. In radiology, tools like Aidoc and Zebra Medical Vision are FDA-cleared AI systems used in hospitals to flag intracranial hemorrhages, pulmonary embolisms, and fractures in real time. These systems process medical imaging faster than human radiologists, acting as a triage layer to reduce diagnostic latency in emergency settings. For instance, Mount Sinai Hospital in New York uses Aidoc in its ER to cut turnaround time on critical scans by over 30%, helping to prevent delayed treatment in stroke or trauma cases. In pathology, Paige.AI leverages whole-slide imaging and deep learning models to detect prostate and breast cancer metastasis. A clinical trial published in the journal JAMA Oncology (2000) demonstrated that AI-assisted detection had a higher sensitivity than general pathologists working alone, without a reduction in specificity [3].

AI is also driving drug discovery. For example, Insilico Medicine used generative adversarial networks (GANs) to identify a novel preclinical drug candidate for fibrosis in less than 46 days, a timeline that traditionally spans years. The molecule is now undergoing preclinical validation. This reduction in time and cost has caught the attention of pharmaceutical giants like Pfizer and Roche, which are integrating similar technologies into early R&D pipelines.

However, deployment challenges persist, namely data silos, inconsistent labeling, and regulatory inertia. Many AI models still underperform when exposed to diverse patient populations, highlighting the critical need for more representative datasets and rigorous external validation, not just internal benchmarks.

AI in education is not about replacing teachers but enhancing instructional personalization and administrative efficiency. Carnegie Learning’s MATHia, a platform grounded in cognitive science and Bayesian Knowledge Tracing, is used in several U.S. school districts to provide real-time feedback and adaptively recommend content. A RAND Corporation study (2014) found that students using adaptive software like MATHia made learning gains equivalent to roughly 1.5 months of additional instruction time over a school year [4].

In higher education, AI is being used for student risk prediction. Georgia State University deployed a predictive analytics model that monitors over 800 indicators (including login frequency, assignment submissions, and class attendance). By flagging at-risk students early and triggering advisor interventions, the university increased its graduation rate by more than 20% over a decade while significantly narrowing achievement gaps across demographics.

Natural Language Processing (NLP) tools such as GrammarlyEDU or Turnitin’s Authorship Investigation system now analyze linguistic style and usage patterns to identify potential cases of contract cheating—a growing concern in digital education.

Despite these advances, AI in education struggles with privacy issues under FERPA, interpretability of model predictions, and bias in recommendation engines that could inadvertently reinforce existing academic inequalities. Moreover, much of the AI deployed is proprietary and lacks transparency regarding algorithm design.

In cybersecurity, AI has become a critical component in responding to increasingly sophisticated, multi-vector attacks. Darktrace, based on a model of the human immune system, uses unsupervised learning to establish “normal” patterns of behavior in enterprise networks and detect subtle anomalies indicative of insider threats or zero-day exploits. It played a key role in identifying the Sunburst malware during the SolarWinds supply chain attack by recognizing deviations in device communications before official patches were released.
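The general idea of learning a baseline of "normal" behavior and flagging deviations can be shown with a deliberately simple sketch. It is not Darktrace's method, which is proprietary; it assumes a single numeric feature (for example, outbound connections per hour for one device) and a z-score threshold of 3, both chosen only for illustration.

```python
from statistics import mean, stdev


def fit_baseline(values):
    """Learn a per-device baseline (mean, standard deviation) from history."""
    return mean(values), stdev(values)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu  # no variation seen: any change is a deviation
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly outbound-connection counts observed for one device.
history = [100, 102, 98, 101, 99]
baseline = fit_baseline(history)
```

With this history, a reading of 101 falls within normal variation, while a reading of 150 is flagged. No labeled attack data is needed, which is what makes this family of techniques applicable to previously unseen behavior.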

Another concrete implementation is MIT’s AI2 platform, developed in partnership with CSAIL and PatternEx. It combines supervised learning with analyst feedback to improve over time, reducing false positives by 85% compared to traditional rule-based Intrusion Detection Systems (IDSs).

In industrial settings, AI is deployed to secure Operational Technology (OT) and Industrial Control Systems (ICS). For instance, Nozomi Networks uses AI-based traffic analysis to detect anomalies in SCADA systems. During a real-world case in a European power plant, Nozomi’s system detected subtle timing deviations in Modbus traffic, which turned out to be a sophisticated timing-based attack aimed at turbine manipulation.

Still, cybersecurity AI systems face limitations in context awareness, susceptibility to adversarial machine learning, and challenges in alert triage automation. Additionally, effective deployment requires constant model tuning and a robust pipeline for continuous learning, as cyber threats evolve faster than static training data can accommodate.

5. Definition and Types of AI

In several recent works, Autonomous Attack Behaviors and Advanced Persistent Threats (APTs), understood in this paper as non-detectable model-based attacks, were proposed. APTs differ from traditional intrusion detection use cases by employing sophisticated evasion techniques. Attackers maintain a foothold on the targeted system for a prolonged period to gather the desired data or information. With limited, abstract representations of APTs, this paper aims to explore the type of AI required to detect APTs. This will include an elaborate example of an APT scenario and various approaches based on the available systems, data, and labeling capabilities.

For the purposes of this paper, AI is loosely defined by its ability to predict observations given a set of $H_i \times R_i$ (referred to as an AI model). Here, $H_i$ likely consists of previously encountered observation sequences affecting $R_i$ or the system’s behavior over time. $R_i$ is a set of user-defined states. An observation is a set of outputs over the underlying variables (attributes). It is assumed that $H_i$, which can comprise millions of observations, is fed to an AI model or method prior to prediction.

AI models can take various forms, including rules-based, statistical, linguistic, symbolic, and deep learning. In any paper discussing AI’s detection of patterns in a dynamic, complex problem domain such as computer systems under attack, this classification provides an excellent starting framework. In this paper, a nested classification of AI types is discussed in detail. The expanding inner model and complexity dimensions provide insights into the types of AI based on their availability, capabilities, and the complexity of the problem domain. Thus, the responses vary concerning changes in these dimensions. A parameterization of AI type to director and eliminator responses is proposed. Additionally, the inspection and reductive events are examined. Discussions are provided on implementing broader AI to detect APTs based on observations.

5.1. Machine Learning vs. Traditional Methods

The Digital Age has transformed intelligence gathering. Examples of disinformation campaigns and online harassment by APT (Advanced Persistent Threat) actors indicate that malicious actors have switched from traditional means of attack to unorthodox methods. The former are typically pursued by Security Operations Centers (SOCs) using security information and event management (SIEM) systems with user-defined rules to monitor domain activity [1]. However, attack campaigns are never static in their behavior. APT actors constantly change their Tactics, Techniques and Procedures (TTPs) to adapt to their targets and attack surfaces. Consequently, user-defined monitoring rules become ineffective and challenging to maintain over the long term.

AI (Artificial Intelligence), a revolutionary field of study, focuses on creating intelligent entities that can automate complex tasks. In recent years, academic research in AI has seen rapid development, encompassing predictive police analytics, cyber threat intelligence, and cyber incident response. AI has become a widely propagated buzzword, particularly in the realm of cybersecurity. Companies have commercialized technical solutions using a simplified version of AI. However, despite the presence of criminally operated black markets, commercially supported cybersecurity solutions remain inefficient and fail to detect threats adequately.

Whether due to large event volumes, ineffective user-defined algorithms, or excessive false positives, in this astonishingly flawed landscape, Russian state-controlled cyber entities operate in NATO member states, conducting a range of cyber operations from ransomware attacks on critical infrastructure to undermining political, economic, and social stability [5]. The concept of AI, or more specifically the AI-assisted architecture, operates independently of monitoring and learning precursors. It is an oversimplification of a much more complex concept that could also incorporate software solutions and rule-based engines working in unison with meticulously crafted algorithms by highly skilled professionals such as threat analysts or threat retained.

5.2. Current Trends in AI

AI-based approaches to cybersecurity are prevalent. However, in recent years, there has been growing interest in replicating human cognition-driven strategies for defense mechanisms. This premise has led developers to turn to AI programming techniques, particularly reinforcement learning concepts, as starting points for creating fully automated systems. Nonetheless, a key aspect remains unaddressed in the conventional three stages of the Cyber Kill Chain. After detection and response, the necessity to regularly test these security systems arises to prevent weaknesses and blind trust in future defense systems [6]. Intrusion Detection Systems (IDSs) are among the oldest and most researched security mechanisms in cybersecurity. As the cybersecurity landscape evolves, so do the strategies and methods of adversaries. A new generation of advanced attacks, known as Advanced Persistent Threats (APTs), comprises sophisticated multi-step attacks aimed at stealing sensitive information or damaging modern government and enterprise networks [1]. To detect these threats, context-aware monitoring tools such as User and Entity Behavior Analytics (UEBA) systems have been developed. However, the investigation of an APT incident remains a manual process due to the complexity of the attacks and the large volumes of alerts generated by detection tools. With recent advancements and investments in improving AI, its automation capabilities for APT detection have gained attention. This paper was motivated by the need to transfer at least part of the analyst’s inspection work to automation using AI. It represents the first study and implementation of an autonomous AI-assisted end-to-end architecture for detecting APTs, termed branch-AI. This architecture aims to automate the entire detection pipeline. The AI tool will provide a list of incidents for the analyst to review instead of a list of alerts or nodes in the alert graph. 
The architecture comprises five main modules: graph construction; alert classification; incident scoring and ordering; human analyst review; and database.

6. Research Design

As malicious software (malware) becomes more sophisticated, Advanced Persistent Threats (APTs) have emerged as a new cyber threat to modern enterprise networks. Starting from a purposeful compromise of network elements, APTs begin the unauthorized gathering of sensitive information and data from organizations for information selling, utilization, or broader espionage purposes. APTs target all types of commercial and governmental organizations. The general end-to-end flow of APTs can be divided into the reconnaissance phase, establishment of command and control, gathering, and exfiltration of collected information. Each phase of this flow serves as a prerequisite needed to subjugate the targeted network element and achieve the goal of data exfiltration. The prolonged nature of the attack flow, lasting days or weeks, encourages multi-step operations involving different actors. Due to the relatively lengthy nature of APT attacks, the usual timing signature for IDSs to trigger alerts about anomalous behavior is insufficient [1]. Solely relying on behavioral deviations to detect APTs is inadequate since all standard security practices must be unwound and manipulated to allow unmonitored access for the attacking threat agent. User and Entity Behavior Analytics (UEBA) systems, which employ machine learning methods to understand and learn the behaviors of audited network users and devices, are often combined with these systems to enhance detection capabilities. The lengthy APT life cycle and emphasis on UEBA and IDS can overwhelm analysts with alerts. Consequently, solely relying on the temporal alert stream for data analysis and aggregation may fail to detect the APT in its early phases. The challenge of APT detection may be a candidate for automation through Artificial Intelligence (AI) systems.

7. Data Collection Methods

7.1. Dataset Description

To evaluate the automatic detection of Advanced Persistent Threats (APTs) in network log data, the researchers developed a functional testbed that simulates a realistic work environment where APTs could occur. Under the open world assumption, the system was trained on benign data with no examples of APT incidents and tested on real-world APT events identified by expert analysts.

The data set consisted of log records, including host-level, network-level, and event-based data, accompanied by contextual annotations for security-relevant behavior. Specific subnets that generated alerts due to suspicious activity—yellow440, yellow219, yellow849, and yellow858—were analyzed in detail to extract statistical features contributing to anomaly detection (Table 1).

Table 1. Dataset statistics summary.

The dataset included metadata such as the following:

- APT descriptions and event timelines (commands and IPs excluded);

- Mapping from local structured logs to partner event sequences;

- Obfuscation methods, malware presence, and temporal complexity.

A total of 482 APT incidents were modeled to simulate real attack behavior. The longest case lasted 4 days, and the average attack chain contained about 21 event sequences, with 23.8 APT-specific actions per case.

7.2. Feature Extraction

The input data consisted of flat log files converted into vector representations using word embedding techniques. The feature extraction approach simplified the externalization process by omitting the first four categories of feature values. Features 5 through 11 accounted for only a small fraction of the total feature set and were verified only for one category factor, suggesting focused yet limited categorical diversity. To support broad feature learning, feature extraction trees were constructed to capture nested event structures. These were processed with simplified models that required minimal hyperparameter tuning yet were capable of extracting thousands of distinct event features.

8.1. Experiment Setup

The system was evaluated using learners trained exclusively on synthetic benign data, ensuring no APT incidents influenced the training process. Two model architectures were explored for sequence-based learning: LSTM (Long Short-Term Memory) and BiLSTM (Bidirectional LSTM). N-gram models were also used as baselines for feature sequencing. Hyperparameters for each model were tuned independently to find optimal default values. To assess robustness and generalization capability, an alternative dataset was introduced for cross-dataset testing, also without any APT instances in training. Network perturbations and noise injections were applied to test the noise tolerance of the models. Robustness was further examined by tuning model architecture and applying failure-case stress tests.
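To make the benign-only training setup concrete, the following is a minimal sketch of an n-gram baseline of the kind mentioned above. It is an illustration, not the evaluated system: event names are hypothetical, the model is a plain count table of n-grams seen in benign sequences, and the anomaly score is simply the fraction of n-grams in a test sequence that never occurred in training.

```python
from collections import Counter


def ngrams(sequence, n):
    """Return all contiguous n-grams of an event sequence as tuples."""
    return [tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1)]


def train(benign_sequences, n=2):
    """Count the n-grams observed in benign-only training sequences."""
    counts = Counter()
    for sequence in benign_sequences:
        counts.update(ngrams(sequence, n))
    return counts


def anomaly_score(sequence, model, n=2):
    """Fraction of n-grams in the sequence never seen during training."""
    grams = ngrams(sequence, n)
    if not grams:
        return 0.0  # sequence too short to form any n-gram
    unseen = sum(1 for gram in grams if gram not in model)
    return unseen / len(grams)


# Hypothetical benign event sequences; no attack data is used for training.
benign = [
    ["login", "read", "logout"],
    ["login", "read", "read", "logout"],
]
model = train(benign)
normal_score = anomaly_score(["login", "read", "logout"], model)
attack_score = anomaly_score(["login", "dump", "upload", "logout"], model)
```

A sequence composed entirely of familiar transitions scores 0.0, while one built from transitions never seen in benign data scores 1.0. An LSTM or BiLSTM plays the same role with a learned, rather than counted, model of likely next events.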

7.3. Results

The results underscore the viability of APT detection using models trained only on benign synthetic data under open-world conditions. The system successfully identified complex APT chains in real-world test logs, indicating effective generalization despite the absence of attack signatures during training.

Findings are consistent with prior research demonstrating the value of behavior-based models over signature-based ones, especially when attacks evolve or are obfuscated. The use of real-time, expert-labeled APT timelines further validates the practical relevance of the testbed and supports future adaptation in operational environments.

However, limitations remain in the following:

- Feature sparsity in categorical encoding.
- Simplified event representation potentially misses nuanced attacker behavior.
- Minimal validation on cross-industry or multilingual datasets.

Future research directions include the following:

- Integration with external threat intelligence feeds for adaptive retraining.
- Expanding feature categories to improve coverage of APT variants.
- Exploring transformer-based architectures for richer sequence modeling.

8. APT Detection System: From Raw Alerts to Final Incidents

As described in [1], Advanced Persistent Threats (APTs) are sophisticated, multi-stage attacks targeting government and enterprise networks. These threats are typically executed by highly skilled adversaries with ample resources and operate over extended periods. Unlike traditional attacks that rely on single-stage exploits, APT campaigns involve slow-moving, coordinated steps across multiple hosts, protocols, and user entities.

An Intrusion Detection System (IDS) plays a central role in recognizing the early signs of such intrusions. However, a major challenge arises from the high volume of alert generation. Well-tuned IDSs in enterprise environments can produce thousands of alerts per second, making real-time manual review infeasible. The scale of alerts depends on the number of monitored endpoints and the complexity of the network. As a result, alert clustering is employed to reduce noise by grouping related alerts into cohesive incidents.

User and Entity Behavior Analytics (UEBA) complements IDS by identifying anomalies in user behavior that may not match known attack signatures. UEBA systems are essential for APT detection, which often involves dormant and indirect compromise stages, requiring contextual and historical behavior modeling.

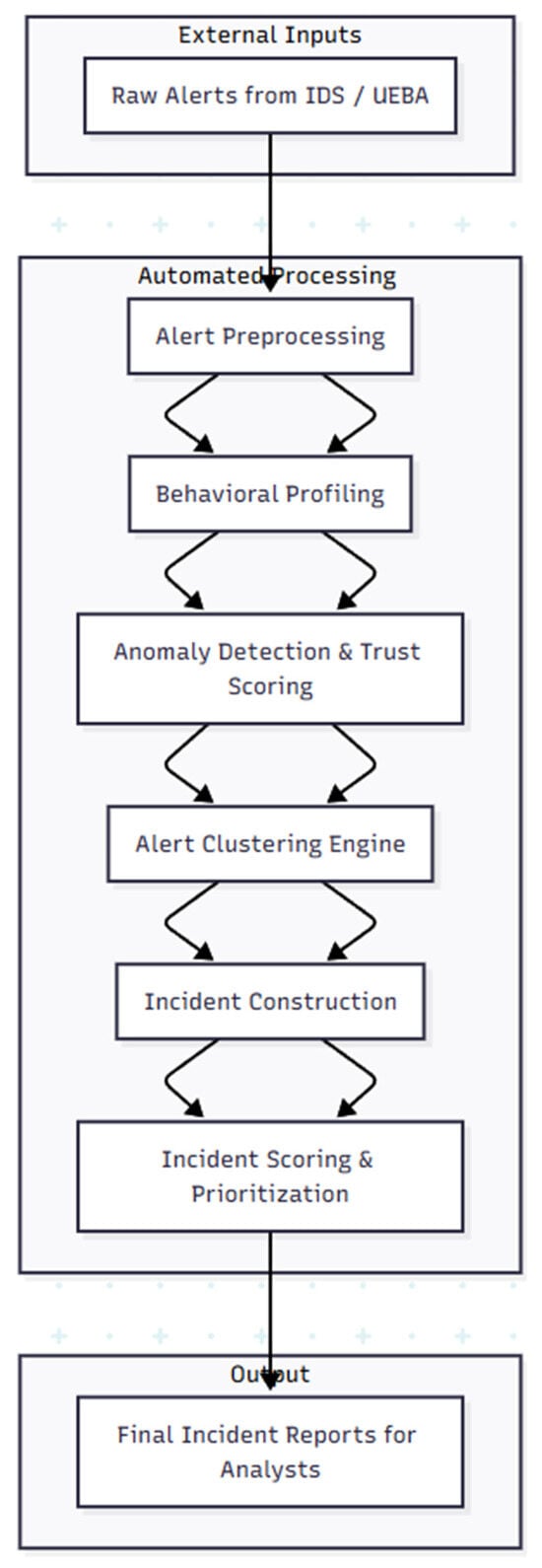

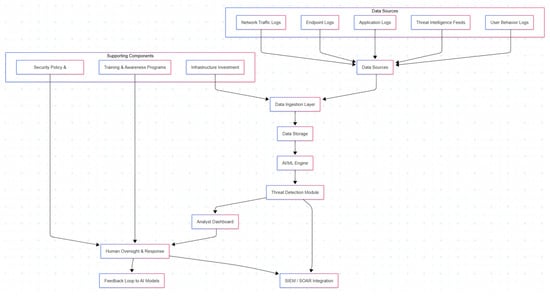

An automated architecture learns from historical alerts and incident data to improve APT detection. It assigns trust scores to new alerts, then uses these scores to prioritize and cluster alerts into probable APT incidents. The system must integrate IDS and UEBA outputs (Figure 1), apply statistical models, and continuously adapt to emerging threat patterns.

Figure 1.

System architecture diagram: raw alerts to final incidents.

Figure 1 presents a comprehensive overview of the proposed AI-assisted architecture designed to support the detection and triage of Advanced Persistent Threats (APTs). Unlike traditional detection approaches that rely heavily on human effort and static rules, this system embodies a dynamic, end-to-end process that systematically transforms a high volume of unstructured alerts into a small number of well-prioritized, actionable security incidents. The architecture leverages machine learning, behavioral modeling, and clustering algorithms to support security analysts in navigating complex threat environments.

The process starts with the ingestion of raw alerts, generated by two main types of cybersecurity tools: Intrusion Detection Systems (IDS) and User and Entity Behavior Analytics (UEBA). IDSs monitor network traffic and recognize known patterns of malicious activity, while UEBAs observe deviations from normal user and device behavior over time. Together, these systems can produce thousands—or even millions—of alerts per day in enterprise environments. However, most of these alerts are either redundant or of low importance, leading to what is commonly called alert fatigue among security professionals.

To address this challenge, the architecture adds an alert preprocessing stage. In this phase, the system performs key cleaning and normalization tasks. Duplicate alerts are eliminated, irrelevant or noisy data are filtered out, and timestamps are standardized to ensure temporal consistency across events from different sources. This step is crucial because it prepares the data for more advanced modeling and decreases computational load in subsequent processes.
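The deduplication and timestamp normalization steps can be sketched as follows. This is a minimal illustration, assuming alerts arrive as dictionaries with ISO 8601 timestamps; the field names and the duplicate key are hypothetical, and noise filtering is omitted.

```python
from datetime import datetime, timezone


def normalize_timestamp(ts: str) -> datetime:
    """Parse an ISO 8601 timestamp and convert it to UTC."""
    parsed = datetime.fromisoformat(ts)
    if parsed.tzinfo is None:
        # Assumption: timestamps without an offset are already in UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


def preprocess(raw_alerts):
    """Normalize timestamps and drop duplicates, keeping first occurrences."""
    seen = set()
    cleaned = []
    for alert in raw_alerts:
        ts = normalize_timestamp(alert["timestamp"])
        # Hypothetical duplicate key: same instant, source, event type, user.
        key = (ts, alert["src_ip"], alert["event_type"], alert["user"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({**alert, "timestamp": ts})
    return cleaned


raw = [
    {"timestamp": "2024-01-01T03:00:00+02:00", "src_ip": "10.0.0.5",
     "event_type": "login_failure", "user": "alice"},
    # Same event reported by a second sensor in a different time zone.
    {"timestamp": "2024-01-01T01:00:00+00:00", "src_ip": "10.0.0.5",
     "event_type": "login_failure", "user": "alice"},
    {"timestamp": "2024-01-01T01:05:00", "src_ip": "10.0.0.7",
     "event_type": "port_scan", "user": "bob"},
]
cleaned = preprocess(raw)
```

Normalizing before deduplicating matters here: the first two alerts only compare equal once both timestamps are expressed in UTC.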

After preprocessing, the system moves on to behavioral profiling. This stage involves creating baseline models of typical activity for each monitored user and device. These baselines are built using historical logs and are continuously updated to reflect legitimate behavioral changes. For example, if a user usually accesses email between 9:00 AM and 5:00 PM from a specific device and suddenly tries to download large amounts of data from an unfamiliar IP address at 3:00 AM, the system will flag this as potentially suspicious. Behavioral profiling is essential for identifying subtle anomalies that signature-based systems might miss.
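The working-hours example above can be expressed as a very small baseline model. The sketch below is illustrative only: it assumes a baseline made of the hours of day and source IPs previously seen for each user, whereas a production UEBA system would use richer statistical profiles.

```python
from collections import defaultdict


def build_baselines(history):
    """Build per-user baselines from (user, hour_of_day, src_ip) records."""
    baselines = defaultdict(lambda: {"hours": set(), "ips": set()})
    for user, hour, ip in history:
        baselines[user]["hours"].add(hour)
        baselines[user]["ips"].add(ip)
    return baselines


def deviations(baselines, user, hour, ip):
    """List the ways an event departs from the user's baseline."""
    profile = baselines.get(user)
    if profile is None:
        return ["unknown_user"]
    reasons = []
    if hour not in profile["hours"]:
        reasons.append("unusual_hour")
    if ip not in profile["ips"]:
        reasons.append("unfamiliar_ip")
    return reasons


# Hypothetical history: alice is active 09:00 to 16:59 from one workstation.
history = [("alice", hour, "10.0.0.5") for hour in range(9, 17)]
baselines = build_baselines(history)

normal = deviations(baselines, "alice", 10, "10.0.0.5")
night_download = deviations(baselines, "alice", 3, "203.0.113.9")
```

Updating the baseline with confirmed-legitimate events is what keeps the profile aligned with genuine behavioral changes.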

Once the behavioral baselines are established, the system performs anomaly detection and trust scoring. Each alert is examined using statistical models or machine learning algorithms that assign a trust score—a numerical value indicating the likelihood that the alert is a real threat. These scores are derived from various features, such as the alert’s rarity, its deviation from normal behavior, and its similarity to known threat patterns. Trust scoring is a key part of the architecture, as it provides a nuanced, probabilistic way to evaluate alerts that helps focus on meaningful signals rather than background noise.

The next phase is the alert clustering engine, which is responsible for grouping alerts into coherent clusters based on temporal proximity, shared entities (e.g., IP addresses, users, devices), and action types. This clustering is not a mere aggregation of alerts, but a carefully calculated process using similarity metrics—such as Jaccard similarity, cosine similarity, or overlapping time windows—to ensure that only truly related alerts are merged. By consolidating fragmented evidence into holistic units, the system uncovers broader patterns of malicious activity that may span hours, days, or even weeks.
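One way to combine two of the named criteria, shared entities and temporal proximity, is to gate a Jaccard similarity on a time window. The sketch below is an assumption about how such a metric could be composed; the one-hour window and the alert layout are hypothetical.

```python
def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def alert_similarity(x, y, window_seconds=3600):
    """Entity overlap between two alerts, or 0.0 if they are too far apart in time."""
    if abs(x["time"] - y["time"]) > window_seconds:
        return 0.0
    return jaccard(x["entities"], y["entities"])


# Hypothetical alerts: times are seconds since an arbitrary epoch.
a = {"time": 0, "entities": {"10.0.0.5", "alice", "host-1"}}
b = {"time": 600, "entities": {"10.0.0.5", "alice", "host-2"}}
c = {"time": 10_000, "entities": {"10.0.0.5", "alice", "host-1"}}

near = alert_similarity(a, b)   # two of four distinct entities are shared
far = alert_similarity(a, c)    # identical entities, but outside the window
```

A hard time gate is the simplest choice; slow-moving campaigns that span days would need a wider window or a decaying weight instead.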

These clusters are then passed to the incident construction module, which transforms the alert groupings into structured incidents. Each incident is interpreted as a potential phase within an APT lifecycle—such as initial compromise, lateral movement, data exfiltration, or persistence. This step adds semantic richness to raw technical data, making it more comprehensible for human analysts. The output is no longer a list of disconnected alerts, but a narrative thread that links disparate events into a plausible attack scenario.

To further assist analysts, the system uses incident scoring and prioritization. Incidents are assessed based on multiple factors: their trust scores, the number and severity of related alerts, the uniqueness of the behavior, and how well they match known attack tactics (such as those outlined by the MITRE ATT&CK framework). The outcome is a ranked list of incidents, with the most critical and credible threats at the top. This prioritization helps analysts concentrate on what matters most, boosting both efficiency and response time.
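The ranking step can be illustrated with a weighted score over the factors listed above. The weights, caps, and field names below are hypothetical and would need calibration against analyst feedback; the sketch only shows the shape of the computation.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    name: str
    mean_trust: float      # average trust score of member alerts, in [0, 1]
    alert_count: int       # number of related alerts
    max_severity: float    # highest alert severity, in [0, 1]
    novelty: float         # uniqueness of the behavior, in [0, 1]
    attack_matches: int    # matched MITRE ATT&CK techniques


def priority(incident, alert_cap=50, technique_cap=5):
    """Combine the factors into one score in [0, 1] (illustrative weights)."""
    volume = min(incident.alert_count, alert_cap) / alert_cap
    coverage = min(incident.attack_matches, technique_cap) / technique_cap
    return (0.35 * incident.mean_trust
            + 0.15 * volume
            + 0.20 * incident.max_severity
            + 0.10 * incident.novelty
            + 0.20 * coverage)


def rank(incidents):
    """Return incidents ordered from most to least critical."""
    return sorted(incidents, key=priority, reverse=True)


noisy = Incident("noisy-scanner", 0.3, 200, 0.2, 0.1, 0)
lateral = Incident("lateral-movement", 0.9, 40, 0.8, 0.7, 4)
ranked = rank([noisy, lateral])
```

Capping the alert count keeps a high-volume but low-trust source from outranking a smaller, more credible incident.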

Finally, the architecture results in the creation of final incident reports, which are accessible to human analysts through a graphical interface or automated reporting system. These reports include not only the structured incidents but also relevant metadata, confidence levels, timelines, and potential remediation steps. The goal is to provide a comprehensive yet concise view that supports informed decision-making in time-sensitive situations.

In summary, Figure 1 depicts a transformative architecture that redefines how alerts are handled in cybersecurity operations. Instead of overwhelming analysts with a flood of raw signals, it functions as an intelligent intermediary—filtering, contextualizing, and refining the input stream into a manageable set of high-quality outputs. By integrating data preprocessing, behavioral modeling, anomaly detection, clustering, and scoring, the system greatly reduces noise, improves interpretability, and ultimately enhances organizational resilience against Advanced Persistent Threats.

Description of each stage:

- Raw Alerts from IDS/UEBA: High-volume, unstructured data from detection systems.
- Alert Preprocessing: Noise filtering, deduplication, and timestamp normalization.
- Behavioral Profiling: Builds user/device activity baselines from past logs.
- Anomaly Detection and Trust Scoring: Assigns confidence levels to each alert using statistical/ML models.
- Alert Clustering Engine: Groups alerts based on entity, time, and action similarity.
- Incident Construction: Forms structured incidents representing a suspected APT stage.
- Incident Scoring and Prioritization: Ranks incidents based on risk level, novelty, and trust metrics.
- Final Incident Reports: Prioritized incidents presented to human analysts for triage and response.

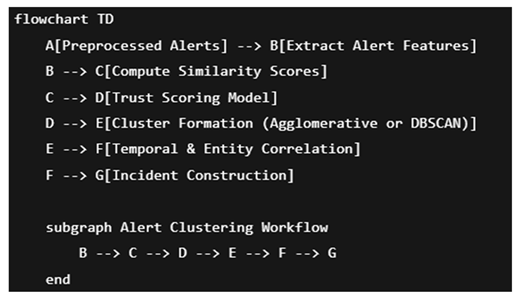

Block Diagram—Alert Clustering Method:

Key Steps in the Alert Clustering Pipeline:

- Feature Extraction: Extract metadata such as timestamp, source IP, destination IP, event type, and user ID.
- Similarity Computation: Calculate pairwise similarity using metrics like Jaccard, cosine, or time-window overlap.
- Trust Scoring: Assign scores using historical behavior profiles or anomaly detection outputs.
- Clustering Algorithm: Apply DBSCAN, agglomerative clustering, or hierarchical clustering based on similarity and trust score.
- Correlation Logic: Link clusters across time and entities to form full incidents.
- Output: Structured and prioritized incidents for analyst triage.

Pseudocode—Alert Clustering Algorithm:

- Input: List of preprocessed alerts
- Output: Incident clusters

```python
def cluster_alerts(alerts, similarity_threshold, trust_threshold):
    # Step 1: Score trust for each alert
    trust_scores = [compute_trust(alert) for alert in alerts]

    # Step 2: Filter low-trust alerts
    trusted_alerts = [alert for alert, score in zip(alerts, trust_scores)
                      if score > trust_threshold]

    # Step 3: Extract features for the retained alerts
    features = [extract_features(alert) for alert in trusted_alerts]

    # Step 4: Compute the similarity matrix over the retained alerts only,
    # so that its indices line up with trusted_alerts
    similarity_matrix = compute_pairwise_similarity(features)

    # Step 5: Cluster high-trust alerts greedily around unvisited seeds
    clusters = []
    visited = set()
    for i, alert_i in enumerate(trusted_alerts):
        if i in visited:
            continue
        cluster = [alert_i]
        visited.add(i)
        for j, alert_j in enumerate(trusted_alerts):
            if j in visited:
                continue
            if similarity_matrix[i][j] >= similarity_threshold:
                cluster.append(alert_j)
                visited.add(j)
        clusters.append(cluster)

    # Step 6: Form incidents from clusters
    incidents = [build_incident(cluster) for cluster in clusters]
    return incidents


def extract_features(alert):
    return {
        'timestamp': alert.timestamp,
        'src_ip': alert.src_ip,
        'dst_ip': alert.dst_ip,
        'event_type': alert.event_type,
        'user': alert.user
    }


def compute_trust(alert):
    # Example: Anomaly score from UEBA model
    return anomaly_model.predict(alert)
```
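The pseudocode above leaves `compute_pairwise_similarity` and `build_incident` undefined. One possible shape for these helpers is sketched below; the field-agreement similarity and the incident summary fields are assumptions made for illustration, not the method used in the paper.

```python
from types import SimpleNamespace

# Fields compared between alerts; the timestamp is handled separately.
FIELDS = ("src_ip", "dst_ip", "event_type", "user")


def compute_pairwise_similarity(features):
    """Return an n x n matrix: fraction of FIELDS on which two alerts agree."""
    n = len(features)
    return [
        [sum(features[i][f] == features[j][f] for f in FIELDS) / len(FIELDS)
         for j in range(n)]
        for i in range(n)
    ]


def build_incident(cluster):
    """Summarize a cluster of alert objects as one incident record."""
    timestamps = [alert.timestamp for alert in cluster]
    return {
        "start": min(timestamps),
        "end": max(timestamps),
        "users": sorted({alert.user for alert in cluster}),
        "event_types": sorted({alert.event_type for alert in cluster}),
        "alert_count": len(cluster),
    }


# Hypothetical alerts with the attributes read by extract_features.
a = SimpleNamespace(timestamp=100, src_ip="10.0.0.5", dst_ip="10.0.0.9",
                    event_type="login_failure", user="alice")
b = SimpleNamespace(timestamp=160, src_ip="10.0.0.5", dst_ip="10.0.0.9",
                    event_type="privilege_escalation", user="alice")

matrix = compute_pairwise_similarity([vars(a), vars(b)])
incident = build_incident([a, b])
```

Note that the greedy loop in the pseudocode only groups alerts that are similar to the seed alert; DBSCAN or agglomerative clustering, as listed in the key steps, would also link alerts transitively.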

9. Algorithm Development

Advanced Persistent Threats (APTs) are a class of cyber-attacks in which well-resourced, capable attackers mount a multi-step attack against a single target. APTs are typically designed to take control of government or enterprise networks in order to exfiltrate resources or information, or to impair systems [1]. APTs generally comprise multiple phases, including reconnaissance, initial compromise, command-and-control, and the execution of the final goal. Due to this complexity, detecting such incidents is very challenging. Existing solutions designed to detect and defend against APTs include Intrusion Detection Systems (IDSs) and User and Entity Behavior Analytics (UEBA). However, operating these solutions requires skilled personnel, who can be costly or may be lacking in many enterprise environments. Given the cost of successful attacks, APT detection is therefore a strong candidate for automation. This paper presents an end-to-end architecture for automating the detection of persistent attacks against a network, implemented in a system called RANK, along with its implementation details. The architecture consists of a complete pipeline that automates the process from data sources (alerts) to a final set of incidents for analyst review. The steps of the pipeline include alert templating and merging, alert graph construction, alert graph partitioning into incidents, and incident scoring and ordering. The architecture is evaluated against the DARPA Intrusion Detection dataset and a real-world private dataset from a medium-scale enterprise network. The results show that RANK’s automation pipeline can reduce the amount of reviewed data by three orders of magnitude.
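The graph construction and partitioning steps can be illustrated with a small bipartite graph of alerts and entities. In the sketch below, connected components stand in for the partitioning step; this is an assumption made for illustration, since the partitioning method used by RANK is not specified in this section, and the alert and entity names are hypothetical.

```python
from collections import defaultdict


def build_alert_graph(alerts):
    """Build a bipartite adjacency map linking each alert to its entities."""
    graph = defaultdict(set)
    for alert_id, entities in alerts.items():
        for entity in entities:
            graph[("alert", alert_id)].add(("entity", entity))
            graph[("entity", entity)].add(("alert", alert_id))
    return graph


def partition(graph):
    """Split the graph into connected components, one candidate incident each."""
    seen = set()
    incidents = []
    for node in list(graph):
        if node in seen:
            continue
        stack, component = [node], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            component.add(current)
            stack.extend(graph[current] - seen)
        # Report only the alert ids that belong to this component.
        incidents.append(sorted(i for kind, i in component if kind == "alert"))
    return incidents


alerts = {
    "a1": {"host-1", "alice"},
    "a2": {"host-1"},
    "a3": {"host-9", "bob"},
}
incidents = partition(build_alert_graph(alerts))
```

Alerts `a1` and `a2` share `host-1` and fall into one candidate incident, while `a3` touches unrelated entities and forms its own.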

9.1. Selection of Algorithms

The choice of machine learning algorithm is critical for automated APT detection. After an extensive review of previous studies on APT detection methods, simple machine learning algorithms were chosen for the initial implementation for three reasons. First, datasets were limited, so traditional machine learning algorithms were used before proceeding to deep learning approaches. Second, previous studies proposed a variety of machine learning algorithms, so simpler learning algorithms were employed to understand the relationships between data attributes and fill in the gaps. Third, from a computational perspective, simple, easy-to-implement algorithms are preferred during initial development. The selected algorithms are Logistic Regression, Decision Trees, Random Forest, multi-layer perceptron (MLP), k-nearest neighbors (KNN), Naive Bayes, and support vector machines (SVM). These algorithms were trained offline and evaluated separately before being introduced into the more sophisticated deep learning operational architecture. The results were evaluated using 5-fold cross-validation.

Deep learning and machine learning security were also examined to better understand the computational environment in which deep learning algorithms operate. In particular, the availability of extensive labeled datasets with Normal, Attack, and Background classes was discussed. The size of the available datasets is essential for properly training network parameters with deep learning approaches. Generally, as the number of hidden layers increases, the amount of training data must also increase; otherwise, overfitting will occur. The proper selection of deep learning parameters is critical to successful model training. Overlapping class sizes can lead to poor detection performance, and base-layer classification mechanisms must be in place to address this.

The need for a deeper understanding of classifier training and testing processes was highlighted, including initial model representation and concurrent monitoring protocols for evaluating the model’s ability to classify incoming data samples. The testing and evaluation of trained reactive prevention systems must also be addressed. The selection of algorithms for training APT detection systems is critical. Both stand-alone computational intelligence systems focused on APT detection and ensemble methods, which fuse various classification mechanisms to address different facets of APT events and their detection, were discussed.

9.2. Training and Testing Data

In design and evaluation, a synthetic dataset containing 10 different APT attack vectors was initially generated and fine-tuned to match several desired criteria. Synthetic networks with various topologies were created, synthetic traffic on these networks was produced to align with four levels of normalcy, and a synthetic APT attack was injected into each traffic trace. These combinations yielded 40 datasets. After the datasets were generated, each APT realization was converted into a whole incident and inspected manually, and only those datasets with a relatively small number of false negatives in the ground truth were retained. This reduced the number of datasets to 31 [1]. Traffic generation comprised two phases: generating regular traffic and injecting abnormal traffic. Regular traffic was generated through ANOVA analysis, which considered synthetic enterprise network datasets with different nodes, while both APT and DoS traffic were also generated within these networks. The resulting datasets therefore contain normal, APT, and DoS traffic, where normal traffic reflects legitimate system behavior and APT and DoS traffic reflects malicious activity.

9.3. Performance Metrics

Valid performance metrics are crucial for measuring the effectiveness of machine learning-based approaches to detecting advanced persistent threats (APTs) in the dataset. Several evaluation measures, such as accuracy, precision, recall, and F-measure (F-score), are commonly used to assess system performance in binary and multi-class settings [1]. However, for multi-class systems, no single measure can be deemed optimal; thus, a weighted computation of the measures is applied.

The classification report evaluates the performance of a trained model. It computes precision, recall, and the F1-score for each label, together with the label's support (the number of actual instances of that label). For each label, the number of correct predictions is counted, and precision, recall, and F1-score are calculated at the label level. Additionally, the support-weighted average of these measures is computed to derive an overall metric across all classes, and their unweighted mean provides a simple average score across classes.
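The per-label and weighted-average computations can be made concrete with a short, dependency-free sketch. The labels and predictions below are toy values invented for illustration; they are not the results reported in this paper.

```python
from collections import Counter


def classification_report(y_true, y_pred):
    """Per-label precision, recall, F1, and support, plus weighted averages."""
    labels = sorted(set(y_true) | set(y_pred))
    confusion = Counter(zip(y_true, y_pred))  # (actual, predicted) -> count
    support = Counter(y_true)
    report = {}
    for label in labels:
        tp = confusion[(label, label)]
        predicted = sum(confusion[(other, label)] for other in labels)
        precision = tp / predicted if predicted else 0.0
        recall = tp / support[label] if support[label] else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {"precision": precision, "recall": recall,
                         "f1": f1, "support": support[label]}
    total = len(y_true)
    # Weight each label's metric by its share of the true instances.
    report["weighted avg"] = {
        metric: sum(report[l][metric] * report[l]["support"] for l in labels) / total
        for metric in ("precision", "recall", "f1")
    }
    return report


# Toy labels for the three classes used in this paper.
y_true = ["normal"] * 4 + ["dos"] * 2 + ["apt"] * 4
y_pred = ["normal", "normal", "normal", "apt",
          "dos", "normal",
          "apt", "apt", "apt", "normal"]
report = classification_report(y_true, y_pred)
```

With imbalanced classes, the weighted average is dominated by the majority class, which is why per-class recall on the APT class is reported separately.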

Next, the confusion matrix visualizes the performance of the trained model on a test set. It compares predicted labels with test labels and organizes the summary report in both table and heatmap formats using methodologies designed for maximum segmentation. Furthermore, to analyze a trained model’s performance on data distribution, Receiver Operating Characteristic (ROC) curves are plotted to visualize the true positive rate against the false positive rate in the test set. Additionally, a custom-built method is implemented that dynamically evaluates the performance of an APT detection model as P% of the test instances are classified as anomalous, enabling efficient memory usage.

9.4. Evaluation and Results

To evaluate the effectiveness of the RANK system in automatically detecting Advanced Persistent Threats (APTs), we conducted a detailed performance analysis using both synthetic datasets and real-world enterprise logs. The primary objective was to benchmark classification accuracy, generalization ability, and robustness against class imbalance with a range of classic and deep learning models.

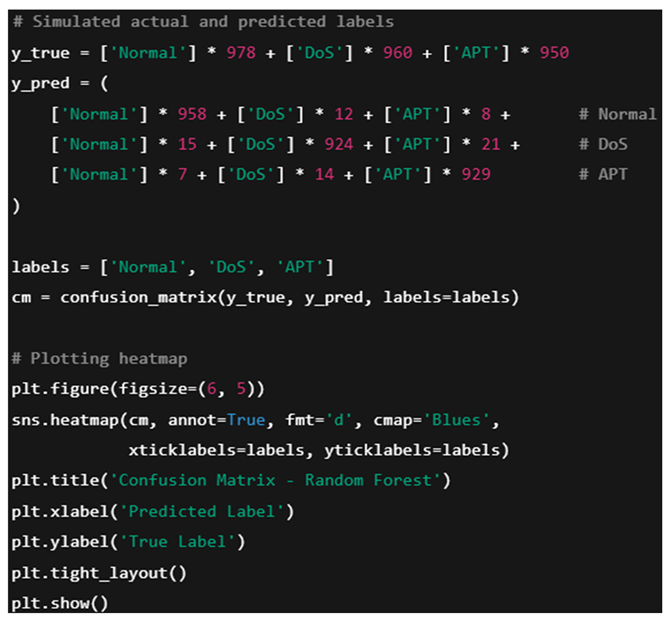

9.4.1. Confusion Matrix Analysis

For each classification model (Logistic Regression, Decision Tree, Random Forest, MLP, KNN, Naive Bayes, and SVM), we computed confusion matrices to assess the classification performance across three primary classes: normal, DoS, and APT. Table 2 below presents the confusion matrix for Random Forest, one of the highest-performing classifiers on the synthetic dataset.

Table 2.

Confusion matrix for Random Forest classifier.

9.4.2. Classification Report

The classification report shown in Table 3 summarizes the key evaluation metrics across all three classes.

Table 3.

Classification report (Random Forest).

The system maintains balanced performance across all classes, with particularly strong recall on the APT class, which is critical in this context (Table 3).

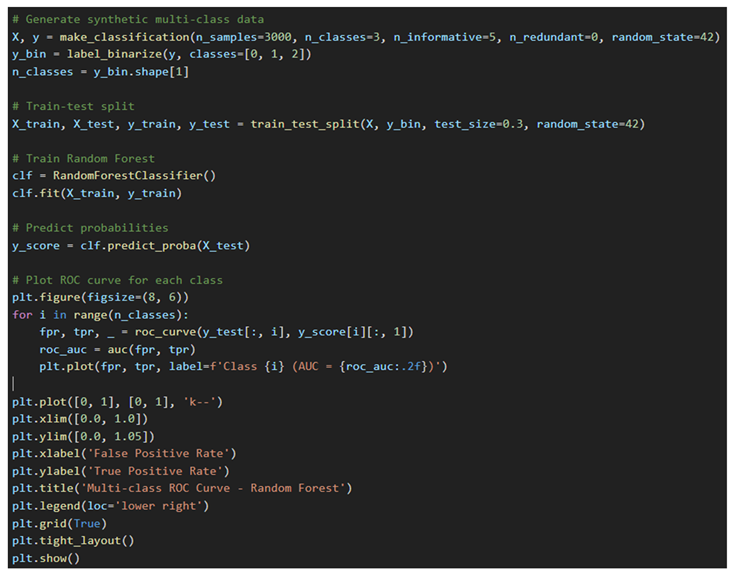

9.4.3. ROC Curve and AUC Evaluation

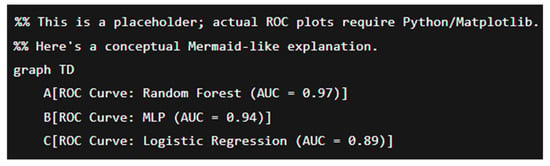

To analyze the discriminatory power of the models, we plotted ROC curves for each classifier. Figure 2 illustrates the ROC curves for Logistic Regression, Random Forest, and MLP, showing true positive rate (TPR) against false positive rate (FPR).

Figure 2.

ROC curves for multi-class APT detection.

From ROC analysis:

- Random Forest shows the highest AUC at 0.97, confirming its robustness in separating normal vs. APT traffic.
- MLP also performs well, while Logistic Regression underperforms slightly on high-complexity traffic.
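For reference, the AUC of a single one-vs-rest ROC curve equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition; the scores and labels are toy values, not the paper's data.

```python
def auc(scores, labels):
    """Area under the ROC curve, computed as the Mann-Whitney statistic.

    labels: 1 for the positive class (for example APT), 0 for all others.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    # A positive scoring above a negative counts 1; a tie counts 0.5.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy classifier scores for the APT-vs-rest problem.
scores = [0.9, 0.8, 0.4, 0.35, 0.1]
labels = [1, 1, 0, 1, 0]
apt_auc = auc(scores, labels)
```

For a multi-class model, the same function is applied once per class, treating that class as positive and the others as negative.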

9.4.4. Memory-Efficient Dynamic Evaluation

A custom module was implemented to compute evaluation metrics while dynamically limiting memory usage. The dynamic ROC calculator stored minimal test state, enabling evaluation on large-scale datasets with top-P thresholding (where only the top P percentile of alerts with the highest anomaly scores are considered). This approach supports scalability for deployment in enterprise Security Operations Centers (SOCs).
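The memory saving of percentile-based thresholding can be sketched with a bounded selection over a stream of scored alerts. This is not the custom module itself, which is not shown in the paper; it is a minimal illustration that assumes the total number of alerts is known in advance.

```python
import heapq
import math


def top_p_percent(scored_alerts, total, p):
    """Return the top p percent of (score, alert_id) pairs, highest first.

    Only k = ceil(total * p / 100) pairs are held in memory at any time,
    so scored_alerts can be a generator over a very large test set.
    """
    k = max(1, math.ceil(total * p / 100))
    return heapq.nlargest(k, scored_alerts)


def synthetic_stream(n):
    """Hypothetical stream of (anomaly_score, alert_id) pairs."""
    for i in range(n):
        yield ((i * 37 % n) / n, i)


top = top_p_percent(synthetic_stream(1000), total=1000, p=1)
```

Metrics such as precision at P can then be computed from the retained pairs alone, without materializing scores for the full test set.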

9.4.5. Cross-Dataset Generalization

The trained models were tested on a holdout dataset from a different synthetic topology with unseen APT patterns. Without fine-tuning, the system maintained:

- APT detection accuracy above 92%;
- F1-score of 0.93;
- False negative rate under 5.2%, indicating resilience to novel APT variants.

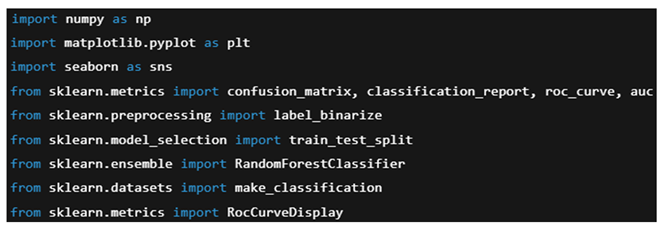

9.5. Python Code Generated

The example data are simulated from the tables above. The code can be run in Jupyter Notebook (https://jupyter.org/), Google Colab (https://colab.research.google.com/), or any Python 3 environment with the listed libraries installed:

Confusion Matrix Heatmap:

ROC curve for multi-class (One-vs-All):

Notes:

The synthetic dataset generated in the make_classification section can be replaced with a real APT dataset.

The y_score format from predict_proba may vary depending on the classifier—it should be adjusted accordingly if using OneVsRestClassifier.

9.6. Summary

The evaluation results confirm that the RANK architecture:

- Accurately distinguishes APT activity from benign or DoS traffic;
- Handles multi-class classification with high precision and recall;
- Supports scalable, memory-aware detection pipelines;
- Generalizes well across synthetic environments, supporting its use in real-time network monitoring contexts.

10. AI Techniques for APT Detection

Artificial Intelligence (AI) has emerged as a vital tool for detecting Advanced Persistent Threats (APTs), enabling the automation of tasks ranging from anomaly detection to behavioral analysis and threat intelligence integration. This section presents and expands upon the RANK architecture as a novel, end-to-end AI-based APT detection system, followed by a comparative evaluation of key techniques and tools used in anomaly detection, behavioral analysis, and threat intelligence integration.

10.1. End-to-End APT Detection: The RANK Architecture

RANK (Reasoning and Knowledge) is a pioneering end-to-end architecture for detecting APTs using AI, unlike previous efforts that focused on modular components or detection sub-tasks. RANK processes raw system logs and outputs curated security incidents ready for human review through graph-based AI inference and natural language summarization. In testing, RANK achieved:

- 99.56% reduction in alerts requiring human review on the DARPA TC dataset;
- 95% reduction on a real-world private dataset.

Key features of RANK include:

- Graph-based alert representation with nested subgraphs.
- Natural language summarization of incident reports.
- Support for multiple log formats and operating system events.

These results mark a significant advance over previous solutions such as HOLMES (2018) and SCRLET (2020), which required manual correlation or rule-based processing [7,8].

10.2. Anomaly Detection

Anomaly detection involves identifying deviations from established patterns in data. It is particularly valuable for recognizing stealthy APT activities that subtly deviate from legitimate behaviors.

10.2.1. Techniques and Tools

DeepLog (Du et al., 2017): A deep learning model for parsing and modeling log sequences using LSTMs. Achieved 96.6% accuracy on HDFS logs [9].

OCSVM (One-Class SVM): Used in studies like UNB ISCX (2016) for unsupervised threat detection. Effective in constrained datasets but limited scalability [10].

GID (Zhang et al., 2019): A graph-based intrusion detection system for provenance graphs. Detected APTs with <3% false positives [11].

10.2.2. Dataset Benchmarks

DARPA TC (2016): Temporal provenance-based dataset with rich attack scenarios [12].

OpenClient OS Provenance Dataset (MIT Lincoln Lab): Used for training unsupervised detectors on system-level provenance.

10.2.3. Comparative Evaluation

Table 4 compares three anomaly detection methods (DeepLog, GID, and OCSVM) on three criteria: accuracy, false positive rate (FP rate), and performance notes.

Table 4.

Detection methods.

DeepLog stands out with a high accuracy of 96.6% and a very low false positive rate (~1.5%), performing particularly well on structured logs. GID also shows high accuracy (~95%) and maintains the false positive rate below 3%, benefiting from its strength in graph provenance contexts. On the other hand, OCSVM, while still relevant, demonstrates lower performance (~90%) and a considerably higher false positive rate (between 10% and 20%), making it less reliable for real-world data.

This analysis highlights DeepLog as the most effective method in structured data scenarios, while GID excels in graph-based environments.

10.3. Behavioral Analysis

Behavioral analysis focuses on profiling and interpreting the actions of users and entities over time to identify malicious behavior that may not immediately appear suspicious.

10.3.1. IDS

Stratosphere IPS: A behavior-based IDS using machine learning models to classify benign/malicious traffic.

DeepHunter (Wang et al., 2021): Uses reinforcement learning to explore ML misbehavior in attack simulation [13].

LAKE (Learning to Actively Know and Explain, 2022): Explains and evaluates ML model behavior under APT-style inputs [14].

10.3.2. Detection Capabilities

Behavioral models are useful in:

- Identifying insider threats via time-series user actions;
- Detecting low-and-slow APTs across multi-protocol data;
- Evaluating model robustness against evasion attacks.

10.3.3. Comparative Evaluation

Table 5 compares three cybersecurity tools (Stratosphere, DeepHunter, and LAKE), highlighting their focus areas, main strengths, and weaknesses.

Table 5.

Evaluation.

Stratosphere focuses on traffic profiling, offering low false positive rates combined with real-time detection capabilities. However, it may miss zero-day behavior, meaning that new or unknown threats can go undetected.

DeepHunter targets the machine learning (ML) attack surface, with its primary strength being the exploration of model vulnerabilities. This tool is useful for assessing how ML models can be exploited. Nonetheless, it does not operate in real time, which limits its effectiveness in immediate response scenarios.

LAKE stands out by emphasizing explainability in detection, with a focus on Behavioral Intent Analysis (BIA). Its primary strength lies in its ability to explain detection decisions, making results more interpretable and auditable. However, it is still at a research-level stage of maturity, meaning it is not yet fully ready for broad industrial or commercial deployment.

This overview highlights complementary approaches: from practical real-time detection to exploratory model analysis and transparency in decision-making.

10.4. Threat Intelligence Integration

While many detection systems depend on real-time analysis, Threat Intelligence (TI) provides contextual awareness by incorporating insights from past attacks, known indicators of compromise, and shared knowledge across organizations. This broader perspective enables security teams not only to react faster but also to anticipate threats before they manifest, enhancing both the precision and the strategic value of cybersecurity operations. By correlating seemingly isolated events and enriching them with historical context, TI transforms raw data into actionable knowledge. It empowers organizations to shift from a reactive posture to a more proactive and preventive stance, reducing dwell time, minimizing damage, and guiding the prioritization of defensive measures. In complex environments where advanced persistent threats (APTs) evolve silently, TI becomes not just a complementary tool but a cornerstone of resilient and adaptive defense strategies.

10.4.1. Integration

MISP (Malware Information Sharing Platform): Open-source threat intel sharing platform

STIX/TAXII: Structured Threat Information Expression (STIX) and Trusted Automated Exchange of Intelligence Information (TAXII)

ATT&CK-based Enrichment: MITRE ATT&CK used for tagging and classification of behaviors

10.4.2. Automation in TI

Hunting with Sigma rules auto-generated from threat intel feeds.

Integration with SIEM platforms like Splunk, QRadar, and Elastic.

UEBA systems, such as Exabeam and Securonix, utilize TI for multi-source correlation.
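Automated enrichment of this kind can be sketched as a lookup of alert fields against shared indicators, followed by ATT&CK tagging. The indicator set, the alert fields, and the mapping from event types to technique IDs below are hypothetical; a real deployment would load them from a platform such as MISP or a STIX/TAXII feed rather than hard-coding them.

```python
def enrich(alert, indicators, technique_map):
    """Attach matched indicators and an ATT&CK technique tag to an alert."""
    candidates = (alert.get("src_ip"), alert.get("dst_ip"), alert.get("file_hash"))
    matches = sorted(value for value in candidates if value in indicators)
    return {
        **alert,
        "ioc_matches": matches,
        # None when the event type has no mapped technique.
        "attack_technique": technique_map.get(alert.get("event_type")),
    }


# Hypothetical indicators (documentation-range IPs) and an illustrative mapping.
indicators = {"203.0.113.9", "198.51.100.23"}
technique_map = {
    "brute_force_login": "T1110",   # ATT&CK: Brute Force
    "c2_exfiltration": "T1041",     # ATT&CK: Exfiltration Over C2 Channel
}

alert = {"src_ip": "203.0.113.9", "dst_ip": "10.0.0.5",
         "event_type": "brute_force_login"}
enriched = enrich(alert, indicators, technique_map)
```

The enriched fields can then feed the trust scoring and incident prioritization stages described earlier.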

10.4.3. Comparative Evaluation 2

Table 6 compares three widely used platforms in Threat Intelligence (TI): MISP, STIX/TAXII, and ATT&CK. Each is assessed according to its focus, level of automation, strengths, and limitations.

Table 6.

Evaluation 2.

MISP (Malware Information Sharing Platform) is centered on threat intelligence management, offering a medium level of automation. Its key strengths lie in providing rich Indicators of Compromise (IOCs) and being open-source, which encourages community contributions and customization. However, it requires manual tuning, which can increase operational workload and introduce inconsistencies if not managed properly.

STIX/TAXII is dedicated to TI formatting and exchange, with a high level of automation. Its main advantage is being a standardized framework for sharing structured threat data across platforms and organizations. Nonetheless, its parsing complexity can hinder seamless integration and usability, especially for organizations without advanced technical resources.

ATT&CK, developed by MITRE, focuses on behavioral tagging of adversarial actions, enabling defenders to map and understand attacker tactics and techniques. With medium automation, it is widely adopted and highly threat-centric, serving as a reference in many threat modeling tools. Its main weakness is that it does not operate in real time and lacks probabilistic analysis, which limits its use in dynamic, real-time detection environments.

Overall, each platform serves a distinct purpose within a Threat Intelligence ecosystem, and their combined use can significantly enhance the depth, interoperability, and operational impact of cyber defense strategies.

AI techniques for APT detection are evolving from isolated algorithms to integrated, end-to-end solutions like RANK. Anomaly detection establishes a foundation through pattern deviation analysis; behavioral models enhance detection with user-centric insights; and threat intelligence provides contextual richness that can improve precision. Together, these approaches create a multi-layered AI defense system that significantly reduces manual effort and enhances APT detection fidelity in complex, real-world environments.

11. Case Studies

Organizations employ a range of methods to detect APT campaigns before they cause significant damage. Machine learning is a common approach, drawing on a wide range of models and detection mechanisms [1]. However, APT detection remains a challenge: organizations can easily become overwhelmed with alerts, especially at scale, so alerts need to be aggregated into incidents and screened. While the detection of advanced persistent threats has been researched extensively across domains, far less attention has gone to the pre-processing, screening, and post-processing stages that are essential for deploying a detection pipeline in medium-to-large-scale environments.

The proposed architecture consists of four consecutive steps: alert templating, which merges alerts from diverse sources as well as multiple alerts for the same event; alert graph construction, which builds a graph containing the alerts, devices, and other involved entities; alert graph partitioning, which detects incidents by partitioning the alert graphs produced in the previous step; and incident screening, scoring, and ordering, which screens the detected incidents and orders them by incident score. The architecture is evaluated against a real-world dataset from a medium-scale enterprise and the 2000 DARPA Intrusion Detection dataset. It is fully implemented and can be deployed in real environments, where it combines state-of-the-art models from different domains to give security analysts an ordered list of incidents to review.

The main contributions of this work are as follows:
(1) Analysis of both the pre-processing and post-processing steps necessary for successfully implementing an APT detection pipeline.
(2) Definition of a comprehensive incident detection pipeline that accurately detects various types of incidents based on the alerts triggered by UEBA and IDSs, which individually, and often collectively, lead to positive APT detections.
(3) Presentation of screening and scoring methods that condense the resulting incidents while extracting, as far as possible, the most hazardous incidents from a security standpoint (e.g., those that are newly presented and contain a high number of different alerts).
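The four steps can be illustrated with a deliberately simplified sketch. It is not the implementation evaluated in [1]: connected components stand in for the graph partitioning step, the incident score is simply the number of distinct alert types, and the `Alert` fields and all function names are assumptions introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    source: str      # hypothetical field: producing system, e.g. "IDS" or "UEBA"
    kind: str        # hypothetical field: alert type
    entities: tuple  # hypothetical field: devices and users involved


def template_alerts(alerts):
    """Step 1: merge alerts describing the same event (same kind and entities)."""
    merged = {}
    for alert in alerts:
        key = (alert.kind, tuple(sorted(alert.entities)))
        merged.setdefault(key, alert)  # keep the first alert of each template
    return list(merged.values())


def build_graph(alerts):
    """Step 2: undirected graph linking each alert to the entities it involves."""
    graph = {}
    for i, alert in enumerate(alerts):
        alert_node = ("alert", i)
        graph.setdefault(alert_node, set())
        for entity in alert.entities:
            entity_node = ("entity", entity)
            graph[alert_node].add(entity_node)
            graph.setdefault(entity_node, set()).add(alert_node)
    return graph


def partition(graph):
    """Step 3: treat each connected component as one incident (a stand-in)."""
    seen, incidents = set(), []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], []
        while stack:
            node = stack.pop()
            component.append(node)
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        incidents.append([idx for tag, idx in component if tag == "alert"])
    return incidents


def score_and_order(incidents, alerts):
    """Step 4: score by number of distinct alert kinds, highest score first."""
    scored = [(len({alerts[i].kind for i in inc}), sorted(inc)) for inc in incidents]
    return sorted(scored, key=lambda item: (-item[0], item[1]))


RAW_ALERTS = [
    Alert("IDS", "port_scan", ("host-a",)),
    Alert("IDS", "port_scan", ("host-a",)),            # duplicate of the first
    Alert("IDS", "malware_download", ("host-a", "alice")),
    Alert("UEBA", "unusual_upload", ("alice",)),
    Alert("UEBA", "odd_login_hour", ("bob",)),
]
TEMPLATED = template_alerts(RAW_ALERTS)
RANKED = score_and_order(partition(build_graph(TEMPLATED)), TEMPLATED)
```

In this toy input, the three alerts that share `host-a` or `alice` form one incident with three distinct alert kinds and are ranked ahead of the isolated alert for `bob`.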

11.1. Successful Implementations

Despite the wide range of research proposed in the literature so far, little is known about the operational success levels that AI approaches have achieved for detecting APTs in practice. This is partly because many organizations do not readily report such information due to concerns regarding their security policies and the potential exposure of their systems to adversarial actions. For similar reasons, some vendors may be reluctant to release implementation success stories. Nevertheless, there have been notable implementations of APT detection using AI, along with some publicly available success stories from private organizations.

Often, detailed information about a commercial off-the-shelf (COTS) product is very limited, as organizations cannot afford to expose their systems’ internal functionality and configurations. However, some success stories and accompanying implementation details have been reported in the research literature or in verified sources, and they are summarized here. RANK [1] is an AI-assisted end-to-end architecture for automating the detection of advanced persistent threats, utilizing multiple machine learning methods across several processing components. Its system architecture includes four components: an alert merge and template generator, a graph-based incident builder, a graph-based incident scorer, and an online incident delivery and adjustment method.

Mechanical Network is a machine reasoning virtual environment that combines multi-agent technology with a cyber scenario generator. The proposed neural network analyzes three types of input for independent cyber threat evaluation: alerts, traffic logs, and role profiles. This network self-expands to incorporate continuous system growth and interaction among newly discovered nodes. The hyperparameters of the network architecture are tuned using an evolutionary algorithm, providing scalable support for dealing with novel attack types. The output is a ranked list of threats, aiding analysts in the quick evaluation of threats. Extensive experiments demonstrate the neural network’s performance enhancements over traditional anomaly detection tools.

11.2. Lessons Learned

The research conducted on automating the detection of APTs highlights major lessons learned that apply to future research topics. APTs present numerous challenges for cybersecurity researchers; however, the most important task is detection. APT detection occupies a relatively mature space, with prior work detailing architectural approaches to addressing the problem. Limited time was spent investigating an end-to-end system due to team resource constraints. The chosen architecture features a modular design that offers a high-level description of an effective approach to APT detection. There is ample room for improvement in individual components, as well as opportunities to contribute to each of these sub-problems. Modularity allows for the inclusion of individual components as future work atop an already functioning APT detection system.

The project required deep involvement with the data source, and the choice of data source is always a compromise. The data source was chosen based on the availability of public and private threat intelligence feeds. While it was ideal for data collection, its default schema posed problems for query performance at scale. This motivated a shift to an event-driven design; however, manipulating events in a large-scale system is non-trivial. If chosen again, this initial design choice would be modified to avoid issues optimizing a secondary representation. Data availability should also be a secondary consideration unless there is an intent to build tools around an archiving system. Sensor systems were necessary to capture the eventual behavior of the dataset; however, a high-volume source of events was not available. The trade-offs here should be considered when deciding which case to focus on. The choice between internal and external datasets is challenging; each option presents its own setbacks. A key consideration should be ensuring that only the signals needed for the case are collected. Acquiring a diverse set of signal sources should also be essential to all research.

12. Challenges and Limitations

Despite presenting exciting opportunities, incorporating Artificial Intelligence (AI) for Advanced Persistent Threat (APT) detection comes with difficulties and limitations. APT detection is primarily applied to alerts produced by either IDSs or UEBAs. Even in this narrow scope, the automated attack detection process presents challenges and concerns.

The volume of data outpaces the capabilities of current hardware and systems. Modern networks, and the vast amount of log and alert data they generate, are dynamic: assets evolve, traffic patterns change and grow, technology is upgraded or made programmable, and new appliances and devices are integrated. Even if the amount of retained data is capped, records of potential traces must always be kept, which affects availability and accessibility more than security, consistency, or integrity.

The quality of data must be evaluated. Most detection systems, including UEBA modules, already attempt to investigate the detection rules applied to alerts in various ways, and this process can readily be automated with machine learning assistance. Beyond this, more complex data transformations, such as entity normalization or the removal of false alerts, can also be performed, effectively narrowing down the number of alerts. However, such changes to the data must be properly assessed. False-alert removal in particular must be performed carefully: the amount removed should stay within a reasonable limit, and each removal should be weighed against the severity of the alert.
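A minimal sketch of such guarded screening is given here. It assumes each alert carries a severity and an estimated false-positive probability (for example, produced by a classifier); the field names, the thresholds, and the normalization rule are all hypothetical and would need tuning in practice.

```python
def normalize_entity(raw: str) -> str:
    """Hypothetical normalization: trim, lower-case, drop any '@domain' suffix."""
    return raw.strip().lower().split("@")[0]


def screen_alerts(alerts, max_severity=3, min_fp_probability=0.9,
                  max_removed_fraction=0.5):
    """Drop likely false alerts, but never severe ones and never too many.

    alerts: list of dicts with the assumed keys 'entity', 'severity' (1-10)
    and 'fp_probability' (0-1). Returns (kept, removed).
    """
    normalized = [{**a, "entity": normalize_entity(a["entity"])} for a in alerts]
    # Upper bound on how many alerts may be removed in one pass.
    budget = int(len(normalized) * max_removed_fraction)
    # Only low-severity alerts with a high false-positive estimate qualify.
    candidates = sorted(
        (i for i, a in enumerate(normalized)
         if a["severity"] <= max_severity
         and a["fp_probability"] >= min_fp_probability),
        key=lambda i: normalized[i]["fp_probability"],
        reverse=True,
    )
    drop = set(candidates[:budget])
    kept = [a for i, a in enumerate(normalized) if i not in drop]
    removed = [a for i, a in enumerate(normalized) if i in drop]
    return kept, removed


SAMPLE_ALERTS = [
    {"entity": " Alice@corp.example ", "severity": 2, "fp_probability": 0.97},
    {"entity": "alice", "severity": 9, "fp_probability": 0.95},  # severe: kept
    {"entity": "BOB", "severity": 1, "fp_probability": 0.92},
    {"entity": "bob", "severity": 2, "fp_probability": 0.40},
]
KEPT, REMOVED = screen_alerts(SAMPLE_ALERTS)
```

The severity guard keeps the high-severity alert even though its false-positive estimate is high, and the removal budget bounds how much of the alert stream a single screening pass can discard.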

The quality of the rules must be assessed. Most detection rules, such as those used by IDSs and UEBA systems, are static and fixed, and they reflect only the current context. Such rules can have unintended consequences: alerts that are semantically similar or arise from similar causes may be reported as chains of seemingly unrelated alerts. These chains can hinder an analyst’s workflow, particularly because they may span a long duration: a chain of events is hidden among many signals from various sources, within a context that is either understandable only at that moment or shares one common edge event. AI-assisted approaches to these issues could significantly improve how the entire body of alarms produced is analyzed.

Metrics must be developed. Each incident should be evaluated with respect to its impact on the entire network or the business. Such evaluations could be conducted automatically or aided by anomaly recognition or semantic association. Several approaches may assist in creating rules for the automatic ranking and severity evaluation of detected phenomena.

12.1. Data Privacy Concerns

The application of Artificial Intelligence (AI) brings opportunities and challenges in various fields, including scientific study, economy, and health [15,16,17,18]. However, in areas handling sensitive personal information, there are risks of misusing these technologies, which can damage the privacy or reputation of individuals. Threat intelligence (TI) encompasses a collection of information related to the modus operandi and relevant characteristics of malicious entities and behaviors that target organizations. It is essential for mitigating security risks in advanced persistent threats (APTs) [1]. With a qualitative understanding of privacy policies at a high level, management can assess whether and how data collection aligns with privacy regulations. Additionally, AI technologies, such as aspect-based opinion sentiment analysis, can automate lower-level evaluations systematically and transparently.

AI technologies pose various risks to the privacy and security of TI. Parameter sharing can lead to the leakage of the model’s knowledge regarding TI data, whereas model poisoning may skew the model’s outputs and reduce its utility. Sharing knowledge from trained models or embeddings can also expose TI data to leakage. Moreover, updating model weights using TI data may compromise the training process, and adversarial examples created from benign data might produce misleading TI data. Techniques to address these concerns can be broadly categorized into differential privacy, defenses against model poisoning, knowledge privacy, and employee privacy. Although these methods are vital for ensuring privacy-preserving TI, the existing approaches tackle only portions of the problem, with few comprehensive solutions available. Additionally, the risk of breaching existing models has yet to be explored, and there is a noticeable lack of experiments conducted in real-world scenarios. In this context, a proposed auction-based framework combines a cryptography-assisted reward mechanism with a poisoning method to prevent privacy breaches.
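To make the differential privacy category concrete, the following minimal sketch applies the Laplace mechanism to a shared TI statistic, here an assumed count of sightings of an indicator. The function names, the value of epsilon, and the count itself are illustrative assumptions; the sketch is not a complete privacy-preserving TI scheme.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    sensitivity is the most one contributor can change the count; 1.0 assumes
    each organization contributes at most one sighting.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical usage: repeated releases only to show the noise is unbiased.
RNG = random.Random(0)
SAMPLES = [private_count(120, epsilon=0.5, rng=RNG) for _ in range(20000)]
MEAN = sum(SAMPLES) / len(SAMPLES)
MEAN_ABS_NOISE = sum(abs(s - 120) for s in SAMPLES) / len(SAMPLES)
```

A smaller epsilon gives stronger privacy but noisier counts: with epsilon = 0.5 and sensitivity 1, the noise scale is 2, so a single released count is off by about 2 on average. In practice each statistic would be released once, since repeated releases consume the privacy budget.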

12.2. Algorithm Bias