Exploring Deep Learning Model Opportunities for Cervical Cancer Screening in Vulnerable Public Health Regions

Abstract

1. Introduction

2. Search Strategy and Selection Criteria

3. Cervical Cancer in Vulnerable Public Health Regions

4. Global Rise of AI

5. Applications of Deep Learning in Cervical Cancer Screening

6. Limitations and Challenges

7. Current Market and Research Directions

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Novais, I.R.; Coelho, C.A.; Machado, H.C.; Surita, F.; Zeferino, L.C.; Vale, D.B. Cervical cancer screening in Brazilian Amazon Indigenous women: Towards the intensification of public policies for prevention. PLoS ONE 2023, 18, e0294956. [Google Scholar] [CrossRef] [PubMed]

- Silveira, R.P.; Pinheiro, R. Entendendo a Necessidade de Médicos no Interior da Amazônia-Brasil. Rev. Bras. Educ. Med. 2014, 38, 451–459. [Google Scholar] [CrossRef]

- Bruni, L.; Serrano, B.; Roura, E.; Alemany, L.; Cowan, M.; Herrero, R.; Poljak, P.F.; Murillo, R.; Broutet, N.; Riley, L.M.; et al. Cervical cancer screening programmes and age-specific coverage estimates for 202 countries and territories worldwide: A review and synthetic analysis. Lancet Glob. Health 2022, 10, e1115–e1127. [Google Scholar] [CrossRef]

- Fatahi Meybodi, N.; Karimi-Zarchi, M.; Allahqoli, L.; Sekhavat, L.; Gitas, G.; Rahmani, A.; Fallahi, A.; Hassanlouei, B.; Alkatout, I. Accuracy of the Triple Test Versus Colposcopy for the Diagnosis of Premalignant and Malignant Cervical Lesions. Asian Pac. J. Cancer Prev. 2020, 21, 3501–3507. [Google Scholar] [CrossRef]

- Instituto Nacional de Câncer (INCA). Estimativa 2023: Incidência de Câncer No Brasil. 2022. Available online: https://www.inca.gov.br/sites/ufu.sti.inca.local/files//media/document//estimativa-2023.pdf (accessed on 3 December 2024).

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249. [Google Scholar] [CrossRef]

- Instituto Nacional de Câncer (INCA). Atlas da Mortalidade. 2022. Available online: https://www.inca.gov.br/app/mortalidade (accessed on 3 December 2024).

- Knaul, F.M.; Rodriguez, N.M.; Arreola-Ornelas, H.; Olson, J.R. Cervical cancer: Lessons learned from neglected tropical diseases. Lancet Glob. Health 2019, 7, e299–e300. [Google Scholar] [CrossRef]

- Gomes, L.C.; Pinto, M.C.; Reis, B.J.; Silva, D.S. Epidemiologia do câncer cervical no Brasil: Uma revisão integrativa. J. Nurs. Health 2022, 12, e2212221749. [Google Scholar]

- Simelela, P.N. WHO global strategy to eliminate cervical cancer as a public health problem: An opportunity to make it a disease of the past. Int. J. Gynecol. Obstet. 2021, 152, 1–3. [Google Scholar] [CrossRef]

- Hodges, A. Alan Turing: The Enigma; Princeton University Press: Princeton, NJ, USA, 2012. [Google Scholar]

- Turing, A.M. Computing Machinery and Intelligence. Mind 1950, 59, 433–460. Available online: https://phil415.pbworks.com/f/TuringComputing.pdf (accessed on 3 December 2024). [CrossRef]

- McCarthy, J.; Minsky, M.; Rochester, N.; Shannon, C. A proposal for the Dartmouth Summer Research Project on Artificial Intelligence. Stanford University. 1955. Available online: https://www-formal.stanford.edu/jmc/history/dartmouth/dartmouth.html (accessed on 3 December 2024).

- Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386–408. [Google Scholar] [CrossRef]

- Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558. [Google Scholar] [CrossRef] [PubMed]

- Rumelhart, D.; Hinton, G.; Williams, R. Learning representations by back-propagating errors. Nature 1986, 323, 533–536. [Google Scholar] [CrossRef]

- Nobel Prize in Physics. Foundational Discoveries in Machine Learning and Artificial Neural Networks. 2024. Available online: https://www.nobelprize.org/prizes/physics/2024/prize-announcement/ (accessed on 3 December 2024).

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2021, arXiv:2010.11929. [Google Scholar] [CrossRef]

- Hiranuma, N.; Park, H.; Baek, M.; Anishchenko, I.; Dauparas, J.; Baker, D. Improved protein structure refinement guided by deep learning based accuracy estimation. Nat. Commun. 2021, 12, 1340. [Google Scholar] [CrossRef]

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly accurate protein structure prediction with AlphaFold. Nature 2021, 596, 583–589. [Google Scholar] [CrossRef]

- Nobel Prize in Chemistry. Computational Protein Design and Protein Structure Prediction. 2024. Available online: https://www.nobelprize.org/prizes/chemistry/2024/press-release/ (accessed on 3 December 2024).

- Menezes, L.J.; Vazquez, L.; Mohan, C.K.; Somboonwit, C. Eliminating Cervical Cancer: A Role for Artificial Intelligence. In Global Virology III: Virology in the 21st Century; Shapshak, P., Balaji, S., Kangueane, P., Chiappelli, F., Somboonwit, C., Menezes, L.J., Sinnott, J.T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 405–422. [Google Scholar] [CrossRef]

- Rajpurkar, P.; Chen, E.; Banerjee, O.; Topol, E.J. AI in health and medicine. Nat. Med. 2022, 28, 31–38. [Google Scholar] [CrossRef]

- Tandon, R.; Agrawal, S.; Rathore, N.P.S.; Mishra, A.K.; Jain, S.K. A systematic review on deep learning-based automated cancer diagnosis models. J. Cell. Mol. Med. 2024, 28, e18144. [Google Scholar] [CrossRef]

- Sarhangi, H.A.; Beigifard, D.; Farmani, E.; Bolhasani, H. Deep learning techniques for cervical cancer diagnosis based on pathology and colposcopy images. Inform. Med. Unlocked 2024, 47, 101503. [Google Scholar] [CrossRef]

- Sha, Y.; Zhang, Q.; Zhai, X.; Hou, M.; Lu, J.; Meng, W.; Wang, Y.; Li, K.; Ma, J. CerviFusionNet: A multi-modal, hybrid CNN-transformer-GRU model for enhanced cervical lesion multi-classification. iScience 2024, 27, 111313. [Google Scholar] [CrossRef] [PubMed]

- Park, Y.R.; Kim, Y.J.; Ju, W.; Nam, K.; Kim, S.; Kim, K.G. Comparison of machine and deep learning for the classification of cervical cancer based on cervicography images. Sci. Rep. 2021, 11, 16143. [Google Scholar] [CrossRef]

- Haug, C.J.; Drazen, J.M. Artificial Intelligence and Machine Learning in Clinical Medicine, 2023. N. Engl. J. Med. 2023, 388, 1201–1208. [Google Scholar] [CrossRef]

- Vargas-Cardona, H.D.; Rodriguez-Lopez, M.; Arrivillaga, M.; Vergara-Sanchez, C.; García-Cifuentes, J.P.; Bermúdez, P.C.; Jaramillo-Botero, A. Artificial intelligence for cervical cancer screening: Scoping review, 2009–2022. Int. J. Gynecol. Obstet. 2024, 165, 566–578. [Google Scholar] [CrossRef]

- FDA (Food and Drug Administration). Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices. 2024. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices (accessed on 3 December 2024).

- Hou, X.; Shen, G.; Zhou, L.; Li, Y.; Wang, T.; Ma, X. Artificial Intelligence in Cervical Cancer Screening and Diagnosis. Front. Oncol. 2022, 12, 851367. [Google Scholar] [CrossRef]

- Bossuyt, P.M.; Reitsma, J.B.; Bruns, D.E.; Gatsonis, C.A.; Glasziou, P.P.; Irwig, L.; Lijmer, J.G.; Moher, D.; Rennie, D.; de Vet, H.C.W.; et al. STARD 2015: An updated list of essential items for reporting diagnostic accuracy studies. BMJ 2015, 351, h5527. [Google Scholar] [CrossRef]

- Liu, X.; Rivera, S.C.; Moher, D.; Calvert, M.J.; Denniston, A.K. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: The CONSORT-AI Extension. BMJ 2020, 370, m3164. [Google Scholar] [CrossRef]

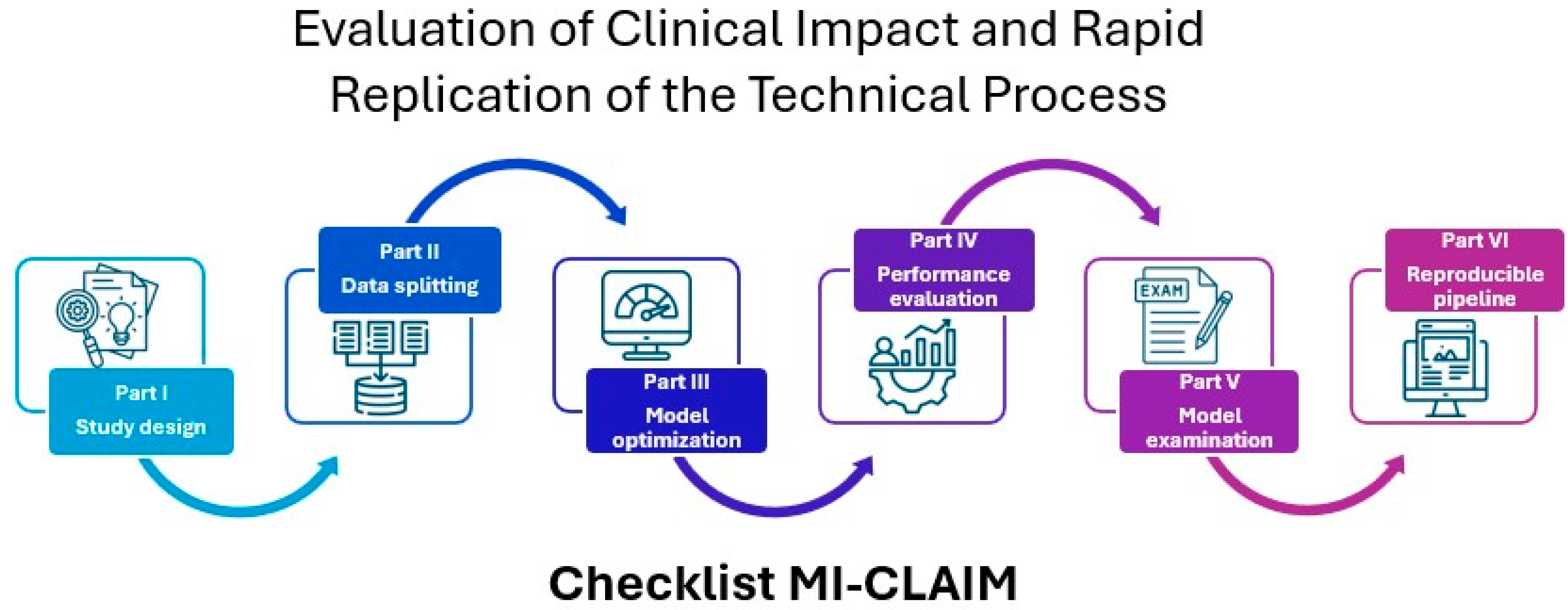

- Norgeot, B.; Quer, G.; Beaulieu-Jones, B.K.; Torkamani, A.; Dias, R.; Gianfrancesco, M.; Arnaout, R.; Kohane, I.S.; Saria, S.; Topol, E.; et al. Minimum information about clinical artificial intelligence modeling: The MI-CLAIM checklist. Nat. Med. 2020, 26, 1320–1324. [Google Scholar] [CrossRef]

- Turic, B.; Sun, X.; Wang, J.; Pang, B. The Role of AI in Cervical Cancer Screening. Cervical Cancer—A Global Public Health Treatise; IntechOpen: London, UK, 2021. [Google Scholar] [CrossRef]

- Wang, J.; Yu, Y.; Tan, Y.; Wan, H.; Zheng, N.; He, Z.; Mao, L.; Ren, W.; Chen, K.; Lin, Z.; et al. Artificial intelligence enables precision diagnosis of cervical cytology grades and cervical cancer. Nat. Commun. 2024, 15, 4369. [Google Scholar] [CrossRef]

- da Silva, D.C.B.; Garnelo, L.; Herkrath, F.J. Barriers to Access the Pap Smear Test for Cervical Cancer Screening in Rural Riverside Populations Covered by a Fluvial Primary Healthcare Team in the Amazon. Int. J. Environ. Res. Public Health 2022, 19, 4193. [Google Scholar] [CrossRef]

- Mehedi, M.H.K.; Khandaker, M.; Ara, S.; Alam, M.A.; Mridha, M.F.; Aung, Z. A lightweight deep learning method to identify different types of cervical cancer. Sci. Rep. 2024, 14, 29446. [Google Scholar] [CrossRef] [PubMed]

- World Medical Association. Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Subjects. Adopted by the 18th WMA General Assembly, Helsinki, Finland, June 1964, and Amended by Subsequent General Assemblies, Tokyo 1975, Venice 1983, Hong Kong 1989, Somerset West 1996, Edinburgh 2000. Available online: https://history.nih.gov/download/attachments/1016866/helsinki.pdf (accessed on 2 December 2024).

- WHO (World Health Organization). Ethics and Governance of Artificial Intelligence for Health. 2021. Available online: https://www.who.int/publications/i/item/9789240029200 (accessed on 2 December 2024).

- Senado Federal. Projeto de Lei n° 2338, de 2023. 2023. Available online: https://www25.senado.leg.br/web/atividade/materias/-/materia/157233 (accessed on 2 December 2024).

- Agência Nacional de Vigilância Sanitária (ANVISA). Resolução da Diretoria Colegiada—RDC n° 657, de 24 de Março de 2022. Dispõe Sobre a Regularização de Software como Dispositivo Médico (Software as a Medical Device—SaMD). 2022. Available online: https://in.gov.br/en/web/dou/-/resolucao-de-diretoria-colegiada-rdc-n-657-de-24-de-marco-de-2022-389603457 (accessed on 2 December 2024).

- Brasil. Lei n° 13.709, de 14 de Agosto de 2018. Lei Geral de Proteção de Dados Pessoais—LGPD. 2018. Available online: https://www.planalto.gov.br/ccivil_03/_ato2015-2018/2018/lei/l13709.htm (accessed on 2 December 2024).

- European Union. Regulation (EU) 2016/679. General Data Protection Regulation—GDPR. Official Journal of the European Union L 119/1, 4 May 2016. Available online: https://gdpr-info.eu/ (accessed on 2 December 2024).

- United States. Department of Health and Human Services. Health Information Privacy (HIPAA for Professionals). 2024. Available online: https://www.hhs.gov/hipaa/for-professionals/index.html (accessed on 2 December 2024).

- Mango, L.J. Computer-assisted cervical cancer screening using neural networks. Cancer Lett. 1994, 77, 155–162. [Google Scholar] [CrossRef] [PubMed]

- Monzon, F.A.; Lyons-Weiler, M.; Buturovic, L.J.; Rigl, C.T.; Henner, W.D.; Sciulli, C.; Dumur, C.I.; Medeiros, F.; Anderson, G.G. Multicenter Validation of a 1,550-Gene Expression Profile for Identification of Tumor Tissue of Origin. J. Clin. Oncol. 2009, 27, 2503–2508. [Google Scholar] [CrossRef] [PubMed]

- Campanella, G.; Hanna, M.G.; Geneslaw, L.; Miraflor, A.; Silva, V.W.K.; Busam, K.J.; Brogi, E.; Reuter, V.E.; Klimstra, D.S.; Fuchs, T.J. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 2019, 25, 1301–1309. [Google Scholar] [CrossRef]

- Raciti, P.; Sue, J.; Ceballos, R.; Godrich, R.; Kunz, J.D.; Kapur, S.; Reuter, V.; Grady, L.; Kanan, C.; Klimstra, D.S.; et al. Novel artificial intelligence system increases the detection of prostate cancer in whole slide images of core needle biopsies. Mod. Pathol. 2020, 33, 2058–2066. [Google Scholar] [CrossRef]

- da Silva, L.M.; Pereira, E.M.; Salles, P.G.O.; Godrich, R.; Ceballos, R.; Kunz, J.D.; Casson, A.; Viret, J.; Chandarlapaty, S.; Ferreira, C.G.; et al. Independent real-world application of a clinical-grade automated prostate cancer detection system. J. Pathol. 2021, 254, 147–158. [Google Scholar] [CrossRef]

- Raciti, P.; Sue, J.; Retamero, J.A.; Ceballos, R.; Godrich, R.; Kunz, J.D.; Casson, A.; Thiagarajan, D.; Ebrahimzadeh, Z.; Viret, J.; et al. Clinical validation of artificial intelligence-augmented pathology diagnosis demonstrates significant gains in diagnostic accuracy in prostate cancer detection. Arch. Pathol. Lab. Med. 2023, 147, 1178–1185. [Google Scholar] [CrossRef]

- Nakisige, C.; de Fouw, M.; Kabukye, J.; Sultanov, M.; Nazrui, N.; Rahman, A.; de Zeeuw, J.; Koot, J.; Rao, A.P.; Prasad, K.; et al. Artificial intelligence and visual inspection in cervical cancer screening. Int. J. Gynecol. Cancer 2023, 33, 1515–1521. [Google Scholar] [CrossRef]

- Lu, M.Y.; Chen, B.; Williamson, D.F.K.; Chen, R.J.; Zhao, M.; Chow, A.K.; Ikemura, K.; Kim, A.; Pouli, D.; Patel, A.; et al. A multimodal generative AI copilot for human pathology. Nature 2024, 634, 466–473. [Google Scholar] [CrossRef]

- Abinaya, K.; Sivakumar, B. A Deep Learning-Based Approach for Cervical Cancer Classification Using 3D CNN and Vision Transformer. J. Digit. Imaging Inform. Med. 2024, 37, 280–296. [Google Scholar] [CrossRef]

- Zhao, T.; Gu, Y.; Yang, J.; Usuyama, N.; Lee, H.H.; Naumann, T.; Gao, J.; Crabtree, A.; Abel, J.; Moung-Wen, C.; et al. BiomedParse: A biomedical foundation model for image parsing of everything everywhere all at once. arXiv 2024, arXiv:2405.12971. [Google Scholar] [CrossRef]

- Codella, N.C.F.; Jin, Y.; Jain, S.; Gu, Y.; Lee, H.H.; Ben Abacha, A.; Santamaria-Pang, A.; Guyman, W.; Sangani, N.; Zhang, S.; et al. MedImageInsight: An open-source embedding model for general domain medical imaging. arXiv 2024, arXiv:2410.06542. [Google Scholar] [CrossRef]

- Al-Kharusi, Y.; Khan, A.; Rizwan, M.; Bait-Suwailam, M.M. Open-Source Artificial Intelligence Privacy and Security: A Review. Computers 2024, 13, 311. [Google Scholar] [CrossRef]

- Pallua, J.D.; Brunner, A.; Zelger, B.; Schirmer, M.; Haybaeck, J. The future of pathology is digital. Pathol. Res. Pract. 2020, 216, 153040. [Google Scholar] [CrossRef]

| Application/Product | Functionality | Model Type | Sample (n) | Performance Metric | Status | Reference |
|---|---|---|---|---|---|---|
| PAPNET Cytological Screening System | Computer-assisted system for screening conventional cervical smears. The system uses neural networks to locate and recognize potentially abnormal cells and display them for review by cytologists. | Feedforward neural networks with backpropagation architecture | Rilke et al. (200); Kish et al. (191); Slagel et al. (208); Kharazi et al. (357) | Sensitivity: 96%, triage efficiency: 81%; sensitivity: 98%, triage efficiency: 97%; sensitivity: 100%, triage efficiency: 85%; sensitivity: 95%, triage efficiency: 58% | FDA approved | Mango LJ [48] |
| Pathwork Tissue of Origin Test | Gene expression profile-based test to identify the tissue of origin of tumors, particularly metastatic and poorly differentiated or undifferentiated primary tumors. | Machine learning algorithm with a cross-validation system | 547 (258 metastatic and 289 undifferentiated primary) | Sensitivity: 87.8%; Specificity: 99.4%; For metastatic tumors: 84.5%; For primary tumors: 90.7% | FDA approved | Monzon et al. [49] |
| Paige Prostate | The system performs classification of whole slide images (WSIs) to detect prostate cancer. | The model combines Multiple Instance Learning (MIL) and Convolutional Neural Networks (CNNs; ResNet34) along with Recurrent Neural Networks (RNNs) | 24,859 WSIs | Area under the ROC curve (AUC): 0.991 | FDA approved | Campanella et al. [50] |
| | | | 6644 WSIs and validated on 232 WSIs of needle biopsies | Sensitivity: 96%; Specificity: 98%; Pathologist sensitivity: from 74% to 90% | | Raciti et al. [51] |
| | | | 600 transrectal ultrasound-guided prostate needle core biopsy regions from 100 patients | Sensitivity: 0.99; Specificity: 0.93; Negative predictive value (NPV): 1.0; Reduction in diagnostic time: 65.5% | | da Silva et al. [52] |
| | | | 610 prostate needle biopsies, originating from 218 institutions | Sensitivity: 97.4%; Specificity: 94.8%; Area under the ROC curve (AUC): 0.99 | | Raciti et al. [53] |
| AI Decision Support System for VIA | AI-based decision support system for visual inspection of the cervix with acetic acid (VIA) in cervical cancer screening, focused on low-income countries. | Deep learning algorithm trained with VIA inspection images, developed on an Android-based device | 83 VIA inspection images, used to assess the diagnostic performance of the system compared to specialists and healthcare professionals | Sensitivity: 80.0%; Specificity: 83.3%; Area under the ROC curve (AUC): 0.84 | Field validation | Nakisige et al. [54] |
| PathChat | A multimodal generative AI copilot developed for human pathology. It provides diagnoses, answers questions based on images and text, and assists in clinical and educational decision making. | It uses a multimodal learning model that combines a vision encoder (trained with 100 million histopathological images) and an LLM (Llama 2 with 13 billion parameters). The architecture includes a multimodal projector module that connects the output of the vision encoder to the LLM | 56,000 instructions and 999,202 question-and-answer interactions. Additionally, it was validated with whole-slide images from various organs and over 54 diagnoses | Precision (image-only): 78.1%; Precision (with clinical context): 89.5%; Precision (open-ended questions): 78.7%; Precision (microscopy): 73.3%; Precision (diagnostic): 80.3%; Precision (clinical questions): 80.3%; Precision (auxiliary tests): 80.3%; Win rate (pathologist preference): 56.5% | Development and validation | Lu et al. [55] |
| Artificial Intelligence Cervical Cancer Screening System (AICCS) | Cervical cancer screening system using AI for detection and classification of WSIs of cervical cytology. The system assists in identifying abnormal cells and provides accurate classification of cervical cytology grades. | Combination of a cell detection model based on RetinaNet and Faster R-CNN (Convolutional Neural Network) for patch-level detection, and a whole image classification model using Random Forest and DNN | 16,056 participants, with prospective and retrospective validation data, and a randomized observational clinical trial | Sensitivity: 0.946; Specificity: 0.890; Area under the ROC curve (AUC): 0.947; Precision: 0.892 | Clinical validation (randomized observational clinical trial) | Wang et al. [38] |
| No description | The application classifies cervical images into five categories (normal, mild dysplasia, moderate dysplasia, carcinoma in situ, and severe dysplasia) | Hybrid model using 3D CNN (3D Convolutional Neural Networks) and Vision Transformer (ViT) with a Kernel Extreme Learning Machine (KELM) classifier | The study used the Herlev Pap smear dataset, which contains 917 cervical cancer image samples divided into 5 classes | Accuracy: 98.6%; Precision: 97.5%; Sensitivity: 98.1%; Specificity: 98.2%; F1 score: 98.4% | Research purposes | Abinaya & Sivakumar [56] |
| BiomedParse | Biomedical foundational model for image analysis that simultaneously performs segmentation, detection, and object recognition tasks in biomedical images. It is capable of identifying and labeling all objects in an image based on text descriptions. | Multimodal model based on the SEEM architecture, which includes an image encoder (Focal-based), a text encoder (PubMedBERT-based), a mask decoder, and a meta-object classifier | 3.4 million triples of image, segmentation mask, and semantic label of the biomedical object and 6.8 million image-mask-description triples, from over 1 million images. The semantic labels encompass 82 major biomedical object types across 9 imaging modalities | Average Dice score: 0.94 in segmentation tasks, superior to MedSAM and SAM. In object recognition, it outperformed Grounding DINO in precision (+25%), recall (+87.9%), and F1-score (+74.5%). Invalid description detection: AUC-ROC with a 0.99 detection rate and 0.93 precision in identifying text prompts that do not describe objects present in the image | Validation | Zhao et al. [57] |
| MedImageInsight | Open-source medical image embedding model capable of performing classification, image-image search, and report generation across various medical domains, including radiology, dermatology, histopathology, and more. The model aims to provide evidence-based decision support for medical diagnosis. | Two-tower architecture (image-text) optimized with UniCL, using a vision encoder (DaViT with 360 million parameters) and a text encoder (252 million parameters). It supports report generation and sensitivity and specificity adjustments via ROC curves | PatchCamelyon: 262,144 breast histopathological tissue images, each labeled with the presence or absence of cancer | mAUC (mean Area Under the ROC Curve): 0.963; MI2 (CLs.): 0.943 (classification metric using the MedImageInsight model); MI2 (KNN): 0.975 (classification using KNN with image embeddings) | Open-source model for research purposes | Codella et al. [58] |
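The performance columns in the table above report sensitivity, specificity, precision, negative predictive value (NPV), accuracy, and F1 score. As a minimal sketch of how these quantities relate to one another, the Python snippet below derives them from the four cells of a binary confusion matrix. The class name `ConfusionCounts`, the function `screening_metrics`, and the example counts are hypothetical and chosen only for illustration; they are not taken from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary screening outcomes compared against a reference standard."""
    tp: int  # lesion present, test positive
    fp: int  # lesion absent, test positive
    tn: int  # lesion absent, test negative
    fn: int  # lesion present, test negative


def _ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def screening_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Compute the standard diagnostic accuracy metrics from the four counts."""
    return {
        # Share of true lesions that the test flags.
        "sensitivity": _ratio(c.tp, c.tp + c.fn),
        # Share of lesion-free cases that the test clears.
        "specificity": _ratio(c.tn, c.tn + c.fp),
        # Share of positive calls that are true lesions (positive predictive value).
        "precision": _ratio(c.tp, c.tp + c.fp),
        # Share of negative calls that are truly lesion-free.
        "npv": _ratio(c.tn, c.tn + c.fn),
        # Share of all cases classified correctly.
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        # Harmonic mean of precision and sensitivity.
        "f1": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


# Hypothetical counts for illustration only (not data from the cited studies).
counts = ConfusionCounts(tp=90, fp=20, tn=180, fn=10)
metrics = screening_metrics(counts)

for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Precision and NPV depend on how common disease is in the evaluated sample, whereas sensitivity and specificity do not, so values from studies with different case mixes may not be directly comparable.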

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


de Lima, R.C.; Quaresma, J.A.S. Exploring Deep Learning Model Opportunities for Cervical Cancer Screening in Vulnerable Public Health Regions. Computers 2025, 14, 202. https://doi.org/10.3390/computers14050202
