Abstract

Fiber-flaw detection on pill surfaces is a critical yet challenging task in industrial pharmacy due to diverse defect characteristics. To overcome the limitations of traditional methods in accuracy and real-time performance, this study introduces SA-MGhost-DVGG, a novel lightweight network for enhanced detection. The proposed network integrates an MGhost module for reducing parameters and computational load, a mixed-channel spatial attention (SA) module to refine features specific to fiber regions, and depthwise separable convolutions (DepSepConv) for efficient dimensionality reduction while preserving feature information. Experimental evaluations demonstrate that SA-MGhost-DVGG achieves a mean detection accuracy of 99.01% with an average inference time of 2.23 ms per pill. The findings confirm that SA-MGhost-DVGG effectively balances high accuracy with computational efficiency, offering a robust solution for industrial applications.

1. Introduction

In the pharmaceutical health industry, drug quality and safety are of paramount importance. Therefore, strict inspection procedures must be implemented to prevent substandard products from entering the market [1]. Among various potential manufacturing defects, the detection of fiber-type flaws on pill surfaces poses a persistent and significant challenge to quality assurance and patient safety. The main characteristic of fibers is that they are extremely fine, sometimes with diameters of only tens of microns, even thinner than a human hair (which has a diameter of 60–90 microns). Furthermore, fibers vary in type, size, and color. Some fibers have colors similar to the pill itself, resulting in low contrast between them, making them difficult to detect accurately. Traditional inspection methods relying on manual visual checks are not only labor-intensive and inefficient but are also highly prone to misjudgments and omissions due to operator fatigue, failing to meet the demands of large-scale, high-speed production lines in the modern pharmaceutical industry. Consequently, achieving reliable, high-throughput detection of fiber flaws on pills and developing robust automated machine vision systems are of significant importance.

Automated defect detection methods primarily follow two technological paths: traditional statistical image processing techniques and, more recently, deep learning-based methods. Researchers have applied both to defect detection in many fields, including agriculture [2,3], industry [4,5,6], and manufacturing [7,8]. For example, morphological opening operations, Otsu’s binarization method, and Sobel edge detection have been used to detect fruit surface defects [9]; color feature extraction and support vector machines (SVMs) have been employed to identify printed circuit board (PCB) solder joint defects [10]; and the periodic structural features of photovoltaic (PV) modules have been extracted using spectrum analysis and Fourier reconstruction to improve the accuracy of automated defect detection in electroluminescence (EL) images [11]. Although statistical image processing techniques have succeeded in certain applications, they face severe challenges with targets such as fiber flaws on pill surfaces. The variable morphology of fibers and their extremely low contrast against the pill background make it difficult for these techniques to detect fibers robustly and accurately, so they fail to meet the requirements of the pharmaceutical industry.

Deep learning, by automatically learning hierarchical features directly from data, has demonstrated outstanding performance in various complex visual recognition tasks. Several CNN architectures have been successfully applied to detect different types of surface defects, such as using VGG networks with pre-trained weights to detect three types of building defects caused by moisture [12], employing capsule networks for classifying fabric defects [13], and using modified versions of ResNet to detect minute scratches on plastic shells [14]. Within the pharmaceutical field itself, researchers have also explored deep learning applications. For instance, image segmentation techniques have been used to detect defects on the edges of soft capsule shells [15]. CNNs have been utilized for detecting pill appearance defects [16]. LeNet or AlexNet-based ensemble models have been employed for pill identification [17], and ResNet-like two-stage object detection algorithms have been adopted for detecting illicit drug pills [18]. While these studies have validated the feasibility of deep learning in pharmaceutical product inspection, they mostly target relatively obvious or regularly shaped defects and lack precise detection of fine fibers. Furthermore, many standard deep learning models are computationally intensive, characterized by a large number of parameters and high floating point operations (FLOPs). This computational burden typically leads to slow inference speeds, which makes them unsuitable for the stringent real-time processing demands of online batch production in pharmaceutical quality control. To promote the online application of deep learning networks, some researchers have developed lightweight designs for complex deep neural networks to improve inference speed while maintaining accuracy [19]. 
Techniques such as depthwise separable convolutions [20], novel compact modules [21], attention mechanisms, and specialized lightweight backbone networks [22,23,24] aim to reduce model complexity and accelerate inference, while striving to maintain competitive accuracy. These lightweight models represent significant progress in balancing speed and precision for deployment on resource-constrained platforms and are a current direction in related fields. However, while general-purpose lightweight models improve computational efficiency, they often achieve this by compressing feature representations. This compression can inadvertently weaken the model’s sensitivity to targets that are fine, possess a small spatial scale, and exhibit low contrast.

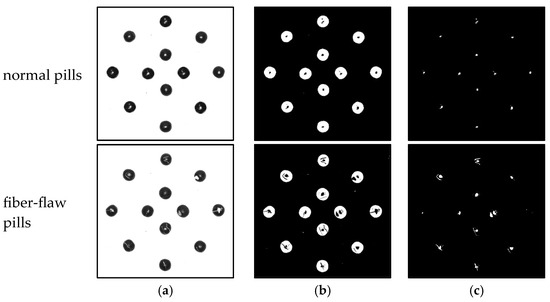

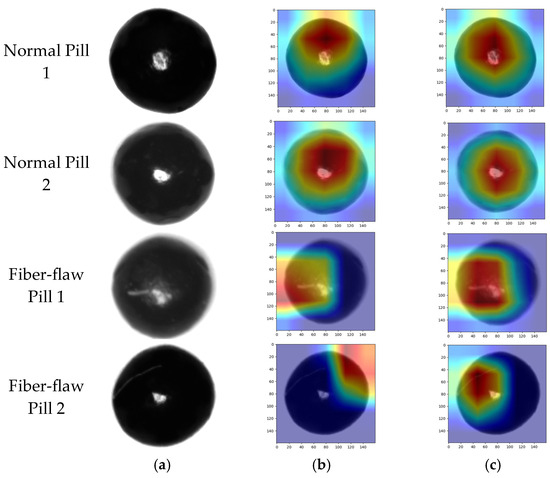

In this work, fiber flaws on the surface of handmade pills must be detected online and in real time. A middle-hole plane light source (a flat panel light with a central aperture) is used for illumination, and pill images acquired by a camera are shown in Figure 1a.

Figure 1.

Images for normal pills and fiber-flaw pills. (a) Original image. (b) Inverse binarization image. (c) Highlighted area image.

As can be seen in Figure 1, there are highlighted areas on the surface of each pill. On fiber-flaw pills, light reflected by the fiber also forms a highlighted area. Because the pills are handmade and not perfect spheres, both normal and fiber-flaw pills may show more than one highlighted area. The highlighted areas of normal pills tend to be relatively regular in shape, whereas fibers usually produce long, thin highlighted areas. However, this visual distinction is not consistent enough for definitive classification, so distinguishing fiber-flaw pills from normal pills based solely on the shape of the highlighted areas is unreliable and does not meet the detection requirements.

To address the aforementioned limitations, this work proposes a lightweight convolutional neural network named SA-MGhost-DVGG. This network enhances detection accuracy and improves detection speed, demonstrating strong practical application value for the online detection of surface defects on pills. The specific contributions are as follows:

(1) Design of the SA-MGhost-DVGG network architecture employing an improved lightweight MGhost module. This module, by introducing the Mish activation function and incorporating group normalization, aims to preserve and enhance rich features crucial for detecting minute defects while substantially compressing model parameters and computational load.

(2) Integration of a mixed-channel spatial shuffle attention (SA) module into the lightweight backbone of SA-MGhost-DVGG. This SA module focuses on further refining key information related to fiber regions from the extracted features. By adaptively enhancing the feature weights of these regions, it specifically improves the network’s recognition accuracy and learning capability for fiber flaws on pill surfaces.

(3) Adoption of depthwise separable convolutions (DepSepConv) within the network architecture to replace traditional max-pooling layers for efficient downsampling. This aims to maximize the retention of detailed feature information during dimensionality reduction, avoiding potential information loss associated with conventional pooling.

(4) Validation of the proposed SA-MGhost-DVGG network’s advantages and practical application potential in fiber-flaw detection on pills through comparative experiments with several classical models as well as lightweight models such as MobileViT and EfficientNet-Lite2.

The remainder of this work is organized as follows: Section 2 describes the image dataset used for the experiments and its acquisition method; Section 3 details the proposed SA-MGhost-DVGG network architecture and its core components; Section 4 presents and analyzes the experimental setup, evaluation metrics, comparative experimental results, and relevant ablation studies; finally, Section 5 summarizes the entire paper and provides an outlook on future research directions.

2. Data Acquisition of Pill Images

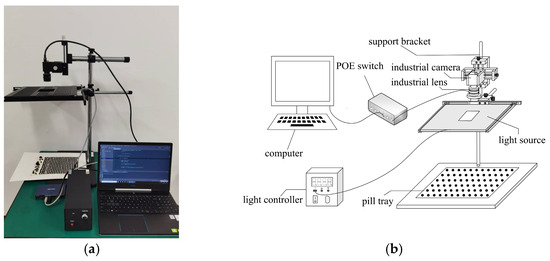

2.1. Construction of the Experimental Device

The experimental device used in this work consists of a support bracket, an industrial camera, an industrial lens, a middle-hole plane light, a PoE (Power over Ethernet) switch, a pill tray, and a computer. The experiment site and a schematic of the experimental device are shown in Figure 2a,b, respectively. The support bracket holds the industrial camera and also supports the light source. The plane light illuminates the pills evenly, reducing image noise caused by uneven illumination, and its intensity is adjusted with a light controller. The industrial camera has a resolution of 5496 × 3672 pixels and the industrial lens has a focal length of 25 mm, which is sufficient to image the fibers on the pills clearly. The camera and lens are mounted above the light source, and the camera captures the pill image through the hole in the plane light. The PoE switch transmits the images captured by the camera to the computer and supplies DC power to the camera. The pill tray, which carries the pills to be inspected, measures 200 mm × 300 mm and contains grooves 5 mm in diameter with a center-to-center spacing of 10 mm. Since the pills are about 7 mm in diameter, each pill rests on a groove, and the 10 mm spacing leaves a gap of roughly 3 mm between adjacent pills, so the pills are evenly distributed on the tray without touching each other. The computer stores and processes the pill images. Original pill images captured by the experimental device are shown in Figure 1a.

Figure 2.

Experimental device for pill image acquisition. (a) Experiment site. (b) Sketch map of the experimental device.

2.2. Acquisition of Pill Image Dataset

To obtain single-pill images for detection, the high-resolution pill images are processed by inverse binarization, flood filling, denoising, and pill contour extraction. Inverse binarization thresholds the gray value of each pixel and assigns it to one of two classes, with the foreground and background values inverted relative to ordinary binarization. Flood filling is then applied to obtain a mask image of the pills. The pill contours extracted from the mask image are used to segment each single pill image from the original image.
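The segmentation steps above can be illustrated with a small pure-Python sketch on a toy grayscale image. The threshold value, the 4-connectivity, and the choice to fill holes (for example, highlight areas inside a pill, which turn into background after inverse binarization) by flood filling the background from the image border are assumptions for illustration; the denoising step is omitted, and bounding boxes stand in for contour extraction. In practice, OpenCV offers `cv2.threshold` (with `cv2.THRESH_BINARY_INV`), `cv2.floodFill`, and `cv2.findContours` for these operations.

```python
from collections import deque

# Hypothetical toy pipeline on a grayscale image stored as a list of rows (0-255).
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity

def inverse_binarize(img, thresh):
    """Pixels at or below `thresh` become foreground (1), brighter pixels background (0)."""
    return [[1 if v <= thresh else 0 for v in row] for row in img]

def fill_holes(mask):
    """Flood-fill the background from the border; background pixels never reached are holes."""
    h, w = len(mask), len(mask[0])
    reached = [[False] * w for _ in range(h)]
    queue = deque((y, x) for y in range(h) for x in range(w)
                  if (y in (0, h - 1) or x in (0, w - 1)) and mask[y][x] == 0)
    for y, x in queue:
        reached[y][x] = True
    while queue:
        y, x = queue.popleft()
        for dy, dx in NEIGHBORS:
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and not reached[ny][nx] and mask[ny][nx] == 0:
                reached[ny][nx] = True
                queue.append((ny, nx))
    return [[1 if mask[y][x] or not reached[y][x] else 0 for x in range(w)] for y in range(h)]

def pill_boxes(mask):
    """Bounding box (x0, y0, x1, y1) of each connected foreground region, in scan order."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] and not seen[sy][sx]:
                seen[sy][sx] = True
                queue, box = deque([(sy, sx)]), [sx, sy, sx, sy]
                while queue:
                    y, x = queue.popleft()
                    box = [min(box[0], x), min(box[1], y), max(box[2], x), max(box[3], y)]
                    for dy, dx in NEIGHBORS:
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                boxes.append(tuple(box))
    return boxes

# Two dark "pills" on a bright tray; the first has a bright highlight at row 2, column 2.
img = [
    [200] * 9,
    [200, 50, 50, 50, 200, 200, 200, 200, 200],
    [200, 50, 250, 50, 200, 60, 60, 200, 200],
    [200, 50, 50, 50, 200, 60, 60, 200, 200],
    [200] * 9,
]
mask = inverse_binarize(img, 128)
filled = fill_holes(mask)
print(pill_boxes(filled))  # [(1, 1, 3, 3), (5, 2, 6, 3)]
```

Each box can then be used to crop one single-pill image from the original frame.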

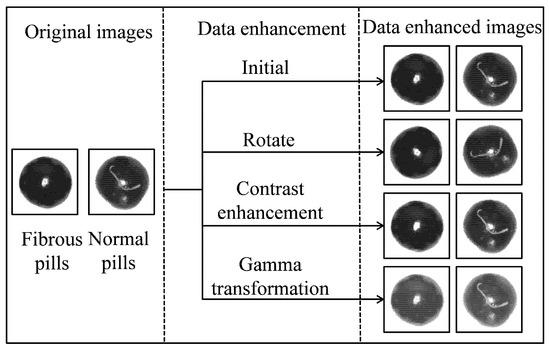

Subsequently, image augmentation is applied to increase the diversity of the training dataset, which helps prevent overfitting and improves the generalization ability of the model. The augmentation methods used in this work are random rotation (angle drawn from 0 to 360°), contrast enhancement (random factor from 0.5 to 1.5), and gamma transformation (random gamma from 0.5 to 2), as shown in Figure 3. These operations not only expand the dataset but also simulate variations such as illumination changes and viewpoint shifts that may occur during practical image acquisition, improving the trained model’s robustness to these common perturbations. After augmentation, the dataset comprises 36,220 images: 17,964 normal pill images and 18,256 fiber-flaw pill images. The normal and fiber-flaw images were each divided into training, validation, and test sets in a 6:2:2 ratio. The training set thus contains 21,733 images (10,779 normal and 10,954 fiber-flaw), the validation set 7244 images (3593 normal and 3651 fiber-flaw), and the test set 7243 images (3592 normal and 3651 fiber-flaw).
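The photometric part of this augmentation can be sketched in pure Python as follows. The parameter ranges are those stated above; the specific contrast definition (scaling each pixel's deviation from the image mean) is one common choice and is an assumption, as is the gamma mapping on intensities normalized to [0, 1]. Rotation requires resampling and is only sampled, not applied, in this sketch.

```python
import random

# Hypothetical helpers on a grayscale image stored as a list of rows (0-255).

def adjust_contrast(img, factor):
    """Scale each pixel's deviation from the image mean by `factor`; >1 raises contrast."""
    pixels = [v for row in img for v in row]
    mean = sum(pixels) / len(pixels)
    return [[min(255, max(0, round(mean + factor * (v - mean)))) for v in row] for row in img]

def gamma_transform(img, gamma):
    """Power-law mapping on intensities normalized to [0, 1]; gamma > 1 darkens mid-tones."""
    return [[round(255 * (v / 255) ** gamma) for v in row] for row in img]

def sample_params(rng):
    """Draw one random parameter set from the ranges stated in the text."""
    return {
        "angle_deg": rng.uniform(0.0, 360.0),  # rotation angle (rotation itself not implemented)
        "contrast": rng.uniform(0.5, 1.5),
        "gamma": rng.uniform(0.5, 2.0),
    }

def augment(img, rng):
    """Apply contrast enhancement followed by gamma transformation with random parameters."""
    p = sample_params(rng)
    return gamma_transform(adjust_contrast(img, p["contrast"]), p["gamma"]), p

rng = random.Random(0)
out, params = augment([[0, 128, 255]], rng)
print(params, out)
```

Drawing fresh parameters for every image is what lets a single source image yield several distinct training samples.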

Figure 3.

Examples of image augmentation.

3. Classification Model of Fiber-Flaw Pills Based on SA-MGhost-DVGG

3.1. General Framework of SA-MGhost-DVGG

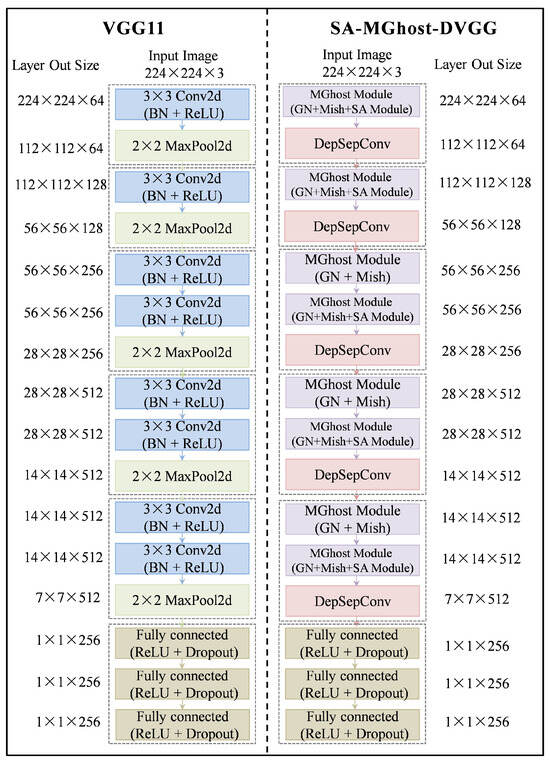

Fiber-flaw pill recognition can be regarded as a binary classification problem, in which pill images are classified as either fiber-flaw pills or normal pills. Owing to its strong classification performance, the VGG network has served as the base network in many classification and detection tasks. In this work, several VGG networks were tested on pill image classification using transfer learning, and VGG11 was selected as the base network. Using VGG11 as the basic framework, the SA-MGhost-DVGG network was designed according to the practical needs of fiber-flaw pill detection, with the aim of improving detection accuracy and speed to enable online, high-precision inspection of pill batches.

The dense connections between neurons in the fully connected layers involve many parameters. In VGG11, for example, the three fully connected layers account for about 93% of the total parameters. Moreover, the VGG network was designed for the ImageNet dataset, which contains 1000 object classes, whereas the classification task in this work involves only two kinds of pills. In addition, because the features of the pill images are relatively simple, an overly complex network may lead to feature attenuation and thus lower recognition accuracy. It is therefore necessary to reduce the complexity of the network while retaining its feature extraction ability.
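The 93% figure can be checked by counting the parameters of the standard VGG11 configuration: eight 3 × 3 convolution layers followed by three fully connected layers acting on a 7 × 7 × 512 feature map (the standard 224 × 224 ImageNet layout, with biases). This sketch assumes that standard layout; the input size used for the pill images in this work is not restated here.

```python
# Standard VGG11 ("configuration A"): output channels of its eight 3x3 conv layers.
VGG11_CONV = [64, 128, 256, 256, 512, 512, 512, 512]
FC_LAYERS = [(512 * 7 * 7, 4096), (4096, 4096), (4096, 1000)]  # ImageNet classifier head

def conv_params(in_ch, out_ch, k=3):
    """Weights plus biases of one k x k convolution layer."""
    return in_ch * out_ch * k * k + out_ch

def vgg11_param_split(num_classes=1000):
    """Return (convolutional parameters, fully connected parameters)."""
    conv, in_ch = 0, 3  # RGB input
    for out_ch in VGG11_CONV:
        conv += conv_params(in_ch, out_ch)
        in_ch = out_ch
    fc_dims = FC_LAYERS[:-1] + [(4096, num_classes)]
    fc = sum(i * o + o for i, o in fc_dims)
    return conv, fc

conv, fc = vgg11_param_split()
print(conv, fc, conv + fc, round(fc / (conv + fc), 4))
# 9220480 123642856 132863336 0.9306
```

Replacing the 1000-class output layer with a two-class one (`vgg11_param_split(2)`) removes only about 4 million parameters; the bulk sits in the first two fully connected layers, which is why the classifier head dominates the model size.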

The VGG11 and SA-MGhost-DVGG network frameworks are compared in Figure 4. In the VGG11 network, stacked convolution and pooling layers extract multi-scale features and reduce the dimensionality of the input data. The fully connected layers connect all neurons in adjacent layers and act as the “classifier” of the CNN. Although VGG11 can identify some defective pills, its large number of parameters makes it too slow to meet the real-time detection requirements of actual production, and its accuracy in defective pill detection also needs improvement. To solve these problems, the lightweight network SA-MGhost-DVGG is proposed in this work. To improve detection speed, SA-MGhost-DVGG learns high-dimensional features by stacking multiple lightweight MGhost modules. DepSepConv is introduced to reduce the size of the feature maps while largely preserving feature information. To improve accuracy, the SA module evaluates the importance of the information in the intermediate feature maps. Finally, the high-dimensional feature vectors are flattened and fed into the fully connected layer for classification.

Figure 4.

The VGG11 network framework vs. the SA-MGhost-DVGG network framework.

3.2. Lightweight Improvement

To improve the computing speed of the network, a self-designed MGhost module is applied in this work. In the MGhost module, the Mish function is used as the activation function, and a group normalization layer is employed to accelerate convergence and reduce the risk of overfitting.

The standard convolution used for feature extraction in traditional deep learning networks involves a large number of parameters and FLOPs. To make the network lightweight, a new feature extraction structure, the MGhost module, was designed in this work as an improvement of the Ghost module. Using the MGhost module for feature extraction in the VGG backbone greatly improves the inference speed of the network.

3.2.1. Ghost Module

It is well known that a CNN produces many similar feature maps during training, which resemble ghosts of one another [25]. Rich information in the feature layers ensures a comprehensive understanding of the input data and reflects the learning ability of the network. However, some of the convolution operations that generate similar feature maps are redundant and waste computing time and storage. The Ghost module is designed to solve this problem: it generates features that adequately represent the input data through cheap linear operations with fewer parameters, giving the network strong expressive ability with a lightweight structure.

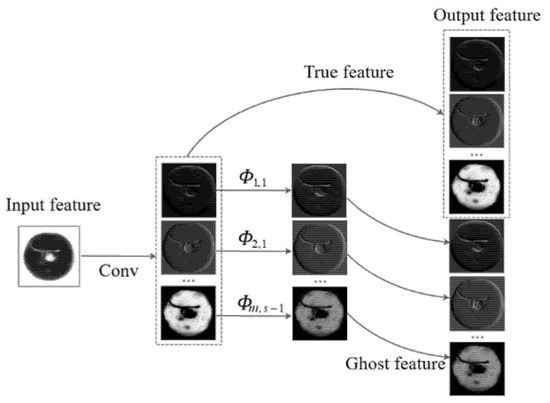

The processing flow of the Ghost module is shown in Figure 5. First, an ordinary convolution is retained to generate the true (intrinsic) feature layer. Second, the ghost feature layer is obtained by applying linear transformations to the true feature layer. Finally, the true and ghost feature layers are concatenated to form the complete feature layer.

Figure 5.

Processing flow of the Ghost module.

3.2.2. MGhost Module Design

In the Ghost module, the activation function plays a key role in converting the weighted input into a nonlinear output. The Ghost module uses the ReLU activation function. To improve the performance of the module, the activation function was replaced with the Mish function in this work. Mish is non-monotonic and continuously differentiable, and unlike ReLU it keeps a nonzero gradient for negative inputs near 0, allowing gradients to flow in that region. This smoothness is more conducive to retaining and learning the subtle feature gradients of faint, low-contrast defects such as fibers, thereby enhancing the model’s sensitivity to such targets. Mish is also reported to be compatible with quantization, and it is used here to improve the accuracy of the network.
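To make the gradient argument concrete, the following pure-Python sketch evaluates Mish, f(x) = x · tanh(ln(1 + e^x)), together with its analytic derivative and the derivative of ReLU. It is a scalar illustration for intuition only, not the network implementation; the numerically stable softplus form is a standard rewriting.

```python
import math

def softplus(x):
    """Numerically stable ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def sigmoid(x):
    """Logistic function written via tanh to avoid overflow for large |x|."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))

def mish(x):
    """Mish(x) = x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))

def mish_grad(x):
    """d/dx Mish = tanh(sp) + x * sech^2(sp) * sigmoid(x), where sp = softplus(x)."""
    t = math.tanh(softplus(x))
    return t + x * (1.0 - t * t) * sigmoid(x)

def relu_grad(x):
    """Derivative of ReLU (taken as 0 at x = 0)."""
    return 1.0 if x > 0 else 0.0

for x in (-1.0, -0.5, 0.0, 1.0):
    print(f"x={x:+.1f}  mish={mish(x):+.4f}  mish'={mish_grad(x):+.4f}  relu'={relu_grad(x):.0f}")
```

For small negative inputs ReLU passes no gradient at all, while Mish still returns a positive derivative (about 0.29 at x = −0.5), which is the property the paragraph above relies on.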

The implementation process of the MGhost module is as follows:

Firstly, the ordinary convolution. Suppose that the input is $X \in \mathbb{R}^{w \times h \times c}$, where $w$, $h$, and $c$ are the width, height, and channels of the input data, respectively. A new feature map is generated by a convolution operation:

$$Y' = X * f' + b$$

where $f' \in \mathbb{R}^{c \times k \times k \times m}$ is the convolution kernel, $b$ is the bias, and $Y'$ is the output feature map. The width, height, and channels of $Y'$ are $w'$, $h'$, and $m$, respectively.

The output feature map $Y'$ is then passed through the Mish function:

$$\hat{Y}' = \mathrm{Mish}(Y') = Y' \cdot \tanh\left(\ln\left(1 + e^{Y'}\right)\right)$$

where $\hat{Y}'$ is the nonlinear output feature map.

Secondly, linear transformation. A one-dimensional convolution is used to linearly transform each channel of $\hat{Y}'$, producing $m(s-1)$ ghost feature maps without changing the height and width of the output feature map. The linear transformation can be expressed as:

$$y_{ij} = \Phi_{i,j}\left(\hat{y}'_i\right), \quad i = 1, \dots, m, \quad j = 1, \dots, s-1$$

where $\hat{y}'_i$ is the $i$-th channel of $\hat{Y}'$, $\Phi_{i,j}$ denotes the $j$-th linear transformation applied to $\hat{y}'_i$, $y_{ij}$ is the feature map generated by that transformation, and $s$ is the number of feature maps derived from each channel (the channel itself plus its $s-1$ linear transformations).

Finally, feature concatenation. The features generated by the ordinary convolution and those generated by the linear transformations are concatenated to form the complete feature:

$$Y = \left[\hat{Y}', \{y_{ij}\}\right]$$

where $Y$ is the complete feature with $n = m \cdot s$ channels, and $\{y_{ij}\}$ is the set of all $y_{ij}$.
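The three MGhost steps (ordinary convolution, Mish, per-channel linear transformation, concatenation) can be traced with a naive pure-Python sketch on tiny feature maps stored as nested lists of shape [channels][height][width]. Several details are assumptions not fixed by the text: the cheap linear transformation Φ is modeled as a single-channel k × k convolution, no activation follows it, and the group normalization layer of the MGhost module is omitted. The function names are hypothetical.

```python
import math
import random

def mish(x):
    # Mish(x) = x * tanh(ln(1 + e^x)), written with a numerically stable softplus
    return x * math.tanh(max(x, 0.0) + math.log1p(math.exp(-abs(x))))

def conv2d_same(x, weight, bias):
    """Naive stride-1 convolution with zero padding that keeps height and width.
    x: [c][h][w], weight: [m][c][k][k], bias: [m] -> output [m][h][w]."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0][0])
    p = k // 2
    out = []
    for wm, b in zip(weight, bias):
        fmap = [[b] * w for _ in range(h)]
        for ci in range(c):
            for i in range(h):
                for j in range(w):
                    for di in range(k):
                        for dj in range(k):
                            ii, jj = i + di - p, j + dj - p
                            if 0 <= ii < h and 0 <= jj < w:
                                fmap[i][j] += wm[ci][di][dj] * x[ci][ii][jj]
        out.append(fmap)
    return out

def mghost(x, primary_w, primary_b, cheap_kernels):
    """Ordinary conv -> Mish -> per-channel linear maps -> concatenation.
    cheap_kernels[i] holds the s - 1 kernels applied to intrinsic channel i."""
    intrinsic = [[[mish(v) for v in row] for row in fmap]
                 for fmap in conv2d_same(x, primary_w, primary_b)]
    ghosts = []
    for fmap, kernels in zip(intrinsic, cheap_kernels):
        for kern in kernels:
            # a single-channel convolution plays the role of the linear transformation Phi_ij
            ghosts.extend(conv2d_same([fmap], [[kern]], [0.0]))
    return intrinsic + ghosts  # Y = [Y_hat', {y_ij}] with n = m * s channels

rng = random.Random(0)
c, m, s, h, w, k = 2, 2, 3, 4, 4, 3
x = [[[rng.uniform(-1, 1) for _ in range(w)] for _ in range(h)] for _ in range(c)]
primary_w = [[[[rng.uniform(-0.5, 0.5) for _ in range(k)] for _ in range(k)]
              for _ in range(c)] for _ in range(m)]
identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
blur = [[1 / 9] * 3 for _ in range(3)]
cheap = [[identity, blur], [blur, identity]]  # s - 1 = 2 kernels per intrinsic channel
y = mghost(x, primary_w, [0.0] * m, cheap)
print(len(y), len(y[0]), len(y[0][0]))  # 6 4 4
```

Only m = n / s channels go through the expensive multi-channel convolution; the remaining n − m channels come from single-channel filters, which is where the savings quantified in the next subsection originate.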

3.2.3. FLOPs Comparison of MGhost Module and Ordinary Convolution

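The FLOPs of the MGhost module ($F_{\mathrm{MGhost}}$) are given by:

$$F_{\mathrm{MGhost}} = \frac{n}{s} \cdot h' \cdot w' \cdot c \cdot k \cdot k + (s-1) \cdot \frac{n}{s} \cdot h' \cdot w' \cdot k \cdot k$$

where $h'$ and $w'$ are the height and width of the output feature map, $k$ is the size of the convolution kernel, $c$ and $n$ are the numbers of channels of the input and output feature maps, and $s$ is the number of feature maps derived from each intrinsic channel (the channel itself plus $s-1$ linear transformations). The first term counts the ordinary convolution that produces the $n/s$ intrinsic channels, and the second counts the $s-1$ per-channel linear transformations, assumed here to use $k \times k$ kernels.

The FLOPs of an ordinary convolution ($F_{\mathrm{conv}}$) producing the same output are given by:

$$F_{\mathrm{conv}} = n \cdot h' \cdot w' \cdot c \cdot k \cdot k$$

Comparing the FLOPs needed to obtain the feature map with the MGhost module and with an ordinary convolution gives the compression ratio:

$$r_s = \frac{F_{\mathrm{conv}}}{F_{\mathrm{MGhost}}} = \frac{n \cdot h' \cdot w' \cdot c \cdot k^2}{\frac{n}{s} \cdot h' \cdot w' \cdot c \cdot k^2 + (s-1) \cdot \frac{n}{s} \cdot h' \cdot w' \cdot k^2} = \frac{s \cdot c}{c + s - 1} \approx s \quad (c \gg s)$$

As can be seen, $r_s$ changes with the value of $s$. Since $s > 1$ (and $c > 1$), $r_s > 1$, so the MGhost module requires fewer FLOPs and computes faster than the ordinary convolution module.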
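The compression ratio can be checked numerically with a short sketch. Here "FLOPs" are counted as multiply-accumulate operations, biases are ignored, and the linear transformations are assumed to use k × k kernels, matching the formulas above; the layer sizes are arbitrary illustrative values.

```python
def flops_conv(n, h_out, w_out, c, k):
    """Multiply-accumulates of an ordinary k x k convolution with n output channels."""
    return n * h_out * w_out * c * k * k

def flops_mghost(n, h_out, w_out, c, k, s):
    """Ordinary conv for n/s intrinsic channels plus (s - 1) k x k per-channel maps each."""
    assert n % s == 0, "n must be divisible by s"
    m = n // s
    return m * h_out * w_out * c * k * k + (s - 1) * m * h_out * w_out * k * k

def compression_ratio(n, h_out, w_out, c, k, s):
    return flops_conv(n, h_out, w_out, c, k) / flops_mghost(n, h_out, w_out, c, k, s)

# Illustrative layer: 64 input channels, 96 output channels, 28 x 28 output, 3 x 3 kernels.
for s in (2, 3, 4):
    r = compression_ratio(n=96, h_out=28, w_out=28, c=64, k=3, s=s)
    print(s, round(r, 3), round(s * 64 / (64 + s - 1), 3))  # both columns agree
```

With c = 64 the ratio is already close to s, which illustrates the approximation r_s ≈ s when c is much larger than s.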

3.2.4. Feature Visualization and Comparison

To more intuitively evaluate the effectiveness of the proposed MGhost module in terms of feature learning and to compare it with the standard Ghost module, feature maps extracted by both modules when processing the same input samples were visualized. Representative normal pill and fiber-flaw pill samples were selected, and feature heatmaps were employed to display the regions of interest and activation intensities captured by different modules from the image information. The results are shown in Figure 6.

Figure 6.

Feature visualization heatmap. Colors range from blue (low intensity) to red (high intensity). (a) Pill Sample. (b) Ghost. (c) MGhost.

As shown in Figure 6, the features learned by the MGhost module exhibit more concentrated activation regions, which can more accurately reflect the key visual characteristics of normal pills. For fiber-flaw pill samples, the features learned by the MGhost module are highly concentrated in the fiber-flaw regions, and the activation intensity at the flaw location is significantly higher than in other areas. In contrast, the features learned by the Ghost module may show less focused or insufficient response intensity in the defect regions, with some highly activated areas even deviating from the actual flaw locations.

In summary, this feature-level visual comparison shows the improvement in feature learning quality of the MGhost module over the original Ghost module, providing qualitative support for its better performance in downstream tasks.

3.3. High Performance Improvement

3.3.1. Spatial Attention

Because highlighted areas and fibers on the pill surface look similar, identifying fiber-flaw pills requires not only capturing fine fiber details but also understanding the global differences between normal and abnormal pills. Attention mechanisms are an important means of improving the overall performance of CNNs. An attention mechanism measures the importance of the feature map by computing a weight matrix, which is then used to reweight the original feature map into a new one. In this work, the SA module is deployed on top of the features extracted by MGhost; its mixed-channel spatial attention mechanism focuses on and enhances the feature responses of fiber regions on the pill surface amid complex backgrounds while suppressing potential background interference.

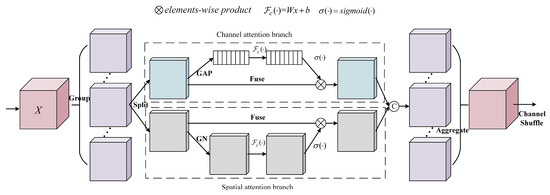

SA is a mixed-channel spatial attention mechanism [26]. First, the input feature map is divided into groups along the channel dimension (Group). Then, the sub-feature of each group is split into two parts (Split), which are processed in parallel along the channel and spatial dimensions. The channel attention branch uses global average pooling (GAP) to generate channel statistics, and the spatial attention branch uses Group Normalization (GN) to generate spatial statistics. Finally, a channel shuffle operation enables information exchange between the sub-features of the two branches. The framework of SA is shown in Figure 7.
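The Group, Split, and two-branch steps described above can be sketched in pure Python on small lists of 2D feature maps. This is a simplified, hypothetical illustration rather than the SA-Net reference implementation: each branch uses one learnable scale and shift per channel (the GN affine parameters are folded into these), the parameters are shared across groups in this sketch, and the final channel shuffle is left out.

```python
import math

def sigmoid(x):
    return 0.5 * (1.0 + math.tanh(0.5 * x))

def channel_branch(maps, w, b):
    """Gate each channel by sigmoid(w * GAP(channel) + b)."""
    out = []
    for fmap, wi, bi in zip(maps, w, b):
        gap = sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
        gate = sigmoid(wi * gap + bi)
        out.append([[v * gate for v in row] for row in fmap])
    return out

def spatial_branch(maps, w, b, eps=1e-5):
    """Normalize each channel over its spatial positions (GN with one channel per group),
    then gate every position by sigmoid(w * normalized + b)."""
    out = []
    for fmap, wi, bi in zip(maps, w, b):
        vals = [v for row in fmap for v in row]
        mu = sum(vals) / len(vals)
        sd = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals) + eps)
        out.append([[v * sigmoid(wi * (v - mu) / sd + bi) for v in row] for row in fmap])
    return out

def sa_without_shuffle(x, groups, cw, cb, sw, sb):
    """Group -> split each group in half -> channel / spatial branches -> concatenate.
    cw, cb, sw, sb hold one scalar per channel of each half."""
    per = len(x) // groups
    half = per // 2
    out = []
    for g in range(groups):
        sub = x[g * per:(g + 1) * per]
        out += channel_branch(sub[:half], cw, cb) + spatial_branch(sub[half:], sw, sb)
    return out

x = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 1.0], [1.0, 0.0]],
     [[2.0, 2.0], [2.0, 2.0]], [[-1.0, 1.0], [1.0, -1.0]]]
y = sa_without_shuffle(x, groups=2, cw=[0.0], cb=[0.0], sw=[0.0], sb=[0.0])
print(y[0])  # with zero parameters every gate is sigmoid(0) = 0.5
```

With trained parameters, the channel branch rescales whole channels according to their global statistics, while the spatial branch rescales individual positions, which is how elongated high-response regions such as fibers can be emphasized.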

Figure 7.

Graph of SANet structure.

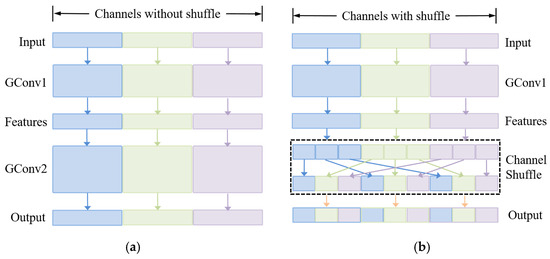

A key component of the SA module is the Channel Shuffle operation. As illustrated in Figure 8a, employing only Group Convolution, while reducing parameters for network lightweighting, can lead to a state where feature groups are isolated from each other due to a lack of information interaction between different groups. This may limit the network’s feature learning capability. To overcome this limitation, the Channel Shuffle operation is introduced, the effect of which is shown in Figure 8b. Channel Shuffle effectively reorganizes the channel order of feature maps, promoting information flow and feature fusion between different groups, which plays a crucial role in enhancing the network’s learning performance. The specific implementation process of Channel Shuffle is described as follows:

Figure 8.

Graph of “Channels without shuffle” and “Channels with shuffle”. (a) Channels without shuffle. (b) Channels with shuffle.

Suppose the input layer, which has $c$ channels, is divided into $g$ groups. First, reshape the input layer into a three-dimensional (3D) matrix of size $g \times n \times (h \times w)$, where $c = g \times n$; for simplicity, the two spatial dimensions are merged into one here. Then, transpose the 3D matrix along the $g$ and $n$ axes to obtain a shuffled feature map. Finally, divide the shuffled feature map into $g$ groups to obtain the new feature map.
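The reshape, transpose, and flatten steps of channel shuffle only permute channel order, so they can be shown on a list of channel indices. The list elements could equally be whole feature maps; the function name is illustrative.

```python
def channel_shuffle(channels, groups):
    """Reshape c channels into a (g, n) grid, transpose it to (n, g), and flatten back."""
    c = len(channels)
    assert c % groups == 0, "channel count must be divisible by the number of groups"
    n = c // groups
    grid = [channels[i * n:(i + 1) * n] for i in range(groups)]       # g x n
    return [grid[gi][ni] for ni in range(n) for gi in range(groups)]  # transpose, then flatten

print(channel_shuffle(list(range(6)), groups=2))  # [0, 3, 1, 4, 2, 5]
print(channel_shuffle(list(range(6)), groups=3))  # [0, 2, 4, 1, 3, 5]
```

After shuffling with g = 2, splitting the result back into two groups gives [0, 3, 1] and [4, 2, 5], so each new group contains channels from both original groups, which is the cross-group information flow illustrated in Figure 8b.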

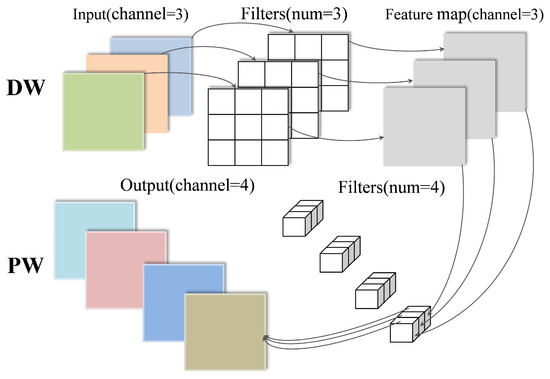

3.3.2. DepSepConv

Many networks use max pooling for downsampling. However, pooling discards information and is therefore destructive. To enhance the feature expression ability of the network, DepSepConv is adopted in the proposed network for feature dimensionality reduction. Compared with pooling, DepSepConv adds a small number of parameters and FLOPs but loses little information. This not only helps improve detection accuracy but also enables the network to extract sufficient discriminative information when image quality is slightly degraded, thereby improving the robustness of the model.

DepSepConv consists of two parts, depthwise convolution (DW) and pointwise convolution (PW), as shown in Figure 9.

Figure 9.

Graph of DepSepConv.

DW processes the spatial information of each channel: each channel is convolved with only one convolution kernel, so the number of feature map channels output by DW equals the number of input channels. PW then combines the DW output feature maps along the depth direction by convolution to generate new feature maps. Its convolution kernel size is $1 \times 1 \times M$, where $M$ is the number of channels of the previous layer, and the number of output feature maps of PW is determined by the number of convolution kernels.
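The cost trade-off between DepSepConv, an ordinary convolution, and max pooling can be estimated with a few lines of arithmetic. The text does not specify the kernel size or stride of the downsampling DepSepConv, so this sketch assumes a 3 × 3 depthwise step with stride 2 and padding 1, 128 input and output channels, and a 56 × 56 input; biases are ignored and FLOPs are counted as multiply-accumulates.

```python
def out_size(size, k, stride, pad):
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * pad - k) // stride + 1

def standard_conv_cost(c_in, c_out, k, h_out, w_out):
    """(parameters, multiply-accumulates) of an ordinary k x k convolution."""
    params = k * k * c_in * c_out
    return params, params * h_out * w_out

def depsep_conv_cost(c_in, c_out, k, h_out, w_out):
    """(parameters, multiply-accumulates) of depthwise + pointwise convolution."""
    dw = k * k * c_in  # one k x k kernel per input channel
    pw = c_in * c_out  # c_out kernels of size 1 x 1 x c_in
    return dw + pw, (dw + pw) * h_out * w_out

h = out_size(56, k=3, stride=2, pad=1)     # assumed stride-2 depthwise downsampling
pool = out_size(56, k=2, stride=2, pad=0)  # 2 x 2 max pooling, which has no parameters
print(h, pool)                             # 28 28
print(standard_conv_cost(128, 128, 3, h, h))
print(depsep_conv_cost(128, 128, 3, h, h))
```

Both options halve the spatial size. Relative to an ordinary convolution, DepSepConv needs a fraction 1/c_out + 1/k² of the parameters (about 12% here), and relative to max pooling it adds a modest 17,536 parameters in exchange for learnable downsampling.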

4. Verification

4.1. Experimental Details and Evaluation Indicators

4.1.1. Experimental Details

The experiments were conducted on a PC running Windows 10 with an Intel(R) Core(TM) i7-9750H CPU @ 2.60 GHz, 32 GB of main memory, and an NVIDIA GeForce RTX 2070 GPU. The Adam optimizer is used for network training in this work, and the learning rate is adjusted adaptively according to the loss value. The initial learning rate is set to 1 × 10−4; if the loss does not decrease for three consecutive epochs, the learning rate is changed to one-tenth of the initial value. Training uses a batch size of 16 for 100 epochs. In addition, the network parameters are initialized with the Xavier method, and checkpoint-based resumable training is used to avoid losing progress if the computer loses power during training. To ensure a robust evaluation, all models were trained and tested independently 6 times. The dataset partition was fixed before the experiments. Each independent run used a different random seed, which controls model weight initialization, training data shuffling, and Dropout, while all other hyperparameters were kept the same.
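The learning-rate rule above can be sketched as a plateau schedule. This is an assumed reading of the rule: the rate is multiplied by 0.1 once the loss has failed to improve for three consecutive epochs (which gives one-tenth of the initial value at the first reduction), and the improvement counter then restarts. Frameworks provide equivalent schedulers, for example PyTorch's `torch.optim.lr_scheduler.ReduceLROnPlateau` with its `factor` and `patience` arguments, whose exact patience counting may differ from this sketch.

```python
def plateau_lr(losses, lr0=1e-4, patience=3, factor=0.1):
    """Return the learning rate in effect after each epoch, given per-epoch loss values."""
    lr, best, bad = lr0, float("inf"), 0
    history = []
    for loss in losses:
        if loss < best:           # improvement: remember it and reset the counter
            best, bad = loss, 0
        else:                     # no improvement this epoch
            bad += 1
            if bad >= patience:   # three non-improving epochs in a row
                lr *= factor
                bad = 0
        history.append(lr)
    return history

print(plateau_lr([1.0, 0.9, 0.95, 0.92, 0.91, 0.8]))
```

In this example the loss stops improving after the second epoch, so the rate drops from 1 × 10−4 to 1 × 10−5 after the fifth epoch and stays there.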

4.1.2. Evaluation Indicators

In this work, the network is evaluated using the number of parameters (Params), floating point operations (FLOPs), true positives (TP), true negatives (TN), inference time (RTime), validation set accuracy (Valacc), test set accuracy (Testacc), and training time (TTime). Params describe the size of the network, and FLOPs measure its computational complexity. Here, TP and TN are reported as rates: TP is the proportion of normal pills that are correctly predicted as normal, that is, the detection accuracy for normal pills, and TN is the proportion of fiber-flaw pills that are correctly predicted as fiber-flaw, that is, the detection accuracy for fiber-flaw pills.
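Since TP and TN are used here as per-class detection rates rather than counts, a short sketch makes the definitions explicit. The label encoding (0 for normal, 1 for fiber-flaw) and the toy predictions are hypothetical.

```python
NORMAL, FIBER = 0, 1  # hypothetical label encoding

def class_rates(y_true, y_pred):
    """TP: share of normal pills predicted normal; TN: share of fiber-flaw pills predicted
    fiber-flaw; acc: share of all pills predicted correctly."""
    normal = [p for t, p in zip(y_true, y_pred) if t == NORMAL]
    fiber = [p for t, p in zip(y_true, y_pred) if t == FIBER]
    tp = sum(p == NORMAL for p in normal) / len(normal)
    tn = sum(p == FIBER for p in fiber) / len(fiber)
    acc = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return tp, tn, acc

y_true = [0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 1, 1, 1, 1, 0]
print(class_rates(y_true, y_pred))  # TP = 2/3, TN = 3/4, accuracy = 5/7
```

The overall accuracy equals the average of the two rates weighted by class size; with the nearly balanced test set of this work (3592 normal and 3651 fiber-flaw images), Testacc therefore lies close to the mean of TP and TN.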

4.2. Experimental Results and Analysis

4.2.1. Comparison of Identification Results of Different Networks

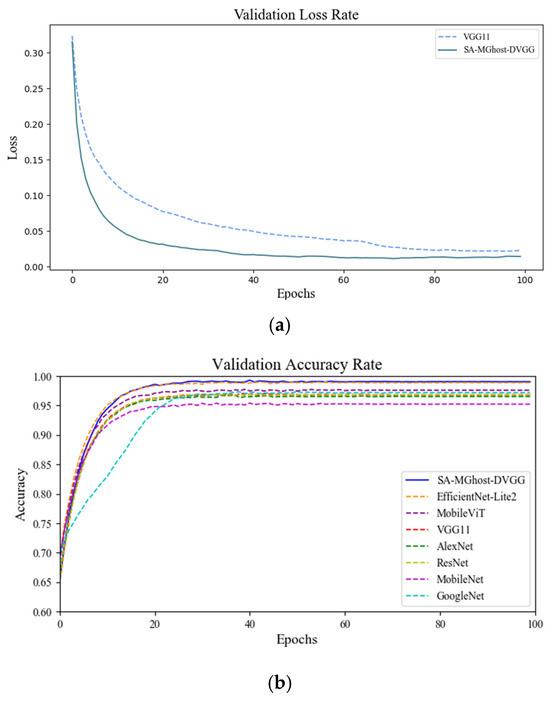

The validation loss curves in Figure 10a show that SA-MGhost-DVGG converges much faster than VGG11 during training. This may be partly attributed to the adaptive adjustment of the learning rate during training; in addition, the group normalization strategy used in the MGhost module is another factor that promotes faster convergence of the network.

Figure 10.

Comparison of validation set loss and accuracy. (a) Validation set loss. (b) Validation set accuracy.

To verify the performance of the proposed SA-MGhost-DVGG network, several classical convolutional networks were used for comparison, including VGG11, AlexNet, ResNet, MobileNet, and GoogLeNet. Furthermore, to provide a more comprehensive benchmark against advanced lightweight methods and backbone designs, EfficientNet-Lite2 and MobileViT were also compared. As can be seen from Figure 10b, SA-MGhost-DVGG achieves higher and more stable accuracy than the other networks.

Table 1 compares the validation set accuracy (Valacc), test set accuracy (Testacc), training time (TTime), and inference time (RTime) of a classical image feature counting method and the different networks under the same experimental conditions. Although the traditional image feature counting method is fast, its detection accuracy falls far short of industrial requirements. The deep learning networks are more accurate than the traditional method, and SA-MGhost-DVGG achieves a balance between network performance and detection speed in the pill detection task.

Table 1.

Experimental results of different networks.

As can be seen from the data in Table 1, compared to both classical networks and other lightweight architecture models, SA-MGhost-DVGG demonstrates advantages in high accuracy and fast detection speed for the pill surface defect detection task, enabling it to better meet practical industrial requirements.

4.2.2. Ablation Experiments

The networks that use the Ghost module and the MGhost module on the VGG11 baseline are called Ghost-VGG and MGhost-VGG, respectively. Embedding SE attention [27], BAM attention [28], CBAM attention [29], and SA into MGhost-VGG yields SE-MGhost-VGG, BAM-MGhost-VGG, CBAM-MGhost-VGG, and SA-MGhost-VGG, respectively. Two downsampling methods (DSMethod), MaxPool and DepSepConv, were compared in the ablation experiment: SA-MGhost-DVGG uses DepSepConv for downsampling, whereas the other networks use MaxPool. The Params, FLOPs, TN, TP, Testacc, and RTime of these models are shown in Table 2.

Table 2.

Results of ablation experiment.

As shown in Table 2, compared with VGG11, MGhost-VGG reduces Params by nearly 50% and FLOPs by nearly 93%, increases Testacc by 0.11%, and decreases RTime by 59.53%. MGhost-VGG also slightly outperforms Ghost-VGG in both Testacc and RTime. Table 2 further shows that adding each kind of attention mechanism improves the recognition ability of the network, and the network with SA, SA-MGhost-VGG, gives the best detection results. Compared with MGhost-VGG, SA-MGhost-VGG has slightly more Params and FLOPs, but its Testacc is 1.52% higher. Replacing max pooling with DepSepConv for downsampling improves Testacc by a further 0.42% in SA-MGhost-DVGG, whose flaw-pill detection rate (TN) reaches 99.08% with an RTime of 2.23 ms. Compared with VGG11, SA-MGhost-DVGG increases Testacc by 2.16% and reduces RTime by 56.61%. Although the RTime of SA-MGhost-DVGG is 0.15 ms longer than that of MGhost-VGG, its Testacc is 1.94% higher. In drug quality inspection, sacrificing this small amount of speed for higher accuracy is therefore worthwhile.
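The RTime percentages above can be cross-checked with a few lines of arithmetic. Starting from the 2.23 ms of SA-MGhost-DVGG and its 56.61% reduction relative to VGG11, the sketch back-calculates the implied VGG11 and MGhost-VGG inference times; these are derived estimates under that assumption, not values copied from Table 2, and `implied_baseline` is a hypothetical helper name.

```python
def implied_baseline(reduced: float, reduction_pct: float) -> float:
    """Recover a baseline value from a reduced value and its percentage reduction."""
    return reduced / (1.0 - reduction_pct / 100.0)


rtime_dvgg = 2.23                                   # ms, SA-MGhost-DVGG (reported)
rtime_vgg11 = implied_baseline(rtime_dvgg, 56.61)   # implied VGG11 RTime
rtime_mghost = rtime_vgg11 * (1.0 - 59.53 / 100.0)  # implied MGhost-VGG RTime

print(f"VGG11 ~ {rtime_vgg11:.2f} ms")                # VGG11 ~ 5.14 ms
print(f"MGhost-VGG ~ {rtime_mghost:.2f} ms")          # MGhost-VGG ~ 2.08 ms
print(f"gap ~ {rtime_dvgg - rtime_mghost:.2f} ms")    # gap ~ 0.15 ms
```

The derived gap of about 0.15 ms agrees with the speed difference between SA-MGhost-DVGG and MGhost-VGG stated in the text.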

5. Conclusions

To improve the detection of fiber-flaw pills, this work proposed a lightweight convolutional neural network, SA-MGhost-DVGG, which significantly improves both detection speed and accuracy. For a lightweight design, the proposed MGhost module replaces the convolution layers of VGG11, reducing Params and FLOPs while preserving the network's ability to learn features. For high performance, SA-MGhost-DVGG employs a mixed-channel spatial attention (SA) mechanism to strengthen learning from fiber regions and uses DepSepConv for dimensionality reduction while preserving feature extraction. The network thus achieves a good balance between Params and detection accuracy. Experimental results show that SA-MGhost-DVGG reaches an average detection accuracy of 99.01% with an average detection time of 2.23 ms per pill. The proposed network has strong practical value for flaw-pill detection and could also be extended to other surface defect detection tasks. A comprehensive evaluation of its deployment on resource-constrained microcomputing devices, together with the exploration of advanced model compression techniques, is an important direction for future work.

Author Contributions

Conceptualization, J.L. and H.W.; methodology, J.L. and H.W.; formal analysis, J.L. and H.L.; resources, H.W.; data curation, J.L. and Z.W.; writing–original draft preparation, J.L.; writing–review and editing, H.W. and Z.W.; project administration, H.W.; funding acquisition, H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62473283.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to laboratory policy and confidentiality agreements.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, Y.; Kuo, P.; Guo, J. Automatic industry PCB board DIP process defect detection system based on deep ensemble self-adaption method. IEEE Trans. Compon. Packag. Manuf. Technol. 2020, 11, 312–323.

- Mittal, S.; Dutta, M.K.; Issac, A. Non-destructive image processing based system for assessment of rice quality and defects for classification according to inferred commercial value. Measurement 2019, 148, 106969.

- Fan, S.; Li, J.; Zhang, Y.; Tian, X.; Wang, Q.; He, X.; Zhang, C.; Huang, W. On line detection of defective apples using computer vision system combined with deep learning methods. J. Food Eng. 2020, 286, 110102.

- Wu, H.; Lei, R.; Peng, Y. PCBNet: A lightweight convolutional neural network for defect inspection in surface mount technology. IEEE Trans. Instrum. Meas. 2022, 71, 3518314.

- Wang, C.; Shu, Q.; Wang, X.; Guo, B.; Liu, P.; Li, Q. A random forest classifier based on pixel comparison features for urban LiDAR data. ISPRS J. Photogramm. Remote Sens. 2019, 148, 75–86.

- Pan, Z.; Yang, J.; Wang, X.-E.; Wang, F.; Azim, I.; Wang, C. Image-based surface scratch detection on architectural glass panels using deep learning approach. Constr. Build. Mater. 2021, 282, 122717.

- Shojaeinasab, A.; Charter, T.; Jalayer, M.; Khadivi, M.; Ogunfowora, O.; Raiyani, N.; Yaghoubi, M.; Najjaran, H. Intelligent manufacturing execution systems: A systematic review. J. Manuf. Syst. 2022, 62, 503–522.

- Dai, W.; Mujeeb, A.; Erdt, M.; Sourin, A. Soldering defect detection in automatic optical inspection. Adv. Eng. Inform. 2020, 43, 101004.

- Cao, W.; Liu, Q.; He, Z. Review of pavement defect detection methods. IEEE Access 2020, 8, 14531–14544.

- Rajesh, A.; Jiji, G.W. Printed circuit board inspection using computer vision. Multimed. Tools Appl. 2023, 83, 16363–16375.

- Yu, J.; Yang, Y.; Zhang, H.; Sun, H.; Zhang, Z.; Xia, Z.; Zhu, J.; Dai, M.; Wen, H. Spectrum analysis enabled periodic feature reconstruction based automatic defect detection system for electroluminescence images of photovoltaic modules. Micromachines 2022, 13, 332.

- Perez, H.; Tah, J.H.M.; Mosavi, A. Deep learning for detecting building defects using convolutional neural networks. Sensors 2019, 19, 3556.

- Kahraman, Y.; Durmuşoglu, A. Classification of defective fabrics using capsule networks. Appl. Sci. 2022, 12, 5285.

- Ho, C.-C.; Hernandez, M.A.B.; Chen, Y.-F.; Lin, C.-J.; Chen, C.-S. Deep Residual Neural Network-Based Defect Detection on Complex Backgrounds. IEEE Trans. Instrum. Meas. 2022, 71, 5005210.

- Zheng, S.; Zhang, W.; Wang, L.; He, S. Special Shaped Softgel Inspection System Based on Machine Vision. In Proceedings of the 2015 IEEE 9th International Conference on Anti-Counterfeiting, Security, and Identification (ASID), Xiamen, China, 25–27 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 124–127.

- Mac, T.T.; Hung, N.T. Automated Pill Quality Inspection Using Deep Learning. Int. J. Mod. Phys. B 2021, 35, 2140050.

- Swastika, W.; Prilianti, K.; Stefanus, A.; Setiawan, H.; Arfianto, A.Z.; Santosa, A.W.B.; Rahmat, M.B.; Setiawan, E. Preliminary Study of Multi Convolution Neural Network-Based Model to Identify Pills Image Using Classification Rules. In Proceedings of the 2019 International Seminar on Intelligent Technology and Its Applications (ISITIA), Surabaya, Indonesia, 28–29 August 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 376–380.

- Ou, Y.-Y.; Tsai, A.-C.; Wang, J.-F.; Lin, J. Automatic Drug Pills Detection Based on Convolution Neural Network. In Proceedings of the 2018 International Conference on Orange Technologies (ICOT), Nusa Dua, Bali, Indonesia, 23–26 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4.

- Yeung, C.C.; Lam, K.M. Efficient Fused-Attention Model for Steel Surface Defect Detection. IEEE Trans. Instrum. Meas. 2022, 71, 2510011.

- Zhang, J.; Xu, J.; Zhu, L.; Zhang, K.; Liu, T.; Wang, D.; Wang, X. An improved MobileNet-SSD algorithm for automatic defect detection on vehicle body paint. Multimed. Tools Appl. 2020, 79, 23367–23385.

- Zhang, X.; Yang, K. Transformer Fault Diagnosis Method Based on MTF and GhostNet. Measurement 2025, 249, 117056.

- Long, Z.; Suyuan, W.; Zhongma, C.; Jiaqi, F.; Xiaoting, Y.; Wei, D. Lira-YOLO: A lightweight model for ship detection in radar images. J. Syst. Eng. Electron. 2020, 31, 950–956.

- Zhao, B.; Dai, M.; Li, P.; Xue, R.; Ma, X. Defect detection method for electric multiple units key components based on deep learning. IEEE Access 2020, 8, 136808–136818.

- Xiao, D.; Kang, Z.; Yu, H.; Wan, L. Research on belt foreign body detection method based on deep learning. Trans. Inst. Meas. Control 2022, 44, 2919–2927.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

- Zhang, Q.L.; Yang, Y.B. SA-Net: Shuffle attention for deep convolutional neural networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2235–2239.

- Mehrish, A.; Majumder, N.; Bharadwaj, R.; Mihalcea, R.; Poria, S. A review of deep learning techniques for speech processing. Inf. Fusion 2023, 99, 101869.

- Yaseen, M.U.; Nasralla, M.M.; Aslam, F.; Ali, S.S.; Khattak, S.B.A. A novel approach based on multi-level bottleneck attention modules using self-guided dropblock for person re-identification. IEEE Access 2022, 10, 123160–123176.

- Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).