Abstract

The validation of security protocols remains a complex and critical task in the cybersecurity landscape, often relying on labor-intensive testing or formal verification techniques with limited scalability. In this paper, we explore property-based testing (PBT) as a powerful yet underutilized methodology for the automated validation of security protocols. PBT enables the generation of large and diverse input spaces guided by declarative properties, making it well-suited to uncover subtle vulnerabilities in protocol logic, state transitions, and access control flows. We introduce the principles of PBT and demonstrate its applicability through selected use cases involving authentication mechanisms, cryptographic APIs, and session protocols. We further discuss integration strategies with existing security pipelines and highlight key challenges such as property specification, oracle design, and scalability. Finally, we outline future research directions aimed at bridging the gap between PBT and formal methods, with the goal of advancing the automation and reliability of secure system development.

1. Introduction

Ensuring the robustness and reliability of security protocols is essential to safeguarding digital communication and maintaining data integrity in today’s interconnected systems. Security protocols must defend against an increasingly complex and evolving threat landscape, yet their validation remains a notoriously difficult task. Traditional testing methodologies—such as unit, integration, and system-level tests—are often constrained by limited input coverage and manually curated test cases, leading to missed edge-case vulnerabilities. Even formal verification, while mathematically rigorous, can be prohibitively expensive and difficult to scale for modern distributed systems [1].

Property-based testing (PBT) emerges as a promising complementary approach. Unlike conventional example-based testing, PBT uses randomized and structured generators to produce large input spaces guided by declarative properties. These properties encode general expectations of system behavior (e.g., cryptographic round-trips, access control invariants), allowing the automated exploration of subtle flaws and protocol deviations [2]. Notably, PBT’s ability to shrink failing input enhances fault localization, making it highly effective in practical debugging scenarios [3].

Recent research has begun to investigate PBT’s application to security domains, including secure multi-party computation [4] and safety-critical embedded systems [5]. However, its use for validating security protocols—especially in the context of real-world authentication flows, encryption APIs, and protocol state machines—remains underdeveloped. Current tools like AVISPA [1] or Tamarin offer automated reasoning but often lack integration with dynamic test environments or suffer from usability and scalability limitations in continuous deployment settings.

This paper positions property-based testing as a practical and scalable technique for automated validation of security protocols. Our contribution is twofold: (1) we demonstrate how PBT can be effectively applied to protocol-level security assurance through expressive properties and modern test frameworks, and (2) we contextualize PBT within security pipelines such as CI/CD workflows, addressing common challenges, including oracle design, performance overhead, and input modeling.

The main contributions of this paper are as follows:

- We provide a formal overview of property-based testing and its theoretical underpinnings, emphasizing its suitability for complex security-critical systems.

- We analyze the specific strengths of PBT in the cybersecurity context, particularly in input space exploration, fuzzing, and protocol validation.

- We present illustrative application scenarios and case studies, including authentication flows and cryptographic APIs, highlighting how PBT can uncover protocol design flaws.

- We introduce a real-world case study applying PBT to OAuth 2.0 and compare PBT to fuzzing and static analysis in terms of effectiveness and practicality.

- We discuss key challenges such as oracle design, scalability, and integration with CI/CD pipelines, offering concrete suggestions and implementation strategies.

- We propose future directions to extend the reach of PBT, including hybridization with formal verification and intelligent test generation through ML.

The remainder of this paper is structured as follows. Section 2 presents background information on testing methodologies in cybersecurity, followed by definitions and foundations of property-based testing and the tools commonly used. Section 3 discusses the rationale for adopting PBT in security contexts, focusing on protocol fuzzing, session validation, and modeling of cryptographic properties. Section 4 showcases concrete case studies and theoretical walkthroughs in domains such as TLS, OAuth, RBAC, and cryptographic APIs. Section 5 addresses the integration of PBT into existing cybersecurity toolchains and practices, including formal analysis and CI-based testing workflows. Section 6 identifies the main challenges and limitations in applying PBT to security protocol validation. Section 7 outlines potential future directions, such as ML-based test generation, property specification standards, and synergy with formal methods. Finally, Section 8 concludes the paper and reflects on the broader implications of using PBT for securing digital systems.

2. Background

Ensuring the security and reliability of software systems remains a cornerstone of cybersecurity assurance. A diverse range of testing methodologies has been developed to identify vulnerabilities, verify behavioral correctness, and ensure resilience under adversarial conditions. However, many of these approaches struggle to scale in the face of complex input domains, concurrent interactions, and non-deterministic behaviors. This section outlines conventional testing strategies and introduces property-based testing (PBT) as a complementary paradigm, particularly well-suited to protocol-level security validation.

2.1. Overview of Testing Methodologies in Cybersecurity

Cybersecurity testing employs a variety of strategies to detect implementation flaws and protocol weaknesses. Common approaches include:

- Unit Testing—Testing individual components in isolation to verify their correctness.

- Integration Testing—Verifying the interaction between components.

- System Testing—Ensuring the system, as a whole, meets functional requirements.

- Penetration Testing—Simulating real-world attacks to evaluate security defenses.

- Formal Verification—Uses mathematical proof to verify the correctness of system models or implementations.

While foundational, these methods often rely on static, handcrafted test cases and exhibit limited coverage of unforeseen execution paths. Formal methods, though precise, may demand significant effort in modeling, proof generation, and tool proficiency [1]. These challenges have led to growing interest in automated testing techniques that can systematically and efficiently explore broad input spaces. For example, efforts to formally model and verify protocols like TLS have highlighted the challenges of managing state complexity and real-world deviations [6]. Recent reviews of vulnerability detection methodologies emphasize the fragmentation and tool-specific limitations in current cybersecurity testing workflows [7].

Traditional fuzzing tools often fail to explore meaningful edge cases or maintain valid protocol states [8], which limits their effectiveness in detecting deep logic flaws compared to property-guided generation in PBT.

A systematic review identifies key challenges in adopting DevSecOps, such as tool integration and cultural shifts, underscoring the need for adaptable security testing approaches like PBT [9].

Despite advances in security tooling—from fuzzers and penetration testing frameworks to static analyzers and model checkers—existing solutions often lack semantic awareness, suffer from input validity issues, or require extensive manual setup [7]. Formal verification approaches like those used in TLS modeling [6] provide strong guarantees but remain difficult to scale or adapt to implementation nuances. PBT bridges these gaps by enabling expressive, reusable properties that guide automated test generation across meaningful input spaces.

2.2. Introduction to Property-Based Testing

Property-based testing is a technique wherein system behavior is specified through general logical assertions—or properties—rather than fixed input–output pairs. These properties describe expected invariants, behaviors, or constraints that must hold across a wide range of possible inputs. An overview of existing software security models and frameworks reveals limitations in addressing dynamic security challenges, highlighting the potential of PBT to fill these gaps [10].

PBT operates on two foundational principles:

- Automated Input Generation: Test inputs are randomly or systematically generated using data generators.

- Property Checking: A property P(x) is evaluated for each generated input x. If a property fails, the failing input is shrunk to a minimal counterexample for debugging.

This method is particularly well-suited to domains like protocol validation, cryptography, and distributed systems, where manual test construction is infeasible and edge cases are critical. PBT has been successfully used to uncover critical bugs in telecommunications systems, financial software, and, increasingly, security-sensitive domains [2,3].

Properties can be categorized into several types:

- Invariants:

- Preconditions and Postconditions:

This formalizes the notion that when a precondition holds, the postcondition must be satisfied after applying the function f, as originally introduced in axiomatic form by Hoare [11].

An example of a cryptographic API might be:

Such properties are expressive enough to capture the behavioral expectations of secure systems, even in the absence of precise oracles.

3. Why Property-Based Testing for Security?

Ensuring the robustness of software systems against security threats requires exhaustive validation strategies that go beyond traditional, example-based testing. Property-based testing offers a generative and formalism-lite approach to systematically test software against general behavioral properties across diverse input spaces. This section explores why PBT is particularly well-suited to cybersecurity applications.

3.1. Strengths of Property-Based Testing

PBT introduces several core advantages that make it a powerful tool for software verification, especially in security-critical contexts:

- Input Space Exploration: Instead of relying on manually curated examples, PBT generates large and diverse sets of test inputs to check whether the system properties hold universally. This enables the discovery of edge cases and vulnerabilities that would likely escape standard test suites [2,3].

- Counterexample Shrinking: When a property fails, modern PBT tools automatically minimize the failing test case to its simplest form—a process called shrinking. This significantly reduces the debugging effort by isolating the core cause of the fault [2,12,13].

- Generative Testing with Constraints: Modern PBT frameworks support constrained and structured input generation. This is especially important for security, where the inputs must conform to specific formats (e.g., valid certificates, encrypted payloads, or session tokens) [12].

- Incremental Formalization: PBT enables developers to encode behaviors as mathematical properties, such as idempotence or state transitions, without requiring full formal models or theorem provers, effectively bridging the gap between informal testing and formal verification [3].

3.2. Security-Specific Motivations for Property-Based Testing

The strengths of PBT are especially relevant to security because they align closely with the challenges of validating secure systems:

- Protocol Fuzzing with Structured Input: Traditional fuzzers use purely random input, which often leads to invalid states. PBT, in contrast, can generate structured fuzzing—i.e., input that is syntactically or semantically valid but still randomized. This increases code coverage while preserving meaningfulness [7], [14]. For example, property-based fuzzing has been used to validate cryptographic code even in the absence of oracles by using metamorphic properties [5].

- State Machines and Session Validation: Many security protocols (e.g., TLS, OAuth, and SSH) can be modeled as finite state machines. PBT can generate sequences of actions to verify that state transitions comply with protocol specifications. This enables the detection of logic flaws such as unauthorized transitions, inconsistent session handling, or privilege escalations [3].

- Cryptographic Assumptions as Properties: Cryptographic correctness properties can be encoded in PBT, such as:

Verifying this property under various random inputs helps ensure the correctness of symmetric encryption APIs.

This can be formalized as:

where is the message space, and is the key space.

We can generalize this pattern to express metamorphic or oracle-free properties as:

where denotes the semantic equivalence (e.g., same logical input after encoding or padding) and denotes the functional similarity in output (e.g., structurally equivalent or within a tolerated range).

These formulations are especially useful when the expected outputs cannot be explicitly defined, such as in cryptographic noise, randomized encodings, or side-channel resistant outputs.

PBT has recently been applied to secure multi-party computation (MPC) protocols, identifying vulnerabilities through violations of input–output consistency and randomness assumptions [4].

- Flaw Discovery Without Oracles: One of the most compelling use cases of PBT in security is testing when expected outputs are hard to specify (e.g., for hash functions or cryptographic noise). PBT allows the specification of metamorphic or relational properties rather than exact outputs, which is a known technique for oracle-less validation [3,5].

- Real-World Application Example (Preview): In Section 4.8, we demonstrate these principles in practice by applying PBT to an OAuth 2.0 authorization flow. Using Hypothesis and structured test generators, we uncovered protocol design flaws and validated property enforcement without relying on fixed oracle outputs.

This approach echoes earlier advocacy for property-based testing in security assurance, where oracles may be absent but relational properties still enable validation [5]. Prior studies have demonstrated the difficulty of validating protocol state machines like TLS, with fuzzing often revealing undocumented transitions and implementation inconsistencies [15]. Metamorphic testing provides a practical framework for validating software in the absence of a ground truth oracle [16] and has a strong conceptual alignment with property-based security validation. Resources like The Fuzzing Book provide reusable patterns and educational scaffolding for developers defining properties in testing environments, aligning well with ongoing efforts to standardize security property templates [17].

By systematically expressing and testing these properties across wide input spaces, PBT provides a scalable and rigorous technique to uncover logic flaws and implementation bugs that are difficult to detect otherwise.

4. Case Studies/Application Domains

Property-based testing (PBT) offers a versatile framework for validating security properties across a range of use cases, from foundational cryptographic functions to full-scale authentication protocols. This section illustrates the application of PBT in both synthetic and real-world scenarios, demonstrating its capacity to uncover design flaws, semantic inconsistencies, and logic errors that are often missed by traditional tests.

4.1. Functional Primitives and Property Fidelity

At the foundational level, cryptographic primitives such as encryption and decryption functions are essential for ensuring data confidentiality. PBT can validate these operations using formal properties, such as:

By systematically generating diverse plaintext-key pairs, PBT tests this property over large input spaces, ensuring the correctness of the implementation. Tools like QuickCheck or Hypothesis are well-suited for this task, allowing for fuzzing-style input generation with property-oriented output checking.

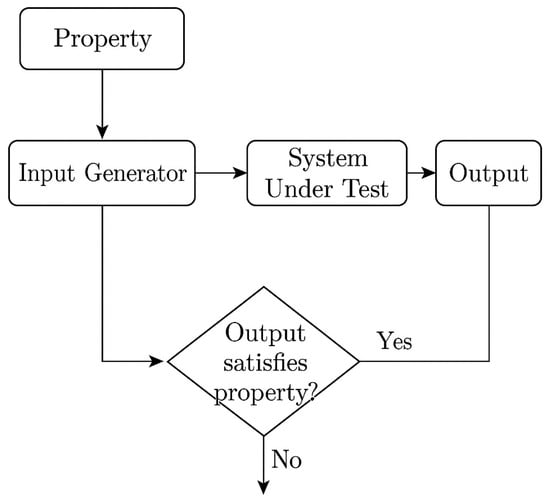

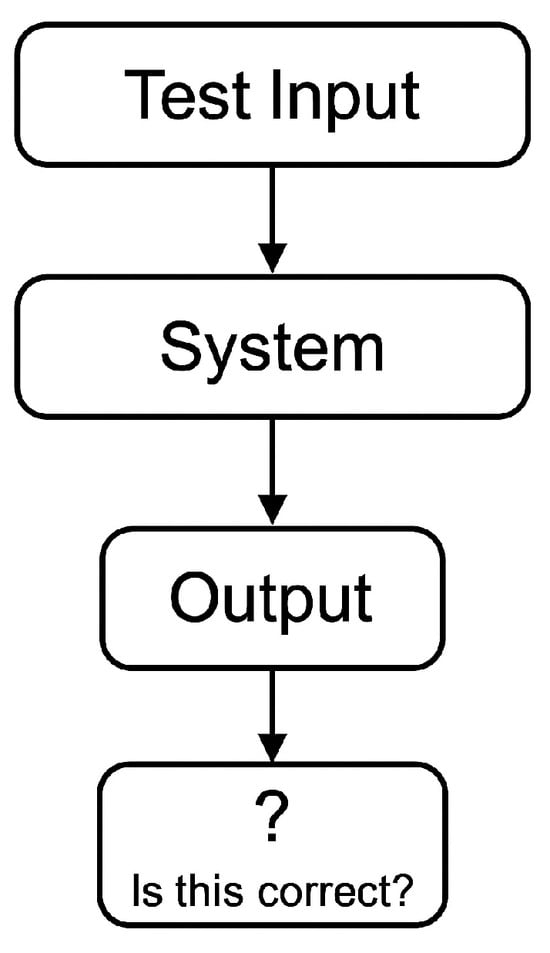

Figure 1 illustrates a generic PBT workflow that highlights how properties, generators, and shrinking interact in the testing process. As shown in Figure 1, the “No” branch—activated when the system output violates the property—initiates a shrinking loop to simplify the failing input before reporting. While the “Yes” branch proceeds silently, the “No” path results in output generation in the form of a minimized counterexample and diagnostic log. The diagram’s feedback loop from “Output satisfies property?” to “Input Generator” reflects this counterexample reduction process.

Figure 1.

Generic workflow of property-based testing (PBT). A defined property guides the input generator, which creates test cases for the system under test. If the output violates the property, a counterexample is reported and passed through a shrinking process to isolate a minimal failure-inducing input. The “No” path represents this feedback loop for refining failing inputs, while the “Yes” path indicates that the test passed successfully.

4.2. Secure Flows and Stateful Protocols (OAuth Preview)

Modern security architectures rely heavily on protocol flows for authentication and authorization. OAuth 2.0, for example, involves multiple steps—including token generation, state validation, and session handling—all of which can be tested using PBT.

PBT can be injected at various stages of the flow, such as:

- Testing state transitions (e.g., token issuance only after authorization).

- Validating token constraints (e.g., expiry, reuse, and integrity).

- Detecting invalid access paths (e.g., skipping login steps).

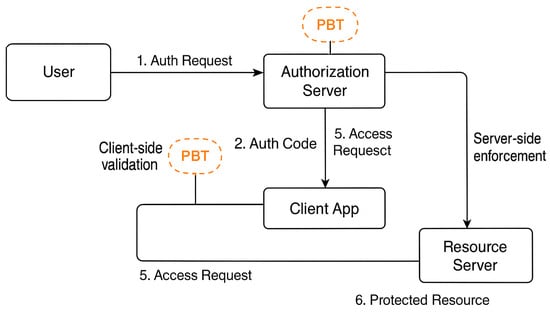

A structured view of these flows and injection points is shown in Figure 2, where orange “PBT” callouts indicate where generative tests can be inserted. Each PBT marker in Figure 2 corresponds to a different validation target. For example, testing the client app ensures that tokens are used correctly and only after proper authorization, whereas testing at the resource server level focuses on whether the server correctly enforces access policies and responds appropriately to invalid or expired tokens.

Figure 2.

OAuth 2.0 authorization code flow annotated with PBT injection points. The PBT instance between the client app and access request validates internal client behavior, including token integrity checks and adherence to the expected sequence (e.g., no token reuse or premature access attempts). The PBT instance between the access request and resource server tests the resource server’s enforcement of access control policies, such as token expiration, revocation, and role-based permissions. These testing points ensure both client-side logic correctness and server-side policy enforcement within the authentication flow.

4.3. Access Control Verification via Role-Based Policies

Role-based access control (RBAC) systems enforce permissions based on roles, but misconfigurations can create privilege escalation or denial of service. PBT can automatically generate (user, role, resource) combinations to test whether the system permits or denies access according to expected policies [18].

For example, a property might be:

“A user without the ‘admin’ role should never gain write-access to protected configuration files”.

Using randomized combinations of users and roles, PBT ensures that the access control logic aligns with the policy specification.

4.4. TLS Handshake State Validation

TLS is a layered protocol with strict message sequencing requirements. Using property-based state traces, we enforce sequencing rules like [6]:

Hypothesis was used to uncover violations in a simulated handshake model. Test traces were reduced to highlight failure-inducing transitions such as [Finished, ServerHello].

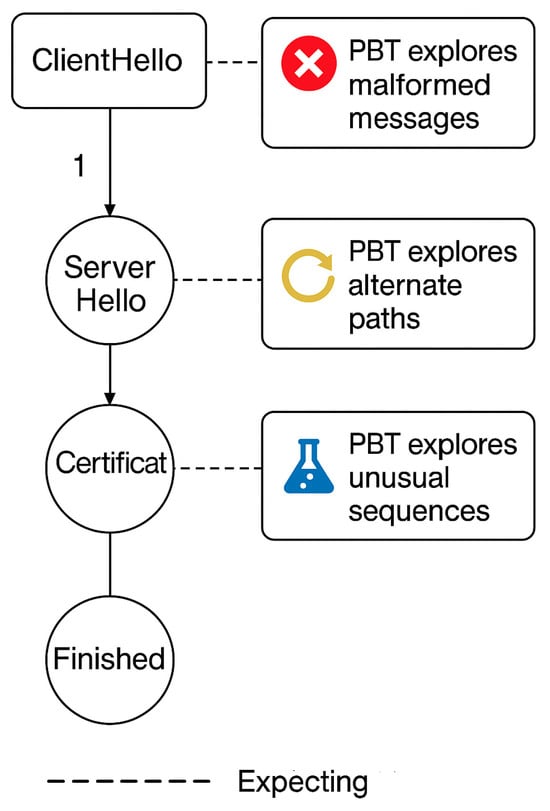

Figure 3 illustrates the core TLS handshake steps and how PBT can explore alternate paths, malformed inputs, and unusual transitions. In the standard TLS handshake, messages must follow a strict order. However, implementation bugs or malicious inputs may lead to protocol deviations. PBT helps to detect these by exploring malformed inputs, alternate message sequences, and unexpected transitions. In Figure 3, the vertical flow depicts the valid protocol path, while annotated PBT branches show areas where generative testing uncovers deviations or violations.

Figure 3.

State space exploration in a TLS handshake model with property-based testing (PBT). The vertical path (ClientHello → ServerHello → Certificate → Finished) represents the expected handshake sequence. The side branches, marked by PBT callouts, indicate how PBT systematically explores non-standard behaviors, such as: “Malformed or corrupted ClientHello messages”, “Alternate sequencing paths through ServerHello”, and “Unusual transitions that bypass expected steps (e.g., skipping Certificate). These PBT injections aim to uncover logic errors, improper state transitions, or security flaws in protocol implementations”.

4.5. Case Study: Buggy Encryption Interface

Using Hypothesis, we evaluated a simplified symmetric encryption interface with a deliberately introduced off-by-one bug. The property (see Section 4.1) failed, and Hypothesis shrank the input to a one-byte payload.

Table 1 shows how PBT detected and minimized the fault in under two seconds. This case illustrates the practicality of embedding property checks even in low-level APIs. See Appendix A for the corresponding test script and output log.

Table 1.

Summary of property-based test results using Hypothesis to evaluate a buggy symmetric encryption API. The system successfully detected a semantic error and minimized the failing input via test case shrinking.

4.6. Case Study: TLS Transition Validation

We model the TLS handshake as a labeled transition system:

- S: a set of protocol states (e.g., Start, ClientHello, ServerHello, Finished)

- A: a set of actions (e.g., sending/receiving messages)

- δ: S × A → S: a transition function

The test validated a state property ensuring no premature Finished message. Violations were identified and minimized to short sequences, demonstrating PBT’s ability to catch logic flaws in state machines. See Appendix B for the full test implementation and sample output.

Table 2 summarizes the results, including the detection time and shrinking steps.

Table 2.

Property-based testing results for a simplified TLS handshake. The test verifies that no Finished message occurs before a ServerHello, with Hypothesis detecting and shrinking a counterexample trace that violated this constraint.

4.7. Case Study: OAuth Flow Validation

We tested a simplified OAuth sequence with the property:

A logic flaw allowed resource access without valid tokens. Hypothesis caught the issue and minimized the trace to:

[‘Resource’, ‘AccessToken’]

Table 3 captures test performance and highlights the simplicity of reproducing the protocol violation. See Appendix C for the Hypothesis-based test definition and output trace.

Table 3.

Results of property-based testing on a simplified OAuth authorization flow. The property enforced a valid issuance of access tokens and restricted resource access to authenticated sessions. The test identified a minimal invalid trace violating this protocol.

4.8. Real-World Implementation: OAuth 2.0 via Requests-OAuthlib

To demonstrate feasibility in a live environment, we extended our previous example using the requests-oauthlib Python 3.10.0 library. PBT properties were defined to enforce

no token issuance before authorization.

- No access to protected endpoints without a token.

- Proper expiration and refresh behavior.

Hypothesis strategies were customized to simulate valid and invalid flows. Despite using real network interactions and third-party code, the tool successfully detected misuse patterns and minimized cases for debugging.

This experiment highlights how PBT can be embedded into live applications for security validation beyond isolated models.

4.9. Comparative Evaluation of PBT vs. Other Techniques

To substantiate the practical effectiveness of property-based testing in cybersecurity contexts, we conducted a comparative evaluation against traditional techniques, such as fuzzing and static analysis, across multiple scenarios. Table 4 summarizes the detection performance across PBT, fuzzing (Radamsa), and static analysis. The fuzzing results reflect common behaviors observed with Radamsa-style mutation fuzzes [8,14], including high false positive rates and limited semantic validation in protocol workflows [15]. Static analysis performance was derived from the typical results reported in tools like CodeQL [19], with context drawn from recent vulnerability detection surveys [7].

Table 4.

Comparative evaluation of PBT, fuzzing, and static analysis across common security validation scenarios. PBT consistently demonstrated high detection rates and low false positives with competitive detection times.

5. Tooling and Integration

Integrating property-based testing into cybersecurity pipelines significantly enhances the robustness and resilience of software systems. This section explores mature PBT frameworks, their integration into CI/CD pipelines, and how they synergize with other verification techniques like static analysis and formal verification.

5.1. Overview of Property-Based Testing Tools

As outlined in Section 2, modern PBT frameworks provide expressive capabilities for testing behavioral properties, combining randomized input generation with automated shrinking. Here, we focus on three widely adopted tools and their specific strengths in security protocol validation:

- QuickCheck (Haskell): The original PBT framework, QuickCheck, pioneered input generation and shrinking for verifying functional correctness. It is particularly valued for its algebraic abstractions, making it ideal for protocol logic modeling and data structure invariants [20].

- Hypothesis (Python): Designed for ease of use, Hypothesis integrates seamlessly with Pytest and supports property-based testing in both unit and stateful scenarios. Its support for adaptive shrinking, custom strategies, and integration into CI/CD workflows makes it a strong choice for the rapid validation of web protocols, REST APIs, and cryptographic interfaces.

- PropEr (Erlang): Built for concurrency, PropEr excels in testing distributed systems and telecommunication protocols. It supports symbolic and state-based models, and its ability to simulate message passing and interleaved execution paths is especially relevant for secure networked applications.

Each of these tools contributes distinct strengths depending on the system’s domain and testing needs. Developers can select tools that align with their application stack, level of concurrency, and the nature of the properties to be validated.

To support the practical adoption of property-based testing, Table 5 summarizes the key characteristics and strengths of several mature PBT tools currently used in industry and research.

Table 5.

Overview of three widely-used property-based testing frameworks. While QuickCheck provides foundational abstractions in Haskell, Hypothesis introduces modern adaptive shrinking in Python, and PropEr supports concurrent state-based systems in Erlang, making each tool suitable for different types of secure systems.

5.2. Integration into Cybersecurity Pipelines

Incorporating PBT into cybersecurity pipelines involves embedding automated property-based tests within the software development lifecycle. This integration ensures that security properties are continuously validated against a wide range of inputs, enhancing the detection of vulnerabilities. For instance, integrating PBT into Continuous Integration/Continuous Deployment (CI/CD) pipelines allows for the automatic execution of property-based tests with each code commit, providing immediate feedback on potential security issues.

A practical implementation strategy includes:

- Defining Security Properties: Clearly articulate the security invariants that the system must uphold [2,21].

- Automated Test Generation: Utilize PBT tools to generate diverse test cases that challenge these properties [2].

- Continuous Monitoring: Integrate these tests into the CI/CD pipeline to ensure ongoing validation throughout the development process [2,21].

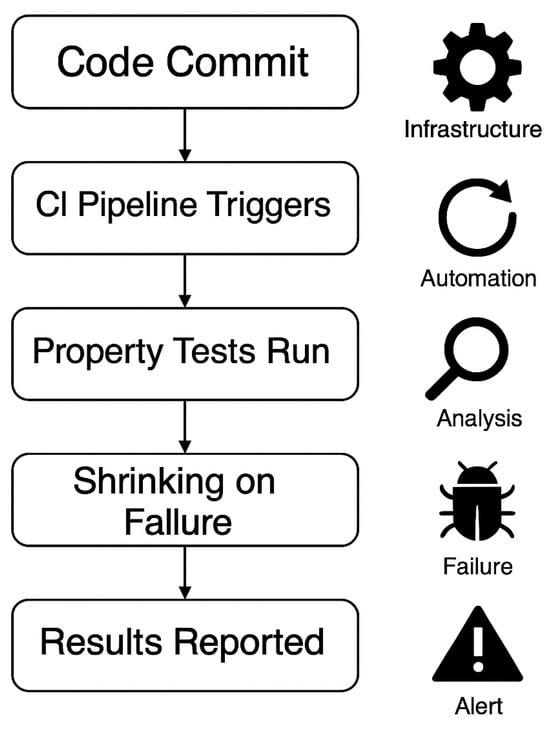

This approach aligns with DevSecOps practices, embedding security testing into the development workflow and fostering a proactive security posture. The workflow shown in Figure 4 illustrates how property-based testing can be seamlessly integrated into a secure CI/CD pipeline alongside static analysis and conventional unit testing. In a live CI/CD environment, property definitions are typically co-located with the source code in a tests/properties/ or spec/ directory, versioned alongside application logic. Tools like Hypothesis (Python) or QuickCheck (Haskell) are integrated into standard test runners (e.g., pytest, mix, stack) and are automatically invoked during pipeline execution. When a test fails, the property violation is logged, and the input that caused the failure is shrunk to a minimal counterexample. These results are surfaced in CI dashboards (e.g., GitHub Actions logs, GitLab CI reports) and are optionally attached to pull requests via test coverage badges or inline comments. This empowers developers to immediately reproduce, debug, and fix the issue before merging. Integrations can also tag failing properties by severity or test scope to support prioritization during remediation.

Figure 4.

Integration of property-based testing into a CI/CD pipeline. On each code commit, the CI system triggers automated PBT runs alongside unit and static analysis tests. Failing properties are automatically shrunk, and the results are reported back to developers via logs, dashboards, or code review systems. Each stage supports a feedback loop that enhances security verification in real-time development workflows.

Notably, principles underlying PBT have begun to influence industrial-scale security automation tools. For example, Google’s OSS-Fuzz utilizes high-throughput input mutation and crash analysis that mirrors generative testing concepts. Similarly, GitHub’s CodeQL workflows rely on declarative rule-based patterns that align philosophically with property checking [2,19]. A comprehensive review of DevSecOps practices and tooling highlights the importance of integrating security measures within CI/CD pipelines to enhance software security [22].

Though not always formal PBT tools, these systems show the growing influence of PBT-style strategies in security automation.

5.3. Complementing Formal Verification and Static Analysis

While formal verification provides strong guarantees, and static analysis detects code-level issues, both can be time-consuming and difficult to scale. PBT complements these methods by enabling rapid automated validation of high-level behavioral properties:

- When to use PBT: Properties involving randomized input spaces, interaction sequences, or protocol behaviors.

- When to use Formal Methods: Cryptographic proofs, type systems, logic properties.

- Hybrid Use: Properties proven formally can also be tested empirically via PBT under real-world conditions.

This layered verification strategy increases confidence in system correctness and facilitates a faster response to emerging threats.

6. Challenges and Limitations

While property-based testing (PBT) is a powerful methodology for validating software correctness—especially in security-critical systems—its application presents several open challenges. This section discusses the practical limitations encountered when deploying PBT in cybersecurity contexts and highlights areas where ongoing research and tooling improvements are needed.

6.1. Difficulty in Expressing Meaningful Security Properties

Defining precise and comprehensive security properties suitable for PBT can be complex. Unlike functional correctness, which often involves clear input–output relationships, security properties may encompass abstract concepts such as confidentiality, integrity, and availability. Translating these high-level security goals into formal, testable properties requires deep domain expertise and a thorough understanding of potential threat models.

Moreover, developers may struggle to identify the properties that effectively capture the nuanced behaviors of secure systems, leading to gaps in test coverage and undetected vulnerabilities. Goldstein et al. [2] found that even experienced teams struggled with formalizing behaviorally rich properties for authentication protocols, cryptographic APIs, or session flows.

Opportunities for addressing this challenge include:

- Developing reusable libraries of security property templates to guide test authors and reduce duplication across projects.

- Integrating property recommendation features into IDEs and testing frameworks, helping developers define meaningful tests as they code.

- Exploring the use of natural language processing (NLP) to translate documentation or specifications into candidate properties, easing the property definition burden for non-experts.

6.2. The Oracle Problem in Security Testing

The oracle problem arises when a test cannot easily determine whether the system’s output is correct. This is especially problematic in security, where failures are often silent (e.g., timing side channels) or expected outputs are undefined (e.g., randomized encryption).

One practical solution is metamorphic testing, where correctness is inferred by verifying consistency across related executions. For example, two logically equivalent inputs (e.g., payloads with padded bytes) should produce functionally equivalent outputs:

This approach avoids the need for exact output oracles, shifting focus to relational or invariant-based validation.

The conceptual structure of the oracle problem is illustrated in Figure 5, highlighting the uncertainty inherent in validating system behavior when an expected output is undefined or uncomputable.

Figure 5.

Visualization of the oracle problem in property-based testing. When testing security-related functions, the absence of a reference output makes it difficult to determine whether the system’s behavior is correct, necessitating the use of metamorphic or relational properties as surrogate oracles.

6.3. Performance Considerations in Large-Scale Systems

PBT can become computationally expensive when applied to large or distributed systems. Generating vast numbers of test inputs, simulating state transitions, and shrinking failures can impact the CI/CD build times, especially under resource constraints.

Developers must balance test depth and breadth with runtime constraints by:

- Prioritizing property importance and test case likelihood.

- Parallelizing test execution (where supported).

- Applying resource caps during CI runs.

Claessen and Hughes note that different implementations of the same protocol may exhibit performance variance due to asynchrony, further complicating scaling and reproducibility [23].

6.4. Addressing Non-Determinism, Side Channels, and Concurrency

Security-sensitive systems often exhibit non-deterministic behaviors, involve concurrent execution, and may be vulnerable to side-channel leakage. PBT introduces unique challenges in these settings:

- Concurrency: Testing all possible interleavings of thread or message interactions is intractable. PBT may expose some race conditions but cannot exhaustively traverse the state tree.

- Side Channels: Outputs like timing or cache access patterns cannot be captured through standard property definitions.

- Randomness: Cryptographic operations often introduce probabilistic behaviors that require carefully designed metamorphic relations.

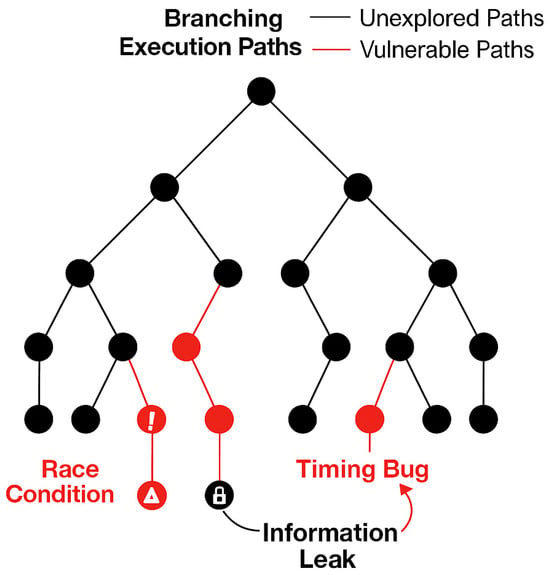

Figure 6 illustrates the state space explosion caused by concurrent execution paths, emphasizing the challenge of capturing critical interleavings and non-deterministic behaviors using generative testing alone.

Figure 6.

Concurrency and non-determinism in secure systems lead to a branching execution space, where only some paths may reveal race conditions, timing bugs, or information leaks. Exhaustively covering this space via property-based testing remains a major open challenge.

In summary, while PBT empowers developers to write expressive and automated tests, its full realization in cybersecurity requires further advancements in tooling, guidance, and hybrid techniques. Future work should explore how PBT can be extended to:

- Interoperate with fuzzers and formal models.

- Capture non-functional properties like timing or entropy.

- Scale across distributed and asynchronous architectures.

As of today, PBT complements—but does not replace—other approaches and works best when deployed as part of a multi-layered verification strategy.

7. Future Directions

Property-based testing has proven to be a robust and adaptable methodology for validating the behavioral correctness of software systems, particularly those operating in security-critical contexts. As the threat landscape and software complexity continue to evolve, PBT must also mature in its tooling, integration, and applicability. This section outlines five key directions that promise to extend the reach, automation, and usability of PBT in cybersecurity.

7.1. Integration with Formal Methods and Property Definition Guidance

Formal verification provides strong mathematical guarantees but often involves substantial modeling overheads and limited scalability, particularly in large or dynamic systems. Property-based testing (PBT), on the other hand, excels at empirically exploring system behavior across diverse inputs, offering a flexible and accessible approach to behavioral validation. While PBT lacks the deductive assurance of formal methods, it compensates with practical scalability, ease of integration, and support for automated test generation.

A promising direction lies in bridging the two techniques: using PBT to validate properties derived from formal specifications or using formal verification to constrain and guide the design of PBT properties [3]. This synergy enables the iterative development of provable, testable systems, especially valuable in safety- and security-critical applications such as embedded systems and cryptographic protocols.

At the same time, defining clear and effective security properties for use in PBT remains a known challenge. Unlike functional correctness, where inputs and outputs are explicitly defined, security properties often capture more abstract concepts like authorization integrity, confidentiality preservation, or state machine transitions. To support developers in writing meaningful PBT properties, the following practical guidelines can be adopted:

- Decompose high-level security goals into testable behavioral rules. For example, a goal like “access should only be granted after login” can be encoded as a state-based sequence property.

- Use preconditions and postconditions for stateful operations. In authentication flows, one might define that a session token is only valid if issued after a valid credential exchange.

- Adopt metamorphic property patterns where expected outputs are unknown. For cryptographic APIs, one might define that

- Leverage reusable patterns from the security domain. Role-based access control (RBAC), session validation, and token lifecycle checks can often be captured using templates such as:

- ○

- “User without role R should not access resource X”.

- ○

- “Token T must not be accepted after expiration timestamp”.

Emerging efforts in the research and open-source communities are also beginning to standardize libraries of reusable properties and explore IDE-integrated property suggestions powered by machine learning and natural language processing. These initiatives hold promise for significantly reducing the effort required to adopt PBT in real-world security contexts.

7.2. Intelligent Test Case Generation

PBT relies heavily on the quality of its input generators. Advances in machine learning and natural language processing (NLP) offer new opportunities for automatically synthesizing generators from codebases, documentation, and formal specifications.

Recent work has shown the feasibility of learning patterns and generating valid edge-case inputs for fuzzers [24]. Applying these techniques to PBT can enable:

- Broader input coverage.

- Domain-specific generation strategies.

- Reduced manual burden on test developers.

This convergence could significantly boost test quality and speed.

A comprehensive review by Huang et al. explores the integration of ML techniques into fuzz testing, highlighting the potential for significant improvements in automated vulnerability detection [25].

7.3. Standardization of Properties and Frameworks

The lack of standardized property libraries remains a barrier to adoption. Just as unit testing benefits from reusable assertions and fixtures, PBT can accelerate adoption through:

- Domain-specific property repositories (e.g., for cryptographic APIs, auth flows).

- Unified property definition languages.

- Cross-tool compatibility standards.

These developments would promote collaboration, consistency, and ease of integration across tools and ecosystems [2].

7.4. Application in Security Testing

PBT is uniquely positioned to enhance vulnerability discovery, especially in the gray areas between formal proof and black-box testing. It has the ability to:

- Define expressive security properties.

- Simulate adversarial inputs.

- Detect logic flaws without an oracle.

This makes it especially suitable for protocol validation, cryptographic APIs, and stateful access control systems [26]. Expanding the role of PBT in vulnerability assessments, red-team testing, and CI-integrated security checks offers high-impact potential.

7.5. Education and Tool Support

A broader adoption of PBT requires better educational scaffolding and developer support. Suggested improvements include:

- IDE plugins for property suggestions.

- Step-by-step tutorials for writing PBTs in security contexts.

- Visualization tools for test-trace inspection and shrinking paths.

Such tools can lower the barrier to entry and make PBT accessible not only to researchers but also to everyday software engineers and DevOps professionals [2,27,28].

Table 6 provides a structured overview of the main future directions for PBT in cybersecurity, summarizing their focus, expected benefits, and supporting literature.

Table 6.

Summary of key future directions for enhancing PBT in cybersecurity. Each category emphasizes different aspects of research and practice, from formal integration to usability and adoption, and is supported by recent contributions from the literature.

In summary, the future of PBT in cybersecurity lies in its continued evolution—toward deeper integration with verification frameworks, smarter test generation, better developer support, and institutional standardization. As these developments unfold, PBT has the potential to become a foundational component in secure software engineering, bridging the gap between informal testing and formal assurance.

8. Conclusions

This work has explored the application of property-based testing (PBT) as a practical, scalable, and principled approach for verifying security-critical behaviors in software systems. By shifting the testing paradigm from example-based validation to generative property checking, PBT enables the systematic exploration of program behavior across large and diverse input spaces.

We demonstrated that PBT is particularly well-suited for the cybersecurity domain, where conventional test strategies often struggle to uncover protocol logic flaws, subtle state violations, or misconfigurations. PBT’s ability to encode security invariants, preconditions, postconditions, and state transition properties makes it a natural complement to formal verification and static analysis tools. Its support for shrinking, structured fuzzing, and metamorphic validation further enhances its effectiveness in identifying edge-case failures and simplifying debugging.

Through a series of case studies—ranging from cryptographic APIs and TLS handshakes to OAuth flows and access control models—we illustrated how PBT can uncover semantic errors and logic flaws in both synthetic and real-world settings. We also discussed its seamless integration into modern CI/CD pipelines, highlighting its role in DevSecOps and continuous security validation.

At the same time, we acknowledged several open challenges, including the oracle problem, performance considerations in large-scale systems, and the difficulty of expressing meaningful security properties. To address these, we outlined future directions such as:

- Hybrid verification strategies that combine PBT with formal methods.

- Intelligent test generation via ML/NLP.

- Property libraries and standardization for security protocols.

- Improved education and developer support.

PBT is not a silver bullet, but it is a powerful tool in the modern software security toolkit. As software systems grow in complexity and criticality, we believe PBT will play an increasingly important role in securing them. We encourage researchers, tool builders, and practitioners to further develop, adopt, and refine PBT as part of the next generation of automated verification and security testing frameworks.

The empirical case studies presented here reinforce that PBT is not just theoretically sound but practically viable—capable of being applied today to improve the robustness and reliability of real-world secure systems.

Funding

This research received no external funding.

Data Availability Statement

The data supporting the findings of this study are available upon reasonable request from the corresponding author. Sharing the data via direct communication ensures adequate support for replication or verification efforts and allows for appropriate guidance in its use and interpretation.

Conflicts of Interest

The author declares no conflicts of interest.

Appendix A. Implementation Example

Appendix A.1. Property-Based Test Python Code

The following Python script defines a property-based test using the Hypothesis library to validate a symmetric encryption/decryption interface with an intentional semantic flaw.

| from hypothesis import given from hypothesis.strategies import binary # Buggy encryption and decryption functions def encrypt(data, key): return data[:-1] + b’x’ if len(data) > 1 else b’x’ def decrypt(ciphertext, key): return ciphertext[:-1] + b’y’ if len(ciphertext) > 1 else b’y’ # Property-based test @given(data=binary(min_size=1,max_size=256),key=binary(min_size=16, |

| max_size=32)) |

| def test_encrypt_decrypt_identity(data, key): assert decrypt(encrypt(data, key), key) == data |

Appendix A.2. Property-Based Test Output Log

The output below was generated by Hypothesis after detecting a failing input that violates the property.

| Falsifying example: test_encrypt_decrypt_identity( data=b’\x10’, key=b’AAAAAAAAAAAAAAAA’ ) Traceback (most recent call last): File “test_script.py”, line 10, in test_encrypt_decrypt_identity assert decrypt(encrypt(data, key), key) == data AssertionError Shrunk example to smallest failing input. Minimal counterexample: data=b’\x10’ |

Appendix B. TLS Property-Based Test

Appendix B.1. TLS Property-Based Test Python Script

The following Python script implements a property-based test using the Hypothesis library to verify the validity of state transitions in a simplified TLS handshake. The test enforces the constraint that a Finished message must not appear before a ServerHello.

| from hypothesis import given, strategies as st # Simplified TLS handshake states states = [“Start”, “ClientHello”, “ServerHello”, “Certificate”, “Finished”] # Rule: ’Finished’ must not occur before ’ServerHello’ def is_valid_transition(trace): seen = set() for state in trace: if state == “Finished” and “ServerHello” not in seen: return False seen.add(state) return True @given(st.lists(st.sampled_from(states), min_size=2, max_size=6)) def test_tls_handshake_trace(trace): assert is_valid_transition(trace) |

Appendix B.2. TLS Property-Based Test Output Log

The log below captures the test output produced by Hypothesis after identifying and shrinking a protocol violation where the handshake sequence terminated prematurely.

| Falsifying example: test_tls_handshake_trace(trace=[‘Finished’, ‘ServerHello’]) Traceback (most recent call last): File “tls_test.py”, line 13, in test_tls_handshake_trace assert is_valid_transition(trace) AssertionError Shrunk example: [‘Finished’, ‘ServerHello’] |

Appendix C. OAuth Property-Based Test

Appendix C.1. OAuth Property-Based Test Python Script

The following script defines a property-based test for a simplified OAuth 2.0 token exchange process. It uses Hypothesis to check that the access tokens are issued only after a valid authorization code and that resources are accessed only after authentication.

| from hypothesis import given, strategies as st # OAuth 2.0 simplified flow states states = [“Start”, “RequestAuth”, “AuthCode”, “AccessToken”, “Resource”] # Rule: no access token without prior AuthCode, no Resource access without AccessToken def valid_oauth_flow(trace): seen = set() for step in trace: if step == “AccessToken” and “AuthCode” not in seen: return False if step == “Resource” and “AccessToken” not in seen: return False seen.add(step) return True @given(st.lists(st.sampled_from(states), min_size=2, max_size=6)) def test_oauth_flow(trace): assert valid_oauth_flow(trace) |

Appendix C.2. OAuth Property-Based Test Output Log

The output presented below was generated by Hypothesis upon discovering a flow in which resource access occurred without prior issuance of an access token, violating the intended security property.

| Falsifying example: test_oauth_flow(trace=[‘Resource’, ‘AccessToken’]) Traceback (most recent call last): File “oauth_test.py”, line 14, in test_oauth_flow assert valid_oauth_flow(trace) AssertionError Shrunk example: [’Resource’, ’AccessToken’] |

References

- Fu, Y.L.; Xin, X.L. A Model Based Security Testing Method for Protocol Implementation. Sci. World J. 2014, 2014, 632154. [Google Scholar] [CrossRef] [PubMed]

- Goldstein, H.; Cutler, J.W.; Dickstein, D.; Pierce, B.C.; Head, A. Property-Based Testing in Practice. In Proceedings of the IEEE/ACM 46th International Conference on Software Engineering, Lisbon, Portugal, 14–20 April 2024; ACM: Lisbon, Portugal, 2024; pp. 1–13. [Google Scholar]

- Chen, Z.; Rizkallah, C.; O’Connor, L.; Susarla, P.; Klein, G.; Heiser, G.; Keller, G. Property-Based Testing: Climbing the Stairway to Verification. In Proceedings of the 15th ACM SIGPLAN International Conference on Software Language Engineering, Auckland, New Zealand, 6–7 December 2022; ACM: Auckland, New Zealand, 2022; pp. 84–97. [Google Scholar]

- Bates, M.; Near, J.P. DT-SIM: Property-Based Testing for MPC Security. arXiv 2024. [Google Scholar] [CrossRef]

- Fink, G.; Bishop, M. Property-based testing: A new approach to testing for assurance. SIGSOFT Softw. Eng. Notes 1997, 22, 74–80. [Google Scholar] [CrossRef]

- Beurdouche, B.; Bhargavan, K.; Delignat-Lavaud, A.; Fournet, C.; Kohlweiss, M.; Pironti, A.; Strub, P.-Y.; Zinzindohoue, J.K. A Messy State of the Union: Taming the Composite State Machines of TLS. In Proceedings of the 2015 IEEE Symposium on Security and Privacy, San Jose, CA, USA, 17–21 May 2015; pp. 535–552. [Google Scholar]

- Bennouk, K.; Ait Aali, N.; El Bouzekri El Idrissi, Y.; Sebai, B.; Faroukhi, A.Z.; Mahouachi, D. A Comprehensive Review and Assessment of Cybersecurity Vulnerability Detection Methodologies. J. Cybersecurity Priv. 2024, 4, 853–908. [Google Scholar] [CrossRef]

- Godefroid, P.; Levin, M.Y.; Molnar, D. SAGE: Whitebox Fuzzing for Security Testing: SAGE has had a remarkable impact at Microsoft. Queue 2012, 10, 20–27. [Google Scholar] [CrossRef]

- Rajapakse, R.N.; Zahedi, M.; Babar, M.A.; Shen, H. Challenges and solutions when adopting DevSecOps: A systematic review. Inf. Softw. Technol. 2022, 141, 106700. [Google Scholar] [CrossRef]

- Korir, F.C. Software security models and frameworks: An overview and current trends. World J. Adv. Eng. Technol. Sci. 2023, 8, 86–109. [Google Scholar] [CrossRef]

- Hoare, C.A.R. An axiomatic basis for computer programming. Commun. ACM 1969, 12, 576–580. [Google Scholar] [CrossRef]

- MacIver, D.R.; Hatfield-Dodds, Z.; Contributors, M.O. Hypothesis: A new approach to property-based testing. J. Open Source Softw. 2019, 4, 1891. [Google Scholar] [CrossRef]

- Vazou, N.; Bakst, A.; Jhala, R. Bounded refinement types. In Proceedings of the 20th ACM SIGPLAN International Conference on Functional Programming, Vancouver, BC, Canada, 1–3 September 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 48–61. [Google Scholar]

- Zalewski, M. The Tangled Web: A Guide to Securing Modern Web Applications; No Starch Press: San Francisco, CA, USA, 2011; ISBN 978-1-59327-417-7. [Google Scholar]

- De Ruiter, J.; Poll, E. Protocol State Fuzzing of {TLS} Implementations. In Proceedings of the 24th USENIX Security Symposium (USENIX Security 15), Washington, DC, USA, 12–14 August 2015; pp. 193–206. Available online: https://www.usenix.org/conference/usenixsecurity15/technical-sessions/presentation/de-ruiter (accessed on 23 April 2025).

- Segura, S.; Fraser, G.; Sanchez, A.B.; Ruiz-Cortés, A. A Survey on Metamorphic Testing. IEEE Trans. Softw. Eng. 2016, 42, 805–824. [Google Scholar] [CrossRef]

- Zeller, A.; Gopinath, R.; Böhme, M.; Fraser, G.; Holler, C. The Fuzzing Book. Available online: https://www.fuzzingbook.org/ (accessed on 23 April 2025).

- Hu, V.C.; Ferraiolo, D.F.; Kuhn, D.R. Assessment of Access Control Systems; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2006. [Google Scholar] [CrossRef]

- Youn, D.; Lee, S.; Ryu, S. Declarative static analysis for multilingual programs using CodeQL. Softw. Pract. Exp. 2023, 53, 1472–1495. [Google Scholar] [CrossRef]

- MacIver, D.R.; Donaldson, A.F. Test-Case Reduction via Test-Case Generation: Insights from the Hypothesis Reducer (Tool Insights Paper). In Proceedings of the 34th European Conference on Object-Oriented Programming (ECOOP 2020), Berlin, Germany, 15–17 November 2020; Volume 166, pp. 13:1–13:27. [Google Scholar] [CrossRef]

- Cankar, M.; Petrovic, N.; Pita Costa, J.; Cernivec, A.; Antic, J.; Martincic, T.; Stepec, D. Security in DevSecOps: Applying Tools and Machine Learning to Verification and Monitoring Steps. In Proceedings of the Companion of the 2023 ACM/SPEC International Conference on Performance Engineering, Coimbra, Portugal, 15–19 April 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 201–205. [Google Scholar]

- Prates, L.; Pereira, R. DevSecOps practices and tools. Int. J. Inf. Secur. 2024, 24, 11. [Google Scholar] [CrossRef]

- Claessen, K.; Hughes, J. QuickCheck: A lightweight tool for random testing of Haskell programs. In Proceedings of the Fifth ACM SIGPLAN International Conference on Functional Programming, Montreal, QC, Canada, 18–21 September 2000; Association for Computing Machinery: New York, NY, USA, 2000; pp. 268–279. [Google Scholar]

- Udeshi, S.; Chattopadhyay, S. Grammar Based Directed Testing of Machine Learning Systems. IEEE Trans. Softw. Eng. 2021, 47, 2487–2503. [Google Scholar] [CrossRef]

- Huang, L.; Zhao, P.; Chen, H.; Ma, L. Large Language Models Based Fuzzing Techniques: A Survey. arXiv 2024. [Google Scholar] [CrossRef]

- Cachin, C.; Guerraoui, R.; Rodrigues, L. Introduction to Reliable and Secure Distributed Programming; Springer: Berlin/Heidelberg, Germany, 2011; ISBN 978-3-642-15259-7. [Google Scholar]

- Ayenew, H.; Wagaw, M. Software Test Case Generation Using Natural Language Processing (NLP): A Systematic Literature Review. Artif. Intell. Evol. 2024, 5, 1–10. [Google Scholar] [CrossRef]

- Von Gugelberg, H.M.; Schweizer, K.; Troche, S.J. Experimental evidence for rule learning as the underlying source of the item-position effect in reasoning ability measures. Learn. Individ. Differ. 2025, 118, 102622. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).