Beyond Snippet Assistance: A Workflow-Centric Framework for End-to-End AI-Driven Code Generation

Abstract

1. Introduction

1.1. Originality and Novelty

- Automating complete software development processes, including code generation, validation, and testing.

- A structured execution pipeline, inspired by the Chain of Thought concept, that dynamically refines AI-generated code through feedback loops, validation mechanisms, and rollback strategies.

- Automatic Context Discovery, dynamically identifying the minimal set of relevant code files for a given request by analyzing project structure and dependencies. This allows the framework to work efficiently with large projects without feeding the entire codebase to the LLM, selecting only the code needed to fulfill the task. It improves LLM accuracy and simplifies the selection of relevant context from extensive codebases.

- Self-Healing Automation, enabling the framework to detect generated code that does not fulfill the requirements, provide feedback to the LLM, and automatically regenerate the code.

- Automatic Code Merging, preserving manual code modifications while seamlessly integrating AI-generated updates. The framework compares AI output with manually altered code, allowing developers to modify code alongside AI automation rather than relying solely on automatic code generation.

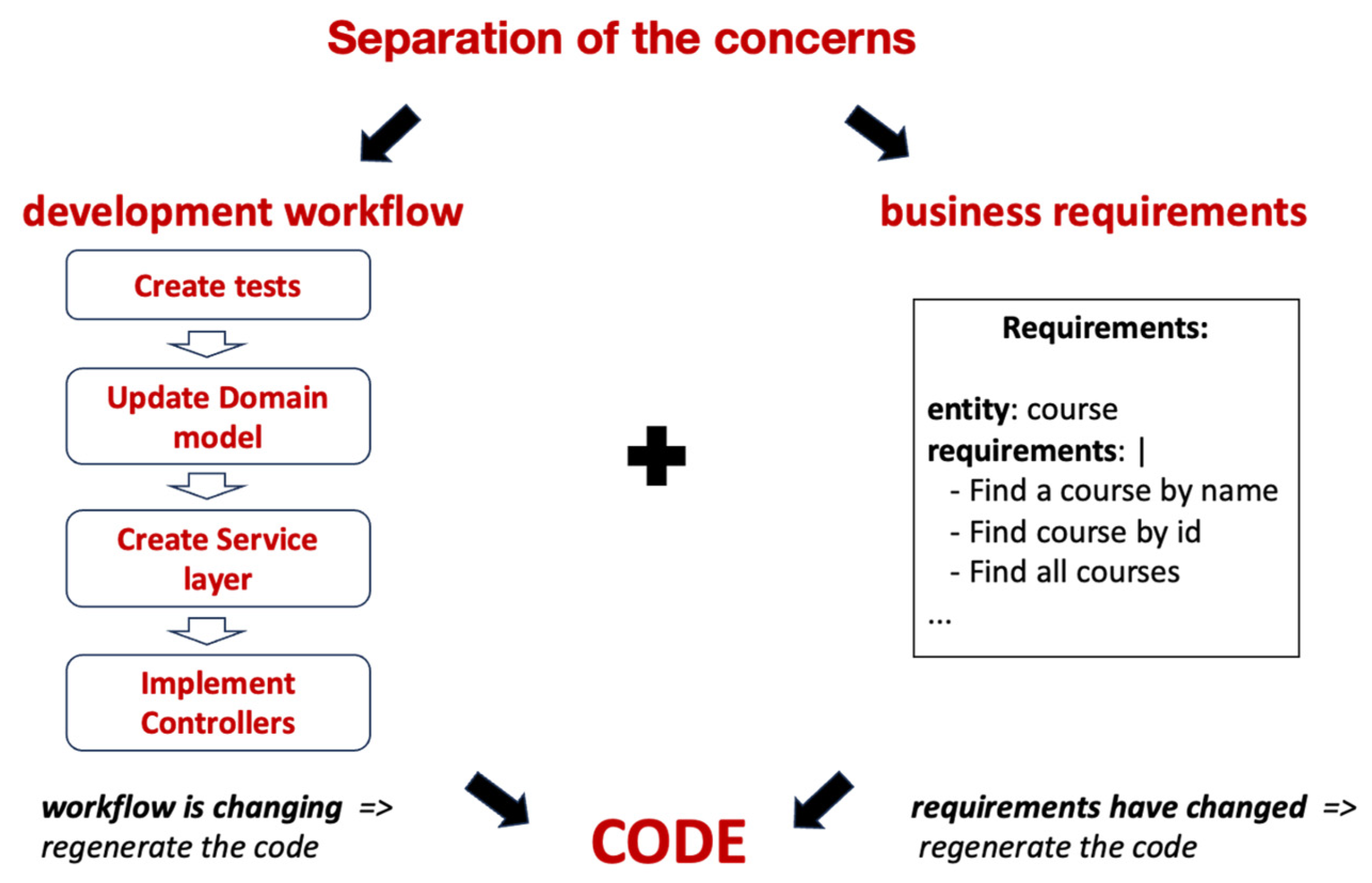

- Separation of Concerns, ensuring that requirements (what should be done) remain independent from workflows (how it should be done). Developers can modify requirements without changing workflows or update workflows and regenerate the code based on the same requirements.

1.2. Original Contribution

- Moves beyond snippet-level assistance to provide comprehensive AI-driven automation.

- Introduces the Prompt Pipeline Language (PPL), a declarative scripting format that allows for the definition of multi-step AI execution workflows.

- Implements automated code validation and rollback mechanisms, ensuring that generated code meets quality standards before integration.

- Demonstrates a reference implementation, JAIG (Java AI-powered Generator), showcasing how structured workflows enhance AI-assisted development.

- Section 2 (Related Work) reviews existing AI-assisted coding tools and their limitations in automating software development workflows.

- Section 3 (Materials and Methods) introduces the workflow-centric framework, describes the Prompt Pipeline Language (PPL), and presents the reference implementation, JAIG.

- Section 4 (Automating Development with Workflow-Oriented Prompt Pipelines) details the structured execution model, separation of concerns, and AI-assisted code merging.

- Section 5 (Enhancing the Reliability of LLM-Generated Code) explores mechanisms for self-healing automation, automated validation, and error correction.

- Section 6 (Prompt Pipeline Language) explains the iterative execution approach used for workflow automation.

- Section 7 (Practical Applications) demonstrates real-world use cases, including feature implementation, refactoring, debugging, and test generation.

- Section 8 (Results) presents empirical findings on efficiency, accuracy, and reliability.

- Section 9 (Discussion) interprets the results, compares the framework with existing AI-assisted approaches, and outlines limitations.

- Section 10 (Conclusion) summarizes key findings and discusses future research directions.

- Supplementary Materials (Example: Workflow written in Prompt Pipeline Language) provides a detailed example of a PPL-based workflow.

2. Related Work

- Refactoring large, complex codebases [5];

- Implementing multi-step workflows like creating database models, updating service layers, and generating API endpoints;

- Conducting automated code reviews to ensure adherence to coding standards and best practices;

- Detecting and fixing code smells, such as duplicated code or overly complex methods [5];

- Generating comprehensive unit and integration tests to ensure high code coverage [10];

- Replacing deprecated APIs with modern alternatives.

3. Materials and Methods

- Basic Workflow Building Blocks: These are the basic capabilities that enable other, more comprehensive features.

- Prompt Pipeline Language (PPL): A declarative scripting format for defining multi-step automation workflows.

- Reference Implementation (JAIG): A Java-based AI-powered code generation tool that integrates these concepts.

- Evaluation Metrics: Assessing accuracy, efficiency, and reliability of AI-generated code through empirical testing.

- Algorithmic Approach: Automatic Context Discovery, Automatic Rollbacks, and Multi-Step Execution methods.

3.1. Basic Workflow Building Blocks

- Files/Folders Inclusion in Prompts: Enables developers to specify the path of files or folders in a prompt, ensuring that these files are included in the input context.

- Automated Response Parsing and File Organization: After AI-generated code is produced, it must be structured correctly within the project’s directory hierarchy.

- Automatic Rollbacks: If the generated code fails to meet expectations, the framework reverts changes to maintain a consistent project state.

- Automatic Context Discovery: Dynamically analyzes project structure and dependencies to determine the minimum set of relevant files required for a given request.
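As an illustration of the Automated Response Parsing and File Organization block, the following minimal Java sketch splits an LLM response into files and writes them under the project root. It assumes, hypothetically, that the workflow instructs the LLM to start each file with a marker line of the form `// File: <relative/path>`; this marker convention and the `ResponseParser` class are illustrative assumptions, not JAIG's actual output format.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical parser that splits an LLM response into project files. */
final class ResponseParser {
    // Assumed marker convention, e.g. "// File: src/main/java/App.java"
    private static final Pattern MARKER = Pattern.compile("^// File: (\\S+)\\s*$");

    /** Returns an ordered map from relative path to file content. */
    static Map<String, String> parse(String response) {
        Map<String, String> files = new LinkedHashMap<>();
        String currentPath = null;
        StringBuilder content = new StringBuilder();
        for (String line : response.split("\\R")) {
            Matcher m = MARKER.matcher(line);
            if (m.matches()) {
                if (currentPath != null) {
                    files.put(currentPath, content.toString()); // close the previous file
                }
                currentPath = m.group(1);
                content.setLength(0);
            } else if (currentPath != null) {
                content.append(line).append('\n');
            }
            // Text before the first marker (e.g., the model's explanation) is ignored
        }
        if (currentPath != null) {
            files.put(currentPath, content.toString());
        }
        return files;
    }

    /** Writes the parsed files under projectRoot, rejecting paths that escape it. */
    static void writeAll(Path projectRoot, Map<String, String> files) throws IOException {
        Path root = projectRoot.toAbsolutePath().normalize();
        for (Map.Entry<String, String> entry : files.entrySet()) {
            Path target = root.resolve(entry.getKey()).normalize();
            if (!target.startsWith(root) || target.equals(root)) {
                throw new IOException("Path escapes project root: " + entry.getKey());
            }
            Files.createDirectories(target.getParent());
            Files.writeString(target, entry.getValue());
        }
    }
}
```

Rejecting paths that resolve outside the project root is a defensive design choice: the paths come from model output and should not be trusted to stay inside the project.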

3.2. Implementation of the Prompt Pipeline Language

- Directives: Commands that control execution flow, such as #repeat-if for retry conditions and #save-to for output file destinations.

- Reusability Mechanisms: Support for placeholders and reusable templates that allow developers to define standardized workflows across multiple projects.

- Self-Healing Mechanisms: Integration of automated test generation and validation to detect errors and trigger corrective actions before merging AI-generated code into production.

3.3. Reference Implementation: JAIG (Java AI-Powered Generator)

- Automated Code Generation: Developers provide structured prompts containing project context and requirements, which JAIG processes to generate Java classes, methods, and APIs.

- Workflow Execution: Uses PPL to execute multi-step automation, ensuring that generated code is structured, compiled, and tested before integration.

- Code Validation and Refinement: Implements automatic rollback mechanisms to revert faulty AI-generated outputs and enables iterative improvement through prompt modifications.

- Test Generation and Execution: Automatically generates and executes unit tests to ensure the correctness of generated code.

- Code Merging: Prevents AI-generated code from overwriting manually edited files by performing intelligent diffs and merges.

3.4. Evaluation Methodology

- Automation Efficiency: Categorized tasks as fully automated, partially automated, or requiring manual intervention to measure the framework’s automation impact.

- Productivity Gains: Analyzed task completion times across code generation, testing, refactoring, and documentation updates.

- Code Reliability: Evaluated compilation success, automated rollbacks, and validation accuracy to ensure AI-generated code correctness.

- Workflow Adaptability: Examined modifications to workflows as an alternative to manual refactoring.

3.5. Algorithmic Approach for Workflow Execution

3.5.1. Automatic Context Discovery Algorithm

- Main classes, interfaces, and their relationships;

- API endpoints and exposed services;

- Core business logic modules;

- Database schema and key entities;

- Configuration files and external dependencies.

- Understanding the Developer’s Request: The framework parses the prompt to identify the affected modules, classes, or APIs.

- Dependency Analysis: The framework traces dependencies between classes and functions to determine what additional files must be included.

- Automatic Context Assembly: Based on the identified dependencies, the framework dynamically constructs the optimal context before passing it to the LLM.

- Identify the UserService class as the main modification target;

- Retrieve dependencies like UserRepository, AuthController, and SecurityConfig;

- Include relevant API documentation and configuration files;

- Exclude unrelated parts of the project, preventing unnecessary context overload.

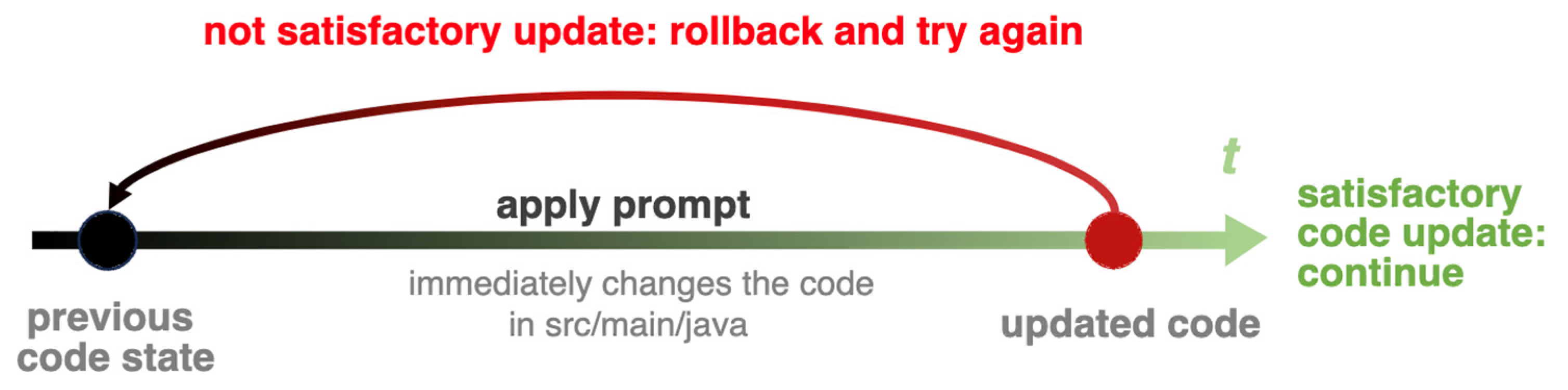
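The dependency-analysis and context-assembly steps can be approximated, for illustration, by a bounded breadth-first walk over a class dependency graph. The sketch below assumes the graph has already been extracted (for example from import statements) and follows edges in both directions, so that classes calling the target are picked up along with the classes it uses. The `ContextDiscovery` class and the `maxDepth` cut-off are hypothetical; the framework's own discovery may rely on the LLM rather than on a static graph.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical context selector: starting from the classes named in a request,
 * walk the dependency graph breadth-first (in both directions) up to maxDepth.
 */
final class ContextDiscovery {
    // class -> classes it uses plus classes that use it
    private final Map<String, Set<String>> neighbors = new HashMap<>();

    ContextDiscovery(Map<String, List<String>> dependencies) {
        dependencies.forEach((cls, deps) -> {
            for (String dep : deps) {
                neighbors.computeIfAbsent(cls, k -> new LinkedHashSet<>()).add(dep); // cls uses dep
                neighbors.computeIfAbsent(dep, k -> new LinkedHashSet<>()).add(cls); // dep is used by cls
            }
        });
    }

    /** Returns the targets plus every class within maxDepth hops of them. */
    Set<String> discover(List<String> targets, int maxDepth) {
        Set<String> selected = new LinkedHashSet<>(targets);
        List<String> frontier = new ArrayList<>(targets);
        for (int level = 0; level < maxDepth && !frontier.isEmpty(); level++) {
            List<String> next = new ArrayList<>();
            for (String cls : frontier) {
                for (String other : neighbors.getOrDefault(cls, Set.of())) {
                    // add() is false for already-selected classes, so cycles terminate
                    if (selected.add(other)) {
                        next.add(other);
                    }
                }
            }
            frontier = next;
        }
        return selected;
    }
}
```

With a graph in which UserService uses UserRepository and SecurityConfig, and AuthController uses UserService, `discover(List.of("UserService"), 1)` selects exactly those four classes and leaves unrelated classes out.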

3.5.2. Automatic Rollback Handling Algorithm

- Snapshot Creation: Before applying AI-generated modifications, the system stores the original code state to ensure recovery if needed.

- Code Regeneration: When code needs to be updated or re-executed, the system generates new output based on the latest prompt and workflow state.

- Validation Check: The newly generated code undergoes compilation, static analysis, and automated tests to verify correctness.

- Rollback Trigger: If validation fails or if regeneration was triggered due to prompt updates, the system automatically restores the last working version and logs the failure for developer review.

- Loop Prevention: To avoid infinite rollback-regeneration loops, workflows must define a limited number of refinement attempts before escalating the issue for manual intervention.

- Successful Execution and Code Integration: If validation passes, the AI-generated code is parsed and merged with the existing codebase.
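A minimal sketch of the snapshot, validation, rollback, and loop-prevention steps follows. For brevity it keeps snapshots in memory rather than in version control, and the `Generator` hook and the validator predicate are hypothetical stand-ins for the LLM call and for compilation, static analysis, and tests.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Hypothetical executor: snapshot, regenerate, validate, and roll back on failure. */
final class RollbackExecutor {
    /** Stand-in for the LLM step that writes regenerated code into the files. */
    interface Generator {
        void apply(List<Path> files, int attempt) throws IOException;
    }

    private final int maxAttempts; // loop prevention: bounded refinement attempts

    RollbackExecutor(int maxAttempts) {
        this.maxAttempts = maxAttempts;
    }

    /** Returns true if an attempt passed validation; otherwise restores the snapshot and returns false. */
    boolean run(List<Path> files, Generator generator, Predicate<List<Path>> validator)
            throws IOException {
        Map<Path, byte[]> snapshot = snapshot(files);
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            generator.apply(files, attempt);
            if (validator.test(files)) {
                return true; // keep the validated output for merging
            }
            restore(snapshot); // return to the last working version before retrying
        }
        return false; // attempts exhausted: escalate to a developer
    }

    private static Map<Path, byte[]> snapshot(List<Path> files) throws IOException {
        Map<Path, byte[]> snap = new HashMap<>();
        for (Path f : files) {
            // null marks a file that did not exist before generation
            snap.put(f, Files.exists(f) ? Files.readAllBytes(f) : null);
        }
        return snap;
    }

    private static void restore(Map<Path, byte[]> snap) throws IOException {
        for (Map.Entry<Path, byte[]> e : snap.entrySet()) {
            if (e.getValue() == null) {
                Files.deleteIfExists(e.getKey()); // remove files the failed attempt created
            } else {
                Files.write(e.getKey(), e.getValue());
            }
        }
    }
}
```

Restoring after every failed attempt, not only after the last one, means each regeneration starts from the last working version rather than from a partially broken one.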

3.5.3. Multi-Step Prompt Pipeline Execution

- Step Definition: The workflow is defined using PPL, specifying input dependencies and execution order.

- Sequential Execution: Each step is processed in order, feeding the output of one prompt into the next.

- Error Handling and Retries: If a step fails validation, it is retried up to a predefined threshold before triggering a rollback.

- Final Integration: Once all steps are completed, the final AI-generated output is merged into the project.
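The sequential-execution and retry steps can be sketched as a loop in which each step's prompt receives the previous step's validated output. The `[[previous]]` token, the `Step` record, and the use of a plain `Function<String, String>` in place of an LLM client are assumptions made for this illustration, not the actual PPL syntax.

```java
import java.util.List;
import java.util.function.Function;
import java.util.function.Predicate;

/** Hypothetical multi-step pipeline: each step's prompt receives the previous step's output. */
final class PromptPipeline {
    /** One workflow step: a prompt template and a check applied to the step's output. */
    record Step(String name, String promptTemplate, Predicate<String> isValid) {}

    /** Raised when a step still fails validation after maxRetries attempts. */
    static final class StepFailedException extends RuntimeException {
        StepFailedException(String step) {
            super("Step failed after retries: " + step);
        }
    }

    private final Function<String, String> llm; // stand-in for an LLM call: prompt -> response
    private final int maxRetries;

    PromptPipeline(Function<String, String> llm, int maxRetries) {
        this.llm = llm;
        this.maxRetries = maxRetries;
    }

    /** Runs the steps in order and returns the last step's validated output. */
    String run(List<Step> steps, String requirements) {
        String previous = requirements; // the first step receives the requirements
        for (Step step : steps) {
            String prompt = step.promptTemplate().replace("[[previous]]", previous);
            String output = null;
            for (int attempt = 1; attempt <= maxRetries && output == null; attempt++) {
                String candidate = llm.apply(prompt);
                if (step.isValid().test(candidate)) {
                    output = candidate;
                }
            }
            if (output == null) {
                throw new StepFailedException(step.name()); // caller rolls back and escalates
            }
            previous = output;
        }
        return previous;
    }
}
```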

3.6. Prompt Directives

- #model: <model_name>: selects the AI model used for processing a prompt. Developers can switch between models for tasks of different complexity (e.g., GPT-4o for general tasks, o1 for tasks requiring advanced reasoning).

- #temperature: <value>: adjusts the randomness of responses. A higher value produces more varied outputs, while a lower value produces more consistent, near-deterministic results.

- #save-to: <path_to_file>: saves a generated response to the specified path.

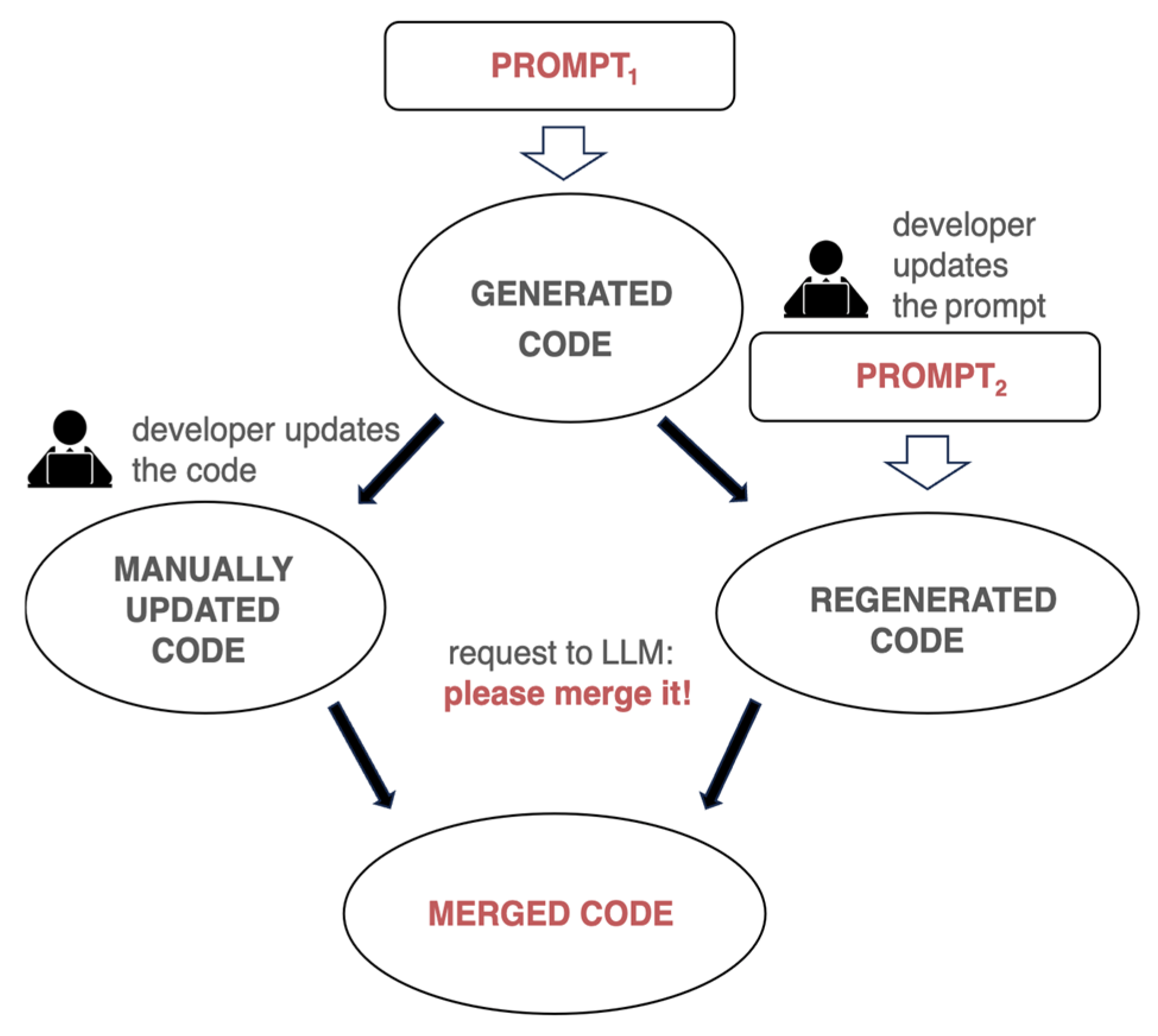
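The directives above can be read with a small line-based parser. The sketch assumes each directive sits on its own line in the form `#name: value` and treats every other line as prompt text; requiring a name and a colon directly after `#` means Markdown headings such as `# Notes` are not mistaken for directives. The `ParsedPrompt` record and its default-value helpers are hypothetical and do not reproduce JAIG's parser.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical result of parsing a prompt: directive values plus the remaining prompt text. */
record ParsedPrompt(Map<String, String> directives, String body) {
    // "#name: value", e.g. "#model: gpt-4o" or "#save-to: src/User.java"
    private static final Pattern DIRECTIVE = Pattern.compile("^#([a-z][a-z0-9-]*):\\s*(.*)$");

    static ParsedPrompt parse(String text) {
        Map<String, String> directives = new LinkedHashMap<>();
        List<String> bodyLines = new ArrayList<>();
        for (String line : text.split("\\R")) {
            Matcher m = DIRECTIVE.matcher(line.strip());
            if (m.matches()) {
                directives.put(m.group(1), m.group(2).strip());
            } else {
                // "# Heading" does not match: a space, not a name, follows '#'
                bodyLines.add(line);
            }
        }
        return new ParsedPrompt(directives, String.join("\n", bodyLines).strip());
    }

    /** Model from #model, or the given default. */
    String model(String defaultModel) {
        return directives.getOrDefault("model", defaultModel);
    }

    /** Temperature from #temperature, or the given default. */
    double temperature(double defaultValue) {
        String raw = directives.get("temperature");
        return raw == null ? defaultValue : Double.parseDouble(raw);
    }
}
```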

3.7. AI-Assisted Code Merging

- Manual code updates: Developers modify the codebase directly.

- Automatic code updates: Changes in requirements (prompt modifications) that trigger AI-driven code regeneration.

- Manual code updates are preserved while incorporating AI-generated updates.

- AI-generated code is adjusted based on recently modified project files.

- Developers can independently modify the codebase or the requirements without introducing conflicts.

- Initial Code Generation: AI generates code based on an initial prompt (Prompt1).

- Manual Updates: Developers modify the generated code as needed.

- Prompt Update and Regeneration: If requirements change, an updated prompt (Prompt2) triggers AI to regenerate the code.

- Merging Process: Instead of replacing manual updates, the framework requests the LLM to merge the regenerated code with the manually updated version.

- Final Output: The merged code incorporates both AI-generated updates and developer modifications, ensuring consistency.

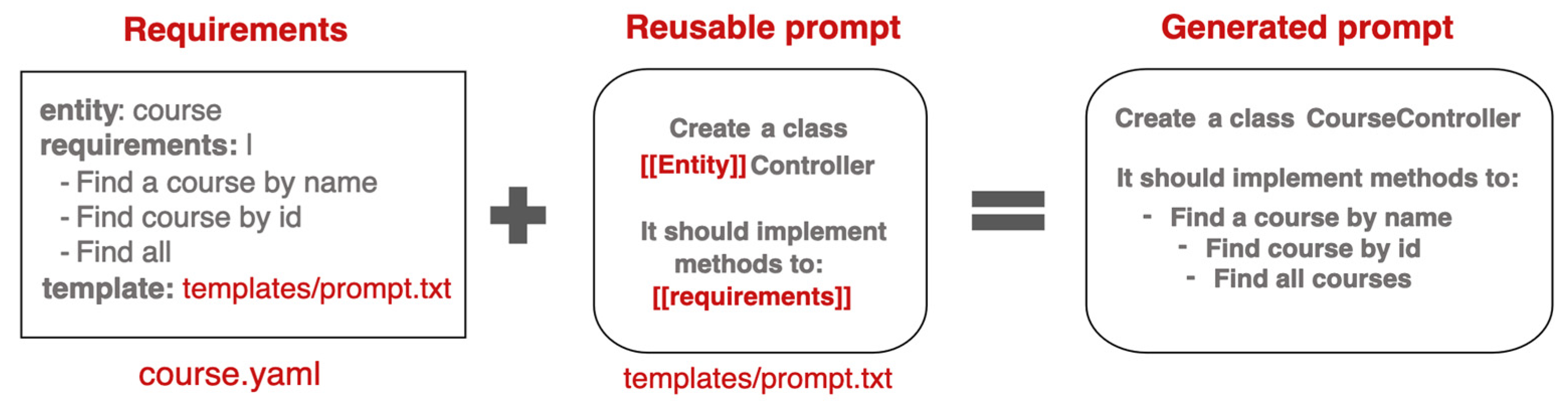
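One way to decide when the merging step is needed is to remember a hash of the code the AI last produced for each file: if the file on disk still matches that hash, nobody has edited it and the regenerated code can replace it; otherwise the LLM is asked to merge the two versions. The hash bookkeeping, the `MergeDecision` class, and the wording of the merge prompt are illustrative assumptions rather than the framework's documented mechanism.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HexFormat;

/** Hypothetical decision step run before writing regenerated code to a file. */
final class MergeDecision {
    sealed interface Action permits Overwrite, AskLlmToMerge {}

    /** No manual edits detected: write the regenerated code as-is. */
    record Overwrite(String content) implements Action {}

    /** Manual edits detected: send this prompt to the LLM and write its answer. */
    record AskLlmToMerge(String mergePrompt) implements Action {}

    static String sha256(String text) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256")
                    .digest(text.getBytes(StandardCharsets.UTF_8));
            return HexFormat.of().formatHex(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is required on every Java platform", e);
        }
    }

    /**
     * @param lastGeneratedHash hash of the code the AI produced from the previous prompt
     * @param currentFile       content of the file on disk now, possibly edited by hand
     * @param regenerated       new AI output produced from the updated prompt
     */
    static Action decide(String lastGeneratedHash, String currentFile, String regenerated) {
        if (sha256(currentFile).equals(lastGeneratedHash)) {
            return new Overwrite(regenerated);
        }
        String prompt = """
                Merge the two versions of the file below.
                Keep every manual change from CURRENT and apply the updates from REGENERATED.
                Return only the merged file.

                CURRENT:
                %s

                REGENERATED:
                %s
                """.formatted(currentFile, regenerated);
        return new AskLlmToMerge(prompt);
    }
}
```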

3.8. Reusable Prompt Templates

- Instead of hardcoding values in every prompt, placeholders such as [[Entity]] and [[requirements]] are used.

- At runtime, these placeholders are automatically substituted with actual values from structured data files (e.g., course.yaml).

- The LLM processes the dynamically assembled prompt, so responses are tailored to the specific task.
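Placeholder substitution can be sketched as follows. The values map stands in for data already loaded from a structured file such as course.yaml (YAML parsing is omitted), and the choice to fail on an unresolved placeholder, rather than send it literally to the LLM, is an assumption of this sketch. The `PromptTemplate` class is hypothetical.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical renderer for prompt templates with [[name]] placeholders. */
final class PromptTemplate {
    // [[name]] where name is word characters, e.g. [[Entity]] or [[requirements]]
    private static final Pattern PLACEHOLDER = Pattern.compile("\\[\\[(\\w+)]]");

    /** Substitutes every [[name]]; throws if a value is missing. */
    static String render(String template, Map<String, String> values) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String key = m.group(1);
            String value = values.get(key);
            if (value == null) {
                throw new IllegalArgumentException("No value for placeholder [[" + key + "]]");
            }
            // quoteReplacement keeps '$' and '\' in values literal
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```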

4. Automating Development with Workflow-Oriented Prompt Pipelines

- Updating the domain model;

- Modifying the service layer;

- Creating API endpoints;

- Writing unit tests.

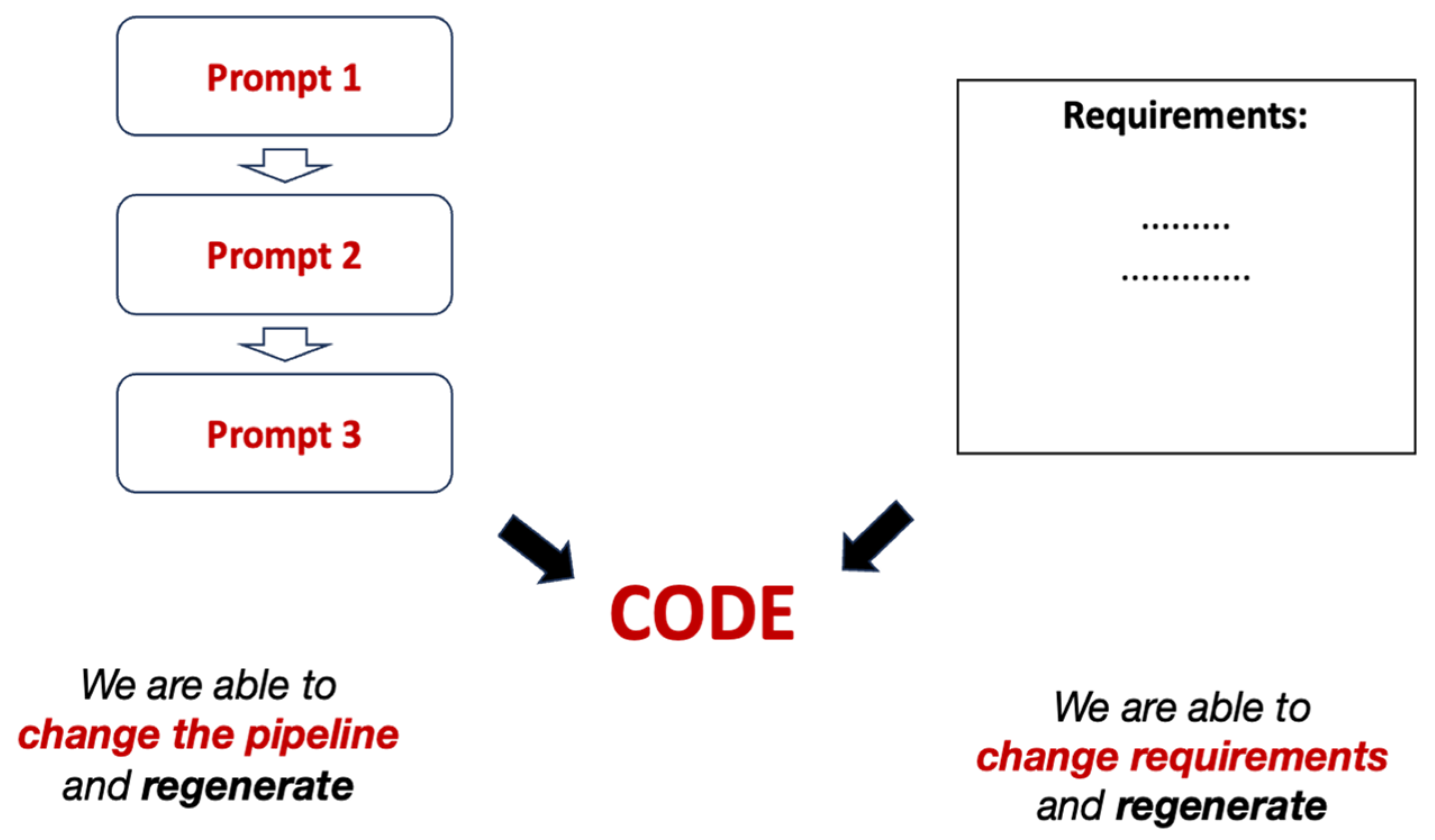

4.1. Prompt Pipeline for Workflow Automation

- One prompt generates the domain model;

- Another handles the service layer;

- A separate prompt defines API interactions;

- Additional prompts cover testing and documentation.

- Developers can modify the pipeline to adjust how code is generated;

- Alternatively, they can update the requirements without altering the workflow.

4.2. Separation of Concerns in Workflow Automation

- Changing the workflow: When a workflow is updated (e.g., to generate additional documentation), the same requirements can be reapplied to the updated workflow. Steps that have already been executed (e.g., domain model generation) are not re-executed unless affected by the changes. However, if an early step in the workflow is modified, such as domain model generation, all subsequent dependent steps must be reprocessed to ensure alignment across the entire workflow.

- Changing the requirements: When requirements change, the framework automatically rolls back changes from previous workflows and regenerates only the necessary parts of the codebase, ensuring minimal manual intervention while keeping the implementation aligned with updated specifications. This allows developers to focus on refining the requirements while the system ensures consistency. Ideally, once a workflow is established, developers only need to update the requirements or introduce new ones, while the workflow handles the rest automatically, reducing manual overhead.
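The re-execution rule above (unchanged steps are skipped, while a change to an early step forces every later step to run again) can be captured by chaining fingerprints: each step's fingerprint combines the requirements, its own prompt, and the fingerprint of the step before it. The sketch assumes a linear workflow and compares against fingerprints stored from the previous run; the class and method names are hypothetical, and `Objects.hash` is used only for brevity where a real tool would use a cryptographic hash.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

/** Hypothetical planner deciding which workflow steps must run again. */
final class IncrementalPlanner {
    record Step(String name, String prompt) {}

    /** Fingerprint per step, chained so that any upstream change alters every later fingerprint. */
    static Map<String, Integer> fingerprints(List<Step> workflow, String requirements) {
        Map<String, Integer> result = new LinkedHashMap<>();
        int upstream = requirements.hashCode();
        for (Step step : workflow) {
            // Objects.hash keeps the sketch short; collisions are possible, so a real tool would use SHA-256
            upstream = Objects.hash(upstream, step.prompt());
            result.put(step.name(), upstream);
        }
        return result;
    }

    /** Steps whose fingerprint is new or differs from the previous run, in workflow order. */
    static List<String> stepsToRerun(List<Step> workflow, String requirements,
                                     Map<String, Integer> previous) {
        List<String> rerun = new ArrayList<>();
        fingerprints(workflow, requirements).forEach((name, fingerprint) -> {
            if (!fingerprint.equals(previous.get(name))) {
                rerun.add(name);
            }
        });
        return rerun;
    }
}
```

Appending a documentation step re-runs only that step, whereas editing the domain-model prompt re-runs the model step and everything after it. Because every fingerprint includes the requirements, a requirements change re-runs the whole linear chain in this sketch; tracking dependencies as a graph would let unaffected branches be skipped.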

5. Enhancing the Reliability of LLM-Generated Code

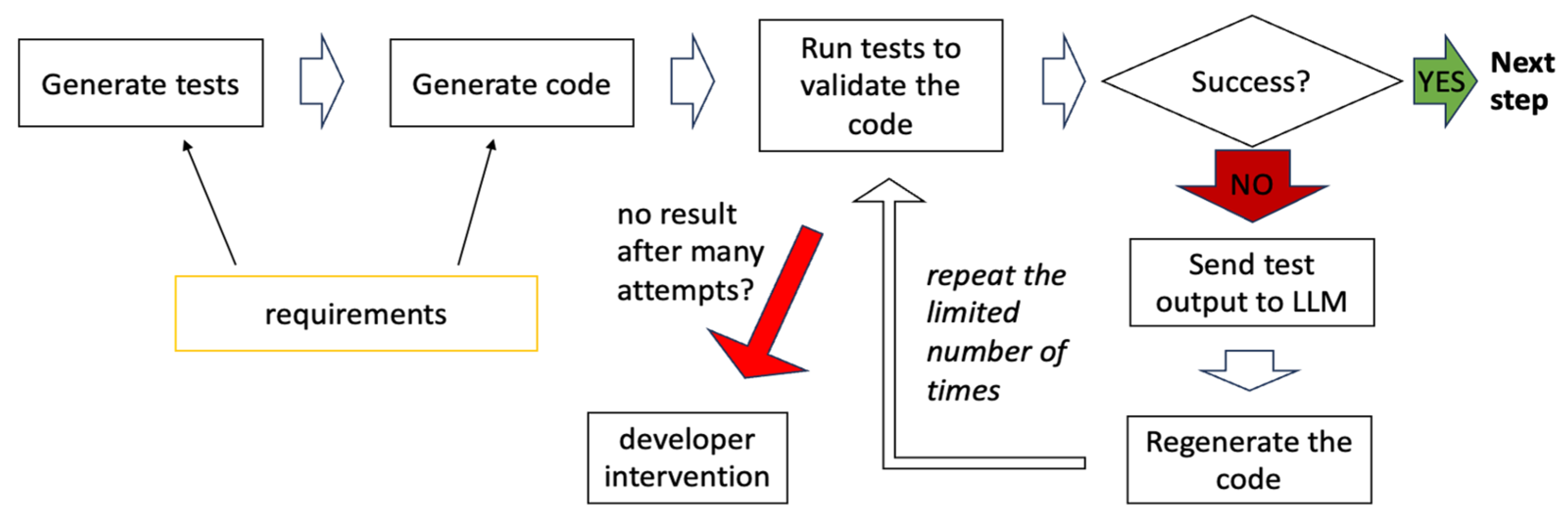

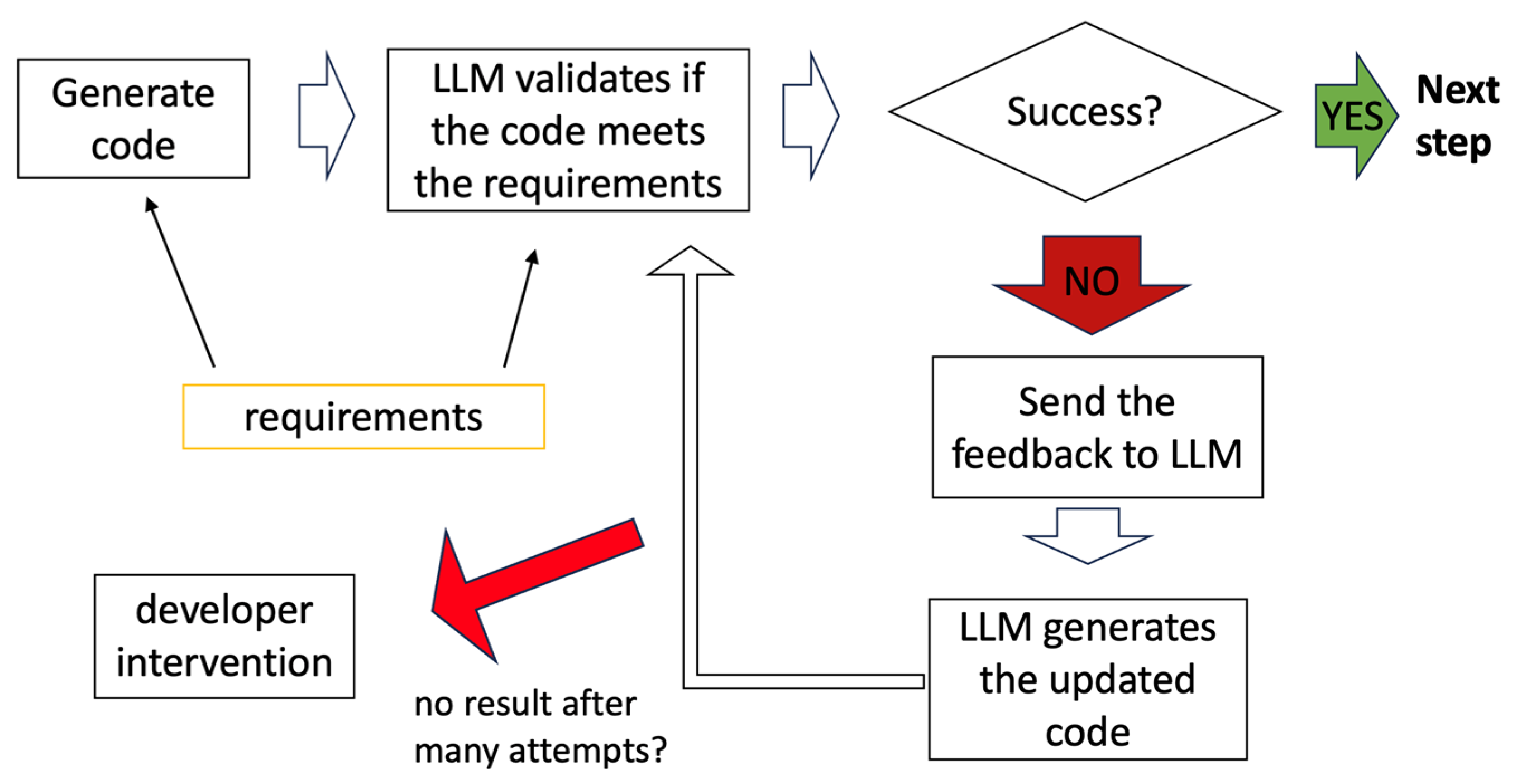

5.1. Self-Healing of the Generated Code

- Code Validation with Automated Tests: Generates and runs tests to verify that the code aligns with the requirements, identifying errors automatically.

- Code Validation Using LLM Self-Assessment: Uses an LLM to analyze the generated code against predefined requirements, helping catch logical errors that pass the tests before they cause downstream failures.
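The two validation strategies can be combined in a single feedback loop: tests run first, and only code that passes them is sent to a reviewer model, whose objections are fed back in the same way as test failures. In the sketch below the generator, test runner, and assessor are hypothetical functional stand-ins for an LLM call, a test harness, and a second LLM; the feedback wording is illustrative.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.BiFunction;
import java.util.function.Function;

/** Hypothetical self-healing loop combining automated tests with an LLM reviewer. */
final class SelfHealingLoop {
    /** Reviewer verdict; the explanation is fed back when the code is rejected. */
    record Assessment(boolean passed, String explanation) {}

    private final Function<String, String> generator;              // prompt -> code
    private final Function<String, List<String>> testRunner;       // code -> failure messages
    private final BiFunction<String, String, Assessment> assessor; // (code, requirements) -> verdict
    private final int maxAttempts;

    SelfHealingLoop(Function<String, String> generator, Function<String, List<String>> testRunner,
                    BiFunction<String, String, Assessment> assessor, int maxAttempts) {
        this.generator = generator;
        this.testRunner = testRunner;
        this.assessor = assessor;
        this.maxAttempts = maxAttempts;
    }

    /** Returns code that passed both checks, or empty when a developer must step in. */
    Optional<String> run(String requirements) {
        String prompt = requirements;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            String code = generator.apply(prompt);
            List<String> failures = testRunner.apply(code);
            if (failures.isEmpty()) {
                // Tests pass; the reviewer looks for requirement gaps the tests did not cover
                Assessment review = assessor.apply(code, requirements);
                if (review.passed()) {
                    return Optional.of(code);
                }
                failures = List.of("Reviewer: " + review.explanation());
            }
            // Feed the concrete failures back so the next attempt can target them
            prompt = requirements
                    + "\n\nPrevious attempt:\n" + code
                    + "\n\nProblems found:\n- " + String.join("\n- ", failures)
                    + "\n\nFix these problems and return the complete code.";
        }
        return Optional.empty();
    }
}
```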

5.2. Code Validation with Automated Tests

- Refine the requirements to improve clarity for LLM processing;

- Select an alternative model for validation or code regeneration;

- Adjust the prompt pipeline to enhance the quality of generated output.

5.3. Code Validation Using LLM Self-Assessment

5.4. Combining Tests and Self-Assessment

- Generating Model: GPT-4o generates the code.

6. Prompt Pipeline Language

6.1. Key Features of PPL

- Prompt Chaining: The output of one prompt automatically becomes the input for the next, structuring complex tasks into smaller, manageable steps.

- Execution Control: Conditions (e.g., validation failures) can trigger retries, alternative strategies, or human intervention.

- Self-Healing and Feedback Loops: If a step fails, the workflow refines itself, using feedback to improve generated results.

- Automated Workflow Updates: Workflows and requirements remain independent, allowing incremental modifications without affecting validated outputs.

- Templated Prompt Execution: PPL supports placeholders and conditional logic, dynamically generating prompts based on structured templates (detailed in Supplementary Materials).

6.2. Iterative Execution Algorithm and Workflow Adaptation

- Prompt Execution: The prompt is combined with the requirements and sent to the LLM for processing.

- Validation and Self-Assessment: The generated output is validated using automated tests and self-assessment mechanisms.

- Self-Healing and Refinement: If validation fails (e.g., due to a compilation error or unmet requirements), targeted feedback is provided, and the prompt is re-executed to improve the response.

- Human Oversight and Adjustments: If automatic corrections fail after a predefined number of attempts, the system escalates the issue to a developer for manual intervention.

- Incremental Workflow Updates: When new steps are introduced into the workflow, only the affected steps are re-executed, preserving validated outputs and avoiding unnecessary regeneration.

- Final Integration and Execution: AI-generated outputs are merged into the existing codebase while maintaining manual modifications. The final code is verified and ready for execution.

6.3. Automated Workflow Generation

- Analyze Task Description: The system extracts key requirements from natural language specifications.

- Define Optimal Prompt Sequence: The generator constructs a logical order of prompts using best practices for validation, execution, and error handling.

- Apply Execution Directives: Execution conditions, validation rules, and feedback loops are automatically assigned for optimal efficiency.

- Optimize for Performance: The generator eliminates redundant prompts and ensures efficient dependency resolution.

- Generate and Validate Workflow: A trial run is performed to verify correctness before deployment.
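For the prompt-sequencing and dependency-resolution steps, a generated workflow can at least be checked deterministically: if each step declares the steps whose output it consumes, Kahn's topological sort yields a valid execution order or reports a cycle. This is a classic algorithm swapped in as a sketch; the section does not state how the generator orders prompts, and the `StepOrdering` class is hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical check that orders workflow steps by their declared dependencies. */
final class StepOrdering {
    /**
     * Kahn's algorithm: repeatedly emit a step whose dependencies have all been emitted.
     * @param dependsOn step -> steps whose output it consumes (every step must be a key)
     * @throws IllegalArgumentException on an unknown step or a dependency cycle
     */
    static List<String> order(Map<String, List<String>> dependsOn) {
        Map<String, Integer> unmet = new HashMap<>();           // step -> dependencies not yet emitted
        Map<String, List<String>> dependents = new HashMap<>(); // reverse edges
        for (Map.Entry<String, List<String>> e : dependsOn.entrySet()) {
            unmet.put(e.getKey(), e.getValue().size());
            for (String dep : e.getValue()) {
                if (!dependsOn.containsKey(dep)) {
                    throw new IllegalArgumentException("Unknown step: " + dep);
                }
                dependents.computeIfAbsent(dep, k -> new ArrayList<>()).add(e.getKey());
            }
        }
        Deque<String> ready = new ArrayDeque<>();
        unmet.forEach((step, count) -> {
            if (count == 0) {
                ready.add(step);
            }
        });
        List<String> result = new ArrayList<>();
        while (!ready.isEmpty()) {
            String step = ready.poll();
            result.add(step);
            for (String next : dependents.getOrDefault(step, List.of())) {
                if (unmet.merge(next, -1, Integer::sum) == 0) { // last dependency satisfied
                    ready.add(next);
                }
            }
        }
        if (result.size() != dependsOn.size()) {
            throw new IllegalArgumentException("Workflow steps contain a dependency cycle");
        }
        return result;
    }
}
```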

7. Practical Applications of Workflow-Oriented AI Code Generation

7.1. Feature Implementation and Code Generation

- Automated Feature Development: The framework can generate boilerplate code, including REST API endpoints [39], service classes, and database models using reusable prompt pipelines.

- Improved Development Workflows: This approach is particularly beneficial in projects with established coding practices, where standardized templates make automation straightforward.

7.2. Code Quality and Optimization

- Automated Code Review: The framework analyzes codebases, checks adherence to coding standards, and flags potential bugs [40]. It generates detailed reports with suggestions for improvement, enabling a more structured and thorough review process.

- Refactoring Large Codebases: The framework enhances maintainability by breaking down large, complex methods into smaller, modular functions, improving readability and efficiency.

- Fixing Code Smells: The system identifies issues such as duplicated code, deep nesting, and long methods [5] and suggests structured refactoring improvements.

7.3. Error Resolution and Refactoring

- Automated Bug Fixing: The framework can detect and resolve simple bugs that do not require extensive debugging. Future iterations may incorporate advanced debugging capabilities.

- Replacing Deprecated APIs: AI-powered automation scans for outdated API usage and replaces it with modern alternatives, provided the necessary mappings from old to new APIs are available.

- Security Vulnerability Analysis: The system proactively scans for common security issues, such as SQL injection, and recommends best practices for mitigation.

7.4. Test Generation and Validation

- Automated Unit Test Creation: The framework generates unit tests based on the existing class structure and method signatures, improving test coverage and reducing manual effort.

- Enhanced Test-Driven Development (TDD): Developers can seamlessly integrate AI-generated test cases into their projects, ensuring higher code reliability.

- Automated Test Execution: The system runs tests on generated code to validate correctness, refining and regenerating code if necessary.

7.5. Documentation Generation

- Automated Code Documentation: The framework automatically documents REST API endpoints, class hierarchies, and method details in a structured format, ensuring alignment with the latest code updates. Documentation generation can be integrated into CI/CD pipelines, ensuring documentation remains consistent with ongoing code changes.

- Customizable Output Formats: Documentation can be generated in various formats (e.g., Markdown, HTML, PDF) to accommodate different project needs and improve maintainability.

8. Results

8.1. Automation Efficiency

- Fully Automated: The task is completed entirely without human intervention. The system generates, validates, and integrates the output based on predefined workflows. The generated results are of sufficient quality to eliminate the need for manual review.

- Partially Automated: The workflow automates most of the process, but a human must review or adjust the output before committing the results.

- Requiring Manual Intervention: The task is too complex or context-dependent to be fully automated. AI may provide recommendations, but a developer must manually implement or finalize the solution.

8.2. Measured Time Savings

- A 92% reduction in code review time, as AI automatically generated structured review reports.

- A 96% decrease in unit test creation time, enabling rapid test coverage expansion.

- A 100% reduction in manual effort for documentation updates, with API and system documentation kept consistent with the generated code. This process was fully automated, eliminating the need for manual documentation maintenance.

8.3. Self-Healing Mechanisms and Reliability

- Detects errors and provides feedback to AI.

- Regenerates code based on the failure context.

- Repeats until the issue is resolved or escalates to human intervention.

8.4. Workflow Modification: An Alternative to Code Refactoring

- Adjust workflow rules to change how code is generated.

- Add new workflow steps to extend functionality, such as automatically updating API documentation whenever a new endpoint is created.

8.4.1. Modifying Workflow Rules: REST to gRPC Migration

- Automatic code regeneration without modifying individual files.

- Consistent updates to service definitions, method signatures, and client stubs.

- An 80% faster migration compared to manual refactoring.

8.4.2. Adding New Workflow Steps: OpenAPI Documentation Generation

- Eliminated manual effort to keep documentation up to date.

- Ensured consistency between generated API specifications and actual implementations.

- Reduced documentation effort by 90%, as updates were now automated.

9. Discussion

9.1. Interpretation of Results

- Highly structured tasks (code reviews, test generation, API creation) are nearly fully automated, leading to significant time savings.

- Workflows are reusable, so the initial setup effort is offset by long-term efficiency gains.

- Workflow modifications replace traditional refactoring, making large-scale changes faster and more structured.

- Self-healing mechanisms improve reliability, reducing error rates and ensuring higher-quality AI-generated code.

- Incremental automation is possible: new steps (e.g., documentation updates) can be added seamlessly to existing workflows.

9.2. Comparison with Existing AI-Assisted Development Approaches

9.3. Limitations and Challenges

9.3.1. Scalability Challenges for Large, Enterprise-Level Codebases

- Optimizing context discovery algorithms to efficiently extract the minimal required code sections for LLMs.

- Implementing incremental code generation to reduce processing overhead by modifying only affected components rather than regenerating entire workflows.

- Exploring parallel execution of large-scale workflows.

9.3.2. Dependence on LLM-Generated Code and Potential Subtle Errors

- Enhancing multi-step validation with external static analysis tools and AI-assisted formal verification techniques.

- Leveraging ensemble AI models combining different LLMs or rule-based systems to improve robustness.

- Ensuring a human-in-the-loop review process for critical components before deployment.

9.3.3. Handling Domain-Specific Languages (DSLs)

- Extending the framework’s Prompt Pipeline Language (PPL) to support custom DSLs and structured metadata.

- Integrating specialized LLMs trained on domain-specific corpora to improve accuracy in DSL-based projects.

- Implementing custom transformation layers that automatically parse LLM-generated responses into valid DSL syntax.

9.3.4. Initial Learning Curve and Adoption Complexity

- Familiarity with prompt engineering and structured pipeline-based execution.

- Understanding how to modify workflows to achieve the desired automation outcome.

- Balancing human intervention with AI-driven processes to avoid over-reliance on automation.

- Providing predefined workflow templates for common development tasks (e.g., API generation, test automation, refactoring).

- Developing intuitive UI tools to assist in workflow creation and modification, reducing reliance on manual prompt configuration.

- Offering gradual onboarding strategies, allowing teams to transition from semi-automated to fully automated workflows in phases.

9.3.5. Handling Edge Cases and Complex Business Logic

- Highly domain-specific business logic that lacks clear formalization.

- Scenarios requiring nuanced human decision-making, such as architectural trade-offs or strategic code restructuring.

- Edge cases that involve implicit dependencies across multiple components.

- Hybrid AI and human review: AI handles structured parts while human developers oversee complex decisions.

- Enriching AI-generated prompts with historical project context to improve decision accuracy for complex scenarios.

- Introducing adaptive self-learning mechanisms, where the framework continuously refines AI behavior based on developer feedback.

9.4. Future Research Directions

- Dialog-Based Workflow Management: Enabling interactive AI that asks clarifying questions during requirement formulation to ensure all necessary details are provided upfront. This reduces ambiguities and improves the accuracy of AI-generated code.

- Dynamic Workflow Adaptation: Allowing workflows to adjust in real-time based on project changes, reducing the need for manual intervention and making AI-driven development more responsive.

- Human-in-the-Loop Automation: Investigating methods for incremental developer feedback throughout the workflow, allowing AI to refine outputs dynamically based on real-time user input rather than only escalating after failure.

- Self-Optimizing Workflows: Using reinforcement learning and historical data to continuously refine AI-generated workflows, improving efficiency and adaptability over time.

- Hybrid AI Models for Task Optimization: Combining lightweight AI models for routine tasks with advanced LLMs for complex decision-making, improving efficiency, performance, and cost-effectiveness.

- Scalability and Deployment in Production: Investigating how workflow-driven automation scales across large projects and multi-developer teams, ensuring maintainability, security, and efficiency in real-world applications.

- Testing Workflow Reliability: Developing systematic methods to verify AI-generated workflows across varying requirements, ensuring correctness, stability, and consistency.

- Interactive Workflow Optimization: Studying real-time feedback mechanisms that help developers refine AI-generated workflows dynamically, adapting to evolving requirements and constraints.

- AI-Assisted Code Explainability and Justification: Investigating methods to generate human-readable explanations of AI-generated code, providing insights into design choices and fostering trust and adoption among developers.

- AI-Driven Security Analysis and Compliance Enforcement: Investigating how AI can automatically detect security vulnerabilities and ensure compliance with industry regulations (e.g., OWASP, GDPR, HIPAA) before deployment.

- Cross-Project Knowledge Transfer: Developing AI techniques that learn from past projects to improve workflow recommendations, optimize context selection, and enable intelligent code reuse across multiple repositories.

10. Conclusions

10.1. Real-World Applications and Industry Adoption

- Automated API Development: Generating REST/gRPC services, API documentation, and unit and integration tests with minimal manual effort.

- Continuous Code Review: Integrating into CI/CD pipelines to analyze code changes, enforce coding standards, detect potential issues, and generate structured review reports, ensuring high-quality code before integration.

- AI-Assisted Refactoring: Standardizing modernization efforts by replacing legacy patterns, outdated tools, deprecated libraries, and inefficient code structures with optimized implementations, reducing technical debt and improving maintainability.

- Code Compliance and Security Enforcement: Ensuring generated code adheres to industry standards, security policies, and regulatory requirements (e.g., OWASP, GDPR, HIPAA), reducing security risks and improving auditability.

10.2. Future Framework Enhancements

- Broader Language Support: Expanding beyond Java to support Python, JavaScript, and C++, enabling adoption across diverse tech stacks.

- Embedding Code Debugging in the Workflow: Integrating AI-driven debugging tools directly into the workflow. After code generation, the debugger will automatically execute the generated code, capturing runtime insights such as variable states, execution flow, and errors. This feedback loop will iteratively refine the code before validation and integration, improving reliability and reducing the need for manual debugging.

- CI/CD Pipeline Integration: Enabling automated deployment of AI-generated code. Enhancements will focus on seamless integration with DevOps tools (e.g., Jenkins, GitHub Actions, GitLab CI/CD) to automate builds, testing, and deployment workflows. Additionally, post-deployment monitoring and rollback mechanisms will enhance reliability and performance.

- Workflow Visualization and Debugging: Introducing graphical representations of workflows for better execution tracking, issue analysis, and optimization. This may include a graphical debugger that maps workflow steps, highlights errors, and enables interactive debugging.

- Integration with Industry-Standard Tools: Strengthening compatibility with IDEs (e.g., IntelliJ, VS Code), testing frameworks (JUnit, Mocha), and project management platforms (Jira, GitLab) to ensure seamless adoption in development environments.

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- GitHub Copilot. Available online: https://github.com/features/copilot (accessed on 1 March 2025).

- Cursor, the AI Code Editor. Available online: https://www.cursor.com/ (accessed on 1 March 2025).

- Iusztin, P.; Labonne, M. LLM Engineer’s Handbook: Master the Art of Engineering Large Language Models from Concept to Production; Packt Publishing: Birmingham, UK, 2024.

- Raschka, S. Build a Large Language Model; Manning: New York, NY, USA, 2024.

- Fowler, M. Refactoring: Improving the Design of Existing Code, 2nd ed.; Addison-Wesley Professional: Boston, MA, USA, 2018.

- Bonteanu, A.M.; Tudose, C.; Anghel, A.M. Multi-Platform Performance Analysis for CRUD Operations in Relational Databases from Java Programs using Spring Data JPA. In Proceedings of the 13th International Symposium on Advanced Topics in Electrical Engineering (ATEE), Bucharest, Romania, 23–25 March 2023.

- Bonteanu, A.M.; Tudose, C.; Anghel, A.M. Performance Analysis for CRUD Operations in Relational Databases from Java Programs Using Hibernate. In Proceedings of the 2023 24th International Conference on Control Systems and Computer Science (CSCS), Bucharest, Romania, 24 May 2023.

- Bonteanu, A.M.; Tudose, C. Performance Analysis and Improvement for CRUD Operations in Relational Databases from Java Programs Using JPA, Hibernate, Spring Data JPA. Appl. Sci. 2024, 14, 2743.

- Tudose, C. Java Persistence with Spring Data and Hibernate; Manning: New York, NY, USA, 2023.

- Tudose, C. JUnit in Action; Manning: New York, NY, USA, 2020.

- Martin, E. Mastering SQL Injection: A Comprehensive Guide to Exploiting and Defending Databases; Independently Published; 2023; Available online: https://www.amazon.co.jp/-/en/Evelyn-Martin/dp/B0CR8V1TKH (accessed on 1 March 2025).

- Caselli, E.; Galluccio, E.; Lombari, G. SQL Injection Strategies: Practical Techniques to Secure Old Vulnerabilities Against Modern Attacks; Packt Publishing: Birmingham, UK, 2020.

- Imai, S. Is GitHub Copilot a Substitute for Human Pair-programming? An Empirical Study. In Proceedings of the ACM/IEEE 44th International Conference on Software Engineering: Companion Proceedings, Pittsburgh, PA, USA, 22–24 May 2022; pp. 319–321.

- Nguyen, N.; Nadi, S. An Empirical Evaluation of GitHub Copilot’s Code Suggestions. In Proceedings of the 2022 Mining Software Repositories Conference, Pittsburgh, PA, USA, 22–24 May 2022; pp. 1–5. [Google Scholar]

- Zhang, B.Q.; Liang, P.; Zhou, X.Y.; Ahmad, A.; Waseem, M. Demystifying Practices, Challenges and Expected Features of Using GitHub Copilot. Int. J. Softw. Eng. Knowl. Eng. 2023, 33, 1653–1672. [Google Scholar] [CrossRef]

- Yetistiren, B.; Ozsoy, I.; Tuzun, E. Assessing the Quality of GitHub Copilot’s Code Generation. In Proceedings of the 18th International Conference on Predictive Models and Data Analytics in Software Engineering, Singapore, 17 November 2022; pp. 62–71. [Google Scholar]

- Suciu, G.; Sachian, M.A.; Bratulescu, R.; Koci, K.; Parangoni, G. Entity Recognition on Border Security. In Proceedings of the 19th International Conference on Availability, Reliability and Security, Vienna, Austria, 30 July–2 August 2024; pp. 1–6. [Google Scholar]

- Jiao, L.; Zhao, J.; Wang, C.; Liu, X.; Liu, F.; Li, L.; Shang, R.; Li, Y.; Ma, W.; Yang, S. Nature-Inspired Intelligent Computing: A Comprehensive Survey. Research 2024, 7, 442. [Google Scholar] [CrossRef] [PubMed]

- El Haji, K.; Brandt, C.; Zaidman, A. Using GitHub Copilot for Test Generation in Python: An Empirical Study. In Proceedings of the 2024 IEEE/ACM International Conference on Automation of Software Test, Lisbon, Portugal, 15–16 April 2024; pp. 45–55. [Google Scholar]

- Tufano, M.; Agarwal, A.; Jang, J.; Moghaddam, R.Z.; Sundaresan, N. AutoDev: Automated AI-Driven Development. arXiv 2024, arXiv:2403.08299. [Google Scholar]

- Ridnik, T.; Kredo, D.; Friedman, I. Code Generation with AlphaCodium: From Prompt Engineering to Flow Engineering. arXiv 2024, arXiv:2401.08500. [Google Scholar]

- Alenezi, M.; Akour, M. AI-Driven Innovations in Software Engineering: A Review of Current Practices and Future Directions. Appl. Sci. 2025, 15, 1344. [Google Scholar] [CrossRef]

- Babashahi, L.; Barbosa, C.E.; Lima, Y.; Lyra, A.; Salazar, H.; Argôlo, M.; de Almeida, M.A.; de Souza, J.M. AI in the Workplace: A Systematic Review of Skill Transformation in the Industry. Adm. Sci. 2024, 14, 127. [Google Scholar] [CrossRef]

- Ozkaya, I. The Next Frontier in Software Development: AI-Augmented Software Development Processes. IEEE Softw. 2023, 40, 4–9. [Google Scholar] [CrossRef]

- Chatbot App. Available online: https://chatbotapp.ai (accessed on 1 March 2025).

- Using OpenAI o1 Models and GPT-4o Models on ChatGPT. Available online: https://help.openai.com/en/articles/9824965-using-openai-o1-models-and-gpt-4o-models-on-chatgpt (accessed on 1 March 2025).

- Varanasi, B. Introducing Maven: A Build Tool for Today’s Java Developers; Apress: New York, NY, USA, 2019. [Google Scholar]

- Sommerville, I. Software Engineering, 10th ed.; Pearson: London, UK, 2015. [Google Scholar]

- Anghel, I.I.; Calin, R.S.; Nedelea, M.L.; Stanica, I.C.; Tudose, C.; Boiangiu, C.A. Software Development Methodologies: A Comparative Analysis. UPB Sci. Bull. 2022, 83, 45–58. [Google Scholar]

- Ling, Z.; Fang, Y.H.; Li, X.L.; Huang, Z.; Lee, M.; Memisevic, R.; Su, H. Deductive Verification of Chain-of-Thought Reasoning. Adv. Neural Inf. Process. Syst. 2023, 36, 36407–36433. [Google Scholar]

- Li, L.H.; Hessel, J.; Yu, Y.; Ren, X.; Chang, K.W.; Choi, Y. Symbolic Chain-of-Thought Distillation: Small Models Can Also “Think” Step-by-Step. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 2665–2679. [Google Scholar]

- Cormen, T.H.; Leiserson, C.; Rivest, R.; Stein, C. Introduction to Algorithms; MIT Press: Cambridge, MA, USA, 2009. [Google Scholar]

- Smith, D.R. Top-Down Synthesis of Divide-and-Conquer Algorithms. Artif. Intell. 1985, 27, 43–96. [Google Scholar] [CrossRef]

- Daga, A.; de Cesare, S.; Lycett, M. Separation of Concerns: Techniques, Issues and Implications. J. Intell. Syst. 2006, 15, 153–175. [Google Scholar] [CrossRef]

- Walls, C. Spring in Action; Manning: New York, NY, USA, 2022. [Google Scholar]

- Ghosh, D.; Sharman, R.; Rao, H.R.; Upadhyaya, S. Self-healing systems—Survey and synthesis. Decis. Support Syst. 2007, 42, 2164–2185. [Google Scholar] [CrossRef]

- Claude Sonnet Official Website. Available online: https://claude.ai/ (accessed on 1 March 2025).

- Claude 3.5 Sonnet Announcement. Available online: https://www.anthropic.com/news/claude-3-5-sonnet (accessed on 1 March 2025).

- Fielding, R.T. Architectural Styles and the Design of Network-Based Software Architectures. Ph.D. Thesis, University of California, Irvine, CA, USA, 2000. [Google Scholar]

- Martin, R.C. Clean Code: A Handbook of Agile Software Craftsmanship; Pearson: London, UK, 2008. [Google Scholar]

| Task | Fully Automated | Partially Automated | Manual Intervention |
|---|---|---|---|
| Code Review | 80% | 20% | 0% |
| Documentation Generation | 100% | 0% | 0% |
| Unit Test Generation | 95% | 5% | 0% |
| API Endpoint Creation | 90% | 10% | 0% |
| Business Logic Implementation | 30% | 50% | 20% |
| Simple Bug Fixes | 20% | 50% | 30% |
| Complex Bug Fixes | 0% | 40% | 60% |

| Feature | GitHub Copilot | Workflow-Centric AI Framework (JAIG) |
|---|---|---|
| Code Generation | Suggests snippets based on local context | Collects relevant project context, generates structured code, validates outputs, and refines through iterative feedback |
| Workflow Automation | None; focused on single-task assistance | Full automation of multi-step workflows |
| Validation and Testing | Requires manual review and testing | Built-in validation, test generation, and self-healing mechanisms |
| Error Handling | Manual | Automatic rollbacks and iterative refinement |
| Integration with Human Developers | Complements manual coding | Automates structured workflows while allowing human intervention in complex cases |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sonkin, V.; Tudose, C. Beyond Snippet Assistance: A Workflow-Centric Framework for End-to-End AI-Driven Code Generation. Computers 2025, 14, 94. https://doi.org/10.3390/computers14030094
