1. Introduction

With the continuous advancement of information technology and the widespread application of the Internet of Things (IoT) and big data, digitization and intelligence have become important trends in the development of the manufacturing industry. The European Commission put forward the concept of Industry 5.0 in 2021, aiming to promote the sustainable development of the manufacturing industry through technological innovation and industrial upgrading [1]. Technologically, Industry 5.0 will integrate advanced technologies such as artificial intelligence, IoT, big data analysis, and cloud computing to form an efficient and synergistic industrial ecosystem [1]. Although 5G communication technology has driven the widespread application of technologies such as artificial intelligence in manufacturing, enabling the digitization and networking of production processes, it remains limited in aspects such as network intelligence and communication capability, which prevents it from fully meeting the requirements of Industry 5.0 [2,3]. To meet these requirements, sixth-generation (6G) networks have garnered attention from both the industrial and academic communities.

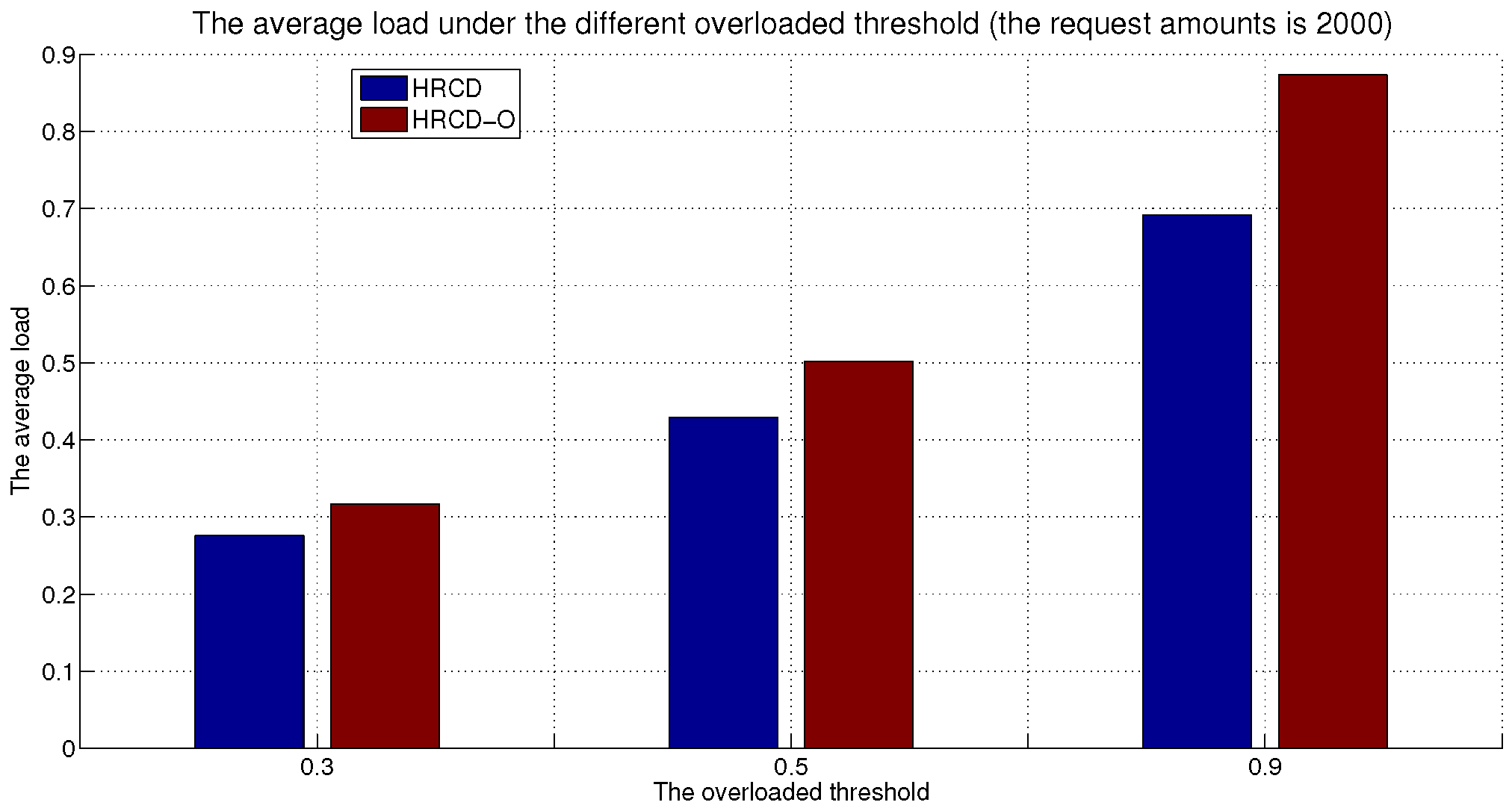

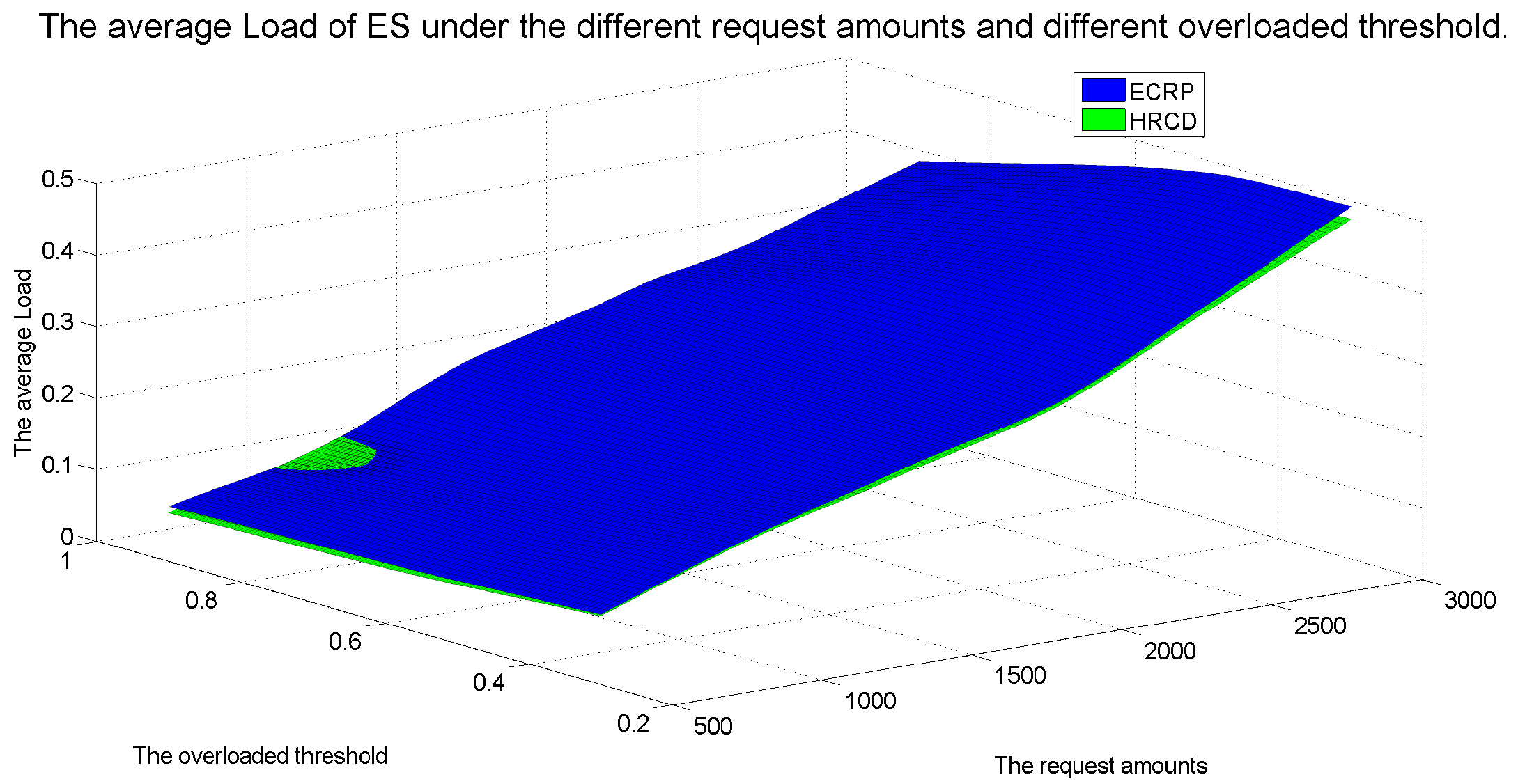

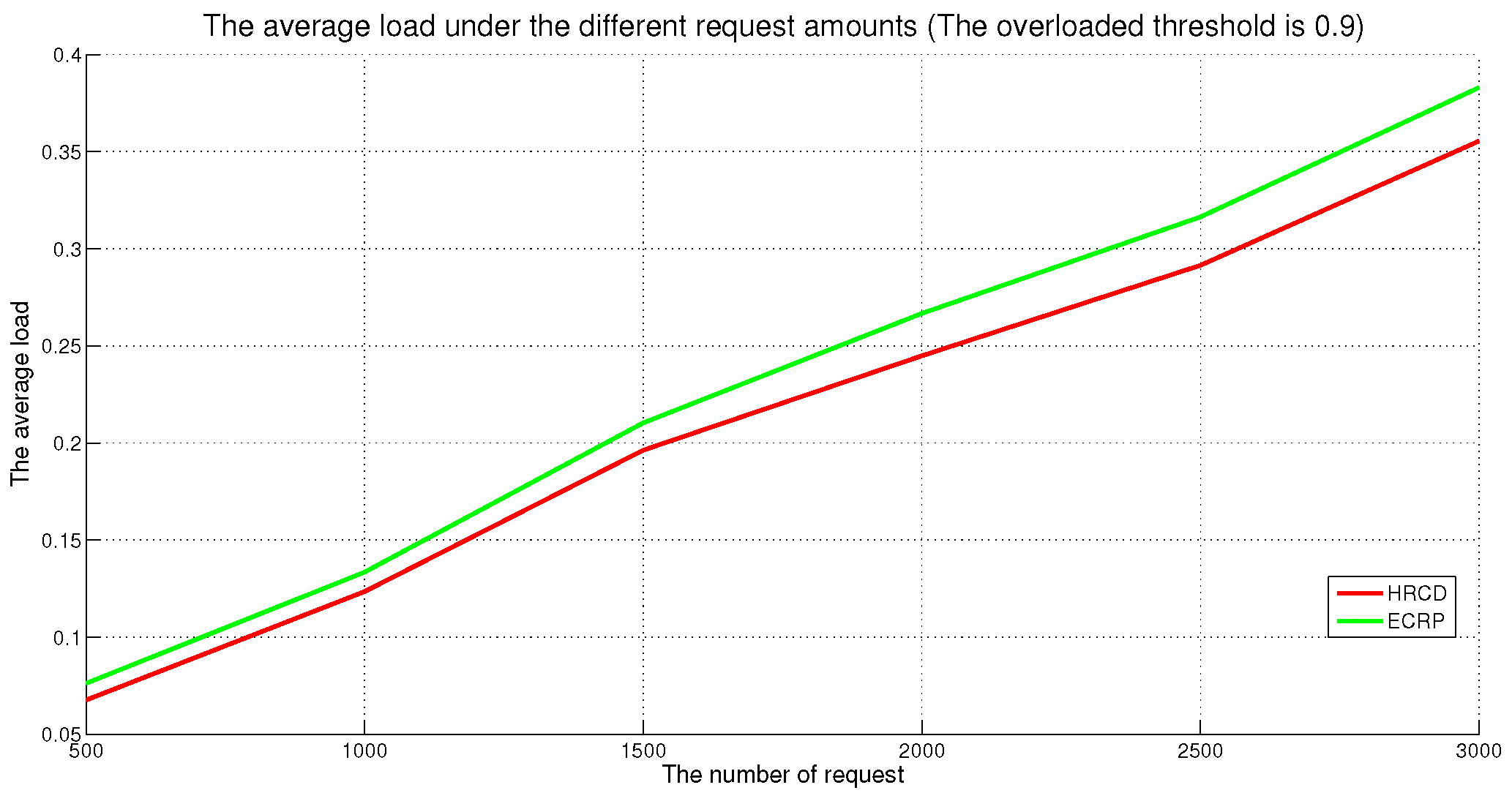

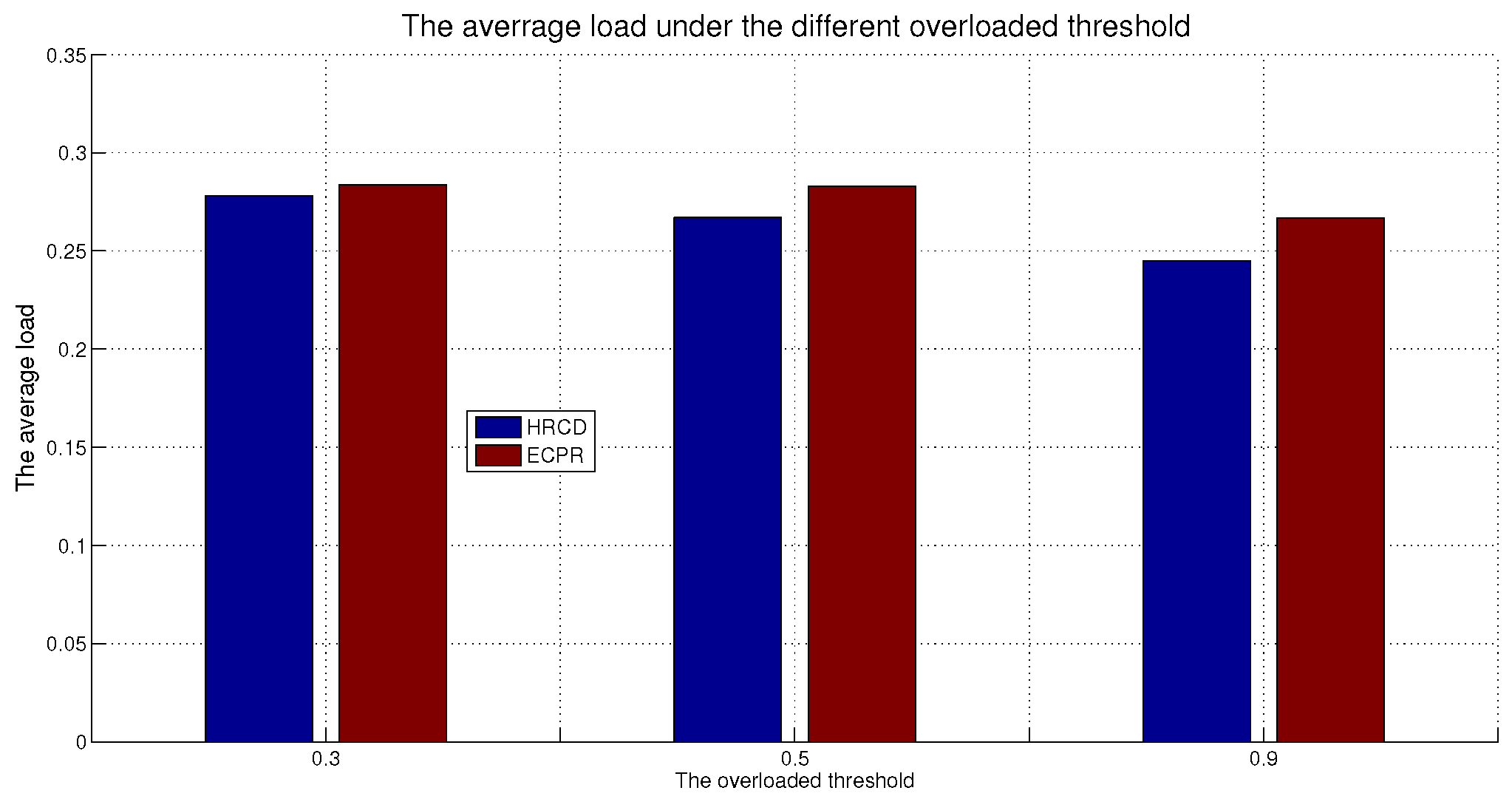

Regarding 6G networks, many scholars believe that future 6G networks will primarily evolve in two directions [2,3,4,5,6,7]: first, leveraging wireless signals from ground, air, and satellite equipment to build a globally connected network, and second, providing ubiquitous artificial intelligence and services. To support the evolution of 6G in these two directions, edge computing under 6G (6G Edge for short) has become a research hotspot in recent years [6,8,9]. Edge computing is a distributed computing architecture that moves large-scale services originally processed entirely by central nodes to distributed edge nodes [2,5,6]. Under 6G Edge, smartphones will still be used [2,3,4] and will remain an important component of terminal devices. The edge servers are distributed across different geographical areas. The edge infrastructure provider (EIP) can deploy edge servers equipped with small-scale cloud-like computing resources at base stations and access points in close proximity to end-users [10]. Under the pay-as-you-go pricing model, app vendors can hire resources on edge servers in a specific geographical area for hosting applications [11] or caching popular data [12,13], so that users within the edge servers’ coverage areas are served with low latency [14,15]. This distributed setup brings many benefits to edge computing, such as low latency and mobility support [8]. In this way, end-users’ increasingly stringent latency requirements can be fulfilled [16]. Many scholars believe that 6G Edge will achieve significant breakthroughs in areas such as Industry 5.0 and autonomous mobility [2,3,4,5,6,7,8].

The pay-as-you-go pricing model is a commonly used consumption pattern in Edge [3,4,5]. It is also a common model for using 6G edge computing services in large communities. Concentration of people and diversity of User Equipment (UE for short) are fundamental characteristics of large communities. To enhance the Quality of Service (QoS) for users in large communities, the EIP needs to deploy more base stations. While the adoption of 6G communication technology can effectively mitigate mutual interference among multiple base stations [17,18], the concentration of people and the diversity of devices pose new challenges for the pay-as-you-go consumption model. Firstly, the vast amount of communication data involved in edge computing, coupled with the diversity of devices, exerts tremendous service pressure on the edge [2,3,4,6]. Secondly, UE is usually carried by users, so its location changes dynamically as users move. UE must access the network through different access points to maintain its QoS, which increases the communication burden on edge nodes.

Multi-replica technology can effectively alleviate excessive service pressure in the context of 6G Edge [1,2,3,4,5,6,7,17,18]. Typically, these methods deploy replicas on edge devices situated close to UE [10,11,12,13,14,15,16]. In large communities characterized by high personnel density, however, the service pressure on edge nodes remains notably elevated. Compared to Edge Service nodes (ESs for short), UE is situated closer to the point of data access. By placing replicas on UE, we can not only reduce the burden on ESs but also further reduce data access latency. With the growing maturity of Device-to-Device (D2D for short) communication technology, UE participation in edge services has attracted increasing attention from scholars [19,20]. However, this approach also faces several challenges, primarily the following.

(1) Which UE should be served by which replicas. In Edge, replicas are positioned on ESs that are nearby and stationary relative to UE, offering data access services to UE within their communication radius. Consequently, the service target of replicas on ESs remains consistent. In contrast, the position of UE in large communities changes dynamically. When replicas are hosted on UE, it becomes difficult to determine which replicas serve which UE.

(2) Where to place replicas. ESs possess superior service capabilities and are typically situated at the “exit” points of the network topology in Edge. Consequently, selecting ESs as replica deployment nodes can improve replica hit rates. However, in large communities, UE positions are time-varying, and the network topology evolves dynamically.

(3) Which data to replicate. Data accessed by geographically dispersed users exhibits a geographical locality pattern [21,22,23]. To fulfill the data access requirements of various geographical units, traditional replica placement methods typically select “hotspot” data of specific types as replicas (hotspot data denotes data that has been frequently requested per unit time). However, in Edge, UE is time-varying and may be located in different geographical units at different times, making it difficult to measure the hotspot data of specific types.

In summary, because UE in large-scale communities under 6G edge computing is time-varying, and because the amount of UE and the mobile communication volume are growing rapidly, it is difficult to meet the needs of Edge by placing replicas on ESs alone. Therefore, this paper proposes a Hybrid Replica strategy based on the Community Division under 6G Edge, named the HRCD, which aims to further reduce access latency and improve system performance by placing replicas on both ESs and UE. The HRCD uses the Label Propagation Algorithm (LPA for short) to determine whether time-varying UE belongs to a stable service set and selects multiple suitable pieces of UE within the stable set as replica placement nodes. Meanwhile, it uses the uniform distribution to select appropriate hotspot data as replica data according to the data access situation. The main contributions of this paper are summarized as follows:

The HRCD employs the LPA to categorize time-varying terminals into multiple stable communities and implements a hybrid replica placement strategy. This strategy distributes replicas across both edge nodes and end devices, thereby achieving system load balancing and minimizing data access latency.

The HRCD determines the stable set that time-varying UE belongs to based on its inherent properties and data access patterns. To select replica placement nodes from this set, the HRCD utilizes fuzzy clustering analysis. Here, the inherent properties of UE refer to various indicators that influence a terminal node’s capacity to serve other nodes or itself, including the node’s load, residual computational power, available storage space, and topological distance from other nodes, among others.

The HRCD selects hotspot data for replica creation within the stable set by considering both the geographical proximity of nodes and data access patterns within the set. Based on these factors, the HRCD categorizes the data appropriately for replica creation.

We conduct an experimental evaluation of the HRCD’s performance in comparison to similar edge replication strategies to validate its superior performance.

The rest of this paper is organized as follows.

Section 2 reviews the related work.

Section 3 overviews the HRCD.

Section 4 presents the HRCD in detail.

Section 5 theoretically analyzes the HRCD’s validity and efficiency.

Section 6 experimentally evaluates the HRCD’s performance against similar edge replication strategies.

Section 7 summarizes this paper and points out future work.

3. The Implementation Scenarios of the HRCD

As depicted in Figure 1, the HRCD primarily addresses the communication scenarios of a large-scale community within 6G edge computing, characterized by high-density micro base stations. UE is carried by users, and its movement traverses the service areas of various base stations over time. To illustrate the implementation steps of the HRCD, consider UE1 as a terminal node that traverses multiple service areas. Drawing from real-world scenarios, UE1 moves sequentially through several ES service areas, as highlighted by the red line in Figure 1. For instance, if UE1 is carried by a corporate employee, its movement pattern is likely to follow a regular route between home and the workplace.

The specific implementation steps are as follows:

Step 1: Initialization Phase. Each UE determines its label based on the categories of its historical access data, while each ES determines its label based on the categories of the historical access data of all terminal nodes within its service area. The label of a UE or an ES is not a single fixed value but a set of labels.

Step 2: Label Exchange Phase. As shown in Figure 1, when UE1 moves into the service area of an ES, it exchanges labels with that ES, meaning that the ES distributes its labels to UE1. When exchanging labels among UE, the HRCD assumes that the UE within the service scope of the edge server remains constantly online and that the D2D link remains consistently accessible.

Step 3: Determining the Stable Set to Which UE1 Belongs. As shown in Figure 1, within the service area of an ES, the HRCD assesses the similarity of data access patterns and inherent attributes between UE1 and the other UE, thereby determining the stable service set to which UE1 belongs.

Step 4: Selecting Replica Placement Nodes within the Stable Set. Based on the predefined stable community sets, the HRCD selects appropriate sets of terminal devices from each community as replica placement nodes. It considers multiple factors that influence data access and load balancing, and employs fuzzy clustering analysis to select node sets from within the stable set for replica placement.

Step 5: Selecting Replica Objects within the Stable Set. The HRCD creates replicas based on the categories of data in the candidate placement nodes and the hotspot data within each category. When creating replicas, the HRCD first determines the category of the replica to be created, drawing it uniformly according to the proportion of requests for each category of data within the replica placement node set of the stable set. It then selects the data to replicate uniformly, according to the proportion of requests for each hotspot data item within that category.

Step 6: Data Access Phase. When accessing data, the HRCD prioritizes requests for data from within the stable set. If the request fails, it proceeds to request data from edge devices. For example, when UE1 accesses data, it first requests replica data from the replica placement node set within its stable set. If the request is unsuccessful, UE1 obtains replica services from the ES.

The HRCD prioritizes fetching data from the stable set. In the event of failure, it shifts to requesting data from edge nodes. If those attempts also fall through, it resorts to sourcing data from the cloud. This hybrid replica placement method stands out from traditional hybrid approaches. Instead of relying on predictive algorithms to pre-cache data on end devices, the HRCD leverages community detection algorithms and fuzzy clustering analysis to identify terminal nodes for replica placement, based on observed data access patterns. Moreover, when it comes to creating replicas, the HRCD takes a different tack from the traditional approach of prioritizing popular (or hotspot) data. Instead, it creates replicas based on data categories, taking into account access patterns within stable sets. This innovative approach helps enhance the success rate of replica access.
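The lookup order described above (stable set, then edge node, then cloud) can be sketched as follows. This is a minimal illustration rather than the paper's implementation: the function name `fetch`, the use of plain dictionaries as stores, and the returned tier names are all assumptions made for the example.

```python
from typing import Dict, Iterable, Optional, Tuple


def fetch(data_id: str,
          stable_set_replicas: Iterable[Dict[str, bytes]],
          edge_store: Dict[str, bytes],
          cloud_store: Dict[str, bytes]) -> Tuple[Optional[bytes], str]:
    """Return (payload, tier) using the order: stable set -> edge -> cloud.

    All stores are hypothetical in-memory dictionaries keyed by data id.
    """
    # 1) Replica placement nodes inside the requester's stable set (D2D).
    for node_store in stable_set_replicas:
        if data_id in node_store:
            return node_store[data_id], "stable_set"
    # 2) The edge server covering the requester.
    if data_id in edge_store:
        return edge_store[data_id], "edge"
    # 3) The remote cloud, reached through the edge server.
    if data_id in cloud_store:
        return cloud_store[data_id], "cloud"
    return None, "miss"
```

A request is served by the first tier that holds the data, so a replica hit inside the stable set never touches the ES.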

4. The HRCD Algorithm

This section explains the algorithm steps of the HRCD in detail. Section 4.2 introduces the steps for obtaining a stable set of UE. Section 4.3 addresses the problem of determining the set of replica placement nodes. Section 4.4 describes the selection of replica creation objects. Lastly, Section 4.5 details the steps required for placing replicas on UE.

4.1. The Table of Partial Parameters for the HRCD

Some of the parameters involved in the HRCD are shown in Table 2.

4.2. Algorithm for Obtaining Stable Set of Nodes

The core of the HRCD lies in accurately identifying which UE is served by the replicas. The requested data exhibits geographical locality at the edge [23], meaning that nodes within the service area of an edge server share similar data access requirements. In simpler terms, UE served by the same edge server has a higher likelihood of accessing identical data. Consequently, such UE is more strongly connected through its shared data access patterns. Leveraging complex network community theory [29,30], we can characterize the relationships among UE at the edge by analyzing data access similarity. Thus, through community discovery, we can identify the community, that is, the stable service set, to which each piece of time-varying UE belongs.

The HRCD determines the relationship between time-varying nodes based on their inherent properties and their actual data access. The inherent properties of UE are measured from the perspective of its users. For example, assume that two pieces of UE, $UE_i$ and $UE_j$, are used by two different users, and that each user has three inherent attributes: workplace, unit, and gender. If the two users share some attribute values, then when $UE_i$ and $UE_j$ move into the service area of the same ES, they have a higher probability than other UE of accessing the same data category; that is, $UE_i$ and $UE_j$ are closely connected and have a high probability of belonging to the same “community”. However, judging the data category accessed by UE solely by shared attributes has limitations, and other factors need to be considered. For example, because $UE_i$ and $UE_j$ still differ in some attribute, the data categories that they are concerned about may be different. To find similarities in data access, it is therefore also necessary to consider their historical access information.

According to the above considerations, the HRCD determines the stable service set as follows. Firstly, the HRCD defines node labels and ES labels. Then, each UE periodically records the labels of the ESs it encounters in a decentralized, adaptive manner. Finally, according to the recorded labels and inherent attributes of UE, the stable set to which each UE belongs is determined through the degree of similarity.

4.2.1. The Method of Obtaining the UE’s Label

We assume that each data item has a unique data type. Each $UE_i$ records the data it requests in its log. The HRCD obtains the most recent access records from the log of $UE_i$ and counts the frequency with which each data category appears in them. In the initial state, $UE_i$ takes the top data categories, that is, those with the highest frequencies, as its label.
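The label initialization just described can be sketched in Python. This is a minimal sketch under stated assumptions: the names `ue_label`, `window` (how many recent records are read from the log), and `top_n` (how many categories form the label) are hypothetical, and ties are broken alphabetically only to keep the example deterministic.

```python
from collections import Counter
from typing import List, Sequence


def ue_label(log_categories: Sequence[str], window: int, top_n: int) -> List[str]:
    """Pick the top_n most frequent data categories among the last `window` records."""
    # Only the most recent access records are considered.
    recent = log_categories[-window:] if window > 0 else []
    freq = Counter(recent)
    # Sort by descending frequency, then by name (assumed tie-break).
    ranked = sorted(freq.items(), key=lambda kv: (-kv[1], kv[0]))
    return [category for category, _ in ranked[:top_n]]
```

For instance, `ue_label(["news", "video", "video"], window=3, top_n=1)` yields `["video"]`.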

4.2.2. The Method of Obtaining the ES’s Label

According to the principle of geographic locality in edge computing [23], for simplicity, we use the data categories of the hotspot data within the ES service area as the label for the ES. The method is as follows.

Each ES periodically sorts the data requested within its service area in descending order of request count, takes the data items with the highest numbers of requests, and counts the frequency of each category of data among them. The ES then takes the data categories with the highest frequencies as its label. The number of categories in the ES label is determined by the average number of UE labels in the current service area together with a custom constant.

4.2.3. The Method of Label Propagation

UE can move within different ES service areas. If UE requests data from an ES (or from other nodes within the ES service area that hold replicas), it records the ES labels related to the requested data; i.e., the ES propagates its label to the UE. UE can store a fixed number of labels and replaces them using the least recently used (longest unused) policy [34]. Meanwhile, UE records the accessed data information in a log. When UE records a label that is new to its label record, it updates its count of new-label records. If the requested data does not exist in the ES, the data must be requested from the remote cloud through the ES. In this case, the UE still records the accessed data category as its historical access data.
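A small sketch of the label record may make the bookkeeping concrete. It assumes a fixed capacity with least-recently-used eviction and a counter of new-label records; the class name `LabelStore` and its methods are hypothetical, and a label that was evicted and is seen again is counted as new because it is no longer in the record.

```python
from collections import OrderedDict
from typing import List


class LabelStore:
    """Fixed-size label record for one UE with least-recently-used eviction."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._labels: "OrderedDict[str, None]" = OrderedDict()  # oldest use first
        self.new_label_count = 0  # number of new-label records

    def record(self, label: str) -> None:
        """Record a label propagated by an ES."""
        if label in self._labels:
            # Known label: only refresh its recency.
            self._labels.move_to_end(label)
            return
        # New label: count it, store it, evict the longest-unused one if full.
        self.new_label_count += 1
        self._labels[label] = None
        if len(self._labels) > self.capacity:
            self._labels.popitem(last=False)

    def labels(self) -> List[str]:
        """Return stored labels from least to most recently used."""
        return list(self._labels)
```

The counter kept here corresponds to the number of new-label records that the placement-node selection later uses as one of its indicators.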

4.2.4. Determine the Stable Service Set to Which the UE Belongs According to the Membership Degree

The HRCD takes into account both the inherent properties and historical access information of UE and determines the stable service set to which the UE belongs based on the membership degree of the UE. The membership function is shown in Equation (3):

$$\mu(UE_i, UE_j) = \alpha \, S_d(UE_i, UE_j) + \beta \, S_a(UE_i, UE_j) \qquad (3)$$

Here, $\alpha$ and $\beta$ are weight coefficients, and $S_d(UE_i, UE_j)$ and $S_a(UE_i, UE_j)$ are the data access similarity and intrinsic attribute similarity of $UE_i$ and $UE_j$, respectively.

The data access similarity $S_d$ is obtained as follows. Take the UE within the service area of an ES as the domain of discourse, and use every label recorded by the UE as an indicator. For each $UE_i$, the proportion of the $k$-th data type label among its recorded labels is computed by Equation (4). Given $UE_i$ and $UE_j$, the similarity of their access data is then obtained from these proportions by Equation (5).

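The intrinsic attribute similarity $S_a$ is obtained as follows. Let $a_i^k$ denote the $k$-th attribute of $UE_i$ and $n$ the number of attributes, so that the fixed attribute set of $UE_i$ is $A_i = \{a_i^1, a_i^2, \dots, a_i^n\}$. Given $UE_i$ and $UE_j$, the similarity of their intrinsic attributes is obtained by Equation (6):

$$S_a(UE_i, UE_j) = \frac{1}{n} \sum_{k=1}^{n} \delta_k \qquad (6)$$

where $\delta_k = 1$ when $a_i^k = a_j^k$ and $\delta_k = 0$ when $a_i^k \neq a_j^k$. For example, with $n = 3$, if $a_i^1 = a_j^1$ and $a_i^2 = a_j^2$ but $a_i^3 \neq a_j^3$, the users of $UE_i$ and $UE_j$ have the same work unit and residential unit, and their inherent attribute similarity is $2/3$.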

When dividing UE into stable service sets, the HRCD first defines a threshold according to the actual situation. Given $UE_i$, it obtains the membership degree between $UE_i$ and each of the other nodes within the same service area according to Formula (3). The nodes whose membership degree satisfies the threshold condition are taken as the stable service set to which $UE_i$ belongs. The condition is evaluated against the average membership degree between $UE_i$ and the other UE within the service area, which is given by Formula (7).

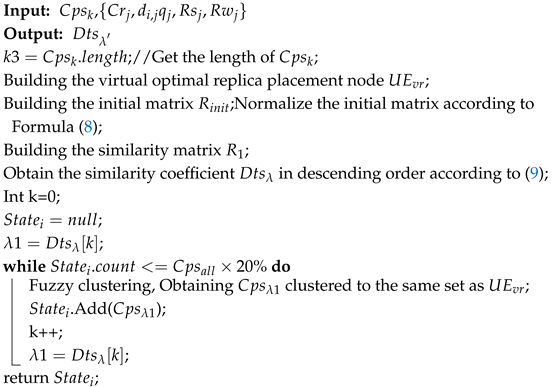

According to the above description, the pseudocode for the HRCD to obtain the stable service set to which a node belongs is shown in Algorithm 1.

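| Algorithm 1: The algorithm for obtaining the stable set of UE |

Input: the target $UE_i$, the UE within the same ES service area, the weight coefficients, and the threshold

Output: the stable set to which $UE_i$ belongs

1. Obtain the proportion of the $k$-th class labels according to (4);
2. Obtain the data access similarity between $UE_i$ and the other nodes in the service area according to (5);
3. Obtain the intrinsic attribute similarity between $UE_i$ and the other nodes in the service area according to (6);
4. Obtain the membership degree between $UE_i$ and the other nodes in the service area according to (3);
5. Obtain the average membership degree between $UE_i$ and the other UE in the service area according to (7);
6. Obtain the stable set according to the threshold condition;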
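The flow of Algorithm 1 can be sketched in Python as follows. Several parts are assumptions, because the exact formulas are not recoverable from the text above: cosine similarity stands in for the data access similarity of Equation (5), the attribute similarity is the fraction of matching attributes, and a node joins the stable set when its membership degree is at least `theta` times the average membership degree. All function and parameter names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def proportions(label_counts: Dict[str, int]) -> Dict[str, float]:
    """Share of each label among all labels recorded by one UE."""
    total = sum(label_counts.values())
    return {k: v / total for k, v in label_counts.items()} if total else {}


def data_similarity(p: Dict[str, float], q: Dict[str, float]) -> float:
    """ASSUMPTION: cosine similarity is used in place of Equation (5)."""
    dot = sum(v * q.get(k, 0.0) for k, v in p.items())
    norm = math.sqrt(sum(v * v for v in p.values())) * math.sqrt(sum(v * v for v in q.values()))
    return dot / norm if norm else 0.0


def attribute_similarity(a: Sequence[str], b: Sequence[str]) -> float:
    """Fraction of attribute positions on which two users agree."""
    if not a or len(a) != len(b):
        raise ValueError("attribute vectors must be non-empty and equally long")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def stable_set(target: str,
               label_counts: Dict[str, Dict[str, int]],
               attributes: Dict[str, Sequence[str]],
               alpha: float, beta: float, theta: float) -> List[str]:
    """Return the UE whose membership degree with `target` passes the threshold."""
    p_target = proportions(label_counts[target])
    membership = {}
    for other in label_counts:
        if other == target:
            continue
        s_d = data_similarity(p_target, proportions(label_counts[other]))
        s_a = attribute_similarity(attributes[target], attributes[other])
        membership[other] = alpha * s_d + beta * s_a  # weighted combination
    if not membership:
        return []
    average = sum(membership.values()) / len(membership)
    # ASSUMPTION: the threshold condition is "membership >= theta * average".
    return sorted(n for n, m in membership.items() if m >= theta * average)
```

With equal weights, two UE that share label proportions and two of three attributes end up in the same stable set, while a UE with disjoint labels and attributes is left out.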

4.3. The Algorithm for Obtaining Replica Placement Nodes

Determining the optimal replica placement node is pivotal in the process of replica placement. Various factors, including a node’s service capacity, topological position, and load, must be taken into account when selecting replicas. Typically, replica placement algorithms opt for a single “optimal” node for replica deployment. However, given the dynamic nature of UE in edge computing, selecting a single “optimal” node may result in that node being relocated outside the service area of an ES, thereby rendering it unable to continue serving other UE.

To tackle this challenge, the HRCD employs fuzzy clustering analysis to identify a comprehensive set of replica placement nodes. The detailed steps involved are outlined below.

4.3.1. The Evaluation Indicators

For any stable service set under an ES, take any node in the set and consider the following indicators: the number of new-label records, the backhaul to the ES, the node load, the remaining space for placing replicas, and the remaining bandwidth of the node. The HRCD uses these five quantities as the evaluation indicators.

4.3.2. Building the Optimal Virtual Placement Node

Suppose there exists a virtual UE, denoted as $UE_v$, in the stable service set whose indicators take the best values found in the set. That is, $UE_v$ has the smallest backhaul, the smallest load, the largest remaining storage space, and the largest remaining bandwidth. Meanwhile, the number of new-label records represents the number of times a new data type has been received. According to the principle of data locality under Edge, there is a higher probability of receiving a new data type when the UE moves to a new ES. Therefore, this count can serve as the frequency at which the UE moves in and out of different ESs, and $UE_v$ is given the smallest count, that is, the minimum movement frequency. Clearly, $UE_v$ is the “optimal” replica placement node in the set. However, it is a virtual node and cannot hold replicas. So, the HRCD uses fuzzy clustering analysis to find UE with high similarity to $UE_v$ in the stable service set as a candidate set of replica placement nodes.

4.3.3. Building the Initial Matrix

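The HRCD takes the nodes of the stable service set, together with the virtual node $UE_v$, as the domain and the five indicators above as the evaluation indicators to construct the initial matrix $X = (x_{ik})$ (the initial matrix is shown in Table 3), in which $x_{ik}$ is the value of the $k$-th indicator of the $i$-th node.

The HRCD uses the quantity product method to normalize the dimensions of the different indicators in $X$ and establishes the fuzzy similarity matrix $R = (r_{ij})$. The quantity product method is shown in Formula (8):

$$r_{ij} = \begin{cases} 1, & i = j \\ \dfrac{1}{M} \sum_{k=1}^{m} x_{ik}\, x_{jk}, & i \neq j \end{cases} \qquad (8)$$

where $r_{ij}$ represents the similarity between the $i$-th and $j$-th nodes in $X$, $x_{i2}$ represents the second indicator of the $i$-th node, $m$ is the number of indicators, and $M = \max_{i \neq j} \sum_{k=1}^{m} x_{ik}\, x_{jk}$.

According to Formula (8), $X$ can be transformed into the similarity matrix $R$.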
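As an illustration of the quantity product method, the sketch below builds a fuzzy similarity matrix from an indicator matrix using the standard form of the method: off-diagonal entries are inner products divided by the largest off-diagonal inner product, and diagonal entries are 1. The function name is hypothetical, and the sketch assumes the indicators are non-negative and already scaled so that larger inner products mean more similar nodes.

```python
from typing import List


def quantity_product_similarity(x: List[List[float]]) -> List[List[float]]:
    """Build a fuzzy similarity matrix R from indicator rows x (one row per node)."""
    n = len(x)
    # Inner product of every pair of indicator rows.
    dots = [[sum(a * b for a, b in zip(x[i], x[j])) for j in range(n)] for i in range(n)]
    off_diagonal = [dots[i][j] for i in range(n) for j in range(n) if i != j]
    m = max(off_diagonal) if off_diagonal else 0.0
    if m <= 0:
        raise ValueError("need at least two rows with a positive inner product")
    # Diagonal is 1 by definition; other entries are scaled into [0, 1].
    return [[1.0 if i == j else dots[i][j] / m for j in range(n)] for i in range(n)]
```

Dividing by the largest off-diagonal inner product keeps every entry within [0, 1], which the subsequent cut-set clustering relies on.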

4.3.4. Obtaining the Node Set of Replica Placement

The HRCD uses the fuzzy clustering analysis method to cluster the similarity matrix $R$. According to $\lambda$-cut set theory [35,36], $\lambda$ takes the non-repeating values of $R$ arranged in descending order, $\lambda_1 > \lambda_2 > \dots > \lambda_t$.

In line with its goals, the HRCD selects a set of nodes with high similarity to the virtual optimal node $UE_v$ as candidate nodes for replica placement. According to $\lambda$-cut set theory, the number of nodes in a cluster is closely related to the value of $\lambda$. A larger $\lambda$ means that less UE falls into the same set as $UE_v$. With less UE participating in replica services, the service pressure on these nodes easily increases. A smaller $\lambda$ means that more devices are placed in the same set as $UE_v$. Although placing replicas on more devices can effectively balance the load, it can seriously waste storage resources. Therefore, the HRCD uses the Pareto distribution [37] to determine the appropriate $\lambda$ threshold: as $\lambda$ decreases, once the number of devices in the same set as $UE_v$ reaches 20% of the total number of UE in the stable service set, clustering stops, and the cluster set at that point is the candidate set for placing replicas.

Meanwhile, during clustering, the HRCD also records the node set obtained at each value of $\lambda$, that is, at each clustering iteration.

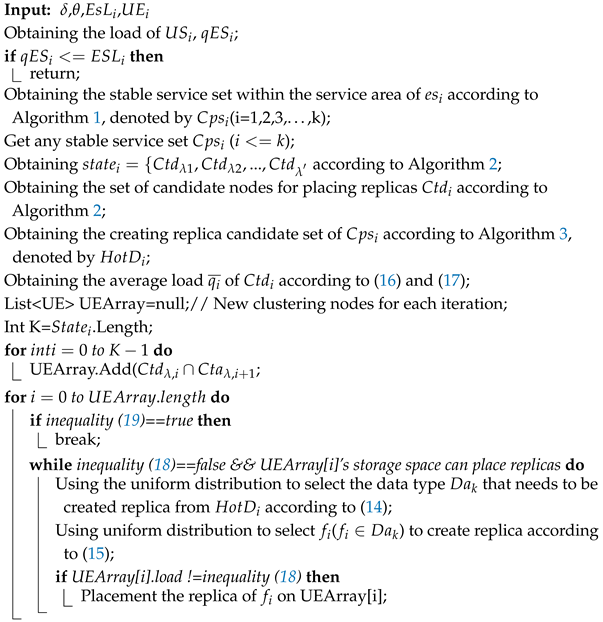

According to the above description, the algorithm pseudocode for obtaining candidate sets for replica placement within a stable set is as follows in Algorithm 2.

| Algorithm 2: The algorithm for obtaining candidate replica placement nodes |

![Computers 14 00454 i001 Computers 14 00454 i001]() |
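Since the listing for Algorithm 2 is not reproduced here, the following Python sketch illustrates the clustering step under explicit assumptions. It computes the max-min transitive closure of the similarity matrix (a standard step in fuzzy clustering that the text does not spell out), lowers the cut level through the distinct closure values, and stops once the nodes grouped with the virtual node reach a given fraction of the real nodes. The function names, the `virtual` index parameter, and the `fraction` default of 0.2 are hypothetical choices for this example.

```python
from typing import List, Tuple


def transitive_closure(r: List[List[float]]) -> List[List[float]]:
    """Max-min transitive closure of a reflexive, symmetric fuzzy matrix."""
    n = len(r)
    current = [row[:] for row in r]
    while True:
        # Max-min composition of the matrix with itself.
        nxt = [[max(min(current[i][k], current[k][j]) for k in range(n))
                for j in range(n)] for i in range(n)]
        if nxt == current:
            return current
        current = nxt


def candidate_set(r: List[List[float]], virtual: int,
                  fraction: float = 0.2) -> Tuple[List[int], List[Tuple[float, List[int]]]]:
    """Lower the cut level until enough nodes cluster with the virtual node.

    Returns the candidate node indices and, per cut level, the newly joined nodes.
    """
    n = len(r)
    closure = transitive_closure(r)
    # Distinct similarity values, largest first, serve as cut levels.
    lambdas = sorted({closure[i][j] for i in range(n) for j in range(n)}, reverse=True)
    needed = fraction * (n - 1)  # the virtual node itself is not counted
    members: List[int] = []
    seen = set()
    history = []
    for lam in lambdas:
        members = [j for j in range(n) if j != virtual and closure[virtual][j] >= lam]
        new_nodes = [j for j in members if j not in seen]
        seen.update(new_nodes)
        history.append((lam, new_nodes))  # clustering order, usable as priority
        if len(members) >= needed:
            break
    return members, history
```

The recorded history keeps the order in which nodes joined the virtual node's cluster, which is the information the placement step uses to prioritize nodes.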

4.4. The Method of UE Selecting the Data to Create Replica

Given that the label record of UE captures its historical movement across different ESs, the HRCD leverages this information to optimize replica placement. Additionally, replicating popular data reduces data access latency and helps balance the load. Therefore, the HRCD identifies and replicates hotspot data, categorized by data type, based on label exchange dynamics. The process involves the following steps:

Step 1: In a decentralized and adaptive manner, the candidate replica placement nodes tally the number of data requests within their respective stable service sets.

Given two nodes $UE_i$ and $UE_j$ in the same stable service set, and assuming that a replica of data item $d$ has been placed on $UE_j$, each time $UE_i$ requests $d$ from $UE_j$, the request count recorded for $d$ is increased by 1.

Step 2: Each candidate replica placement node periodically obtains the data request situation.

The HRCD uses Formula (10) to count the requests in the stable service set according to data type.

Step 3: According to Formula (10), arrange the data types in descending order of request count, so that the $k$-th entry of the resulting sequence is the data type with the $k$-th largest request count.

Step 4: Select the candidate data sets for creating replicas based on data types.

Obtain the total number of requests for the different data types in the stable service set during a unit of time according to Formula (10). The proportion of requests for the $k$-th data type is the request count of that type divided by this total.

According to (13), obtain the number of candidate replicas that need to be created in the stable service set. Formula (13) involves a user-defined coefficient and the average remaining storage capacity of all UE in the stable service set, and $k$ ranges up to the length of the ordered sequence of data types obtained in Step 3.

According to (14), obtain the proportion of the $k$-th data type among the candidate replicas.

When selecting candidate replica sets, the HRCD selects hotspot data from the different data types, based on the proportion of requests for each category of data, and adds them to the candidate replica set of the stable service set.

Based on the above description, the pseudocode by which UE selects candidate data to create replicas is shown in Algorithm 3.

| Algorithm 3: The algorithm for selecting candidate data to create replicas |

Input: the data request status of the stable service set during a unit of time; the remaining space for nodes to place replicas

Output: the candidate replica set

Phase 1: Each candidate replica placement node counts the request information of its replicas in a decentralized manner.

Phase 2:

1. In the stable service set, the number of requests is periodically counted according to data type;
2. Obtain the proportion of requests for each category of data during a unit of time according to (12);
3. Arrange the data categories in descending order of request count;
4. Obtain the number of candidate replicas according to (14);
5. Add data of the different categories to the candidate replica set according to the number of requests;
6. Return the candidate replica set;
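Phase 2 of Algorithm 3 can be illustrated with the Python sketch below. It is an assumption-laden example: `total_slots` stands in for the number of candidate replicas that the paper derives from a user-defined coefficient and the average remaining storage, each category receives a quota proportional to its share of requests, and rounding means the quotas may not sum exactly to `total_slots`. The function name and the input layout are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def candidate_replicas(requests: Dict[str, Tuple[str, int]], total_slots: int) -> List[str]:
    """Choose hotspot data per category in proportion to category request share.

    `requests` maps a data id to (category, request count in the unit of time).
    """
    by_category = defaultdict(list)
    for data_id, (category, count) in requests.items():
        by_category[category].append((count, data_id))
    category_totals = {c: sum(cnt for cnt, _ in items) for c, items in by_category.items()}
    grand_total = sum(category_totals.values())
    if grand_total == 0 or total_slots <= 0:
        return []
    chosen: List[str] = []
    # Visit categories in descending order of request count.
    for category in sorted(category_totals, key=lambda c: (-category_totals[c], c)):
        # Quota proportional to the category's share of all requests (rounded).
        quota = round(total_slots * category_totals[category] / grand_total)
        hottest = sorted(by_category[category], key=lambda t: (-t[0], t[1]))
        chosen.extend(data_id for _, data_id in hottest[:quota])
    return chosen
```

Allocating by category share rather than by global popularity keeps less popular categories represented when a stable set requests them regularly.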

4.5. The Replica Placement Algorithm

The HRCD employs a uniform distribution approach to pick data from the candidate replica set for replication purposes. Additionally, it determines the optimal nodes for placing these replicas by considering both the load of the candidate placement nodes and the order of clustering derived from fuzzy clustering analysis. The specific steps involved are outlined below.

4.5.1. Obtaining the Priority of Placing Replicas in the Node Set According to the Order of Fuzzy Clustering

When selecting the replica placement node, the HRCD prioritizes UE with high similarity to the virtual optimal node $UE_v$.

During the clustering process, as the number of iterations increases, candidate placement nodes are gradually clustered into the same set as $UE_v$. The earlier a node is clustered into this set, the higher its similarity with $UE_v$. Therefore, the HRCD obtains the priority of candidate nodes as replica placement nodes from the order of fuzzy clustering. The method is as follows.

Assume $\lambda_1 > \lambda_2 > \lambda_3$, and let the cluster sets corresponding to $\lambda_1$, $\lambda_2$, and $\lambda_3$ be $C_1$, $C_2$, and $C_3$, respectively. When $\lambda = \lambda_2$, $C_2 \setminus C_1$ is the newly clustered node set. When $\lambda = \lambda_3$, $C_3 \setminus C_2$ is the newly clustered node set. When selecting a replica placement node, the nodes in $C_1$ are prioritized.

4.5.2. Selecting Data Objects to Create Replicas

When creating a replica, the HRCD first determines the data type of the replica and then selects the data item to replicate. The HRCD first obtains the proportion of each type of data according to Formula (14) and then uses the uniform distribution to determine the data type for creating replicas. Then, according to Formula (15), it selects a data item $d$ of that type from the candidate replica set using the uniform distribution and creates a replica:

$$P(d) = \frac{q(d)}{\sum_{d' \in D_k} q(d')} \qquad (15)$$

In (15), the denominator is the number of requests during a unit of time for all hotspot data of the selected type $k$ in the candidate replica set, written $D_k$ here, and the numerator $q(d)$ is the number of requests for $d$ during a unit of time. According to (14) and (15), when selecting data to create the replica, the more often a data item of the selected type is accessed, the higher its probability of being selected as the replica data.
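One way to read the two-stage selection above is as roulette-wheel sampling: a uniformly distributed random number is mapped onto cumulative request shares, first over data types and then over the hotspot data of the chosen type. The sketch below follows that reading; it is an interpretation rather than the authors' code, and the names `roulette_pick` and `pick_replica` are hypothetical.

```python
import random
from typing import Dict, Optional, Tuple


def roulette_pick(weights: Dict[str, float], rng: random.Random) -> Optional[str]:
    """Pick a key with probability proportional to its weight using one uniform draw."""
    total = sum(w for w in weights.values() if w > 0)
    if total <= 0:
        return None
    u = rng.random() * total  # uniform on [0, total)
    running = 0.0
    last = None
    for key, w in weights.items():
        if w <= 0:
            continue
        running += w
        last = key
        if u < running:
            return key
    return last  # guards against floating-point round-off


def pick_replica(type_requests: Dict[str, float],
                 item_requests: Dict[str, Dict[str, float]],
                 rng: random.Random) -> Optional[Tuple[str, str]]:
    """Stage 1: choose a data type; stage 2: choose a data item of that type."""
    data_type = roulette_pick(type_requests, rng)
    if data_type is None:
        return None
    item = roulette_pick(item_requests.get(data_type, {}), rng)
    return None if item is None else (data_type, item)
```

Passing a seeded `random.Random` instance makes the selection reproducible in experiments.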

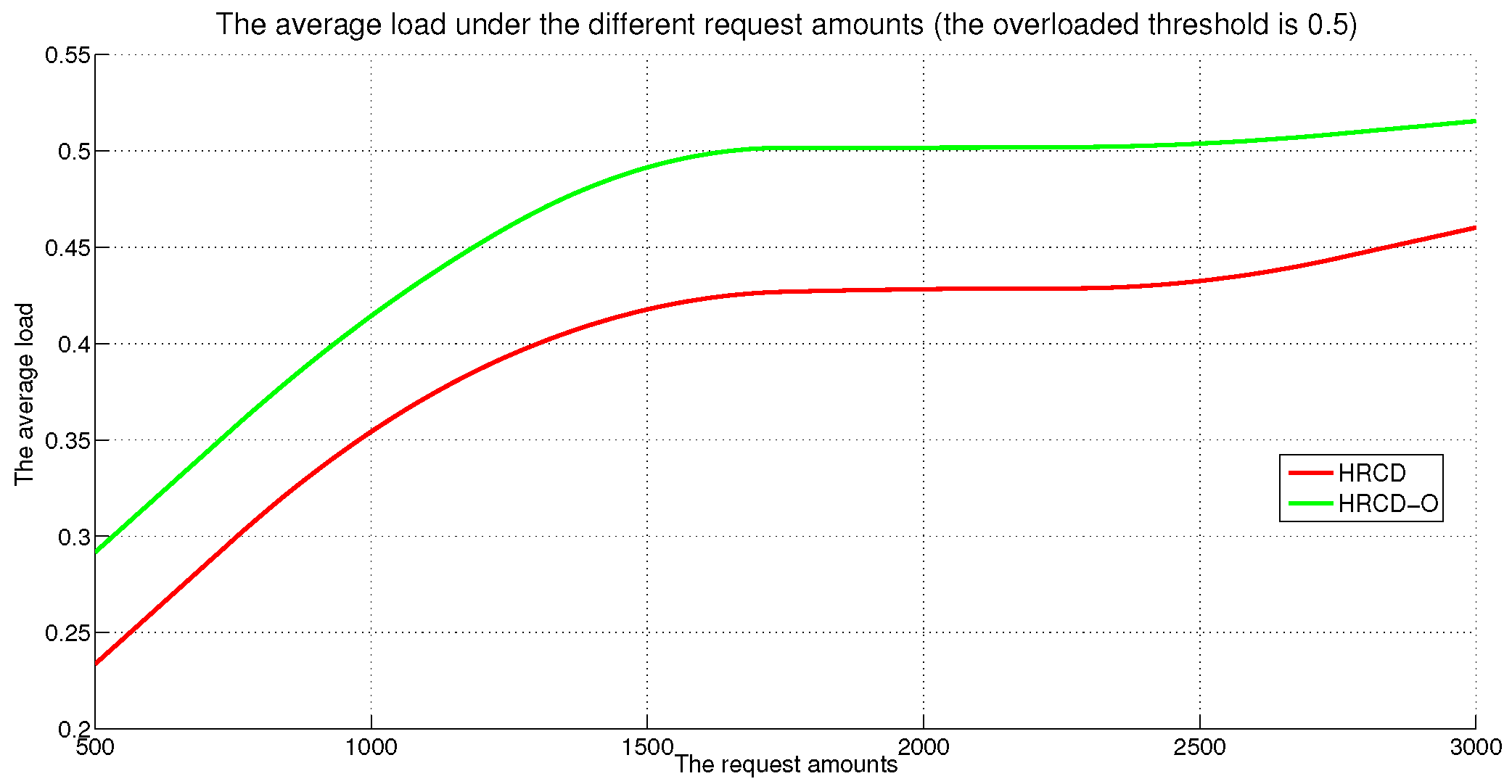

4.5.3. Obtaining the Number of Replicas Created According to the Node Load

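Many factors can cause changes in node load. The HRCD only considers the load caused by requests. Assume that the maximum number of requests that $UE_i$ in a stable service set $S$ can handle during a unit of time is $Q_i^{\max}$. The node load is

$$L_i = \frac{Q_i}{Q_i^{\max}} \qquad (16)$$

where $Q_i$ represents the total number of requests during a unit of time for all replicas on $UE_i$. The average load of $S$ can be expressed as

$$\bar{L}_S = \frac{1}{|S|} \sum_{UE_i \in S} L_i \qquad (17)$$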

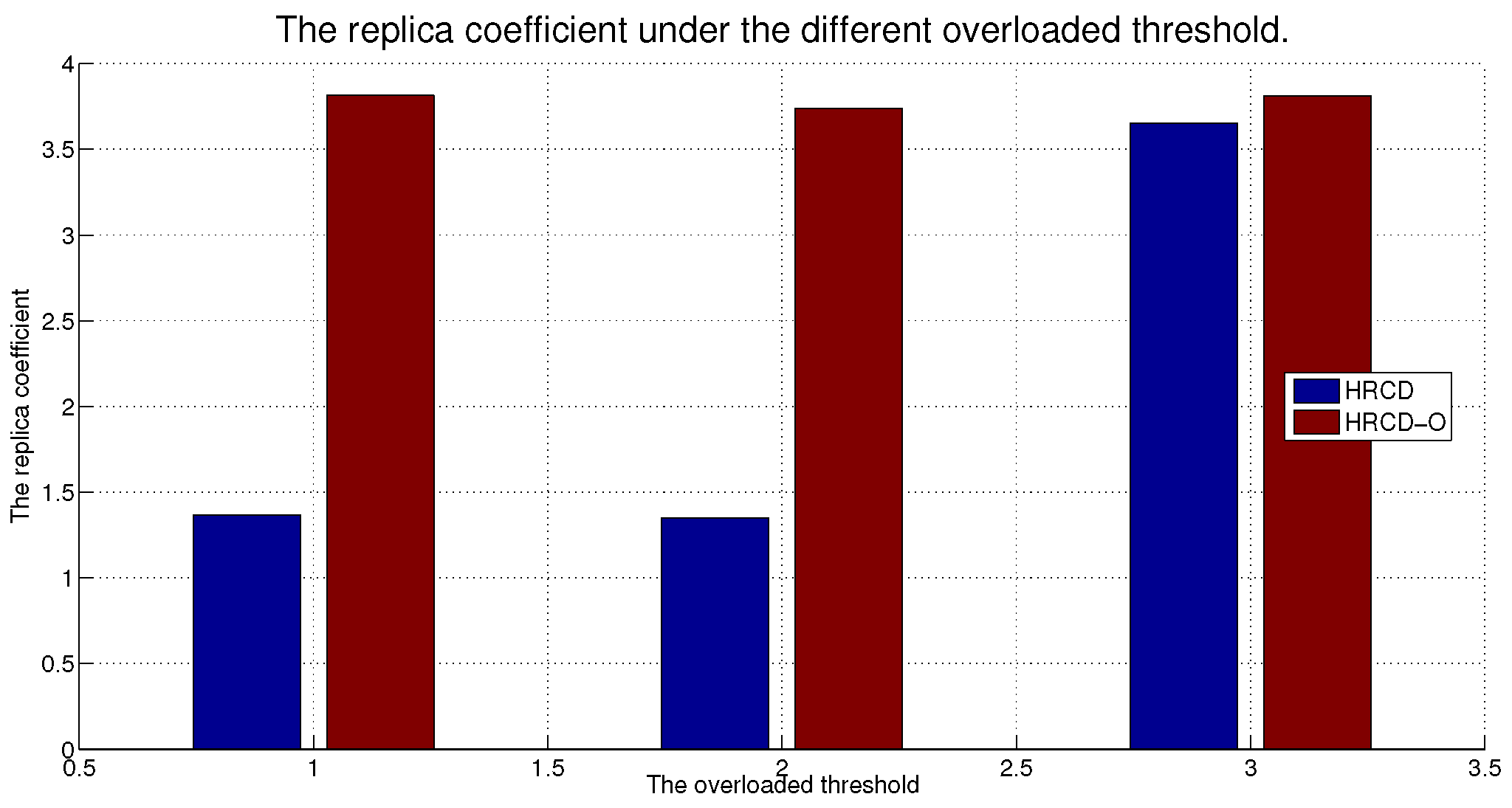

The HRCD defines a threshold for each UE that can be dynamically adjusted according to actual situations. As the number of requests during a unit of time increases, the node load increases too. Load balancing is one of the important goals of the HRCD. Since placing replicas on a node is an important cause of increased requests, when a node satisfies inequality (18), the HRCD stops placing replicas on it to reduce its load.

The threshold in (18) is called the overloaded threshold of the node. When the load of a replica node reaches its overloaded threshold, it needs to stop responding to data requests. Although more replicas can balance the load of ESs, they also increase the maintenance burden and waste storage space. Therefore, the HRCD sets a lightweight load for each ES. When the request load of an ES is less than its lightweight load, there is no need to create a replica within any stable set. Meanwhile, the HRCD also defines a lightweight load threshold for a stable service set. After placing replicas on the UE within a stable service set, if the average load of the set satisfies inequality (19), the HRCD stops placing replicas on any node within that set.
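The load checks above can be sketched as simple predicates. This is a hedged illustration: node load is taken as handled requests divided by the maximum number of requests the node can handle, the overload test is assumed to be "load at or above the threshold", and the condition for a whole stable set is left out because its direction cannot be recovered from the text. All names are hypothetical.

```python
from typing import Sequence


def node_load(requests: float, max_requests: float) -> float:
    """Request load of a node during a unit of time."""
    if max_requests <= 0:
        raise ValueError("max_requests must be positive")
    return requests / max_requests


def average_load(loads: Sequence[float]) -> float:
    """Mean load over the nodes of a stable service set."""
    if not loads:
        raise ValueError("loads must be non-empty")
    return sum(loads) / len(loads)


def should_place_on(node_requests: float, node_capacity: float,
                    overload_threshold: float,
                    es_load: float, es_light_load: float) -> bool:
    """Decide whether a UE may receive another replica.

    ASSUMPTIONS: a lightly loaded ES needs no UE replicas at all, and a UE is
    excluded once its load reaches the overload threshold.
    """
    if es_load < es_light_load:
        return False
    return node_load(node_requests, node_capacity) < overload_threshold
```

In this sketch the ES check comes first, so no UE is burdened with replicas while the ES can still serve requests comfortably.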

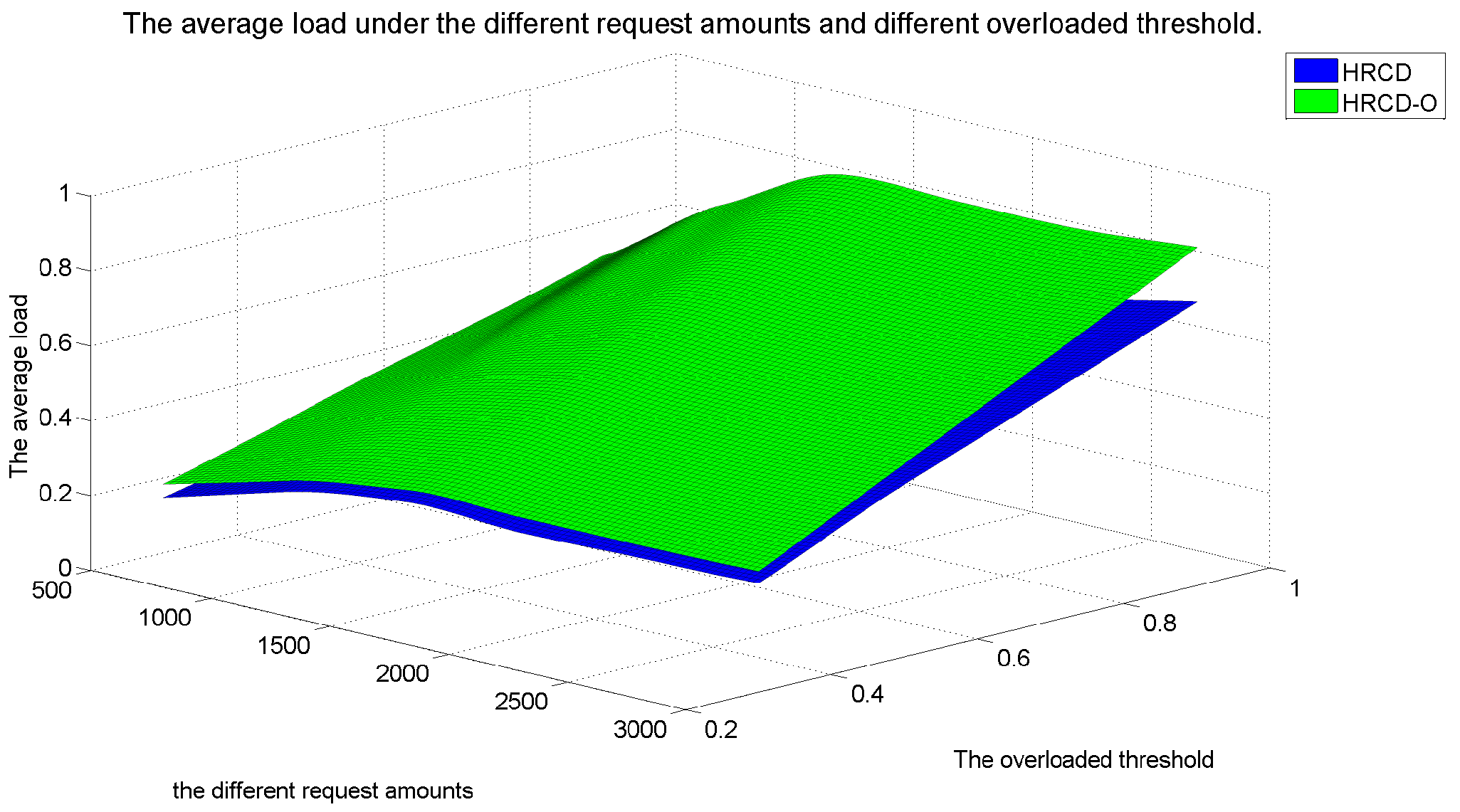

According to the above description, the pseudocode of placing replicas on UE is shown in Algorithm 4.

| Algorithm 4: HRCD replica Placement Algorithm |

![Computers 14 00454 i002 Computers 14 00454 i002]() |

While placing replicas on UE can indeed alleviate the service burden on ESs, there are instances where UE within a stable set is unable to retrieve the requested data locally. In such cases, they still have to request data from the ES. To address this, the HRCD adopts a traditional approach of deploying replicas of hotspot data on ESs based on the frequency of requests. This strategy aims to minimize the amount of UE acting as replica hosts and to reduce data access latency for UE.

7. Conclusions

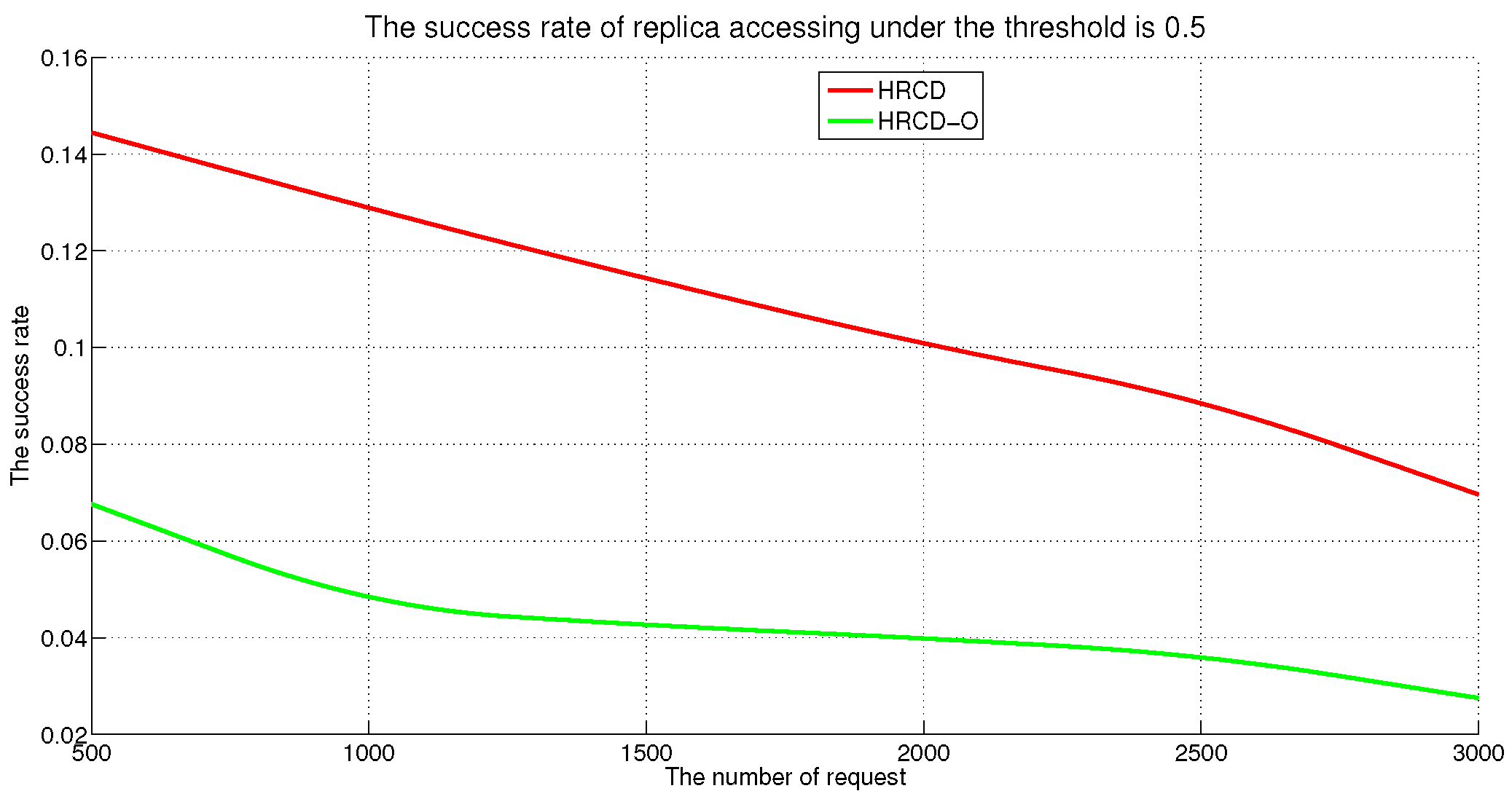

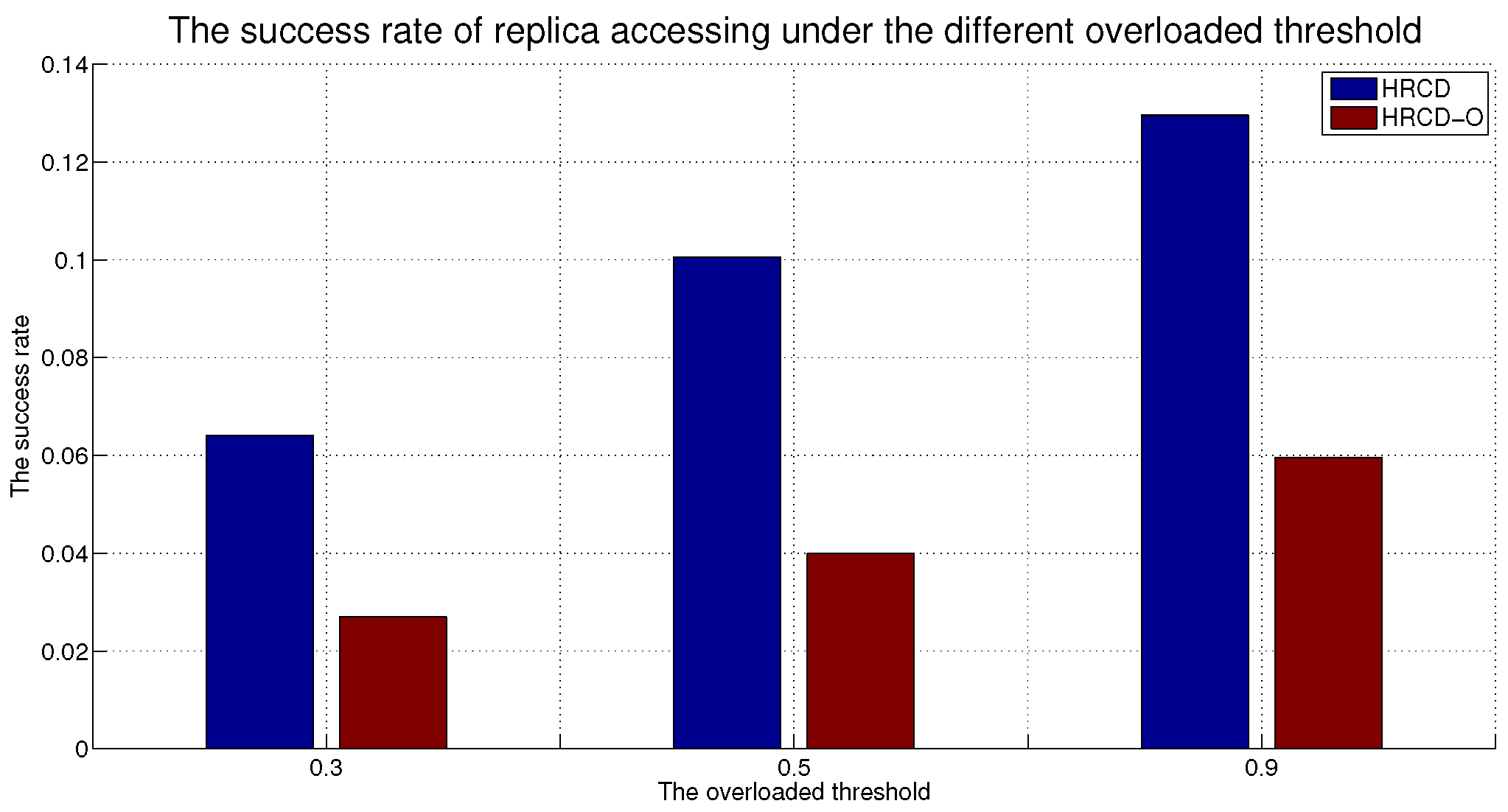

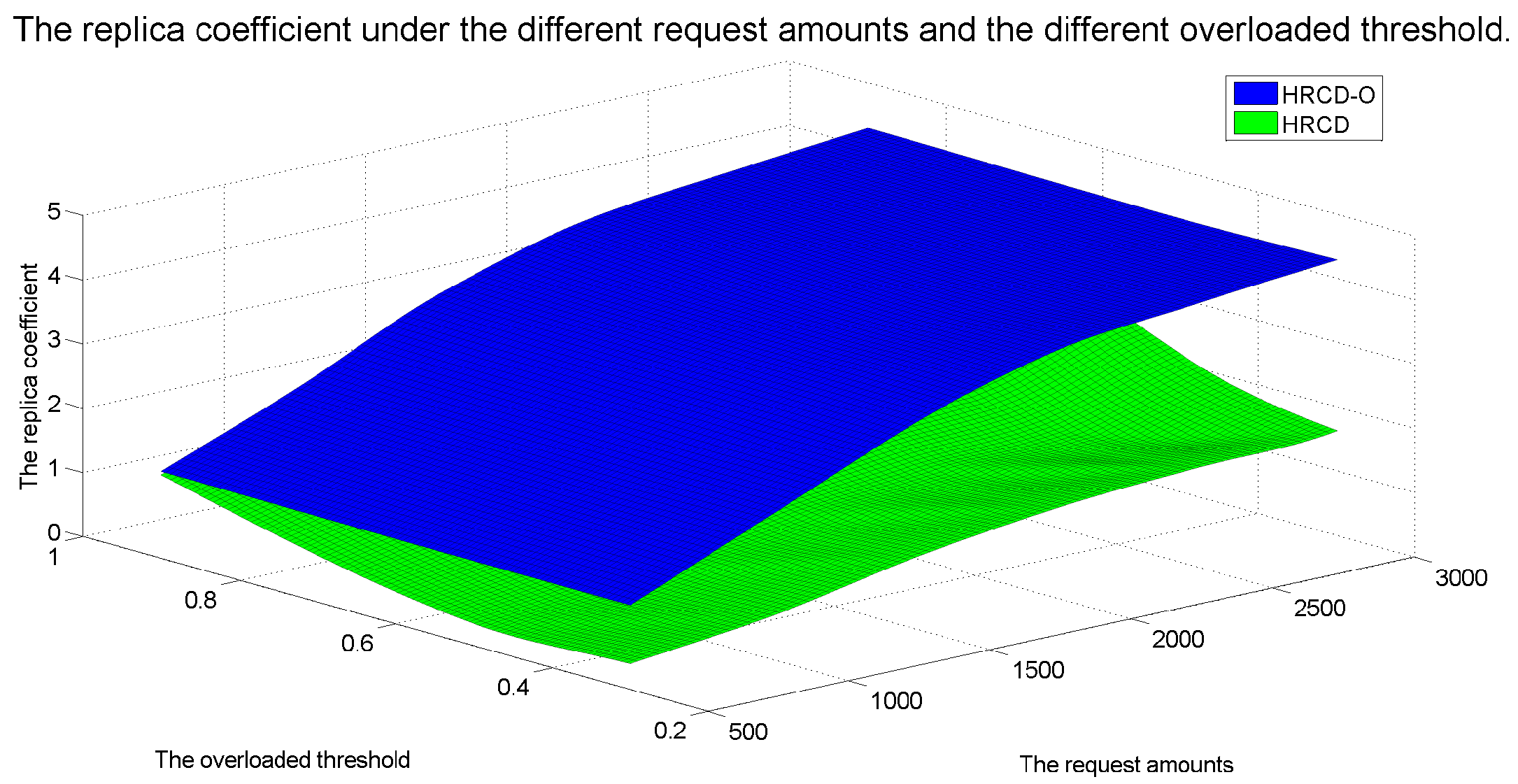

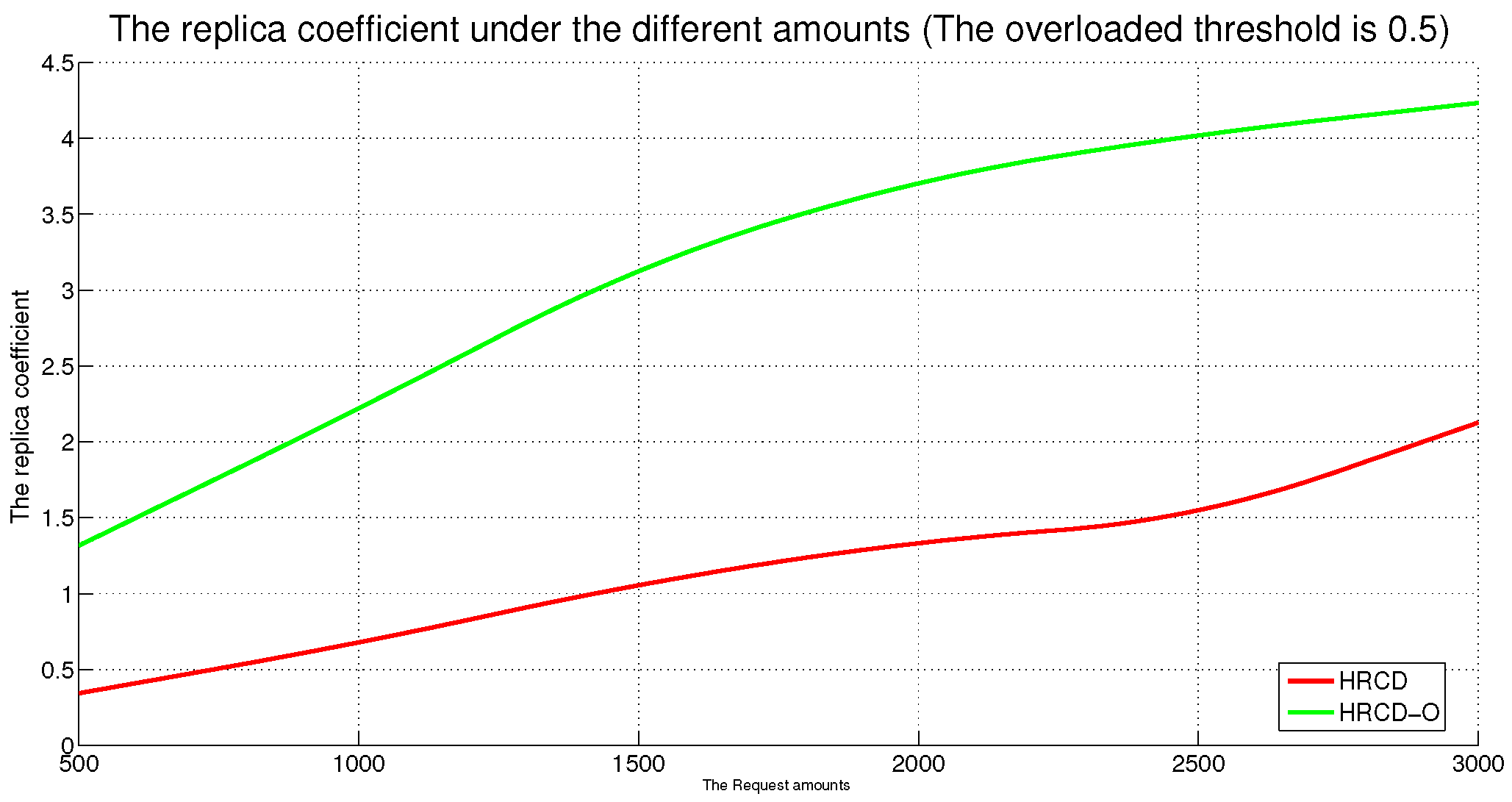

In large-scale 6G edge computing communities, placing replicas on ESs can significantly reduce data access latency for UE. However, the rapid growth in the amount of UE and ES communications has led to a progressive increase in the service pressure on ES, adversely impacting their service performance. To mitigate this issue, this article proposes placing replicas on UE to alleviate the service pressure on ESs. However, the time-varying nature of UE under Edge presents challenges in replica placement. To address this problem, this paper draws inspiration from community discovery algorithms and introduces a hybrid replica placement method called the HRCD. The HRCD aims to place replicas on both UE and ESs to balance system load and minimize data access latency. It begins by defining labels for UE and ESs based on data access patterns. UE records the labels of ESs they encounter during their movement in a distributed and adaptive manner. Using membership functions, the HRCD divides UE within the ES service area into multiple stable service sets based on the recorded labels and inherent attributes of the UE. Next, the HRCD employs fuzzy clustering analysis to select “excellent” node candidate sets as replica placement nodes within these stable sets. When choosing the replica creation object, the HRCD prioritizes hotspot data with geographic locality characteristics based on data access patterns. the HRCD also creates an appropriate number of replicas according to the load of ESs and UE, and selects replica placement nodes from the candidate set in the order determined by fuzzy clustering. Additionally, it places hotspot data within the ES service area directly on ESs to further reduce data access latency. Compared to ES/UE-based methods in

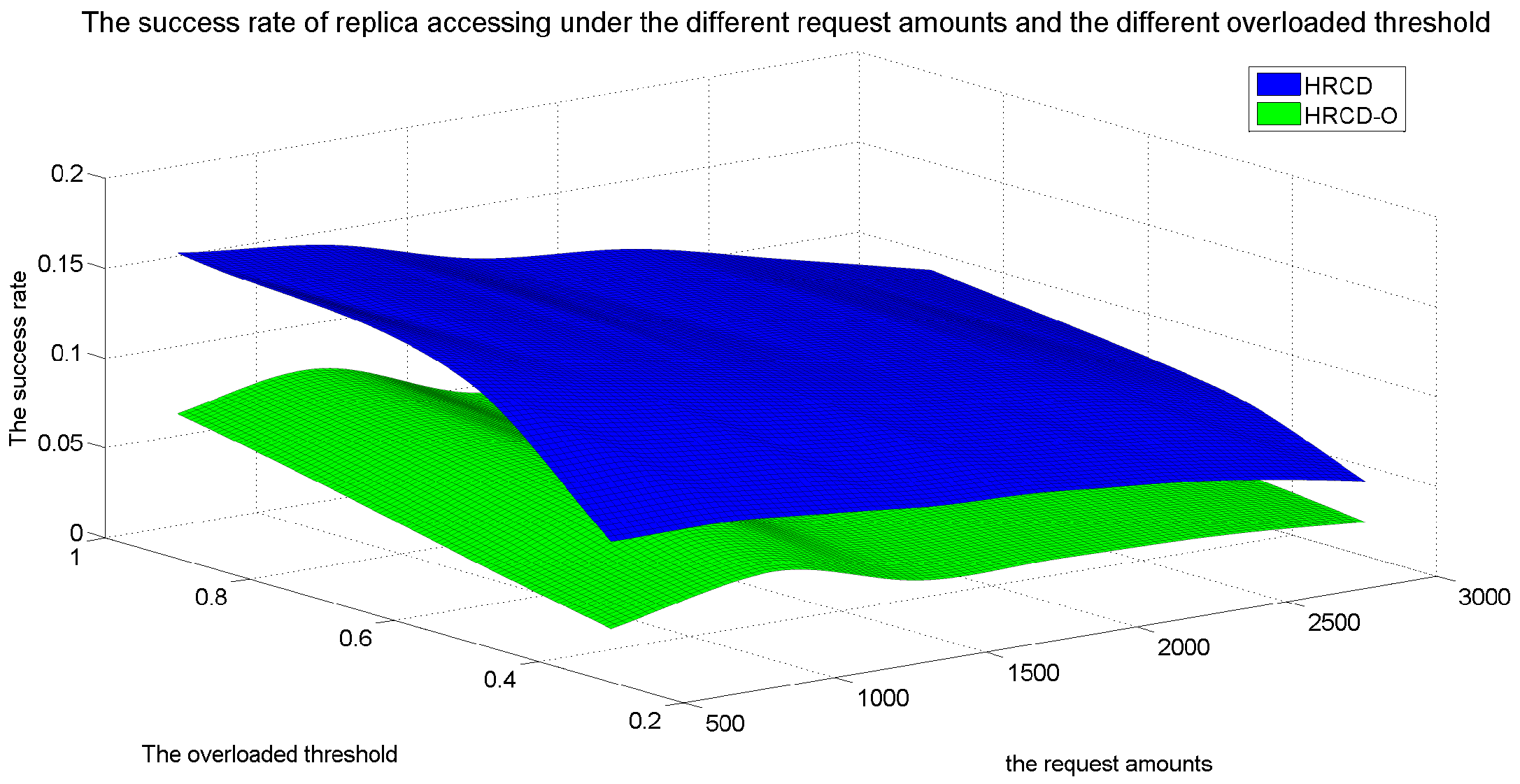

Table 1, the HRCD uniquely integrates community division and fuzzy clustering to achieve load balancing (

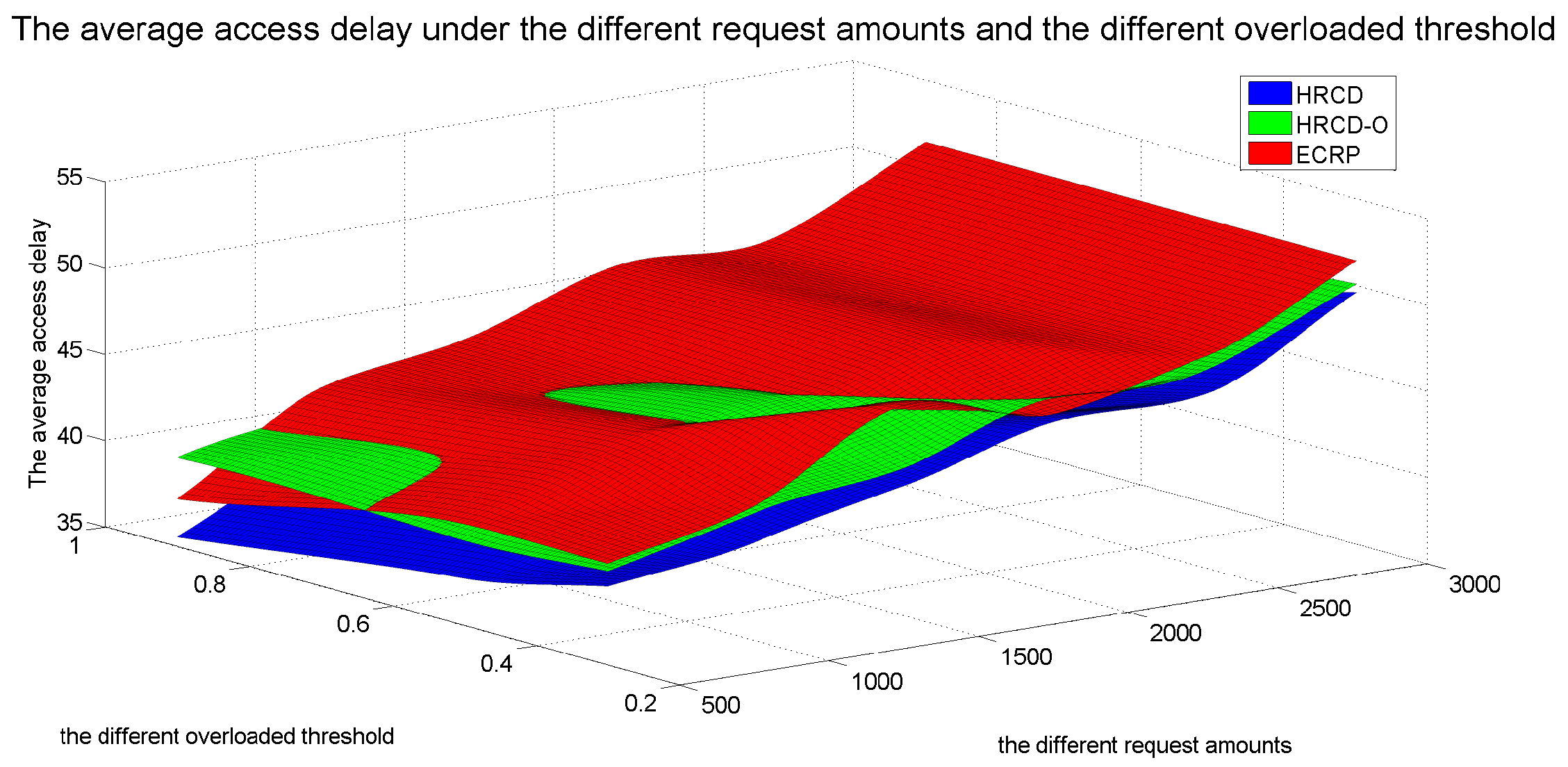

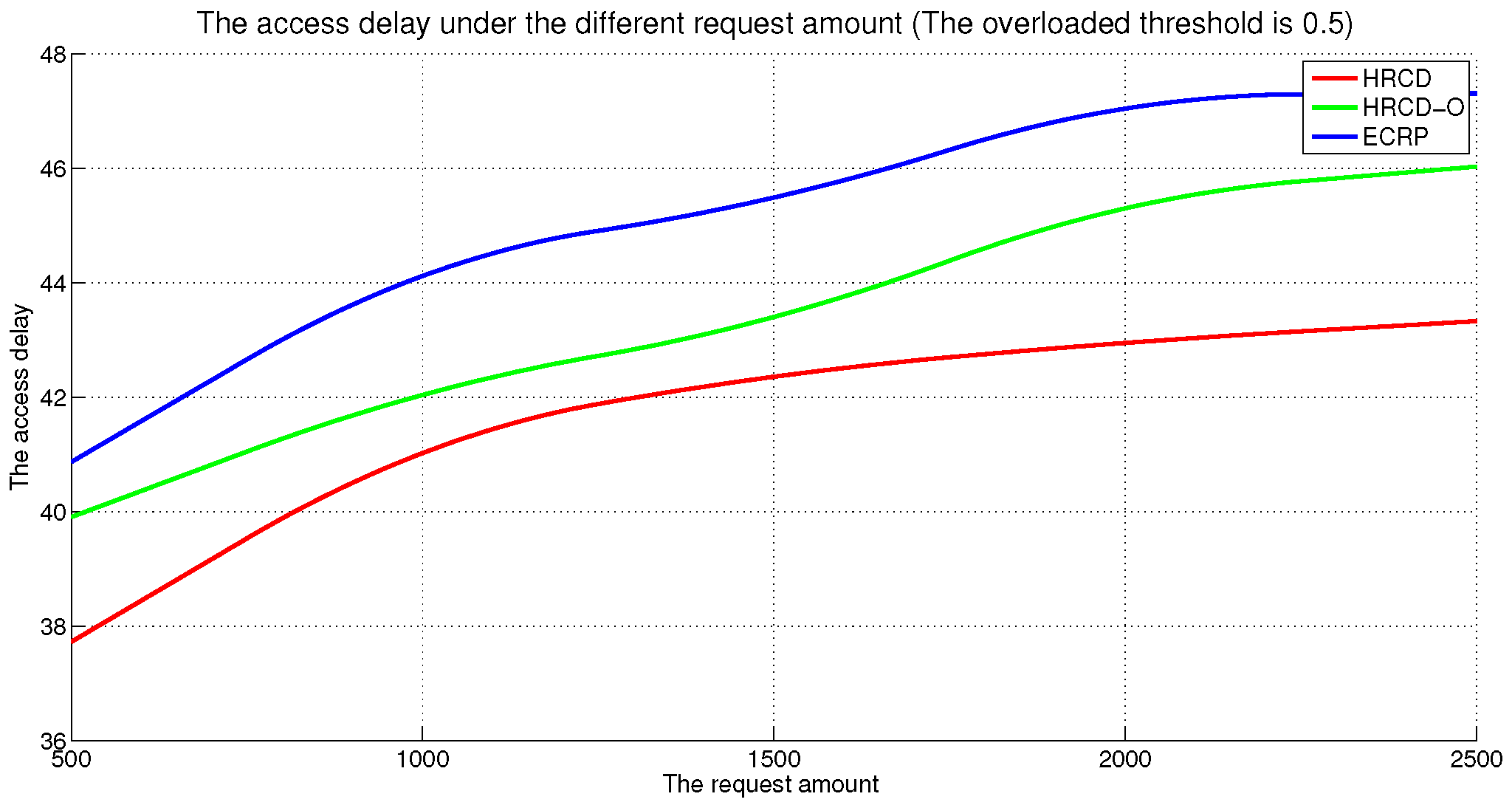

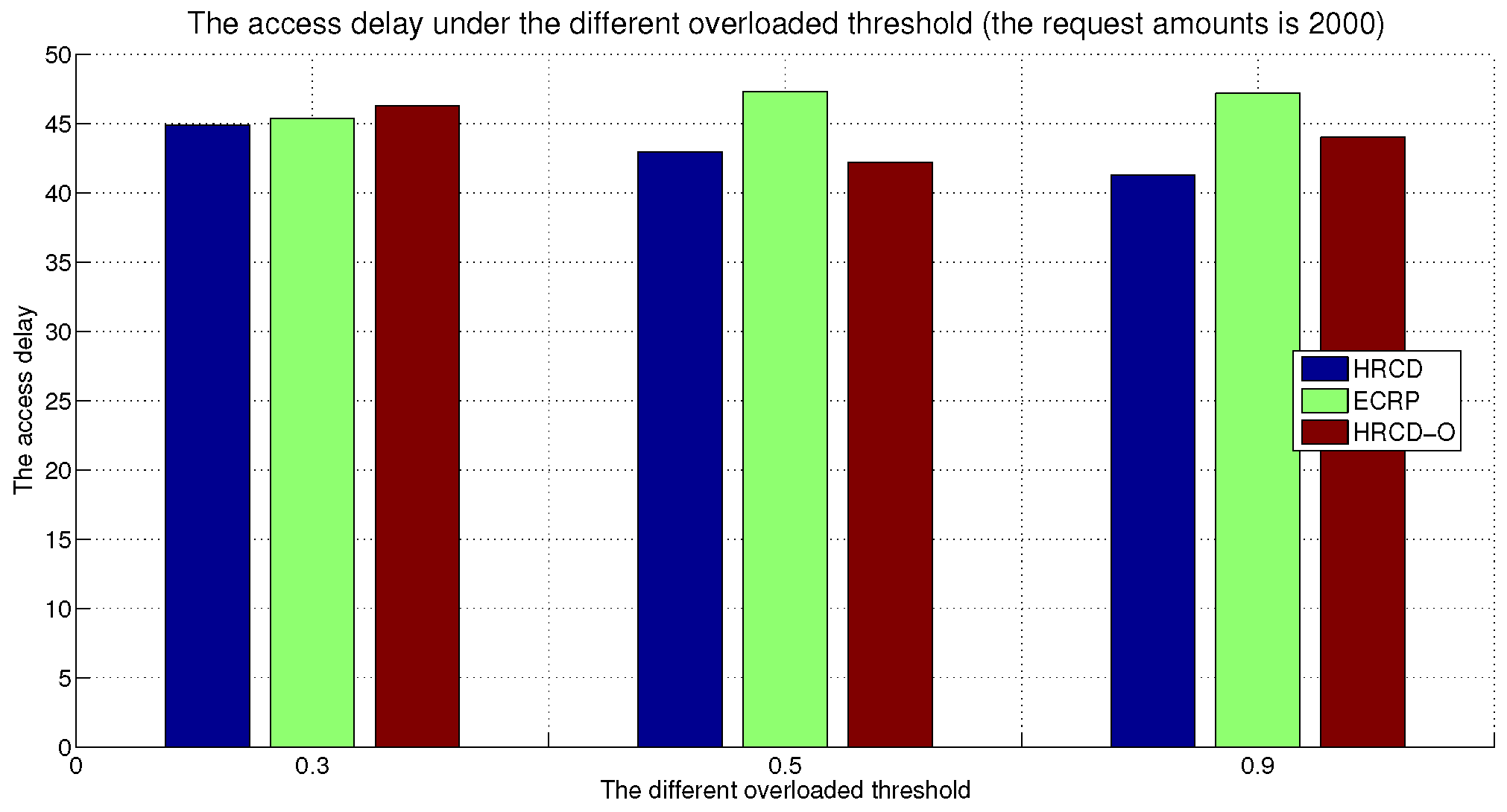

Section 6.3.1) and low latency (

Section 6.3.2) in dynamic 6G edge environments.

Although the HRCD has demonstrated relatively superior performance compared to other similar methods under 6G Edge for large-scale communities, it has a notable limitation: it places replicas not only on UE but also, redundantly, on ESs. This approach tends to deploy the same replicas repeatedly, leading to a significant number of redundant replicas and a considerable waste of ES storage space. Additionally, the HRCD is a replica placement algorithm that requires nodes to engage in label exchange, replica management, and algorithm execution while they move. These operations consume node resources, resulting in a decrease in service capacity. Meanwhile, the reliability of D2D communication (for example, terminal disconnections, edge node failures, or unreliable communication links) also affects the HRCD replication strategy. Therefore, in the future, we plan to conduct in-depth research on determining which replicas should be placed on ESs, as well as on the energy consumption of nodes, to achieve a balanced optimization between storage utilization and system performance. We also plan to study in depth the impact of D2D communication reliability on replica placement to better align with the needs of real-world scenarios.