1. Introduction

Artificial intelligence (AI) is defined as programs that employ methods for gathering information and exploiting it to predict results, give recommendations, or make decisions, and to determine the best action for reaching desired objectives [1]. Recently, AI has been widely adopted in organizations because it helps employees perform most tasks. Organizations estimate that 34% of all business-related tasks today are performed by machines [2]. Moreover, organizations adopting AI can expect a revenue increase of 6% to 10% [3]. According to a Forbes Advisor survey, 64% of organizations expect AI to increase productivity, highlighting growing confidence in AI [4].

AI tools and applications attract users because they are easy to use and can make tasks easier. For example, ChatGPT, which emerged in late 2022, is a publicly available AI application built on the Generative Pre-trained Transformer (GPT) model [5]. ChatGPT is a chatbot that simulates human conversation, producing its answers as automatically generated text [6].

However, using AI tools and applications also presents several challenges and risks, including threats to data privacy and security, bias leading to unfair outcomes, and the potential for misinformation, which complicates accountability. Ethical concerns also arise with respect to transparency in decision-making. Moreover, AI tools and applications may be used in ways that are inappropriate for the nature of an organization's work. These challenges are complex and play a critical role in security, privacy, work, and organizational culture.

Governments, businesses, and organizations are working together to reduce the difficulties and threats of using AI tools, programs, and applications and to create sound AI governance frameworks. Organizations need to make data safety a priority through effective security protocols, such as encryption and restricted access. AI governance is equally crucial, as it establishes specific policies on data privacy and security. This can be achieved by ensuring responsible AI use, engaging stakeholders on ethical matters, creating ethics committees, and ensuring that everyone is aware of best practices and ethical considerations.

AI governance refers to the policies, procedures, guidelines, and frameworks put in place to ensure that AI systems are developed, implemented, and used ethically and responsibly [7]. This governance is guided by principles such as fairness, privacy and security, and humanity. By following these principles, governance supports decision-making across organizations, enhancing the benefits of AI technologies while curtailing illegal and unethical practices.

In line with Vision 2030, the Kingdom of Saudi Arabia established the Saudi Data and AI Authority (SDAIA) in 2019 and launched its National Strategy for Data and AI (NSDAI) in 2020. This strategy is supported by SDAIA-developed guidelines, such as the AI Ethics Principles and the Generative AI (GenAI) guidelines. A strong legal framework, including the Personal Data Protection Law (PDPL), the Freedom of Information Policy, and the Anti-Cyber Crime Law, further ensures that AI adoption in Saudi Arabia is responsible and secure. In addition, Saudi Arabia ranks among the top five countries in the world in AI policy publications, with more than 57 policies submitted through the AI Policy Observatory of the Organisation for Economic Co-operation and Development (OECD) (https://oecd.ai/en/dashboards/countries/SaudiArabia (accessed on 15 October 2025)), demonstrating its commitment to AI governance.

The AI Ethics Principles comprise seven main principles for developing and using AI: Fairness, Privacy & Security, Humanity, Social & Environmental Benefits, Reliability & Safety, Transparency & Explainability, and Accountability & Responsibility. These principles represent a commitment to human rights and cultural values and align with international standards and recommendations on AI ethics [8].

Policies for the ethical and responsible use of AI are important because they provide a regulated environment while encouraging innovation and development. Within organizations, such policies create trust and clarity. Policies for the use of AI tools and applications help organizations realize the full potential of these tools while minimizing risks and misuse. Because creating AI policies promotes the ethical and responsible use of AI tools, an organization that follows AI policies puts AI governance into practice.

Companies struggle to prepare effective and complete AI policies. Policy preparation is currently hampered by manual processes and traditional methods that consume considerable time and effort. Moreover, drafters often use imprecise language, which can lead to unclear and inconsistent AI policies of lower quality. Therefore, any organization that develops or uses AI tools and applications is advised to follow the AI Ethics Principles in Saudi Arabia to ensure the ethical and responsible use of these tools [8].

Nowadays, AI is one of the most important modern technologies, contributing significantly to rapid technological development and growth [9]. GenAI represents a qualitative leap in content generation, and GenAI models can accomplish multiple tasks, such as writing and coordinating policies. This enhances employees' abilities to perform tasks, saving effort and reducing the time required to produce outputs; it also fosters innovation and supports development [10]. Therefore, this paper introduces Siyasat, an Arabic web-based governance tool that leverages GenAI to generate and refine AI policies in accordance with SDAIA's AI Ethics Principles.

The remainder of this paper is organized as follows. Section 2 reviews the related literature on AI governance and ethics. Section 3 describes the implementation of the proposed governance tool. Section 4 presents the web development aspects of the system and the results. Finally, Section 5 presents the conclusion.

2. Literature Review

The most relevant research and tools have been carefully organized under the overarching theme of AI governance.

The paper [11] introduced ARC, a framework that converts unstructured regulatory text into a structured format to aid analysis and understanding. It first breaks regulatory documents down into individual statements and then identifies semantic roles and simplifies phrases to create ARC tuples that capture the main requirements. The framework consists of two modules: one that finds similarities across different regulations by analyzing phrases, and another that compares regulation statements with policy statements to identify missing information. Using NLP, ARC extracts key phrases, separates core requirements from complex clauses, and expresses the text in ARC tuple format. The framework focuses on capturing key regulatory meanings, simplifying complex statements, defining structured representations, and assisting with privacy compliance tasks, thereby reducing manual effort.

In [12], the authors highlight the potential of AI to improve regulatory compliance in the financial sector, particularly in areas such as anti-money laundering and sanctions enforcement. They argue that AI techniques, such as machine learning and NLP, can automate tasks such as monitoring transactions and communications, making these processes more accurate and cost-effective. For example, machine learning can detect fraud and reduce false positives, while NLP can analyze legal documents for suspicious language. However, challenges such as data privacy, ethical concerns, and system complexity require human oversight. Future research should focus on establishing ethical guidelines, ensuring data protection, and adapting to evolving compliance needs. Overall, while AI offers significant potential to improve regulatory compliance, its implementation should be approached with caution and responsibility.

In [13], the authors discuss the development of Gracenote.ai, a suite of AI tools built on LLMs, such as GPT-4, for governance, risk, and compliance (GRC) tasks. These tools generate regulatory news feeds, automate the extraction of legal obligations, and provide AI-assisted expert legal advice. For sensitive data, privately hosted models are recommended. Gracenote.ai plans to expand its tools to more regions, enhance their capabilities, and integrate them more deeply into GRC systems. A notable limitation of these tools is their reliance on human oversight.

In [14], the authors introduce CompAI, an AI tool designed to automatically check whether privacy policies comply with the GDPR. It operates in two main phases. Phase A focuses on metadata identification, using NLP and machine learning (ML) to extract and classify metadata types from privacy policy texts. Phase B conducts a completeness check, combining the metadata with contextual information input by the user to assess completeness and flag potential violations in a detailed report. CompAI was tested on 234 privacy policies from the fund industry, achieving high accuracy in identifying details. Currently, it focuses on the fund industry and relies on fixed keywords, but future updates aim to expand its applicability to other industries and improve its language comprehension.

A logical classification of the reviewed studies is presented in Table 1. Organized by the topics covered, the table lists the objectives, contributions, and limitations of each study.

Table 2 summarizes the comparison of the primary features extracted from [11,12,13,14], which are crucial in identifying the characteristics of the proposed AI tool for generating and improving AI policies.

The extracted features listed in Table 2 are defined as follows:

Checking completeness: reviewing and auditing to ensure the accuracy of information, reduce errors, and ensure the alignment of the regulatory files of different authorities.

Website: a web interface through which the GenAI model is deployed.

Result: evaluation of policies and guidelines using three classification categories: Aligned, Partially Aligned, and Non-Aligned.

Arabic compatibility: the system supports the Arabic language, including Arabic grammar and syntax.

AI-generated suggestions: suggestions generated by AI to improve the wording of a policy or guideline.

Generate full document: generating a new policy or guideline.

Tools

The tools reviewed below were identified through various Internet search engines, the social media pages of the tool developers, and the GitHub platform.

TermsFeed (https://www.termsfeed.com/privacy-policy-generator/ (accessed on 15 October 2025)) is a tool for generating privacy policies that comply with the GDPR, the California Consumer Privacy Act (CCPA), the California Privacy Rights Act (CPRA), and many other privacy laws. The TermsFeed Privacy Policy Generator makes it easy to create a privacy policy for a website, app, e-commerce store, or SaaS product. It supports downloading in multiple file formats, such as HTML, DOCX, plain text, or Markdown, and users can update the policy and apply custom modifications. Every privacy policy generated by the TermsFeed Generator is hosted for free at a link that does not expire and can be used anywhere.

AWS Policy Generator (https://awspolicygen.s3.amazonaws.com/policygen.html (accessed on 15 October 2025)) is a tool for creating policies that control access to Amazon Web Services (AWS) products and resources. To create a policy, the user fills out a form specifying the policy type, since several types of policies can be created, and then adds the statement details. The tool then produces a policy document written in the Access Policy Language, which acts as a container for one or more statements.

Waybook Policy Generator (https://www.waybook.com/tools/policy-generator (accessed on 15 October 2025)) is a tool that helps businesses quickly create custom policies for websites and apps. The user supplies the information the tool needs to draft a custom policy, including the policy type, the business details, the regulations that must be followed, and company-specific terms. The tool then produces a tailored, compliance-oriented policy as a PDF file.

Free Privacy Policy Generator (https://www.freeprivacypolicy.com/free-privacy-policy-generator/ (accessed on 15 October 2025)) is a tool that helps ensure compliance with the CCPA, CPRA, GDPR, and Google Analytics and AdSense requirements. A policy can be created for a website or an application: the site displays a questionnaire requesting information about the site or application, such as its links and policy terms. The resulting policy file can then be downloaded to the device or integrated into the platform via HTML.

Websitepolicies (https://app.websitepolicies.com/policies/platforms/manage (accessed on 15 October 2025)) is a tool that allows for the creation of customized privacy policies tailored to the needs of organizations to ensure alignment with global privacy standards. It aligns businesses with key international regulations, including GDPR, CCPA, CPRA, the Personal Information Protection and Electronic Documents Act (PIPEDA), the California Online Privacy Protection Act (CalOPPA), and other privacy laws. The tool is adaptable to various needs, such as websites, blogs, e-commerce stores, SaaS, or mobile applications. The policy can be downloaded to a device in multiple formats or placed directly on the site.

App Privacy Policy Generator (https://app-privacy-policy-generator.nisrulz.com (accessed on 15 October 2025)) is a tool that helps app developers create privacy policies tailored to specific needs. By answering a few questions about the app's features and user data usage, the generator produces a policy compliant with regulations such as GDPR and CCPA. The resulting policy can be copied, downloaded, or integrated directly into the app or website.

REUSE (https://reuse.software/tutorial/ (accessed on 15 October 2025)) is a tool that simplifies the documentation of file licenses in a project. The idea is to add to each file, whether code or documentation, information that clearly states the terms under which it can be used. First, the tool is installed on the system; then, license files containing the pre-written legal texts are added to a dedicated folder in the project. After that, the tool automatically adds comments to each file specifying its license. Finally, the tool checks that all files are properly documented and organized. The goal is to protect rights and make it easy for others to understand how the project can be used legally.

Mumtathil (https://mumtathil-yi47kagqlq-ey.a.run.app (accessed on 15 October 2025)) (ممتثل) is a specialized tool designed to assess the alignment of privacy policies with the Saudi Personal Data Protection Law. Users can submit policies as text or as a PDF file, and the tool evaluates alignment with eight key provisions: user consent, data collection and processing, data retention, data protection and sharing, user rights, advertisements, violation reporting, and liability. After completing the assessment, the tool reports the alignment level (Weak, Moderate, or Compliant) and an overall alignment percentage, offering a comprehensive evaluation of alignment with regulatory requirements.

Table 3 presents a comparative analysis of the tools, emphasizing their pros and cons. As shown, each existing solution faces certain limitations that prevent its effective use in our context, thus motivating the development of the proposed tool.

While the available tools offer useful templates for legal or privacy compliance, none addresses the ethical, contextual, and linguistic aspects of AI governance in Saudi Arabia. None of them uses SDAIA's AI Ethics Principles, and none incorporates Generative AI to enhance and align policies. Thus, Siyasat represents a step toward AI governance automation, extending beyond traditional policy generation to ethically aligned, regulation-specific AI policy support.

3. Implementation

3.1. AIGPT Model Development

The development of the AIGPT model follows the structured workflow illustrated in Figure 1. The diagram gives a visual overview of the key steps in the development process, each of which is explained below.

Data Creation and Collection: A collection of AI-related policy documents was developed, covering various domains and aligning with the AI Ethics Principles. The dataset consists of ten domain-specific policy documents, along with one foundational document outlining the Seven AI Ethics Principles.

Data Pre-processing involves normalizing the dataset, cleaning the data, and chunking the text to split documents into manageable segments. This improves compatibility with embedding models and LLMs.

Building Retrieval-Augmented Generation (RAG) involves generating embeddings from the preprocessed content, storing them in a FAISS vector store, integrating retrieval with the LLM, and evaluating performance in terms of alignment with ethical principles and structural completeness.

Website Integration involves developing the frontend interfaces using HTML and CSS and the backend functionality with Flask. PostgreSQL serves as the backend database for storing user data, uploaded files, and policy results. A GPT-4 Turbo model, integrated through a RAG architecture, processes user inputs, enabling the generation and improvement of AI policies aligned with the AI Ethics Principles.
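The retrieval step of the RAG component can be sketched as follows. This is a minimal, dependency-free illustration rather than the Siyasat implementation: it performs exact inner-product search over L2-normalized vectors, which is what a flat FAISS inner-product index (`faiss.IndexFlatIP`) computes when the stored vectors are normalized, so the scores are cosine similarities. The class name `FlatIPIndex` is hypothetical, and the toy three-dimensional vectors stand in for real embeddings.

```python
import math
from typing import List, Sequence, Tuple


def l2_normalize(vector: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so that inner product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


class FlatIPIndex:
    """Hypothetical exact (brute-force) inner-product index over normalized vectors."""

    def __init__(self) -> None:
        self.vectors: List[List[float]] = []
        self.payloads: List[str] = []

    def add(self, vector: Sequence[float], payload: str) -> None:
        # Store the normalized embedding alongside the text chunk it represents.
        self.vectors.append(l2_normalize(vector))
        self.payloads.append(payload)

    def search(self, query: Sequence[float], k: int = 3) -> List[Tuple[float, str]]:
        # Score every stored vector against the query and keep the k best matches.
        q = l2_normalize(query)
        scored = [
            (sum(a * b for a, b in zip(q, v)), payload)
            for v, payload in zip(self.vectors, self.payloads)
        ]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


# Toy usage: in practice the vectors would come from an embedding model.
index = FlatIPIndex()
index.add([1.0, 0.0, 0.0], "healthcare policy chunk")
index.add([0.0, 1.0, 0.0], "education policy chunk")
index.add([0.9, 0.1, 0.0], "banking policy chunk")
top = index.search([1.0, 0.0, 0.0], k=2)
```

FAISS performs the same exact search far more efficiently and also provides approximate indexes for larger corpora; the brute-force loop here only makes the scoring explicit.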

3.2. Data Creation and Collection

The training dataset was purposefully developed to reflect both the practical requirements of AI governance across multiple domains and the ethical imperatives outlined by national regulatory authorities. It comprises eleven custom-written documents, divided as follows:

Ten domain-specific policy documents, each addressing a particular area of AI application (healthcare, education, media and journalism, business, legal, non-profit, industry and manufacturing, banking and financial, cybersecurity, and entertainment). These domains were selected as the most advanced and widely used AI application areas.

To create an effective policy, the following components should be included [15]:

Introduction: provides the background and demonstrates the importance of the policy, setting the foundation for its purpose.

Objectives: defines the main purpose of the policy and the objectives it aims to achieve.

Scope: clarifies the groups or entities to which the policy applies, as well as the situations or contexts it covers.

Definitions: clarifies key terms to ensure a clear understanding of the policy.

Policy Principles: outlines the fundamental principles that guide behavior and decision-making within the policy context.

Legislative and Regulatory Framework: lists the laws and regulations with which the policy is aligned or on which it is based.

Roles and Responsibilities: specifies the individuals or entities responsible for implementing and ensuring compliance with the policy.

A summary of the ten domain-specific AI policies is provided in Table 4.

One foundational document detailing the seven AI Ethics Principles established by SDAIA. To ensure that AI ethics are effectively managed and disseminated throughout the Kingdom, SDAIA published seven AI ethics principles: Fairness, Privacy & Security, Humanity, Social & Environmental Benefits, Reliability & Safety, Transparency & Explainability, and Accountability & Responsibility [8].
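The seven policy components listed earlier in this subsection lend themselves to an automated completeness check. The sketch below is a hypothetical illustration, not the Siyasat code: it treats each non-empty line of a draft as a candidate heading and reports which required components are absent. Matching on English heading names is an assumption made for readability; real Arabic policies would need the corresponding Arabic headings and some tolerance for wording variants.

```python
from typing import List

# The seven policy components listed above, in order.
REQUIRED_COMPONENTS = [
    "Introduction",
    "Objectives",
    "Scope",
    "Definitions",
    "Policy Principles",
    "Legislative and Regulatory Framework",
    "Roles and Responsibilities",
]


def missing_components(policy_text: str, required: List[str] = REQUIRED_COMPONENTS) -> List[str]:
    """Return the required components that do not appear as a heading line in the draft."""
    # Normalize candidate headings: trim whitespace, drop a trailing colon, ignore case.
    headings = {
        line.strip().rstrip(":").strip().lower()
        for line in policy_text.splitlines()
        if line.strip()
    }
    return [name for name in required if name.lower() not in headings]


draft = """Introduction
This policy governs the use of AI in clinics.
Objectives:
Ensure safe and fair AI use.
Scope
All clinical staff.
"""
gaps = missing_components(draft)
```

Here `gaps` lists the four components the draft omits, which is the kind of structural feedback the evaluation step reports before any revision.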

The ten policy documents were authored by a panel of domain experts representing diverse fields such as AI ethics, data governance, law, and organizational compliance. The Siyasat team coordinated and facilitated a rigorous multi-step drafting process grounded in established policy development standards. Each policy was tailored to a specific AI application domain and aligned with the AI Ethics Principles issued by SDAIA (SDAIA AI Ethics Principles (2020), https://sdaia.gov.sa/ar/SDAIA/about/Documents/ai-principles.pdf (accessed on 15 October 2025)). The drafting process followed the Public Policy Development Guidelines issued by IPA (IPA Policy Development Guide (2021), https://n9.cl/9yw0l (accessed on 15 October 2025)), ensuring a structured approach that included needs assessment, stakeholder analysis, policy formulation, and expert review. Furthermore, each document was reviewed by specialists in AI governance and ethics to ensure legal accuracy, contextual relevance, and alignment with the AI Ethics Principles. The drafting process involved the following:

Conducting background research on local and international AI regulatory frameworks.

Reviewing relevant case studies and existing practices.

Structuring content into clearly defined sections (e.g., Purpose, Scope, Definitions, Policy Statement, Ethical Principles, and Roles and Responsibilities).

Explicitly embedding SDAIA’s seven ethical principles throughout each policy.

Feedback from domain experts was incorporated to enhance technical accuracy, contextual depth, and ethical consistency. The final documents were then standardized and refined for use in model training, serving as high-quality, context-aware examples for designing AI policies. The dataset, including all domain-specific AI policy documents and the foundational AI ethics document, is publicly available for research purposes at https://drive.google.com/drive/folders/13Jk9mQATufkQuvDUmpuUEN0xO2heZHZ8?usp=drive_link (accessed on 15 October 2025).

3.3. Expert Evaluation

The dataset of domain-specific policies was developed directly by a group of twelve experts, representing diverse fields including policy analysis, law, data science, artificial intelligence ethics, and organizational compliance. The experts were selected according to predefined eligibility criteria, which required advanced academic qualifications, a minimum of seven years of professional experience, and demonstrated expertise in applied policy development or related research.

Each expert independently drafted policy documents within their domain of specialization, ensuring that the content reflected both practical relevance and alignment with the AI Ethics Principles. Following the drafting phase, a structured peer-review and calibration exercise was conducted. During this exercise, experts assessed a subset of each other’s drafts and participated in guided discussions to reconcile discrepancies, harmonize terminology, and establish a unified interpretation framework.

To assess the consistency of the expert-generated dataset, inter-rater reliability measures were calculated. Cohen's Kappa coefficient reached 0.82, and the intraclass correlation coefficient (ICC) was 0.88. According to established benchmarks [16,17], these values indicate "almost perfect agreement". This rigorous process ensured that the expert-authored policies were both reliable and methodologically sound, providing a strong foundation for adapting and evaluating the pre-trained model.
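For reference, Cohen's kappa corrects the observed agreement p_o for the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for two raters' categorical labels and maps the result onto the Landis and Koch scale, a commonly used benchmark on which values above 0.80 are labeled "almost perfect". The function names and the rating labels in the example are illustrative and are not the study's data.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over categories of the product of each rater's marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a.keys() | counts_b.keys()) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical category throughout; kappa is undefined, treated here as 1.
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


def landis_koch_label(kappa: float) -> str:
    """Map kappa onto the Landis and Koch (1977) agreement categories."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Invented example: two experts rating four policy sections as Aligned (A) or Not aligned (N).
kappa = cohens_kappa(["A", "A", "N", "N"], ["A", "A", "N", "A"])
```

In the example the raters agree on three of four items (p_o = 0.75) while chance agreement is 0.5, giving kappa = 0.5, which the scale labels "moderate"; a value of 0.82, as reported above, falls in the "almost perfect" band.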

3.4. LLM Development

The Siyasat tool relies on an LLM combined with RAG to process text data and generate policies; rather than retraining the model, it grounds the generated documents in retrieved examples and aligns them with SDAIA's principles. To ensure the effectiveness of the RAG methodology, the system components were carefully configured. The main parameters and criteria used in this setup are summarized in Table 5 and include the following key aspects.

Base model selection: GPT-4 Turbo is an improved model developed by OpenAI whose writing and computational capabilities have been enhanced to improve efficiency and accuracy and to reduce errors. It is multimodal, accepting inputs such as images and text [18], and it was trained on large volumes of data, enabling it to generate human-like text from the prompts it receives (https://openai.com/index/new-models-and-developer-products-announced-at-devday/ (accessed on 15 October 2025); https://platform.openai.com/docs/models/gpt-4-turbo (accessed on 15 October 2025)). GPT-4 Turbo was selected for its ability to generate coherent, structured Arabic text with high contextual understanding.

Retriever Configuration: a Facebook AI Similarity Search (FAISS)-based dense retriever was employed to ensure fast and accurate semantic similarity matching with the policy dataset.

Prompt Design: customized prompts were crafted to follow the required policy structure and the AI ethics principles, guiding the model to produce outputs that include all required policy sections.
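The prompt design and base-model settings above can be illustrated with the following sketch. It is an assumption-laden example rather than the actual Siyasat prompt: the English instruction wording, the function names, and the message layout are hypothetical, and the API identifier `gpt-4-turbo` is assumed to correspond to the GPT-4 Turbo model used here; the temperature of 0.1 follows the configuration described in this section. The optional `generate_policy` helper uses the OpenAI Python SDK's `client.chat.completions.create` call and expects an already constructed `OpenAI` client.

```python
from typing import Dict, List

SDAIA_PRINCIPLES = [
    "Fairness", "Privacy & Security", "Humanity", "Social & Environmental Benefits",
    "Reliability & Safety", "Transparency & Explainability", "Accountability & Responsibility",
]
REQUIRED_COMPONENTS = [
    "Introduction", "Objectives", "Scope", "Definitions", "Policy Principles",
    "Legislative and Regulatory Framework", "Roles and Responsibilities",
]
# Assumed settings: GPT-4 Turbo with the low temperature of 0.1 described in this section.
GENERATION_PARAMS = {"model": "gpt-4-turbo", "temperature": 0.1}


def build_messages(title: str, domain: str, description: str,
                   retrieved_examples: List[str]) -> List[Dict[str, str]]:
    """Assemble a chat prompt that injects retrieved examples and the required structure."""
    system = (
        "You draft formal Arabic AI policies. Write in formal Modern Standard Arabic, "
        "include every required section, and embed each listed ethics principle."
    )
    examples = "\n\n---\n\n".join(retrieved_examples)
    user = (
        f"Policy title: {title}\n"
        f"Domain: {domain}\n"
        f"Description: {description}\n\n"
        f"Required sections: {', '.join(REQUIRED_COMPONENTS)}\n"
        f"Ethics principles: {', '.join(SDAIA_PRINCIPLES)}\n\n"
        f"Reference examples retrieved from the policy dataset:\n{examples}"
    )
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]


def generate_policy(client, messages: List[Dict[str, str]]) -> str:
    """Send the prompt with the configured parameters; `client` is an openai.OpenAI instance."""
    response = client.chat.completions.create(messages=messages, **GENERATION_PARAMS)
    return response.choices[0].message.content


messages = build_messages(
    "AI in Patient Triage", "healthcare",
    "Governs the use of AI triage tools in hospitals.",
    ["<retrieved healthcare policy excerpt>"],
)
```

Keeping the required sections and principles in the user message, rather than relying on the model to recall them, is one way to make every request carry the same structural constraints.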

The selection of the above parameters was informed by a series of empirical evaluations aimed at optimizing the quality and relevance of the generated policy content. The `chunk_size` and `chunk_overlap` were tuned to balance semantic coherence with computational efficiency. A chunk size of 200 combined with an overlap of 50 was found to provide sufficient contextual retention without introducing significant redundancy. The `length_function` was set to `len`, which keeps the implementation simple and means that both values are measured in characters rather than model tokens. Crucially, the temperature was set to 0.1 to reduce variability in the model's responses, keeping outputs nearly deterministic and consistent with the formal tone and structure required in official Arabic policy documents. These choices were validated through iterative testing and qualitative assessment of output consistency, relevance, and linguistic correctness.

To generate regulatory-grade Arabic policy documents without retraining model weights, a robust methodology combining RAG and Prompt Engineering was implemented. This dual strategy was designed to align GPT-4 Turbo's capabilities with the linguistic, structural, and ethical standards required for Arabic policy generation. Recent studies support this approach: [19] highlighted that prompt design significantly improves LLM output quality without retraining, while [20] empirically demonstrated that prompt engineering enhances precision and contextual accuracy in document information extraction tasks. Integrating RAG for external knowledge retrieval with prompt engineering therefore supports both the semantic relevance and the reproducibility of the generated policies.
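The parameter names `chunk_size`, `chunk_overlap`, and `length_function` match the keyword arguments of LangChain's `RecursiveCharacterTextSplitter`, which suggests, but does not confirm, that it was used. The sketch below is a simpler fixed-window splitter, not LangChain's recursive, separator-aware algorithm; it only shows how a size of 200 and an overlap of 50 make consecutive chunks share context. Because Python's `len` counts characters, all lengths here are character counts, and the 450-character input is a stand-in for a policy document.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, chunk_overlap: int = 50) -> List[str]:
    """Split text into fixed-size windows; consecutive chunks share `chunk_overlap` characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - chunk_overlap
    # Stop before any start whose window would lie entirely inside the previous chunk's overlap.
    last_start = max(len(text) - chunk_overlap, 1)
    return [text[start:start + chunk_size] for start in range(0, last_start, step)]


document = "".join(str(i % 10) for i in range(450))  # 450-character stand-in for a policy
chunks = chunk_text(document)
```

With these settings the document yields three chunks starting at characters 0, 150, and 300; a recursive splitter would additionally prefer to break at paragraph and sentence boundaries, which usually preserves meaning better for policy text.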

3.5. LLM Testing

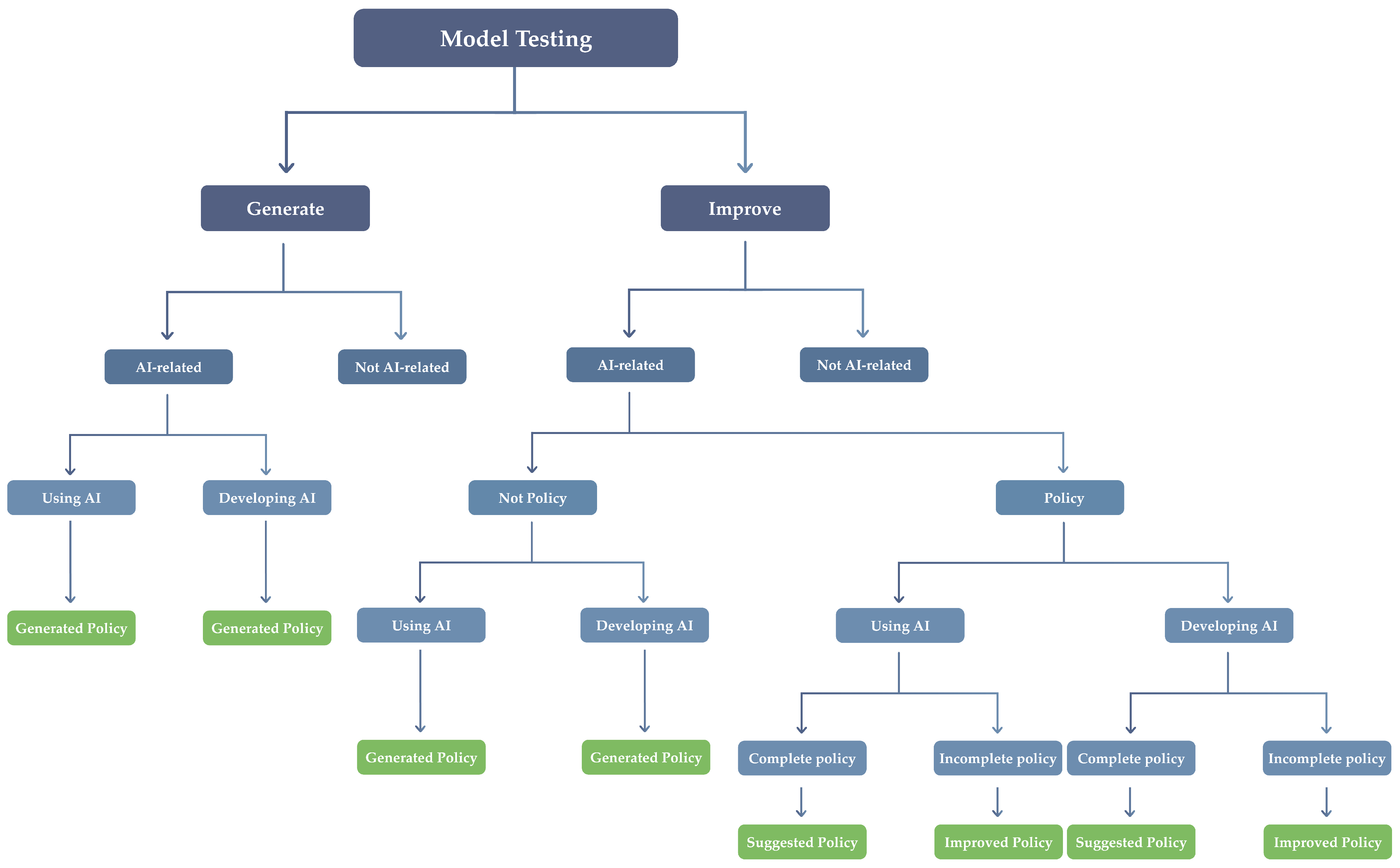

To evaluate the capabilities of the AIGPT model in Arabic policy development, two test cases were conducted, with each targeting a core functionality of the system, as shown in

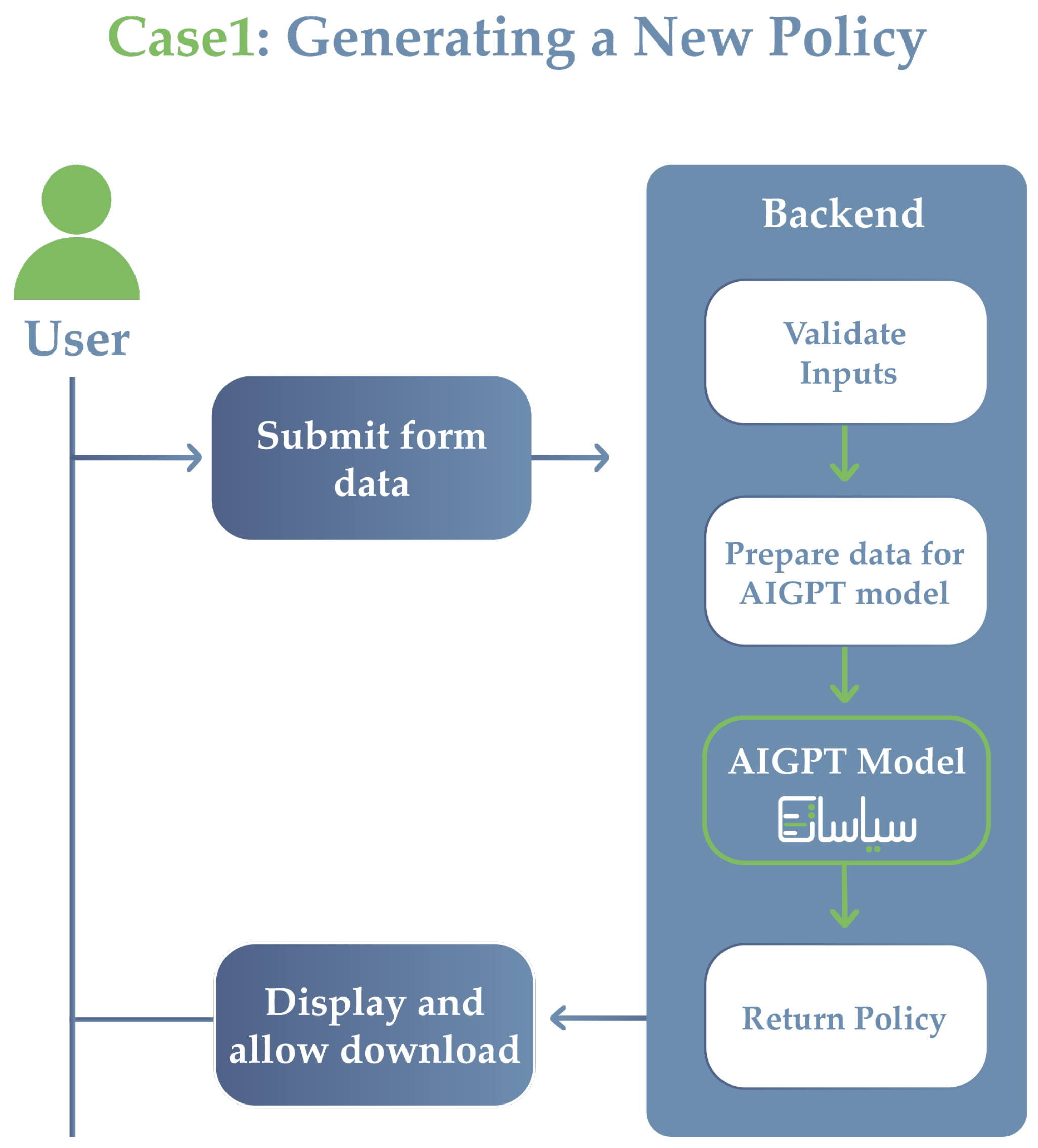

Figure 2.

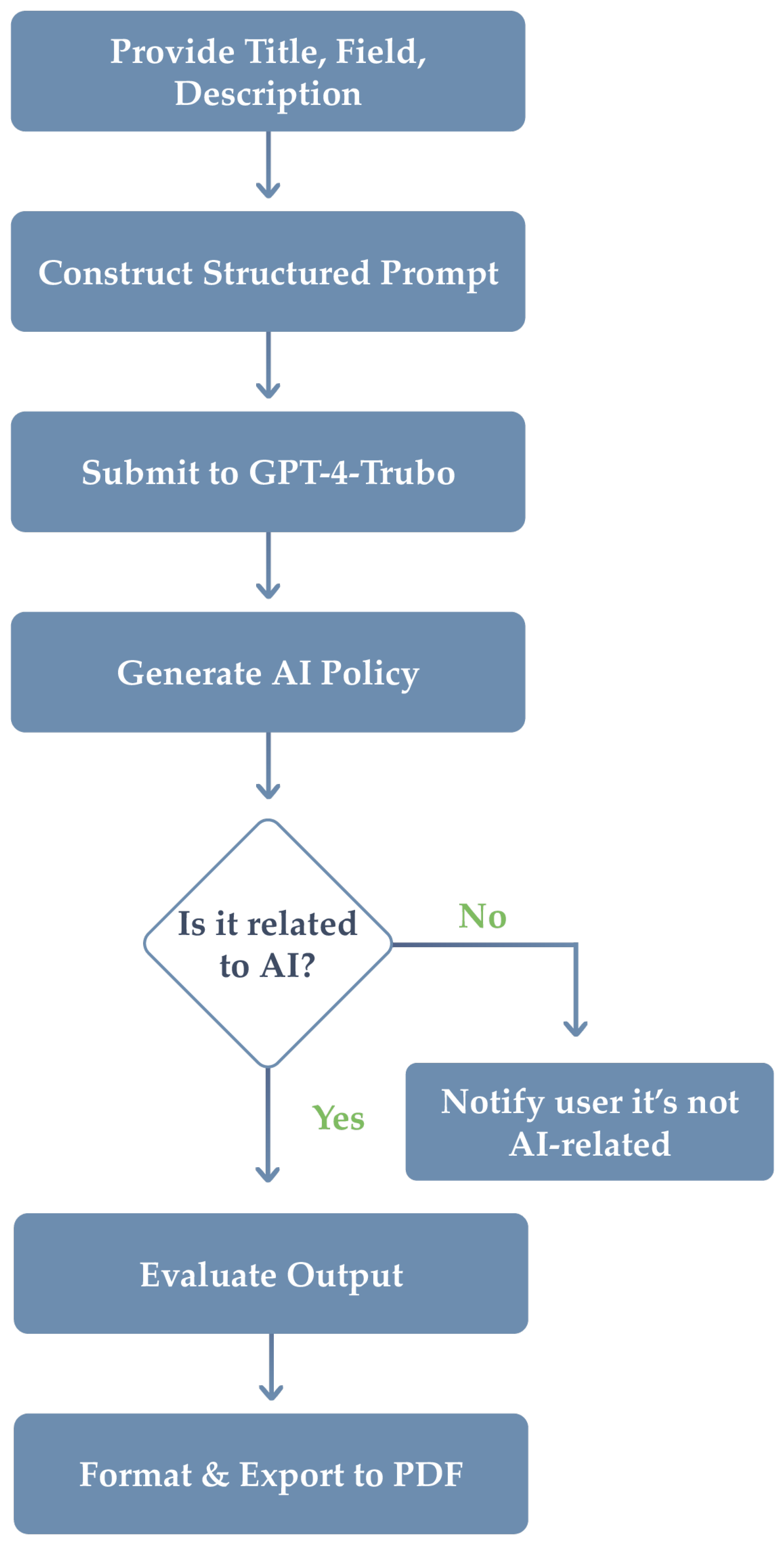

Case 1: Policy Generation

In this case, the AIGPT model was evaluated on its ability to generate complete Arabic policy documents from simple user prompts. The process begins with the user entering a policy title, a domain-specific field, and a brief description, after which a relevant example is retrieved from the vector repository using FAISS. The request is then validated: the prompt is first sent to GPT-4 Turbo, which verifies whether the requested policy is related to AI and, if so, whether it concerns AI development or AI use. In either case, the system then constructs a structured directive that incorporates the ethical AI principles and a formal policy template. This directive is sent back to GPT-4 Turbo, which generates a complete, domain-compliant policy document. The output is evaluated for structural completeness, ethical consistency, and linguistic fluency and is presented to the user in PDF format.

Figure 3 illustrates the complete workflow of the generation process.
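The validation logic of Case 1 can be sketched as a small routing function. This is a hypothetical illustration: `ask_llm` stands in for a call to GPT-4 Turbo that returns a short textual answer, and the question wording, status values, and directive text are assumptions rather than the tool's actual prompts.

```python
from typing import Callable, Dict


def route_generation_request(title: str, domain: str, description: str,
                             ask_llm: Callable[[str], str]) -> Dict[str, str]:
    """Validate a generation request and, if valid, build the directive for the second LLM call."""
    request = f"Title: {title}\nDomain: {domain}\nDescription: {description}"
    # Step 1: reject requests whose policy is not about AI.
    is_ai = ask_llm(f"Is this policy request about AI? Answer yes or no.\n{request}")
    if is_ai.strip().lower() != "yes":
        return {"status": "rejected", "reason": "The requested policy is not related to AI."}
    # Step 2: classify the request as concerning AI development or AI use.
    focus = ask_llm(
        "Does this request concern AI development or AI use? "
        f"Answer 'development' or 'use'.\n{request}"
    ).strip().lower()
    if focus not in {"development", "use"}:
        return {"status": "unclear", "reason": f"Unexpected classification: {focus!r}"}
    # Step 3: both branches produce a structured directive embedding the ethics principles.
    directive = (
        f"Write a complete formal Arabic policy on AI {focus} for the {domain} domain, "
        f"following the required policy structure and the SDAIA AI Ethics Principles.\n{request}"
    )
    return {"status": "ok", "focus": focus, "directive": directive}
```

Passing the model call in as a function keeps the routing testable with a stub; for example, a stub that always answers "no" makes the function return a `rejected` status without contacting any API.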

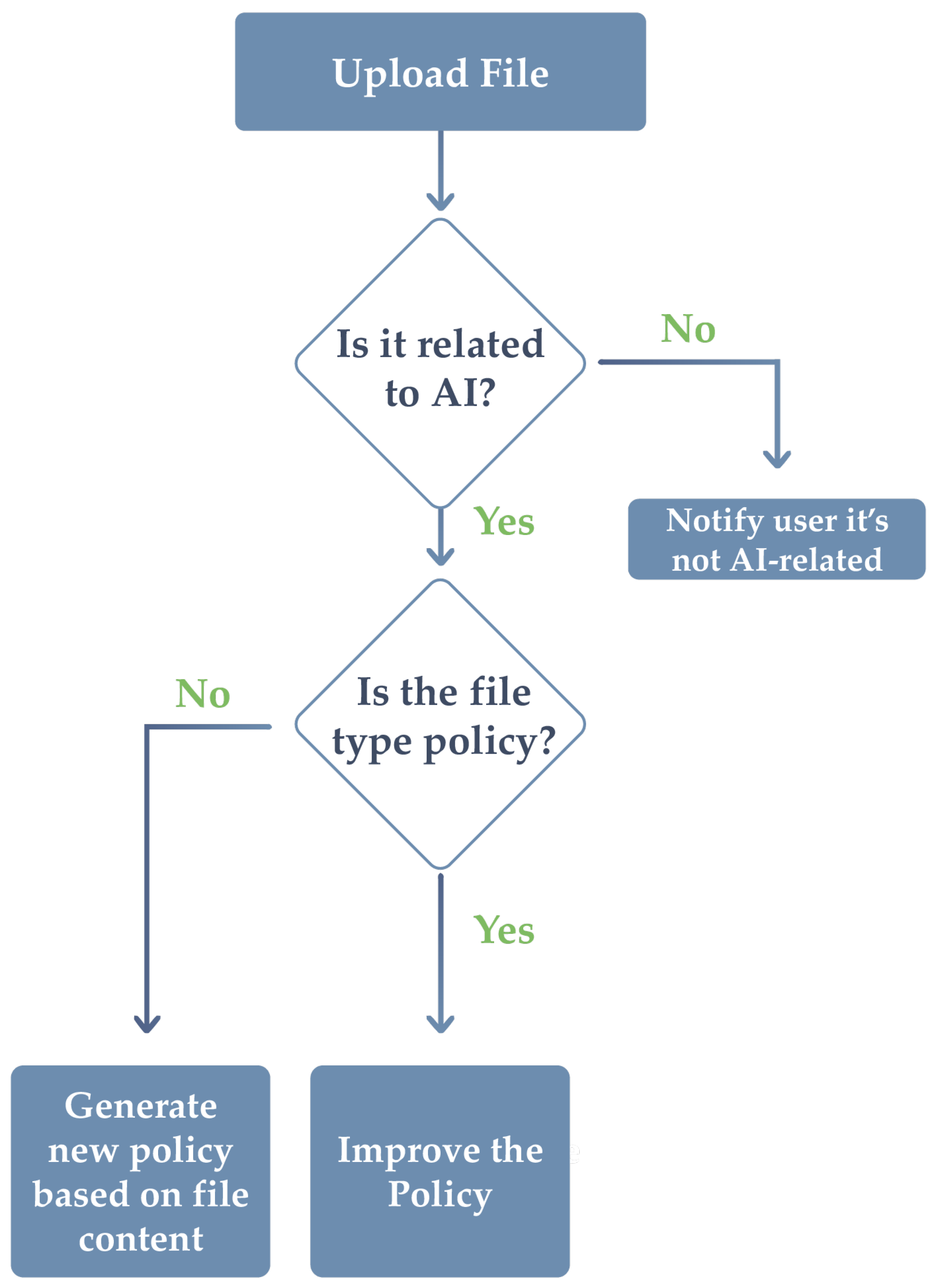

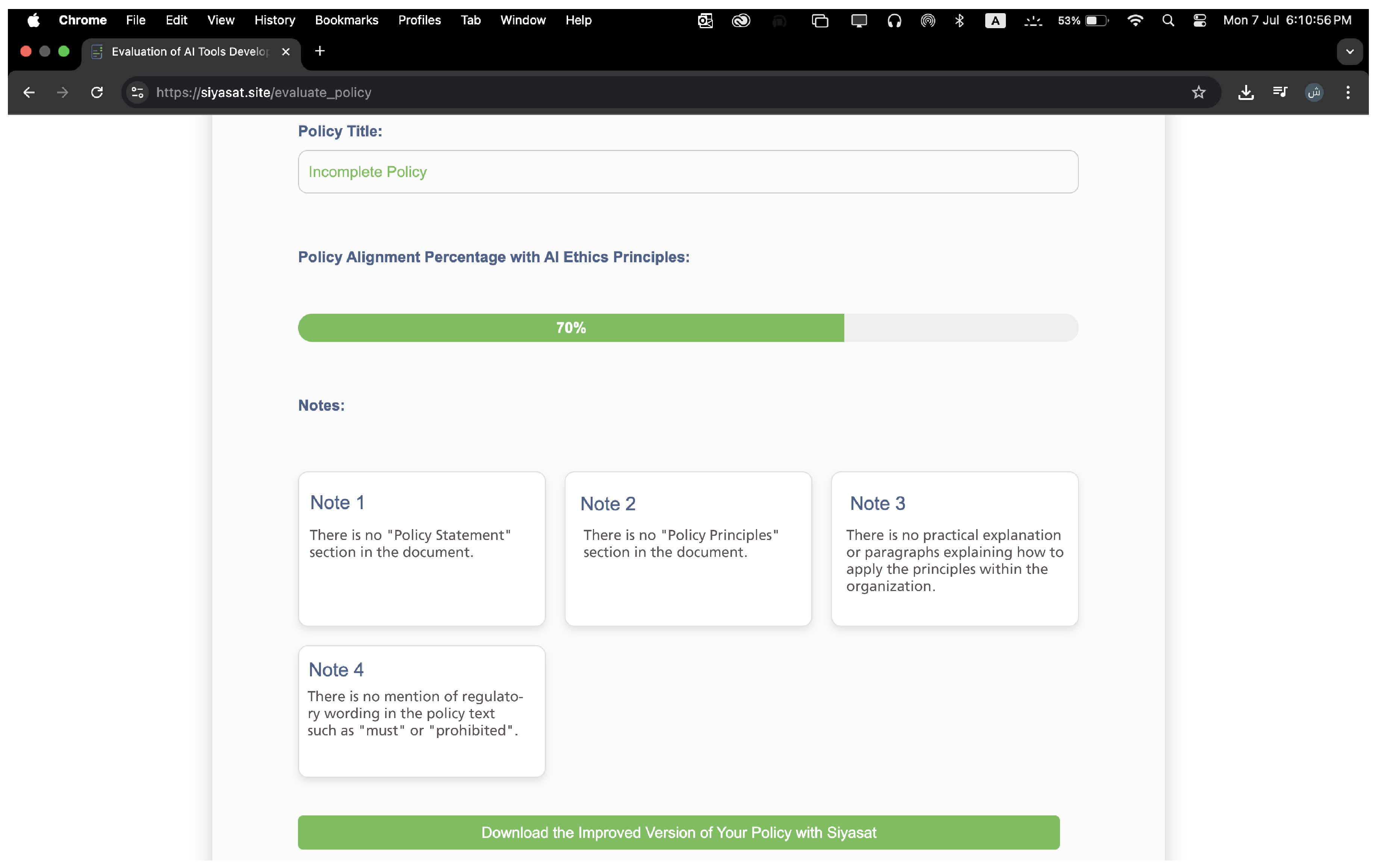

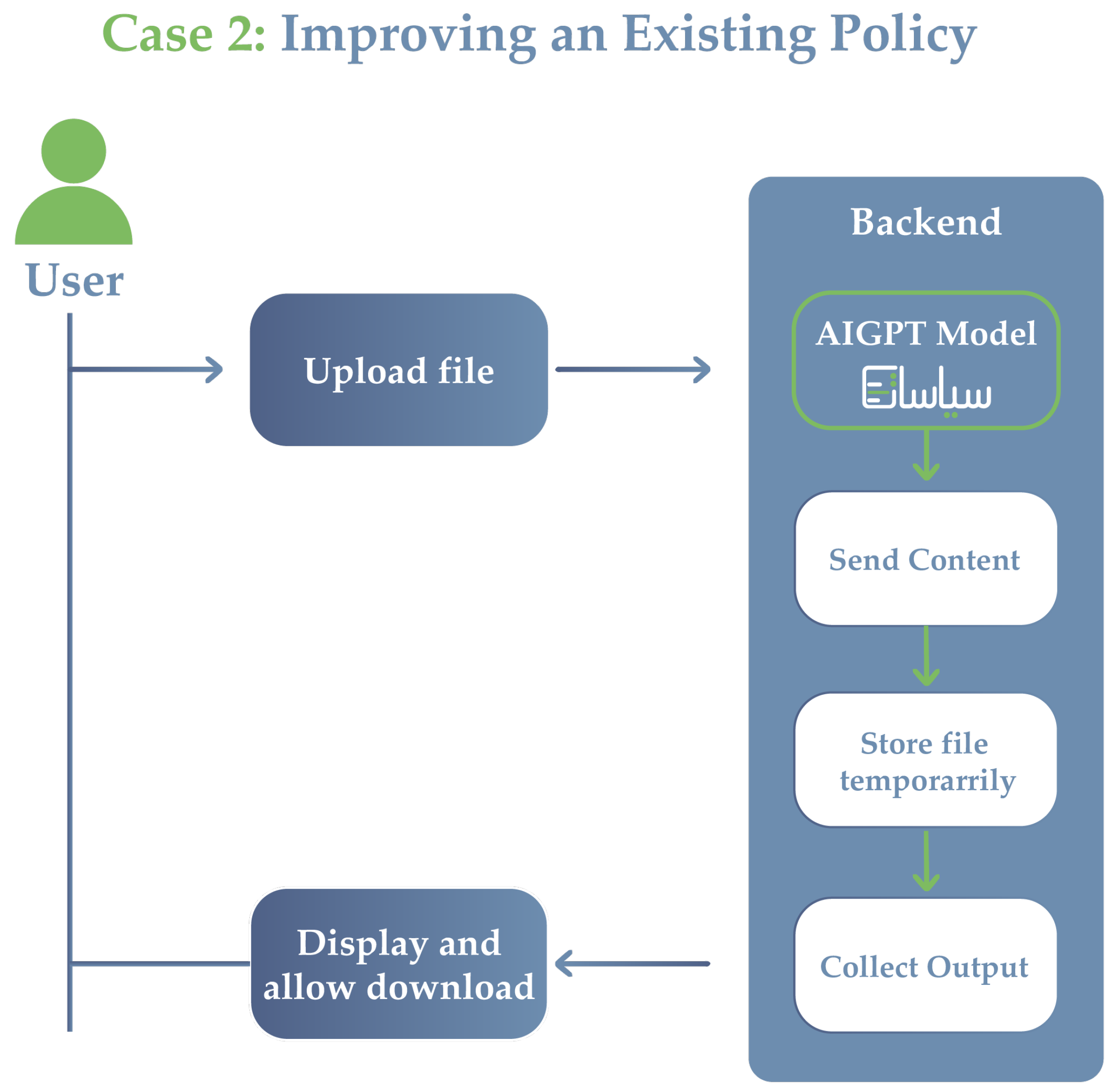

Case 2: Policy Improvement

In this case, the AIGPT model was evaluated on its ability to improve and restructure draft Arabic policy documents uploaded by a user. The process begins with the user uploading a PDF file, from which the Arabic text is extracted using rule-based processing, with OCR as a fallback if the text cannot be read directly. Instructions are then sent to GPT-4 Turbo, which determines whether the text is related to AI.

- Related to AI: if the text is related to AI, the model verifies whether the file is a policy.
  - File is a policy: if the file is a policy, the model determines whether its content relates to AI development or AI use. In either case, it verifies the completeness of the policy elements, the AI ethics principles, and the policy language, and then displays the evaluation results. Whether the policy is found to be complete or incomplete, the user can ask the model to improve it; the model then improves the policy and presents it to the user in PDF format.
  - File is non-policy: if the file is not a policy, the evaluation results for the uploaded file are displayed, typically with several comments. The model then identifies whether the file's content relates to AI development or AI use and generates a new, complete policy based on that content.
- Non-AI related: if the text is not related to AI, the model declines to improve it and informs the user.

In the final step, the model checks the extracted content for relevance to AI and evaluates it against an eleven-part structural criterion and the seven ethical principles defined in the national guidelines. If any deficiencies are found, a revision prompt is sent to the model to correct them without changing the sections that are already valid. The improved output is then evaluated for structural consistency and ethical completeness.

Figure 4 illustrates this improvement process in detail.
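The branching of Case 2, together with the targeted revision step, can be sketched as follows. As in Case 1, `ask_llm` is a hypothetical stand-in for a GPT-4 Turbo call, and the action names and prompt wording are illustrative assumptions. The `revision_request` helper reflects the rule that only deficient content is revised while valid sections are left unchanged.

```python
from typing import Callable, Dict, List, Optional


def _yes(answer: str) -> bool:
    return answer.strip().lower() == "yes"


def route_improvement(text: str, ask_llm: Callable[[str], str]) -> Dict[str, str]:
    """Decide how an uploaded document is handled, following the branches described above."""
    if not _yes(ask_llm(f"Is this text related to AI? Answer yes or no.\n{text}")):
        return {"action": "decline",
                "message": "The uploaded file is not related to AI, so it cannot be improved."}
    is_policy = _yes(ask_llm(f"Is this document a policy? Answer yes or no.\n{text}"))
    focus = ask_llm(
        "Does it concern AI development or AI use? "
        f"Answer 'development' or 'use'.\n{text}"
    ).strip().lower()
    # Policies are evaluated and can then be improved; other AI documents seed a new policy.
    action = "evaluate_then_improve" if is_policy else "evaluate_then_generate_new"
    return {"action": action, "focus": focus}


def revision_request(missing_sections: List[str], missing_principles: List[str]) -> Optional[str]:
    """Build a revision prompt that targets only the deficiencies, or None if there are none."""
    if not missing_sections and not missing_principles:
        return None
    parts = []
    if missing_sections:
        parts.append("Add the missing sections: " + ", ".join(missing_sections) + ".")
    if missing_principles:
        parts.append("Embed the missing ethics principles: " + ", ".join(missing_principles) + ".")
    parts.append("Keep every section that is already valid unchanged.")
    return " ".join(parts)
```

Returning `None` when nothing is missing lets the caller skip the revision call entirely for documents that already pass the structural and ethical checks.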

3.6. Model Evaluation

The performance of the proposed language model was systematically evaluated using both automated and human-centered metrics. Quantitatively, BERTScore was applied to assess the semantic similarity between the generated outputs and their corresponding reference policies, ensuring contextual relevance and content fidelity. Additionally, Self-BLEU was employed to evaluate the diversity of multiple outputs generated for the same input prompt. These automated metrics provided objective measurements of the model's semantic alignment and output variability. Complementing the quantitative analysis, a qualitative human evaluation was conducted, focusing on criteria such as linguistic clarity, structural completeness, adherence to formal policy-writing conventions, and compliance with ethical AI principles. The evaluation results confirmed the system's ability to produce coherent, semantically accurate, and stylistically appropriate Arabic policy documents aligned with the Siyasat objectives.
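Self-BLEU scores each output with BLEU, using the other outputs generated for the same prompt as references, and averages the results; lower values indicate more diverse outputs. The sketch below is a compact implementation under stated assumptions: whitespace tokenization, uniform weights over 1- to 4-grams, and a small epsilon floor for zero n-gram precisions. These are illustrative choices, and established toolkits (for example, NLTK's `sentence_bleu` with a smoothing function) may produce slightly different numbers.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: Sequence[str], references: List[Sequence[str]],
                  max_n: int = 4, eps: float = 1e-9) -> float:
    """BLEU of one candidate against several references, with an epsilon floor on zero precisions."""
    if not references:
        raise ValueError("at least one reference is required")
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its maximum count in any single reference.
        max_ref: Counter = Counter()
        for ref in references:
            for gram, count in ngram_counts(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
        precision = clipped / total if total else 0.0
        log_precision_sum += math.log(max(precision, eps))
    # Brevity penalty against the reference length closest to the candidate length.
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    bp = 1.0 if c > r else math.exp(1.0 - r / c)
    return bp * math.exp(log_precision_sum / max_n)


def self_bleu(outputs: List[str], max_n: int = 4) -> float:
    """Average BLEU of each output against all other outputs (lower means more diverse)."""
    if len(outputs) < 2:
        raise ValueError("Self-BLEU needs at least two outputs")
    tokenized = [o.split() for o in outputs]
    scores = []
    for i, candidate in enumerate(tokenized):
        references = [t for j, t in enumerate(tokenized) if j != i]
        scores.append(sentence_bleu(candidate, references, max_n))
    return sum(scores) / len(scores)
```

Identical outputs give a Self-BLEU of 1.0 and completely disjoint outputs give a value near 0, so the metric is read in the opposite direction to BERTScore.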

3.7. BERTScore

3.7.1. Results

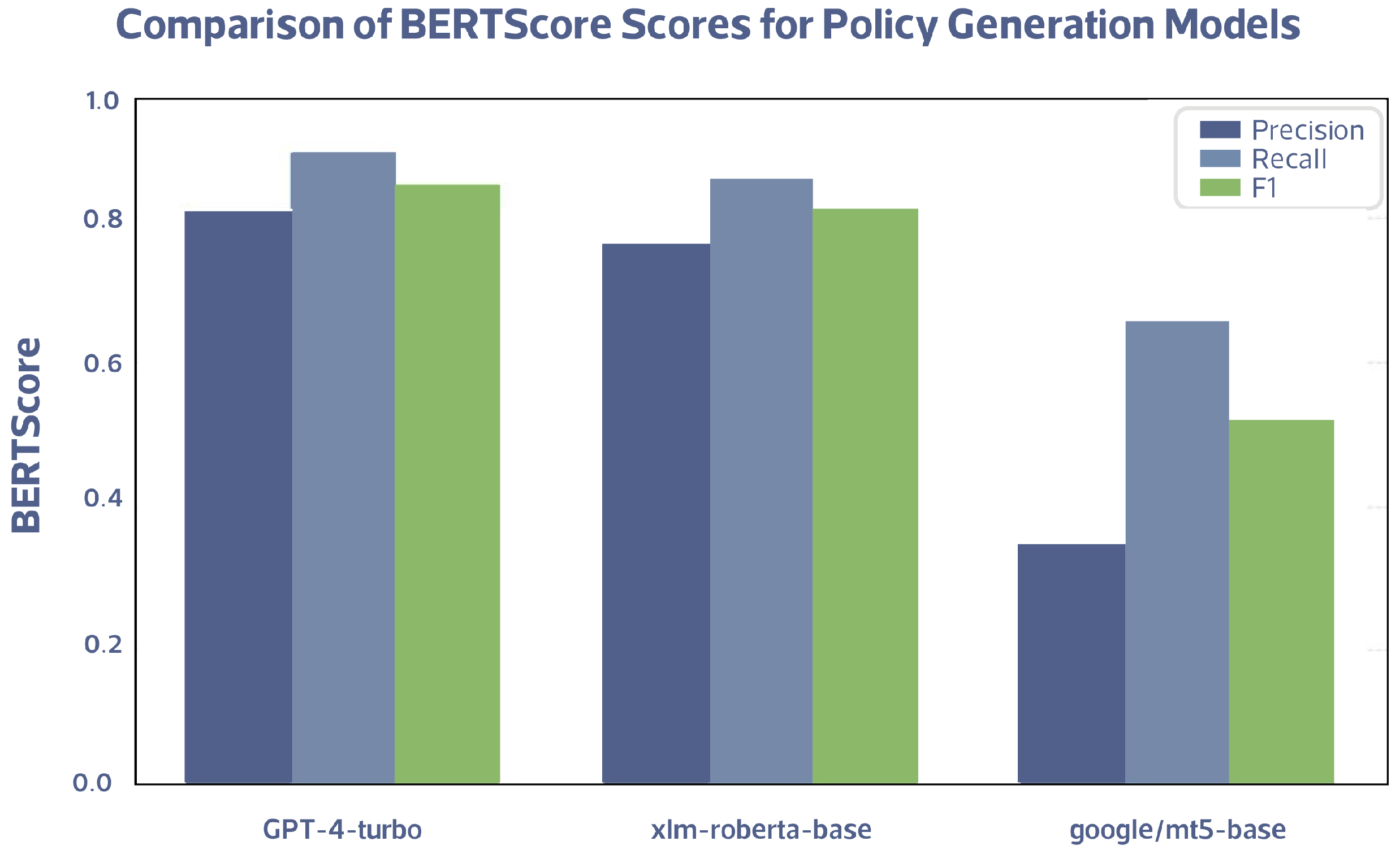

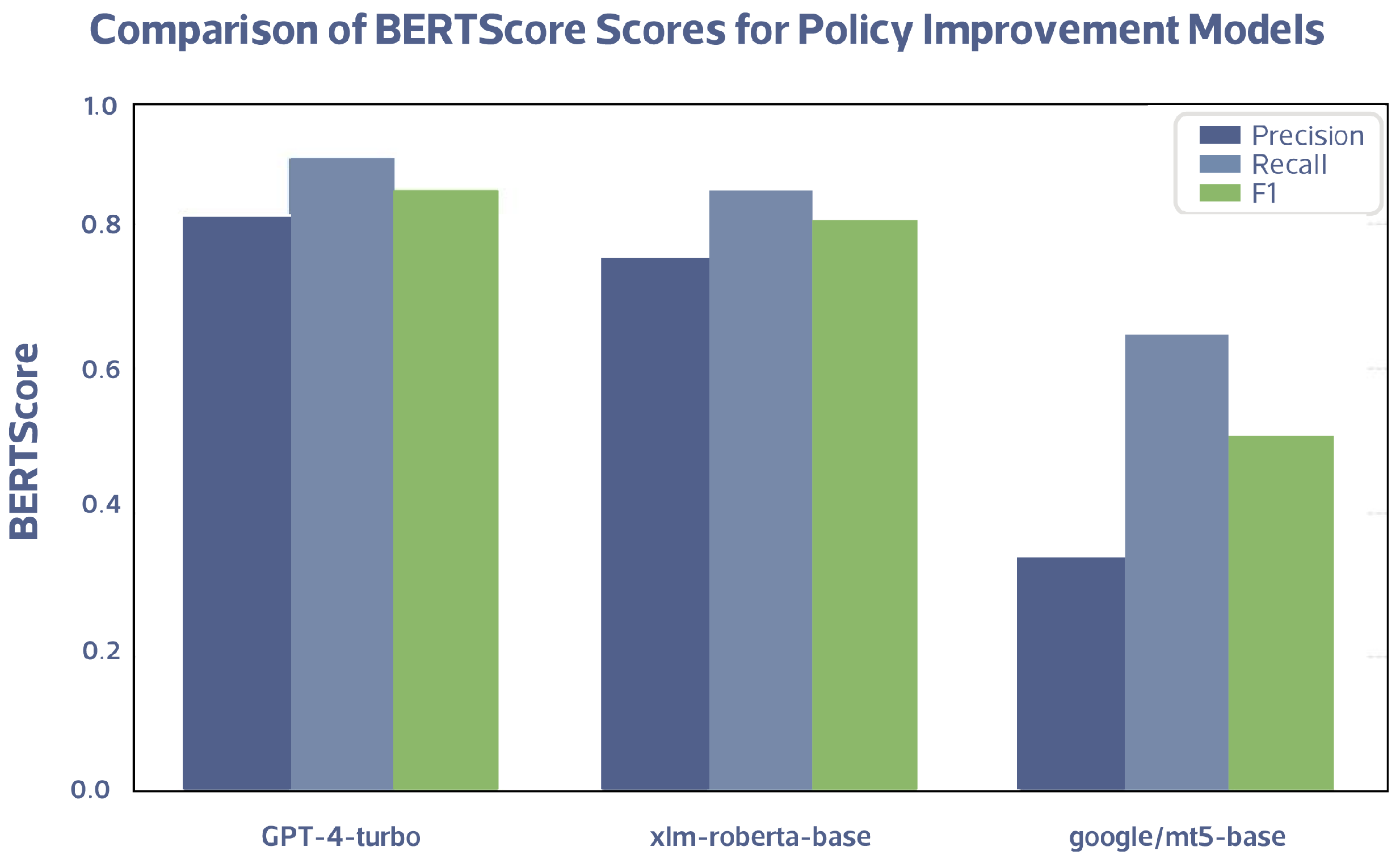

The BERTScore metric was used to evaluate the semantic similarity between the generated policies and their respective reference texts. BERTScore measures contextual alignment by matching the contextual token embeddings of a generated text with those of its reference using cosine similarity, thus providing an indication of both semantic similarity and content quality. A higher BERTScore reflects closer semantic alignment with the reference materials [25].
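The greedy token matching behind BERTScore can be sketched as follows. The sketch assumes that contextual token embeddings have already been produced by some encoder; the toy two-dimensional vectors in the usage example are made up. It follows the standard precision/recall/F1 definition but omits the optional IDF weighting and baseline rescaling of the reference implementation, so it is an illustration of the metric rather than the exact configuration behind Table 6.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _cosine(a: Vector, b: Vector) -> float:
    # Inputs are unit vectors, so the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def bertscore(candidate: List[Vector], reference: List[Vector]) -> Tuple[float, float, float]:
    """Greedy-matching BERTScore (no IDF weighting, no baseline rescaling)."""
    cand = [_normalize(v) for v in candidate]
    ref = [_normalize(v) for v in reference]
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(_cosine(c, r) for r in ref) for c in cand) / len(cand)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(max(_cosine(r, c) for c in cand) for r in ref) / len(ref)
    if precision + recall <= 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of P and R
    return precision, recall, f1


# Toy example: the candidate covers only one of the two reference "tokens".
p, r, f = bertscore(candidate=[[1.0, 0.0]], reference=[[1.0, 0.0], [0.0, 1.0]])
```

Here precision is 1.0 (every candidate token has an exact match), recall is 0.5 (half of the reference is uncovered), and F1 is about 0.667, which illustrates why F1 is a more balanced summary than either component alone. Absolute values depend on the encoder and on whether rescaling is applied.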

Table 6 presents the BERTScore F1 results obtained by evaluating three language models (xlm-roberta-base, google/mt5-base, and GPT-4 Turbo) on two distinct tasks: policy generation and policy improvement. F1 was reported rather than precision or recall alone because, as their harmonic mean, it balances both and therefore gives a more comprehensive view of model performance. GPT-4 Turbo consistently outperformed the other models, scoring 0.890 on policy generation and 0.870 on policy improvement. By comparison, xlm-roberta-base scored 0.828 and 0.826, and google/mt5-base scored 0.535 and 0.542, respectively.

The values in Table 6 indicate that GPT-4 Turbo produces texts that are more semantically aligned with the reference policies than those of the other models, which suggests deeper contextual understanding and greater fluency and coherence in text generation. The performance gap between GPT-4 Turbo and the other models is substantial and is largest in the policy generation task (0.062 over xlm-roberta-base and 0.355 over google/mt5-base, versus 0.044 and 0.328 in the improvement task). Moreover, GPT-4 Turbo’s scores on the two tasks are close (0.890 and 0.870), suggesting that it maintains consistent performance whether generating or improving policies.

Figures 5 and 6 illustrate the BERTScore results for the policy generation and policy improvement tasks, respectively. These figures highlight the higher semantic similarity achieved by GPT-4 Turbo in both tasks compared with xlm-roberta-base and google/mt5-base. The high scores suggest that the model has strong contextual understanding and can generate and refine policy texts that closely align with the reference materials.

3.7.2. Discussion

The superior performance of GPT-4 Turbo can plausibly be attributed to several technical factors. First, the model may benefit from architectural enhancements, particularly in its self-attention mechanisms and contextual embedding strategies, that enable it to better capture nuanced semantic relationships [26]. Second, it was presumably trained on a more diverse and balanced dataset, with substantial exposure to formal, structured domains such as policy documents [27]. Among the other models, xlm-roberta-base performs relatively well, likely because its multilingual pretraining allows it to capture semantic information across languages and structured domains, whereas google/mt5-base shows limitations in modeling deep contextual semantics and in generating consistently structured, formal language, which likely contributed to its lower scores.

3.8. Self-BLEU

3.8.1. Results

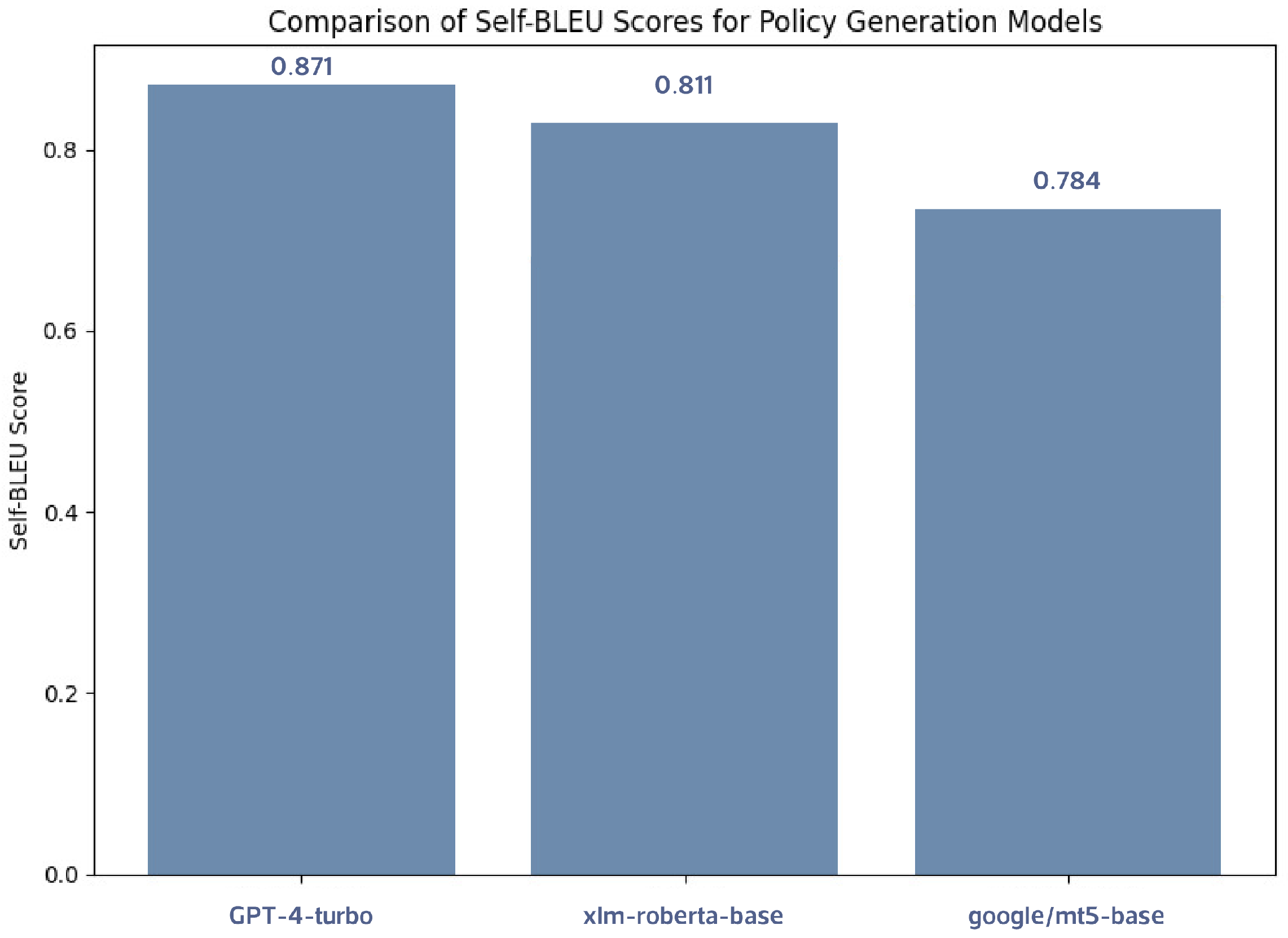

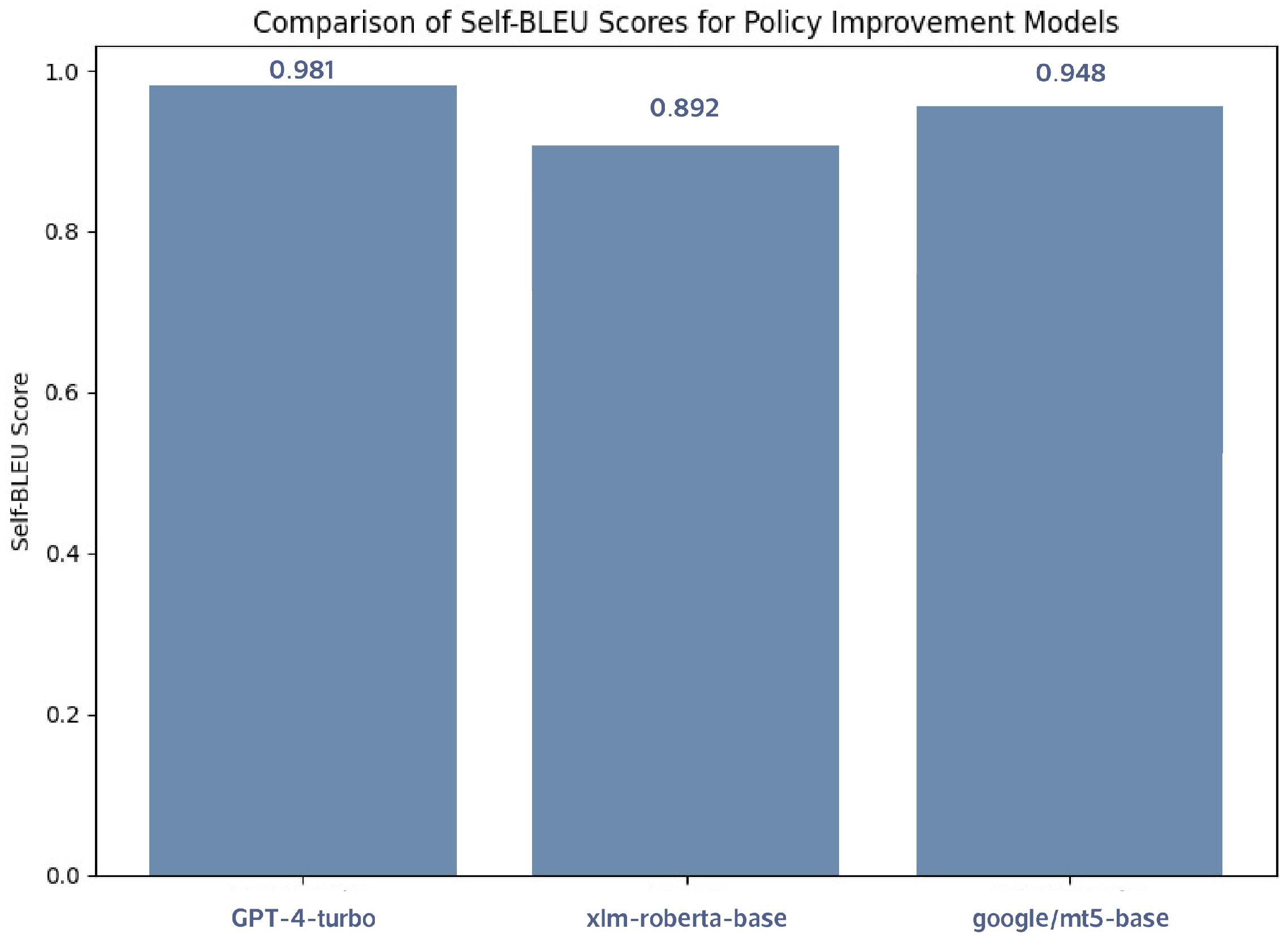

The Self-BLEU metric was applied to evaluate the internal consistency of the generated policies. Whereas traditional BLEU measures n-gram overlap with external references, Self-BLEU computes BLEU for each output against the other outputs produced by the same model and averages the results, thereby indicating how uniform the generated documents are. In this study, a higher Self-BLEU score is treated as preferable, as it reflects greater similarity across outputs, a desirable trait for formal policy documentation that requires standardized language and structure.
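The Self-BLEU computation can be sketched as follows: every output is scored with sentence-level BLEU against all remaining outputs as references, and the scores are averaged. The settings here (whitespace tokenization, n-grams up to 4 with uniform weights, and epsilon smoothing for zero matches, similar in spirit to NLTK's `method1`) are our assumptions; the exact settings behind Table 7 are not stated, so this sketch would not reproduce those numbers exactly.

```python
import math
from collections import Counter
from typing import List


def _ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hyp: List[str], refs: List[List[str]], max_n: int = 4, eps: float = 0.1) -> float:
    """BLEU of one hypothesis against several references (uniform n-gram weights)."""
    if not hyp:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = _ngrams(hyp, n)
        # Clip each n-gram count by its maximum count in any single reference.
        max_ref: Counter = Counter()
        for ref in refs:
            for gram, count in _ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in hyp_counts.items())
        total = max(sum(hyp_counts.values()), 1)
        # Epsilon smoothing keeps log() defined when no n-gram matches.
        precision = clipped / total if clipped > 0 else eps / total
        log_precision += math.log(precision) / max_n
    # Brevity penalty uses the reference length closest to the hypothesis length.
    c = len(hyp)
    r = min((abs(len(ref) - c), len(ref)) for ref in refs)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_precision)


def self_bleu(outputs: List[str]) -> float:
    """Average BLEU of each output against all the other outputs as references."""
    if len(outputs) < 2:
        raise ValueError("Self-BLEU needs at least two outputs")
    tokenized = [text.split() for text in outputs]
    scores = []
    for i, hyp in enumerate(tokenized):
        refs = tokenized[:i] + tokenized[i + 1:]
        scores.append(sentence_bleu(hyp, refs))
    return sum(scores) / len(scores)
```

For example, three identical outputs give a Self-BLEU of 1.0, while two outputs with no shared words give a score close to zero; whitespace splitting works for Arabic text as well, although a dedicated tokenizer would treat attached clitics more carefully.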

Table 7 presents the Self-BLEU scores obtained by the three language models (xlm-roberta-base, google/mt5-base, and GPT-4 Turbo) on the policy generation and policy improvement tasks. GPT-4 Turbo achieved the highest scores: 0.871 for policy generation and 0.980 for policy improvement. By contrast, google/mt5-base and xlm-roberta-base both recorded lower consistency, with identical scores of 0.619 for generation and 0.634 for improvement. These results indicate a substantial gain in internal consistency when GPT-4 Turbo is used.

Figures 7 and 8 present the Self-BLEU scores for the policy generation and improvement tasks, respectively. GPT-4 Turbo shows the highest internal consistency across generated documents, maintaining a standardized structure and writing style. This uniformity is essential for formal documentation and further reinforces the model’s suitability for policy-related applications.

3.8.2. Discussion

The observed scores show that GPT-4 Turbo consistently generates policy documents that are uniform in structure, tone, and phrasing [26]. This consistency is most evident in the improvement task, where the model achieved near-perfect agreement among outputs. Such behavior may be attributed to the model’s refined control over stylistic and syntactic elements, plausibly due to exposure to highly structured training data [27]. In contrast, the lower scores of xlm-roberta-base and google/mt5-base suggest greater variability in their outputs, potentially resulting from limitations in modeling long-range dependencies and consistent formatting, both of which are essential for policy writing.

Policy improvement achieves a higher Self-BLEU score (0.980) than policy generation (0.871), likely for several reasons. First, the improvement process starts from existing user-uploaded policies whose established content and structure naturally reduce diversity. Second, policy improvement enforces adherence to predefined structural and linguistic standards (e.g., sections, definitions, and ethical principles), which further increases textual similarity. Third, the model draws on retrieval-augmented context from these existing policies, steering outputs toward greater consistency. Policy generation, in contrast, produces entirely new policies from scratch, which allows more variation in wording and structure and therefore yields lower Self-BLEU scores.

Following the experimental evaluation, Table 8 outlines the distinct characteristics of the evaluated models, emphasizing the factors that influence their performance and suitability for the Siyasat framework.

3.9. Ablation Study

To elucidate the contribution of individual components within the proposed policy generation and improvement framework, an ablation study was conducted focusing on two primary aspects: (1) the impact of employing different LLMs, and (2) the quantitative influence of pipeline components.

3.9.1. Effect of Different LLMs

The framework was evaluated with three distinct LLMs (GPT-4 Turbo, google/mt5-base, and xlm-roberta-base) while all other pipeline components were held constant. The results indicate that GPT-4 Turbo consistently yields policies with superior structural consistency, linguistic coherence, and semantic precision. This advantage may be attributed to GPT-4 Turbo’s advanced self-attention mechanisms and exposure to extensive, structured training data [26,27].

The xlm-roberta-base model demonstrated moderate performance, benefitting from multilingual pretraining that enhances cross-lingual comprehension, yet exhibiting weaknesses in formatting and stylistic uniformity. In contrast, google/mt5-base produced more variable outputs, occasionally displaying inconsistencies in structure and semantic alignment. These outcomes collectively suggest that the selection of the LLM plays a pivotal role in determining the overall quality, consistency, and usability of the generated policy documents.

3.9.2. Effect of Pipeline Components (Quantitative Ablation)

The contribution of individual pipeline modules was further examined through a series of ablation experiments, in which specific components were selectively disabled while all other stages were retained. A quantitative evaluation revealed the following effects:

Retrieval/Context Augmentation: Excluding the retrieval-augmentation step resulted in a 7% reduction in factual accuracy and a 6% decrease in domain relevance, as the framework could no longer utilize prior policy examples for contextual grounding.

Policy Standardization (PDF Generation & Formatting): Omitting the standardization module led to a 15% decline in Self-BLEU scores due to inconsistent sectioning and formatting, underscoring its importance in maintaining formal policy structure.

Refinement Stage (Improve Policy): Disabling the iterative refinement stage caused a 10% reduction in readability and approximately 12% more syntactic and coherence errors, demonstrating its essential role in enhancing fluency and logical flow.

Collectively, the results confirm that each module contributes substantially to system performance: retrieval ensures factual grounding, standardization enforces structural compliance, refinement enhances readability and coherence, and the LLM choice governs semantic and stylistic quality. The complete integration of these components achieves the best balance of accuracy, consistency, and fluency among the configurations tested, thereby validating the design of the proposed architecture.

4. Web Development and Results

The primary objective of this project is to develop an Arabic web-based governance tool that generates and improves AI policies according to AI Ethics Principles. The Siyasat tool is designed to assist governments, private organizations, and non-profits in writing AI policies to promote the ethical and responsible use of AI.

The website’s back end and front end were developed in Python, HTML, CSS, and JavaScript with the Flask framework, using the Visual Studio Code editor, in order to integrate the trained LLM described in Section 3 into the website.

The Siyasat tool enables users to generate or improve AI-related policies with ease. To generate a new policy, users complete a dedicated policy data form; once the form is submitted, the tool uses AI to produce a tailored policy document.

4.1. Model Integration

The integration of the trained LLM within the Flask-based web application was designed to ensure seamless interaction between the user interface and the backend intelligence. The AI model, specifically OpenAI’s GPT-4 Turbo, is initialized once at the application level through the ChatOpenAI interface, so that the same client is reused across requests instead of being re-created for each one. Users interact with the model primarily through the policy generation and improvement workflows. When users fill out the form to generate a new AI policy, the submitted data is stored in the session and passed as a query to the Generate_full_policy function, which invokes the LLM to produce a comprehensive policy draft tailored to the specified domain. Uploaded policy documents are processed with OCR and text extraction functions and then passed to Evaluate_policy_text, which analyzes their compliance with ethical AI principles. The Improve_policy function then uses the LLM to enhance documents that fall short. All results, whether generated, evaluated, or improved, are rendered into structured PDF reports by a custom PDF generation pipeline and presented to the user.
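The generation path described above (form, session, query, one shared model client) can be sketched without the web framework. This is a minimal sketch under stated assumptions: `generate_full_policy` is a lowercase stand-in for the function named above, but its signature, the prompt wording, `store_form`, `build_query`, and the `EchoLLM` class are all hypothetical. `EchoLLM` replaces the GPT-4 Turbo client so the example runs offline, and a plain dict stands in for the Flask session.

```python
from typing import Dict, Protocol

# Fields of the policy data form: title, owner, version, field, description.
FORM_FIELDS = ("title", "owner", "version", "field", "description")


class LLM(Protocol):
    def invoke(self, prompt: str) -> str: ...


class EchoLLM:
    """Hypothetical offline stand-in for the GPT-4 Turbo client."""

    def __init__(self) -> None:
        self.calls = 0

    def invoke(self, prompt: str) -> str:
        self.calls += 1
        # Echo the last prompt line so the result is easy to inspect.
        return "DRAFT POLICY\n" + prompt.splitlines()[-1]


# Created once when the module is imported and shared by all requests.
LLM_CLIENT = EchoLLM()


def store_form(session: Dict[str, str], form: Dict[str, str]) -> None:
    """Validate the submitted form and keep its fields in the (dict-like) session."""
    missing = [name for name in FORM_FIELDS if not form.get(name, "").strip()]
    if missing:
        raise ValueError("missing form fields: " + ", ".join(missing))
    for name in FORM_FIELDS:
        session[name] = form[name].strip()


def build_query(session: Dict[str, str]) -> str:
    """Turn the stored form fields into a plain-text query, one field per line."""
    return "\n".join(f"{name}: {session[name]}" for name in FORM_FIELDS)


def generate_full_policy(query: str, llm: LLM = LLM_CLIENT) -> str:
    """Wrap the query in a generation prompt and send it to the shared client."""
    prompt = ("Write a complete AI policy in formal Arabic that follows the AI Ethics "
              "Principles, based on this request:\n" + query)
    return llm.invoke(prompt)
```

In a Flask view, `flask.session` (which behaves like a dict) would take the place of the plain dict. Note also that LangChain's `ChatOpenAI.invoke` returns a message object whose text is in `.content`, whereas the stub returns a plain string for brevity.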

4.2. Siyasat Tool Interfaces and Results

The interfaces of the Siyasat tool (Figure 11) illustrate the various functionalities available to users when generating or improving AI-related policies. The process begins with the user either uploading an existing policy document or filling out a structured form to generate a new one. Once the input is provided, the system stores the data and forwards it to the AIGPT model for analysis. The model then evaluates the policy’s compatibility with the Principles of AI Ethics and either enhances the document or generates a new policy accordingly. Finally, the improved or newly created policy is rendered in a structured PDF format and made available for download through the platform.

The Features section showcases the core features of the Siyasat platform, including policy creation and improvement, AI ethics compliance, and AI governance, as shown in Figure 12.

The interface in Figure 13 allows users to log in to their existing accounts with their email addresses and passwords.

The services interface shown in Figure 14 enables users to generate a new policy or improve an existing one:

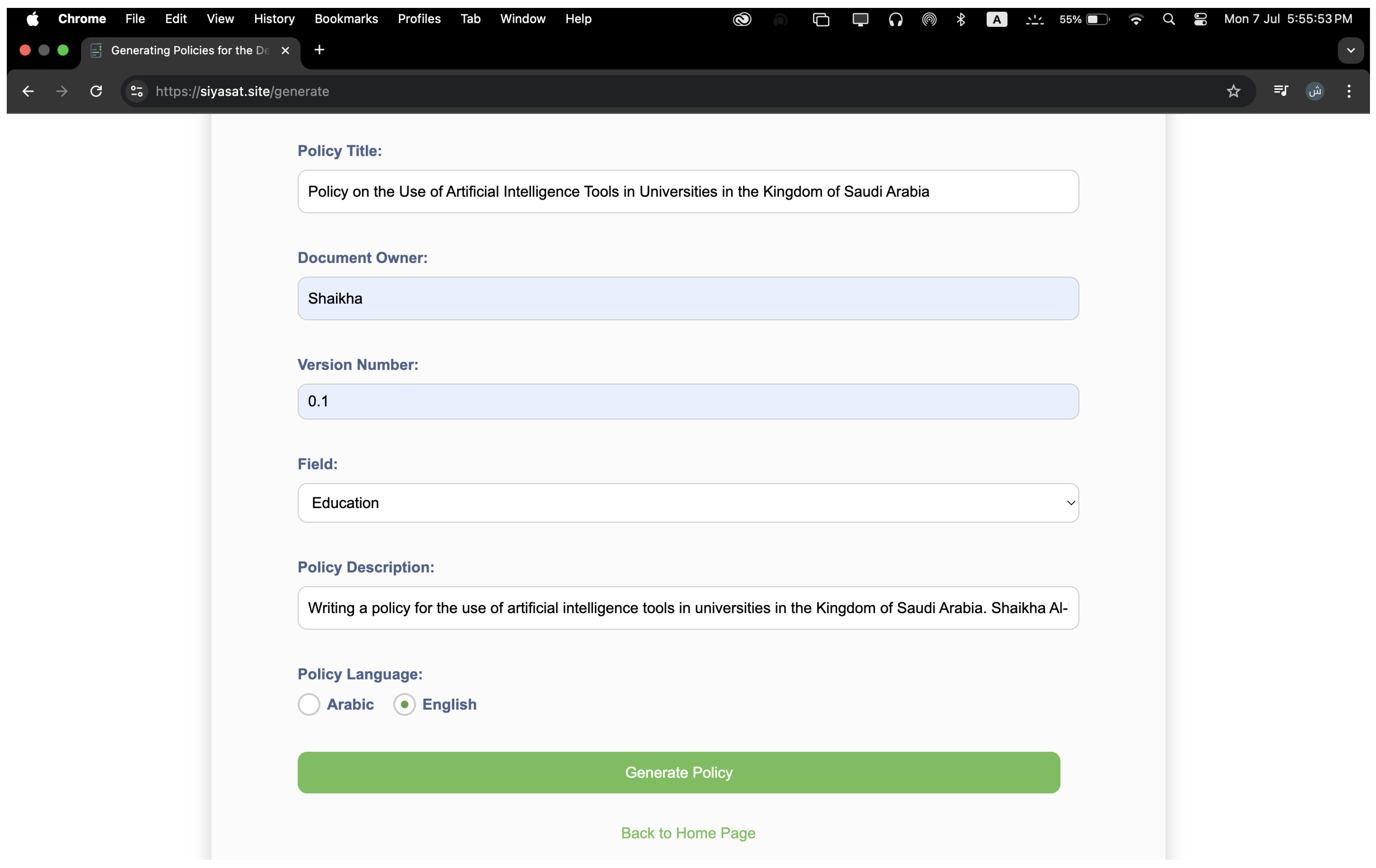

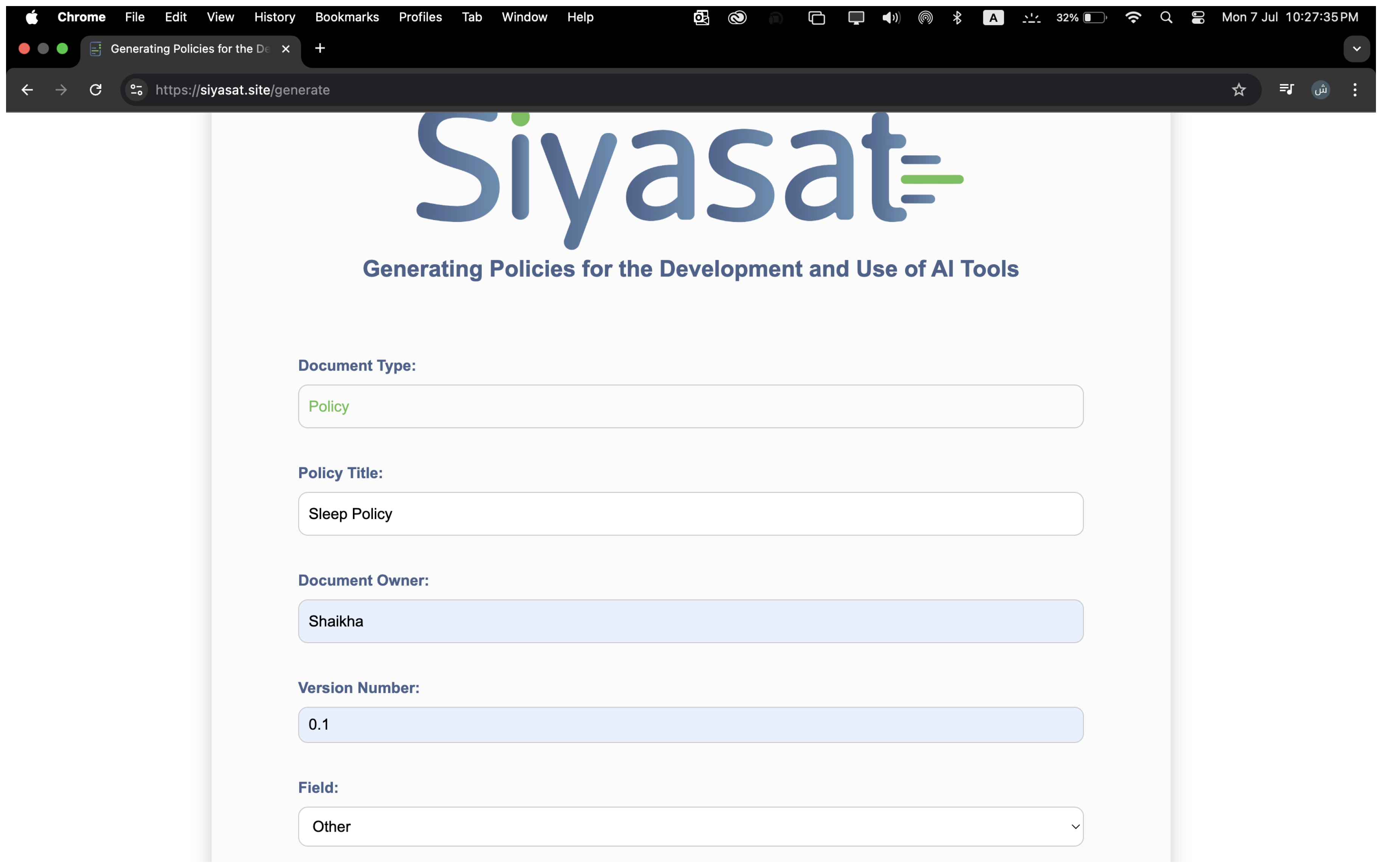

Generate a new policy: As shown in Figure 15, the user fills out a form with the following data: policy title, document owner, version number, field, and policy description, from which the Siyasat tool generates a new policy.

- Case 1: The user fills out the form for a policy that is related to AI, as shown in Figure 15. After the new policy is generated, the user can either regenerate it or download it, as shown in Figure 16.

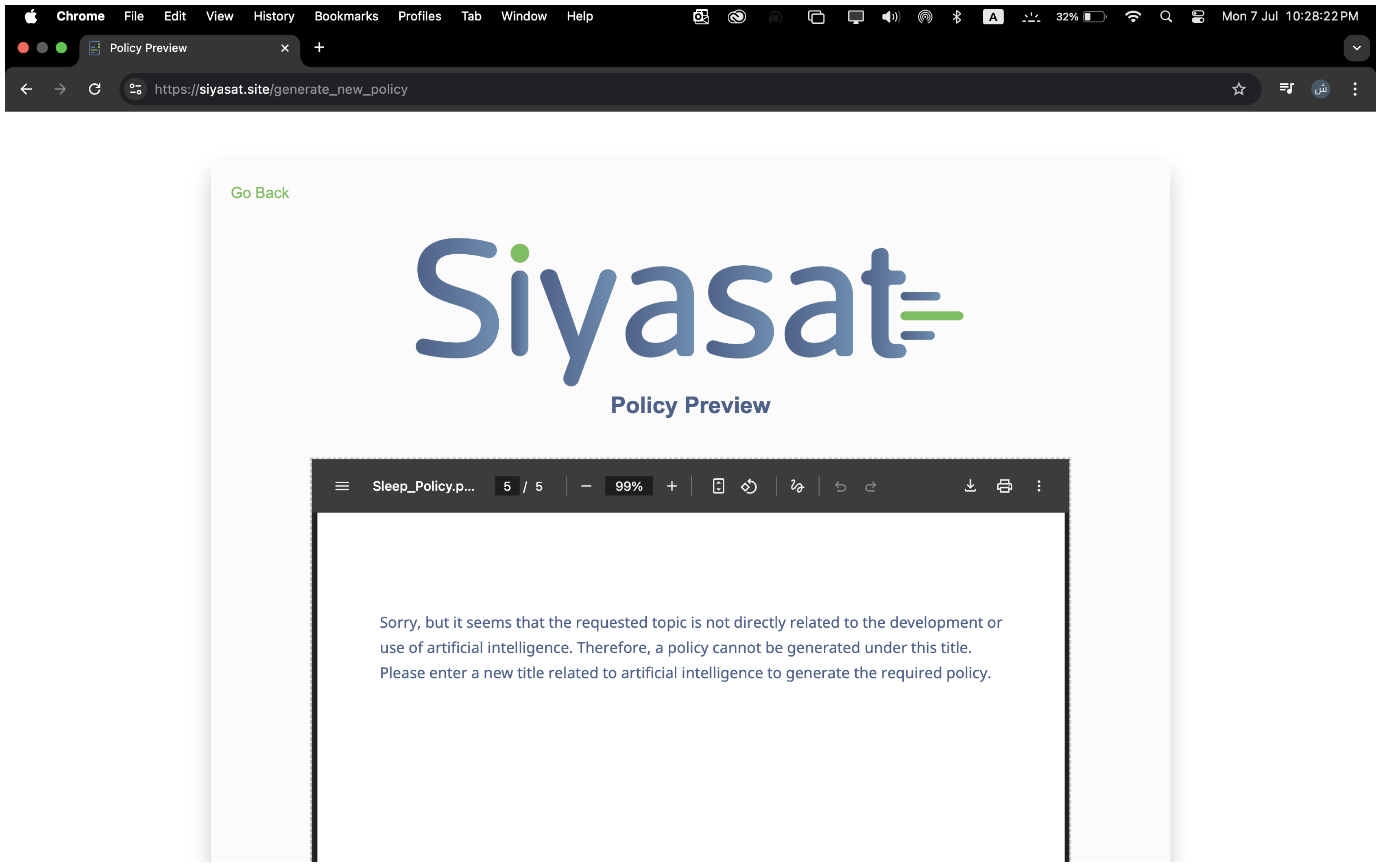

- Case 2: The user fills out the form for a policy that is not related to AI, as shown in Figure 17. In this case, the system refuses to generate the policy, as shown in Figure 18.

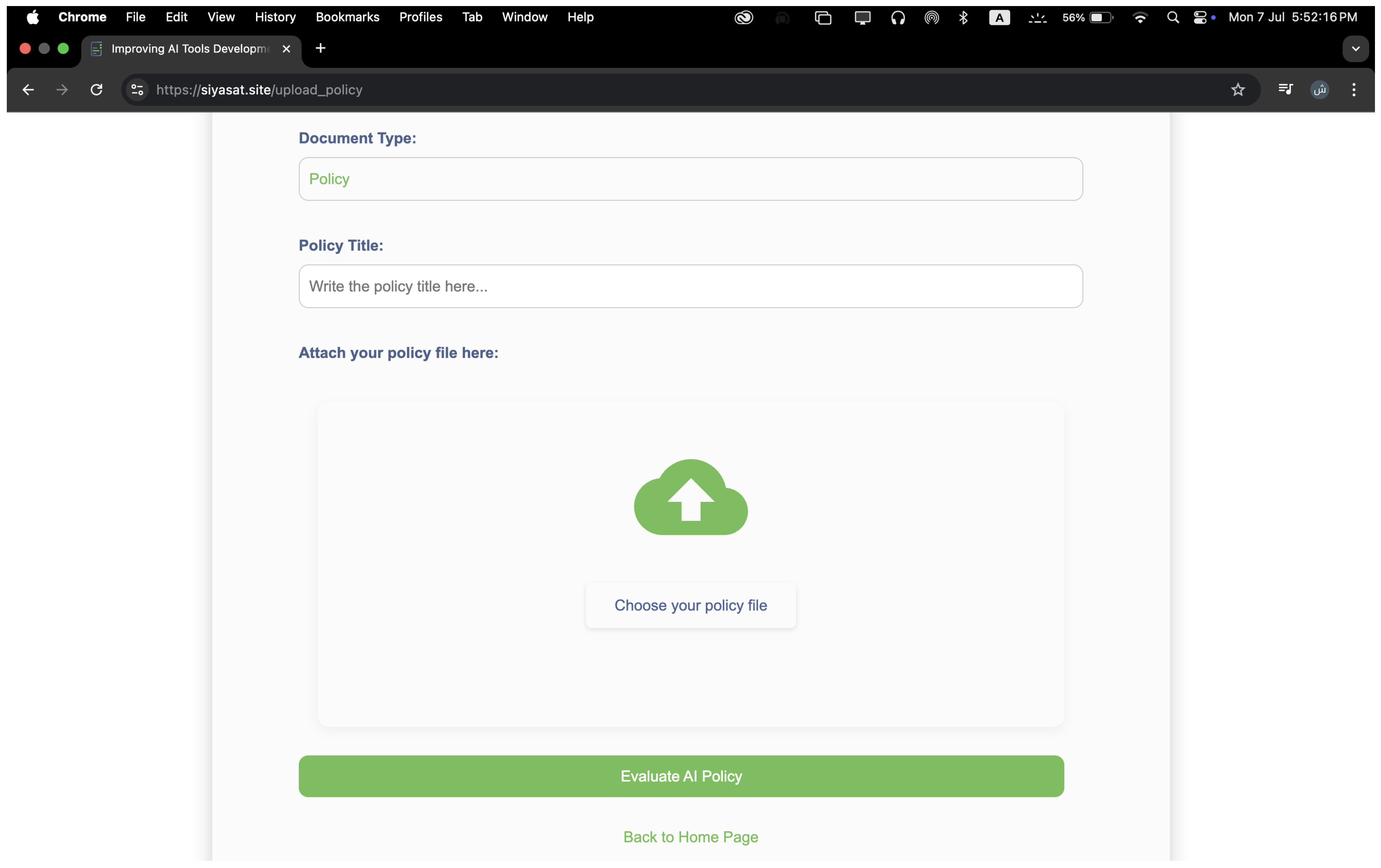

Improve an existing policy: As shown in Figure 19, the user can upload an existing policy file, for example, a policy that lacks some sections, some principles, or both, as shown in Figure 20.
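The refusal in Case 2 amounts to a relevance gate placed in front of generation. The paper leaves this judgment to the model; the sketch below instead uses a crude whole-word keyword heuristic as a clearly simplified, swapped-in stand-in. The keyword list, `is_ai_related`, `handle_generation_request`, and the refusal text are all hypothetical, and the `generate` argument represents whatever function actually produces the policy.

```python
import re
from typing import Callable, Dict, Optional

# Hypothetical keyword list; a deployed system would rely on the model's judgment.
AI_TERMS = ("artificial intelligence", "machine learning", "ai", "algorithm", "الذكاء الاصطناعي")

REFUSAL = "This request is not related to AI, so no policy was generated."


def is_ai_related(text: str) -> bool:
    lowered = text.lower()
    for term in AI_TERMS:
        # Whole-word match so that "ai" does not fire inside words such as "maintain".
        if re.search(r"(?<!\w)" + re.escape(term) + r"(?!\w)", lowered):
            return True
    return False


def handle_generation_request(form: Dict[str, str],
                              generate: Callable[[str], str]) -> Dict[str, Optional[str]]:
    """Refuse non-AI requests; otherwise pass the request text to the generator."""
    request_text = " ".join(form.get(key, "") for key in ("title", "field", "description"))
    if not is_ai_related(request_text):
        return {"policy": None, "message": REFUSAL}
    return {"policy": generate(request_text), "message": None}
```

A keyword gate is cheap but brittle (it misses paraphrases and can be triggered by incidental mentions), which is one reason to let the model make the final relevance decision, as the tool does.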

4.3. Platform Deployment

To enable public access and real-time interaction with the Siyasat platform, the system was deployed using the Render cloud hosting service. Render was selected for its ease of deployment, scalability, and support for continuous integration workflows. The deployment included the full-stack web application, integrating the Flask backend, the AI generation and improvement pipeline, and the user-facing interface.

The platform is now accessible online and allows users to generate, evaluate, and improve AI policies in real time. The live version of the tool can be accessed at https://siyasat.site/ (accessed on 15 October 2025).

4.4. Storage of User Data

Users manage their policies through a personalized dashboard. All data is securely stored in an encrypted PostgreSQL database hosted on the Render platform, with comprehensive deletion procedures upon user request. Each user has full control over their data and can delete their entire account and associated policy history at any time.
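Deleting an account together with its policy history is commonly implemented with a foreign key declared `ON DELETE CASCADE`. The sketch uses Python's built-in `sqlite3` module as a self-contained stand-in for the PostgreSQL database mentioned above (PostgreSQL supports the same clause); the table and column names and the `open_db` and `delete_account` helpers are hypothetical, and encryption at rest is not shown.

```python
import sqlite3

SCHEMA = """
CREATE TABLE users (
    id INTEGER PRIMARY KEY,
    email TEXT UNIQUE NOT NULL
);
CREATE TABLE policies (
    id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(id) ON DELETE CASCADE,
    title TEXT NOT NULL,
    body TEXT NOT NULL
);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    # SQLite enforces foreign keys (and therefore cascades) only when enabled per connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def delete_account(conn: sqlite3.Connection, user_id: int) -> None:
    # Deleting the user row cascades to every policy that references it.
    with conn:
        conn.execute("DELETE FROM users WHERE id = ?", (user_id,))
```

Putting the cascade in the schema, rather than deleting policies in application code, means no policy rows can be left behind if a new code path deletes a user.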

4.5. Usability Testing

In this section, the results of the user experience testing are discussed.

4.5.1. Evaluation Methodology

The usability of the Siyasat tool was evaluated through an online questionnaire distributed to a group of participants. Participants first used the platform and then answered questions measuring its ease of use, clarity of content, and smoothness of navigation. A five-point Likert scale was used to measure user satisfaction [28], as shown in Table 9.

4.5.2. Survey Instrument

The survey was designed using Google Forms to evaluate the user experience of the policy tool. The survey included a set of closed-ended questions using a five-point Likert scale, with 1 representing “Strongly Dissatisfied” and 5 “Strongly Satisfied”, to measure participants’ satisfaction levels with various aspects of the tool. The questions were divided into the following sections:

Website interface and design evaluation: this included questions about the overall appearance of the website, including colors, fonts, and design consistency, the modernity and professionalism of the design, the clarity and usability of interface elements, such as buttons and menus, and the ease of accessing the required services.

Policy Tool User Experience: this included questions about whether the time taken to create or improve policies was appropriate, the ease and clarity of interacting with the tool, and whether the available packages and plans were commensurate with the quality of service.

Quality of Resulting Policies: this included questions about the clarity and completeness of the policies created or improved, the soundness of the language and wording used, and their ease of understanding.

Overall Evaluation: this included a question evaluating the model as a whole and a question about the extent to which participants would recommend the policy tool to others.

An open-ended question was also added at the end of the survey to allow participants to suggest modifications that would improve the performance of the site or the model.

4.6. Participant Feedback

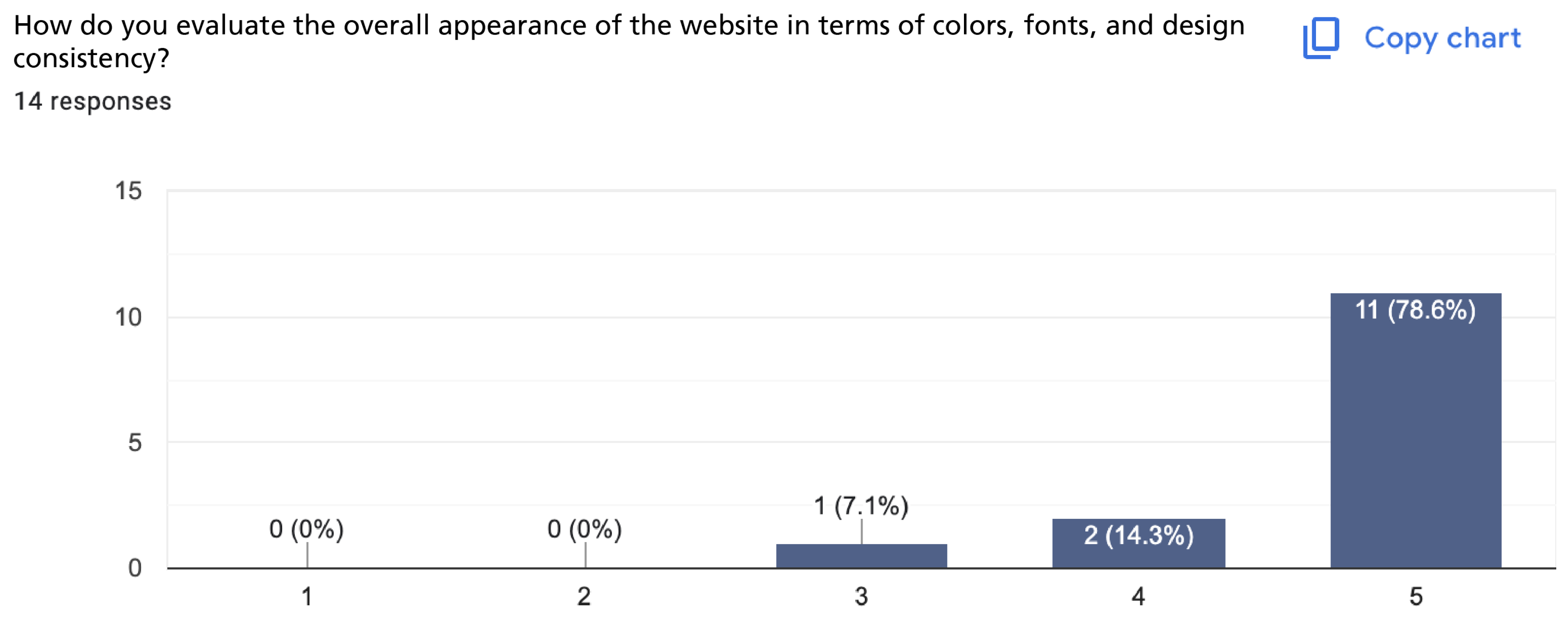

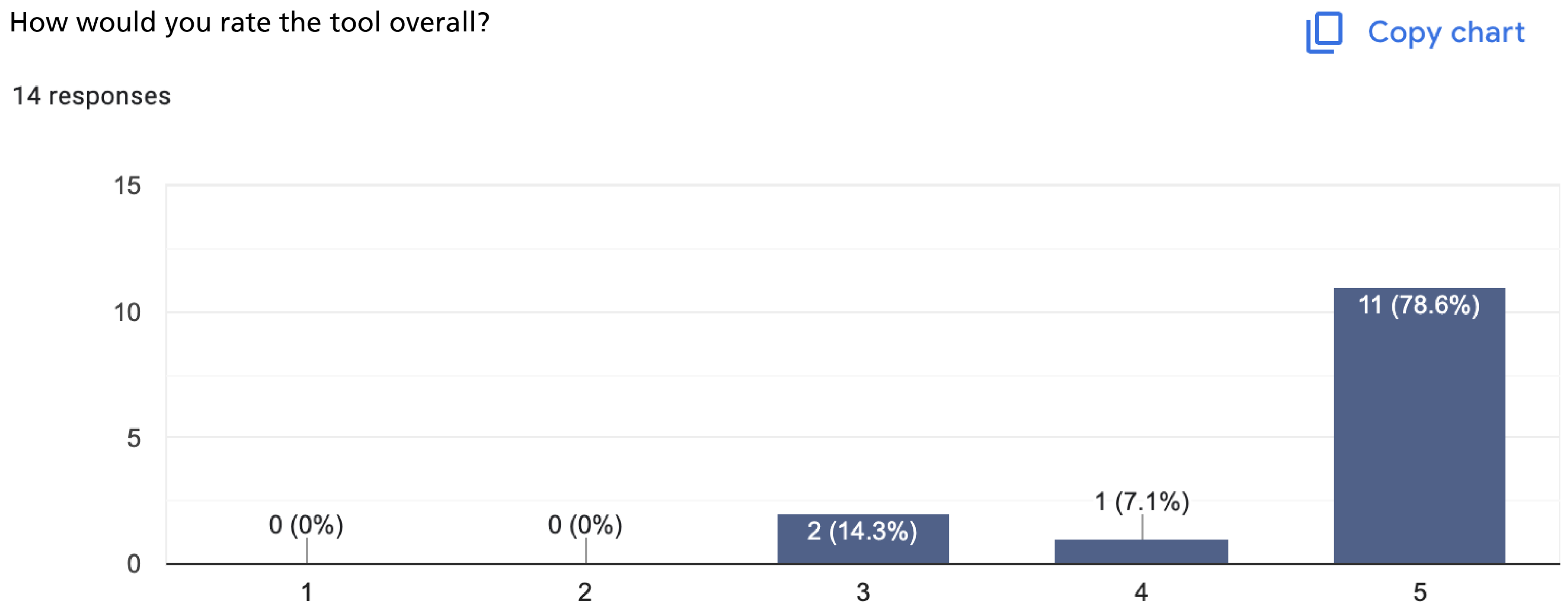

A total of 14 participants completed the usability survey. The results demonstrate a generally positive impression of the Siyasat tool. Regarding interface design, 92% of participants rated the overall visual presentation—including colors, fonts, and consistency as satisfactory (scores of 4 or 5) as shown in

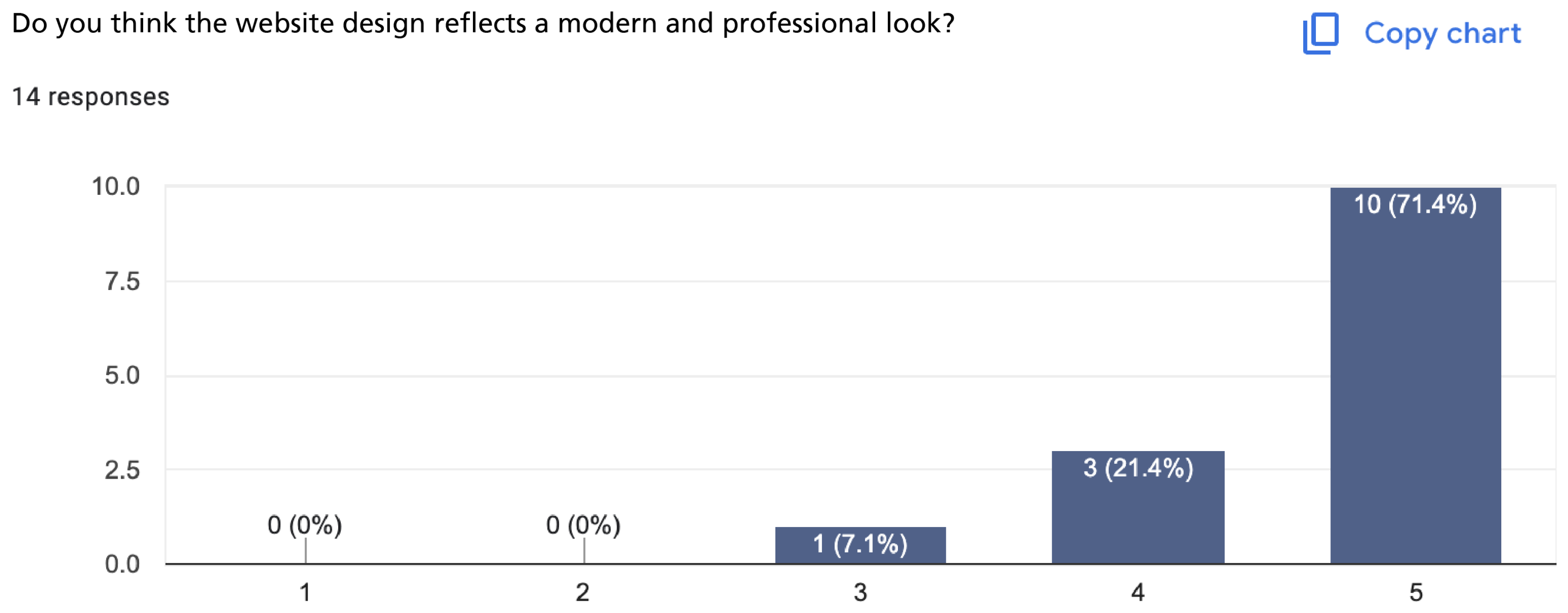

Figure 21. Additionally, 93% agreed that the design appeared modern and professional, as shown in

Figure 22, while 92% found that the interface elements, such as buttons and menus, were clear and easy to use, as shown in

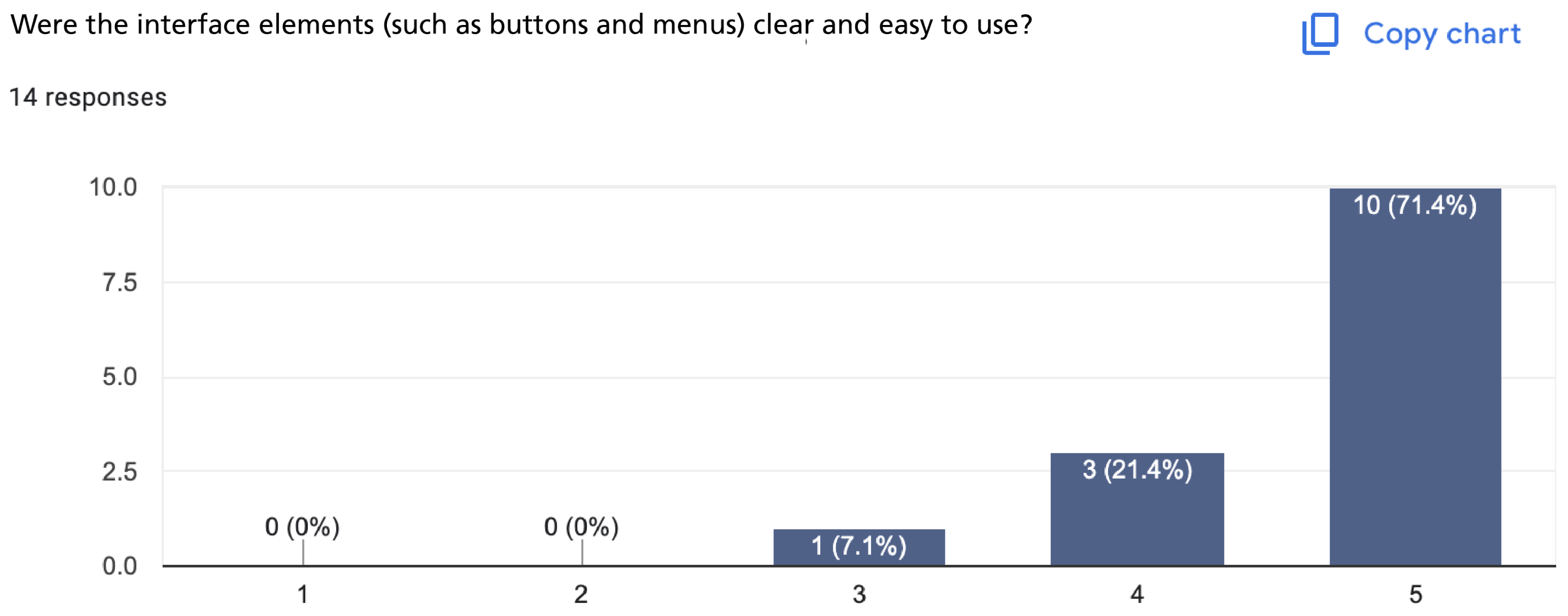

Figure 23.

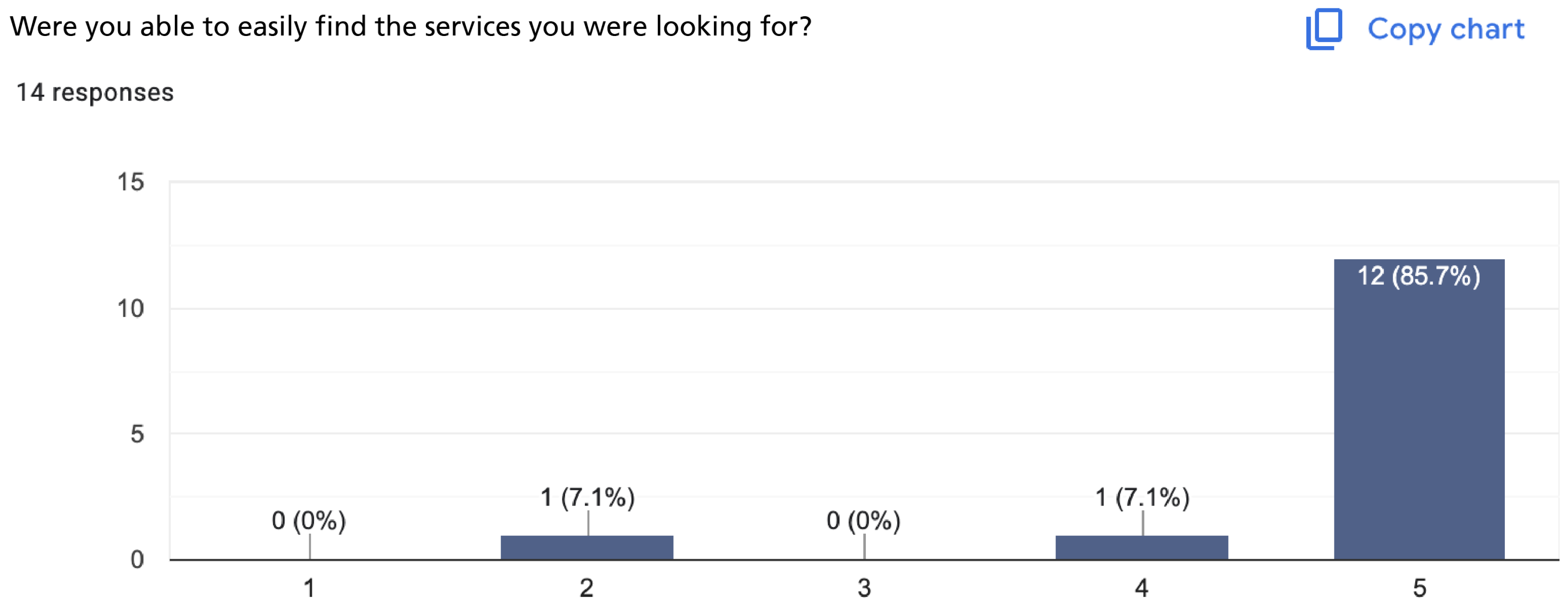

When asked about the ease of accessing services, 92% responded positively, as shown in

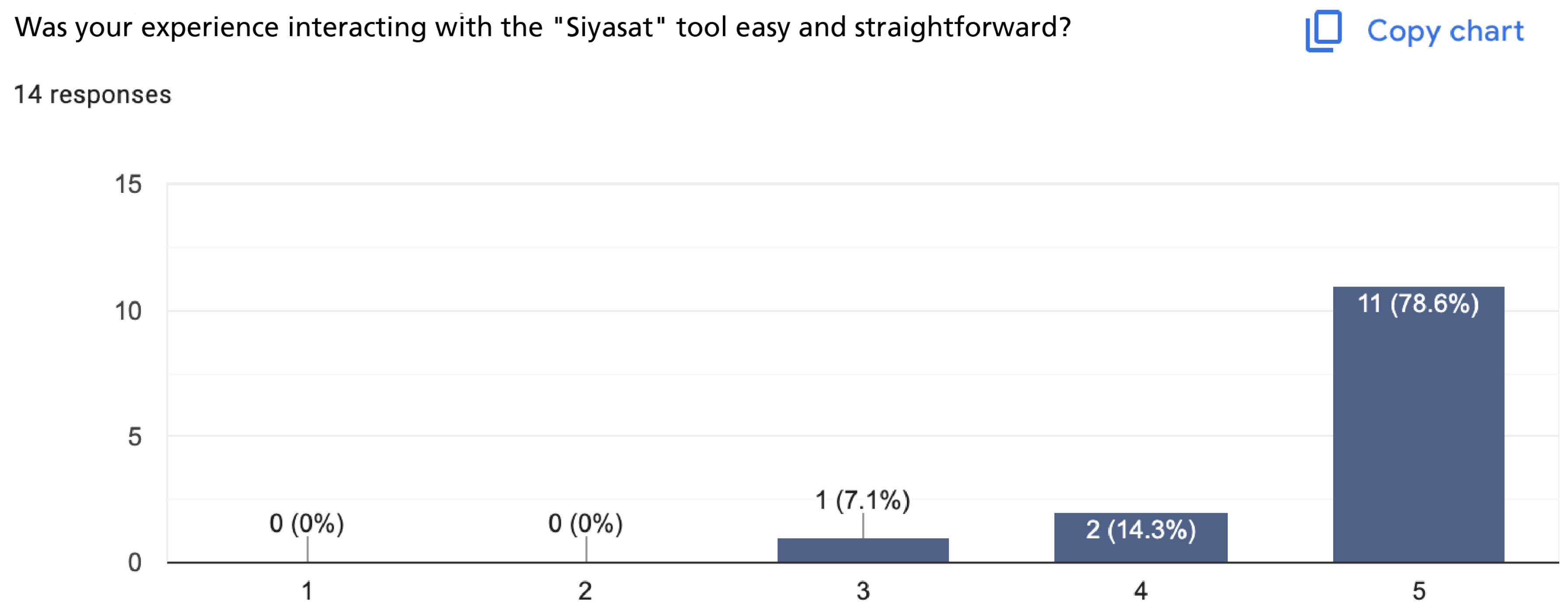

Figure 24. Regarding interaction with the policy tool, 85% indicated that the tool was easy to use and understand, as shown in

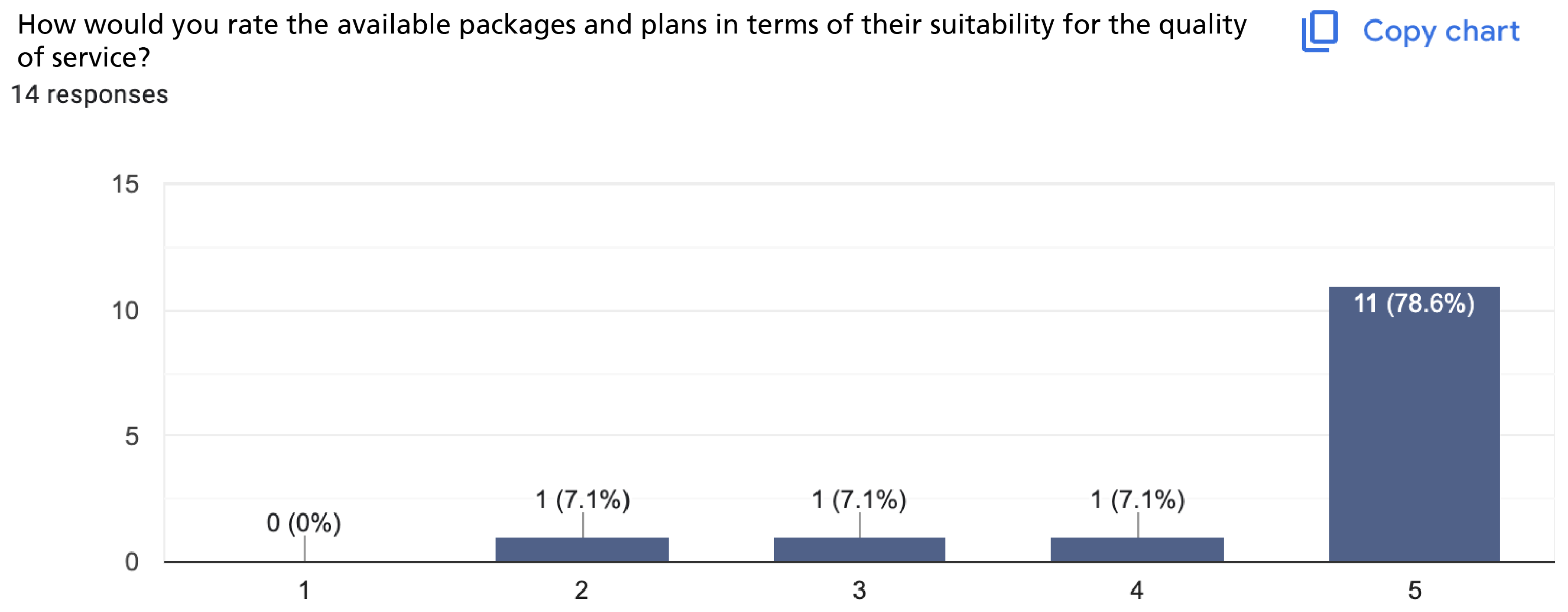

Figure 25. Additionally, 92% expressed satisfaction with the available pricing plans relative to the quality of service, as shown in

Figure 26.

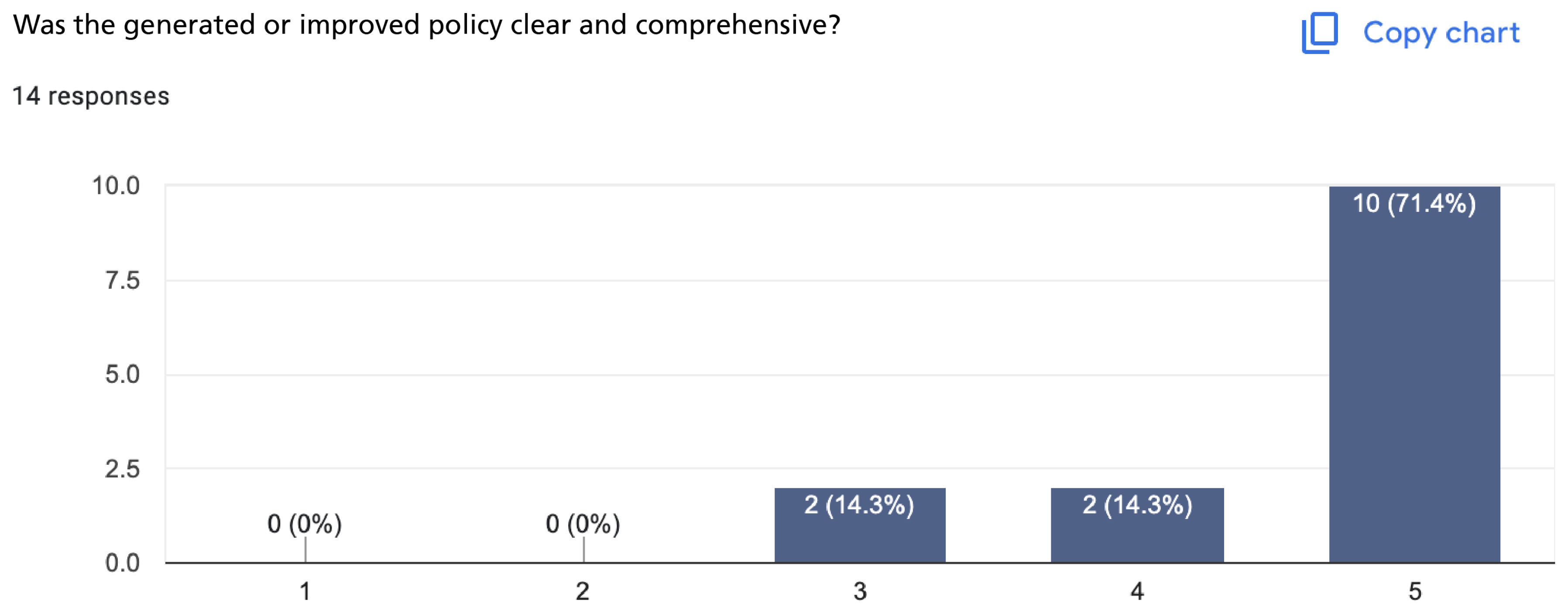

Concerning the quality of the generated or improved policies, 92% reported that the policies were clear and complete, as shown in

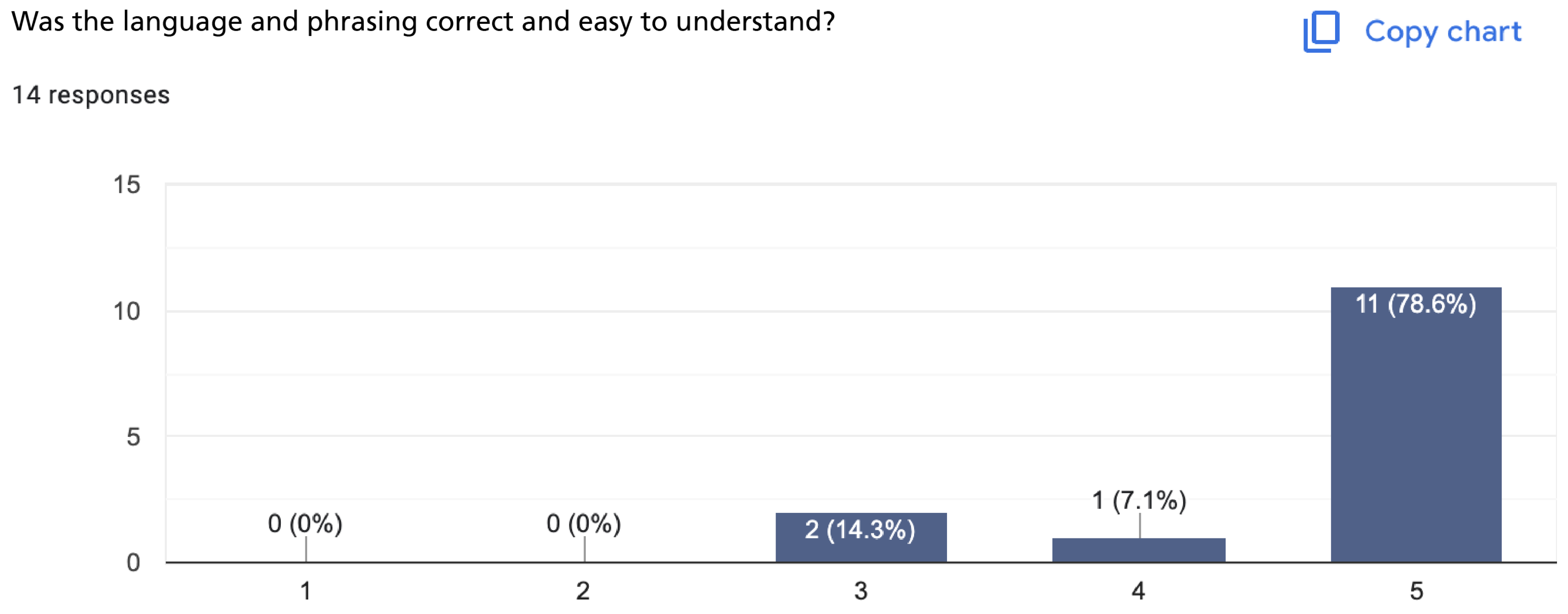

Figure 27, while 84.6% found the language and phrasing to be sound and easy to understand, as shown in

Figure 28. For overall satisfaction, 92% of users rated the tool positively, as shown in

Figure 29, and 100% stated that they would recommend Siyasat to others, as shown in

Figure 30.
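Each percentage above is a top-two-box share, i.e., the fraction of respondents who chose 4 or 5 on the five-point scale. The sketch below shows that computation; `likert_summary` is a hypothetical helper and the responses in the usage example are made up, not the survey data. The denominator is the number of people who answered the item, so, for instance, 12 positive answers out of 13 give 92.3%, while 13 out of 14 give 92.9%.

```python
from collections import Counter
from typing import Dict, Iterable


def likert_summary(responses: Iterable[int]) -> Dict[str, float]:
    """Summarize 1-5 Likert responses; 'top2_pct' is the share rating 4 or 5."""
    values = list(responses)
    if not values or any(v not in range(1, 6) for v in values):
        raise ValueError("responses must be a non-empty list of integers from 1 to 5")
    counts = Counter(values)
    top2 = counts[4] + counts[5]
    return {
        "n": len(values),
        "top2_pct": round(100 * top2 / len(values), 1),
        "mean": round(sum(values) / len(values), 2),
    }


# Made-up responses from 13 participants to a single item.
example = likert_summary([5, 5, 4, 4, 5, 3, 5, 4, 5, 5, 4, 5, 5])
```

Reporting the number of respondents per item alongside the percentage makes such shares easier to interpret, because with small samples a single answer shifts the result by roughly seven to eight percentage points.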

4.7. Insights and Conclusions

Participants’ feedback on the user experience of the policy tool was generally positive, both in terms of visual design and ease of use. Most users agreed that the interfaces were clear, modern, and easy to use. The users were able to navigate the tool efficiently, and most were satisfied with the generated results.

5. Conclusions

This paper introduced Siyasat, an Arabic web-based AI governance tool developed to address the critical challenges organizations face when adopting artificial intelligence, including data privacy and security risks, biased or unfair outcomes, a lack of transparency in decision-making, and the potential misuse or improper development of AI tools. Siyasat leverages generative AI, specifically GPT-4 Turbo integrated with a retrieval-augmented generation (RAG) approach, to generate and improve organizational AI policies in direct alignment with the seven AI Ethics Principles established by SDAIA. Beyond producing or refining policies, the tool provides AI-generated corrective notes, ensuring that users receive practical, ethics-aligned, and high-quality policy guidance. The tool is built with Python, Flask, HTML, CSS, and JavaScript, and a custom dataset of ten policy documents together with the ethics framework supported training and validation. The model demonstrated strong performance, achieving BERTScores of 0.890 (generation) and 0.870 (improvement) and Self-BLEU scores of 0.871 and 0.980, respectively, indicating high semantic fidelity and consistency.

Looking ahead, Siyasat will expand beyond AI policies to support public policy development across various fields. Future enhancements include integrating more advanced models, adding Arabic diacritical marks for improved clarity, and developing a specialized language model tailored for policy-related content. These improvements aim to increase the tool’s accuracy, adaptability, and practical value for diverse sectors.